Homework Assignment 1

There are many published papers on the topic of P1 and the authors cite some of them. Explain

what the authors do differently from these papers they cite, and how do they show/argue that

their approach is a better choice?

Comparison with paper [3] Muhammad et al.

Paper [3] does not explain its recommendations in natural language. Instead, it reports, as percentages, how the recommended item is better than the alternatives. Paper [1], in contrast, generates a natural-language justification by applying automatic text summarization to review content about relevant aspects. Its evaluation showed that users prefer long justifications and find them more trustworthy and engaging.

Paper [3] uses both positive and negative reviews to explain a recommendation, presenting the pros and cons of an item relative to the alternatives. Paper [1] considers only positive sentiment, on the grounds that a recommender normally suggests good items and the justification should support that suggestion.

Comparison with paper [4] Chang et al.

Paper [4] also justifies recommendations in natural language, but it relies on a crowd-sourcing platform: crowd workers write the explanations and manually annotate sentences. The authors use unsupervised learning to generate topical dimensions and mine relevant quotes for each selected dimension. The justification is then provided in natural language according to the user's interest in those dimensions. Paper [1] instead provides an automated pipeline, so no manual annotation by the crowd is needed.

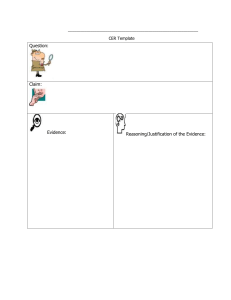

The approach in P1 has several components. Briefly explain these components and draw a

picture that shows what inputs they take, how they interact with each other and what output is

finally produced.

Input
Review set R = {r1, r2, …, rn}

Aspect Extraction
KL divergence method to extract aspects from reviews (formula image, source: [1])
Output tuples: (ri, aij, rel(aij, ri), sent(aij, ri))

Aspect Ranking
A global score is calculated for each aspect and used for ranking (formula image, source: [1])
Output: top-k main aspects

Sentence Filtering
Each review ri ∈ R is split into sentences si1, …, sim
Keep the sentences that match all of these criteria:
o si contains a main aspect a1, a2, …, ak
o si is longer than 5 tokens
o si expresses a positive sentiment
o si does not contain first-person personal or possessive pronouns
Output: potential candidate sentences

Text Summarization
Centroid Vector Building
Sentence Scoring
Sentence Selection

Output
Summary used as justification of a recommendation

In the methodology section, the authors describe the four phases of the pipeline used to generate natural-language justifications for recommended items. These are explained below:

Aspect Extraction

o The goal of this phase is to find aspects that are discussed with a positive sentiment, are distinguishing properties of an item, and are worth including in the recommendation justification.

o Given a review ri as input, all nouns are extracted using POS tagging. The KL divergence of each noun is then calculated by comparing a corpus of movie reviews with the British National Corpus.

o Nouns whose KL divergence score is greater than a threshold ε are marked as aspects, and the score is used as the relevance score rel(aij, ri) of the aspect.

o Finally, a sentiment analysis algorithm generates and stores the sentiment sent(aij, ri) associated with each aspect aij in review ri. This phase outputs a set of 4-tuples (ri, aij, rel(aij, ri), sent(aij, ri)).
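The noun-scoring step of this phase can be sketched in a few lines. The sketch below is only an illustration: it assumes the pointwise form of KL divergence, p(a) · log(p(a) / q(a)), with p estimated on the domain corpus and q on the general corpus, and it assumes the nouns have already been extracted by a POS tagger. The function name `extract_aspects`, the add-one smoothing, and the default threshold are hypothetical choices, not taken from [1].

```python
import math
from collections import Counter

def extract_aspects(domain_nouns, general_nouns, epsilon=0.01):
    """Score each domain noun with pointwise KL divergence and keep
    the nouns whose score is greater than the threshold epsilon."""
    domain_counts = Counter(domain_nouns)
    general_counts = Counter(general_nouns)
    domain_total = sum(domain_counts.values())
    general_total = sum(general_counts.values())
    vocab_size = len(set(domain_counts) | set(general_counts))

    aspects = {}
    for noun, count in domain_counts.items():
        p = count / domain_total  # probability in the domain corpus
        # Add-one smoothing (an assumption of this sketch) so that nouns
        # missing from the general corpus do not cause a division by zero.
        q = (general_counts[noun] + 1) / (general_total + vocab_size)
        score = p * math.log(p / q)
        if score > epsilon:
            aspects[noun] = score  # the score doubles as the relevance
    return aspects
```

A noun such as "plot" that is frequent in movie reviews but rare in general English receives a high score, while a noun such as "time" that is common everywhere scores low or negative and is discarded.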

Aspect Ranking

o The goal is to identify the most relevant aspects that describe an item by merging the information extracted from every review.

o For each aspect, a global score is calculated using the formula given in figure 1 (where naj,ri is the number of occurrences of aspect aj in review ri). The score is higher for aspects that are mentioned often and with a positive sentiment.

o The aspects are ranked by global score, and the top-k aspects are labelled as main aspects and passed to the next phase.
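The ranking step can be illustrated with a short sketch. The exact formula is the one shown in the figure from [1], so the scoring used here is an assumption made only for illustration: each review contributes the occurrence count times the relevance times the sentiment, and the contributions are summed per aspect. The function name `rank_aspects` and the shape of the `counts` dictionary are hypothetical.

```python
from collections import defaultdict

def rank_aspects(tuples, counts, k):
    """Return the top-k aspects by an assumed global score.

    tuples: iterable of (review_id, aspect, relevance, sentiment)
    counts: dict mapping (aspect, review_id) to the occurrence count
    """
    scores = defaultdict(float)
    for review_id, aspect, relevance, sentiment in tuples:
        n = counts.get((aspect, review_id), 0)
        # Assumed form: frequent, relevant, positively rated aspects
        # accumulate a higher score across reviews.
        scores[aspect] += n * relevance * sentiment
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k]
```

Under this assumed form, an aspect mentioned often with negative sentiment sinks to the bottom of the ranking, which matches the behaviour described above.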

Sentence Filtering

o The goal is to filter out non-compliant sentences that would not be useful in the final justification of the recommendation.

o Each review ri is split into sentences si1, …, sim, and each sentence is checked against the criteria listed in figure 1. Only the compliant sentences are kept.

o The justification should only include sentences that mention a relevant aspect and express a positive sentiment about the item. Sentences that are short, non-informative, or written in the first person are filtered out.
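The four filtering criteria can be expressed as a single predicate. The following is a minimal sketch, assuming a regex-based tokenizer and sentence splitter and a caller-supplied sentiment function. `toy_sentiment` and its word lists are hypothetical stand-ins for a real sentiment analyzer, and the pronoun list is an illustrative guess rather than the one used in [1].

```python
import re

FIRST_PERSON = {"i", "me", "my", "mine", "myself",
                "we", "us", "our", "ours", "ourselves"}
POSITIVE = {"gripping", "clever", "great", "loved", "favorite"}
NEGATIVE = {"dull", "terrible"}

def toy_sentiment(sentence):
    """Hypothetical lexicon-based polarity: positive minus negative words."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

def is_compliant(sentence, main_aspects, sentiment_fn, min_tokens=5):
    tokens = re.findall(r"[a-z']+", sentence.lower())
    has_aspect = any(aspect in tokens for aspect in main_aspects)
    long_enough = len(tokens) > min_tokens        # longer than 5 tokens
    positive = sentiment_fn(sentence) > 0
    impersonal = not any(tok in FIRST_PERSON for tok in tokens)
    return has_aspect and long_enough and positive and impersonal

def filter_sentences(review, main_aspects, sentiment_fn):
    # Naive split on sentence-final punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", review.strip())
    return [s for s in sentences
            if is_compliant(s, main_aspects, sentiment_fn)]
```

Passing the sentiment function as a parameter keeps the predicate independent of any particular sentiment analysis library.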

Text Summarization

o The goal is to generate a unique summary, used as the justification of the recommendation, that covers the main content of the reviews about a particular item.

o Centroid Vector Building: The information coming from the reviews is summarized in a centroid vector, which is built in two steps. First, the most meaningful words are selected using the tf-idf weighting scheme. Second, the centroid embedding is calculated as the sum of the embeddings of the top-ranked words in the reviews.

o Sentence Scoring: To identify the sentences to include in the final justification of a particular recommendation, the cosine similarity between the centroid and each sentence is calculated.

o Sentence Selection: The sentences are sorted in descending order of similarity, and the top-ranked sentences are added to the summary one by one until the maximum number of words is reached.
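The three summarization steps can be sketched with the standard library alone. This is an illustration under several assumptions that do not come from [1]: the candidate sentences themselves serve as the documents for tf-idf, a smoothed idf is used, a sentence embedding is the sum of its word embeddings, and `embeddings` is a hypothetical dictionary of toy word vectors rather than a trained model.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())

def embed(tokens, embeddings, dim):
    """Sum the vectors of the tokens that have an embedding."""
    vec = [0.0] * dim
    for tok in tokens:
        for i, x in enumerate(embeddings.get(tok, [])):
            vec[i] += x
    return vec

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def summarize(sentences, embeddings, dim, top_words=2, max_words=15):
    docs = [tokenize(s) for s in sentences]
    n = len(docs)
    # Step 1: centroid vector from the words with the highest tf-idf.
    tf = Counter(tok for doc in docs for tok in doc)
    df = Counter(tok for doc in docs for tok in set(doc))
    tfidf = {t: tf[t] * (math.log((1 + n) / (1 + df[t])) + 1)
             for t in tf if t in embeddings}
    centroid_words = sorted(tfidf, key=tfidf.get, reverse=True)[:top_words]
    centroid = embed(centroid_words, embeddings, dim)
    # Step 2: score each sentence by cosine similarity to the centroid.
    ranked = sorted(
        range(n),
        key=lambda i: cosine(embed(docs[i], embeddings, dim), centroid),
        reverse=True,
    )
    # Step 3: add top-ranked sentences until the word limit is reached.
    summary, used = [], 0
    for i in ranked:
        if used + len(docs[i]) > max_words:
            break
        summary.append(sentences[i])
        used += len(docs[i])
    return summary

# Toy two-dimensional vectors: first axis "story", second axis "sound".
embeddings = {
    "plot": [1.0, 0.0], "story": [0.9, 0.1], "twists": [0.8, 0.2],
    "music": [0.0, 1.0], "soundtrack": [0.1, 0.9],
}
sentences = [
    "The plot has clever twists",
    "The story is a story with a solid plot",
    "The soundtrack music is pleasant",
]
summary = summarize(sentences, embeddings, dim=2)
```

With these toy vectors the centroid points along the "story" axis, so the two plot-related sentences rank above the soundtrack sentence, and the word limit stops the selection before the third sentence is added.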

To evaluate their method, the authors of P1 consider various factors that affect performance

and set up alternative configurations. List and explain the evaluation criteria and

configurations considered by the authors, and elaborate on the winner configuration.

o Two experiments were conducted to evaluate the method. The goal of the first experiment was to test different configurations of the justification based on text summarization. The goal of the second experiment was to compare the methodology presented in the paper with a baseline that uses users' reviews without text summarization.

o To evaluate different configurations, the authors combined two justification lengths (short, 50 words, and long, 100 words) with two numbers of aspects (top-10 and top-30), giving four configurations.

o Evaluation criteria for the experiments [2]:

Transparency: how well the user understands how a particular justification was generated

Persuasiveness: how well the justification convinces users to try the system

Engagement: how much the user interacts with the system

Trust: how much confidence the user has in the system and its justifications

Effectiveness: how well the justification helps users make good decisions

Experiment 1 (between-subject):

Each user was randomly assigned one configuration of the pipeline, with a given justification length and number of aspects, and evaluated the justification it produced. Answers were given on a 5-point scale (1 = strongly disagree, 5 = strongly agree). The results are shown in figure 2.

Figure 2 (image source: [1])

Results: The values in figure 2 are the average scores for each metric. Users preferred the longer justifications, which gave them a clearer understanding of the suggestion and were more satisfying. As for the number of aspects, the best results were obtained with the top-10 aspects, meaning that 10 relevant aspects were enough for the system to give users good-quality suggestions.

Experiment 2 (within-subject):

In this experiment, two styles of justification were compared. The summary generated by the system described in the paper and a review-based baseline were presented on the same screen. The results for the best-performing configuration (top-10 aspects, long justification) are shown in figure 3 below.

Figure 3 (image source: [1])

Results: Most users preferred the approach described in the paper, which is based on automatic text summarization. Compared with the review-based baseline, they reported higher trust and engagement and were more motivated to use the system.

References:

[1] C. Musto, G. Rossiello, M. de Gemmis, P. Lops, and G. Semeraro. 2019. Combining Text Summarization and Aspect-based Sentiment Analysis of Users' Reviews to Justify Recommendations. In Proceedings of the 13th ACM Conference on Recommender Systems (RecSys 2019). ACM, 383–387.

[2] Nava Tintarev and Judith Masthoff. 2012. Evaluating the effectiveness of explanations for recommender systems. User Modeling and User-Adapted Interaction 22, 4-5 (2012), 399–439.

[3] Khalil Ibrahim Muhammad, Aonghus Lawlor, and Barry Smyth. 2016. A Live-User Study of Opinionated Explanations for Recommender Systems. In Proceedings of the 21st International Conference on Intelligent User Interfaces. ACM, 256–260.

[4] Shuo Chang, F. Maxwell Harper, and Loren Gilbert Terveen. 2016. Crowd-based personalized natural language explanations for recommendations. In Proceedings of the 10th ACM Conference on Recommender Systems. ACM, 175–182.