Dreams of a Final Theory by Steven Weinberg

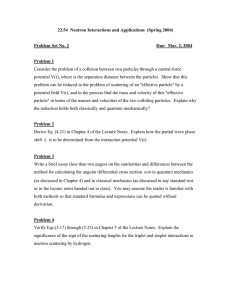

![Dreams of a Final Theory by Steven Weinberg](http://s2.studylib.net/store/data/025678862_1-1a5661ac0e20df4049ac0df6b70c79ab-768x994.png)