Exercises in Stochastic Analysis

by V.M. Lucic (Macquarie, London, United Kingdom, vladimir.lucic@btinternet.com)

November 16, 2020

Preface

This somewhat unusual collection of problems in Measure-Theoretic Probability and Stochastic Analysis could more appropriately have been called “The Problems I Like”. Problems borrowed from the existing literature typically have solutions different from the ones in the original sources, and for those, full references and occasional historical remarks are provided. The following problems are original: Problem 3, Problem 12, Problem 23, Problem 25 (due to V. Shmarov), Problem 26 (jointly worked out with V. Kešelj), Problem 28 (jointly worked out with Y. Dolivet), Problem 38, Problem 41, Problem 51, Problem 52, Problem 53, Problem 54, Problem 59, Problem 61 (due to A. Duriez), Problem 62, and Problem 68. This collection is a work in progress, and any comments from readers will be greatly appreciated.


I look for puzzles. I look for interesting problems that I can solve. I don’t care whether they’re important or not, and so I’m definitely not obsessed with solving some big mystery. That’s not my style.

Freeman Dyson

1 Measure Theory

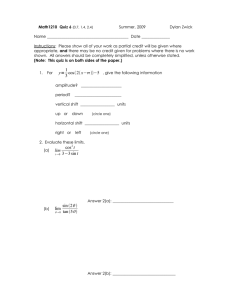

Problem 1 (Давыдов Н.А. et al. (1973), Problem 275.). Circles of identical size are inscribed inside an equilateral triangle (Figure 1). Find the limit of the ratio of the area covered by the circles to the area of the triangle as the number of circles tends to infinity.

Figure 1

Solution. The limit in question equals the proportion of the area of the triangle connecting the centers of three inner circles covered by those three circles, which is $\frac{\pi}{2\sqrt{3}}$.

Problem 2 (Mathematics Magazine, Problem 1919). For the two pictures below, let $P_n$ denote the proportion of the big circle covered by the small circles as a function of the number $n$ of the small circles in the outer layer. Find the expressions for $P_n$ and compute $\lim_{n\to\infty} P_n$.


Solution. Let $R$ be the radius of the big circle, $r_n$ be the radius of the small circles in the outer layer, and $R_n$ be the radius of the circle encompassing all small circles except those in the outer layer. Then in both cases

$$P_n R^2 \pi = n r_n^2 \pi + P_n R_n^2 \pi \qquad (\star)$$

and $\sin\frac{\pi}{n} = \frac{r_n}{R - r_n}$. In the first case $R_n = R - 2r_n$, giving

$$P_n = \frac{n r_n}{4(R - r_n)} = \frac{n \sin\frac{\pi}{n}}{4}, \qquad \lim_{n\to\infty} P_n = \frac{\pi}{4}.$$

In the second case we have

$$(R - r_n)\cos\frac{\pi}{n} - \sqrt{(r_n + \hat{r}_n)^2 - r_n^2} + \hat{r}_n = R_n,$$

where $\hat{r}_n$ is the radius of the circle in the second outer layer, $\sin\frac{\pi}{n} = \frac{\hat{r}_n}{R_n - \hat{r}_n}$. The last two relations yield $R_n$, to be substituted in $(\star)$, whence we get an expression for $P_n$ and $\lim_{n\to\infty} P_n = \frac{\pi}{2\sqrt{3}}$ (which is, interestingly, the same result as in Problem 1).

Problem 3. Suppose we play a game where I draw an $N(0, 1)$ random number $A$, show it to you, then draw another independent $N(0, 1)$ number $B$, but before showing it to you ask you to guess whether $B$ will be larger than $A$ or not. If you are right, you win; if you are wrong, you lose. What is your strategy, and what will be your long-term success rate if we keep playing?

Solution. Since the numbers are zero-mean, the strategy is to guess the sign of the difference $B - A$ as positive if $A < 0$, and negative otherwise. The long-term success rate will be

$$\frac{1}{2} P(B - A > 0 \mid A < 0) + \frac{1}{2} P(B - A \le 0 \mid A \ge 0).$$

Due to the symmetry, and the fact that $P(A = 0) = 0$, the above expression simplifies to $P(B - A > 0 \mid A < 0)$. Plotting on the $(a, b)$ Cartesian plane the part of the region $a < 0$ where $b - a > 0$, we see that this is one and a half quadrants out of the two comprising the full $a < 0$ region. Therefore, due to the radial symmetry of the Gaussian two-dimensional distribution with zero correlation, we conclude that the success rate is $\frac{3}{4}$.
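The success rate of 3/4 can be checked by simulation. The following is a minimal sketch, not part of the original solution; the function name `success_rate`, the sample size, and the seed are illustrative assumptions.

```python
import random


def success_rate(n_games: int, seed: int = 0) -> float:
    """Play the guessing game n_games times with the sign-of-A strategy."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(n_games):
        a = rng.gauss(0.0, 1.0)  # the number shown to the player
        b = rng.gauss(0.0, 1.0)  # the hidden number
        guess_b_larger = a < 0   # strategy: guess "B > A" exactly when A < 0
        if guess_b_larger == (b > a):
            wins += 1
    return wins / n_games


rate = success_rate(200_000)
print(rate)  # should be close to 0.75
```

With 200,000 games the standard error of the estimate is about 0.001, so the printed value should agree with 3/4 to roughly two decimal places.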

Problem 4 (AMM, Problem 12150). Let $X_0, X_1, \ldots, X_n$ be independent random variables, each distributed uniformly on $[0, 1]$. Find

$$E\left[\min_{1 \le i \le n} |X_0 - X_i|\right].$$

Solution. Let $L$ be the expression in question. Then

$$L = \int_0^1 E\left[\min_{1 \le i \le n} |x - X_i|\right] dx = \int_0^1 \int_0^1 \left[P(|X_0 - x| \ge u)\right]^n du\, dx.$$

Since $P(|X_0 - x| \ge u) = \max(1 - u - x, 0) + \max(x - u, 0)$, $x, u \in [0, 1]$, we have

$$P(|X_0 - x| \ge u) = \begin{cases} 1 - 2u, & 0 \le u < x, \\ 1 - u - x, & x \le u < 1 - x, \\ 0, & 1 - x \le u \le 1, \end{cases} \qquad x \in [0, 1/2],$$

so

$$L = 2 \int_0^{1/2} \left[ \int_0^x (1 - 2u)^n\, du + \int_x^{1-x} (1 - u - x)^n\, du \right] dx = \frac{n+3}{2(n+1)(n+2)}.$$
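As a sanity check, the closed form can be compared with a Monte Carlo estimate. This is a minimal sketch; the helper names `exact` and `monte_carlo`, the number of trials, and the seed are assumptions made for illustration.

```python
import random


def exact(n: int) -> float:
    """Closed form (n + 3) / (2 (n + 1) (n + 2)) from the solution."""
    return (n + 3) / (2 * (n + 1) * (n + 2))


def monte_carlo(n: int, trials: int, seed: int = 0) -> float:
    """Estimate E[min_i |X_0 - X_i|] for i.i.d. uniform X_0, ..., X_n."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        x0 = rng.random()
        total += min(abs(x0 - rng.random()) for _ in range(n))
    return total / trials


for n in (1, 3, 10):
    print(n, exact(n), monte_carlo(n, 100_000))
```

For $n = 1$ the formula reduces to the familiar value $E|X_0 - X_1| = 1/3$.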

Problem 5. Suppose $X_1, X_2, \ldots, X_n$ are independent, identically distributed random variables. Show that

$$\frac{X_1^2}{X_1^2 + X_2^2 + \cdots + X_n^2}, \qquad \frac{X_2^2}{X_1^2 + X_2^2 + \cdots + X_n^2}$$

are negatively correlated.

Solution. With $Z_i := \frac{X_i^2}{X_1^2 + X_2^2 + \cdots + X_n^2}$ we have $\mathrm{covar}(Z_1, \sum_{i=1}^n Z_i) = \mathrm{covar}(Z_1, 1) = 0$, so $\mathrm{var}(Z_1) + \sum_{i=2}^n \mathrm{covar}(Z_1, Z_i) = 0$. Due to symmetry $\mathrm{covar}(Z_1, Z_i) = \mathrm{covar}(Z_1, Z_2)$, $i > 2$, which thus equals $-\frac{\mathrm{var}(Z_1)}{n-1} < 0$.

Problem 6 (Kolmogorov Probability Olympiad 2017). Let $X, Y$ be IID random variables such that $P(\{X = 0\}) = 0$. Show that

$$E\left[\frac{XY}{X^2 + Y^2}\right] \ge 0.$$

Solution. Put

$$f(a) := E\left[XY \exp(-a(X^2 + Y^2))\right] = \left(E\left[X \exp(-aX^2)\right]\right)^2 \ge 0, \qquad a \ge 0,$$

and integrate over $[0, \infty)$.

Problem 7 (Kolmogorov Probability Olympiad 2015). Random vector X = (X1 , X2 , . . . , Xn )

is uniformly distributed on the unit sphere in Rn . Find M2 = E[X12 ] and M4 = E[X14 ].


Solution. Since $X_i$ are identically distributed and $\sum_{i=1}^n X_i^2 = 1$, it readily follows that $M_2 = 1/n$.

For $M_4$, first note that by squaring $\sum_{i=1}^n X_i^2 = 1$ and taking expectation we obtain

$$n M_4 + n(n-1) V_{12} = 1, \qquad (1)$$

where $V_{12} = E[X_1^2 X_2^2]$. Thus, to complete the solution, we need another relation between $M_4$ and $V_{12}$. To this end, note that $(X_1, X_2)$ and $\left(\frac{X_1 + X_2}{\sqrt{2}}, \frac{X_1 - X_2}{\sqrt{2}}\right)$ are identically distributed. Hence $E\left[\frac{(X_1 + X_2)^2 (X_1 - X_2)^2}{4}\right] = E[X_1^2 X_2^2]$, which after expanding gives $M_4 = 3V_{12}$. Combined with (1) this yields $M_4 = \frac{3}{n(n+2)}$.

Remark 1. A common way to generate the uniform distribution on the sphere is to take $X_i = \frac{Z_i}{\sqrt{Z_1^2 + Z_2^2 + \cdots + Z_n^2}}$, where $Z_i$ are independent standard Normal random variables.
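The construction in Remark 1 gives a quick numerical check of $M_2 = 1/n$ and $M_4 = 3/(n(n+2))$. The sketch below is illustrative only; the function name `sphere_moments`, the dimension, the sample size, and the seed are assumptions.

```python
import random


def sphere_moments(n: int, trials: int, seed: int = 0) -> tuple[float, float]:
    """Estimate E[X_1^2] and E[X_1^4] for X uniform on the unit sphere in R^n."""
    rng = random.Random(seed)
    m2 = 0.0
    m4 = 0.0
    for _ in range(trials):
        z = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # X_1^2 = Z_1^2 / (Z_1^2 + ... + Z_n^2), as in Remark 1
        x1_sq = z[0] ** 2 / sum(v * v for v in z)
        m2 += x1_sq
        m4 += x1_sq ** 2
    return m2 / trials, m4 / trials


n = 3
m2_hat, m4_hat = sphere_moments(n, 100_000)
print(m2_hat, 1 / n)              # estimate vs. 1/n
print(m4_hat, 3 / (n * (n + 2)))  # estimate vs. 3/(n(n+2))
```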

Problem 8 (Shiryaev (2012), Problem 2.13.4.). Let $Z_i$, $i = 1, 2, 3$, be independent standard Gaussian random variables. Show that

$$Z := \frac{Z_1 + Z_2 Z_3}{\sqrt{1 + Z_3^2}} \sim N(0, 1).$$

Solution. The conditional distribution of $Z$ given $Z_3$ is a standard Gaussian (not depending on $Z_3$), hence the claim.

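Problem 9 (Torchinsky (1995), Problem VI.4.24). Suppose that $\Omega$ is a set, $(\Omega, \mathcal{G})$ is a measurable space, and $Z : \Omega \to \mathbb{R}$ is a given mapping. Then $Z$ is $\mathcal{G}$-measurable iff

$$Z = \sum_{i=1}^{\infty} \lambda_i I_{A_i} \qquad (2)$$

for some $\{\lambda_i\} \subset \mathbb{R}$ and $\{A_i\} \subset \mathcal{G}$. From (2) deduce the First Borel-Cantelli Lemma: if $\sum_{i=1}^{\infty} P(A_i) < \infty$, then $P(\limsup A_n) = 0$.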

Solution. The ”if” part follows directly from the observation that the mapping given by (2) is the pointwise limit as $n \to \infty$ of the $\mathcal{G}$-measurable mappings $\sum_{i=1}^n \lambda_i I_{A_i}$.

For the opposite direction, first suppose that $Z$ is nonnegative. Define

$$Z_1 := I_{\{Z \ge 1\}}, \qquad S_n := \sum_{i=1}^n \frac{1}{i} Z_i, \qquad Z_{n+1} := I_{\{Z - \frac{1}{n+1} \ge S_n\}}, \qquad n \ge 1.$$

We claim that $Z = \lim S_n = \sum_{i=1}^{\infty} \frac{1}{i} Z_i$ (note that $\lim S_n$ exists).

Using induction we first show that $S_n \le Z$, $\forall n \in \mathbb{N}$. Clearly $S_1 = I_{\{Z \ge 1\}} \le Z$. Suppose that the claim holds for some $m \in \mathbb{N}$, and denote $\{Z - \frac{1}{m+1} \ge S_m\}$ by $A$. Then on $A$ we have $Z \ge \frac{1}{m+1} + S_m$, while, by the inductive hypothesis, on $A^c$ we have $Z \ge S_m$. Thus $Z \ge \frac{1}{m+1} I_A + S_m = S_{m+1}$, which completes the proof by induction.

It remains to show that $\lim S_n \ge Z$. Fix an arbitrary $\omega \in \Omega$. If $Z(\omega) = \infty$, we have $Z_n(\omega) = 1$ $\forall n \in \mathbb{N}$, and the result follows. If $Z(\omega) < \infty$, suppose that on the contrary $Z(\omega) - \lim S_n > 0$, and find $k \in \mathbb{N}$ such that $\frac{1}{k} < Z(\omega) - \lim S_n$. Then, since $S_n \le \lim S_n$, we have $Z(\omega) - \frac{1}{n+1} \ge S_n(\omega)$, $\forall n \ge k$, and so $Z_n(\omega) = 1$, $\forall n > k$. But then $\lim S_n = \infty$, which is a contradiction.

In the general case we write $Z = Z^+ - Z^-$ and apply the above result to both parts (since $Z^+$ and $Z^-$ live on disjoint sets, there are no additional convergence issues in merging the two series).
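The greedy construction of $Z_n$ and $S_n$ for a nonnegative value can be traced numerically at a single point $\omega$. The sketch below is illustrative: the function name `greedy_indicators` and the test value $3/10$ are assumptions, and exact rational arithmetic is used so that the comparisons $Z - \frac{1}{n+1} \ge S_n$ are not affected by rounding.

```python
from fractions import Fraction


def greedy_indicators(z: Fraction, n_terms: int) -> tuple[list[int], Fraction]:
    """Return ([Z_1, ..., Z_n], S_n) for a single nonnegative value z = Z(omega)."""
    indicators = [1 if z >= 1 else 0]   # Z_1 = I{Z >= 1}
    partial = Fraction(indicators[0])   # S_1 = Z_1
    for n in range(1, n_terms):
        # Z_{n+1} = 1 exactly when Z - 1/(n+1) >= S_n
        take = 1 if z - Fraction(1, n + 1) >= partial else 0
        indicators.append(take)
        partial += Fraction(take, n + 1)  # S_{n+1} = S_n + Z_{n+1}/(n+1)
    return indicators, partial


z = Fraction(3, 10)
indicators, s_n = greedy_indicators(z, 500)
print(indicators[:6], float(z - s_n))  # the gap Z - S_n is nonnegative and small
```

The partial sums never exceed $z$, in line with the induction step, and the remaining gap shrinks as more terms are processed.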

The First Borel-Cantelli Lemma is essentially the statement that if $Z = \sum_{i=1}^{\infty} I_{A_i}$ is integrable, then $Z < \infty$ a.s.

Problem 10 (Doob’s Theorem). Suppose that $\Omega$ is a set, $(F, \mathcal{F})$ is a measurable space, and $X : \Omega \to F$ is a given mapping. If a mapping $Z : \Omega \to \bar{\mathbb{R}}$ is $\sigma\{X\}$-measurable, then there exists some $\mathcal{F}$-measurable mapping $\Psi : F \to \bar{\mathbb{R}}$ such that

$$Z(\omega) = \Psi(X(\omega)), \qquad \forall \omega \in \Omega.$$

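Solution. By Problem 9, we have

$$Z(\omega) = \sum_{i=1}^{\infty} \lambda_i I_{\{X \in \Gamma_i\}}(\omega), \qquad \forall \omega \in \Omega,$$

for some $\{\lambda_i\} \subset \mathbb{R}$ and $\{\Gamma_i\} \subset \mathcal{F}$, and so

$$Z(\omega) = \sum_{i=1}^{\infty} \lambda_i I_{\Gamma_i}(X(\omega)), \qquad \forall \omega \in \Omega.$$

Define $\Psi : F \to \bar{\mathbb{R}}$ as

$$\Psi(x) := \sum_{i=1}^{\infty} \lambda_i I_{\Gamma_i}(x), \qquad x \in F.$$

Since $Z^+$ and $Z^-$ live on disjoint sets, for every $x \in F$ the terms of the series $\Psi(x)$ have the same sign, and so $\Psi(x)$ is well defined. Furthermore, $\Psi$ is clearly $\mathcal{F}$-measurable and $Z(\omega) = \Psi(X(\omega))$, $\forall \omega \in \Omega$.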


Problem 11 (Torchinsky (1995), Problem VIII.3.13). Let {Xn } be a sequence of

nonnegative random variables on a probability space (Ω, F , P ). If {Xn } is uniformly

integrable, we have

lim sup E[Xn ] ≤ E[lim sup Xn ].

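Solution. Let $\lambda > 0$ be arbitrary. By Fatou’s Lemma we get

$$\liminf E[(\lambda - X_n) I_{\{X_n < \lambda\}}] \ge E[\liminf (\lambda - X_n) I_{\{X_n < \lambda\}}],$$

which implies

$$E[\limsup X_n; \{X_n < \lambda\}] \ge \limsup E[X_n; \{X_n < \lambda\}]. \qquad (3)$$

Since $\{X_n\}$ is uniformly integrable, for every $\varepsilon > 0$ there exists $\lambda > 0$ such that

$$E[X_n] < E[X_n; \{X_n < \lambda\}] + \varepsilon, \qquad \forall n \in \mathbb{N}.$$

Using (3) we now get

$$\limsup E[X_n] \le \limsup E[X_n; \{X_n < \lambda\}] + \varepsilon \le E[\limsup X_n; \{X_n < \lambda\}] + \varepsilon \le E[\limsup X_n] + \varepsilon.$$

Since $\varepsilon > 0$ is arbitrary, the result follows.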

Remark 2. A different proof and related results can be found in Darst (1980).

Remark 3. An interesting consequence of the above Problem is the following simple

proof of Vitali Convergence Theorem (see e.g. Folland (1999)): assuming the statement

of the theorem, let us define

Yn = |Xn − X|, n = 0, 1, . . . .

Then, according to Remark 2.6.6, the above sequence is uniformly integrable, so by

Problem 11 we have

lim sup E[Yn ] ≤ E[lim sup Yn ] = 0,

which in turn implies

lim E[|Xn − X|] = 0.

Since E[|X|] ≤ E[|Xn |] + E[|Xn − X|], ∀n ∈ N, from the above we also have E[|X|] <

∞, which completes the proof of the theorem.

Problem 12. Let $(X_i)_{i=1}^{\infty}$ be a sequence of uniformly integrable nonnegative random variables with $E[X_n] = 1$, $n \in \mathbb{N}$. Then $P(\limsup_{n\to\infty} X_n > 0) > 0$.

Solution. By the assumption $\lim_{K\to\infty} \sup_n E[(X_n - K)^+] = 0$, so for some $K > 0$, $\varepsilon > 0$ we have $\liminf_{n\to\infty} E[X_n - K]^+ \le \limsup_{n\to\infty} E[X_n - K]^+ < 1 - \varepsilon$. In view of $1 - E[X_n \wedge K] = E[X_n - K]^+$, this gives $\limsup_{n\to\infty} E[X_n \wedge K] > \varepsilon$. By the Fatou lemma then $E[\limsup_{n\to\infty} X_n \wedge K] > \varepsilon$, whence the claim follows.

Problem 13 (AMM, Problem 10372(a)). Let $(X_i)_{i=1}^{\infty}$ be a sequence of nonnegative random variables such that $\sum_{n=1}^{\infty} \sqrt{M_n} < \infty$, $M_n = E[X_n]$. Show that there exists a convergent series $\sum_{n=1}^{\infty} a_n$ and a random variable $N$ such that $P(\{X_n \le a_n \text{ for } n \ge N\}) = 1$.

Solution. Set $a_n = \sqrt{M_n}$, $E_n = \{X_n > a_n\}$. Then, by the Markov inequality, $P(E_n) \le a_n$, hence $\sum_{n=1}^{\infty} P(E_n) < \infty$. Therefore by the first Borel-Cantelli Lemma, $P(\limsup_{n\to\infty} E_n) = 0$, which implies the desired result.

Problem 14 (Student Olympiad, Kiev 1983). Let $(A_n)_{n=1}^{\infty}$ be events on a probability space such that $\sum_{n=1}^{\infty} P(A_n) < \infty$. Put

$$B_m := \{\omega \mid \omega \in A_n \text{ for at least } m \text{ values of the index } n\}.$$

Show that $B_m$ is an event, and that

$$P(B_m) \le \frac{1}{m} \sum_{n=1}^{\infty} P(A_n), \qquad m \in \mathbb{N}.$$

Solution. Put $Z(\omega) := \sum_{n=1}^{\infty} I_{A_n}(\omega)$. Then $Z$ is a random variable and $B_m = \{Z \ge m\}$. Furthermore, from the Markov inequality, $m P(B_m) \le E[Z] = \sum_{n=1}^{\infty} P(A_n)$.

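Problem 15 (Student Olympiad, Kiev 1983). Let $\xi, \eta$ be i.i.d. random variables with $P(\xi \in [0, 1]) = 1$. Then for each $\varepsilon > 0$

$$P(|\xi - \eta| < \varepsilon) \ge \frac{1}{1 + \left[\frac{1}{\varepsilon}\right]},$$

where $[\cdot]$ denotes the integer part. This estimate is sharp.

Solution. [V. Shmarov] Put $k = \left[\frac{1}{\varepsilon}\right]$,

$$A_0 = [0, \varepsilon),\ A_1 = [\varepsilon, 2\varepsilon),\ \ldots,\ A_{k-1} = [(k-1)\varepsilon, k\varepsilon),\ A_k = [k\varepsilon, 1].$$

Then

$$P(|\xi - \eta| < \varepsilon) \ge \sum_{i=0}^{k} P(\xi, \eta \in A_i) = \sum_{i=0}^{k} P(\xi \in A_i)^2 \ge \frac{1}{k+1} \left( \sum_{i=0}^{k} P(\xi \in A_i) \right)^2 = \frac{1}{1+k},$$

where the last inequality follows by Jensen’s inequality. The equality is attained on the discrete uniform distribution on the set $\{0, \varepsilon, 2\varepsilon, \ldots, k\varepsilon\}$.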

Remark 4. Let $F$ denote the common cdf of $\xi$ and $\eta$. The probability in question can be written as $1 - 2\int_{\varepsilon}^{1} F(x - \varepsilon)\, dF(x)$. For the special case $\varepsilon = 1/2$, due to the monotonicity of the integrand, the optimal $F$ is constant on $[1/2, 1)$, with $F(1/2)$ solving $\max_{x \in [0,1]} x(1 - x)$. Thus, the $F$ achieving the optimum satisfies $F(x) = 1/2$, $x \in [1/2, 1)$, and is arbitrary otherwise. For any $\varepsilon > 0$ analogous reasoning implies that the optimal $F$ is piecewise constant on intervals $[i\varepsilon, (i+1)\varepsilon)$. Denoting by $x_i$ the ”jumps” of $F$, this leads to the optimization problem

$$\max_{\sum_{i=1}^{k} x_i = 1,\ x_i \ge 0} \; x_1 x_2 + (x_1 + x_2) x_3 + \cdots + (x_1 + \cdots + x_{k-1}) x_k,$$

whose solution is readily shown to be $x_i = 1/k$, $1 \le i \le k$.

Problem 16 (J. Karamata). Let $(a_n)_{n \ge 0}$ be a real-valued sequence such that $a_n \ge -M$, $M \ge 0$, and

$$\lim_{t \to 1-} (1 - t) \sum_{n=0}^{\infty} a_n t^n = s. \qquad (4)$$

Then

$$\lim_{t \to 1-} (1 - t) \sum_{n=0}^{\infty} a_n f(t^n) t^n = s \int_0^1 f(u)\, du$$

for every bounded, Riemann-integrable $f$.

Solution. For the case of a monomial, $f(t) \equiv t^p$, $p \in \mathbb{N}$, we have

$$\lim_{t \to 1-} (1 - t) \sum_{n=0}^{\infty} a_n f(t^n) t^n = \lim_{t \to 1-} (1 - t) \sum_{n=0}^{\infty} a_n t^{n(p+1)} = \lim_{t \to 1-} \frac{1 - t}{1 - t^{p+1}} (1 - t^{p+1}) \sum_{n=0}^{\infty} a_n (t^{p+1})^n = \frac{s}{p+1} = s \int_0^1 t^p\, dt.$$

Therefore, the claim holds for any polynomial, and, by the density argument, for any bounded, Riemann-integrable $f$.


Problem 17. Let $f : [0, \infty) \to \mathbb{R}$ be Riemann-integrable and such that $f(u) e^{\varepsilon u}$ is bounded for some $\varepsilon > 0$. Then

$$\lim_{h \to 0+} h \sum_{n=1}^{\infty} f(nh) = \int_0^{\infty} f(x)\, dx.$$

Solution. Put $a = 1/\varepsilon$, $g(t) \equiv \frac{f(-a \ln(t))}{t}$. According to Problem 16 we have

$$\lim_{t \to 1-} (1 - t) \sum_{n=0}^{\infty} g(t^n) t^n = \int_0^1 g(x)\, dx = \int_0^{\infty} f(ax)\, dx.$$

Thus,

$$\lim_{t \to 1-} (1 - t) \sum_{n=0}^{\infty} f(-na \ln(t)) = \int_0^{\infty} f(ax)\, dx,$$

whence the result follows by putting $h = -a \ln(t)$.
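The limit in Problem 17 can be observed numerically. In the sketch below the test function $f(x) = x e^{-x}$ (for which $f(u)e^{u/2}$ is bounded and $\int_0^\infty f = 1$), the helper name `riemann_sum`, and the truncation point `cutoff` are all assumptions chosen for illustration; the truncation is harmless here because of the exponential decay of $f$.

```python
import math


def f(x: float) -> float:
    """Test function with exponentially decaying tail; its integral over [0, inf) is 1."""
    return x * math.exp(-x)


def riemann_sum(func, h: float, cutoff: float = 80.0) -> float:
    """Compute h * sum_{n >= 1} func(n h), truncated at n h <= cutoff."""
    n_max = int(cutoff / h)
    return h * sum(func(n * h) for n in range(1, n_max + 1))


for h in (0.5, 0.1, 0.01):
    print(h, riemann_sum(f, h))  # approaches 1 as h decreases
```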

Remark 5. Problem 17 complements Problem II.30 in Pólya & Szegö (1978) by replacing the requirement of monotonicity of f with the stated boundedness condition.

Other conditions can be found in Szász & Todd (1951) and references therein.

Problem 18. Let $S$ be a separable metric space and let $\mathcal{P}(S)$ and $\bar{C}(S)$ denote the set of probability measures on $\mathcal{B}(S)$ and the set of all bounded continuous functions on $S$ respectively. A set $M \subset \bar{C}(S)$ is called separating for $\mathcal{P}(S)$ if whenever $P, Q \in \mathcal{P}(S)$ and

$$\int f\, dP = \int f\, dQ, \qquad \forall f \in M,$$

we have $P = Q$.

Let

$$M_0 = \{f : x \in \mathbb{R} \mapsto f(x) \equiv (x - a)^+,\ a \in \mathbb{R}\}, \qquad M_1 = \mathrm{span}(M_0) \cap \bar{C}(\mathbb{R}). \qquad (5)$$

Show that $M_1$ is separating.

Solution. Note that for every $a \in \mathbb{R}$ the indicator function of the interval $[a, \infty)$ is the pointwise limit of the uniformly bounded sequence $a_n(x) = n\left[(x - a + 1/n)^+ - (x - a)^+\right]$, $x \in \mathbb{R}$, from $M_1$. By the Dominated Convergence Theorem this implies that $M_1$ is separating.


Problem 19 (Ethier & Kurtz (1986), Problem 3.11.7). Let (S, r) be a separable

metric space, let X and Y be S-valued random variables defined on a probability

space (Ω, F , P ), and let G be a sub-σ-algebra of F . Suppose that M ⊂ C̄(S) is

separating and

E[f (X)|G ] = f (Y ) a.s.

for every f ∈ M . Then X = Y a.s.

Solution. The proof below proceeds from first principles. Alternatively one may

base the proof on Proposition 3.4.6 of Ethier & Kurtz (1986). Let σ(M ) denote the

σ-algebra generated by M , and for every Γ1 , Γ2 ∈ B(S) put

Q1 (Γ1 , Γ2 ) := P ({X ∈ Γ1 , Y ∈ Γ2 }),

Q2 (Γ1 , Γ2 ) := P ({Y ∈ Γ1 , Y ∈ Γ2 }).

Then for a fixed Γ2 ∈ σ(M ) the expressions µ1 (·) := Q1 (·, Γ2 ), µ2 (·) := Q2 (·, Γ2 ) define

measures on B(S), which by the initial hypothesis satisfy

$$\int f\, d\mu_1 = E[f(X) I_{\Gamma_2}(Y)] = E[f(Y) I_{\Gamma_2}(Y)] = \int f\, d\mu_2, \qquad \forall f \in M.$$

Since M is separating and µ1 and µ2 have the same total mass, we conclude

Q1 (Γ1 , Γ2 ) = Q2 (Γ1 , Γ2 ),

∀ Γ1 ∈ B(S), Γ2 ∈ σ(M ).

Next, for a fixed Γ1 ∈ B(S) put ν1 (·) := Q1 (Γ1 , ·), ν2 (·) := Q2 (Γ1 , ·), so that

$$\int f\, d\nu_1 = \int f\, d\nu_2, \qquad \forall f \in M. \qquad (6)$$

Furthermore, by initial hypothesis E[f (X)] = E[f (Y )], ∀f ∈ M , and thus, since M is

separating,

P ({X ∈ Γ}) = P ({Y ∈ Γ}), ∀ Γ ∈ B(S),

(7)

which in turn gives ν1 (S) = ν2 (S). Together with (6) and the fact that M is separating

this implies ν1 = ν2 , hence Q1 = Q2 .

For an arbitrary Γ ∈ B(S) we now have

P ({X ∈ Γ, Y ∈ Γ}) = P ({Y ∈ Γ}),

which combined with (7) gives

P ({X ∈ Γ}∆{Y ∈ Γ}) = 0.

Therefore, for a countable base $(B_i)_{i \in \mathbb{N}}$ for $S$ we can write

$$P(\{X \ne Y\}) \le \sum_{i \in \mathbb{N}} P(\{X \in B_i\} \Delta \{Y \in B_i\}) = 0,$$

and the result follows.

Problem 20 (Statistica Neerlandica, Problem 130). Suppose X is an integrable real-valued random variable on a probability space (Ω, F , P ), and let G be a sub-σ-algebra of F . If X and E[X|G ] have the same probability law, then

E[X|G ] = X a.s. (8)

If, in addition, G is P -complete, X is G -measurable.

Solution. Let M0 , M1 be defined in (5). Fix f ∈ M0 and put Y := E[X|G ]. Since

f is convex, Jensen’s inequality implies

f (Y ) = f (E[X|G ]) ≤ E[f (X)|G ].

(9)

On the other hand, by the uniqueness in law, E[f (X)] = E[f (Y )]. Thus, from (9) we

have E[f (X)|G ] = f (Y ), which in turn implies

E[φ(X)|G ] = φ(Y ),

∀φ ∈ M1 .

From Problem 19 we now get (8); hence, if G is P -complete, we conclude that X is G -measurable.

Problem 21 (Williams (1991), Exercise E9.2.). Suppose X, Y are integrable real-valued random variables on (Ω, F , P ) such that

E[X|Y ] = Y a.s. and E[Y |X] = X a.s..

Then X = Y a.s..

Solution. By Jensen’s inequality for every φ ∈ M0 we have

E[φ(Y )] = E[φ(E[X|Y ])] ≤ E[E[φ(X)|Y ]] = E[φ(X)].

By symmetry the opposite inequality also holds implying E[φ(X)] = E[φ(Y )], ∀φ ∈

M0 , hence E[φ(X)] = E[φ(Y )], ∀φ ∈ M1 . Since M1 is separating, we conclude that X

and Y have the same law, and the result follows from Problem 20.


Problem 22 (Kolmogorov Probability Olympiad 2006). Compute E[X|XY ] for independent N (0, 1) random variables X, Y .

Solution. E[X|XY ] = Φ(XY ) for a measurable mapping Φ (see footnote 1). Hence, with X̃ :=

−X, Ỹ := −Y we get E[X̃|X̃ Ỹ ] = Φ(X̃ Ỹ ) = Φ(XY ) = E[X|XY ]. On the other hand

E[X|XY ] = −E[X̃|X̃ Ỹ ], whence E[X|XY ] = 0.

Problem 23. Suppose X, Y are nonnegative random variables, and let m := min(X, Y )

and M := max(X, Y ). Show that

E[(m + M )(E[M |m] − E[m|M ])] ≥ 0.

Solution. Since $XY = mM$ we get $E[m E[M|m]] = E[M E[m|M]]$, which in turn gives $E[X E[M|m]] \ge E[Y E[m|M]]$ (because $X \ge m$ and $M \ge Y$). Flipping $X$ and $Y$ and adding up the two inequalities gives $E[(X + Y)(E[M|m] - E[m|M])] \ge 0$, whence the result follows as $M + m = X + Y$.

Problem 24. [V. Shmarov] Let F1 , F2 be two distributions with the same mean. Does there necessarily exist a probability space (Ω, F , P ), on which we have a random variable X and two sigma algebras G , H ⊂ F such that

E[X|G ] ∼ F1 and E[X|H ] ∼ F2 ?

Solution. Let m be the common mean of F1 and F2 , and let Ω = [0, 1] × [0, 1], with F the Borel sigma-algebra B([0, 1] × [0, 1]). Also let H and G be the sigma algebras Ω × A, A ∈ B([0, 1]), and B × Ω, B ∈ B([0, 1]), respectively, and put

X = F1−1 (x1 + m − 1)F2−1 (x2 + m − 1), (x1 , x2 ) ∈ Ω. It is straightforward to check that

the above construction satisfies the required conditions.

Problem 25. [А.А. Дороговцев] Give an example of independent random variables $X$ and $Y$, and a Borel-measurable $F : \mathbb{R}^2 \to \mathbb{R}$ such that 1) $X$ and $F(X, Y)$ are independent; 2) for every $y$ the function $F(\cdot, y)$ is non-constant.

Solution. Let $\Omega = \{0, 1\} \times \{0, 1\}$, and for $\omega = (\omega_1, \omega_2) \in \Omega$ put $P(\{\omega\}) = 1/4$, $X(\omega) := \omega_1$, and $Y(\omega) := \omega_2$. Let $F(x, y) := \delta_{x=y}(x, y)$ (equal to 1 if $x = y$ and 0 otherwise). Since $X$ and $Z := F(X, Y)$ are binary variables, $X^n = X$, $Z^n = Z$, $n \in \mathbb{N}$, so from the fact that $E[XZ] = E[X]E[Z]\ (= 1/4)$ it follows that $E[X^n Z^m] = E[X^n]E[Z^m]$, $n, m \in \mathbb{N}$, so $X$ and $Z$ are independent. Since $F(\cdot, y)$ is non-constant for every $y$, the construction is complete.
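Because the probability space has only four points, the claims of the solution can be verified by direct enumeration. The sketch below is illustrative; the helper names `joint`, `marginal_x`, and `marginal_z` are assumptions.

```python
from fractions import Fraction
from itertools import product

# Omega = {0,1} x {0,1} with uniform probability 1/4; X = omega_1, Y = omega_2.
omega = list(product((0, 1), repeat=2))
prob = Fraction(1, 4)


def F(x: int, y: int) -> int:
    """Indicator of x == y."""
    return 1 if x == y else 0


def joint(x_val: int, z_val: int) -> Fraction:
    """P(X = x_val, Z = z_val) with Z = F(X, Y)."""
    return sum((prob for (w1, w2) in omega if w1 == x_val and F(w1, w2) == z_val), Fraction(0))


def marginal_x(x_val: int) -> Fraction:
    return sum((prob for (w1, _) in omega if w1 == x_val), Fraction(0))


def marginal_z(z_val: int) -> Fraction:
    return sum((prob for (w1, w2) in omega if F(w1, w2) == z_val), Fraction(0))


independent = all(
    joint(x, z) == marginal_x(x) * marginal_z(z) for x in (0, 1) for z in (0, 1)
)
non_constant = all(F(0, y) != F(1, y) for y in (0, 1))
print(independent, non_constant)
```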

Footnote 1: Cf. Section 9.6, ”Agreement with traditional usage”, of Williams (1991).

Problem 26 (Counterexample related to Hunt’s lemma). Suppose $(X_n)_{n=1}^{\infty}$ is a sequence of random variables such that $X := \lim_{n\to\infty} X_n$ exists a.s. and for some integrable random variable $Y$

$$|X_n| \le Y \ \text{a.s.}, \qquad n = 1, 2, \ldots. \qquad (10)$$

Then Hunt’s Lemma (e.g. Williams (1991), Exercise E14.1.) states that for any filtration $\{\mathcal{F}_n\}$, $\mathcal{F}_\infty := \sigma\left(\cup_n \mathcal{F}_n\right)$, we have

$$\lim_{n\to\infty} E[X_n | \mathcal{F}_n] = E[X | \mathcal{F}_\infty].$$

Show that the condition of uniform boundedness (10) cannot be replaced by uniform integrability of $(X_n)_{n=1}^{\infty}$. The counterexample constructed should have a nontrivial (i.e. strictly increasing) filtration.

Solution. Consider a circle of perimeter 1, and let $A_0$ be an arbitrary point on the circle. Let $A_1$ be the point at arc distance $1/2$ from $A_0$ in the counter-clockwise direction, then move a further $1/3$ to get $A_2$, and so on. Let the σ-algebra $\mathcal{F}_n$ be defined as the σ-algebra generated by the first $n$ segments $[A_{i-1}, A_i)$. The sequence of sigma algebras thus defined is clearly a filtration, $\mathcal{F}_n \subset \mathcal{F}_{n+1}$, $n \in \mathbb{N}$.

To define the random variable $X_n$, fix $n \in \mathbb{N}$ and observe that the interval $[A_{n-1}, A_n) \in \mathcal{F}_n$ may contain more boundary points $A_{i_1}, A_{i_2}, \ldots, A_{i_m}$, $i_j < n - 1$, $j = 1, \ldots, m$, which we denote $B_1, \ldots, B_m$, so that $[A_{n-1}, A_n)$ is the union of intervals $[A_{n-1}, B_1)$, $[B_1, B_2)$, $\ldots$, $[B_m, A_n)$. For convenience, put $B_0 := A_{n-1}$ and $B_{m+1} := A_n$. For each interval $[B_i, B_{i+1})$, let $C_i$ be the point on this interval such that $|B_i C_i| = |B_i B_{i+1}|/n$, where $|\cdot|$ denotes the arc length between the two points. We define the random variable $X_n$ to be $n$ on all intervals $[B_i, C_i)$ and $0$ on the rest of the circle.

By construction, the interval $[A_{n-1}, A_n)$ has length $\frac{1}{n+1}$, so the probability of the set on which $X_n \ne 0$ is $\frac{1}{n(n+1)}$. Since the series $\sum_{n=1}^{\infty} \frac{1}{n(n+1)}$ converges, by the Borel-Cantelli lemma $(X_n)_{n=1}^{\infty}$ converges a.s. to $X \equiv 0$. Also, as

$$E[X_n I_{\{X_n \ge m\}}] = \begin{cases} 0, & n < m, \\ \frac{1}{n+1}, & n \ge m, \end{cases} \qquad n, m = 1, 2, \ldots,$$

$(X_n)_{n=1}^{\infty}$ is uniformly integrable. (Note that (10) cannot hold for any integrable $Y$.)

On the other hand, the conditional expectation $E[X_n | \mathcal{F}_n]$ on each atomic interval $[B_i, B_{i+1})$ is 1, hence $E[X_n | \mathcal{F}_n] = I_{[A_{n-1}, A_n)}$. Since $\liminf_{n\to\infty} I_{[A_{n-1}, A_n)} = 0$, $\limsup_{n\to\infty} I_{[A_{n-1}, A_n)} = 1$, the sequence $E[X_n | \mathcal{F}_n]$ does not converge pointwise, while $E[X | \mathcal{F}_\infty] = 0$ a.s.


Remark 6. In Wei-an (1980) a related counterexample is given for the case where the

filtration {Fn } is a constant and equal to F∞ .

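Problem 27 (Williams (1991), Exercise E10.2.). Let $T$ be a stopping time on a filtered probability space $(\Omega, \mathcal{F}, \mathcal{F}_n, P)$ and suppose that there exist $N \in \mathbb{N}$ and $\varepsilon > 0$ such that

$$P(T \le n + N \mid \mathcal{F}_n) > \varepsilon, \qquad \forall n \in \mathbb{N}.$$

Show that $E[T] < \infty$.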

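Solution. We have

$$E[I_{\{T > n+N\}} \mid \mathcal{F}_n] < 1 - \varepsilon,$$

hence, since $\{T > n + N\} \subset \{T > n\} \in \mathcal{F}_n$,

$$E[I_{\{T > n+N\}} \mid \mathcal{F}_n] \le (1 - \varepsilon) I_{\{T > n\}}.$$

Putting $n = kN$ and taking expectation, we obtain

$$P(T > (k+1)N) \le (1 - \varepsilon) P(T > kN),$$

giving $P(T > kN) \le (1 - \varepsilon)^k$. Summing over $k$ yields $E[T/N] < \infty$.

Problem 28. Let $\varphi$ be the Laplace transform of a nonnegative random variable $X$, $\varphi(\lambda) = E[\exp(-\lambda X)]$, $\lambda \ge 0$. Then for every $x, y > 0$

$$|\varphi(x) - \varphi(y)| \le \frac{1}{e} |\ln(y/x)|.$$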

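Solution. Since for $x \ge 0$ we have $x \exp(-x) \le e^{-1}$, using the fact that $X$ is nonnegative we obtain

$$\left| \frac{d\varphi}{d\xi}(\xi) \right| = E\left[ X \exp(-\xi X) \right] \le \frac{1}{e\xi}, \qquad \xi > 0.$$

Thus, for every $0 < x < y$

$$|\varphi(x) - \varphi(y)| \le \int_x^y \left| \frac{d\varphi}{d\xi}(\xi) \right| d\xi \le \frac{\ln(y/x)}{e}.$$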

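Problem 29 (Shenton’s formula, Shenton (1954)). Show that

$$e^{\frac{x^2}{2}} \int_x^{\infty} e^{-\frac{t^2}{2}}\, dt = \int_0^{\infty} e^{-xt} e^{-\frac{t^2}{2}}\, dt, \qquad x \in \mathbb{R}, \qquad (11)$$

and conclude

$$\frac{1 - N(x)}{n(x)} < \frac{1}{x}, \qquad x > 0.$$

Solution. Substituting $u = t + x$ in the second integral in (11) yields the desired equality. Thus

$$\frac{1 - N(x)}{n(x)} = \int_0^{\infty} e^{-xt} e^{-\frac{t^2}{2}}\, dt < \int_0^{\infty} e^{-xt}\, dt = \frac{1}{x}, \qquad x > 0.$$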
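Both Shenton's identity and the resulting bound on the ratio $(1 - N(x))/n(x)$ can be checked numerically. This is a minimal sketch in which $N$ and $n$ are taken to be the standard normal cdf and density; the helper names, the truncation point `upper`, and the midpoint-rule step count are assumptions.

```python
import math


def mills_ratio(x: float) -> float:
    """(1 - N(x)) / n(x) for the standard normal cdf N and density n."""
    tail = 0.5 * math.erfc(x / math.sqrt(2.0))
    density = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return tail / density


def shenton_integral(x: float, upper: float = 40.0, steps: int = 100_000) -> float:
    """Midpoint rule for int_0^inf exp(-x t) exp(-t^2/2) dt, truncated at `upper`."""
    h = upper / steps
    return h * sum(
        math.exp(-x * t - 0.5 * t * t) for t in ((k + 0.5) * h for k in range(steps))
    )


for x in (0.5, 1.0, 2.0):
    print(x, mills_ratio(x), shenton_integral(x), 1.0 / x)
```

For each positive $x$ the first two printed values should agree, and both should lie below $1/x$.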

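Problem 30 (Modification of Problem X.129 in Адамов А. А. et al. (1912)). Let

$$F(x) := \left( \int_x^{\infty} e^{-t^2}\, dt \right)^2, \qquad G(x) := \int_1^{\infty} \frac{e^{-x^2(t^2+1)}}{t^2 + 1}\, dt, \qquad x \ge 0.$$

Show that $F(x) = G(x)$, $x \ge 0$, and use that to compute $\int_0^{\infty} e^{-t^2}\, dt$.

Solution. Using the Leibniz rule we obtain

$$F'(x) = G'(x) = -2 e^{-x^2} \int_x^{\infty} e^{-t^2}\, dt, \qquad x > 0,$$

hence, by continuity at $0$, for some $C \in \mathbb{R}$

$$F(x) = G(x) + C, \qquad x \ge 0.$$

Since $\lim_{x\to\infty} F(x) = 0$ and

$$G(x) \le e^{-x^2} \int_1^{\infty} \frac{dt}{t^2 + 1} \to 0 \ \text{as} \ x \to \infty,$$

we get $C = 0$. Thus $\int_0^{\infty} e^{-t^2}\, dt = \sqrt{F(0)} = \sqrt{G(0)} = \frac{\sqrt{\pi}}{2}$.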

Problem 31 (Mitrinović (1962), Problem V.5.94). For an integrable function $f$ put $g(x) := f(x - 1/x)$, $x \ne 0$, $g(0) := 0$. Show that

$$\int_{\mathbb{R}} f(x)\, dx = \int_{\mathbb{R}} g(x)\, dx.$$

Solution. Denote by $I$ the integral on the RHS and put $x = \cot u$. Then

$$I = \int_0^{\pi} \frac{f(2 \cot 2u)}{\sin^2 u}\, du = \frac{1}{2} \int_0^{2\pi} \frac{f(2 \cot u)}{\sin^2(u/2)}\, du.$$

Splitting the interval of integration and making the substitution $t = u - \pi$ in the second integral yields

$$I = \frac{1}{2} \int_0^{\pi} \frac{f(2 \cot u)}{\sin^2(u/2)}\, du + \frac{1}{2} \int_0^{\pi} \frac{f(2 \cot t)}{\cos^2(t/2)}\, dt = 2 \int_0^{\pi} \frac{f(2 \cot u)}{\sin^2 u}\, du,$$

which, after substituting $x = 2 \cot u$, gives $I = \int_{\mathbb{R}} f(x)\, dx$.
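The identity can be tested numerically on a concrete integrable function. The sketch below uses $f(x) = e^{-x^2}$, whose integral over $\mathbb{R}$ is $\sqrt{\pi}$; this choice, the helper name `midpoint`, and the truncation limits are assumptions made for illustration.

```python
import math


def midpoint(func, a: float, b: float, steps: int) -> float:
    """Composite midpoint rule on [a, b]; the nodes never hit the endpoints."""
    h = (b - a) / steps
    return h * sum(func(a + (k + 0.5) * h) for k in range(steps))


def f(x: float) -> float:
    return math.exp(-x * x)


def g(x: float) -> float:
    # g(x) = f(x - 1/x) for x != 0
    return f(x - 1.0 / x)


lhs = midpoint(f, -10.0, 10.0, 100_000)
# Integrate g separately on each side of 0 so that x = 0 is never evaluated.
rhs = midpoint(g, -12.0, 0.0, 100_000) + midpoint(g, 0.0, 12.0, 100_000)
print(lhs, rhs, math.sqrt(math.pi))
```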


Remark 7. This problem was also featured at 1976 and 1989 Student Olympiads in

USSR. The stated reference provides a different solution.

Problem 32 (J. Liouville (Kudryavtsev et al. (2003), Problem 2.220)). Show that for every continuous $f$ we have

$$\int_0^{\pi/2} f(\sin 2x) \cos x\, dx = \int_0^{\pi/2} f(\cos^2 x) \cos x\, dx.$$

Solution. Using

$$\int_0^{\frac{\pi}{2}} \sin^m x \cos^n x\, dx = \begin{cases} \frac{(m-1)!!(n-1)!!}{(m+n)!!} \frac{\pi}{2}, & n \text{ and } m \text{ are even}, \\ \frac{(m-1)!!(n-1)!!}{(m+n)!!}, & \text{else}, \end{cases}$$

we can prove the identity for the polynomials, whence the result follows by the density argument.

Remark 8. In Besge (1853) an alternative solution was given using the substitution

sin 2x = cos2 u. According to Lützen (2012), M. Besge was the pseudonym used by J.

Liouville.

Problem 33 (Wolstenholme (1878)). Show that for every continuous $f$ we have

$$\int_0^{\pi/2} f(\sin 2x) \cos x\, dx = \sqrt{2} \int_0^{\pi/4} f(\cos 2x) \cos x\, dx.$$

Solution. Split the integral into the integrals over $[0, \pi/4]$ and $[\pi/4, \pi/2]$, and make substitutions $t = \pi/4 - x$ and $t = x - \pi/4$ respectively.

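Problem 34 (AMM, Problem 11961). Evaluate

$$\int_0^{\pi/2} \frac{\sin x}{1 + \sqrt{\sin(2x)}}\, dx.$$

Solution. By the substitution $x \to \pi/2 - x$ the integral equals $\int_0^{\pi/2} \frac{\cos x}{1 + \sqrt{\sin(2x)}}\, dx$. A direct application of Problem 32 with $f(u) = \frac{1}{1 + \sqrt{u}}$ then yields $\int_0^{\pi/2} \frac{\cos x}{1 + \cos x}\, dx = \pi/2 - 1$.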
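The value $\pi/2 - 1$ can be confirmed numerically, reading the integrand as $\sin x / (1 + \sqrt{\sin 2x})$ and comparing it with the form $\cos x / (1 + \cos x)$ produced by Problem 32 with $f(u) = 1/(1 + \sqrt{u})$. The helper name `midpoint` and the step count are assumptions of this sketch.

```python
import math


def midpoint(func, a: float, b: float, steps: int) -> float:
    """Composite midpoint rule on [a, b]."""
    h = (b - a) / steps
    return h * sum(func(a + (k + 0.5) * h) for k in range(steps))


half_pi = math.pi / 2

# Integrand of the problem.
original = midpoint(
    lambda x: math.sin(x) / (1.0 + math.sqrt(math.sin(2.0 * x))), 0.0, half_pi, 200_000
)
# Integrand after applying Liouville's identity.
reduced = midpoint(lambda x: math.cos(x) / (1.0 + math.cos(x)), 0.0, half_pi, 200_000)

print(original, reduced, half_pi - 1.0)
```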


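Problem 35 (Serret (1844), also Гюнтер Н.М. & Кузьмин Р.О. (2003), pg. 401). Evaluate

$$\int_0^1 \frac{\ln(1 + x)}{x^2 + 1}\, dx.$$

Solution. Denote by $I$ the integral in question, and let $x = \tan \phi$. Then

$$I = \int_0^{\pi/4} \ln(\cos \phi + \sin \phi)\, d\phi - \int_0^{\pi/4} \ln(\cos \phi)\, d\phi.$$

Substituting $\varphi = \frac{\pi}{4} - \phi$ in the second integral we get

$$\int_0^{\pi/4} \ln(\cos \phi)\, d\phi = \int_0^{\pi/4} \ln\left( (\cos \varphi + \sin \varphi) \frac{1}{\sqrt{2}} \right) d\varphi = \int_0^{\pi/4} \ln(\cos \varphi + \sin \varphi)\, d\varphi - \frac{\pi \ln 2}{8},$$

whence $I = \frac{\pi \ln 2}{8}$.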

Problem 36 (AMM, Problem 11966). Prove that

$$\int_0^1 \frac{x \ln(1 + x)}{x^2 + 1}\, dx = \frac{\pi^2}{96} + \frac{(\ln 2)^2}{8}.$$

Solution. Put

$$F(a) := \int_0^1 f(x, a)\, dx, \qquad f(x, a) := \frac{x \ln(1 + ax)}{x^2 + 1}, \qquad x, a \in [0, 1].$$

Since $f$ and $\frac{\partial f}{\partial a}(x, a) = \frac{x^2}{(1 + ax)(x^2 + 1)}$ are continuous, hence also uniformly bounded on $[0, 1] \times [0, 1]$, $F$ is differentiable on $[0, 1]$, and, by the Leibniz rule, we have

$$F'(a) = \int_0^1 \frac{\partial f}{\partial a}(x, a)\, dx = \frac{\ln(1 + a)}{a} - \int_0^1 \frac{dx}{(1 + ax)(x^2 + 1)}. \qquad (12)$$

Since

$$\int_0^1 \frac{dx}{(1 + ax)(x^2 + 1)} = \frac{1}{a^2 + 1} \left[ \frac{\pi}{4} - \frac{a}{2} \ln 2 + a \ln(1 + a) \right],$$

integrating (12) over $[0, 1]$ gives

$$F(1) = \int_0^1 \frac{\ln(1 + a)}{a}\, da - \frac{\pi^2}{16} + \frac{(\ln 2)^2}{4} - F(1).$$

Since (see footnote 2) $\int_0^1 \frac{\ln(1 + a)}{a}\, da = \sum_{n=1}^{\infty} \frac{(-1)^{n-1}}{n^2} = \frac{\pi^2}{12}$, solving for $F(1)$ in the above equation gives the desired result.

Footnote 2: E.g. Exercise 4655 in Гюнтер Н.М. & Кузьмин Р.О. (2003).

Problem 37 (The density of expected occupation time of the Brownian bridge has plateau, Chi et al. (2015)). Show that the function

$$F(x) := \int_0^1 \frac{1}{\sqrt{t(1 - t)}} \exp\left( -\frac{(x - t)^2}{2t(1 - t)} \right) dt$$

is constant for $x \in [0, 1]$.

Solution. Put $t = \cos^2 u$. Then

$$F(x) = 2 \int_0^{\frac{\pi}{2}} \exp\left( -\frac{(\cos^2 u - x)^2}{2 \sin^2 u \cos^2 u} \right) du = \int_0^{\pi} \exp\left( -\frac{(\cos u - 2x + 1)^2}{2 \sin^2 u} \right) du,$$

hence

$$F(x) = F(1 - x),\ x \in [0, 1], \quad \text{and} \quad F(1/2) = F(1) = F(0). \qquad (13)$$

On the other hand, from the definition of the Hermite polynomials $\exp(xt - t^2/2) = \sum_{n=0}^{\infty} He_n(x) \frac{t^n}{n!}$ we obtain (taking each term to be $0$ at $u \in \{0, \pi\}$, where $\cot u = \pm\infty$)

$$\exp\left( -\frac{(\cos u - x)^2}{2 \sin^2 u} \right) = e^{-\frac{\cot^2 u}{2}} \sum_{n=0}^{\infty} He_n(\cot u) \left( \frac{x}{\sin u} \right)^n \frac{1}{n!}, \qquad x \in \mathbb{R},\ u \in [0, \pi].$$

Integrating both sides gives

$$F\left( \frac{x + 1}{2} \right) = \sum_{n=0}^{\infty} \frac{a_n x^n}{n!},\ |x| < 1, \qquad a_n := \int_0^{\pi} e^{-\frac{\cot^2 u}{2}} He_n(\cot u) \frac{1}{\sin^n u}\, du, \qquad (14)$$

where interchanging the order of integration and summation for $|x| < 1$ is justified using the inequalities $\exp(-x^2/4) |He_n(x)| \le \sqrt{n!}$, $\exp(-x^2/4) |x^n| \le (2n)^{\frac{n}{2}} e^{-\frac{n}{2}} \sim \sqrt{2^n n!}$, $x \in \mathbb{R}$. Since $He_{2k+1}(-x) = -He_{2k+1}(x)$, $k = 0, 1, \ldots$, it follows that the odd terms in (14) are zero. Next, with $t = \cot u$ we have

$$a_{2k} = \int_{-\infty}^{\infty} (t^2 + 1)^{k-1} e^{-\frac{t^2}{2}} He_{2k}(t)\, dt, \qquad k = 0, 1, 2, \ldots$$

Since for $k > 0$ the polynomial $(t^2 + 1)^{k-1}$ belongs to the linear span of $He_0, He_1, \cdots, He_{2k-2}$, from the orthogonality of the Hermite polynomials it follows that $a_{2k} = 0$ for $k > 0$. Therefore $F$ is constant on $(0, 1)$, which together with (13) gives the desired result.
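The plateau can be observed numerically from the representation $F(x) = \int_0^{\pi} \exp\left(-\frac{(\cos u - 2x + 1)^2}{2\sin^2 u}\right) du$. In the sketch below the helper name `occupation_density` and the step count are assumptions, and the reference value uses the standard Gaussian integral $\int_{-\infty}^{\infty} \frac{e^{-t^2/2}}{1 + t^2}\, dt = \pi \sqrt{e}\, \mathrm{erfc}(1/\sqrt{2})$, which is not taken from the text and is included only as a cross-check.

```python
import math


def occupation_density(x: float, steps: int = 20_000) -> float:
    """Midpoint rule for F(x) in the u-parametrisation (t = cos^2 u, then u -> 2u)."""
    h = math.pi / steps
    total = 0.0
    for k in range(steps):
        u = (k + 0.5) * h  # midpoints avoid sin(u) = 0
        total += math.exp(-((math.cos(u) - 2.0 * x + 1.0) ** 2) / (2.0 * math.sin(u) ** 2))
    return h * total


# Reference constant: integral of exp(-t^2/2) / (1 + t^2) over the real line.
reference = math.pi * math.sqrt(math.e) * math.erfc(1.0 / math.sqrt(2.0))

for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(x, occupation_density(x), reference)
```

The printed values of $F$ should coincide across $[0, 1]$ up to quadrature error.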


Remark 9. We have shown that $F(x) = \int_{-\infty}^{\infty} (t^2 + 1)^{-1} e^{-\frac{t^2}{2}} He_0(t)\, dt$ for $x \in [0, 1]$, which can be written as

$$\frac{F(x)}{\sqrt{2\pi}} = E\left[ \frac{1}{1 + \xi^2} \right], \qquad \xi \sim N(0, 1),\ x \in [0, 1].$$

This is the form given in Proposition 1 of Chi et al. (2015).

Problem 38 (A generalization of the “Problem of the Nile”, SIAM Review, Problem 89-6). Suppose that $P_\theta$, $\theta \in [0, 1)$, is a family of probability measures on $(\mathbb{R}^2, \mathcal{B}(\mathbb{R}^2))$ such that (i) $P_0$ is a product measure, (ii) $P_\theta$ is absolutely continuous wrt the Lebesgue measure for every $\theta \in [0, 1)$, (iii) $\lim_{\theta \to 1-} P_\theta\left([a, b) \times [a, b)\right) = P_0\left([a, b) \times \mathbb{R}\right)$. Then if for some bounded $A \in \mathcal{B}(\mathbb{R}^2)$ the value of $P_\theta(A)$ does not depend on $\theta \in [0, 1)$, the set $A$ is of Lebesgue measure zero.

Solution. Fix a rectangle $[a, b) \times [c, d) \in \mathcal{B}(\mathbb{R}^2)$ and let $[a_i, b_i) \times [a_i, b_i)$, $i \in \mathbb{N}$, be a collection of pairwise disjoint squares, such that $[a, b) \times [c, d) = \cup_i [a_i, b_i) \times [a_i, b_i)$. In that case, from (i) we have $P_0\left(\cup_i [a_i, b_i) \times [a_i, b_i)\right) = \sum_i P_0\left([a_i, b_i) \times \mathbb{R}\right)^2$, which by (iii) and the fact that $P_\theta\left(\cup_i [a_i, b_i) \times [a_i, b_i)\right)$ is constant for $\theta \in [0, 1)$, implies $\sum_i P_0\left([a_i, b_i) \times \mathbb{R}\right)^2 = \sum_i P_0\left([a_i, b_i) \times \mathbb{R}\right)$. So each $P_0\left([a_i, b_i) \times \mathbb{R}\right)$ is either zero or one, the latter being precluded by the boundedness of the intervals considered. Thus $P_0\left([a_i, b_i) \times \mathbb{R}\right) = 0$, $i \in \mathbb{N}$, so the result holds for all rectangles in $\mathcal{B}(\mathbb{R}^2)$. Finally, the collection of sets $\{A \in \mathcal{B}(\mathbb{R}^2) : A \text{ is infinite or } A \text{ is a rectangle}\}$ is an algebra such that the smallest monotone class containing it, $\{A \in \mathcal{B}(\mathbb{R}^2) : A \text{ is infinite or the claim holds for } A\}$, is the whole $\mathcal{B}(\mathbb{R}^2)$, giving the desired result.

Remark 10. In the source above, the concrete form of $P_\theta$ was given as the two-dimensional Gaussian density

$$P_\theta(A) := \frac{1}{2\pi \sqrt{1 - \theta^2}} \int_A \exp\left( \frac{2\theta xy - x^2 - y^2}{2(1 - \theta^2)} \right) dx\, dy, \qquad A \in \mathcal{B}(\mathbb{R}^2),$$

where the problem was attributed to Fisher (1936).

Problem 39 (AMM, Problem 11522). Let $E$ be the set of all real 4-tuples $(a, b, c, d)$ such that if $x, y \in \mathbb{R}$, then $(ax + by)^2 + (cx + dy)^2 \le x^2 + y^2$. Find the volume of $E$ in $\mathbb{R}^4$.

Solution. Introduce the polar coordinates

x = ρ cos φ, y = ρ sin φ

a = ρ1 cos φ1 , b = ρ1 sin φ1

c = ρ2 cos φ2 , d = ρ2 sin φ2 ,

21

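where the angles are taken in $[0, 2\pi)$, $\rho, \rho_1, \rho_2 \ge 0$. Then we have

$$E = \{(\rho_1, \rho_2, \phi_1, \phi_2) : \Phi(\rho_1, \rho_2, \phi, \phi_1, \phi_2) \le 1,\ \forall \phi \in [0, 2\pi)\},$$

where

$$\Phi(\rho_1, \rho_2, \phi, \phi_1, \phi_2) := \rho_1^2 (\cos \phi \cos \phi_1 + \sin \phi \sin \phi_1)^2 + \rho_2^2 (\cos \phi \cos \phi_2 + \sin \phi \sin \phi_2)^2.$$

After elementary transformations we see that $(\rho_1, \rho_2, \phi_1, \phi_2) \in E$ iff for every $\phi \in [0, 2\pi)$

$$\sqrt{(\rho_1^2 \cos(2\phi_1) + \rho_2^2 \cos(2\phi_2))^2 + (\rho_1^2 \sin(2\phi_1) + \rho_2^2 \sin(2\phi_2))^2}\, \sin(2\phi + \theta) + \rho_1^2 + \rho_2^2 \le 2,$$

where

$$\sin \theta = \frac{\rho_1^2 \cos(2\phi_1) + \rho_2^2 \cos(2\phi_2)}{\sqrt{(\rho_1^2 \cos(2\phi_1) + \rho_2^2 \cos(2\phi_2))^2 + (\rho_1^2 \sin(2\phi_1) + \rho_2^2 \sin(2\phi_2))^2}},$$

hence $(\rho_1, \rho_2, \phi_1, \phi_2) \in E$ iff

$$\sqrt{(\rho_1^2 \cos(2\phi_1) + \rho_2^2 \cos(2\phi_2))^2 + (\rho_1^2 \sin(2\phi_1) + \rho_2^2 \sin(2\phi_2))^2} + \rho_1^2 + \rho_2^2 \le 2,$$

so

$$E = \left\{ (\rho_1, \rho_2, \phi_1, \phi_2) : \sqrt{\rho_1^4 + \rho_2^4 + 2\rho_1^2 \rho_2^2 \cos(2(\phi_1 - \phi_2))} + \rho_1^2 + \rho_2^2 \le 2,\ \phi_1, \phi_2 \in [0, 2\pi) \right\}.$$

The integral in question can now be written as

$$I = \int_0^{2\pi} \int_0^{2\pi} \Psi(\phi_1 - \phi_2)\, d\phi_1\, d\phi_2,$$

where

$$\Psi(z) := \iint_{\left\{ (\rho_1, \rho_2)\, :\, \sqrt{\rho_1^4 + \rho_2^4 + 2\rho_1^2 \rho_2^2 \cos(2z)} + \rho_1^2 + \rho_2^2 \le 2 \right\}} \rho_1 \rho_2\, d\rho_1\, d\rho_2.$$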

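Let now $u = \phi_1 + \phi_2$, $v = \phi_1 - \phi_2$. Then the square $[0, 2\pi]^2$ maps into the square with vertices at $(0, 0)$, $(2\pi, \pm 2\pi)$, and $(4\pi, 0)$. The Jacobian of the mapping is $\frac{1}{2}$, so since $\Psi$ is even we have

$$\begin{aligned}
I &= 2 \cdot \frac{1}{2} \int_0^{2\pi} \int_0^{u} \Psi(v)\, dv\, du + 2 \cdot \frac{1}{2} \int_{2\pi}^{4\pi} \int_0^{4\pi - u} \Psi(v)\, dv\, du \\
&= \int_0^{2\pi} \int_0^{u} \Psi(v)\, dv\, du + \int_0^{2\pi} \int_0^{2\pi - u} \Psi(v)\, dv\, du \\
&= \int_0^{2\pi} \int_0^{u} \Psi(v)\, dv\, du + \int_0^{2\pi} \int_u^{2\pi} \Psi(2\pi - v)\, dv\, du \\
&= \int_0^{2\pi} \int_0^{u} \Psi(v)\, dv\, du + \int_0^{2\pi} \int_u^{2\pi} \Psi(v)\, dv\, du \qquad (15) \\
&= \int_0^{2\pi} \int_0^{2\pi} \Psi(v)\, dv\, du = 2\pi \int_0^{2\pi} \Psi(v)\, dv,
\end{aligned}$$

where in (15) we used $\Psi(2\pi - v) = \Psi(v)$.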

Put $x = \rho_1^2$, $y = \rho_2^2$. Then we have

\[
I = \frac{\pi}{2}\int_0^{2\pi}\iint_{\hat E_v} dx\,dy\,dv,
\]

where $\hat E_v := \{(x, y) : x, y \ge 0,\ \sqrt{x^2 + y^2 + 2\cos(2v)xy} + x + y \le 2\}$. Since for $0 \le y \le x$ we have $2x = |x - y| + x + y \le \sqrt{x^2 + y^2 + 2\cos(2v)xy} + x + y \le 2$, we conclude that $x, y \in [0, 1]$, and then rewrite $\hat E_v$ as

\[
\hat E_v = \left\{(x, y) : 0 \le x \le 1,\ 0 \le y \le \frac{2(1 - x)}{2 - (1 - \cos(2v))x}\right\}.
\]

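We now have

\[
I = \pi\int_0^1\int_0^{2\pi} \frac{1 - x}{2 - (1 - \cos(2v))x}\,dv\,dx = 2\pi\int_0^1\int_0^{\pi} \frac{1 - x}{2 - (1 - \cos(2v))x}\,dv\,dx.
\]

It is elementary to check that

\[
\int_0^\pi \frac{d\varphi}{\alpha\cos\varphi + 1} = \frac{\pi}{\sqrt{1 - \alpha^2}}, \quad |\alpha| < 1,
\]

so we finally obtain

\[
I = \pi^2\int_0^1 \sqrt{1 - x}\,dx = \frac{2}{3}\pi^2.
\]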
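The value $\frac23\pi^2 \approx 6.58$ can be sanity-checked numerically. The sketch below is not part of the original solution: it assumes the equivalent description of E as the set of 2×2 matrices with largest singular value at most 1, and the function names and sample size are illustrative choices.

```python
import math
import random

def in_E(a, b, c, d):
    """True if (ax+by)^2 + (cx+dy)^2 <= x^2 + y^2 for all real x, y.

    Assumption: this is equivalent to the largest eigenvalue of M^T M
    being <= 1 for M = [[a, b], [c, d]].
    """
    s = a * a + b * b + c * c + d * d        # trace of M^T M
    det = a * d - b * c                      # det(M^T M) = det^2
    disc = max(s * s - 4.0 * det * det, 0.0)
    largest_eig = 0.5 * (s + math.sqrt(disc))
    return largest_eig <= 1.0

def estimate_volume(n_samples=200_000, seed=0):
    """Monte Carlo estimate of vol(E), sampling the cube [-1, 1]^4 that contains E."""
    rng = random.Random(seed)
    hits = sum(
        in_E(*(rng.uniform(-1.0, 1.0) for _ in range(4)))
        for _ in range(n_samples)
    )
    return 16.0 * hits / n_samples           # the cube has volume 2^4 = 16

if __name__ == "__main__":
    print(estimate_volume(), 2.0 * math.pi ** 2 / 3.0)
```

With this many samples the estimate should agree with the closed form to roughly two decimal places.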

2

Stochastic Analysis

Problem 40. Let {Xt , t ∈ [0, ∞)} be a stochastic process on a measurable space

(Ω, F ) with state space a separable metric space E, and let for some t ∈ [0, ∞) Z

be an FtX+ -measurable random variable taking values in a Polish metric space (S, r).

Then there exists an E [0,∞) -measurable function Ψ : E [0,∞) → S such that for every

s > t we have Z = Ψ(X(· ∧ s)).

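Solution. Since $Z$ is $\mathcal{F}^X_{t+\frac1n}$-measurable for every $n \in \mathbb{N}$, we have that there exists a sequence of $\mathcal{E}^{\mathbb{N}}$-measurable functions $\Phi_n : E^{\mathbb{N}} \to S$ so that

\[
Z = \Phi_n(X_{t^n_1}, X_{t^n_2}, \ldots)
\]

for some sequence $(t^n_i)_{i=1}^\infty$, $t^n_i \in [0, t + \frac1n]$, $\forall i \in \mathbb{N}$.

Let us define $\Psi_n : E^{[0,\infty)} \to S$ as $\Psi_n := \Phi_n \circ \pi_{t^n}$, where, as usual, $\pi_{t^n} : E^{[0,\infty)} \to E^{\mathbb{N}}$ is the projection mapping $e \mapsto (e(t^n_1), e(t^n_2), \ldots)$. From the definition of $\Psi_n$ we immediately have that $\Psi_n$ is $\mathcal{E}^{[0,\infty)}$-measurable and

\[
Z = \Psi_n(X) = \Psi_n\left(X\left(\cdot \wedge \left(t + \tfrac1n\right)\right)\right).
\]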

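Since all $\Psi_n$'s are $\mathcal{E}^{[0,\infty)}$-measurable, the set $A := \{e \in E^{[0,\infty)} : \lim \Psi_n(e) \text{ exists}\}$ belongs to $\mathcal{E}^{[0,\infty)}$ (see footnote 3), and thus for some fixed $a \in S$ the function

\[
\Psi(e) := \begin{cases} \lim \Psi_n(e) & \text{for } e \in A, \\ a & \text{otherwise} \end{cases}
\]

is $\mathcal{E}^{[0,\infty)}$-measurable. Also, for every $s > t$ and every $n \in \mathbb{N}$ so that $\frac1n < s - t$ we have $Z = \Psi_n(X(\cdot \wedge s))$, which in turn implies that $Z = \Psi(X(\cdot \wedge s))$.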

Problem 41. Let {Ft } be a filtration in the measurable space (Ω, F ), and let T and

S be {Ft }-stopping times. Then

FS∨T = π{FS , FT }.

(16)

Solution. We first show that

FS∨T = {A ∪ B : A ∈ FT , B ∈ FS },

(17)

and then complete the proof by showing that the right-hand sides of the above relation

and (16) are equal.

3 Using the fact that $S$ is complete, it easily follows that $A = \bigcap_{k\in\mathbb{N}} \bigcup_{n\in\mathbb{N}} \bigcap_{m\in\mathbb{N}} \{e \in E^{[0,\infty)} : r(\Psi_n(e), \Psi_{n+m}(e)) < \frac1k\}$.

Let C ∈ {A ∪ B : A ∈ FS , B ∈ FT } be arbitrary. Then C = A ∪ B for some

A ∈ FS and B ∈ FT . Since

FS , FT ⊂ FS∨T ,

(18)

we have that A, B ∈ FS∨T , which in turn implies that C = A ∪ B ∈ FS∨T . Thus

{A ∪ B : A ∈ FT , B ∈ FS } ⊂ FS∨T .

For the opposite set inclusion, we proceed as follows. Let C ∈ FS∨T be arbitrary.

Since we clearly have

C = C ∩ {S ≥ T } ∪ C ∩ {T ≥ S},

in order to establish the result it suffices to show that C ∩ {S ≥ T } ∈ FS and

C ∩ {T ≥ S} ∈ FT . Since we have that {S ≥ T } ∈ FS∨T , by the choice of C we have

that C ∩ {S ≥ T } ∈ FS∨T . Hence, by definition we have

C ∩ {S ≥ T } ∩ {S ∨ T ≤ t} ∈ Ft , ∀t ∈ [0, ∞],

which clearly implies that

C ∩ {S ≥ T } ∩ {S ≤ t} ∈ Ft , ∀t ∈ [0, ∞],

and consequently C ∩ {S ≥ T } ∈ FS . By the analogous derivation we get that

C ∩ {T ≥ S} ∈ FT , which in view of the previous remark establishes (17).

To complete the proof, define

\[
\pi^c\{\mathcal{F}_S, \mathcal{F}_T\} := \{A \subset \Omega : A^c \in \pi\{\mathcal{F}_S, \mathcal{F}_T\}\}.
\]

We first observe that from (18) and the definition of a π-class, it follows that π{FS , FT } ⊂

FS∨T , and consequently

π c {FS , FT } ⊂ FS∨T .

(19)

To establish the opposite set inclusion, fix some arbitrary C ∈ FS∨T . Then, according

to (17), for some A ∈ FS and B ∈ FT we have C = A ∪ B. Since Ac ∈ FS and

B c ∈ FT , we have that Ac ∩ B c ∈ π(FS , FT ), and consequently that C = (Ac ∩ B c )c ∈

π c {FS , FT }. Therefore, we have FS∨T ⊂ π c {FS , FT }, which together with (19)

implies that

FS∨T = π c {FS , FT }.

(20)

Since π c {FS , FT } is a σ-algebra, we clearly have

π c {FS , FT } = {A ⊂ Ω : Ac ∈ π c {FS , FT }},


which together with the definition of π c {FS , FT } implies that4

π{FS , FT } = π c {FS , FT }.

Hence, from (20) we have that

FS∨T = π{FS , FT }.

Remark 11. Since from (16) and (18) we have

FS∨T = σ{FS , FT },

it follows that for arbitrary {Ft }-stopping times S and T we have

σ{FS , FT } = π{FS , FT }.

Problem 42. Let {Fn } be a filtration in the measurable space (Ω, F ), and let T be

an {Fn }-stopping time. Define

\[
\mathcal{F}^*_T := \left\{ \bigcup_{1 \le n \le \infty} A_n : A_n \in \mathcal{F}_n \cap \{T = n\},\ n = 0, 1, \ldots, \infty \right\}.
\]

Then we have $\mathcal{F}^*_T = \mathcal{F}_T$.

Solution. Let A ∈ FT∗ be arbitrary. Then since {T = n} ∈ Fn , n = 0, 1, . . . , ∞,

we have that A ∩ {T = n} ∈ Fn , n = 0, 1, . . . , ∞, which by definition implies that

A ∈ FT . Hence we have FT∗ ⊂ FT .

For the opposite set inclusion, fix some arbitrary $A \in \mathcal{F}_T$ and put

\[
A_n := A \cap \{T = n\}, \quad n = 0, 1, \ldots, \infty.
\]

Then we have that A = ∪1≤n≤∞ An , and (since A ∈ FT ) An ∈ Fn ∩ {T = n}, n =

0, 1, . . . , ∞. Hence we have FT ⊂ FT∗ , which establishes the result.

Problem 43 (Revuz & Yor (1999), Exercise IV.1.35). If X ∈ M c ({Ft }, P ) and it is a

Gaussian process, then its quadratic variation is deterministic, i.e. there is a function

f on R+ such that [X](t) = f (t) a.s.

4 Let B, C be two arbitrary collections of subsets of Ω such that {A ⊂ Ω : Ac ∈ B} = {A ⊂ Ω : Ac ∈ C }. We claim

that B = C . Let X ∈ B be arbitrary. Then X c ∈ {A ⊂ Ω : Ac ∈ C }, which implies that X ∈ C . Hence, B ⊂ C .

Since the opposite set inclusion follows by the analogous argument, we have that the two collections of sets are equal.


Solution. Let us denote the variance of Xt by f (t). Since X ∈ M c ({Ft }, P ) it has

uncorrelated increments. Since X is also Gaussian so are its (zero-mean) increments,

and thus we have that X is a process with independent increments. Let us define

F˜t := σ{FtX , Z P [F ]}.

Then we have a.s.

E[(Xt − Xs )2 |F˜t ] = E[(Xt − Xs )2 ].

Clearly X ∈ M2c ({F˜t }, P ), so we have a.s.

E[Xt2 − Xs2 |F˜t ] = E[(Xt − Xs )2 |F˜t ] = E[(Xt − Xs )2 ]

= E[Xt2 − Xs2 ] = f (t) − f (s), 0 ≤ t < s < ∞.

Thus the process {(Xt2 − f (t), F˜t ); t ∈ [0, ∞)} is an L2 -martingale.

Furthermore, for each a ∈ R+ the submartingale X 2 (t∧a) converges in L1 to X 2 (a),

which by Scheffé’s Lemma (see Williams (1991)) implies continuity of f

on R+ . Thus {(Xt2 − f (t), F˜t ); t ∈ [0, ∞)} is a continuous L2 -martingale, and

[X](t) = f (t) − f (0) a.s.

Problem 44. Let X be a stochastic process and T a stopping time of {FtX }. Suppose

that for some ω1 , ω2 ∈ Ω we have Xt (ω1 ) = Xt (ω2 ) for all t ∈ [0, T (ω1 )] ∩ [0, ∞). Then

T (ω1 ) = T (ω2 ).

Solution. By the definition of a stopping time we have that

{T (ω) = T (ω1 )} ∈ FT (ω1 ) ,

(21)

which implies that there exists a sequence {ti }, ti ∈ [0, T (ω1 )] ∩ [0, ∞), i = 1, 2, . . .

and a B(R∞ )-measurable mapping Ψ : R∞ → R̄, such that

I{T (ω)=T (ω1 )} = Ψ(Xt1 , Xt2 , . . .).

Using the initial assumption we now have

1 = I{T (ω)=T (ω1 )} (ω1 ) = Ψ(Xt1 (ω1 ), Xt2 (ω1 ), . . .) =

= Ψ(Xt1 (ω2 ), Xt2 (ω2 ), . . .) = I{T (ω)=T (ω1 )} (ω2 ),

and the result follows.


Remark 12. It is easy to see that the discrete-time analog (obtained by replacing ”t”

with ”n”) of Problem 44 holds. To establish the result we follow the same idea : the

relation (21) implies the existence of a B(RT (ω1 ) )-measurable mapping Ψ : RT (ω1 ) → R̄

such that we have

I{T (ω)=T (ω1 )} = Ψ(X1 , X2 , . . . , XT (ω1 ) ).

Similarly as above, the result follows from the equality

1 = I{T (ω)=T (ω1 )} (ω1 ) = Ψ(X1 (ω1 ), X2 (ω1 ), . . . , XT (ω1 ) (ω1 )) =

= Ψ(X1 (ω2 ), X2 (ω2 ), . . . , XT (ω1 ) (ω2 )) = I{T (ω)=T (ω1 )} (ω2 ).

Problem 45 (Galmarino’s test (discrete-time)). Let X be a discrete-parameter stochastic process, and let T be a stopping time of {FtX }. If Z is an FT -measurable random

variable, then there exists a B(R∞ )-measurable mapping Ψ : R∞ → R̄, such that

Z = Ψ(X1∧T , X2∧T , . . .).

Solution. It suffices to establish the result for the special case Z = IA , A ∈ FT ,

the general result then being an immediate consequence of the monotone class theorem

and Doob’s theorem.

Let A ∈ FT be arbitrary. Then by the definition of a pre-σ-algebra, we have

A ∩ {T = n} ∈ FnX , ∀n = 1, 2, . . . ,

which by Doob’s Theorem implies existence of a sequence of functions {Φn },

Φn : Rn → R, such that for each n ∈ N Φn is B(Rn )-measurable and we have

IA I{T =n} = Φn (X1 , X2 , . . . , Xn ).

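Hence, we have

\[
\begin{aligned}
I_A I_{\{T \le n\}} &= \sum_{i=1}^n \Phi_i(X_1, X_2, \ldots, X_i) = \sum_{i=1}^n \Phi_i(X_1, X_2, \ldots, X_i) I_{\{T = i\}} \\
&= \sum_{i=1}^n \Phi_i(X_{1\wedge T}, X_{2\wedge T}, \ldots, X_{i\wedge T}) I_{\{T = i\}}. \qquad (22)
\end{aligned}
\]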

We now observe the following. Since for each $n = 1, 2, \ldots, \infty$ we have $\{T = n\} \in \mathcal{F}^X_n$, by Exercise 1.1 we have that there exists a set $\Gamma_n \in \mathcal{B}(\mathbb{R}^n)$ such that $\{T = n\} = \{(X_1, X_2, \ldots, X_n) \in \Gamma_n\}$; this in turn implies that $\{T = n\} \subset \{(X_{1\wedge T}, X_{2\wedge T}, \ldots, X_{n\wedge T}) \in \Gamma_n\}$. The opposite set inclusion holds as well: let us suppose that there exists an $\omega_1 \in \{T \ne n\} \cap \{(X_{1\wedge T}, X_{2\wedge T}, \ldots, X_{n\wedge T}) \in \Gamma_n\}$. Since on $\{T = n\}$ we have $X_i = X_{i\wedge T}$, $i = 1, 2, \ldots, n$, from the definition of $\Gamma_n$ it follows that there exists an $\omega_2 \in \{T = n\}$ such that

\[
(X_{1\wedge T}(\omega_1), X_{2\wedge T}(\omega_1), \ldots, X_{n\wedge T}(\omega_1)) = (X_{1\wedge T}(\omega_2), X_{2\wedge T}(\omega_2), \ldots, X_{n\wedge T}(\omega_2)),
\]

which is by Remark 12 a contradiction. Therefore, from (22), according to Doob’s Theorem it follows that there exists a sequence $\{\Psi_n\}_{n=1}^{\infty}$ of functions $\Psi_n : \mathbb{R}^n \to \mathbb{R}$ such that each $\Psi_n$ is $\mathcal{B}(\mathbb{R}^n)$-measurable and we have

\[
I_A I_{\{T \le n\}} = \sum_{i=1}^n \Psi_i(X_{1\wedge T}, X_{2\wedge T}, \ldots, X_{i\wedge T}), \quad \forall n \in \mathbb{N}
\]

and

IA I{T =∞} = Ψ∞ (X1∧T , X2∧T , . . .).

(23)

Hence, since for each n ∈ N the function IA I{T ≤n} is σ{XT } measurable, the limit

IA I{T <∞} is σ{XT } measurable as well. Together with (23), this implies that IA is

σ{XT } measurable, and the result follows by another application of Doob’s Theorem.

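Problem 46. Let $\{X_t, t \in [0, \infty)\}$ be a process on a measurable space $(\Omega, \mathcal{F})$, and let $T$ be an $\{\mathcal{F}^X_t\}$-stopping time. Then $T$ is also an $\{\mathcal{F}^{X^T}_t\}$-stopping time.

Solution. Let $t \in [0, \infty)$ be arbitrary. To establish the result, we show that

\[
\{T > t\} \in \mathcal{F}^{X^T}_t.
\]

Since $T$ is an $\{\mathcal{F}^X_t\}$-stopping time, there exist a sequence $\{t_i\}_{i=1}^\infty$, $t_i \in [0, t]$ and a set $\Gamma \in \mathcal{B}(\mathbb{R}^\infty)$ such that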

{T > t} = {(Xt1 , Xt2 , . . .) ∈ Γ}.

(24)

Since on the set {T > t} we have Xti ∧T = Xti , from (24) we have

{T > t} ⊂ {(Xt1 ∧T , Xt2 ∧T , . . .) ∈ Γ}.

(25)

We now show that the opposite set inclusion holds as well. Suppose, on the contrary,

that there exists ω1 ∈ {T ≤ t} such that (Xt1 ∧T (ω1 ), Xt2 ∧T (ω1 ), . . .) ∈ Γ. Then, by the

definition of Γ, there exists ω2 ∈ {T > t} such that

(Xt1 ∧T (ω1 ), Xt2 ∧T (ω1 ), . . .) = (Xt1 ∧T (ω2 ), Xt2 ∧T (ω2 ), . . .).

But, since $T(\omega_1) \ne T(\omega_2)$, according to Problem 44, this is a contradiction. Hence, we have set equality in (25), and thus $T$ is an $\{\mathcal{F}^{X^T}_t\}$-stopping time.

Problem 47. Let $\{X_n, n = 0, 1, \ldots\}$ be a process on a measurable space $(\Omega, \mathcal{F})$, and let $T$ be an $\{\mathcal{F}^{X^T}_n\}$-stopping time. Then $T$ is also an $\{\mathcal{F}^X_n\}$-stopping time.

Solution. We use induction to show that for each $n = 0, 1, \ldots$, we have $\{T = n\} \in \mathcal{F}^X_n$.

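Since we have $\{T = 0\} \in \sigma\{X_0\}$, the claim holds for $n = 0$. Suppose that the claim holds for all indices up to some $n \ge 0$. Since $T$ is a stopping time of the filtration generated by the stopped process, by Doob’s Theorem there exists a measurable $\Phi$ such that

\[
I_{\{T = n+1\}} = \Phi(X_{1\wedge T}, X_{2\wedge T}, \ldots, X_{(n+1)\wedge T}). \qquad (26)
\]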

Since for each integer $0 \le i \le n + 1$ we have

\[
X_{i\wedge T} = X_i I_{\{T > i\}} + \sum_{j=1}^{n} X_j I_{\{T = j\}},
\]

by the inductive hypothesis we have that $X_{i\wedge T}$ is $\mathcal{F}^X_i$-measurable for each integer $0 \le i \le n + 1$, so by (26) we have that $I_{\{T = n+1\}}$ is $\mathcal{F}^X_{n+1}$-measurable, and the claim holds for $n + 1$.

From the above we have that $\{T < \infty\}$ is $\mathcal{F}^X$-measurable, so we have $\{T = \infty\} \in \mathcal{F}^X$.

Problem 48 (Vrkoč et al. (1978), Exercise 1). Let

\[
Y_0 = \frac{1}{\sqrt{2\pi}} W_{2\pi}, \quad Y_n(t) = \frac{1}{\sqrt{\pi}} \int_0^t \cos ns\, dW_s, \quad X_n(t) = \frac{1}{\sqrt{\pi}} \int_0^t \sin ns\, dW_s, \quad n = 1, 2, \ldots.
\]

Then $Y_0$, $Y_n(2\pi)$, $X_m(2\pi)$, $n \in \mathbb{N} \cup \{0\}$, $m \in \mathbb{N}$ are mutually independent random variables having Normal distribution $N(0, 1)$.

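Solution. Let $u, v \in \mathbb{R}$; $n, m \in \mathbb{N}$ be arbitrary. By Itô’s formula we have

\[
\begin{aligned}
e^{\imath(uX_m(t) + vY_n(t))} = 1 &+ \imath u \int_0^t e^{\imath(uX_m(s) + vY_n(s))}\,dX_m(s) + \imath v \int_0^t e^{\imath(uX_m(s) + vY_n(s))}\,dY_n(s) \\
&- uv \int_0^t e^{\imath(uX_m(s) + vY_n(s))}\,d[X_m, Y_n]_s - \frac12 u^2 \int_0^t e^{\imath(uX_m(s) + vY_n(s))}\,d[X_m]_s \\
&- \frac12 v^2 \int_0^t e^{\imath(uX_m(s) + vY_n(s))}\,d[Y_n]_s, \quad \forall t \in [0, \infty).
\end{aligned}
\]

We observe that the second and third terms on the right-hand side are continuous martingales. Moreover, $d[X_m, Y_n]_s = \frac1\pi \sin ms \cos ns\,ds$, $d[X_m]_s = \frac1\pi \sin^2 ms\,ds$ and $d[Y_n]_s = \frac1\pi \cos^2 ns\,ds$. Thus, taking expectations on both sides and denoting $\exp[\imath(uX_m(t) + vY_n(t))]$ by $f(t)$, we get

\[
E[f(t)] = 1 - \frac{1}{\pi} uv\,E\left[\int_0^t f(s) \sin ms \cos ns\,ds\right] - \frac{1}{2\pi} u^2 E\left[\int_0^t f(s) \sin^2 ms\,ds\right] - \frac{1}{2\pi} v^2 E\left[\int_0^t f(s) \cos^2 ns\,ds\right].
\]

By Fubini’s Theorem we now have

\[
E[f(t)] = 1 - \frac{1}{\pi} uv \int_0^t E[f(s)] \sin ms \cos ns\,ds - \frac{1}{2\pi} u^2 \int_0^t E[f(s)] \sin^2 ms\,ds - \frac{1}{2\pi} v^2 \int_0^t E[f(s)] \cos^2 ns\,ds,
\]

so we have that $E[f(t)]$ is the solution of the differential equation

\[
\frac{dE[f(t)]}{dt} = -\frac{1}{2\pi} E[f(t)]\left(2uv \sin mt \cos nt + u^2 \sin^2 mt + v^2 \cos^2 nt\right), \quad E[f(0)] = 1.
\]

Thus we have

\[
E[f(t)] = \exp\left[-\frac{1}{2\pi} \int_0^t \left(2uv \sin ms \cos ns + u^2 \sin^2 ms + v^2 \cos^2 ns\right) ds\right],
\]

which, since $\int_0^{2\pi} \sin ms \cos ns\,ds = 0$, implies

\[
E[f(2\pi)] = \exp\left[-\frac{1}{2\pi} \int_0^{2\pi} \left(u^2 \sin^2 ms + v^2 \cos^2 ns\right) ds\right].
\]

From the above we immediately have (all processes being evaluated at $t = 2\pi$)

\[
E[\exp[\imath(uX_m + vY_n)]] = E[\exp[\imath uX_m]]\,E[\exp[\imath vY_n]],
\]

and (after evaluating the integrals)

\[
E[\exp[\imath uX_n]] = E[\exp[\imath uY_n]] = \exp\left[-\frac12 u^2\right].
\]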

The argument for the case m = 0 is identical.
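The integrals evaluated in the last step can be checked numerically. The following sketch is an illustration rather than part of the original argument: via the Itô isometry it computes the variances of $X_m(2\pi)$ and $Y_n(2\pi)$ and their covariance with a midpoint rule. The helper names are hypothetical.

```python
import math

def midpoint_integral(g, a, b, m=2000):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / m
    return h * sum(g(a + (k + 0.5) * h) for k in range(m))

def covariances(m_idx, n_idx):
    """Return (Var X_m(2pi), Var Y_n(2pi), Cov(X_m(2pi), Y_n(2pi))).

    By the Ito isometry these are (1/pi) times the integrals over [0, 2pi]
    of sin^2(ms), cos^2(ns) and sin(ms)cos(ns) respectively.
    """
    two_pi = 2.0 * math.pi
    var_x = midpoint_integral(lambda s: math.sin(m_idx * s) ** 2, 0.0, two_pi) / math.pi
    var_y = midpoint_integral(lambda s: math.cos(n_idx * s) ** 2, 0.0, two_pi) / math.pi
    cov_xy = midpoint_integral(
        lambda s: math.sin(m_idx * s) * math.cos(n_idx * s), 0.0, two_pi
    ) / math.pi
    return var_x, var_y, cov_xy

if __name__ == "__main__":
    for m_idx in range(1, 4):
        for n_idx in range(1, 4):
            print(m_idx, n_idx, covariances(m_idx, n_idx))
```

For every pair of positive integers the variances should come out as 1 and the covariance as 0, in line with the characteristic function computed above.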

Problem 49 (Kailath-Segal identity, Revuz & Yor (1999), Exercise IV.3.30). Let $X \in \mathcal{M}^c_{loc}(\{\mathcal{F}_t\}, P)$ such that $X_0 = 0$, and define the iterated stochastic integrals of $X$ by

\[
I_0 = 1, \quad I_n = (I_{n-1} \bullet X), \quad n \in \mathbb{N}.
\]

Then we have

nIn = In−1 X − In−2 [X], n ≥ 2.


Solution. For n=2 the claim directly follows from the integration-by-parts formula.

Suppose that the claim holds for n − 1, n ≥ 3:

(n − 1)In−1 = In−2 X − In−3 [X].

(27)

Applying integration by parts we get

In−1 X =

= (In−1 • X) + (X • In−1 ) + [In−1 , X]

= In + (In−2 X • X) + [In−1 , X].

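By the inductive hypothesis (27) we have $I_{n-2}X = (n-1)I_{n-1} + I_{n-3}[X]$, so $(I_{n-2}X \bullet X) = (n-1)I_n + (I_{n-3}[X] \bullet X)$ and therefore

\[
I_{n-1}X = nI_n + (I_{n-3}[X] \bullet X) + [I_{n-1}, X]. \qquad (28)
\]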

Since

[In−1 , X] = [(In−2 • X), X] = (In−2 • [X])

and

(In−3 [X] • X) = ([X] • In−2 ),

from (28) it follows that

nIn = In−1 X − (In−2 • [X]) − ([X] • In−2 ),

which, after another application of the integration-by-parts formula, gives

nIn = In−1 X − In−2 [X],

and the proof by induction is complete.
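As a small illustration (not from the original text), the identity can be used as a recursion to evaluate $I_n$ from the current values of $X$ and $[X]$ alone. The function below is a hypothetical helper; for Brownian motion, where $[X]_t = t$, it should reproduce the scaled Hermite polynomials $(x^2 - t)/2$, $(x^3 - 3xt)/6$, and so on.

```python
def iterated_integral(n, x, qv):
    """Evaluate I_n given X_t = x and [X]_t = qv.

    Uses I_0 = 1, I_1 = X and the Kailath-Segal recursion
    n I_n = I_{n-1} X - I_{n-2} [X] for n >= 2.
    """
    if n == 0:
        return 1.0
    prev, curr = 1.0, float(x)               # I_0 and I_1
    for k in range(2, n + 1):
        prev, curr = curr, (curr * x - prev * qv) / k
    return curr

if __name__ == "__main__":
    x, t = 2.0, 1.0
    print(iterated_integral(2, x, t), (x ** 2 - t) / 2.0)
    print(iterated_integral(3, x, t), (x ** 3 - 3.0 * x * t) / 6.0)
```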

Problem 50 (Chung & Williams (1990), Exercise 5.5.6). Let {(X(t), Ft ), t ∈ [0, ∞)}

and {(Y (t), Ft ) t ∈ [0, ∞)} be continuous local martingales on (Ω, F , P ), and write

FX = σ{X(t), t ∈ [0, ∞)}, FY = σ{Y (t), t ∈ [0, ∞)}.

If the σ-algebras FX and FY are independent, and F0 includes all P -null events in

F , then a.s.:

[X, Y ](t) = 0, ∀t ∈ [0, ∞).

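Solution. Let us first establish the result for the case of continuous bounded martingales. Fix some arbitrary $X, Y \in \mathcal{M}^c(\{\mathcal{F}_t\}, P)$ for which $\mathcal{F}^X$ and $\mathcal{F}^Y$ are independent and whose magnitudes are bounded by some $C \in (0, \infty)$. With $\tau^n_k = k2^{-n}$, $\forall k, n = 0, 1, \ldots$ let us define $\forall n = 0, 1, \ldots$, $\forall t \in [0, \infty)$ (for the sake of brevity we suppress the dependence on $\omega$)

\[
\begin{aligned}
A_n(t) &= \sum_{0 \le k < \infty} [X(t \wedge \tau^n_{k+1}) - X(t \wedge \tau^n_k)][Y(t \wedge \tau^n_{k+1}) - Y(t \wedge \tau^n_k)], \\
\Delta X^n_k(t) &= X(t \wedge \tau^n_{k+1}) - X(t \wedge \tau^n_k), \quad \Delta Y^n_k(t) = Y(t \wedge \tau^n_{k+1}) - Y(t \wedge \tau^n_k), \\
\Delta[X]^n_k(t) &= [X](t \wedge \tau^n_{k+1}) - [X](t \wedge \tau^n_k), \quad \Delta[Y]^n_k(t) = [Y](t \wedge \tau^n_{k+1}) - [Y](t \wedge \tau^n_k).
\end{aligned}
\]

So $A_n(t) = \sum_{i=0}^\infty \Delta X^n_i(t) \Delta Y^n_i(t)$, and $\forall t \in [0, \infty)$ we have

\[
\begin{aligned}
E[A_n^2(t)] &= E\left[\sum_{i=0}^\infty (\Delta X^n_i(t) \Delta Y^n_i(t))^2 + 2\sum_{i<j} \Delta X^n_i(t) \Delta X^n_j(t) \Delta Y^n_i(t) \Delta Y^n_j(t)\right] \\
&= \sum_{i=0}^\infty E[(\Delta X^n_i(t) \Delta Y^n_i(t))^2] + 2\sum_{i<j} E[\Delta X^n_i(t) \Delta X^n_j(t) \Delta Y^n_i(t) \Delta Y^n_j(t)].
\end{aligned}
\]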

Using the independence we now have

\[
E[A_n^2(t)] = \sum_{i=0}^\infty E[(\Delta X^n_i(t))^2]\,E[(\Delta Y^n_i(t))^2] + 2\sum_{i<j} E[\Delta X^n_i(t) \Delta X^n_j(t)]\,E[\Delta Y^n_i(t) \Delta Y^n_j(t)].
\]

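Furthermore, since we have

\[
E[(\Delta X^n_i(t))^2] = E[\Delta[X]^n_i(t)], \quad E[\Delta X^n_i(t) \Delta X^n_j(t)] = 0, \quad i \ne j,
\]

from the above, using the Cauchy-Schwarz inequality and Monotone Convergence theorem, we get

\[
\begin{aligned}
E[A_n^2(t)] &= \sum_{i=0}^\infty E[\Delta[X]^n_i(t)]\,E[\Delta[Y]^n_i(t)] \le \sqrt{\sum_{i=0}^\infty (E[\Delta[X]^n_i(t)])^2}\,\sqrt{\sum_{i=0}^\infty (E[\Delta[Y]^n_i(t)])^2} \\
&\le \sqrt{\sum_{i=0}^\infty E[(\Delta[X]^n_i(t))^2]}\,\sqrt{\sum_{i=0}^\infty E[(\Delta[Y]^n_i(t))^2]} = \sqrt{E\left[\sum_{i=0}^\infty (\Delta[X]^n_i(t))^2\right]}\,\sqrt{E\left[\sum_{i=0}^\infty (\Delta[Y]^n_i(t))^2\right]}. \qquad (29)
\end{aligned}
\]

But

\[
E\left[\sum_{i=0}^\infty (\Delta[X]^n_i(t))^2\right] \le E\left[[X](t) \sup_k \Delta[X]^n_k(t)\right],
\]

and

\[
[X](t) \sup_k \Delta[X]^n_k(t) \le [X]^2(t),
\]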

so in view of uniform continuity of [X](·) on [0, t] and Dominated Convergence Theorem

(note that E[[X]2 (t)] < ∞), we have limn→∞ E[[X](t) supk ∆[X]nk (t)] = 0. Since the

analogous derivations obviously hold for Y , by (29) we have that {An (t)} converges in

probability to zero for all t ∈ [0, ∞), which (since F0 contains all P -null events in F )

implies that a.s.:

[X, Y ](t) = 0, ∀t ∈ [0, ∞).

Let’s now consider the general case. Suppose that X and Y are continuous local

martingales for which F X and F Y are independent. Then, taking in Example 3.5.5 (b)

the filtration to be {FtX } and Γ to be (−∞, −k] ∪ [k, ∞), we get that

Sk := inf{t ∈ [0, ∞) : |Xt (ω)| ≥ k}

defines an {FtX }-stopping time for each k ∈ N. Moreover, it follows that {Sk , k =

1, 2, . . .} is a localizing sequence for {(Xt , Ft ); t ∈ [0, ∞)} for which X Sk ∈ M2c ({Ft }, P )

for each k ∈ N. Repeating the above procedure for Y , we come up with the localizing

sequence {Rk , k = 1, 2, . . .} for Y with the analogous properties.

In view of the fact that $S_k$ is an $\{\mathcal{F}^X_t\}$-stopping time for each $k \in \mathbb{N}$ we have that $X^{S_k}(t)$ is $\mathcal{F}^X$-measurable for each $t \in [0, \infty)$ and each $k \in \mathbb{N}$. Since the analogous observation obviously holds for $Y$, we have that $\mathcal{F}^{X^{S_k}}$ and $\mathcal{F}^{Y^{R_k}}$ are independent for each $k \in \mathbb{N}$. Thus, since $X^{S_k}, Y^{R_k} \in \mathcal{M}^c(\{\mathcal{F}_t\}, P)$ and are bounded, according to the

first part of the proof, we have that X Sk Y Rk ∈ M c ({Ft }, P ). This by Corollary 3.5.20

implies that X Sk ∧Rk Y Sk ∧Rk ∈ M c ({Ft }, P ) for each k ∈ N as well, so in view of the

uniqueness of co-quadratic variation process we have a.s.:

[X, Y ](t) = 0, ∀t ∈ [0, ∞).
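A quick simulation can illustrate the statement in the simplest case of two independent Brownian motions. This is only a sketch under that assumption, not a proof, and the function name, step count and seed are arbitrary: the realized covariation $\sum_k \Delta X_k \Delta Y_k$ over a fine grid is close to zero, while the realized quadratic variation of $X$ is close to $t$.

```python
import math
import random

def realized_covariation(n_steps=20_000, t=1.0, seed=0):
    """Simulate two independent Brownian motions on [0, t] with n_steps increments.

    Returns (sum of dX*dY, sum of dX*dX), i.e. discrete analogues of
    [X, Y](t) and [X](t).
    """
    rng = random.Random(seed)
    sd = math.sqrt(t / n_steps)              # standard deviation of one increment
    cov_xy = 0.0
    qv_x = 0.0
    for _ in range(n_steps):
        dx = rng.gauss(0.0, sd)
        dy = rng.gauss(0.0, sd)
        cov_xy += dx * dy
        qv_x += dx * dx
    return cov_xy, qv_x

if __name__ == "__main__":
    print(realized_covariation())
```

The standard deviation of the first sum is $t/\sqrt{n}$ for $n$ steps, so it shrinks as the grid is refined, mirroring the convergence of $A_n(t)$ to zero in the proof.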

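Problem 51. Let $X^{(i)}_t$, $i = 1, 2$ be square-integrable martingales such that

\[
E\left[\left(X^{(1)}_t\right)^2\right] \le E\left[\left(X^{(2)}_t\right)^2\right], \quad t \ge 0.
\]

Then

\[
E\left[\left(\int_0^t X^{(1)}_u\,du\right)^2\right] \le E\left[\left(\int_0^t X^{(2)}_u\,du\right)^2\right], \quad t \ge 0. \qquad (30)
\]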

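Solution. Put $\bar X^{(i)}_t := \int_0^t X^{(i)}_u\,du$, $i = 1, 2$. Repeated application of Itô’s formula gives

\[
\left(\bar X^{(i)}_t\right)^2 = 2\int_0^t X^{(i)}_u \bar X^{(i)}_u\,du = 2\int_0^t\int_0^u \left(X^{(i)}_z\right)^2 dz\,du + 2\int_0^t\int_0^u \bar X^{(i)}_z\,dX^{(i)}_z\,du, \quad t \ge 0,\ i = 1, 2.
\]

In view of the assumed ordering of the second moments of $X^{(1)}$ and $X^{(2)}$, the result now follows by taking expectation and using Fubini’s theorem together with the fact that $X^{(i)}_t$, $i = 1, 2$ are martingales (so the stochastic-integral term has zero expectation).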

Problem 52 (Bond volatility at maturity is zero). Consider a collection of adapted,

bounded processes rt , σ(t, T ), T ∈ [0, ∞] on a filtered probability space (Ω, F , Ft , P ),

together with a continuous curve P (0, T ), T ∈ [0, ∞]. Let for every T > 0

dP (t, T ) = P (t, T ) (rt dt + σ(t, T ) dWt ) ,

0 ≤ t ≤ T.

(31)

Show that if P (T, T ) = 1 for every T ∈ [0, ∞), then σ(T, T ) = 0 for every T ∈ [0, ∞).

Solution. For every fixed $T > 0$ we have

\[
P(t, T) = P(0, T)\,B_t \exp\left(-\frac12\int_0^t \sigma^2(u, T)\,du + \int_0^t \sigma(u, T)\,dW_u\right), \quad 0 \le t \le T,
\]

where $B_t := \exp\left(\int_0^t r_u\,du\right)$. Since $P(T, T) = 1$, we have for $t \in [0, T]$

\[
\frac{1}{B_t} = P(0, t) \exp\left(-\frac12\int_0^t \sigma^2(u, t)\,du + \int_0^t \sigma(u, t)\,dW_u\right),
\]

so

\[
d\log(B_t) = \cdots\,dt - \sigma(t, t)\,dW_t,
\]

whence the result follows since $\log(B_t)$ is a process of bounded variation.

Remark 13. The equation (31) defines a Price Based Formulation of the HJM model

of interest rates presented in Carverhill (1995).

Problem 53. For $A < B$, an arbitrary partition $A = t_0 < t_1 < \cdots < t_N = B$, and the standard Wiener process $W_t$, compute

\[
E\sum_{i=0}^{N-1}\left(W_{t_i} - \frac{1}{t_{i+1} - t_i}\int_{t_i}^{t_{i+1}} W_u\,du\right)^2.
\]

Solution. Straightforward calculation using independence of the Wiener process increments yields $\frac{B - A}{3}$ (so, in particular, the result does not depend on the underlying partition).
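The calculation can be spelled out numerically. In the sketch below (an illustration with hypothetical helper names, not the author's computation) each summand is written as $h^{-2}\iint_{[0,h]^2} \min(u, v)\,du\,dv$ with $h = t_{i+1} - t_i$, using $E[W_uW_v] = \min(u, v)$ for the increments after $t_i$, and the double integral is approximated by a midpoint rule.

```python
def interval_term(h, m=200):
    """Approximate E[(W_a - (1/h) * int_a^{a+h} W_u du)^2].

    The expectation equals h^{-2} times the double integral of min(u, v)
    over [0, h]^2, evaluated here on an m x m midpoint grid.
    """
    d = h / m
    total = 0.0
    for i in range(m):
        u = (i + 0.5) * d
        for j in range(m):
            total += min(u, (j + 0.5) * d)
    return total * d * d / (h * h)

def expected_sum(partition, m=200):
    """Sum the interval terms over consecutive points of the partition."""
    return sum(interval_term(b - a, m) for a, b in zip(partition, partition[1:]))

if __name__ == "__main__":
    partition = [0.0, 0.1, 0.5, 0.75, 2.0]   # arbitrary uneven partition of [0, 2]
    print(expected_sum(partition), (partition[-1] - partition[0]) / 3.0)
```

Each term is approximately $h/3$, so the sum telescopes to $(B - A)/3$ regardless of how the partition points are placed.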

Problem 54 ( Lucic (2020): A variation on the theme of Carr & Pelts (2015)). Let

\[
S_T = \frac{\omega(Z + \tau(T))}{\omega(Z)}, \quad T \ge 0, \qquad (32)
\]

where τ : R+ → R+ is an increasing function with τ (0) = 0, and Z is a random

variable with a unimodal pdf ω(·). Show that C(K, T ) := E[(ST − K)+ ], T, K ≥ 0, is

a non-decreasing function of T for every K ≥ 0, with limT →∞ C(K, T ) = 1, K ≥ 0.

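Solution. We have

\[
C(K, T) = \int_{\mathbb{R}} \left[\frac{\omega(z + \tau(T))}{\omega(z)} - K\right]^+ \omega(z)\,dz = \int_{\mathbb{R}} [\omega(z + \tau(T)) - K\omega(z)]^+\,dz, \quad T \ge 0. \qquad (33)
\]

Fix $K \ge 1$, $T_0 \ge 0$, and let $z^*$ denote the global maximum of $\omega(\cdot)$. Since for $z \ge z^*$ we have $\omega(z + \tau(T_0)) - K\omega(z) \le (1 - K)\omega(z) \le 0$, from (33)

\[
C(K, T_0) = \int_{-\infty}^{z^*} [\omega(z + \tau(T_0)) - K\omega(z)]^+\,dz.
\]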

For $T_1 > T_0$, $\Delta := \tau(T_1) - \tau(T_0) \ge 0$ we have

\[
C(K, T_1) \ge \int_{-\infty}^{z^* - \Delta} [\omega(z + \tau(T_1)) - K\omega(z)]^+\,dz = \int_{-\infty}^{z^*} [\omega(z + \tau(T_0)) - K\omega(z - \Delta)]^+\,dz \ge C(K, T_0),
\]

where the last inequality follows from the fact that ω(·) is increasing on (−∞, z ∗ ].

For $0 < K < 1$, note that $\tilde\omega(x) := \omega(-x)$, $x \in \mathbb{R}$ is a unimodal distribution, so applying the first part of the proof we conclude that

\[
T \mapsto \int_{\mathbb{R}} \left[\tilde\omega(z + \tau(T)) - \frac1K \tilde\omega(z)\right]^+ dz = \frac1K \int_{\mathbb{R}} [K\omega(z) - \omega(z + \tau(T))]^+\,dz = 1 - \frac1K + \frac{C(K, T)}{K}
\]

is increasing. The limiting behavior follows by the Lebesgue dominated convergence theorem.
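For a concrete instance, take $\omega$ to be the standard normal density (an assumption made here purely for illustration). Then $S_T = \exp(-\tau Z - \tau^2/2)$ is lognormal and (33) should coincide with the Black-Scholes call price with total volatility $\tau(T)$ and zero rates. The sketch below, with hypothetical function names, evaluates (33) by a midpoint rule and compares it with that closed form.

```python
import math

def omega(z):
    """Standard normal density, used as the unimodal pdf in this illustration."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def call_price(strike, tau, lo=-12.0, hi=12.0, m=24_000):
    """Midpoint-rule value of the integral of [omega(z + tau) - K omega(z)]^+ over [lo, hi]."""
    h = (hi - lo) / m
    total = 0.0
    for k in range(m):
        z = lo + (k + 0.5) * h
        total += max(omega(z + tau) - strike * omega(z), 0.0)
    return h * total

def black_scholes(strike, tau):
    """Black-Scholes call with spot 1, zero rates and total volatility tau > 0."""
    def norm_cdf(x):
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    d1 = (-math.log(strike) + 0.5 * tau * tau) / tau
    return norm_cdf(d1) - strike * norm_cdf(d1 - tau)

if __name__ == "__main__":
    for strike in (0.5, 1.0, 1.5):
        prices = [call_price(strike, tau) for tau in (0.1, 0.5, 1.0, 2.0)]
        print(strike, prices)
```

For each strike the printed prices should increase with $\tau$, which is the monotonicity in $T$ asserted in the problem once $\tau(\cdot)$ is increasing.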

Remark 14. Carr & Pelts (2015) (see also Tehranchi (2020), and Antonov et al.

(2019) for a probabilistic interpretation) postulate ω(·) of the form

ω(z) = e−h(z) ,

z ∈ R,

for some convex $h(\cdot)$, with $\lim_{z \to \pm\infty} h'(z) = \pm\infty$. This in nontrivial cases necessarily implies a unique zero of $h'(\cdot)$, hence unimodality of $\omega(\cdot)$.

Problem 55. [Basket option formula, Dhaene & Goovaerts (1996)] Suppose $X$ and $Y$ are nonnegative integrable random variables with joint cumulative distribution function $F(x, y)$. Show that for $K \ge 0$,

\[
E[(X + Y - K)^+] = E[X + Y] - K + \int_0^K F(u, K - u)\,du.
\]

Solution. Write

\[
E[(X + Y - K)^+] = E[X + Y] - K + E[(K - X - Y)^+]. \qquad (34)
\]

For $x, y \ge 0$ we have

\[
[K - x - y]^+ = \int_0^K I_{\{x + y \le u \le K\}}\,du = \int_0^K I_{\{u \ge x\}} I_{\{u \le K - y\}}\,du,
\]

which after integrating wrt the CDF of $(X, Y)$ gives

\[
E[(K - X - Y)^+] = \int_0^K F(u, K - u)\,du. \qquad (35)
\]

Combining (34) and (35) gives the desired result.
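The formula is easy to test on uniform marginals. The sketch below is illustrative only, and the helper names and the two dependence structures are choices made here: it compares both sides for $X, Y$ uniform on $[0, 1]$, once independent (joint CDF $xy$) and once comonotone with $X = Y$ (joint CDF $\min(x, y)$).

```python
def midpoint(g, a, b, m=2000):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / m
    return h * sum(g(a + (k + 0.5) * h) for k in range(m))

def clip01(x):
    """CDF of the uniform distribution on [0, 1]."""
    return min(max(x, 0.0), 1.0)

def cdf_independent(x, y):
    return clip01(x) * clip01(y)

def cdf_comonotone(x, y):
    return min(clip01(x), clip01(y))

def basket_rhs(cdf, mean_sum, strike):
    """E[X + Y] - K + integral over [0, K] of F(u, K - u)."""
    return mean_sum - strike + midpoint(lambda u: cdf(u, strike - u), 0.0, strike)

def basket_lhs_independent(strike, m=400):
    """E[(X + Y - K)^+] for independent uniforms, by a double midpoint rule."""
    def inner(x):
        return midpoint(lambda y: max(x + y - strike, 0.0), 0.0, 1.0, m)
    return midpoint(inner, 0.0, 1.0, m)

def basket_lhs_comonotone(strike):
    """E[(2U - K)^+] for X = Y = U uniform on [0, 1]."""
    return midpoint(lambda u: max(2.0 * u - strike, 0.0), 0.0, 1.0)

if __name__ == "__main__":
    for strike in (0.5, 1.0):
        print(basket_lhs_independent(strike), basket_rhs(cdf_independent, 1.0, strike))
        print(basket_lhs_comonotone(strike), basket_rhs(cdf_comonotone, 1.0, strike))
```

At $K = 1$ both sides equal $1/6$ in the independent case and $1/4$ in the comonotone case.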

Remark 15. Since $(x, y) \mapsto C(x, y) \equiv [x + y - 1]^+$ is a copula, $(x, y) \mapsto B_K(x, y) \equiv [x + y - K]^+$ is a quasimonotone (hence measure-inducing) map with $\frac{\partial^2 B_K(x, y)}{\partial x\,\partial y} = \delta_{\{x + y = K\}}$, we can interpret the statement of Exercise 55 as the integration-by-parts formula

\[
\int_{\mathbb{R}_+ \times \mathbb{R}_+} B_K(x, y)\,F(dx, dy) = \text{boundary terms} + \int_{\mathbb{R}_+ \times \mathbb{R}_+} F(x, y)\,B_K(dx, dy).
\]

Integration-by-parts for higher dimensions was developed in Young (1917) (see also

Hobson (1957)), and was used in a similar context for generalizing Hoeffding’s lemma

in Brendan (2009).

Remark 16. Related to Exercise 55 is Theorem 2.1 of Quesada-Molina (1992), which implies the following: for nonnegative integrable random variables $X$ and $Y$ with joint cumulative distribution function $F(x, y)$, and a random variable $\tilde Y$ independent of $X$ having the same distribution as $Y$, we have

\[
E[(X + Y - K)^+] = E[(X + \tilde Y - K)^+] + \int_0^K F(x, K - x)\,dx - \int_0^K F_1(x) F_2(K - x)\,dx, \quad K \ge 0,
\]

where $F_1(x)$ and $F_2(x)$ are CDFs of $X$ and $Y$ respectively.

Remark 17. From Exercise 15 it follows that the cdf of $X + Y$ can be written as

\[
F_{X+Y}(K) = \int_0^K F_x(K - u, u)\,du = \int_0^K F_y(u, K - u)\,du.
\]

Problem 56. [Spread option formula] Suppose $X$ and $Y$ are nonnegative integrable random variables with joint cumulative distribution function $F(x, y)$. Show that for $K \ge 0$,

\[
E[(X - Y - K)^+] = \int_0^\infty \left(F_2(u) - \tilde F(K + u, u)\right) du,
\]

where $F_2(x)$ is the CDF of $Y$ and $\tilde F(x, y) := F(x-, y)$.

Solution. We have

\[
(X - Y - K)^+ = \int_0^\infty I_{\{u \ge Y + K\}} I_{\{u \le X\}}\,du = \int_0^\infty \left(I_{\{Y \le u - K\}} - I_{\{Y \le u - K\}} I_{\{X < u\}}\right) du,
\]

which after integrating wrt the CDF of $(X, Y)$, gives

\[
E[(X - Y - K)^+] = \int_0^\infty \left(F_2(u - K) - \tilde F(u, u - K)\right) du = \int_0^\infty \left(F_2(u) - \tilde F(K + u, u)\right) du.
\]

Remark 18. The spread option formula of Exercise 56 appears in Andersen & Piterbarg (2010) (see also Berrahoui (2004)).

Problem 57. Suppose $X$ is a nonnegative, integrable random variable with strictly increasing, continuous cdf. Show that

\[
\mathrm{VaR}_\alpha(X) = \arg\min_{K \in \mathbb{R}} \left\{K + \frac{1}{1 - \alpha} E(X - K)^+\right\}.
\]

Solution. Let $F$ be the cdf of $X$. Then

\[
E(X - K)^+ = E[X] - K + \int_0^K F(u)\,du,
\]

so to establish the claim we need to show that

\[
\mathrm{VaR}_\alpha(X) = \arg\min_{K \in \mathbb{R}} \left\{-\alpha K + \int_0^K F(u)\,du\right\}.
\]

Since the mapping

\[
\varphi(K) : K \mapsto -\alpha K + \int_0^K F(u)\,du
\]

is differentiable, with strictly increasing, continuous derivative

\[
\varphi'(K) = -\alpha + F(K),
\]

it reaches its unique minimum at $F^{-1}(\alpha)$.
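As an illustration (an example chosen here, not taken from the source), let $X$ be exponential with unit rate, so that $E(X - K)^+ = e^{-K}$ for $K \ge 0$ and $\mathrm{VaR}_\alpha(X) = -\ln(1 - \alpha)$. The sketch below minimizes the objective over a grid restricted to $K \ge 0$, which is an assumption justified by the objective being decreasing for negative $K$.

```python
import math

def objective(k, alpha):
    """K + E[(X - K)^+] / (1 - alpha) for X ~ Exp(1) and K >= 0."""
    return k + math.exp(-k) / (1.0 - alpha)

def argmin_on_grid(alpha, k_max=10.0, m=100_000):
    """Grid point in [0, k_max] minimizing the objective (brute-force search)."""
    grid = (k_max * i / m for i in range(m + 1))
    return min(grid, key=lambda k: objective(k, alpha))

if __name__ == "__main__":
    for alpha in (0.5, 0.9, 0.95):
        print(alpha, argmin_on_grid(alpha), -math.log(1.0 - alpha))
```

The grid minimizer and the quantile $-\ln(1 - \alpha)$ should agree up to the grid spacing.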

Remark 19. Lemma 3.2 of Embrechts & Wang (2015), using different methods, shows that for an arbitrary integrable random variable

\[
\mathrm{VaR}_\alpha(X) \in \arg\min_{K \in \mathbb{R}} \left\{K + \frac{1}{1 - \alpha} E(X - K)^+\right\}.
\]

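Problem 58 (Value-at-Risk (VaR) inequality). Suppose $X$ and $Y$ are nonnegative IID random variables. Then

\[
\mathrm{VaR}_\alpha(X + Y) \ge \mathrm{VaR}_{\sqrt{\alpha}}(X), \quad \alpha \in (0, 1).
\]

Solution. This follows by integrating the inequality

\[
I_{\{x + y \le K\}} \le I_{\{x \le K\}} I_{\{y \le K\}}, \quad x, y \ge 0,\ K \in \mathbb{R},
\]

wrt the cdf of $(X, Y)$, which gives $P(X + Y \le K) \le P(X \le K)^2$.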
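A concrete check, under the assumption (made here for illustration) that $X$ and $Y$ are independent unit-rate exponentials: then $X + Y$ has cdf $1 - e^{-k}(1 + k)$ and $\mathrm{VaR}_p(X) = -\ln(1 - p)$. The function names below are hypothetical, and the quantile of the sum is found by bisection.

```python
import math

def var_exponential(p):
    """p-quantile of the Exp(1) distribution."""
    return -math.log(1.0 - p)

def cdf_sum_of_two_exponentials(k):
    """CDF of X + Y for independent Exp(1) variables, i.e. Gamma(2, 1)."""
    return 1.0 - math.exp(-k) * (1.0 + k) if k > 0.0 else 0.0

def var_sum(p, lo=0.0, hi=100.0, tol=1e-12):
    """p-quantile of X + Y by bisection on the (strictly increasing) CDF."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf_sum_of_two_exponentials(mid) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    for alpha in (0.05, 0.25, 0.5, 0.9, 0.99):
        print(alpha, var_sum(alpha), var_exponential(math.sqrt(alpha)))
```

In each printed row the first quantile should dominate the second, as the inequality predicts.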

Problem 59 (Unique characterization of VaR via loss contributions). Let L1 and

L2 be integrable random variables with strictly positive probability density function

f (l1 , l2 ). Suppose that for some differentiable R : R2 → R such that R(0, 0) = 0 we

have

\[
\frac{\partial R}{\partial x_i}(x_1, x_2) = E\left[L_i \,\middle|\, x_1L_1 + x_2L_2 = R(x_1, x_2)\right], \quad x_1, x_2 \in \mathbb{R},\ i = 1, 2. \qquad (36)
\]

Then there exists α ∈ (0, 1) such that R(x1 , x2 ) = VaRα (x1 L1 + x2 L2 ) for every

x1 , x2 ∈ R.

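Solution. Fix $x_1, x_2 \ne 0$. Since

\[
E\left[L_1 \,\middle|\, x_1L_1 + x_2L_2 = R(x)\right] = \frac{\int_{\mathbb{R}} l_1 f\left(l_1, (R(x) - x_1l_1)/x_2\right) dl_1}{\int_{\mathbb{R}} f\left(l_1, (R(x) - x_1l_1)/x_2\right) dl_1},
\]

from (36) we have

\[
\int_{\mathbb{R}} \left(\frac{\partial R}{\partial x_1}(x) - l_1\right) f\left(l_1, (R(x) - x_1l_1)/x_2\right) dl_1 = 0. \qquad (37)
\]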

On the other hand,

\[
P(x_1L_1 + x_2L_2 \le R(x)) = \int_{\mathbb{R}} \int_{-\infty}^{(R(x) - x_1l_1)/x_2} f(l_1, l_2)\,dl_2\,dl_1,
\]

so differentiating both sides (using the integrability of $L_1$ to justify differentiation under the integral sign, e.g. (Billingsley 1995)[Theorem 16.8., Problem 16.5.]), from (37) we get

\[
\frac{\partial}{\partial x_1} P(x_1L_1 + x_2L_2 \le R(x)) = 0, \quad x_1, x_2 \ne 0, \qquad (38a)
\]

and analogously

\[
\frac{\partial}{\partial x_2} P(x_1L_1 + x_2L_2 \le R(x)) = 0, \quad x_1, x_2 \ne 0. \qquad (38b)
\]

Using the continuity of R, from (38) it now follows that there exists α ∈ [0, 1] such

that

P(x1 L1 + x2 L2 ≤ R(x)) = α, x1 , x2 ∈ R.

(39)

Also, since for $K \in \mathbb{R}$

\[
\frac{\partial}{\partial K} P(x_1L_1 + x_2L_2 \le K) = \frac{1}{x_2} \int_{\mathbb{R}} f\left(l_1, (K - x_1l_1)/x_2\right) dl_1, \quad x_2 \ne 0,
\]

with the analogous relationship holding for $x_1 \ne 0$, from the strict positivity of $f$ we conclude that for $(x_1, x_2) \ne 0$ the cdf of $x_1L_1 + x_2L_2$ is strictly increasing. Therefore, the claim follows from (39), the definition of $\mathrm{VaR}_\alpha$, and the strict monotonicity of the cdf of $x_1L_1 + x_2L_2$.

Remark 20. If one drops the condition of strict positivity of f , the same argument

yields existence of α ∈ [0, 1] such that R(x) = VaRα (x1 L1 + x2 L2 ) for every x =

(x1 , x2 ) ∈ R2 such that R(x) is not the point of constancy of the cdf of x1 L1 + x2 L2 .

Remark 21. The equality in the opposite direction, i.e. showing that the partial derivatives of VaR satisfy the equality given in the statement of the exercise, was established in Gourieroux et al. (2000). In Qian (2006) it was used to give a financial interpretation of the concept of risk contribution.

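Problem 60. Let $S_t$ be a geometric Brownian motion

\[
S_t = \exp\left(-\frac12\sigma^2 t + \sigma W_t\right), \quad t \ge 0, \qquad (40)
\]

and for some $0 \le s \le t$ put

\[
X_u = \begin{cases} 1, & u \in [0, s], \\ S_u/S_s, & u \in (s, t]. \end{cases}
\]

Show that for deterministic $a_t \ge 0$

\[
E \max_{u \in [0,t]} (a_u X_u) \le E \max_{u \in [0,t]} (a_u S_u). \qquad (41)
\]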

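Solution. [K. Zhao] Let

\[
\hat S_u = \begin{cases} X_u\, e^{-\frac12\sigma^2 u + \sigma Z_u}, & u \in [0, s], \\ X_u\, e^{-\frac12\sigma^2 s + \sigma Z_s}, & u \in (s, t], \end{cases}
\]

where $Z$ is a Wiener process independent of $W$. Then $\hat S$ and $S$ have the same distribution, so with $u^* := \arg\max_{u \in [0,t]} (a_u X_u)$ for (41) we have

\[
\begin{aligned}
\text{RHS} &\ge E\left[a_{u^*} X_{u^*}\, e^{-\frac12\sigma^2 u^* + \sigma Z_{u^*}} I_{\{u^* \in [0,s]\}}\right] + E\left[a_{u^*} X_{u^*}\, e^{-\frac12\sigma^2 s + \sigma Z_s} I_{\{u^* \in (s,t]\}}\right] \\
&= E\left[a_{u^*} I_{\{u^* \in [0,s]\}}\, E\left[e^{-\frac12\sigma^2 u^* + \sigma Z_{u^*}} \,\middle|\, u^*\right]\right] + E\left[a_{u^*} X_{u^*} I_{\{u^* \in (s,t]\}}\right] E\left[e^{-\frac12\sigma^2 s + \sigma Z_s}\right] \\
&= E\left[a_{u^*} I_{\{u^* \in [0,s]\}}\right] + E\left[a_{u^*} X_{u^*} I_{\{u^* \in (s,t]\}}\right] \\
&= \text{LHS}.
\end{aligned}
\]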

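Problem 61 (Duriez’ inequality). Let $S_t$ be a geometric Brownian motion

\[
S_t = \exp\left(-\frac12\sigma^2 t + \sigma W_t\right), \quad t \ge 0, \qquad (42)
\]

and put $X_{s,t} := \max_{u \in [s,t]} S_u$, $\hat X_{s,t} := \max_{u \in [s,t]} a_u S_u$, $0 \le s \le t$, where $a_t \ge 0$ are deterministic. Show that

\[
E\left[\frac{\hat X_{0,t}}{X_{0,s}} \,\middle|\, \mathcal{F}_s\right] \le E[\hat X_{0,t}] \quad \text{a.s.}, \quad 0 \le s \le t.
\]

Solution. We have

\[
\begin{aligned}
E\left[\frac{\hat X_{0,t}}{X_{0,s}} \,\middle|\, \mathcal{F}_s\right] &= E\left[\frac{\max(\hat X_{0,s}, \hat X_{s,t})}{X_{0,s}} \,\middle|\, \mathcal{F}_s\right] \\
&\le E\left[\max\left(\max_{u \in [0,s]} a_u, \frac{\hat X_{s,t}}{S_s}\right) \,\middle|\, \mathcal{F}_s\right] \\
&= E\left[\max\left(\max_{u \in [0,s]} a_u, \max_{u \in [s,t]} a_u S_{u-s}\right)\right],
\end{aligned}
\]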

whence the proof follows from Problem 60.

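Problem 62. Let $N_t$ be a continuous-time martingale taking values in $\{a, b\}$, $a, b \in \mathbb{R}$. Show that $N_t = N_0$ a.s. If $N_t$ is corlol, it is constant a.s.

Solution. If $a = b$ the claim is trivial. Suppose $a \ne b$ and put $\hat N_t := (N_t - a)/(b - a)$, so that $\hat N_t \in \{0, 1\}$. Then, since $\hat N_s^2 = \hat N_s$ for every $s \ge 0$ and, by the martingale property, $E[\hat N_t \hat N_0] = E[\hat N_0^2] = E[\hat N_0]$ and $E[\hat N_t] = E[\hat N_0]$, we have

\[
E[(\hat N_t - \hat N_0)^2] = E[\hat N_t] - 2E[\hat N_t \hat N_0] + E[\hat N_0] = E[\hat N_t] - 2E[\hat N_0] + E[\hat N_0] = 0, \quad t \ge 0.
\]

Therefore $N_t = N_0$ a.s. If $N_t$ is corlol, by the right-continuity we further conclude that it is constant a.s.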

Problem 63 (Feller). Consider the Feller diffusion

\[
dv_t = \lambda(\bar v - v_t)\,dt + \eta\sqrt{v_t}\,dW_t, \quad v_0, \lambda, \bar v, \eta > 0.
\]

Show that the origin is unattainable for $v_t$ if the Feller condition $2\lambda\bar v > \eta^2$ holds.

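Solution. We put $x_t = -\ln(v_t)$ and show that $x_t$ does not explode. To this end, note that with

\[
Af(x) := \left(\left(\frac12\eta^2 - \lambda\bar v\right) e^x + \lambda\right) \partial f(x) + \frac12\eta^2 e^x \partial^2 f(x), \quad f \in C^\infty(\mathbb{R})
\]

the process

\[
f(x_t) - \int_0^t Af(x_u)\,du, \quad t \ge 0
\]

is a martingale for every $f \in C^\infty(\mathbb{R})$ with $\|f\|, \|Af\| < \infty$.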

Fix a $g \in C^\infty(\mathbb{R})$ such that (see footnote 5) $g(x) = 1$ for $x \le 1$, $g(x) = 0$ for $x \ge 2$, and $g$ is strictly decreasing on $[1, 2]$. Let $g_n(x) := g(x/n)$, $n \in \mathbb{N}$. Then

\[
nAg_n(x) = \left(\left(\frac12\eta^2 - \lambda\bar v\right) \partial g(x/n) + \frac{1}{2n}\eta^2 \partial^2 g(x/n)\right) e^x + \lambda\,\partial g(x/n),
\]

whence, since $\eta^2/2 - \lambda\bar v < 0$, there exists (see footnote 6) $n_0$ such that $n \inf_x Ag_n(x) > -\lambda\|\partial g\|$ for every $n > n_0$. Therefore, since $\|g_n\|, \|Ag_n\| < \infty$ for every $n$, and $g_n$ is a bounded sequence converging pointwise to 1 on $\mathbb{R}$ with $\inf_{n > n_0} \inf_x Ag_n(x) > -\infty$, the result follows by Proposition 4.3.9 of Ethier & Kurtz (1986).

Problem 64. Suppose Xt , t ≥ 0 is a corlol supermartingale taking values in [0, ∞],

such that X0 ≠ ∞ a.s. Let T be the first contact time with ∞,

T := inf{t : Xt = ∞ or Xt− = ∞} (inf{∅} = ∞).

Then T = ∞ a.s..

Solution. For $\alpha > 0$ let

\[
f_\alpha(x) := \begin{cases} 1 - e^{-\alpha x}, & x \in [0, \infty), \\ 1, & x = \infty. \end{cases}
\]

Fix $\alpha > 0$ and note that in view of the optional stopping theorem and Jensen’s inequality the stopped process $f_\alpha(X^T_t)$, $t \ge 0$ is a corlol, bounded supermartingale. Therefore, $2E[f_\alpha(X_0)] \ge E[f_\alpha(X^T_t) + f_\alpha(X^T_{t-})]$ for every $t > 0$, whence by taking $\alpha \to 0+$, $t \to \infty$ we obtain $0 \ge P(T < \infty)$.

5 E.g. the classical mollifier can be used: $g(x) := e^{\frac{(x-1)^2}{(x-1)^2 - 1}}$ for $x \in [1, 2]$.

6 If $C := \min_{x \in [1,2]} (Ag(x) - \lambda\partial g(x)) \ge 0$ the claim is trivial, so suppose $C < 0$, and let $x^* := \arg\min_{x \in [1,2]} (Ag(x) - \lambda\partial g(x))$. Then $n_0$ can be taken as $\left\lceil \frac{2\eta^2 \partial^2 g(x^*)}{(2\lambda\bar v - \eta^2)\,\partial g(x^*)} \right\rceil$ (note that $\partial^2 g(x^*) < 0$, due to strict monotonicity of $g$, implies $\partial g(x^*) < 0$).

Problem 65 (Feller’s test for explosion). Suppose A ⊂ C(R̄) × B(R̄) is such that

there exists λ ≥ 0, C ∈ R and f ∈ D(A) with f (∞) = ∞, f (x) ≥ 0, Af (x) ≤ λf (x)

for every x ∈ [0, ∞). Let Xt be a R̄-valued, corlol process with X0 = 0, and put

Tn := inf{t : Xt ≥ n or Xt− ≥ n}, n ∈ N. Suppose that Xt is a solution of the

stopped martingale problem for (A, Tn ) for every n ∈ N. Then Xt is a.s. R ∪ {−∞}-valued.

Solution. Fix an arbitrary $n \in \mathbb{N}$. Let $T_0 := \inf\{t : X_t \le 0 \text{ or } X_{t-} \le 0\}$, $Y_t := X(T_0 \wedge T_n \wedge t)$, $t \ge 0$. It is routine to verify (see, e.g., Proposition 4.3.1 of Ethier & Kurtz (1986)) that the process

$$e^{-\lambda t} f(Y_t) + \int_0^t e^{-\lambda u}\bigl(\lambda f(Y_u) - Af(Y_u)\bigr)\,du, \qquad t \ge 0,$$

is a corlol martingale. Since $Af(x) \le \lambda f(x)$, $f(x) \ge 0$, $\forall x \in [0, \infty)$, the process $e^{-\lambda t} f(Y_t)$ is a nonnegative (corlol) supermartingale, and the claim follows from Problem 64.
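The supermartingale property claimed above can be checked directly. The following is a sketch in which $M_t$ and $J_t$ are labels introduced here (they do not appear in the source) for the martingale displayed above and its integral term, and $\{\mathcal{F}_t\}$ denotes the underlying filtration. It assumes that $Y_u \in [0, \infty)$ for the times $u$ entering the integral, so that the integrand is nonnegative.

```latex
\begin{align*}
M_t &:= e^{-\lambda t} f(Y_t) + J_t, \qquad
J_t := \int_0^t e^{-\lambda u}\bigl(\lambda f(Y_u) - Af(Y_u)\bigr)\,du, \\
% J_t is nondecreasing in t because the integrand is nonnegative,
% hence E[J_t | F_s] >= J_s for s <= t
E\bigl[e^{-\lambda t} f(Y_t) \,\big|\, \mathcal{F}_s\bigr]
  &= M_s - E[J_t \mid \mathcal{F}_s] \\
  &\le M_s - J_s = e^{-\lambda s} f(Y_s), \qquad 0 \le s \le t.
\end{align*}
```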

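Problem 66 (Martingale lemma of Kurtz & Ocone (1988)). Let $\{(U_t, \mathcal{F}_t),\ t \in [0, \infty)\}$ and $\{(V_t, \mathcal{F}_t),\ t \in [0, \infty)\}$ be two continuous adapted processes on $(\Omega, \mathcal{F}, P)$ such that

$$N_t := U_t - \int_0^t V_s\,ds, \qquad t \ge 0,$$

is an $\{\mathcal{F}_t\}$-martingale. If $\{\mathcal{G}_t\}$ is a filtration with $\mathcal{G}_t \subset \mathcal{F}_t$, $t \ge 0$, show that the process

$$M_t := E[U_t \mid \mathcal{G}_t] - \int_0^t E[V_s \mid \mathcal{G}_s]\,ds, \qquad t \ge 0,$$

is a $\{\mathcal{G}_t\}$-martingale.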

Solution. Using the tower property of conditional expectation repeatedly, we obtain

$$E[M_t - M_s \mid \mathcal{G}_s] = E[N_t - N_s \mid \mathcal{G}_s] = E\bigl[E[N_t - N_s \mid \mathcal{F}_s] \,\big|\, \mathcal{G}_s\bigr] = 0, \qquad 0 \le s \le t.$$
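Written out, the first equality in this chain also relies on the tower property. The sketch below assumes enough integrability to interchange the conditional expectation with the time integral, a conditional Fubini step that the solution leaves implicit.

```latex
\begin{align*}
E[M_t - M_s \mid \mathcal{G}_s]
 &= E\bigl[E[U_t \mid \mathcal{G}_t] \,\big|\, \mathcal{G}_s\bigr]
    - E[U_s \mid \mathcal{G}_s]
    - \int_s^t E\bigl[E[V_u \mid \mathcal{G}_u] \,\big|\, \mathcal{G}_s\bigr]\,du \\
 &= E\Bigl[U_t - U_s - \int_s^t V_u\,du \,\Big|\, \mathcal{G}_s\Bigr]
    && (\mathcal{G}_s \subset \mathcal{G}_u \text{ for } u \ge s) \\
 &= E[N_t - N_s \mid \mathcal{G}_s]
  = E\bigl[E[N_t - N_s \mid \mathcal{F}_s] \,\big|\, \mathcal{G}_s\bigr] = 0
    && (\mathcal{G}_s \subset \mathcal{F}_s).
\end{align*}
```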

Problem 67 (Kurtz & Ocone (1988)). Let $\{(U_t, \mathcal{F}_t),\ t \in [0, \infty)\}$ and $\{(V_t, \mathcal{F}_t),\ t \in [0, \infty)\}$ be two continuous adapted processes on $(\Omega, \mathcal{F}, P)$. Show that if

$$f(U_t) - \int_0^t V_s f'(U_s)\,ds, \qquad t \ge 0,$$

is an $\{\mathcal{F}_t\}$-martingale for each $f \in \hat{C}^{(1)}(\mathbb{R})$, then a.s.

$$U_t = U_0 + \int_0^t V_s\,ds, \qquad t \ge 0.$$

Solution. Put $T_n := \inf\{t \ge 0 : |U_t| > n\}$. Then for every $f \in \hat{C}^{(1)}(\mathbb{R})$

$$M_t^{(f)} := f(U_t^{T_n}) - \int_0^t V_s I_{\{s \le T_n\}} f'(U_s^{T_n})\,ds, \qquad t \ge 0, \tag{43}$$

is an $\{\mathcal{F}_t\}$-martingale, hence by a density argument on the compact $[-n, n]$ the expression in (43) is also a martingale for every $f \in C^{(2)}(\mathbb{R})$. Therefore, by Proposition VII.2.4 of Revuz & Yor (1999) it follows that $[M^{(f)}]_t \equiv 0$, so

$$U_t^{T_n} = U_0 + \int_0^{t \wedge T_n} V_s\,ds, \qquad t \ge 0,$$

whence the result follows by taking $n \to \infty$.
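The vanishing of the quadratic variation can also be seen directly. This is a sketch of one possible route, not necessarily the argument behind the cited proposition. The stopping at $T_n$ is suppressed in the notation, $B_t$ is a label introduced here for the integral term, and it is assumed that both $f$ and $f^2$ are admissible test functions.

```latex
\begin{align*}
f(U_t) &= M^{(f)}_t + B_t, \qquad B_t := \int_0^t V_s f'(U_s)\,ds, \\
% Ito's formula for the square of the continuous semimartingale f(U):
f(U_t)^2 &= f(U_0)^2 + 2\int_0^t f(U_s)\,dM^{(f)}_s
            + 2\int_0^t f(U_s) V_s f'(U_s)\,ds + [M^{(f)}]_t, \\
% (f^2)' = 2 f f', so the martingale associated with f^2 is
M^{(f^2)}_t &= f(U_t)^2 - 2\int_0^t V_s f(U_s) f'(U_s)\,ds
             = f(U_0)^2 + 2\int_0^t f(U_s)\,dM^{(f)}_s + [M^{(f)}]_t .
\end{align*}
```

Hence $[M^{(f)}]$ is a continuous local martingale that is nondecreasing and starts at 0, so it vanishes identically, and consequently $M^{(f)}_t = M^{(f)}_0 = f(U_0)$ for all $t \ge 0$.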

Problem 68. Let $E$ be a metric space, $A \subset B(E) \times B(E)$, and suppose that $X_t$ is a solution of the martingale problem for $A$ with sample paths in $D_E[0, \infty)$. Show that, if $\Gamma \in \mathcal{B}(E)$ is such that $(I_\Gamma, 0)$ belongs to the bp-closure of $A \cap (\bar{C}(E) \times B(E))$, then

$$P\bigl(\{X \in D_E[0, \infty)\} \,\Delta\, \{X_0 \in \Gamma\}\bigr) = 0.$$

Solution. Fix an $\{\mathcal{F}_t^X\}$-stopping time $T$ with $P\{T < \infty\} = 1$, and put $N_t \equiv I_\Gamma(X_t)$. By the Optional Sampling Theorem, for every $(f, g) \in A \cap (\bar{C}(E) \times B(E))$

$$f(X_t^T) - \int_0^{t \wedge T} g(X_s)\,ds, \qquad t \ge 0,$$

is an $\{\mathcal{F}_t^X\}$-martingale. Since $(I_\Gamma, 0)$ belongs to the bp-closure of $A \cap (\bar{C}(E) \times B(E))$, $N_t^T$ is an $\{\mathcal{F}_t^X\}$-martingale, so by Problem 62 $N_t^T = N_0$ a.s., whence by letting $t \to \infty$