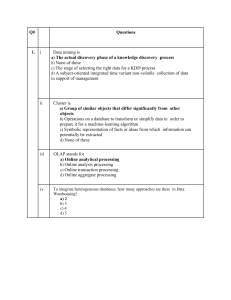

Recommendation using Frequent Itemset Mining in Big Data

Unfortunately, the memory requirements for handling the complete set of candidate itemsets blow up quickly and render Apriori-based schemes very inefficient on single machines. Secondly, current approaches tend to keep the output and runtime under control by increasing the minimum frequency threshold, which automatically reduces the number of candidate and frequent itemsets. However, studies in recommendation systems have shown that itemsets with lower frequencies are more interesting [23]. Therefore, we still see a clear need for methods that can deal with low frequency thresholds in Big Data.

Parallel programming is becoming a necessity for dealing with the massive amounts of data that are produced and consumed more and more every day. Parallel programming architectures, and hence the algorithms, can be grouped into two major subcategories: shared memory and distributed (shared-nothing). On shared memory systems, all processing units can concurrently access a shared memory area. Distributed systems, on the other hand, are composed of processors that have their own internal memories and communicate with each other by passing messages [6]. It is generally easier to adapt algorithms to shared memory parallelism, but they are typically not scalable enough.

Frequent itemset mining

Association rule mining is one of the major techniques in data mining. It is used to find associations, that is, patterns that occur frequently, in transactional databases and other repositories. It is mainly used in inventory management, retail, agriculture, marketing, bioinformatics, etc. The major task in finding associations is to search for interesting relationships among itemsets in a given database. Many algorithms have been proposed to find frequent patterns [10]; two of them are the Apriori algorithm and the FP-growth approach. In association rule mining, we look for the patterns that occur frequently.
An itemset is a set of one or more items. If an itemset occurs frequently, it is called a frequent itemset. Various algorithms can find these patterns, including Apriori and the FP-growth approach.

Apriori

The algorithm is based on the notion of support. The support is simply the number of transactions in which a specific product (or combination of products) occurs. The first step of the algorithm is to compute the support of each individual item, which comes down to counting, for each product, the number of transactions in which it occurs.

Apriori algorithm

The first step is to compute the support of each item. Once that is done, a minimum support is set, and every item whose support is below it is filtered out. The same counting and filtering is then applied to pairs of the remaining items, and the process is repeated for progressively larger itemsets. Once the largest frequent itemsets have been found, the next step is to convert them into association rules.

Confidence is a measure of how often an association rule holds. A confidence of 100% means that the association always occurs; a confidence of 50% means that the rule holds in only about half of the transactions in which its antecedent occurs. Lift measures the importance of a rule.

Frequent itemset mining:
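
As a rough illustration of the Apriori steps and the rule measures described above, the following Python sketch counts support, filters by a minimum support, grows itemsets level by level, and then derives rules with their confidence and lift. It is a minimal sketch under stated assumptions, not the method of any particular paper or library: the toy transactions, the function names (`support_count`, `apriori`, `rules`) and the threshold values are made up for illustration, support is taken as an absolute transaction count, and lift is computed with its standard definition (confidence divided by the fraction of transactions containing the consequent), which the text above does not spell out. For simplicity, rules are generated from every frequent itemset with at least two items rather than only from the largest ones.

```python
from itertools import combinations

# Hypothetical toy data: each transaction is the set of products bought together.
transactions = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "butter"},
    {"bread", "milk", "butter", "eggs"},
]


def support_count(itemset, transactions):
    """Number of transactions that contain every item of `itemset`."""
    return sum(1 for t in transactions if itemset <= t)


def apriori(transactions, min_support):
    """Return {itemset: support count} for all itemsets meeting min_support."""
    items = {item for t in transactions for item in t}
    current = {frozenset([item]) for item in items}  # candidate 1-itemsets
    frequent = {}
    k = 1
    while current:
        # Count the support of each candidate and drop the infrequent ones.
        counts = {c: support_count(c, transactions) for c in current}
        level = {c: n for c, n in counts.items() if n >= min_support}
        frequent.update(level)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into candidates of size k.
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step: keep a candidate only if all its (k-1)-subsets are frequent.
        current = {
            c for c in candidates
            if all(frozenset(s) in level for s in combinations(c, k - 1))
        }
    return frequent


def rules(frequent, n_transactions, min_confidence):
    """Return (antecedent, consequent, confidence, lift) for each kept rule."""
    found = []
    for itemset, count in frequent.items():
        for size in range(1, len(itemset)):
            for antecedent in map(frozenset, combinations(itemset, size)):
                consequent = itemset - antecedent
                # Share of transactions with the antecedent that also
                # contain the consequent.
                confidence = count / frequent[antecedent]
                # Confidence relative to how common the consequent is overall.
                lift = confidence / (frequent[consequent] / n_transactions)
                if confidence >= min_confidence:
                    found.append((antecedent, consequent, confidence, lift))
    return found


frequent = apriori(transactions, min_support=3)
found = rules(frequent, len(transactions), min_confidence=0.7)
for antecedent, consequent, confidence, lift in found:
    print(sorted(antecedent), "->", sorted(consequent),
          f"confidence={confidence:.2f}", f"lift={lift:.2f}")
```

The lookups `frequent[antecedent]` and `frequent[consequent]` are safe because every subset of a frequent itemset is itself frequent, which is the same property the prune step relies on. The sketch rescans all transactions for every candidate, so it is meant to make the steps concrete rather than to be efficient on large data.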