Systematic Reviews in International Development: 10-Year Review

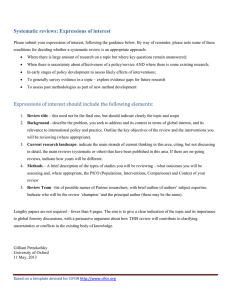

Journal of Development Effectiveness
ISSN: 1943-9342 (Print) 1943-9407 (Online)
Journal homepage: https://www.tandfonline.com/loi/rjde20

To cite this article: Hugh Waddington, Edoardo Masset & Emmanuel Jimenez (2018) What have we learned after ten years of systematic reviews in international development?, Journal of Development Effectiveness, 10:1, 1-16, DOI: 10.1080/19439342.2018.1441166

To link to this article: https://doi.org/10.1080/19439342.2018.1441166

Published online: 21 Mar 2018.

JOURNAL OF DEVELOPMENT EFFECTIVENESS, 2018, VOL. 10, NO. 1, 1–16

EDITORIAL

What have we learned after ten years of systematic reviews in international development?

Hugh Waddington (a), Edoardo Masset (b) and Emmanuel Jimenez (c)

(a) International Initiative for Impact Evaluation (3ie), London International Development Centre, London School of Hygiene and Tropical Medicine (LSHTM), London, UK; (b) Centre for Development, Impact and Learning, LSHTM, London, UK; (c) 3ie, New Delhi, India

ABSTRACT
The paper discusses the role of systematic evidence in helping make better decisions to reach global development targets. Coming at the end of the first decade of serious funding and support for systematic evidence generation in development economics and development studies, the paper presents opportunities and challenges for the continued development of systematic review methodologies. It concludes by introducing the papers collected in the issue, which make and demonstrate the case for theory-based approaches to evidence synthesis.

ARTICLE HISTORY
Received 12 February 2018
Accepted 12 February 2018

KEYWORDS
Systematic review; impact evaluation; sustainable development goals; evidence-based policy

“The intellectual climate has changed quite dramatically over the last few decades. . . One set of prejudices has given way to another – opposite – set of preconceptions. Yesterday’s unexamined faith has become today’s heresy, and yesterday’s heresy is now the new superstition. . . The need for critical scrutiny of standard preconceptions and political-economic attitudes has never been stronger.”
Amartya Sen, Development as Freedom (1999, 111-12).

“In my view a systematic review is gold dust. A systematic review is a global public good and I would like to see these commissioned much more from communities of practice.”
Richard Manning, former Chair of the OECD Development Assistance Committee and 3ie Board.

1. The central role of systematic evidence in evidence-based policy

Reliable evidence is essential for making good choices in international development. Decision makers are demanding better data, partly in recognition of the need to ensure coverage and quality of programmes to meet ambitious Sustainable Development Goal (SDG) targets by 2030 (United Nations 2015a). Some Millennium Development Goals (MDGs), such as maternal and child mortality and sanitation targets, were missed by a wide margin (United Nations 2015b). Others, such as the halving of global dollar-a-day poverty and lack of access to drinking water, were reached but substantial progress will be needed to attain universal coverage and ensure ‘no one is left behind’. This implies that big improvements in resource allocation are needed over a short period of time, and a recognition of the crucial role that rigorous and relevant evidence can play in facilitating that change.
CONTACT Hugh Waddington hwaddington@3ieimpact.org
© 2018 Informa UK Limited, trading as Taylor & Francis Group

Rigorous primary studies that can answer questions about ‘what difference’ development interventions make to people’s lives and ‘why’ have expanded rapidly since 2000 (Cameron, Mishra and Brown 2016). They can help improve decisions around scaling up, scaling down or redesigning projects in the contexts in which they are carried out. However, results from these primary impact studies are often not communicated in relevant formats for decision-making. Even when efforts are made to ensure results are available, the reliability and generalisability of that evidence to other contexts are uncertain. A key additional value of systematic reviews is in the work of systematically collecting, extracting and synthesising policy-important findings from these studies and presenting implications for policy and practice.

Systematic reviews are not literature reviews. Literature reviews enable academics to communicate among themselves the latest developments in a field. Systematic reviews, on the other hand, are undertaken by teams of researchers primarily to help those outside research take decisions – what we may refer to as the ‘5 Ps’ (Rader et al. 2014): programme participants (and their families and communities), practitioners, policy makers, the press, and members of the public. Systematic reviewing is rather more like primary research, in the approach taken to formulating questions, collecting data, critical appraisal and analysis (Cooper 1982). This is clear, for example, from the efforts that should be made for internal-study quality assurance (double coding and checking), or to extract and to transform data reported in primary studies into policy-relevant information, and the transparency in reporting results based on analysis and policy implications based on results. For this reason, 'systematic reviews and meta-analysis...
embody a scientific approach' to the synthesis of existing evidence (Littell, Corcoran, and Pillai 2008, 1).

In 2012, a special issue of the Journal of Development Effectiveness was devoted to systematic reviews (White and Waddington 2012). Papers in that issue made the case for rigorous, theory-based systematic reviews that answered relevant questions using mixed-methods approaches. Now, nearing the end of this first decade of global production of systematic evidence in development studies and development economics,1 we return to the topic to provide an assessment of the progress made, drawing on examples of recent reviews. In the next sections of this introduction, we present opportunities and challenges to ensure rigour and relevance in reviewing: using programme theory; and keeping reviews up to date. The final section introduces the papers contained in this issue.

2. Fostering learning by using programme theory and telling a good story

The production of systematic reviews on development topics has expanded dramatically in the past decade. The International Initiative for Impact Evaluation’s (3ie’s) Systematic Reviews Repository now contains over 600 completed reviews.2 Table 1 provides a list of completed reviews in international development, commissioned largely by bilateral and multilateral donors and produced by 3ie in partnership with the Campbell Collaboration International Development Coordinating Group (IDCG).

There have been a number of calls for the incorporation of programme theory into systematic reviews over the years (for example, Pawson 2002; Davies 2006; van der Knaap et al., 2008; Waddington et al., 2009; Anderson et al. 2011; Waddington et al. 2012; Snilstveit 2012; Kneale, Thomas, and Harris 2015; Maden et al. 2017; White 2018), as well as for multi-disciplinary working (for example, Thomas et al. 2004; Snilstveit 2012; Oliver et al. 2017; White 2018).
Programme theory is usually incorporated into systematic reviews through logic models (flow diagrams which present the intervention causal chain from inputs through to final outcomes) or theories of change (which articulate the assumptions underlying the causal chain and the contexts and stakeholders for whom the intervention is relevant), and sometimes through economic, social or psychological theory to help articulate programme mechanisms. As indicated in Table 1, the importance of using theory to develop relevant review questions, structure evidence collection, and present findings is well-recognised by reviewers working in international development. One often hears the argument that reviews (and primary studies) drawing solely on quantitative causal evidence from impact evaluations are unable to answer questions about why interventions are successful or not. This is not true since reviews drawing on programme theory that collect Disability Climate change Agriculture Topic or sector The impact of land property rights interventions on investment and agricultural productivity Effectiveness of agricultural certification schemes for improving socio-economic outcomes Effects of training, innovation and new technology on African smallholder farmers’ economic outcomes and food security The effectiveness of contract farming for raising income of smallholders Review title Evidence included (synthesis approach) Theory of change 20 quantitative causal studies (metaanalysis) 9 qualitative studies (views) Theory of change 43 quantitative causal studies (metaanalysis) 136 qualitative studies (thematic synthesis) Logic model 19 quantitative causal studies (metaanalysis) Programme theory Theory of change 22 quantitative causal studies (metaanalysis) 26 qualitative comparative analysis (QCA) Waddington et al. 
(2014) * Farmer field schools for improving Theory of change 93 quantitative causal studies (metafarming practices and farmer analysis) outcomes 20 qualitative studies (thematic synthesis) 337 project documents (portfolio review) Samii et al. (2014a) Effects of decentralised forest Logic model 8 quantitative causal studies (metamanagement (DFM) on analysis) deforestation and poverty 4 qualitative studies (contextual) Samii et al. (2014b) Effects of payment for Logic model 11 quantitative causal studies (metaenvironmental services (PES) on analysis) deforestation and poverty 9 qualitative contextual studies (narrative) Iemmi et al. (2016) * Community-based rehabilitation Logic model 15 quantitative causal studies (metafor people with disabilities analysis, narrative synthesis) Logic model 14 quantitative causal studies (metaTripney et al. (2015) Interventions to improve the analysis, narrative synthesis) labour market situation of adults with physical and/or sensory disabilities Ton et al. (2017) Stewart et al. (2016) * Oya et al. (2017) * Lawry et al. (2017) Reference Table 1. Systematic reviews produced by 3ie and the Campbell international development coordinating group. Funder/question setter 3ie Sightsavers 12/2013 (Continued ) NORAD 11/2013 07/2012 Norwegian Aid Agency (NORAD) Millennium Challenge Corporation 10/2012 11/2013 3ie Global Affairs Canada (GAC) 3ie DFID 10/2015 02/2015 07/2016 10/2012 Date of last search JOURNAL OF DEVELOPMENT EFFECTIVENESS 3 Molina et al. (2016) Doocy and Tappis (2018) Humanitarian Vaessen et al. (2014) Piza et al. (2016) * Brody et al. (2017) * Tripney et al. 
(2013) Interventions to improve the labour market outcomes of youth Post-basic technical and Theory of change 26 quantitative causal studies (metavocational education and analysis) training (TVET) interventions to improve employability and employment of TVET graduates Can economic self-help group Theory of change 13 quantitative causal studies (metaprogrammes improve women’s analysis) empowerment? 11 qualitative studies (participant views) Business support for small and Theory of change 36 quantitative causal studies (metamedium enterprises analysis) 72 quantitative and qualitative contextual studies (narrative synthesis) The effect of microcredit on Logic model 25 quantitative causal studies (metawomen’s control over analysis) (‘realist synthesis’ of household spending theory contained in quantitative studies) Community monitoring Theory of change 15 quantitative causal studies (metainterventions to curb corruption analysis) and increase access and quality 6 quantitative and qualitative of service delivery contextual studies (narrative synthesis) The effectiveness and efficiency of Logic model 5 quantitative causal (narrative cash-based approaches in synthesis) emergencies 10 cost studies (narrative synthesis) 108 implementation studies (narrative synthesis) Kluve et al. (2017) 11/2014 11/2013 12/2011 12/2014 02/2014 09/2012 DFID 3ie 3ie GAC 3ie AusAID (Continued ) Programme Evidence included (synthesis theory approach) Date of last search Funder/question setter Theory of change 35 quantitative causal studies (meta09/2016 GAC and UN Women analysis) 50 qualitative studies (narrative meta-synthesis) Theory of change 113 quantitative causal studies (meta01/2015 GAC analysis) Review title Vocational and business training to improve women’s labour market outcomes Reference Chinen et al. (2017) * Governance Finance and economy Topic or sector Employment and skills Table 1. (Continued). 
4 EDITORIAL Schooling Nutrition Berg and Denison (2012) Public health Review title Petrosino et al. (2012) Interventions in developing nations for improving primary and secondary school enrolments Interventions to reduce the prevalence of female genital mutilation/cutting in African countries De Buck et al. (2017) * Promoting handwashing and sanitation behaviour change in low- and middle-income countries Polec Augustincic et al. Strategies to increase the (2015) ownership and use of insecticide-treated bednets to prevent malaria Welch et al. (2016; 2017) Deworming and adjuvant interventions for improving the developmental health and wellbeing of children Kristjansson et al. (2016) * Food supplementation for improving the physical and psychosocial health of socioeconomically disadvantaged children aged 3 months to 5 years Baird et al. (2014) Relative effectiveness of conditional and unconditional cash transfers for schooling outcomes Carr-Hill et al. (2018) The effects of school-based decision-making on educational outcomes Reference Topic or sector Table 1. (Continued). 
Evidence included (synthesis approach) 12/2009 01/2015 Theory of change 26 quantitative causal studies (metaanalysis) 9 qualitative studies (framework synthesis) Tabular theory 73 quantitative causal studies (metawith analysis) mechanisms 02/2014 01/2016 3ie DFID (Continued ) Australian Aid (AusAID) 3ie CIHR and World Health Organisation Canadian Institutes of Health Research (CIHR) 02/2013 04/2013 34 quantitative causal studies (metaanalysis) 61 quantitative and qualitative studies (realist review) 65 quantitative causal studies (metaanalysis, network meta-analysis) Water Supply and Sanitation Collaborative Council 03/2016 Funder/question setter 3ie 03/2011 Date of last search Theory of change 35 quantitative causal studies (metaanalysis) Logic model Logic model Tabular theory of 8 quantitative causal studies (metachange analysis, narrative synthesis) 27 qualitative and qualitative studies (narrative) Theory of change 42 quantitative causal studies (metaanalysis, narrative synthesis) 28 qualitative (‘best fit framework synthesis’) Logic model 10 quantitative causal studies (metaanalysis) Programme theory JOURNAL OF DEVELOPMENT EFFECTIVENESS 5 Higginson et al. (2015) Coren et al. (2014) Parental, community and familial support interventions to improve children’s literacy Services for street-connected Logic model children and young people in low- and middle-income countries A thematic synthesis Preventive interventions to reduce Logic model youth gang violence in lowand middle-income countries Spier et al. 
(2016) * 13 quantitative causal studies (narrative synthesis) 30 qualitative studies (thematic synthesis) 4 quantitative causal studies (thematic synthesis of views) 09/2013 07/2013 3ie 3ie Programme Evidence included (synthesis theory approach) Date of last search Funder/question setter Theory of change 238 quantitative causal studies (meta06/2015 3ie analysis) 120 qualitative studies (narrative synthesis of barriers and enablers) Logic model 10 quantitative causal studies (meta07/2013 USAID analysis) Review title The impact of education programmes on learning and school participation Reference Snilstveit et al. (2015) * * indicates 3ie Systematic Review Summary Report is available or planned. Social inclusion Topic or sector Table 1. (Continued).

evidence on outcomes along the causal chain can explain heterogeneity in findings – usually variation in quality of life outcomes due to differences in rates of programme adherence (for example, Waddington and Snilstveit 2009; Welch et al. 2017). However, analysis of the rest of the causal chain usually requires turning to evidence in studies which are often excluded from systematic reviews on the grounds of study design. These mixed-methods reviews are able to answer some of the most pressing development questions for policymakers and implementers – reasons for successful implementation and participation drawing on participant or implementer views, the effectiveness of targeting, unintended or adverse outcomes for vulnerable groups, or questions about cost-effectiveness.

We are learning important things from these reviews. For example, systematic reviews in agriculture show:

● Land tenure reform tends to increase agricultural productivity and incomes in Asia and Latin America, but not in sub-Saharan Africa where customary tenure may already provide tenure security, or farmers are too poor to invest without additional support (Lawry et al. 2017).
Land reform may also have negative consequences, such as conflict, displacement, or reduced property rights for women, as the qualitative evidence in this review indicated.
● Top-down agricultural extension does not appear to be effective in improving harvests for African smallholders (Stewart et al. 2016). On the other hand, farmer field schools (FFS), a bottom-up learning approach, tend to improve outcomes along the causal chain (knowledge, adoption, yields, income) for project participants. But evidence suggests that these FFS do not work at scale due to problems in recruiting, training and supporting FFS facilitators, and they may not be cost-effective as there are no spillovers to non-participants (Waddington and White 2014).
● Certification schemes are effective in raising prices and income from agriculture, but do not usually improve household income and wages (Oya et al. 2017). Costs of implementing standards can prevent poor farmers joining the schemes, and training is often not oriented to the needs of smallholders and workers.
● Contract farming may increase farmer income substantially, between 40 and 87 per cent on average, but poorer farmers are not usually part of the schemes and biases in the primary research studies mean that impacts are likely overestimated (Ton et al. 2017).
● Payments for environmental services are effective in reducing deforestation and increasing forest cover, and in improving household incomes (Samii et al., 2014b). But the effects are small and unlikely to justify the costs of the schemes, and may not benefit poor people.

Reviews could still do more to engage with theory and evidence on second order outcomes (see also Brown 2016).
For example, in evaluating effects on net employment, wages and prices, reviews need to draw on appropriate economic theory and incorporate evidence of spillover effects collected from non-participants more consistently (assuming that the primary studies with appropriate clustering are available), or studies that measure or simulate outcomes in general equilibrium (for a forthcoming example see Dorward et al. 2014).

While there has been much progress in how reviews incorporate theory to ensure relevance, there are legitimate concerns about the ways in which findings from reviews are communicated, often in formats impenetrable to decision makers or insufficiently nuanced to apply to complex interventions and contexts. Review findings need to be written in easily accessible language and available to a wide audience of policymakers, international development professionals, and other users of evidence. Campbell produces short, plain language summaries,3 and Cochrane’s summary of findings tables can effectively communicate technical information about the evidence base. These methods are useful but not sufficient to communicate the nuanced findings typical of reviews of (often, multiple) complex interventions implemented throughout the developing world. 3ie publishes a series of Systematic Review Summary reports, which present review evidence structured around the theory of change, and focus more clearly on how the interventions themselves work and the reasons underlying heterogeneity in findings. Examples of these summaries are in Table 1 (De Buck et al. 2017; Kristjansson et al. 2016; Oya et al. 2017; Snilstveit et al. 2016; Stewart et al., 2016; Waddington and White 2014).

3. Keeping reviews up to date

Systematic reviews need to be kept up to date.
The field of impact evaluation is fast expanding, with new studies produced at a rate of around 250 per year in health, nutrition and population, 100 new studies per year in education, and 50 new studies each in agriculture and social protection sectors (Cameron, Mishra, and Brown 2016). In contrast, an average systematic review in international development includes 10 to 20 studies, and often fewer. Reviews may become outdated quickly, requiring regular updates to ensure currency of searches. The evolution of the MDGs to SDGs is also likely to have caused shifts in priorities, scope, questions, outcomes and interventions that are of relevance for policy, programming and practice. The shift towards promoting universal coverage is likely to generate a change in the outcomes of interest of a review – for example greater interest in the equity of the distribution of outcomes and gender and equity-responsive sub-group analysis (for example, by sex, gender, age, ethnicity and so on). In addition, the focus of primary research is likely to shift from first generation evaluation questions (does intervention work compared to doing nothing or ‘standard practice’?) to second generation comparative questions about different ways of reaching a particular goal (is intervention a relatively more effective than intervention b?) and implementation (how to most effectively deliver the intervention?). As well as updating searches, answering these questions may require new theories of change, eligible evidence, data collection (for example, population sub-groups and moderator variables) and so on, hence updating the scope of the review. In addition, we have limited confidence in the findings of many international development systematic reviews. 
3ie’s Repository of Systematic Reviews includes an assessment of the methodological quality of each review included in the database.4 Only 31 per cent of reviews with information publicly available about methodological quality are rated as having minor limitations, while 69 per cent have some important or major limitations. Reviews are often based on inadequate searches (for example, limited to published studies, omitting important international development databases, only searching English language sources), quality assessment (for example, lack of risk of bias analysis or inappropriate approach to critical appraisal) and synthesis methods (for example, use of statistical significance vote-counting).

Finally, most systematic reviews are undertaken without planned, effective stakeholder engagement. Failure to ensure the demand for and likely usefulness of a review can consign the work to irrelevancy and limited uptake. Engaging with a range of users from the outset can ensure the review asks the right questions, and fosters ownership of the evidence in key communities and decision makers (3ie, 2015). There are different models of stakeholder participation in systematic reviews depending on the degree of control over the review, the type of engagement whether individual or group, and the type of dialogue (Rees and Oliver 2012).

In sum, reviews may need to be updated against multiple criteria. We identify four main types of systematic review update:

● Update search to incorporate new evidence. Outdated reviews or reviews based on ‘old evidence’, perhaps from policy contexts which are no longer relevant, may provide biased advice to policy and practice.
● Update scope to ensure reviews answer relevant questions. For example, there may be greater demand from policy-makers for reviews which answer different evaluation questions or which pay attention to outcomes or experiences of particular groups (for example, disadvantaged people). In these cases it would be more efficient to expand existing reviews on similar topics rather than producing new ones from scratch.
● Update quality to ensure reviews use up-to-date methods. Reviews often omit unpublished literature, fail to conduct comprehensive critical appraisal of included studies, or use inappropriate methods of synthesis. In addition, methodological developments allow analyses that were not previously possible, or incorporate new tools or new software.
● Update engagement to improve the user experience of existing reviews and hence uptake by decision-makers. The objective of systematic reviews is to inform policy and practice and one component of that is to ensure that reviews are informed by appropriate stakeholder engagement processes and that findings are presented in user-friendly formats.

There is, currently, no consensus in the literature regarding when and how a systematic review should be updated. Cochrane recommends updating systematic reviews every two years. However, the rationale for this time cut-off is not clear, and it is not normally respected – only 38 per cent of Cochrane reviews are updated within two years of publication, and only 3 per cent of reviews published in peer-review journals are updated within two years of publication (Moher et al. 2008). The Campbell Collaboration (2016) allows authors of reviews up to five years after publication of an original review to undertake an update. 3ie, a major source of systematic reviews, has proposed a policy in which development reviews (funded by 3ie or others) are ranked according to priority for the need of update (search, scope, quality or engagement). 3ie plans to publish the list and advocate for updating reviews with funders of systematic reviews in coordination with other institutions producing reviews. 3ie also incorporates updates as a means of building research capacity in its reviews programme (for example, Waddington and Snilstveit 2009).
Technical developments in updates have mostly consisted of methods for incorporating new evidence from clinical trials into existing meta-analyses. Efforts have focused on producing statistical tools that assess the need for updating and its benefit on existing meta-analyses (Sutton et al. 2009). This type of research is entirely focused on the incorporation of additional effect sizes as new evidence from primary studies becomes available. In sectors where primary evidence generation is rapid, reviews of clusters of interventions that were previously ‘lumped’ together may be ‘split’ by intervention in the update, to incorporate broader mixed-methods evidence to answer questions about implementation, for example. Researchers have developed models that update quantitative as well as qualitative components of the review. These approaches take into account the policy relevance of the review and changes in the policy environments in addition to changes in review methodology. This approach has led to multicomponent decision tools based on a mix of qualitative and quantitative judgments (Takwoingi et al. 2013). More radical approaches have also been suggested. These include the production of ‘living systematic reviews’ whereby reviews are an online document which is persistent, dynamic and constantly updated as more evidence becomes available (Elliott et al. 2014). Others have proposed an automated process for updating reviews, whereby the systematic review, or part of it, such as the search and the meta-analysis, is entirely produced by machines at the press of a button (Tsafnat et al. 2013). The first generation of international development systematic reviews had relatively high fixed costs for various reasons including the need to build capacity in a community of practice. 
In theory, the costs of second generation reviews, especially updates, will be lower because that capacity has already been built and, in the case of updates, because the scope of the review is already well defined by answerable questions, which also makes it easier to build further capacity. A standard systematic review is completed within 12–24 months. The process is demanding and reviews can take a long time to produce findings, quickly becoming outdated in such a way that they often fail the task of informing policies in a timely manner (Whitty 2015). 3ie’s experience is that updates can be completed at a fraction of the cost and time (typically between 3 and 9 months) depending on the scope of the update.

One way to speed up the process of knowledge translation from systematic searches is the evidence gap map (EGM) (Snilstveit et al. 2013). EGMs summarise the density and paucity of evidence in an interactive format and have proven incredibly popular with researchers and development organisations (Phillips et al. 2017). However, EGMs are not a substitute for systematic reviews since they are not designed to critically appraise or extract policy-relevant findings from primary studies. Rather, they are a way of scoping future review topics, and provide a more efficient way of communicating primary research gaps than ‘empty reviews’.5

Systematic reviews are produced by large teams of researchers that scan all the relevant literature and filter and quality appraise the evidence through a process of search, selection and data collection. Much of this mechanical work can take several months to complete. The process of producing systematic reviews is becoming more and more demanding as more evidence is produced and more databases that require searching become available.
Hence, much of the time spent in conducting a systematic review is absorbed by the process of searching, screening and evaluating the available literature, often using word-recognition devices, with little time left for activities requiring higher order cognitive functions, such as evaluating and synthesising the evidence.

Much research and a number of projects are underway that employ machine learning algorithms to assist researchers in conducting systematic reviews (O’Mara-Eves et al. 2015; Tsafnat et al. 2014; see also Snilstveit et al., 2018). In these trials, researchers screen a subset of the population of studies. The result of the screening process is fed into a machine which develops a rule to include or exclude a given study based on the information provided by the researchers. This is normally performed by a logistic regression where the dependent variable is the inclusion-exclusion of the study and the explanatory variables are words and combinations of words in the studies reviewed. The inclusion rule is then applied to a new subset of the data and the selection performed by the computer algorithm is returned to the researchers. The researchers at this point can perform an additional screening on the results of the search conducted by the computer, which can be fed back again to the machine to improve and refine the inclusion process at successive trials. In this way, the machine iteratively learns to include the studies using the criteria followed by the researchers. We hope and expect that the findings of these studies will suggest substantial savings from these approaches for updates and new reviews.

4. Overview of the papers collected in this issue

The papers in this collection serve to discuss new approaches to systematic reviews in international development as well as present examples of the state of the art in experience in conducting reviews on development topics.
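As a brief illustration of the machine-assisted screening loop described at the end of Section 3, the following is a minimal sketch in Python. It assumes a binary bag-of-words representation and a logistic regression fitted by plain stochastic gradient descent; the function names (`screening_round`, `train_logistic` and so on), the toy records and the learning settings are all hypothetical and chosen only for illustration. It is not the procedure used in any of the cited trials.

```python
import math


def tokenize(text):
    """Lower-case whitespace tokenisation (a deliberately crude stand-in)."""
    return text.lower().split()


def featurize(text, vocab):
    """Binary bag-of-words vector: 1.0 if the vocabulary word occurs in the text."""
    present = set(tokenize(text))
    return [1.0 if word in present else 0.0 for word in vocab]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic(X, y, epochs=300, lr=0.5):
    """Fit weights and bias by stochastic gradient descent on the log-loss."""
    weights, bias = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        for features, label in zip(X, y):
            z = bias + sum(w * f for w, f in zip(weights, features))
            error = sigmoid(z) - label
            bias -= lr * error
            weights = [w - lr * error * f for w, f in zip(weights, features)]
    return weights, bias


def screening_round(labelled, unscreened):
    """Learn an inclusion rule from human decisions, then rank unscreened records."""
    vocab = sorted({word for text, _ in labelled for word in tokenize(text)})
    X = [featurize(text, vocab) for text, _ in labelled]
    y = [label for _, label in labelled]
    weights, bias = train_logistic(X, y)
    scored = []
    for text in unscreened:
        features = featurize(text, vocab)
        z = bias + sum(w * f for w, f in zip(weights, features))
        scored.append((sigmoid(z), text))
    # Highest predicted inclusion probability first.
    return sorted(scored, reverse=True)


# Hypothetical records already screened by researchers: 1 = include, 0 = exclude.
labelled = [
    ("randomised trial of cash transfers impact on schooling", 1),
    ("impact evaluation of microcredit randomised", 1),
    ("opinion essay on aid politics", 0),
    ("historical essay on colonial trade", 0),
]
# Hypothetical records not yet screened.
unscreened = [
    "essay about colonial politics",
    "randomised impact evaluation of farmer training",
]

ranked = screening_round(labelled, unscreened)

# Assumed human decisions on the machine's suggestions (invented for this sketch);
# adding them to the training set is what makes the loop iterative.
feedback = [(ranked[0][1], 1), (ranked[-1][1], 0)]
labelled_next_round = labelled + feedback
```

Calling `screening_round` again with `labelled_next_round` and a fresh batch of records would correspond to the "successive trials" in the text. A real application would need far more labelled records, richer features (for example word combinations) and an evaluation of how many eligible studies the rule misses.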
White (2018) argues that the gold standard in systematic reviewing is not just to follow the conduct and reporting requirements of the Campbell Collaboration and Cochrane, but also to incorporate programme theory and analysis of evidence along the causal chain. White argues for the centrality of theory of change analysis in systematic reviews, and presents approaches to building different types of evidence into mixed-methods effectiveness reviews, including project portfolio information and qualitative studies, and different methods of presentation, including the ‘funnel of attrition’.

Skalidou and Oya (2018) discuss their experiences in incorporating a large amount of qualitative evidence into an effectiveness review of agricultural certification schemes. Unlike many other mixed-methods systematic reviews, the study adopted different inclusion criteria and critical appraisal approaches for different types of evidence, enabling the incorporation of ethnographic research that would otherwise have been omitted on the grounds of methods reporting. The authors report on their approach to searching, critical appraisal, data extraction and synthesis across 136 included qualitative studies. They also comment on the challenges of integrating qualitative and quantitative evidence in mixed-methods reviews, concluding that even where the evidence is not directly linked it can still illuminate issues of implementation and context, which can help explain heterogeneity in impacts.

The paper by Carr-Hill et al. (2018) serves as an example of a state-of-the-art mixed-methods review on interventions to decentralise education decision-making. The review combines theory of change analysis, quantitative meta-analysis and qualitative framework synthesis. The authors conduct full causal chain evidence synthesis and are able to go some way to explaining the sources of heterogeneity in findings across low- and middle-income contexts.
Parents are less able to hold schools accountable in contexts of low income and low levels of education, where they have low status relative to teachers and school managers.

Very few systematic reviews on development topics incorporate evidence on the cost of the interventions being assessed. Masset et al. (2018) present a systematic review of systematic reviews of cost-effectiveness studies in low- and middle-income countries. The review examines the characteristics of the studies and the methods employed and discusses their relevance for decision-making. The paper notes the challenges in aggregating data across economic evaluations, due to great heterogeneity in outcomes, costs and methods, and argues for greater standardisation in data collection and reporting.

The contribution of Doocy and Tappis (2018) is to provide, alongside a review of effects, systematic evidence on costs, cost efficiency, cost-effectiveness and cost benefit for cash transfer programmes in emergency settings. To our knowledge, the study is the first published systematic review on a humanitarian topic to provide evidence on effects and costs together. It provides a model for future studies incorporating evidence on value for money.

Lombardini and McCollum (2018) give an example of the use of systematic review methods to inform decision-making in a programme organisation, Oxfam GB. The study is a critical appraisal and meta-analysis of impact evidence on women’s empowerment projects, and presents a fine example of the value of meta-analysis in combining results from underpowered studies. One can imagine other examples where it may be difficult to undertake large enough individual studies to detect statistically significant changes in outcomes, and where meta-analysis might also be of great value (for example, detecting changes in maternal mortality).
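The point about combining underpowered studies can be illustrated with a minimal fixed-effect (inverse-variance) pooling sketch. The effect sizes and standard errors below are invented for illustration and are not taken from Lombardini and McCollum (2018) or any other study. The fixed-effect model is also an assumption made for simplicity; a random-effects model would usually be considered where effects are expected to vary across contexts.

```python
import math


def pool_fixed_effect(estimates, std_errors):
    """Inverse-variance weighted average of study estimates and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical standardised effects from four small studies.
estimates = [0.20, 0.15, 0.25, 0.18]
std_errors = [0.12, 0.11, 0.15, 0.10]

# z-statistic for each study on its own.
study_z = [e / se for e, se in zip(estimates, std_errors)]

# Pooled estimate across the four studies.
pooled, pooled_se = pool_fixed_effect(estimates, std_errors)
pooled_z = pooled / pooled_se

for i, z in enumerate(study_z, start=1):
    print(f"study {i}: z = {z:.2f}, significant at 5% = {abs(z) > 1.96}")
print(f"pooled: estimate = {pooled:.3f}, se = {pooled_se:.3f}, z = {pooled_z:.2f}")
```

In this made-up example no single study reaches the conventional 5 per cent threshold (|z| > 1.96), yet the pooled estimate does, because the pooled standard error shrinks as the inverse-variance weights accumulate.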
Finally, the paper by Stewart (2018), based on her opening address at the 2017 Cochrane Colloquium in Cape Town, presents the African Evidence Network (AEN), which was established in 2012 and is building a critical mass of researchers, knowledge brokers and decision makers across Africa. Drawing on the experiences of the AEN, the article presents the case for how networks bridge divides, build capacity and create readiness for change. A powerful African proverb exemplifies the work of the group and the coordinating apparatus provided by Cochrane and the Campbell Collaboration: if you want to go fast, go alone; if you want to go far, go together.

In 2012, an earlier review stated that ‘efforts were being made to conduct more policy-relevant reviews drawing on a fuller range of evidence’ (White and Waddington 2012, 357). Papers in this collection should indicate to readers the developments made in systematic reviewing in international development over the past five years, and the broad range of questions that rigorous systematic reviews can answer (see also Hansen and Trifković 2015).

Notes

1. Meta-analyses in economics have been around since the 1980s (Ioannides, Stanley, and Doucouliagos 2017). Systematic reviews on public health topics in low- and middle-income countries have been published since at least the 1990s (for example, Esrey et al. 1991). However, the common use of systematic reviewing in development economics and development studies dates to the 2000s. 3ie and the Department for International Development launched their first systematic review research funding programmes in 2008 and 2010, respectively.
2. The Repository was updated using systematic search methods in 2017. See: http://www.3ieimpact.org/en/evidence/systematic-reviews (accessed 1 February 2018).
3. https://www.campbellcollaboration.org/better-evidence/plain-language-summaries.html (accessed 1 February 2018).
4. Studies are assessed using a checklist designed to evaluate the methods employed in reviews against a set of common standards for conducting systematic reviews (3ie n.d.). Based on this, reviews are given an overall rating of confidence in conclusions about effects: low confidence reviews are those in which there are major methodological limitations; medium confidence reviews are those with important limitations; and high confidence reviews are those with minor limitations.
5. The caveat is that the standards of searching undertaken in EGMs are usually not as exhaustive as those for systematic reviews. For example, sources may be limited to English language or by date; reference snowballing (citation tracing and bibliographic back-referencing) may not be undertaken.

Notes on contributors

Hugh Waddington is Senior Evaluation Specialist and currently Acting Head of 3ie's Synthesis and Reviews Office. He has a background in research and policy, having worked previously in the Government of Rwanda, the UK National Audit Office and the World Bank, and before that with Save the Children UK and the Department for International Development. He is managing editor of the Journal of Development Effectiveness and co-chair of the International Development Coordinating Group (IDCG) of the Campbell Collaboration.

Edoardo Masset is Deputy Director of the Centre for Excellence in Development Impact and Learning (CEDIL), previously with 3ie, the Institute of Development Studies and the World Bank. He has extensive experience in international development work in Africa, Asia and Latin America, including designing and conducting impact evaluations and systematic reviews of development interventions. He is managing editor of the Journal of Development Effectiveness.

Emmanuel (Manny) Jimenez is Executive Director of 3ie.
He was with the World Bank for 30 years in roles that included Director of Public Sector Evaluations and Sector Director, Human Development, in the World Bank’s East Asia Region, and has published widely on education, health finance, the private provision of social services, the economics of transfer programs and urban development. Manny led the core team that prepared the World Development Report 2007: Development and the Next Generation. He is editor in chief of the Journal of Development Effectiveness.

Acknowledgements

We thank Raj Popat for excellent research assistance and our colleagues at 3ie and the Campbell Collaboration International Development Coordinating Group, in particular Birte Snilstveit, who originally suggested the Sen quote in 2011 when we were establishing the Campbell Group and has made important contributions as Co-Editor, and David de Ferranti, whose comments inspired the first section of this editorial. Thanks also to colleagues who have contributed to 3ie and IDCG systematic reviews secretariats including Ami Bhavsar, Zulfi Bhutta, John Eyers, Anna Fox, Marie Gaarder, Michelle Gaffey, Emma Gallagher, Betsy Kristjansson, Laurenz Langer, Beryl Leach, Kristen McCollum, Radhika Menon, Sandy Oliver, Jennifer Petkovic, Daniel Phillips, Fahad Siddiqui, Jennifer Stevenson, Emily Tanner-Smith, Stuti Tripathi, Stella Tsoli, Peter Tugwell, Martina Vojtkova, Vivian Welch, Howard White and Duae Zehra.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

3ie. n.d. Checklist for Making Judgements About How Much Confidence to Place in a Systematic Review of Effects (adapted version of SURE checklist). London: International Initiative for Impact Evaluation. Available at: http://www.3ieimpact.org/media/filer_public/2012/05/07/quality_appraisal_checklist_srdatabase.pdf (accessed 9 March 2018).
Anderson, L. M., M. Petticrew, E. Rehfuess, R. Armstrong, E. Ueffing, P. Baker, D. Francis, and P. Tugwell. 2011. “Using Logic Models to Capture Complexity in Systematic Reviews.” Research Synthesis Methods 2 (1): 33–42. doi:10.1002/jrsm.32.
Baird, S., F. H. G. Ferreira, B. Özler, and M. Woolcock. 2013. “Relative Effectiveness of Conditional and Unconditional Cash Transfers for Schooling Outcomes in Developing Countries: A Systematic Review.” Campbell Systematic Reviews 2013: 8. doi:10.4073/csr.2013.8.
Berg, R. C., and E. Denison. 2012. “Interventions to Reduce the Prevalence of Female Genital Mutilation/Cutting in African Countries.” Campbell Systematic Reviews 2012: 9. doi:10.4073/csr.2012.9.
Brody, C., T. De Hoop, M. Vojtkova, R. Warnock, M. Dunbar, P. Murthy, and S. Dworkin. 2017. “Can Economic Self-Help Group Programs Improve Women’s Empowerment? A Systematic Review.” Journal of Development Effectiveness 9 (1): 15–40. doi:10.1080/19439342.2016.1206607.
Brown, A. N. 2016. “Let’s Bring Back Theory to Theory of Change.” Evidence Matters, April 19. Accessed 1 February 2018. blogs.3ieimpact.org/lets-bring-back-theory-to-theory-of-change/
Cameron, D. B., A. Mishra, and A. N. Brown. 2016. “The Growth of Impact Evaluation for International Development: How Much Have We Learned?” Journal of Development Effectiveness 8 (1): 1–21. doi:10.1080/19439342.2015.1034156.
Carr-Hill, R., C. Rolleston, R. Schendel, and H. Waddington. 2018. “The Effectiveness of School-Based Decision Making in Improving Educational Outcomes: A Systematic Review.” Journal of Development Effectiveness 10 (1): 61–94.
Chinen, M., T. De Hoop, L. Alcázar, M. Balarin, and J. Sennett. 2017. “Vocational and Business Training to Improve Women’s Labour Market Outcomes in Low- and Middle-Income Countries: A Systematic Review.” Campbell Systematic Reviews 2017: 16. doi:10.4073/csr.2017.16.
Cooper, H. M. 1982. “Scientific Guidelines for Conducting Integrative Research Reviews.” Review of Educational Research 52 (2): 291–302. doi:10.3102/00346543052002291.
Coren, E., R. Hossain, K. Ramsbotham, A. J. Martin, and J. P. Pardo. 2014. Services for Street-Connected Children and Young People in Low- and Middle-Income Countries: A Thematic Synthesis, 3ie Systematic Review 12. London: International Initiative for Impact Evaluation (3ie).
Davies, P. 2006. “What is Needed from Research Synthesis from a Policy-Making Perspective?” In Moving Beyond Effectiveness in Evidence Synthesis: Methodological Issues in the Synthesis of Diverse Sources of Evidence, edited by J. Popay. London: National Institute for Health and Clinical Excellence.
De Buck, E., H. Van Remoortel, A. Vande Veegaete, and T. Young. 2017. Promoting Handwashing and Sanitation Behaviour Change in Low- and Middle-Income Countries, 3ie Systematic Review Summary 10. London: International Initiative for Impact Evaluation (3ie).
Doocy, S., and H. Tappis. 2018. “The Effectiveness and Value for Money of Cash-Based Humanitarian Assistance: A Systematic Review.” Journal of Development Effectiveness 10 (1): 121–144.
Dorward, A., E. Chirwa, P. D. Roberts, C. Finegold, D. J. Hemming, H. J. Wright, J. Osborn, et al. 2014. Agricultural Input Subsidies for Productivity, Farm Incomes, Consumer Welfare and Wider Growth in Low- and Middle-Income Countries: Protocol. Oslo: The Campbell Collaboration.
Elliott, J. H., T. Turner, O. Clavisi, J. Thomas, J. P. T. Higgins, C. Mavergames, and R. L. Gruen. 2014. “Living Systematic Reviews: An Emerging Opportunity to Narrow the Evidence-Practice Gap.” PLOS Medicine 11: 1–6.
Esrey, S. A., J. B. Potash, L. Roberts, and C. Schiff. 1991. “Effects of Improved Water Supply and Sanitation on Ascariasis, Diarrhoea, Dracunculiasis, Hookworm Infection, Schistosomiasis, and Trachoma.” Bulletin of the World Health Organization 69 (5): 609–621.
Hansen, H., and N. Trifković. 2015. “Means to an End: The Importance of the Research Question for Systematic Reviews in International Development.” European Journal of Development Research 27 (5): 707–726. doi:10.1057/ejdr.2014.54.
Higginson, A., K. Benier, Y. Shenderovich, L. Bedford, L. Mazerolle, and J. Murray. 2015. “Preventive Interventions to Reduce Youth Involvement in Gangs and Gang Crime in Low- and Middle-Income Countries: A Systematic Review.” Campbell Systematic Reviews 2015: 18. doi:10.4073/csr.2015.18.
Iemmi, V., H. Kuper, K. Blanchet, L. Gibson, K. S. Kumar, S. Rath, S. Hartley, G. V. S. Murthy, V. Patel, and J. Weber. 2016. Community-Based Rehabilitation for People with Disabilities, 3ie Systematic Review Summary 4. London: 3ie.
International Initiative for Impact Evaluation (3ie). 2015. Stakeholder Engagement and Communication Plan (SECP) Guidance. London: 3ie. Accessed 1 February 2018. http://www.3ieimpact.org/media/filer_public/2015/10/13/3ie_stakeholder_engagement_and_communication_plan-secp_v2.pdf.
Ioannides, J. P. A., T. D. Stanley, and H. Doucouliagos. 2017. “The Power of Bias in Economics Research.” Economic Journal 127: F236–F265. doi:10.1111/ecoj.12461.
Kluve, J., S. Puerto, D. Robalino, J. M. Romero, F. Rother, J. Stöterau, F. Weidenkaff, and M. Witte. 2017. “Interventions to Improve the Labour Market Outcomes of Youth: A Systematic Review of Training, Entrepreneurship Promotion, Employment Services, and Subsidized Employment Interventions.” Campbell Systematic Reviews 2017: 12. doi:10.4073/csr.2017.12.
Kneale, D., J. Thomas, and K. Harris. 2015. “Developing and Optimising the Use of Logic Models in Systematic Reviews: Exploring Practice and Good Practice in the Use of Programme Theory in Reviews.” PLoS ONE 10 (11): e0142187. doi:10.1371/journal.pone.0142187.
Kristjansson, E., D. Francis, S. Liberato, T. Greenhalgh, V. Welch, M. B. Jandu, M. Batal, et al. 2016. Supplementary Feeding for Improving the Health of Disadvantaged Infants and Children: What Works and Why? 3ie Systematic Review Summary 5. London: 3ie.
Lawry, S., C. Samii, R. Hall, A. Leopold, D. Hornby, and F. Mtero. 2017. “The Impact of Land Property Rights Interventions on Investment and Agricultural Productivity in Developing Countries: A Systematic Review.” Journal of Development Effectiveness 9 (1): 61–81. doi:10.1080/19439342.2016.1160947.
Littell, J. H., J. Corcoran, and V. Pillai. 2008. Systematic Reviews and Meta-Analysis. New York, NY: Oxford University Press.
Lombardini, S., and K. McCollum. 2018. “Using Internal Evaluations to Measure Organisational Impact: A Meta-Analysis of Oxfam’s Women’s Empowerment Projects.” Journal of Development Effectiveness 10 (1): 145–170.
Maden, M., A. Cunliffe, N. McMahon, A. Booth, G. M. Carey, S. Paisley, R. Dickson, and M. Gabbay. 2017. “Use of Programme Theory to Understand the Differential Effects of Interventions across Socio-Economic Groups in Systematic Reviews—A Systematic Methodology Review.” Systematic Reviews 6 (1): 266. doi:10.1186/s13643-017-0638-9.
Masset, E., G. Mascagni, A. Acharya, E.-M. Egger, and A. Saha. 2018. “Systematic Reviews of Cost-Effectiveness in Low and Middle Income Countries: A Review of Reviews.” Journal of Development Effectiveness 10 (1): 95–120.
Moher, D., A. Tsertsvadze, A. Tricco, M. Eccles, J. Grimshaw, M. Sampson, and N. Barrowman. 2008. “When and How to Update Systematic Reviews.” Cochrane Database of Systematic Reviews 2008, Issue 1. Art. No.: MR000023. doi:10.1002/14651858.MR000023.pub3.
Molina, E., L. Carella, A. Pacheco, G. Cruces, and L. Gasparini. 2016. “Community Monitoring Interventions to Curb Corruption and Increase Access and Quality of Service Delivery in Low- and Middle-Income Countries.” Campbell Systematic Reviews 2016: 8. doi:10.4073/csr.2016.8.
O’Mara-Eves, A., J. Thomas, J. McNaught, M. Miwa, and S. Ananiadou. 2015. “Using Text Mining for Study Identification in Systematic Reviews: A Systematic Review of Current Approaches.” Systematic Reviews 4 (5): 1–22.
Oliver, S., P. Garner, P. Heywood, J. Jull, K. Dickson, M. Bangpan, L. Ang, M. Fourman, and R. Garside. 2017. “Transdisciplinary Working to Shape Systematic Reviews and Interpret the Findings: A Commentary.” Environmental Evidence 6 (1). doi:10.1186/s13750-017-0106-y.
Oya, C., F. Schaefer, D. Skalidou, C. McCosker, and L. Langer. 2017. Effectiveness of Agricultural Certification Schemes for Improving Socio-Economic Outcomes in Low and Middle-Income Countries, 3ie Systematic Review Summary 9. London: International Initiative for Impact Evaluation (3ie).
Pawson, R. 2002. “Evidence-Based Policy: The Promise of ‘Realist Synthesis’.” Evaluation 8 (3): 340–358. doi:10.1177/135638902401462448.
Petrosino, A., C. Morgan, T. A. Fronius, E. E. Tanner-Smith, and R. F. Boruch. 2012. “Interventions in Developing Nations for Improving Primary and Secondary School Enrollment of Children: A Systematic Review.” Campbell Systematic Reviews 2012: 19. doi:10.4073/csr.2012.19.
Phillips, D., C. Coffey, S. Tsoli, J. Stevenson, H. Waddington, J. Eyers, H. White, and B. Snilstveit. 2017. A Map of Evidence Maps Relating to Sustainable Development in Low- and Middle-Income Countries. 3ie Evidence Gap Map Report 10. London: 3ie.
Piza, C., T. Cravo, L. Taylor, L. Gonzalez, I. Musse, I. Furtado, A. C. Sierra, and S. Abdelnour. 2016. “The Impact of Business Support Services for Small and Medium Enterprises on Firm Performance in Low- and Middle-Income Countries: A Systematic Review.” Campbell Systematic Reviews 2016: 1. doi:10.4073/csr.2016.1.
Polec Augustincic, L., J. Petkovic, V. Welch, E. Ueffing, E. T. Ghogomu, J. P. Pardo, M. Grabowsky, A. Attaran, G. A. Wells, and P. Tugwell. 2015. “Strategies to Increase the Ownership and Use of Insecticide-Treated Bednets to Prevent Malaria: A Systematic Review.” Campbell Systematic Reviews 2015: 17. doi:10.4073/csr.2015.17.
Rader, T., J. Pardo Pardo, D. Stacey, E. Ghogomu, L. J. Maxwell, V. A. Welch, J. A. Singh, et al. 2014. “Update of Strategies to Translate Evidence from Cochrane Musculoskeletal Group Systematic Reviews for Use by Various Audiences.” Journal of Rheumatology 41 (2): 206–215. doi:10.3899/jrheum.121307.
Rees, R., and S. Oliver. 2012. “Stakeholder Perspectives and Participation in Reviews.” Chapter 2 in An Introduction to Systematic Reviews, edited by D. Gough, S. Oliver, and J. Thomas. London: Sage.
Samii, C., M. Lisiecki, P. Kulkarni, L. Paler, and L. Chavis. 2014a. “Effects of Decentralized Forest Management (DFM) on Deforestation and Poverty in Low and Middle Income Countries: A Systematic Review.” Campbell Systematic Reviews 2014: 10. doi:10.4073/csr.2014.10.
Samii, C., M. Lisiecki, P. Kulkarni, L. Paler, and L. Chavis. 2014b. “Effects of Payment for Environmental Services (PES) on Deforestation and Poverty in Low and Middle Income Countries: A Systematic Review.” Campbell Systematic Reviews 2014: 11. doi:10.4073/csr.2014.11.
Sen, A. 1999. Development as Freedom. Oxford: Oxford University Press.
Skalidou, D., and C. Oya. 2018. “The Challenges of Screening and Synthesising Qualitative Research in a Mixed-Methods Systematic Review. The Case of the Impact of Agricultural Certification Schemes.” Journal of Development Effectiveness 10 (1): 39–60.
Snilstveit, B. 2012. “Systematic Reviews: From ‘Bare Bones’ to Policy Relevance.” Journal of Development Effectiveness 4 (3): 388–408. doi:10.1080/19439342.2012.709875.
Snilstveit, B., J. Stevenson, I. Shemilt, Jimenez, E. Clarke, and J. Thomas. 2018. Efficient, Timely and Living Systematic Reviews: Opportunities in International Development. Unpublished manuscript. London: Centre of Excellence for Development Impact and Learning.
Snilstveit, B., J. Stevenson, D. Phillips, M. Vojtkova, E. Gallagher, T. Schmidt, H. Jobse, M. Geelen, M. Pastorello, and J. Eyers. 2015. Interventions for Improving Learning Outcomes and Access to Education in Low- and Middle-Income Countries: A Systematic Review, 3ie Systematic Review 24. London: International Initiative for Impact Evaluation.
Snilstveit, B., J. Stevenson, R. Menon, D. Phillips, E. Gallagher, M. Geelen, H. Jobse, T. Schmidt, and E. Jimenez. 2016. The Impact of Education Programmes on Learning and School Participation in Low- and Middle-Income Countries: A Systematic Review Summary Report, 3ie Systematic Review Summary 7. London: International Initiative for Impact Evaluation.
Snilstveit, B., M. Vojtkova, A. Bhavsar, and M. Gaarder. 2013. Evidence Gap Maps: A Tool for Promoting Evidence-Informed Policy and Prioritizing Future Research. Policy Research Working Paper WPS6725. Washington, D.C.: World Bank.
Spier, E. T., P. R. Britto, T. Pigott, E. Roehlkapartain, M. McCarthy, Y. Kidron, M. Song, et al. 2016. “Parental, Community, and Familial Support Interventions to Improve Children’s Literacy in Developing Countries: A Systematic Review.” Campbell Systematic Reviews 2016: 4. doi:10.4073/csr.2016.4.
Stewart, R. 2018. “Do Evidence Networks Make a Difference?” Journal of Development Effectiveness 10 (1): 171–178.
Stewart, R., L. Langer, R. N. Da Silva, and E. Muchiri. 2016. Effects of Training, Innovation and New Technology on African Smallholder Farmers’ Economic Outcomes and Food Security, 3ie Systematic Review Summary 6. London: 3ie.
Sutton, A. J., S. Donegan, Y. Takwoingi, O. Garner, C. Gamble, and A. Donald. 2009. “An Encouraging Assessment of Methods to Inform Priorities for Updating Systematic Reviews.” Journal of Clinical Epidemiology 62: 241–251. doi:10.1016/j.jclinepi.2008.04.005.
Takwoingi, Y., S. Hopewell, D. Tovey, and A. J. Sutton. 2013. “A Multicomponent Decision Tool for Prioritising the Updating of Systematic Reviews.” British Medical Journal 347: f7191. doi:10.1136/bmj.f7191.
The Campbell Collaboration. 2016. Campbell Systematic Reviews: Policies and Guidelines. Campbell Policies and Guidelines Series No. 1. doi:10.4073/cpg.2016.1.
Thomas, J., A. Harden, A. Oakley, S. Oliver, K. Sutcliffe, R. Rees, G. Brunton, and J. Kavanagh. 2004. “Integrating Qualitative Research with Trials in Systematic Reviews.” BMJ 328: 1010. doi:10.1136/bmj.328.7446.1010.
Ton, G., S. Desiere, W. Vellema, S. Weituschat, and M. D’Haese. 2017. “The Effectiveness of Contract Farming for Raising Income of Smallholder Farmers in Low- and Middle-Income Countries: A Systematic Review.” Campbell Systematic Reviews 2017: 13. doi:10.4073/csr.2017.13.
Tripney, J., A. Roulstone, C. Vigurs, H. Nina, E. Schmidt, and R. Stewart. 2015. “Interventions to Improve the Labour Market Situation of Adults with Physical And/Or Sensory Disabilities in Low- and Middle-Income Countries.” Campbell Systematic Reviews 2015: 20. doi:10.4073/csr.2015.20.
Tripney, J., J. Hombrados, M. Newman, K. Hovish, C. Brown, K. Steinka-Fry, and E. Wilkey. 2013. “Technical and Vocational Education and Training (TVET) Interventions to Improve the Employability and Employment of Young People in Low- and Middle-Income Countries: A Systematic Review.” Campbell Systematic Reviews 2013: 9. doi:10.4073/csr.2013.9.
Tsafnat, G., A. Dunn, P. Glasziou, and E. Coiera. 2013. “The Automation of Systematic Reviews.” British Medical Journal 346: 1–2.
Tsafnat, G., P. Glasziou, M. K. Choong, A. Dunn, F. Galgani, and E. Coiera. 2014. “Systematic Review Automation Technologies.” Systematic Reviews 3 (1): 1–15. doi:10.1186/2046-4053-3-74.
United Nations. 2015a. “Transforming Our World: The 2030 Agenda for Sustainable Development.” Resolution adopted by the General Assembly, 25 September 2015. Accessed 4 February 2018. http://www.un.org/en/ga/search/view_doc.asp?symbol=A/RES/70/1&Lang=E
United Nations. 2015b. The Millennium Development Goals Report 2015. New York: United Nations. Accessed 4 February 2018. http://www.un.org/millenniumgoals/2015_MDG_Report/pdf/MDG%202015%20rev%20(July%201).pdf.
Vaessen, J., A. Rivas, M. Duvendack, R. Palmer Jones, F. L. Leeuw, G. Van Gils, R. Lukach, et al. 2014. “The Effects of Microcredit on Women’s Control over Household Spending in Developing Countries: A Systematic Review and Meta-Analysis.” Campbell Systematic Reviews 2014: 8. doi:10.4073/csr.2014.8.
Van der Knaap, L. M., F. L. Leeuw, S. Bogaerts, and L. T. J. Nijssen. 2008. “Combining Campbell Standards and the Realist Evaluation Approach: The Best of Two Worlds?” American Journal of Evaluation 29 (1): 48–57.
Waddington, H., and B. Snilstveit. 2009. “Effectiveness and Sustainability of Water, Sanitation and Hygiene Interventions in Combating Diarrhoea.” Journal of Development Effectiveness 1 (3): 295–335. doi:10.1080/19439340903141175.
Waddington, H., B. Snilstveit, H. White, and L. Fewtrell. 2009. Water, Sanitation and Hygiene Interventions to Combat Childhood Diarrhoea in Developing Countries. Synthetic Review 001. New Delhi: International Initiative for Impact Evaluation.
Waddington, H., B. Snilstveit, J. Hombrados, M. Vojtkova, D. Phillips, P. Davies, and H. White. 2014. “Farmer Field Schools for Improving Farming Practices and Farmer Outcomes: A Systematic Review.” Campbell Systematic Reviews 2014: 6. doi:10.4073/csr.2014.6.
Waddington, H., and H. White. 2014. Farmer Field Schools: From Agricultural Extension to Adult Education. Systematic Review Summary 1. London: 3ie.
Waddington, H., H. White, B. Snilstveit, J. G. Hombrados, M. Vojtkova, P. Davies, A. Bhavsar, et al. 2012. “How to Do A Good Systematic Review of Effects in International Development: A Tool Kit.” Journal of Development Effectiveness 4 (3): 359–387. doi:10.1080/19439342.2012.711765.
Welch, V. A., E. Ghogomu, A. Hossain, S. Awasthi, Z. A. Bhutta, C. Cumberbatch, R. Fletcher, et al. 2016. “Deworming and Adjuvant Interventions for Improving the Developmental Health and Well-Being of Children in Low- and Middle-Income Countries: A Systematic Review and Network Meta-Analysis.” Campbell Systematic Reviews 2016: 7. doi:10.4073/csr.2016.7.
Welch, V. A., E. Ghogomu, A. Hossain, S. Awasthi, Z. A. Bhutta, C. Cumberbatch, R. Fletcher, et al. 2017. “Mass Deworming to Improve Developmental Health and Wellbeing of Children in Low-Income and Middle-Income Countries: A Systematic Review and Network Meta-Analysis.” The Lancet Global Health 5 (1): e40–e50. doi:10.1016/S2214-109X(16)30242-X.
White, H. 2018. “Theory Based Systematic Reviews.” Journal of Development Effectiveness 10 (1): 17–38.
White, H., and H. Waddington. 2012. “Why Do We Care about Evidence Synthesis? An Introduction to the Special Issue on Systematic Reviews.” Journal of Development Effectiveness 4 (3): 351–358. doi:10.1080/19439342.2012.711343.
Whitty, C. 2015. “What Makes an Academic Paper Useful for Health Policy?” BMC Medicine 13: 301. doi:10.1186/s12916-015-0544-8.