Solutions Manual

LINEAR SYSTEM THEORY, 2/E

Wilson J. Rugh

Department of Electrical and Computer Engineering

Johns Hopkins University

PREFACE

With some lingering ambivalence about the merits of the undertaking, but with a bit more dedication than the first time around, I prepared this Solutions Manual for the second edition of Linear System Theory. Roughly 40% of the exercises are addressed, including all exercises in Chapter 1 and all others used in developments in the text. This coverage complements the 60% of those in an unscientific survey who wanted a solutions manual, and perhaps does not overly upset the 40% who voted no. (The main contention between the two groups involved the inevitable appearance of pirated student copies and the view that an available solution spoils the exercise.)

I expect that a number of my solutions could be improved, and that some could be improved using only techniques from the text. Also, the press of time and my flagging enthusiasm for text processing impeded the crafting of economical solutions; some solutions may contain too many steps or too many words. However, I hope that the error rate in these pages is low and that the value of this manual is greater than the price paid.

Please send comments and corrections to the author at rugh@jhu.edu or ECE Department, Johns Hopkins University, Baltimore, MD 21218 USA.

CHAPTER 1

Solution 1.1

(a) For $k = 2$, $(A + B)^2 = A^2 + AB + BA + B^2$. If $AB = BA$, then $(A + B)^2 = A^2 + 2AB + B^2$. In general, if $AB = BA$, then the $k$-fold product $(A + B)^k$ can be written as a sum of terms of the form $A^j B^{k-j}$, $j = 0, \ldots, k$. The number of terms that can be written as $A^j B^{k-j}$ is given by the binomial coefficient $\binom{k}{j}$. Therefore $AB = BA$ implies

$$(A + B)^k = \sum_{j=0}^{k} \binom{k}{j} A^j B^{k-j}$$

(b) Write

$$\det[\lambda I - A(t)] = \lambda^n + a_{n-1}(t)\lambda^{n-1} + \cdots + a_1(t)\lambda + a_0(t)$$

where invertibility of $A(t)$ implies $a_0(t) \neq 0$. The Cayley-Hamilton theorem implies

$$A^n(t) + a_{n-1}(t)A^{n-1}(t) + \cdots + a_0(t)I = 0$$

for all $t$. Multiplying through by $A^{-1}(t)$ yields

$$A^{-1}(t) = \frac{-a_1(t)I - \cdots - a_{n-1}(t)A^{n-2}(t) - A^{n-1}(t)}{a_0(t)}$$

for all $t$. Since $a_0(t) = \det[-A(t)]$, $|a_0(t)| = |\det A(t)|$. Assume $\varepsilon > 0$ is such that $|\det A(t)| \geq \varepsilon$ for all $t$. Since $\|A(t)\| \leq \alpha$ we have $|a_{ij}(t)| \leq \alpha$, and thus there exists a $\gamma$ such that $|a_j(t)| \leq \gamma$ for all $t$. Then, for all $t$,

$$\|A^{-1}(t)\| = \frac{\|a_1(t)I + \cdots + A^{n-1}(t)\|}{|\det A(t)|} \leq \frac{\gamma + \gamma\alpha + \cdots + \alpha^{n-1}}{\varepsilon} \stackrel{\Delta}{=} \beta$$
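The binomial identity in (a) can be spot-checked in exact integer arithmetic. The following is a hypothetical plain-Python sketch; the helper names and the test matrices are assumptions chosen for illustration. `B` is a polynomial in `A`, so it commutes with `A`. `C` does not commute with `A`, and for it the formula fails.

```python
from math import comb

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def matadd(X, Y):
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

def scale(c, X):
    return [[c * a for a in row] for row in X]

def matpow(X, k):
    """X^k by repeated multiplication, with X^0 = I."""
    n = len(X)
    result = [[int(i == j) for j in range(n)] for i in range(n)]
    for _ in range(k):
        result = matmul(result, X)
    return result

def binomial_sum(A, B, k):
    """Right-hand side sum_{j=0}^k C(k, j) A^j B^(k-j)."""
    n = len(A)
    total = [[0] * n for _ in range(n)]
    for j in range(k + 1):
        term = matmul(matpow(A, j), matpow(B, k - j))
        total = matadd(total, scale(comb(k, j), term))
    return total

A = [[1, 2], [0, 3]]
B = matadd(matmul(A, A), scale(-1, A))  # B = A^2 - A, a polynomial in A, so AB = BA
C = [[0, 1], [0, 0]]                    # AC != CA for this choice
k = 5

commuting_ok = matpow(matadd(A, B), k) == binomial_sum(A, B, k)
noncommuting_ok = matpow(matadd(A, C), k) == binomial_sum(A, C, k)
print(commuting_ok, noncommuting_ok)  # expected: True False
```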

Solution 1.2

(a) If $\lambda$ is an eigenvalue of $A$, then recursive use of $Ap = \lambda p$ shows that $\lambda^k$ is an eigenvalue of $A^k$. However, showing that multiplicities are preserved is more difficult, and apparently requires Jordan form, or at least results on similarity to upper triangular form.

(b) If $\lambda$ is an eigenvalue of invertible $A$, then $\lambda$ is nonzero and $Ap = \lambda p$ implies $A^{-1}p = (1/\lambda)p$. As in (a), addressing preservation of multiplicities is more difficult.

(c) $A^T$ has eigenvalues $\lambda_1, \ldots, \lambda_n$ since $\det(\lambda I - A^T) = \det[(\lambda I - A)^T] = \det(\lambda I - A)$.

(d) $A^H$ has eigenvalues $\bar\lambda_1, \ldots, \bar\lambda_n$ using (c) and the fact that the determinant (sum of products) of a conjugate is the conjugate of the determinant. That is,

$$\det(\lambda I - A^H) = \det\left[(\bar\lambda I - A)^H\right] = \overline{\det(\bar\lambda I - A)}$$

(e) $\alpha A$ has eigenvalues $\alpha\lambda_1, \ldots, \alpha\lambda_n$ since $Ap = \lambda p$ implies $(\alpha A)p = (\alpha\lambda)p$.

(f) Eigenvalues of $A^TA$ are not nicely related to eigenvalues of $A$. Consider the example

$$A = \begin{bmatrix} 0 & \alpha \\ 0 & 0 \end{bmatrix}, \qquad A^TA = \begin{bmatrix} 0 & 0 \\ 0 & \alpha^2 \end{bmatrix}$$

where the eigenvalues of $A$ are both zero, and the eigenvalues of $A^TA$ are $0, \alpha^2$. (If $A$ is symmetric, then $A^TA = A^2$ and (a) applies.)

Solution 1.3

(a) If the eigenvalues of $A$ are all zero, then $\det(\lambda I - A) = \lambda^n$ and the Cayley-Hamilton theorem shows that $A^n = 0$, that is, $A$ is nilpotent. On the other hand, if one eigenvalue, say $\lambda_1$, is nonzero, let $p$ be a corresponding eigenvector. Then $A^kp = \lambda_1^kp \neq 0$ for all $k \geq 0$, and $A$ cannot be nilpotent.

(b) Suppose $Q$ is real and symmetric, and $\lambda$ is an eigenvalue of $Q$. Then $\bar\lambda$ also is an eigenvalue. From the eigenvalue/eigenvector equation $Qp = \lambda p$ we get $p^HQp = \lambda p^Hp$. Also $Q\bar p = \bar\lambda\bar p$, and transposing (using $Q^T = Q$) and multiplying on the right by $p$ gives $p^HQp = \bar\lambda p^Hp$. Subtracting the two results gives $(\lambda - \bar\lambda)p^Hp = 0$. Since $p \neq 0$, this gives $\lambda = \bar\lambda$, that is, $\lambda$ is real.

(c) If $A$ is upper triangular, then $\lambda I - A$ is upper triangular. Recursive Laplace expansion of the determinant about the first column gives

$$\det(\lambda I - A) = (\lambda - a_{11})\cdots(\lambda - a_{nn})$$

which implies the eigenvalues of $A$ are the diagonal entries $a_{11}, \ldots, a_{nn}$.

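Solution 1.4

(a)

$$A = \begin{bmatrix} 0 & 0 \\ 1 & 0 \end{bmatrix} \text{ implies } A^TA = \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix} \text{ implies } \|A\| = 1$$

(b)

$$A = \begin{bmatrix} 3 & 1 \\ 1 & 3 \end{bmatrix} \text{ implies } A^TA = \begin{bmatrix} 10 & 6 \\ 6 & 10 \end{bmatrix}$$

Then

$$\det(\lambda I - A^TA) = (\lambda - 16)(\lambda - 4)$$

which implies $\|A\| = 4$.

(c)

$$A = \begin{bmatrix} 1-i & 0 \\ 0 & 1+i \end{bmatrix} \text{ implies } A^HA = \begin{bmatrix} (1+i)(1-i) & 0 \\ 0 & (1-i)(1+i) \end{bmatrix} = \begin{bmatrix} 2 & 0 \\ 0 & 2 \end{bmatrix}$$

This gives $\|A\| = \sqrt{2}$.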
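The three norms can be confirmed numerically with $\|A\| = \sqrt{\lambda_{\max}(A^HA)}$, using the closed-form largest eigenvalue of a 2 x 2 Hermitian matrix. The following is a hypothetical plain-Python sketch; the function name and the restriction to 2 x 2 matrices are assumptions for illustration.

```python
import math

def spectral_norm_2x2(A):
    """sqrt(lambda_max(A^H A)) for a 2x2 real or complex matrix (lists of rows)."""
    A = [[complex(a) for a in row] for row in A]
    # M = A^H A is Hermitian positive semidefinite: [[p, q], [conj(q), r]]
    M = [[sum(A[k][i].conjugate() * A[k][j] for k in range(2)) for j in range(2)]
         for i in range(2)]
    p, q, r = M[0][0].real, M[0][1], M[1][1].real
    lam_max = (p + r) / 2 + math.sqrt(((p - r) / 2) ** 2 + abs(q) ** 2)
    return math.sqrt(lam_max)

norm_a = spectral_norm_2x2([[0, 0], [1, 0]])
norm_b = spectral_norm_2x2([[3, 1], [1, 3]])
norm_c = spectral_norm_2x2([[1 - 1j, 0], [0, 1 + 1j]])
print(norm_a, norm_b, norm_c)  # expected: 1.0, 4.0, and sqrt(2)
```

The same function can be applied to the matrix of Solution 1.5 with $\alpha = 10$. Both eigenvalues of that matrix equal 0.1, yet the function returns a value slightly above 10.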

Solution 1.5 Let

$$A = \begin{bmatrix} 1/\alpha & \alpha \\ 0 & 1/\alpha \end{bmatrix}, \qquad \alpha > 1$$

Then the eigenvalues are $1/\alpha$ and, using an inequality on text page 7,

$$\|A\| \geq \max_{1 \leq i, j \leq 2}|a_{ij}| = \alpha$$

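Solution 1.6 By definition of the spectral norm, for any $\alpha \neq 0$ we can write

$$\|A\| = \max_{\|x\|=1}\|Ax\| = \max_{\|x\|=1}\frac{\|Ax\|}{\|x\|} = \max_{\|\alpha x\|=1}\frac{\|A\alpha x\|}{\|\alpha x\|} = \max_{\|x\|=1/|\alpha|}\frac{\|\alpha Ax\|}{\|\alpha x\|} = \max_{\|x\|=1/|\alpha|}\frac{\|Ax\|}{\|x\|}$$

Since this holds for any $\alpha \neq 0$,

$$\|A\| = \max_{x \neq 0}\frac{\|Ax\|}{\|x\|}$$

Therefore

$$\|A\| \geq \frac{\|Ax\|}{\|x\|}$$

for any $x \neq 0$, which gives

$$\|Ax\| \leq \|A\|\,\|x\|$$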

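Solution 1.7 By definition of the spectral norm,

$$\begin{aligned}
\|AB\| &= \max_{\|x\|=1}\|(AB)x\| = \max_{\|x\|=1}\|A(Bx)\| \\
&\leq \max_{\|x\|=1}\{\|A\|\,\|Bx\|\}, \quad \text{by Exercise 1.6} \\
&= \|A\|\max_{\|x\|=1}\|Bx\| = \|A\|\,\|B\|
\end{aligned}$$

If $A$ is invertible, then $AA^{-1} = I$ and the obvious $\|I\| = 1$ give

$$1 = \|AA^{-1}\| \leq \|A\|\,\|A^{-1}\|$$

Therefore

$$\|A^{-1}\| \geq \frac{1}{\|A\|}$$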

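Solution 1.8 We use the following easily verified facts about partitioned vectors:

$$\left\|\begin{bmatrix} x_1 \\ x_2 \end{bmatrix}\right\| \geq \|x_1\|, \|x_2\|; \qquad \left\|\begin{bmatrix} x_1 \\ 0 \end{bmatrix}\right\| = \|x_1\|, \qquad \left\|\begin{bmatrix} 0 \\ x_2 \end{bmatrix}\right\| = \|x_2\|$$

Write

$$Ax = \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix}\begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} A_{11}x_1 + A_{12}x_2 \\ A_{21}x_1 + A_{22}x_2 \end{bmatrix}$$

Then for $A_{11}$, for example,

$$\|A\| = \max_{\|x\|=1}\|Ax\| \geq \max_{\|x\|=1}\|A_{11}x_1 + A_{12}x_2\| \geq \max_{\|x_1\|=1}\|A_{11}x_1\| = \|A_{11}\|$$

where the last inequality follows by restricting to vectors with $x_2 = 0$. The other partitions are handled similarly. The last part is easy from the definition of induced norm. For example, if

$$A = \begin{bmatrix} 0 & A_{12} \\ 0 & 0 \end{bmatrix}$$

then partitioning the vector $x$ similarly we see that

$$\max_{\|x\|=1}\|Ax\| = \max_{\|x_2\|=1}\|A_{12}x_2\| = \|A_{12}\|$$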

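Solution 1.9 By the Cauchy-Schwarz inequality, and $\|x^T\| = \|x\|$,

$$|x^TAx| \leq \|x^TA\|\,\|x\| = \|A^Tx\|\,\|x\| \leq \|A^T\|\,\|x\|^2 = \|A\|\,\|x\|^2$$

This immediately gives

$$x^TAx \geq -\|A\|\,\|x\|^2$$

If $\lambda$ is an eigenvalue of $A$ and $x$ is a corresponding unity-norm eigenvector, then

$$|\lambda| = |\lambda|\,\|x\| = \|\lambda x\| = \|Ax\| \leq \|A\|\,\|x\| = \|A\|$$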

Solution 1.10 Since $Q = Q^T$, $Q^TQ = Q^2$, and the eigenvalues of $Q^2$ are $\lambda_1^2, \ldots, \lambda_n^2$. Therefore

$$\|Q\| = \sqrt{\lambda_{\max}(Q^2)} = \max_{1 \leq i \leq n}|\lambda_i|$$

For the other equality, Cauchy-Schwarz gives

$$|x^TQx| \leq \|x^T\|\,\|Qx\| = \|Qx\|\,\|x\| \leq \|Q\|\,\|x\|^2 = \left[\max_{1 \leq i \leq n}|\lambda_i|\right]x^Tx$$

Therefore $|x^TQx| \leq \|Q\|$ for all unity-norm $x$. Choosing $x_a$ as a unity-norm eigenvector of $Q$ corresponding to the eigenvalue that yields $\max_{1 \leq i \leq n}|\lambda_i|$ gives

$$|x_a^TQx_a| = \left[\max_{1 \leq i \leq n}|\lambda_i|\right]x_a^Tx_a = \max_{1 \leq i \leq n}|\lambda_i|$$

Thus $\max_{\|x\|=1}|x^TQx| = \|Q\|$.

Solution 1.11 Since $\|Ax\| = \sqrt{(Ax)^T(Ax)} = \sqrt{x^TA^TAx}$,

$$\|A\| = \max_{\|x\|=1}\sqrt{x^TA^TAx} = \left[\max_{\|x\|=1}x^TA^TAx\right]^{1/2}$$

The Rayleigh-Ritz inequality gives, for all unity-norm $x$,

$$x^TA^TAx \leq \lambda_{\max}(A^TA)\,x^Tx = \lambda_{\max}(A^TA)$$

and since $A^TA \geq 0$, $\lambda_{\max}(A^TA) \geq 0$. Choosing $x_a$ to be a unity-norm eigenvector corresponding to $\lambda_{\max}(A^TA)$ gives

$$x_a^TA^TAx_a = \lambda_{\max}(A^TA)$$

Thus

$$\max_{\|x\|=1}x^TA^TAx = \lambda_{\max}(A^TA)$$

so we have $\|A\| = \sqrt{\lambda_{\max}(A^TA)}$.

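Solution 1.12 Since $A^TA > 0$ we have $\lambda_i(A^TA) > 0$, $i = 1, \ldots, n$, and $(A^TA)^{-1} > 0$. Then by Exercise 1.11, noting that $AA^T = A(A^TA)A^{-1}$ has the same eigenvalues as $A^TA$,

$$\|A^{-1}\|^2 = \lambda_{\max}\big((AA^T)^{-1}\big) = \lambda_{\max}\big((A^TA)^{-1}\big) = \frac{1}{\lambda_{\min}(A^TA)} = \frac{\prod_{i=1}^n\lambda_i(A^TA)}{\lambda_{\min}(A^TA)\cdot\det(A^TA)} \leq \frac{[\lambda_{\max}(A^TA)]^{n-1}}{(\det A)^2} = \frac{\|A\|^{2(n-1)}}{(\det A)^2}$$

Therefore

$$\|A^{-1}\| \leq \frac{\|A\|^{n-1}}{|\det A|}$$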

Solution 1.13 Assume $A \neq 0$, since the zero case is trivial. For any unity-norm $x$ and $y$,

$$|y^TAx| \leq \|y^T\|\,\|Ax\| \leq \|y\|\,\|A\|\,\|x\| = \|A\|$$

Therefore

$$\max_{\|x\|, \|y\|=1}|y^TAx| \leq \|A\|$$

Now let unity-norm $x_a$ be such that $\|Ax_a\| = \|A\|$, and let

$$y_a = \frac{Ax_a}{\|A\|}$$

Then $\|y_a\| = 1$ and

$$y_a^TAx_a = \frac{x_a^TA^TAx_a}{\|A\|} = \frac{\|Ax_a\|^2}{\|A\|} = \frac{\|A\|^2}{\|A\|} = \|A\|$$

Therefore

$$\max_{\|x\|, \|y\|=1}|y^TAx| = \|A\|$$

Solution 1.14 The coefficients of the characteristic polynomial of a matrix are continuous functions of matrix

entries, since determinant is a continuous function of the entries (sum of products). Also the roots of a

polynomial are continuous functions of the coefficients. (A proof is given in Appendix A.4 of E.D. Sontag,

Mathematical Control Theory, Springer-Verlag, New York, 1990.) Since a composition of continuous functions

is a continuous function, the pointwise-in-t eigenvalues of A (t) are continuous in t.

This argument gives that the (nonnegative) eigenvalues of $A^T(t)A(t)$ are continuous in $t$. Then the maximum at each $t$ is continuous in $t$; plot two eigenvalues and consider their pointwise maximum to see this. Finally, since square root is a continuous function of nonnegative arguments, we conclude that $\|A(t)\|$ is continuous in $t$.

However, for continuously-differentiable $A(t)$, $\|A(t)\|$ need not be continuously differentiable in $t$. Consider the example

$$A(t) = \begin{bmatrix} t & 0 \\ 0 & t^2 \end{bmatrix}, \qquad \|A(t)\| = \begin{cases} t, & 0 \leq t \leq 1 \\ t^2, & 1 < t < \infty \end{cases}$$

Clearly the time derivative of $\|A(t)\|$ is discontinuous at $t = 1$. (This overlaps Exercise 1.18 a bit.)

Also, the eigenvalues of continuously-differentiable $A(t)$ are not necessarily continuously differentiable. Consider

$$A(t) = \begin{bmatrix} 0 & 1 \\ -1 & -t \end{bmatrix}$$

An easy computation gives the eigenvalues

$$\lambda(t) = -\frac{t}{2} \pm \frac{\sqrt{t^2 - 4}}{2}$$

Thus

$$\dot\lambda(t) = -\frac{1}{2} \pm \frac{t}{2\sqrt{t^2 - 4}}$$

and this function is not continuous at $t = 2$.

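Solution 1.15 Clearly $Q$ is positive definite, and by Rayleigh-Ritz, if $x \neq 0$,

$$0 < \lambda_{\min}(Q)\,x^Tx \leq x^TQx \leq \lambda_{\max}(Q)\,x^Tx$$

Choosing $x$ as an eigenvector corresponding to $\lambda_{\min}(Q)$ (respectively, $\lambda_{\max}(Q)$) shows that these inequalities are tight. Thus

$$\varepsilon_1 \leq \lambda_{\min}(Q), \qquad \lambda_{\max}(Q) \leq \varepsilon_2$$

Therefore

$$\lambda_{\min}(Q^{-1}) = \frac{1}{\lambda_{\max}(Q)} \geq \frac{1}{\varepsilon_2}, \qquad \lambda_{\max}(Q^{-1}) = \frac{1}{\lambda_{\min}(Q)} \leq \frac{1}{\varepsilon_1}$$

Thus Rayleigh-Ritz for the positive definite matrix $Q^{-1}$ gives

$$\frac{1}{\varepsilon_2}I \leq Q^{-1} \leq \frac{1}{\varepsilon_1}I$$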

Solution 1.16 If $W(t) - \varepsilon I$ is symmetric and positive semidefinite for all $t$, then for any $x$,

$$x^TW(t)x \geq \varepsilon\,x^Tx$$

for all $t$. At any value of $t$, let $x_t$ be an eigenvector corresponding to an eigenvalue (necessarily real) $\lambda_t$ of $W(t)$. Then

$$x_t^TW(t)x_t = \lambda_t\,x_t^Tx_t \geq \varepsilon\,x_t^Tx_t$$

That is, $\lambda_t \geq \varepsilon$. This holds for any eigenvalue of $W(t)$ and every $t$. Since the determinant is the product of eigenvalues,

$$\det W(t) \geq \varepsilon^n > 0$$

for any $t$.

Solution 1.17 Using the product rule to differentiate $A(t)A^{-1}(t) = I$ yields

$$\dot A(t)A^{-1}(t) + A(t)\frac{d}{dt}A^{-1}(t) = 0$$

which gives

$$\frac{d}{dt}A^{-1}(t) = -A^{-1}(t)\dot A(t)A^{-1}(t)$$

Solution 1.18 Assuming differentiability of both $x(t)$ and $\|x(t)\|$, and using the chain rule for scalar functions,

$$\frac{d}{dt}\|x(t)\|^2 = 2\|x(t)\|\frac{d}{dt}\|x(t)\|$$

Also we can write, using the product rule and the Cauchy-Schwarz inequality,

$$\frac{d}{dt}\|x(t)\|^2 = \frac{d}{dt}x^T(t)x(t) = \dot x^T(t)x(t) + x^T(t)\dot x(t) = 2x^T(t)\dot x(t) \leq 2\|x(t)\|\,\|\dot x(t)\|$$

For $t$ such that $x(t) \neq 0$, comparing these expressions gives

$$\frac{d}{dt}\|x(t)\| \leq \|\dot x(t)\|$$

If $x(t) = 0$ on a closed interval, then on that interval the result is trivial. If $x(t) = 0$ at an isolated point, then continuity arguments show that the result is valid. Note that for the differentiable function $x(t) = t$, $\|x(t)\| = |t|$ is not differentiable at $t = 0$. Thus we must make the assumption that $\|x(t)\|$ is differentiable. (While this inequality is not explicitly used in the book, the added differentiability hypothesis explains why we always differentiate $\|x(t)\|^2 = x^T(t)x(t)$ instead of $\|x(t)\|$.)

Solution 1.19 To prove the contrapositive claim, suppose for each $i, j$ there is a constant $\beta_{ij}$ such that

$$\int_0^t|f_{ij}(\sigma)|\,d\sigma \leq \beta_{ij}, \qquad t \geq 0$$

Then by the inequality on page 7, noting that $\max_{i,j}|f_{ij}(t)|$ is a continuous function of $t$ and taking the pointwise-in-$t$ maximum,

$$\begin{aligned}
\int_0^t\|F(\sigma)\|\,d\sigma &\leq \int_0^t\sqrt{mn}\,\max_{i,j}|f_{ij}(\sigma)|\,d\sigma \leq \sqrt{mn}\int_0^t\sum_{i=1}^m\sum_{j=1}^n|f_{ij}(\sigma)|\,d\sigma \\
&\leq \sqrt{mn}\sum_{i=1}^m\sum_{j=1}^n\beta_{ij} < \infty, \qquad t \geq 0
\end{aligned}$$

The argument for $\sum_{j=0}^k\|F(j)\|$ is similar.

Solution 1.20 If $\lambda(t)$, $p(t)$ are a pointwise-in-$t$ eigenvalue/eigenvector pair for $A^{-1}(t)$, then

$$\|A^{-1}(t)p(t)\| = \|\lambda(t)p(t)\| = |\lambda(t)|\,\|p(t)\|$$

Therefore, for every $t$,

$$|\lambda(t)| = \frac{\|A^{-1}(t)p(t)\|}{\|p(t)\|} \leq \frac{\|A^{-1}(t)\|\,\|p(t)\|}{\|p(t)\|} \leq \alpha$$

Since this holds for any eigenvalue/eigenvector pair,

$$|\det A(t)| = \frac{1}{|\det A^{-1}(t)|} = \frac{1}{|\lambda_1(t)\cdots\lambda_n(t)|} \geq \frac{1}{\alpha^n} > 0$$

for all $t$.

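Solution 1.21 Using Exercise 1.10 and the assumptions $Q(t) \geq 0$, $t_b \geq t_a$,

$$\int_{t_a}^{t_b}\|Q(\sigma)\|\,d\sigma = \int_{t_a}^{t_b}\lambda_{\max}[Q(\sigma)]\,d\sigma \leq \int_{t_a}^{t_b}\mathrm{tr}[Q(\sigma)]\,d\sigma = \mathrm{tr}\int_{t_a}^{t_b}Q(\sigma)\,d\sigma$$

Note that

$$\int_{t_a}^{t_b}Q(\sigma)\,d\sigma \geq 0$$

since for every $x$

$$x^T\left[\int_{t_a}^{t_b}Q(\sigma)\,d\sigma\right]x = \int_{t_a}^{t_b}x^TQ(\sigma)x\,d\sigma \geq 0$$

Thus, using a property of the trace on page 8 of Chapter 1, we have

$$\int_{t_a}^{t_b}\|Q(\sigma)\|\,d\sigma \leq \mathrm{tr}\int_{t_a}^{t_b}Q(\sigma)\,d\sigma \leq n\left\|\int_{t_a}^{t_b}Q(\sigma)\,d\sigma\right\|$$

Finally,

$$\int_{t_a}^{t_b}Q(\sigma)\,d\sigma \leq \varepsilon I$$

implies, using Rayleigh-Ritz,

$$\left\|\int_{t_a}^{t_b}Q(\sigma)\,d\sigma\right\| \leq \varepsilon$$

Therefore

$$\int_{t_a}^{t_b}\|Q(\sigma)\|\,d\sigma \leq n\varepsilon$$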

CHAPTER 2

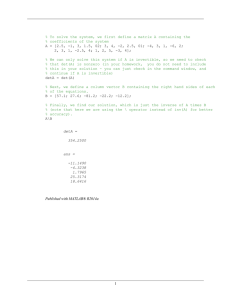

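Solution 2.3 The nominal solution for $\tilde u(t) = \sin(3t)$ is $\tilde y(t) = \sin t$. Let $x_1(t) = y(t)$, $x_2(t) = \dot y(t)$ to write the state equation

$$\dot x(t) = \begin{bmatrix} x_2(t) \\ -(4/3)x_1^3(t) - (1/3)u(t) \end{bmatrix}$$

Computing the Jacobians and evaluating gives the linearized state equation

$$\dot x_\delta(t) = \begin{bmatrix} 0 & 1 \\ -4\sin^2 t & 0 \end{bmatrix}x_\delta(t) + \begin{bmatrix} 0 \\ -1/3 \end{bmatrix}u_\delta(t), \qquad y_\delta(t) = \begin{bmatrix} 1 & 0 \end{bmatrix}x_\delta(t)$$

where

$$x_\delta(t) = x(t) - \begin{bmatrix} \sin t \\ \cos t \end{bmatrix}, \quad u_\delta(t) = u(t) - \sin(3t), \quad y_\delta(t) = y(t) - \sin t, \quad x_\delta(0) = x(0) - \begin{bmatrix} 0 \\ 1 \end{bmatrix}$$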

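Solution 2.5 For $\tilde u = 0$, constant nominal solutions are solutions of

$$\begin{aligned}
0 &= \tilde x_2 - 2\tilde x_1\tilde x_2 = \tilde x_2(1 - 2\tilde x_1) \\
0 &= -\tilde x_1 + \tilde x_1^2 + \tilde x_2^2 = \tilde x_1(\tilde x_1 - 1) + \tilde x_2^2
\end{aligned}$$

Evidently there are 4 possible solutions:

$$\tilde x_a = \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \quad \tilde x_b = \begin{bmatrix} 1 \\ 0 \end{bmatrix}, \quad \tilde x_c = \begin{bmatrix} 1/2 \\ 1/2 \end{bmatrix}, \quad \tilde x_d = \begin{bmatrix} 1/2 \\ -1/2 \end{bmatrix}$$

Since

$$\frac{\partial f}{\partial x} = \begin{bmatrix} -2x_2 & 1 - 2x_1 \\ -1 + 2x_1 & 2x_2 \end{bmatrix}, \qquad \frac{\partial f}{\partial u} = \begin{bmatrix} 0 \\ 1 \end{bmatrix}$$

evaluating at each of the constant nominals gives the corresponding 4 linearized state equations.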
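The four nominals and their linearizations can be checked in exact rational arithmetic with Python's `fractions` module. The right-hand side `f` below is an assumption. It is the form that reproduces both the nominal equations and the Jacobians above, with $u$ entering only the second component.

```python
from fractions import Fraction as Fr

def f(x1, x2, u=0):
    """Assumed right-hand side consistent with the equations and Jacobians above."""
    return (x2 - 2 * x1 * x2, -x1 + x1 ** 2 + x2 ** 2 + u)

def jacobian_x(x1, x2):
    """df/dx, as given in Solution 2.5."""
    return [[-2 * x2, 1 - 2 * x1], [-1 + 2 * x1, 2 * x2]]

B = [[0], [1]]  # df/du, the same at every nominal

nominals = {
    "a": (Fr(0), Fr(0)),
    "b": (Fr(1), Fr(0)),
    "c": (Fr(1, 2), Fr(1, 2)),
    "d": (Fr(1, 2), Fr(-1, 2)),
}

# Each point should be an equilibrium for u = 0
equilibria_ok = all(f(*x) == (0, 0) for x in nominals.values())
# A-matrix of the linearized state equation at each nominal
linearizations = {name: jacobian_x(*x) for name, x in nominals.items()}
print(equilibria_ok)  # expected: True
```

At $\tilde x_a$ through $\tilde x_d$ the linearized A-matrices come out as $\begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}$, $\begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$, $\begin{bmatrix} -1 & 0 \\ 0 & 1 \end{bmatrix}$ and $\begin{bmatrix} 1 & 0 \\ 0 & -1 \end{bmatrix}$. Each has input matrix `B`.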

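Solution 2.7 Clearly $\tilde x$ is a constant nominal if and only if

$$0 = A\tilde x + b\tilde u$$

that is, if and only if $A\tilde x = -b\tilde u$. For $\tilde u \neq 0$ there exists such an $\tilde x$ if and only if $b \in \mathrm{Im}[A]$, in other words,

$$\mathrm{rank}\,A = \mathrm{rank}\begin{bmatrix} A & b \end{bmatrix}$$

Also, $\tilde x$ is a constant nominal with $c\tilde x = 0$ if and only if

$$0 = A\tilde x + b\tilde u, \qquad 0 = c\tilde x$$

that is, if and only if

$$\begin{bmatrix} A \\ c \end{bmatrix}\tilde x = \begin{bmatrix} -b\tilde u \\ 0 \end{bmatrix}$$

As above, this holds if and only if

$$\mathrm{rank}\begin{bmatrix} A \\ c \end{bmatrix} = \mathrm{rank}\begin{bmatrix} A & b \\ c & 0 \end{bmatrix}$$

Finally, $\tilde x$ is a constant nominal with $c\tilde x = \tilde u$ if and only if

$$0 = A\tilde x + b\tilde u = (A + bc)\tilde x$$

and this holds if and only if

$$\tilde x \in \mathrm{Ker}[A + bc]$$

(If $A$ is invertible, we can be more explicit. For any $\tilde u$ the unique constant nominal is $\tilde x = -A^{-1}b\tilde u$. Then $\tilde y = 0$ for $\tilde u \neq 0$ if and only if $cA^{-1}b = 0$, and $\tilde y = \tilde u$ if and only if $cA^{-1}b = -1$.)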

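Solution 2.8

(a) Since

$$\begin{bmatrix} A & B \\ C & 0 \end{bmatrix}$$

is invertible, for any $K$

$$\begin{bmatrix} A + BK & B \\ C & 0 \end{bmatrix} = \begin{bmatrix} A & B \\ C & 0 \end{bmatrix}\begin{bmatrix} I & 0 \\ K & I \end{bmatrix}$$

is invertible. Let

$$\begin{bmatrix} A + BK & B \\ C & 0 \end{bmatrix}\begin{bmatrix} R_1 & R_2 \\ R_3 & R_4 \end{bmatrix} = \begin{bmatrix} I & 0 \\ 0 & I \end{bmatrix}$$

Then the 1,2-block gives $(A + BK)R_2 + BR_4 = 0$, that is, $R_2 = -(A + BK)^{-1}BR_4$, and the 2,2-block gives $CR_2 = I$, that is, $I = -C(A + BK)^{-1}BR_4$. Thus $[C(A + BK)^{-1}B]^{-1}$ exists and is given by $-R_4$.

(b) We need to show that there exists $N$ such that

$$0 = (A + BK)\tilde x + BN\tilde u, \qquad \tilde u = C\tilde x$$

The first equation gives

$$\tilde x = -(A + BK)^{-1}BN\tilde u$$

Thus we need to choose $N$ such that

$$-C(A + BK)^{-1}BN\tilde u = \tilde u$$

From part (a) we take $N = [-C(A + BK)^{-1}B]^{-1} = R_4$.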
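Part (b) can be illustrated numerically in exact rational arithmetic. The matrices `A`, `B`, `C` and the gain `K` below are hypothetical values chosen for the example. The system is single-input, single-output, so $N$ is a scalar. For these values the block matrix $\begin{bmatrix} A & B \\ C & 0 \end{bmatrix}$ has determinant 1, so the hypothesis of part (a) holds.

```python
from fractions import Fraction as Fr

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inv2(M):
    """Inverse of a 2x2 matrix of Fractions."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("singular matrix")
    return [[d / det, -b / det], [-c / det, a / det]]

A = [[Fr(0), Fr(1)], [Fr(-2), Fr(-3)]]
B = [[Fr(0)], [Fr(1)]]
C = [[Fr(1), Fr(0)]]
K = [[Fr(-1), Fr(-1)]]

BK = matmul(B, K)
A_cl = [[A[i][j] + BK[i][j] for j in range(2)] for i in range(2)]  # A + BK
G = matmul(C, matmul(inv2(A_cl), B))[0][0]                        # C (A+BK)^{-1} B
N = 1 / (-G)                                                       # N = [-C (A+BK)^{-1} B]^{-1}

u_nom = Fr(5)
x_nom = [[-v[0] * N * u_nom] for v in matmul(inv2(A_cl), B)]       # -(A+BK)^{-1} B N u
y_nom = matmul(C, x_nom)[0][0]
# (A+BK) x + B N u should vanish at the constant nominal
residual = [[r[0] + b[0] * N * u_nom] for r, b in zip(matmul(A_cl, x_nom), B)]
print(N, y_nom)  # expected: 3 5
```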


Solution 2.10 For $u(t) = \tilde u$, $\tilde x$ is a constant nominal if and only if

$$0 = (A + D\tilde u)\tilde x + b\tilde u$$

This holds if and only if $b\tilde u \in \mathrm{Im}[A + D\tilde u]$, that is, if and only if

$$\mathrm{rank}(A + D\tilde u) = \mathrm{rank}\begin{bmatrix} A + D\tilde u & b\tilde u \end{bmatrix}$$

If $A + D\tilde u$ is invertible, then

$$\tilde x = -(A + D\tilde u)^{-1}b\tilde u \qquad (+)$$

If $A$ is invertible, then by continuity of the determinant $\det(A + D\tilde u) \neq 0$ for all $\tilde u$ such that $|\tilde u|$ is sufficiently small, and (+) defines a corresponding constant nominal. The corresponding linearized state equation is

$$\dot x_\delta(t) = (A + D\tilde u)x_\delta(t) + [b - D(A + D\tilde u)^{-1}b\tilde u]u_\delta(t), \qquad y_\delta(t) = Cx_\delta(t)$$

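Solution 2.12 For the given nominal input, nominal output, and nominal initial state, the nominal solution satisfies

$$\dot{\tilde x}(t) = \begin{bmatrix} 1 \\ \tilde x_1(t) - \tilde x_3(t) \\ \tilde x_2(t) - 2\tilde x_3(t) \end{bmatrix}, \qquad \tilde x(0) = \begin{bmatrix} 0 \\ -3 \\ -2 \end{bmatrix}, \qquad 1 = \tilde x_2(t) - 2\tilde x_3(t)$$

Integrating for $\tilde x_1(t)$ and then $\tilde x_3(t)$ easily gives the nominal solution $\tilde x_1(t) = t$, $\tilde x_2(t) = 2t - 3$, and $\tilde x_3(t) = t - 2$. The corresponding linearized state equation is specified by

$$A = \begin{bmatrix} 0 & 0 & 0 \\ 1 & 0 & -1 \\ 0 & 1 & -2 \end{bmatrix}, \qquad B(t) = \begin{bmatrix} 0 \\ t \\ 0 \end{bmatrix}, \qquad C = \begin{bmatrix} 0 & 1 & -2 \end{bmatrix}$$

It is unusual that the nominal input and nominal output are constants, but the linearization is time varying.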

Solution 2.14 Compute

$$\begin{aligned}
\dot z(t) &= \dot x(t) - \dot q(t) = Ax(t) + Bu(t) + A^{-1}B\dot u(t) \\
&= Ax(t) - A[-A^{-1}Bu(t)] + A^{-1}B\dot u(t) \\
&= Az(t) + A^{-1}B\dot u(t)
\end{aligned}$$

If at any value $t_a > 0$ we have $x(t_a) = q(t_a)$, that is, $z(t_a) = 0$, and $\dot u(t) = 0$ for $t \geq t_a$, that is, $u(t) = u(t_a)$ for $t \geq t_a$, then $z(t) = 0$ for $t \geq t_a$. Thus $x(t) = q(t_a)$ for $t \geq t_a$, and $q(t)$ represents what could be called an 'instantaneous constant nominal.'

CHAPTER 3

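Solution 3.2 Differentiating term $k+1$ of the Peano-Baker series with respect to $\tau$ using the Leibniz rule gives

$$\begin{aligned}
\frac{\partial}{\partial\tau}\int_\tau^tA(\sigma_1)\int_\tau^{\sigma_1}A(\sigma_2)\cdots\int_\tau^{\sigma_k}A(\sigma_{k+1})\,d\sigma_{k+1}\cdots d\sigma_1
&= A(t)\int_\tau^tA(\sigma_2)\cdots\int_\tau^{\sigma_k}A(\sigma_{k+1})\,d\sigma_{k+1}\cdots d\sigma_2\,\frac{dt}{d\tau} \\
&\quad - A(\tau)\int_\tau^\tau A(\sigma_2)\cdots\int_\tau^{\sigma_k}A(\sigma_{k+1})\,d\sigma_{k+1}\cdots d\sigma_2\,\frac{d\tau}{d\tau} \\
&\quad + \int_\tau^tA(\sigma_1)\frac{\partial}{\partial\tau}\left[\int_\tau^{\sigma_1}A(\sigma_2)\cdots\int_\tau^{\sigma_k}A(\sigma_{k+1})\,d\sigma_{k+1}\cdots d\sigma_2\right]d\sigma_1 \\
&= \int_\tau^tA(\sigma_1)\frac{\partial}{\partial\tau}\left[\int_\tau^{\sigma_1}A(\sigma_2)\cdots\int_\tau^{\sigma_k}A(\sigma_{k+1})\,d\sigma_{k+1}\cdots d\sigma_2\right]d\sigma_1
\end{aligned}$$

Repeating this process $k$ times gives

$$\begin{aligned}
\frac{\partial}{\partial\tau}\int_\tau^tA(\sigma_1)\int_\tau^{\sigma_1}A(\sigma_2)\cdots\int_\tau^{\sigma_k}A(\sigma_{k+1})\,d\sigma_{k+1}\cdots d\sigma_1
&= \int_\tau^tA(\sigma_1)\int_\tau^{\sigma_1}\cdots\int_\tau^{\sigma_{k-1}}A(\sigma_k)\frac{\partial}{\partial\tau}\left[\int_\tau^{\sigma_k}A(\sigma_{k+1})\,d\sigma_{k+1}\right]d\sigma_k\cdots d\sigma_1 \\
&= \int_\tau^tA(\sigma_1)\int_\tau^{\sigma_1}\cdots\int_\tau^{\sigma_{k-1}}A(\sigma_k)\left[0 - A(\tau) + \int_\tau^{\sigma_k}0\,d\sigma_{k+1}\right]d\sigma_k\cdots d\sigma_1 \\
&= -\int_\tau^tA(\sigma_1)\int_\tau^{\sigma_1}\cdots\int_\tau^{\sigma_{k-1}}A(\sigma_k)\,d\sigma_k\cdots d\sigma_1\;A(\tau)
\end{aligned}$$

Recognizing this as term $k$ of the uniformly convergent series for $-\Phi(t, \tau)A(\tau)$ gives

$$\frac{\partial}{\partial\tau}\Phi(t, \tau) = -\Phi(t, \tau)A(\tau)$$

(Of course it is simpler to use the formula for the derivative of an inverse matrix given in Exercise 1.17.)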

Solution 3.6 Writing the state equation as a pair of scalar equations, the first one is

$$\dot x_1(t) = \frac{-t}{1 + t^2}\,x_1(t)$$

and an easy computation gives

$$x_1(t) = \frac{x_{1o}}{(1 + t^2)^{1/2}}$$

Then the second scalar equation becomes

$$\dot x_2(t) = \frac{-4t}{1 + t^2}\,x_2(t) + \frac{x_{1o}}{(1 + t^2)^{1/2}}$$

The complete solution formula gives, with some help from Mathematica,

$$\begin{aligned}
x_2(t) &= \frac{1}{(1 + t^2)^2}\,x_{2o} + \int_0^t\frac{(1 + \sigma^2)^{3/2}}{(1 + t^2)^2}\,d\sigma\;x_{1o} \\
&= \frac{1}{(1 + t^2)^2}\,x_{2o} + \frac{\sqrt{1 + t^2}\,(t^3/4 + 5t/8) + (3/8)\sinh^{-1}(t)}{(1 + t^2)^2}\,x_{1o}
\end{aligned}$$

If $x_{1o} = 1$, then as $t \to \infty$, $x_2(t) \to 1/4$, not zero.
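The closed form can be compared against a direct numerical integration of the second scalar equation, with $x_1(t)$ already substituted. The following is a hypothetical plain-Python sketch. The initial values, the interval $[0, 10]$ and the RK4 step count are illustrative assumptions.

```python
import math

def x2_closed(t, x1o, x2o):
    """Closed-form x2(t) from Solution 3.6."""
    s = 1 + t * t
    integral = math.sqrt(s) * (t ** 3 / 4 + 5 * t / 8) + 3 / 8 * math.asinh(t)
    return (x2o + integral * x1o) / s ** 2

def x2_dot(t, x2, x1o):
    """Second scalar equation with x1(t) = x1o / sqrt(1 + t^2) substituted."""
    return -4 * t / (1 + t * t) * x2 + x1o / math.sqrt(1 + t * t)

def rk4(fun, t0, y0, t1, steps):
    """Classical fourth-order Runge-Kutta for a scalar ODE y' = fun(t, y)."""
    h = (t1 - t0) / steps
    y = y0
    for i in range(steps):
        t = t0 + i * h
        k1 = fun(t, y)
        k2 = fun(t + h / 2, y + h / 2 * k1)
        k3 = fun(t + h / 2, y + h / 2 * k2)
        k4 = fun(t + h, y + h * k3)
        y += h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return y

x1o, x2o = 1.0, 0.5
numeric = rk4(lambda t, y: x2_dot(t, y, x1o), 0.0, x2o, 10.0, 10000)
print(numeric - x2_closed(10.0, x1o, x2o))   # small discretization error
print(x2_closed(1e4, x1o, x2o))              # close to 1/4
```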

Solution 3.7 From the hint, letting

$$r(t) = \int_{t_o}^tv(\sigma)\phi(\sigma)\,d\sigma$$

we have $\dot r(t) = v(t)\phi(t)$, and

$$\phi(t) \leq \psi(t) + r(t) \qquad (*)$$

Multiplying (*) through by the nonnegative $v(t)$ gives

$$v(t)\phi(t) \leq v(t)\psi(t) + v(t)r(t)$$

or

$$\dot r(t) - v(t)r(t) \leq v(t)\psi(t)$$

Multiply both sides by the positive quantity $e^{-\int_{t_o}^tv(\tau)\,d\tau}$ to obtain

$$\frac{d}{dt}\left[r(t)e^{-\int_{t_o}^tv(\tau)\,d\tau}\right] \leq v(t)\psi(t)e^{-\int_{t_o}^tv(\tau)\,d\tau}$$

Integrating both sides from $t_o$ to $t$, and using $r(t_o) = 0$, gives

$$r(t)e^{-\int_{t_o}^tv(\tau)\,d\tau} \leq \int_{t_o}^tv(\sigma)\psi(\sigma)e^{-\int_{t_o}^\sigma v(\tau)\,d\tau}\,d\sigma$$

Multiplying through by the positive quantity $e^{\int_{t_o}^tv(\tau)\,d\tau}$ gives

$$r(t) \leq \int_{t_o}^tv(\sigma)\psi(\sigma)e^{\int_\sigma^tv(\tau)\,d\tau}\,d\sigma$$

and using (*) yields the desired inequality

$$\phi(t) \leq \psi(t) + \int_{t_o}^tv(\sigma)\psi(\sigma)e^{\int_\sigma^tv(\tau)\,d\tau}\,d\sigma$$

Solution 3.10 Multiply the state equation by $2z^T(t)$ to obtain

$$\begin{aligned}
2z^T(t)\dot z(t) = \frac{d}{dt}\|z(t)\|^2 &= \sum_{i=1}^n\sum_{j=1}^n2z_i(t)a_{ij}(t)z_j(t) \\
&\leq \sum_{i=1}^n\sum_{j=1}^n2|a_{ij}(t)|\,|z_i(t)|\,|z_j(t)|, \qquad t \geq t_o
\end{aligned}$$

At each $t \geq t_o$ let

$$a(t) = 2n^2\max_{1 \leq i, j \leq n}|a_{ij}(t)|$$

Note that $a(t)$ is a continuous function of $t$, as a quick sample sketch indicates. Then, since $|z_i(t)| \leq \|z(t)\|$,

$$\frac{d}{dt}\|z(t)\|^2 \leq a(t)\|z(t)\|^2, \qquad t \geq t_o$$

Multiplying through by the positive quantity $e^{-\int_{t_o}^ta(\sigma)\,d\sigma}$ gives

$$\frac{d}{dt}\left[e^{-\int_{t_o}^ta(\sigma)\,d\sigma}\|z(t)\|^2\right] \leq 0, \qquad t \geq t_o$$

Integrating both sides from $t_o$ to $t$ and using $z(t_o) = 0$ gives

$$e^{-\int_{t_o}^ta(\sigma)\,d\sigma}\|z(t)\|^2 \leq 0, \qquad t \geq t_o$$

which implies $z(t) = 0$ for $t \geq t_o$.

Solution 3.11 The vector function $x(t)$ satisfies the given state equation if and only if it satisfies

$$x(t) = x_o + \int_{t_o}^tA(\sigma)x(\sigma)\,d\sigma + \int_{t_o}^t\int_{t_o}^\tau E(\tau, \sigma)x(\sigma)\,d\sigma\,d\tau + \int_{t_o}^tB(\sigma)u(\sigma)\,d\sigma$$

Assuming there are two solutions, their difference $z(t)$ satisfies

$$z(t) = \int_{t_o}^tA(\sigma)z(\sigma)\,d\sigma + \int_{t_o}^t\int_{t_o}^\tau E(\tau, \sigma)z(\sigma)\,d\sigma\,d\tau$$

Interchanging the order of integration in the double integral (Dirichlet's formula) gives

$$\begin{aligned}
z(t) &= \int_{t_o}^tA(\sigma)z(\sigma)\,d\sigma + \int_{t_o}^t\int_\sigma^tE(\tau, \sigma)\,d\tau\,z(\sigma)\,d\sigma \\
&= \int_{t_o}^t\left[A(\sigma) + \int_\sigma^tE(\tau, \sigma)\,d\tau\right]z(\sigma)\,d\sigma \\
&\stackrel{\Delta}{=} \int_{t_o}^t\hat A(t, \sigma)z(\sigma)\,d\sigma
\end{aligned}$$

Thus

$$\|z(t)\| = \left\|\int_{t_o}^t\hat A(t, \sigma)z(\sigma)\,d\sigma\right\| \leq \int_{t_o}^t\|\hat A(t, \sigma)\|\,\|z(\sigma)\|\,d\sigma$$

By continuity, given $T > 0$ there exists a finite constant $\alpha$ such that $\|\hat A(t, \sigma)\| \leq \alpha$ for $t_o \leq \sigma \leq t \leq t_o + T$. Thus

$$\|z(t)\| \leq \int_{t_o}^t\alpha\|z(\sigma)\|\,d\sigma, \qquad t \in [t_o, t_o + T]$$

and the Gronwall-Bellman inequality gives $\|z(t)\| = 0$ for $t \in [t_o, t_o + T]$. Since $T > 0$ is arbitrary, there can be no more than one solution.

Solution 3.13 From the Peano-Baker series,

$$\Phi(t, \tau) - \left[I + \int_\tau^tA(\sigma_1)\,d\sigma_1 + \cdots + \int_\tau^tA(\sigma_1)\int_\tau^{\sigma_1}\cdots\int_\tau^{\sigma_{k-1}}A(\sigma_k)\,d\sigma_k\cdots d\sigma_1\right] = \sum_{j=k+1}^\infty\int_\tau^tA(\sigma_1)\int_\tau^{\sigma_1}\cdots\int_\tau^{\sigma_{j-1}}A(\sigma_j)\,d\sigma_j\cdots d\sigma_1$$

For any fixed $T > 0$ there is a finite constant $\alpha$ such that $\|A(t)\| \leq \alpha$ for $t \in [-T, T]$, by continuity. Therefore

$$\begin{aligned}
\left\|\sum_{j=k+1}^\infty\int_\tau^tA(\sigma_1)\int_\tau^{\sigma_1}\cdots\int_\tau^{\sigma_{j-1}}A(\sigma_j)\,d\sigma_j\cdots d\sigma_1\right\|
&\leq \sum_{j=k+1}^\infty\left|\int_\tau^t\|A(\sigma_1)\|\left|\int_\tau^{\sigma_1}\cdots\left|\int_\tau^{\sigma_{j-1}}\|A(\sigma_j)\|\,d\sigma_j\right|\cdots\right|d\sigma_1\right| \\
&\leq \sum_{j=k+1}^\infty\alpha^j\left|\int_\tau^t\int_\tau^{\sigma_1}\cdots\int_\tau^{\sigma_{j-1}}1\,d\sigma_j\cdots d\sigma_1\right| \\
&= \sum_{j=k+1}^\infty\alpha^j\frac{|t - \tau|^j}{j!} \leq \sum_{j=k+1}^\infty\frac{(2\alpha T)^j}{j!}, \qquad t, \tau \in [-T, T]
\end{aligned}$$

We need to show that given $\varepsilon > 0$ there exists $K$ such that

$$\sum_{j=K+1}^\infty\frac{(2\alpha T)^j}{j!} < \varepsilon \qquad (*)$$

Using the hint,

$$\sum_{j=k+1}^\infty\frac{(2\alpha T)^j}{j!} = \sum_{i=0}^\infty\frac{(2\alpha T)^{k+1+i}}{(k+1+i)!} \leq \sum_{i=0}^\infty\frac{(2\alpha T)^{k+1}}{(k+1)!}\cdot\frac{(2\alpha T)^i}{k^i}$$

If $k > 2\alpha T$, then

$$\sum_{j=k+1}^\infty\frac{(2\alpha T)^j}{j!} \leq \frac{(2\alpha T)^{k+1}}{(k+1)!}\cdot\frac{1}{1 - 2\alpha T/k} = \frac{(2\alpha T)^{k+1}}{(k-1)!\,(k+1)(k - 2\alpha T)}$$

Because of the factorial in the denominator, given $\varepsilon > 0$ there exists a $K > 2\alpha T$ such that (*) holds.
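The bound also gives a constructive way to pick $K$: increase $k$ past $2\alpha T$ until the bound drops below $\varepsilon$. The following is a hypothetical plain-Python sketch. The values $\alpha = 1.5$, $T = 2$ and $\varepsilon = 10^{-6}$ are assumptions for illustration. The code also compares the bound with a long partial sum of the actual tail.

```python
import math

def tail(x, k, terms=200):
    """Partial sum of sum_{j=k+1}^inf x^j / j! (a lower estimate of the infinite tail)."""
    total, term = 0.0, x ** (k + 1) / math.factorial(k + 1)
    for j in range(k + 1, k + 1 + terms):
        total += term
        term *= x / (j + 1)   # turns x^j / j! into x^(j+1) / (j+1)!
    return total

def tail_bound(x, k):
    """Bound derived above, valid for k > x: x^(k+1) / ((k-1)! (k+1) (k - x))."""
    if k <= x:
        raise ValueError("bound requires k > x")
    return x ** (k + 1) / (math.factorial(k - 1) * (k + 1) * (k - x))

def choose_K(x, eps):
    """Smallest K > x for which the bound, and hence the tail in (*), is below eps."""
    k = math.floor(x) + 1
    while tail_bound(x, k) >= eps:
        k += 1
    return k

x = 2 * 1.5 * 2.0   # 2*alpha*T with hypothetical alpha = 1.5, T = 2
K = choose_K(x, 1e-6)
print(K, tail(x, K), tail_bound(x, K))
```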

Solution 3.15 Writing the complete solution of the state equation at $t_f$, we need to satisfy

$$H_ox_o + H_f\left[\Phi(t_f, t_o)x_o + \int_{t_o}^{t_f}\Phi(t_f, \sigma)f(\sigma)\,d\sigma\right] = h \qquad (+)$$

Thus there exists a solution that satisfies the boundary conditions if and only if

$$h - H_f\int_{t_o}^{t_f}\Phi(t_f, \sigma)f(\sigma)\,d\sigma \in \mathrm{Im}[H_o + H_f\Phi(t_f, t_o)]$$

There exists a unique solution that satisfies the boundary conditions if $H_o + H_f\Phi(t_f, t_o)$ is invertible. To compute a solution $x(t)$ satisfying the boundary conditions:

(1) Compute $\Phi(t, t_o)$ for $t \in [t_o, t_f]$

(2) Compute $H_o + H_f\Phi(t_f, t_o)$

(3) Compute $\int_{t_o}^{t_f}\Phi(t_f, \sigma)f(\sigma)\,d\sigma$

(4) Solve (+) for $x_o$

(5) Set $x(t) = \Phi(t, t_o)x_o + \int_{t_o}^t\Phi(t, \sigma)f(\sigma)\,d\sigma$, $t \in [t_o, t_f]$
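The five steps can be traced on a scalar example where $\Phi(t, \tau) = e^{a(t-\tau)}$. The following is a hypothetical plain-Python sketch. The data `a`, `f`, `Ho`, `Hf`, `h`, `to`, `tf` are assumptions chosen for illustration. The integrals use a composite Simpson rule. As an independent check, the state equation is integrated forward from the computed $x_o$ with RK4 and the boundary condition is tested.

```python
import math

# Hypothetical scalar data: x' = a x + f(t), boundary condition Ho x(to) + Hf x(tf) = h
a, to, tf = -0.5, 0.0, 2.0
Ho, Hf, h = 1.0, 2.0, 3.0
f = math.cos

def Phi(t, tau):
    """Step (1): transition function of x' = a x."""
    return math.exp(a * (t - tau))

def simpson(g, lo, hi, n=1000):
    """Composite Simpson rule with n (even) subintervals."""
    step = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * step)
    return total * step / 3

M = Ho + Hf * Phi(tf, to)                              # step (2)
w = simpson(lambda s: Phi(tf, s) * f(s), to, tf)       # step (3)
xo = (h - Hf * w) / M                                  # step (4), M is nonzero here

def x(t):
    """Step (5): complete solution formula."""
    return Phi(t, to) * xo + simpson(lambda s: Phi(t, s) * f(s), to, t)

def rk4_final(y, steps=2000):
    """Integrate x' = a x + f(t) from x(to) = y to tf with classical RK4."""
    dt = (tf - to) / steps
    for i in range(steps):
        t = to + i * dt
        k1 = a * y + f(t)
        k2 = a * (y + dt / 2 * k1) + f(t + dt / 2)
        k3 = a * (y + dt / 2 * k2) + f(t + dt / 2)
        k4 = a * (y + dt * k3) + f(t + dt)
        y += dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    return y

print(Ho * xo + Hf * rk4_final(xo) - h)   # close to 0
```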

CHAPTER 4

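Solution 4.1 An easy way to compute $A(t)$ is to use $A(t) = \dot\Phi(t, 0)\Phi(0, t)$. This gives

$$A(t) = \begin{bmatrix} -2t & -1 \\ 1 & -2t \end{bmatrix}$$

This $A(t)$ commutes with its integral, so we can write $\Phi(t, \tau)$ as the matrix exponential

$$\Phi(t, \tau) = \exp\int_\tau^tA(\sigma)\,d\sigma = \exp\begin{bmatrix} -(t^2 - \tau^2) & -(t - \tau) \\ (t - \tau) & -(t^2 - \tau^2) \end{bmatrix}$$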
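Since $-(t^2 - \tau^2)I$ commutes with the skew part, this exponential equals $e^{-(t^2-\tau^2)}$ times a rotation by $t - \tau$. The following hypothetical plain-Python sketch checks that form numerically. It tests $\partial\Phi/\partial t = A(t)\Phi(t, \tau)$ with a central difference and confirms the composition property. The sample points and the step `h` are illustrative assumptions.

```python
import math

def A(t):
    return [[-2.0 * t, -1.0], [1.0, -2.0 * t]]

def Phi(t, tau):
    """exp of the integral of A over [tau, t]: e^{-(t^2 - tau^2)} times a rotation by t - tau."""
    g = math.exp(-(t * t - tau * tau))
    c, s = math.cos(t - tau), math.sin(t - tau)
    return [[g * c, -g * s], [g * s, g * c]]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def residual(t, tau, h=1e-5):
    """Max entry of (central-difference estimate of dPhi/dt) minus A(t) Phi(t, tau)."""
    plus, minus = Phi(t + h, tau), Phi(t - h, tau)
    dPhi = [[(plus[i][j] - minus[i][j]) / (2 * h) for j in range(2)] for i in range(2)]
    APhi = matmul(A(t), Phi(t, tau))
    return max(abs(dPhi[i][j] - APhi[i][j]) for i in range(2) for j in range(2))

worst = max(residual(t, tau) for t in (-1.0, 0.0, 0.4, 1.3) for tau in (-0.5, 0.0, 0.9))
print(worst)  # of the order of the finite-difference error
```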

Solution 4.4 A linear state equation corresponding to the $n$th-order differential equation is

$$\dot x(t) = \begin{bmatrix} 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \\ -a_0(t) & -a_1(t) & \cdots & -a_{n-1}(t) \end{bmatrix}x(t)$$

The corresponding adjoint state equation is

$$\dot z(t) = \begin{bmatrix} 0 & 0 & \cdots & 0 & a_0(t) \\ -1 & 0 & \cdots & 0 & a_1(t) \\ \vdots & \ddots & \ddots & \vdots & \vdots \\ 0 & \cdots & -1 & 0 & a_{n-2}(t) \\ 0 & \cdots & 0 & -1 & a_{n-1}(t) \end{bmatrix}z(t)$$

To put this in the form of an $n$th-order differential equation, start with

$$\dot z_n(t) = -z_{n-1}(t) + a_{n-1}(t)z_n(t), \qquad \dot z_{n-1}(t) = -z_{n-2}(t) + a_{n-2}(t)z_n(t)$$

These give

$$\ddot z_n(t) = -\dot z_{n-1}(t) + \frac{d}{dt}[a_{n-1}(t)z_n(t)] = z_{n-2}(t) - a_{n-2}(t)z_n(t) + \frac{d}{dt}[a_{n-1}(t)z_n(t)]$$

Next,

$$\dot z_{n-2}(t) = -z_{n-3}(t) + a_{n-3}(t)z_n(t)$$

gives

$$\begin{aligned}
\frac{d^3}{dt^3}z_n(t) &= \dot z_{n-2}(t) - \frac{d}{dt}[a_{n-2}(t)z_n(t)] + \frac{d^2}{dt^2}[a_{n-1}(t)z_n(t)] \\
&= -z_{n-3}(t) + a_{n-3}(t)z_n(t) - \frac{d}{dt}[a_{n-2}(t)z_n(t)] + \frac{d^2}{dt^2}[a_{n-1}(t)z_n(t)]
\end{aligned}$$

Continuing gives the $n$th-order differential equation

$$\frac{d^n}{dt^n}z_n(t) = \frac{d^{n-1}}{dt^{n-1}}[a_{n-1}(t)z_n(t)] - \frac{d^{n-2}}{dt^{n-2}}[a_{n-2}(t)z_n(t)] + \cdots + (-1)^n\frac{d}{dt}[a_1(t)z_n(t)] + (-1)^{n+1}a_0(t)z_n(t)$$

Solution 4.6 For the first matrix differential equation, write the transpose of the equation as (transpose and differentiation commute)

$$\dot X^T(t) = A^T(t)X^T(t), \qquad X^T(t_o) = X_o^T$$

This has the unique solution $X^T(t) = \Phi_{A^T(t)}(t, t_o)X_o^T$, so that

$$X(t) = X_o\Phi_{A^T(t)}^T(t, t_o)$$

In the second matrix differential equation, let $\Phi_k(t, \tau)$ be the transition matrix for $A_k(t)$, $k = 1, 2$. Then it is easy to verify (Leibniz rule) that a solution is

$$X(t) = \Phi_1(t, t_o)X_o\Phi_2^T(t, t_o) + \int_{t_o}^t\Phi_1(t, \sigma)F(\sigma)\Phi_2^T(t, \sigma)\,d\sigma$$

Or, one can generate this expression by using the obvious integrating factors on the left and right sides of the differential equation. (To show this is a unique solution, show that the difference $Z(t)$ between any two solutions satisfies $\dot Z(t) = A_1(t)Z(t) + Z(t)A_2^T(t)$, with $Z(t_o) = 0$. Integrate both sides and apply the Bellman-Gronwall inequality to show $Z(t)$ is identically zero.)

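Solution 4.9 Clearly $A(t)$ commutes with its integral. Thus we compute

$$\exp\left(\begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}\tau\right)$$

and then replace $\tau$ by $\int_0^ta(\sigma)\,d\sigma$. From the power series for the exponential,

$$\begin{aligned}
\exp\left(\begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}\tau\right) &= \sum_{k=0}^\infty\frac{1}{k!}\begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}^k\tau^k \\
&= \sum_{k=0}^\infty\frac{1}{(2k)!}\begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}^{2k}\tau^{2k} + \sum_{k=0}^\infty\frac{1}{(2k+1)!}\begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}^{2k+1}\tau^{2k+1} \\
&= \sum_{k=0}^\infty\frac{1}{(2k)!}\begin{bmatrix} (-1)^k & 0 \\ 0 & (-1)^k \end{bmatrix}\tau^{2k} + \sum_{k=0}^\infty\frac{1}{(2k+1)!}\begin{bmatrix} 0 & (-1)^k \\ (-1)^{k+1} & 0 \end{bmatrix}\tau^{2k+1} \\
&= \begin{bmatrix} \cos\tau & 0 \\ 0 & \cos\tau \end{bmatrix} + \begin{bmatrix} 0 & \sin\tau \\ -\sin\tau & 0 \end{bmatrix} = \begin{bmatrix} \cos\tau & \sin\tau \\ -\sin\tau & \cos\tau \end{bmatrix}
\end{aligned}$$

Replacing $\tau$ as noted above gives $\Phi(t, 0)$.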
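The series computation can be cross-checked by summing a truncated power series for the matrix exponential and comparing it with the cosine/sine closed form. The following is a hypothetical plain-Python sketch. The truncation length and the sample values of $\tau$ are assumptions for illustration.

```python
import math

def expm_series(M, terms=40):
    """Truncated power series sum_{k < terms} M^k / k! for a small square matrix."""
    n = len(M)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        # term <- term * M / k, so that term = M^k / k!
        term = [[sum(term[i][m] * M[m][j] for m in range(n)) / k for j in range(n)]
                for i in range(n)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result

def closed_form(tau):
    return [[math.cos(tau), math.sin(tau)], [-math.sin(tau), math.cos(tau)]]

def max_error(tau):
    series = expm_series([[0.0, tau], [-tau, 0.0]])
    exact = closed_form(tau)
    return max(abs(series[i][j] - exact[i][j]) for i in range(2) for j in range(2))

print([max_error(tau) for tau in (0.0, 0.5, 2.0, -3.0)])
```

For a specific coefficient $a(t)$, evaluating `closed_form` at $\tau = \int_0^ta(\sigma)\,d\sigma$ then gives $\Phi(t, 0)$.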

Solution 4.10 For sufficiency, suppose $\Phi_x(t, 0) = T(t)e^{Rt}$. Then $T(0) = I$ and $T(t)$ is continuously differentiable. Let $z(t) = T^{-1}(t)x(t)$ so that

$$\Phi_z(t, 0) = T^{-1}(t)\Phi_x(t, 0)T(0) = T^{-1}(t)T(t)e^{Rt} = e^{Rt}$$

Thus $\dot z(t) = Rz(t)$.

For necessity, suppose $P(t)$ is a variable change that gives

$$\dot z(t) = R_az(t)$$

Then

$$\Phi_z(t, 0) = e^{R_at} = P^{-1}(t)\Phi_x(t, 0)P(0)$$

that is,

$$\Phi_x(t, 0) = P(t)e^{R_at}P^{-1}(0)$$

Let $T(t) = P(t)P^{-1}(0)$ and $R = P(0)R_aP^{-1}(0)$. Then

$$\begin{aligned}
\Phi_x(t, 0) &= T(t)P(0)\,e^{P^{-1}(0)RP(0)t}\,P^{-1}(0) \\
&= T(t)P(0)\left[P^{-1}(0)e^{Rt}P(0)\right]P^{-1}(0) \\
&= T(t)e^{Rt}
\end{aligned}$$

Solution 4.11 Suppose

$$\Phi(t, 0) = e^{A_1t}e^{A_2t}$$

Then

$$\frac{d}{dt}\Phi(t, 0) = e^{A_1t}(A_1 + A_2)e^{A_2t} = e^{A_1t}(A_1 + A_2)e^{-A_1t}\cdot e^{A_1t}e^{A_2t}$$

This implies $A(t) = e^{A_1t}[A_1 + A_2]e^{-A_1t}$. Therefore $A(0) = A_1 + A_2$ is clear, and

$$\dot A(t) = A_1e^{A_1t}(A_1 + A_2)e^{-A_1t} + e^{A_1t}(A_1 + A_2)e^{-A_1t}(-A_1) = A_1A(t) - A(t)A_1$$

Conversely, assume $A_1$ and $A_2$ are such that

$$\dot A(t) = A_1A(t) - A(t)A_1, \qquad A(0) = A_1 + A_2$$

This matrix differential equation has a unique solution (by rewriting it as a linear vector differential equation), and from the calculation above this solution is

$$A(t) = e^{A_1t}(A_1 + A_2)e^{-A_1t}$$

Since

$$\frac{d}{dt}\left[e^{A_1t}e^{A_2t}\right] = A(t)e^{A_1t}e^{A_2t}, \qquad e^{A_1\cdot 0}e^{A_2\cdot 0} = I$$

we have that $\Phi(t, 0) = e^{A_1t}e^{A_2t}$.

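Solution 4.13 Writing

$$\frac{\partial}{\partial t}\Phi_A(t, \tau) = A(t)\Phi_A(t, \tau), \qquad \Phi_A(\tau, \tau) = I$$

in partitioned form shows that

$$\frac{\partial}{\partial t}\Phi_{21}(t, \tau) = A_{22}(t)\Phi_{21}(t, \tau), \qquad \Phi_{21}(\tau, \tau) = 0$$

Thus $\Phi_{21}(t, \tau)$ is identically zero. But then

$$\frac{\partial}{\partial t}\Phi_{ii}(t, \tau) = A_{ii}(t)\Phi_{ii}(t, \tau), \qquad \Phi_{ii}(\tau, \tau) = I$$

for $i = 1, 2$, and

$$\frac{\partial}{\partial t}\Phi_{12}(t, \tau) = A_{11}(t)\Phi_{12}(t, \tau) + A_{12}(t)\Phi_{22}(t, \tau), \qquad \Phi_{12}(\tau, \tau) = 0$$

Using Exercise 4.6 with $F(t) = A_{12}(t)\Phi_{22}(t, \tau)$ gives

$$\Phi_{12}(t, \tau) = \int_\tau^t\Phi_{11}(t, \sigma)A_{12}(\sigma)\Phi_{22}(\sigma, \tau)\,d\sigma$$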

Solution 4.17 We need to compute a continuously-differentiable, invertible $P(t)$ such that

$$\begin{bmatrix} t & 1 \\ 1 & t \end{bmatrix} = P^{-1}(t)\begin{bmatrix} 0 & 1 \\ 2 - t^2 & 2t \end{bmatrix}P(t) - P^{-1}(t)\dot P(t)$$

Multiplying on the left by $P(t)$, the result can be written as a dimension-4 linear state equation. Choosing the initial condition corresponding to $P(0) = I$, some clever guessing gives

$$P(t) = \begin{bmatrix} 1 & 0 \\ t & 1 \end{bmatrix}$$
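The guessed $P(t)$ can be verified exactly. The following hypothetical plain-Python sketch evaluates $P^{-1}AP - P^{-1}\dot P$ at a few rational values of $t$, using the `fractions` module, and compares the result with the target matrix. The sample points are arbitrary assumptions.

```python
from fractions import Fraction as Fr

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def transformed(t):
    """P^{-1}(t) A(t) P(t) - P^{-1}(t) Pdot(t) for the guessed P(t) = [[1, 0], [t, 1]]."""
    A = [[0, 1], [2 - t * t, 2 * t]]
    P = [[1, 0], [t, 1]]
    P_inv = [[1, 0], [-t, 1]]
    P_dot = [[0, 0], [1, 0]]
    first = matmul(P_inv, matmul(A, P))
    second = matmul(P_inv, P_dot)
    return [[first[i][j] - second[i][j] for j in range(2)] for i in range(2)]

samples = [Fr(0), Fr(1, 3), Fr(-2), Fr(7, 2)]
print(all(transformed(t) == [[t, 1], [1, t]] for t in samples))  # expected: True
```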

Solution 4.23 Using the formula for the derivative of an inverse matrix given in Exercise 1.17,

$$\begin{aligned}
\frac{\partial}{\partial t}\Phi_A(-\tau, -t) &= \frac{\partial}{\partial t}\Phi_A^{-1}(-t, -\tau) = -\Phi_A^{-1}(-t, -\tau)\left[\frac{\partial}{\partial t}\Phi_A(-t, -\tau)\right]\Phi_A^{-1}(-t, -\tau) \\
&= -\Phi_A^{-1}(-t, -\tau)\left[-\frac{\partial}{\partial(-t)}\Phi_A(-t, -\tau)\right]\Phi_A^{-1}(-t, -\tau) \\
&= -\Phi_A^{-1}(-t, -\tau)\left[-A(-t)\Phi_A(-t, -\tau)\right]\Phi_A^{-1}(-t, -\tau) \\
&= \Phi_A^{-1}(-t, -\tau)A(-t) = \Phi_A(-\tau, -t)A(-t)
\end{aligned}$$

Transposing gives

$$\frac{\partial}{\partial t}\Phi_A^T(-\tau, -t) = A^T(-t)\Phi_A^T(-\tau, -t)$$

Since $\Phi_A^T(-\tau, -\tau) = I$, we have $F(t) = A^T(-t)$.

Or we can use the result of Exercise 3.2 to compute:

$$\frac{\partial}{\partial t}\Phi_A(-\tau, -t) = -\frac{\partial}{\partial(-t)}\Phi_A(-\tau, -t) = \Phi_A(-\tau, -t)A(-t)$$

This implies

$$\frac{\partial}{\partial t}\Phi_A^T(-\tau, -t) = A^T(-t)\Phi_A^T(-\tau, -t)$$

Since $\Phi_A^T(-\tau, -\tau) = I$, we have $F(t) = A^T(-t)$.

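Solution 4.25 We can write

$$\Phi(t + \sigma, \sigma) = I + \int_\sigma^{t+\sigma}A(\tau)\,d\tau + \sum_{k=2}^\infty\int_\sigma^{t+\sigma}A(\tau_1)\int_\sigma^{\tau_1}A(\tau_2)\cdots\int_\sigma^{\tau_{k-1}}A(\tau_k)\,d\tau_k\cdots d\tau_1$$

and

$$e^{\bar A_t(\sigma)t} = I + \bar A_t(\sigma)t + \sum_{k=2}^\infty\frac{1}{k!}\bar A_t^k(\sigma)t^k$$

Then

$$R(t, \sigma) = \Phi(t + \sigma, \sigma) - e^{\bar A_t(\sigma)t} = \sum_{k=2}^\infty\int_\sigma^{t+\sigma}A(\tau_1)\int_\sigma^{\tau_1}A(\tau_2)\cdots\int_\sigma^{\tau_{k-1}}A(\tau_k)\,d\tau_k\cdots d\tau_1 - \sum_{k=2}^\infty\frac{1}{k!}\bar A_t^k(\sigma)t^k$$

From $\|A(t)\| \leq \alpha$ and the triangle inequality,

$$\|R(t, \sigma)\| \leq \sum_{k=2}^\infty2\alpha^k\frac{t^k}{k!} = \alpha^2t^2\sum_{k=2}^\infty\frac{2}{k!}\alpha^{k-2}t^{k-2}$$

Using

$$\frac{2}{k!} \leq \frac{1}{(k-2)!}, \qquad k \geq 2$$

gives

$$\|R(t, \sigma)\| \leq \alpha^2t^2\sum_{k=2}^\infty\frac{1}{(k-2)!}\alpha^{k-2}t^{k-2} = \alpha^2t^2e^{\alpha t}$$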

CHAPTER 5

Solution 5.3 Using the series definition, which involves talent in series recognition,

A 2k +1 =

0 1

, A 2k =

1 0

1 0

, k = 0, 1, . . .

0 1

gives

=

0 t ___

1

+

t 0

2!

e At = I +

−t

t 2 0 ___

1

+

0 t2 3!

−t

(e +e )/ 2 (e −e )/ 2

=

(e t −e −t )/ 2 (e t +e −t )/ 2 t

t

0 t3 + ...

t3 0 cosh t sinh t sinh t cosh t Using the Laplace transform method,

1

_____

2

s −1

s

_____

2

s −1

(sI − A)−1 =

s −1 −1 s −1

=

s

_____

2

s −1

1

_____

2

s −1

which gives again

e At =

cosh t sinh t sinh t cosh t Using the diagonalization method, computing eigenvectors for A and letting

1

1 P=

1 −1 gives

P −1 AP =

1 0 0 −1 Then

e At = P

et 0

0 e −t

P −1 =

cosh t sinh t sinh t cosh t Solution 5.4 Since

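$$A(t) = \begin{bmatrix} t & 1 \\ 1 & t \end{bmatrix}$$

commutes with its integral,

$$\Phi(t,0) = e^{\int_0^t A(\sigma)\,d\sigma} = \exp\begin{bmatrix} t^2/2 & t \\ t & t^2/2 \end{bmatrix}$$

And since

$$\begin{bmatrix} t^2/2 & 0 \\ 0 & t^2/2 \end{bmatrix}, \qquad \begin{bmatrix} 0 & t \\ t & 0 \end{bmatrix}$$

commute,

$$\Phi(t,0) = \exp\left(\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}\frac{t^2}{2}\right)\cdot\exp\left(\begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}t\right)$$

Using Exercise 5.3 gives

$$\Phi(t,0) = \begin{bmatrix} e^{t^2/2} & 0 \\ 0 & e^{t^2/2} \end{bmatrix}\begin{bmatrix} \cosh t & \sinh t \\ \sinh t & \cosh t \end{bmatrix} = \begin{bmatrix} e^{t^2/2}\cosh t & e^{t^2/2}\sinh t \\ e^{t^2/2}\sinh t & e^{t^2/2}\cosh t \end{bmatrix}$$

Solution 5.7 To verify that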

$$A\int_0^t e^{A\sigma}\,d\sigma = e^{At} - I$$

note that the two sides agree at $t = 0$, and the derivatives of the two sides with respect to $t$ are identical.

If $A$ is invertible and all its eigenvalues have negative real parts, then $\lim_{t\to\infty} e^{At} = 0$. This gives

$$A\int_0^\infty e^{A\sigma}\,d\sigma = -I$$

that is,

$$A^{-1} = -\int_0^\infty e^{A\sigma}\,d\sigma = \int_\infty^0 e^{A\sigma}\,d\sigma$$
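As a numerical illustration (not part of the original solution), the Python sketch below checks both identities for one hypothetical matrix with eigenvalues −1 and −2. It assumes that $e^{At}$ may be approximated by a scaled-and-squared Taylor series and the integrals by the composite Simpson rule; the infinite integral is truncated at $\sigma = 40$, where $e^{A\sigma}$ is negligible. The helper names are illustrative only.

```python
import math

def matmul(X, Y):
    """Product of two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def expm(A, t, terms=30):
    """Approximate e^{At} by a truncated Taylor series with scaling and squaring."""
    n = len(A)
    norm = max(sum(abs(a) for a in row) for row in A) * abs(t)
    s = max(0, math.ceil(math.log2(norm)) + 1) if norm > 0 else 0
    Ah = [[a * t / 2 ** s for a in row] for row in A]
    E = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in E]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, Ah)]  # (Ah)^k / k!
        E = [[E[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    for _ in range(s):  # undo the scaling: e^{At} = (e^{At/2^s})^(2^s)
        E = matmul(E, E)
    return E

def integral_expm(A, T, steps=2000):
    """Composite Simpson approximation of the integral of e^{A sigma} over [0, T]; steps is even."""
    n = len(A)
    h = T / steps
    total = [[0.0] * n for _ in range(n)]
    for k in range(steps + 1):
        w = 1 if k in (0, steps) else (4 if k % 2 else 2)
        E = expm(A, k * h)
        for i in range(n):
            for j in range(n):
                total[i][j] += w * E[i][j]
    return [[v * h / 3 for v in row] for row in total]

# Hypothetical example: eigenvalues -1 and -2, so A is invertible and e^{At} -> 0.
A = [[-1.0, 1.0], [0.0, -2.0]]
lhs = matmul(A, integral_expm(A, 1.0))  # A times the integral over [0, 1]
rhs = [[e - float(i == j) for j, e in enumerate(row)] for i, row in enumerate(expm(A, 1.0))]
approx_inv = [[-v for v in row] for row in integral_expm(A, 40.0)]  # horizon 40 stands in for infinity
print(lhs)
print(rhs)
print(approx_inv)  # should be close to A^{-1} = [[-1, -0.5], [0, -0.5]]
```

The truncation horizon and step count are arbitrary choices; any horizon where $\|e^{A\sigma}\|$ is below the desired tolerance would serve.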

Solution 5.9 Evaluating the given expression at $t = 0$ gives $x(0) = 0$. Using the Leibniz rule to differentiate the expression gives

$$\dot{x}(t) = \frac{d}{dt}\int_0^t e^{A(t-\sigma)}e^{D\int_\sigma^t u(\tau)\,d\tau}\,b\,u(\sigma)\,d\sigma = b\,u(t) + \int_0^t \frac{\partial}{\partial t}\left[e^{A(t-\sigma)}e^{D\int_\sigma^t u(\tau)\,d\tau}\right]b\,u(\sigma)\,d\sigma$$

Using the product rule and differentiating the power series for $e^{D\int_\sigma^t u(\tau)\,d\tau}$ gives

$$\dot{x}(t) = b\,u(t) + \int_0^t \left[Ae^{A(t-\sigma)}e^{D\int_\sigma^t u(\tau)\,d\tau}b\,u(\sigma) + e^{A(t-\sigma)}D\,u(t)\,e^{D\int_\sigma^t u(\tau)\,d\tau}b\,u(\sigma)\right]d\sigma$$

If we assume that $AD = DA$, then $e^{A(t-\sigma)}D = De^{A(t-\sigma)}$ and

$$\begin{aligned} \dot{x}(t) &= b\,u(t) + A\int_0^t e^{A(t-\sigma)}e^{D\int_\sigma^t u(\tau)\,d\tau}b\,u(\sigma)\,d\sigma + D\,u(t)\int_0^t e^{A(t-\sigma)}e^{D\int_\sigma^t u(\tau)\,d\tau}b\,u(\sigma)\,d\sigma \\ &= Ax(t) + Dx(t)u(t) + bu(t) \end{aligned}$$

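Solution 5.12 We will show how to define $\beta_0(t), \ldots, \beta_{n-1}(t)$ such that

$$\sum_{k=0}^{n-1}\dot{\beta}_k(t)P_k = \sum_{k=0}^{n-1}\beta_k(t)AP_k\,, \qquad \sum_{k=0}^{n-1}\beta_k(0)P_k = I \qquad (*)$$

which then gives the desired expression by Property 5.1. From the definitions,

$$P_1 = AP_0 - \lambda_1 I\,, \quad P_2 = AP_1 - \lambda_2P_1\,, \quad \ldots\,, \quad P_{n-1} = AP_{n-2} - \lambda_{n-1}P_{n-2}$$

Also $P_n = (A - \lambda_nI)P_{n-1} = 0$ by the Cayley-Hamilton theorem, so $AP_{n-1} = \lambda_nP_{n-1}$. Now we equate coefficients of like $P_k$'s in (*), rewritten as

$$\sum_{k=0}^{n-1}\dot{\beta}_k(t)P_k = \sum_{k=0}^{n-1}\beta_k(t)\left[P_{k+1} + \lambda_{k+1}P_k\right]$$

to get equations for the desired $\beta_k(t)$'s:

$$\begin{aligned} P_0 &: \quad \dot{\beta}_0(t) = \lambda_1\beta_0(t) \\ P_1 &: \quad \dot{\beta}_1(t) = \beta_0(t) + \lambda_2\beta_1(t) \\ &\;\;\vdots \\ P_{n-1} &: \quad \dot{\beta}_{n-1}(t) = \beta_{n-2}(t) + \lambda_n\beta_{n-1}(t) \end{aligned}$$

that is,

$$\begin{bmatrix} \dot{\beta}_0(t) \\ \dot{\beta}_1(t) \\ \vdots \\ \dot{\beta}_{n-1}(t) \end{bmatrix} = \begin{bmatrix} \lambda_1 & 0 & \cdots & 0 & 0 \\ 1 & \lambda_2 & \cdots & 0 & 0 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & \lambda_{n-1} & 0 \\ 0 & 0 & \cdots & 1 & \lambda_n \end{bmatrix}\begin{bmatrix} \beta_0(t) \\ \beta_1(t) \\ \vdots \\ \beta_{n-1}(t) \end{bmatrix}$$

With the initial condition provided by $\beta_0(0) = 1$, $\beta_k(0) = 0$, $k = 1, \ldots, n-1$, the analytic solution of this state equation provides a solution for (*). (The resulting expression for $e^{At}$ is sometimes called Putzer's formula.)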
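Putzer's formula lends itself to a short computational sketch. The Python below is illustrative only: it assumes the eigenvalues of $A$ are real and supplied by the caller (repeats allowed), forms $P_0, \ldots, P_{n-1}$ as above, and integrates the bidiagonal $\beta$ equation numerically with classical fourth-order Runge-Kutta instead of solving it analytically. A truncated Taylor series serves as a reference value. All function names are hypothetical.

```python
def matmul(X, Y):
    """Product of two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def putzer_expm(A, lams, t, steps=2000):
    """e^{At} = sum_k beta_k(t) P_k, where lams = [lambda_1, ..., lambda_n] are the eigenvalues of A."""
    n = len(A)
    I = [[float(i == j) for j in range(n)] for i in range(n)]
    # P_0 = I and P_k = (A - lambda_k I) P_{k-1}, k = 1, ..., n-1
    P = [I]
    for k in range(1, n):
        shifted = [[A[i][j] - lams[k - 1] * I[i][j] for j in range(n)] for i in range(n)]
        P.append(matmul(shifted, P[-1]))

    def beta_dot(b):
        # beta_0' = lambda_1 beta_0 and beta_k' = beta_{k-1} + lambda_{k+1} beta_k
        return [lams[0] * b[0]] + [b[k - 1] + lams[k] * b[k] for k in range(1, n)]

    beta = [1.0] + [0.0] * (n - 1)  # beta_0(0) = 1, beta_k(0) = 0
    h = t / steps
    for _ in range(steps):  # classical RK4 stands in for the analytic solution
        k1 = beta_dot(beta)
        k2 = beta_dot([b + h / 2 * d for b, d in zip(beta, k1)])
        k3 = beta_dot([b + h / 2 * d for b, d in zip(beta, k2)])
        k4 = beta_dot([b + h * d for b, d in zip(beta, k3)])
        beta = [b + h / 6 * (p + 2 * q + 2 * r + s)
                for b, p, q, r, s in zip(beta, k1, k2, k3, k4)]
    return [[sum(beta[k] * P[k][i][j] for k in range(n)) for j in range(n)] for i in range(n)]

def taylor_expm(A, t, terms=60):
    """Reference value: truncated Taylor series (adequate when ||A t|| is modest)."""
    n = len(A)
    E = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in E]
    for k in range(1, terms):
        term = [[v * t / k for v in row] for row in matmul(term, A)]
        E = [[E[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return E

# Hypothetical examples: distinct eigenvalues -1, -2, and a repeated eigenvalue -1.
A1 = [[0.0, 1.0], [-2.0, -3.0]]
print(putzer_expm(A1, [-1.0, -2.0], 1.0))
print(taylor_expm(A1, 1.0))
A2 = [[-1.0, 1.0], [0.0, -1.0]]
print(putzer_expm(A2, [-1.0, -1.0], 1.0))  # e^{-1} [[1, 1], [0, 1]]
```

The repeated-eigenvalue example shows that, unlike diagonalization, the construction needs no eigenvectors.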

Solution 5.17 Write, by Property 5.11,

$$\Phi(t,t_o) = P^{-1}(t)\,e^{R(t-t_o)}P(t_o)$$

where $P(t)$ is continuous, $T$-periodic, and invertible at each $t$. Let

$$S = P^{-1}(t_o)RP(t_o)\,, \qquad Q(t,t_o) = P^{-1}(t)P(t_o)$$

Then $Q(t,t_o)$ is continuous and invertible at each $t$, and satisfies

$$Q(t+T,t_o) = P^{-1}(t+T)P(t_o) = P^{-1}(t)P(t_o) = Q(t,t_o)$$

with $Q(t_o,t_o) = I$. Also,

$$\Phi(t,t_o) = P^{-1}(t)\,e^{P(t_o)SP^{-1}(t_o)(t-t_o)}P(t_o) = P^{-1}(t)P(t_o)\,e^{S(t-t_o)}P^{-1}(t_o)P(t_o) = Q(t,t_o)\,e^{S(t-t_o)}$$

Solution 5.19 From the Floquet decomposition and Property 4.9,

$$\det\Phi(T,0) = \det e^{RT} = e^{\int_0^T \mathrm{tr}\,[A(\sigma)]\,d\sigma}$$

Because the integral in the exponent is positive, the product of eigenvalues of $\Phi(T,0)$ is greater than unity, which implies that at least one eigenvalue of $\Phi(T,0)$ has magnitude greater than unity. Thus by the argument following Example 5.12 there exist unbounded solutions.

Solution 5.20 Following the hint, define a real matrix S by

$$e^{S2T} = \Phi^2(T,0)$$

and set

$$Q(t) = \Phi(t,0)\,e^{-St}$$

Clearly $Q(t)$ is real and continuous, and

$$\begin{aligned} Q(t+2T) &= \Phi(t+2T,0)\,e^{-S(t+2T)} = \Phi(t+2T,T)\,\Phi(T,0)\,e^{-S2T}e^{-St} \\ &= \Phi(t+T,0)\,\Phi(T,0)\,e^{-S2T}e^{-St} = \Phi(t+T,T)\,\Phi^2(T,0)\,e^{-S2T}e^{-St} \\ &= \Phi(t+T,T)\,e^{-St} = \Phi(t,0)\,e^{-St} \\ &= Q(t) \end{aligned}$$

That is, $Q(t)$ is $2T$-periodic. (For a proof of the hint, see Chapter 8 of D.L. Lukes, Differential Equations: Classical to Controlled, Academic Press, 1982.)

Solution 5.22

The solution will be $T$-periodic for initial state $x_o$ if and only if $x_o$ satisfies (see text equation (32))

$$\left[\Phi^{-1}(t_o+T,t_o) - I\right]x_o = \int_{t_o}^{t_o+T}\Phi(t_o,\sigma)f(\sigma)\,d\sigma$$

This linear equation has a solution for $x_o$ if and only if

$$z_o^T\int_{t_o}^{t_o+T}\Phi(t_o,\sigma)f(\sigma)\,d\sigma = 0 \qquad (*)$$

for every nonzero vector $z_o$ that satisfies

$$\left[\Phi^{-1}(t_o+T,t_o) - I\right]^Tz_o = 0 \qquad (**)$$

The solution of the adjoint state equation can be written as

$$z(t) = \left[\Phi^{-1}(t,t_o)\right]^Tz_o$$

Then by Lemma 5.14, (**) is precisely the condition that $z(t)$ be $T$-periodic. Thus writing (*) in the form

$$0 = \int_{t_o}^{t_o+T}z_o^T\Phi(t_o,\sigma)f(\sigma)\,d\sigma = \int_{t_o}^{t_o+T}z^T(\sigma)f(\sigma)\,d\sigma$$

completes the proof.

Solution 5.24 Note $A = -A^T$, and from Example 5.9,

$$e^{At} = \begin{bmatrix} \cos t & \sin t \\ -\sin t & \cos t \end{bmatrix}$$

Therefore all solutions of the adjoint equation are periodic, with period of the form $k2\pi$, where $k$ is a positive integer. The forcing term has period $T = 2\pi/\omega$, where we assume $\omega > 0$. The rest of the analysis breaks down into 3 cases.

Case 1: If $\omega \ne 1, 1/2, 1/3, \ldots$ then the adjoint equation has no nonzero $T$-periodic solution, so the condition (Exercise 5.22)

$$\int_0^T z^T(\sigma)f(\sigma)\,d\sigma = 0 \qquad (+)$$

holds vacuously. Thus there will exist corresponding periodic solutions.

Case 2: If $\omega = 1$, then

$$\int_0^T z^T(\sigma)f(\sigma)\,d\sigma = \int_0^T z_o^Te^{A^T\sigma}f(\sigma)\,d\sigma = -z_{o1}\int_0^T\sin^2(\sigma)\,d\sigma + z_{o2}\int_0^T\cos\sigma\,\sin\sigma\,d\sigma$$

With $T = 2\pi$ the first integral is $\pi$ and the second is zero, so this is nonzero whenever $z_{o1} \ne 0$, and there is no periodic solution.

Case 3: If $\omega = 1/k$, $k = 2, 3, \ldots$, then since

$$\int_0^T\cos\sigma\,\sin(\sigma/k)\,d\sigma = \int_0^T\sin\sigma\,\sin(\sigma/k)\,d\sigma = 0$$

the condition (+) will hold, and there exist periodic solutions.

In summary, there exist periodic solutions for all $\omega > 0$ except $\omega = 1$.
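The trigonometric facts used in Cases 2 and 3 can be confirmed numerically. This is a minimal sketch with a hand-written composite Simpson rule (a hypothetical helper, not a library routine); $k = 2, \ldots, 5$ are sample values, with $T = 2\pi k$ for $\omega = 1/k$.

```python
import math

def simpson(f, a, b, n=20000):
    """Composite Simpson rule for f on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3

# Case 2 (omega = 1, T = 2*pi): the sin^2 integral equals pi, the cross term vanishes.
T = 2 * math.pi
print(simpson(lambda s: math.sin(s) ** 2, 0.0, T))
print(simpson(lambda s: math.cos(s) * math.sin(s), 0.0, T))

# Case 3 (omega = 1/k, T = 2*pi*k): both integrals vanish.
for k in range(2, 6):
    T = 2 * math.pi * k
    c = simpson(lambda s: math.cos(s) * math.sin(s / k), 0.0, T)
    d = simpson(lambda s: math.sin(s) * math.sin(s / k), 0.0, T)
    print(k, c, d)
```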


CHAPTER 6

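Solution 6.1 If the state equation is uniformly stable, then there exists a positive $\gamma$ such that for any $t_o$ and $x_o$ the corresponding solution satisfies

$$\|x(t)\| \le \gamma\|x_o\|\,, \qquad t \ge t_o$$

Given a positive $\varepsilon$, take $\delta = \varepsilon/\gamma$. Then, regardless of $t_o$, $\|x_o\| \le \delta$ implies

$$\|x(t)\| \le \gamma\delta = \varepsilon\,, \qquad t \ge t_o$$

Conversely, given a positive $\varepsilon$ suppose positive $\delta$ is such that, regardless of $t_o$, $\|x_o\| \le \delta$ implies $\|x(t)\| \le \varepsilon$, $t \ge t_o$. For any $t_a \ge t_o$ let $x_a$ be such that

$$\|x_a\| = 1\,, \qquad \|\Phi(t_a,t_o)x_a\| = \|\Phi(t_a,t_o)\|$$

Then $x_o = \delta x_a$ satisfies $\|x_o\| = \delta$, and the corresponding solution at $t = t_a$ satisfies

$$\|x(t_a)\| = \|\Phi(t_a,t_o)x_o\| = \delta\|\Phi(t_a,t_o)\| \le \varepsilon$$

Therefore

$$\|\Phi(t_a,t_o)\| \le \varepsilon/\delta$$

Such an $x_a$ can be selected for any $t_a$, $t_o$ such that $t_a \ge t_o$. Therefore

$$\|\Phi(t,t_o)\| \le \varepsilon/\delta$$

for all $t$ and $t_o$ with $t \ge t_o$, and we can take $\gamma = \varepsilon/\delta$ to obtain

$$\|x(t)\| = \|\Phi(t,t_o)x_o\| \le \|\Phi(t,t_o)\|\,\|x_o\| \le \gamma\|x_o\|\,, \qquad t \ge t_o$$

This implies uniform stability.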

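Solution 6.4 Using the fact that $A(t)$ commutes with its integral,

$$\Phi(t,\tau) = e^{\int_\tau^t A(\sigma)\,d\sigma} = I + \begin{bmatrix} t-\tau & e^{-(t-\tau)} \\ -e^{-(t-\tau)} & t-\tau \end{bmatrix} + \frac{1}{2!}\begin{bmatrix} t-\tau & e^{-(t-\tau)} \\ -e^{-(t-\tau)} & t-\tau \end{bmatrix}^2 + \cdots$$

For any fixed $\tau$, $\phi_{11}(t,\tau)$ clearly grows without bound as $t \to \infty$, and thus the state equation is not uniformly stable.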

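Solution 6.6 Using elementary properties of the norm,

$$\begin{aligned} \|\Phi(t,\tau)\| &= \left\|I + \int_\tau^t A(\sigma)\,d\sigma + \int_\tau^t A(\sigma_1)\int_\tau^{\sigma_1}A(\sigma_2)\,d\sigma_2\,d\sigma_1 + \cdots\right\| \\ &\le \|I\| + \left\|\int_\tau^t A(\sigma)\,d\sigma\right\| + \left\|\int_\tau^t A(\sigma_1)\int_\tau^{\sigma_1}A(\sigma_2)\,d\sigma_2\,d\sigma_1\right\| + \cdots \\ &\le 1 + \left|\int_\tau^t \|A(\sigma)\|\,d\sigma\right| + \left|\int_\tau^t \|A(\sigma_1)\|\left|\int_\tau^{\sigma_1}\|A(\sigma_2)\|\,d\sigma_2\right|d\sigma_1\right| + \cdots \end{aligned}$$

(Be careful of $t < \tau$.) Since $\|A(t)\| \le \alpha$ for all $t$,

$$\|\Phi(t,\tau)\| \le 1 + \alpha\left|\int_\tau^t 1\,d\sigma\right| + \alpha^2\left|\int_\tau^t\left|\int_\tau^{\sigma_1}1\,d\sigma_2\right|d\sigma_1\right| + \cdots = 1 + \alpha|t-\tau| + \alpha^2\frac{|t-\tau|^2}{2!} + \cdots$$

For $|t-\tau| \le \delta$,

$$\|\Phi(t,\tau)\| \le 1 + \alpha\delta + \frac{\alpha^2\delta^2}{2!} + \cdots = e^{\alpha\delta}$$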

Solution 6.8 See the proof of Theorem 15.2.

Solution 6.10 Write $\mathrm{Re}[\lambda] = -\eta$, where $\eta > 0$ by assumption, so that

$$|t\,e^{\lambda t}| = t\,e^{-\eta t}\,, \qquad t \ge 0$$

A simple maximization argument (setting the derivative to zero) gives

$$t\,e^{-\eta t} \le \frac{1}{\eta e} \triangleq \beta\,, \qquad t \ge 0$$

so that

$$|t\,e^{\lambda t}| \le \beta\,, \qquad t \ge 0$$

Using this bound we can write

$$|t\,e^{\lambda t}| = t\,e^{-\eta t} = t\,e^{-(\eta/2)t}e^{-(\eta/2)t} \le \frac{2}{\eta e}\,e^{-(\eta/2)t}\,, \qquad t \ge 0$$

Similarly,

$$|t^2e^{\lambda t}| = t^2e^{-\eta t} \le \frac{2}{\eta e}\,t\,e^{-(\eta/2)t} = \frac{2}{\eta e}\,t\,e^{-(\eta/4)t}e^{-(\eta/4)t} \le \frac{2}{\eta e}\cdot\frac{4}{\eta e}\,e^{-(\eta/4)t}\,, \qquad t \ge 0$$

and continuing we get, for any $j \ge 0$,

$$|t^je^{\lambda t}| \le \frac{2^{j+(j-1)+\cdots+1}}{(\eta e)^j}\,e^{-(\eta/2^j)t}\,, \qquad t \ge 0$$

Therefore

$$\int_0^\infty |t^je^{\lambda t}|\,dt \le \frac{2^{j+(j-1)+\cdots+1}}{(\eta e)^j}\int_0^\infty e^{-(\eta/2^j)t}\,dt = \frac{2^{j+(j-1)+\cdots+1}}{(\eta e)^j}\cdot\frac{2^j}{\eta} = \frac{2^{2j+(j-1)+\cdots+1}}{e^j\,|\mathrm{Re}[\lambda]|^{j+1}}$$
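A quick sanity check of these bounds is sketched below. It is illustrative only: the sample values of $\eta$ and $j$ and the sampling grid are arbitrary choices, and it uses the standard formula $\int_0^\infty t^je^{-\eta t}\,dt = j!/\eta^{j+1}$ for comparison with the bound.

```python
import math

def exponent(j):
    """The power of 2 in the bound: 2j + (j-1) + ... + 1 (equal to 0 when j = 0)."""
    return 2 * j + j * (j - 1) // 2

def integral_bound(j, eta):
    """Bound on the integral of |t^j e^{lambda t}| over [0, infinity), with eta = |Re[lambda]|."""
    return 2 ** exponent(j) / (math.e ** j * eta ** (j + 1))

def exact_integral(j, eta):
    """Exact value j! / eta^(j+1) of the integral of t^j e^{-eta t} over [0, infinity)."""
    return math.factorial(j) / eta ** (j + 1)

for eta in (0.5, 1.0, 3.0):
    # sample t e^{-eta t} on [0, 20] and compare with 1 / (eta e)
    grid_max = max(t * math.exp(-eta * t) for t in (0.001 * i for i in range(20001)))
    print(eta, grid_max, 1 / (eta * math.e))
    for j in range(6):
        print("  j =", j, exact_integral(j, eta), integral_bound(j, eta))
```

The bound is far from tight for larger $j$, which is harmless here: the argument only needs the integral to be finite.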

Solution 6.12 By Theorem 6.4 uniform stability is equivalent to existence of a finite constant $\gamma$ such that $\|e^{At}\| \le \gamma$ for all $t \ge 0$. Writing

$$e^{At} = \sum_{k=1}^m\sum_{j=1}^{\sigma_k}W_{kj}\frac{t^{j-1}}{(j-1)!}e^{\lambda_kt}$$

where $\lambda_1, \ldots, \lambda_m$ are the distinct eigenvalues of $A$, suppose

$$\begin{aligned} &\mathrm{Re}[\lambda_k] \le 0\,, \quad k = 1, \ldots, m \\ &\mathrm{Re}[\lambda_k] = 0 \text{ implies } \sigma_k = 1 \end{aligned} \qquad (*)$$

Since $t^{j-1}e^{\lambda_kt}$ is bounded if $\mathrm{Re}[\lambda_k] < 0$ (for any $j$), and $|e^{\lambda_kt}| = 1$ if $\mathrm{Re}[\lambda_k] = 0$, it is clear that $\|e^{At}\|$ is bounded for $t \ge 0$. Thus (*) is a sufficient condition for uniform stability.

A necessary condition for uniform stability is

$$\mathrm{Re}[\lambda_k] \le 0\,, \qquad k = 1, \ldots, m$$

For if $\mathrm{Re}[\lambda_k] > 0$ for some $k$, the proof of Theorem 6.2 shows that $\|e^{At}\|$ grows without bound as $t \to \infty$. The gap between this necessary condition and the sufficient condition is illustrated by the two cases

$$A = \begin{bmatrix} 0 & 0 \\ 0 & 0 \end{bmatrix}, \qquad A = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}$$

Both satisfy the necessary condition, neither satisfies the sufficient condition, and the first case is uniformly stable while the second case is not (unbounded solutions exist, as shown by easy computation of the transition matrix).

(It can be shown that a necessary and sufficient condition for uniform stability is that each eigenvalue of $A$ has nonpositive real part and any eigenvalue of $A$ with zero real part has algebraic multiplicity equal to its geometric multiplicity.)
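Both example matrices satisfy $A^2 = 0$, so $e^{At} = I + At$ exactly. The short Python sketch below (the helper name is hypothetical) makes the contrast concrete: the largest entry of $e^{At}$ stays at 1 for the zero matrix but equals $t$ (for $t \ge 1$) for the second matrix.

```python
def expm_nilpotent(A, t):
    """Return e^{At} = I + A t; valid only because A @ A = 0 for both examples."""
    n = len(A)
    return [[float(i == j) + A[i][j] * t for j in range(n)] for i in range(n)]

A_zero = [[0.0, 0.0], [0.0, 0.0]]    # first case: uniformly stable
A_jordan = [[0.0, 1.0], [0.0, 0.0]]  # second case: not uniformly stable

for t in (1.0, 10.0, 100.0):
    peak_zero = max(abs(v) for row in expm_nilpotent(A_zero, t) for v in row)
    peak_jordan = max(abs(v) for row in expm_nilpotent(A_jordan, t) for v in row)
    print(t, peak_zero, peak_jordan)
```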

Solution 6.14 Suppose $\gamma, \lambda > 0$ are such that

$$\|\Phi(t,t_o)\| \le \gamma e^{-\lambda(t-t_o)}$$

for all $t$, $t_o$ such that $t \ge t_o$. Then given any $x_o$, $t_o$, the corresponding solution at $t \ge t_o$ satisfies

$$\|x(t)\| = \|\Phi(t,t_o)x_o\| \le \|\Phi(t,t_o)\|\,\|x_o\| \le \gamma e^{-\lambda(t-t_o)}\|x_o\|$$

and the state equation is uniformly exponentially stable.

Now suppose the state equation is uniformly exponentially stable, so that there exist $\gamma, \lambda > 0$ such that

$$\|x(t)\| \le \gamma e^{-\lambda(t-t_o)}\|x_o\|\,, \qquad t \ge t_o$$

for any $x_o$ and $t_o$. Given any $t_o$ and $t_a \ge t_o$, choose $x_a$ such that

$$\|\Phi(t_a,t_o)x_a\| = \|\Phi(t_a,t_o)\|\,, \qquad \|x_a\| = 1$$

Then with $x_o = x_a$ the corresponding solution at $t_a$ satisfies

$$\|x(t_a)\| = \|\Phi(t_a,t_o)x_a\| = \|\Phi(t_a,t_o)\| \le \gamma e^{-\lambda(t_a-t_o)}$$

Since such an $x_a$ can be selected for any $t_o$ and $t_a \ge t_o$, we have

$$\|\Phi(t,\tau)\| \le \gamma e^{-\lambda(t-\tau)}$$

for all $t$, $\tau$ such that $t \ge \tau$, and the proof is complete.

Solution 6.18 The variable change $z(t) = P^{-1}(t)x(t)$ yields $\dot{z}(t) = 0$ if and only if

$$P^{-1}(t)A(t)P(t) - P^{-1}(t)\dot{P}(t) = 0$$

for all $t$. This clearly is equivalent to $\dot{P}(t) = A(t)P(t)$, which is equivalent to $\Phi_A(t,\tau) = P(t)P^{-1}(\tau)$. Now, if $P(t)$ is a Lyapunov transformation, that is $\|P(t)\| \le \rho < \infty$ and $|\det P(t)| \ge \eta > 0$ for all $t$, then

$$\|\Phi_A(t,\tau)\| \le \|P(t)\|\,\|P^{-1}(\tau)\| \le \|P(t)\|\frac{\|P(\tau)\|^{n-1}}{|\det P(\tau)|} \le \rho^n/\eta \triangleq \gamma$$

for all $t$ and $\tau$.

Conversely, suppose $\|\Phi_A(t,\tau)\| \le \gamma$ for all $t$ and $\tau$. Let $P(t) = \Phi_A(t,0)$. Then $\|P(t)\| \le \gamma$ and

$$\|P(t)\| \le \frac{\|P^{-1}(t)\|^{n-1}}{|\det P^{-1}(t)|} = \|P^{-1}(t)\|^{n-1}|\det P(t)|$$

for all $t$. Using $\|P(t)\| \ge 1/\|P^{-1}(t)\|$ gives

$$|\det P(t)| \ge \frac{1}{\|P^{-1}(t)\|^n}$$

and since $\|P^{-1}(t)\| = \|\Phi_A(0,t)\| \le \gamma$,

$$|\det P(t)| \ge \frac{1}{\gamma^n}$$

Thus $P(t)$ is a Lyapunov transformation, and clearly

$$P^{-1}(t)A(t)P(t) - P^{-1}(t)\dot{P}(t) = 0$$

for all $t$.

CHAPTER 7

Solution 7.3 Let $\hat{A} = FA$, and take $Q = F^{-1}$, which is positive definite since $F$ is positive definite. Then since $F$ is symmetric,

$$\hat{A}^TQ + Q\hat{A} = A^TFF^{-1} + F^{-1}FA = A^T + A < 0$$

This gives exponential stability by Theorem 7.4.

Solution 7.5 By our default assumptions, $a(t)$ is continuous. Since $Q$ is constant, symmetric, and positive definite, the first condition of Theorem 7.2 holds. Checking the second condition,

$$A^T(t)Q + QA(t) = \begin{bmatrix} -a(t) & -a(t)/2 \\ -a(t)/2 & -1 \end{bmatrix} \le 0$$

gives the requirements

$$a(t) \ge 0\,, \qquad 4a(t) \ge a^2(t)$$

Thus the state equation is uniformly stable if $a(t)$ is a continuous function satisfying $0 \le a(t) \le 4$ for all $t$.
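The requirement $0 \le a(t) \le 4$ is the usual principal-minor test: a symmetric 2×2 matrix is negative semidefinite exactly when both diagonal entries are nonpositive and its determinant is nonnegative. A minimal sketch of that test, applied to the matrix above for a few sample constant values of $a$ (helper names are hypothetical):

```python
def neg_semidefinite_2x2(p, q, r):
    """True when the symmetric matrix [[p, q], [q, r]] is negative semidefinite."""
    return p <= 0 and r <= 0 and p * r - q * q >= 0

def lyapunov_condition(a):
    """Test A^T Q + Q A = [[-a, -a/2], [-a/2, -1]] <= 0 for a constant value a."""
    return neg_semidefinite_2x2(-a, -a / 2, -1.0)

for a in (-0.5, 0.0, 1.0, 4.0, 4.5):
    print(a, lyapunov_condition(a))
```

Only the values inside $[0, 4]$ pass, matching the requirements $a \ge 0$ and $4a \ge a^2$.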

Solution 7.6 With

$$Q(t) = \begin{bmatrix} a(t) & 0 \\ 0 & 1 \end{bmatrix}, \qquad A^T(t)Q(t) + Q(t)A(t) + \dot{Q}(t) = \begin{bmatrix} \dot{a}(t) & 0 \\ 0 & -4 \end{bmatrix}$$

we need to assume that $a(t)$ is continuously differentiable and $\eta \le a(t) \le \rho$ for some positive constants $\eta$ and $\rho$ so that the first condition of Theorem 7.4 is satisfied. For the second condition we need to assume $\dot{a}(t) \le -\nu$, for some positive constant $\nu$. Unfortunately this implies, taking any $t_o$,

$$a(t) = a(t_o) + \int_{t_o}^t\dot{a}(\sigma)\,d\sigma \le a(t_o) + \nu t_o - \nu t\,, \qquad t \ge t_o$$

and for sufficiently large $t$ the positivity condition on $a(t)$ will be violated. Thus there is no $a(t)$ for which the given $Q(t)$ shows uniform exponential stability of the given state equation.

Solution 7.9 We need to assume that $a(t)$ is continuously differentiable. Consider

$$Q(t) - \eta I = \begin{bmatrix} 2a(t)+1-\eta & 1 \\ 1 & \dfrac{a(t)+1}{a(t)} - \eta \end{bmatrix}$$

Suppose there exists a small, positive constant $\eta$ such that

$$\eta \le a(t) \le 1/(2\eta)$$

for all $t$. Then

$$2a(t) + 1 - \eta \ge \eta + 1 > 1\,, \qquad \frac{a(t)+1}{a(t)} - \eta \ge 1 + \frac{1}{1/(2\eta)} - \eta = 1 + \eta > 1$$

and $Q(t) - \eta I \ge 0$, for all $t$, follows easily. Similarly, with $\rho = (2\eta+1)/\eta$ we can show $\rho I - Q(t) \ge 0$ using

$$\rho - 2a(t) - 1 \ge \frac{2\eta+1}{\eta} - \frac{2}{2\eta} - 1 = 1\,, \qquad \rho - \frac{a(t)+1}{a(t)} \ge \frac{2\eta+1}{\eta} - 1 - \frac{1}{\eta} = 1$$

Next consider

$$A^T(t)Q(t) + Q(t)A(t) + \dot{Q}(t) = \begin{bmatrix} 2\dot{a}(t) - 2a(t) & 0 \\ 0 & -\dfrac{\dot{a}(t)}{a^2(t)} - 2a(t) \end{bmatrix} \le -\nu I$$

This gives that for uniform exponential stability we also need existence of a small, positive constant $\nu$ such that

$$\nu a^2(t) - 2a^3(t) \le \dot{a}(t) \le a(t) - \nu/2$$

for all $t$. For example, $a(t) = 1$ satisfies these conditions.

Solution 7.11 Suppose that for every symmetric, positive-definite $M$ there exists a unique, symmetric, positive-definite $Q$ such that

$$A^TQ + QA + 2\mu Q = -M \qquad (*)$$

that is,

$$(A + \mu I)^TQ + Q(A + \mu I) = -M \qquad (**)$$

Then by the argument above Theorem 7.11 we conclude that all eigenvalues of $A + \mu I$ have negative real parts. That is, if

$$0 = \det[\lambda I - (A + \mu I)] = \det[(\lambda - \mu)I - A]$$

then $\mathrm{Re}[\lambda] < 0$. Since $\mu > 0$, this gives $\mathrm{Re}[\lambda - \mu] < -\mu$, that is, all eigenvalues of $A$ have real parts strictly less than $-\mu$.

Now suppose all eigenvalues of $A$ have real parts strictly less than $-\mu$. Then, as above, eigenvalues of $A + \mu I$ have negative real parts. Then by Theorem 7.11, given symmetric, positive-definite $M$ there exists a unique, symmetric, positive-definite $Q$ such that (**) holds, which implies (*) holds.

Solution 7.16 For arbitrary but fixed $t \ge 0$, let $x_a$ be such that

$$\|x_a\| = 1\,, \qquad \|e^{At}x_a\| = \|e^{At}\|$$

By Theorem 7.11 the unique solution of $QA + A^TQ = -M$ is the symmetric, positive-definite matrix

$$Q = \int_0^\infty e^{A^T\sigma}Me^{A\sigma}\,d\sigma$$

Thus we can write

$$\int_t^\infty x_a^Te^{A^T\sigma}Me^{A\sigma}x_a\,d\sigma \le \int_0^\infty x_a^Te^{A^T\sigma}Me^{A\sigma}x_a\,d\sigma = x_a^TQx_a \le \lambda_{\max}(Q) = \|Q\|$$

Also, using a change of integration variable from $\sigma$ to $\tau = \sigma - t$,

$$\int_t^\infty x_a^Te^{A^T\sigma}Me^{A\sigma}x_a\,d\sigma = \int_0^\infty x_a^Te^{A^T(t+\tau)}Me^{A(t+\tau)}x_a\,d\tau = x_a^Te^{A^Tt}Qe^{At}x_a \ge \lambda_{\min}(Q)\|e^{At}x_a\|^2 = \frac{\|e^{At}\|^2}{\|Q^{-1}\|}$$

Therefore

$$\frac{\|e^{At}\|^2}{\|Q^{-1}\|} \le \|Q\|$$

Since $t$ was arbitrary, this gives

$$\max_{t\ge0}\|e^{At}\| \le \sqrt{\|Q\|\,\|Q^{-1}\|}$$
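The bound can be illustrated numerically. The Python sketch below is a hypothetical example under stated assumptions: it picks one non-normal 2×2 matrix $A$ with eigenvalues −1 and −2 and $M = I$, solves $A^TQ + QA = -M$ as a linear system in the entries of $Q$ with a hand-written Gaussian elimination, uses the spectral norm (so $\|Q\| = \lambda_{\max}(Q)$ and $\|Q^{-1}\| = 1/\lambda_{\min}(Q)$), and compares the bound with $\|e^{At}\|$ sampled on a grid over $[0, 10]$. All helper names are illustrative.

```python
import math

def matmul(X, Y):
    """Product of two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def solve(K, rhs):
    """Gaussian elimination with partial pivoting for the square system K z = rhs."""
    m = len(K)
    M = [row[:] + [b] for row, b in zip(K, rhs)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            f = M[r][c] / M[c][c]
            M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    z = [0.0] * m
    for r in reversed(range(m)):
        z[r] = (M[r][m] - sum(M[r][k] * z[k] for k in range(r + 1, m))) / M[r][r]
    return z

def lyapunov(A, Mmat):
    """Solve A^T Q + Q A = -M; the unknown Q[p][q] is stored at index p*n + q."""
    n = len(A)
    K = [[0.0] * (n * n) for _ in range(n * n)]
    for i in range(n):
        for j in range(n):
            for p in range(n):
                for q in range(n):
                    K[i * n + j][p * n + q] = ((A[p][i] if q == j else 0.0)
                                               + (A[q][j] if p == i else 0.0))
    z = solve(K, [-Mmat[i][j] for i in range(n) for j in range(n)])
    return [z[i * n:(i + 1) * n] for i in range(n)]

def sym_eigs_2x2(S):
    """(smallest, largest) eigenvalue of a symmetric 2x2 matrix."""
    mean = (S[0][0] + S[1][1]) / 2
    rad = math.hypot((S[0][0] - S[1][1]) / 2, S[0][1])
    return mean - rad, mean + rad

def spectral_norm_2x2(E):
    """Largest singular value of E: square root of the largest eigenvalue of E^T E."""
    Et = [list(col) for col in zip(*E)]
    return math.sqrt(sym_eigs_2x2(matmul(Et, E))[1])

def expm(A, t, terms=30):
    """Approximate e^{At} by a truncated Taylor series with scaling and squaring."""
    n = len(A)
    norm = max(sum(abs(a) for a in row) for row in A) * abs(t)
    s = max(0, math.ceil(math.log2(norm)) + 1) if norm > 0 else 0
    Ah = [[a * t / 2 ** s for a in row] for row in A]
    E = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in E]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, Ah)]
        E = [[E[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    for _ in range(s):
        E = matmul(E, E)
    return E

A = [[-1.0, 10.0], [0.0, -2.0]]  # hypothetical non-normal example
Q = lyapunov(A, [[1.0, 0.0], [0.0, 1.0]])  # M = I
lam_min, lam_max = sym_eigs_2x2(Q)
bound = math.sqrt(lam_max / lam_min)  # sqrt(||Q|| ||Q^{-1}||) in the spectral norm
peak = max(spectral_norm_2x2(expm(A, 0.01 * k)) for k in range(1001))  # grid on [0, 10]
print(bound, peak)
```

A non-normal $A$ is chosen deliberately, since for such matrices $\|e^{At}\|$ can exceed 1 before decaying, and the bound has to account for that transient.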

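Solution 7.17 Let $F = A + (\mu-\varepsilon)I$. Then $\|F\| \le \|A\| + \mu - \varepsilon$, all eigenvalues of $F$ have real parts less than $-\varepsilon$, and

$$e^{Ft} = e^{At}e^{(\mu-\varepsilon)t}$$

Thus

$$e^{At} = e^{-(\mu-\varepsilon)t}e^{Ft} \qquad (*)$$

By Theorem 7.11 the unique solution of $F^TQ + QF = -I$ is

$$Q = \int_0^\infty e^{F^T\sigma}e^{F\sigma}\,d\sigma$$

For any $n \times 1$ vector $x$,

$$\begin{aligned} \frac{d}{d\sigma}\left[x^Te^{F^T\sigma}e^{F\sigma}x\right] &= x^Te^{F^T\sigma}\left[F^T + F\right]e^{F\sigma}x \\ &\ge -\|F^T + F\|\,x^Te^{F^T\sigma}e^{F\sigma}x \qquad \text{(Exercise 1.9)} \\ &\ge -2(\|A\| + \mu - \varepsilon)\,x^Te^{F^T\sigma}e^{F\sigma}x \end{aligned}$$

Thus for any $t \ge 0$,

$$\begin{aligned} -x^Te^{F^Tt}e^{Ft}x &= \int_t^\infty\frac{d}{d\sigma}\left[x^Te^{F^T\sigma}e^{F\sigma}x\right]d\sigma \\ &\ge -2(\|A\| + \mu - \varepsilon)\int_t^\infty x^Te^{F^T\sigma}e^{F\sigma}x\,d\sigma \\ &\ge -2(\|A\| + \mu - \varepsilon)\,x^TQx \end{aligned}$$

Therefore

$$x^Te^{F^Tt}e^{Ft}x \le 2(\|A\| + \mu - \varepsilon)\,x^TQx\,, \qquad t \ge 0$$

which gives

$$\|e^{Ft}\| \le \sqrt{2(\|A\| + \mu - \varepsilon)\|Q\|}\,, \qquad t \ge 0$$

Thus the desired inequality follows from (*).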

Solution 7.19 To show uniform exponential stability of $A(t)$, write the 1,2-entry of $A(t)$ as $a(t)$, and let $Q(t) = q(t)I$, where

$$q(t) = \begin{cases} 2 + e^{-2t}\,, & t \ge 1/2 \\ q_{1/2}(t)\,, & -1/2 < t < 1/2 \\ 3\,, & t \le -1/2 \end{cases}$$

Here $q_{1/2}(t)$ is a continuously-differentiable ‘patch’ satisfying $2 \le q_{1/2}(t) \le 3$ for $-1/2 < t < 1/2$, and another condition to be specified below. Then we have $2I \le Q(t) \le 3I$ for all $t$. Next consider

$$A^T(t)Q(t) + Q(t)A(t) + \dot{Q}(t) = \begin{bmatrix} -2q(t) + \dot{q}(t) & a(t)q(t) \\ a(t)q(t) & -6q(t) + \dot{q}(t) \end{bmatrix} \le -\nu I$$

We choose $\nu = 1$ and show that

$$\begin{bmatrix} -2q(t) + \dot{q}(t) + 1 & a(t)q(t) \\ a(t)q(t) & -6q(t) + \dot{q}(t) + 1 \end{bmatrix} \le 0$$

for all $t$. With $t < -1/2$ or $t > 1/2$ it is easy to show that $q(t) - \dot{q}(t) - 1 \ge 0$, and a patch function can be sketched such that this inequality is satisfied for $-1/2 < t < 1/2$. Then, for all $t$,

$$-2q(t) + \dot{q}(t) + 1 \le -q(t) \le 0\,, \qquad -6q(t) + \dot{q}(t) + 1 \le -5q(t) \le 0$$

$$\left[-2q(t) + \dot{q}(t) + 1\right]\left[-6q(t) + \dot{q}(t) + 1\right] - a^2(t)q^2(t) \ge \left[5 - a^2(t)\right]q^2(t) \ge 4q^2(t) \ge 0$$

Thus we have proven uniform exponential stability.

To show $A^T(t)$ is not uniformly exponentially stable, write the state equation as two scalar equations to compute

$$\Phi_{A^T(t)}(t,0) = \begin{bmatrix} e^{-t} & 0 \\ (e^t - e^{-3t})/4 & e^{-3t} \end{bmatrix}, \qquad t \ge 0$$

and the existence of unbounded solutions is clear.

Solution 7.20 Using the characterization of uniform stability in Exercise 6.1, given $\varepsilon > 0$, let $\delta = \beta^{-1}(\alpha(\varepsilon))$. Then $\delta > 0$, since $\alpha(\varepsilon) > 0$, and the inverse exists since $\beta(\cdot)$ is strictly increasing. Then for any $t_o$, and any $x_o$ such that $\|x_o\| \le \delta$, the corresponding solution is such that

$$v(t,x(t)) \le v(t_o,x_o) \le \beta(\|x_o\|) \le \beta(\delta) = \alpha(\varepsilon)\,, \qquad t \ge t_o$$

Therefore

$$\alpha(\|x(t)\|) \le v(t,x(t)) \le \alpha(\varepsilon)\,, \qquad t \ge t_o$$

But since $\alpha(\cdot)$ is strictly increasing, this gives $\|x(t)\| \le \varepsilon$, $t \ge t_o$, and thus the state equation is uniformly stable.

CHAPTER 8

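Solution 8.3 No. The matrix

$$A = \begin{bmatrix} -2 & \sqrt{8} \\ 0 & -1 \end{bmatrix}$$

has negative eigenvalues, but

$$A + A^T = \begin{bmatrix} -4 & \sqrt{8} \\ \sqrt{8} & -2 \end{bmatrix}$$

has an eigenvalue at zero.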
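The counterexample can be verified by computing eigenvalues from the trace and determinant, as in the minimal sketch below (the helper name is hypothetical). It should report eigenvalues −1 and −2 for $A$, and 0 and −6 (up to round-off) for $A + A^T$.

```python
import cmath
import math

def eig_2x2(M):
    """Eigenvalues of a 2x2 matrix as roots of s^2 - (trace) s + det."""
    tr = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2

r8 = math.sqrt(8)
A = [[-2.0, r8], [0.0, -1.0]]
S = [[A[i][j] + A[j][i] for j in range(2)] for i in range(2)]  # A + A^T
print(eig_2x2(A))  # eigenvalues -1 and -2
print(eig_2x2(S))  # eigenvalues 0 and -6, up to round-off
```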

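Solution 8.6 Viewing $F(t)x(t)$ as a forcing term, for any $t_o$, $x_o$, and $t \ge t_o$ we can write

$$x(t) = \Phi_{A+F}(t,t_o)x_o = \Phi_A(t,t_o)x_o + \int_{t_o}^t\Phi_A(t,\sigma)F(\sigma)x(\sigma)\,d\sigma$$

which gives, for suitable constants $\gamma, \lambda > 0$,

$$\|x(t)\| \le \gamma e^{-\lambda(t-t_o)}\|x_o\| + \int_{t_o}^t\gamma e^{-\lambda(t-\sigma)}\|F(\sigma)\|\,\|x(\sigma)\|\,d\sigma$$

Thus

$$e^{\lambda t}\|x(t)\| \le \gamma e^{\lambda t_o}\|x_o\| + \int_{t_o}^t\gamma\|F(\sigma)\|\,e^{\lambda\sigma}\|x(\sigma)\|\,d\sigma$$

and the Gronwall-Bellman inequality (Lemma 3.2) implies

$$e^{\lambda t}\|x(t)\| \le \gamma e^{\lambda t_o}\|x_o\|\,e^{\int_{t_o}^t\gamma\|F(\sigma)\|\,d\sigma}$$

Therefore

$$\|x(t)\| \le \gamma e^{-\lambda(t-t_o)}e^{\int_{t_o}^t\gamma\|F(\sigma)\|\,d\sigma}\|x_o\| \le \gamma e^{-\lambda(t-t_o)}e^{\int_{t_o}^\infty\gamma\|F(\sigma)\|\,d\sigma}\|x_o\| \le \gamma e^{\gamma\beta}e^{-\lambda(t-t_o)}\|x_o\|$$

and we conclude the desired uniform exponential stability.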

Solution 8.8 We can follow the proof of Theorem 8.7 (first and last portions) to show that the solution

$$Q(t) = \int_0^\infty e^{A^T(t)\sigma}e^{A(t)\sigma}\,d\sigma$$

of

$$A^T(t)Q(t) + Q(t)A(t) = -I$$

is continuously-differentiable and satisfies, for all $t$,

$$\eta I \le Q(t) \le \rho I$$

where $\eta$ and $\rho$ are positive constants. Then with

$$F(t) = A(t) - \tfrac{1}{2}Q^{-1}(t)\dot{Q}(t)$$

an easy calculation shows

$$F^T(t)Q(t) + Q(t)F(t) + \dot{Q}(t) = A^T(t)Q(t) + Q(t)A(t) = -I$$

Thus

$$\dot{x}(t) = F(t)x(t)$$

is uniformly exponentially stable by Theorem 7.4.

Solution 8.9 As in Exercise 8.8 we have, for all $t$,

$$\eta I \le Q(t) \le \rho I$$

which implies

$$\|Q^{-1}(t)\| \le \frac{1}{\eta}$$

Also, by the middle portion of the proof of Theorem 8.7,

$$\|\dot{Q}(t)\| \le 2\|\dot{A}(t)\|\,\|Q(t)\|^2$$

Therefore

$$\left\|\tfrac{1}{2}Q^{-1}(t)\dot{Q}(t)\right\| \le \frac{\beta\rho^2}{\eta}$$

for all $t$. Write

$$\dot{x}(t) = A(t)x(t) = \left[A(t) - \tfrac{1}{2}Q^{-1}(t)\dot{Q}(t)\right]x(t) + \tfrac{1}{2}Q^{-1}(t)\dot{Q}(t)x(t) \triangleq F(t)x(t) + \tfrac{1}{2}Q^{-1}(t)\dot{Q}(t)x(t)$$

Then the complete solution formula gives

$$x(t) = \Phi_F(t,t_o)x_o + \int_{t_o}^t\Phi_F(t,\sigma)\tfrac{1}{2}Q^{-1}(\sigma)\dot{Q}(\sigma)x(\sigma)\,d\sigma$$

and the result of Exercise 8.8 implies that there exist positive constants $\gamma$, $\lambda$ such that, for any $t_o$ and $t \ge t_o$,

$$\|x(t)\| \le \gamma e^{-\lambda(t-t_o)}\|x_o\| + \int_{t_o}^t\gamma e^{-\lambda(t-\sigma)}\frac{\beta\rho^2}{\eta}\|x(\sigma)\|\,d\sigma$$

Therefore

$$e^{\lambda t}\|x(t)\| \le \gamma e^{\lambda t_o}\|x_o\| + \int_{t_o}^t\frac{\gamma\beta\rho^2}{\eta}e^{\lambda\sigma}\|x(\sigma)\|\,d\sigma$$

and the Gronwall-Bellman inequality (Lemma 3.2) implies

$$e^{\lambda t}\|x(t)\| \le \gamma e^{\lambda t_o}\|x_o\|\,e^{\int_{t_o}^t\gamma\beta\rho^2/\eta\,d\sigma}$$

Thus

$$\|x(t)\| \le \gamma e^{-(\lambda - \gamma\beta\rho^2/\eta)(t-t_o)}\|x_o\|$$

Now, writing the left side as $\|\Phi_A(t,t_o)x_o\|$ and for any $t_o$ and $t \ge t_o$ choosing the appropriate unity-norm $x_o$ gives

$$\|\Phi_A(t,t_o)\| \le \gamma e^{-(\lambda - \gamma\beta\rho^2/\eta)(t-t_o)}$$

For $\beta$ sufficiently small this gives the desired uniform exponential stability. (Note that Theorem 8.6 also can be used to conclude that uniform exponential stability of $\dot{x}(t) = F(t)x(t)$ implies uniform exponential stability of

$$\dot{x}(t) = \left[F(t) + \tfrac{1}{2}Q^{-1}(t)\dot{Q}(t)\right]x(t) = A(t)x(t)$$

for $\beta$ sufficiently small.)

Solution 8.10 With $F(t) = A(t) + (\mu/2)I$ we have that $\|F(t)\| \le \alpha + \mu/2$, $\dot{F}(t) = \dot{A}(t)$, and the eigenvalues of $F(t)$ satisfy $\mathrm{Re}[\lambda_{F(t)}] \le -\mu/2$. The unique solution of

$$F^T(t)Q(t) + Q(t)F(t) = -I$$

is

$$Q(t) = \int_0^\infty e^{F^T(t)\sigma}e^{F(t)\sigma}\,d\sigma$$

As in the proof of Theorem 8.7, there is a constant $\rho$ such that $\|Q(t)\| \le \rho$ for all $t$. Now, for any $n \times 1$ vector $z$,

$$\frac{d}{d\sigma}\left[z^Te^{F^T(t)\sigma}e^{F(t)\sigma}z\right] = z^Te^{F^T(t)\sigma}\left[F^T(t) + F(t)\right]e^{F(t)\sigma}z \ge -(2\alpha + \mu)\,z^Te^{F^T(t)\sigma}e^{F(t)\sigma}z$$

Thus for any $\tau \ge 0$,

$$\begin{aligned} -z^Te^{F^T(t)\tau}e^{F(t)\tau}z &= \int_\tau^\infty\frac{d}{d\sigma}\left[z^Te^{F^T(t)\sigma}e^{F(t)\sigma}z\right]d\sigma \\ &\ge -(2\alpha + \mu)\int_\tau^\infty z^Te^{F^T(t)\sigma}e^{F(t)\sigma}z\,d\sigma \\ &\ge -(2\alpha + \mu)\int_0^\infty z^Te^{F^T(t)\sigma}e^{F(t)\sigma}z\,d\sigma \\ &\ge -(2\alpha + \mu)\,z^TQ(t)z \end{aligned}$$

Thus

$$\left\|e^{F^T(t)\tau}e^{F(t)\tau}\right\| \le (2\alpha + \mu)\|Q(t)\|\,, \qquad \tau \ge 0$$

and using

$$e^{F(t)\tau} = e^{A(t)\tau}e^{(\mu/2)\tau}\,, \qquad \tau \ge 0$$

gives

$$\|e^{A(t)\tau}\| \le \sqrt{(2\alpha + \mu)\rho}\;e^{-(\mu/2)\tau}\,, \qquad \tau \ge 0$$

Solution 8.11 Write (the chain rule is valid since $u(t)$ is a scalar)

$$\dot{q}(t) = A^{-1}(u(t))\frac{dA}{du}(u(t))\,\dot{u}(t)\,A^{-1}(u(t))b(u(t)) - A^{-1}(u(t))\frac{db}{du}(u(t))\,\dot{u}(t) \triangleq -\hat{B}(t)\dot{u}(t)$$

Then

$$\begin{aligned} \dot{x}(t) &= A(u(t))x(t) + b(u(t)) \\ &= A(u(t))\left[x(t) - q(t)\right] + A(u(t))q(t) + b(u(t)) \\ &= A(u(t))\left[x(t) - q(t)\right] \end{aligned}$$

gives

$$\frac{d}{dt}\left[x(t) - q(t)\right] = A(u(t))\left[x(t) - q(t)\right] + \hat{B}(t)\dot{u}(t) \qquad (*)$$

Since

$$\left\|\frac{d}{dt}A(u(t))\right\| = \left\|\frac{dA}{du}(u(t))\,\dot{u}(t)\right\| \le \left\|\frac{dA}{du}(u(t))\right\|\,|\dot{u}(t)|$$

we can conclude from Theorem 8.7 that for $\delta$ sufficiently small, and $u(t)$ such that $|\dot{u}(t)| \le \delta$ for all $t$, there exist positive constants $\gamma$ and $\eta$ (depending on $u(t)$) such that

$$\|\Phi_{A(u(t))}(t,\sigma)\| \le \gamma e^{-\eta(t-\sigma)}\,, \qquad t \ge \sigma \ge 0$$

But the smoothness assumptions on $A(\cdot)$ and $b(\cdot)$ and the bounds on $u(t)$ also give that there exists a positive constant $\beta$ such that $\|\hat{B}(t)\| \le \beta$ for $t \ge 0$. Thus the solution formula for (*) gives

$$\|x(t) - q(t)\| \le \gamma\|x(0) - q(0)\| + \gamma\beta\delta/\eta\,, \qquad t \ge 0$$

for $u(t)$ as above, and the claimed result follows.

CHAPTER 9

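Solution 9.7 Write

$$\begin{aligned} \begin{bmatrix} B & (A-\beta I)B & (A-\beta I)^2B & \cdots \end{bmatrix} &= \begin{bmatrix} B & AB - \beta B & A^2B - 2\beta AB + \beta^2B & \cdots \end{bmatrix} \\ &= \begin{bmatrix} B & AB & A^2B & \cdots \end{bmatrix}\begin{bmatrix} I_m & -\beta I_m & \beta^2I_m & \cdots \\ 0 & I_m & -2\beta I_m & \cdots \\ 0 & 0 & I_m & \cdots \\ \vdots & \vdots & \vdots & \ddots \end{bmatrix} \end{aligned}$$

The block upper-triangular matrix on the right has identity diagonal blocks, so it is invertible, and clearly the two controllability matrices have the same rank. (The solution is even easier using rank tests from Chapter 13.)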
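The rank equality can be spot-checked with exact arithmetic. In this illustrative sketch the matrices and the shift $\beta = 5/2$ are hypothetical choices, and `rank` is a small Gaussian elimination over `fractions.Fraction` written for the example. One pair is controllable; the second is not, which shows the rank is also preserved when it is less than $n$.

```python
from fractions import Fraction

def matmul(X, Y):
    """Matrix product for lists of rows (entries may be ints or Fractions)."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def rank(M):
    """Rank by Gaussian elimination over the rationals (exact, no round-off)."""
    R = [[Fraction(v) for v in row] for row in M]
    r = 0
    for c in range(len(R[0])):
        piv = next((i for i in range(r, len(R)) if R[i][c] != 0), None)
        if piv is None:
            continue
        R[r], R[piv] = R[piv], R[r]
        for i in range(len(R)):
            if i != r and R[i][c] != 0:
                f = R[i][c] / R[r][c]
                R[i] = [x - f * y for x, y in zip(R[i], R[r])]
        r += 1
        if r == len(R):
            break
    return r

def ctrb(A, B):
    """Controllability matrix [B, AB, ..., A^{n-1} B]."""
    blocks, blk = [], B
    for _ in range(len(A)):
        blocks.append(blk)
        blk = matmul(A, blk)
    return [sum((b[i] for b in blocks), []) for i in range(len(A))]

def shift(A, beta):
    """Return A - beta I."""
    return [[A[i][j] - (beta if i == j else 0) for j in range(len(A))] for i in range(len(A))]

beta = Fraction(5, 2)  # arbitrary shift
A1, B1 = [[0, 1, 0], [0, 0, 1], [-1, -2, -3]], [[0], [0], [1]]  # hypothetical controllable pair
A2, B2 = [[1, 0, 0], [0, 2, 0], [0, 0, 3]], [[1], [1], [0]]      # hypothetical uncontrollable pair
for A, B in ((A1, B1), (A2, B2)):
    print(rank(ctrb(A, B)), rank(ctrb(shift(A, beta), B)))
```

Exact rational arithmetic avoids the tolerance choices that a floating-point rank test would require.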

Solution 9.8 Since $A$ has negative-real-part eigenvalues,

$$Q = \int_0^\infty e^{At}BB^Te^{A^Tt}\,dt$$

is well defined, symmetric, and

$$AQ + QA^T = \int_0^\infty\left[Ae^{At}BB^Te^{A^Tt} + e^{At}BB^Te^{A^Tt}A^T\right]dt = \int_0^\infty\frac{d}{dt}\left[e^{At}BB^Te^{A^Tt}\right]dt = -BB^T$$

Also it is clear that $Q$ is positive semidefinite. If it is not positive definite, then for some nonzero, $n \times 1$ $x$,

$$0 = x^TQx = \int_0^\infty x^Te^{At}BB^Te^{A^Tt}x\,dt = \int_0^\infty\|x^Te^{At}B\|^2\,dt$$

Thus $x^Te^{At}B = 0$ for all $t \ge 0$, and it follows that

$$0 = \left.\frac{d^j}{dt^j}\left[x^Te^{At}B\right]\right|_{t=0} = x^TA^jB$$

for $j = 0, 1, 2, \ldots$. But this implies

$$x^T\begin{bmatrix} B & AB & \cdots & A^{n-1}B \end{bmatrix} = 0$$

which contradicts the controllability hypothesis. Thus $Q$ is positive definite.
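The Lyapunov-equation characterization of $Q$ can be illustrated numerically. This minimal sketch assumes a hypothetical 2×2 matrix $A$ with eigenvalues −1 and −2, solves $AQ + QA^T = -BB^T$ as a linear system in the entries of $Q$ with a hand-written Gaussian elimination, and checks positive definiteness for a controllable $B$. For an uncontrollable $B$ (an eigenvector of $A$) the resulting $Q$ is singular, its determinant being zero up to round-off.

```python
def solve(K, rhs):
    """Gaussian elimination with partial pivoting for the square system K z = rhs."""
    m = len(K)
    M = [row[:] + [b] for row, b in zip(K, rhs)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, m):
            f = M[r][c] / M[c][c]
            M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    z = [0.0] * m
    for r in reversed(range(m)):
        z[r] = (M[r][m] - sum(M[r][k] * z[k] for k in range(r + 1, m))) / M[r][r]
    return z

def gramian_lyapunov(A, B):
    """Solve A Q + Q A^T = -B B^T; the unknown Q[p][q] is stored at index p*n + q."""
    n, m = len(A), len(B[0])
    K = [[0.0] * (n * n) for _ in range(n * n)]
    for i in range(n):
        for j in range(n):
            for p in range(n):
                for q in range(n):
                    K[i * n + j][p * n + q] = ((A[i][p] if q == j else 0.0)
                                               + (A[j][q] if p == i else 0.0))
    BBt = [[sum(B[i][k] * B[j][k] for k in range(m)) for j in range(n)] for i in range(n)]
    z = solve(K, [-BBt[i][j] for i in range(n) for j in range(n)])
    return [z[i * n:(i + 1) * n] for i in range(n)]

def positive_definite_2x2(Q):
    """Sylvester's criterion for a symmetric 2x2 matrix."""
    return Q[0][0] > 0 and Q[0][0] * Q[1][1] - Q[0][1] * Q[1][0] > 0

A = [[0.0, 1.0], [-2.0, -3.0]]  # hypothetical example, eigenvalues -1 and -2
B_ctrb = [[0.0], [1.0]]          # [B, AB] has rank 2
B_unctrb = [[1.0], [-1.0]]       # eigenvector of A, so [B, AB] has rank 1
for B in (B_ctrb, B_unctrb):
    Q = gramian_lyapunov(A, B)
    print(Q, positive_definite_2x2(Q))
```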

Solution 9.9 Suppose $\lambda$ is an eigenvalue of $A$, and $p$ is a corresponding left eigenvector. Then $p \ne 0$, and

$$p^TA = \lambda p^T$$

This implies both

$$p^HA = \bar{\lambda}p^H\,, \qquad A^Tp = \lambda p$$

Now suppose $Q$ is as claimed. Then

$$p^HAQp + p^HQA^Tp = \bar{\lambda}p^HQp + \lambda p^HQp = -p^HBB^Tp$$

that is,

$$2\,\mathrm{Re}[\lambda]\,p^HQp = -p^HBB^Tp \qquad (*)$$

This gives $\mathrm{Re}[\lambda] \le 0$ since $Q$ is positive definite. Now suppose $\mathrm{Re}[\lambda] = 0$. Then (*) gives $p^HB = 0$. Also, for $j = 1, 2, \ldots$,

$$p^HA^jB = \bar{\lambda}p^HA^{j-1}B = \cdots = \bar{\lambda}^jp^HB = 0$$

Thus

$$p^H\begin{bmatrix} B & AB & \cdots & A^{n-1}B \end{bmatrix} = 0$$

which contradicts the controllability assumption. Therefore $\mathrm{Re}[\lambda] < 0$.

Solution 9.10 Let

$$W_y(t_o,t_f) \triangleq \int_{t_o}^{t_f}C(t_f)\Phi(t_f,t)B(t)B^T(t)\Phi^T(t_f,t)C^T(t_f)\,dt$$

If $W_y(t_o,t_f)$ is invertible, given any $x(t_o) = x_o$ choose

$$u(t) = -B^T(t)\Phi^T(t_f,t)C^T(t_f)W_y^{-1}(t_o,t_f)C(t_f)\Phi(t_f,t_o)x_o$$

Then the corresponding complete solution of the state equation gives

$$\begin{aligned} y(t_f) &= C(t_f)\Phi(t_f,t_o)x_o - \int_{t_o}^{t_f}C(t_f)\Phi(t_f,\sigma)B(\sigma)B^T(\sigma)\Phi^T(t_f,\sigma)C^T(t_f)\,d\sigma\;W_y^{-1}(t_o,t_f)C(t_f)\Phi(t_f,t_o)x_o \\ &= 0 \end{aligned}$$

and we have shown output controllability on $[t_o,t_f]$.

Now suppose the state equation is output controllable on $[t_o,t_f]$, but that $W_y(t_o,t_f)$ is not invertible. Then there exists a $p \times 1$ vector $y_a \ne 0$ such that $y_a^TW_y(t_o,t_f)y_a = 0$. Using by now familiar arguments, this gives

$$y_a^TC(t_f)\Phi(t_f,t)B(t) = 0\,, \qquad t \in [t_o,t_f]$$

Consider the initial state

$$x_o = \Phi(t_o,t_f)C^T(t_f)\left[C(t_f)C^T(t_f)\right]^{-1}y_a$$

which is well defined and nonzero since $\mathrm{rank}\,C(t_f) = p$. There exists an input $u_a(t)$ such that

$$\begin{aligned} 0 &= C(t_f)\Phi(t_f,t_o)x_o + \int_{t_o}^{t_f}C(t_f)\Phi(t_f,\sigma)B(\sigma)u_a(\sigma)\,d\sigma \\ &= y_a + \int_{t_o}^{t_f}C(t_f)\Phi(t_f,\sigma)B(\sigma)u_a(\sigma)\,d\sigma \end{aligned}$$

Premultiplying by $y_a^T$ gives

$$0 = y_a^Ty_a$$

This contradicts $y_a \ne 0$, and thus $W_y(t_o,t_f)$ is invertible.

The rank assumption on $C(t_f)$ is needed in the necessity proof to guarantee that $x_o$ is well defined. For $m = p = 1$, invertibility of $W_y(t_o,t_f)$ is equivalent to existence of a $t_a \in (t_o,t_f)$ such that

$$C(t_f)\Phi(t_f,t_a)B(t_a) \ne 0$$

That is, there exists a $t_a \in (t_o,t_f)$ such that the output response at $t_f$ to an impulse input at $t_a$ is nonzero.

Solution 9.11 From Exercise 9.10, since $\mathrm{rank}\,C = p$, the state equation is output controllable if and only if for some fixed $t_f > 0$,

$$W_y \triangleq \int_0^{t_f}Ce^{A(t_f-t)}BB^Te^{A^T(t_f-t)}C^T\,dt$$

is invertible. We will show this holds if and only if

$$\mathrm{rank}\begin{bmatrix} CB & CAB & \cdots & CA^{n-1}B \end{bmatrix} = p$$

by showing equivalence of the negations. If $W_y$ is not invertible, there exists a nonzero $p \times 1$ vector $y_a$ such that $y_a^TW_yy_a = 0$. Thus

$$y_a^TCe^{A(t_f-t)}B = 0\,, \qquad t \in [0,t_f]$$

Differentiating repeatedly, and evaluating at $t = t_f$ gives

$$y_a^TCA^jB = 0\,, \qquad j = 0, 1, \ldots$$

Thus

$$y_a^T\begin{bmatrix} CB & CAB & \cdots & CA^{n-1}B \end{bmatrix} = 0$$

and this implies

$$\mathrm{rank}\begin{bmatrix} CB & CAB & \cdots & CA^{n-1}B \end{bmatrix} < p$$

Conversely, if the rank condition fails, then there exists a nonzero $y_a$ such that $y_a^TCA^jB = 0$, $j = 0, \ldots, n-1$. Then

$$y_a^TCe^{A(t_f-t)}B = y_a^TC\sum_{k=0}^{n-1}\alpha_k(t_f-t)A^kB = 0\,, \qquad t \in [0,t_f]$$

Therefore $y_a^TW_yy_a = 0$, which implies that $W_y$ is not invertible.

For $m = p = 1$ argue as in Solution 9.10 to show that a linear state equation is output controllable if and only if its impulse response (equivalently, transfer function) is not identically zero.
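The rank test lends itself to exact computation. The sketch below is illustrative: $A$, $B$ and the two output matrices are hypothetical (each with $\mathrm{rank}\,C = p = 2$), and `rank` is a fraction-based Gaussian elimination written for the example. The pair $(A, B)$ is deliberately not controllable, to show that output controllability can still hold or fail depending on $C$.

```python
from fractions import Fraction

def matmul(X, Y):
    """Matrix product for lists of rows (entries may be ints or Fractions)."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def rank(M):
    """Rank by Gaussian elimination over the rationals (exact, no round-off)."""
    R = [[Fraction(v) for v in row] for row in M]
    r = 0
    for c in range(len(R[0])):
        piv = next((i for i in range(r, len(R)) if R[i][c] != 0), None)
        if piv is None:
            continue
        R[r], R[piv] = R[piv], R[r]
        for i in range(len(R)):
            if i != r and R[i][c] != 0:
                f = R[i][c] / R[r][c]
                R[i] = [x - f * y for x, y in zip(R[i], R[r])]
        r += 1
        if r == len(R):
            break
    return r

def output_ctrb(A, B, C):
    """The p x nm matrix [CB, CAB, ..., CA^{n-1} B]."""
    blocks, blk = [], B
    for _ in range(len(A)):
        blocks.append(matmul(C, blk))
        blk = matmul(A, blk)
    return [sum((b[i] for b in blocks), []) for i in range(len(C))]

# Hypothetical example: (A, B) is not controllable; both C matrices have rank p = 2.
A = [[-1, 0, 0], [0, -2, 0], [0, 0, -3]]
B = [[1], [1], [0]]
C_yes = [[1, 0, 0], [0, 1, 0]]
C_no = [[1, 0, 0], [0, 0, 1]]
print(rank(output_ctrb(A, B, C_yes)))  # equals p = 2: output controllable
print(rank(output_ctrb(A, B, C_no)))   # less than p: not output controllable
```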

Solution 9.17 Beginning with

$$\begin{aligned} y(t) &= c(t)x(t) \\ \dot{y}(t) &= \dot{c}(t)x(t) + c(t)\dot{x}(t) \\ &= \left[\dot{c}(t) + c(t)A(t)\right]x(t) + c(t)b(t)u(t) \\ &= L_1(t)x(t) + L_0(t)b(t)u(t) \end{aligned}$$

it is easy to show by induction that

$$y^{(k)}(t) = L_k(t)x(t) + \sum_{j=0}^{k-1}\frac{d^{k-j-1}}{dt^{k-j-1}}\left[L_j(t)b(t)u(t)\right], \qquad k = 1, 2, \ldots$$

Now if

$$L_n(t)M^{-1}(t) \triangleq \begin{bmatrix} \alpha_0(t) & \alpha_1(t) & \cdots & \alpha_{n-1}(t) \end{bmatrix}$$

then

$$\sum_{i=0}^{n-1}\alpha_i(t)L_i(t) = \begin{bmatrix} \alpha_0(t) & \cdots & \alpha_{n-1}(t) \end{bmatrix}\begin{bmatrix} L_0(t) \\ \vdots \\ L_{n-1}(t) \end{bmatrix} = L_n(t)$$

Thus we can write

$$\begin{aligned} y^{(n)}(t) - \sum_{i=0}^{n-1}\alpha_i(t)y^{(i)}(t) &= L_n(t)x(t) + \sum_{j=0}^{n-1}\frac{d^{n-j-1}}{dt^{n-j-1}}\left[L_j(t)b(t)u(t)\right] \\ &\quad - \sum_{i=0}^{n-1}\alpha_i(t)L_i(t)x(t) - \sum_{i=0}^{n-1}\alpha_i(t)\sum_{j=0}^{i-1}\frac{d^{i-j-1}}{dt^{i-j-1}}\left[L_j(t)b(t)u(t)\right] \\ &= \sum_{j=0}^{n-1}\frac{d^{n-j-1}}{dt^{n-j-1}}\left[L_j(t)b(t)u(t)\right] - \sum_{i=0}^{n-1}\sum_{j=0}^{i-1}\alpha_i(t)\frac{d^{i-j-1}}{dt^{i-j-1}}\left[L_j(t)b(t)u(t)\right] \end{aligned}$$

This is in the desired form of an $n$th-order differential equation.

CHAPTER 10

Solution 10.2 We show equivalence of full-rank failure in the respective controllability and observability matrices, and thus conclude that one realization is controllable and observable (minimal) if and only if the other is controllable and observable (minimal). First,

$$\mathrm{rank}\begin{bmatrix} B & AB & \cdots & A^{n-1}B \end{bmatrix} < n$$

if and only if there exists a nonzero, $n \times 1$ vector $q$ such that

$$q^TB = q^TAB = \cdots = q^TA^{n-1}B = 0$$

This holds if and only if

$$q^TB = q^T(A+BC)B = \cdots = q^T(A+BC)^{n-1}B = 0$$

(if $q^TA^jB = 0$ for $j = 0, \ldots, k-1$, then $q^T(A+BC)^k = q^TA^k$, and the argument reverses using $A = (A+BC) - BC$), which is equivalent to

$$\mathrm{rank}\begin{bmatrix} B & (A+BC)B & \cdots & (A+BC)^{n-1}B \end{bmatrix} < n$$
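The controllability half of this argument can be spot-checked with exact rational arithmetic. In this illustrative sketch all matrices are hypothetical (with $m = p = 1$), and `rank` is a small fraction-based Gaussian elimination written for the example. The rank of the controllability matrix comes out the same for $A$ and $A + BC$ in both a controllable and an uncontrollable case.

```python
from fractions import Fraction

def matmul(X, Y):
    """Matrix product for lists of rows (entries may be ints or Fractions)."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def rank(M):
    """Rank by Gaussian elimination over the rationals (exact, no round-off)."""
    R = [[Fraction(v) for v in row] for row in M]
    r = 0
    for c in range(len(R[0])):
        piv = next((i for i in range(r, len(R)) if R[i][c] != 0), None)
        if piv is None:
            continue
        R[r], R[piv] = R[piv], R[r]
        for i in range(len(R)):
            if i != r and R[i][c] != 0:
                f = R[i][c] / R[r][c]
                R[i] = [x - f * y for x, y in zip(R[i], R[r])]
        r += 1
        if r == len(R):
            break
    return r

def ctrb(A, B):
    """Controllability matrix [B, AB, ..., A^{n-1} B]."""
    blocks, blk = [], B
    for _ in range(len(A)):
        blocks.append(blk)
        blk = matmul(A, blk)
    return [sum((b[i] for b in blocks), []) for i in range(len(A))]

def closed_loop(A, B, C):
    """Return A + B C."""
    BC = matmul(B, C)
    return [[A[i][j] + BC[i][j] for j in range(len(A))] for i in range(len(A))]

# Hypothetical examples with m = p = 1: one uncontrollable pair, one controllable pair.
A1, B1, C1 = [[1, 0, 0], [0, 2, 0], [0, 0, 3]], [[1], [1], [0]], [[1, -1, 2]]
A2, B2, C2 = [[0, 1, 0], [0, 0, 1], [-6, -11, -6]], [[0], [0], [1]], [[1, 2, 3]]
for A, B, C in ((A1, B1, C1), (A2, B2, C2)):
    print(rank(ctrb(A, B)), rank(ctrb(closed_loop(A, B, C), B)))
```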

Similarly,

$$\mathrm{rank}\begin{bmatrix} C \\ CA \\ \vdots \\ CA^{n-1} \end{bmatrix} < n$$

if and only if there exists a nonzero, $n \times 1$ vector $p$ such that

$$Cp = CAp = \cdots = CA^{n-1}p = 0$$

This is equivalent to

$$Cp = C(A+BC)p = \cdots = C(A+BC)^{n-1}p = 0$$

which is equivalent to

$$\mathrm{rank}\begin{bmatrix} C \\ C(A+BC) \\ \vdots \\ C(A+BC)^{n-1} \end{bmatrix} < n$$

Solution 10.9 Since

$$C(t)B(\sigma) = H(t)F(\sigma) \qquad (*)$$

for all $t$, $\sigma$, picking an appropriate $t_o$ and $t_f > t_o$,

$$M_x(t_o,t_f)W_x(t_o,t_f) = \int_{t_o}^{t_f}C^T(t)H(t)\,dt\int_{t_o}^{t_f}F(\sigma)B^T(\sigma)\,d\sigma \qquad (**)$$