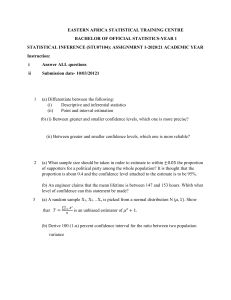

CSE 38300 Introduction to Analytical and Quantitative Methods for Civil Engineering

Lecture 5

Ir CL KWAN

Department of Civil and Environmental Engineering

The Hong Kong Polytechnic University

1

Sampling Distribution and Estimation

2

Sampling distribution of the mean

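Let X be the number you get on a dice. The probability distribution of X is P(x) = 1/6 for x = 1, 2, ..., 6.

[Figure: bar chart of P(x) = 1/6 against x = 1, 2, ..., 6]

Population mean:

$$E(X) = \mu = \sum x P(x) = 1 \cdot \tfrac{1}{6} + 2 \cdot \tfrac{1}{6} + \dots + 6 \cdot \tfrac{1}{6} = 3.5$$

Population variance:

$$V(X) = \sigma^2 = \sum (x - \mu)^2 P(x) = (1 - 3.5)^2 \tfrac{1}{6} + \dots + (6 - 3.5)^2 \tfrac{1}{6} = 2.92$$

Population S.D.:

$$\sigma = \sqrt{\sigma^2} = \sqrt{2.92} = 1.71$$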
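The population mean, variance, and standard deviation of a fair dice can be checked numerically. This is a minimal sketch using exact fractions; the variable names are illustrative and not part of the lecture.

```python
from fractions import Fraction

# Fair six-sided dice: each face 1..6 has probability 1/6.
faces = range(1, 7)
p = Fraction(1, 6)

mean = sum(x * p for x in faces)                     # E(X)
variance = sum((x - mean) ** 2 * p for x in faces)   # V(X)
std_dev = float(variance) ** 0.5                     # sigma

print(mean, variance, round(std_dev, 2))
```

The exact variance is 35/12, which rounds to the 2.92 quoted above.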

3

Sampling distribution of the mean

Let's say now you roll a dice two times (sample size n = 2). You can calculate the mean ($\bar{X}$) of the two numbers:

[Table: sample mean $\bar{x}$ for each combination of two rolls]

With a sample size of 2, there are only 36 combinations, and only 11 different values of $\bar{x}$: (1, 1.5, 2, 2.5, 3, 3.5, 4, 4.5, 5, 5.5, 6), with $\bar{x}$ = 3.5 most likely to occur.
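The 36 combinations and the 11 distinct sample means can be enumerated directly. The sketch below assumes ordered pairs of rolls (so (1, 2) and (2, 1) count separately); the names are illustrative.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# All ordered outcomes of rolling a dice twice.
outcomes = list(product(range(1, 7), repeat=2))

# Count how often each sample mean occurs, then convert counts to probabilities.
counts = Counter(Fraction(a + b, 2) for a, b in outcomes)
dist = {m: Fraction(c, len(outcomes)) for m, c in sorted(counts.items())}

most_likely = max(dist, key=dist.get)

for m, prob in dist.items():
    print(float(m), prob)
```

The resulting distribution is triangular rather than flat, peaking at a sample mean of 3.5.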

4

Sampling distribution of the mean

We repeat the random experiment 1000 times and calculate the sample mean $\bar{X}$ each time. The probability distribution of the sample mean:

[Figure: histogram of the sample mean $\bar{X}$ for n = 2]

Note that:

1. The P.D. is centered on 3.5
2. The P.D. is not flat anymore

5

Sampling distribution of the mean

We roll a dice 12 times and calculate the sample mean $\bar{X}$. Then, we repeat the random experiment 1000 times and calculate the sample mean $\bar{X}$ each time. The probability distribution of the sample mean $\bar{X}$:

[Figure: histogram of the sample mean $\bar{X}$ for n = 12]

Note that:

1. The P.D. is centered on 3.5
2. The P.D. is not flat
3. The P.D. is narrower than when n = 2
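The repeated experiment can be reproduced with a short simulation. This is a sketch under assumptions: the function name `simulate_sample_means`, the seed, and the use of Python's pseudo-random generator are choices made here, not part of the lecture.

```python
import random
import statistics

def simulate_sample_means(n, repetitions=1000, seed=0):
    """Roll a fair dice n times, record the sample mean, and repeat."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [
        sum(rng.randint(1, 6) for _ in range(n)) / n
        for _ in range(repetitions)
    ]

sigma = (35 / 12) ** 0.5  # population S.D. of one roll, about 1.71

results = {n: simulate_sample_means(n) for n in (2, 12)}
for n, means in results.items():
    print(
        n,
        round(statistics.mean(means), 3),    # should be close to 3.5
        round(statistics.stdev(means), 3),   # spread of the sample means
        round(sigma / n ** 0.5, 3),          # sigma / sqrt(n) for comparison
    )
```

With 1000 repetitions the simulated spread should sit close to σ/√n, and the n = 12 histogram is visibly narrower than the n = 2 one.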

6

[Figure: P.D. of the population (flat, P(x) = 1/6 for x = 1, ..., 6) compared with the P.D. of the sample mean $\bar{X}$ for n = 2 and n = 12, all centered on µ = 3.5]

As n gets larger, the P.D. for $\bar{X}$

1. Is centered on 3.5
2. Gets narrower
3. Becomes more bell-shaped

7

Sampling distribution of the mean

As the sample size n increases, the probability distribution of the sample mean $\bar{X}$

1. Becomes more like a normal distribution
2. Stays centered on µ
3. Gets narrower

8

Sampling distribution of the mean

[Figure: original populations (normal, uniform, skewed) and the distributions of their sample means for n = 5, n = 10, and n = 30; the panels are labelled "normal" or "~normal"]

9

Central limit theorem

For a population in which the random variable X has a mean of µ and a standard deviation of σ, if a sample of size n is randomly drawn from the population, then no matter what the probability distribution of X is:

1. The probability distribution of the sample mean $\bar{X}$ will approach a normal distribution as the sample size is increased
2. The mean of the sample mean will be the population mean: $\mu_{\bar{X}} = \mu$
3. The standard deviation of the sample mean will be $\sigma_{\bar{X}} = \sigma / \sqrt{n}$ (the “standard error of the mean”)

10

Central limit theorem

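X: µ, σ

How good is $\bar{X}$ in estimating µ?

$$\bar{X} = \frac{1}{n}(X_1 + X_2 + \dots + X_n)$$

Before collection of data, $X_1$ is a r.v. with the same distribution as X, ∴ $\bar{X}$ is a r.v.

What would you expect the value of $\bar{X}$ to be?

$$E(\bar{X}) = \frac{1}{n} E(X_1 + X_2 + \dots + X_n) = \frac{1}{n}(\mu + \dots + \mu) = \frac{n\mu}{n} = \mu$$

$$\mathrm{Var}(\bar{X}) = \frac{1}{n^2} \mathrm{Var}(X_1 + X_2 + \dots + X_n) = \frac{1}{n^2} n\sigma^2 = \frac{\sigma^2}{n}$$

(Assume statistical independence (s.i.) due to random sampling)

As n ↑ → Var($\bar{X}$) ↓ (Theorem 6 revisited)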

11

Central limit theorem

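What is the distribution of $\bar{X}$?

If X is normal → $\bar{X}$ is normal:

$$\bar{X} \sim N\left(\mu, \frac{\sigma^2}{n}\right)$$

If X is not normal but n is large → $\bar{X}$ is approx. normal:

$$\bar{X} \sim N\left(\mu, \frac{\sigma^2}{n}\right)$$

[Figure: distributions of $\bar{X}$ centered on µ for two sample sizes n1 and n2, with n1 > n2]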

12

Central limit theorem

For most populations, if the sample size is greater than

30, the Central Limit Theorem approximation is good.

Example 1

At a large university, the student age has µ = 22.3 years and σ = 4 years. A random sample of 64 students is drawn. Determine the probability that the average age of these students is greater than 23 years.

µ = 22.3; σ² = 16; n = 64 is large

By the Central Limit Theorem,

$$\bar{X} \sim N\left(\mu, \frac{\sigma^2}{n}\right) = N(22.3, 0.25)$$

$$\therefore P(\bar{X} > 23) = P\left(\frac{\bar{X} - 22.3}{\sqrt{0.25}} > \frac{23 - 22.3}{\sqrt{0.25}}\right) = P(Z > 1.40) = 0.0808$$
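Example 1 can be checked without tables by writing the standard normal tail probability in terms of the complementary error function. This is a minimal sketch; the variable names are illustrative.

```python
import math

mu, sigma, n = 22.3, 4.0, 64

standard_error = sigma / math.sqrt(n)   # sqrt(0.25) = 0.5
z = (23 - mu) / standard_error          # standardized threshold, about 1.40

# Upper-tail probability of the standard normal: P(Z > z) = 0.5 * erfc(z / sqrt(2))
p_exceed = 0.5 * math.erfc(z / math.sqrt(2))

print(round(z, 2), round(p_exceed, 4))
```

The result agrees with the tabulated value P(Z > 1.40) = 0.0808.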

13

Statistical Estimation of Parameters

Classical estimation of parameters consists of two

types: (1) point estimation and (2) interval estimation

Point estimation is aimed at calculating a single

number, from a set of observational data, to

represent the parameter of the underlying population

Interval estimation determines an interval within which the true parameter lies, with a statement of “confidence” represented by a probability that the true parameter will be in the interval.

14

Estimating Parameters From Observation Data

[Figure: Role of sampling in statistical inference. The real world “population” (true characteristics unknown) is represented by a theoretical model: a random variable X on the real line −∞ < x < ∞ with distribution $f_X(x)$. Sampling (experimental observations) gives a sample {x1, x2, …, xn}; statistical estimation from the sample supports inference on $f_X(x)$, with mean µ ≈ $\bar{x}$ and variance σ² ≈ s².]

$$\bar{x} = \frac{1}{n} \sum x_i, \qquad s^2 = \frac{1}{n-1} \sum (x_i - \bar{x})^2$$

15

Point Estimation

Definition

If E[U(X1,X2,…,Xn)]=θ, U(X1,X2,…,Xn) is called an

unbiased estimator of θ. Otherwise, it is said to be

biased. θ is an unknown parameter of the probability

distribution.

E.g. $f_X(x) = \lambda e^{-\lambda x}$, where λ is the parameter.

Example 2

Consider a random sample of size n which is drawn from a population. The sample mean is

$$\bar{X} = \frac{1}{n} X_1 + \dots + \frac{1}{n} X_n$$

$$E(\bar{X}) = E\left(\frac{1}{n} X_1 + \dots + \frac{1}{n} X_n\right) = \frac{1}{n} E(X_1) + \dots + \frac{1}{n} E(X_n) = \frac{1}{n}\mu + \dots + \frac{1}{n}\mu = \mu$$

QED

∴ $\bar{X}$ is an unbiased estimator of µ. It is noted that the error in the estimator $\bar{X}$ decreases as n increases (p.11).

16

Point Estimation

Example 3

Consider a random sample of size n which is drawn from a population. The sample variance is

$$S^2 = \frac{1}{n-1} \sum_{i=1}^{n} (X_i - \bar{X})^2$$

Prove that S2 is an unbiased estimator of σ2 .

That is, E ( S 2 ) = σ 2

(Try to derive it)
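Before attempting the derivation, the claim can be sanity-checked on a small case. The sketch below is not a proof: it assumes the fair-dice population from the earlier slides (σ² = 35/12) and samples of size n = 2, and averages S² exactly over all 36 equally likely samples. The helper name `sample_variance` is illustrative.

```python
from fractions import Fraction
from itertools import product

def sample_variance(xs):
    """Sample variance with the n - 1 divisor, computed exactly."""
    n = len(xs)
    x_bar = Fraction(sum(xs), n)
    return sum((x - x_bar) ** 2 for x in xs) / (n - 1)

# Every equally likely sample of size 2 from a fair dice.
samples = list(product(range(1, 7), repeat=2))

# E(S^2) is the average of S^2 over all samples.
expected_s2 = sum(sample_variance(s) for s in samples) / len(samples)

population_variance = Fraction(35, 12)
print(expected_s2, population_variance)
```

Dividing by n instead of n − 1 in this check gives 35/24, half the population variance, which illustrates why the n − 1 divisor is used.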

Example 4

Consider the Binomial distribution, where X is the no. of successes in n trials. The estimator $\hat{p} = X / n$ is unbiased:

$$E(\hat{p}) = E\left(\frac{X}{n}\right) = \frac{1}{n} E(X) = \frac{1}{n}(np) = p$$

∴ $\hat{p}$ is an unbiased estimator of p.

QED

17

Estimation of Parameters, e.g. µ, σ2, λ, ζ etc.

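a) Method of moments: equate statistical moments (e.g. mean, variance, skewness etc.) of the model to those of the sample.

e.g. in normal X : N(µ, σ):

$$\hat{\mu} = \bar{x}, \qquad \hat{\sigma}^2 = s^2$$

in lognormal X : LN(λ, ζ):

$$E(X) = \mu = \exp\left(\lambda + \tfrac{1}{2}\zeta^2\right) \equiv \bar{x} \;\Rightarrow\; \lambda = \ln \bar{x} - \tfrac{1}{2}\zeta^2$$

$$\mathrm{Var}(X) = \sigma^2 = E(X)^2 \left[e^{\zeta^2} - 1\right] \equiv s^2 \;\Rightarrow\; \zeta^2 = \ln\left(1 + \frac{\sigma^2}{\mu^2}\right) = \ln(1 + \delta^2)$$

Example 5

12 data for the fatigue life of an aluminium yielded $\bar{x}$ = 26.75 million cycles and s² = 360.0 (million cycles)². Estimate λ and ζ.

$$\zeta^2 = \ln\left(1 + \frac{360}{26.75^2}\right) = 0.4075 \;\Rightarrow\; \zeta = 0.638$$

$$\lambda = \ln(26.75) - \tfrac{1}{2}\zeta^2 = 3.083$$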
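The arithmetic of Example 5 follows directly from the two moment equations for the lognormal distribution. A minimal sketch, with illustrative variable names:

```python
import math

x_bar = 26.75   # sample mean, million cycles
s2 = 360.0      # sample variance, (million cycles)^2

# Method of moments for LN(lambda, zeta):
#   zeta^2 = ln(1 + s^2 / x_bar^2),  lambda = ln(x_bar) - zeta^2 / 2
zeta2 = math.log(1 + s2 / x_bar ** 2)
zeta = math.sqrt(zeta2)
lam = math.log(x_bar) - 0.5 * zeta2

print(round(zeta2, 4), round(zeta, 3), round(lam, 3))
```

Note that ζ² must be computed first, because λ depends on it.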

18

Common Distributions and their Parameters

19

Common Distributions and their Parameters (Cont’d)

20

b) Method of maximum likelihood

Consider a random variable X with PDF f(x; θ), where

θ=Parameter. The idea is to estimate the parameter θ with

the value that makes the observed data x1,x2,⋅⋅⋅xn most

likely.

When a probability mass function or probability density

function is considered to be a function of the parameter, it

is called a likelihood function. Assuming random sampling, the likelihood function of obtaining a set of n independent observations is L(θ) = fX(x1;θ) fX(x2;θ)⋅⋅⋅fX(xn;θ), where x1, x2, ⋅⋅⋅, xn are the observed data.

The maximum likelihood estimator θˆ is the value of θ that

maximizes the likelihood function L(θ)

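$$\frac{\partial L(\theta)}{\partial \theta} = 0 \quad \text{or} \quad \frac{\partial \log L(\theta)}{\partial \theta} = 0 \;\rightarrow\; \hat{\theta} \;\rightarrow\; \text{opt. estimation of } \theta$$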

21

Method of maximum likelihood (Cont’d):

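Example 6: The times between successive occurrences of high-intensity earthquakes were observed to be 7.3, 6.2, 5.4, 9.3, 8.3 years. The model is $f_X(x) = \lambda e^{-\lambda x}$.

$$L(\lambda) = (\lambda e^{-\lambda x_1})(\lambda e^{-\lambda x_2}) \dots (\lambda e^{-\lambda x_n}) = \lambda^n e^{-\lambda \sum_{i=1}^{n} x_i}$$

$$\log L(\lambda) = n \log \lambda - \lambda \sum_{i=1}^{n} x_i$$

$$\frac{d \log L(\lambda)}{d\lambda} = \frac{n}{\lambda} - \sum_{i=1}^{n} x_i = 0 \;\Rightarrow\; \hat{\lambda} = \frac{n}{\sum_{i=1}^{n} x_i}$$

$$\therefore \hat{\lambda} = \frac{n}{\sum_{i=1}^{n} x_i} = \frac{5}{36.5} = 0.137 \text{ quake/year}$$

[Figure: $f_X(x) = \lambda e^{-\lambda x}$ plotted against x for λ = 0.2 and λ = 0.1, with two observations X1 and X2 marked on the x-axis]

Given X1 → λ = 0.2 more likely

Similarly, X2 → λ = 0.1 more likely

∴ Likelihood of λ depends on fX(xi) and the xi's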
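The closed-form estimate in Example 6 can be checked numerically, together with the fact that it gives a higher log-likelihood than the two values of λ shown in the figure. A minimal sketch; `log_likelihood` is a helper name introduced here.

```python
import math

times = [7.3, 6.2, 5.4, 9.3, 8.3]   # years between earthquakes

def log_likelihood(lam, xs):
    """log L(lambda) = n log(lambda) - lambda * sum(x_i) for the exponential model."""
    return len(xs) * math.log(lam) - lam * sum(xs)

# Closed-form maximum likelihood estimate: n / sum(x_i)
lam_hat = len(times) / sum(times)

print(round(lam_hat, 3))
for lam in (0.1, lam_hat, 0.2):
    print(round(lam, 3), round(log_likelihood(lam, times), 3))
```

Evaluating the log-likelihood on a fine grid of λ values would show the same maximum; the closed form simply avoids the search.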

22

c) Probability plots (Probability paper)

Scientists and engineers often work with data that can be thought of as a random sample from some population. In many cases, it is important to determine the probability distribution that approximately describes the population. More often, the only way to determine an appropriate distribution is to examine the sample to find a probability distribution that fits.

Use of Probability Paper

1. Arrange the data in ascending order: $x_1, x_2, \dots, x_m, \dots, x_N$
2. Let a cumulative probability (plotting position) be $P_m = \dfrac{m}{N+1}$; m = 1, 2, ..., N
3. Plot $x_m$ vs. s, where s is the standard normal variate corresponding to $P_m$, i.e. $s = \Phi^{-1}(P_m)$
4. See if the points follow a straight line

23

Use of Probability Paper

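The normal (or Gaussian) probability paper is constructed on the basis of the standard normal CDF:

$$s = \frac{x - \mu}{\sigma} \;\Rightarrow\; x = \sigma s + \mu \qquad (\text{slope } \sigma,\ y\text{-intercept } \mu)$$

The lognormal probability paper can be obtained from the normal probability paper by simply changing the arithmetic scale for values of X to a logarithmic scale:

$$s = \frac{\ln x - \lambda}{\zeta} \;\Rightarrow\; \ln x = \zeta s + \lambda \qquad (\text{slope } \zeta,\ y\text{-intercept } \lambda)$$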

24

Example 7

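Data: Time spent for 5 randomly chosen vehicles passing through a junction: 2.9 s, 3.5 s, 4 s, 2.5 s, 3.1 s; N = 5

| m | $x_m$ (s) | $P_m = m/(N+1)$ | $P_m$ | s |
|---|-----------|-----------------|--------|-------|
| 1 | 2.5 | 1/6 | 0.1667 | -0.97 |
| 2 | 2.9 | 2/6 | 0.3333 | -0.43 |
| 3 | 3.1 | 3/6 | 0.5000 | 0 |
| 4 | 3.5 | 4/6 | 0.6667 | 0.43 |
| 5 | 4 | 5/6 | 0.8333 | 0.97 |

It is found that the data points appear to fit the normal distribution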
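The plotting positions and standard normal variates of Example 7 can be generated programmatically. The sketch below also fits a least-squares line x = σs + µ through the points; that line fit is a substitute introduced here for reading the slope and intercept off probability paper by eye, so its estimates need not match a graphical reading exactly.

```python
from statistics import NormalDist

times = [2.9, 3.5, 4.0, 2.5, 3.1]   # seconds

xs = sorted(times)                               # step 1: ascending order
N = len(xs)
p = [m / (N + 1) for m in range(1, N + 1)]       # step 2: P_m = m / (N + 1)
s = [NormalDist().inv_cdf(pm) for pm in p]       # step 3: s = inverse standard normal CDF

# Least-squares line x = slope * s + intercept (slope ~ sigma, intercept ~ mu).
s_bar = sum(s) / N
x_bar = sum(xs) / N
slope = sum((si - s_bar) * (xi - x_bar) for si, xi in zip(s, xs)) / sum(
    (si - s_bar) ** 2 for si in s
)
intercept = x_bar - slope * s_bar

for m, (xm, pm, sm) in enumerate(zip(xs, p, s), start=1):
    print(m, xm, round(pm, 4), round(sm, 2))
print(round(intercept, 2), round(slope, 2))
```

If the points scatter closely around the fitted line, the normal model is plausible; a curved pattern suggests trying another distribution, such as the lognormal.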

25

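[Figure: Normal probability paper. $x_m$ plotted against s (s = −1, 0, 1 corresponding to $p_m$ = 16%, 50%, 84%), with the fitted line read at $x_{0.5}$ = 3.2 and $x_{0.84}$ = 3.95]

$$\hat{\mu} = x_{0.5} = 3.2$$

$$\hat{\sigma} = x_{0.84} - x_{0.5} = 3.95 - 3.2 = 0.75$$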

26

Example 8: Shear strength of concrete

13 data for shear strength of concrete were obtained

| m | Shear strength τ | m/(N+1) | s | ln τ |
|----|------|--------|-------|-------|
| 1 | 0.35 | 0.0714 | -1.47 | -1.05 |
| 2 | 0.40 | 0.1429 | -1.07 | -0.92 |
| 3 | 0.41 | 0.2143 | -0.79 | -0.89 |
| 4 | 0.42 | 0.2857 | -0.57 | -0.87 |
| 5 | 0.43 | 0.3571 | -0.37 | -0.84 |
| 6 | 0.48 | 0.4286 | -0.18 | -0.73 |
| 7 | 0.49 | 0.5000 | 0 | -0.71 |
| 8 | 0.58 | 0.5714 | 0.18 | -0.54 |
| 9 | 0.68 | 0.6429 | 0.37 | -0.39 |
| 10 | 0.7 | 0.7143 | 0.57 | -0.36 |
| 11 | 0.75 | 0.7857 | 0.79 | -0.29 |
| 12 | 0.87 | 0.8571 | 1.07 | -0.14 |
| 13 | 0.96 | 0.9286 | 1.47 | -0.04 |

It is found that the data points appear to fit the

lognormal distribution

27

Normal Probability Paper

[Figure: shear strength τ (axis from 0.3 to 1.0) plotted against s (s = −1, 0, 1 corresponding to $p_m$ = 16%, 50%, 84%)]

28

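Lognormal Probability Paper

[Figure: ln τ (axis from −1.2 to 0) plotted against s (s = −1, 0, 1 corresponding to $p_m$ = 16%, 50%, 84%), with the fitted line read at ln τ = −0.58 for s = 0 and ln τ = −0.22 for s = 1]

$$\lambda = -0.58$$

$$\zeta = \frac{-0.22 - (-0.58)}{1 - 0} = 0.36$$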

29

Goodness-of-fit test of distribution

Even though the data points appear to fall on a straight line, how good is the fit?

Would it be accepted or rejected at a prescribed confidence level?

If the data appear to fit several probability models, which one is better?

Chi-square test ($\chi^2$)

Kolmogorov-Smirnov test (K-S)

30

Procedures of Chi-Square test ($\chi^2$)

1. Draw histogram from data
2. Draw proposed distribution (frequency diagram) normalized by no. of occurrences (same area as histogram)
3. Select appropriate intervals k
4. Determine $n_i$ = observed incidences per interval and $e_i$ = predicted incidences per interval based on model
5. Determine $\dfrac{(n_i - e_i)^2}{e_i}$ for each interval
6. Determine $Z = \sum_{i=1}^{k} \dfrac{(n_i - e_i)^2}{e_i}$ for all intervals. Note: larger Z, less fit
7. Compare Z with the standardized value $C_{1-\alpha, f}$, where 1 − α is the level of confidence and f is the d.o.f. = k − 1 − m, with m = no. of parameters in the proposed distribution estimated from data

31

[Figure: upper-tail probability P = α]

32

Procedures of Chi-Square test ($\chi^2$)

8. Check: if $Z < C_{1-\alpha, f}$, the probability model is substantiated with confidence level 1 − α. Otherwise, the model is not substantiated.

The validity of the method relies on k ≥ 5 and $e_i$ ≥ 5 (combine some intervals if necessary)

Example 9 – Crushing strength of 143 concrete cubes

33

Example 9 –Crushing strength of concrete (cont.)

| Interval (ksi) | Observed frequency, $n_i$ | Theoretical frequency $e_i$, Normal | Theoretical frequency $e_i$, Lognormal | $(n_i - e_i)^2/e_i$, Normal | $(n_i - e_i)^2/e_i$, Lognormal |
|---|---|---|---|---|---|
| < 6.75 | 9 | 11.23 | 10.37 | 0.44 | 0.18 |
| 6.75 - 7.00 | 17 | 13.47 | 14.38 | 0.92 | 0.48 |
| 7.00 - 7.25 | 22 | 20.85 | 22.18 | 0.06 | 0.00 |
| 7.25 - 7.50 | 31 | 25.94 | 26.59 | 0.99 | 0.73 |
| 7.50 - 7.75 | 28 | 25.94 | 25.36 | 0.16 | 0.27 |
| 7.75 - 8.00 | 20 | 20.85 | 19.66 | 0.03 | 0.01 |
| 8.00 - 8.50 | 9 | 20.47 | 19.43 | 6.43 | 5.60 |
| > 8.50 | 7 | 4.23 | 5.04 | 1.81 | 0.76 |
| Σ | 143 | 143.00 | 143.00 | 10.85 | 8.03 |

$$Z_N = 10.85; \quad Z_{LN} = 8.03; \quad f = k - 1 - m = 8 - 1 - 2 = 5; \quad C_{0.95,\,5} = 11.07$$

As both $Z_N$ and $Z_{LN} \le C_{0.95,\,5}$, both models are substantiated (lognormal is better than normal).
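The test statistic for Example 9 can be recomputed from the observed and theoretical frequencies in the table. In this sketch the critical value 11.07 is taken from the slide rather than computed, and the function name `chi_square_statistic` is illustrative.

```python
observed = [9, 17, 22, 31, 28, 20, 9, 7]
expected_normal = [11.23, 13.47, 20.85, 25.94, 25.94, 20.85, 20.47, 4.23]
expected_lognormal = [10.37, 14.38, 22.18, 26.59, 25.36, 19.66, 19.43, 5.04]

def chi_square_statistic(obs, exp):
    """Z = sum over intervals of (n_i - e_i)^2 / e_i."""
    return sum((n - e) ** 2 / e for n, e in zip(obs, exp))

k = len(observed)   # number of intervals
m = 2               # parameters estimated from data for each model
dof = k - 1 - m     # degrees of freedom f
critical = 11.07    # C_{0.95, 5}, value quoted on the slide

z_normal = chi_square_statistic(observed, expected_normal)
z_lognormal = chi_square_statistic(observed, expected_lognormal)

print(dof, round(z_normal, 2), round(z_lognormal, 2))
print(z_normal < critical, z_lognormal < critical)
```

Summing unrounded terms can differ from the tabulated total in the second decimal place, since the table adds terms that were already rounded.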

34

Kolmogorov-Smirnov (K-S) Test

Arrange the data in ascending order: $x_1, x_2, \dots, x_k, \dots, x_n$

Sample CDF:

$$S_n(x) = \begin{cases} 0 & x < x_1 \\ \dfrac{k}{n} & x_k \le x < x_{k+1} \\ 1 & x \ge x_n \end{cases}$$

Compare $S_n(x)$ of the sample with the CDF $F_X(x)$ of the proposed model.

Identify the largest discrepancy $D_{max}$ between the two curves.

Compare $D_{max}$ with a standardized value $D_n^{\alpha}$:

$D_{max} > D_n^{\alpha}$: reject model

$D_{max} < D_n^{\alpha}$: model substantiated
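The K-S steps can be sketched in a few lines. As an illustration only, the code below reuses the five junction times from Example 7 with the normal model µ = 3.2, σ = 0.75 read from the probability paper; this pairing is an assumption made here and is not a worked example from the lecture. The critical value 0.56 is the n = 5, α = 0.05 entry of the table of critical values.

```python
from statistics import NormalDist

def ks_statistic(data, cdf):
    """Largest gap between the sample step CDF and the model CDF."""
    xs = sorted(data)
    n = len(xs)
    d_max = 0.0
    for k, x in enumerate(xs, start=1):
        f = cdf(x)
        # The sample CDF jumps from (k - 1)/n to k/n at x, so check both sides.
        d_max = max(d_max, k / n - f, f - (k - 1) / n)
    return d_max

times = [2.9, 3.5, 4.0, 2.5, 3.1]
model = NormalDist(mu=3.2, sigma=0.75)   # assumed model for illustration

d_max = ks_statistic(times, model.cdf)
critical = 0.56                          # tabulated value for n = 5, alpha = 0.05

print(round(d_max, 3), d_max < critical)
```

Unlike the chi-square test, this procedure needs no grouping of the data into intervals, which makes it usable for small samples.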

35

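Example 10 – Data for fracture toughness of 26 steel plates

[Figure: sample CDF and proposed CDF; the largest discrepancy occurs at the 17th data point, where the sample CDF is 17/26 = 0.654 and the proposed CDF is 0.5]

$$D_{max} = 0.654 - 0.5 = 0.154 \le D_{26}^{0.05} = 0.265$$

Critical values $D_n^{\alpha}$ in K-S test:

| n \ α | 0.2 | 0.1 | 0.05 | 0.01 |
|----|------|------|------|------|
| 5 | 0.45 | 0.51 | 0.56 | 0.67 |
| 10 | 0.32 | 0.37 | 0.41 | 0.49 |
| 15 | 0.27 | 0.30 | 0.34 | 0.40 |
| 20 | 0.23 | 0.26 | 0.29 | 0.36 |
| 25 | 0.21 | 0.24 | 0.27 | 0.32 |
| 30 | 0.19 | 0.22 | 0.24 | 0.29 |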

36

Confidence Interval (or Interval Estimation)

• A range (or an interval) of values likely to contain the true value of the population parameter. As an example, Lower # < µ < Upper #

Definition - Degree of Confidence

• The probability 1 – α that the confidence interval contains the true value of the population parameter (often expressed as the equivalent percentage value), usually 95% (α = 5%) or 99% (α = 1%)

37

Confidence interval (Interval estimation) of µ

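We would like to establish P(? < µ < ?) = 0.95

Assuming σ known → $\bar{X} \sim N\left(\mu, \dfrac{\sigma^2}{n}\right)$ (Refer to p.12)

$$\therefore y = \frac{\bar{x} - \mu}{\sigma / \sqrt{n}} \text{ is } N(0,1) \quad \text{(see E4.1)}$$

$$P\left(-1.96 < \frac{\bar{x} - \mu}{\sigma / \sqrt{n}} \le 1.96\right) = 0.95$$

[Figure: standard normal density of $\dfrac{\bar{x} - \mu}{\sigma/\sqrt{n}}$ with central area 0.95 between −1.96 and $k_{0.025}$ = 1.96, and area 0.025 in the tail]

$$-1.96 \frac{\sigma}{\sqrt{n}} < \bar{x} - \mu \;\Rightarrow\; \mu < \bar{x} + 1.96 \frac{\sigma}{\sqrt{n}}$$

Similarly

$$\bar{x} - \mu \le 1.96 \frac{\sigma}{\sqrt{n}} \;\Rightarrow\; \mu \ge \bar{x} - 1.96 \frac{\sigma}{\sqrt{n}}$$

$$\therefore P\left(\bar{x} - 1.96 \frac{\sigma}{\sqrt{n}} \le \mu < \bar{x} + 1.96 \frac{\sigma}{\sqrt{n}}\right) = 0.95$$

38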

Confidence interval of µ (Cont’d)

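From data → n = 25; $\bar{x}$ = 5.6; assume σ = 0.65

∴ P(5.345 ≤ µ < 5.855) = 0.95

(µ is not a r.v. → the interval is a confidence interval)

In short,

$$\langle \mu \rangle_{0.95} = \bar{x} \pm k_{0.025} \frac{\sigma}{\sqrt{n}}$$

$$\langle \mu \rangle_{1-\alpha} = \bar{x} \pm k_{\alpha/2} \frac{\sigma}{\sqrt{n}}, \quad \text{where } k_{\alpha/2} = \Phi^{-1}\left(1 - \frac{\alpha}{2}\right)$$

[Figure: standard normal density with central area 1 – α and tail area α/2 beyond $k_{\alpha/2}$]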

39

Example 11

Daily dissolved oxygen (DO): n = 30 observations, $\bar{x}$ = 2.52 mg/l, s = 2.05 mg/l = σ (assumed)

Determine 99% confidence interval of µ.

1 − α = 0.99 → α = 0.01 → α/2 = 0.005

$$k_{0.005} = \Phi^{-1}(1 - 0.005) = \Phi^{-1}(0.995) = 2.58$$

$$\therefore \langle \mu \rangle_{0.99} = \bar{x} \pm k_{0.005} \frac{\sigma}{\sqrt{n}} = 2.52 \pm 2.58 \frac{2.05}{\sqrt{30}} = (1.56;\ 3.49)$$

Similarly

$$\langle \mu \rangle_{0.95} = (1.79;\ 3.25)$$

As confidence level ↑ → interval ↑

s ↑ → <µ> ↑

n ↑ → <µ> ↓
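The known-σ interval can be wrapped in a small function and applied to both the n = 25 case and the dissolved oxygen data. A minimal sketch; `mean_confidence_interval` is a name introduced here, and the critical value is computed from the inverse normal CDF instead of being rounded to 1.96 or 2.58, so the last digit may differ slightly from hand calculation.

```python
import math
from statistics import NormalDist

def mean_confidence_interval(x_bar, sigma, n, confidence):
    """Two-sided interval x_bar +/- k_{alpha/2} * sigma / sqrt(n), sigma known."""
    alpha = 1 - confidence
    k = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = k * sigma / math.sqrt(n)
    return x_bar - half_width, x_bar + half_width

# Data from the earlier slide: n = 25, x_bar = 5.6, sigma = 0.65
print(mean_confidence_interval(5.6, 0.65, 25, 0.95))

# Example 11: dissolved oxygen, n = 30, x_bar = 2.52, sigma = 2.05
ci_99 = mean_confidence_interval(2.52, 2.05, 30, 0.99)
ci_95 = mean_confidence_interval(2.52, 2.05, 30, 0.95)
print(ci_99, ci_95)
```

Comparing `ci_99` with `ci_95` shows the interval widening as the confidence level rises; increasing `n` in the call narrows it.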

40

Confidence Intervals from Different Samples

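[Figure: confidence intervals from different samples drawn on an axis from 0 to 5.00, compared with the true mean µ = 2.91 (but unknown to us). The interval from our sample, 1.56 to 3.49 about $\bar{x}$ = 2.52, contains µ; one of the other intervals is marked "This confidence interval does not contain µ".]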

41

Confidence Interval of µ when σ is unknown

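Need the distribution of

$$T = \frac{\bar{X} - \mu}{S / \sqrt{n}}$$

→ Student t-distribution with parameter f = n − 1

[Figure: t-distribution densities centered on 0 for small f and large f; large f → N(0,1)]

$\dfrac{\bar{X} - \mu}{S / \sqrt{n}}$ is $T_{n-1}$ ⇒ observe interval ↑ for same confidence level

$$\langle \mu \rangle_{1-\alpha} = \left(\bar{x} - t_{\alpha/2,\,n-1} \frac{s}{\sqrt{n}};\ \bar{x} + t_{\alpha/2,\,n-1} \frac{s}{\sqrt{n}}\right)$$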

42

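[Figure: t-distribution density with cumulative probability p and upper-tail area α/2 beyond $t_{\alpha/2, f}$]

Go to p383

Ex.5.6, Example 11 (Cont.)

$\bar{x}$ = 2.52, s = 2.05, n − 1 = 29

99% → α/2 = 0.005 → p = 0.995

$$\therefore t_{\alpha/2,\,n-1} = t_{0.005,\,29} = 2.756$$

$$\therefore \langle \mu \rangle_{0.99} = 2.52 \pm 2.756 \frac{2.05}{\sqrt{30}} = (1.49 \text{ to } 3.55)$$

wider than (1.56 to 3.49) (for known σ case)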
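The unknown-σ interval uses the same arithmetic with a t value in place of the normal value. The Python standard library has no inverse t-distribution, so this sketch takes the tabulated value 2.756 from the slide as an input; the function name is illustrative.

```python
import math

def t_confidence_interval(x_bar, s, n, t_value):
    """Two-sided interval x_bar +/- t_{alpha/2, n-1} * s / sqrt(n), sigma unknown."""
    half_width = t_value * s / math.sqrt(n)
    return x_bar - half_width, x_bar + half_width

# Example 11 (Cont.): t_{0.005, 29} = 2.756 is read from a t-table.
lower, upper = t_confidence_interval(2.52, 2.05, 30, t_value=2.756)
print(round(lower, 2), round(upper, 2))
```

Because 2.756 exceeds the normal value 2.58, the interval is wider than in the known-σ case.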

Example 12

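Traffic survey on speed of vehicles. Suppose we would like to determine the mean vehicle velocity to within ± 1 kph with 99% confidence. How many vehicles should be observed? Assume σ = 3.58 from previous study.

$$\langle \mu \rangle_{1-\alpha} = \bar{x} \pm k_{\alpha/2} \frac{\sigma}{\sqrt{n}}$$

The scatter term must equal 1:

$$k_{0.005} \frac{3.58}{\sqrt{n}} = 1, \quad k_{0.005} = 2.58 \;\rightarrow\; n = 85.3 \text{ or } 86$$

What if σ is not known, but the sample std. dev. is expected to be s = 3.58 and the mean is desired to be within ± 1?

$$\text{then } t_{0.005,\,n-1} \frac{3.58}{\sqrt{n}} = 1 \;\rightarrow\; \frac{t_{0.005,\,n-1}}{\sqrt{n}} = \frac{1}{3.58} = 0.279$$

$$\text{LHS} = \frac{t_{0.005,\,89-1}}{\sqrt{89}} = \frac{t_{0.005,\,88}}{\sqrt{89}} = \frac{2.6330}{\sqrt{89}} = 0.279 = \text{RHS} \quad \therefore n = 89$$

Compare with n ≈ 86 for σ known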
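The sample size for the known-σ case follows from solving k·σ/√n = half-width for n and rounding up. A minimal sketch; `required_sample_size` is a name introduced here, and the t value 2.6330 in the second check is the tabulated value quoted above rather than something computed.

```python
import math
from statistics import NormalDist

def required_sample_size(sigma, half_width, confidence):
    """Smallest n with k_{alpha/2} * sigma / sqrt(n) <= half_width (sigma known)."""
    k = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil((k * sigma / half_width) ** 2)

n_known = required_sample_size(sigma=3.58, half_width=1.0, confidence=0.99)
print(n_known)

# Unknown sigma: check the trial value n = 89 with the tabulated t_{0.005, 88}.
lhs = 2.6330 / math.sqrt(89)
rhs = 1 / 3.58
print(round(lhs, 3), round(rhs, 3))
```

In the unknown-σ case n appears on both sides of the equation (through the degrees of freedom), which is why it is solved by trial rather than in closed form.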

44

One - sided confidence limit

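For strength (lower limit):

$$\langle \mu)_{0.95}: \quad P\left(\mu \ge \bar{x} - k_{\alpha} \frac{\sigma}{\sqrt{n}}\right) = 0.95$$

For load (upper limit):

$$(\mu \rangle_{0.95}: \quad P\left(\mu \le \bar{x} + k_{\alpha} \frac{\sigma}{\sqrt{n}}\right) = 0.95$$

Start by writing

$$P\left(\frac{\bar{x} - \mu}{\sigma / \sqrt{n}} \le k_{\alpha}\right) = 1 - \alpha \quad (\text{not } \alpha/2)$$

[Figure: standard normal density with area 1 – α below $k_{\alpha}$ and tail area α above it]

100(1-α)% lower confidence limit is

$$\langle \mu)_{1-\alpha} = \bar{x} - k_{\alpha} \frac{\sigma}{\sqrt{n}} \ (\sigma \text{ known}); \quad \bar{x} - t_{\alpha,\,n-1} \frac{s}{\sqrt{n}} \ (\sigma \text{ unknown})$$

100(1-α)% upper confidence limit is

$$(\mu \rangle_{1-\alpha} = \bar{x} + k_{\alpha} \frac{\sigma}{\sqrt{n}} \ (\sigma \text{ known}); \quad \bar{x} + t_{\alpha,\,n-1} \frac{s}{\sqrt{n}} \ (\sigma \text{ unknown})$$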

45

The End of the Session

46