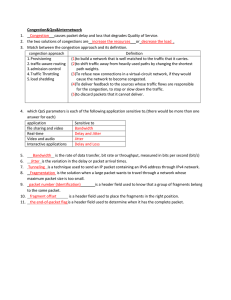

Survey on Traffic Management in Data Center Network: From Link Layer to Application Layer
