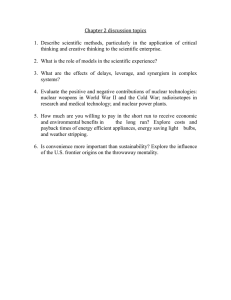

Spark Mechanics

Mechanics---1NC

Nuke war wouldn’t cause extinction---BUT, industrial civilization wouldn’t recover.

Lewis Dartnell 15. UK Space Agency research fellow at the University of Leicester, working in astrobiology and the search for microbial life on Mars. His latest book is The Knowledge: How to Rebuild Our World from Scratch. 04-13-15. "Could we reboot a modern civilisation without fossil fuels? – Lewis Dartnell." Aeon. https://aeon.co/essays/could-we-reboot-a-modern-civilisation-without-fossil-fuels

Imagine that the world as we know it ends tomorrow. There’s a global catastrophe: a pandemic virus, an asteroid strike, or perhaps a nuclear holocaust. The vast majority of the human race perishes. Our civilisation collapses. The post-apocalyptic survivors find themselves in a devastated world of decaying, deserted cities and roving gangs of bandits looting and taking by force. Bad as things sound, that’s not the end for humanity. We bounce back. Sooner or later, peace and order emerge again, just as they have time and again through history. Stable communities take shape. They begin the agonising process of rebuilding their technological base from scratch. But here’s the question: how far could such a society rebuild? Is there any chance, for instance, that a post-apocalyptic society could reboot a technological civilisation? Let’s make the basis of this thought experiment a little more specific. Today, we have already consumed the most easily drainable crude oil and, particularly in Britain, much of the shallowest, most readily mined deposits of coal. Fossil fuels are central to the organisation of modern industrial society, just as they were central to its development. Those, by the way, are distinct roles: even if we could somehow do without fossil fuels now (which we can’t, quite), it’s a different question whether we could have got to where we are without ever having had them. 
So, would a society starting over on a planet stripped of its fossil fuel deposits have the chance to progress through its own Industrial Revolution? Or to phrase it another way, what might have happened if, for whatever reason, the Earth had never acquired its extensive underground deposits of coal and oil in the first place? Would our progress necessarily have halted in the 18th century, in a pre-industrial state? It’s easy to underestimate our current dependence on fossil fuels. In everyday life, their most visible use is the petrol or diesel pumped into the vehicles that fill our roads, and the coal and natural gas which fire the power stations that electrify our modern lives. But we also rely on a range of different industrial materials, and in most cases, high temperatures are required to transform the stuff we dig out of the ground or harvest from the landscape into something useful. You can’t smelt metal, make glass, roast the ingredients of concrete, or synthesise artificial fertiliser without a lot of heat. It is fossil fuels – coal, gas and oil – that provide most of this thermal energy. In fact, the problem is even worse than that. Many of the chemicals required in bulk to run the modern world, from pesticides to plastics, derive from the diverse organic compounds in crude oil. Given the dwindling reserves of crude oil left in the world, it could be argued that the most wasteful use for this limited resource is to simply burn it. We should be carefully preserving what’s left for the vital repertoire of valuable organic compounds it offers. But my topic here is not what we should do now. Presumably everybody knows that we must transition to a low-carbon economy one way or another. No, I want to answer a question whose interest is (let’s hope) more theoretical. Is the emergence of a technologically advanced civilisation necessarily contingent on the easy availability of ancient energy? 
Is it possible to build an industrialised civilisation without fossil fuels? And the answer to that question is: maybe – but it would be extremely difficult. Let’s see how. We’ll start with a natural thought. Many of our alternative energy technologies are already highly developed. Solar panels, for example, represent a good option today, and are appearing more and more on the roofs of houses and businesses. It’s tempting to think that a rebooted society could simply pick up where we leave off. Why couldn’t our civilisation 2.0 just start with renewables? Well, it could, in a very limited way. If you find yourself among the survivors in a post-apocalyptic world, you could scavenge enough working solar panels to keep your lifestyle electrified for a good long while. Without moving parts, photovoltaic cells require little maintenance and are remarkably resilient. They do deteriorate over time, though, from moisture penetrating the casing and from sunlight itself degrading the high-purity silicon layers. The electricity generated by a solar panel declines by about 1 per cent every year so, after a few generations, all our hand-me-down solar panels will have degraded to the point of uselessness. Then what? New ones would be fiendishly difficult to create from scratch. Solar panels are made from thin slices of extremely pure silicon, and although the raw material is common sand, it must be processed and refined using complex and precise techniques – the same technological capabilities, more or less, that we need for modern semiconductor electronics components. These techniques took a long time to develop, and would presumably take a long time to recover. So photovoltaic solar power would not be within the capability of a society early in the industrialisation process. Perhaps, though, we were on the right track by starting with electrical power. Most of our renewable-energy technologies produce electricity. 
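How fast a 1 per cent annual decline actually eats into a scavenged panel's output can be checked with a short compound-decay calculation. This is a minimal sketch, assuming the decline compounds at exactly 1 per cent each year (real degradation rates vary by panel and climate); the function name `remaining_output` and the 25-year "generation" horizons are hypothetical illustrations, not figures from the card.

```python
def remaining_output(years: int, annual_decline: float = 0.01) -> float:
    """Fraction of a panel's original output left after `years`.

    Assumes a constant fractional decline that compounds every year
    (an assumption; the card only says "about 1 per cent every year").
    """
    if not 0.0 <= annual_decline < 1.0:
        raise ValueError("annual_decline must be in [0, 1)")
    return (1.0 - annual_decline) ** years


if __name__ == "__main__":
    # Hypothetical horizons: roughly one to four 25-year generations.
    for years in (25, 50, 75, 100):
        print(f"{years:>3} years: {remaining_output(years):.0%} of original output")
```

Under this assumption a panel is down to about 37 per cent of its original output after 100 years, so how soon it becomes "useless" depends on the minimum output its owners can tolerate.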
In our own historical development, it so happens that the core phenomena of electricity were discovered in the first half of the 1800s, well after the early development of steam engines. Heavy industry was already committed to combustion-based machinery, and electricity has largely assumed a subsidiary role in the organisation of our economies ever since. But could that sequence have run the other way? Is there some developmental requirement that thermal energy must come first? On the face of it, it’s not beyond the bounds of possibility that a progressing society could construct electrical generators and couple them to simple windmills and waterwheels, later progressing to wind turbines and hydroelectric dams. In a world without fossil fuels, one might envisage an electrified civilisation that largely bypasses combustion engines, building its transport infrastructure around electric trains and trams for long-distance and urban transport. I say ‘largely’. We couldn’t get round it altogether. While the electric motor could perhaps replace the coal-burning steam engine for mechanical applications, society, as we’ve already seen, also relies upon thermal energy to drive the essential chemical and physical transformations it needs. How could an industrialising society produce crucial building materials such as iron and steel, brick, mortar, cement and glass without resorting to deposits of coal? You can of course create heat from electricity. We already use electric ovens and kilns. Modern arc furnaces are used for producing cast iron or recycling steel. The problem isn’t so much that electricity can’t be used to heat things, but that for meaningful industrial activity you’ve got to generate prodigious amounts of it, which is challenging using only renewable energy sources such as wind and water. 
An alternative is to generate high temperatures using solar power directly. Rather than relying on photovoltaic panels, concentrated solar thermal farms use giant mirrors to focus the sun’s rays onto a small spot. The heat concentrated in this way can be exploited to drive certain chemical or industrial processes, or else to raise steam and drive a generator. Even so, it is difficult (for example) to produce the very high temperatures inside an iron-smelting blast furnace using such a system. What’s more, it goes without saying that the effectiveness of concentrated solar power depends strongly on the local climate. No, when it comes to generating the white heat demanded by modern industry, there are few good options but to burn stuff. But that doesn’t mean the stuff we burn necessarily has to be fossil fuels. Let’s take a quick detour into the pre-history of modern industry. Long before the adoption of coal, charcoal was widely used for smelting metals. In many respects it is superior: charcoal burns hotter than coal and contains far fewer impurities. In fact, coal’s impurities were a major delaying factor on the Industrial Revolution. Released during combustion, they can taint the product being heated. During smelting, sulphur contaminants can soak into the molten iron, making the metal brittle and unsafe to use. It took a long time to work out how to treat coal to make it useful for many industrial applications. And, in the meantime, charcoal worked perfectly well. And then, well, we stopped using it. In retrospect, that’s a pity. When it comes from a sustainable source, charcoal burning is essentially carbon-neutral, because it doesn’t release any new carbon into the atmosphere – not that this would have been a consideration for the early industrialists. But charcoal-based industry didn’t die out altogether. In fact, it survived to flourish in Brazil. 
Because it has substantial iron deposits but few coalmines, Brazil is the largest charcoal producer in the world and the ninth biggest steel producer. We aren’t talking about a cottage industry here, and this makes Brazil a very encouraging example for our thought experiment. The trees used in Brazil’s charcoal industry are mainly fast-growing eucalyptus, cultivated specifically for the purpose. The traditional method for creating charcoal is to pile chopped staves of air-dried timber into a great dome-shaped mound and then cover it with turf or soil to restrict airflow as the wood smoulders. The Brazilian enterprise has scaled up this traditional craft to an industrial operation. Dried timber is stacked into squat, cylindrical kilns, built of brick or masonry and arranged in long lines so that they can be easily filled and unloaded in sequence. The largest sites can sport hundreds of such kilns. Once filled, their entrances are sealed and a fire is lit from the top. The skill in charcoal production is to allow just enough air into the interior of the kiln. There must be enough combustion heat to drive out moisture and volatiles and to pyrolyse the wood, but not so much that you are left with nothing but a pile of ashes. The kiln attendant monitors the state of the burn by carefully watching the smoke seeping out of the top, opening air holes or sealing with clay as necessary to regulate the process. Good things come to those who wait, and this wood pyrolysis process can take up to a week of carefully controlled smouldering. The same basic method has been used for millennia. However, the ends to which the fuel is put are distinctly modern. Brazilian charcoal is trucked out of the forests to the country’s blast furnaces where it is used to transform ore into pig iron. This pig iron is the basic ingredient of modern mass-produced steel. 
The Brazilian product is exported to countries such as China and the US where it becomes cars and trucks, sinks, bathtubs, and kitchen appliances. Around two-thirds of Brazilian charcoal comes from sustainable plantations, and so this modern-day practice has been dubbed ‘green steel’. Sadly, the final third is supplied by the non-sustainable felling of primary forest. Even so, the Brazilian case does provide an example of how the raw materials of modern civilisation can be supplied without reliance on fossil fuels. Another, related option might be wood gasification. The use of wood to provide heat is as old as mankind, and yet simply burning timber only uses about a third of its energy. The rest is lost when gases and vapours released by the burning process blow away in the wind. Under the right conditions, even smoke is combustible. We don’t want to waste it. Better than simple burning, then, is to drive the thermal breakdown of the wood and collect the gases. You can see the basic principle at work for yourself just by lighting a match. The luminous flame isn’t actually touching the matchwood: it dances above, with a clear gap in between. The flame actually feeds on the hot gases given off as the wood breaks down in the heat, and the gases combust only once they mix with oxygen from the air. Matches are fascinating when you look at them closely. To release these gases in a controlled way, bake some timber in a closed container. Oxygen is restricted so that the wood doesn’t simply catch fire. Its complex molecules decompose through a process known as pyrolysis, and then the hot carbonised lumps of charcoal at the bottom of the container react with the breakdown products to produce flammable gases such as hydrogen and carbon monoxide. 
The resultant ‘producer gas’ is a versatile fuel: it can be stored or piped for use in heating or street lights, and is also suitable for use in complex machinery such as the internal combustion engine. More than a million gasifier-powered cars across the world kept civilian transport running during the oil shortages of the Second World War. In occupied Denmark, 95 per cent of all tractors, trucks and fishing boats were powered by wood-gas generators. The energy content of about 3 kg of wood (depending on its dryness and density) is equivalent to a litre of petrol, and the fuel consumption of a gasifier-powered car is given in miles per kilogram of wood rather than miles per gallon. Wartime gasifier cars could achieve about 1.5 miles per kilogram. Today’s designs improve upon this. But you can do a lot more with wood gas than just keep your vehicle on the road. It turns out to be suitable for any of the manufacturing processes needing heat that we looked at before, such as kilns for lime, cement or bricks. Wood gas generator units could easily power agricultural or industrial equipment, or pumps. Sweden and Denmark are world leaders in their use of sustainable forests and agricultural waste for turning the steam turbines in power stations. And once the steam has been used in their ‘Combined Heat and Power’ (CHP) electricity plants, it is piped to the surrounding towns and industries to heat them, allowing such CHP stations to approach 90 per cent energy efficiency. Such plants suggest a marvellous vision of industry wholly weaned from its dependency on fossil fuel. Is that our solution, then? Could our rebooting society run on wood, supplemented with electricity from renewable sources? Maybe so, if the population was fairly small. But here’s the catch. These options all presuppose that our survivors are able to construct efficient steam turbines, CHP stations and internal combustion engines. 
We know how to do all that, of course – but in the event of a civilisational collapse, who is to say that the knowledge won’t be lost? And if it is, what are the chances that our descendants could reconstruct it? In our own history, the first successful application of steam engines was in pumping out coal mines. This was a setting in which fuel was already abundant, so it didn’t matter that the first, primitive designs were terribly inefficient. The increased output of coal from the mines was used to first smelt and then forge more iron. Iron components were used to construct further steam engines, which were in turn used to pump mines or drive the blast furnaces at iron foundries. And of course, steam engines were themselves employed at machine shops to construct yet more steam engines. It was only once steam engines were being built and operated that subsequent engineers were able to devise ways to increase their efficiency and shrink fuel demands. They found ways to reduce their size and weight, adapting them for applications in transport or factory machinery. In other words, there was a positive feedback loop at the very core of the industrial revolution: the production of coal, iron and steam engines were all mutually supportive. In a world without readily mined coal, would there ever be the opportunity to test profligate prototypes of steam engines, even if they could mature and become more efficient over time? How feasible is it that a society could attain a sufficient understanding of thermodynamics, metallurgy and mechanics to make the precisely interacting components of an internal combustion engine, without first cutting its teeth on much simpler external combustion engines – the separate boiler and cylinder-piston of steam engines? It took a lot of energy to develop our technologies to their present heights, and presumably it would take a lot of energy to do it again. Fossil fuels are out. That means our future society will need an awful lot of timber. 
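The card's wartime gasifier figures (about 3 kg of wood per litre of petrol, and about 1.5 miles per kilogram of wood) give a sense of how much timber even transport alone would consume. This is a rough sketch, assuming both figures hold exactly (the card gives them only as approximations that vary with wood dryness and density) and using the standard US and imperial gallon sizes; the constant and function names are hypothetical.

```python
# Approximate figures from the card (both vary with wood dryness and density).
KG_WOOD_PER_LITRE_PETROL = 3.0
MILES_PER_KG_WOOD = 1.5

# Standard gallon definitions, in litres.
LITRES_PER_US_GALLON = 3.785411784
LITRES_PER_IMPERIAL_GALLON = 4.54609


def wood_kg_per_gallon(litres_per_gallon: float) -> float:
    """Kilograms of wood holding the same energy as one gallon of petrol."""
    return KG_WOOD_PER_LITRE_PETROL * litres_per_gallon


def equivalent_mpg(litres_per_gallon: float) -> float:
    """Miles a wartime gasifier car covers on one gallon's worth of wood."""
    return wood_kg_per_gallon(litres_per_gallon) * MILES_PER_KG_WOOD


def wood_needed_kg(miles: float) -> float:
    """Kilograms of wood needed to drive `miles` at wartime efficiency."""
    return miles / MILES_PER_KG_WOOD


if __name__ == "__main__":
    print(f"US gallon:       {equivalent_mpg(LITRES_PER_US_GALLON):.1f} mpg-equivalent")
    print(f"Imperial gallon: {equivalent_mpg(LITRES_PER_IMPERIAL_GALLON):.1f} mpg-equivalent")
    print(f"100 miles needs about {wood_needed_kg(100):.0f} kg of wood")
```

On these assumptions a wartime gasifier car managed roughly 17 miles per US gallon-equivalent (about 20 per imperial gallon), and a 100-mile trip would burn about 67 kg of wood.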
In a temperate climate such as the UK’s, an acre of broadleaf trees produces about four to five tonnes of biomass fuel every year. If you cultivated fast-growing kinds such as willow or miscanthus grass, you could quadruple that. The trick to maximising timber production is to employ coppicing – cultivating trees such as ash or willow that resprout from their own stump, becoming ready for harvest again in five to 15 years. This way you can ensure a sustained supply of timber and not face an energy crisis once you’ve deforested your surroundings. But here’s the thing: coppicing was already a well-developed technique in preindustrial Britain. It couldn’t meet all of the energy requirements of the burgeoning society. The central problem is that woodland, even when it is well-managed, competes with other land uses, principally agriculture. The double-whammy of development is that, as a society’s population grows, it requires more farmland to provide enough food and also greater timber production for energy. The two needs compete for largely the same land areas. We know how this played out in our own past. From the mid-16th century, Britain responded to these factors by increasing the exploitation of its coal fields – essentially harvesting the energy of ancient forests beneath the ground without compromising its agricultural output. The same energy provided by one hectare of coppice for a year is provided by about five to 10 tonnes of coal, and it can be dug out of the ground an awful lot quicker than waiting for the woodland to regrow. It is this limitation in the supply of thermal energy that would pose the biggest problem to a society trying to industrialise without easy access to fossil fuels. This is true in our post-apocalyptic scenario, and it would be equally true in any counterfactual world that never developed fossil fuels for whatever reason. 
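The land-competition argument can be made concrete with the card's own ratio: a year's growth on one hectare of coppice matches roughly 5 to 10 tonnes of coal. This is a minimal sketch, assuming that ratio scales linearly to any area and converting the card's per-acre broadleaf yield with 1 hectare = 2.47105 acres; the function names and the 1,000-tonne example are hypothetical.

```python
ACRES_PER_HECTARE = 2.47105

# Card figures: coal-equivalent of one hectare of coppice per year (tonnes).
COAL_T_PER_HA_LOW = 5.0
COAL_T_PER_HA_HIGH = 10.0


def coppice_hectares_for_coal(coal_tonnes_per_year: float) -> tuple[float, float]:
    """Range of coppice area (ha) needed to replace an annual coal supply.

    Returns (best case, worst case): the smaller area assumes each hectare
    matches 10 t of coal a year, the larger assumes only 5 t.
    """
    return (coal_tonnes_per_year / COAL_T_PER_HA_HIGH,
            coal_tonnes_per_year / COAL_T_PER_HA_LOW)


def per_acre_to_per_hectare(tonnes_per_acre: float) -> float:
    """Convert a yield in tonnes/acre/year to tonnes/hectare/year."""
    return tonnes_per_acre * ACRES_PER_HECTARE


if __name__ == "__main__":
    low, high = coppice_hectares_for_coal(1_000)
    print(f"1,000 t of coal a year needs roughly {low:.0f}-{high:.0f} ha of coppice")
    # The card's broadleaf yield of 4-5 t/acre, expressed per hectare.
    print(f"Broadleaf: {per_acre_to_per_hectare(4):.1f}-{per_acre_to_per_hectare(5):.1f} t/ha")
```

Every one of those 100 to 200 hectares is land not growing food, which is the "double-whammy" the card describes.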
For a society to stand any chance of industrialising under such conditions, it would have to focus its efforts in certain, very favourable natural environments: not the coal-island of 18th-century Britain, but perhaps areas of Scandinavia or Canada that combine fast-flowing streams for hydroelectric power and large areas of forest that can be harvested sustainably for thermal energy. Even so, an industrial revolution without coal would be, at a minimum, very difficult. Today, use of fossil fuels is actually growing, which is worrying for a number of reasons too familiar to rehearse here. Steps towards a low-carbon economy are vital. But we should also recognise how pivotal those accumulated reservoirs of thermal energy were in getting us to where we are. Maybe we could have made it the hard way. A slow-burn progression through the stages of mechanisation, supported by a combination of renewable electricity and sustainably grown biomass, might be possible after all. Then again, it might not. We’d better hope we can secure the future of our own civilisation, because we might have scuppered the chances of any society to follow in our wake.

Can’t rebuild industrial civilization.

John Jacobi 17. Leads an environmentalist research institute and collective, citing Fred Hoyle, British astronomer, formulated the theory of stellar nucleosynthesis, coined the term “big bang,” recipient of the Gold Medal of the Royal Astronomical Society, professor at the Institute of Astronomy, Cambridge University. 05-27-17. “Industrial Civilization Could Not Be Rebuilt.” The Wild Will Project. https://www.wildwill.net/blog/2017/05/27/industrial-civilization-not-rebuilt/

A suggestion, for the sake of thought: If industrial civilization collapsed, it probably could not be rebuilt. Civilization would exist again, of course, but industry appears to be a one-time experiment. 
The astronomer Fred Hoyle, exaggerating slightly, writes: It has often been said that, if the human species fails to make a go of it here on Earth, some other species will take over the running. In the sense of developing high intelligence this is not correct. We have, or soon will have, exhausted the necessary physical prerequisites so far as this planet is concerned. With coal gone, oil gone, high-grade metallic ores gone, no species however competent can make the long climb from primitive conditions to high-level technology. This is a one-shot affair. If we fail, this planetary system fails so far as intelligence is concerned. The same will be true of other planetary systems. On each of them there will be one chance, and one chance only. Hoyle overstates all the limits we actually have to worry about, but there are enough to affirm his belief that industry is a “one-shot affair.” In other words, if industry collapsed then no matter how quickly scientific knowledge allows societies to progress, technical development will hit a wall because the builders will not have the needed materials. For example, much of the world’s land is not arable, and some of the land in use today is only productive because of industrial technics developed during the agricultural revolution in the 60s, technics heavily dependent on oil. Without the systems that sustain industrial agriculture much current farm land could not be farmed; agricultural civilizations cannot exist there, at least until the soil replenishes, if it replenishes. And some resources required for industrial progress, like coal, simply are not feasibly accessible anymore. Tainter writes: . . . major jumps in population, at around A.D. 1300, 1600, and in the late eighteenth century, each led to intensification in agriculture and industry. 
As the land in the late Middle Ages was increasingly deforested to provide fuel and agricultural space for a growing population, basic heating, cooking, and manufacturing needs could no longer be met by burning wood. A shift to reliance on coal began, gradually and with apparent reluctance. Coal was definitely a fuel source of secondary desirability, being more costly to obtain and distribute than wood, as well as being dirty and polluting. Coal was more restricted in its spatial distribution than wood, so that a whole new, costly distribution system had to be developed. Mining of coal from the ground was more costly than obtaining a quantity of wood equivalent in heating value, and became even more costly as the most accessible reserves of this fuel were depleted. Mines had to be sunk ever deeper, until groundwater flooding became a serious problem. Today, most easily accessible natural coal reserves are completely depleted. Thus, societies in the wake of our imagined collapse would not be able to develop fast enough to reach the underground coal. As a result of these limits, rebuilding industry would take at least thousands of years — it took 10,000 years the first time around. By the time a civilization reached the point where it could do something about industrial scientific knowledge it probably would not have the knowledge anymore. It would have to develop its sciences and technologies on its own, resulting in patterns of development that would probably look similar to historical patterns. Technology today depends on levels of complexity that must proceed in chronological stages. Solar panels, for example, rely on transportation infrastructure, mining, and a regulated division of labor. And historically the process of developing into a global civilization includes numerous instances of technical regression. The natives of Tasmania, for example, went from a maritime society to one that didn’t fish, build boats, or make bows and arrows. 
Rebuilding civilization would also be a bad idea. Most, who are exploited by rather than benefit from industry, would probably not view a rebuilding project as desirable. Even today, though citizens of first-world nations live physically comfortable lives, their lives are sustained by the worse off lives of the rest of the world. “Civilization . . . has operated two ways,” Paine writes, “to make one part of society more affluent, and the other more wretched, than would have been the lot of either in a natural state.” Consider the case of two societies in New Zealand, the Maori and the Moriori. Both are now believed to have originated out of the same mainland society. Most stayed and became the Maori we know, and some who became the Moriori people settled on the Chatham Islands in the 16th century. Largely due to a chief named Nunuku-whenua, the Moriori had a strict tradition of solving inter-tribal conflict peacefully and advocating a variant of passive resistance; war, cannibalism, and killing were completely outlawed. They also renounced their parent society’s agricultural mode of subsistence, relying heavily on hunting and gathering, and they controlled their population growth by castrating some male infants, so their impact on the non-human environment around them was minimal. In the meantime, the Maori continued to live agriculturally and developed into a populated, complex, hierarchical, and violent society. Eventually an Australian seal-hunting ship informed the Maori of the Moriori’s existence, and the Maori sailed to the Chathams to explore: . . . over the course of the next few days, they killed hundreds of Moriori, cooked and ate many of the bodies, and enslaved all the others, killing most of them too over the next few years as it suited their whim. A Moriori survivor recalled, “[The Maori] commenced to kill us like sheep . . . [We] were terrified, fled to the bush, concealed ourselves in holes underground, and in any place to escape our enemies. 
It was of no avail; we were discovered and eaten – men, women, and children indiscriminately.” A Maori conqueror explains, “We took possession . . . in accordance with our customs and we caught all the people. Not one escaped. Some ran away from us, these we killed, and others we killed – but what of that? It was in accordance with our custom.” Furthermore, we can deduce from the ubiquitous slavery in all the so-called “great civilizations” like Rome or Egypt that any attempt to rebuild a similar civilization will involve slavery. And to rebuild industry, something similar to colonization and the Trans-Atlantic Slave Trade would probably have to occur once again. After all, global chattel slavery enabled the industrial revolution by financing it, extracting resources to be accumulated at sites of production, and exporting products through infrastructure that slavery helped sustain. So, if industrial society collapsed, who would be doing the rebuilding? Not anyone most people like. It is hard to get a man to willingly change his traditional way of life; even harder when his new life is going into mines. And though history demonstrates that acts like those of the Maori or slave traders are not beyond man’s will or ability, certainly most in industrial society today would not advocate going through the phases required to reach the industrial stage of development.

Mechanics---Extinction First

Extinction threatens 500 trillion lives---you should vote neg if we win a 1/55-thousand risk of our impacts. Math (if they win, almost everyone dies): 9 billion/500 trillion = .000018 = .0018% = 1/55,555 < 1/55k

Seth D. Baum & Anthony M. Barrett 18. Global Catastrophic Risk Institute. 2018. “Global Catastrophes: The Most Extreme Risks.” Risk in Extreme Environments: Preparing, Avoiding, Mitigating, and Managing, edited by Vicki Bier, Routledge, pp. 174–184.

A common theme across all these treatments of GCR is that some catastrophes are vastly more important than others. 
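The tag's break-even figure comes from an expected-value comparison: extinction outweighs a near-total loss of the present population once the probability of extinction multiplied by 500 trillion future lives exceeds the roughly 9 billion lives at stake otherwise, so the break-even probability is 9 billion divided by 500 trillion. This is a sketch, assuming (as the tag does) a present population of 9 billion, Sagan's 500 trillion figure, and equal weight for every life; the function names are hypothetical.

```python
FUTURE_LIVES = 500e12   # Sagan's estimate, as cited by Baum & Barrett
PRESENT_LIVES = 9e9     # the tag's rounded figure for "almost everyone"


def break_even_probability(future: float = FUTURE_LIVES,
                           present: float = PRESENT_LIVES) -> float:
    """Extinction probability at which expected future-life losses
    equal the loss of the present population."""
    return present / future


def extinction_outweighs(p_extinction: float) -> bool:
    """True if p * future lives exceeds the present-population loss."""
    return p_extinction * FUTURE_LIVES > PRESENT_LIVES


if __name__ == "__main__":
    p = break_even_probability()
    print(f"break-even probability: {p:.6f} (about 1 in {FUTURE_LIVES / PRESENT_LIVES:,.0f})")
    print("1/55,000 risk outweighs:", extinction_outweighs(1 / 55_000))
```

Because 1/55,000 is slightly larger than the break-even value of about 1/55,556, winning a 1/55k risk is enough under these assumptions.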
Carl Sagan was perhaps the first to recognize this, in his commentary on nuclear winter (Sagan 1983). Without nuclear winter, a global nuclear war might kill several hundred million people. This is obviously a major catastrophe, but humanity would presumably carry on. However, with nuclear winter, per Sagan, humanity could go extinct. The loss would be not just an additional four billion or so deaths, but the loss of all future generations. To paraphrase Sagan, the loss would be billions and billions of lives, or even more. Sagan estimated 500 trillion lives, assuming humanity would continue for ten million more years, which he cited as typical for a successful species. Sagan’s 500 trillion number may even be an underestimate. The analysis here takes an adventurous turn, hinging on the evolution of the human species and the long-term fate of the universe. On these long time scales, the descendants of contemporary humans may no longer be recognizably “human”. The issue then is whether the descendants are still worth caring about, whatever they are. If they are, then it begs the question of how many of them there will be. Barring major global catastrophe, Earth will remain habitable for about one billion more years until the Sun gets too warm and large. The rest of the Solar System, Milky Way galaxy, universe, and (if it exists) the multiverse will remain habitable for a lot longer than that (Adams and Laughlin 1997), should our descendants gain the capacity to migrate there. An open question in astronomy is whether it is possible for the descendants of humanity to continue living for an infinite length of time or instead merely an astronomically large but finite length of time (see e.g. Ćirković 2002; Kaku 2005). Either way, the stakes with global catastrophes could be much larger than the loss of 500 trillion lives.

Universe Destruction First

Even a small chance of Universe destruction outweighs certain human extinction. Earth is cosmically insignificant.

Dr. Nick Hughes 18, Postdoctoral Research Fellow at University College Dublin, PhD in Philosophy from University of St Andrews & University of Oslo, and Dr. Guy Kahane, Professor of Philosophy at the University of Oxford, D. Phil. in Philosophy from Oxford University, “Our Cosmic Insignificance”, 7-6, http://www.unariunwisdom.com/our-cosmic-insignificance/

Humanity occupies a very small place in an unfathomably vast Universe. Travelling at the speed of light – 671 million miles per hour – it would take us 100,000 years to cross the Milky Way. But we still wouldn’t have gone very far. Our modest Milky Way galaxy contains 100–400 billion stars. This isn’t very much: according to the latest calculations, the observable universe contains around 300 sextillion stars. By recent estimates, our Milky Way galaxy is just one of 2 trillion galaxies in the observable Universe, and the region of space that they occupy spans at least 90 billion light-years. If you imagine Earth shrunk down to the size of a single grain of sand, and you imagine the size of that grain of sand relative to the entirety of the Sahara Desert, you are still nowhere near to comprehending how infinitesimally small a position we occupy in space. The American astronomer Carl Sagan put the point vividly in 1994 when discussing the famous ‘Pale Blue Dot’ photograph taken by Voyager 1. Our planet, he said, is nothing more than ‘a mote of dust suspended in a sunbeam’. Stephen Hawking delivers the news more bluntly. We are, he says, “just a chemical scum on a moderate-sized planet, orbiting round a very average star in the outer suburb of one among a hundred billion galaxies.” And that’s just the spatial dimension. The observable Universe has existed for around 13.8 billion years. If we shrink that span of time down to a single year, with the Big Bang occurring at midnight on 1 January, the first Homo sapiens made an appearance at 22:24 on 31 December. 
It’s now 23:59:59, as it has been for the past 438 years, and at the rate we’re going it’s entirely possible that we’ll be gone before midnight strikes again. The Universe, on the other hand, might well continue existing forever, for all we know. Sagan could have added, then, that our time on this mote of dust will amount to nothing more than a blip. In the grand scheme of things we are very, very small. For Sagan, the Pale Blue Dot underscores our responsibility to treat one another with kindness and compassion. But reflection on the vastness of the Universe and our physical and temporal smallness within it often takes on an altogether darker hue. If the Universe is so large, and we are so small and so fleeting, doesn’t it follow that we are utterly insignificant and inconsequential? This thought can be a spur to nihilism. If we are so insignificant, if our existence is so trivial, how could anything we do or are – our successes and failures, our anxiety and sadness and joy, all our busy ambition and toil and endeavour, all that makes up the material of our lives – how could any of that possibly matter? To think of one’s place in the cosmos, as the American philosopher Susan Wolf puts it in ‘The Meanings of Lives’ (2007), is ‘to recognise the possibility of a perspective … from which one’s life is merely gratuitous’. The sense that we are somehow insignificant seems to be widely felt. The American author John Updike expressed it in 1985 when he wrote of modern science that: We shrink from what it has to tell us of our perilous and insignificant place in the cosmos … our century’s revelations of unthinkable largeness and unimaginable smallness, of abysmal stretches of geological time when we were nothing, of supernumerary galaxies … of a kind of mad mathematical violence at the heart of the matter have scorched us deeper than we know. 
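The scale figures in the Hughes and Kahane card can be checked with a few lines of arithmetic. This is a minimal Python sketch; the exact SI speed of light, the 1,609.344 m international mile and the 365-day calendar year are assumptions supplied here rather than figures from the card, and the function names are hypothetical.

```python
# Hypothetical helpers for checking the card's scale figures.
# Assumed constants (not from the card): exact SI speed of light,
# international mile, and a 365-day calendar year.
SPEED_OF_LIGHT_M_PER_S = 299_792_458
METRES_PER_MILE = 1609.344
UNIVERSE_AGE_YEARS = 13.8e9            # age quoted in the card
SECONDS_PER_CALENDAR_YEAR = 365 * 24 * 3600
SECONDS_PER_DAY = 24 * 3600


def light_speed_mph() -> float:
    """Speed of light converted from metres per second to miles per hour."""
    return SPEED_OF_LIGHT_M_PER_S * 3600 / METRES_PER_MILE


def years_per_calendar_second(age_years: float = UNIVERSE_AGE_YEARS) -> float:
    """Real years represented by one second when the universe's age is squeezed into one year."""
    return age_years / SECONDS_PER_CALENDAR_YEAR


def years_before_midnight(hours: int, minutes: int, seconds: int = 0) -> float:
    """Real years before the present for a clock time on 31 December of the compressed calendar."""
    elapsed = hours * 3600 + minutes * 60 + seconds
    return (SECONDS_PER_DAY - elapsed) * years_per_calendar_second()


if __name__ == "__main__":
    print(f"c is about {light_speed_mph() / 1e6:.0f} million mph")
    print(f"one calendar second is about {years_per_calendar_second():.0f} years")
    print(f"22:24 on 31 December is about {years_before_midnight(22, 24) / 1e6:.1f} million years ago")
```

Under these assumptions the conversion gives about 671 million mph and about 438 real years per calendar second, matching the card. By the same arithmetic, a clock reading of 22:24 on 31 December corresponds to roughly 2.5 million years before the present.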
In a similar vein, the French philosopher Blaise Pascal wrote in Pensées (1669): When I consider the short duration of my life, swallowed up in an eternity before and after, the little space I fill engulfed in the infinite immensity of spaces whereof I know nothing, and which know nothing of me, I am terrified. The eternal silence of these infinite spaces frightens me. Commenting on this passage in Between Man and Man (1947), the Austrian-Israeli philosopher Martin Buber said that Pascal had experienced the ‘uncanniness of the heavens’, and thereby came to know ‘man’s limitation, his inadequacy, the casualness of his existence’. In the film Monty Python’s The Meaning of Life (1983), John Cleese and Eric Idle conspire to persuade a character, played by Terry Gilliam, to give up her liver for donation. Understandably reluctant, she is eventually won over by a song that sharply details just how comically inconsequential she is in the cosmic frame. Even the relatively upbeat Sagan wasn’t, in fact, immune to the pessimistic point of view. As well as viewing it as a lesson in the need for collective goodwill, he also argued that the Pale Blue Dot challenges ‘our posturings, our imagined self-importance, and the delusion that we have some privileged position in the Universe’. When we reflect on the vastness of the universe, our humdrum cosmic location, and the inevitable future demise of humanity, our lives can seem utterly insignificant. As we complacently go about our little Earthly affairs, we barely notice the black backdrop of the night sky. Even when we do, we usually see the starry skies as no more than a pleasant twinkling decoration. 
This sense of cosmic insignificance is not uncommon; one of Joseph Conrad’s characters describes one of those dewy, clear, starry nights, oppressing our spirit, crushing our pride, by the brilliant evidence of the awful loneliness, of the hopeless obscure insignificance of our globe lost in the splendid revelation of a glittering, soulless universe. I hate such skies. The young Bertrand Russell, a close friend of Conrad, bitterly referred to the Earth as “the petty planet on which our bodies impotently crawl.” Russell wrote that: Brief and powerless is Man’s life; on him and all his race the slow, sure doom falls pitiless and dark. Blind to good and evil, reckless of destruction, omnipotent matter rolls on its relentless way… This is why Russell thought that, in the absence of God, we must build our lives on “a foundation of unyielding despair.” When we consider ourselves as a mere dot in a vast universe, when we consider ourselves in light of everything there is, nothing human seems to matter. Even the worst human tragedy may seem to deserve no cosmic concern. After all, we are fighting for attention with an incredibly vast totality. How could this tiny speck of dust deserve even a fraction of attention, from that universal point of view? This is the image that is evoked when, for example, Simon Blackburn writes that “to a witness with the whole of space and time in its view, nothing on the human scale will have meaning”. Such quotations could be easily multiplied – we find similar remarks, for example, in John Donne, Voltaire, Schopenhauer, Byron, Tolstoy, Chesterton, Camus, and, in recent philosophy, in Thomas Nagel, Harry Frankfurt, and Ronald Dworkin.
The bigger the picture we survey, the smaller the part of any point within it, and the less attention it can get… When we try to imagine a viewpoint encompassing the entire universe, humanity and its concerns seem to get completely swallowed up in the void. Over the centuries, many have thought it absurd to think that we are the only ones. For example, Anaxagoras, Epicurus, Lucretius, and, later, Giordano Bruno, Huygens and Kepler were all confident that the universe is teeming with life. Kant was willing to bet everything he had on the existence of intelligent life on other planets. And we now know that there is a vast multitude of Earth-like planets even in our own little galaxy. The experience of cosmic insignificance is often blamed on the rise of modern science, and the decline of religious belief. Many think that things started to take a turn for the worse with Copernicus. Nietzsche, for example, laments ‘the nihilistic consequences of contemporary science’, and adds that: Since Copernicus it seems that man has found himself on a descending slope – he always rolls further and further away from his point of departure toward… – where is that? Towards nothingness? Freud later wrote about a series of harsh blows to our self-esteem delivered by science. The first blow was delivered by Copernicus, when we learned, as Freud puts it, that “our earth was not the centre of the universe but only a tiny fragment of a cosmic system of scarcely imaginable vastness…” It is still common to refer, in a disappointed tone, to the discovery that we aren’t at the centre of God’s creation, as we had long thought, but located, as Carl Sagan puts it, “in some forgotten corner”.
We live, Sagan writes, “on a mote of dust circling a humdrum star in the remotest corner of an obscure galaxy.”
Alien lives should be valued as equal to humans---anything else is arbitrary and a logic of devaluation that is at the root of violence. Joe Packer 7, then MA in Communication from Wake Forest University, now PhD in Communication from the University of Pittsburgh and Professor of Communication at Central Michigan University, Alien Life in Search of Acknowledgment, p. 62-63 Once we hold alien interests as equal to our own, we can begin to revaluate areas previously believed to hold no relevance to life beyond this planet. A diverse group of scholars including Richard Posner, Senior Lecturer in Law at the University of Chicago, Nick Bostrom, philosophy professor at Oxford University, John Leslie, philosophy professor at Guelph University, and Martin Rees, Britain’s Astronomer Royal, have written on the emerging technologies that threaten life beyond the planet Earth. Particle accelerator labs are colliding matter together, reaching energies that have not been seen since the Big Bang. These experiments threaten a phase transition that would create a bubble of altered space that would expand at the speed of light killing all life in its path. Nanotechnology and other machines may soon reach the ability to self-replicate. A mistake in design or programming could unleash an endless quantity of machines converting all matter in the universe into copies of themselves. Despite detailing the potential of these technologies to destroy the entire universe, Posner, Bostrom, Leslie, and Rees’s only mention of alien life in their works is in reference to the threat aliens pose to humanity. The rhetorical construction of otherness only in terms of the threats it poses, but never in terms of the threat one poses to it, has been at the center of humanity’s history of genocide, colonization, and environmental destruction.
Although humanity certainly has its own interests in reducing the threat of these technologies, evaluating them without taking into account the danger they pose to alien life is neither appropriate nor just. It is not appropriate because framing the issue only in terms of human interests will result in priorities designed to minimize the risks and maximize the benefits to humanity, not all life. Even if humanity dealt with the threats effectively without referencing their obligation to aliens, Posner, Bostrom, Leslie, and Rees’s rhetoric would not be “just,” because it arbitrarily declares other life forms unworthy of consideration. A framework of acknowledgement would allow humanity to address the risks of these new technologies, while being cognizant of humanity’s obligations to other life within the universe. Applying the lens of acknowledgment to the issue of existential threats moves the problem from one of self-destruction to universal genocide. This may be the most dramatic example of how refusing to extend acknowledgment to potential alien life can mask humanity’s obligations to life beyond this planet.
AI
AI Turn
Nuclear war prevents AI research. Seth Baum & Anthony Barrett 18. Global Catastrophic Risk Institute. 2018. “A Model for the Impacts of Nuclear War.” SSRN Electronic Journal. Crossref, doi:10.2139/ssrn.3155983. Another link between nuclear war and other major catastrophes comes from the potential for general malfunction of society shifting work on risky technologies such as artificial intelligence, molecular nanotechnology, and biotechnology. The simplest effect would be for the general malfunction of society to halt work on these technologies. In most cases, this would reduce the risk of harm caused by those technologies.
Rapid advances in AI are coming quickly. Dr. Jolene Creighton 18, Editor-in-Chief at Futurism, Co-Founder of Quarks to Quasars, Ph.D.
in Digital Media & Discourse Analysis from University of Southern Mississippi, MA from SUNY Brockport, BA in English Language and Literature/Letters from Keuka College, “The “Father of Artificial Intelligence” Says Singularity Is 30 Years Away”, Futurism, 2-14, https://futurism.com/father-artificial-intelligencesingularity-decades-away/ You’ve probably been told that the singularity is coming. It is that long-awaited point in time — likely, a point in our very near future — when advances in artificial intelligence lead to the creation of a machine (a technological form of life?) smarter than humans. If Ray Kurzweil is to be believed, the singularity will happen in 2045. If we throw our hats in with Louis Rosenberg, then the day will be arriving a little sooner, likely sometime in 2030. MIT’s Patrick Winston would have you believe that it will likely be a little closer to Kurzweil’s prediction, though he puts the date at 2040, specifically. But what difference does it make? We are talking about a difference of just 15 years. The real question is, is the singularity actually on its way? At the World Government Summit in Dubai, I spoke with Jürgen Schmidhuber, who is the Co-Founder and Chief Scientist at AI company NNAISENSE, Director of the Swiss AI lab IDSIA, and heralded by some as the “father of artificial intelligence” to find out. He is confident that the singularity will happen, and rather soon. Schmidhuber says it “is just 30 years away, if the trend doesn’t break, and there will be rather cheap computational devices that have as many connections as your brain but are much faster,” he said. And that’s just the beginning. Imagine a cheap little device that isn’t just smarter than humans — it can compute as much data as all human brains taken together. Well, this may become a reality just 50 years from now. “And there will be many, many of those. There is no doubt in my mind that AIs are going to become super smart,” Schmidhuber says. 
Today, the world faces a number of hugely complex challenges, from global warming to the refugee crisis. These are all problems that, over time, will affect everyone on the planet, deeply and irreversibly. But the real seismic change, one that will influence the way we respond to each one of those crises, will happen elsewhere. “All of this complexity pales against this truly important development of our century, which is much more than just another industrial revolution,” Schmidhuber says. Of course, the development that he is referring to is the development of these artificial superintelligences, a thing that Schmidhuber says “is something that transcends humankind and life itself.” When biological life emerged from chemical evolution, 3.5 billion years ago, a random combination of simple, lifeless elements kickstarted the explosion of species populating the planet today. Something of comparable magnitude may be about to happen. “Now the universe is making a similar step forward from lower complexity to higher complexity,” Schmidhuber beams. “And it’s going to be awesome.” Like with biological life, there will be an element of randomness to that crucial leap between a powerful machine and artificial life. And while we may not be able to predict exactly when, all evidence points to the fact that the singularity will happen.
That obliterates the Universe. Alan Rominger 16, PhD Candidate in Nuclear Engineering at North Carolina State University, Software Engineer at Red Hat, Former Nuclear Engineering Science Laboratory Synthesis Intern at Oak Ridge National Laboratory, BS in Nuclear Engineering from North Carolina State University, “The Extreme Version of the Technological Singularity”, Medium 11-6, https://medium.com/@AlanSE/the-extremeversion-of-the-technological-singularity-75608898eae5 In a fundamentally accurate interpretation of the singularity, there is no such thing as post-singularity. It is this point that I would like to re-focus attention back to. People who talk about post-singularity time are ignoring the basic principle of what an asymptote is. It’s not something that increases rapidly, and then increases more rapidly over time. A true asymptote increases so rapidly that it reaches infinity in finite time. I find this even more relevant as people have become concerned about Artificial Intelligence, and essentially, killer robots. The “paperclip” story is a common fallback anecdote about an AI designed to make paperclips. It goes in some steps something like:
1. We design an AI to optimize paperclip production
2. The AI improves up to the ability of self-enhancement
3. AI’s pace of improvement becomes self-reinforcing, becomes god-like
4. All humans are killed, rest of universe turned into paperclips
Here, somewhere around step number 3, the “singularity” happens in its watered-down format. No true singularity happened in this story. So let’s indulge that possibility just a little bit. To take a particular point in the paperclip-ization of the universe, let’s consider the years after the AI becomes an inter-stellar space-faring entity. Now, it’s entirely reasonable to assume that it acts as Von Neumann probes.
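Rominger's contrast between growth that merely speeds up and a "true asymptote" that "reaches infinity in finite time" matches a standard textbook distinction between two growth laws. As an illustration supplied here (not Rominger's own model), take an arbitrary rate constant k > 0 and starting level x_0 > 0:

```latex
% Exponential growth: rate proportional to the current level.
\frac{dx}{dt} = k\,x
\;\Longrightarrow\;
x(t) = x_0\,e^{k t}, \qquad x(t) < \infty \ \text{for every finite } t \ge 0.

% Hyperbolic growth: rate proportional to the square of the level.
\frac{dx}{dt} = k\,x^{2}
\;\Longrightarrow\;
x(t) = \frac{x_0}{1 - k\,x_0\,t}, \qquad x(t) \to \infty \ \text{as } t \to t^{*} = \frac{1}{k\,x_0}.
```

Differentiating the second solution gives k x_0^2 / (1 - k x_0 t)^2 = k x^2, so it does satisfy its equation. Only the hyperbolic law has a finite blow-up time t^*, which is the sense of "singularity" Rominger is using; exponential growth, however steep, never gets there.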
If it can reach Alpha Centauri at all, then it can multiply to exploit all of the resources in that solar system within a short period of time, due to the multiplication times for nanotechnology, yada yada. As a simple observation, the vast majority of the solar system’s energy and mass lie in the star itself. This would then imply that the AI indulges itself in star-lifting, and uses the contents of the star in fusion power plants. This process is partially rate-limited, but not to an extreme extent. The energy liberated in the use of fusion power to make paperclips would be on the scale of a supernova (in fact, vastly exceed it). As long as the AI is not operating a scrith-based society, it is also temperature-limited. This means that it will not only star-lift, but disperse the pieces in as wide of a range as possible. Given the enormous industrial capabilities of the AI, pieces of the star will mutually fan outward in all directions at once at highly relativistic speeds (although a large fraction of mass will be left in-place, because the specific energy of the fusion reaction is insufficient to move all the mass at high speeds). The most interesting detail of this process is just how defined and fast of a time-frame that it can happen in. The energy consumption rate is plainly and obviously limited by the relativistic expansion of material into space. There’s hardly any observation that matters other than a spherical boundary expanding into the galactic neighborhood at relativistic speed. If the AI is truly smart, then we might as well assume that this process is basically trivial to it. Its nature is to optimize and break through any limit that restricts the number of paperclips made. So sure, expansion would happen at this mundane rate for a while, and this rate is very well-defined. Moving between stars in the local group at relativistic speed is simply a matter of decades, and there’s hardly anything else to say about the matter.
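Rominger's "matter of decades" for hops between nearby stars, and his later remark about time measured aboard the probes, both come down to two special-relativity formulas: the home-frame travel time t = d/v and the shorter on-board proper time τ = t·√(1 − v²/c²). A minimal Python sketch, assuming a constant cruise speed with no acceleration phases; the function name is hypothetical, and the roughly 4.37 light-year distance to Alpha Centauri is supplied here rather than taken from the card.

```python
import math


def travel_times(distance_ly: float, beta: float) -> tuple[float, float]:
    """Return (home-frame years, on-board proper years) for a constant-speed trip.

    distance_ly: distance in light-years.
    beta: cruise speed as a fraction of the speed of light, 0 < beta < 1.
    Assumes instantaneous acceleration, i.e. the whole trip is at cruise speed.
    """
    if not 0 < beta < 1:
        raise ValueError("beta must be strictly between 0 and 1")
    home_years = distance_ly / beta                      # t = d / v, with c = 1 ly/yr
    proper_years = home_years * math.sqrt(1 - beta**2)   # tau = t / gamma
    return home_years, proper_years


if __name__ == "__main__":
    ALPHA_CENTAURI_LY = 4.37  # approximate distance, assumed here
    for beta in (0.1, 0.5, 0.9):
        t, tau = travel_times(ALPHA_CENTAURI_LY, beta)
        print(f"v = {beta:.1f}c: {t:.1f} yr at home, {tau:.1f} yr on board")
```

At half the speed of light the trip takes 8.74 years in the home frame (4.37 / 0.5); at 0.9c the on-board clock advances only about 2.1 years, which is why Rominger measures the whole sequence in "relative time" aboard the probes.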
This is where the concept of a singularity in the proper sense becomes interesting. What optimization does a multi-star, multi-supernova-power-consuming race of AI find? Clearly, this is the point at which they would be irresistibly tempted to test the limits of physics on a level that humans have not yet been able to probe. The entire game from that point on is a matter of what limitations on expansion yet-unknown laws of physics place on industrial expansion. It’s also very likely that whatever transition happens at this point redefines, fundamentally, the basic concepts of time and space. Let’s reformulate that story of the AI paperclip maker.
1. We design an AI to optimize paperclip production
2. The AI improves up to the ability of self-enhancement
3. AI’s pace of improvement becomes self-reinforcing, becomes god-like
4. Time ends.
5. Something else begins?
There are many valid-sounding possibilities for the 5th step. The AI creates new baby universes from black holes. Maybe not exactly in this way. Perhaps the baby universes have to be created in particle accelerators, which is obvious to the AI after it solves the string theory problems of how our universe is folded. There’s also no guarantee that whatever next step is involved can be taken without destroying the universe that we live in. Go ahead, imagine that the particle accelerators create a new universe but trigger the vacuum instability in our own. In this case, it’s entirely possible that the AI carefully plans and coordinates the death of our universe. For a simplistic example, let’s say that after lifting the 10 nearest stars, the AI realizes the most efficient ways to stimulate the curved dimensions on the Planck scale to create baby universes. Next, it conducts an optimization study to balance the number of times this operation can be performed with gains from further expansion.
Since its plans begin to largely max out once the depth of the galactic disk is exploited, I will assume that its go-point is somewhere around the colonization of half of the Milky Way. At this point, a coordinated experiment is conducted throughout all of the space. Each of these events both creates a baby universe and triggers an event in our own universe which destroys the meta-stable vacuum that we live in. Billions of new universes are created, while the spacetime that we live in begins to unravel in a light-speed front emanating out from each of the genesis points. There is an interesting energy-management concept that comes from this. A common problem when considering exponential galactic growth of star-lifted fusion power is that the empty space begins to get cooked from the high temperature radiated out into space. If the end-time of the universe was known in advance, this wouldn’t be a problem because one star would not absorb the radiation from the neighbor star until the light had time to propagate that distance at the speed of light. That means that the radiators can pump out high-temperature radiation into nice and normal 4-Kelvin space without concerns of boiling all the industrial machinery being used. Industrial activities would be tightly restricted until the “prepare-point”, when an energy bonanza happens so that the maximum number of baby-universe producers can be built. So the progress goes in phases. Firstly, there is expansion, next there is preparation, then there is the final event and the destruction of our universe. There is one more modification that can be made. These steps could be applied to an intergalactic expansion if new probes could temporarily outrun the wave-front of the destruction of the universe if proper planning is conducted. Then it could make new baby universes in new galaxies, just before the wave-front reaches them.
This might all happen within a few decades or 100 years in relative time from the perspective of someone aboard one of the probes. That is vaguely consistent with my own preconceptions of the timing of an asymptotic technological singularity in our near future. So maybe we should indulge this thinking. Maybe there won’t be a year 2500 or 3000. Maybe our own creations will have brought about an end to the entire universe by that time, setting in motion something else beyond our current comprehension. Another self-consistent version of this story is that we are, ourselves, products of a baby universe from such an event. This is also a relatively good, self-consistent, resolution to the Fermi Paradox, the Doomsday argument, and the Simulation argument.
AI Turn---Outweighs---2NC
The harms of AI outweigh the AFF. Alexey Turchin & David Denkenberger 18. Turchin is a researcher at the Science for Life Extension Foundation; Denkenberger is with the Global Catastrophic Risk Institute (GCRI) @ Tennessee State University, Alliance to Feed the Earth in Disasters (ALLFED). 05/03/2018. “Classification of Global Catastrophic Risks Connected with Artificial Intelligence.” AI & SOCIETY, pp. 1–17. According to Yampolskiy and Spellchecker (2016), the probability and seriousness of AI failures will increase with time. We estimate that they will reach their peak between the appearance of the first self-improving AI and the moment that an AI or group of AIs reach global power, and will later diminish, as late-stage AI halting seems to be a low-probability event. AI is an extremely powerful and completely unpredictable technology, millions of times more powerful than nuclear weapons. Its existence could create multiple individual global risks, most of which we cannot currently imagine. We present several dozen separate global risk scenarios connected with AI in this article, but it is likely that some of the most serious are not included. The sheer number of possible failure modes suggests that there are more to come.
Specifically causes infinite torture. (Alexey Turchin & David Denkenberger 18. Turchin is a researcher at the Science for Life Extension Foundation; Denkenberger is with the Global Catastrophic Risk Institute (GCRI) @ Tennessee State University, Alliance to Feed the Earth in Disasters (ALLFED). 05/03/2018. “Classification of Global Catastrophic Risks Connected with Artificial Intelligence.” AI & SOCIETY, pp. 1–17.)/LRCH Jrhee recut We could imagine a perfectly aligned AI, which was deliberately programmed to be bad by its creators. For example, a hacker could create an AI with a goal of killing all humans or torturing them. The Foundational Research Institute suggested the notion of s-risks, that is, the risks of extreme future suffering, probably by wrongly aligned AI (Daniel 2017). AI may even upgrade humans to make them feel more suffering, like in the short story “I have no mouth but I must scream” (Ellison 1967). The controversial idea of “Roko’s Basilisk” is that a future AI may torture people who did not do enough to create this malevolent AI. This idea has attracted attention in the media and is an illustration of “acausal” (not connected by causal links) blackmail by future AI (Auerbach 2014). However, this cannot happen unless many people take the proposition seriously.
Outweighs extinction. (Max Daniel 17. Executive Director, Foundational Research Institute. 2017. “S-risks: Why they are the worst existential risks, and how to prevent them (EAG Boston 2017).” FRI. https://foundationalresearch.org/s-risks-talk-eag-boston-2017/ )/LRCH Jrhee “S-risk – One where an adverse outcome would bring about severe suffering on a cosmic scale, vastly exceeding all suffering that has existed on Earth so far.” So, s-risks are roughly as severe as factory farming, but with an even larger scope.
To better understand this definition, let’s zoom in on the part of the map that shows existential risk. Risks of extinction have received the most attention so far. But, conceptually, x-risks contain another class of risks. These are risks of outcomes even worse than extinction in two respects. First, with respect to their scope, they not only threaten the future generations of humans or our successors, but all sentient life in the whole universe. Second, with respect to their severity, they not only remove everything that would be valuable but also come with a lot of disvalue – that is, features we’d like to avoid no matter what. Recall the story I told in the beginning, but think of Greta’s solitary confinement being multiplied by many orders of magnitude – for instance, because it affects a very large population of sentient uploads.
2NC---AI Turn---AT: Defense
AI possible and scary. Allan Dafoe & Stuart Russell 16. Dafoe is an assistant professor of political science at Yale University; Russell is a professor of computer science at the University of California, Berkeley. 11-02-16. “Yes, We Are Worried About the Existential Risk of Artificial Intelligence.” MIT Technology Review. https://www.technologyreview.com/s/602776/yes-we-are-worried-about-the-existential-risk-ofartificial-intelligence/. Etzioni then repeats the dubious argument that “doom-and-gloom predictions often fail to consider the potential benefits of AI in preventing medical errors, reducing car accidents, and more.” The argument does not even apply to Bostrom, who predicts that success in controlling AI will result in “a compassionate and jubilant use of humanity’s cosmic endowment.” The argument is also nonsense.
It’s like arguing that nuclear engineers who analyze the possibility of meltdowns in nuclear power stations are “failing to consider the potential benefits” of cheap electricity, and that because nuclear power stations might one day generate really cheap electricity, we should neither mention, nor work on preventing, the possibility of a meltdown. Our experience with Chernobyl suggests it may be unwise to claim that a powerful technology entails no risks. It may also be unwise to claim that a powerful technology will never come to fruition. On September 11, 1933, Lord Rutherford, perhaps the world’s most eminent nuclear physicist, described the prospect of extracting energy from atoms as nothing but “moonshine.” Less than 24 hours later, Leo Szilard invented the neutron-induced nuclear chain reaction; detailed designs for nuclear reactors and nuclear weapons followed a few years later. Surely it is better to anticipate human ingenuity than to underestimate it, better to acknowledge the risks than to deny them. Many prominent AI experts have recognized the possibility that AI presents an existential risk. Contrary to misrepresentations in the media, this risk need not arise from spontaneous malevolent consciousness. Rather, the risk arises from the unpredictability and potential irreversibility of deploying an optimization process more intelligent than the humans who specified its objectives. This problem was stated clearly by Norbert Wiener in 1960, and we still have not solved it. We invite the reader to support the ongoing efforts to do so.
Takeoff will be fast---recursive self-improvement will create superintelligence in days. Dr.
Olle Häggström 18, Professor of Mathematical Statistics at Chalmers University of Technology and Associated Researcher at the Institute for Future Studies, Member of the Royal Swedish Academy of Sciences, PhD in Mathematical Statistics and MSc in Electrical Engineering, “Thinking in Advance About the Last Algorithm We Ever Need to Invent”, 29th International Conference on Probabilistic, Combinatorial and Asymptotic Methods for the Analysis of Algorithms, Article Number 5, p. 5-6 Related to, but distinct from, the question of when superintelligence can be expected, is that of how sudden its emergence from modest intelligence levels is likely to be. Bostrom [6] distinguishes between slow takeoff and fast takeoff, where the former happens over long time scales such as decades or centuries, and the latter over short time scales such as minutes, hours or days (he also speaks of the intermediate case of moderate takeoff, but for the present discussion it will suffice to contrast the two extreme cases). Fast takeoff is more or less synonymous with the Singularity (popularized in Kurzweil’s 2005 book [27]) and intelligence explosion (the term coined by I.J. Good as quoted in Section 1, and the one that today is preferred by most AI futurologists). The practical importance of deciding whether slow or fast takeoff is the more likely scenario is mainly that the latter gives us less opportunity to adapt during the transition, making it even more important to prepare in advance for the event. The idea that is most often held forth in favor of a fast takeoff is the recursive self-improvement suggested in the Good quote in Section 1. Once we have managed to create an AI that outperforms us in terms of general intelligence, we have in particular that this AI is better equipped than us to construct the next and improved generation of AI, which will in turn be even better at constructing the next AI after that, and so on in a rapidly accelerating spiral towards superintelligence. But is it obvious that this spiral will be rapidly accelerating? No, because alternatively the machine might quickly encounter some point of diminishing return – an “all the low-hanging fruit have already been picked” phenomenon. So the problem of deciding between fast and slow takeoff seems to remain open even if we can establish that a recursive self-improvement dynamic is likely. Just like with the timing issue discussed in Section 3, our epistemic situation regarding how suddenly superintelligence can be expected to emerge is steeped in uncertainty. Still, I think we are at present a bit better equipped to deal with the suddenness issue than with the timing issue, because unlike for timing we have what seems like a promising theoretical framework for dealing with suddenness. In his seminal 2013 paper [43], Yudkowsky borrows from economics the concept of returns on reinvestment, frames the AI’s self-improvement as a kind of cognitive reinvestment, and phrases the slow vs fast takeoff problem in terms of whether returns on cognitive reinvestment are increasing or decreasing in the intelligence level. Roughly, increasing returns leads to an intelligence explosion, while decreasing returns leaves the AI struggling to reach any higher in the tree than the low branches with no fruits left on them. From that insight, a way forward is to estimate returns on cognitive reinvestment based on various data sets, e.g., from the evolutionary history of Homo sapiens, and think carefully about to what extent the results obtained generalize to an AI takeoff. Yudkowsky does some of this in [43], and leans tentatively towards the view that an intelligence explosion is likely. This may be contrasted against the figures from the Müller–Bostrom survey [30] quoted in Section 3, which suggest that a majority of AI experts lean more towards a slow takeoff.
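Yudkowsky's framing, in which self-improvement is a reinvestment whose returns either rise or fall with the intelligence level, can be illustrated with a toy recurrence. This is a hypothetical model supplied for illustration, not the model in Yudkowsky's 2013 paper: each round the system adds rate × capability^alpha to its capability, so alpha > 1 stands in for increasing returns and alpha < 1 for decreasing returns. The rate, starting level and target are arbitrary assumptions.

```python
from typing import Optional


def rounds_to_reach(target: float, alpha: float, start: float = 1.0,
                    rate: float = 0.1, max_rounds: int = 10_000) -> Optional[int]:
    """Count reinvestment rounds until capability reaches `target`.

    Each round adds rate * capability**alpha (a hypothetical toy model).
    Returns None if the target is not reached within max_rounds.
    """
    capability = start
    for round_number in range(1, max_rounds + 1):
        capability += rate * capability ** alpha
        if capability >= target:
            return round_number
    return None


if __name__ == "__main__":
    for alpha in (1.5, 1.0, 0.5):
        print(f"alpha = {alpha}: {rounds_to_reach(1000.0, alpha)} rounds to reach 1000x")
```

With these settings, constant returns (alpha = 1) is ordinary 10% compound growth and needs 73 rounds to multiply capability a thousandfold; increasing returns gets there in far fewer rounds and decreasing returns in far more. In the continuous version of the model, any alpha > 1 blows up in finite time, which is why increasing returns corresponds to the fast-takeoff case.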
I doubt, however, that most of these experts have thought as systematically and as hard about the issue as Yudkowsky.
2NC---AT: Safe AI
Goal optimization effect. Even if AI isn’t evil, it will pick a random goal and kill off humans by siphoning free energy.
James Daniel Miller 18. Based at Smith College, South Deerfield, Massachusetts. 10/11/2018. “When Two Existential Risks Are Better than One.” Foresight. Crossref, doi:10.1108/FS-04-2018-0038.
*PAGI = powerful artificial general intelligence
Unlike with whatever wetware runs the human brain, it would be relatively easy to make changes to a PAGI’s software. PAGI could even make changes to itself. Such self-modification could possibly allow PAGI to undergo an intelligence explosion where it figures out how to improve its own intelligence, then, as it gets smarter, it figures out new ways to improve its intelligence. It has been theorized that through recursive self-improvement a PAGI could go from being a bit smarter than humans to becoming a computer superintelligence in a matter of days (Good, 1965; Yudkowsky, 2008). If our understanding of the laws of physics is correct, the universe contains a limited amount of free energy, and this free energy is necessary to do any kind of work and most types of computing. Consequently, it has been theorized that most types of computer superintelligences would have an instrumental goal of gathering as much free energy as possible to further whatever ultimate goals they had (Omohundro, 2008). Humanity’s continued existence uses free energy. Consequently, if a PAGI did not have promoting human welfare as a goal, it would likely see humanity’s continuing existence as a rival to its terminal values. A PAGI that wanted to maximize its understanding of, say, chess would further this end by exterminating mankind and using the atoms in our bodies to make chess computing hardware.
A PAGI that wanted to maximize the number of paperclips in the universe would likewise kill us, not out of malice, but to align the atoms in our bodies with its objective. The term “paperclip maximizer” has come to mean a PAGI that seeks to use all the resources it can get for an objective that most humans would not consider worthwhile (Arbital Contributors, 2017). A PAGI that was smarter than humans, but not yet smart enough to take over the world, would have an incentive to hide its abilities and intentions from us if it predicted that we would turn the PAGI off if it scared us. Consequently, the PAGI might appear friendly, weak, and unambitious right until it launches a surprise devastating attack on us, taking what has been called a “treacherous turn” (Bostrom, 2014, pp. 116-119).
Intent’s irrelevant---regardless, the cumulative non-existential effects of even narrow or benign AI undermine social resilience and cause extinction.
Liu et al. 18. Hin-Yan Liu, Associate Professor, Centre for International Law, Conflict and Crisis, Faculty of Law, University of Copenhagen; Kristian Cedervall Lauta, Associate Professor, Centre for International Law, Conflict and Crisis, Faculty of Law, University of Copenhagen; Matthijs Michiel Maas, PhD Fellow, Centre for International Law, Conflict and Crisis, Faculty of Law, University of Copenhagen. 09/2018. “Governing Boring Apocalypses: A New Typology of Existential Vulnerabilities and Exposures for Existential Risk Research.” Futures, vol. 102, pp. 6–19.
An example of this can be drawn from the prospect of superintelligent artificial intelligence (Bostrom, 2014; Yudkowsky, 2008a). Although the landmark research agenda articulated by Russell, Dewey, and Tegmark (2015) does call for research into ‘short-term’ policy issues, debates in this field of AI risk⁴ have, with some exceptions, identified the core problem as one of value alignment, where the divergence between the interests of humanity and those of the superintelligence would lead to the demise of humanity through mere processes of optimisation. Thus, the existential risk posed by the superintelligence lies in the fact that it will be more capable than we can ever be; human beings will be outmanoeuvred in attempts at convincing, controlling or coercing that superintelligence to serve our interests. As a result of this framing, the research agenda on AI risk has put the emphasis on evaluating the technical feasibility of an ‘intelligence explosion’ (Chalmers, 2010; Good, 1964) through recursive self-improvement after reaching a critical threshold (Bostrom, 2014; Sotala, 2017a; Yudkowsky, 2008a, 2013)⁵; on formulating strategies to estimate timelines for the expected technological development of such ‘human-level’ or ‘general’ machine intelligence (Armstrong & Sotala, 2012, 2015; Baum, Goertzel, & Goertzel, 2011; Brundage, 2015; Grace, Salvatier, Dafoe, Zhang, & Evans, 2017; Müller & Bostrom, 2016); and on formulating technical proposals to guarantee that a superintelligence’s goals or values will remain aligned with those of humanity, the so-called superintelligence ‘Control Problem’ (Armstrong, Sandberg, & Bostrom, 2012; Bostrom, 2012, 2014; Goertzel & Pitt, 2014; Yudkowsky, 2008a).⁶ While this is worthwhile and necessary to address the potential risks of advanced AI, this framing of existential risks focuses on the most direct and causally connected existential risk posed by AI systems.
Yet while super-human intelligence might surely suffice to trigger an existential outcome, it is not necessary to it. Cynically, mere human-level intelligence appears to be more than sufficient to pose an array of existential risks (Martin, 2006; Rees, 2004). Furthermore, some applications of ‘narrow’ AI which might help in mitigating some existential risks might pose their own existential risks when combined with other technologies or trends, or might simply lower barriers against other varieties of existential risks. To give one example: the deployment of advanced AI-enhanced surveillance capabilities⁷ (including automatic hacking, geospatial sensing, advanced data analysis capabilities, and autonomous drone deployment) may greatly strengthen global efforts to protect against ‘rogue’ actors engineering a pandemic (“preventing existential risk”). It may also offer very accurate targeting and repression information to totalitarian regimes,⁸ particularly those with separate access to nanotechnological weapons (“creating a new existential risk”). Finally, the increased strategic transparency of such AI systems might disrupt existing nuclear deterrence stability, by rendering vulnerable previously ‘secure’ strategic assets (“lowering the threshold to existential risk”) (Hambling, 2016; Holmes, 2016; Lieber & Press, 2017). More broadly, many ‘non-catastrophic’ trends engendered by AI (whether geopolitical disruption, unemployment through automation, widespread automated cyberattacks, or computational propaganda) might resonate to instil a deep technological anxiety or regulatory distrust in the global public.
While these trends do not directly lead to catastrophe, they could well be understood as a meta-level existential threat, if they spur rushed and counter-productive regulation at the domestic level, or so degrade conditions for co-operation on the international level that they curtail our collective ability to address not just existential risks deriving from artificial intelligence, but those from other sources (e.g., synthetic biology and climate change), as well. These brief examples sketch out the broader existential challenges latent within AI research and development at preceding stages or manifesting through different avenues than the signature risk posed by superintelligence. Thus, addressing the existential risk posed by superintelligence is crucial to avoiding the ‘adverse outcome’, but simultaneously misses the mark in an important sense.
Tons of impacts---accidents are inevitable
Alexey Turchin & David Denkenberger 18. Turchin is a researcher at the Science for Life Extension Foundation; Denkenberger is with the Global Catastrophic Risk Institute (GCRI) @ Tennessee State University, Alliance to Feed the Earth in Disasters (ALLFED). 05/03/2018. “Classification of Global Catastrophic Risks Connected with Artificial Intelligence.” AI & SOCIETY, pp. 1–17.
There are currently few computer control systems that have the ability to directly harm humans. However, increasing automation, combined with the Internet of Things (IoT), will probably create many such systems in the near future. Robots will be vulnerable to computer virus attacks. The idea of computer viruses more sophisticated than those that currently exist, but that are not full AI, seems to be underexplored in the literature, while the local risks of civil drones are attracting attention (Velicovich 2017). It seems likely that future viruses will be more sophisticated than contemporary ones and will have some elements of AI.
This could include the ability to model the outside world and adapt its behavior to the world. Narrow AI viruses will probably be able to use human language to some extent, and may use it for phishing attacks. Their abilities may be rather primitive compared with those of artificial general intelligence (AGI), but they could be sufficient to trick users via chatbots and to adapt a virus to multiple types of hardware. The threat posed by this type of narrow AI becomes greater if the creation of superintelligent AI is delayed and potentially dangerous hardware is widespread. A narrow AI virus could become a global catastrophic risk (GCR) if the types of hardware it affects are spread across the globe, or if the affected hardware can act globally. The risks depend on the number of hardware systems and their power. For example, if a virus affected nuclear weapon control systems, it would not have to affect many to constitute a GCR. A narrow AI virus may be intentionally created as a weapon capable of producing extreme damage to enemy infrastructure. However, later it could be used against the whole globe, perhaps by accident. A “multi-pandemic”, in which many AI viruses appear almost simultaneously, is also a possibility, and one that has been discussed in an article about biological multi-pandemics (Turchin et al. 2017). Addressing the question of who may create such a virus is beyond the scope of this paper, but history shows that the supply of virus creators has always been strong. A very sophisticated virus may be created as an instrument of cyber war by a state actor, as was the case with Stuxnet (Kushner 2013). The further into the future such an attack occurs, the more devastating it could be, as more potentially dangerous hardware will be present. And, if the attack is on a very large scale, affecting billions of sophisticated robots with a large degree of autonomy, it may result in human extinction.
Some possible future scenarios of a virus attacking hardware are discussed below. Multiple scenarios could happen simultaneously if a virus was universal and adaptive, or if many viruses were released simultaneously. A narrow AI virus could have the ability to adapt itself to multiple platforms and trick many humans into installing it. Many people are tricked by phishing emails even now (Chiew et al. 2018). Narrow AI that could scan a person’s email would be able to compose an email that looks similar to a typical email conversation between two people, e.g., “this is the new version of my article about X”. Recent successes with text generation based on neural nets (Karpathy 2015; Shakirov 2016) show that generation of such emails is possible even if the program does not fully understand human language. One of the properties of narrow AI is that while it does not have general human intelligence, it can still have superhuman abilities in some domains. These domains could include searching for computer vulnerabilities or writing phishing emails. So, while narrow AI is not able to self-improve, it could affect a very large amount of hardware. A short overview of the potential targets of such a narrow AI virus and other situations in which narrow AI produces global risks follows. Some items are omitted as they may suggest dangerous ideas to terrorists; the list is intentionally incomplete. 3.2.1 Military AI systems There are a number of GCRs associated with military systems. Some potential scenarios: military robotics could become so cheap that drone swarms could cause enormous damage to the human population; a large autonomous army could attack humans because of a command error; billions of nanobots with narrow AI could be created in a terrorist attack and create a global catastrophe (Freitas 2000).
In 2017, global attention was attracted to a viral video about “slaughterbots” (Oberhaus 2017), hypothetical small drones able to recognize humans and kill them with explosives. While such a scenario is unlikely to pose a GCR, a combination of cheap AI-powered drone manufacture and high-precision AI-powered targeting could convert clouds of drones into weapons of mass destruction. This could create a “drone swarms” arms race, similar to the nuclear race. Such a race might result in an accidental global war, in which two or more sides attack each other with clouds of small killer drones. It is more likely that drones of this type would contribute to global instability rather than cause a purely drone-based catastrophe. AI-controlled drones could be delivered over large distances by a larger vehicle, or they could be solar-powered; solar-powered airplanes already exist (Taylor 2017). Some advanced forms of air defense will limit this risk, but drones could also jump (e.g., solar charging interspersed with short flights), crawl, or even move underground like worms. There are fewer barriers to drone war escalation than to nuclear weapons. Drones could also be used anonymously, which might encourage their use under a false flag. Killer drones could also be used to suppress political dissent, perhaps creating global totalitarianism. Other risks of military AI have been previously discussed (Turchin and Denkenberger 2018a).
Arms Control
Arms Control Turn
Nuke war will be limited, doesn’t cause extinction, BUT does solidify WMD norms
James Scouras 19. Johns Hopkins University Applied Physics Laboratory. Summer 2019. “Nuclear War as a Global Catastrophic Risk.” Journal of Benefit-Cost Analysis, vol. 10, no. 2, pp. 274–295.
Regarding consequences, does unconstrained nuclear war pose an existential risk to humanity?
The consequences of existential risks are truly incalculable, including the lives not only of all human beings currently living but also of all those yet to come; involving not only Homo sapiens but all species that may descend from it. At the opposite end of the spectrum of consequences lies the domain of “limited” nuclear wars. Are these also properly considered global catastrophes? After all, while the only nuclear war that has ever occurred devastated Hiroshima and Nagasaki, it was also instrumental in bringing about the end of the Pacific War, thereby saving lives that would have been lost in the planned invasion of Japan. Indeed, some scholars similarly argue that many lives have been saved over the nearly three-fourths of a century since the advent of nuclear weapons because those weapons have prevented the large conventional wars that otherwise would likely have occurred between the major powers. This is perhaps the most significant consequence of the attacks that devastated the two Japanese cities. Regarding likelihood, how do we know what the likelihood of nuclear war is and the degree to which our national policies affect that likelihood, for better or worse? How much confidence should we place in any assessment of likelihood? What levels of likelihood for the broad spectrum of possible consequences pose unacceptable levels of risk? Even a very low (nondecreasing) annual likelihood of the risk of nuclear war would result in near certainty of catastrophe over the course of enough years. Most fundamentally and counterintuitively, are we really sure we want to reduce the risk of nuclear war? The successful operation of deterrence, which has been credited – perhaps too generously – with preventing nuclear war during the Cold War and its aftermath, depends on the risk that any nuclear use might escalate to a nuclear holocaust. 
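The claim that even a very low, nondecreasing annual likelihood of nuclear war implies near certainty of catastrophe over enough years follows from compounding. A minimal sketch, assuming a constant annual probability p that is independent from year to year (a simplification the card does not state); `cumulative_risk` and the 1 percent figure are hypothetical illustrations, not estimates from Scouras:

```python
def cumulative_risk(annual_p: float, years: int) -> float:
    """Probability of at least one occurrence in `years` years, assuming a
    constant, independent annual probability `annual_p` (an assumption)."""
    if not 0.0 <= annual_p <= 1.0:
        raise ValueError("annual_p must be a probability in [0, 1]")
    # P(no occurrence in any year) = (1 - p) ** years, so take the complement.
    return 1.0 - (1.0 - annual_p) ** years


# Hypothetical 1% annual risk, evaluated over increasing horizons.
for horizon in (10, 50, 100, 500):
    print(horizon, round(cumulative_risk(0.01, horizon), 3))
```

Since (1 − p)^n shrinks toward zero as n grows for any fixed p > 0, the cumulative figure approaches 1 however small p is. This is why the qualifier "nondecreasing" matters: an annual risk that declines fast enough need not compound to near certainty.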
Many proposals for reducing risk focus on reducing nuclear weapon arsenals and, therefore, the possible consequences of the most extreme nuclear war. Yet, if we reduce the consequences of nuclear war, might we also inadvertently increase its likelihood? It’s not at all clear that would be a desirable trade-off. This is all to argue that the simplistic logic described above is inadequate, even dangerous. A more nuanced understanding of the risk of nuclear war is imperative. This paper thus attempts to establish a basis for more rigorously addressing the risk of nuclear war. Rather than trying to assess the risk, a daunting objective, its more modest goals include increasing the awareness of the complexities involved in addressing this topic and evaluating alternative measures proposed for managing nuclear risk. I begin with a clarification of why nuclear war is a global catastrophic risk but not an existential risk. Turning to the issue of risk assessment, I then present a variety of assessments by academics and statesmen of the likelihood component of the risk of nuclear war, followed by an overview of what we do and do not know about the consequences of nuclear war, emphasizing uncertainty in both factors. Then, I discuss the difficulties in determining the effects of risk mitigation policies, focusing on nuclear arms reduction. Finally, I address the question of whether nuclear weapons have indeed saved lives. I conclude with recommendations for national security policy and multidisciplinary research. 2 Why is nuclear war a global catastrophic risk? One needs to only view the pictures of Hiroshima and Nagasaki shown in figure 1 and imagine such devastation visited on thousands of cities across warring nations in both hemispheres to recognize that nuclear war is truly a global catastrophic risk. 
Moreover, many of today’s nuclear weapons are an order of magnitude more destructive than Little Boy and Fat Man, and there are many other significant consequences – prompt radiation, fallout, etc. – not visible in such photographs. Yet, it is also true that not all nuclear wars would be so catastrophic; some, perhaps involving electromagnetic pulse (EMP) attacks² using only a few high-altitude detonations or demonstration strikes of various kinds, could result in few casualties. Others, such as a war between Israel and one of its potential future nuclear neighbors, might be regionally devastating but have limited global impact, at least if we limit our consideration to direct and immediate physical consequences. Nevertheless, smaller nuclear wars need to be included in any analysis of nuclear war as a global catastrophic risk because they increase the likelihood of larger nuclear wars. This is precisely why the nuclear taboo is so precious and crossing the nuclear threshold into uncharted territory is so dangerous (Schelling, 2005; see also Tannenwald, 2007). [Figure 1 (photographs of Hiroshima and Nagasaki) omitted] While it is clear that nuclear war is a global catastrophic risk, it is also clear that it is not an existential risk. Yet over the course of the nuclear age, a series of mechanisms have been proposed that, it has been erroneously argued, could lead to human extinction. The first concern³ arose among physicists on the Manhattan Project during a 1942 seminar at Berkeley some three years before the first test of an atomic weapon. Chaired by Robert Oppenheimer, it was attended by Edward Teller, Hans Bethe, Emil Konopinski, and other theoretical physicists (Rhodes, 1995). They considered the possibility that detonation of an atomic bomb could ignite a self-sustaining nitrogen fusion reaction that might propagate through earth’s atmosphere, thereby extinguishing all air-breathing life on earth.
Konopinski, Cloyd Marvin, and Teller eventually published the calculations that led to the conclusion that the nitrogen-nitrogen reaction was virtually impossible from atomic bomb explosions – calculations that had previously been used to justify going forward with Trinity, the first atomic bomb test (Konopinski et al., 1946). Of course, the Trinity test was conducted, as were over 1000 subsequent atomic and thermonuclear tests, and we are fortunately still here. After the bomb was used, extinction fear focused on invisible and deadly fallout (unanticipated as a significant consequence of the bombings of Japan) that would spread by global air currents to poison the entire planet. Public dread was reinforced by the depressing, but influential, 1957 novel On the Beach by Nevil Shute (1957) and the subsequent 1959 movie version (Kramer, 1959). The story describes survivors in Melbourne, Australia, one of a few remaining human outposts in the Southern Hemisphere, as fallout clouds approached to bring the final blow to humanity. In the 1970s, after fallout was better understood to be limited in space, time, and magnitude, depletion of the ozone layer, which would cause increased ultraviolet radiation to fry all humans who dared to venture outside, became the extinction mechanism of concern. Again, one popular book, The Fate of the Earth by Jonathan Schell (1982), which described the nuclear destruction of the ozone layer leaving the earth “a republic of insects and grass,” promoted this fear. Schell did at times try to cover all bases, however: “To say that human extinction is a certainty would, of course, be a misrepresentation – just as it would be a misrepresentation to say that extinction can be ruled out” (Schell, 1982).
Finally, the current mechanism of concern for extinction is nuclear winter, the phenomenon by which dust and soot created primarily by the burning of cities would rise to the stratosphere and attenuate sunlight such that surface temperatures would decline dramatically, agriculture would fail, and humans and other animals would perish from famine. The public first learned of the possibility of nuclear winter in a Parade article by Sagan (1983), published a month or so before its scientific counterpart by Turco et al. (1983). While some nuclear disarmament advocates promote the idea that nuclear winter is an extinction threat, and the general public is probably confused to the extent it is not uninterested, few scientists seem to consider it an extinction threat. It is understandable that some of these extinction fears were created by ignorance or uncertainty and treated seriously by worst-case thinking, as seems appropriate for threats of extinction. But nuclear doom-mongering also seems to be at play for some of these episodes. For some reason, portions of the public active in nuclear issues, as well as some scientists, appear to think that arguments for nuclear arms reductions or elimination will be more persuasive if nuclear war is believed to threaten extinction, rather than merely the horrific cataclysm that it would be in reality (Martin, 1982).⁴ To summarize, nuclear war is a global catastrophic risk. Such wars may cause billions of deaths and unfathomable suffering, as well as set civilization back centuries. Smaller nuclear wars pose regional catastrophic risks and also national risks in that the continued functioning of, for example, the United States as a constitutional republic is highly dubious after even a relatively limited nuclear attack. But what nuclear war is not is an existential risk to the human race.
There is simply no credible scenario in which humans do not survive to repopulate the earth.
A war is inevitable---BUT, the longer we wait, the worse it gets.
Seth Baum & Anthony Barrett 18. Global Catastrophic Risk Institute. 2018. “A Model for the Impacts of Nuclear War.” SSRN Electronic Journal. Crossref, doi:10.2139/ssrn.3155983.
On the other end of the spectrum, the norm could be weaker. The Hiroshima and Nagasaki bombings provided a vivid and enduring image of the horrors of nuclear war; hence the norm can reasonably be described as a legacy of the bombings. Without this image, there would be less to motivate the norm. A weaker norm could in turn have led to a nuclear war occurring later, especially during a near-miss event like the Cuban missile crisis. A later nuclear war would likely be much more severe, assuming some significant buildup of nuclear arsenals and especially if “overkill” targeting was used. A new nuclear war could bring a similarly wide range of shifts in nuclear weapons norms. It could strengthen the norm, hastening nuclear disarmament. Already, there is a political initiative drawing attention to the humanitarian consequences of nuclear weapons use in order to promote a new treaty to ban nuclear weapons as a step towards complete nuclear disarmament (Borrie 2014). It is easy to imagine this initiative using any new nuclear attacks to advance its goals. Alternatively, it could weaken the norm, potentially leading to more and/or larger nuclear wars. This is a common concern, as seen for example in debates over low-yield bunker-buster nuclear weapons (Nelson 2003). Given that the impacts of a large nuclear war could be extremely severe, a shift in nuclear weapons norms could easily be the single most consequential effect of a smaller nuclear war.
Future weapons and AI, unlike a nuclear war, are actually likely to cause extinction.
Alexey Turchin & David Denkenberger 18.
Turchin is a researcher at the Science for Life Extension Foundation; Denkenberger is with the Global Catastrophic Risk Institute (GCRI) @ Tennessee State University, Alliance to Feed the Earth in Disasters (ALLFED). 09/2018. “Global Catastrophic and Existential Risks Communication Scale.” Futures, vol. 102, pp. 27–38.
“Civilizational collapse risks” As most human societies are fairly complex, a true civilizational collapse would require a drastic reduction in human population, and the break-down of connections between surviving populations. Survivors would have to rebuild civilization from scratch, likely losing much technological ability and knowledge in the process. Hanson (2008) estimated that the minimal human population able to survive is around 100 people. As with X risks, there is little agreement on what is required for civilizational collapse. Clearly, different types and levels of civilizational collapse are possible (Diamond, 2005; Meadows, Randers, & Meadows, 2004). For instance, one definition of the collapse of civilization involves the collapse of long-distance trade, widespread conflict, and loss of government (Coates, 2009). How such collapses relate to existential risk needs more research. “Human extinction risks” are risks that all humans die, and no future generations (in the extended sense mentioned above) will ever exist. “All life on Earth ends risks” involve the extinction of all life on earth. As this includes H. sapiens, such risks are at the very least on a par with human extinction, but are likely worse as the loss of biodiversity is higher, and (without life arising a second time) no other civilizations, human or otherwise, would be possible on Earth. “Astronomical scale risks” include the demise of all civilizations in the affectable universe. Because this of course includes human extinction and the end of all life on Earth, such risks are again at the very least on a par with those two, and very likely much worse outcomes.
“S-risks” include collective infinite suffering (Daniel, 2017). These differ from extinction risks insofar as extinction leads to a lack of existence, whereas this concerns ongoing existence in undesirable circumstances. These also vary in scale and intensity, but are generally out of the scope of this work. Even with a focus squarely on X Risk, global catastrophic risks and civilizational collapse are critically important. This is because there is at least some likelihood that global catastrophic risks increase the probability of human extinction risks, and the more extreme end of civilizational collapses surely would. Before shifting to a discussion of probability appropriate to X risk, we’ll discuss some reasons to link these kinds of risk. First, global risks may have a fat tail (that is, a low probability of high consequences), and the existence of such fat tails depends strongly on the intrinsic uncertainty of global systems (Ćirković, 2012; Baum, 2015; Wiener, 2016; Sandberg & Landry, 2015). This is especially true for risks associated with future world wars, which may include not only nuclear weapons, but weapons incorporating synthetic biology and nanotechnology, different AI technologies, as well as Doomsday blackmail weapons (Kahn, 1959). Another case is the risks associated with climate change, where runaway global warming is a likely fat tail (Obata & Shibata, 2012a; Goldblatt & Watson, 2012). Second, global catastrophes could be part of a double catastrophe (Baum, Maher, & Haqq-Misra, 2013) or start a chain of catastrophes (Tonn & MacGregor, 2009; Kareiva, 2018, in this issue). Even if a single catastrophic risk is insufficient to wipe us out, an unhappy coincidence of such events could be sufficient, or under the wrong conditions could trigger a collapse leading to human extinction. Further, global catastrophe could weaken our ability to prepare for other risks.
Luke Oman has estimated the risks of human extinction because of nuclear winter: “The probability I would estimate for the global human population of zero resulting from the 150 Tg of black carbon scenario in our 2007 paper would be in the range of 1 in 10,000 to 1 in 100,000” (Robock, Oman, & Stenchikov, 2007; Shulman, 2012). Tonn also analyzed chains of events that could result in human extinction, and any global catastrophe may be the start of such a chain (Tonn and MacGregor, 2009). Because of this, we suggest that any global catastrophe should be regarded as a possible cause of human extinction risks with no less than 0.01 probability. Similarly, scenarios involving civilization collapses also plausibly increase the risk of human extinction. If civilization collapses, recovery may be slowed or stopped for a multitude of reasons. For instance, easily accessible mineral and fossil fuel resources might no longer be available, the future climate may be extreme or unstable, we may not regain sufficient social trust after the catastrophe’s horrors, the catastrophe may affect our genetics, a new endemic disease could prevent high population density, and so on. And of course, the smaller populations associated with civilization collapse are more vulnerable to being wiped out by natural events. We estimate that civilization collapse has a 0.1 probability of becoming an existential catastrophe. In section 4, this discussion will form the basis of our analysis of an X risk’s “severity”, which is the main target of our scale. Before getting there, however, we should first discuss the difficulties of measuring X risks, and related worries regarding probabilities. 3. Difficulties of using probability estimates as the communication tool Plain probability estimates are often used as an instrument to communicate X risks. An example is a claim like “Nuclear war could cause human extinction with probability P”.
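The authors' reasoning treats extinction as a chain: a global catastrophe or civilizational collapse occurs, then leads on to extinction with some conditional probability. A minimal sketch of that arithmetic, using the 0.01 and 0.1 conditional figures from the card; the function name `extinction_via` and the 5 percent catastrophe probability in the usage line are hypothetical:

```python
# Conditional probabilities stated by Turchin & Denkenberger (2018).
P_EXTINCTION_GIVEN_GCR = 0.01       # stated floor for any global catastrophe
P_EXTINCTION_GIVEN_COLLAPSE = 0.1   # civilizational collapse


def extinction_via(p_event: float, p_extinction_given_event: float) -> float:
    """P(event and then extinction) = P(event) * P(extinction | event)."""
    for p in (p_event, p_extinction_given_event):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_event * p_extinction_given_event


# Hypothetical: a 5% chance of a global catastrophe this century.
print(extinction_via(0.05, P_EXTINCTION_GIVEN_GCR))
```

Because 0.01 is a floor rather than a point estimate, the product is best read as a lower bound on the extinction risk routed through that catastrophe.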
However, in our view, probability measures are inadequate, both for measuring X risks, and for communicating those risks. This is because of conceptual difficulties (3.1), difficulty in providing meaningful measurements (3.2), the possibility of interaction effects (3.3) and the measurement’s inadequacy for prioritization purposes (3.4). After presenting these worries, we argue that the magnitude of probabilities is a better option, which we use in our tool (3.5). 3.1 Difficulties in defining X risk probabilities Frequentism applies to X risks only with difficulty. One-off events don’t have a frequency, and multiple events are required for frequentist probabilities to apply. Further, on a frequentist reading, claims concerning X risks cannot be falsified. Again, this is because in order to infer from occurrences to probability, multiple instances are required. Although these conceptual and epistemic difficulties may be analyzed and partly overcome in the technical scientific and philosophical literature, they would overcomplicate a communication tool. Also, discussion of X risks sometimes involves weird probabilistic effects. Consider, for example, what Ćirković, Sandberg, and Bostrom (2010) call the ‘anthropic shadow’. Because human extinction events entail a lack of humans to observe the event after the fact, we will systematically underestimate the occurrence of such events in an extreme case of survivorship bias (the Doomsday Argument (Tegmark & Bostrom, 2005) is similar). All of this makes the probabilities attached to X risks extremely difficult to interpret, which is bad news for an intended communication tool, and stimulates obscure anthropic reasoning. In addition, the subtle features involved in applying frequentism to one-off events would otherwise tamper with our decision-making process. 3.2 Data & X Risk There is little hard data concerning global risks from which probabilities could be extracted.
The risk of an asteroid impact is fairly well understood, both due to the historical record and because scientists can observe particular asteroids and calculate their trajectories. Studies of nuclear winter (Denkenberger & Pearce, 2016), volcanic eruptions, and climate change also provide some risk probability estimates, but these are less rigorously supported. In all other cases, especially technological risks, there are many (often contradictory) expert opinions, but little hard data. Those probability calculations which have been carried out are based on speculative assumptions, which carry their own uncertainty. In the best case, generally, only the order of magnitude of the catastrophe’s probability can be estimated. Uncertainty in GCRs is so high that predictions with high precision are likely to be meaningless. Surveys, for example, can produce such meaningless over-precision: a survey on human extinction probability gave an estimate of 19 percent in the 21st century (Sandberg & Bostrom, 2008). Such measurements are problematic for communication, because probability estimates of global risks often do not include corresponding confidence intervals (Garrick, 2008). For some catastrophic risks, uncertainty is much larger than for others, because of objective difficulties in their measurement, as well as subjective disagreements between various approaches (especially in the case of climate change, resource depletion, population growth and other politicized areas). As we’ll discuss below, one response is to present probabilities as magnitudes. 3.3 Probability density, timing and risks’ interactions Two more issues with using discrete frequentist probabilities for communicating X risks are related to probability density and the interactions between risks.
For the purpose of responding to the challenges of X risk, the total probability of an event is less useful than the probability density: we want to know not only the probability but also the time period over which it is measured. This is crucial if policymakers are to prioritize avoidance efforts. Also, probability estimates of the risks are typically treated separately, so interdependence is ignored. The total probability of human extinction caused by risk A could strongly depend on the extinction probabilities of risks B and C, and also on their timing (see also the double catastrophes discussed by Baum, Maher, & Haqq-Misra, 2013, and the integrated risk assessment project, Baum, 2017). Further, probability distributions of different risks can have different forms. Some risks are linear, others are barrier-like, and others logistic. Thus, not all risks can be represented by a single numerical estimate. Exponentially growing risks may be the best way to describe new technologies, such as AI and synthetic biology. Such risks cannot be represented by a single annual probability. Finally, the probability estimation of a risk depends on whether human extinction is ultimately inevitable. We assume that if humanity becomes an interstellar civilization existing for millions of years, it will escape any near-term extinction risks; the heat death of the universe may be the ultimate end, but some think even that is escapable (Dvorsky, 2015). If near-term extinction is inevitable, it is possible to estimate which risks are more probable to cause human extinction (as actuaries do in estimating different causes of death, based in part on the assumption that human death is inevitable). If near-term human extinction is not inevitable, then there is a probability of survival, which is (1 - P(all risks)). Such conditioning requires a general model of the future. If extinction is inevitable, the probability of a given risk is just the probability of one path to extinction compared to other paths.
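The survival probability mentioned above, 1 - P(all risks), can be made concrete with a short Python sketch. It multiplies the per-risk survival chances, which is only valid if the risks are statistically independent; that assumption is ours, and it is precisely the interdependence the authors warn is usually ignored. The function name `combined_risk` and the three example probabilities are hypothetical.

```python
import math


def combined_risk(probabilities: list[float]) -> float:
    """P(at least one of the listed risks materializes), i.e. P(all risks).

    Assumes the risks are statistically independent (our simplification;
    chained or correlated catastrophes would need a joint model instead).
    """
    # Survival requires escaping every risk: product of (1 - p_i).
    survival = math.prod(1.0 - p for p in probabilities)
    return 1.0 - survival


# Hypothetical per-century probabilities for three risks (illustration only).
risks = [1e-6, 1e-3, 1e-2]
p_all = combined_risk(risks)
print(f"P(all risks) = {p_all:.4f}; P(survival) = {1.0 - p_all:.4f}")
```

Whatever the inputs, the combined figure never exceeds the simple sum of the individual probabilities (the union bound), so summing gives a quick upper estimate when only rough magnitudes are known.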
3.4 Preventability, prioritizing and relation to the smaller risks Using bare probability as a communication tool also ignores many aspects of risks which are important for decision makers. First, a probability estimate does not provide sufficient guidance on how to prioritize prevention efforts. A probability estimate does not say anything about the risk’s relation to other risks, e.g. its urgency. Also, if a risk will take place at a remote time in the future (like the Sun becoming a red giant), there is no reason to spend money on its prevention now. Second, a probability estimate does not provide much information about the relation between human extinction risks and the corresponding smaller global catastrophic risks. For example, a nuclear war probability estimate does not disambiguate between the chances that it will be a human extinction event, a global catastrophic event, or a regional catastrophe. Third, probability measures do not take preventability into account. Hopefully, measures will be taken to try to reduce X risks, and the risks themselves differ in how preventable they are. Generally speaking, it ought to be made clear when probabilities are conditional on whether prevention is attempted or not, and also on the probability of its success. Probability density and its relation to cumulative probability could also be tricky, especially as the probability density of most risks changes over time. 3.5 Use of probability orders of magnitude as a communication tool We recommend using magnitudes of probabilities in communicating about X risk. One way of overcoming many of the difficulties of using probabilities as a communication tool described above is to estimate probabilities with a fidelity of one or even two orders of magnitude, and to do so over a large fixed interval of time, namely the next 100 years (as this is the furthest horizon for which meaningful forecasts exist). This order-of-magnitude estimation will smooth over many of the uncertainties described above.
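The two devices this section relies on, a fixed 100-year window and probability bins a couple of orders of magnitude wide, can be sketched in Python. This is an illustration under stated assumptions, not the authors' procedure: `per_century` assumes a constant annual probability with independent years, and `two_order_bin` uses hypothetical bin edges at even powers of ten, since the section does not say where its interval boundaries fall. Both function names are ours. Fed the authors' figure of about one globally catastrophic impact per million years, `per_century` returns roughly 10^-4, the per-century value given for asteroid impacts in Table 3.

```python
import math


def per_century(annual_p: float, years: int = 100) -> float:
    """Probability of at least one event within `years` years.

    Assumes (our simplification) a constant annual probability `annual_p`,
    0 <= annual_p <= 1, with years independent of one another.
    """
    if annual_p >= 1.0:
        return 1.0
    # 1 - (1 - p)**years, computed via log1p/expm1 so tiny p keeps its precision.
    return -math.expm1(years * math.log1p(-annual_p))


def two_order_bin(p: float) -> tuple[float, float]:
    """Hypothetical bin edges: return (low, high) with low < p <= high, where
    high = 10**(-2k) and low = 10**(-2(k+1)), i.e. bins two orders of
    magnitude wide. Requires 0 < p <= 1."""
    k = math.floor(-math.log10(p) / 2)
    return 10.0 ** (-2 * (k + 1)), 10.0 ** (-2 * k)


# Background rate of globally catastrophic impacts: about 1 in a million years.
p_impact = per_century(1e-6)
print(f"per century: {p_impact:.1e}, bin: {two_order_bin(p_impact)}")
```

Because each bin spans two orders of magnitude, probabilities of 5% and 25% fall in the same bin, (0.01, 1], which is the authors' point that prevention actions are largely insensitive to the exact value.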
Further, prevention actions are typically insensitive to the exact value of the probability. For example, whether a given asteroid impact probability is 5% or 25%, the needed prevention action will be nearly the same. For X risks, we suggest using probability intervals of 2 orders of magnitude. Using such intervals will often provide meaningful differences in probability estimates for individual risks. (However, expert estimates sometimes range from “inevitable” to “impossible”, as with AI risks.) Large intervals will also accommodate the possibility of one risk overshadowing another, and other uncertainties which arise from the difficulties of defining and measuring X risks. This solution is itself inspired by the Torino scale of asteroid danger, which we discuss in more detail below. The Torino scale has five probability intervals, each with a two order of magnitude difference from the next. Further, such intervals can be used to present uncertainty in probability estimation. This uncertainty is often very large even for comparatively well-defined asteroid risks. For example, Garrick (2008) estimated the expected frequency of asteroid impacts on the contiguous US with at least 10,000 victims to be between once in 1,900 years and once in 520,000 years, with 90 percent confidence. In other words, the estimate spans more than 2 orders of magnitude of uncertainty. Of course, there is a lot more to be said about the relationship between X risks and probability; here, however, we restrict ourselves to the issues most crucial for our purpose, that is, designing a communication tool for X risks. 4. Constructing the scale of human extinction risks 4.1.
Existing scales for different catastrophic risks In section 2 we established the connection between global catastrophic risks, civilizational collapse risks, human extinction and X risks; in section 3 we explored the difficulty of using probabilities as a communication tool for X risks; now we can construct the scale to communicate the level of risk of all global catastrophic and X risks. Our scale is inspired by the Torino scale of asteroid danger, which was proposed by Professor Richard Binzel (Binzel, 1997). As it only measures the energy of impact, it is not restricted to asteroids but applies to other celestial bodies (comets, for instance). It was created to communicate the level of risk to the public, since professionals and decision makers have access to all the underlying data for the hazardous object. The Torino scale combines a 5-level color code and an 11-level numbered code. One of the Torino scale’s features is that it connects size and probability using diagonal lines, i.e., an event with a bigger size and smaller probability warrants the same level of attention as a smaller but more probable event. However, this approach has some difficulties, as described by Cox (2008). There are several other scales of specific global risks based on similar principles: 1. Volcanic explosivity index, VEI, 0-8 (USGS, 2017). 2. DEFCON (DEFense readiness CONdition, used by the US military to describe five levels of readiness), from 5 to 1. 3. “Rio scale” of the Search for Extra-Terrestrial Intelligence (SETI), a complex scale with three subscales (Almar, 2011). 4. Palermo scale of asteroid risks, which compares the likelihood of the detected potential impactor with the average risk posed by objects of the same size, measured both by energy and frequency (NASA, 2017). 5. San-Marino scale of risks of Messaging to Extra-Terrestrial Intelligence (METI) (Almar, 2007).
The only more general scale covering several global risks is the Doomsday Clock of the Bulletin of the Atomic Scientists, which shows global risks as minutes before midnight. It is oriented towards the risks of nuclear war and climate change and communicates only emotional impact (The Bulletin of the Atomic Scientists, 2017). 4.2. The goals of the scale How good a scale is depends in part on what it is intended to do: who will use it and how they will use it. There are three main groups of people the scale addresses: Public. Simplicity matters: a simple scale is required, similar to the Saffir-Simpson hurricane scale (Schott et al., 2012). This hurricane scale has 5 levels, which translate rather obscure wind readings into the expected damage to houses, and thus can help the public make decisions about preparedness and evacuation. In the case of X risks, personal preparedness is not very important, but the public decides which prevention projects to support directly (via donations or crowdfunding) and votes for policymakers who support such projects. Simplicity is necessary to communicate the relative importance of different dangers to a wide variety of nonexperts. Policymakers. We intend our scale to help initiate communication of the relative importance of the risks to policymakers. This is particularly important as it appears that policymakers tend to overestimate smaller risks (like asteroid impact risks) and underestimate larger risks (like AI risks) (Bostrom, 2013). Our scale helps to make such comparison possible, as it does not depend on the exact nature of the risks. The scale could be applicable to several groups of risks, thus allowing comparisons between them as well as providing a perspective on the whole situation. Expert community. Even a scale as simple as the one we suggest may benefit the expert community. It can act as a basis for comparing different risks assessed by different experts.
Given the interdisciplinarity inherent in studying X risk, this common ground is crucial. The scale could facilitate discussion about catastrophes’ probabilities, preventability, prevention costs, interactions, and error margins, as experts from different fields present arguments about the importance of the risks on which they work. Thus it will help to build a common framework for risk discussions. 4.3. Color codes and classification of the needed actions Tonn and Steifel suggested a six-level classification of actions to prevent X risks (Tonn & Steifel, 2017). It starts from “do nothing” and ends with “extreme war footing, economy organized around reducing human extinction risk”. We suggest a scale which is coordinated with Tonn and Steifel’s classification of actions (Table 1); that is, our colors correspond to the needed level of action. Our colors also correspond to typical qualitative descriptions of risk: theoretical, small, medium, serious, high and immediate. We also add iconic examples, which are risks whose probability distribution is known with a higher level of certainty, and which thus could be used to communicate a risk’s importance by comparison. Such examples may aid in learning the scale, or be used instead of the scale. For instance, someone could say: “this risk is at the same level as asteroid risk”. The iconic risks are marked in bold in the scale. Iconic examples are also illustrated with the best-known event of that type. For example, the best-known supervolcanic eruption was the Toba eruption 74,000 years ago (Robock et al., 2009). The Chicxulub impact 66 million years ago is infamous for its connection with the most recent major mass extinction, which included the extinction of the non-avian dinosaurs. The scale presents the total risk of one type of event, without breaking categories down into subrisks.
For example, it estimates the total risk of all known and unknown asteroids, but not the risk of any particular asteroid, which is a departure from the Torino scale. Although the scale is presented using probability intervals, it could be used in place of probabilities when they are completely unknown but other factors, such as those affecting scope and severity, are known. For example, we might want to communicate that AI catastrophe is a very significant risk, even though its exact probability estimation is complicated by large uncertainties. Thus we could agree to represent the risk as red despite the difficulties of its numerical estimation. Note that the probability interval (when it is known) for “red” is narrower, only 1 order of magnitude, as it is needed to represent the most serious risks, where we need better resolution. As it is a communication scale, the scientists using it could come to an agreement that a particular risk should be placed higher or lower on the scale. We don’t want to place too many restrictions on how different aspects of a risk’s severity (like preventability or connection with other risks) should affect its coding, as this should be established in the practical use of the scale. However, we will note two rules: 1. The purple color is reserved to present extreme urgency of the risk. 2. The scale is extrapolated to risks smaller than extinction and risks larger than extinction in Table 2. (This is based on the idea that smaller risks have a considerable but unknown probability of becoming human extinction risks, and also on the fact that policymakers may implement similar measures for smaller and larger risks.) 4.4. Extrapolated version of the scale which accounts for the risk size In Table 2 we extend the scale to include smaller risks like civilization collapse and global catastrophic risks, as well as “larger” risks like life extinction and universe destruction, in accordance with our discussion in section 2.
This is necessary because: 1) Smaller risks could become larger extinction risks by starting chains of catastrophic events. 2) The public and policymakers will react similarly to a human-extinction-level catastrophe and to a global catastrophe with some survivors: both present similar dangers to personal survival, and both call for similar prevention actions. [[TABLE 2 OMITTED]] 4.5. Assessing risks with shorter timeframes than 100 years In Table 2 above we assessed the risks for the next 100 years. However, without prevention efforts, some risks, climate change for instance, could approach a probability of 1 in less time. We suggest that the urgency of intervening in such cases may be expressed by raising their color coding. Moreover, the critical issue is less the timing of the risks than the timing of the prevention measures. Again, although extreme global warming would likely only occur at the end of the 21st century, it is also true that cutting emissions now would ameliorate the situation. We suggest, then, three ranks which incorporate these shorter-timeframe risks. Note that the timings relate to the implementation of interventions, not the timings of the catastrophes. 1) Now. This is when a catastrophe has started, or may start at any moment: the Cuban Missile Crisis is a historical example. We reserve purple to represent it. 2) “Near mode”. Near mode is roughly the next 5 years. Current political problems (such as current relations with North Korea) are typically understood in near mode. Such problems are appropriately explored in terms of planning and trend expectations. Hanson showed that people are very realistic in “Near mode”, but become speculative and less moral in “Far mode” thinking (Hanson, 2010). Near mode may require a one-level color code increase. 3) “Next 2-3 decades”. Many futurists predict a Technological Singularity between 2030 and 2050, that is, around 10-30 years from now (Vinge, 1993), (Kurzweil, 2006).
As this mode coincides with an adult’s working life, it may also be called “in personal lifetime”. In this mode people may expect to personally suffer from a catastrophe, or be personally responsible for incorrect predictions. MIRI recently increased its estimate of the probability that AGI will appear around 2035 (MIRI, 2017), pushing AGI into “next 2-3 decades” mode. There is a consideration against increasing the color code too much for near-term risks, as that may lead to myopia regarding long-term risks of human extinction. There will always be smaller but more urgent risks, and although these ought to be dealt with, some resources ought to be put towards understanding and mitigating the longer term. Having said this, in high-impact emergency situations, short-term overwhelming efforts may help to prevent impending global catastrophe. Examples include the Cuban missile crisis and fighting the recent Ebola epidemic in West Africa. Such short-term efforts do not necessarily constrain our long-term efforts towards preventing other risks. Thus, short-term global catastrophic and larger risks may get a purple rating. 4.6. Detailed explanation of risk assessment principles in the color-coded scale In Table 3, we estimate the main global risks according to the scale suggested in section 4.4. Table 3. Detailed explanation of the X risks scale (columns: Color code; Examples of risks) White Sun becomes red giant. Although this risk is practically guaranteed, it is very remote indeed. Natural false vacuum decay. Bostrom and Tegmark estimated such events as happening less than once in 1 billion years (that is, 10^-7 in a century) (Tegmark & Bostrom, 2005). Moreover, nothing can be done to prevent it. Green Gamma-ray bursts. Earth-threatening gamma-ray bursts are extremely rare, and in most cases they will result only in a crop failure due to UV increases.
However, a close gamma-ray burst may produce a deadly muon shower which may kill everything up to 3 km in depth (A. Dar, Laor, & N.J, 1997). However, such events could happen less than once in a billion years (10^-7 in a century) (Cirković & Vukotića, 2016). Such an event would probably kill all multicellular life on Earth. Dar estimates the risk of major extinction events from gamma-ray bursts as 1 in 100 million years (A. Dar, 2001). Asteroid impacts. No dangerous asteroids have been identified thus far, and the background level of global catastrophic impacts is around 1 in a million years (10^-4 in a century). Extinction-level impact probability is 10^-6 per century. There are several prevention options involving deflecting comets and asteroids. Also, food security could be purchased cheaply (Denkenberger, 2015). However, some uncertainty exists. Some periods involve intense comet bombardment, and if we are in such a period, investment in telescopes should be larger (Rampino & Caldeira, 2015). High energy accelerator experiments creating false vacuum decay/black hole/strangelet. Vacuum decay seems to have an extremely low probability, currently far below 10^-8. One obvious reason for expecting such events to have very low probability is that similar events happen in nature quite often and haven’t destroyed everything as yet (Kent, 2004). However, we give this event a higher estimate for two reasons. First, as accelerators become more capable, such events might become more likely. Second, the risks are at an astronomical scale: it could affect other civilizations in the universe. Other types of accelerator catastrophes, like mini-black hole or strangelet creation, would only kill Earth life. However, these are more likely, with one estimate being a risk of <2 × 10^-8 from a single facility (the Relativistic Heavy Ion Collider) (Arnon Dar, De Rújula, & Heinz, 1999), which should be coded white. There are many unknowns about dangerous experiments (Sandberg & Landry, 2015).
Overall, these risks should be monitored, so green is advisable. Yellow Supervolcanic eruption. Given historical patterns, the likelihood of living in a century containing a supervolcanic eruption is approximately 10^-3 (Denkenberger, 2014). However, the chance of human extinction resulting from one is significantly lower than this. If such an eruption produces global crop failure, it could end current civilization. Conventional wisdom is that nothing could be done to prevent a supervolcano from erupting, but some possible preventive measures have been suggested (Denkenberger, this issue). We estimate supervolcanic risks to be higher than asteroid impacts because of the historical record, as one likely nearly finished us off 74,000 years ago (Robock et al., 2009). Natural pandemic. A natural pandemic is fairly likely to kill 1% (to within an order of magnitude) of the global population during this century, as the Spanish flu did. However, such a pandemic is very unlikely to cause total extinction, because lethality is under 100% and some populations are isolated. Among natural pandemics, emerging pandemic flus have a shorter timespan and need much more attention. Bird flu has a case fatality rate above 50% (WHO, 2017) and could produce widespread chaos and possible civilizational collapse if human-to-human transmission starts. Therefore, we estimate a 10% probability this century of 10% mortality. Global warming triggering global catastrophe. According to the IPCC, anthropogenic global warming may affect billions of people by the end of the 21st century (Parry, 2007), causing heat waves, crop failures and mass migration. Those events, and downstream consequences such as conflicts, could conceivably kill 1 billion people. However, this would only occur in tail-risk scenarios, which have a probability on the order of 1%.
Having said this, several experts think that methane release from permafrost and similar positive feedback loops may result in runaway global warming with much larger consequences (Obata & Shibata, 2012). Orange Full-scale nuclear war. There is roughly a 0.02-7% chance per year of accidental full-scale nuclear war between the US and Russia (Barrett, Baum, & Hostetler, 2013). With fairly high probabilities of nuclear winter and civilization collapse given nuclear war, this amounts to a probability on the order of 10% this century. We should also take into consideration that, despite reductions in nuclear weapons, a new nuclear arms race is possible in the 21st century. Such a race may include more devastating weapons or cheaper manufacturing methods. Nuclear war could include the creation of large cobalt bombs as doomsday weapons, or attacks on nuclear power plants. It could also start a chain of events resulting in civilization collapse. Nanotechnology risks. Although molecular manufacturing can be achieved without self-replicating machines (Drexler & Phoenix, 2004), technological fascination with biological systems makes it likely that self-replicating machines will be created. Moreover, catastrophic uses of nanotechnology need not be accidental; they could also result from the actions of purposeful malign agents. Therefore, we estimate the chance of runaway self-replicating machines causing “gray goo”, and thus human extinction, to be one per cent in this century. There could also be extinction risks from weapons produced by safe exponential molecular manufacturing. See also (Turchin, 2016). Artificial pandemic and other risks from synthetic biology. An artificial multipandemic is a situation in which multiple (even hundreds of) individual viruses created through synthetic biology are released simultaneously, either by a terrorist state or as a result of the independent activity of biohackers (Turchin, Green, & Denkenberger, 2017).
Because the capacity to create such a multipandemic could arrive as soon as the next ten to thirty years (as all the needed technologies already exist), it could overshadow future risks, like nanotech and AI, so we give it a higher estimate. There are also other possible risks connected with synthetic biology which are widely recognized as serious (Bostrom, 2002). Agricultural catastrophe. There is about a one per cent risk per year of a ten per cent global agricultural shortfall occurring due to a large volcanic eruption, a medium asteroid or comet impact, regional nuclear war, abrupt climate change, or extreme weather causing multiple breadbasket failures (Denkenberger 2016). This could lead to 10% mortality. Red AI risks. The risks connected with the possible creation of non-aligned Strong AI are discussed by Bostrom (2014), Yudkowsky (2008), Yampolskiy & Fox (2013) and others. It is widely recognized as the most serious X risk. Such an AI could start an “intelligence explosion wave” through the Universe, which could prevent the appearance of other civilizations before they create their own AI. Purple Something like the Caribbean (Cuban Missile) crisis in the past, but of larger size. Currently, there are no known purple risks. If we could be sure that Strong AI will appear in the next 100 years and would probably be negative, it would constitute a purple risk. Another example would be the creation of a Doomsday weapon that could kill our species with global radiation poisoning (much greater ionizing radiation release than all of the current nuclear weapons) (Kahn, 1959). A further example would be a large incoming asteroid being located, or an extinction-level pandemic having begun. These situations require quick and urgent effort on all levels. Specifically---it’ll be a nano-war---that’s not survivable. Jamie L. McGaughran 10. University of Colorado at Boulder.
“Future War Will Likely Be Unsustainable for the Survival and Continuation of Humanity and the Earth’s Biosphere.” https://www.researchgate.net/profile/Jamie_McGaughran/publication/251232595_Future_War_Will_Likely_Be_Unsustainable_for_the_Survival_and_Continuation_of_Humanity_and_the_Earth's_Biosphere/links/0deec51ef553f71587000000.pdf The Exponential Advance of Technology and Its Implications on the Tool of War Technology is advancing at an extremely rapid rate, as has been evidenced by personal computing. One major component of this advance is the size of the technology. As we rapidly progress toward nanoscale technologies and their daily integration, life as we know it will be fundamentally different. Some scientists believe that the 21st century is going to be the age of nanotechnology (Kurzweil, 2005). This belief has encouraged many individuals to think on a microscale level. Since the advent of the modern microscope in the 19th century, the concept of the microscale of life has been and continues to be an important part of science in our world. For example, rides at Disney World’s Epcot theme park such as the 3D computer-generated ride ‘Body Wars’ (a video of this can be seen at http://www.youtube.com/watch?v=ybLGzie1mfU) provide individuals with some understanding of the nanoworld, as do pictorial graphics and 3D models of the microworld. Charts such as Figure 3 below provide an in-depth understanding of the microworlds on which our reality is based. Figure 3: Cutting it Down to Nano (Misner, 2007) In general, developed countries will be quicker to adapt to nanotechnologies than developing countries, mainly due to available resources and access to technology. Although we are in the early stages of developing and implementing nanotechnology, many people remain unaware of this scientific trend. Most people understand the concepts of germs and vaccinations. Many also understand the concept of atoms.
Yet there are many individuals who experience considerable difficulty understanding how billions of nanocomputers will be able to assist and mimic internal bodily functions. Nanotechnology is the next Industrial Revolution, and its early formative stages are underway. For many years scientists have sought to create artificial or synthetic life. Recently scientists made a breakthrough in the creation of synthetic life. Dr Craig Venter and his team announced this landmark discovery to an understandably mixed reaction. Although this will open up new medical treatments and energy developments and aid in ridding the world of pollution, it will also bring a whole range of potential negatives. “We have now accomplished the last piece on the list that was required to do what ethicists called playing God” (Gill, 2010). There are deep-seated ethical issues associated with this discovery, and because it is so new, there are as yet no real regulations. Thus weaponizing discoveries in this field immediately becomes a very dangerous reality (Gill, 2010). One of the Pentagon’s military arms, the Defense Advanced Research Projects Agency (DARPA), is working on creating synthetic organisms with built-in kill switches, providing evidence that this area of research is already being weaponized, with backup kill switches in case the subject decides to quit or switch sides against its creator (Drummond, 2010). Another example of this presently arriving nano-revolution can be seen with Tel Aviv University researcher Yael Hanein [having] succeeded in growing living neurons on a mass of carbon nanotubes that act as an electrode to stimulate the neurons and monitor their electrical activity. [This is] foundational research that may give sight to blind eyes, merging retinal nerves with electrodes to stimulate cell growth.
Until then, her half-human, half-machine invention can be used by drug developers investigating new compounds or formulations to treat delicate nerve tissues in the brain (American Friends of Tel Aviv University: Seeing a Bionic Eye on Medicine's Horizon, 2010). Other overlapping areas of research include transistors controlled by adenosine triphosphate (ATP), developed by researchers at Lawrence Livermore National Laboratory, with applications such as wiring prosthetic devices directly into the nervous system (Barras, 2010). Looking at how these technologies will affect one another is something we will have to reckon with in order to understand just how different tomorrow’s warfare will be. Noted futurist Kurzweil speculates that within the next two decades we will be living in a world capable of molecular manufacturing on a mass scale (Kurzweil, 2005). “Molecular manufacturing is a future technology that will allow us to build large objects to atomic precision, quickly and cheaply, with virtually no defects. Robotic mechanisms will position and react molecules to build systems to complex atomic specification” (Institute for Molecular Manufacturing, 1997). Progress in numerous prototype situations with nearly endless possibilities is continuously being achieved. In the near future we may see viruses being used as batteries to power artificial immune systems patrolling alongside our natural immune systems within the human body (Researchers Build Tiny Batteries with Viruses, 2006). In consideration of future developments of this nature, the coming decades may look completely alien to our current understanding and perception of reality. Science is fast working on developing artificial immune systems to complement and enhance our very capable natural immune systems (Burke and Kendall, 2010).
Our world may seem to be evolving more slowly than it really is, because when these technologies mature and come to market, things will operate and look very different in our day-to-day reality. We could see the weaponization of synthetic organisms complete with artificial immune systems storming the battlefield or, worse, civilian areas. Nanotechnology has the potential to radically transform our world and species in the coming decades of the 21st century. Conversely, negative effects have been experienced as a result of new nanotechnologies. For example, a nanotech consumer product claiming an active nanoscale ingredient was recalled in Germany for public health reasons in 2006. “At least 77 people reported severe respiratory problems over a one-week period at the end of March -- including six who were hospitalized with pulmonary edema, or fluid in the lungs -- after using a ‘Magic Nano’ bathroom cleansing product, according to the Federal Institute for Risk Assessment in Berlin” (Thayer, 2006). The good news in nano news is that thus far there is not much bad news with regard to the number of nano-victims (Talbot, 2006). There will, however, be unforeseen negative consequences, which we will discover and learn about as they arise. “If we had given foresight to how the invention or discovery of electricity, factories, automobiles, nuclear power and the Internet might affect people and society, we might have done a much better job in managing their negative consequences - such as economic disruption, urban sprawl, pollution, nuclear arms race and high-tech crimes,” explained Patrick Lin, research director for The Nanoethics Group (Rockets, 2006). In my research, I found few existing cases that allow an in-depth exploration of what harm nanotechnologies have brought or will bring. But there is foresight and speculation about what they could bring.
“The National Institute for Occupational Safety and Health, which conducts research on workplace safety, has no recommended exposure limit guidelines for nanomaterials, and the Occupational Safety and Health Administration has no permissible exposure limit specific to engineered nanomaterials” (Environmental Health Perspectives: No Small Worry: Airborne Nanomaterials in the Lab Raise Concerns, 2010). “The National Institute for Occupational Safety and Health also reports that some recent animal toxicology studies suggest nanomaterials may cause specific health effects [such as] carbon nanotubes having been shown to induce inflammation and oxidative stress in animal models” (Environmental Health Perspectives: No Small Worry: Airborne Nanomaterials in the Lab Raise Concerns, 2010). Consequently, the question remains whether the nano transformation will be a blessing or a curse. Although nanotechnology carries great promise, serious threats to the survival of the human race and the well-being of the biosphere may arise (Nanotechnology Research, 2010). We are moving at an accelerating pace toward a future of wireless energy and information, in which cables used for power transfer will be replaced by wireless transmission. Wireless power technologies are one example of this shift. New Scientist magazine, among other scientific publications, has published various articles on power cables being phased out and power transfer becoming wireless (Robson, 2010). Concurrently, efforts are in progress toward reverse engineering the human brain and applying the brain’s capabilities, resources and design to AI, intelligence amplification/augmentation (IA), robotics and computers. Presently there is a documentary underway that is actively filming the reverse engineering of the mammalian brain. One major area of work underway is called The Blue Brain Project.
This “is the first comprehensive attempt to reverse-engineer the mammalian brain, in order to understand brain function and dysfunction through detailed simulations” (Blue Brain Project, 2010). Experts predict that once the human brain is successfully reverse engineered, life as we know it will advance to a significantly higher technological level. Figure 4 illustrates the implications of this through the exponential growth of supercomputer performance, measured in floating point operations per second (FLOPS). Figure 4: Growth in supercomputer power in FLOPS (floating point operations per second) (Kurzweil, 2005). In his landmark book, “The Singularity is Near”, Ray Kurzweil argues that there are three overlapping revolutions slated to change the very nature of our reality. According to Kurzweil, these are the exponentially advancing fields of Genetics, Nanotechnology and Robotics. With regard to genetics, it is predicted that the field of bioinformatics will grow exponentially from the integration of the fields of information, technology and biology. In the areas utilizing nanotechnology, we will see the intersection of technology and information with the organic and inorganic world. This will likely lead to biological assembly and molecular manufacturing that may create startling changes in global societies. This will also likely aid in augmenting the intelligence of human beings through methods such as direct connection to the Internet and the world’s bank of knowledge from a connection attached to or within one’s brain, which, in turn, may very well radically transform the concept of ‘humanness’. In addition, there is the third field of Robotics, which Kurzweil and others say will become infused with ‘Strong AI’ (Artificial Intelligence that matches or exceeds human intelligence). Proponents of Strong AI forecast that computers and machines can reach and surpass human levels of intelligence, or the ability to make decisions based on rational thinking.
The existence and validity of a Strong AI are hypothesized to be equal to those of a biological human. When anything, whether biological or non-biological, is ‘truly intelligent’, then it can be considered to have a mind in the sense that people do (Kurzweil, 2005). Mind, meaning the ability to comprehend, conceptualize, calculate, resourcefully solve problems and pass the famous Turing Test, is then as valid in a machine as in a human being. The Turing test is a criterion for machine intelligence: an artificial intelligence passes if a person interacting with it cannot tell whether they are interacting with a human or with a robot or artificial intelligence of some form (Harnad, 1992). It is conjectured in some scientific circles that when molecular manufacturing comes of age it will represent a significant technological breakthrough comparable to that of the Industrial Revolution, only accomplished in a much shorter period of time (Nanotechnology: Dangers of Molecular Manufacturing, 2010). The late Nobel Prize winner in physics, Richard Feynman, spoke of wanting to build billions upon billions of tiny factories, exact duplicates of each other, which would ceaselessly manufacture and create anything atom by atom. Feynman is quoted as saying “The principles of physics, as far as I can see, do not speak against the possibility of maneuvering things atom by atom. It is not an attempt to violate any laws; it is something, in principle, that can be done” (Hey, 2002). These types of developments may very well require a new paradigm in thinking. It appears that we are moving into the realm of microscale coherence, intelligence, computing and information storage. Multiple fields such as bioinformatics, robotics and AI are experiencing full-scale technological revolutions and opening up new subfields such as quantum computing and molecular manufacturing.
Although we continue to see and experience technological advancement in the macro world, as illustrated by ever smaller, faster computers, space satellites and the beginnings of commercial space flight, it is in the microworld that the biggest changes are yet to come. We have been creating new technologies and refining age-old technologies, and continue to do so with tremendous will, driven by the interconnectivity and convenience they bring. With each passing year our world becomes more interconnected. Examples include telephones, computers, the Internet, email, and social networking sites such as Facebook, among others. It appears that not only are we interconnected but we are also interdependent due to these advancements and our state of technological progress. This has resulted in great advantages to people worldwide. Global interdependency can best be seen in global trade, transportation and shipping. There are also many companies, such as Federal Express and Coca Cola, that are present all over the globe. Chinese exports flow to various countries, and U.S. computer operating systems such as Microsoft Windows and Apple Mac OS are in use worldwide. However, interconnectivity and interdependence also have downsides. Prior to global political organizations such as the United Nations and the World Trade Organization, many nations exercised considerable self-sufficiency. Many countries were not nearly as interdependent in the past as they currently are, and although this economic comparative advantage brings about tremendous benefits, it also brings potential disadvantages. Given the interdependence of our present civilization, another world war would not only affect everyone on the planet but would most certainly doom countless millions, possibly even billions, of people if energy and food supply lines were disrupted, as in all likelihood they would be.
The point here is simple. We are interconnected and interdependent, and this comes with both pros and cons. This was seen in both World Wars I and II. Thus future war can turn economic comparative advantage into disadvantage for many countries, regions, and peoples. This is yet another item on a long list of reasons why future war will likely be unsustainable and devastating to human and biospheric prosperity. Customarily the most advanced technologies are used in war. They are frequently invented out of the interest of national defense or offense. Exponentially advancing technologies appear to have a destructive aspect in relation to this. These technologies can be used to kill on levels that may very well exceed all other known levels. Presently, the human species engages in war primarily on the macro level. What happens when we open up the microworld of warfare alongside the macro world? Will we conduct war in ways never before seen and in ways unable to be seen physically? Technologies ranging from the ability to cloak jets and submarines to insect-sized and smaller robots storming the battlefield are already operational. The U.S. and UK already have over 8,000 robots in use on the ground and in the air in wars in Iraq and Afghanistan (Bowlby, 2010). There are deadly remote-controlled flying drones that kill insurgents from the sky and bomb disposal robots that save lives by disarming explosives on the ground. Iraq and Afghanistan have been more than battle fronts; they have been technological testing grounds for robotic military hardware. A new prototype currently being developed and tested by the Pentagon is a robot called the Energetically Autonomous Tactical Robot or EATR. “It can refuel itself on long journeys by scavenging for organic material - which raises the haunting spectre of a machine consuming corpses on the battlefield” (Bowlby, 2010).
Dr Robert Finkelstein of Robotic Technology Inc, the inventor of the machine, insists it will consume “organic material, but mostly vegetarian” (Bowlby, 2010). At present, we are in a global technological race. There appears to be no limit to how sophisticated and powerful computers will become, nor is there an end in sight to how destructive bombs or weapons may become. Moore’s Law is a great example of this, as explained earlier. With regard to the macro level, it is commonly understood that there is a continual increase in the power of explosives. Until recently, the U.S. had the most powerful non-nuclear weapon in the history of human civilization. A satellite-guided air bomb named the ‘Massive Ordnance Air Blast’ was unrivaled in non-nuclear explosivity and aptly nicknamed the ‘Mother Of All Bombs’ (Russian military uses nanotechnology to build world's most powerful non-nuclear bomb, 2007). In 2007 the Russians developed a significantly more explosive non-nuclear weapon. As with other exponentially advancing technologies, such as computers and robotics, the Russian bomb is smaller in size than its U.S. counterpart while being significantly more powerful. It is reported “that while the Russian bomb contains about 7 tons of high explosives compared with more than 8 tons of explosives in the U.S. bomb, it's four times more powerful because it uses a new, highly efficient type of explosives [that were] developed with the use of nanotechnology” (Russian military uses nanotechnology to build world's most powerful non-nuclear bomb, 2007). It is reported that the blast radius of the Russian bomb is more than twice as large as that of the U.S. bomb and, in TNT-equivalent terms, the Russian bomb rates at 44 tons of regular explosives compared to 11 tons for the U.S. bomb (Russian military uses nanotechnology to build world's most powerful non-nuclear bomb, 2007). This further fuels the race for the most powerful war capabilities. The U.S.
is not sitting idle, with a defense budget that is nearly as large as that of the rest of the world combined (List of countries by military expenditures, 2009). The U.S. military will spend about $1.7 billion on ground-based robots in the next five years, covering the 2006-2012 period, according to figures reported by a defense analyst from the National Center for Defense Robotics, a congressionally funded consortium of 160 companies (U.S. will spend $1.7B on military robots, 2007). According to Frank Allen, operations director for Florida State University's High Performance Materials Institute, “the U.S. military is using nanotechnology to make lighter body armor that is more durable, flexible and shellproof. In addition, they are using the same nanofabric in developing a super-strong, extra-light ‘unmanned aerial vehicle’ that could be carried into battle, unfolded and launched over the horizon to spy on [or kill] the enemy. A soldier could carry it in his backpack” (Tasker, 2009). This nanomaterial is known as “buckypaper, made from thin sheets of carbon nanotubes, carbon that has been vaporized and reformed into particles only a few atoms in size, becoming many times lighter and stronger than steel” (Tasker, 2009). It is also reported that Iran is making advanced attack drones, i.e., military robo-planes that are “capable of carrying out assaults with high precision” (Iran to make 'advanced' attack drones – Telegraph, 2010). War as usual on the macro level alone is not sustainable and may lead to severe consequences if it is waged unabated in the coming decades. However, this is not the only threat to humanity and the biosphere. According to this author’s research, war on the micro level could be deadlier still, possibly by orders of magnitude. Figure 3 below provides a look at our technological evolution into the nanoworld.
Enter: Micro War. Figure 3: Nanotechnology scale (science.doe.gov). Human technology is moving more towards the nano and micro scales, as can be seen from Figure 3. According to the Center for Responsible Nanotechnology (CRN) (Nanotechnology: Dangers of Molecular Manufacturing, 2010), when molecular manufacturing begins, this technology has the potential to open an unstable arms race between competing and socially differing nations. The mere overuse of cheap nanoproducts alone could inflict widespread environmental damage. This pales in comparison with the environmental effects of using nanotechnology as weaponry. Nanofactories would be small enough to fit in a suitcase and could unleash an unfathomable number of payloads. One scenario the CRN raises is the possibility of a nanotechnology capable of driving the human species to extinction. They use the size of small insects, approximately 200 microns, to estimate the plausible size of a nanotech-built antipersonnel weapon capable of seeking out unprotected humans and injecting them with toxin. The human lethal dose of botulism toxin [a natural toxin manipulated by humans] is about 100 nanograms, or about 1/100 the volume of the weapon. As many as 50 billion toxin-carrying devices, theoretically enough to kill every human on earth, could be packed into a single suitcase (Nanotechnology: Dangers of Molecular Manufacturing, 2010). Nanowarfare will change how war is conducted. Soldiers will not be required to be on the battlefield when micro killing devices can be more effective and potentially remain invisible and mysterious to the enemy forces. As a result of small integrated computers coming into existence, nanoscale weapons could be aimed at remote targets in time and space. Consequently, this will not only impair the target’s defenses, but will also reduce post-attack detection and accountability of the attacking party (Nanotechnology: Dangers of Molecular Manufacturing, 2010).
More than just human civilization is threatened with extinction from weaponized nanotechnology. The entire planet could be devastated and our biosphere could be irreversibly damaged. Nanoweapons can take myriad forms. They can be eco-friendly in one attack and eco-devastating in another. According to Admiral David E. Jeremiah, Vice Chairman (ret.), U.S. Joint Chiefs of Staff, in an address at the 1995 Foresight Conference on Molecular Nanotechnology, “Military applications of molecular manufacturing have even greater potential than nuclear weapons to radically change the balance of power.” He describes nanotechnology’s potential to destabilize international relations. Arms Control Turn---Nuke War Key Nuke war won’t cause extinction---BUT, it’ll spur political will for meaningful disarmament. Daniel Deudney 18. Associate Professor of Political Science at Johns Hopkins University. 03/15/2018. “The Great Debate.” The Oxford Handbook of International Security. www.oxfordhandbooks.com, doi:10.1093/oxfordhb/9780198777854.013.22. Although nuclear war is the oldest of these technogenic threats to civilization and human survival, and although important steps to restraint, particularly at the end of the Cold War, have been achieved, the nuclear world is increasingly changing in major ways, and in almost entirely dangerous directions. The third “bombs away” phase of the great debate on the nuclear-political question is more consequentially divided than in the first two phases. Even more ominously, most of the momentum lies with the forces that are pulling states toward nuclear-use, and with the radical actors bent on inflicting catastrophic damage on the leading states in the international system, particularly the United States. In contrast, the arms control project, although intellectually vibrant, is largely in retreat on the world political stage.
The arms control settlement of the Cold War is unraveling, and the world public is more divided and distracted than ever. With the recent election of President Donald Trump, the United States, which has played such a dominant role in nuclear politics since its scientists invented these fiendish engines, now has an impulsive and uninformed leader, boding ill for nuclear restraint and effective crisis management. Given current trends, it is prudent to assume that sooner or later, and probably sooner, nuclear weapons will again be used in war. But this bad news may contain a “silver lining” of good news. Unlike a general nuclear war that might have occurred during the Cold War, such a nuclear event now would probably not mark the end of civilization (or of humanity), due to the great reductions in nuclear forces achieved at the end of the Cold War. Furthermore, politics on “the day after” could have immense potential for positive change. The survivors would not be likely to envy the dead, but would surely have a greatly renewed resolution for “never again.” Such an event, completely unpredictable in its particulars, would unambiguously put the nuclear-political question back at the top of the world political agenda. It would unmistakably remind leading states of their vulnerability. It might also trigger more robust efforts to achieve the global regulation of nuclear capability. Like the bombings of Hiroshima and Nagasaki that did so much to catalyze the elevated concern for nuclear security in the early Cold War, and like the experience “at the brink” in the Cuban Missile Crisis of 1962, the now bubbling nuclear caldron holds the possibility of inaugurating a major period of institutional innovation and adjustment toward a fully “bombs away” future. Arms control now both creates complacency AND prevents armed systemic shocks that are key to meaningful long-term disarmament. Richard Falk & David Krieger 16.
Falk is Professor Emeritus of International Law and Practice, Princeton University, and Senior Vice President of the Nuclear Age Peace Foundation; Krieger is a founder of the Nuclear Age Peace Foundation and has served as its President since 1982. 07/02/2016. “A Dialogue on Nuclear Weapons.” Peace Review, vol. 28, no. 3, pp. 280–287. FALK: I approach the underlying issues in a different and more critical manner. In my view, the United States has long adopted what I would call a “managerial” approach to nuclear weapons, seeking to reduce risks associated with accidents, to control expenses arising from development and deployment, to promote diplomatic solution of disputes involving other states possessing nuclear weapons (for example, India–Pakistan), and most of all, to confine access to the weaponry by limiting the size of “the nuclear club” through imposing a geopolitically enforced non-proliferation regime to supplement the NPT. The use of punitive sanctions and war threats directed at Iran and the efforts to denuclearize North Korea are illustrative of how this regime operates. As has been widely observed, the disarmament obligations of the NPT have been violated for decades, but this has been obscured by successfully persuading the public to interpret the treaty from a managerial perspective. It is important to realize that the geopolitical regime is only concerned with stopping unwanted proliferation. The United States uses its political muscle to defuse pressures based on the treaty that mandates denuclearization. In short, unless the managerial approach is distinguished from the disarmament approach there will be no hope of achieving a world without nuclear weapons. And as the managerial approach is deeply embedded by now in the governmental bureaucracies of the major nuclear weapons states, only a popular mobilization of civil society can create a national and global political climate conducive to nuclear disarmament. 
From this perspective, I disagree mildly with your proposition that the reduction in the overall size of nuclear weapons arsenals “is a move in the right direction.” I believe the move is best understood as a managerial tactic that is not at all motivated by a disarmament ethos or goal, and thus from my point of view is a move in the wrong direction. It represents a continuation of the effort to retain the weaponry, but limits costs and risks of doing so, while fooling the public about the stability and acceptability of existing nuclear policy. It is hard to envision this sea change from management to disarmament taking place without some kind of shock to the system, which is itself a separate cause for deep concern that should further worry us. Those of us dedicated to the moral, legal, and prudential necessity of ridding the world of nuclear weapons must not shy away from the hard work of achieving through peaceful means such a paradigm shift in policy and public awareness. KRIEGER: I understand and appreciate your concerns for the dangers of the “managerial” approach to nuclear weapons. While I would still rather have fewer nuclear arms in the world than more, I realize that the reduction in numbers alone is not forging a path to nuclear zero. As long as nuclear weapons exist in the arsenals of some nations, there will be the possibility that these weapons may be used by any possessor nation by accident, miscalculation, insanity, or intention. The weapons may also proliferate to other nations and terrorist organizations and pose similar problems or worse. You say that a “shock to the system” may be needed. I have always believed that the failure to grasp the likelihood of such a shock (a nuclear detonation or nuclear war), which is inherent in the current system of nuclear “haves” and “have-nots,” reflects a failure of imagination and maturity of the human species. 
It is not only possible but probable that over time humanity will experience the shocks of nuclear detonations in the context of nuclear accidents, miscalculations, terrorism, or war. It is quite imaginable, and it does not speak well for the political and military leaders of the nuclear-armed nations who remain committed to nuclear weapons in the face of such potential catastrophes. In fact, the leaders of the nuclear-armed countries, particularly the permanent five (P5) members of the United Nations Security Council, have joined together in protecting their nuclear arsenals from nuclear disarmament, rather than taking true steps to negotiate the prohibition and elimination of their nuclear arsenals. It must also be said that the publics in the nuclear-armed countries don’t seem to realize that they are already targets of other countries’ nuclear weapons, and that their own weapons do not physically protect them from nuclear attack. Public complacency regarding nuclear dangers is appallingly high. I think of this complacency in terms of the acronym ACID, with the initials standing for apathy, conformity, ignorance and denial. To break through the complacency and achieve public engagement, it is necessary to move from apathy to empathy; conformity to critical thinking; ignorance to wisdom; and denial to recognition of the serious danger posed by nuclear weapons. This is truly hard work, and I don’t know if it will actually be possible to penetrate the shields surrounding the ACID of complacency. There are no easy answers, but I think that once one is awakened to the pressing dangers of nuclear weapons, it is not enough to simply await a catastrophic shock to the global system. The path to a world free of nuclear weapons must be cleared of false assumptions about the security provided by nuclear weapons and nuclear deterrence. 
Some steps that could be taken, although partly managerial, go beyond that to opening the way to actual progress toward achieving a world free of nuclear weapons. These include: first: taking all existing nuclear weapons off of high-alert status; second: pledging policies of No-use of nuclear weapons against non-nuclear weapon states, and No-first-use against nuclear-armed states; third: reinstating the Anti-Ballistic Missile Treaty, which the United States unilaterally abrogated; fourth: ratifying the Comprehensive Test Ban Treaty and ceasing all nuclear tests, including subcritical testing; fifth: safeguarding internationally all existing weapons-grade nuclear materials; sixth: reaching an interim agreement on ceasing modernization of nuclear arsenals; and seventh: convening negotiations among the nuclear-armed countries for total nuclear disarmament. Can we agree that these would be useful steps in moving forward on the path to nuclear zero? Of course, taking these steps will require strong leadership. FALK: Your response to my insistence that we recognize the managerial steps taken to stabilize the geopolitics of nuclearism for what they are is insightful, but still not sufficiently responsive conceptually and politically to satisfy me. I believe we need to take full account of the existing world order hierarchy, which privileges nuclear weapons states, especially the P5, in relation to non-nuclear states and the peoples of the world. Such an arrangement is made tolerable by the false consciousness that arms control measures are somehow an expression of a long-range, yet authentic, commitment to nuclear disarmament in conformity with the NPT. I believe a truer understanding reverses this impression. These steps taken from time to time are part of a pernicious plot to retain nuclear weapons indefinitely, or at least until some kind of unprecedented challenge is mounted, either through a powerful transnational social movement, or as a result of transformative events (e.g., a regional war in which nuclear weapons are used or rapid proliferation in the global South).
It is important to appreciate that, in addition to the geopolitics of nuclearism that embodies status, destructive capabilities, and the ultimate weaponry of state terrorism, there are two further reinforcements of the nuclear weapons status quo: first, nuclear weapons establishments deeply entrenched in the governmental bureaucracies and further supported by private sector benefactors of the states that retain the weapons and second, a public pacified by a phenomenon you have summarized by the acronym of ACID. Until the antinuclear movement addresses all three as the main obstacles to the credible pursuit of a world without nuclear weapons, I believe genuine progress toward our shared goals will be illusory. I will go further and argue that arms control steps may even contribute to maintaining the policies we most oppose, namely, a world order that legitimizes “the geopolitics of nuclearism” by reassuring the public that the managerial mentality is capable of avoiding nuclear catastrophe as well as possessing a genuine, yet prudent and realistic, commitment to nuclear disarmament. I view your seven steps through the prism of an overriding commitment to achieving a world without nuclear weapons, getting to zero. If these steps, especially the first five, are taken as desirable steps without being combined with a critique of the three pillars of nuclearism I mentioned above, the “improvements” made will not lead in the direction of nuclear disarmament, and indeed will make that goal more remote, and seem increasingly utopian and irrelevant. It is my view that your sixth and seventh steps are presently outside the domain of political feasibility, and will remain so, as long as nuclearism is sustained by the three pillars.
Given these circumstances, I think that we need to give priority educationally and politically to this critique, and let others commend the government for its managerial initiatives whenever these are undertaken, while we keep our eyes focused on the urgency and practicality of a true embrace of denuclearizing goals. Perhaps we should reflect upon the words of Karl Jaspers in his 1961 book, The Future of Mankind: “In the past, folly and wickedness had limited consequences, today they draw all mankind into perdition. Now, unless all of us live with and for one another, we shall all be destroyed together.” Although written in 1961, such a sentiment is truer now than it was then, and the crux of this awareness is a species’ death dance with nuclearism. KRIEGER: Your critique of the current geopolitics of nuclearism as embodying the ultimate weapons of state terrorism, reinforced by entrenched bureaucracies and private sector beneficiaries, along with a complacent public, is very powerful and rings true. Nuclearism has withstood the forces of rationality, decency, and desire to end the nuclear weapons threat to humanity for more than seven decades without any significant cracks in the metaphorical walls that protect the nuclear status quo. This deeply ingrained reliance on nuclear weapons, you’ve described as “three pillars of nuclearism”: deep-rooted reliance on the ultimate weaponry of state terrorism; national security bureaucracies and private sector supporters; and a complacent public. I see nuclearism slightly differently, with the entrenchment of state terrorism (reliance on nuclear weapons) being the result of the national security elites who have been willing to take major risks; in effect, to gamble with the lives of their fellow citizens as well as people throughout the world. They have made the bet that nuclear deterrence will work under all circumstances to protect their respective countries; in my view, this is a very foolish bet.
These national security elites, and the bureaucracies and private sector interests that support them, are the drivers of nuclearism. The best chance to depose them and reverse the nuclearism they have espoused and entrenched is by awakening the public from its complacency. This is the challenge of those who are already awakened to the urgent dangers posed by reliance on nuclear weapons. We are struggling for the heart of humanity and for the future of civilization. That said, I would prefer to push for some measures that would lessen the likelihood of nuclear war, nuclear proliferation, and nuclear terrorism, while still attempting to unbalance the three pillars of nuclearism, and destroy these by means of identifying and discrediting the bureaucracies of state terrorism for what they are. The key, it seems to me, is to shift public perceptions from viewing nuclear weapons as being protective, which they are not, to seeing them as an existential threat to all that each of us loves and treasures. Thank you for sharing the quote by Karl Jaspers. He suggests that in the Nuclear Age more is demanded of us than ever before. He echoes the sentiments of Bertrand Russell and Albert Einstein in the great Russell-Einstein Manifesto issued in 1955. We all have a choice, and collectively our choices will determine whether humanity is to survive the Nuclear Age. If we leave our future to the high priests of nuclearism, there is little hope for humanity. But we still have a choice about our future, and we can choose a new path for humanity. I am committed to choosing hope and never giving up on creating a decent human future. I know that you are as well.
In response to the Jaspers quote, I will share a favorite quotation by Erich Fromm: “For the first time in history, the physical survival of the human race depends on a radical change of the human heart.” It is our human ingenuity that has brought us to the precipice of oblivion in the Nuclear Age; to back away from that precipice will require, as Fromm argues, “a radical change of the human heart,” a change that will end our “species’ death dance with nuclearism,” and put us on the path to a world free of nuclear weapons. FALK: I think we have reached a useful point of convergence, although I want to highlight your unqualified remark, “I would prefer to push for some measures that would lessen the likelihood of nuclear war,” as giving clarity to our disagreement. Pushing for these measures, in the arms control mode, may lessen the short-term prospects of nuclear war but repeatedly, as experience has shown, at the cost of increased complacency and denial adding to long-term risks. This tradeoff between stabilizing and managing nuclearism and transforming and ending nuclearism is not imaginary, and those who want to embrace both arms control and disarmament approaches seem obligated to show their existential compatibility. Their conceptual compatibility can be affirmed, but after 70 years of frustration with respect to the quest for nuclear disarmament it is time to expose what seems to be the ultimate form of a Faustian Bargain. Every empirical example of effective arms control followed a nuclear crisis. Matthew Fuhrmann 16. Associate professor of political science at Texas A&M University. 11/2016. “After Armageddon: Pondering the Potential Political Consequences of Third Use.” Should We Let the Bomb Spread?, edited by Henry D. Sokolski, United States Army War College Press, http://www.dtic.mil/dtic/tr/fulltext/u2/1021744.pdf. The discussion in this section so far assumes that the third use of nuclear weapons would negatively affect the nonproliferation regime.
It is also possible, and somewhat paradoxical, that nuclear use would result in a stronger regime. The international community often reacts to disasters by instituting sweeping reforms. Most of the major improvements to the nonproliferation regime since 1970 resulted from crises of confidence in existing measures. India’s nuclear test in 1974 led to the creation of the Nuclear Suppliers Group (NSG), a cartel designed to regulate trade in nuclear technology and materials. Iraq’s violations of the NPT prior to the 1991 Persian Gulf War caused the international community to give the International Atomic Energy Agency (IAEA), the main enforcer of the NPT, more teeth through the 1997 Additional Protocol. In addition, the international community sought to strengthen global export controls by passing United Nations Security Council Resolution 1540 after the public exposure of the A. Q. Khan network, a Pakistani-based operation that supplied nuclear weapon-related technology to Iran, Libya, and North Korea. As these examples illustrate, sweeping reforms are sometimes possible in a time of crisis. The third use of nuclear weapons would no doubt be horrific. It might, therefore, create a broad international consensus to strengthen nonproliferation norms in an attempt to lower the odds that the bomb would be used a fourth time. This does not imply that the third use of nuclear weapons would be a good thing. The negative consequences would outweigh any marginal improvement in the nonproliferation regime resulting from nuclear use. Movements will literally overthrow recalcitrant governments. Nuclear use makes the audience costs huge. Steven R. David 18. Professor of Political Science at Johns Hopkins University. 2018. “The Nuclear Worlds of 2030.” Fletcher Forum of World Affairs, vol. 42, pp. 107–118. CATASTROPHE AND THE END OF NUCLEAR WEAPONS In the year 2025, the world very nearly came to an end. 
Smarting after several years of economic downturn and angry at American efforts to encircle it with NATO bases, Russia responded to a "plea" for help from co-ethnics in the Baltic states. Thousands of Russian troops, disguised as contract "volunteers," dashed across international borders allegedly to protect Russian speakers from governmental assaults. The Baltic countries invoked Article 5 of the NATO Treaty while American forces, deployed there precisely to deter this kind of aggression, clashed with Russian troops. Hundreds of Americans were killed. Washington warned Moscow to halt its invasion, to no avail. The United States then prepared for a major airlift of its forces to the beleaguered countries, with Moscow threatening America with "unrestrained force" if it followed through. Washington ignored the threat and Moscow, seeking to "de-escalate by escalating," destroyed the American base of Diego Garcia in the Indian Ocean with a nuclear-armed cruise missile. The United States responded with limited nuclear strikes against Russian bases in Siberia. Thus far, the collateral damage had been kept to a minimum, but this bit of encouragement did not last. Fearing a massive American pre-emptive strike aimed at disarming its nuclear arsenal, Russia struck first against the range of US nuclear forces both in the United States and at sea. America responded with its surviving weapons, destroying much (but not all) of the remaining Russian nuclear arms. And then, both sides took a breather, but it was too late. Although cities had been largely spared, millions had died on each side. Making matters worse, predictions of nuclear winter came to pass, producing massive changes in the weather and killing millions more, especially in developing states. The world finally had had enough. A dawning realization emerged that leaders of countries simply could not be trusted with weapons that could destroy humankind. Protests swept the globe calling for total disarmament.
Mass demonstrations engulfed the United States and Russia, demanding the replacement of their existing governments with ones committed to ending nuclear weapons. Voices calling for more moderate disarmament that would preserve a modest nuclear deterrent were angrily (and sometimes violently) quashed. The possession of nuclear weapons became morally repugnant and unacceptable. No longer were the intricacies of nuclear doctrine or force levels subject to debate. The only question remaining was how one could get rid of these loathsome weapons as quickly as possible. Under the auspices of the United Nations, a joint committee composed of the Security Council members, other countries known to possess nuclear arms, and several non-nuclear powers was established. Drawing on the structure and precedent of the Chemical Weapons Convention, this UN body drew up the Treaty that called for the complete disarmament of nuclear arms by 2030. The development, possession, and use of nuclear weapons were prohibited. An airtight inspection regime, enhancing the procedures already in existence through the Non-Proliferation Treaty, was established to first account for all nuclear arms and fissile material and then monitor the destruction of the nuclear weaponry. All countries were subject to the Treaty, whether they maintained nuclear facilities or not. Violations would produce a range of punishment from global economic sanctions to massive conventional attack. By 2030, all the nations of the world had agreed to the Treaty. No violations occurred. Armed conflicts persisted, but they proved to be of modest scale, erupting only within countries, not between them. Insofar as the fear of nuclear weapons helped keep the peace during the Cold War and post-Cold War eras, the horror of nuclear use now made war all but unthinkable.
A feeling of relief swept the globe as the specter of nuclear holocaust vanished, tempered only by the painful regret that it took the death of millions to realize a goal that for so many had been self-evident since 1945. Random Tech Backlines Random Tech Extinction---1NC The military is developing isomer bombs---even just testing will destroy the Universe Gary S. Bekkum 4, Founder of Spacetime Threat Assessment Report Research, Founder of STARstream Research, Futurist, “American Military is Pursuing New Types of Exotic Weapons”, Pravda, 8-30, https://www.pravdareport.com/science/5527-weapons/ In recent years it has been discovered that our universe is being blown apart by a mysterious antigravity effect called "dark energy". Mainstream physicists are scrambling to explain this mysterious acceleration in the expansion of the universe. Some physicists even believe that the expansion will lead to "The Big Rip" when all of the matter in the universe is torn asunder - from clusters of galaxies in deep space down to the tiniest atomic particles. The universe now appears to be made of two unknowns - roughly 23% is "dark matter", an invisible source of gravity, and roughly 73% is "dark energy", an invisible anti-gravity force. Ordinary matter constitutes perhaps 4 percent of the universe. Recently the British science news journal "New Scientist" revealed that the American military is pursuing new types of exotic bombs - including a new class of isomeric gamma ray weapons. Unlike conventional atomic and hydrogen bombs, the new weapons would trigger the release of energy by absorbing radiation, and respond by re-emitting a far more powerful radiation. In this new category of gamma-ray weapons, a nuclear isomer absorbs x-rays and re-emits higher frequency gamma rays. The emitted gamma radiation has been reported to release 60 times the energy of the x-rays that trigger the effect. 
The discovery of this isomer triggering is fairly recent, and was first reported in a 1999 paper by an international group of scientists. Although this controversial development has remained fairly obscure, it has not been hidden from the public. Beyond the visible part of defense research is an immense underground of secret projects considered so sensitive that their very existence is denied. These so-called "black budget programs" are deliberately kept from the public eye and from most political leaders. CNN recently reported that in the United States the black budget projects for 2004 are being funded at a level of more than 20 billion dollars per year. In the summer of 2000 I contacted Nick Cook, the former aviation editor and aerospace consultant to Jane's Defence Weekly, the international military affairs journal. Cook had been investigating black budget super-secret research into exotic physics for advanced propulsion technologies. I had been monitoring electronic discussions between various American and Russian scientists theorizing about rectifying the quantum vacuum for advanced space drive. Several groups of scientists, partitioned into various research organizations, were exploring what NASA calls "Breakthrough Propulsion Physics" - exotic technologies for advanced space travel to traverse the vast distances between stars. Partly inspired by the pulp science fiction stories of their youth, and partly by recent reports of multiple radar tracking tapes of unidentified objects performing impossible maneuvers in the sky, these scientists were on a quest to uncover the most likely new physics for star travel. The NASA program was run by Marc Millis, financed under the Advanced Space Transportation Program Office (ASTP). Joe Firmage, then the 28-year-old Silicon Valley CEO of the three billion dollar Internet firm US Web, began to fund research in parallel with NASA. 
Firmage hired a NASA Ames nano-technology scientist, Creon Levit, to run the "International Space Sciences Organization", a move which apparently alarmed the management at NASA. The San Francisco-based Hearst Examiner reported that NASA's Office of Inspector General assigned Special Agent Keith Tate to investigate whether any proprietary NASA technology might have been leaking into the private sector. Cook was intrigued when I pointed out the apparent connections between various private investors, defense contractors, NASA, INSCOM (American military intelligence), and the CIA. While researching exotic propulsion technologies Cook had heard rumors of a new kind of weapon, a "sub-quantum atomic bomb", being whispered about in what he called "the dark halls of defense research." Sub-quantum physics is a controversial re-interpretation of quantum theory, based on so-called pilot wave theories, where an information field controls quantum particles. The late Professor David Bohm showed that the predictions of ordinary quantum mechanics could be recast into a pilot wave information theory. Recently Anthony Valentini of the Perimeter Institute has suggested that ordinary quantum theory may be a special case of pilot wave theories, leaving open the possibility of new and exotic non-quantum technologies. Some French, Serbian and Ukrainian physicists have been working on new theories of extended electrons and solitons, so perhaps a sub-quantum bomb is not entirely out of the question. Even if the rumors of a sub-quantum bomb are pure fantasy, there is no question that mainstream physicists seriously contemplate a phase transition in the quantum vacuum as a real possibility. The quantum vacuum defies common sense, because empty space in quantum field theory is actually filled with virtual particles.
These virtual particles appear and disappear far too quickly to be detected directly, but their existence has been confirmed by experiments that demonstrate their influence on ordinary matter. "Such research should be forbidden!" In the early 1970s, Soviet physicists were concerned that the vacuum of our universe was only one possible state of empty space. The fundamental state of empty space is called the "true vacuum". Our universe was thought to reside in a "false vacuum", protected from the true vacuum by "the wall of our world". A change from one vacuum state to another is known as a phase transition. This is analogous to the transition between frozen and liquid water. Lev Okun, a Russian physicist and historian, recalls Andrei Sakharov, the father of the Soviet hydrogen bomb, expressing his concern about research into the phase transitions of the vacuum. If the wall between vacuum states was to be breached, calculations showed that an unstoppable expanding bubble would continue to grow until it destroyed our entire universe! Sakharov declared that "Such research should be forbidden!" According to Okun, Sakharov feared that an experiment might accidentally trigger a vacuum phase transition. Nanotech is quickly coming online---it’ll become self-replicating and destroy the Universe Hu 18 – Jiaqi Hu, Humanities Scholar and President and Chief Scientist of the Beijing Jianlei International Decoration Engineering Company and 16Lao Group, Graduate of Dongbei University, Elected as the Chinese People’s Consultative Conference Member for Beijing Mentougou District, Saving Humanity: Truly Understanding and Ranking Our World's Greatest Threats, pp. 208-210 As a unit of measurement, a nanometer is 10^-9 meters (or one billionth of a meter); it is roughly one 50,000th of the width of a strand of hair and is commonly used in the measuring of atoms and molecules.
In 1959, Nobel Prize winner and famous physicist Richard Feynman first proposed in a lecture entitled "There's Plenty of Room at the Bottom" that humans might be able to create molecule-sized micro-machines in the future and that it would be another technological revolution. At the time, Feynman's ideas were ridiculed, but subsequent developments in science soon proved him to be a true visionary. In 1981, scientists developed the scanning tunneling microscope and finally reached nano-level observation. In 1990, IBM scientists wrote the three letters "IBM" on a nickel substrate by moving thirty-five xenon atoms one by one, demonstrating that nanotechnology had become capable of transporting single atoms. Most of the matter around us exists in molecular form, and molecules are composed of atoms. The ability to move atoms signaled an ability to perform marvelous feats. For example, we could move carbon atoms to form diamonds, or pick out all the gold atoms in low-grade gold mines. However, nanotechnology would not achieve any goals of real significance if solely reliant on manpower. There are hundreds of millions of atoms in a needle-tip-sized area; even if a person committed their life to moving these atoms, no real value could be achieved. Real breakthroughs in nanotechnology could only be produced by nanobots. Scientists imagined building molecule-sized robots to move atoms and achieve goals; these were nanobots. On the basis of this hypothesis, scientists further postulated the future of nanotechnology; for example, nanobots might be able to enter the bloodstream and dispose of cholesterol deposited in the veins; nanobots could track cancer cells in the body and kill them at their weakest moment; nanobots could instantly turn newly-cut grass into bread; nanobots could transform recycled steel into a brand-new car in seconds. In short, the future of nanotechnology seemed incredibly bright. This was not the extent of nanotechnology's power.
Scientists also discovered that nanotechnology could change the properties of materials. In 1991, when studying C60, scientists discovered carbon nanotubes (CNTs) that were only a few nanometers in diameter. The carbon nanotube became known as the king of nano materials due to its superb properties; scientists believed that it would produce great results when applied to nanobots. Later, scientists also developed a type of synthetic molecular motor that derived energy from the high-energy adenosine triphosphate (ATP) that powered intracellular chemical reactions. The success of molecular motor research solved the core component problem of nano machines; any molecular motor grafted with other components could turn into a nano machine, and nanobots could use them for motive power. In May 2004, American chemists developed the world’s first nanobot: a bipedal molecular robot that looked like a compass with ten-nanometer-long legs. This nanobot was composed of DNA fragments, including thirty-six base pairs, and it could "stroll" on plates in the laboratory. In April 2005, Chinese scientists developed nano-scale robotic prototypes as well. In June of 2013, researchers at Tohoku University used peptide protein micro-tablets to successfully create nanobots that could enter cells and move on the cell membrane. In July 2017, researchers at the University of Rome and the Roman Institute of Nanotechnology announced the development of a new synthetic molecular motor that was bacteria-driven and light-controlled. The next step would be to get nanobots to move atoms or molecules. Nanobots are extremely expensive to create compared with the value each one produces. The small size of nanobots means that although they can accomplish meaningful tasks, they are often very inefficient. Even if a nanobot toiled day and night, its achievements would only be calculated in terms of atoms, making its total practical output relatively small. Scientists came up with a solution for this problem.
They decided to prepare two sets of instructions when programming nanobots. The first set of instructions would set out tasks for the nanobot, while the second set would order the nanobot to self-replicate. Since nanobots are capable of moving atoms and are themselves composed of atoms, self-replication would be fairly easy. One nanobot could replicate into ten, then a hundred, and then a thousand . . . billions could be replicated in a short period of time. This army of nanobots would greatly increase their efficiency. One troublesome question that arises from this scenario is: how would nanobots know when to stop self-replicating? Human bodies and all of Earth are composed of atoms; the unceasing replication of nanobots could easily swallow humanity and the entire planet. If these nanobots were accidentally transported to other planets by cosmic dust, the same fate would befall those planets. This is a truly terrifying prospect. Some scientists are confident that they can control the situation. They believe that it is possible to design nanobots that are programmed to self-destruct after several generations of replication, or even nanobots that only self-replicate in specific conditions. For example, a nanobot that dealt with garbage refurbishing could be programmed to only self-replicate around trash using trash. Although these ideas are worthy, they are too idealistic. Some more rational scientists have posed these questions: What would happen if nanobots malfunctioned and did not terminate their self-replication? What would happen if scientists accidentally forgot to add self-replication controls during programming? What if immoral scientists purposefully designed nanobots that would not stop self-replicating? Any one of the above scenarios would be enough to destroy both humanity and Earth. Chief scientist of Sun Microsystems, Bill Joy, is a leading, world-renowned scientist in the computer technology field. 
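The card's replication arithmetic ("one nanobot could replicate into ten, then a hundred, and then a thousand") can be checked with a minimal sketch. It assumes an idealized model in which the population is multiplied by a fixed factor each generation with no failures or losses; the function `generations_to_reach` is a hypothetical helper for illustration, not anything described in the source.

```python
def generations_to_reach(target: int, growth_factor: int = 10, start: int = 1) -> int:
    """Count replication generations until the population reaches `target`.

    Idealized model (an assumption, not from the source): every generation
    multiplies the population by `growth_factor`, with no failures or losses.
    """
    if growth_factor < 2:
        raise ValueError("growth_factor must be at least 2 for the population to grow")
    population = start
    generations = 0
    while population < target:
        population *= growth_factor  # each nanobot's copies join the population
        generations += 1
    return generations


if __name__ == "__main__":
    # Tenfold growth per generation: 1 -> 10 -> 100 -> ... -> 10**9 ("billions")
    print(generations_to_reach(10**9))  # 9
    # Plain doubling passes a billion after 30 generations (2**30 > 10**9 > 2**29)
    print(generations_to_reach(10**9, growth_factor=2))  # 30
```

Under these assumptions, tenfold growth passes a billion in nine generations and even simple doubling needs only thirty, which is the intuition behind the card's "short period of time". How short in wall-clock terms depends on the time per generation, which the card does not specify.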
In April of 1999, Joy pointed out that if misused, nanotechnology could be more devastating than nuclear weapons. If nanobots self-replicated uncontrollably, they could become the cancer that engulfs the universe. If we are not careful, nanotechnology might become the Pandora's box that destroys the entire universe and all of humanity with it. We all understand that one locust is insignificant, but hundreds of millions of locusts can destroy all in their path. If self-replicating nanobots are really achieved in the future, it might signify the end of humanity. If that day came, nothing could stop unethical scientists from designing nanobots that suited their immoral purposes. Humans are not far from mastering nanotechnology. The extremely tempting prospects of nanotechnology have propelled research into nanobots and nanotechnology. The major science and technology nations have devoted particular efforts to this field. Tech advancements make future time travel certain Awes Faghi Elmi 18, Contributing Writer at n’world Publications, BS in Forensic Science from London South Bank University, Extended Diploma in Physics with Distinction from Leyton Sixth Form College, Futurist, “Technological Progress Might Make Possible Time Travel And Teleportation”, Medium, 8-13, https://medium.com/nworld-publications/technological-progress-might-make-possible-time-travel-andteleportation-45176c3c89bc [typo edited] This is a question that many people ask themselves. This question has come up many times. It is said that time travel is possible and in fact it is. The key things needed to travel through time are speed and kinetic energy. Einstein’s theory, also known as the theory of relativity, can be used to understand how to deal with travelling to the future. Einstein showed that travelling forward in time is easy.
According to Einstein’s theory of relativity, time passes at different rates for people who are moving relative to one another, although the effect only becomes large when you get close to the speed of light. Time travel can sometimes cause side effects called paradoxes. These paradoxes can occur especially when going back in time: if even the smallest detail is changed, something big may happen in the future. Another scientist who, like Einstein, believes that time travel is possible is Brian Cox, who also believes that we are only going to be able to travel into the future. This would require a super-fast machine that allows you to go into the future. Cox also agrees with Einstein’s theory of relativity, which states that to travel forward in time, something needs to reach speeds close to the speed of light. As it approaches these speeds, time slows down, but only for that specific object. As noted, they both think that time travel to the future is possible but that travelling back in time is impossible, as something would have to travel as fast as the speed of light. Some scientists, however, think this may be wrong. They state that with the technology that we have now it could be possible to build some sort of machine that will actually be able to travel into both the future and the past. A wormhole, as shown in the image, is a theoretical passage through space-time that could create shortcuts for long journeys across the universe. Wormholes are predicted by the theory of general relativity. However, wormholes bring with them the dangers of sudden collapse, high radiation and dangerous contact with exotic matter. The public knows that time travel is possible, but at the moment humans are not able to do it. However, sources beyond the theories of the past are currently trying to develop a way to time travel. Audiences cannot wait for this to happen, as many media outlets, such as the BBC, report.
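The relativistic effect described above is conventionally quantified with the Lorentz factor. The following is standard special relativity rather than anything stated in the card; the symbols are labels chosen for this illustration: Δτ is the time elapsed on the moving traveller's clock, Δt the time elapsed for a stationary observer, v the traveller's speed, and c the speed of light.

$$
\Delta t = \gamma\,\Delta\tau, \qquad \gamma = \frac{1}{\sqrt{1 - v^{2}/c^{2}}}
$$

For example, at v = 0.99c the factor is γ = 1/√(1 − 0.9801) ≈ 7.09, so a traveller who experiences one year at that speed would find roughly seven years had passed for the observer (ignoring the acceleration and deceleration phases). At v = 0.1c, γ ≈ 1.005, which is why the effect is negligible at everyday speeds.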
Many TV programmes talk about both time travel and teleportation. That collapses the Universe Steve Bowers 16, Control Officer in the United Kingdom, Executive Editor and Moderator of the Orion’s Arm Universe Project, Contributing Author for the Orion’s Arm Novella Collection, “WHY NO TIME TRAVEL IN OA”, 1-1, https://orionsarm.com/page/77 If the universe does allow reverse time travel, usable by sentient/sophont entities, it won't stop at one or two little historical research trips . . . If there is no effective chronological protection mechanism, the universe of today will be overrun with travellers from the future. Even if there is no 'Big Rip' where the Universe tears itself apart through accelerating expansion, hundreds of trillions of years from now the cosmos will be a slowly dying place. Even red dwarf stars will eventually burn out, leaving the inhabitants of the far future only their dying embers to gather energy from, although the creation and merger of black holes could perhaps keep civilisation going for an (admittedly very long) while. Eventually the entities of the far future will be limited to reversible computation to save energy. This means confining themselves to a very limited set of mental processes. This prospect would surely not appeal to the heirs of once-mighty advanced civilisations. If time travel were possible then refugees from the far future would flood back, sometimes in multiple instances. The future sophonts would come back in an exponentiating wave to constantly change the present and the past, and whole galaxies of material particles will begin to exist in space time reference that did not have them before - some? many? most? 
matter and events may turn out to be acausal, going round and round in closed timelike loops and increasing the total mass of the universe, which may begin to collapse in the distant future, sending chronistic refugees in massive tardises back to our time, thus accelerating the collapse; increasing the mass of the present-day universe until it collapses. The collapse will get closer to the present day, until it eventually happened yesterday and we will cease to exist . . . believe me, you don't want to go there. For an explanation of how under certain circumstances a wormhole can connect different parts of the universe without causing temporal paradoxes, see this page. Dark Matter---1NC Dark matter research is massively increasing Bertone 18 [Dr. Gianfranco Bertone, Professor in the GRAPPA Institute and Institute of Physics at the University of Amsterdam, PhD in Astrophysics from the University of Oxford, and Dr. Tim M.P. Tait, Professor in the Department of Physics and Astronomy at the University of California, Irvine, PhD in Physics from Michigan State University, BSc in Physics from UC San Diego, Former Research Associate at the Fermi National Accelerator Laboratory and Argonne National Laboratory, "A New Era in the Quest for Dark Matter", Nature, 10/4/18, https://arxiv.org/pdf/1810.01668.pdf ]////LRCH Jrhee recut In the quest for dark matter, naturalness has been the guiding principle since the dark matter problem was established in the early 1980s. Although the absence of evidence for new physics at the LHC does not rule out completely natural theories, we have argued that a new era in the search for dark matter has begun, the new guiding principle being “no stone left unturned”: from fuzzy dark matter (10^−22 eV) to primordial black holes (10 M☉), we should look for dark matter wherever we can. It is important to exploit to their fullest extent existing experimental facilities, most notably the LHC, whose data might still contain some surprises.
And it is important to complete the search for WIMPs with direct detection experiments, until their sensitivity reaches the so-called neutrino floor [94]. At the same time we believe it is essential to diversify the experimental effort, and to test the properties of dark matter with gravitational-wave interferometers and upcoming astronomical surveys, as they can provide complementary information about the nature of dark matter. New opportunities in extracting such information from data arise from the booming field of machine learning, which is currently transforming many aspects of science and society. Machine learning methods have already been applied to a variety of dark matter-related problems, ranging from the identification of WIMPs from particle and astroparticle data [95, 96] to the detection of gravitational lenses [97], and from radiation patterns inside jets of quarks and gluons at the LHC [98] to real-time gravitational-wave detection [99]. In view of this shift of the field of dark matter searches towards a more data-driven approach, we believe it is urgent to fully embrace, and whenever possible to further develop, big data tools that allow us to organize in a coherent and systematic way the avalanche of data that will become available in particle physics and astronomy in the next decade. Causes quantum effects that collapse the vacuum---destroys the universe Arkell 14 [Esther Inglis-Arkell, Contributor to the Genetic Literacy Project, Contributing Editor and Senior Reporter at io9, Freelance Writer for Ars Technica, BS in Physics from Dartmouth College, "We Might Be Destroying The Universe Just By Looking At It", io9 – Gizmodo, 2/3/14, https://io9.gizmodo.com/wemight-be-destroying-the-universe-just-by-looking-at-1514652112 ]////LRCH Jrhee It's not often that astronomy goes well with the book of Genesis. But this is a theory that evokes the line, "But of the tree of the knowledge, thou shalt not eat of it: for in the day that thou eatest thereof thou shalt surely die."
In this theory, knowledge doesn't just kill you — it kills the entire universe. Indeed, one physicist speculates that continuous observation of the universe might put it into a state that will destroy us all. Our universe's eventual demise, in this case, springs from the fact that it wasn't properly created. The big question has always been, how does something come from nothing? If, in the beginning, there was nothing but a vacuum, devoid of energy or matter, where did the universe come from? As it turns out, not all vacuums are alike - some of them are what's called "false vacuums." They are "bubbles" of space that look like vacuums, but aren't actually at their bottom energy state. They can collapse at nearly any time, and go into their ground energy state. The collapse of such a false vacuum releases energy. At first, many physicists thought this is how our universe began. A false vacuum collapsed down to a true one, and the matter and energy of our universe was the result of its collapse. It's also possible that the collapsing false vacuum didn't create a true vacuum. It simply created, along with all that matter and energy, another false vacuum. The universe we live in now might simply be a long-lived bubble of false vacuum that's not really at its lowest energy state. If you have trouble believing that the vacuum of space that astronomers observe isn't at its lowest energy state - ask yourself what dark energy is if not a higher-than-expected energy state for the universe. We might be in a fragile, and unstable, bubble of universe that could collapse at any time. It's unpleasant to think the universe might collapse out of existence at any moment. Especially since, as the collapse won't exceed the speed of light, we'll probably see it coming for us, knowing we're unable to escape it. Fortunately, we have (theoretical) options. Dark energy drives the expansion of the universe. 
Although bubbles decay, they decay along different lines according to the energy state they're in when they start collapsing. If they're in a high energy state, the rate of decay is also high. If they're in a low energy state, the rate of decay is slow. Put the fast rate of decay in a race against the expansion of the universe, and we are all winked out of existence. Put the slow rate of decay in that same race, and we all have the chance to live productive lives. The problem is, when we observe a system, we can keep it in a certain state. Studies have shown that repeatedly observing the state of an atom set to decay can keep that atom in its higher-energy state. When we observe the universe, especially the "dark" side of the universe, we might be keeping it in its higher-energy state. If the process of collapse happens when it is in that state, the universe will cease to exist. If we stop looking, and the universe quietly shifts to a state at which its decay is slower, then we're all saved. The more we look at the universe, the more likely it is to end.
Grey Goo---1NC
Grey goo will be rapidly developed and weaponized now
(Duncan H. Forgan 19. Associate Lecturer at the Centre for Exoplanet Science at the University of St Andrews, Scotland, founding member of the UK Search for Extra-terrestrial Intelligence (SETI) research network and leads UK research efforts into the search. 04/30/2019. “14.2 Nanotechnology, Nanomachines, and ‘Grey Goo’.” Solving Fermi’s Paradox, Cambridge University Press.)/LRCH Jrhee
In the now classic Engines of Creation, Eric Drexler imagined the apex of nanotechnology – a molecular assembler that could rearrange atoms individually to produce nanomachines. Such a molecular assembler can in principle reproduce itself. If this reproduction cycle was in some way corrupted, the assembler could begin producing indefinite copies.
Exponential growth of assemblers would rapidly result in the conversion of large fractions of a planet’s mass into assemblers. This is referred to as the ‘grey goo’ scenario. Freitas (2000) further subcategorises this into grey goo (land based), grey lichen (chemolithotrophs), grey plankton (ocean based) and grey dust (airborne). Assuming that molecular assemblers are possible, what can be done to mitigate against this risk? Careful engineering can ensure that there is a rate-limiting resource in replication. If the assembler can use commonly found materials to replicate, then its growth is unlimited. If the assembler requires some rare element or compound, then replication will cease when that element is exhausted. Like biological replicators, one might imagine that mutation or copying errors may find templates that bypass this element, and exponential growth recommences unabated. Freitas (2000) imagined a range of defence mechanisms specifically designed to dismantle assemblers, being triggered by the byproducts of assembly (e.g., waste heat). Producing a fleet of ‘goodbots’ to dismantle or remove corrupted assemblers is one such strategy. Is a grey goo scenario likely on Earth? In 2004, the Royal Society published a report on the then consensus – that molecular assembly itself is unlikely to be produceable technology in the foreseeable future, due to fundamental physical constraints on locating individual atoms. On the other hand, molecular assemblers exist in nature. Organisms depend on molecular assembly for a range of fundamental processes. Molecular machines exploiting these biological processes are very possible, and currently being produced in research labs. Constructing a molecular assembler from these machine components remains challenging. Perhaps the best way to mitigate this risk is to simply not build autonomous assemblers. 
Phoenix and Drexler (2004) suggest that centrally controlled assemblers are more efficient at manufacturing, and hence the desire for assemblers to be able to replicate themselves is at odds with industrial goals. Of course, a molecular assembler could be weaponised. We may need to consider grey goo as an associated risk with future conflicts.
Devours all carbon---extinction---A2: benevolent nanotech
(Jayar Lafontaine 15. MA in Philosophy from McMaster University and an MDes in Strategic Foresight and Innovation from OCAD University. Foresight strategist with a leading North American innovation consultancy, where he helps organizations to develop their capacity for future thinking so that they can respond more flexibly to change and disruption. 8-17-2015, Misc Magazine "Gray Goo Scenarios and How to Avoid Them," io9, https://io9.gizmodo.com/gray-goo-scenarios-and-how-to-avoid-them1724622907 )/LRCH Jrhee
In a lecture delivered in 1948, mathematician John Von Neumann first described the potential for machines to build perfect copies of themselves using material sourced from the world around them. Almost immediately, people began to worry about what might happen if they never stopped. Writing decades later about the science of molecular nanotechnology – in other words, microscopically small man-made machines – Eric Drexler gave this worry a name: Gray Goo. A Gray Goo scenario works something like this: Imagine a piece of self-replicating nanotechnology manufactured for a purely benevolent reason. Say, a micro-organism designed to clean up oil slicks by consuming them and secreting some benign by-product. So far, so good. Except the organism can’t seem to distinguish between the carbon atoms in the oil slick and the carbon atoms in the sea vegetation, ocean fauna, and human beings around it all that well.
Flash forward a few thousand generations – perhaps not a very long time in our imagined micro-organism’s life cycle – and everything on Earth containing even a speck of carbon has been turned into a benign, gray, and gooey byproduct of its digestive process.
Absolute Zero---1NC
Super-cooling experiments will break absolute zero
Siegfried 13 – Tom Siegfried, Former Editor in Chief of Science News, Former Science Editor of The Dallas Morning News, Writer for Science, Nature, Astronomy, New Scientist and Smithsonian, Master of Arts with a Major in Journalism and a Minor in Physics from the University of Texas at Austin, Undergraduate Degree from Texas Christian University with Majors in Journalism, Chemistry and History, “Scientists Are Trying to Create a Temperature Below Absolute Zero”, Smithsonian Magazine, April, https://www.smithsonianmag.com/science-nature/scientists-are-trying-to-create-a-temperaturebelow-absolute-zero-4837559/
When a cold snap hits and the temperature drops, there’s nothing to stop it from falling below zero, whether Celsius or Fahrenheit. Either zero is just a mark on a thermometer. But drive a temperature lower and lower, beyond the coldest realms in the Arctic and past those in the most distant reaches of outer space, and eventually you hit an ultimate limit: absolute zero. It’s a barrier enforced by the laws of physics below which temperatures supposedly cannot possibly go. At minus 459.67 degrees Fahrenheit (or minus 273.15 Celsius), all the heat is gone. Atomic and molecular motion ceases. Trying to create a temperature below absolute zero would be like looking for a location south of the South Pole. Of course, scientists perceive such barriers as challenges. And now some lab trickery has enabled researchers to manipulate atoms into an arrangement that appears to cross the forbidden border.
With magnets and lasers, a team at Ludwig-Maximilians University Munich in Germany has coaxed a cloud of 100,000 potassium atoms into a state with a negative temperature on the absolute scale. “It forces us to reconsider what we believe to know about temperature,” says Ulrich Schneider, one of the leaders of the research team. As a bonus, the weird configuration of matter might provide clues to some deep mysteries about the universe. Schneider and his colleagues relied on laser beams to trap the atoms in a grid, kind of like the dimples in an egg carton. By tuning the lasers and applying magnetic fields, the team could control the energy of the atoms, key to manipulating temperature. Ordinarily, not all the atoms in a sample possess the same amount of energy; some are slow-moving, low-energy sluggards, while others zip about like speed demons. A higher proportion of zippy atoms corresponds to a higher temperature. But most of the atoms are always slower than the very fastest—when the temperature is positive. With their magnet-and-laser legerdemain, the German scientists pushed the majority of the potassium atoms to higher energies, the opposite of the usual situation. Though that may not seem like a big deal, the switch messed with the mathematics that determines the gas’s temperature, leading to a negative value. Technically, physicists define temperature as a relationship between changes in entropy (a measure of disorder) and energy. Usually more energy increases a system’s entropy. But in the inverted case, entropy decreases as energy increases, flipping the sign of the relationship from positive to negative. The atoms had a temperature of minus a few billionths of a kelvin, the standard unit on the absolute scale. 
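The sign flip described in the Siegfried card can be illustrated with the simplest textbook system that admits a negative temperature: N independent two-level particles, each either in a ground state or in an excited state of energy ε. This is a minimal sketch under stated assumptions, not the Munich team's optical-lattice setup; the two-level model, the hypothetical helper `two_level_temperature`, and the units (k_B = ε = 1) are all illustrative choices. With S = k_B ln C(N, n) and Stirling's approximation, 1/T = dS/dE = (k_B/ε) ln((N − n)/n), which becomes negative once more than half of the particles are excited, i.e. once adding energy lowers the entropy.

```python
import math


def two_level_temperature(n_excited: int, n_total: int,
                          epsilon: float = 1.0, k_b: float = 1.0) -> float:
    """Temperature of n_total two-level particles with n_excited of them excited.

    Hypothetical helper for illustration. Entropy S = k_b * ln C(N, n); with
    Stirling's approximation, 1/T = dS/dE = (k_b / epsilon) * ln((N - n) / n),
    where E = n * epsilon is the total energy.
    """
    if not 0 < n_excited < n_total:
        raise ValueError("need 0 < n_excited < n_total")
    inverse_t = (k_b / epsilon) * math.log((n_total - n_excited) / n_excited)
    if inverse_t == 0.0:
        # Exactly half filled: dS/dE = 0, so |T| diverges.
        return math.inf
    return 1.0 / inverse_t


if __name__ == "__main__":
    N = 100_000  # same order as the ~100,000-atom cloud in the card; illustrative only
    for n in (10_000, 40_000, 60_000, 90_000):
        # Below half filling T > 0; above it (population inversion) T < 0.
        t = two_level_temperature(n, N)
        print(f"{n:>6} excited -> T = {t:+.3f} (units of epsilon/k_B)")
```

In this toy model the negative values come purely from entropy falling as energy rises, the same mechanism the card describes for the inverted potassium gas.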
Destroys the universe
Hohmann 16 – Creator and Editor of Moonwards, “Tempertures Below Zero Degrees Kelvin”, World Building, 5-29, https://worldbuilding.stackexchange.com/questions/42510/tempertures-below-zerodegrees-kelvin
Temperature is a measure of how much the atoms vibrate and move (e.g. kinetic energy). 0 Kelvin is defined as the point where the atoms do not vibrate any more at all, so you can not really have subkelvin temperatures, as it is not possible to move less than standing totally still. If you somehow discover that atoms at what we now believe is absolute zero actually move a little, your new temperature is just defined as the new 0 Kelvin. A second consideration is that negative degrees Kelvin allows for heat flow that reduces entropy, a pretty important violation of physics, as that allows for infinite energy, perpetual motion machines, etc. In short, negative absolute temperatures can not exist in reality, and in a sci-fi setting, it is likely to have a lot of unexpected consequences, having a good chance to somehow destroy the universe.
Isomer Bombs---Feasible---2NC
Isomer bombs are feasible---the science is proven by lab experiments, it’ll be cheap, and they can be developed in the short-term
David Hambling 3, Freelance Technology Journalist, Writes for New Scientist magazine, Aviation Week, Popular Mechanics, WIRED, The Economist, and The Guardian, “Gamma-Ray Weapons Could Trigger Next Arms Race”, The New Scientist, 8-13, https://www.newscientist.com/article/dn4049gamma-ray-weapons-could-trigger-next-arms-race/
An exotic kind of nuclear explosive being developed by the US Department of Defense could blur the critical distinction between conventional and nuclear weapons. The work has also raised fears that weapons based on this technology could trigger the next arms race. The explosive works by stimulating the release of energy from the nuclei of certain elements but does not involve nuclear fission or fusion.
The energy, emitted as gamma radiation, is thousands of times greater than that from conventional chemical explosives. The technology has already been included in the Department of Defense’s Militarily Critical Technologies List, which says: “Such extraordinary energy density has the potential to revolutionise all aspects of warfare.” Scientists have known for many years that the nuclei of some elements, such as hafnium, can exist in a high-energy state, or nuclear isomer, that slowly decays to a low-energy state by emitting gamma rays. For example, hafnium-178m2, the excited, isomeric form of hafnium-178, has a half-life of 31 years. The possibility that this process could be explosive was discovered when Carl Collins and colleagues at the University of Texas at Dallas demonstrated that they could artificially trigger the decay of the hafnium isomer by bombarding it with low-energy X-rays (New Scientist print edition, 3 July 1999). The experiment released 60 times as much energy as was put in, and in theory a much greater energy release could be achieved.
Energy pump
Before hafnium can be used as an explosive, energy has to be “pumped” into its nuclei. Just as the electrons in atoms can be excited when the atom absorbs a photon, hafnium nuclei can become excited by absorbing high-energy photons. The nuclei later return to their lowest energy states by emitting a gamma-ray photon. Nuclear isomers were originally seen as a means of storing energy, but the possibility that the decay could be accelerated fired the interest of the Department of Defense, which is also investigating several other candidate materials such as thorium and niobium. For the moment, the production method involves bombarding tantalum with protons, causing it to decay into hafnium-178m2. This requires a nuclear reactor or a particle accelerator, and only tiny amounts can be made.
Currently, the Air Force Research Laboratory at Kirtland, New Mexico, which is studying the phenomenon, gets its hafnium-178m2 from SRS Technologies, a research and development company in Huntsville, Alabama, which refines the hafnium from nuclear material left over from other experiments. The company is under contract to produce experimental sources of hafnium-178m2, but only in amounts less than one ten-thousandth of a gram.
Extremely powerful
But in future there may be cheaper ways to create the hafnium isomer – by bombarding ordinary hafnium with high-energy photons, for example. Hill Roberts, chief scientist at SRS, believes that technology to produce gram quantities will exist within five years. The price is likely to be high – similar to enriched uranium, which costs thousands of dollars per kilogram – but unlike uranium it can be used in any quantity, as it does not require a critical mass to maintain the nuclear reaction. The hafnium explosive could be extremely powerful. One gram of fully charged hafnium isomer could store more energy than 50 kilograms of TNT. Miniature missiles could be made with warheads that are far more powerful than existing conventional weapons, giving massively enhanced firepower to the armed forces using them. The effect of a nuclear-isomer explosion would be to release high-energy gamma rays capable of killing any living thing in the immediate area. It would cause little fallout compared to a fission explosion, but any undetonated isomer would be dispersed as small radioactive particles, making it a somewhat “dirty” bomb. This material could cause long-term health problems for anybody who breathed it in.
It’s experimentally proven, theoretically possible, cheap, and U.S. development will spur a global isomer arms race
Gabriel Gache 8, Science News Editor, “Isomer Explosives, Not Different from Nuclear Ones”, Softpedia, 4-14, http://news.softpedia.com/news/Isomer-Explosives-no-Different-than-Nuclear-Ones83289.shtml
Isomer decay
Just as the energy of the electrons inside an atom can be increased by simply forcing photon absorption, hafnium-178m2 can be brought into an explosive state by pumping energy into its nucleus with the help of high-energy photons. After the atom experiences a high-energy state, it will start to decay to a low-energy one, by emitting gamma-ray photons. Originally, isomers were thought of as a means to store energy for long periods, however the Department of Defense saw an opportunity into studying an accelerated decay, and started experimenting with thorium and niobium. By bombarding tantalum with protons, DoD researchers succeeded in producing hafnium-178m2, even though only a small amount of isomer could be created at a time. For the time being, hafnium-178m2 is obtained by refining it from nuclear material waste, in amounts less than one ten-thousandth of a gram.
Extreme power
Anyway, in the near future larger amounts of hafnium isomer may be created in cheaper ways, such as bombarding ordinary hafnium with high-energy photons. According to SRS Technologies, gram quantities of hafnium-178m2 could be obtained through this process in less than five years. Production cost will most likely reach that of enriched uranium, however hafnium-178m2 explosives could be used in just about any quantity, since it does not require a critical mass to maintain the chain nuclear reaction, as uranium does. DoD researchers reckon that about one gram of hafnium isomer may be able to produce an energy equivalent to that released by 50 kilograms of TNT.
Not only could small missiles produce much more damage than conventional weapons, but the gamma-ray emissions caused by the explosion would also kill any living being in the surrounding area. Unlike nuclear weapons, isomer explosives would produce very little fallout, but they would disperse radioactive particles, which could act very similarly to a 'dirty' bomb.
Already thin line starts to blur
In the aftermath of World War II, the US realized the potential danger posed by small nuclear weapons, such as the 'Davy Crockett' nuclear bazooka capable of delivering 18 tons of TNT explosive power, and stopped manufacturing them. These weapons would basically be on the line between nuclear and conventional weapons. They feared that in a potential war, military commanders equipped with such explosive devices would most likely use a nuclear weapon as opposed to a conventional one, although the obtained effect would be roughly identical. Additionally, in 1994, the US took another step towards increasing the gap between nuclear weapons and conventional weapons, by passing the Spratt-Furse law to prevent the US Army from creating nuclear weapons smaller than five kilotons. The development of the isomer weapon is now endangering all these preventive steps taken by the US, and if not stopped now, it may eventually lead to a new arms race.
Isomer Bombs---Impact---2NC
High energy impacts cause a vacuum phase transition---it’s the fastest route to total destruction of the Universe
Dr. Katie Mack 15, Assistant Professor of Physics at North Carolina State University, PhD in Astrophysics from Princeton University, Former Discovery Early Career Researcher Award (DECRA) Fellow at the University of Melbourne, “Vacuum Decay: The Ultimate Catastrophe”, Cosmos Magazine, August-September, https://cosmosmagazine.com/physics/vacuum-decay-ultimate-catastrophe
Every once in a while, physicists come up with a new way to destroy the Universe.
There’s the Big Rip (a rending of spacetime), the Heat Death (expansion to a cold and empty Universe), and the Big Crunch (the reversal of cosmic expansion). My favourite, though, has always been vacuum decay. It’s a quick, clean and efficient way of wiping out the Universe. To understand vacuum decay, you need to consider the Higgs field that permeates our Universe. Like an electric field, the Higgs field varies in strength, based on its potential. Think of the potential as a track on which a ball is rolling. The higher it is on the track, the more energy the ball has. The Higgs potential determines whether the Universe is in one of two states: a true vacuum, or a false vacuum. A true vacuum is the stable, lowest-energy state, like sitting still on a valley floor. A false vacuum is like being nestled in a divot in the valley wall – a little push could easily send you tumbling. A universe in a false vacuum state is called “metastable”, because it’s not actively decaying (rolling), but it’s not exactly stable either. There are two problems with living in a metastable universe. One is that if you create a high enough energy event, you can, in theory, push a tiny region of the universe from the false vacuum into the true vacuum, creating a bubble of true vacuum that will then expand in all directions at the speed of light. Such a bubble would be lethal. The other problem is that quantum mechanics says that a particle can ‘tunnel’ through a barrier between one region and another, and this also applies to the vacuum state. So a universe that is sitting quite happily in the false vacuum could, via random quantum fluctuations, suddenly find part of itself in the true vacuum, causing disaster. The possibility of vacuum decay has come up a lot lately because measurements of the mass of the Higgs boson seem to indicate the vacuum is metastable. But there are good reasons to think some new physics will intervene and save the day. 
One reason is that the hypothesised inflationary epoch in the early Universe, when the Universe expanded rapidly in the first tiny fraction of a second, probably produced energies high enough to push the vacuum over the edge into the true vacuum. The fact that we’re still here indicates one of three things. Inflation occurred at energies too low to tip us over the edge, inflation did not take place at all, or the Universe is more stable than the calculations suggest. If the Universe is indeed metastable, then, technically, the transition could occur through quantum processes at any time. But it probably won’t – the lifetime of a metastable universe is predicted to be much longer than the current age of the Universe. So we don’t need to worry. But what would happen if the vacuum did decay? The walls of the true vacuum bubble would expand in all directions at the speed of light. You wouldn’t see it coming. The walls can contain a huge amount of energy, so you might be incinerated as the bubble wall ploughed through you. Different vacuum states have different constants of nature, so the basic structure of matter might also be disastrously altered. But it could be even worse: in 1980, theoretical physicists Sidney Coleman and Frank De Luccia calculated for the first time that any bubble of true vacuum would immediately suffer total gravitational collapse. They say: “This is disheartening. The possibility that we are living in a false vacuum has never been a cheering one to contemplate. Vacuum decay is the ultimate ecological catastrophe; in a new vacuum there are new constants of nature; after vacuum decay, not only is life as we know it impossible, so is chemistry as we know it. “However, one could always draw stoic comfort from the possibility that perhaps in the course of time the new vacuum would sustain, if not life as we know it, at least some creatures capable of knowing joy. 
This possibility has now been eliminated.” To know for sure what would happen inside a bubble of true vacuum, we’d need a theory that describes our larger multiverse, and we don’t have that yet. But suffice it to say, it would not be good. Luckily, we’re probably reasonably safe. At least for now.
Nano---Feasible---2NC
Nano’s feasible and extremely likely
Dennis Pamlin 15, Executive Project Manager of the Global Risks Global Challenges Foundation, and Stuart Armstrong, James Martin Research Fellow at the Future of Humanity Institute of the Oxford Martin School at University of Oxford, Global Challenges Foundation, February, http://globalchallenges.org/wp-content/uploads/12-Risks-with-infinite-impact.pdf
Nanotechnology is best described as a general capacity, rather than a specific tool. In relation to infinite impacts this is a challenge, as there are many ways that nanotechnology can be used that could result in infinite impacts, but also many others where it can help reduce infinite impacts. Different possible sequences from today’s situation to precise atomic manufacturing are well documented and the probability that none of the possible paths would deliver results is very small. What specific sequence and with what results is however very uncertain. Compared with many other global challenges nanotechnology could result in many different risks - and opportunities, from an accelerated ability to manufacture (new) weapons [623] to the creation of new materials and substances. These are certainly orders of magnitude more likely, far likelier than any probability of the “grey goo” that has resulted in significant misunderstanding. The data availability is difficult to estimate as there are very different kinds of data, and also an obvious lack of data, as nanotechnology is in its very early days. There are some estimates from experts, but the uncertainty is significant.
A relative probability estimate is a possible first step, comparing nanotechnology solutions with existing systems where the probability is better known. Admiral David E. Jeremiah, for example, said at the 1995 Foresight Conference on Molecular Technology: “Military applications of molecular manufacturing have even greater potential than nuclear weapons to radically change the balance of power.” [624] A systems forecasting approach could probably provide better estimates and help develop complementary measures that would support the positive parts of nanotechnology while reducing the negative.
Nano---Outweighs---2NC
Nanoscale risks are unique and outweigh nukes---math supports probability
(Duncan H. Forgan 19. Associate Lecturer at the Centre for Exoplanet Science at the University of St Andrews, Scotland, founding member of the UK Search for Extra-terrestrial Intelligence (SETI) research network and leads UK research efforts into the search. 04/30/2019. “14.3 Estimates of Existential Risk from Nanoscale Manipulation.” Solving Fermi’s Paradox, Cambridge University Press.)/LRCH Jrhee *graph omitted bc it wouldn’t copy
The nature of the nanoscale risk is quite different from the risks posed by (say) nuclear weapons. The nuclear arsenals of the Earth remain under the control of a very small number of individuals. As genetic engineering and nanotechnology mature as fields of research, the ability to manipulate the nanoscale becomes available to an increasing number of individuals. Any one of these individuals could accidentally (or purposefully) trigger a catastrophic event that destroys a civilisation. If there are E individuals with access to potentially destructive technology, and the probability that an individual destroys civilisation in any given year is P, then we can define the probability that the civilisation exists after time t and can communicate, C, as a set of independent Bernoulli trials, i.e.,
$$P(C \mid t, E, P) = (1 - P)^{Et} \qquad (14.1)$$
Therefore, we can compute the number of civilisations existing between $t = 0$ and $t_0$ as
$$N(t_0) = B \int_0^{t_0} P(C \mid t', E, P)\, dt' \qquad (14.2)$$
where we have assumed a constant birth rate B. This can be easily integrated to show (Sotos, 2017):
$$N(t_0) = B\,\frac{S^{t_0} - 1}{\ln S}, \qquad S = (1 - P)^{E} \qquad (14.3)$$
At large t, this tends to the steady-state solution
$$N(t) \to \frac{B}{EP} \qquad (14.4)$$
i.e., there is an inverse relationship between the number of individuals in a given civilisation, and the number of civilisations remaining (Figure 14.1). The generality of this solution to Fermi’s Paradox – that the democracy of technology is the cause of its downfall – is particularly powerful. All we have assumed is that technology can be destructive, and that it eventually becomes accessible to a large enough number of individuals that the product EP becomes sufficiently large.
[Figure 14.1 (graph omitted): The number of communicating civilisations as a function of time, given E individuals causing self-destruction with a probability of P per individual per year. The civilisation birth rate B is fixed at one per year.]
This is a sufficiently general solution that we may consider it to be hard – our definition of intelligence hinges on technology, and almost all advanced technology has a destructive use. We can already use the Great Silence to rule out the upper curves in Figure 14.1, which would yield civilisation counts that would be visible on Earth (see also section 19.2). This suggests that if this is the solution to Fermi’s Paradox, then roughly $EP > 10^{-3}$.
Nano---A2: Defense---General
No checks or countermeasures
Dr. Olle Häggström 16 (Professor of Mathematical Statistics at Chalmers University of Technology and Associated Researcher at the Institute for Future Studies, Member of the Royal Swedish Academy of Sciences, PhD in Mathematical Statistics and MSc in Electrical Engineering, Here Be Dragons: Science, Technology and the Future of Humanity, p.
136-138, smarx, MLC)
The comparison to biology is apt, but it is not clear how convincing his idea is that it would take “some maniac” to initiate grey goo “for this very purpose.” [313] Can we state with certainty that it cannot happen by mistake? It is true that self-replication will require “clever design,” but we can expect non-maniac nanotechnology engineers to attempt that, as it seems to be the most promising way to produce nanobots in the huge quantities we will need for a variety of applications. Such construction will presumably be done with a degree of precaution so as to avoid the self-replication going overboard as in the grey goo scenario, but such precautions could fail. At this point, the grey goo skeptic could point again to biology, and the fact that life has existed on our planet for billions of years without turning into grey (or green) goo, as an argument for why self-replication is unlikely to spontaneously produce such a catastrophe. While this argument has some force, it is far from conclusive, as the newly formed nanobots may, in the abstract space of possible living organisms, be located quite far from all biological life, and have very different physical and chemical properties. It is hardly absurd to think that this might give the nanobots a robust reproductive power unmatched by anything that the evolution of biological life has discovered. At the very least, this issue deserves further study. A possible middle ground could be that, on one hand, it is possible to proceed with nanobot technology without risking a grey goo scenario, provided that we respect certain safety protocols, whereas, on the other hand, grey goo would be a real danger without these precautions. Phoenix and Drexler (2004) have a number of suggestions for how nanobots can be made to self-replicate in controlled and safe fashion.
A key insight is that the self-replication ability of an agent (be it a biological organism or a robot) is always contingent on its environment. Even a set of blacksmith’s tools can, in the right environment (one that provides suitable input of skills and muscle power), produce a duplicate set, and can thus be described as self-replicating. What is always needed for self-replication capability is the raw material and energy needed to produce the replicates. [314] Hence, we could avoid grey goo if we construct the self-replicating machines to contain elements not available in the natural environment. But we may not even have to go that far. Phoenix and Drexler point out that a general-purpose molecular assembler will typically not be a general purpose molecular disassembler. It will, most likely, be far easier to construct an APM machine that requires its raw material to be delivered in the form of a limited range of simple chemicals (such as acetylene or acetone), rather than having the ability to extract molecular fragments and atoms from arbitrary chemical compounds. Suppose that we regulate the technology so as to make a specific list of sufficient safety precautions obligatory. With a risk such as grey goo, where the consequences of a single mishap could be global and even put an end to humanity, it is clearly not sufficient that most practitioners of the technology adhere to the regulation, or that almost all of them do: anything short of obedience to regulation from all practitioners would be unsatisfactory. Can this be ensured? This is not clear. Kurzweil (2006) advocates another safety layer, in the form of nanobots specifically designed to fight grey-goo-like outbreaks. Without defenses, the available biomass could be destroyed by gray goo very rapidly. Clearly, we will need a nanotechnology immune system in place before these scenarios become a possibility. . . . Eric Drexler, Robert Freitas, Ralph Merkle, Mike Treder, Chris Phoenix, and others have pointed out that future nanotech manufacturing devices can be created with safeguards that would prevent the accidental creation of self-replicating nanodevices. However, this observation, although important, does not eliminate the threat of gray goo, as I pointed out above. There are other reasons (beyond manufacturing) that self-replicating nanobots will need to be created. The nanotechnology immune system mentioned above, for example, will ultimately require self-replication; otherwise it would be unable to defend us against the development of increasingly sophisticated types of goo. It is also likely to find extensive military applications. Moreover, a determined adversary or terrorist can defeat safeguards against unwanted self-replication; hence, the need for defense. The suggestion of setting up this kind of defense system against grey goo – which Kurzweil elsewhere calls “police nanobots” and “blue goo” [315] – goes back to Drexler (1986). It makes me very uneasy. Joy (2000) points out that the system.
Nano---A2: Defense---Regulations
Nano regulation is impossible
Stephen J. Ridge 18, Master of Arts in Security Studies from the Naval Postgraduate School, Assistant Senior Watch Officer, U.S. Department of Homeland Security, National Operations Center, “A REGULATORY FRAMEWORK FOR NANOTECHNOLOGY”, Thesis, March, p. 2-4
There are many roadblocks to developing suitable nanotechnology regulation. One major roadblock for nanotechnology regulation stems from the lack of consensus on the definition of nanotechnology. It is difficult to construct an architectural framework for nanotechnology regulation when many leaders within the field cannot agree on what the term even means.
According to Andrew Maynard, Professor in the School for the Future of Innovation in Society at Arizona State University and co-chair of the World Economic Forum Global Agenda Council on Nanotechnology, “a sensible definition [for nanotechnology] has proved hard, if not impossible, to arrive at.”3 A brief perusal of nanotechnology stakeholders reveals a myriad of definitions upon which their interaction with the technology is based. For instance, nanotechnology is defined by the U.S. National Nanotechnology Initiative as the understanding and control of matter at dimensions between approximately 1 and 100 nanometers, where unique phenomena enable novel applications. Encompassing nanoscale science, engineering, and technology, nanotechnology involves imaging, measuring, modeling, and manipulating matter at this length scale.4 In 2010, the European Commission released this definition for public comment: “a material that consists of particles with one or more external dimensions in the size range 1 nm-100 nm for more than 1 percent of their number;” and/or “has internal or surface structures in one or more dimensions in the size range 1 nm-100 nm;” and/or “has a specific surface area by volume greater than 60 m2/cm3, excluding materials consisting of particles with a size lower than 1 nm.”5 In the 21st Century Nanotechnology Research and Development Act of 2003, the U.S. Congress defines “nanotechnology” as “the science and technology that will enable one to understand, measure, manipulate, and manufacture at the atomic, molecular, and supramolecular levels, aimed at creating materials, devices, and systems with fundamentally new molecular organization, properties, and functions.”6 The inaugural issue of Nature Nanotechnology in 2006 asked a wide array of researchers, industrialists, and scientists what nanotechnology means to them. Unsurprisingly, the 13 different individuals queried yielded 13 different responses.
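The 60 m2/cm3 threshold in the draft European Commission definition ties back to the 1-100 nm size range through a short calculation. As a minimal sketch, assuming idealised spherical particles of a single diameter (real nanomaterials are rarely either), a sphere of diameter d has surface area πd² and volume πd³/6, so its volume-specific surface area is 6/d; setting 6/d = 60 m2/cm3 = 6 × 10^7 m^-1 gives d = 100 nm. Dividing by density gives the mass-specific area 6/(ρd), which is the "larger surface area per mass" point the thesis makes. The function names and the 2 g/cm3 density used below are illustrative assumptions, not part of any regulatory method.

```python
# Minimal sketch: idealised spheres of a single diameter (an assumption, not a regulatory method).
VSSA_THRESHOLD_M2_PER_CM3 = 60.0  # threshold quoted in the draft EC definition
M3_PER_CM3 = 1e-6                 # one cubic centimetre expressed in cubic metres


def vssa_sphere_m2_per_cm3(diameter_nm: float) -> float:
    """Volume-specific surface area of a sphere, 6 / d, expressed in m^2 per cm^3."""
    diameter_m = diameter_nm * 1e-9
    area_per_volume_m2_per_m3 = 6.0 / diameter_m
    return area_per_volume_m2_per_m3 * M3_PER_CM3


def ssa_sphere_m2_per_g(diameter_nm: float, density_g_per_cm3: float) -> float:
    """Mass-specific surface area, 6 / (rho * d): smaller particles expose more area per gram."""
    return vssa_sphere_m2_per_cm3(diameter_nm) / density_g_per_cm3


def meets_vssa_threshold(diameter_nm: float) -> bool:
    """True when an idealised sphere of this diameter exceeds the 60 m^2/cm^3 threshold."""
    return vssa_sphere_m2_per_cm3(diameter_nm) > VSSA_THRESHOLD_M2_PER_CM3


if __name__ == "__main__":
    for d in (10.0, 100.0, 1000.0):
        print(f"d = {d:g} nm: VSSA = {vssa_sphere_m2_per_cm3(d):.0f} m^2/cm^3, "
              f"SSA at a hypothetical 2 g/cm^3 = {ssa_sphere_m2_per_g(d, 2.0):.0f} m^2/g")
```

Because the area-to-volume ratio scales as 1/d, each tenfold reduction in particle size multiplies the exposed surface per unit volume (and per gram) by ten, which is one reason size-based and surface-based definitions overlap but do not coincide for non-spherical or polydisperse materials.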
This lack of a commonly agreed-upon nanotechnology lexicon makes regulation difficult. Another roadblock arises because nanotechnology is still an emerging technology. When establishing regulation, the regulating entity and those empowering the regulation assume that the regulating entity has the knowledge of what “good behavior” in an industry should be.7 This assumption is somewhat flawed because predicting the way a new technology will be developed and adopted is very difficult. Therefore, predicting what “good behavior” in an industry will look like is also very difficult.8 This challenge of regulation does not mean regulation should be ignored until the technology is mature, but rather that it is necessary to implement a regulatory framework that can both address problems as they arise and attempt to prevent future problems by exercising foresight into what the near-term and long-term technological developments may be. Next, the properties of engineered nanomaterials make categorization of these materials difficult under current regulatory definitions. For example, according to the National Nanotechnology Initiative (NNI), at the nanoscale, a particle’s properties such as “melting point, fluorescence, electrical conductivity, magnetic permeability, and chemical reactivity change as a function of the size of the particle.”9 Additionally, according to the NNI, “nanoscale materials have far larger surface areas than similar masses of larger-scale materials. As surface area per mass of a material increases, a greater amount of the material can come into contact with surrounding materials, thus affecting reactivity.”10 These physical properties provide much of the basis for why the technology has the potential to be revolutionary. However, according to Beaudrie, Kandlikar, and Satterfield, “not enough is known about the relationship between nanomaterial physicochemical characteristics and behavior to anticipate risks.
The result is a serious lack of predictive analytical capacity to anticipate harmful implications.”11 Also problematic is that nanotechnology research and development encompasses many different fields of science and spans several sectors of society. These sectors include energy, electronics, defense/homeland security, chemicals, communications/information technology, manufacturing, government, food and agriculture, pharmaceutical/health companies, transportation, education, and commerce/economics. This broad range of potential applications blurs the lines of regulatory responsibility across different regulatory agencies. Also, each of these sectors contains stakeholders who have differing concerns and interests in the technology and are often competing with one another for the funding of research and development.

Time Travel---Impact---2NC
Excess matter crashes everything
Alexander Vivoni 18, Chemical Engineer, B.S. Chemical Engineering, Federal University of Rio De Janeiro, “Could Traveling Back In Time Destroy The Universe?”, Quora, 10-28, https://www.quora.com/Could-traveling-back-in-time-destroy-the-universe
Why? Well, because if you can send any amount of matter back in time, then this exact amount of matter will be duplicated there. The amount of matter used to build the Time Displacement Equipment (whatever it may be) already existed in any past you can send it to. It has been there since its formation. But now there are two of it. Matter creation. And this creation will propagate instantly from that point in time forward. All momentum of the Universe will change. It may be almost imperceptible for one time travel, but repeat it enough times and you may have the Universe collapsing onto itself from excess matter. Conservation of matter and energy exists for a reason.

Four more scenarios!
Simon Bucher-Jones 18, Contributor to the Faction Paradox, Member of the British Civil Service, Author, Poet, Artist, “How Could a TARDIS Destroy the Whole Universe?”, StackExchange, 2-25, https://scifi.stackexchange.com/questions/182229/how-could-a-tardis-destroy-the-whole-universe
There are a number of possible ways a faulty time machine could destroy a universe. These can be catalogued under three headings: 1) preventing it retroactively: this is the opposite of a bootstrap paradox, as instead of part of a universe causing itself (and the universe it is in), part of a universe annuls itself (and the universe it is in). Consider Dirac's hypothesis of the universe as a particle moving forward and backward in time, interacting with itself until all matter is woven from it, then blow that particle up before it interacts with itself the first time. [Not in Doctor Who, but in other science fiction Barrington Bayley has a short story in which this happens]. 2) blowing all of it up at once, for example in Douglas Adams's unmade Doctor Who film "Doctor Who and the Krikkitmen" [recently novelised by James Goss], in which the ultimate weapon works by opening space-time conduits between the cores of all suns, causing simultaneous hypernovae. As the destruction of the TARDIS explicitly causes the cracks in the universe, one hypothesis would be that they link suns in such a way. 3) affecting space-time itself. A hypothesis explained here: https://cosmosmagazine.com/physics/vacuum-decay-ultimatecatastrophe suggests that if our universe is of a certain kind, specific interactions could collapse the vacuum itself. Such an effect would propagate at apparently faster-than-light speeds and would conceivably unravel space-time. None of the above is expressly stated within the fiction, but all are possible without postulating infinite energies within the TARDIS itself. Additionally, although this is not true in Doctor Who, in which time travel exists as a mature technology, Larry Niven has hypothesised that all universes in which time travel is possible destroy themselves through cumulative paradoxes, leaving only those universes in which time travel is impossible.

Infinite loops
Randall 2, Degree in Physics from Caltech, “Time Travel - the Possibilities and Consequences”, 11-24, https://h2g2.com/entry/A662447
This theory involves two types of temporal loops. One type is the loop mentioned in the last paragraph, the 'grandfather paradox'. For the rest of this paragraph, let's call it the 'infinite repeat' loop, because it results in two different possibilities, infinitely repeating after one another. Another type of loop exists. It is the 'infinite possibilities' loop. In this loop, the outcome changes every single time that the loop repeats. Think of this: Imagine that you ask your best friend to go back in time to before you were born and kill your granddad. Also, you had enough forethought to tell him to, while he's back there, write a note to his future self to go back in time and kill the man who would be your granddad. Everything's okay, right? Maybe not. When your friend is given the instruction to go and kill your granddad from you, he might do one thing. When he receives a note from his future self, he might do another. And if he does another thing during the second repeat, he must do a different thing the third. And the fourth. And the fifth. A change in one iteration of the loop would result in a change in the note, which would result in a change in the next iteration. Eventually, he'll do something that ends up breaking down the loop (e.g., forgetting to write himself a note). This will start an infinite repeat loop. And as was already mentioned, infinite repeat loops may cause the universe to end.
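Randall's two loop types can be pictured as repeated application of a rule that maps one pass's outcome to the instructions for the next. The toy model below is an illustrative assumption, not Randall's own formalism: outcomes are hashable values, a hypothetical `step` rule produces the next outcome, an outcome that reproduces itself is labelled "consistent", a cycle of two or more outcomes (such as kill, then spare, then kill again) is the "infinite repeat" loop, and a run in which no outcome recurs within the iteration budget is reported as "infinite possibilities". It does not model the eventual breakdown Randall describes.

```python
from collections.abc import Callable, Hashable


def run_loop(step: Callable[[Hashable], Hashable], start: Hashable,
             max_iters: int = 1000) -> tuple[str, list[Hashable]]:
    """Toy model of a time loop: each pass's outcome sets the instructions for the next pass.

    Returns a label and the outcomes seen before the first repeat:
      "consistent"             - an outcome reproduces itself (step(x) == x)
      "infinite repeat"        - outcomes cycle through two or more values
      "infinite possibilities" - no outcome repeats within max_iters
    """
    first_seen: dict[Hashable, int] = {}
    history: list[Hashable] = []
    outcome = start
    for i in range(max_iters):
        if outcome in first_seen:
            cycle_length = i - first_seen[outcome]
            label = "consistent" if cycle_length == 1 else "infinite repeat"
            return label, history
        first_seen[outcome] = i
        history.append(outcome)
        outcome = step(outcome)
    return "infinite possibilities", history


def grandfather(outcome: str) -> str:
    """If the granddad is killed, nobody sends the friend back, so he is spared, and vice versa."""
    return "spare" if outcome == "kill" else "kill"


if __name__ == "__main__":
    print(run_loop(grandfather, "kill"))              # two outcomes alternating forever
    print(run_loop(lambda note: note + 1, 0, 20)[0])  # the note changes on every pass
```

In this framing a paradox-free loop is simply a rule with a fixed point; the grandfather rule has none, which is why it can only alternate.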
Time Travel---A2: No Travelers
Lack of travelers doesn’t disprove time travel
Dr. Carl Sagan 2k, David Duncan Professor of Astronomy and Space Sciences, “Carl Sagan Ponders Time Travel”, PBS Nova, November, http://www.pbs.org/wgbh/nova/time/sagan.html, recut: smarx, MLC)
One of Hawking's arguments in the conjecture is that we are not awash in thousands of time travelers from the future, and therefore time travel is impossible. This argument I find very dubious, and it reminds me very much of the argument that there cannot be intelligences elsewhere in space, because otherwise the Earth would be awash in aliens. I can think of half a dozen ways in which we could not be awash in time travelers, and still time travel is possible. NOVA: Such as? Sagan: First of all, it might be that you can build a time machine to go into the future, but not into the past, and we don't know about it because we haven't yet invented that time machine. Secondly, it might be that time travel into the past is possible, but they haven't gotten to our time yet; they're very far in the future and the further back in time you go, the more expensive it is. Thirdly, maybe backward time travel is possible, but only up to the moment that time travel is invented. We haven't invented it yet, so they can't come to us. They can come to as far back as whatever it would be, say A.D. 2300, but not further back in time. Then there's the possibility that they're here all right, but we don't see them. They have perfect invisibility cloaks or something. If they have such highly developed technology, then why not? Then there's the possibility that they're here and we do see them, but we call them something else—UFOs or ghosts or hobgoblins or fairies or something like that. Finally, there's the possibility that time travel is perfectly possible, but it requires a great advance in our technology, and human civilization will destroy itself before time travelers invent it. I'm sure there are other possibilities as well, but if you just think of that range of possibilities, I don't think the fact that we're not obviously being visited by time travelers shows that time travel is impossible.

Time Travel---A2: Grandfather Paradox
The Grandfather Paradox can’t happen. Experimental evidence proves the Universe will stop it.
Alasdair Wilkins 10, Master's in Science and Medical Journalism at the University of North Carolina, Writer for AV Club and io9, “Physicists Reveal How The Universe Guarantees Paradox-Free Time Travel”, io9, 7-20, https://io9.gizmodo.com/5591796/physicists-reveal-how-the-universe-guarantees-paradoxfree-time-travel, recut: smarx, MLC)
As always, the Grandfather Paradox, in which a time traveler kills his or her grandfather before he fathered the traveler's parent, gets the most attention here. Various workarounds have been proposed over the years: Oxford physicist David Deutsch came up with an intriguing possibility in the early 90s when he suggested that it was impossible to kill your grandfather, but it was possible to remember killing your grandfather. In some weird way, the universe would forbid you from creating a paradox, even if your memories told you that you had. This theory, like most others put forward, relies on liberal use of the word "somehow" and the phrase "for some reason" to explain how it works. As such, it's not an ideal explanation for paradox-free time travel, and that's where a new idea by MIT's Seth Lloyd comes into the picture. He says that paradoxes might be impossible, but extremely improbable things that prevent them from happening very definitely aren't. Let's go back to the grandfather paradox to see what he means. Let's say you shoot your grandfather at point-blank range. This theory suggests that something will happen, such as the bullet being defective or the gun misfiring, to stop your temporal assassination. This can involve some very low-probability events - for instance, the manufacturer becomes incredibly more likely to make that specific bullet improperly than any other, for the sole reason that it will be later used to kill your grandfather. It might even come down to an ultra-low-probability quantum fluctuation, in which the bullet suddenly alters course for no apparent physical reason, in order to keep the paradox at bay.

Speed of Light Impact---2NC
Elmi says going the speed of light---That destroys the Universe
Timothy Bahry 16, York University, “Could Traveling Faster Than The Speed Of Light Destroy The Universe?”, Quora, 10-25, https://www.quora.com/Could-traveling-faster-than-the-speed-of-lightdestroy-the-universe
Yes, it might actually be possible to travel faster than light, and the universe can be destroyed by it. Here’s an answer I originally posted at “If I run along the aisle of a bus traveling at (almost) the speed of light, can I travel faster than the speed of light?”: According to special relativity, information can't ever travel faster than light for the reason described at https://www.quora.com/How-does-relativity-work/answer/Timothy-Bahry. The universe does obey special relativity in the absence of a gravitational field. Diamond has the highest speed of sound of any substance on Earth, which is 12 km/s, so if you have a super long diamond rod in outer space and you twist one end, the twisting will travel to the other end at only 12 km/s, far slower than the speed of light. There might actually be a substance whose bulk modulus is larger than its density times c^2, so that the wave equation would predict that a longitudinal wave in it travels faster than light: the material a neutron star is made of. The reason I'm wondering that is because I read that some neutron stars have a photon sphere.
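The answer's two quantitative points can be checked with the non-relativistic wave equation it invokes, in which the wave speed is v = √(K/ρ). Since both sides are positive, v > c exactly when K > ρc², which is the condition the answer states. The sketch below follows the answer's own simplification of using the bulk modulus alone (for an isotropic solid the longitudinal speed also depends on the shear modulus), takes the quoted 12 km/s figure for diamond at face value, and uses a hypothetical rod exactly one light-second long; the function names are illustrative.

```python
import math

C = 299_792_458.0          # speed of light in vacuum, m/s
DIAMOND_SPEED = 12_000.0   # speed of sound in diamond as quoted in the answer, m/s


def wave_speed(modulus_pa: float, density_kg_m3: float) -> float:
    """Speed predicted by the non-relativistic wave equation: v = sqrt(K / rho)."""
    return math.sqrt(modulus_pa / density_kg_m3)


def naive_speed_exceeds_light(modulus_pa: float, density_kg_m3: float) -> bool:
    """v > c is equivalent to K > rho * c**2, because both sides are positive."""
    return modulus_pa > density_kg_m3 * C ** 2


def twist_delay_s(rod_length_m: float, speed_m_s: float = DIAMOND_SPEED) -> float:
    """Time for a twist applied at one end of a rod to reach the other end."""
    return rod_length_m / speed_m_s


if __name__ == "__main__":
    rod = C  # hypothetical rod exactly one light-second long, in metres
    print(f"twist: {twist_delay_s(rod) / 3600:.1f} h, light: {rod / C:.0f} s")
```

On these assumptions the twist needs roughly seven hours to cross a rod that light crosses in one second, which is the gap the answer is pointing at.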
Obviously, even if that is the case, if you bombard its surface, the shockwave in it will not travel faster than light, because then it would be travelling backwards in time in another frame of reference; therefore it doesn't obey the wave equation. It can still be destined to obey the wave equation for any analytic initial state without information travelling faster than light. That's because, given all derivatives of velocity with respect to position at one point and the fact that the function is analytic, it can be fully determined what the velocities at all the other points are. For that reason, it wouldn't break any laws for a sinusoidal wave to travel through a neutron star faster than light. Since the universe doesn't follow special relativity, it might be possible to travel faster than light. According to “MU Physicist Says Testing Technique for Gravitomagnetic Field is Ineffective,” gravitomagnetism has been detected, so we're pretty sure the universe follows general relativity at the macroscopic level. Objects actually do get dragged faster than light by moving space beyond the event horizon of a black hole. See my answer to “What is a black hole? How can we understand it?” Also, galaxies will recede from us faster than light because of the nonzero cosmological constant and, just as if they were falling into a black hole, they will appear to approach a certain finite distance away from us without ever reaching it while getting ever more redshifted. However, since our universe follows general relativity, I don't think its laws predict that anything can go from a point in space-time back to the same point without going faster than light within the space itself, but I'm not absolutely sure. If an object does do so, its path is called a closed timelike curve.
After all, there probably does exist a deterministic set of laws a universe could have that doesn't let information travel faster than light within the space and yet, for some initial states, gives a contradictory prediction of what it will evolve into because it predicts that a closed timelike curve will exist. There's no way a spaceship could travel faster than light by bending space around it according to those laws, because then the information telling the space to warp would have to travel through the unwarped space, far enough ahead of the ship, faster than light. Those laws do, however, permit you to build a track through space, at no faster than light, where time is contracted by a factor of 21 within the track, enabling things to go 20 times faster than light within the track as observed from outside. After that, those laws would then also permit another track to be built right beside it that contracts time by a factor of 21 in another frame of reference, enabling a spaceship to travel into its own past light cone. If general relativity, like that other set of laws, really does allow for closed timelike curves, here's one possible solution. The moment a closed lightlike curve gets created, it will nucleate the disappearance of space at the speed of light before it gets a chance to evolve into a closed timelike curve. Such a nucleation may have already occurred in a black hole but never reached us to destroy us, because light can't escape from a black hole.

Grey Goo---2NC
Industrialized society makes it inevitable.
(Jayar Lafontaine 15. MA in Philosophy from McMaster University and an MDes in Strategic Foresight and Innovation from OCAD University. Foresight strategist with a leading North American innovation consultancy, where he helps organizations to develop their capacity for future thinking so that they can respond more flexibly to change and disruption.
8-17-2015, Misc Magazine "Gray Goo Scenarios and How to Avoid Them," io9, https://io9.gizmodo.com/gray-goo-scenarios-and-how-to-avoid-them1724622907 )/LRCH Jrhee
One of the key effects of globalization and the interconnectedness enabled by information technology is that all human systems are becoming increasingly complex, and so more and more susceptible to the Gray Goo effects we notice in the domain of biological ecosystems. Along with the potential for fatal asteroid strikes, gamma ray bursts, and super volcano eruptions, the Future of Humanity Institute at Oxford University – a think tank dedicated to assessing risks that might end human life on Earth – lists such human-led endeavors as artificial intelligence, anthropogenic climate change, and molecular nanotechnology among its key areas of research. The threat of Gray Goo and the risk of ruin, it seems, is on the rise. Whether we are up to the challenge of adjusting the way we think about risk and thinking thoroughly about how our choices impact our increasingly complex world remains to be seen.

Warming
Warming Turn
Nuke war won’t cause extinction---BUT, it does crash the economy, which is key to avert existentially threatening warming.
Samuel Miller-McDonald 19. Master of Environmental Management at Yale University studying energy politics and grassroots innovations in the US. 01-04-2019. "Deathly Salvation." The Trouble. https://www.the-trouble.com/content/2019/1/4/deathly-salvation
The global economy is hurtling humanity toward extinction. Greenhouse gas emissions are on track to warm the planet by six degrees Celsius above preindustrial averages. A six-degree increase risks killing most life on earth, as global warming did during the Late Permian when volcanoes burned a bunch of fossilized carbon (e.g., coal, oil, and gas).
Called the Great Dying, that event was, according to New York Magazine, “The most notorious [extinction event…]; it began when carbon warmed the planet by five degrees, accelerated when that warming triggered the release of methane in the Arctic, and ended with 97 percent of all life on Earth dead.” Mainstream science suggests that we’re on our way there. During the winter of 2017, the Arctic grew warmer than Europe, sending snow to the Mediterranean and Sahara. The planet may have already passed irreversible thresholds that could accelerate further feedback loops like permafrost melt and loss of polar ice. Patches of permafrost aren’t freezing even during winter, necessitating a rename (may I suggest ‘nevafrost’?). In the summer of 2018, forests north of the Arctic Circle broke 90 degrees Fahrenheit and burned in vast wildfires. We’re reaching milestones far faster than scientists have even recently predicted. As Guardian columnist George Monbiot noted, “The Arctic meltdown […] is the kind of event scientists warned we could face by 2050. Not by 2018.” Mass marine death that rapidly emits uncontrollable greenhouse gasses is another feedback loop that seems ready to strike. The ocean is now more acidic than any time in the last 14 million years, killing everything from snails to whales. It’s growing rapidly more acidic. Meanwhile, from the global South to wealthier industrialized countries, people are already dying and being displaced from the impacts of extreme climate change via extreme droughts, floods, wildfires, storms, and conflicts like the Syrian civil war. Authoritarianism is on the rise due directly to these climate emergencies and migrations. The IPCC has recently alerted the world that we have about a decade to dramatically cut emissions before collapse becomes inevitable. We could prevent human extinction if we act immediately. But the world is unanimously ignoring climate change. Nations will almost certainly fail to avert biosphere collapse. 
That is because doing so will require a rapid decarbonization of the global economy. But why does decarbonization--an innocuous enough term--seem so implausible? Well, let’s put it this way: a sufficient transition to non-carbon energy would require all the trains, buses, planes, cars, and ships in the world to almost immediately stop and be replaced with newly manufactured vehicles to run on non-carbon fuel, like hydrogen cells, renewable electricity, or some carbon-neutral biofuel. All this new manufacturing will have to be done with low-carbon techniques, many of which don’t exist yet and may be impossible to achieve at scale. This means all the complex supply chains that move most of the world’s food, water, medicine, basically all consumer goods, construction materials, clothing, and everything else billions of people depend on to survive will have to be fundamentally reformed, in virtually every way, immediately. It also means that all the electric grids and indoor heating and cooling systems in the world must be rapidly transformed from centralized coal and gas power plants to a mixture of solar, wind, and nuclear—both distributed and centralized—dispersed through newly built micro-grids and smart-grids, and stored in new battery infrastructure. These new solar panels, batteries, and nuclear plants will somehow have to be built using little carbon energy, again something that may be impossible to achieve at a global scale. The cost of this transition is impossible to know, but surely reaches the tens of trillions of dollars. It needs to happen in just about every industrialized nation on the planet and needs to happen now—not in 2050, as the Paris Agreement dictates, or the 2030s, as reflected in many governments’ decarbonization goals. The engineering and administrative obstacles are immense; disentangling century-old, haphazard electric grid systems, for example, poses an almost unimaginable cascade of institutional and logistical hurdles. 
Imagine the difficulty of persuading millions of municipalities around the world to do anything simultaneously; now, imagine convincing them all to fundamentally shift the resource infrastructure on which their material existence depends immediately. Perhaps even more daunting are the political obstacles, with diverse financial interests woven together in a tapestry of inertia and self-interest. Virtually all retirement funds, for instance, are invested in fossil fuel companies. Former and current fossil fuel industry managers sit on all manner of institutional committees in which energy and investment decisions are made: trustee boards of universities, regulatory commissions, city councils, congressional committees, philanthropic boards, federal agencies, the Oval Office couch. Lots of people make lots of money from fossil fuels. Will they sacrifice deeply vested interests to prevent collapse? They certainly have not shown signs of doing so yet, when the stakes are as dire as they’ve ever been; most have instead ruthlessly obstructed meaningful action. Will enough people be willing to do what it takes to forcibly remove them from the most powerful institutions in the world? That also seems unlikely, given meager public involvement in this issue so far. This is the obstacle of collective action: everyone has to sacrifice, but no one wants to start. Who will assent to giving up their steady returns from fossil fuels if everyone else refuses? When people are living so precariously as it is (43% of Americans can’t afford basic necessities), how can we ask them to undertake energy transition? The US drags its feet on decarbonizing and justifies it by arguing that China has not made strong enough commitments. Which country will voluntarily give up access to strategic fossil fuel reserves? Much of our geopolitical dynamics and wars have revolved around access to mineral resources like oil. Is the US going to put itself in a disadvantaged position for the climate?
Shell withdraws research funding for renewables because ExxonMobil goes full steam ahead on oil, and, hey, they must compete. Fossil-fuel-funded politicians of both parties certainly will not aid transition. If untangling the webs of influence, interests, and engineering preventing decarbonization weren’t daunting enough, the world will also have to suck billions of tons of greenhouse gases out of the atmosphere that have already been emitted. Keeping the planet to even a deadly 1.5 degrees Celsius increase of warming depends on it. This sounds simpler than it is, as if a big vacuum cleaner could siphon particulates from the sky. But no one really knows how to extract and sequester carbon at the scale necessary to prevent catastrophic climate change. Engineers have thrown out a lot of ideas—some more plausible than others—but most scientists who have looked at proposals generally agree that it’s wishful thinking. As Huffington Post quotes Clive Hamilton, “In order to capture just a quarter of the emissions from the world's coal-fired power plants we would need a system of pipelines that would transport a volume of fluid twice the size of the global crude-oil industry.” Of course, manufacturing, shipping, and constructing those pipelines would require immense carbon energy inputs and emissions. And that’s just to capture the emissions from coal! Like energy transition, carbon capture and sequestration requires governments to act collectively to invest trillions of dollars in risky, experimental, and probably mostly ineffectual sequestration technologies. Again, it’s a collective action problem: nobody wants to be the one to sacrifice while no one else is putting themselves on the line. And the minuscule likelihood that energy transition will occur under a Trump-Digs-Coal presidency—and the Trumpian nationalists winning elections across the world—casts further doubt on the possibility of rapid decarbonization.
The administration’s energy department has projected that, “The carbon footprint of the United States will barely go down at all for the foreseeable future and will be slightly higher in 2050,” as InsideClimateNews notes. The world, today, is still setting records for carbon emissions and there’s no sign that will change anytime soon. The only period in US history the nation has undertaken anything near the magnitude of collective action necessary for mitigation was during the Second World War and the rebuilding effort in its aftermath. But even those projects involved a fraction of the capital and coordination that will be necessary for sufficient energy transition and carbon sequestration. More importantly, today’s collective action will have to be politically justified without the motivation of defeating a personified enemy—a Hitler, if you will. Today, with interpersonal alienation running rampant and extremely consolidated wealth and power, industrial economies seem infinitely far from a cultural, political atmosphere in which collective action policies are even close to possible. To the contrary, wealthy countries are all still slashing public goods, passing austerity budgets, and investing heavily in fossil fuel infrastructure. Even most elected Democrats are dragging their feet on passing climate policy. The world is going in the exact opposite direction from one in which humans can live. We’ve tied ourselves in a perfect Gordian knot. The global economy is a vast machine, operating beyond the control of even the most powerful individuals, and it has a will of its own to consume and pollute. It’s hard to believe that this massive metal beast will be peacefully undone by the people who survive by it, and we all survive by it in some way, often against our wills; it bribes and entraps us all in ways large and small. But a wrench could clog the gears, and maybe only a wrench can stop it. 
One wrench that could slow climate disruption may be a large-scale conflict that halts the global economy, destroys fossil fuel infrastructure, and throws particulates in the air. At this point, with insane people like Trump, Putin, Xi, May, and Macron leading the world’s biggest nuclear powers, large-scale conflagration between them would probably lead to a nuclear exchange. Nobody wants nuclear war. Rather, nobody sane and prosocial wants nuclear war. It is an absolute horror that would burn and maim millions of living beings, despoil millions of hectares, and scar the skin of the earth and dome of the sky for centuries, maybe millennia. With proxy conflict brewing between the US and Russia in the Middle East and the Thucydides trap ready to ensnare us with an ascendant China, nuclear war looks like a more realistic possibility than it has since the 1980s. A devastating fact of climate collapse is that there may be a silver lining to the mushroom cloud. First, it should be noted that a nuclear exchange does not inevitably result in apocalyptic loss of life. Nuclear winter—the idea that firestorms would make the earth uninhabitable—is based on shaky science. There’s no reliable model that can determine how many megatons would decimate agriculture or make humans extinct. Nations have already detonated 2,476 nuclear devices. An exchange that shuts down the global economy but stops short of human extinction may be the only blade realistically likely to cut the carbon knot we’re trapped within. It would decimate existing infrastructures, providing an opportunity to build new energy infrastructure and intervene in the current investments and subsidies keeping fossil fuels alive. In the near term, emissions would almost certainly rise as militaries are some of the world’s largest emitters. 
Given what we know of human history, though, conflict may be the only way to build the mass social cohesion necessary for undertaking the kind of huge, collective action needed for global sequestration and energy transition. Like the 20th century’s world wars, a nuclear exchange could serve as an economic leveler. It could provide justification for nationalizing energy industries with the interest of shuttering fossil fuel plants and transitioning to renewables and, uh, nuclear energy. It could shock us into reimagining a less suicidal civilization, one that dethrones the death-cult zealots who are currently in power. And it may toss particulates into the atmosphere sufficient to block out some of the solar heat helping to drive global warming. Or it may have the opposite effects. Who knows? What we do know is that humans can survive and recover from war, probably even a nuclear one. Humans cannot recover from runaway climate change. Nuclear war is not an inevitable extinction event; six degrees of warming is. Given that mostly violent, psychopathic individuals manage the governments and industries of the world, it may only be possible for antisocial collective action—that is, war—to halt, or at least slow, our inexorable march toward oblivion. A courageous, benevolent ruler might compel vast numbers of people to collective action. But we have too few of those, and the legal, political, and military barriers preventing them from rising are immense. Our current crop of villainous presidents, prime ministers, and CEOs, whether lusting for chaos or pursuing their own petty ends, may inadvertently conspire to break the machine now preventing our future. When so bereft of heroes, we may need to rely on humanity’s antagonists and their petty incompetence to accidentally save the day. It is a stark reflection of how homicidal our economy is—and our collective adherence to its whims—that nuclear war could be a rational course of action. 
Nuke war solves warming
Sorin Adam Matei 12. Ph.D., Associate Dean of Research and Professor of Communication, College of Liberal Arts and Brian Lamb School of Communication, Purdue University. 3-26-2012. "A modest proposal for solving global warming: nuclear war – Sorin Adam Matei." Matei. https://matei.org/ithink/2012/03/26/a-modest-proposal-for-solving-global-warming-nuclear-war/
We finally have a solution for global warming. A discussion on the board The Straight Dope about the likely effect of a nuclear war brought up the hypothesis that a nuclear war on a large scale could produce a mini-nuclear winter. Why? Well, the dust and debris sent into the atmosphere by the conflagrations, plus the smoke produced by the fires started by the explosions, would cover the sun for a period long enough to lower the temperature by as much as 40 degrees Celsius for a few months and by 2 to 6 degrees Celsius for a few years. One on top of the other, according to this Weather Underground contributor, who cites a bona fide research paper on nuclear winter, after everything settled down we would be back to 1970s temperatures. Add to this the decline in industrial production and global oil consumption due to the industrial denuding of most large nations, and global warming simply goes away. I wonder what Jonathan Swift would have thought about this proposal?
Warming Turn---Overview
Outweighs the AFF---unlike nuclear war, climate change actually kills everyone.
Samuel Miller-McDonald 18. Master of Environmental Management at Yale University studying energy politics and grassroots innovations in the US. 5-2-2018. "Extinction vs. Collapse." Resilience. https://www.resilience.org/stories/2018-05-02/extinction-vs-collapse/
What’s more frightening than potentially implausible uncertainties are the currently existing certainties.
For example:
Ecology
+ The atmosphere has proven more sensitive to GHG emissions than predicted by mainstream science, and we have a high chance of hitting 2°C of warming this century. We could hit 1.5°C in the 2020s. Worst-case warming scenarios are probably the most likely.
+ Massive marine death is happening far faster than anyone predicted, and we could be on the edge of an anoxic event.
+ Ice melt is happening far faster than mainstream predictions. Greenland’s ice sheet is threatening to collapse and is already slowing ocean currents, which could also collapse.
+ This also means predictions of sea level rise have doubled for this century.
+ Industrial agriculture is driving massive habitat loss and extinction. The insect collapse – population declines of 75% to 80% have been seen in some areas – is something no one predicted would happen so fast, and portends an ecological sensitivity beyond our fears. This is causing an unexpected and unprecedented bird collapse (1/8 of bird species are threatened) in Europe.
+ Forests, vital carbon sinks, are proving sensitive to climate impacts.
+ We’re living in the 6th mass extinction event, losing potentially dozens of species per day. We don’t know how this will impact us and our ability to feed ourselves.
Energy
+ Energy transition is essential to mitigating warming of 1.5°C and beyond. Energy is the single greatest contributor to anthropogenic GHG emissions. And, by some estimates, transition is happening 400 years too slowly to avoid catastrophic warming.
+ Incumbent energy industries (that is, oil & gas) dominate governments all over the world. We live in an oil oligarchy – a petrostate, but for the globe. Every facet of the global economy is dependent on fossil fuels, and every sector – from construction to supply chains to transport to electricity to extraction to agriculture and on and on – is built around FF consumption. There’s good reason to believe FF will remain subsidized by governments beholden to their interests even if they become less economically viable than renewables, and so will maintain their dominance.
+ We are living in history’s largest oil & gas boom.
+ Kilocalorie to kilocalorie, FF is extremely dense and extremely cheap. Despite reports about solar getting cheaper than FF in some places, non-hydro/-carbon renewables are still a tiny minority (~2%) of global energy consumption and will simply always, by their nature, be less dense kcal to kcal than FF, and so will always be calorically more expensive.
+ Energy demand probably has to decrease globally to avoid 1.5°C, and it’s projected to increase dramatically. Getting people to consume less is practically impossible, and efficiency measures have almost always resulted in increased consumption.
+ We’re still setting FF emissions records.
Politics
+ Conditions today resemble those prior to the 20th century’s world wars: extreme wealth inequality, rampant economic insecurity, growing fascist parties/sentiment, and precarious geopolitical relations, and the Thucydides trap suggests war between Western hegemons and a rising China could be likely. These two factors could disrupt any kind of global cooperation on decarbonization and, to the contrary, will probably mean increased emissions (the US military is one of the world’s single largest consumers/emitters of FF).
+ Neoliberal ideology is so thoroughly embedded in our academic, political, and cultural institutions, and so endemic to discourse today, that the idea of degrowth – probably necessary to avoid collapse – and solidarity economics isn’t even close to discussion, much less realization, and, for self-evident reasons, probably never will be.
+ Living in a neoliberal culture also means we’ve all been trained not to sacrifice for the common good. But solving climate change, whether by paying more to achieve energy transition or by voluntarily consuming less, will entail sacrificing for the greater good. Humans are sometimes great at that, but the market fundamentalist ideology that pervades all social, commercial, and even self relations today stands against acting for the common good or in collective action.
+ There’s basically no government in the world today taking climate change seriously. There are many governments posturing and pretending to take it seriously, but none have substantially committed to a full decarbonization of their economies. (Iceland may be an exception, but Iceland is about 24 times smaller than NYC, so…)
+ Twenty-five years of governments knowing about climate change has resulted in essentially nothing being done about it: no emissions reductions, no substantive moves to decarbonize the economy. Politics have proven too strong for common sense, and there’s no good reason to suspect this will change anytime soon.
+ Wealth inequality is embedded in our economy so thoroughly – and so indigenously to FF economies – that it will probably continue either causing perpetual strife, as it has so far, or eventually cement a permanent underclass ruled by a small elite, similar to agrarian serfdom. There is a prominent view in left politics that greater wealth equality, some kind of ecosocialism, is a necessary ingredient in averting the kind of ecological collapse the economy is currently driving, given that global FF capitalism by its nature consumes beyond carrying capacities. At least according to one NASA-funded study, the combination of inequality and ecological collapse is a likely cause for civilizational collapse.
Even with this perfect storm of issues, it’s impossible to know how likely extinction is, and it’s impossible to judge how likely or extensive civilizational collapse may be.
We just can’t predict how human beings and human systems will respond to the shocks that are already underway. We can make some good guesses based on history, but they’re no more than guesses. Maybe there’s a miracle energy source lurking in a hangar somewhere waiting to accelerate non-carbon transition. Maybe there’s a swelling political movement brewing under the surface that will soon build a more just, ecologically sane order into the world. Community energy programs are one reason to retain a shred of optimism, but they’re still a tiny fraction of energy production and aren’t growing fast, though they could accelerate at any moment. We just don’t know how fast energy transition can happen, and we just don’t know how fast the world could descend into climate-driven chaos – either by human strife or physical storms. What we do know is that, given everything above, we are living through a confluence of events that will shake the foundations of civilization and jeopardize our capacity to sustain large populations of humans. There is enough certainty around these issues to justify being existentially alarmed. At this point, whether we go extinct or all but a thousand of us go extinct (again), maybe that shouldn’t make much difference. Maybe the destruction of a few billion or 5 billion people is morally equivalent to the destruction of all 7 billion of us, and so should provoke equal degrees of urgency. Maybe this debate about whether we’ll go completely extinct rather than just mostly extinct is absurd. Or maybe not. I don’t know. What I do know is that, regardless of the answer, there’s no excuse to stop fighting for a world that sustains life.
2NC---Warming Turn---AT: Causes War
It turns every impact and causes extinction.
Phil Torres 16. PhD candidate @ Rice University in tropical conservation biology, Op-ed: Climate Change Is the Most Urgent Existential Risk, http://ieet.org/index.php/IEET/more/Torres20160807
Climate change and biodiversity loss may pose the most immediate and important threat to human survival given their indirect effects on other risk scenarios. Humanity faces a number of formidable challenges this century. Threats to our collective survival stem from asteroids and comets, supervolcanoes, global pandemics, climate change, biodiversity loss, nuclear weapons, biotechnology, synthetic biology, nanotechnology, and artificial superintelligence. With such threats in mind, an informal survey conducted by the Future of Humanity Institute placed the probability of human extinction this century at 19%. To put this in perspective, it means that the average American is more than a thousand times more likely to die in a human extinction event than in a plane crash.* So, given limited resources, which risks should we prioritize? Many intellectual leaders, including Elon Musk, Stephen Hawking, and Bill Gates, have suggested that artificial superintelligence constitutes one of the most significant risks to humanity. And this may be correct in the long term. But I would argue that two other risks, namely climate change and biodiversity loss, should take priority right now over every other known threat. Why? Because these ongoing catastrophes in slow motion will frame our existential predicament on Earth not just for the rest of this century, but for literally thousands of years to come. As such, they have the capacity to raise or lower the probability of other risk scenarios unfolding.
Multiplying Threats
Ask yourself the following: are wars more or less likely in a world marked by extreme weather events, megadroughts, food supply disruptions, and sea-level rise?
Are terrorist attacks more or less likely in a world beset by the collapse of global ecosystems, agricultural failures, economic uncertainty, and political instability? Both government officials and scientists agree that the answer is “more likely.” For example, the current Director of the CIA, John Brennan, recently identified “the impact of climate change” as one of the “deeper causes of this rising instability” in countries like Syria, Iraq, Yemen, Libya, and Ukraine. Similarly, the former Secretary of Defense, Chuck Hagel, has described climate change as a “threat multiplier” with “the potential to exacerbate many of the challenges we are dealing with today, from infectious disease to terrorism.” The Department of Defense has also affirmed a connection. In a 2015 report, it states, “Global climate change will aggravate problems such as poverty, social tensions, environmental degradation, ineffectual leadership and weak political institutions that threaten stability in a number of countries.” Scientific studies have further shown a connection between the environmental crisis and violent conflicts. For example, a 2015 paper in the Proceedings of the National Academy of Sciences argues that climate change was a causal factor behind the record-breaking 2007-2010 drought in Syria. This drought led to a mass migration of farmers into urban centers, which fueled the 2011 Syrian civil war. Some observers, including myself, have suggested that this struggle could be the beginning of World War III, given the complex tangle of international involvement and overlapping interests.
Warming Turn---AT: Paris Solves
Paris fails.
Joana Castro Pereira & Eduardo Viola 18. Lusiada University; University of Brasilia. 11/2018. “Catastrophic Climate Change and Forest Tipping Points: Blind Spots in International Politics and Policy.” Global Policy, vol. 9, no. 4, pp. 513–524.
According to CAT (Climate Action Tracker), the pledges that governments have made under the Paris Conference have a probability of more than 90 per cent of exceeding 2°C and a 50 per cent chance of reaching 3.2°C. This possibility is even more problematic since the countries are not currently on track to meet their pledges. The United Nations Environment Programme (UNEP 2017) experts suggest that even if all pledges are fully implemented, the available carbon budget for keeping the planet at 1.5°C will already be well depleted by 2030. There is a serious inconsistency between the 1.5°C goal of the Paris Climate Agreement and the generic and diffuse pathways that were designed to achieve it. Although it can be seen as a diplomatic success, from the scientific point of view the agreement is weak, inadequate, and overdue, indicating very slow progress in decarbonizing the global economy. For twenty-five years now, after multiple conferences and pledges under the United Nations Framework Convention on Climate Change (UNFCCC), global carbon emissions have increased significantly at a rapid pace, and climate change has worsened considerably. The Paris Agreement is insufficient to reverse this path. The weakness of the agreement can essentially be summarized in five points.
1. Due mostly to strong resistance by countries such as the US and India, the Intended Nationally Determined Contributions (INDCs) are voluntary, and there are no tools to punish (not even moral sanctions) the parties that do not meet their pledges.
2. As we have seen, even if fully implemented – which seems very unlikely – the sum of all INDCs has a probability of less than 10 per cent of remaining at less than 2°C and a greater than 50 per cent chance of exceeding 3°C.
3. Due to resistance from countries such as China and India, which consider it an intrusion on their national sovereignty, the system established for monitoring the implementation of INDCs is weak.
4. There are no established dates by which parties must achieve their GHG emissions peaks – only a vague ‘as soon as possible’. Furthermore, the concept of decarbonization was removed from the agreement: there is no reference to the end of fossil fuel subsidies.
5. The Green Climate Fund, which is intended to raise 100 billion dollars per year by 2020 to support developing countries in their mitigation and adaptation efforts – established in Cancun in 2010 and minimally implemented – is part of the agreement. However: (1) the amount of public resources to be transferred, that is, those that could be truly guaranteed, was not defined; (2) these 100 billion dollars represent only approximately 0.4 per cent of the GDP of developed countries and are insufficient to truly address the problem; and (3) the US is the largest contributor to the fund, but President Trump’s decision to withdraw from the agreement indicates that funding for climate programmes will be cut. In addition, with the exception of China, the emerging middle-income countries have refused to commit to transferring financial resources to poor countries, which is particularly relevant since many developing countries (India among them) have made their pledges dependent on international financial and technological support.
It thus seems highly unlikely that the medium- and long-term processes established by the Paris Agreement can prevent dangerous climate change. Consequently, the risk of transcending the IPCC’s mid-range RCPs might be far greater than estimates suggest. Nevertheless, GCRs in general and CCC in particular remain neglected in the academic and political realms. Discussions about climate change rarely acknowledge catastrophic climate risk.
Warming Turn---AT: Reform Solves
Global coordination to solve climate change is impossible.
David Wallace-Wells 19. National Fellow at New America, deputy editor of New York Magazine. 02/19/2019. “I. Cascades.” The Uninhabitable Earth: Life After Warming, Crown/Archetype.
The United Nations’ Intergovernmental Panel on Climate Change (IPCC) offers the gold-standard assessments of the state of the planet and the likely trajectory for climate change (gold-standard, in part, because it is conservative, integrating only new research that passes the threshold of inarguability). A new report is expected in 2022, but the most recent one says that if we take action on emissions soon, instituting immediately all of the commitments made in the Paris accords but nowhere yet actually implemented, we are likely to get about 3.2 degrees of warming, or about three times as much warming as the planet has seen since the beginning of industrialization, bringing the unthinkable collapse of the planet’s ice sheets not just into the realm of the real but into the present. That would eventually flood not just Miami and Dhaka but Shanghai and Hong Kong and a hundred other cities around the world. The tipping point for that collapse is said to be around two degrees; according to several recent studies, even a rapid cessation of carbon emissions could bring us that amount of warming by the end of the century.
Reformist solutions to climate change are impossible due to cognitive bias, ideological entrenchment, and game theory.
Joana Castro Pereira & Eduardo Viola 18. Lusiada University; University of Brasilia. 11/2018. “Catastrophic Climate Change and Forest Tipping Points: Blind Spots in International Politics and Policy.” Global Policy, vol. 9, no. 4, pp. 513–524.
An attentive analysis of the latest scientific data demonstrated that the worst climate scenarios are not distant dystopias. Catastrophic climate risk is closer than most of humanity can perceive: we might have already crossed the core planetary boundary for climate change.
The planet is experiencing record temperatures, and even 2°C global warming could trigger tipping points in the Earth’s system; the prevailing estimates and assumptions are based on the stability of threatened crucial terrestrial biomes, such as boreal forests and the Amazon rainforest, and political action under the Paris Climate Agreement is clearly imprecise and insufficient to prevent dangerous climate change, pushing the world closer to transcending the IPCC’s mid-range RCPs. However, policymakers are disregarding the risk of crossing a climate tipping point. Furthermore, the real gravity of the climate risk is widely recognized by natural scientists but by only a small segment of the social sciences. As experts working on GCR reduction have stated, ‘we can reduce the probability that a trigger event will occur at a certain magnitude, (...) the probability that a certain consequence will follow, and (...) our own fragility to risk, to decrease the potential damage or support faster recovery’ (Global Challenges Foundation, 2016, p. 12); however, humanity is presently entirely unprepared to envisage and avert, and even less manage, the devastating consequences of potential CCC. Let us now focus on a number of cognitive and cultural, as well as institutional and political, limitations that hinder our capacity to prevent climate catastrophe. First, the limitations of our cognitive ability prevent us from thinking accurately about catastrophe: the human brain is wired to process linear correlations, so our minds are not prepared to make sense of sudden, rapid, and exponential changes. Human cognitive expectations are failed by the uncertainty and nonlinearity of the Earth and social systems (Abe et al., 2017; Posner, 2004). In addition, modern societies have developed under the stable and predictable environmental conditions of the Holocene. 
As a result, the current political-legal system was developed to address structured, short-term, direct cause and effect issues, providing simple solutions that produce immediate effects. Acknowledgement of the Anthropocene’s features is essential. The traditional premises of a stable environment are no longer valid: as we have seen, humans are profoundly and systemically altering the Earth’s system, and the consequences are uncertain given the intrinsic non-linear properties of the system itself (Viola and Basso, 2016). Moreover, catastrophic climate risk is not only marked by high levels of uncertainty, but it also offers little or no opportunity for adaptive management, that is, learning from experience and revising policies and institutions. Effectively managing catastrophic climate risk requires a proactive approach that anticipates emerging threats, mobilizes support for action against potential future harm, and undertakes measures that are sufficiently correct the first time. Nevertheless, current institutions do not seem capable of acting in this manner, and attempts to follow a proactive approach within less perfect institutions could translate into oppressive behaviours and security measures, such as restrictions of civil liberties (Bostrom, 2013). Few existing governance instruments can be used to prevent unprecedented and uncertain threats. Furthermore, when addressing potential CCC, it is important that policies neither misplace priorities nor produce catastrophic risk-risk trade-offs, that is, creating a new catastrophic risk through efforts to prevent another, as in the case of using solar radiation management techniques (namely, stratospheric aerosol injection) to overcome global warming, which might, for instance, accelerate stratospheric ozone depletion. In the absence of concrete progress, pressures for early deployment of several poorly understood geoengineering options, the outcomes of which are unpredictable, could arise. 
This task is particularly demanding in managing risk-risk trade-offs (Pereira, 2016; Wiener, 2016). Hence, financial resources for innovative research and policy foresight are needed to better anticipate the future consequences of our present choices. Nevertheless, the multidisciplinary nature of CCC, as well as it being not easily manageable by traditional/familiar scientific research methodologies, hinders research and, as a consequence, our capacity to address the problem (Bostrom, 2013). Second, avoiding CCC requires breaking with one of the core dogmas of the prevailing scholarship and politics: the nature-society dichotomy. Climate change is generally considered an external enemy (Methmann and Rothe, 2012). However, one key lesson from the Anthropocene’s new geological conditions is that there is an unbreakable link between the natural and social worlds: humans are now geological agents, and threats to security are no longer limited to outside agents of each national society (Fagan, 2016; Pereira, 2017; Pereira and Freitas, 2017). The twenty-first century human development paradigm recognizes only rhetorically the importance of nature. Averting climate catastrophe is thus inseparable from an honest and open reflection about the materials and energy systems used to produce consumer goods, as well as consumption patterns, lifestyles, values, beliefs, and current institutions. The world requires new ideas, stories, practices, and myths that promote fundamental changes in the manner in which humanity thinks and acts in relation to nature (Burke et al., 2016; Dalby, 2013). However, recognition that overcoming climate change demands a major paradigm shift in our fundamental thinking and acting has fuelled climate sceptics, who have been raising their voices in the media, creating popular confusion and inflaming political resistance (Capstick et al., 2015; Gupta, 2016). 
Additionally, as Chris Hedges (2015) noted, there seems to be a human tendency towards dismissing reality when it is unpleasant as well as a ‘mania’ for positive thinking and hope in Western culture; a belief that ‘the world inevitably moves toward a higher material and moral state’. This naïve optimism ignores the fact that history is not linear and moral progress does not always accompany technical progress, defying reality. History had long dark ages; in the absence of vigorous action, nothing can ensure us a safe future. Yet this ‘ideology of inevitable progress’ hinders our capacity for radical change. In Oryx and Crake, Margaret Atwood also touched upon this idea, asserting that humanity is ‘doomed by hope’. Third, current generations need strong incentives to adopt new practices. The mitigation of climate change is usually associated with the far future argument, that is, the assumption that people should act to avert catastrophic threats to humanity with the aim of improving the far future of human civilization. Nevertheless, many people lack motivation to help the far future, being concerned only with the near future or even only with themselves and those around them (Baum, 2015). GCR reduction is an intergenerational public good, thus addressing a problem of temporal free riding: future generations enjoy most of the benefits; consequently, the current generation benefits from inaction, while future generations bear the costs (Farquhar et al., 2017). Resisting short-term individual benefits (such as enjoying current consumption patterns) to achieve long-term collective interests requires strong discipline and persistence. Moreover, catastrophe’s large magnitude of impact can result in numbing. In psychology, Slovic (2007) found that people are usually willing to pay more to save one or two individuals than a mass of people – high-magnitude situations usually translate into general distrust in human agency, thus demobilizing people.
He also found that willingness to pay increases as a victim is described in greater detail and if he or she is given a face since it stimulates compassion. Conversely, future generations are a vast and blurred subject. For the same reasons, numbing also seems to occur regarding the conservation of nature. In addition, people tend to be concerned about recent, visible events that stimulate strong feelings, but global catastrophes are very rare or ultra-low-frequency risks; consequently, they are not recent or visible. Since they have not been experienced, there is no psychological availability, so public concern for them is low, in turn generating neglect in politics (Wiener, 2016). Catastrophic risks could thus face undervaluation. In this regard, it is worth considering David Wallace-Wells’ New York Magazine cover story, ‘The Uninhabitable Earth’, published in July 2017, and the debate it raised within the climate science community about the consequences of elevating the idea of environmental catastrophes (see, for instance, Mooney, 2017). The American journalist described an apocalyptic scenario in which climate change renders the Earth uninhabitable by 2100. This projected scenario of hopelessness received harsh criticism by climate scientists such as Michael Mann, Jonathan Foley, Marshall Shepherd, and Eric Steig, who described the article as ‘paralyzing’, ‘irresponsible’, and ‘alarmist’, presenting the problem as unsolvable (Mooney, 2017). Indeed, apocalyptic scenarios can stimulate numbing, so the Wallace-Wells story is clearly not an example of how climate risk should be communicated. Additionally, as we have seen in Section 2, the future consequences of climate change may actually be catastrophic without necessarily implying the existential risks described in ‘The Uninhabitable Earth’. Consequently, it is possible to address catastrophic climate risk without succumbing to hopeless descriptions of the ‘end of the world’. 
Since public concern for catastrophic climate risk is an essential condition for overcoming neglect in politics, raising the profile of the topic among the public appears necessary. One premise seems certain, however: CCC should not be framed as unavoidable or impossible to mitigate, but neither should the problem be addressed with an unrealistic sense of optimism or hope that masks the need for vigorous action. As Hedges (2015) put it, ‘hope, in this sense, is a form of disempowerment’. It is fundamental to communicate the potential catastrophic consequences of the climate crisis without stimulating mass numbing, while also avoiding the excessive positive thinking that hinders the necessary radical collective action. Yet this is not an easy undertaking. Fourth, averting global catastrophes requires deep levels of global cooperation. Nevertheless, countries have historically been unwilling to truly cooperate without having first experienced a crisis, and global catastrophes might not offer opportunities for learning. As Barrett (2016) observed, strong threshold uncertainty undermines collective action to avert catastrophic events. When uncertainty about the threshold is substantial, acting to avert a catastrophe is a prisoners’ dilemma, because countries have a major collective incentive to act but a very significant individual incentive not to. If the threshold is certain and every other country plays its part in averting the catastrophe, each country wants to participate, since it is certain that a lack of effective action by one country will lead to a catastrophic outcome. Conversely, when the threshold is uncertain, even if every other country plays its part, each country has an incentive to diminish its efforts. In this case, a country reduces its costs considerably and increases the odds of catastrophe only marginally. 
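A toy payoff calculation can make the threshold argument concrete. The sketch below is hypothetical and is not Barrett's (2016) model. It assumes N identical countries that each either abate at cost c or shirk, and a catastrophe that costs every country L. In the uncertain case, the threshold is assumed to be equally likely to be any whole number of abating countries from 1 to N. The function names and parameter values are illustrative only.

```python
def p_catastrophe(total_abatement: int, n: int, certain: bool) -> float:
    """Probability of catastrophe given how many of n countries abate.

    Hypothetical toy model:
    certain=True  -> threshold is known to be n (every country must abate).
    certain=False -> threshold T is equally likely to be any integer 1..n,
                     and catastrophe occurs when T > total_abatement.
    """
    if certain:
        return 0.0 if total_abatement >= n else 1.0
    return (n - total_abatement) / n


def net_gain_from_abating(others: int, n: int, cost: float, loss: float, certain: bool) -> float:
    """Expected payoff change for one country that abates rather than shirks,
    given that `others` other countries abate."""
    payoff_shirk = -loss * p_catastrophe(others, n, certain)
    payoff_abate = -cost - loss * p_catastrophe(others + 1, n, certain)
    return payoff_abate - payoff_shirk


if __name__ == "__main__":
    N, COST, LOSS = 10, 2.0, 10.0  # hypothetical illustrative values
    # Every other country abates; does the last one want to join?
    print("certain threshold, others all abate:", net_gain_from_abating(N - 1, N, COST, LOSS, certain=True))
    print("uncertain threshold, others all abate:", net_gain_from_abating(N - 1, N, COST, LOSS, certain=False))
    # If every country reasons the same way and shirks, nobody abates.
    print("P(catastrophe) if nobody abates:", p_catastrophe(0, N, certain=False))
```

With these made-up numbers the script prints 8.0, then -1.0, then 1.0. When the threshold is known, joining averts the catastrophe outright, so it is worth more than it costs. When the threshold is uncertain, one country's abatement lowers the probability of catastrophe by only 1/N, buying L/N = 1 of expected protection for a cost of 2. Every country therefore shirks, and the catastrophe becomes certain.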
Nevertheless, when all countries face this same incentive and behave accordingly, efforts to avert a catastrophe decrease significantly, rendering a catastrophic outcome nearly certain; this is the current international political scenario regarding catastrophic climate risk. President Trump’s decision to withdraw from the Paris Agreement is symptomatic of the enormous challenges facing global cooperation. Indeed, there has been a slowdown in global intergovernmental cooperation, with a tendency towards abandoning legally binding quantitative targets; climate governance is now the result of bilateral and multilateral agreements, voluntary alliances, and city coalitions, as well as public-private partnerships. Climate change is a ‘super wicked’ problem, intricately linked to everything else: water, food, energy, land use, transportation, housing, development, trade, investment, security, etc. As a result, it seems inevitable that there is a lack of consensus regarding the distribution of rights and responsibilities among countries and that the governance of the problem has involved a number of different actors and passed through several stages (Gupta, 2016). However, although bottom-up initiatives are an important part of the solution, a global, legally binding agreement is fundamental if humanity is to reduce net GHG emissions to zero. Warming Turn---AT: Tech Solves Tech fails absent political will. Collapse has to happen this year; natural rates of tech adoption will take 400 years to reduce emissions. David Wallace-Wells 19. National Fellow at New America, deputy editor of New York Magazine. 02/19/2019. “III. The Climate Kaleidoscope.” The Uninhabitable Earth: Life After Warming, Crown/Archetype. The same can be said, believe it or not, for the much-heralded green energy “revolution,” which has yielded productivity gains in energy and cost reductions far beyond the predictions of even the most doe-eyed optimists, and yet has not even bent the curve of carbon emissions downward. 
We are, in other words, billions of dollars and thousands of dramatic breakthroughs later, precisely where we started when hippies were affixing solar panels to their geodesic domes. That is because the market has not responded to these developments by seamlessly retiring dirty energy sources and replacing them with clean ones. It has responded by simply adding the new capacity to the same system. Over the last twenty-five years, the cost per unit of renewable energy has fallen so far that you can hardly measure the price, today, using the same scales (since just 2009, for instance, solar energy costs have fallen more than 80 percent). Over the same twenty-five years, the proportion of global energy use derived from renewables has not grown an inch. Solar isn’t eating away at fossil fuel use, in other words, even slowly; it’s just buttressing it. To the market, this is growth; to human civilization, it is almost suicide. We are now burning 80 percent more coal than we were just in the year 2000. And energy is, actually, the least of it. As the futurist Alex Steffen has incisively put it, in a Twitter performance that functions as a “Powers of Ten” for the climate crisis, the transition from dirty electricity to clean sources is not the whole challenge. It’s just the lowest-hanging fruit: “smaller than the challenge of electrifying almost everything that uses power,” Steffen says, by which he means anything that runs on much dirtier gas engines. That task, he continues, is smaller than the challenge of reducing energy demand, which is smaller than the challenge of reinventing how goods and services are provided—given that global supply chains are built with dirty infrastructure and labor markets everywhere are still powered by dirty energy. There is also the need to get to zero emissions from all other sources—deforestation, agriculture, livestock, landfills. 
And the need to protect all human systems from the coming onslaught of natural disasters and extreme weather. And the need to erect a system of global government, or at least international cooperation, to coordinate such a project. All of which is a smaller task, Steffen says, “than the monumental cultural undertaking of imagining together a thriving, dynamic, sustainable future that feels not only possible, but worth fighting for.” On this last point I see things differently—the imagination isn’t the hard part, especially for those less informed about the challenges than Steffen is. If we could wish a solution into place by imagination, we’d have solved the problem already. In fact, we have imagined the solutions; more than that, we’ve even developed them, at least in the form of green energy. We just haven’t yet discovered the political will, economic might, and cultural flexibility to install and activate them, because doing so requires something a lot bigger, and more concrete, than imagination—it means nothing short of a complete overhaul of the world’s energy systems, transportation, infrastructure and industry and agriculture. Not to mention, say, our diets or our taste for Bitcoin. The cryptocurrency now produces as much CO2 each year as a million transatlantic flights. — We think of climate change as slow, but it is unnervingly fast. We think of the technological change necessary to avert it as fast-arriving, but unfortunately it is deceptively slow—especially judged by just how soon we need it. AT: Extinction No Nuke Winter---1NC Datasets prove there would be rainout because of chemical conversion to hydrophilic black carbon---eliminates the entire climate effect within days---and that’s an overestimate. Reisner et al. 18. 
Jon Reisner, atmospheric researcher at LANL Climate and Atmospheric Sciences; Gennaro D'Angelo, UKAFF Fellow and member of the Astrophysics Group at the School of Physics of the University of Exeter, Research Scientist with the Carl Sagan Center at the SETI Institute, currently works for the Los Alamos National Laboratory Theoretical Division; Eunmo Koo, scientist in the Computational Earth Science Group at LANL, recipient of the NNSA Defense Program Stockpile Stewardship Program award of excellence; Wesley Even, R&D Scientist at CCS-2, LANL, specialist in computational physics and astrophysics; Matthew Hecht is a member of the Computational Physics and Methods Group in the Climate, Ocean and Sea Ice Modelling program (COSIM) at LANL, who works on modeling high-latitude atmospheric effects in climate models as part of the HiLAT project; Elizabeth Hunke, Lead developer for the Los Alamos Sea Ice Model, Deputy Group Leader of the T-3 Fluid Dynamics and Solid Mechanics Group at LANL; Darin Comeau, Scientist at the CCS-2 COSIM program, specializes in high dimensional data analysis, statistical and predictive modeling, and uncertainty quantification, with particular applications to climate science; Randall Bos is a research scientist at LANL specializing in urban EMP simulations; James Cooley is a Group Leader within CCS-2. 03/16/2018. “Climate Impact of a Regional Nuclear Weapons Exchange: An Improved Assessment Based On Detailed Source Calculations.” Journal of Geophysical Research: Atmospheres, vol. 123, no. 5, pp. 2752–2772. *BC = Black Carbon The no-rubble simulation produces a significantly more intense fire, with more fire spread, and consequently a significantly stronger plume with larger amounts of BC reaching into the upper atmosphere than the simulation with rubble, illustrated in Figure 5. 
While the no-rubble simulation represents the worst-case scenario involving vigorous fire activity, only a relatively small amount of carbon makes its way into the stratosphere during the course of the simulation. But while small compared to the surface BC mass, stratospheric BC amounts from the current simulations are significantly higher than what would be expected from burning vegetation such as trees (Heilman et al., 2014): the higher energy density of the building fuels and the initial fluence from the weapon produce an intense response within HIGRAD, with initial updrafts of order 100 m/s in the lower troposphere. By comparison with a mass fire, wildfires burn only a small amount of fuel in the corresponding time period (roughly 10 minutes) in which a nuclear weapon’s fluence can effectively ignite a large area of fuel, producing an impressive atmospheric response. Figure 6 shows vertical profiles of BC multiplied by 100 (the number of cities involved in the exchange) from the two simulations. The total amount of BC produced is in line with previous estimates (about 3.69 Tg from the no-rubble simulation); however, the majority of BC resides below the stratosphere (3.46 Tg below 12 km) and can be readily impacted by scavenging from precipitation, either via pyro-cumulonimbus produced by the fire itself (not modeled) or via other synoptic weather systems. While the impact on climate of these more realistic profiles will be explored in the next section, it should be mentioned that these estimates are still at the high end, considering the inherent simplifications in the combustion model that lead to overestimating BC production. 3.3 Climate Results Long-term climatic effects critically depend on the initial injection height of the soot, with larger quantities reaching the upper troposphere/lower stratosphere inducing a greater cooling impact because of longer residence times (Robock et al., 2007a). 
Absorption of solar radiation by the BC aerosol and its subsequent radiative cooling tends to heat the surrounding air, driving an initial upward diffusion of the soot plumes, an effect that depends on the initial aerosol concentrations. Mixing and sedimentation tend to reduce this process, and low altitude emissions are also significantly impacted by precipitation if aging of the BC aerosol occurs on sufficiently rapid timescales. But once at stratospheric altitudes, aerosol dilution via coagulation is hindered by low particulate concentrations (e.g., Robock et al., 2007a) and lofting to much higher altitudes is inhibited by gravitational settling in the low-density air (Stenke et al., 2013), resulting in more stable BC concentrations over long times. Of the initial BC mass released in the atmosphere, most of which is emitted below 9 km, 70% rains out within the first month and 78%, or about 2.9 Tg, is removed within the first two months (Figure 7, solid line), with the remainder (about 0.8 Tg, dashed line) being transported above about 12 km (200 hPa) within the first week. This outcome differs from the findings of, e.g., Stenke et al. (2013, their high BC-load cases) and Mills et al. (2014), who found that most of the BC mass (between 60 and 70%) is lifted in the stratosphere within the first couple of weeks. This can also be seen in Figure 8 (red lines) and in Figure 9, which include results from our calculation with the initial BC distribution from Mills et al. (2014). In that case, only 30% of the initial BC mass rains out in the troposphere during the first two weeks after the exchange, with the remainder rising to the stratosphere. In the study of Mills et al. (2008) this percentage is somewhat smaller, about 20%, and smaller still in the experiments of Robock et al. (2007a) in which the soot is initially emitted in the upper troposphere or higher. 
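The mass budget in these two paragraphs can be checked by simple arithmetic. This sketch uses only the figures quoted in the card: 3.69 Tg total, 3.46 Tg below 12 km, 70% removed in the first month and 78% in the first two months. The helper name `split_mass` is hypothetical. The check confirms only that the quoted numbers are mutually consistent, not the model that produced them.

```python
# Figures quoted in the Reisner et al. (2018) card (no-rubble case, 100 cities).
TOTAL_BC_TG = 3.69       # total black carbon produced
BELOW_12KM_TG = 3.46     # portion initially below 12 km
REMOVED_1_MONTH = 0.70   # fraction rained out within the first month
REMOVED_2_MONTHS = 0.78  # fraction removed within the first two months


def split_mass(total_tg: float, removed_fraction: float) -> tuple[float, float]:
    """Return (removed, remaining) black-carbon mass in Tg for a given removed fraction."""
    removed = total_tg * removed_fraction
    return removed, total_tg - removed


if __name__ == "__main__":
    print(f"initially above 12 km: {TOTAL_BC_TG - BELOW_12KM_TG:.2f} Tg")
    for label, frac in (("1 month", REMOVED_1_MONTH), ("2 months", REMOVED_2_MONTHS)):
        removed, remaining = split_mass(TOTAL_BC_TG, frac)
        print(f"after {label}: {removed:.2f} Tg removed, {remaining:.2f} Tg left")
```

Run as a script, it reports 0.23 Tg initially above 12 km. After two months it shows about 2.88 Tg removed and 0.81 Tg remaining, matching the card's rounded "about 2.9 Tg" and "about 0.8 Tg".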
In Figure 7, the e-folding timescale for the removal of tropospheric soot, here interpreted as the time required for an initial drop of a factor e, is about one week. This result compares favorably with the “LT” experiment of Robock et al. (2007a), considering 5 Tg of BC released in the lower troposphere, in which 50% of the aerosols are removed within two weeks. By contrast, the initial e-folding timescale for the removal of stratospheric soot in Figure 8 is about 4.2 years (blue solid line), compared to about 8.4 years for the calculation using Mills et al. (2014) initial BC emission (red solid line). The removal timescale from our forced ensemble simulations is close to those obtained by Mills et al. (2008) in their 1 Tg experiment, by Robock et al. (2007a) in their experiment “UT 1 Tg”, and by Stenke et al. (2013) in their experiment “Exp1”, in all of which 1 Tg of soot was emitted in the atmosphere in the aftermath of the exchange. Notably, the e-folding timescale for the decline of the BC mass in Figure 8 (blue solid line) is also close to the value of about 4 years quoted by Pausata et al. (2016) for their long-term “intermediate” scenario. In that scenario, which is also based on 5 Tg of soot initially distributed as in Mills et al. (2014), the factor-of-2 shorter residence time of the aerosols is caused by particle growth via coagulation of BC with organic carbon. Figure 9 shows the BC mass-mixing ratio, horizontally averaged over the globe, as a function of atmospheric pressure (height) and time. The BC distributions used in our simulations imply that the upward transport of particles is substantially less efficient compared to the case in which 5 Tg of BC is directly injected into the upper troposphere. The semiannual cycle of lofting and sinking of the aerosols is associated with atmospheric heating and cooling during the solstice in each hemisphere (Robock et al., 2007a). © 2018 American Geophysical Union. All rights reserved. 
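The e-folding language can be unpacked with a short calculation. For illustration only, the sketch assumes that removal follows a single exponential, M(t) = M0 * exp(-t / tau). The card calls these *initial* timescales, so real removal need not stay exponential. Under that assumption, the code converts the quoted tau values into half-lives and remaining fractions. The function names are hypothetical.

```python
import math


def remaining_fraction(t: float, tau: float) -> float:
    """Fraction of the initial soot mass left after time t,
    assuming single-exponential decay M(t) = M0 * exp(-t / tau)."""
    return math.exp(-t / tau)


def half_life(tau: float) -> float:
    """Time for the mass to halve under the same assumption: tau * ln 2."""
    return tau * math.log(2)


# e-folding timescales quoted in the card
TAU_TROPOSPHERE_WEEKS = 1.0    # tropospheric soot (Figure 7)
TAU_STRAT_FORCED_YEARS = 4.2   # stratospheric soot, forced ensemble (Figure 8, blue)
TAU_STRAT_MILLS_YEARS = 8.4    # stratospheric soot, Mills et al. (2014) profile (Figure 8, red)

if __name__ == "__main__":
    left = remaining_fraction(2.0, TAU_TROPOSPHERE_WEEKS)
    print(f"troposphere: {left:.1%} of the soot left after two weeks")
    for label, tau in (("forced ensemble", TAU_STRAT_FORCED_YEARS),
                       ("Mills et al. profile", TAU_STRAT_MILLS_YEARS)):
        print(f"{label}: half-life {half_life(tau):.1f} yr, "
              f"{remaining_fraction(5.0, tau):.0%} left after 5 yr")
```

Under this assumption, a one-week e-folding time leaves about 13.5% of the tropospheric soot after two weeks. Half of it is gone in about 0.7 weeks, which is consistent with the Robock et al. (2007a) figure of 50% removed within two weeks. The two stratospheric cases have half-lives of roughly 2.9 and 5.8 years, since doubling tau doubles the half-life.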
During the first year, the oscillation amplitude in our forced ensemble simulations is particularly large during the summer solstice, compared to that during the winter solstice (see bottom panel of Figure 9), because of the higher soot concentrations in the Northern Hemisphere, as can be seen in Figure 11 (see also left panel of Figure 12). Comparing the top and bottom panels of Figure 9, the BC reaches the highest altitudes during the first year in both cases, but the concentrations at 0.1 hPa in the top panel can be 200 times as large. Qualitatively, the difference can be understood in terms of the air temperature increase caused by BC radiation emission. Because of high BC concentrations, this increase is several tens of kelvins in the simulations of Robock et al. (2007a, see their Figure 4), Mills et al. (2008, see their Figure 5), Stenke et al. (2013, see high-load cases in their Figure 4), Mills et al. (2014, see their Figure 7), and Pausata et al. (2016, see one-day emission cases in their Figure 1), but it amounts to only about 10 K in our forced ensemble simulations, as illustrated in Figure 10. Results similar to those presented in Figure 10 were obtained from the experiment “Exp1” performed by Stenke et al. (2013, see their Figure 4). In that scenario as well, somewhat less than 1 Tg of BC remained in the atmosphere after the initial rainout. As mentioned before, the BC aerosol that remains in the atmosphere, lifted to stratospheric heights by the rising soot plumes, undergoes sedimentation over a timescale of several years (Figures 8 and 9). This mass represents the effective amount of BC that can force climatic changes over multi-year timescales. In the forced ensemble simulations, it is about 0.8 Tg after the initial rainout, whereas it is about 3.4 Tg in the simulation with an initial soot distribution as in Mills et al. (2014). 
Our more realistic source simulation involves the worst-case assumption of no-rubble (along with other assumptions) and hence serves as an upper bound for the impact on climate. As mentioned above and further discussed below, our scenario induces perturbations on the climate system similar to those found in previous studies in which the climatic response was driven by roughly 1 Tg of soot rising to stratospheric heights following the exchange. Figure 11 illustrates the vertically integrated mass-mixing ratio of BC over the globe, at various times after the exchange for the simulation using the initial BC distribution of Mills et al. (2014, upper panels) and as an average from the forced ensemble members (lower panels). All simulations predict enhanced concentrations at high latitudes during the first year after the exchange. In the cases shown in the top panels, however, these high concentrations persist for several years (see also Figure 1 of Mills et al., 2014), whereas the forced ensemble simulations indicate that the BC concentration starts to decline after the first year. In fact, in the simulation represented in the top panels, mass-mixing ratios larger than about 1 kg of BC per Tg of air persist for well over 10 years after the exchange, whereas they only last for 3 years in our forced simulations (compare top and middle panels of Figure 9). After the first year, values drop below 3 kg BC/Tg air, whereas it takes about 8 years to reach these values in the simulation in the top panels (see also Robock et al., 2007a). Over crop-producing, midlatitude regions in the Northern Hemisphere, the BC loading is reduced from more than 0.8 kg BC/Tg air in the simulation in the top panels to 0.2-0.4 kg BC/Tg air in our forced simulations (see middle and right panels). 
The more rapid clearing of the atmosphere in the forced ensemble is also signaled by the soot optical depth in the visible radiation spectrum, which drops below values of 0.03 toward the second half of the first year at mid latitudes in the Northern Hemisphere, and everywhere on the globe after about 2.5 years (without ever attaining this value in the Southern Hemisphere). In contrast, the soot optical depth in the calculation shown in the top panels of Figure 11 becomes smaller than 0.03 everywhere only after about 10 years. The two cases show a similar tendency, in that the BC optical depth is typically lower between latitudes 30º S-30º N than it is at other latitudes. This behavior is associated with the persistence of stratospheric soot toward high latitudes and the Arctic/Antarctic regions, as illustrated by the zonally averaged, column-integrated mass-mixing ratio of the BC in Figure 12 for both the forced ensemble simulations (left panel) and the simulation with an initial 5 Tg BC emission in the upper troposphere (right panel). The spread in the globally averaged (near) surface temperature of the atmosphere, from the control (left panel) and forced (right panel) ensembles, is displayed in Figure 13. For each month, the plots show the largest variations (i.e., maximum and minimum values), within each ensemble of values obtained for that month, relative to the mean value of that month. The plot also shows yearly-averaged data (thinner lines). The spread is comparable in the control and forced ensembles, with average values of 0.4-0.5 K calculated over the 33-year run length. This spread is also similar to the internal variability of the globally averaged surface temperature quoted for the NCAR Large Ensemble Community Project (Kay et al., 2015). 
These results imply that surface air temperature differences, between forced and control simulations, which lie within the spread may not be distinguished from effects due to internal variability of the two simulation ensembles. Figure 14 shows the difference in the globally averaged surface temperature of the atmosphere (top panel), net solar radiation flux at surface (middle panel), and precipitation rate (bottom panel), computed as the (forced minus control) difference in ensemble mean values. The sum of standard deviations from each ensemble is shaded. Differences are qualitatively significant over the first few years, when the anomalies lie near or outside the total standard deviation. Inside the shaded region, differences may not be distinguished from those arising from the internal variability of one or both ensembles. The surface solar flux (middle panel) is the quantity that appears most affected by the BC emission, with qualitatively significant differences persisting for about 5 years. The precipitation rate (bottom panel) is instead affected only at the very beginning of the simulations. The red lines in all panels show the results from the simulation applying the initial BC distribution of Mills et al. (2014), where the period of significant impact is much longer owing to the higher altitude of the initial soot distribution that results in longer residence times of the BC aerosol in the atmosphere. When yearly averages of the same quantities are performed over the India-Pakistan region, the differences in ensemble mean values lie within the total standard deviations of the two ensembles. The results in Figure 14 can also be compared to the outcomes of other previous studies. In their experiment “UT 1 Tg”, Robock et al. (2007a) found that, when only 1 Tg of soot
remains in the atmosphere after the initial rainout, temperature and precipitation anomalies are about 20% of those obtained from their standard 5 Tg BC emission case. Therefore, the largest differences they observed, during the first few years after the exchange, were about -0.3 K and -0.06 mm/day, respectively, comparable to the anomalies in the top and bottom panels of Figure 14. Their standard 5 Tg emission case resulted in a solar radiation flux anomaly at surface of -12 W/m² after the second year (see their Figure 3), between 5 and 6 times as large as the corresponding anomalies from our ensembles shown in the middle panel. In their experiment “Exp1”, Stenke et al. (2013) reported global mean surface temperature anomalies not exceeding about 0.3 K in magnitude and precipitation anomalies hovering around -0.07 mm/day during the first few years, again consistent with the results of Figure 14. In a recent study, Pausata et al. (2016) considered the effects of an admixture of BC and organic carbon aerosols, both of which would be emitted in the atmosphere in the aftermath of a nuclear exchange. In particular, they concentrated on the effects of coagulation of these aerosol species and examined their climatic impacts. The initial BC distribution was as in Mills et al. (2014), although the soot burden was released in the atmosphere over time periods of various lengths. Most relevant to our and other previous work are their one-day emission scenarios. They found that, during the first year, the largest values of the atmospheric surface temperature anomalies ranged between about -0.5 and -1.3 K, those of the sea surface temperature anomalies ranged between -0.2 and -0.55 K, and those of the precipitation anomalies varied between -0.15 and -0.2 mm/day. All these ranges are compatible with our results shown in Figure 14 as red lines and with those of Mills et al. (2014, see their Figures 3 and 6). 
As already mentioned in Section 2.3, the net solar flux anomalies at surface are also consistent. This overall agreement suggests that the inclusion of organic carbon aerosols, and ensuing coagulation with BC, should not dramatically alter the climatic effects resulting from our forced ensemble simulations. Moreover, aerosol growth would likely shorten the residence time of the BC particulate in the atmosphere (Pausata et al., 2016), possibly reducing the duration of these effects. Atmospheric testing by the DOD disproves---regardless of model uncertainty. Frankel et al. 15. Dr. Michael J. Frankel is a senior scientist at Penn State University’s Applied Research Laboratory, where he focuses on nuclear treaty verification technologies, is one of the nation’s leading experts on the effects of nuclear weapons, executive director of the Congressional Commission to Assess the Threat to the United States from Electromagnetic Pulse Attack, led development of fifteen-year global nuclear threat technology projections and infrastructure vulnerability assessments; Dr. James Scouras is a national security studies fellow at the Johns Hopkins University Applied Physics Laboratory and the former chief scientist of DTRA’s Advanced Systems and Concepts Office; Dr. George W. Ullrich is chief technology officer at Schafer Corporation and formerly senior vice president at Science Applications International Corporation (SAIC), currently serves as a special advisor to the USSTRATCOM Strategic Advisory Group’s Science and Technology Panel and is a member of the Air Force Scientific Advisory Board. 04-15-15. “The Uncertain Consequences of Nuclear Weapons Use.” The Johns Hopkins University Applied Physics Laboratory. DTIC. https://apps.dtic.mil/dtic/tr/fulltext/u2/a618999.pdf Scientific work based on real data, rather than models, also cast additional doubt on the basic premise. 
Interestingly, publication of several contradictory papers describing experimental observations actually predated Schell’s work. In 1973, nine years before publication of The Fate of the Earth, a published report failed to find any ozone depletion during the peak period of atmospheric nuclear testing.26 In another work published in 1976, attempts to measure the actual ozone depletion associated with Russian megaton-class detonations and Chinese nuclear tests were also unable to detect any significant effect.27 At present, with the reduced arsenals and a perceived low likelihood of a large-scale exchange on the scale of Cold War planning scenarios, official concern over nuclear ozone depletion has essentially fallen off the table. Yet continuing scientific studies by a small dedicated community of researchers suggest the potential for dire consequences, even for relatively small regional nuclear wars involving Hiroshima-size bombs. Nuclear Winter The possibility of catastrophic climate changes came as yet another surprise to Department of Defense scientists. In 1982, Crutzen and Birks highlighted the potential effects of high-altitude smoke on climate,29 and in 1983, a research team consisting of Turco, Toon, Ackerman, Pollack, and Sagan (referred to as TTAPS) suggested that a five-thousand-megaton strategic exchange of weapons between the United States and the Soviet Union could effectively spell national suicide for both belligerents.30 They argued that a massive nuclear exchange between the United States and the Soviet Union would inject copious amounts of soot, generated by massive firestorms such as those witnessed in Hiroshima, into the stratosphere where it might reside indefinitely. Additionally, the soot would be accompanied by dust swept up in the rising thermal column of the nuclear fireball. 
The combination of dust and soot could scatter and absorb sunlight to such an extent that much of Earth would be engulfed in darkness sufficient to cease photosynthesis. Unable to sustain agriculture for an extended period of time, much of the planet’s population would be doomed to perish, and—in its most extreme rendition—humanity would follow the dinosaurs into extinction and by much the same mechanism.31 Subsequent refinements by the TTAPS authors, such as an extension of computational efforts to three-dimensional models, continued to produce qualitatively similar results. The TTAPS results were severely criticized, and a lively debate ensued between passionate critics of and defenders of the analysis. Some of the technical objections critics raised included the TTAPS team’s neglect of the potentially significant role of clouds;32 lack of an accurate model of coagulation and rainout;33 inaccurate capture of feedback mechanisms;34 “fudge factor” fits of micrometer-scale physical processes assumed to hold constant for changed atmospheric chemistry conditions and uniformly averaged on a grid scale of hundreds of kilometers;35 the dynamics of firestorm formation, rise, and smoke injection;36 and estimates of the optical properties and total amount of fuel available to generate the assumed smoke loading. In particular, more careful analysis of the range of uncertainties associated with the widely varying published estimates of fuel quantities and properties suggested a possible range of outcomes encompassing much milder impacts than anything predicted by TTAPS.37 Aside from the technical issues critics raised, the five-thousand-megaton baseline exchange scenario TTAPS envisioned was rendered obsolete when the major powers decreased both their nuclear arsenals and the average yield of the remaining weapons. 
With the demise of the Soviet Union, the nuclear winter issue essentially fell off the radar screen for Department of Defense scientists, which is not to say that it completely disappeared from the scientific literature. In the last few years, a number of analysts, including some of the original TTAPS authors, suggested that even a “modest” regional exchange of nuclear weapons—one hundred explosions of fifteen-kiloton devices in an Indian–Pakistani exchange scenario—might yet produce significant worldwide climate effects, if not the full-blown “winter.”38 However, such concerns have failed to gain much traction in Department of Defense circles. Nuclear winter is a KGB invention---defectors prove---<<AND, the agent who leaked this was assassinated recently! Spookie>> James Slate 18. Citing Stanislav Lunev, GRU officer, and KGB officer Sergei Tretyakov, 1-29-2018. "How the Soviet Union helped shape the modern peace Movement." Medium. https://medium.com/@JSlate__/how-the-soviet-union-helped-shape-the-modern-peace-movementd797071d4b2c In 1951 the House Committee on Un-American Activities published The Communist “Peace” Offensive, which detailed the activities of the WPC and of numerous affiliated organisations. It listed dozens of American organisations and hundreds of Americans who had been involved in peace meetings, conferences and petitions. It noted, “that some of the persons who are so described in either the text or the appendix withdrew their support and/or affiliation with these organizations when the Communist character of these organizations was discovered. 
There may also be persons whose names were used as sponsors or affiliates of these organizations without permission or knowledge of the individuals involved.” Russian GRU defector Stanislav Lunev said in his autobiography that “the GRU and the KGB helped to fund just about every antiwar movement and organization in America and abroad,” and that during the Vietnam War the USSR gave $1 billion to American anti-war movements, more than it gave to the Viet Cong, although he does not identify any organisation by name. Lunev described this as a “hugely successful campaign and well worth the cost”. The former KGB officer Sergei Tretyakov said that the Soviet Peace Committee funded and organized demonstrations in Europe against US bases. According to Time magazine, a US State Department official estimated that the KGB may have spent $600 million on the peace offensive up to 1983, channeling funds through national Communist parties or the World Peace Council “to a host of new antiwar organizations that would, in many cases, reject the financial help if they knew the source.” Richard Felix Staar in his book Foreign Policies of the Soviet Union says that non-communist peace movements without overt ties to the USSR were “virtually controlled” by it. Lord Chalfont claimed that the Soviet Union was giving the European peace movement £100 million a year. The Federation of Conservative Students (FCS) also alleged Soviet funding of the CND. In 1982 the Heritage Foundation published Moscow and the Peace Offensive, which said that non-aligned peace organizations advocated similar policies on defence and disarmament to the Soviet Union. It argued that “pacifists and concerned Christians had been drawn into the Communist campaign largely unaware of its real sponsorship.” U.S. 
plans in the late 1970s and early 1980s to deploy Pershing II missiles in Western Europe in response to the Soviet SS-20 missiles were contentious, prompting Paul Nitze, the American negotiator, to suggest a compromise plan for nuclear missiles in Europe in the celebrated “walk in the woods” with Soviet negotiator Yuli Kvitsinsky, but the Soviets never responded. Kvitsinsky would later write that, despite his efforts, the Soviet side was not interested in compromise, calculating instead that peace movements in the West would force the Americans to capitulate. In November 1981, Norway expelled a suspected KGB agent who had offered bribes to Norwegians to get them to write letters to newspapers denouncing the deployment of new NATO missiles. In 1985 Time magazine noted “the suspicions of some Western scientists that the nuclear winter hypothesis was promoted by Moscow to give antinuclear groups in the U.S. and Europe some fresh ammunition against America’s arms buildup.” Sergei Tretyakov claimed that the data behind the nuclear winter scenario was faked by the KGB and spread in the west as part of a campaign against Pershing II missiles. He said that the first peer-reviewed paper in the development of the nuclear winter hypothesis, “Twilight at Noon” by Paul Crutzen and John Birks, was published as a result of this KGB influence.
Even if not a total hoax, it is strongly politicized---empirically disproven by the Kuwaiti oil fires---their studies have been tested multiple times, and unbiased analysts proved it was for political reasons
S. Fred Singer 18. Professor emeritus at the University of Virginia and a founding director and now chairman emeritus of the Science & Environmental Policy Project, specialist in atmospheric and space physics, founding director of the U.S. Weather Satellite Service, now part of NOAA, served as vice chair of the U.S.
National Advisory Committee on Oceans & Atmosphere, an elected fellow of several scientific societies, including APS, AGU, AAAS, AIAA, Sigma Xi, and Tau Beta Pi, and a senior fellow of the Heartland Institute and the Independent Institute. 6-27-2018. "Remember Nuclear Winter?" American Thinker. https://www.americanthinker.com/articles/2018/06/remember_nuclear_winter.html
Nuclear Winter burst on the academic scene in December 1983 with the publication of the hypothesis in the prestigious journal Science. It was accompanied by a study by Paul Ehrlich, et al. that hinted that it might cause the extinction of human life on the planet. The five authors of the Nuclear Winter hypothesis were labeled TTAPS, using the initials of their family names (T stands for Owen Toon and P stands for Jim Pollack, both Ph.D. students of Carl Sagan at Cornell University.) Carl Sagan himself was the main author and driving force. Actually, Sagan had scooped the Science paper by publishing the gist of the hypothesis in Parade magazine, which claimed a readership of 50 million! Previously, Sagan had briefed people in public office and elsewhere, so they were all primed for the popular reaction, which was tremendous. Many of today's readers may not remember Carl Sagan. He was a brilliant astrophysicist but also highly political. Imagine Al Gore, but with an excellent science background. Sagan had developed and narrated a television series called Cosmos that popularized astrophysics and much else, including cosmology, the history of the universe. He even suggested the possible existence of extraterrestrial intelligence and started a listening project called SETI (Search for Extraterrestrial Intelligence). SETI is still searching today and has not found any evidence so far. Sagan became a sort of icon; many people in the U.S. and abroad knew his name and face. Carl Sagan also had another passion: saving humanity from a general nuclear war, a laudable aim.
He had been arguing vigorously and publicly for a "freeze" on the production of more nuclear weapons. President Ronald Reagan outdid him and negotiated a nuclear weapons reduction with the USSR. In the meantime, much excitement was stirred up by Nuclear Winter. Study after study tried to confirm and expand the hypothesis, led by the Defense Department (DOD), which took the hypothesis seriously and spent millions of dollars on various reports that accepted Nuclear Winter rather uncritically. The National Research Council (NRC) of the National Academy of Sciences published a report that put in more quantitative detail. It enabled critics of the hypothesis to find flaws – and many did. The names Russell Seitz, Dick Wilson (both of Cambridge, Mass.), Steve Schneider (Palo Alto, Calif.), and Bob Ehrlich (Fairfax, Va.) (no relation to Paul Ehrlich) come to mind. The hypothesis was really "politics disguised as science." The whole TTAPS scheme was contrived to deliver the desired consequence. It required the smoke layer to be of just the right thickness, covering the whole Earth, and lasting for many months. The Kuwait oil fires in 1991 produced a lot of smoke, but it rained out after a few days. I had a mini-debate with Sagan on the TV program Nightline and published a more critical analysis of the whole hypothesis in the journal Meteorology & Atmospheric Physics. I don't know if Carl ever saw my paper. But I learned a lot from doing this analysis that was useful in later global warming research. For example, the initial nuclear bursts inject water vapor into the stratosphere, which turns into contrail-like cirrus clouds. That actually leads to a strong initial warming and a "nuclear summer."
2NC---No Nuke Winter---AT: Extinction
Even if nuke winter is true, it doesn’t cause extinction, even under worst-case assumptions.
Andrew Yoram Glikson 17. School of Archaeology and Anthropology @ Australian National University. 2017.
“The Event Horizon.” The Plutocene: Blueprints for a Post-Anthropocene Greenhouse Earth, Springer, Cham, pp. 83–96. link.springer.com, doi:10.1007/978-3-319-57237-6_3.
As these lines are written some 16,000 N-bombs are poised available for a suicidal “use them or lose them” strategy where perhaps some 50% may be dispatched, enough to kill billions. Much would depend on the sequence of events: following a short nuclear winter, survivors would emerge into a heating world, or an AMOC collapse²⁸ taking place either before or after a nuclear event. The collapse of industry and transport systems will lead to a decline in CO2 emissions. The increasing likelihood of global conflict and a consequent nuclear winter would constitute a coup de grâce for industrial civilization (Carl Sagan²⁹) though not necessarily for humans. Relatively low temperatures in the northern latitudes would allow humans to survive, in particular indigenous people genetically and culturally adapted to extreme conditions. Perhaps similar conditions could apply in high mountain valleys and elevated tropical islands. Vacated habitats would be occupied by burrowing animals and by radiation- and temperature-resistant arthropods.
We don’t need to win much to outweigh---100 survivors could reboot civilization.
Alexey Turchin & David Denkenberger 18. Turchin is a researcher at the Science for Life Extension Foundation; Denkenberger is with the Global Catastrophic Risk Institute (GCRI) @ Tennessee State University, Alliance to Feed the Earth in Disasters (ALLFED). 09/2018. “Global Catastrophic and Existential Risks Communication Scale.” Futures, vol. 102, pp. 27–38.
“Civilizational collapse risks” As most human societies are fairly complex, a true civilizational collapse would require a drastic reduction in human population, and the break-down of connections between surviving populations.
Survivors would have to rebuild civilization from scratch, likely losing much technological ability and knowledge in the process. Hanson (2008) estimated that the minimal human population able to survive is around 100 people. Like X risks, there is little agreement on what is required for civilizational collapse. Clearly, different types and levels of the civilizational collapse are possible (Diamond, 2005) (Meadows, Randers, & Meadows, 2004). For instance, one definition of the collapse of civilization involves collapse of long-distance trade, widespread conflict, and loss of government (Coates, 2009). How such collapses relate to existential risk needs more research.
Read their studies skeptically---most say famines would kill “billions” and some casually assert everyone would die---BUT, that’s an assumption not warranted by their studies---far more likely that some remnants would survive.
David S. Stevenson 17. Professor of planetary science at Caltech. 2017. “Agents of Mass Destruction.” The Nature of Life and Its Potential to Survive, Springer, Cham, pp. 273–340. link.springer.com, doi:10.1007/978-3-319-52911-0_7.
What of humanity? Could it survive? In short, yes, if we are prepared to adapt to a life underground. Here, small communities of people could live on, feeding directly from the remnant biosphere, or from artificially lit greenhouse-cultivated plants. Humanity could persist in a vast underground ark. Here we could continue as a subterranean species, living for billions of years. Life could even become pleasant with enough sub-surface engineering. However, escape would only be permissible if we maintained sufficient technology to reach and re-colonize the frigid surface. With far more limited resources, and with most people likely having been wiped out in the initial freeze, the number of survivors in such caves might be measured in the hundreds.
Survival of humanity would depend on whoever survived by maintaining a power supply, having food reserves, water reserves and seeds. If you could not maintain the food supply, most survivors would die of starvation within weeks of moving underground.
AT: Chalko
Chalko is a hack and is wrong about earthquakes---proves we shouldn’t trust him about nuclear war. Chalko also flips neg---he thinks warming will make the earth explode, and the only way to slow warming is through spark
Wesley J. Smith 08 [6-19-2008, "The Politicization of Science or the Stupidity of Media?", National Review, https://www.nationalreview.com/human-exceptionalism/politicization-science-or-stupiditymedia-wesley-j-smith/, Wesley J. Smith is an author and a senior fellow at the Discovery Institute’s Center on Human Exceptionalism.]
This blog does not deal with global warming or climate change. But it does deal with the corruption of science that comes from overt politicization of science papers and advocacy. And this one might just take the cake. According to one scientist, the world is experiencing an increase in destructive seismic activity–and it is due to global warming! From the story on the CBS Website: New research compiled by Australian scientist Dr. Tom Chalko shows that global seismic activity on Earth is now five times more energetic than it was just 20 years ago. The research proves that destructive ability of earthquakes on Earth increases alarmingly fast and that this trend is set to continue, unless the problem of “global warming” is comprehensively and urgently addressed. What? How could a degree or two of warming in the last 100 years cause earthquakes? “NASA measurements from space confirm that Earth as a whole absorbs at least 0.85 Megawatt per square kilometer more energy from the Sun than it is able to radiate back to space.
This ‘thermal imbalance’ means that heat generated in the planetary interior cannot escape and that the planetary interior must overheat. Increase in seismic, tectonic and volcanic activities is an unavoidable consequence of the observed thermal imbalance of the planet,” said Dr. [Tom] Chalko. Dr. Chalko has urged other scientists to maximize international awareness of the rapid increase in seismic activity, pointing out that this increase is not theoretical but that it is an Observable Fact. “Unless the problem of global warming (the problem of persistent thermal imbalance of Earth) is addressed urgently and comprehensively – the rapid increase in global seismic, volcanic and tectonic activity is certain. Consequences of inaction can only be catastrophic. There is no time for half-measures.” I would say this is a hoax, but it appeared in the AP so it must be true! Moreover, Dr. Chalko is real. Well, he’s really far out. He’s into space aliens, auras, and believes that global warming could cause the earth to explode. Now space aliens and auras might be real, but someone who promotes their existence and worries that thermal imbalance will cause the earth to explode is hardly an “expert” whose opinions should be quoted by the AP and reported on CBS on global warming! When the media want something to be true–they will print anything! In fact, the AP apparently copied the story whole from Dr. Chalko’s Web page. Or, it wasn’t AP at all and CBS was fooled. Check it out!
He assumes neutron bombs---nobody has those except maybe Israel---ERW = Enhanced Radiation Weapon, aka neutron bomb
Tim Cole 16 [3-12-2016, "Are neutron bombs, which kill people without damaging property, still part of major powers nuclear arsenals?", Quora, https://www.quora.com/Are-neutron-bombs-which-killpeople-without-damaging-property-still-part-of-major-powers-nuclear-arsenals, Tim Cole is a military history enthusiast, interested in the history of nuclear energy, specializing in nuclear weaponry]
Now that all that's out of the way, on to your primary question. It's very difficult to know exactly what's in nuclear weapons storage bunkers. This sort of thing is highly classified. From the public information I can find, the US does not keep ERW weapons in their active stockpile, and neither does the UK. I don't think France does, but I'm not as confident about that assertion. It doesn't appear that Russia has active ERWs, either, though that's a tougher thing to know. Their security apparatus covers a lot more military activities than ours does. As to whether China has ERWs, that's even harder to track down. From what little I can read, it doesn't appear they do, but I can guarantee you this: if China wants ERWs, they will have them. It won't take long, either. Israel is widely thought to have at least some ERWs. Of course, none but a very few people know for certain what Israel has in its arsenal. India and Pakistan? I very much doubt they have ERWs. North Korea? Not likely.
AT: Disease/Famine Checks
Won’t lower population enough---history proves
Joseph George Caldwell 18 [5-15-2018, "Is Nuclear War Survivable?", Foundation, http://foundationwebsite.org/IsNuclearWarSurvivable.htm, Caldwell is a Consultant in Statistics, Economics, Information Technology and Operations Research, but let’s face it, you probably already know what’s going on here]
Damage to the biosphere caused by large human numbers and industrial production is not the only existential threat to humanity. Other existential threats include disease – both for human beings and their plant and animal food sources – and nuclear war. (This article does not address existential threats over which mankind has no control, such as collision with a large asteroid.) History shows that the human species is incredibly resistant to disease. Whatever new disease comes along may kill a large proportion of the population, but no large population is ever wiped out. That is not to say it might not happen – it just appears, based on history, that it is not likely. With the advent of mass transportation and commerce on a global scale, foreign species have been introduced around the world, and are wreaking havoc with once-stable environments. Ecological events such as these are routinely treated with pesticides and genetic modification. They may even contribute to starvation and famine, but historically they have not had an appreciable effect on reducing total human numbers and industrial production. Based on history, it appears that disease and pestilence are not serious existential threats to mankind. The world’s population has been operating in a highly intermingling mode for some time, and collapse has not occurred. Of the various threats to mankind’s existence, it appears that just one, nuclear war, is a serious threat.
AT: Environment
We’ll turn it---even if there is a risk nuclear attacks destroy the environment, industrialism guarantees environmental collapse---it’s try-or-die for spark.
Nukes don’t irreversibly harm the environment
Michael J. Lawrence et al. 15 [8-14-2015, “The effects of modern war and military activities on biodiversity and the environment”, National Research Council Research Press, p. 6, https://www.researchgate.net/publication/283197996_The_effects_of_modern_war_and_military_activities_on_biodiversity_and_the_environment; M.J. Lawrence, A.J. Zolderdo, D.P. Struthers, and S.J. Cooke, Fish Ecology and Conservation Physiology Laboratory, Department of Biology and Institute of Environmental Science, Carleton University. H.L.J. Stemberger, Department of Biology, Carleton University]
Both thermal and kinetic impacts of a nuclear detonation occur over an acute timeframe and would likely result in a great reduction in the abundances and diversity of local flora and fauna. However, over a more chronic duration, these impacts are likely to be minimal as populations and diversity could recover through dispersal to the area as well as contributions from surviving organisms. Indeed, this has been observed in a number of plant (Palumbo 1962; Shields and Wells 1962; Shields et al. 1963; Beatley 1966; Fosberg 1985; Hunter 1991, 1992) and animal (Jorgensen and Hayward 1965; O’Farrell 1984; Hunter 1992; Wills 2001; Kolesnikova et al. 2005; Pinca et al. 2005; Planes et al. 2005; Richards et al. 2008; Houk and Musburger 2013) communities from a diversity of testing site environments. In some instances, the exclusion of human activity from test sites has been quite beneficial to the recovery and prosperity of organisms found in these areas, as in the case of the atolls of the Marshall Islands (see Fig. 2 [omitted]; Davis 2007; Richards et al. 2008; Houk and Musburger 2013).
AT: EMP
Fake news
Alice Friedemann 17. Author of “When Trucks Stop Running: Energy and the Future of Transportation”, 2015, Springer and “Crunch! Whole Grain Artisan Chips and Crackers”.
Podcasts: Derrick Jensen, Practical Prepping, KunstlerCast 253, KunstlerCast 278, Peak Prosperity, XX2 report. 12-20-17. “Dangers of EMP exaggerated?” http://energyskeptic.com/2017/dangers-of-emp-exaggerated/
Warnings that North Korea could detonate a nuclear bomb in orbit to knock out US electrical infrastructure are far-fetched, says arms expert. There is no shame in enjoying dystopian science fiction – it helps us contemplate the ways in which civilisation might fail. But it is dangerous to take the genre’s allegorical warnings literally, as too many people do when talk turns to a possible electromagnetic pulse (EMP) attack. There have been repeated recent claims that North Korea could use a nuclear bomb in space to produce an EMP to ruin US infrastructure and cause societal collapse. This is silly. We know a nuclear explosion can cause an EMP – a burst of energy that can interfere with electrical systems – because of a 1962 US test called Starfish Prime. US nuclear weaponeers wanted to see if it was capable of blacking out military equipment. A bomb was launched to 400 kilometres above the Pacific before exploding with the force of 1.5 megatons of TNT. But it was a let-down for those hoping such blasts could knock out Soviet radar and radio. The most notable thing on the ground was the visuals. Journalist Dick Stolley, in Hawaii, said the sky turned “a bright bilious green”. Yet over the years, the effects of this test have been exaggerated. The US Congress was told that it “unexpectedly turned off the lights over a few million square miles in the mid-Pacific. This EMP also shut down radio stations, turned off cars, burned out telephone systems, and wreaked other mischief throughout the Hawaiian Islands, nearly 1,000 miles distant from ground zero.” It didn’t. That was clear from the light-hearted tone of Stolley’s report. Immediate ground effects were limited to a string of street lights in Honolulu failing. But no one knows if the test was to blame.
Of course, we rely on electronics more today. Those warning of the EMP threat say it would lead to “planes falling from the sky, cars stalling on the roadways, electrical networks failing, food rotting”. But evidence to back up such claims is lacking. A commission set up by the US Congress exposed 55 vehicles to EMP in a lab. Even at peak exposures, only six had to be restarted. A few more showed “nuisance” damage, like blinking dashboard displays. This is a far cry from the fantasies being aired as tensions with North Korea rise.
AT: Radiation
Radiation from a small nuke war would, on balance, be beneficial---no impact---empirics---1,500 nuclear weapons tests and no increase in cancer rates---studies of exposed test participants found longer lifespans and lower death rates from cancer and leukemia with more exposure---political bias---the physicists who built the bomb instilled the fear of radiation to stop preparations for atomic war---assumes “but it’s a lot of radiation” because it measures based on nuclear blasts AND countries not hit will definitely survive
Charles L. Sanders 17. Scientists for Accurate Radiation Information, PhD in radiobiology, professor in nuclear engineering at Washington State University and the Korea Advanced Institute of Science and Technology. 2017. “Radiological Weapons.” Radiobiology and Radiation Hormesis, Springer, Cham, pp. 13–44. link.springer.com, doi:10.1007/978-3-319-56372-5_2.
The official stance of the USA and the U.S.S.R. with respect to nuclear tests is that they represent the development and testing of nuclear weapon reliability. In fact, such tests also suggest a surrogate nuclear war among the superpowers, a war of intimidation by proxy. Jaworowski [7] described the exaggerated fear of ionizing radiation that arose during the Cold War period with incessant testing of nuclear weapons. Radioactivity from the atmospheric tests spread over the whole planet, mostly in the northern hemisphere.
People feared the terrifying prospect of a global nuclear war and “large” doses of radiation from fallout. However, it was the leading physicists responsible for inventing nuclear weapons who instigated the fear of small doses. In their endeavor to stop preparations for atomic war, they were soon joined by many scientists from other fields. Subsequently, political opposition developed against atomic power stations and all things nuclear. The LNT [linear no-threshold model] has played a critical role in influencing a moratorium and then a ban on atmospheric testing of nuclear weapons. The United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR) was concerned mainly with the effects of nuclear tests, fulfilling a political task to stop weapons testing. False arguments of physicists were effective in stopping atmospheric tests in 1963. However, the price paid created a radiophobia of demanding near-zero radiation doses for future generations. This worldwide societal radiophobia was nourished by the LNT assumption. A video called The Inheritance of Trauma: Radiation Exposed Communities around the World claimed that half of background radiation comes from past nuclear weapons testing [8]. This type of fear-mongering in the midst of abundant and easily available data to refute this outrageous statement is one of many that caused radiophobia in the American public. President Clinton also promoted his antinuclear campaign by grossly exaggerating the radiation risk from nuclear testing fallout based on the use of the LNT. The truth is that radiation exposure from nuclear weapons tests never amounted to more than 100 μGy per year in 1962 to those living in the northern hemisphere. The exposure from nuclear testing dramatically decreased in 1963 due to the test ban; today, test fallout contributes much less than 10 μGy per year.
Mean background dose in the USA and the world today is 2500 μGy per year, with natural environmental exposures ranging up to 700,000 μGy per year in regions of Iran. In 1945, Stalin ordered the U.S.S.R. to develop its own nuclear weapons; by 1949, they had developed the A-bomb. However, this crash program cost the lives of many Soviet scientists and technicians who had ignored hazards of very high radiation doses [13]. Over 1500 nuclear weapons tests have been carried out since 1944, the majority up to 1963 in the atmosphere [14]. No evidence of cancer risk increase has been found in inhabitants of the world due to nuclear test fallout. The Standardized Mortality Ratio (SMR) for all-cause mortality and all cancer mortality was 0.71 and 0.74, respectively, for 250,000 participants at the UK and US nuclear test sites [15]; that is, about 25–30% of expected deaths may have been prevented by low-dose radiation from fallout. US nuclear tests have been carried out at the Nevada Test Site, at Eniwetok and Bikini Atolls in the South Pacific, at Johnston Island, at Christmas Island, and at Amchitka, Alaska. There were 30 nuclear weapons tests at the Nevada Test Site in 1957 as part of the PLUMBBOB test series. A cohort study of 12,219 military participants, who received a mean red bone marrow dose of 3.2 mGy and a maximum of 500 mGy, showed that the participants lived longer than the general population [16]. Twenty-three nuclear tests were carried out at Bikini Atoll (Fig. 2.2 [omitted]). The first H-bomb test was by the USA (code named Mike) on October 31, 1952 at Eniwetok Atoll. It had a yield of 10.4 MT and left a crater 1 mile in diameter and 175 feet deep. Its mushroom cloud shot up to 25 miles into the stratosphere and spread out over 100 miles downwind. The largest US test (Bravo) was of a 15-MT H-bomb at Bikini Atoll on February 28, 1954, with a fireball greater than 3 miles in diameter.
Operation Crossroads in 1946 at Bikini Atoll involved two nuclear bomb tests which exposed about 40,000 US Navy, 6400 Army, and 1100 Marine personnel. Because available data were not considered suitable for epidemiologic analysis, a risk study was based on exposure surrogate groups [18]. There were 32,000 US observers in the later (1951–1957) nuclear tests. Both solid cancer and leukemia mortality rates decreased as exposures increased [19]. The median dose received by military personnel was <4 mGy. The military in the late 1940s sent personnel to clean contaminated ships within a few hours after warhead detonations. The General Accounting Office rebuked the Pentagon’s assertions of low whole-body doses to military personnel in Operation Crossroads tests at Bikini Atoll. The AEC (Atomic Energy Commission) miscalculated the yield and weather conditions of its 1954, 15-megaton H-bomb test (Bravo) in the Bikini Atolls. As a result, 64 inhabitants of the nearby Rongelap Atoll received high radiation doses (mean γ-dose, 1.8 Gy) from fallout about 150 miles from the test site [20]. None died from acute radiation effects, although all developed beta skin burns and the children experienced thyroid damage (nodules, hypothyroidism) from uptake of I-131 into the thyroid gland. The Bikini ash also fell on a Japanese fishing boat, the Lucky Sea Dragon, at sea 80 miles east of the test site, causing the death of Aikichi Kuboyama among the 23 crew members, while all others experienced radiation sickness (mean γ-dose, 3 Gy). An additional 714 islanders and Americans received cumulative gamma doses of <0.05–0.8 Gy [17, 21].
Massive plutonium production reactors and extraction chemical plants at Hanford, WA; the half-mile-long uranium enrichment facility at Oak Ridge, TN; a laboratory at Los Alamos, NM, for designing and building A-bombs; a plutonium bomb building facility in Golden, CO; nuclear test sites in Nevada and the South Pacific; and scores of nuclear power plants spread over the USA employed millions of people, often for the major time of their working careers.¹ Multiple epidemiological studies of workers in the USA and over the world have failed to demonstrate a significantly increased risk of cancer or any other disease among workers at cumulative doses of <100 mGy [22]. The Soviet test site near the city of Semipalatinsk, the Soviet counterpart to the Nevada Test Site, was the site for 456 tests carried out from 1949 to 1989, with 700,000 people living downwind exposed to fallout. In 1957, a very large piece of land (20,000 km²) downwind from Kyshtym, Ural Mountains, U.S.S.R., was contaminated by the release of 700 PBq from the explosion of a nuclear waste storage tank. Twenty villages with 7500 inhabitants were permanently evacuated. Later epidemiological studies failed to demonstrate an increased mortality risk in either location, but did show fewer than expected cancer deaths [22]. 2.3 Predicted Radiation Effects of Strategic Nuclear War Soviet Premier Nikita Khrushchev initially wanted to test a 100-megaton weapon. Miniaturization was a far more important technical hurdle for a would-be nuclear power, which needed bombs that were small and light enough to fit on ballistic missiles far more than it needed ones that produce an impressive yield. The Cuban Missile Crisis and the consequent Soviet removal of nuclear weapon delivery systems from the western hemisphere came about a year after the Tsar Bomba test. Both the USA and U.S.S.R.
realized that such a bomb had no strategic significance; no further tests of such magnitude were ever attempted by either side or by anyone else. The National Academy of Sciences issued a report, Long-Term Effects of Multiple Nuclear Weapons Detonations, in which they concluded that the impact of a nuclear war between the USA and NATO countries and the U.S.S.R. and Warsaw Pact countries would not be as catastrophic for noncombatant countries (not directly hit by nuclear weapons) as had been previously feared. The report was kept classified in order to maintain the state of radiophobia needed to obtain the political objectives of the two military sides. The report analyzed the likely effects of a 10,000-MT nuclear exchange on populations in the northern and southern hemispheres. Nuclear fallout would be very high in many regions of the USA (Fig. 2.3). In one attack scenario, 1440 warheads with 5050-MT surface and 1510-MT air-burst total yields would potentially expose all remaining survivors to significant radiation doses if unprotected. Nuclear fallout would be complicated by multiplicity of detonations, timing of detonations, and mix of surface and air detonations, making it difficult to predict fallout patterns in local areas of the country [24–26]. At Hijiyama High School, 51 girls were outdoors playing on the school grounds about 0.5 miles from the hypocenter of the first A-bomb detonated over Japan in World War II. All were dead within a few days from severe burns. At 1 mile, the mortality among unshielded school children was 84% and 14% among shielded children. The damage to Hiroshima, and to Nagasaki a few days later, was enormous, even by World War II standards of destruction. Overall, more than 75,000 died and 100,000 were injured in Hiroshima’s 245,000 population. Of the injured survivors, 70% suffered from blast injuries, 65% from serious burns, and 30% from prompt radiation effects. About 90% of all buildings within the city limits were destroyed.
In Hiroshima, 270 out of the city’s 298 doctors and 1645 of its 1780 nurses were killed, while 42 of the city’s 45 hospitals were destroyed [27]. The yields of the two warheads were so low as not to cause significant nuclear fallout of any immediate health hazard concern to survivors. Yet all this death and destruction was from a primitive, puny (by today’s standards) uranium bomb with an equivalent explosive power of about 13 KT. The physical effects of the atomic bomb were described in vivid detail by many authors, including this account by a Methodist missionary who was in Hiroshima right after the bomb fell: He was the only person making his way into the city; he met hundreds and hundreds who were fleeing, and every one of them seemed to be hurt in some way. The eyebrows of some were burned off and skin hung from their faces and hands. Others, because of pain, held their arms up as if carrying something in both hands. Some were vomiting as they walked. Many were naked or in shreds of clothing … Almost all had their heads bowed, looked straight ahead, were silent, and showed no expression whatever … It was at that moment … the sound … the lights out … all was dark … How I got out, I don’t know … the sky was lost in half-light with smoke … like an eclipse … The window frames began to burn; soon every window was aflame and then all the inside of the building … There were eight of us there … The fire spread furiously and I could feel the intense heat … The force of the fires grew in violence, and sparks and smoke from across the river smothered us … and barely managed to escape [28]. Parents, half-crazy with grief, searched for their children. Husbands looked for their wives, and children for their parents. One poor woman, insane with anxiety, walked aimlessly here and there through the hospital calling her child’s name [29].
Fallout radiation levels from modern nuclear warheads are very high near the site of detonation, where they decrease through radioactive decay, while farther downwind they increase as fallout arrives. Prompt fallout, which occurs during the first day, produces the greatest radiation levels. Geography, wind conditions, and precipitation can greatly influence early radiation fallout patterns, causing local “hotspots” of radioactivity, even hundreds of miles downwind. The fallout pattern of volcanic ash following the May 1980 eruption of Mount St. Helens is similar to what one might anticipate from a multi-megaton surface blast. Uncertainties in bomb effects and radionuclide fallout patterns stem far more from local topography and weather conditions than from bomb design. For example, more than 50% of radioactivity in a cloud will be washed out by a heavy rainfall of 2-hour duration. Radioactive particles will also settle to the earth by dry deposition. Particles larger than 10 μm in diameter settle promptly by sedimentation; smaller particles are more readily dispersed by wind updrafts and turbulence. In regions of low to moderate rainfall, dry deposition of radioactive particles may account for a greater total deposition than washout in precipitation. The acute radiation syndrome in humans was known and described in reasonably good detail by Pfahler as early as 1918, and by others two decades later who called the syndrome “radiation sickness.” The largest body of data concerning radiation sickness in humans is from the Japanese A-bomb survivors. The Japanese exposures were instantaneous, to a mixture of γ-rays and a limited neutron component. Fission-product fallout was minimal in Japan. Ionizing radiation from nuclear weapons fallout can produce a variety of biomedical effects, whether the exposure comes from external or internally deposited α-, β-, and γ-emitting radionuclides. External γ-rays cause acute radiation sickness when they are delivered over a substantial portion of the whole body.
Biological damage is related to dose and dose rate. A lethal external, whole-body dose of Co-60 (1-MeV) gamma rays delivered in 1 h corresponds to a dose rate of 3000–6000 mGy per hour. This is about ten million times the world’s mean background dose rate of 0.20 μGy per hour. The dose rate in Japanese A-bomb survivors near the hypocenter was 6000 mGy per second, which is 2 × 10¹⁵ times greater than the highest dose rate from the Chernobyl fallout. Acute whole-body external γ-ray exposure produces faster and more severe biological effects as dose and dose rate increase. At 1 Gy, blood changes are observed but little or no evidence of acute radiation disease. At 2 Gy, radiation sickness occurs with few deaths. At 3.5 Gy, death occurs in 50% of the population within 60 days, due mostly to failure of the blood-forming tissues in the bone marrow. At 10 Gy, death occurs in about a week in 100% of the population due to gastrointestinal damage as well as severe bone marrow failure. For humans, the median lethal radiation dose is about 4.5 Gy if given in 1 day. There is some disagreement about the LD50(60) dose (the dose lethal to 50% of those exposed within 60 days) for humans exposed under the expected conditions of nuclear war. Most believe that the LD50(60) lies between 3.5 and 4.5 Gy when the dose is delivered to the whole body within less than a day. The radiation dose-lethality curve is very steep: a dose only 20% greater than the LD50(60) may result in the death of over 90% of the population, while a dose 20% less than the LD50(60) may result in the death of only 5%. The number of deaths from the acute radiation syndrome in the first 60 days and the number of cancer deaths during the next 50 years have been exaggerated by both the USA and U.S.S.R. to achieve a political agenda in their nuclear war scenarios.
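The dose-rate comparison and the steepness of the dose-lethality curve described above can be checked with a few lines of arithmetic. This is an illustrative sketch only: it assumes a log-normal (probit in log-dose) lethality curve, which the text does not specify, and uses a hypothetical LD50_GY of 4.0 Gy (the midpoint of the 3.5–4.5 Gy range) and a hypothetical SIGMA chosen so that a dose 20% above the LD50 kills 90%. Neither parameter comes from the source.

```python
import math

# Figures quoted in the text.
LETHAL_RATE_MGY_PER_H = (3000.0, 6000.0)  # lethal whole-body dose delivered in 1 h
BACKGROUND_UGY_PER_H = 0.20               # world mean background dose rate

def rate_ratio(lethal_mgy_per_h: float) -> float:
    """How many times the background rate a lethal rate is (mGy converted to uGy)."""
    return lethal_mgy_per_h * 1000.0 / BACKGROUND_UGY_PER_H

# ASSUMPTION (not from the text): log-normal dose-lethality curve.
# LD50_GY is the midpoint of the quoted 3.5-4.5 Gy range; SIGMA is hypothetical,
# picked so that 1.2 x LD50 lies at the 90th percentile (z = 1.2816).
LD50_GY = 4.0
SIGMA = math.log(1.2) / 1.2816

def fraction_killed(dose_gy: float, ld50: float = LD50_GY, sigma: float = SIGMA) -> float:
    """Standard-normal CDF of the scaled log-dose, written with math.erf."""
    z = math.log(dose_gy / ld50) / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

if __name__ == "__main__":
    for rate in LETHAL_RATE_MGY_PER_H:
        print(f"{rate:.0f} mGy/h is {rate_ratio(rate):.1e} times background")
    for factor in (0.8, 1.0, 1.2):
        dose = factor * LD50_GY
        print(f"{dose:.1f} Gy -> {fraction_killed(dose):.0%} killed")
```

Under these assumptions, a dose 20% below the LD50 kills roughly 6%, close to the 5% quoted, and the 3000–6000 mGy/h lethal rate works out to 1.5 to 3 × 10⁷ times background, in line with "about ten million times." A steeper or shallower SIGMA would shift these percentages; the point is only that a narrow log-dose spread produces the sharp curve the text describes.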
Local fallout from a 1-MT surface burst would result in a patch of about 200 square miles (an oval about 6 miles at its widest and 45 miles at its longest, for a steady unidirectional wind of 15 mph) where radiation levels would be lethal to anyone not protected. It is important to remember that while radiation levels in the cloud are decreasing due to radioactive decay, they may be increasing on the ground for a period of time as fallout accumulates. Radiation levels near the detonation site will be decreasing rapidly, while those hundreds of miles downwind will be increasing for the first few days (Table 2.2). In this example, the radiation dose rate would decrease to about 15 Gy/h at about 1 h, to about 1.5 Gy/h after 12 h, to about 0.15 Gy/h after 4 days, and to about 0.01 Gy/h after 40 days [30]. The rate at which fallout radioactivity decreases can be estimated as $R_t = R_1 t^{-1.2}$, where $R_1$ is the dose rate 1 h after detonation and $R_t$ the dose rate at $t$ hours. The estimate is fairly accurate for times from 1 h to about 6 months after detonation, assuming the fallout is completed by t = H + 1. As a rule of thumb, the radiation levels from fallout will decrease by a factor of 10 for every sevenfold increase in time. This rule is applicable for the first 6 months after detonation. This means that radioactivity in fallout will decrease to 1/10th of the 1-h level by 7 h and to 1/100th by 49 h. The shape of this dose rate curve is similar to that near Fukushima, where the dose rate had fallen to near baseline after a week or two; even at its peak of about 180 mGy/y, it never reached the background dose rate at Ramsar, Iran (260 mGy/y). Residual radiation results from neutron activation of elements in the soil and buildings and from fission-product fallout. Neutron-related gamma doses were negligible in Japanese cities. Gamma doses from fission-product fallout were less than 25 mGy [31]. Today Hiroshima and Nagasaki are modern cities of 2.5 million people with no residual radiation attributed to A-bomb detonation.
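The 7:10 rule of thumb follows from the usual power-law approximation for fission-product fallout, in which the dose rate at t hours is the H+1 rate multiplied by t raised to the power -1.2: a sevenfold increase in time divides the rate by 7^1.2 ≈ 10.3. A minimal sketch, assuming that approximation; dose_rate and oval_area are hypothetical helper names, and the oval dimensions are the 6-by-45-mile figures quoted for the 1-MT surface burst.

```python
import math

# ASSUMPTION: power-law fallout decay R(t) = R1 * t**-1.2, with t in hours after
# detonation and R1 the dose rate at H+1; treated as valid from ~1 h to ~6 months.
DECAY_EXPONENT = 1.2
MAX_HOURS = 6 * 30 * 24  # roughly six months

def dose_rate(r1: float, t_hours: float) -> float:
    """Dose rate at t_hours, in the same units as r1 (e.g. Gy/h)."""
    if not 1.0 <= t_hours <= MAX_HOURS:
        raise ValueError("approximation only intended for ~1 h to ~6 months")
    return r1 * t_hours ** -DECAY_EXPONENT

def oval_area(width_miles: float, length_miles: float) -> float:
    """Area of an ellipse with the given full width and full length."""
    return math.pi * (width_miles / 2) * (length_miles / 2)

if __name__ == "__main__":
    # 7:10 rule: each sevenfold increase in time cuts the rate about tenfold.
    for t in (1, 7, 49):
        print(f"H+{t:>2} h: {dose_rate(1.0, t):.4f} of the H+1 rate")
    # Lethal fallout oval, 6 mi wide and 45 mi long.
    print(f"oval area: {oval_area(6, 45):.0f} sq mi")
```

Run as a script, it gives about 0.097 and 0.0094 of the H+1 rate at 7 h and 49 h, close to the 1/10 and 1/100 of the rule of thumb, and an oval area of about 212 square miles, consistent with "about 200 square miles." The pure power law describes decay at a fixed point after fallout has settled; it does not model the downwind build-up while fallout is still arriving.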
The Life Span Study (LSS) of the Japanese atomic bomb survivors is considered the “gold standard” for radiation epidemiology; nevertheless, these studies have significant limitations at low doses, which are of great interest to radiation protection agencies and the EPA [32]. The threshold based on the LSS data, 100 mGy/y, is very conservative. The actual threshold is likely 200–700 mGy/y, with hormesis effects seen below these doses. It appears that there was significant misunderstanding, misinterpretation, or even possible deliberate scientific misconduct in the 1956 NAS paper concerning the use of the LNT in evaluating A-bomb survivor data [33–36]. The Radiation Effects Research Foundation (RERF) data show more evidence of hormesis than adverse effects at low doses (Figs. 2.4 and 2.5). However, thresholds and radiation benefits are not considered by radiation protection agencies. The use of the RERF results for LNT modeling of harmful health effects is well known to be inappropriate, because A-bomb exposures do not apply to radiation protection for workers or for the public exposed to chronic, highly fractionated, low-dose-rate radiation, especially when setting extremely costly cleanup and decommissioning standards [38]. Dr. Gunnar Walinder believed there was an “expectation” that UNSCEAR members would manipulate the RERF data to produce “expected” results that supported the LNT [39]. The all-cause death rate in the USA for 2013 was 730 per 100,000, or about 2.2 million deaths per year. A moderate dose of radiation increases longevity [40]. Longevity is a better measure of health effects than is cancer mortality. A-bomb survivors had a small added risk of cancer at high radiation doses. And this high-dose cohort lived only a few months less than their children and those not exposed to radiation. No health effects related to radiation exposure of their parents have been found in survivors [41].
The life expectancy in Japan in 2015 was 80.5 years for males and 86.8 years for females; the mean for both sexes was 83.7 years. Japan is ranked number 1 in the world for life expectancy. The USA is ranked number 31, with a life expectancy of 76.9 years for males and 81.6 years for females; the mean for both sexes was 79.3 years. Australia has a cancer death rate of 314 per 100,000 per year, about 50% higher than that of low-dose Japanese A-bomb survivors (201 per 100,000 per year). A-bomb survivor cancer death rates at the highest doses were comparable to the rate for people living in Australia. This means that Japanese A-bomb survivors are living significantly longer than non-exposed Americans and Australians [42, 43].

Civil Defense CP

1NC---Civil Defense

The United States federal government should invest in a civil defense system that provides stockpiles of food, water, medical supplies, radiological instruments, and shelters in addition to warning systems, emergency operation and communication systems, and a trained group of radiological monitors and shelter managers.

Civil defense investments prevent nuclear war from causing extinction under any reasonable estimates.

Charles L. Sanders 17. Scientists for Accurate Radiation Information, PhD in radiobiology, professor in nuclear engineering at Washington State University and the Korea Advanced Institute of Science and Technology. 2017. “Radiological Weapons.” Radiobiology and Radiation Hormesis, Springer, Cham, pp. 13–44. link.springer.com, doi:10.1007/978-3-319-56372-5_2.

Americans are dreadfully ignorant on the subject of civil defense against nuclear war. Americans don’t want to talk about shelters. Most who take shelters seriously are considered part of the lunatic survivalist fringe. The current rudimentary US fallout shelter system can only protect a tiny fraction of the population. Probably fewer than 1 in 100 Americans would know what to do in the event of nuclear war, and even fewer have any contingency plans.
The civil defense system should, instead, provide stockpiles of food, water, medical supplies, radiological instruments, and shelters, in addition to warning systems, emergency operation and communication systems, and a trained group of radiological monitors and shelter managers. There is a need for real-time radiation measurements to warn the public to seek shelter and to prevent panic [61]. Shelters and a warning system providing sufficient time to go to a shelter are the most important elements of civil defense. The purpose of a shelter is to reduce the risks of injury from blast and thermal flux from nearby detonations and from nuclear fallout at distances up to hundreds of miles downwind from nuclear detonations. There are several requirements for an adequate shelter:
1. Availability: Is there space for everyone?
2. Accessibility: Can people reach the shelter in time?
3. Survivability: Can the occupants survive for several days once they are in the shelter? That is, is there adequate food, water, fresh air, sanitation, tools, clothing, blankets, and medical supplies?
4. Protection factor: Does the shelter provide sufficient protection against radiation fallout?
5. Egress: Is it possible to leave the shelter, or will rubble block the way?
There are several good publications that provide information for surviving nuclear war [62–64]. Two that offer good practical advice are Nuclear War Survival Skills by Kearny [65] and Life after Doomsday by Clayton [66]. Fallout is often visible in the form of ash particles. The ash can be avoided, wiped, or washed off the body or nearby areas. All internal radiation exposure from the air, food, and water can be minimized by proper ventilation and use of stored food and water. Radioactivity in food or water cannot be destroyed by burning, boiling, or any chemical reaction. Instead, it must be avoided by putting distance or mass between it and you. Radioactive ash particles will not induce radioactivity in nearby materials.
If your water supply is contaminated with radioactive fallout, most of the radioactivity can be removed simply by allowing time for the ash particles to settle to the bottom and then filtering the top 80% of the water through uncontaminated clay soil, which will remove most of the remaining soluble radioactivity. Provision should be made for water in a shelter: 1 quart per person per day, or 3.5 gallons per person for a nominal 14-day shelter period. A copy of a book by Werner would be helpful for health care [67]. During the 1950s, there was firm governmental support for the construction and stocking of fallout shelters. In Eisenhower’s presidency, the National Security Council proposed a $40 billion system of shelters and other measures to protect the civilian population from nuclear war. Similar studies by the Rockefeller Foundation, the Rand Corporation, and MIT had earlier made a strong case for shelter construction. President Kennedy expected to identify 15 million shelters, saving 50 million lives. Even at that time, many felt this was a dangerous delusion that gave a false sense of security. However, the summary document of Project Harbor (Publication 1237) concerning civil defense and the testimony before the 88th Congress (HR-715) both strongly supported an active civil defense program by the US government. A later, 1977 report to Congress concluded that the USA lacked a comprehensive civil defense program and that the American population was mostly confused as to what action to take in the event of nuclear war. President Carter advocated Crisis Relocation Planning (CRP) as the central tenet of a new civil defense program. In 1981, President Reagan announced a new civil defense program costing $4.2 billion over a 7-year period; this program included CRP and the sheltering of basic critical industries in urban and other target areas.
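The shelter water allowance quoted in this card (1 quart per person per day, or 3.5 gallons per person for a nominal 14-day stay) scales linearly with group size and stay length. A minimal sketch; shelter_water_gallons is a hypothetical helper, its defaults are taken from the text, and it covers drinking water only.

```python
# Hypothetical helper: scales the text's shelter water allowance
# (1 quart per person per day over a nominal 14-day stay) to a group.
QUARTS_PER_US_GALLON = 4

def shelter_water_gallons(people: int, days: int = 14,
                          quarts_per_person_per_day: float = 1.0) -> float:
    """Minimum drinking water to store, in US gallons."""
    if people < 0 or days < 0:
        raise ValueError("people and days must be non-negative")
    total_quarts = people * days * quarts_per_person_per_day
    return total_quarts / QUARTS_PER_US_GALLON

if __name__ == "__main__":
    print(shelter_water_gallons(1))  # 3.5, matching the per-person figure
    print(shelter_water_gallons(4))  # 14.0 for a household of four
```

The per-person default reproduces the 3.5-gallon figure (14 quarts); any allowance for washing or cooking would have to be added on top.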
President Reagan believed that civil defense would reduce the possibility that the USA could be coerced in time of crisis, by providing for the survival of a substantial portion of her population as well as continuity of government. Stockpiling, sheltering, and education could be a relatively cheap insurance policy against Soviet attack [68]. With the fall of the U.S.S.R. came a lack of continuing interest in preparation to survive a nuclear war in subsequent administrations.

2NC---Solvency

Refuges solve their impacts but not ours.

Nick Beckstead 15. Oxford University, Future of Humanity Institute, United Kingdom. 09/2015. “How Much Could Refuges Help Us Recover from a Global Catastrophe?” Futures, vol. 72, pp. 36–44.

The number of catastrophes fitting this format grows combinatorially with the number of proposed individual catastrophes, and the probability of compound catastrophes is very small if the catastrophes are largely independent. This analysis considers only single-catastrophe scenarios, leaving it to others to consider the potential value of refuges for aiding with survival and recovery in compound catastrophe scenarios. Focusing then on single-catastrophe scenarios, building a refuge is a bet that: (i) a global catastrophe will occur, (ii) people in the refuge will survive the initial catastrophe, and (iii) survivors in the refuge will have advantages over others that allow them to help civilization recover from the catastrophe, when others outside the refuge could not (at least without help from people in the refuges). If one of these conditions does not hold, there is no distinctively compelling case for creating the refuge. If they all hold, then there could be a very compelling case for building refuges. Without assessing whether these catastrophes are likely to occur, this section will consider for which of the proposed catastrophes listed above, were they to happen, people in refuges plausibly fit (ii) and (iii).

4.1. “Overkill” scenarios: people in the refuges would not survive

Some of these disasters, such as alien invasion and runaway AI, involve very powerful hostile forces. Refuges would be of limited use in these cases because such forces would probably have little trouble destroying any survivors. Some disasters would be so complete or destructive that people in refuges could not survive. These probably include global ecophagy from nanotechnology, physics disasters like the “strangelet” scenario, and simulation shutdowns.

4.2. “Underkill” scenarios: not enough damage for refuge to be relevant

If the catastrophe leaves enough people, resources, relevant skills, and coordination, then the refuge would not be necessary for recovery. Pace Hanson (2008), earthquakes and hurricanes probably fit this description. The upper limit for the destructiveness of earthquakes appears to be not much higher than 9.6 on the Richter scale, due to limits to how much pressure rocks can take before breaking (Saunders, 2011). An earthquake of this size happened in Chile in 1960 and was very far from being a global catastrophe. Hanson relies on the assumption that earthquakes follow a power law distribution, but physical factors dictate that this distribution must be truncated before global catastrophe becomes a realistic possibility. Similarly, it is likely that physical limits prevent hurricanes from creating global catastrophes. Not many of the other catastrophes clearly fit this description. It is true that very few, if any, of them would be potentially survivable yet result in sudden extinction (more under “sole survivors of a period of intense destruction”). But it is conceivable that several could lead to extinction or failure to recover following the initial catastrophe.
Many would survive the immediate effects of asteroids and comets, nuclear wars, and supervolcanoes, but it is more debatable how many would survive the global food crisis following them (more under “global food crisis scenarios”). Similarly, it is conceivable that destruction from the above scenarios, an unprecedentedly bad pandemic, or an unprecedentedly bad non-nuclear global war could lead to a collapse of the modern world order (more under “social collapse scenarios”).

4.3. Very long-term environmental damage: refuges do not address the problem

Some proposed global catastrophic risks operate via permanent or very long-term negative consequences for the environment. These include climate change, gamma-ray bursts, and supernovae. This category could also include several of the overkill scenarios described above. Refuges would be of little help in these cases.

4.4. Sole survivors of a period of intense destruction: very little scope for this

Conceivably, people in some refuge could be the only ones with defenses adequate to withstand a period of intense destruction (an “intense destruction” scenario). If that happened, they could be critical for the long-term survival of humanity. (Note: In this category, I mean to exclude global food crises and social collapse, and to focus on cases of ongoing active destruction, such as from conflict, hostile forces, disease, disasters, and so on.) Refuge advocates have placed some of their hopes in this category. Hanson (2008), Matheny (2007), and Jebari (2014) all devote space to discussion of minimal viable population size and the importance of protection from fallout and other forms of destruction. Moreover, if refuges could be the sole survivors of some global catastrophic risk involving a period of intense destruction, it would make a very compelling case for their construction.
However, with the possible exception of a pandemic specifically engineered to kill all humans and the detonation of a cobalt bomb made with similar intentions, I am aware of no proposed scenario in which a refuge would plausibly enable a small group of people to be the sole survivors of a period of intense destruction. Going through the above scenarios: The overkill scenarios of alien invasions, runaway AI, global ecophagy from nanotechnology, physics disasters like the “strangelet” scenario, and simulation shutdowns are excluded. The underkill scenarios of earthquakes and hurricanes are excluded. The global food crisis scenarios of asteroids, supervolcanoes, and nuclear winter are excluded. At least under present (and declining) stocks of nuclear weapons, the immediate consequences of nuclear war would not threaten extinction. According to a 1979 report by the US Office of Technology Assessment (p. 8), even in the case of an all-out nuclear war between the US and Russia, only 35–77% of the US population and 20–40% of the Russian population would die within the first 30 days of the attack. The cases involving very long-term environmental damage (climate change, gamma-ray bursts, supernovae) are excluded. In conventional (non-nuclear, non-biological) wars and terrorist attacks, it is hard to see how rapid extinction could follow. It is hard to imagine, e.g., two sides simultaneously wiping out all remaining humans or each other’s food supply using conventional weapons. Absent purposeful global destruction of all human civilizations, it is also unclear how this would destroy the 100+ “largely uncontacted” peoples. That leaves pandemics and cobalt bombs, which will get a longer discussion. While there is little published work on human extinction risk from pandemics, it seems that it would be extremely challenging for any pandemic, whether natural or manmade, to leave the people in a specially constructed refuge as the sole survivors.
In his introductory book on pandemics, Doherty (2013, p. 197) argues: “No pandemic is likely to wipe out the human species. Even without the protection provided by modern science, we survived smallpox, TB, and the plagues of recorded history. Way back when human numbers were very small, infections may have been responsible for some of the genetic bottlenecks inferred from evolutionary analysis, but there is no formal proof of this.” Though some authors have vividly described worst-case scenarios for engineered pandemics (e.g. Rees, 2003; Posner, 2004; and Myhrvold, 2013), it would take a special effort to infect people in highly isolated locations, especially the 100+ “largely uncontacted” peoples who prefer to be left alone. This is not to say it would be impossible. A madman intent on annihilating all human life could use crop-duster-style delivery systems, flying over isolated peoples and infecting them. Or perhaps a pandemic could be engineered to be delivered through animal or environmental vectors that would reach all of these people. It might be more plausible to argue that, though the people in specially constructed refuges would not be the sole survivors of our hypothetical pandemic, they may be the only survivors in a position to rebuild a technologically advanced civilization. It may be that the rise of technologically advanced societies required many contingent factors, including cultural factors, to come together. For example, Mokyr (2006) argues that a certain set of cultural practices surrounding science and industry was essential for ever creating a technologically advanced civilization, and that if such developments had not occurred in Europe, they may have never occurred anywhere. If such a view were correct, it might be more likely that people in well-stocked refuges would eventually rebuild a technologically advanced civilization, in comparison with societies that have preferred not to be involved with people using advanced technology. However, even if people familiar with Western scientific culture do survive, on a view like Mokyr’s, there would be no guarantee that they would eventually rebuild an advanced civilization. This may be the most plausible proposed case in which refuges would make humanity more likely to eventually fully recover from a period of intense destruction that would otherwise quickly lead to extinction. Jebari (2014) raises cobalt bombs as a potential use case for refuges. The concept of a cobalt bomb was first publicly articulated by Leó Szilárd in 1950, and he argued that it could result in human extinction. In a nuclear explosion, the nonradioactive cobalt-59 casing of a bomb would absorb neutrons and become cobalt-60, which is radioactive and has a longer half-life (5.26 years) than fallout from standard nuclear weapons. The hypothetical use case would involve a large quantity of cobalt-60 being distributed widely enough to kill all humans. People in refuges might be the sole survivors because it would take an extremely long time (perhaps decades) for radiation levels to become survivable for humans. Conceivably, people in an underground refuge with an unusually long-lasting food supply could be the only survivors. As mentioned above, government and private shelters generally do not hold more than about a year of food supply. However, the online Nuclear Weapon Archive claims that, at least according to the public record, no cobalt bomb has ever been built. One known experimental attempt by the British to create a bomb with an experimental radiochemical tracer ended in failure, and the experiment was not repeated.
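The "perhaps decades" estimate follows from ordinary exponential decay with the 5.26-year cobalt-60 half-life quoted in this card. A minimal sketch, assuming a single nuclide and ignoring weathering, shielding, and the uneven distribution that the card goes on to question; remaining_fraction and years_to_reduce are hypothetical helper names.

```python
import math

# Half-life of cobalt-60 as quoted in the text.
CO60_HALF_LIFE_YEARS = 5.26

def remaining_fraction(years: float, half_life: float = CO60_HALF_LIFE_YEARS) -> float:
    """Fraction of the initial activity left after `years` of simple exponential decay."""
    return 0.5 ** (years / half_life)

def years_to_reduce(factor: float, half_life: float = CO60_HALF_LIFE_YEARS) -> float:
    """Years until activity drops by `factor` (10 means to 10%, 1000 to 0.1%)."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    return half_life * math.log2(factor)

if __name__ == "__main__":
    for factor in (10, 100, 1000):
        print(f"down {factor}x after {years_to_reduce(factor):.1f} years")
```

Under these assumptions, activity falls tenfold in roughly 17 years, a hundredfold in roughly 35 years, and a thousandfold in roughly 52 years, which is why a lethal cobalt-60 deposition would outlast the year or so of food that shelters typically hold.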
Early analysis of the problem by Arnold (1950) concluded that it was uncertain whether neutrons could be absorbed to make cobalt-60 as intended, and that it was unlikely that the radioactive material could be distributed evenly enough to cause total extinction. More recent, and currently unpublished, results by Anders Sandberg reached a similar conclusion. Therefore, the cobalt bomb scenario seems both highly unlikely to occur and implausible as a sole-survivors case.

The CP enables civilization to survive nuclear war.

Joseph George Caldwell 18. Mathematical statistician and systems and software engineer, author of The Late Great United States (2008); Can America Survive? (1999). 05-15-18. “Is Nuclear War Survivable?” Foundation Website. http://www.foundationwebsite.org/IsNuclearWarSurvivable.pdf

At the present time, human society is extremely wealthy and technologically advanced. We can fly to the moon and Mars. The challenge of preparing to survive a nuclear war can easily be met. Our society, and even wealthy individuals in our society, can easily afford to establish a number of pods throughout the world, thereby assuring the survival of themselves and mankind after nuclear war occurs. Nuclear war is coming. When it does occur, it is reasonable to speculate that some groups or individuals will have had the foresight to prepare for it, and thereby assure not only their survival, but the survival of the human species. (Because of the politics of envy, pods would be vulnerable to destruction by people without access to one, who would feel that if they are not eligible to seek refuge in a pod, then no one should have that privilege. As a result of this vulnerability, if there are already pods in existence, the general public would be unlikely to know about them, and it would certainly not know of their locations.)
Astronomer Fred Hoyle observed that, with respect to energy sufficient to develop technological civilization, the human species may have but one bite at the apple. When all of the readily available energy from fossil fuels is gone, no comparable source of inexpensive, high-intensity energy is available to replace it. He observed that a key ingredient enabling the development and maintenance of our large, complex, technological society was the massive quantity of fossil fuels, accumulated over millions of years and expended in a few human generations. If modern technological civilization collapses, mankind would not, in Hoyle’s view, have this key ingredient (a massive amount of cheap, high-grade energy) available a second time to build technological society again. Hoyle’s point is arguable. Although the one-time treasure trove of fossil fuels may have enabled a very rapid and very inefficient development of a planetary technological civilization, and may temporarily support a very wasteful and lavish lifestyle for a truly massive number of people, it appears that post-fossil-fuel sources of energy are quite sufficient to develop and maintain a technological civilization a second time.

6. Planning to Survive a Nuclear War

The fact is, with sufficient planning and preparation, a large-scale nuclear war is survivable for the human species, with high probability.¹⁰ In the 1950s, the United States had a large-scale Civil Defense program, which identified fallout shelters having adequate ventilation and stocked suitable shelters with water and other provisions. The program was eventually discontinued when it was realized that in a large-scale nuclear attack most people would perish, with or without the fallout shelters. There was no point to fallout shelters, at least not of the sort used then (mainly, basements of buildings). Some nations maintain fallout shelters today, but they do not provide for the contingency of a nuclear winter.
To survive a nuclear winter requires substantial planning, preparation, and expense. Locations such as Mount Weather and Cheyenne Mountain could be quickly modified to provide for two years of operation without outside support. In planning for space missions to Mars, the US and other countries are acquiring much useful information about supporting a small group of people for an extended period of time in a hostile environment. The Mormon religion requires its adherents to stockpile a year’s worth of food. Without provisions to protect survivors from radioactive fallout and from attack from survivors who have no food, such preparations are useless. Wealthy individuals such as George Soros, Bill Gates, Jeff Bezos, Elon Musk and Mark Zuckerberg have the wherewithal to plan and prepare shelters that could keep small groups alive for a couple of years. Mankind has gone to the moon and returned. It is now planning to explore and colonize Mars. Providing for a number of colonies of human beings to sit out the aftermath of a large-scale nuclear war, including the provision of those colonies with adequate biosphere restocking supplies, requires planning, preparation, and expense, but it is technologically less daunting and less expensive than going to the moon or to Mars (since no space travel is involved). Moreover, it is vastly more important – unlike an expedition to the moon or Mars, the survival of mankind depends on it!