
Drought Forecasting: A Far Cry from a Dry Topic

By NATHAN MAVES MOORE

Abstract

Droughts are one of the world’s most economically disruptive and deadly hydrological events. Forecasting them brings

unique difficulties. Droughts can span

anywhere from 3 months to 7+ years, with

significant non-linearity in their buildup and

recession. Methods utilizing machine learning,

especially artificial neural networks, have

displayed promising results with high

forecasting accuracy at relatively long lead

times. However, even the most complex

methodologies degrade quickly as forecasts

predict farther and farther into the future. In

this paper, I summarize the evolution of

drought forecasting, with emphasis on modern

methods in machine learning.

Introduction.

Of all hydrological events, droughts can

be classified as one of the most destructive.

With residual impacts affecting local ecology, agriculture, and water security, droughts can impede the development of society and endanger the well-being of its members. With

droughts likely becoming more prevalent as the

onset of climate change worsens (Union of

Concerned Scientists, 2014), better prediction

methods will be integral to limiting their

adverse effects and allowing for more efficient

water usage.

Forecasting models had humble beginnings in the 20th century, when basic panel linear regression, Autoregressive Moving Average (ARMA), Autoregressive Integrated Moving Average (ARIMA), and Markov chain modeling techniques dominated the field for decades. The field of drought forecasting has recently undergone a renaissance, with cutting-edge non-linear forecasting methods being applied throughout. Since the mid-2000s, machine learning techniques have begun to show promising results, with some augmented Artificial Neural Network (ANN) and Support Vector Machine (SVM) models explaining more than 95% of the variance in certain hydrological time series data.

Understanding drought forecasting requires a

holistic understanding not only of model

complexity, but also of drought measurement,

quantification, and the augmentation of data. In

this literature review, methods for normalizing,

augmenting, and forecasting drought data will

be reviewed at length, with most of the paper

focusing on recent modelling techniques,

especially artificial neural networks.

I. Section 1: Drought Definitions and Indices – A Brief Overview

Before drought forecasting can be

understood, one needs to understand what

events are qualified as droughts and how they

are quantified. Mishra & Singh’s (2010)

overview of drought concepts groups droughts

into four separate classes: meteorological

drought, hydrological drought, agricultural

drought, and socio-economic drought.

Meteorological drought is classified by

below-average precipitation for an extended

period of time. It is generally quantified with

precipitation indices. Hydrological drought is

defined by insufficient surface and subsurface

water supply for a fixed demand of water

resources. Measurements of watershed,

groundwater levels, and streamflow are

generally used to quantify it. Agricultural

drought pertains to below-average soil

moisture and subsequent crop failure.

Evapotranspiration (the combined loss of water through evaporation from the soil and transpiration from plants) is generally used to quantify it. Finally, socio-economic drought is defined by water demand

exceeding supply, and the subsequent water

shortage. This is generally determined by

measuring volume of water supplied and

demanded under a given water distribution

infrastructure. This paper will primarily focus

on hydrological and meteorological drought

forecasting.

Droughts are forecasted using various moisture or precipitation indices as a form of measurement, the most popular of which is the Standardized Precipitation Index (SPI).

Other indices and approaches exist such as the

Palmer Drought Severity Index, Crop Moisture

Index, z-score and the decile approach (Mishra

& Singh, 2010).

Generally speaking, indices pertinent to forecasting normalize moisture or precipitation data to some underlying distribution so as to rescale the variable to a self-relative measure.

In the case of the SPI, precipitation data is fitted

to some cumulative distribution function –

generally the gamma distribution – that is then

transformed to the standard normal

distribution (Mishra & Singh, 2010; Mishra &

Desai, 2006). The normal distribution offers

symmetry, as well as other elegant

mathematical properties that translate well to

statistical analysis.

Before normalization to a CDF, precipitation or moisture data are aggregated over some time interval (for example, the average daily precipitation over 1, 3, 6, 12 or 24 months) so as to analyze droughts of differing durations. These different aggregation levels are referred to as SPI 1, SPI 3, SPI 6, etc. Forecasts then track how these SPI values change after a given time step. For example, SPI 3 may be forecasted over a 1-month lead time; that is, how does a moving average of SPI 3 change when we move 1 month forward?
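As a rough illustration of the aggregation and lead-time ideas, the sketch below computes a 3-month moving average and pairs each aggregate with the value one month later. The precipitation numbers and the function names `rolling_mean` and `lead_pairs` are hypothetical, and the distribution-fitting step that turns the aggregate into an SPI value is deliberately left out.

```python
def rolling_mean(values, window):
    """Mean over each trailing block of `window` observations (a k-month aggregate)."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [sum(values[i - window + 1 : i + 1]) / window
            for i in range(window - 1, len(values))]


def lead_pairs(series, lead):
    """Pair each value with the value `lead` steps later: (input, target)."""
    return [(series[i], series[i + lead]) for i in range(len(series) - lead)]


# Hypothetical monthly precipitation totals (mm), for illustration only.
monthly_precip = [30.0, 60.0, 90.0, 120.0, 60.0, 0.0]

agg3 = rolling_mean(monthly_precip, window=3)  # raw series underlying SPI 3
pairs = lead_pairs(agg3, lead=1)               # forecasting pairs at a 1-month lead
```

Each pair is one training example for a 1-month-lead forecast of the 3-month aggregate.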

However, recent work has criticized the SPI and other standardized indices. Two issues have been pointed out. First, hydrological data may not follow a fixed distribution over time. Thus, the initial fitting of the data to an underlying probability distribution for a specific geographic area may be naïve. Second, if a temporally fixed distribution does exist, the precipitation distribution likely varies over different climatic regions. Consequently, if data includes a large geographic area, fitting data to a single parametric distribution may lead to biases and measurement error (Farahmand & AghaKouchak, 2015). Non-parametric approaches to index calculation have been proposed to address this issue.¹

¹ See Farahmand & AghaKouchak (2015).
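One way a non-parametric index could be computed is sketched below: each observation is ranked, the rank is converted to an empirical probability, and that probability is mapped to a standard normal quantile. The Gringorten plotting position `(rank - 0.44) / (n + 0.12)` is an assumption on my part about a reasonable choice of empirical probability, and the function name is hypothetical; this is not presented as the exact procedure of any cited paper.

```python
from statistics import NormalDist


def empirical_standardized_index(values):
    """Map each value to a standard normal quantile using only its rank."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])  # ties keep input order
    index = [0.0] * n
    for rank, i in enumerate(order, start=1):
        p = (rank - 0.44) / (n + 0.12)        # assumed Gringorten plotting position
        index[i] = NormalDist().inv_cdf(p)    # standard normal quantile
    return index


# Hypothetical aggregated precipitation values, for illustration only.
idx = empirical_standardized_index([10.0, 50.0, 30.0, 20.0, 40.0])
```

No distribution is fitted, so the index does not depend on a gamma (or any other parametric) assumption.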

However, as long as forecasting is over a small geographic area, or homogeneous climatic zone, it is likely that the data follows some constant distribution. Although these issues with data fitting may pose theoretical problems, in practice models using SPI and other standardized data have been highly effective at predicting various droughts.

II. Section 2: Linear Modelling – From Humble Beginnings to Complex Methodology

Linear Regression

Like many topics in applied statistics,

drought forecasting began with the humble

linear regression. Initial models aimed to

predict streamflow data (Benson & Thomas,

1970), as well as drought duration (Paulson et

al., 1985). Both Benson & Thomas (1970) and

Paulson et al. (1985) used simple log-log or

linear-log regressions to predict streamflow

and drought durations respectively.

Linear-log:

$$y_t = \beta_0 + \beta_1 \ln x_{1,t} + \dots + \beta_k \ln x_{k,t}$$

Log-log:

$$\ln y_t = \beta_0 + \beta_1 \ln x_{1,t} + \dots + \beta_k \ln x_{k,t}$$
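To make the log-log specification concrete, here is a minimal sketch of ordinary least squares with a single regressor, fitted on synthetic data. The data, the single-regressor restriction, and the name `fit_log_log` are assumptions for illustration; the original studies used several regressors and their own estimation routines.

```python
import math


def fit_log_log(x, y):
    """OLS fit of ln(y) = beta0 + beta1 * ln(x) for one regressor."""
    lx = [math.log(v) for v in x]
    ly = [math.log(v) for v in y]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    sxx = sum((a - mean_x) ** 2 for a in lx)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    beta1 = sxy / sxx                 # slope: elasticity of y with respect to x
    beta0 = mean_y - beta1 * mean_x   # intercept on the log scale
    return beta0, beta1


# Synthetic data generated from y = 3 * x^0.5, for illustration only.
x = [1.0, 2.0, 4.0, 8.0]
y = [3.0 * v ** 0.5 for v in x]
beta0, beta1 = fit_log_log(x, y)
```

In a log-log model the slope is read as an elasticity: a 1% change in the regressor is associated with a `beta1`% change in the dependent variable.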


Although not focused on predicting the

occurrence of droughts, as much as the severity

and duration, these initial models serve to

illustrate the genesis of forecasting within

hydrology.

Benson & Thomas’s (1970) model best

serves as a measure of how far the field has

come. In their study of various river basins’

streamflow across the United States, the only

meaningful statistic proposed is the traditional

standard error calculation to their linear

regression’s estimated parameters. No

investigation is done into heteroskedasticity in

the data; no $R^2$ is reported; mean squared error,

and other values are neglected. Average

absolute estimation error as a percentage of the

realized values is presented graphically.

Depending on the forecast-time horizon, it

exceeds 100%, but is as low as approximately

30%.

The model selection is also done through explicit “p-hacking.” That is, the model is first over-specified, and insignificant indicators are omitted until the final “optimal” model is

discovered. This is done utilizing the entire

data set to generate estimates. Thus, the

external validity of this model must be called

into question. Obvious issues with data mining could easily arise from this. However, considering the state of applied statistics in 1970, this was cutting edge. They even used a computer to calculate their regression coefficients.

Paulson et al. (1985) offer a more robust

approach to linear regressions. They collected

streamflow data, then constructed drought

severity and duration indices within the

California Central Valley. These indices

included constructing values for their two

dependent variables – drought severity and

magnitude – indicating 10%, 50% and 90%

exceedance probabilities. One can conceptualize these values as the magnitude or severity a given drought could exceed with 10% probability, 50% probability and 90% probability. They also constructed termination probabilities of 1 through 5 years. In other

words, they calculated the likelihood that a

current drought would persist through a given

termination horizon. They proceeded to use

various climatic data, such as precipitation and

average runoff, to generate linear predictions.

Finally, they excluded rivers flowing out of the Sierra Nevada range from their analysis so as to create some level of climatic homogeneity across their data, in hopes of reducing prediction error.

Ultimately, Paulson et al. (1985) were able to predict the likely termination horizon as well as severity and magnitude thresholds of each drought relatively well, achieving $R^2$ values ranging from 0.56 to 0.98 depending on which dependent variable they were predicting. However, just as in Benson & Thomas (1970), backward elimination procedures were used over the entire dataset to determine which independent variables to include in their final models. Thus, issues of data mining and p-hacking persisted, and a true causal chain with strong external validity may not be so easily linked to their model.

Markov Chains

As computational capabilities changed,

so did the statistical methods used to analyze

drought patterns. Lohani & Loganathan (1997)

utilized climatic and hydrological data from the

state of Virginia spanning from 1895 to 1990.

They calculated the Palmer Drought Severity

Index – an aggregation of various climatic and

hydrological variables into one succinct index

– for each month in the sample. From there,

they transformed the index from a continuum

to a seven-level variable by classifying subsets

of the continuum as levels of drought severity.

From there, they constructed probabilities of

ending up in a specific drought “state”, defined

by the aforementioned levels.

After recovering the state probabilities, they

assumed that its stochastic behaviour followed


a first-order Markov process. That is, the probability of ending up in state $j$ at time $t$ depended only on the realized state, $i$, directly preceding it at time $t-1$. The likelihood of transitioning from state $i$ to state $j$ depends on the month of the year one finds oneself in, but the year itself does not matter; specific month-to-month transition probabilities are fixed across years, so the transition probabilities are periodic of order 12.

Since transition probabilities are fixed year-to-year, one can go on to group them into transition matrices, $P[t]$, where $t$ is the specific month, and element $P[t]_{i,j} = \mathrm{Prob}(j[t+1] \mid i[t])$. The unconditional state probabilities, that is, the probability of being in state $i$ in month $t$, are given by $\mathrm{Prob}(i[t])$. The unconditional probabilities are known at each month, and they are grouped into a row vector, $M[t]$, indexed by the month, $t$.² Consequently, because of the Markov process, one can model a month-to-month transition as:

$$M[t] = M[t-1]\,P[t-1]$$

Thus, from unconditional monthly state

probabilities, transition matrices can be

recovered analytically – through solving a

system of linear equations – as well as

empirically, by directly estimating the month-to-month conditional probabilities of a certain

drought state being realized. Lohani &

Loganathan (1997) use those transition

probabilities to construct decision trees that

then indicate the future likelihood of entering a

specific drought state from the current drought

state. This decision tree method was designed

to better inform policy makers on how to react

on short time intervals to impending droughts.
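The month-to-month recursion can be sketched in a few lines. The example below uses two drought states rather than seven to keep it short, and the transition matrices, month indexing (0 to 11), and function names are all hypothetical choices for illustration, not values from Lohani & Loganathan (1997).

```python
def step(m, p):
    """Row vector times matrix: M[t] = M[t-1] P[t-1]."""
    return [sum(m[i] * p[i][j] for i in range(len(m)))
            for j in range(len(p[0]))]


def forecast(m0, start_month, transitions, steps):
    """Propagate state probabilities forward, cycling through 12 monthly matrices."""
    m = list(m0)
    for k in range(steps):
        m = step(m, transitions[(start_month + k) % 12])
    return m


# Hypothetical 2-state matrices (state 0 = no drought, state 1 = drought).
P_WET = [[0.9, 0.1], [0.5, 0.5]]
P_DRY = [[0.6, 0.4], [0.2, 0.8]]
transitions = [P_DRY if t in (5, 6, 7) else P_WET for t in range(12)]

# Start in "no drought" with certainty in month 4 and look two months ahead.
m2 = forecast([1.0, 0.0], start_month=4, transitions=transitions, steps=2)
```

Because the matrix used at each step depends only on the calendar month, the same twelve matrices are reused every year, which is the periodicity of order 12 described above.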

² They are also easy to estimate via maximum likelihood estimation: one simply takes the number of data points in a specific month with a certain realized state, $i$, as a proportion of the total number of data points from that month in the sample.

Lohani & Loganathan found that for short-term predictions, with only a few months of lead time, these predictions seem to be reliable, especially over relatively homogeneous climatic and geologic areas as found in Virginia. Moreover, in comparing their estimates to previous findings by Karl (1986), they found that their predictions were well within his estimates for conditional drought state probabilities. At the very least, this indicates some level of external validity to the Markov chain modeling system.

Finally, they compared their estimates of conditional drought state probabilities to analytical findings that assumed a first-order Markov structure. Again, the differences between the two forms of estimates were minute, indicating that first-order Markov chains are likely a good approximation for month-to-month transitions of droughts.

However, this method has one large shortcoming. Similar to a stationarity assumption on an autocorrelation function, it is only effective if the underlying stochastic process is a fixed first-order Markov process. If not, the results are effectively meaningless, especially at multi-month prediction intervals, as the transition matrices will not be fixed, but will instead vary over time.

ARMA, ARIMA, and DARMA Models

Throughout the late 20th century, the most common practice for drought forecasting was modelling via various autoregressive models. Autoregressive moving average (ARMA), autoregressive integrated moving average (ARIMA), and discrete autoregressive moving average (DARMA) models have all been extensively applied to the problem of forecasting hydrological data.

To begin with the simplest case, an ARMA(p,q) model uses previous values of a given time series, and previous residuals, to predict future values. We can conceptualize an ARMA model as follows:

$$\theta(L)\,x_t = \Theta(L)\,\varepsilon_t$$

Where $L$ is a lag operator, $\theta(L)$ is a lag polynomial of order $p$, $\Theta(L)$ is a lag polynomial of order $q$, $x_t$ is our time series of interest, and $\varepsilon_t$ is some independently and identically distributed error term, generally approximated by estimation residuals after an AR(p) estimation. The best way to understand an ARMA model is, “we are making a prediction for where we are going to be based on where we have been, and how wrong we were.”
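The "where we have been, and how wrong we were" intuition can be sketched for the ARMA(1,1) case, written out as $x_t = \phi x_{t-1} + \varepsilon_t + \psi \varepsilon_{t-1}$. The coefficient names `phi` and `psi`, the zero pre-sample residual, and the toy series are assumptions for illustration; in practice the coefficients would be estimated rather than supplied.

```python
def arma11_one_step(series, phi, psi):
    """One-step-ahead ARMA(1,1) forecast with known coefficients.

    Residuals are rebuilt recursively, assuming the pre-sample residual is zero.
    """
    eps_prev = 0.0
    x_prev = series[0]
    for x in series[1:]:
        predicted = phi * x_prev + psi * eps_prev  # where we have been + how wrong we were
        eps_prev = x - predicted                   # how wrong this prediction was
        x_prev = x
    return phi * x_prev + psi * eps_prev


# Toy series and coefficients, for illustration only.
next_value = arma11_one_step([1.0, 0.5, 0.7], phi=0.5, psi=0.4)
```

The autoregressive term carries the last observed value forward, while the moving-average term corrects for the most recent forecast error.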

ARIMA models simply replace the $x_t$ with $\nabla^d x_t$, the time series of interest differenced $d$ times, and can be expressed as:

$$\theta(L)\,\nabla^d x_t = \Theta(L)\,\varepsilon_t$$

This is done to maintain stationarity of the data. Often a random variable will follow an integrated process of order $d$. That is to say, the variable itself may not have a constant unconditional mean and variance, but its $d$-th difference does. Thus, taking the $d$-th difference allows one to estimate the time series via traditional least squares methods and ultimately recover unbiased estimates of the process.

DARMA models take the original ARMA structure and apply it to Bernoulli variables, i.e. binary random variables. To understand a DARMA model, it is best to start with a DAR(1) model. Chung & Salas (2000) offer the following step-by-step explanation of a DARMA model using a DAR(1) as a starting point. A DAR(1) model has the following form:

$$X_t = V_t X_{t-1} + (1 - V_t)\,Y_t$$

Where $V_t$ is a Bernoulli random variable with $P(V_t = 1) = \delta$ and $P(V_t = 0) = 1 - \delta$. $Y_t$ is another Bernoulli random variable with $P(Y_t = 1) = \pi_1$ and $P(Y_t = 0) = \pi_0 = 1 - \pi_1$. Thus the probability of transition from state $i$ to state $j$, $P(i,j)$, can be given as:

$$P(i,j) = \begin{cases} \delta + (1-\delta)\,\pi_j, & \text{if } i = j \\ (1-\delta)\,\pi_j, & \text{if } i \neq j \end{cases}$$
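As a quick numerical check of the transition formula, the sketch below builds the DAR(1) transition matrix for hypothetical parameter values and confirms two properties that follow from the formula: each row sums to one, and the marginal distribution of $Y_t$ is left unchanged by a transition. The function name and the values of `delta` and `pi` are assumptions for illustration.

```python
def dar1_transition_matrix(delta, pi):
    """P(i, j) = delta * [i == j] + (1 - delta) * pi[j]."""
    k = len(pi)
    return [[(delta if i == j else 0.0) + (1.0 - delta) * pi[j]
             for j in range(k)]
            for i in range(k)]


# Hypothetical parameters: pi = [pi_0, pi_1], indexed by state.
delta, pi = 0.6, [0.7, 0.3]
P = dar1_transition_matrix(delta, pi)

# Distribution after one transition when starting from pi itself.
after_one_step = [sum(pi[i] * P[i][j] for i in range(2)) for j in range(2)]
```

A larger `delta` puts more weight on staying in the current state, which is how the model produces persistent wet or dry spells.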

Clearly, a DAR(1) model can be characterized as a first-order Markov process, as the probability of state $j$ being achieved at time $t$ depends only on the state, $i$, achieved at time $t-1$. A DARMA(1,1) model simply takes a time series described by a DAR(1) process and plugs it into another DAR(1) model. Again, taken from Chung & Salas (2000), it has the explicit form:

$$X_t = U_t Y_t + (1 - U_t)\,Z_{t-1}$$

Where $U_t$ and $Y_t$ are again Bernoulli random variables with probabilities of success $P_U$ and $P_Y$ respectively, and $Z_{t-1}$ is the lagged value of the underlying DAR(1) series. This nesting of probabilities complicates the stochastic process underpinning the time series of interest, $X_t$. However, taking the ordered pair $[X_t, Z_{t-1}]$ as a single variable gives rise to another first-order Markov process across 4 states ([0,0]; [1,0]; [0,1]; [1,1]). This two-dimensional Markov process can be mapped to a 4-state univariate first-order Markov process, something that can be estimated relatively easily through traditional methods.

ARMA models have been used to predict streamflow through the Niger River in Salas (1980). They find that an ARMA(1,1) describes the data best of any autoregressive model. Sum-of-squared-residuals surfaces are calculated on parameter balls of radius $r$ around initial parameter estimates to find estimators that best minimize squared residuals. However, only residuals and squared residuals are reported in the paper. From visual assessment of the observed and simulated time series, the model fails to predict variation at the extremes, i.e. when streamflow is very high or very low compared to its unconditional mean.

Chung & Salas (2000) use a DARMA model to predict “runs” of droughts in the Niger River as well as the South Platte River in Colorado. They first categorize streamflow data as being either above ($X_t = 1$) or below ($X_t = 0$) the sample mean, with above indicating relatively wet periods and below indicating relatively dry periods. “Runs” are defined as consecutive periods spent in the same state. They find that using a DARMA(1,1) model in place of a DAR(1) model generates models with longer memory than simple Markov chains, and a better ability to predict the probability of extended runs of droughts.³ However, due to the limitations of the data sets used (only 73 years of streamflow data were available), probabilities of extreme runs of more than 7 years are impossible to estimate.

ARIMA models are often used as benchmarks for the predictive capability of more complex machine-learning-based models. In Mishra et al. (2007) and Mishra & Desai (2006), pseudo-out-of-sample optimized ARIMA models provide explanatory power on SPI data in the range of $R^2 = 0.4$ to $0.6$, depending on the aggregation level of the SPI data used.

The major shortcoming of these models, and consequently why I do not discuss them at greater breadth and depth, is their inability to handle non-stationary data as well as non-linear data. As mentioned above, linear regressions, Markov chains, and various autoregressive models all impose a linear structure on the data generating process that often does not capture variation of data at the extremes. That is why in recent years complex machine learning methods such as ANNs and SVMs have been adopted to better model hydrological data.

³ The complexities of the ARMA, DAR and DARMA models can take up entire 15-page academic papers on their own. The results are discussed briefly, as more importance is put on ML methods, specifically ANNs.

III. Section 3: Machine Learning Methods and their Immense Success

In general, two types of machine learning

processes are utilized to predict various

hydrological data: Support Vector Machines

(SVMs) and Artificial Neural Networks

(ANNs). I will give a relatively rigorous

explanation of ANNs, using mathematical

definitions as well as heuristic explanations to

describe their modeling process. SVMs are a

very new part of machine learning, having only

been invented in the mid 1990s (Brunton,

2014). Consequently, their application to

hydrological data is promising, but actual

applications are limited. I will discuss them

briefly. I will also discuss how various machine

learning (ML) methods have been bettered

through various data augmentation methods.

However, before diving into how different methods of machine learning work, and their application to hydrological forecasting, it is important to understand a core concept pertinent to all machine learning (ML) methods and algorithms. ML methods and algorithms rely explicitly on data mining; that is, using the sampled data’s realized structure to generate a model, rather than fitting a preconceived model to a dataset. However, to avoid creating models

that “memorize” the entire data set, and lack

strong external validity, data sets are split up

randomly into subsets. Generally, an algorithm

will be trained on the “training” subset and then

assessed on the “test” subset. Assessment is generally a function of the distance between estimates and realized values. The important piece

is that models do not do any learning on their

test set; they simply apply their knowledge

from the training set onto it.

Broadly, there are also two different ways of

learning: supervised and unsupervised.

Supervised learning makes explicit what the

desired input and output sets are. In a


supervised environment, the algorithm has an

explicit target: it seeks to minimize its

predictive error. In an unsupervised

environment, output and input are not

explicitly described. Unsupervised methods are

more suited towards understanding data

structure, whereas supervised methods aim to

form a description of a data generating process.

The scope of ML algorithms and methods

encapsulated in this paper is limited to

supervised methods.

Artificial Neural Networks

ANNs are loosely inspired by the way biological brains learn. ANNs are composed of two pieces:

neurons and links. Neurons can be thought of

as functions, while links can be thought of as

weights that determine the inputs to said

functions. The following explanation leans

heavily on the lecture series provided by

Sanderson (2017).

Given an input vector (in this case, a vector of time series data from $t$ to $t-p$), the first layer of neurons represents the self-normalized elements of the input vector. Normalizing the data to itself ensures that the value of each input neuron is somewhere between 0 and 1.

A common form of normalization is given in Mishra & Desai (2006), and is carried out as follows:

$$\tilde{X}_t = \frac{X_t - X_{min}}{X_{max} - X_{min}}$$

Where $X_t$ is the realized value of the time series, $X_{min}$ is the minimum value of the time series, $X_{max}$ is the maximum value of the time series, and $\tilde{X}_t$ is the normalized value of the time series.
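The normalization above amounts to a one-line rescaling. A minimal sketch follows, using a hypothetical list of SPI values and a hypothetical function name; the guard for a constant series is my addition, since the formula divides by zero in that case.

```python
def min_max_normalize(series):
    """Rescale a series to [0, 1] using its own minimum and maximum."""
    lo, hi = min(series), max(series)
    if hi == lo:
        raise ValueError("series is constant; min-max scaling is undefined")
    return [(x - lo) / (hi - lo) for x in series]


# Hypothetical SPI values, for illustration only.
scaled = min_max_normalize([-1.5, 0.0, 0.5, 2.5])
```

When data are split into training and test sets, it is generally safer to take the minimum and maximum from the training set alone, so that no information from the test set leaks into the inputs.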

With each input neuron bounded in this manner, we can think of the value the neuron takes as the level of its activation, with 1 being a fully activated neuron, and 0 being a neuron that isn’t firing at all. The activation level of each neuron is then mapped to a subsequent layer of neurons, called the hidden layer. Each neuron in the hidden layer will take as input a weighted sum of the input layer’s activations, plus a constant bias term that differs for each hidden layer neuron. Each hidden layer neuron applies an activation function, specifically the sigmoid function described here, to bound its value between 0 and 1:

$$S(x) = \frac{1}{1 + e^{-x}}$$

In this case, $x$ is the weighted sum of the previous (input) layer’s neuron activations plus the bias. The weights and biases that determine the sums are called linkages, or links. These are the integral parameters of a neural network, and I will return to their estimation soon.

Once the hidden layer’s activations are determined, the process is repeated, and weighted sums are calculated utilizing the current layer’s activations as the new to-be-transformed inputs of the next layer. Finally, once the output layer is reached, the weighted sums are not transformed and are given as a raw estimated output.⁴ Neural networks of this type are referred to as feed-forward, as they successively aggregate activations of previous layers until an output estimation is generated.

⁴ This is because output values may not be bounded in any way.
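A forward pass through a small network with three inputs, two hidden neurons, and one output can be sketched as follows. All weights, biases, and input values are hypothetical numbers chosen so the result is easy to verify by hand; the function names are my own.

```python
import math


def sigmoid(x):
    """S(x) = 1 / (1 + e^(-x)), bounding the activation between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    """Feed-forward pass: sigmoid hidden layer, untransformed (linear) output."""
    hidden = [sigmoid(sum(w * a for w, a in zip(ws, inputs)) + b)
              for ws, b in zip(hidden_w, hidden_b)]
    output = sum(w * h for w, h in zip(out_w, hidden)) + out_b
    return hidden, output


# Hypothetical normalized inputs and parameters, for illustration only.
inputs = [0.2, 0.5, 0.9]
hidden_w = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]  # one weight row per hidden neuron
hidden_b = [0.0, -1.6]                         # one bias per hidden neuron
hidden, y_hat = forward(inputs, hidden_w, hidden_b, out_w=[2.0, 4.0], out_b=1.0)
```

Both hidden neurons receive a weighted sum of (approximately) zero here, so each activates at 0.5 and the raw output is 2(0.5) + 4(0.5) + 1 = 4.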

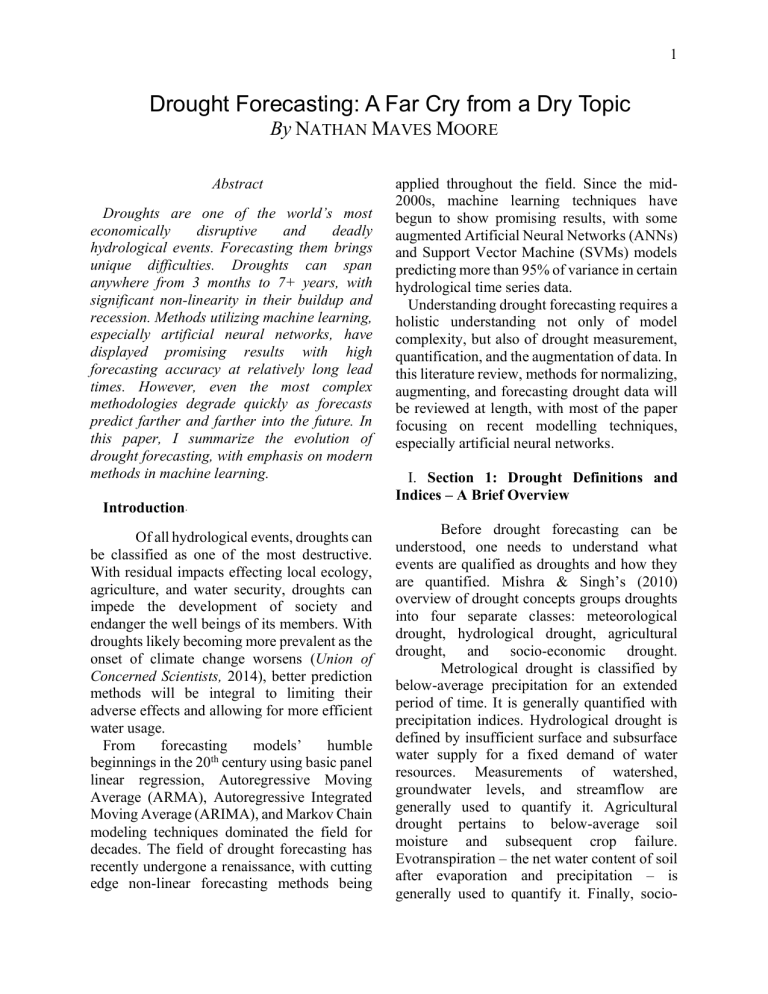

The following diagram lends some intuition

to the underlying structure of an ANN. The

circles represent neurons, while the lines

represent the weights (links) between them.

Each line links a neuron from the preceding

layer to each neuron in the current layer. We

also note that each neuron has its own unique

bias term.

8

FIGURE 1. A SIMPLE 3-2-1 F EED F ORWARD NEURAL NETWORK

Input

Output

neurons the weight is originating from5. This

indexing method of weights best lends itself to

thinking of the weights not as numbers but

linkages between neurons. From the new found

expression of average squared error as a

function of weights, the first order condition for

minimization is given:

0=

𝜕𝐸̃

, ∀𝑖, 𝑗, 𝑛

𝜕𝑤𝑖,𝑗 𝑛

Hidden Layer

Thus, in order to minimize the error,

numerical root finding methods can be applied

Once the ANN has outputted an estimate,

these estimated output are then compared to the

realized values via the following squared error

function:

𝑒(𝑦̂) = (𝑦 − 𝑦̂)2

Where 𝑦̂ is the estimated value and 𝑦 is the

realized value. Each 𝑒(𝑦̂) is averaged across

the sample and the resulting sample average of

error, 𝐸(𝑦̂) = ∑𝑖 𝑒𝑖 (𝑦̂) becomes the measure

of the model’s accuracy.

To return to calibrating the weights, once the

structure of the ANN has been decided, that is

how many hidden layers, and neurons on said

hidden layers there will be, one can then

reimagine the average squared error function

as a function of the weights and biases. In other

words, we aim to understand how adjusting

individual weights and biases changes the

estimate and in turn changes the error. Thus,

the aim of a neural network is to minimize:

min𝑤𝑖,𝑗𝑛 {𝐸(𝑦̂(𝑤𝑖,𝑗 𝑛 )} = min𝑤𝑖,𝑗 {𝐸̃ (𝑤𝑖,𝑗 𝑛 )}

Where 𝑖 indicates the neuron the weight is

coming from, 𝑗 indicates the neuron the weight

is connecting to, and 𝑛 indicates which layer of

5 Note that my usage of the term weight includes the constant bias

𝑛

term. The bias term is simply 𝑤0,0 for neuron n.

to the array of

𝜕𝐸̃

𝜕𝑤𝑖,𝑗 𝑛

to estimate the 𝑤𝑖,𝑗 𝑛 that

solve the optimization problem. Although the

partial derivatives of the average squared error

are easily thought of in tensor form, with index

(𝑖, 𝑗, 𝑛), it is better to organize the partial

derivatives of the error into a gradient vector,

as the neurons per layer may not be the same

throughout the entire model, and as a vector

value function is much easier to work with in

the optimization process.

The most common root finding methods are

gradient descent methods. These methods

follow the form of Newton-Raphson method,

where the root of a vector value function – in

this case the average squared error gradient –

𝑓(𝑥): ℝ𝑚 ⟶ ℝ𝑚 , is estimated by the

recursive algorithm:

𝑥 𝑘+1 = 𝑥 𝑘 − 𝑑𝑓(𝑥 𝑘 ) −1𝑓(𝑥 𝑘 )6

Where 𝑑𝑓(𝑥 𝑘 ) is the Jacobian of the vector

value function, 𝑓(𝑥 𝑘 ), and 𝑘 indicates the

iteration index. Note two things: 𝑥 𝑘 is the 𝑚

dimensional vector of estimated optimal

weights at the 𝑘 𝑡ℎ iteration and that 𝑓(𝑥) is the

gradient of the average squared error, not the

average squared error itself. Gradient descent

6 For more on optimization methods, as well as their application to

finance and economics see Miranda and Paul’s (2002) Applied

Computational Economics and Finance.

9

methods avoid calculating the second

derivative

of

the

average

squared

error, 𝑑𝑓(𝑥 𝑘 ), as that is computationally

costly, and simply assume it to be the identity

matrix. Some more complex methods for

training networks use linear approximations of

𝑑𝑓(𝑥 𝑘 ) to increase the speed of descent, or

randomize over the testing set such that the

average gradient is not calculated from every

test point, but only a sample.

The trick to effective neural networks is the ability to generate the gradient of the average squared error in a computationally efficient manner. Backpropagation calculates the gradient at each estimated output point in the training sample and averages the results, which is an analogous concept to the derivative of the average squared error function. Essentially, backpropagation recognizes the nested non-linear regressions occurring and uses the chain rule to its advantage, meaning that identical derivatives are not constantly recalculated.
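The sketch below puts these pieces together for a network with one sigmoid hidden layer and a linear output: the gradient of the average squared error is built with the chain rule, and the weights are then updated by plain gradient descent. The data set, starting weights, learning rate, and all function names are hypothetical, and this is an illustration of the general technique rather than the training procedure of any cited study.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def predict(params, x):
    """Return hidden activations and the linear output for one input vector."""
    W, b, v, c = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj)
         for row, bj in zip(W, b)]
    return h, sum(vj * hj for vj, hj in zip(v, h)) + c


def mse(params, data):
    return sum((y - predict(params, x)[1]) ** 2 for x, y in data) / len(data)


def gradients(params, data):
    """Backpropagation: gradient of the average squared error via the chain rule."""
    W, b, v, c = params
    gW = [[0.0] * len(row) for row in W]
    gb, gv, gc = [0.0] * len(b), [0.0] * len(v), 0.0
    for x, y in data:
        h, y_hat = predict(params, x)
        d_out = -2.0 * (y - y_hat) / len(data)      # dE/dy_hat for this sample
        gc += d_out
        for j, hj in enumerate(h):
            gv[j] += d_out * hj
            d_hid = d_out * v[j] * hj * (1.0 - hj)  # computed once, reused below
            gb[j] += d_hid
            for i, xi in enumerate(x):
                gW[j][i] += d_hid * xi
    return gW, gb, gv, gc


def descend(params, data, rate, steps):
    """Plain gradient descent: step against the gradient, scaled by `rate`."""
    W, b, v, c = params
    for _ in range(steps):
        gW, gb, gv, gc = gradients((W, b, v, c), data)
        W = [[w - rate * g for w, g in zip(row, grow)] for row, grow in zip(W, gW)]
        b = [bj - rate * g for bj, g in zip(b, gb)]
        v = [vj - rate * g for vj, g in zip(v, gv)]
        c = c - rate * gc
    return W, b, v, c


# Hypothetical training data and starting weights, for illustration only.
data = [([0.0, 0.1], 0.2), ([0.5, 0.4], 0.6), ([1.0, 0.9], 0.8)]
start = ([[0.1, -0.2], [0.3, 0.4]], [0.0, 0.1], [0.5, -0.5], 0.0)
trained = descend(start, data, rate=0.1, steps=200)
```

The quantity `d_hid` is the shared factor in the derivatives for a hidden neuron's bias and all of its incoming weights, which is the saving the chain rule provides.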

Now that the ANN’s structure and its underlying learning process are better understood, hydrological forecasting using ANNs can be discussed. Among the first to apply ANNs to drought forecasting were Mishra & Desai (2006). They applied two different types of feed-forward neural networks to predict SPI data in West Bengal. Their data spans from 1965 to 2001. They implement two different estimating structures: recursive multistep and direct multistep neural networks.

A recursive multistep NN takes a vector of

inputs – the current, and lagged values of the

SPI data. Then, it feeds them through a single

hidden-layer feed forward neural network,

giving a single output that is one time step

ahead of the most recent input. From there, the

estimated value is added to a new input vector,

and the oldest input element is omitted. This

new input vector, with a single 1-time-step-ahead estimate included in its elements, is then

fed through the neural network, to recover an

estimate of the SPI data two time steps ahead.

This is repeated until the desired time horizon

is reached.
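The recursive procedure can be sketched as a short loop. The function `toy_model` below is a hypothetical stand-in for a trained one-step-ahead network (it simply averages its inputs), and the SPI history is made up; only the feeding of estimates back into the input window is the point of the example.

```python
def recursive_forecast(model, history, n_lags, horizon):
    """Roll a one-step-ahead model forward by feeding its estimates back in."""
    window = list(history[-n_lags:])
    forecasts = []
    for _ in range(horizon):
        y_hat = model(window)             # one-step-ahead estimate
        forecasts.append(y_hat)
        window = window[1:] + [y_hat]     # drop oldest input, append the estimate
    return forecasts


def toy_model(window):
    """Hypothetical stand-in for a trained network: mean of the lagged inputs."""
    return sum(window) / len(window)


# Made-up SPI history, for illustration only.
out = recursive_forecast(toy_model, [0.0, 1.0, 2.0], n_lags=2, horizon=3)
```

Because later inputs are themselves estimates, errors can compound as the horizon grows, which is one reason to consider a network that outputs all horizons at once.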

The direct multi-step NN takes a vector of

inputs – again the current and lagged values of

the SPI data – and proceeds to feed it through a

single hidden layer NN that directly outputs a

vector of predictions for a certain number of

time steps ahead. The following diagrams are

taken from Mishra & Desai (2006), and express

the differing structures of the two networks,

where 𝑆𝑡 is the time series of SPI data, and 𝑡 is

the index of time (months):

FIGURE 2. A DIRECT MULTI-STEP ANN (MISHRA & DESAI, 2006)

FIGURE 3. A RECURSIVE MULTI-STEP ANN (MISHRA & DESAI, 2006)

These neural networks’ structures, along

with the benchmark linear ARIMA models,

were optimized for different aggregation

periods of SPI data using pseudo-out of sample

methods. In all cases, models with various time

lags and node structures were tested on training

10

data. The ones that performed best with regard

to mean absolute error (MAE) and root mean

squared error (RMSE) were chosen, and then

tested. Testing results between all the models

were compared over RMSE and MAE, and R

values were calculated for each model. They

find that the ANNs significantly outperform the

traditional stochastic ARIMA methods. The

direct

multistep

models

consistently

outperformed recursive multistep models at

longer time step predictions, however,

recursive multistep models were better for

immediate time step predictions, such as 1 to 2

time steps ahead. ARIMA models gave good

predictions for 1 to 2 time steps ahead, but

results’ accuracy decayed quickly afterward.

Over a 1 month lead time, differences in R

values when forecasting SPI 3, 6, 9, and 12

between ARIMA and ANNs were very small:

generally a difference of 0.01 to 0.03, with this

difference being smaller over longer

aggregations of SPI. However, at longer lead

times, the recursive and direct multistep ANNs

significantly outperformed the ARIMA models, with the direct multistep model consistently having R values 0.2 greater than the ARIMA models.
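For reference, the three comparison metrics can be computed as follows. This is a small sketch with made-up numbers, and it assumes that the "R value" is the Pearson correlation between observed and forecast values, which the text does not state explicitly.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(err ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(err)))

def pearson_r(y_true, y_pred):
    """Pearson correlation (assumed here to be the reported R value)."""
    return float(np.corrcoef(y_true, y_pred)[0, 1])

observed = np.array([0.0, 1.0, 2.0, 3.0])   # illustrative values only
forecast = np.array([0.0, 1.0, 2.0, 5.0])

print(rmse(observed, forecast), mae(observed, forecast), pearson_r(observed, forecast))
```

RMSE penalizes the single large miss more heavily than MAE does, which is why the two are usually reported together.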

ANNs are far from perfect, though. They still suffer from issues similar to those of traditional ARIMA models. Taver et al. (2015)

and Butler & Kazakov (2011) discuss at length

the issues non-stationarity and non-linear

trends can have on traditional feed forward

ANNs. Butler & Kazakov (2011) investigate

financial time series data, and find that

predictive accuracy of feed forward ANNs

declines as non-stationary time series persist.

This is due to ANNs still imposing a temporally

constant functional form on the autocorrelation

function of the time series. If data is non-stationary, it could be the case that said

function is not constant. Taver et al. (2015)

investigate different forms of ANNs for

hydrological forecasting, specifically recurrent

networks, with the aim of reducing non-stationarity's adverse effects on predictions. They do so by adapting the autocorrelation's functional form as more data is inputted. However, their results are beyond the scope of this paper.
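A simple way to see what a non-constant autocorrelation function looks like is to estimate the lag-1 autocorrelation on different parts of a series. The sketch below uses a synthetic series whose dependence on its own past changes halfway through. The series, the coefficients 0.9 and -0.5, and the helper `lag1_autocorr` are all illustrative assumptions and are not taken from Taver et al. (2015) or Butler & Kazakov (2011).

```python
import numpy as np

def lag1_autocorr(segment):
    """Sample autocorrelation at lag 1 for one stretch of the series."""
    x = np.asarray(segment, dtype=float) - np.mean(segment)
    return float(x[:-1] @ x[1:] / (x @ x))

rng = np.random.default_rng(42)
n = 400
# The autoregressive coefficient switches from 0.9 to -0.5 at the midpoint.
phi = np.where(np.arange(n) < n // 2, 0.9, -0.5)
series = np.zeros(n)
for k in range(1, n):
    series[k] = phi[k] * series[k - 1] + rng.normal()

first_half = lag1_autocorr(series[: n // 2])
second_half = lag1_autocorr(series[n // 2 :])
print(first_half, second_half)
```

A model that learned a single fixed relationship on the first half would carry the wrong dependence structure into the second half.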

Feed forward ANNs also have a tendency to

overfit to data. That is to say, they are very

competent when it comes to understanding

underlying data generating processes within

the training set, but generalizing those

processes to the test set is not always accurate.

One way to remedy this is separating data into

separate trends before having an ANN analyze

it. Thus, the ANN can analyze different trends

separately and gain a more comprehensive

understanding of the underlying data

generating process. Discrete wavelet analysis

allows for data to be broken down into its

separate underlying trends. However, before discussing it, I will briefly cover support vector machines (SVMs).

Support Vector Machines – A Brief

Heuristic Explanation

Before getting into data augmentation

methods, it is also important to briefly discuss

another machine learning technique. The

direction of hydrological forecasting seems to

be continuing towards feedforward neural

networks. However, some research has gone

into support vector machines (SVMs), and their potential application to hydrological forecasting. SVMs attempt to draw decision lines (or their higher dimensional equivalents, called hyperplanes) between data so as to group data into subsets that have similar outcome variable realizations.

Often, data cannot be easily separated within

the dimensional space of its input vector. Thus,

kernel functions are used to project the data into a higher dimensional space. Once data is

projected into a higher dimensional space,

linear hyperplanes can be constructed to draw

partitions, and consequently predictions can be

made.
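The idea of lifting data into a higher dimensional space can be shown with a toy example. The points below lie on a line and cannot be split by a single threshold, but they become linearly separable once a squared term is added as a second coordinate. The explicit `feature_map` and the hand-picked boundary are assumptions for illustration only; an actual SVM uses a kernel to work in the lifted space implicitly and chooses the boundary by maximizing the margin.

```python
import numpy as np

# One-dimensional inputs; the class is +1 when |x| > 1 and -1 otherwise,
# so no single cut point on the line separates the two classes.
x = np.array([-3.0, -2.0, -0.5, 0.0, 0.5, 2.0, 3.0])
labels = np.where(np.abs(x) > 1.0, 1, -1)

def feature_map(values):
    """Explicit lift from 1-D to 2-D: x -> (x, x^2). Illustrative only."""
    return np.column_stack([values, values ** 2])

phi = feature_map(x)

# In the lifted space the line x^2 = 1 is a linear decision boundary.
# These weights are picked by hand, not learned.
w = np.array([0.0, 1.0])
b = -1.0
predicted = np.sign(phi @ w + b).astype(int)

print(predicted)
print(labels)
```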

The main benefit of SVMs is that they prioritize data that is close to the dividing hyperplane, and ignore data that is more easily predictable, farther away. They try to create a decision boundary that maximizes the distance of boundary points within certain outcome subsets from said decision hyperplane. This allows them to better understand the underlying conditions that most influence specific outcomes, and ignore outside noise (in the case of time series, potentially non-stationarity) with more ease than ANNs. This allows for greater generalizability on data outside of the training set. However, data pre-treatment methods, like discrete wavelet analysis, have allowed ANNs to overcome their issues with generalizability and overfitting. Currently, the

direction of the field seems to be more focused

on refining ANNs than utilizing completely

new models like SVMs. It is for that reason that

I restrict the explanation of SVMs to a brief

heuristic.

Data Augmentation – Discrete Wavelet

Analysis

Wavelet analysis and transformation can be

thought of as somewhat analogous to a Fourier

transform. Similar to a Fourier transform,

wavelet analysis decomposes a time series into

a weighted aggregation of wavelets, exposing

the frequency and periodicity of certain trends.

It essentially breaks a time series down into its

long-lasting trends and its detail trends. One can think of the long-lasting trends as the easy to predict, and often linear, time trends that are more or less stationary. These could be multi-year patterns in the jet stream that affect precipitation, like the transition from a “La Niña” year to an “El Niño” year.

Detail trends are the sporadic shocks that enter time series data in a non-linear or non-stationary way. An example of these could be

less easily predictable changes in precipitation

patterns brought on by climate change.

7 This is the wavelet that is transformed continuously over frequency and time-lag to generate the basis functions of the wavelet function space.

Moreover, wavelet analysis has the added

benefit of not being time invariant. Wavelet

decomposition changes when different points

in the time series are used as the initial point of

the wavelet. Effectively this maintains the time

structure of the series, and allows for

forecasting methods to be applied to the series

of individual wavelets.

The following explanation of wavelet decomposition leans heavily on the lecture given by Andrew Nicoll (2020): a continuous

wavelet transform can be thought of as a

projection of a function – in this case a time

series – onto the infinite dimensional function

space of wavelets. There is some mother

wavelet, 𝜓, with two parameters: frequency

and time-lag. The different parameter values

produce different wavelets, that in turn

constitute the basis vectors of this function

space. The wavelets are similar to a sine or

cosine wave in a Fourier transform in this way.

Thus, for different frequency levels, 𝑠, and lag lengths, 𝜏, that wavelets persist over, a continuous wavelet transform expresses the amount a time series is explained by a particular wavelet. The method for determining the magnitude of a time series explained by a particular wavelet is given by the inner product:

$$F(\tau, s) = \int_{-\infty}^{\infty} f(t)\, \psi^{*}\!\left(\frac{t - \tau}{s}\right) dt$$

Where 𝜓∗ is the complex conjugate of the “mother” wavelet⁷ and 𝑓(𝑡) is the time series being transformed. Discrete wavelet transforms differ from continuous wavelet transforms as they take a small, finite set of wavelet bases and project the function in question onto them. Generally, this subset is of wavelets with high frequencies⁸ so as to capture

the “details” of the time series. The detail

wavelet projections are then subtracted from

the original time series, and the residual series

can be thought of as the long-lasting, low

frequency series. Also, as time series data is not

continuous, the integral is transformed into a

finite sum. For a set of 𝑝 time series points, the

discrete wavelet transform will have the form:

$$\tilde{F}(a, b) = \sum_{i=1}^{p} f(t_i)\, \psi\!\left(\frac{t_i - a}{b}\right)$$

Where $a, b$ are generally chosen dyadically, that is $a = \alpha 2^{-j}$ and $b = 2^{-j}$ with $\alpha \in \mathbb{R}$ and $j$ ranging over some subset of the natural numbers, $j = 1, \dots, n$.
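The finite sum can be evaluated directly. The sketch below makes several assumptions that are not in the text: it uses the real-valued Mexican hat (Ricker) wavelet as the mother wavelet, a synthetic 12-month cycle as the series, and three arbitrary scale values rather than a dyadic grid. It is meant only to show that the coefficient is largest when the wavelet's width roughly matches the cycle in the data.

```python
import numpy as np

def ricker(u):
    """Mexican hat (Ricker) wavelet, unnormalized. It is real-valued,
    so it equals its own complex conjugate."""
    return (1.0 - u ** 2) * np.exp(-0.5 * u ** 2)

def wavelet_coefficient(f, t, a, b, psi=ricker):
    """Finite-sum transform: sum_i f(t_i) * psi((t_i - a) / b)."""
    return float(np.sum(f * psi((t - a) / b)))

t = np.arange(240, dtype=float)     # 240 monthly time steps
f = np.cos(2 * np.pi * t / 12)      # synthetic 12-month cycle (assumption)

# Shift a = 120 centres the wavelet on a peak of the cycle;
# the scales b are illustrative choices.
coeffs = {b: wavelet_coefficient(f, t, a=120.0, b=b) for b in (0.5, 3.3, 20.0)}
for b, c in coeffs.items():
    print(b, c)
```

A very narrow wavelet sees almost nothing but a single data point, and a very wide one averages many cycles away, so both return small coefficients compared with the intermediate scale.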

The genius of transforming data via a wavelet decomposition is that it makes the dataset an ANN learns over more concise. Noise from multiple underlying frequencies with varying trend lengths is separated, and the ANN can learn to predict each individual one.
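The separation into detail series and a long-lasting series can be sketched with the simplest wavelet family. The code below assumes a Haar-style decomposition, in which the approximation at level j is the mean over non-overlapping blocks of 2^j points, and each detail series is the difference between successive approximations. The function name `haar_components` and the synthetic series are hypothetical, and the studies cited in this paper may use other wavelet families.

```python
import numpy as np

def haar_components(series, levels):
    """Split a series into `levels` detail series plus one smooth series.

    The details and the smooth series add back up to the original.
    """
    series = np.asarray(series, dtype=float)
    if len(series) % (2 ** levels) != 0:
        raise ValueError("series length must be divisible by 2**levels")
    details = []
    previous = series
    for j in range(1, levels + 1):
        block = 2 ** j
        # Approximation at level j: block means, repeated to full length.
        approx = np.repeat(series.reshape(-1, block).mean(axis=1), block)
        details.append(previous - approx)   # what was lost by smoothing
        previous = approx
    return details, previous

rng = np.random.default_rng(1)
t = np.arange(64)
# Synthetic series: slow trend + 8-step cycle + noise (assumption).
series = 0.02 * t + np.sin(2 * np.pi * t / 8) + 0.1 * rng.normal(size=64)

details, trend = haar_components(series, levels=3)
reconstructed = sum(details) + trend
print(np.max(np.abs(reconstructed - series)))
```

Because the components sum exactly to the original series, each one can be forecast by its own model and the forecasts added back together.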

In recent years, data augmentation with

wavelet decomposition has allowed ANNs to

gain even more predictive capability as it separates a non-stationary time series into separate, easily digestible wavelets describing particular trends. In practical use this has had tremendous success. Belayneh et al. (2016) utilize SPI time series data from the Awash River Basin in Ethiopia. They pre-process the data with a discrete wavelet transform and train an ANN as well as an SVM on said data.

Using 3 and 6 month aggregation levels of SPI

data, they made predictions at 1 and 3 month

lead times. Not only did wavelet transforms significantly decrease both RMSE and MAE and increase $R^2$, but augmented ANNs outperformed SVMs on the testing data over the same metrics over almost all climatic zones. Generally speaking, wavelet augmented ANNs outperformed wavelet augmented SVMs at 1-month lead times in $R^2$ by 0.01 to 0.04. Augmented ANNs posted $R^2 \approx 0.80$ for 1-month lead times across all climatic zones, while augmented SVMs were closer to $R^2 \approx 0.76$. SVMs were only more effective at predicting SPI data in relatively wet climatic areas at 3-month lead times, while middle and low precipitation areas were dominated by augmented ANNs. This came as somewhat of a surprise to researchers, as SVMs generally do not have the same overfitting problem as ANNs, as mentioned earlier.

8 It is good to think of these high frequency wavelets as sporadic bursts of non-stationarity, and consequently non-linearity, in the data.

Also, wavelet augmented ANNs have been

trained on daily discharge data from the

Yangtze River basin. Although not a direct

metric of drought, daily river discharge is

related to hydrological drought as it pertains to

total watershed in a given geographic area.

Wang and Ding (2003) apply a wavelet

augmented ANN to this data, and explore its

effect on forecasting at different lead times.

Although results are not significantly improved

at short lead times, longer lead times – 4 to 5

days – undergo a significant improvement after

the data is filtered via a wavelet transformation.

Moreover, the wavelet transformation used

breaks the time series into 2 detail wavelets and

1 long term wavelet, a relatively crude

decomposition, with all other wavelet

decompositions cited using at least 3 detail

wavelets. Forecasts with <10% relative error make up 69.1% of 5-day lead forecasts for the

augmented ANN, whereas traditional ARMA

and ANN methods do not achieve <10% error

on more than 40% of forecasts.

Wavelet augmented SVMs and ANNs are

compared by Zhou et al. (2017) while

forecasting ground water depths in Mengcheng

County, China from 1974 to 2010. Despite the

previous findings of Belayneh et al. (2016) that

augmented ANNs outperform augmented

SVMs on SPI data, Zhou et al. (2017) find the

opposite, with SVMs slightly outperforming

ANNs. Using 3 levels of dyadic detail wavelet

filters (𝑗 = 1,2,3), augmented models were

able to predict ground water depth with an 𝑅

value of 0.97 on testing data, compared to 0.72 and 0.78 for the regular ANN and SVM respectively, a remarkable result.

Wavelet decomposition has become the hot topic in hydrological forecasting, and the most cutting edge models have gone a bit deeper. The most recent trend is to combine multiple models, generally an ANN and an ARIMA model, to predict different parts of the variation of time series data.

Hybrid Models - The Cutting Edge

Although relatively new in hydrological

forecasting, hybrid models have shown

promising results and have gained some

traction. Mishra et al. (2007) build on their previous work in 2006, using recursive and direct multistep ANNs to create a two-step hybrid model. Due to ARIMA models' linear

structure, they are effective at any linear time

series prediction, and computationally efficient

to estimate. As hydrological data’s

autocorrelation function is likely composed of

linear and non-linear relations, applying only a

non-linear or only a linear form could be naïve.

Consequently, Mishra et al. (2007) first

estimate a seasonal ARIMA model following

the same framework used in Mishra & Desai

(2006) to build their benchmark model. They

then obtain residuals from the seasonal

ARIMA estimates, and utilize both direct

multistep and recursive multistep models to

forecast said residual values. The ARIMA

model’s parameters are optimized using

the Akaike Information Criterion, while the ANNs' node structures are optimized using trial and error in order to minimize RMSE and maximize explained variation (R value). Again, these models are constructed over SPI data from West Bengal, identical to that used in Mishra & Desai (2006).

9 An unexciting aspect is their excessively long name.

Ultimately, the hybrid seasonal ARIMA-ANN models outperform the standalone seasonal ARIMA and standalone ANN models over SPI 3, SPI 6, SPI 12, and SPI 24, and lead times ranging from 1 month to 6 months. Similar to the findings in Mishra & Desai (2006), the recursive multistep hybrid model outperformed the direct multistep hybrid model over short lead times (less than 2 months), while the direct hybrid model was able to make more accurate predictions over longer lead times. Over the various SPI aggregations, at a 6-month lead time the direct hybrid model was able to predict with $R^2 = 0.65$ to $0.72$, as compared to the seasonal ARIMA model, which never exceeded $R^2 = 0.30$, and the seasonal ARIMA-recursive multistep ANN hybrid, which never exceeded $R^2 = 0.60$.
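The two-step logic of the hybrid (fit a linear model, then model what it leaves behind) can be sketched as follows. The stand-ins here are deliberate simplifications and are assumptions throughout: an AR(2) model fitted by least squares replaces the seasonal ARIMA stage, a regression on polynomial terms of lagged residuals replaces the ANN stage, and the series is synthetic. The helper names are hypothetical, and the comparison is in-sample only.

```python
import numpy as np

def lag_matrix(series, n_lags):
    """Rows of n_lags consecutive values, and the value following each row."""
    X = np.array([series[i:i + n_lags] for i in range(len(series) - n_lags)])
    return X, series[n_lags:]

def fit_least_squares(features, target):
    """Fit a linear map with intercept; return a prediction function."""
    A = np.hstack([features, np.ones((len(features), 1))])
    coef, *_ = np.linalg.lstsq(A, target, rcond=None)
    return lambda F: np.hstack([F, np.ones((len(F), 1))]) @ coef

# Synthetic series with a linear and a non-linear dependence on its past.
rng = np.random.default_rng(0)
n = 300
series = np.zeros(n)
for k in range(2, n):
    series[k] = (0.6 * series[k - 1] + 0.5 * np.sin(2.0 * series[k - 2])
                 + 0.1 * rng.normal())

# Step 1: linear stage (stand-in for the seasonal ARIMA model).
p = 2
X, y = lag_matrix(series, p)
linear_pred = fit_least_squares(X, y)(X)
residuals = y - linear_pred

# Step 2: non-linear stage on lagged residuals (stand-in for the ANN).
q = 2
R, r_next = lag_matrix(residuals, q)
nonlinear_features = np.hstack([R, R ** 2, R ** 3])
residual_pred = fit_least_squares(nonlinear_features, r_next)(nonlinear_features)

# Hybrid forecast = linear forecast + forecast of its residual.
hybrid_pred = linear_pred[q:] + residual_pred
rmse_linear = float(np.sqrt(np.mean((y[q:] - linear_pred[q:]) ** 2)))
rmse_hybrid = float(np.sqrt(np.mean((y[q:] - hybrid_pred) ** 2)))
print(rmse_linear, rmse_hybrid)
```

In-sample, the second stage can only lower the error, since it is fitted to the leftovers of the first; whether that carries over to held-out data is what the testing comparisons in the cited study address.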

Another, more recent hybrid model is

discussed in Khan et al. (2020). Their model

uses an amalgamation of all previous methods

discussed. Analyzing SPI and raw rainfall data

in millimeters from the Langat River Basin in

Malaysia, they first use a discrete wavelet

transformation to break the data into 8 dyadic

levels of detail frequencies (𝑗 = 1, … ,8) and

one long term frequency. Then an ANN was

trained to forecast the 8 detail wavelet series,

while an ARIMA model, as outlined by Mishra & Desai (2006), was utilized to forecast the long term trends. The final hybrid model was able to predict over a 1-month lead time relatively accurately, with $R^2 = 0.872$. Traditional ANN and ARIMA models did not exceed $R^2 = 0.4$.

The exciting part of discrete wavelet transform augmented hybrid-ANNs⁹ is their applicability not only to hydrological forecasting, specifically droughts, but also to other time series. It seems the wavelet transforms allow models to focus on specific trends and not get “confused” dissecting a time series down into multiple trends.

Conclusion - What More Can Be Done?

In the past 50 years, drought forecasting

has developed from rudimentary linear

regressions, utilizing basic climatic and

geological variables, to applying complex

methodology that aptly and efficiently

characterizes data’s variation. With cutting edge models showing promising results, it raises the question of what else can be done to further hone forecasts.

Without any doubt, machine learning is the

future of forecasting. However, determining

strategies for addressing various shortcomings

in ML will be the next step in better forecasting

hydrological data. It seems that data

augmentation methods such as discrete wavelet

transformations allow ANNs to overcome their tendency to overfit in the presence of non-constant autocorrelation functions. The promising preliminary results of SVMs, and their ability to more effectively generalize from training data to testing data, need to be investigated more.

Moreover, despite being briefly mentioned

in the context of financial forecasting, recurrent

neural networks also show promise in adjusting

the functional form of a neural network as it

changes through time. This allows ANNs to

better adapt to non-stationary or non-constant

autocorrelation within a time series of

hydrological data.

With these more robust methods come issues of computational efficiency. It seems

that to better drought forecasts, work will have

to be split between finding better methods, and

determining optimal ways of implementing

them. Even though there is room for

improvement, it is still remarkable that

forecasting has come so far in such a short

period of time. To say the least, it is an exciting

time to be analyzing time series, as new

methods seem to appear by the day. Hopefully,

more innovative breakthroughs are just around

the corner.

References

1. Belayneh, A., Adamowski, J., & Khalil, B. (2016). Short-term SPI drought forecasting in the Awash River Basin in Ethiopia using wavelet transforms and machine learning methods. Sustainable Water Resources Management, 2(1), 87–101.

2. Benson, M. A., & Thomas, D. M. (1970). Generalization of streamflow characteristics from drainage-basin characteristics.

3. Brunton, Steve. (2014, January 10). 16. Learning: Support Vector Machines. MIT OpenCourseWare. [video]. Retrieved from: https://www.youtube.com/watch?v=_PwhiWxHK8o

4. Butler, M., & Kazakov, D. (n.d.). The Effects of Variable Stationarity in a Financial Time-Series on Artificial Neural Networks.

5. Chung, C., & Salas, J. D. (2000). Drought Occurrence Probabilities and Risks of Dependent Hydrologic Processes. Journal of Hydrologic Engineering, 5(3), 259–268.

6. Drought and Climate Change | Union of Concerned Scientists. (2014). Retrieved March 4, 2021.

7. Farahmand, A., & AghaKouchak, A. (2015). A generalized framework for deriving nonparametric standardized drought indicators. Advances in Water Resources, 76, 140–145.

8. Karl, T. R. (1986). The Sensitivity of the Palmer Drought Severity Index and Palmer's Z Index to Their Calibration Coefficients Including Potential Evapotranspiration. Journal of Climate and Applied Meteorology, 25, 77–86.

9. Khan, Md. M. H., Muhammad, N. S., & El-Shafie, A. (2020). Wavelet based hybrid ANN-ARIMA models for meteorological drought forecasting. Journal of Hydrology, 590, 125380.

10. Lohani, V. K., & Loganathan, G. V. (1997). An Early Warning System for Drought Management Using the Palmer Drought Index. JAWRA Journal of the American Water Resources Association, 33(6), 1375–1386.

11. Miranda, Mario J., & Fackler, Paul L. (2002). Applied Computational Economics and Finance. MIT Press, Cambridge, MA, USA.

12. Mishra, A. K., Desai, V. R., & Singh, V. P. (2007). Drought Forecasting Using a Hybrid Stochastic and Neural Network Model. Journal of Hydrologic Engineering, 12(6), 626–638.

13. Mishra, A. K., & Desai, V. R. (2006). Drought forecasting using feed-forward recursive neural network. Ecological Modelling, 198(1), 127–138.

14. Mishra, A. K., & Singh, V. P. (2010). A review of drought concepts. Journal of Hydrology, 391(1), 202–216.

15. Nicoll, Andrew. (2020, July 29). The Wavelet Transform for Beginners. [video]. Retrieved from: https://www.youtube.com/watch?v=kuuUaqAjeoA&t=606s

16. Paulson, E. G., Sadeghipour, J., & Dracup, J. A. (1985). Regional frequency analysis of multiyear droughts using watershed and climatic information. Journal of Hydrology, 77(1), 57–76.

17. Salas, J. D. (1980). Applied Modeling of Hydrologic Time Series. Water Resources Publication.

18. Sanderson, Grant. (2017). Deep Learning: Neural Networks. [video]. Retrieved from: https://www.youtube.com/playlist?list=PLZHQObOWTQDNU6R1_67000Dx_ZCJB3pi

19. Taver, V., Johannet, A., Borrell-Estupina, V., & Pistre, S. (2015). Feed-forward vs recurrent neural network models for non-stationarity modelling using data assimilation and adaptivity. Hydrological Sciences Journal, 60(7–8), 1242–1265.

20. Wang, W., & Ding, J. (2003). Wavelet Network Model. Nature and Science, 1(1), 67–71.

21. Zhou, T., Wang, F., & Yang, Z. (2017). Comparative Analysis of ANN and SVM Models Combined with Wavelet Preprocess for Groundwater Depth Prediction. Water, 9(10), 781.