What is population dynamics, importance of population dynamics and applications?

The term "population dynamics" refers to how the number of individuals in a population changes

over time.

Population dynamics deals with the short-term and long-term changes in the size and age

composition of populations, and the biological and environmental processes influencing those

changes.

Population dynamics is the branch of life sciences that studies the size and age composition

of populations as dynamical systems, and the biological and environmental processes driving

them (such as birth and death rates, and immigration and emigration).

Example scenarios include ageing populations and population growth.

Carrying capacity

Carrying capacity refers to the number of individuals who can be supported in a given area

within natural resource limits, and without degrading the natural, social, cultural and economic

environment for present and future generations. The carrying capacity for any given area is not

fixed. It can be altered by improved technology, but mostly it is changed for the worse by

pressures which accompany a population increase. As the environment is degraded, carrying

capacity actually shrinks, leaving the environment no longer able to support even the number of

people who could formerly have lived in the area on a sustainable basis. No population can live

beyond the environment's carrying capacity for very long.

Carrying Capacity

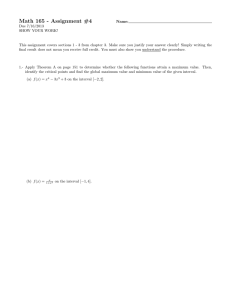

The graph above shows the population (N) of a certain species over time (t). At the carrying capacity (K), the population stops growing because the available resources are fully used.
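A curve of this shape can be sketched with the logistic model dN/dt = rN(1 - N/K). The notes do not name the model behind the graph, so the logistic form, the growth rate `r`, and the starting value `n0` are assumptions made only for illustration.

```python
import math


def logistic(t, n0, r, k):
    """Closed-form solution N(t) of dN/dt = r*N*(1 - N/K) with N(0) = n0.

    n0, r and k are hypothetical values chosen by the caller; the notes
    only state that growth stops at the carrying capacity K.
    """
    return k / (1.0 + (k - n0) / n0 * math.exp(-r * t))


if __name__ == "__main__":
    # Population climbs from n0 and levels off near K.
    for t in (0, 5, 10, 20, 40):
        print(t, round(logistic(t, n0=10.0, r=0.5, k=1000.0), 1))
```

With these assumed values the population starts at 10 and flattens out just below K = 1000.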

Modelling Population

• Mathematical models are required to understand and predict population behavior.

• Mathematical models are widely used in ecology. Ecosystems tend to be very complex and

governed by many intricate and usually non-linear mechanistic interactions.

• This course will be structured upon a foundation from mathematical models, expanding to the

biological evidence to support and/or reject various factors regulating populations.

• Introduction to the formulation, analysis and application of mathematical models that describe

the dynamics of biological populations.

What is a coupled system?

A coupled system is formed of two differential equations with two dependent variables and an

independent variable.

An example –

where a, b, c and d are given constants, and both y and x are functions of t.
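As a minimal sketch, assume the example is the linear coupled system dx/dt = ax + by, dy/dt = cx + dy. This form is an assumption that merely fits the four constants named above. The hypothetical helper below integrates such a system with the classical fourth-order Runge-Kutta scheme.

```python
import math


def simulate_linear_system(a, b, c, d, x0, y0, t_end, steps=10_000):
    """Integrate dx/dt = a*x + b*y, dy/dt = c*x + d*y from t = 0 to t_end (RK4).

    The linear right-hand side is an assumed example of a coupled system.
    Returns the state (x, y) at t_end.
    """
    def rhs(x, y):
        return a * x + b * y, c * x + d * y

    h = t_end / steps
    x, y = x0, y0
    for _ in range(steps):
        k1x, k1y = rhs(x, y)
        k2x, k2y = rhs(x + 0.5 * h * k1x, y + 0.5 * h * k1y)
        k3x, k3y = rhs(x + 0.5 * h * k2x, y + 0.5 * h * k2y)
        k4x, k4y = rhs(x + h * k3x, y + h * k3y)
        x += h * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        y += h * (k1y + 2 * k2y + 2 * k3y + k4y) / 6
    return x, y


if __name__ == "__main__":
    # With a = d = 0, b = 1, c = -1 the exact solution is x = cos t, y = -sin t.
    print(simulate_linear_system(0, 1, -1, 0, 1.0, 0.0, math.pi))
```

Neither equation can be solved on its own because each right-hand side involves both x and y, which is what "coupled" means here.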

Nonlinear Systems

Nonlinear state models

We will deal with the stability analysis of nonlinear systems, with emphasis on Lyapunov’s

method.

Consider dynamical systems that are modeled by a finite number of coupled first-order ordinary differential equations

$$\dot{x} = f(t, x, u) \tag{1}$$

We call (1) the state equation and refer to x as the state and u as the input.

Consider another equation

$$y = h(t, x, u) \tag{2}$$

Equation (2) is called the output equation.

State-space model

Equations (1) and (2) are called together the state space model or simply state model.

Unforced State equation

If the input in the state equation (1) is specified as a function of time and state, $u = \gamma(t, x)$, then substituting it into (1) eliminates $u$ and gives

$$\dot{x} = f(t, x) \tag{3}$$

Equation (3) is called the unforced state equation.

Implicit and explicit functions

In mathematics, an explicit function is a function in which the dependent variable is given "explicitly" in terms of the independent variable. In other words, the dependent variable is expressed directly in terms of one or more independent variables.

It is denoted by:

y=f(x)

Examples of Explicit functions are:

$y = ax^n + bx$, where $a$, $n$ and $b$ are constants.

$y = 5x^3 - 3$

The Implicit function is a function in which the dependent variable has not been given

“explicitly” in terms of the independent variable. Or it is a function in which the dependent

variable is not expressed in terms of some independent variables.

It is denoted by:

R(x,y) = 0

Some examples of Implicit Functions are:

$x^2 + y^2 - 1 = 0$

$C^\infty$ function:

A $C^\infty$ function is a function that is differentiable for all degrees of differentiation. For instance, $f(x) = e^{2x}$ is $C^\infty$ because its $n$th derivative $f^{(n)}(x) = 2^n e^{2x}$ exists and is continuous. All polynomials are $C^\infty$.

$C^\infty$ functions are also called "smooth" because neither they nor their derivatives have "corners," which would make their graph look somewhat rough. For example, $f(x) = |x^3|$ is not smooth.

Differentiable function:

In calculus (a branch of mathematics), a differentiable function of one real variable is a function

whose derivative exists at each point in its domain. As a result, the graph of a differentiable

function must have a (non-vertical) tangent line at each interior point in its domain, be relatively

smooth, and cannot contain any breaks or bends.

Figure: A differentiable function

Continuous function:

In mathematics, a continuous function is a function that does not have any abrupt changes in value,

known as discontinuities. A continuous function is a function that is continuous at every point in

its domain. That is, $f: A \to B$ is continuous if $\forall a \in A$, $\lim_{x \to a} f(x) = f(a)$.

Figure: Graphical representation of a continuous function

Differentiability and Continuity

If f is differentiable at a point x0, then f must also be continuous at x0. In particular, any

differentiable function must be continuous at every point in its domain. The converse does not

hold: a continuous function need not be differentiable.

The absolute value function is continuous (i.e. it has no gaps). It is differentiable everywhere except

at the point x = 0, where it makes a sharp turn as it crosses the y-axis.
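The sharp turn at x = 0 can be checked numerically by comparing one-sided difference quotients. This is a small sketch; the helper name `one_sided_slopes` and the step size `h` are illustrative choices, not part of the notes.

```python
def one_sided_slopes(f, x0, h=1e-6):
    """Return (left, right) difference quotients of f at x0.

    If the two values disagree as h shrinks, f is not differentiable at x0.
    """
    left = (f(x0) - f(x0 - h)) / h
    right = (f(x0 + h) - f(x0)) / h
    return left, right


if __name__ == "__main__":
    print(one_sided_slopes(abs, 0.0))                # slopes disagree at the corner
    print(one_sided_slopes(lambda x: x ** 3, 0.0))   # slopes agree (both near 0)
```

For the absolute value the left slope is -1 and the right slope is +1, so no single tangent line exists at 0 even though the function is continuous there.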

Smooth function:

A smooth function is a function that has continuous derivatives up to some desired order over some domain. Consider $g(x) = x^3$; this function is smooth.

Figure: graph of $g(x)$.

Figure: graph of $\dfrac{dg}{dx} = 3x^2$.

Figure: graph of $\dfrac{d^2 g}{dx^2} = 6x$.

Non-Smooth function:

$h(x) = |x|$ is not smooth, because it has a corner at $x = 0$.

Figure: the derivative of $h(x)$. The derivative is not even continuous: it equals $-1$ for $x < 0$ and $+1$ for $x > 0$, and it is undefined at $x = 0$.

Piece-wise continuous:

A function is called piecewise continuous on an interval if the interval can be broken into a finite number of subintervals such that the function is continuous on each open subinterval (i.e. the subinterval without its endpoints) and has a finite limit at the endpoints of each subinterval.

Figure: Piece-wise continuous

Lipschitz continuous: Let $f: \mathbb{R}^n \to \mathbb{R}$ be a function and $x \in \mathbb{R}^n$ a given point. Then $f$ is said to be Lipschitz near $x$ if there exist a scalar $K > 0$ and a number $\varepsilon > 0$ such that

$$|f(x_1) - f(x_2)| \le K \|x_1 - x_2\|, \quad \forall x_1, x_2 \in B(x, \varepsilon),$$

where $B(x, \varepsilon)$ is the open ball of radius $\varepsilon$ about $x$ and $K$ is called the Lipschitz constant.
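The inequality can be explored numerically in the scalar case (n = 1, an assumption made to keep the sketch short). The hypothetical helper below samples pairs of points inside the ball and returns the largest observed ratio, which is a lower bound on any valid Lipschitz constant K.

```python
from itertools import combinations


def lipschitz_lower_bound(f, center, eps, samples=200):
    """Largest sampled ratio |f(p) - f(q)| / |p - q| inside (center-eps, center+eps).

    Sampling can only under-estimate the true Lipschitz constant, so the
    result is a lower bound, not a proof that f is Lipschitz.
    """
    # Midpoint sampling keeps every point strictly inside the open ball.
    pts = [center - eps + 2 * eps * (i + 0.5) / samples for i in range(samples)]
    return max(abs(f(p) - f(q)) / abs(p - q) for p, q in combinations(pts, 2))


if __name__ == "__main__":
    # For f(x) = x^2 near x = 1 with eps = 0.5 the true constant is 3.
    print(lipschitz_lower_bound(lambda x: x * x, center=1.0, eps=0.5))
```

For f(x) = x² the ratio equals |p + q|, so the estimate approaches 3 as the sample points approach the edge of the ball.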

Autonomous system

In mathematics, an autonomous system or autonomous differential equation is

a system of ordinary differential equations which does not explicitly depend on the independent

variable. When the variable is time, they are also called time-invariant systems.

An autonomous system is a system of ordinary differential equations of the form

$$\dot{x} = f(x).$$

If the system is not autonomous, then it is called nonautonomous or time varying.

What do you mean by equilibrium point?

A point $x = x^*$ in the state space is said to be an equilibrium point of $\dot{x} = f(x, t)$ if $f(x^*, t) = 0$ for all $t$. It has the property that whenever the state of the system starts at $x^*$, it will remain at $x^*$ for all future time.

Mathematically,

$$x(t_0) = x^* \;\Rightarrow\; x(t) = x^*, \quad \forall t \ge t_0.$$

Example:

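Let the model be $f(x) = 3x(1 - x)$. Its equilibrium points are the fixed points of $f$, so put $f(x) = x$; then

$$3x(1 - x) = x \;\Rightarrow\; 3x - 3x^2 = x \;\Rightarrow\; 3x^2 - 2x = 0 \;\Rightarrow\; x(3x - 2) = 0,$$

$$\therefore\; x = 0 \quad \text{or} \quad x = \tfrac{2}{3} \approx 0.6667.$$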
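The two solutions can be verified exactly by substituting them back into f(x) = 3x(1 - x). The sketch below uses rational arithmetic so that no rounding is involved; the variable names are illustrative.

```python
from fractions import Fraction


def f(x):
    """The model from the example: f(x) = 3x(1 - x)."""
    return 3 * x * (1 - x)


# Candidate equilibrium points obtained from x(3x - 2) = 0.
candidates = [Fraction(0), Fraction(2, 3)]

if __name__ == "__main__":
    for x in candidates:
        # A fixed point satisfies f(x) == x exactly.
        print(x, f(x), f(x) == x)
```

A value that is not a root of x(3x - 2) = 0, such as x = 1/2, fails the check because f(1/2) = 3/4.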

Stable:

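An equilibrium point $x = 0$ of the state space is said to be stable if nearby solutions stay nearby for all future time.

Mathematically, the equilibrium point $x = 0$ of $\dot{x} = f(x)$ is stable if for each $\varepsilon > 0$ there is $\delta = \delta(\varepsilon) > 0$ such that

$$\|x(0)\| < \delta \;\Rightarrow\; \|x(t)\| < \varepsilon, \quad \forall t \ge 0.$$

The equilibrium point $x = 0$ is unstable if it is not stable.

Asymptotically stable:

The equilibrium point $x = 0$ is asymptotically stable if it is stable and $\delta$ can be chosen such that

$$\|x(0)\| < \delta \;\Rightarrow\; \lim_{t \to \infty} x(t) = 0.$$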

Theorem:

Suppose that $f$ is a $C^1$ function and $x = x^*$ is an equilibrium point of $\dot{x} = f(x)$, that is, $f(x^*) = 0$. Suppose also that $f'(x^*) \ne 0$.

Then the equilibrium point $x^*$ is asymptotically stable if $f'(x^*) < 0$, and unstable if $f'(x^*) > 0$.
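The sign test in the theorem can be sketched in a few lines. The helper below approximates the derivative at an equilibrium by a central difference; the function name, the tolerance `tol`, and the sample right-hand side x(1 - x) are assumptions chosen for illustration.

```python
def classify_equilibrium(f, x_star, h=1e-6, tol=1e-8):
    """Classify an equilibrium x_star of dx/dt = f(x) by the sign of f'(x_star).

    The derivative is approximated numerically, so slopes smaller than
    tol are reported as inconclusive (the theorem needs f'(x*) != 0).
    """
    slope = (f(x_star + h) - f(x_star - h)) / (2 * h)
    if abs(slope) < tol:
        return "inconclusive"
    return "asymptotically stable" if slope < 0 else "unstable"


if __name__ == "__main__":
    growth = lambda x: x * (1 - x)   # assumed example with equilibria at 0 and 1
    print(classify_equilibrium(growth, 0.0))
    print(classify_equilibrium(growth, 1.0))
```

For this example the derivative is 1 - 2x, which is positive at 0 and negative at 1, so 0 is unstable and 1 is asymptotically stable.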

Proof:

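Throughout the proof, $f$ denotes a Lyapunov function for the equilibrium at the origin: $f$ is continuous and locally positive definite (lpd) on the ball $\|x\| \le r$, and its derivative along trajectories satisfies $\dot{f}(x) \le 0$ there.

First, we prove stability in the sense of Lyapunov. Suppose $\varepsilon > 0$ is given. We need to find a $\delta > 0$ such that for all $\|x(0)\| < \delta$, it follows that $\|x(t)\| < \varepsilon$, $\forall t \ge 0$. Let $\varepsilon_1 = \min(\varepsilon, r)$. Define

$$m = \min_{\|x\| = \varepsilon_1} f(x).$$

Since $f(x)$ is continuous, the above $m$ is well defined and positive. Choose $\delta$ satisfying $0 < \delta < \varepsilon_1$ such that for all $\|x\| < \delta$, $f(x) < m$. Such a choice is always possible, again because of the continuity of $f(x)$. Now consider any $x(0)$ such that $\|x(0)\| < \delta$, so that $f(x(0)) < m$, and let $x(t)$ be the resulting trajectory. Because $\dot{f} \le 0$, $f(x(t))$ is non-increasing, so $f(x(t)) < m$ for all $t \ge 0$. We will show that this implies that $\|x(t)\| < \varepsilon_1$. Suppose there exists $t_1$ such that $\|x(t_1)\| > \varepsilon_1$; then by continuity we must have that at an earlier time $t_2$, $\|x(t_2)\| = \varepsilon_1$ and

$$\min_{\|x\| = \varepsilon_1} f(x) = m > f(x(t_2)),$$

which is a contradiction. Thus stability in the sense of Lyapunov holds.

To prove asymptotic stability when $\dot{f}$ is locally negative definite (lnd), we need to show that $f(x(t)) \to 0$ as $t \to \infty$; then, because $f$ is continuous and lpd, $\|x(t)\| \to 0$. Since $f(x(t))$ is strictly decreasing and bounded below by zero, $f(x(t)) \to c$, with $c \ge 0$. We want to show that $c$ is in fact zero. We can argue by contradiction and suppose that $c > 0$. Let the set $S$ be defined as

$$S = \{x \in \mathbb{R}^n \mid f(x) \le c\}$$

and let $B_\alpha$ be a ball inside $S$ of radius $\alpha$,

$$B_\alpha = \{x \in S \mid \|x\| < \alpha\}.$$

Suppose $x(t)$ is a trajectory of the system that starts at $x(0)$. We know that $f(x(t))$ is decreasing monotonically to $c$ and $f(x(t)) > c$ for all $t$. Therefore $x(t) \notin B_\alpha$; recall that $B_\alpha \subset S$, which is defined as all the elements in $\mathbb{R}^n$ for which $f(x) \le c$. In the first part of the proof, we have established that if $\|x(0)\| < \delta$ then $\|x(t)\| < \varepsilon$. We can define the largest derivative of $f(x)$ as

$$-\gamma = \max_{\alpha \le \|x\| \le \varepsilon} \dot{f}(x).$$

Clearly $-\gamma < 0$ since $\dot{f}(x)$ is lnd. Observe that

$$f(x(t)) = f(x(0)) + \int_0^t \dot{f}(x(\tau))\,d\tau \le f(x(0)) - \gamma t,$$

which implies that $f(x(t))$ will be negative for sufficiently large $t$. This contradicts $f \ge 0$ and establishes that $c$ must be zero.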

Example 1: Consider the dynamical system governed by the differential equation

$$\dot{x} = -g(x),$$

where $g$ is continuous with $g(0) = 0$ and $x\,g(x) > 0$ for all sufficiently small $x \ne 0$. Clearly the origin is an equilibrium point. If we define the function

$$f(x) = \int_0^x g(y)\,dy,$$

then $f(x)$ is locally positive definite (lpd) and

$$\dot{f}(x) = g(x)\,\dot{x} = -g(x)^2,$$

which is locally negative definite (lnd). This implies that $x = 0$ is an asymptotically stable equilibrium point.
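Example 1 can be illustrated numerically for one concrete choice of g. The sketch below assumes g(x) = sin(x), which is not specified in the notes; for that choice the function f is 1 - cos(x). It integrates the system with forward Euler and records how f changes along the trajectory.

```python
import math


def simulate_gradient_like(g, x0, dt=0.01, steps=1000):
    """Forward-Euler integration of dx/dt = -g(x); returns all visited states."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] - dt * g(xs[-1]))
    return xs


def lyapunov_f(x):
    # f(x) = integral from 0 to x of sin(y) dy = 1 - cos(x), for the assumed g = sin
    return 1.0 - math.cos(x)


if __name__ == "__main__":
    xs = simulate_gradient_like(math.sin, x0=1.0)
    print("final state:", xs[-1])
    print("f at start and end:", lyapunov_f(xs[0]), lyapunov_f(xs[-1]))
```

Along the simulated trajectory f never increases and the state approaches the origin, which is the behaviour the Lyapunov argument predicts near x = 0.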

Lyapunov Theorem for Global Asymptotic Stability:

The region in the state space for which our earlier results hold is determined by the region over

which f(x) serves as a Lyapunov function. It is of special interest to determine the “basin of

attraction" of an asymptotically stable equilibrium point, i.e. the set of initial conditions whose

subsequent trajectories end up at this equilibrium point. An equilibrium point is globally

asymptotically stable (or asymptotically stable “in the large") if its basin of attraction is the entire

state space. If a function f(x) is positive definite on the entire state space, and has the additional

property that $|f(x)| \to \infty$ as $\|x\| \to \infty$, and its derivative $\dot{f}$ is negative definite on the entire state

space, then the equilibrium point at the origin is globally asymptotically stable. We omit the proof

of this result. Other versions of such results can be stated, but are also omitted.

Example 2:

Consider the nth order system

$$\dot{x} = -C(x)$$

with the property that $C(0) = 0$ and $x^T C(x) > 0$ if $x \ne 0$. Convince yourself that the unique equilibrium point of the system is at 0. Now consider the candidate Lyapunov function

$$f(x) = x^T x,$$

which satisfies all the desired properties, including $|f(x)| \to \infty$ as $\|x\| \to \infty$. Evaluating its derivative along trajectories, we get

$$\dot{f}(x) = 2 x^T \dot{x} = -2 x^T C(x) < 0 \quad \text{for } x \ne 0.$$

Hence the system is globally asymptotically stable.
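A quick numerical illustration of Example 2, assuming the componentwise choice C(x) = x + x³. This choice is not from the notes; it is one function satisfying C(0) = 0 and xᵀC(x) > 0 for x ≠ 0. The sketch tracks the candidate Lyapunov function f(x) = xᵀx along a forward-Euler trajectory.

```python
def simulate_example2(x0, dt=0.001, steps=10_000):
    """Forward-Euler integration of dx/dt = -C(x) with the assumed C(x) = x + x^3.

    Returns the final state and the values of f(x) = x^T x at every step.
    """
    def c_of(x):
        return [xi + xi ** 3 for xi in x]

    x = list(x0)
    values = [sum(xi * xi for xi in x)]
    for _ in range(steps):
        x = [xi - dt * ci for xi, ci in zip(x, c_of(x))]
        values.append(sum(xi * xi for xi in x))
    return x, values


if __name__ == "__main__":
    final_state, values = simulate_example2([2.0, -3.0])
    print("final state:", final_state)
    print("f at start and end:", values[0], values[-1])
```

Starting far from the origin, f still decreases at every step and the state converges to 0, consistent with global asymptotic stability for this particular C.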

(1) Statement of the theorem:

We consider the initial value problem

$$y'(x) = F(x, y(x)), \qquad y(x_0) = y_0. \tag{1.1}$$

Here we assume that $F$ is a function of two variables $(x, y)$, defined in a rectangle

$$R = \{(x, y) : x_0 - a \le x \le x_0 + a,\; y_0 - b \le y \le y_0 + b\}, \tag{1.2}$$

and we assume that $F$ is continuous and has a continuous $y$-derivative $\dfrac{\partial F}{\partial y}$ in $R$. Note that then $F$ and $\dfrac{\partial F}{\partial y}$ are bounded in $R$; that is, there are non-negative constants $M$ and $K$ so that, for all $(x, y)$ in $R$,

$$|F(x, y)| \le M, \tag{1.3}$$

$$\left|\frac{\partial F}{\partial y}(x, y)\right| \le K. \tag{1.4}$$

Theorem 1. Suppose that $F$ satisfies the assumptions above. Let

$$I_h(x_0) = [x_0 - h,\; x_0 + h], \quad \text{where } h \le \min\left\{a, \frac{b}{M}\right\}. \tag{1.5}$$

Then there is a unique function $x \mapsto y(x)$, defined for $x$ in $I_h(x_0)$, with continuous first derivative, such that

$$y'(x) = F(x, y(x)) \quad \text{for all } x \in I_h(x_0)$$

and such that

$$y(x_0) = y_0.$$

The theorem allows us to make predictions on the length of the interval (that is h less than or equal

to the smaller of the numbers a and b/M). In most cases the lower bound is not very good, in the

sense that the interval on which the solution exists may be much larger than the interval predicted

by the Theorem.

We consider the problem

$$y'(x) = \frac{e^{y(x)^2 - 1}}{1 - x^2 y(x)^2}, \qquad y(-2) = 1, \tag{2.1}$$

which we likely cannot solve explicitly.

We want to find an interval on which a solution surely exists. Here our function $F$ is defined by $F(x, y) = e^{y^2 - 1}(1 - x^2 y^2)^{-1}$, and $x_0$, $y_0$ are given by $x_0 = -2$, $y_0 = 1$. Thus we need to pick a rectangle $R$ which is centered at $(-2, 1)$. In this rectangle we need to have good control on $F$ and $\partial F / \partial y$ (cf. (1.3), (1.4)), and so we certainly have to choose $R$ so small that it contains no points at which the denominator $1 - x^2 y^2$ vanishes. The exact choice of the rectangle is up to you, but the properties of $F$ and $\partial F / \partial y$, as required in the theorem, must be satisfied.

Let's pick $a$, $b$ small in the definition of $R$, say $a = 1/2$ and $b = 1/4$, so that we work in the rectangle

$$R = \{(x, y) : -5/2 \le x \le -3/2,\; 3/4 \le y \le 5/4\}.$$

Notice that then for $(x, y)$ in $R$ we have $x^2 \ge 9/4$, $y^2 \ge 9/16$, and therefore $x^2 y^2 \ge 81/64$, so $|1 - x^2 y^2| \ge 17/64 > 1/4$ for $(x, y)$ in $R$. Thus we get $|(1 - x^2 y^2)^{-1}| \le 4$ and $e^{y^2 - 1} \le e^{9/16} \le 3$, which implies

$$|F(x, y)| = \frac{e^{y^2 - 1}}{|1 - x^2 y^2|} \le 3 \cdot 4 = 12 \quad \text{for } (x, y) \text{ in } R.$$

Thus a legitimate (but non-optimal) choice for $M$ in (1.3) is $M = 12$.

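To verify also (1.4) we compute

$$\frac{\partial F}{\partial y}(x, y) = \frac{2y\,e^{y^2 - 1}}{1 - x^2 y^2} + \frac{e^{y^2 - 1}}{(1 - x^2 y^2)^2}\, 2yx^2.$$

Observe that $|2y| \le 5/2$ and $|2yx^2| \le (5/2)^3$ in $R$, and using the bounds above we can estimate, for all $(x, y)$ in $R$,

$$\left|\frac{\partial F}{\partial y}(x, y)\right| \le \left|\frac{2y\,e^{y^2 - 1}}{1 - x^2 y^2}\right| + \left|\frac{e^{y^2 - 1}}{(1 - x^2 y^2)^2}\right| |2yx^2| \le \frac{5}{2} \cdot 12 + 3 \cdot 4^2 \cdot (5/2)^3 = 780.$$

Thus we see that condition (1.4) is also satisfied, with $K = 780$.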

Now if we take

$$h \le \min\left\{a, \frac{b}{M}\right\} = \min\{1/2,\; (1/4)/12\} = 1/48,$$

then by the theorem we can be sure that the problem has exactly one solution in the interval $[-2 - h, -2 + h]$. So for example if we choose $h = 0.02$ (which is less than $1/48$), we deduce that there is a unique solution in the interval $[-2.02, -1.98]$.
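The bounds used in this example can be sanity-checked numerically. The sketch below evaluates F and its y-derivative on a grid over the rectangle with a = 1/2, b = 1/4 centred at (-2, 1) and compares the grid maxima with M = 12 and K = 780. The grid resolution and helper names are illustrative assumptions, and a grid check supports but does not prove the bounds.

```python
import math
from fractions import Fraction


def F(x, y):
    """Right-hand side of the example: e^(y^2 - 1) / (1 - x^2 y^2)."""
    return math.exp(y * y - 1) / (1 - x * x * y * y)


def dF_dy(x, y):
    """Partial derivative of F with respect to y."""
    e = math.exp(y * y - 1)
    d = 1 - x * x * y * y
    return 2 * y * e / d + e * 2 * y * x * x / (d * d)


def grid_max(func, x0=-2.0, y0=1.0, a=0.5, b=0.25, n=200):
    """Largest |func| over an (n+1) x (n+1) grid covering the rectangle R."""
    best = 0.0
    for i in range(n + 1):
        x = x0 - a + 2 * a * i / n
        for j in range(n + 1):
            y = y0 - b + 2 * b * j / n
            best = max(best, abs(func(x, y)))
    return best


# Interval half-width guaranteed by the theorem for a = 1/2, b = 1/4, M = 12.
h_max = min(Fraction(1, 2), Fraction(1, 4) / 12)

if __name__ == "__main__":
    print("max |F| on grid:", grid_max(F), "(bound M = 12)")
    print("max |dF/dy| on grid:", grid_max(dF_dy), "(bound K = 780)")
    print("h_max =", h_max)
```

The grid maxima come out far below 12 and 780, which illustrates why the text calls these choices legitimate but non-optimal.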

What do you mean by bifurcation?

Bifurcation theory is the mathematical study of changes in the qualitative or topological structure

of a given family, such as the integral curves of a family of vector fields, and the solutions of a

family of differential equations. Most commonly applied to the mathematical study of dynamical

systems, a bifurcation occurs when a small smooth change made to the parameter values (the

bifurcation parameters) of a system causes a sudden 'qualitative' or topological change in its

behaviour.[1] Bifurcations occur in both continuous systems (described by ODEs, DDEs or

PDEs), and discrete systems (described by maps). The name "bifurcation" was first introduced

by Henri Poincaré in 1885 in the first paper in mathematics showing such a behavior.[2] Henri

Poincaré also later named various types of stationary points and classified them.

Figure: Phase portrait of a saddle-node bifurcation
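A saddle-node bifurcation can be sketched with the commonly used normal form dx/dt = r + x². The notes do not give an equation for the figure, so this form and the helper name are assumptions for illustration. The number of equilibria changes as the parameter r crosses zero.

```python
import math


def saddle_node_equilibria(r):
    """Equilibria of the assumed normal form dx/dt = r + x^2, with stability.

    Stability follows from the sign of d/dx (r + x^2) = 2x at each equilibrium.
    """
    if r > 0:
        return []                                   # no equilibria
    if r == 0:
        return [(0.0, "semi-stable")]               # the two equilibria have merged
    root = math.sqrt(-r)
    return [(-root, "asymptotically stable"), (root, "unstable")]


if __name__ == "__main__":
    for r in (-1.0, -0.25, 0.0, 0.5):
        print(r, saddle_node_equilibria(r))
```

For r < 0 there is one stable and one unstable equilibrium; they collide at r = 0 and disappear for r > 0, which is the sudden qualitative change described above.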