ROBUST PHONEME CLASSIFICATION USING RADON TRANSFORM OF

AUDITORY NEUROGRAMS

Thesis · September 2019

DOI: 10.13140/RG.2.2.10851.30242

4 authors:

Md. Shariful Alam, University of Malaya

Wissam A. Jassim, Trinity College Dublin

Mohd Yazed Ahmad, University of Malaya

Muhammad S A Zilany, Texas A&M University at Qatar

All content following this page was uploaded by Md.Shariful Alam on 19 September 2019.

ROBUST PHONEME CLASSIFICATION USING

RADON TRANSFORM OF AUDITORY NEUROGRAMS

MD. SHARIFUL ALAM

DISSERTATION SUBMITTED IN FULFILMENT OF

THE REQUIREMENTS FOR THE DEGREE OF MASTER

OF ENGINEERING SCIENCE

DEPARTMENT OF BIOMEDICAL ENGINEERING

FACULTY OF ENGINEERING

UNIVERSITY OF MALAYA

KUALA LUMPUR

2016

UNIVERSITY OF MALAYA

ORIGINAL LITERARY WORK DECLARATION

Name of Candidate: Md. Shariful Alam

(I.C/Passport No: BH0110703)

Registration/Matric No: KGA140010

Name of Degree: Master of Engineering Science

Title of Dissertation:

ROBUST PHONEME CLASSIFICATION USING RADON TRANSFORM OF

AUDITORY NEUROGRAMS

Field of Study: Signal processing.

I do solemnly and sincerely declare that:

(1) I am the sole author/writer of this Work;

(2) This Work is original;

(3) Any use of any work in which copyright exists was done by way of fair

dealing and for permitted purposes and any excerpt or extract from, or

reference to or reproduction of any copyright work has been disclosed

expressly and sufficiently and the title of the Work and its authorship have

been acknowledged in this Work;

(4) I do not have any actual knowledge nor do I ought reasonably to know that

the making of this work constitutes an infringement of any copyright work;

(5) I hereby assign all and every rights in the copyright to this Work to the

University of Malaya (“UM”), who henceforth shall be owner of the

copyright in this Work and that any reproduction or use in any form or by any

means whatsoever is prohibited without the written consent of UM having

been first had and obtained;

(6) I am fully aware that if in the course of making this Work I have infringed

any copyright whether intentionally or otherwise, I may be subject to legal

action or any other action as may be determined by UM.

Candidate’s Signature

Date:

Subscribed and solemnly declared before,

Witness’s Signature

Date:

Name:

Designation:

ABSTRACT

The use of speech recognition technology has increased considerably in the last three

decades. In the real world, the performance of well-trained speech recognizers is usually

degraded by different types of noise and distortions such as background noise,

reverberation and telephone channels. In particular, speech signals are extremely difficult

to recognize due to the interference created by reverberation and the limited bandwidth of

transmission channels. The accuracy of traditional speech recognition systems in noisy

environments is much lower than the recognition accuracy of an average human being.

Robustness of speech recognition systems must be addressed for practical applications.

Although many successful techniques have been developed for dealing with clean signals

and noise, particularly uncorrelated noise with simple spectral characteristics (e.g.,

white noise), the problem of sound reverberation and channel distortions has remained

essentially unsolved. This problem hampers the wider use of acoustic interfaces for

many applications.

Unlike traditional methods in which features are extracted from the properties of the

acoustic signal, this study proposes a phoneme classification technique using neural

responses from a physiologically-based computational model of the auditory periphery.

The 2-D neurograms were constructed from the simulated responses of the auditory-nerve fibers to speech phonemes. The features of the neurograms were extracted using

the Radon transform and used to train the classification system using a support vector

machine classifier. Classification performances were evaluated for phonemes extracted

from the TIMIT and HTIMIT databases. Experiments were performed in mismatched

train/test conditions, where the test data consist of speech corrupted

by a variety of real-world additive noises at different signal-to-noise ratios (SNRs),

convolutive distortions introduced by different room impulse response functions, and

multiple telephone channel speech recordings with different frequency characteristics.

Performances of the proposed method were compared to those of Mel-Frequency

Cepstral Coefficients (MFCC), Gamma-tone Frequency Cepstral Coefficients (GFCC),

and Frequency Domain Linear Prediction (FDLP)-based phoneme classifiers. Based on

simulation results, the proposed method outperformed most of the traditional acoustic-property-based phoneme classification methods both in quiet and under noisy

conditions. This approach is accurate, easy to implement, and can be used without any

knowledge about the type of distortion in the signal, i.e., it can handle any type of noise.

Using hybrid support vector machine/hidden Markov model classifiers, the proposed

method could be extended to develop an automatic speech recognition system.

ABSTRAK

Over the past three decades, the use of speech recognition technology has increased sharply. In practical situations, the performance of speech recognition technology usually drops because of various types of noise such as background noise, reverberation and telephone distortion. In particular, speech signals with background noise or channel distortion are very difficult to recognize because of this interference. The accuracy of traditional speech recognition systems in noisy conditions is much lower than the recognition performance of an average human. Therefore, the robustness of speech recognition systems must be investigated for practical applications. Although various techniques have been successfully developed to handle signals in clean and noisy conditions (particularly noise with simple spectral characteristics), the challenges of reverberation and channel interference have not yet been solved. This problem is an obstacle to the use of acoustic interfaces in many applications.

Unlike traditional methods in which features are taken from the acoustic signal, this study proposes a phoneme classification method based on neural responses from a physiological computational model of the auditory periphery. 2-D neurograms were obtained from simulated auditory-nerve responses to speech phonemes. The neurogram features were obtained using the Radon transform and used to train a support vector machine classification system. The classification performance of the system was evaluated using different phonemes from the TIMIT and HTIMIT databases. Experiments were carried out under mismatched training/test conditions, where the test data were corrupted with different distortions at various signal-to-noise ratios (SNRs), convolutive distortions, and different telephone channels. The performance of the proposed method was compared with that of the Mel-Frequency Cepstral Coefficients (MFCC), Frequency Domain Linear Prediction (FDLP) and gammatone frequency cepstral coefficients (GFCC) classification methods. Based on the simulation results, the proposed method showed better performance than traditional methods based on acoustic features in both noisy and quiet conditions. This method is accurate, easy to use, and can be used without information about the type of signal distortion. By using hybrid classifiers (support vector machine, hidden Markov model), this method can be used to develop an automatic speech recognition system.

ACKNOWLEDGEMENTS

In the name of Allah the most Merciful and Beneficent

First and foremost, praise is to ALLAH, the Almighty, the greatest of all, on whom

ultimately we depend for sustenance and guidance. I would like to thank Almighty

Allah for giving me the opportunity, determination and strength to do my research. His

continuous grace and mercy have been with me throughout my life and even more during the

tenure of my research.

This research, conducted at the Auditory Neuroscience (AN) lab, would not have been

possible without the direct and indirect aid I received from so many others, including

teachers, family and friends. I would like to gratefully and sincerely thank my principal

supervisor Dr. Muhammad Shamsul Arefeen Zilany for his guidance, understanding,

patience, and most importantly, his friendship during my graduate studies. I also want to

thank my co-supervisor Dr. Mohd Yazed Bin AHMAD for his continuous support

throughout the entire project. I want to express my thanks for the financial support I

received from the HIR projects of UM.

My heartfelt gratitude also goes to Dr. Wissam A. Jassim, a research fellow in the

Department of Electrical Engineering, who dutifully and patiently taught me the hands-on

aspects of developing the software and shared ideas for solving the

problems that arose in completing the thesis. Thanks also to my research-mates for sharing

their thoughts to improve the research outcome.

I owe everything to my parents and siblings who encouraged and helped me at every

stage of my personal and academic life and longed to see this achievement come true. I

dedicate this work to my wife and son (Saad Abdullah).

TABLE OF CONTENTS

Abstract ............................................................................................................................ iv

Abstrak ............................................................................................................................. vi

Acknowledgements ........................................................................................................viii

List of Figures ................................................................................................................xiii

List of Tables.................................................................................................................. xvi

List of Symbols and Abbreviations ..............................................................................xviii

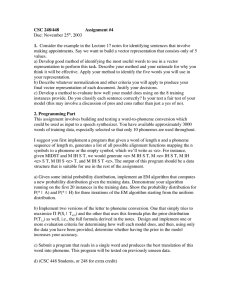

CHAPTER 1: INTRODUCTION .................................................................................. 1

1.1

Phoneme classification ............................................................................................ 2

1.2

Automatic speech recognition ................................................................................. 4

1.3

Problem Statement ................................................................................................... 5

1.4

Motivation................................................................................................................ 8

1.4.1

Comparisons between Humans and Machines ........................................... 8

1.4.2

Neural-response-based feature ................................................................... 9

1.5

Objectives of this study ......................................................................................... 10

1.6

Scope of the study.................................................................................................. 11

1.7

Organization of Thesis ........................................................................................... 13

CHAPTER 2: LITERATURE REVIEW .................................................................... 14

2.1

Introduction............................................................................................................ 14

2.2

Research background ............................................................................................. 14

2.3

Existing metrics ..................................................................................................... 16

2.3.1

Mel-frequency Cepstral Coefficient (MFCC) .......................................... 16

2.3.2

Gammatone Frequency Cepstral Coefficient (GFCC) ............................. 17

2.3.3

Frequency Domain Linear Prediction (FDLP) ......................................... 17

ix

2.4

2.5

Structure and Function of the Auditory System .................................................... 18

2.4.1

Outer Ear .................................................................................................. 19

2.4.2

Middle Ear (ME) ...................................................................................... 19

2.4.3

Inner Ear ................................................................................................... 20

2.4.4

Basilar Membrane Responses................................................................... 20

2.4.5

Auditory Nerve ......................................................................................... 21

Brief history of Auditory Nerve (AN) Modeling .................................................. 22

2.5.1

Description of the computational model of AN ....................................... 25

2.5.1.1 C1 Filter: ................................................................................... 26

2.5.1.2 Feed forward control path (including OHC): ............................ 26

2.5.1.3 C2 Filter: ................................................................................... 27

2.5.1.4 The Inner hair cell (IHC):.......................................................... 28

2.5.2

2.6

2.7

Envelope (ENV) and Temporal Fine Structure (TFS) neurogram ........... 31

Support Vector Machines ...................................................................................... 31

2.6.1

Linear Classifiers ...................................................................................... 32

2.6.2

Non-linear Classifiers ............................................................................... 33

2.6.3

Kernels ...................................................................................................... 33

2.6.4

Multi-class SVMs ..................................................................................... 34

Radon Transform ................................................................................................... 34

2.7.1

Theoretical foundation ............................................................................. 34

2.7.2

How Radon transform works ................................................................... 35

2.7.3

Current applications ................................................................................. 36

CHAPTER 3: METHODOLOGY ............................................................................... 37

3.1

System overview .................................................................................................... 37

3.2

Datasets .................................................................................................................. 37

3.2.1

TIMIT database ........................................................................................ 38

x

3.2.2

HTIMIT corpus ........................................................................................ 40

3.3

AN model and neurogram ..................................................................................... 41

3.4

Feature extraction using Radon transform ............................................................. 44

3.5

SVM classifier ....................................................................................................... 45

3.6

Environmental Distortions ..................................................................................... 47

3.6.1

3.7

Existing Strategies to Handle Environment Distortions ........................... 51

Generation of noise ................................................................................................ 52

3.7.1

Speech with additive noise ....................................................................... 52

3.7.2

Reverberant Speech .................................................................................. 52

3.7.3

Telephone speech ..................................................................................... 54

3.8

Similarity measure ................................................................................................. 55

3.9

Procedure ............................................................................................................... 56

3.9.1

Feature extraction using MFCC, GFCC and FDLP for classification ..... 58

CHAPTER 4: RESULTS.............................................................................................. 60

4.1

Introduction............................................................................................................ 60

4.2

Overview of result ................................................................................................. 60

4.3

Classification accuracies (%) for phonemes in quiet environment ....................... 63

4.4

Performance for signal with additive noise ........................................................... 64

4.5

Performance for reverberant speech ...................................................................... 68

4.6

Signals distorted by noise due to telephone channel ............................................. 69

CHAPTER 5: DISCUSSIONS ..................................................................................... 71

5.1

Introduction............................................................................................................ 71

5.2

Broad class accuracy.............................................................................................. 71

5.3

Comparison of results from previous studies ........................................................ 73

5.4

Effect of the number of Radon angles on classification results............................. 75

xi

5.5

Effect of SPL on classification results ................................................................... 76

5.6

Effect of window length on classification results .................................................. 77

5.7

Effect of number of CFs on classification results .................................................. 78

5.8

Robustness property of the proposed system ........................................................ 79

CHAPTER 6: CONCLUSIONS & FUTURE WORKS ............................................ 84

6.1

Conclusions ........................................................................................................... 84

6.2

Limitations and future work .................................................................................. 85

LIST OF PUBLICATIONS AND CONFERENCE PROCEEDINGS..................... 87

REFERENCES ……………………………………………………………………...88

APPENDIX A – CONFUSION MATRICES............................................................ 100

xii

LIST OF FIGURES

Figure 1.1: Overview of phoneme classification

Figure 1.2: Difference between phoneme classification and phoneme recognition. The small box encloses the task of a phoneme classifier, and the big box encloses the task of a phoneme recogniser. After the phonemes have been classified in (b), the dynamic programming method (c) finds the most likely sequence of phonemes (d).

Figure 1.3: Architecture of an ASR system [adapted from (Wang, 2015)]

Figure 2.1: Illustration of block diagram for MFCC derivation.

Figure 2.2: Illustration of methodology to extract GFCC feature

Figure 2.3: Deriving sub-band temporal envelopes from speech signal using FDLP.

Figure 2.4: Illustration of the structure of the auditory system showing outer, middle and inner ear (Reproduced from Encyclopaedia Britannica, Inc. 1997)

Figure 2.5: Motions of BM at different frequencies (Reproduced from Encyclopaedia Britannica, Inc. 1997)

Figure 2.6: Model of one local peripheral section. It includes outer/ME, BM, and IHC–AN synapse models. (Robert & Eriksson, 1999)

Figure 2.7: The model of the auditory peripheral system developed by Bruce et al. (Bruce et al., 2003), modified from Zhang et al. (Zhang et al., 2001)

Figure 2.8: Schematic diagram of the auditory periphery. The model consists of an ME filter, a feed-forward control path, two signal paths (C1 and C2), the inner hair cell (IHC) and the outer hair cell (OHC), followed by the synapse model with spike generator. (Zilany & Bruce, 2006).

Figure 2.9: (A) Schematic diagram of the model of the auditory periphery (B) IHC-AN synapse model: exponential adaptation followed by parallel PLA models (slow and fast)

Figure 2.10: (a) A separating hyperplane. (b) The hyperplane that maximizes the margin of separability

Figure 3.1: Block diagram of the proposed phoneme classifier

Figure 3.2: Time-frequency representations of speech signals. (A) a typical speech waveform (to produce spectrogram and neurogram of that signal), (B) the corresponding spectrogram responses, and (C) the respective neurogram responses.

Figure 3.3: Geometry of the DRT

Figure 3.4: This figure shows how the Radon transform works. (a) a binary image (b) Radon Transform at 0 Degree (c) Radon Transform at 45 Degree.

Figure 3.5: Types of environmental noise which can affect speech signals (Wang, 2015)

Figure 3.6: Impact of environment distortions on clean speech signals in various domains: (a) clean speech signal (b) speech corrupted with background noise (c) speech degraded by reverberation (d) telephone speech signal. (e)-(h) Spectrum of the respective signals shown in (a)–(d). (i)-(l) neurogram of the respective signals shown in (a)–(d). (m)-(p) Radon coefficient of the respective signals shown in (a)–(d).

Figure 3.7: Comparison of clean and reverberant speech signals for phoneme /aa/: (a) clean speech, (b) signal corrupted by reverberation (c)-(d) Spectrogram of the respective signals shown in (a)–(b) and (e)-(f) Radon coefficient of the respective signals shown in (a)-(b).

Figure 3.8: A simplified environment model where background noise and channel distortion dominate.

Figure 3.9: Neurogram-based feature extraction for the proposed method: (a) a typical phoneme waveform (/aa/), (b) speech corrupted with SSN (10 dB) (c) speech corrupted with SSN (0 dB). (d)-(f) neurogram of the respective signals shown in (a)–(c). (g)-(h) Radon coefficient of the respective signals shown in (a)–(b). (j) Radon coefficient of the respective signals shown in (a)-(c).

Figure 4.1: Broad phoneme classification accuracies (%) for different features in various noise types at different SNR values. Clean condition is denoted as Q.

Figure 5.1: Example of Radon coefficient representations: (a) stop /p/ (b) fricative /s/ (c) nasal /m/ (d) vowel /aa/.

Figure 5.2: Example of Radon coefficient representations for stop: (a) /p/ (b) /t/ (c) /k/ and (d) /b/

Figure 5.3: The correlation coefficient (a) MFCC features extracted from the phoneme in quiet (solid line) and at an SNR of 0 dB (dotted line) condition. The correlation coefficient between the two vectors was 0.76. (b) FDLP features under clean and noisy conditions. Correlation coefficient between the two cases was 0.72. (d) Neurogram responses of the phoneme under clean and noisy conditions. The correlation coefficient between the two vectors was 0.85.

LIST OF TABLES

Table 1.1: Human versus machine speech recognition performance (Halberstadt, 1998).

Table 1.2: Human and machine recognition results. All percentages are word error rates. The best result is indicated in bold.

Table 3.1: Mapping from 61 classes to 39 classes, as proposed by Lee and Hon (Lee & Hon, 1989).

Table 3.2: Broad classes of phones proposed by Reynolds and Antoniou (T. J. Reynolds & Antoniou, 2003).

Table 3.3: Number of tokens in phonetic subclasses for train and test sets

Table 4.1: Classification accuracies (%) of individual and broad class phonemes for different feature extraction techniques on clean speech, speech with additive noise (average performance of six noise types at -5, 0, 5, 10, 15, 20 and 25 dB SNRs), reverberant speech (average performance for eight room impulse response functions), and telephone speech (average performance for nine channel conditions). The best performance for each condition is indicated in bold.

Table 4.2: Confusion matrices for segment classification in clean condition

Table 4.3: Classification accuracies (%) of broad phonetic classes in clean condition.

Table 4.4: Individual phoneme classification accuracies (%) for different feature extraction techniques for 3 different noise types at -5, 0, 5, 10, 15, 20 and 25 dB SNRs. The best performance for each condition is indicated in bold.

Table 4.5: Individual phoneme classification accuracies (%) for different feature extraction techniques for 3 different noise types at -5, 0, 5, 10, 15, 20 and 25 dB SNRs. The best performance for each condition is indicated in bold.

Table 4.6: Classification accuracies (%) in eight different reverberation test sets. The best performance for each condition is indicated in bold. The last column shows the average value, indicated as “Avg”.

Table 4.7: Classification accuracies (%) for signal distorted by nine different telephone channels. The best performance is indicated in bold. The last column shows the average value, indicated as “Avg”.

Table 5.1: Correlation measure in acoustic and Radon domain for different phonemes

Table 5.2: Phoneme classification accuracies (%) on the TIMIT core test set (24 speakers) and complete test set (168 speakers) in quiet condition for individual phone (denoted as Single) and broad class (denoted as Broad). Here, RPS is the abbreviation of reconstructed phase space.

Table 5.3: Phoneme classification accuracies (%) as a function of the number of Radon

Table 5.4: Effects of SPL on classification accuracy (%).

Table 5.5: Effect of window size on classification performance (%).

Table 5.6: Effect of number of CF on classification performance (%).

Table 5.7: Correlation measure for different phonemes and their corresponding noisy (SSN) phonemes (clean-10dB and clean-0dB) in different domains. Average correlation measure (Avg) of seven phonemes is indicated in bold (last row).

LIST OF SYMBOLS AND ABBREVIATIONS

ABWE : Artificial bandwidth extension
AF : Articulatory features
AMR : Adaptive multi-rate
AN : Auditory Nerve
ANN : Artificial neural networks
AP : Action Potential
AR : Auto-regressive
ASR : Automatic speech recognition
BF : Best Frequency
BM : Basilar Membrane
CF : Characteristic frequency
C-SVC : C-support vector classification
DCT : Discrete cosine transform
DRT : Discrete Radon transform
DTW : Dynamic time warping
FDLP : Frequency Domain Linear Prediction
FTC : Frequency Tuning Curve
GF : Gammatone feature
GFCC : Gammatone frequency cepstral coefficient
GMM : Gaussian Mixture Model
HMM : Hidden Markov Models
HTIMIT : Handset TIMIT
IHC : Inner Hair Cells
LPC : Linear predictive coding
LVASR : Large Vocabulary ASR
LVCSR : Large vocabulary continuous speech recognition
OHC : Outer Hair Cells
OVO : One versus one
OVR : One versus rest
PLP : Perceptual linear prediction
PSTH : Post Stimulus Time Histogram
RASTA : Relative spectra
RBF : Radial basis function
RIR : Room impulse response
SRM : Structural Risk Minimization
SVM : Support vector machines

CHAPTER 1: INTRODUCTION

Automatic speech recognition (ASR) has been extensively studied in the past several

decades. Driven by both commercial and military interests, ASR technology has been

developed and investigated on a great variety of tasks with increasing scales and

difficulty. As speech recognition technology moves out of laboratories and is widely

applied in more and more practical scenarios, many challenging technical problems

emerge. Environmental noise is a major factor which contributes to the diversity of

speech. As speech services are provided on various devices, ranging from telephones and

desktop computers to tablets and game consoles, speech signals also exhibit large

variations caused by channel characteristic differences. Speech signals captured in

enclosed environments by distant microphones are usually subject to reverberation.

Compared with background noise and channel distortions, reverberant noise is highly

dynamic and strongly correlated with the original clean speech signals. Speech

recognition based on phonemes is very attractive, since it is inherently free from

vocabulary limitations. Large Vocabulary ASR (LVASR) systems’ performance

depends on the quality of the phone recognizer. That is why research teams continue

developing phone recognizers, in order to enhance their performance as much as

possible. The classification of phonemes can be seen as one of the basic units in a

speech recognition system. This thesis will focus on the development of a robust

phoneme classification technique that works well in diverse conditions.

Figure 1.1: Overview of phoneme classification. Panels: (a) speech signal; (b) unknown utterance; (c) learning machine; (d) predicted class (.../p/, /t/, /k/, ...).

1.1 Phoneme classification

Phoneme classification is the task of determining the phonetic identity of a (typically short) speech utterance based on features extracted from the speech. The individual sounds used to create speech are called phonemes. The task of this thesis is to create a learning machine that can classify phonemes (sequences of acoustic observations) both in quiet and in noisy environments. To explain how this learning machine works, consider the speech signal of an ordinary English sentence, labeled (a) in Fig. 1.1. The signal is split into elementary speech units [(b) in Fig. 1.1] and fed into the learning machine (c). The task of this learning machine is to assign each of these unknown utterances to one of the 39 targets, representing the phonemes of the English language. The idea of classifying phonemes is widely used in both isolated and continuous speech recognition. The predictions of the learning machine can be passed on to a statistical model to find the most likely sequence of phonemes that constructs a meaningful sentence. Hence, accurate phoneme classification is important for a successful ASR system.

Figure 1.2: Difference between phoneme classification and phoneme recognition. The small box encloses the task of the phoneme classifier, and the big box encloses the task of a phoneme recogniser. After the phonemes have been classified in (b), the dynamic programming method (c) finds the most likely sequence of phonemes (d).

In this thesis, the chosen learning machine for the task is the support vector machine

(SVM). The task of phoneme classification is typically done in the context of phoneme

recognition. As the name implies, phoneme recognition consists of identifying the

individual phonemes a sentence is composed of and then a dynamic programming

method is required to transform the phoneme classifications into phoneme predictions.

This difference is shown in Fig. 1.2. We have chosen not to perform complete phoneme

recognition, because we would need to include a dynamic programming method. This

may seem fairly straightforward, but to do it properly, it would involve introducing

large areas of speech recognition such as decoding techniques and the use of language

models. This would detract from the focus of the thesis. Phone recognition has a wide

range of applications. In addition to typical LVASR systems, it can be found in

applications related to language recognition, keyword detection, speaker identification,

and applications for music identification and translation (Lopes & Perdigao, 2011).

Figure 1.3: Architecture of an ASR system [adapted from (Wang, 2015)]

1.2 Automatic speech recognition

This section introduces speech recognition systems. The aim of an ASR system is to produce the most likely word sequence given an incoming speech signal. Figure 1.3 shows the architecture of an ASR system and its main components. In the first stage of speech recognition, input speech signals are processed by a front-end to provide a stream of acoustic feature vectors or observations. These observations should be compact and carry sufficient information for recognition in the later stage. This process is usually known as front-end processing or feature extraction. In the second stage, the extracted observation sequence is passed to a decoder to recognise the most likely word sequence. Three main knowledge sources, namely the lexicon, language models and acoustic models, are used in this stage. The lexicon, also known as the dictionary, is usually used in large vocabulary continuous speech recognition (LVCSR) systems to map sub-word units to the words used in the language model. The language model represents the prior knowledge about the syntactic and semantic information of word sequences. The acoustic model represents the acoustic knowledge of how an observation sequence can be mapped to a sequence of sub-word units. In this thesis, we consider phone classification, which allows a good evaluation of the quality of the acoustic modeling since it measures the performance of the recognizer without the use of any kind of grammar (Reynolds & Antoniou, 2003).
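The decoding task described in this section is commonly written as a maximum a posteriori decision rule. The formulation below is the standard textbook one rather than a formula taken from this thesis; the symbols O (the observation sequence produced by the front-end) and W (a candidate word sequence) are introduced here purely for illustration.

```latex
\hat{W} \;=\; \arg\max_{W} P(W \mid O) \;=\; \arg\max_{W} \; p(O \mid W)\, P(W)
```

Under this reading, p(O | W) is supplied by the acoustic models together with the lexicon, and P(W) by the language model. When no grammar is used, as in phone classification, only the acoustic term is exercised.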

1.3 Problem Statement

State-of-the-art algorithms for ASR systems perform poorly compared to human listeners in detecting, analyzing, and segregating dynamic acoustic stimuli, especially in complex and noisy environments (Lippmann, 1997; G. A. Miller & Nicely, 1955; Sroka & Braida, 2005). Performance of ASR systems can be improved by using additional levels of language and context modeling, provided that the input sequence of elementary speech units is sufficiently accurate (Yousafzai, Ager, Cvetković, & Sollich, 2008). To achieve robust recognition of continuous speech, both sophisticated language-context modeling and accurate predictions of isolated phonemes are required. Indeed, most of the inherent robustness of human speech recognition occurs before and independently of context and language processing (G. A. Miller, Heise, & Lichten, 1951; G. A. Miller & Nicely, 1955). For phoneme recognition, the accuracy of the human auditory system is already above chance level at a signal-to-noise ratio (SNR) of -18 dB (G. A. Miller & Nicely, 1955). Also, several studies have demonstrated the superior performance of human speech recognition compared to machine performance both in quiet and under noisy conditions (Allen, 1994; Meyer, Wächter, Brand, & Kollmeier, 2007), and thus the ultimate challenge for an ASR system is to achieve recognition performance close to that of the human auditory system. In this thesis, we consider front-end features for phoneme classification because accurate classification of isolated phonetic units is very important for achieving robust recognition of continuous speech.
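To give a sense of scale for the SNR figure quoted above: since SNR in dB is 10·log10(P_speech / P_noise), an SNR of -18 dB means the noise power is about 10^1.8 ≈ 63 times the speech power. The sketch below shows one common way to mix noise into a signal at a chosen SNR. It is an illustrative assumption, not the procedure used in this thesis; the function name `mix_at_snr` and the toy signals are hypothetical.

```python
import numpy as np

def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so the speech-to-noise power ratio equals `snr_db`, then add it.

    Hypothetical helper for illustration; `noise` must be at least as long as `speech`.
    """
    speech = np.asarray(speech, dtype=float)
    noise = np.asarray(noise, dtype=float)[: len(speech)]
    p_speech = np.mean(speech ** 2)
    p_noise = np.mean(noise ** 2)
    # SNR_dB = 10 * log10(P_speech / P_noise_scaled)  =>  solve for the scaled noise power
    target_noise_power = p_speech / (10.0 ** (snr_db / 10.0))
    gain = np.sqrt(target_noise_power / p_noise)
    return speech + gain * noise, gain

# Toy signals standing in for real recordings (not TIMIT data)
rng = np.random.default_rng(0)
speech = np.sin(2 * np.pi * 200 * np.arange(16000) / 16000)
noise = rng.standard_normal(16000)
noisy, gain = mix_at_snr(speech, noise, snr_db=-18.0)
```

The returned `gain` is the factor applied to the noise, so `noisy - speech` recovers the scaled noise for checking the achieved SNR.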

Most of the existing ASR systems use perceptual linear prediction (PLP), relative spectra (RASTA) or cepstral features, normally some variant of Mel-frequency cepstral coefficients (MFCCs), as their front-end. Due to the nonlinear processing involved in the feature extraction, even a moderate level of distortion may cause significant departures from feature distributions learned on clean data, making these distributions inadequate for recognition in the presence of environmental distortions such as additive noise (Yousafzai, Sollich, Cvetković, & Yu, 2011). Some attempts have been made to utilize Gammatone frequency cepstral coefficients (GFCCs) in ASR (Shao, Srinivasan, & Wang, 2007), but the improvement was not significant. In past years, efforts have also been made to design robust ASR systems motivated by articulatory and auditory processing (Holmberg, Gelbart, & Hemmert, 2006; Jankowski Jr, Vo, & Lippmann, 1995; Jeon & Juang, 2007). However, these models did not include most of the nonlinearities observed at the level of the auditory periphery and thus were not physiologically accurate. As a result, the performance of ASR systems based on these features falls far below human performance in adverse conditions (Lippmann, 1997; Sroka & Braida, 2005).

Until recently, the problem of recognizing reverberant speech and speech distorted by a

telephone channel remained unsolved, owing to the nature of reverberant speech and the

limited bandwidth of the telephone channel (Nakatani, Kellermann, Naylor, Miyoshi, & Juang,

2010; Pulakka & Alku, 2011). Reverberation is a form of distortion quite distinct from

both additive noise and spectral shaping. Unlike additive noise, reverberation creates

interference that is correlated with the speech signal. Most of the telephone systems in

use today transmit only a narrow audio bandwidth limited to the traditional telephone

band of 0.3–3.4 kHz or to only a slightly wider bandwidth. Natural speech contains

frequencies far beyond this range and consequently, the naturalness, quality, and

intelligibility of telephone speech are degraded by the narrow audio bandwidth (Pulakka

& Alku, 2011).

In order to solve the reverberation problem, a number of de-reverberation algorithms have been proposed based on cepstral filtering, inverse filtering, temporal envelope (ENV) filtering, excitation source information and spectral processing. The main limitation of these techniques is that the acoustic impulse responses or talker locations must be known or blindly estimated for successful de-reverberation, which is known to be a difficult task (Krishnamoorthy & Prasanna, 2009). These problems preclude their use in real-time applications (Nakatani, Yoshioka, Kinoshita, Miyoshi, & Juang, 2009). In the past decades, many pitch determination algorithms have been proposed to improve the recognition accuracy of telephone speech. An important consideration for pitch determination algorithms is their performance on telephone speech, where the fundamental frequency is always weak or even missing, which makes pitch determination even more difficult (L. Chang, Xu, Tang, & Cui, 2012). A significant improvement in speech quality can be achieved by using wideband (WB) codecs for speech transmission. For example, the adaptive multi-rate (AMR)-WB speech codec transmits an audio bandwidth of 50–7000 Hz. However, wideband telephony is possible only if both terminal devices and the network support AMR-WB.

Most of the above-mentioned methods provide good performance (though still below average human performance) for a specific condition, i.e., quiet environment, additive noise, reverberant speech, or channel distortion, whereas speech signals are usually affected by multiple acoustic factors simultaneously. This makes them difficult to use in real environments. In this study, we propose a method that remains robust across these types of distortion and can thus be used in real environments.

Table 1.1: Human versus machine speech recognition performance (Halberstadt, 1998).

Corpus                       | Description                         | Vocabulary size | Machine error (%) | Human error (%)
TI Digits                    | Read Digits                         | 10              | 0.72              | 0.009
Alphabet Letters             | Read Alphabetic Letters             | 26              | 5                 | 1.6
Resource Management          | Read Sentences (Wordpair Grammar)   | 1,000           | 3.6               | 0.1
Resource Management          | Read Sentences (Null Grammar)       | 1,000           | 17                | 2
Wall Street Journal          | Read Sentences                      | 5,000           | 7.2               | 0.9
North American Business News | Read Sentences                      | Unlimited       | 6.6               | 0.4
Switchboard                  | Spontaneous Telephone Conversations | 2,000–Unlimited | 43                | 4

1.4 Motivation

Two sources of motivation contributed to the conception of the ideas for the

experiments in this thesis.

1.4.1 Comparisons between Humans and Machines

Several studies have compared the performance of humans and ASR systems (Lippmann, 1997; G. A. Miller & Nicely, 1955; Sroka & Braida, 2005). Table 1.1 shows the performance of machines and humans on different corpora. Kingsbury and Morgan compared humans and machines on reverberant speech (Kingsbury & Morgan, 1997). Machine recognition tests were run using a hybrid hidden Markov model/multilayer perceptron (HMM/MLP) recognizer. Four front ends were tested: PLP, log-RASTA-PLP, J-RASTA-PLP, and an experimental RASTA-like front end called the modulation spectrogram (Mod. Spec.). Their results are shown in Table 1.2.

Table 1.2: Human and machine recognition results. All percentages are word error rates. Best results are indicated in bold.

Experiment | Feature set | Condition | Error (%)
Baseline   | PLP         | Clean     | 17.8
Baseline   | PLP         | Reverb    | 71.5
Baseline   | Log-RASTA   | Clean     | 16.4
Baseline   | Log-RASTA   | Reverb    | 74.4
Baseline   | J-RASTA     | Clean     | 16.9
Baseline   | J-RASTA     | Reverb    | 78.9
Baseline   | Mod. Spec.  | Clean     | 31.7
Baseline   | Mod. Spec.  | Reverb    | 66.0
Humans     | -           | Reverb    | 6.1

These results imply that human performance is significantly better than that of machines: machines cannot efficiently extract the low-level phonetic information from the speech signal. These results motivated us to work on front-end features for accurate recognition of phonemes and the closely related problem of classification.

1.4.2 Neural-response-based feature

The accuracy of human speech recognition motivates the application of information

processing strategies found in the human auditory system to ASR (Hermansky, 1998;

Kollmeier, 2003). In general, the auditory system is nonlinear. Incorporating nonlinear

properties from the auditory system in the design of a phoneme classifier might improve

the performance of the recognition system. The current study proposes an approach to

classify phonemes based on the simulated neural responses from a physiologically-accurate

model of the auditory system (Zilany, Bruce, Nelson, & Carney, 2009). This

approach is expected to improve the robustness of the phoneme classification system. It

was motivated by the fact that neural responses are robust against noise due to the

phase-locking property of the neuron, i.e., the neurons fire preferentially at a certain

phase of the input stimulus (M. I. Miller, Barta, & Sachs, 1987), even when noise is

added to the acoustic signal. In addition to this, the auditory-nerve (AN) model also

captures most of the nonlinear properties observed at the peripheral level of the auditory

system such as nonlinear tuning, compression, two-tone suppression, and adaptation in

the inner-hair-cell-AN synapse as well as some other nonlinearities observed only at

high sound pressure levels (SPLs) (M. I. Miller et al., 1987; Robles & Ruggero, 2001;

Zilany & Bruce, 2006).

1.5 Objectives of this study

The robustness of a recognition system is heavily influenced by its ability to handle

background noise and to cope with the distortion introduced by convolution and the

transmission channel. State-of-the-art algorithms for ASR systems exhibit good

performance in quiet environment but suffer from poorer performance when compared

to the ability of human listeners in noisy environments (Lippmann, 1997; G. A. Miller

& Nicely, 1955; Sroka & Braida, 2005). Our ultimate goal is to develop an ASR system

that can handle distortions caused by various acoustic factors, including speaker

differences, channel distortions, and environmental noises. Developing methods for

phoneme recognition and the closely related problem of classification is a major step

towards achieving this goal. Hence, this thesis only considers the prediction of

phonemes, since the classification of phonemes can be seen as one of the basic units in a

speech recognition system. The specific objectives of the study are to:

(1) develop a robust phoneme classifier based on neural responses and evaluate its performance in quiet and under noisy conditions;
(2) compare the recognition accuracy of the proposed method with the results from some existing popular metrics, such as MFCC, GFCC and FDLP; and
(3) examine the effects of different parameters on the performance of the proposed method.

1.6 Scope of the study

Phone recognition from TIMIT has more than two decades of intense research behind it,

and its performance has naturally improved with time. There is a full array of systems,

but with regard to evaluation, they concentrate on three domains: phone segmentation,

phone classification and phone recognition (Lopes & Perdigao, 2011). Phone

segmentation is a process of finding the boundaries of a sequence of known phones in a

spoken utterance. Phonetic classification takes the correctly segmented signal, but with

unknown labels for the segments. The problem is to correctly identify the phones in

those segments. Phone recognition is a more complex task. The speech given to the

recognizer corresponds to the whole utterance. The phone models plus a Viterbi

decoding find the best sequence of labels for the input utterance. In this case, a grammar

can be used. The best sequence of phones found by the Viterbi path is compared to the

reference (the TIMIT manual labels for the same utterance) using a dynamic

programming algorithm, usually the Levenshtein distance, which takes into account

phone hits, substitutions, deletions and insertions. This thesis will focus on the phone

classification task only.
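The Levenshtein-based scoring described above can be sketched as follows. This is a minimal illustration, not the scoring tool used for TIMIT evaluations; the function name `align_counts`, the example phone strings, and the percent-correct and accuracy formulas (H/N and (H - I)/N, as commonly used in HTK-style scoring) are assumptions introduced here.

```python
def align_counts(ref, hyp):
    """Align a hypothesised phone sequence to a reference with unit-cost edit distance.

    Returns the number of hits (H), substitutions (S), deletions (D) and insertions (I).
    """
    n, m = len(ref), len(hyp)
    # dist[i][j] = edit distance between ref[:i] and hyp[:j]
    dist = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dist[i][0] = i          # deleting i reference phones
    for j in range(1, m + 1):
        dist[0][j] = j          # inserting j hypothesised phones
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j - 1] + cost,   # hit or substitution
                             dist[i - 1][j] + 1,          # deletion
                             dist[i][j - 1] + 1)          # insertion

    # Trace back one optimal path and count each kind of operation
    hits = subs = dels = ins = 0
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0:
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            if dist[i][j] == dist[i - 1][j - 1] + cost:
                if cost == 0:
                    hits += 1
                else:
                    subs += 1
                i, j = i - 1, j - 1
                continue
        if i > 0 and dist[i][j] == dist[i - 1][j] + 1:
            dels += 1
            i -= 1
        else:
            ins += 1
            j -= 1
    return {"H": hits, "S": subs, "D": dels, "I": ins}

# Made-up example: one substitution (ae -> eh) and one insertion (s)
ref = ["sil", "k", "ae", "t", "sil"]
hyp = ["sil", "k", "eh", "t", "s", "sil"]
counts = align_counts(ref, hyp)
percent_correct = counts["H"] / len(ref)
accuracy = (counts["H"] - counts["I"]) / len(ref)
```

In phone classification, where segment boundaries are given, no alignment is needed and the score reduces to the fraction of segments labelled correctly.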

A number of benchmarking databases have been constructed for recognition purposes, for example, the DARPA resource management (RM) database, TIDIGITS (connected digits), Alpha-Numeric (AN4, 100-word vocabulary), Texas Instruments and Massachusetts Institute of Technology (TIMIT), and WSJ5K (5,000-word vocabulary). In this thesis, the widely used TIMIT database is used; it captures the dynamic behavior of the speech signal and several sources of variability, e.g., intra-speaker (same speaker), inter-speaker (cross speaker), and linguistic (speaking style). TIMIT is fully and manually annotated at the phone level.

Research in the speech recognition area has been underway for a couple of decades, and

a great deal of progress has been made in reducing the error on speech recognition.

Most approaches show high speech recognition accuracy under controlled

conditions (for example, in a quiet environment or under a specific noise), whereas the human

auditory system can recognize speech without prior information about noise types. It is

very difficult to develop a feature that can handle all types of noise. Our proposed

feature can be used in quiet environment and under background noise, room

reverberation and channel variations.

Most of the existing phoneme recognition systems are based on the features extracted

from the acoustic signal in the time and/or frequency domain. PLP, RASTA, MFCCs

(Hermansky, 1990; Hermansky & Morgan, 1994; Zheng, Zhang, & Song, 2001) are

some examples of the preferred traditional features for the ASR systems. Some attempts

have also been made to utilize GFCC in ASR, for instance (Qi, Wang, Jiang, & Liu,

2013; Schluter, Bezrukov, Wagner, & Ney, 2007; Shao, Jin, Wang, & Srinivasan,

2009). Recently, Ganapathy et al. (Ganapathy, Thomas, & Hermansky, 2010) proposed

a feature extraction technique for phoneme recognition based on deriving modulation

frequency components from the speech signal. This FDLP feature is an efficient

technique for robust ASR. In this study, the classification results of the proposed neural-response-based

method were compared to the performances of the traditional acoustic-property-based

speech recognition methods using features such as MFCCs, GFCCs and

FDLPs.

1.7 Organization of Thesis

This thesis proposes a new technique for phoneme classification. Chapter two provides

background information for the experimental work. We explain the anatomy and

physiology of the peripheral auditory system, model of the AN, the SVM classifier

employed in this thesis, the Radon transform and some existing metrics. Chapter three

describes the procedure of the proposed method. Chapter four presents the

experiments evaluating the various feature extraction techniques in the task of TIMIT

phonetic classification. We show results both in quiet and under noisy conditions

for different types of phonemes extracted from the TIMIT database. Chapter five

explains the reason behind the robustness of the proposed feature. It also shows the effects

of different parameters on classification accuracy. Finally, the general conclusion and

some future directions are provided in Chapter six.

CHAPTER 2: LITERATURE REVIEW

2.1 Introduction

This chapter reviews related work on phoneme classification. It then briefly describes some acoustic-property-based feature extraction techniques and the structure of the auditory system. The chapter also describes the SVM classifier, the model of the AN and the Radon transform technique used in this study.

2.2 Research background

After several years of intense research by a large number of research teams, error rates in speech recognition have improved considerably, but human performance is still significantly better than that of machines. In 1994, Robinson (Robinson, 1994) reported a phoneme classification accuracy of 70.4% using a recurrent neural network (RNN). In 1996, Chen and Jamieson reported a phoneme classification accuracy of 74.2% using the same classifier (Chen & Jamieson, 1996). Chengalvarayan and Deng used hidden Markov models (HMMs) for speech classification and obtained an accuracy of 68.60% (Chengalvarayan & Deng, 1997). This approach becomes problematic when dealing with the variabilities of natural conversational speech. In 2003, a broad classification accuracy of 84.1% using an MLP was reported by Reynolds and Antoniou (T. J. Reynolds & Antoniou, 2003). Clarkson and Moreno (1999) created a multi-class SVM system to classify phonemes. Their reported result of 77.6% is extremely encouraging and shows the potential of SVMs in speech recognition. They also used a Gaussian mixture model (GMM) for classification and obtained an accuracy of 73.7%. Similarly, Johnson et al. reported results for an MFCC-based feature using an HMM (Johnson et al., 2005). They reported a single-phone accuracy of 54.86% on the complete test set, and an accuracy of 35.06% for their proposed feature.

A hybrid SVM/HMM system was developed by Ganapathiraju et al. (Ganapathiraju,

Hamaker, & Picone, 2000). This approach has provided improvements in recognition

performance over HMM baselines on both small- and large-vocabulary recognition

tasks, even though SVM classifiers were constructed solely from the cepstral

representations (Ganapathiraju, Hamaker, & Picone, 2004; Krüger, Schafföner, Katz,

Andelic, & Wendemuth, 2005). The above papers all show the potential of using SVMs

in speech recognition and motivated us to use SVM as a classifier. Phoneme

classification has also been studied by a large number of researchers (Clarkson &

Moreno, 1999; Halberstadt & Glass, 1997; Layton & Gales, 2006; McCourt, Harte, &

Vaseghi, 2000; Rifkin, Schutte, Saad, Bouvrie, & Glass, 2007; Sha & Saul, 2006) for

the purpose of testing different methods and representations.

In recent years, many articulatory- and auditory-based processing methods have also been proposed to address the problem of phonetic variations in a number of frame-based, segment-based, and acoustic landmark systems. For example, articulatory features (AFs) derived from phonological rules have outperformed the acoustic HMM baseline in a series of phonetic-level recognition tasks (Kirchhoff, Fink, & Sagerer, 2002). Similarly, experimental studies of the mammalian peripheral and central auditory organs have introduced many perceptual processing methods. For example, several auditory models have been constructed to simulate human hearing, e.g., the ensemble interval histogram, the lateral inhibitory network, and Meddis’ inner hair-cell model (Jankowski Jr et al., 1995; Jeon & Juang, 2007). Holmberg et al. incorporated synaptic adaptation into their feature extraction method and found that the performance of the system improved substantially (Holmberg et al., 2006). Similarly, Strope and Alwan used a model of temporal masking (Strope & Alwan, 1997), and Perdigao and Sá employed a physiologically-based inner ear model for developing a robust ASR system (Perdigao & Sá, 1998).

2.3 Existing metrics

The performance of the proposed method was evaluated on the complete test set of the TIMIT database and compared to the results from three standard acoustic-property-based methods. This section describes these features, namely MFCC, GFCC and FDLP.

2.3.1 Mel-frequency Cepstral Coefficient (MFCC)

MFCCs (Davis & Mermelstein, 1980) are a widely used feature in ASR systems. Figure 2.1 shows the derivation of the MFCC feature from a typical acoustic sound waveform. Initially, an FFT is applied to each frame to obtain complex spectral features. Normally, a 512-point FFT is applied to derive a 256-point spectrum, and the phase information is discarded. For a more compact representation, only about 30 smoothed spectral values per frame are retained; these are spaced on the perceptually motivated Mel or Bark scale and compressed logarithmically.

Figure 2.1: Illustration of block diagram for MFCC derivation. Blocks: speech signal, fast Fourier transform, spectrum, Mel-scale filtering, Mel-frequency spectrum, log(.), discrete cosine transform, cepstral coefficients, derivatives, feature vector.

Figure 2.2: Illustration of methodology to extract GFCC feature. Blocks: input signal, windowing, Gammatone (GT) filter, cubic root operation, DCT, GFCC.

In the MFCC derivation, a bank of triangular filters spaced linearly on the Mel scale is used. The final step is to convert the filter outputs to cepstral coefficients by using the discrete cosine transform (DCT).
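The MFCC steps described in this section can be sketched for a single frame as follows. This is a minimal NumPy illustration under stated assumptions, not the implementation used in this thesis: the Hamming window, the Mel formula 2595·log10(1 + f/700), the choice of 13 retained coefficients, and all function names are assumptions introduced here. Derivative (delta) features are omitted.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, fs):
    """Triangular filters whose edges are equally spaced on the Mel scale."""
    mel_edges = np.linspace(hz_to_mel(0.0), hz_to_mel(fs / 2.0), n_filters + 2)
    bin_edges = np.floor((n_fft + 1) * mel_to_hz(mel_edges) / fs).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(n_filters):
        left, centre, right = bin_edges[i], bin_edges[i + 1], bin_edges[i + 2]
        for k in range(left, centre):          # rising slope
            fbank[i, k] = (k - left) / (centre - left)
        for k in range(centre, right):         # falling slope (peak of 1 at the centre bin)
            fbank[i, k] = (right - k) / (right - centre)
    return fbank

def mfcc_frame(frame, fs, n_fft=512, n_filters=30, n_ceps=13):
    """MFCCs of one frame: window -> FFT power -> Mel filter-bank -> log -> DCT-II."""
    windowed = frame * np.hamming(len(frame))
    # rfft of a 512-point FFT returns 257 bins (DC to Nyquist); phase is discarded
    power = np.abs(np.fft.rfft(windowed, n_fft)) ** 2
    energies = mel_filterbank(n_filters, n_fft, fs) @ power
    log_energies = np.log(energies + 1e-10)    # small floor avoids log(0)
    # Unnormalised DCT-II written out explicitly to stay NumPy-only
    basis = np.cos(np.pi * np.outer(np.arange(n_ceps), np.arange(n_filters) + 0.5) / n_filters)
    return basis @ log_energies

# Toy 25 ms frame at 16 kHz (a pure tone standing in for speech)
fs = 16000
frame = np.sin(2 * np.pi * 440 * np.arange(400) / fs)
coeffs = mfcc_frame(frame, fs)
```

Applying `mfcc_frame` to successive overlapping frames yields one coefficient vector per frame, to which time derivatives are usually appended.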

2.3.2 Gammatone Frequency Cepstral Coefficient (GFCC)

GFCC is an acoustic cepstral-based feature derived from the Gammatone feature (GF) using a Gammatone filter-bank. To extract GFCC, the audio signal is first decomposed using a 128-channel Gammatone filter-bank. Its center frequencies are quasi-logarithmically spaced from 50 Hz to 8 kHz (or half of the sampling frequency of the input signal), which models human cochlear filtering. Using a 10 ms frame rate, the filter-bank outputs are down-sampled to 100 Hz in the time dimension. A cubic root operation is used to compress the magnitudes of the down-sampled filter-bank outputs. A matrix of “cepstral” coefficients is obtained by multiplying the DCT matrix with a GF vector. The lowest 30 of the 128 GFCCs are normally used for speaker identification or speech recognition, since they retain most of the information of the GF owing to the energy compaction property of the DCT, as mentioned in (Shao, Srinivasan, & Wang, 2007). So the size of the GFCC feature is m × 23 or m × 30, where m is the number of frames. Figure 2.2 shows the GFCC derivation procedure.
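The last two steps of the GFCC derivation (cubic root compression followed by the DCT) can be sketched as below. The Gammatone filter-bank itself is not implemented here; the sketch assumes a matrix `gf` of non-negative, down-sampled filter-bank magnitudes with one row per channel and one column per frame is already available. The function name `gfcc_from_gf` and the orthonormal DCT-II scaling are assumptions made for illustration.

```python
import numpy as np

def gfcc_from_gf(gf, n_ceps=30):
    """Convert a Gammatone feature matrix (channels x frames) to GFCCs (frames x n_ceps)."""
    gf = np.asarray(gf, dtype=float)
    n_channels = gf.shape[0]
    compressed = np.cbrt(gf)                      # cubic root compression
    k = np.arange(n_ceps)[:, None]
    c = np.arange(n_channels)[None, :]
    # Orthonormal DCT-II matrix, keeping only the first n_ceps rows
    dct = np.sqrt(2.0 / n_channels) * np.cos(np.pi * k * (c + 0.5) / n_channels)
    dct[0] /= np.sqrt(2.0)
    return (dct @ compressed).T                   # shape: m frames x n_ceps

# Placeholder GF matrix: 128 channels, 5 frames of constant magnitude
gf = np.ones((128, 5))
gfcc = gfcc_from_gf(gf)
```

With a constant input, all of the energy falls into the zeroth coefficient, which is a simple instance of the energy compaction property mentioned above.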

2.3.3 Frequency Domain Linear Prediction (FDLP)

Ganapathy et al. (Ganapathy, Thomas, & Hermansky, 2009) proposed a feature based on deriving modulation frequency components from the speech signal. The FDLP technique is implemented in two parts: first, the discrete cosine transform (DCT) is applied on long segments of speech to obtain a real-valued spectral representation of the signal; then, linear prediction is performed on the DCT representation to obtain a parametric model of the temporal envelope. The block schematic for the extraction of sub-band temporal envelopes from the speech signal is shown in Fig. 2.3.

Figure 2.3: Deriving sub-band temporal envelopes from speech signal using FDLP. Blocks: speech signal, DCT, critical band windowing, FDLP, sub-band envelopes.
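The two FDLP steps described above can be sketched for a full-band signal as follows. This is a simplified illustration and not the implementation of Ganapathy et al.: critical-band windowing of the DCT coefficients is omitted, and the autocorrelation (Yule-Walker) method with a small diagonal loading term, the model order, and the function names are all assumptions introduced here.

```python
import numpy as np

def dct_ii(x):
    """Orthonormal DCT-II computed with an explicit basis matrix (NumPy only)."""
    n = len(x)
    k = np.arange(n)[:, None]
    t = np.arange(n)[None, :]
    basis = np.sqrt(2.0 / n) * np.cos(np.pi * k * (t + 0.5) / n)
    basis[0] /= np.sqrt(2.0)
    return basis @ x

def fdlp_envelope(x, order=8, loading=1e-6):
    """Estimate the temporal envelope of x by linear prediction on its DCT."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    c = dct_ii(x)
    # Autocorrelation of the DCT sequence for lags 0..order
    r = np.array([np.dot(c[: n - lag], c[lag:]) for lag in range(order + 1)])
    # Yule-Walker equations; diagonal loading keeps the system well conditioned
    lam = loading * r[0]
    R = np.array([[r[abs(i - j)] for j in range(order)] for i in range(order)])
    a_tail = np.linalg.solve(R + lam * np.eye(order), -r[1:])
    a = np.concatenate(([1.0], a_tail))
    gain = r[0] + lam + np.dot(a_tail, r[1:])     # prediction error power of the loaded system
    # In FDLP the AR "spectrum" is indexed by time: sample t <-> angle pi * (t + 0.5) / n
    omega = np.pi * (np.arange(n) + 0.5) / n
    A = np.exp(-1j * np.outer(omega, np.arange(order + 1))) @ a
    return gain / np.abs(A) ** 2
```

For a signal whose energy is concentrated around one instant, the returned envelope is expected to peak near that instant, since the all-pole model places its poles at the corresponding angle. In the full technique the same fit is applied separately to windowed sub-bands of the DCT sequence to obtain one envelope per band.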

2.4 Structure and Function of the Auditory System

The peripheral auditory pathway connects our sensory organ (the ear) with the auditory parts of the nervous system, which interpret the received information. The peripheral auditory system converts the pressure waveform into a mechanical vibration and finally into a change in the membrane potential of hair cells. This conversion of energy from mechanical motion to electrical energy is called transduction. The change in the membrane potential triggers action potentials (APs) in the AN fibers. APs are used to convey information in the nervous system. Anatomically, the human auditory system consists of the peripheral auditory system and the central auditory nervous system. The peripheral auditory system can be further subdivided into three major parts: the outer, middle and inner ear. The human auditory system is shown in Fig. 2.4.

Figure 2.4: Illustration of the structure of the auditory system showing outer, middle and inner ear (Reproduced from Encyclopaedia Britannica, Inc. 1997)

2.4.1 Outer Ear

The outer ear is composed of two components: the most visible portion of the ear, called the pinna, and the long and narrow canal that leads to the ear drum, called the ear canal. The word ear may be used to refer to the pinna. The ear drum, also known as the tympanic membrane, acts as the boundary between the outer ear and the middle ear (ME). Sound arrives at the outer ear and passes through the canal to the ear drum, which vibrates when the sound wave hits it. The primary functions of the outer ear are to protect the middle and inner ears from foreign bodies and to amplify high-frequency sounds. It also provides the primary cue for determining the source of a sound.

2.4.2 Middle Ear (ME)

The ME is separated from the external ear by the tympanic membrane. It creates a link between the outer ear and the fluid-filled inner ear. This link is made through three bones: the malleus (hammer), incus (anvil) and stapes (stirrup), which are known as the ossicles. The malleus is connected to the stapes through the incus. The stapes is the smallest bone and is connected to the oval window. The three bones are connected in such a way that movement of the tympanic membrane causes movement of the stapes. The ossicles can amplify the sound waves by almost thirty times.

2.4.3 Inner Ear

The inner ear has a very complex structure and is located within a dense portion of the skull. The inner ear, also known as the labyrinth due to its complexity, can be divided into two major sections: a bony outer casing (the osseous labyrinth) and the membranous labyrinth it encloses. The osseous labyrinth consists of the semicircular canals, the vestibule, and the cochlea. The coiled, snail-shaped cochlea is the most important part of the inner ear and contains the sensory organ for hearing. The largest and smallest turns of the cochlea are known as the basal turn and the apical turn, respectively. The oval window and round window are also part of the cochlea. The winding channel can be divided into three sections, the scala media, scala tympani and scala vestibuli, each of which is filled with fluid. Vibration delivered by the ossicles at the oval window sets up a pressure wave in this fluid. The basilar membrane (BM) is a stiff structural element that separates the scala media and the scala tympani. The displacement pattern of the BM changes with stimulus frequency, so the cochlea acts like a frequency analyzer of the sound wave.

2.4.4

Basilar Membrane Responses

The motion of the BM is normally described as a travelling wave. Along the length of the BM, the parameters at a given point determine the characteristic frequency (CF), which is the frequency at which the BM is most sensitive to sound vibrations. The BM is widest at the apex, and its narrowest part (0.08–0.16 mm) is located at the base. A sound pressure waveform passes through the eardrum and the oval window to the cochlea and creates a pressure wave along the BM. The CF differs with position along the BM: the base of the cochlea is tuned to higher frequencies, and the CF decreases toward the apex. When sound pressure passes along the BM, both low- and high-frequency regions are excited, which causes an overlap in frequency detection. For low-frequency tones (below 5 kHz), the resulting nerve spikes (action potentials, APs) are synchronized to the stimulus through the phase-locking process. The AN model successfully captures this behavior. The motion of the BM at different CFs is shown in Fig. 2.5.

Figure 2.5: Motions of BM at different frequencies (Reproduced from Encyclopaedia Britannica, Inc. 1997)
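The base-to-apex ordering of CF described above can be illustrated with a place-frequency map. The following is a minimal sketch, assuming the Greenwood function with its commonly quoted human parameters (A = 165.4, a = 2.1, k = 0.88). Neither the function nor these values are taken from this work, and the name `greenwood_cf` is hypothetical.

```python
def greenwood_cf(x_from_apex, A=165.4, a=2.1, k=0.88):
    """Approximate CF in Hz at a relative BM position.

    x_from_apex is the fractional distance along the BM
    (0.0 = apex, 1.0 = base). The default constants are the commonly
    quoted human values of the Greenwood function (an assumption here,
    not a parameter set used in this work).
    """
    if not 0.0 <= x_from_apex <= 1.0:
        raise ValueError("x_from_apex must lie in [0, 1]")
    return A * (10.0 ** (a * x_from_apex) - k)


# Sample five equally spaced positions from apex to base.
positions = [i / 4 for i in range(5)]
cfs = [greenwood_cf(x) for x in positions]

for x, cf in zip(positions, cfs):
    print(f"position {x:.2f} (0 = apex, 1 = base): CF ~ {cf:.0f} Hz")
```

Under these assumptions the CF rises monotonically from the apex to the base, which matches the tonotopic arrangement described in the text.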

2.4.5

Auditory Nerve

When activated, AN fibers generate electrical potentials whose amplitude does not vary. If a nerve fiber fires, the potential always reaches 100% amplitude. The APs are very short-lived events that normally take 1 to 2 ms to rise to maximum amplitude and return to the resting state. Because of this behavior, they are commonly called spikes. These spikes carry the information that is decoded in the auditory portions of the central nervous system.


2.5

Brief history of Auditory Nerve (AN) Modeling

AN modeling is an effective tool for understanding the mechanical and physiological processes in the human auditory periphery. Several efforts (Bruce, Sachs, & Young, 2003; Tan & Carney, 2003; Zhang, Heinz, Bruce, & Carney, 2001) have been made to develop computational models that integrate data and theories from a wide range of research on the cochlea. The main focus of these modeling efforts was the nonlinearities of the cochlea, such as compression, two-tone suppression, and shifts in the best frequency. The processing of simple and complex sounds in the peripheral auditory system can also be studied through these models. These models can also be used as front ends in many research areas, such as speech recognition in noisy environments (Ghitza, 1988; Tchorz & Kollmeier, 1999), computational modeling of auditory analysis (Brown & Cooke, 1994), modeling of neural circuits in the auditory brainstem (Hewitt & Meddis, 1993), design of speech processors for cochlear implants (Wilson et al., 2005), and design of hearing-aid amplification schemes (Bondy, Becker, Bruce, Trainor, & Haykin, 2004; Bruce, 2004).

In 1960, Flanagan and his colleague developed a computational model that could emulate the mechanical displacement response of the BM filter to a known stimulus. The model treated the cochlea as a linear and passive filter and accounted for the properties of cochlear responses to simple stimuli such as clicks, single tones, or pairs of tones (R. L. Miller, Schilling, Franck, & Young, 1997; Sellick, Patuzzi, & Johnstone, 1982; Wong, Miller, Calhoun, Sachs, & Young, 1998). However, in 1987, Deng and Geisler reported significant nonlinearities in the responses of AN fibers to speech sounds. They described the main property of these nonlinearities as “synchrony capture”, which means that the response produced by one formant in the speech syllable is more synchronous to itself than linear methods would predict from the fiber's threshold frequency tuning curve (FTC). The earlier linear models were relatively simple, but they did not consider the nonlinearities of the BM (Narayan, Temchin, Recio, & Ruggero, 1998). The effort of Deng and Geisler led to the proposal of a composite model that incorporated either a linear or a nonlinear BM stage (Deng & Geisler, 1987).

Figure 2.6: Model of one local peripheral section. It includes outer/ME, BM, and IHC–AN synapse models. (Robert & Eriksson, 1999)

In 1999, Robert and Eriksson proposed a model that could reproduce different effects of the human auditory system seen in electrophysiological recordings of responses to tones, two-tones, and tone-noise combinations. The model was able to generate significant nonlinear behaviors such as compression, two-tone suppression, and the shift in rate-intensity functions when noise is added to the signal (Robert & Eriksson, 1999). The model is shown in Fig. 2.6. The model introduced by Zhang et al. addressed some important phenomena of the human AN, such as the temporal response properties of AN fibers and the asymmetry in suppression growth above and below the characteristic frequency. The model focused more on nonlinear tuning properties, such as the compressive changes in gain and bandwidth as a function of stimulus level, the associated changes in the phase-locked responses, and two-tone suppression (Zhang et al., 2001). Bruce et al. expanded the aforesaid model of the auditory periphery to assess the effects of acoustic trauma on AN responses. The model incorporated the responses of the outer hair cells (OHCs) and inner hair cells (IHCs) to increase the accuracy in predicting responses to speech sounds. Their study was limited to low- and moderate-level responses (Bruce et al., 2003). The schematic diagram of their model is shown in Fig. 2.7.

Figure 2.7: The model of the auditory peripheral system developed by Bruce et al. (Bruce et al., 2003), modified from Zhang et al. (Zhang et al., 2001)


Figure 2.8: Schematic diagram of the auditory periphery. The model consists of an ME filter, a feed-forward control path, two signal paths (C1 and C2), the inner hair cell (IHC), and the outer hair cell (OHC), followed by the synapse model with a spike generator. (Zilany & Bruce, 2006).

2.5.1

Description of the computational model of AN

In this study, we used the AN model developed by Zilany et al., which is described in this section (Zilany & Bruce, 2006). In 2006, the model of the auditory periphery developed by Bruce et al. (2003) was improved to simulate more realistic responses of AN fibers to simple and complex stimuli for a range of characteristic frequencies. Figure 2.8 shows the model of the auditory periphery developed by Zilany et al. (Zilany & Bruce, 2006). The model introduced two modes of BM excitation, together with the IHC and OHC, so that the physiological BM function is represented by two filter components, C1 and C2. The C1 filter was designed to address low- and intermediate-level responses, whereas C2 was introduced as a second mode of excitation to the IHC that produces the high-level effects of the cochlea. The C2 filter output is followed by the C2 transduction function, which produces the high-level effects and the transition region. This feature makes the Zilany-Bruce model effective over a wider dynamic range of BM characteristic frequencies than previous AN models (Zilany & Bruce, 2006, 2007; Zilany et al., 2009).

2.5.1.1 C1 Filter:

The C1 filter is a type of linear chirping filter that is used to produce the frequency glides and BF shifts observed in the auditory periphery. The C1 filter was designed to address low- and intermediate-level responses of the cochlea. The output of the C1 filter closely resembles the primary mode of vibration of the BM and acts as the excitatory input to the IHC. The filter order has a great impact on the sharpness of the tuning curve. If the filter order is too high, the filter remains sharply tuned even at high SPLs or in the case of OHC impairment. To make the FTC of the filter more realistic in both normal and impaired conditions, a filter order of 10 was used instead of 20 (Tan & Carney, 2003).
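The effect of filter order on tuning sharpness can be illustrated with a simplified calculation. The sketch below is not the chirp filter of the model: it assumes a cascade of identical first-order resonant sections, each with power response 1 / (1 + (Δf / b)^2), for which the half-power bandwidth of the cascade is 2b·sqrt(2^(1/n) − 1). The function name and the section bandwidth of 100 Hz are hypothetical.

```python
import math


def cascade_half_power_bandwidth(order, section_bandwidth_hz):
    """Half-power (-3 dB) bandwidth of `order` identical resonant sections.

    Each section is assumed to have the power response
    1 / (1 + (df / b)**2), so the cascade has (1 + (df / b)**2)**(-order).
    This is an illustrative simplification, not the model's C1 filter.
    """
    if order < 1:
        raise ValueError("order must be at least 1")
    return 2.0 * section_bandwidth_hz * math.sqrt(2.0 ** (1.0 / order) - 1.0)


# Compare the two filter orders mentioned in the text,
# using an arbitrary (hypothetical) section bandwidth of 100 Hz.
bw_order_10 = cascade_half_power_bandwidth(10, 100.0)
bw_order_20 = cascade_half_power_bandwidth(20, 100.0)

print(f"order 10: {bw_order_10:.1f} Hz, order 20: {bw_order_20:.1f} Hz")
```

With the same per-section bandwidth, the higher-order cascade is more sharply tuned, which is consistent with the choice of the lower order to obtain broader, more realistic tuning.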

2.5.1.2 Feed forward control path (including OHC):

The feed-forward control path uses the output of the C1 filter to regulate the gain and bandwidth of the BM filter so that they reflect several level-dependent properties of the cochlea. The nonlinearity of the AN model that represents an active cochlea is modelled in this control path. This path involves three main stages:

i) Gammatone filter:

The control-path filter is a time-varying filter that is the product of a gamma distribution and a sinusoidal tone, with a center frequency and bandwidth broader than those of the C1 filter. The broader bandwidth of the control-path filter produces two-tone rate suppression in the model output.


ii) Boltzmann function:

This symmetric nonlinear function was introduced to control the dynamic range and the time course of compression in the model.

iii) A nonlinear function that converts the low-pass filter output to a time-varying time constant for the C1 filter. Any impairment of the OHC is controlled by the parameter COHC, and the output is also used to control the nonlinearity of the cochlea.
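The gammatone shape named in stage i), a gamma-distribution envelope multiplied by a sinusoidal tone, can be sketched as follows. This is a minimal, time-invariant illustration and not the time-varying control-path filter of the model. The order of 4, the sampling rate, the center frequency, and the two bandwidth values are assumptions chosen only to show that a larger bandwidth parameter gives broader tuning.

```python
import numpy as np


def gammatone_ir(fc, bandwidth, fs=100_000, order=4, duration=0.02):
    """Gammatone impulse response: t**(n-1) * exp(-2*pi*b*t) * cos(2*pi*fc*t).

    fc and bandwidth are in Hz. All default values are illustrative
    assumptions, not parameters of the AN model.
    """
    t = np.arange(int(duration * fs)) / fs
    envelope = t ** (order - 1) * np.exp(-2.0 * np.pi * bandwidth * t)
    ir = envelope * np.cos(2.0 * np.pi * fc * t)
    return ir / np.max(np.abs(ir))  # normalise the peak amplitude to 1


def half_power_bandwidth(ir, fs, n_fft=1 << 16):
    """Width in Hz of the region where the power spectrum is within 3 dB of its peak."""
    power = np.abs(np.fft.rfft(ir, n=n_fft)) ** 2
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    passband = freqs[power >= 0.5 * power.max()]
    return passband.max() - passband.min()


fs = 100_000
narrow = gammatone_ir(fc=2000.0, bandwidth=100.0, fs=fs)  # stand-in for a sharper filter
broad = gammatone_ir(fc=2000.0, bandwidth=400.0, fs=fs)   # stand-in for a broader filter

bw_narrow = half_power_bandwidth(narrow, fs)
bw_broad = half_power_bandwidth(broad, fs)
print(f"narrow: {bw_narrow:.0f} Hz, broad: {bw_broad:.0f} Hz")
```

A broader filter of this kind also passes energy from tones away from the center frequency, which is the property the text associates with two-tone rate suppression.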

The nonlinearity of the cochlea is controlled inside the feed-forward control path according to the SPL of the stimulus. At low stimulus SPLs, the control path responds with its maximum output. The tuning curve is therefore sharp with maximum gain, and the filter behaves linearly. In response to moderate stimulus levels, the control signal deviates substantially from the maximum and varies dynamically between maximum and minimum. The tuning curve of the corresponding filter becomes broader, and the gain of the filter is reduced. At very high stimulus SPLs, the control-path output saturates at its minimum level, and the filter again becomes effectively linear, with broad tuning and low gain.

2.5.1.3 C2 Filter:

The C2 filter, which is parallel to the C1 filter, is a wideband filter with its broadest possible tuning (i.e. at 40 kHz). The filter was introduced on the basis of Kiang's two-factor cancellation hypothesis, in which the stimulus level affects the C2 transduction function that follows the output of the C2 filter. The hypothesis states that 'the interaction between the two paths produces effects such as the C1/C2 transition and peak splitting in the period histogram' (Zilany & Bruce, 2006). To be consistent with the behavioral