Technische Universität München

Department of Mathematics

Bachelor’s Thesis

Random Walks

Igor Faddeenkov

Supervisor: Silke Rolles

Advisor: Silke Rolles

Submission Date: 1.9.2016


I hereby declare that this bachelor’s thesis is my own work and that no other sources have been used except those clearly indicated and referenced.

Garching, 26.08.2016

Abstract

This bachelor's thesis first settles the question of whether a random walk is necessarily either recurrent or transient. Several kinds of random walks are then introduced and examined for recurrence. After that, various criteria for investigating random walks in different dimensions are given. As an outlook, we consider random walks that are "truly" three-dimensional.

Contents

1 Introduction
2 Prerequisites
3 The Hewitt-Savage zero-one law
4 Recurrence
4.1 Investigation of recurrence on random walks
4.2 Chung-Fuchs-Theorem
4.3 Alternative criterion for recurrence
5 Outlook
6 Conclusion

1 Introduction

"Even a blind squirrel finds a nut once in a while." This proverb may well be true, since there are a great many nuts in the world. But imagine there were only one nut in the whole world: would the proverb still hold?

Mathematicians such as Pólya showed that the answer depends on the "space" the squirrel lives in. We will see later that if the squirrel could only move forward or backward (say, because of limited mobility), or if the earth were flat, this modified proverb would be true. However, if the squirrel got wings and could fly in every direction, then with positive probability it would never find the nut again.

To verify this, one could observe the squirrel and wait for its return to the nut. This is the approach a physicist might prefer, and it may well produce results. However, such experiments can only cover the one-, two- and three-dimensional cases, and one may ask what happens in the d-dimensional case. Here, experiments unfortunately fail. We will therefore introduce the concept of "random walks", which model the movements of our blind squirrel (assuming that its remaining senses are disabled as well). Using concepts from probability theory, we will identify conditions under which the walk returns to its starting point.


2 Prerequisites

Since random walks cannot be analysed without the right mathematical tools, this chapter introduces some basic definitions and recalls important facts from probability theory. Throughout this work, we follow the presentation of Durrett [1].

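Definition 2.1. A probability space is a triple $(\Omega, \mathcal{F}, P)$, where $\Omega$ is a set, $\mathcal{F} \subseteq \mathcal{P}(\Omega)$ is a σ-algebra and $P : \mathcal{F} \to [0, 1]$ is a probability measure, i.e. a measure with $P(\Omega) = 1$.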

Definition 2.2. Let $(X_i)_{i \in \mathbb{N}}$ be a sequence of independent and identically distributed (iid) random variables taking values in $\mathbb{R}^d$, $d \ge 1$.

We now fix a concrete probability space to work on. The canonical choice is the one from the proof of Kolmogorov's extension theorem:

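Definition 2.3. From now on, let
$\Omega = (\mathbb{R}^d)^{\mathbb{N}}$,
$\mathcal{F} = \mathcal{B}(\mathbb{R}^d)^{\otimes \mathbb{N}}$, the product σ-algebra, i.e. the smallest σ-algebra generated by the sets $\{\omega : \omega_i \in B_i,\ 1 \le i \le n\}$ with $B_i \in \mathcal{B}(\mathbb{R}^d)$, $n \in \mathbb{N}$. Here, it suffices to take for the $B_i$ the finite-dimensional rectangles $(a_i, b_i]$, $-\infty \le a_i < b_i < \infty$,
$\mu = \mathcal{L}(X_i)$, the distribution of $X_i$, $i \in \mathbb{N}$.

On $\big((\mathbb{R}^d)^{\mathbb{N}}, \mathcal{B}(\mathbb{R}^d)^{\otimes \mathbb{N}}\big)$, Kolmogorov's extension theorem yields the unique probability measure $P = \mu^{\otimes \mathbb{N}}$ which fulfils
$$P\big(\omega : \omega_i \in (a_i, b_i]\ \forall\, 1 \le i \le n\big) = \mu^{\otimes n}\big((a_1, b_1] \times \dots \times (a_n, b_n]\big)$$
for all $-\infty \le a_i < b_i < \infty$ and all $n \in \mathbb{N}$. Finally, we consider the random variables $X_n(\omega) = \omega_n$, i.e.
$$X_n : \Omega \to \mathbb{R}^d, \quad (\omega_j)_{j \in \mathbb{N}} \mapsto \omega_n,$$
which is the projection onto the $n$-th component of $\omega \in (\mathbb{R}^d)^{\mathbb{N}}$.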

We are now ready to state our key definition:

Definition 2.4. A random walk $(S_n)_{n \in \mathbb{N}_0}$ is defined by
$$S_0 = 0 \quad \text{and} \quad S_n = X_1 + \dots + X_n = \sum_{i=1}^{n} X_i, \quad n \ge 1.$$

Example 2.5. A random walk as defined above with $d = 1$ and $P(X_i = 1) = P(X_i = -1) = \frac{1}{2}$ is called a simple random walk (SRW).
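Definition 2.4 and Example 2.5 translate directly into code. The following Python sketch is restricted to $d = 1$ for simplicity, and the function names are our own, hypothetical choices: it builds the path $S_0, S_1, \dots, S_n$ from a list of steps and simulates the simple random walk with the standard-library `random` module.

```python
import random

def random_walk(steps):
    """Return the path [S_0, S_1, ..., S_n] with S_0 = 0 and S_k = X_1 + ... + X_k."""
    path = [0]
    for x in steps:
        path.append(path[-1] + x)
    return path

def simple_random_walk(n, seed=None):
    """Simulate n steps of the simple random walk on Z: P(X_i = 1) = P(X_i = -1) = 1/2."""
    rng = random.Random(seed)
    steps = [rng.choice((-1, 1)) for _ in range(n)]
    return random_walk(steps)

print(random_walk([1, -1, -1, 1, 1]))  # [0, 1, 0, -1, 0, 1]
print(simple_random_walk(10, seed=42))
```

Passing a seed makes a simulated path reproducible; the same `random_walk` helper works for any list of real-valued steps.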

Definition 2.6. A multinomial coefficient is defined as follows: let $(k_i)_{1 \le i \le m}$ be a sequence of non-negative integers with $\sum_{i=1}^{m} k_i = n$. Then
$$\binom{n}{k_1, \dots, k_m} = \frac{n!}{k_1! \cdot \ldots \cdot k_m!}.$$

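This leads to a generalization of the binomial theorem, which we state without proof:

Theorem 2.7 (Multinomial theorem). Let $m \in \mathbb{N}$, $n \in \mathbb{N}_0$ and $x_j \in \mathbb{R}$, $1 \le j \le m$. Then
$$(x_1 + \dots + x_m)^n = \sum_{k_1 + \dots + k_m = n} \binom{n}{k_1, \dots, k_m} \prod_{j=1}^{m} x_j^{k_j},$$
where the sum runs over all tuples $(k_1, \dots, k_m)$ of non-negative integers with $k_1 + \dots + k_m = n$.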
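As a sanity check of Definition 2.6 and Theorem 2.7, the following Python sketch (helper names are hypothetical) computes multinomial coefficients with exact integer arithmetic and compares the right-hand side of the multinomial theorem, summed by brute force over all $(k_1, \dots, k_m)$, with $(x_1 + \dots + x_m)^n$. The case $x_1 = x_2 = x_3 = 1$ gives $\sum \binom{n}{k_1, k_2, k_3} = 3^n$, the identity used later in the proof of Theorem 4.10 for $d = 3$.

```python
from itertools import product
from math import factorial, prod

def multinomial(ks):
    """n! / (k_1! ... k_m!) for non-negative integers k_i with n = k_1 + ... + k_m."""
    result = factorial(sum(ks))
    for k in ks:
        result //= factorial(k)  # every intermediate quotient is an integer
    return result

def multinomial_rhs(xs, n):
    """Right-hand side of Theorem 2.7: sum over all (k_1, ..., k_m) with k_1 + ... + k_m = n."""
    total = 0
    for ks in product(range(n + 1), repeat=len(xs)):
        if sum(ks) == n:
            total += multinomial(ks) * prod(x ** k for x, k in zip(xs, ks))
    return total

print(multinomial((2, 1, 1)))         # 12
print(multinomial_rhs([1, 2, 3], 4))  # 1296 = (1 + 2 + 3)**4
print(multinomial_rhs([1, 1, 1], 5))  # 243 = 3**5
```

The brute-force enumeration is only meant for small $m$ and $n$; it makes the index set of the sum in Theorem 2.7 explicit rather than being efficient.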

We now return to the concepts from probability theory that we need for our investigation of random walks:

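Definition 2.8. Let $(A_n)_{n \in \mathbb{N}}$ be a sequence with $A_n \in \mathcal{F}$ for all $n \in \mathbb{N}$. We define the event "$A_n$ infinitely often (i.o.)" as
$$\{A_n \text{ i.o.}\} = \limsup_{n \to \infty} A_n = \bigcap_{n=1}^{\infty} \bigcup_{k=n}^{\infty} A_k.$$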

Definition 2.9. Let $(X_n)_{n \in \mathbb{N}}$ be a sequence of iid random variables and let $\mathcal{F}_n = \sigma(X_1, \dots, X_n)$, which represents the information available at time $n$. A random variable $\tau$ taking values in $\{1, 2, \dots\} \cup \{\infty\}$ is called a stopping time if $\{\tau = n\} \in \mathcal{F}_n$ for all $n < \infty$.

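Example 2.10. We claim that $N = \inf\{k : |S_k| \ge x\}$, $x \in \mathbb{R}$, is a stopping time. To prove this, we simply verify the definition: since each $S_j$, $j \le k$, is a function of $X_1, \dots, X_j$,
$$\{N = k\} = \{|S_1| < x, |S_2| < x, \dots, |S_{k-1}| < x, |S_k| \ge x\} \in \sigma(X_1, \dots, X_k) = \mathcal{F}_k.$$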

More generally, we have:

Example 2.11. For $A \in \mathcal{B}(\mathbb{R}^d)$, $N = \inf\{n : S_n \in A\}$ is a stopping time (see [2]). It is called the hitting time of $A$.
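The hitting time of Example 2.11 can be computed along a finite simulated path. In the following sketch (the name `hitting_time` is our own), deciding whether $N = k$ only inspects $S_1, \dots, S_k$, which mirrors the stopping-time property $\{N = k\} \in \mathcal{F}_k$. Following Definition 2.9, we let the index start at 1, and we return `math.inf` if the set is not reached within the path (the infimum of the empty set).

```python
import math

def hitting_time(path, in_A):
    """Return N = inf{k >= 1 : S_k in A} for a finite path [S_0, S_1, ..., S_n].

    in_A is a predicate deciding membership in A; math.inf is returned
    if A is not hit within the given path.
    """
    for k in range(1, len(path)):
        if in_A(path[k]):  # only S_1, ..., S_k have been inspected so far
            return k
    return math.inf

# Example 2.10 with x = 3: N = inf{k : |S_k| >= 3}
path = [0, 1, 2, 1, 2, 3, 4]
print(hitting_time(path, lambda s: abs(s) >= 3))  # 5
```

Changing the path after time $N$ never changes the returned value, which is exactly what the stopping-time property expresses.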


Remark 2.12. From now on, $\|\cdot\|$ denotes the maximum norm:
$$\|x\| = \sup_{1 \le i \le d} |x_i|.$$

Definition 2.13. We call $x \in \mathbb{R}^d$ a possible point of the random walk $(S_n)_{n \in \mathbb{N}_0}$ if for every $\varepsilon > 0$ there is an $m \in \mathbb{N}_0$ with $P(\|S_m - x\| < \varepsilon) > 0$.

Definition 2.14. We call $x \in \mathbb{R}^d$ a recurrent point of the random walk $(S_n)_{n \in \mathbb{N}_0}$ if for every $\varepsilon > 0$: $P(\|S_n - x\| < \varepsilon \text{ i.o.}) = 1$.

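Remark 2.15. All recurrent points of a random walk $(S_n)_{n \in \mathbb{N}_0}$ are possible points: if $P(\|S_n - x\| < \varepsilon \text{ i.o.}) = 1$, then in particular $P(\|S_m - x\| < \varepsilon) > 0$ for some $m$.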


3 The Hewitt-Savage zero-one law

We are now ready to begin our investigation of random walks $(S_n)_{n \in \mathbb{N}_0}$. Our aim here is to prove that the probability that $(S_n)$ visits a given set infinitely often is either 0 or 1, with nothing "in between". First, however, we need some preparations:

Definition 3.1. Let $(X_n)_{n \in \mathbb{N}}$ be a sequence of random variables. The tail σ-algebra of $(X_n)_{n \in \mathbb{N}}$ is defined by
$$\mathcal{T} = \bigcap_{n=1}^{\infty} \sigma(X_n, X_{n+1}, \dots).$$

Remark 3.2. If an event $A$ lies in the tail σ-algebra $\mathcal{T}$, then whether or not $A$ occurs does not depend on the values of finitely many $X_i$. In particular, it is not affected by permuting finitely many of the $X_i$.

This leads to the following theorem, whose proof is given in [2]:

Theorem 3.3 (Kolmogorov’s Zero-One Law). Let (Xn )n∈N be a sequence

of independent random variables and A ∈ T . Then P (A) ∈ {0, 1}.

Since we investigate random walks $(S_n)_{n \in \mathbb{N}_0}$, we have to check whether Kolmogorov's zero-one law applies. Unfortunately, it cannot be applied to $\{S_n \in B \text{ i.o.}\}$, $B \in \mathcal{B}(\mathbb{R}^d)$: the partial sums $S_n$ are not independent, and since changing $X_1$ shifts every $S_n$, this event is in general not a tail event of $(X_n)$ either.

As a consequence, we need a different zero-one law, one that covers events defined in terms of the iid sequence $(X_n)_{n \in \mathbb{N}}$ which are invariant under finite permutations. First, some definitions.

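Definition 3.4. A finite permutation of $\mathbb{N}$ is a bijection $\pi : \mathbb{N} \to \mathbb{N}$ with $\pi(i) \neq i$ for only finitely many $i \in \mathbb{N}$. For $\omega \in \Omega$ we write $\omega_\pi = (\omega_{\pi(i)})_{i \in \mathbb{N}}$ (also denoted by $\pi(\omega)$).

Definition 3.5. An event $A \in \mathcal{F}$ is permutable if it is invariant under finite permutations $\pi$ of the $X_i(\omega) = \omega_i$, i.e. if $\omega \in A \Leftrightarrow \omega_\pi \in A$ for every finite permutation $\pi$.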

Remark 3.6. In other words, the occurrence of a permutable event $A$ is not affected if finitely many of the random variables are rearranged.

Remark 3.7. One can show that the collection of permutable events is a σ-algebra. It is called the exchangeable σ-algebra, and we denote it by $\mathcal{E}$.

Remark 3.8. All events in the tail σ-algebra T are permutable.


We now have all the tools needed to state the main theorem of this section.

Theorem 3.9 (Hewitt-Savage 0-1 law). If X1 , X2 , ... are i.i.d. and A ∈ E,

then P (A) ∈ {0, 1}.

Proof. Let $A \in \mathcal{E}$. The idea is to show that $A$ is independent of itself, so that
$$P(A) = P(A \cap A) = P(A) \cdot P(A) = P(A)^2,$$
from which $P(A) \in \{0, 1\}$ follows.

To this end, we approximate $A$ by events $A_n \in \sigma(X_1, \dots, X_n)$ such that
$$P(A_n \,\Delta\, A) \to 0 \quad \text{as } n \to \infty,$$
where
$$A_n \,\Delta\, A = (A \setminus A_n) \cup (A_n \setminus A) = (A \cup A_n) \setminus (A \cap A_n)$$
denotes the symmetric difference. That such events do indeed exist is proven in Lemma 2.29 of [2].

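Write $A_n = \{\omega : (\omega_1, \dots, \omega_n) \in B_n\}$, where $\omega_i = X_i(\omega)$ and $B_n \in \mathcal{B}((\mathbb{R}^d)^n)$. We now use exchangeability: let $\tilde{A}_n = \{\omega : (\omega_{n+1}, \dots, \omega_{2n}) \in B_n\}$.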

We define the finite permutation $\pi$ by
$$\pi(i) = \begin{cases} i + n, & i \in \{1, \dots, n\}, \\ i - n, & i \in \{n + 1, \dots, 2n\}, \\ i, & i > 2n, \end{cases}$$
which swaps the first $n$ coordinates with the next $n$ and therefore sends $A_n$ to $\tilde{A}_n$, i.e.
$$\omega \in A_n \Leftrightarrow \pi(\omega) \in \tilde{A}_n.$$
Observe that $\pi^2 = \mathrm{Id}$, since for $i \in \{1, \dots, n\}$
$$\pi(\pi(i)) = \pi(i + n) = i + n - n = i;$$
the other cases are analogous.

As the $X_i$, $i \ge 1$, are iid, $P$ is invariant under $\pi$, and we have
$$P(\omega : \omega \in A_n \Delta A) = P(\omega : \pi(\omega) \in A_n \Delta A). \tag{1}$$

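Moreover, since $A$ is permutable and $\pi^2 = \mathrm{Id}$, we obtain (indeed, $\pi(\omega) \in A \Leftrightarrow \omega \in A$, and $\pi(\omega) \in A_n \Leftrightarrow \pi(\pi(\omega)) = \omega \in \tilde{A}_n$)
$$\{\omega : \pi(\omega) \in A_n \Delta A\} = \{\omega : \omega \in \tilde{A}_n \Delta A\}. \tag{2}$$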

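Note also that $A_n$ and $\tilde{A}_n$ are independent, as $A_n \in \sigma(X_1, \dots, X_n)$ and $\tilde{A}_n \in \sigma(X_{n+1}, \dots, X_{2n})$. We have
$$P(A_n \Delta A) = P(\{\omega : \omega \in A_n \Delta A\}) = P(\{\omega : \pi(\omega) \in A_n \Delta A\}) = P(\{\omega : \omega \in \tilde{A}_n \Delta A\}) = P(\tilde{A}_n \Delta A), \tag{3}$$
where the second and third equalities follow from (1) and (2), respectively.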

From
$$|P(A_n) - P(A)| = |P(A_n \setminus A) + P(A_n \cap A) - P(A \setminus A_n) - P(A \cap A_n)| \le P(A_n \setminus A) + P(A \setminus A_n) = P(A_n \Delta A)$$
and the same estimate with $\tilde{A}_n$ in place of $A_n$, we obtain with (3) that $|P(A_n) - P(A)| \to 0$ and $|P(\tilde{A}_n) - P(A)| \to 0$. Therefore,
$$P(A_n) \to P(A) \quad \text{and} \quad P(\tilde{A}_n) \to P(A). \tag{4}$$

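Furthermore, we want to prove
$$P(\tilde{A}_n \Delta A_n) \to 0. \tag{5}$$
To show (5), note that
$$A_n \setminus \tilde{A}_n \subseteq (A_n \setminus A) \cup (A \setminus \tilde{A}_n), \qquad \tilde{A}_n \setminus A_n \subseteq (\tilde{A}_n \setminus A) \cup (A \setminus A_n).$$
Therefore, and by (3),
$$P(A_n \Delta \tilde{A}_n) = P(A_n \setminus \tilde{A}_n) + P(\tilde{A}_n \setminus A_n) \le P(A_n \setminus A) + P(A \setminus A_n) + P(\tilde{A}_n \setminus A) + P(A \setminus \tilde{A}_n) = P(A_n \Delta A) + P(A \Delta \tilde{A}_n) \to 0,$$
which shows (5). Since
$$0 \le P(A_n) - P(A_n \cap \tilde{A}_n) \le P(A_n \cup \tilde{A}_n) - P(A_n \cap \tilde{A}_n) = P(A_n \Delta \tilde{A}_n) \to 0,$$
we have $\lim_{n \to \infty} \big(P(A_n) - P(A_n \cap \tilde{A}_n)\big) = 0$, and hence $P(A_n \cap \tilde{A}_n) \to P(A)$ by (4). As $A_n$ and $\tilde{A}_n$ are independent, we finally have
$$P(A_n \cap \tilde{A}_n) = P(A_n) \cdot P(\tilde{A}_n) \to P(A)^2.$$
Thus, $P(A) = P(A)^2$ and therefore $P(A) \in \{0, 1\}$.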


To return to our random walks, we will now apply the Hewitt-Savage 0-1 law

in order to show:

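Theorem 3.10. If $(S_n)_{n \in \mathbb{N}_0}$ is a random walk on $\mathbb{R}$, then one of the following four possibilities occurs with probability one:
(i) $S_n = 0$ for all $n \in \mathbb{N}$,
(ii) $S_n \to \infty$,
(iii) $S_n \to -\infty$,
(iv) $-\infty = \liminf_{n \to \infty} S_n < \limsup_{n \to \infty} S_n = \infty$.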

Proof. For $c \in [-\infty, \infty]$, the event $A = \{\omega : \limsup_{n \to \infty} S_n = c\}$ is permutable. Thus, by the Hewitt-Savage 0-1 law (Theorem 3.9), $\limsup_{n \to \infty} S_n$ is a.s. equal to a constant $c \in [-\infty, \infty]$.
Let $\tilde{S}_n = S_{n+1} - X_1$. Then $\tilde{S}_n$ and $S_n$ have the same distribution, so $\limsup_{n} \tilde{S}_n = c$ a.s. as well. Since
$$c = \limsup_{n \to \infty} \tilde{S}_n = \limsup_{n \to \infty} S_{n+1} - X_1 = c - X_1,$$
we obtain $X_1 = 0$ a.s. if $c$ is finite, which is case (i). If $P(X_1 = 0) < 1$, then $c$ must be either $\infty$ or $-\infty$, i.e. we have either
$$\limsup_{n \to \infty} S_n = \infty \quad \text{or} \quad \limsup_{n \to \infty} S_n = -\infty.$$
Applying the same arguments to $\liminf_{n \to \infty} S_n$, we also get either
$$\liminf_{n \to \infty} S_n = -\infty \quad \text{or} \quad \liminf_{n \to \infty} S_n = \infty.$$
Since $\liminf S_n \le \limsup S_n$, the only remaining combinations are (ii), (iii) and (iv), and the statement is shown.

An application of this theorem is the following example:

Example 3.11. Let $(X_n)_{n \in \mathbb{N}}$ be a sequence of iid random variables in $\mathbb{R}$ whose distribution is symmetric about 0 and satisfies $P(X_i = 0) < 1$. We show that we are in case (iv) of Theorem 3.10.
Since $P(X_i = 0) < 1$, case (i) is not possible. Now assume that (ii) occurs with probability one. By symmetry, $(-S_n)_{n \in \mathbb{N}_0}$ has the same distribution as $(S_n)_{n \in \mathbb{N}_0}$, so (iii) would occur with probability one as well, which is impossible since (ii) and (iii) exclude each other. The same argument excludes (iii). Since one of the four possibilities occurs with probability one, we must be in case (iv).


Remark 3.12. By Example 3.11, the simple symmetric random walk, which is symmetric about 0, satisfies
$$-\infty = \liminf_{n \to \infty} S_n < \limsup_{n \to \infty} S_n = \infty$$
almost surely. Since it cannot skip over any integers, it therefore visits every integer infinitely often with probability 1.

We conclude this section with an example which leads us directly to the study of recurrence:

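Example 3.13. Let $(B_n)_{n \in \mathbb{N}}$ be a sequence of sets $B_n \in \mathcal{B}(\mathbb{R}^d)$. Then, for a random walk $(S_n)_{n \in \mathbb{N}_0}$, we have $P(S_n \in B_n \text{ i.o.}) \in \{0, 1\}$.
To show this, let $\pi$ be a finite permutation and choose $K$ with $\pi(i) = i$ for all $i > K$. Then $\pi$ maps $\{1, \dots, K\}$ onto itself, so permuting the $X_i$ by $\pi$ does not change $S_n$ for any $n \ge K$. Therefore, the sets
$$A_k = \bigcup_{n=k}^{\infty} \{S_n \in B_n\}$$
are invariant under $\pi$ for all $k \ge K$. Furthermore, the $A_k$ are decreasing in $k$, so $\bigcap_{k=1}^{\infty} A_k = \bigcap_{k=K}^{\infty} A_k$ is invariant under $\pi$ as well. As, by Definition 2.8,
$$\bigcap_{k=1}^{\infty} A_k = \bigcap_{k=1}^{\infty} \bigcup_{n=k}^{\infty} \{S_n \in B_n\} = \{S_n \in B_n \text{ i.o.}\},$$
and $\pi$ was arbitrary, this event is permutable, i.e. it lies in $\mathcal{E}$, and Theorem 3.9 yields
$$P(S_n \in B_n \text{ i.o.}) \in \{0, 1\}.$$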


4 Recurrence

4.1 Investigation of recurrence on random walks

We start with some basic facts on which the rest of this chapter builds.

Definition 4.1. We denote by $\mathcal{R}$ the set of recurrent points and by $\mathcal{P}$ the set of possible points.

Theorem 4.2. If $\mathcal{R} \neq \emptyset$, then $\mathcal{R} = \mathcal{P}$.

Proof. We use the idea of [3]. Let $\mathcal{R} \neq \emptyset$. Since $\mathcal{R} \subseteq \mathcal{P}$ by Remark 2.15, we need to show $\mathcal{P} \subseteq \mathcal{R}$. First, we show:
$$\text{If } r \in \mathcal{R} \text{ and } p \in \mathcal{P}, \text{ then } r - p \in \mathcal{R}. \tag{6}$$
Assume the opposite, i.e. $r - p \notin \mathcal{R}$. Then, by the definition of a recurrent point, there exists $\varepsilon > 0$ with $P(\|S_n - (r - p)\| < 2\varepsilon \text{ i.o.}) < 1$. Therefore, we can find an $M \in \mathbb{N}$ with $P(\tilde{B}) > 0$, where
$$\tilde{B} = \{\|S_n - (r - p)\| \ge 2\varepsilon \ \forall n \ge M\}.$$
On the other hand, since $p$ is a possible point, there exists $N \in \mathbb{N}_0$ with $P(A) > 0$, where, for a better overview, we define
$$A = \{\|S_N - p\| < \varepsilon\}, \qquad B = \{\|S_n - S_N - (r - p)\| \ge 2\varepsilon \ \forall n \ge N + M\}. \tag{7}$$
For $n > N$, $S_n - S_N = X_{N+1} + \dots + X_n$ does not depend on the first $N$ steps of the random walk. Furthermore, $S_{n-N} = X_1 + \dots + X_{n-N}$ has the same distribution as $S_n - S_N$. We obtain
$$P(B) = P(\tilde{B}) > 0.$$
Moreover, $A$ and $B$ are independent, since $A \in \sigma(X_1, \dots, X_N)$ and $B \in \sigma(X_{N+1}, X_{N+2}, \dots)$.
On $A \cap B$, we obtain with the triangle inequality, for all $n \ge N + M$,
$$2\varepsilon \le \|(S_n - r) - (S_N - p)\| \le \|S_n - r\| + \|S_N - p\| < \|S_n - r\| + \varepsilon,$$
so that $\|S_n - r\| > \varepsilon$. Hence
$$\{\|S_n - S_N - (r - p)\| \ge 2\varepsilon \ \forall n \ge N + M\} \cap \{\|S_N - p\| < \varepsilon\} \subseteq \{\|S_n - r\| \ge \varepsilon \ \forall n \ge N + M\},$$
and by the independence of $A$ and $B$ we conclude
$$P(\|S_n - r\| \ge \varepsilon \ \forall n \ge N + M) \ge P(A \cap B) = P(A) \cdot P(B) > 0.$$
Since $r$ is a recurrent point, this is a contradiction, and (6) is proved.

It remains to show:
(i) $0 \in \mathcal{R}$,
(ii) $x \in \mathcal{R} \Rightarrow -x \in \mathcal{R}$.
Let $x \in \mathcal{R}$ (such an $x$ exists since $\mathcal{R} \neq \emptyset$). Then $x \in \mathcal{P}$.
(i) is obtained by taking $r = p = x$ in (6).
(ii) is then obtained by taking $r = 0$ and $p = x$ in (6).
To show $\mathcal{P} \subseteq \mathcal{R}$, let $y \in \mathcal{P}$. By (6) with $r = 0$ and $p = y$, we obtain $-y \in \mathcal{R}$, and by (ii) we conclude $y \in \mathcal{R}$.

Corollary 4.3. We have the following results:
(i) If $0 \in \mathcal{R}$, then $\mathcal{R} = \mathcal{P}$.
(ii) If $0 \notin \mathcal{R}$, then $\mathcal{R} = \emptyset$.
Proof. By Theorem 4.2, it is enough to have (at least) one recurrent point, which gives (i). In addition, if our random walk $(S_n)_{n \in \mathbb{N}_0}$ has any recurrent point $x \in \mathcal{R}$, then by (6) of the previous proof, $x - x = 0 \in \mathcal{R}$, which gives (ii). Therefore, it is enough to investigate whether 0 is a recurrent point or not.

Remark 4.4. The random walk $(S_n)_{n \in \mathbb{N}_0}$ is called transient if $\mathcal{R} = \emptyset$ and recurrent if $\mathcal{R} \neq \emptyset$.

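Remark 4.5. Since we showed that it suffices to check recurrence for the single point $0$, we can set $x = 0$ in Definition 2.14. For random walks on $\mathbb{Z}^d$, where $\|S_n\| < \varepsilon$ with $\varepsilon < 1$ means $S_n = 0$, we obtain
$$(S_n)_{n \in \mathbb{N}_0} \text{ is recurrent} \iff P(S_n = 0 \text{ i.o.}) = 1.$$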

An important fact is the following:

Remark 4.6. By Example 3.13 (with $B_n = \{0\}$), $\{S_n = 0 \text{ i.o.}\}$ is a permutable event, and hence
$$P(S_n = 0 \text{ i.o.}) < 1 \implies P(S_n = 0 \text{ i.o.}) = 0, \qquad P(S_n = 0 \text{ i.o.}) > 0 \implies P(S_n = 0 \text{ i.o.}) = 1.$$

We continue with a fundamental example:


Example 4.7 (Simple random walk on $\mathbb{Z}^d$). The simple random walk on $\mathbb{Z}^d$ has steps with
$$P(X_i = e_j) = P(X_i = -e_j) = \frac{1}{2d}$$
for each of the $d$ unit vectors $e_j$. Let $\tau_0 = 0$ and let $\tau_n = \inf\{m > \tau_{n-1} : S_m = 0\}$ be the time of the $n$-th return to 0. Since the SRW can be interpreted as a Markov chain and $\tau_n$ is a stopping time, the strong Markov property (see [4]) gives
$$P(\tau_n < \infty) = P(\tau_1 < \infty)^n.$$

Theorem 4.8. For any random walk, the following are equivalent:
(i) $P(\tau_1 < \infty) = 1$,
(ii) $P(S_m = 0 \text{ i.o.}) = 1$,
(iii) $\sum_{m=0}^{\infty} P(S_m = 0) = \infty$.

Proof. If $P(\tau_1 < \infty) = 1$, then $P(\tau_1 < \infty)^n = 1$ for all $n \in \mathbb{N}_0$. Therefore, $P(\tau_n < \infty) = 1$ for all $n$ by Example 4.7, which means that the walk returns to 0 infinitely often with probability 1. Thus, $P(S_m = 0 \text{ i.o.}) = 1$, which shows (i) ⇒ (ii).
Let
$$V = \sum_{m=0}^{\infty} \mathbb{1}_{\{S_m = 0\}} = \sum_{n=0}^{\infty} \mathbb{1}_{\{\tau_n < \infty\}}$$
denote the number of visits to 0, counting the visit at time 0. Taking expectations and using Fubini's theorem to interchange expectation and sum, we obtain
$$E[V] = \sum_{m=0}^{\infty} P(S_m = 0) = \sum_{n=0}^{\infty} P(\tau_n < \infty) = \sum_{n=0}^{\infty} P(\tau_1 < \infty)^n.$$
For $P(\tau_1 < \infty) < 1$, the geometric series gives
$$E[V] = \frac{1}{1 - P(\tau_1 < \infty)},$$
while for $P(\tau_1 < \infty) = 1$ we get $E[V] = \infty$.
If (ii) holds, then $S_m = 0$ for infinitely many $m$ a.s., so the sum of the indicator functions is a.s. infinite, and by Fubini's theorem
$$\sum_{m=0}^{\infty} P(S_m = 0) = E\Big[\sum_{m=0}^{\infty} \mathbb{1}_{\{S_m = 0\}}\Big] = \infty,$$
which shows (ii) ⇒ (iii). Finally, suppose $P(\tau_1 < \infty) \neq 1$. Then, by the computation above, $\sum_{m=0}^{\infty} P(S_m = 0) = E[V] < \infty$, which shows (iii) ⇒ (i) by contraposition.


In order to discuss recurrence and transience on $\mathbb{Z}^d$, we need a small lemma:

Lemma 4.9. Let $x_1, \dots, x_n \ge 0$ be real numbers with $\sum_{i=1}^{n} x_i = 1$. Then
$$\sum_{i=1}^{n} x_i^2 \le \max_{1 \le i \le n} x_i.$$
Proof.
$$\sum_{i=1}^{n} x_i^2 = \sum_{i=1}^{n} x_i \cdot x_i \le \sum_{i=1}^{n} x_i \cdot \max_{1 \le j \le n} x_j = 1 \cdot \max_{1 \le j \le n} x_j.$$
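A small worked instance of Lemma 4.9, with the arbitrarily chosen weights $x = (\tfrac12, \tfrac13, \tfrac16)$, which are non-negative and sum to 1:
$$\sum_{i=1}^{3} x_i^2 = \frac14 + \frac19 + \frac1{36} = \frac{9 + 4 + 1}{36} = \frac{7}{18} \approx 0.39 \le \frac12 = \max_{1 \le i \le 3} x_i.$$
In the proof of Theorem 4.10, the lemma is applied to the weights $3^{-n} \frac{n!}{m!\, l!\, (n-m-l)!}$, which sum to 1 by the multinomial theorem.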

With these tools, we can now prove a theorem which tells us that "all roads lead to Rome" (assuming, though, that the earth is a disc):

Theorem 4.10 (Pólya’s recurrence theorem). Simple random walk on

Zd is recurrent in d ≤ 2 and transient in d ≥ 3.

Proof. We define $\rho_d(m) := P(S_m = 0)$.
First, $\rho_d(m) = 0$ if $m$ is odd: each step changes the sum of the coordinates by $\pm 1$, so the walk needs an even number of steps to return to its starting point.
Second, we use Stirling's formula
$$n! \sim n^n e^{-n} \sqrt{2\pi n} \quad \text{as } n \to \infty$$
to obtain for $d = 1$ ($n \neq 0$):
$$\rho_1(2n) = P(S_{2n} = 0) = \binom{2n}{n} 2^{-2n} = \frac{(2n)!}{n! \cdot n!}\, 2^{-2n} \sim \frac{(2n)^{2n} e^{-2n} \sqrt{2\pi \cdot 2n}}{\big(n^n e^{-n} \sqrt{2\pi n}\big)^2}\, 2^{-2n} = \frac{2^{2n}}{\sqrt{\pi n}}\, 2^{-2n} = \frac{1}{\sqrt{\pi n}}.$$
Thus $\rho_1(2n) \sim \frac{1}{\sqrt{\pi n}}$ as $n \to \infty$. Since
$$\sum_{m=0}^{\infty} P(S_m = 0) = \sum_{n=0}^{\infty} P(S_{2n} = 0) = \infty,$$
because the terms are asymptotically equivalent to those of the divergent series $\sum_{n \ge 1} \frac{1}{\sqrt{\pi n}}$, the equivalence in Theorem 4.8 yields $P(S_m = 0 \text{ i.o.}) = 1$ and therefore recurrence for $d = 1$.
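The asymptotics $\rho_1(2n) \sim 1/\sqrt{\pi n}$ and the divergence of the series can be checked numerically. The Python sketch below (function names are hypothetical) computes $\rho_1(2n) = \binom{2n}{n} 2^{-2n}$ exactly via `math.comb` and accumulates the partial sums $\sum_{n=0}^{N} \rho_1(2n)$ using the recursion $\rho_1(2n+2) = \rho_1(2n)\,\frac{2n+1}{2n+2}$, which follows from the definition of the binomial coefficient. The ratio to $1/\sqrt{\pi n}$ should approach 1, while the partial sums keep growing roughly like $2\sqrt{N/\pi}$.

```python
from math import comb, pi, sqrt

def rho1(n):
    """Exact P(S_{2n} = 0) = binom(2n, n) / 4^n for the simple random walk on Z."""
    return comb(2 * n, n) / 4 ** n

def partial_sum_rho1(N):
    """Sum_{n=0}^{N} P(S_{2n} = 0), using rho1(n+1) = rho1(n) * (2n+1)/(2n+2)."""
    term, total = 1.0, 0.0
    for n in range(N + 1):
        total += term
        term *= (2 * n + 1) / (2 * n + 2)
    return total

for n in (1, 10, 100, 1000):
    # exact value, Stirling approximation, and their ratio
    print(n, rho1(n), 1 / sqrt(pi * n), rho1(n) * sqrt(pi * n))

for N in (10**2, 10**4, 10**6):
    print(N, partial_sum_rho1(N))  # grows without bound, roughly like 2 * sqrt(N / pi)
```

Python's exact integer division keeps `rho1` accurate even for large $n$, where $\binom{2n}{n}$ and $4^n$ individually exceed the floating-point range.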


For $d = 2$, we use a similar argument. Every path returning to 0 in $2n$ steps now has probability $4^{-2n}$. Such a path consists of the same number $k$ of steps up and down, and the same number $n - k$ of steps to the right and to the left. Of course, these steps are in arbitrary order, and $2k + 2(n - k) = 2n$. So we have
$$\rho_2(2n) = P(S_{2n} = 0) = 4^{-2n} \sum_{k=0}^{n} \binom{2n}{k, k, n-k, n-k} = 4^{-2n} \sum_{k=0}^{n} \frac{(2n)!}{(k!)^2 ((n-k)!)^2} \cdot \frac{(n!)^2}{(n!)^2} = 4^{-2n} \binom{2n}{n} \sum_{k=0}^{n} \binom{n}{k} \binom{n}{n-k}.$$
By Vandermonde's identity, $\sum_{k=0}^{n} \binom{n}{k} \binom{n}{n-k} = \binom{2n}{n}$, so the last expression can be written as
$$\rho_2(2n) = 2^{-4n} \binom{2n}{n}^2 = \Big(2^{-2n} \binom{2n}{n}\Big)^2 = \rho_1(2n)^2.$$
Finally, as $\rho_1(2n) \sim \frac{1}{\sqrt{\pi n}}$, we conclude that $\rho_2(2n) = \rho_1(2n)^2 \sim \frac{1}{\pi n}$. Recurrence follows from Theorem 4.8, as
$$\sum_{m=0}^{\infty} P(S_m = 0) = \sum_{n=0}^{\infty} \rho_2(2n) = \infty,$$
since the harmonic series $\sum_{n \ge 1} \frac{1}{\pi n}$ diverges.
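The identity $\rho_2(2n) = \rho_1(2n)^2$ obtained from Vandermonde's identity can also be confirmed with exact rational arithmetic. The sketch below (hypothetical function names) evaluates the multinomial sum from the proof with Python's `fractions.Fraction` and compares it with $\big(\binom{2n}{n} 2^{-2n}\big)^2$ for small $n$.

```python
from fractions import Fraction
from math import comb, factorial

def rho2_multinomial(n):
    """P(S_{2n} = 0) on Z^2 as 4^{-2n} * sum_k (2n)! / ((k!)^2 ((n-k)!)^2)."""
    total = sum(
        factorial(2 * n) // (factorial(k) ** 2 * factorial(n - k) ** 2)
        for k in range(n + 1)
    )
    return Fraction(total, 4 ** (2 * n))

def rho1_exact(n):
    """P(S_{2n} = 0) on Z as the exact fraction binom(2n, n) / 4^n."""
    return Fraction(comb(2 * n, n), 4 ** n)

for n in range(1, 11):
    assert rho2_multinomial(n) == rho1_exact(n) ** 2
print(rho2_multinomial(1), rho2_multinomial(2))  # 1/4 9/64
```

Exact fractions avoid any rounding, so the comparison checks the identity itself rather than a floating-point approximation of it.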

An alternative proof for $d = 2$ is the following: consider $\mathbb{Z}^2$ rotated by an angle of $45^\circ$. Then each step in $\mathbb{Z}^2$ corresponds to one step (up or down) of a random walk on $\mathbb{Z}$ together with one step (right or left) of another random walk on $\mathbb{Z}$. Since these two one-dimensional random walks are independent, we obtain
$$P(S_{2n} = 0) = \rho_1(2n)^2 \sim \Big(\frac{1}{\sqrt{\pi n}}\Big)^2 = \frac{1}{\pi n}.$$
By the same argument as above, we get recurrence for $d = 2$.

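For $d = 3$, we once again calculate $\rho_3(2n)$ explicitly. Every path returning to 0 in $2n$ steps now has probability $6^{-2n}$. Compared with $d = 2$, we simply gain a new direction, forward and backward. Therefore, we have $m$ steps up and $m$ steps down, $l$ steps to the left and $l$ steps to the right, and $n - m - l$ steps forward and $n - m - l$ steps backward. Again, these $2n$ steps are in arbitrary order. We compute
$$\begin{aligned} \rho_3(2n) &= 6^{-2n} \sum_{\substack{m, l \ge 0 \\ m + l \le n}} \binom{2n}{m, m, l, l, n-m-l, n-m-l} = 6^{-2n} \sum_{\substack{m, l \ge 0 \\ m + l \le n}} \frac{(2n)!}{(m!)^2 (l!)^2 ((n-m-l)!)^2} \cdot \frac{(n!)^2}{(n!)^2} \\ &= 6^{-2n} \binom{2n}{n} \sum_{\substack{m, l \ge 0 \\ m + l \le n}} \Big(\frac{n!}{m!\, l!\, (n-m-l)!}\Big)^2 = 2^{-2n} \binom{2n}{n} \sum_{\substack{m, l \ge 0 \\ m + l \le n}} \Big(3^{-n} \frac{n!}{m!\, l!\, (n-m-l)!}\Big)^2. \end{aligned}$$
By the multinomial theorem (Theorem 2.7) with $x_1 = x_2 = x_3 = 1$, we obtain for this sum
$$3^n = (1 + 1 + 1)^n = \sum_{\substack{m, l \ge 0 \\ m + l \le n}} \binom{n}{m, l, n-m-l} \cdot 1^m \cdot 1^l \cdot 1^{n-m-l} = \sum_{\substack{m, l \ge 0 \\ m + l \le n}} \frac{n!}{m!\, l!\, (n-m-l)!}, \quad \text{i.e.} \quad \sum_{\substack{m, l \ge 0 \\ m + l \le n}} 3^{-n} \frac{n!}{m!\, l!\, (n-m-l)!} = 1.$$
Hence the numbers $3^{-n} \frac{n!}{m!\, l!\, (n-m-l)!}$ are non-negative and sum to 1, and by Lemma 4.9 we can state
$$\rho_3(2n) \le 2^{-2n} \binom{2n}{n} \max_{\substack{m, l \ge 0 \\ m + l \le n}} 3^{-n} \frac{n!}{m!\, l!\, (n-m-l)!}.$$
The maximum is attained when $m$, $l$ and $n - m - l$ are as close as possible to $\frac{n}{3}$, i.e. equal to $\lfloor \frac{n}{3} \rfloor$ or $\lceil \frac{n}{3} \rceil$, where $\lfloor \cdot \rfloor$ and $\lceil \cdot \rceil$ denote the floor and ceiling functions. For $n$ divisible by 3, we obtain by Stirling's formula
$$\max_{\substack{m, l \ge 0 \\ m + l \le n}} 3^{-n} \frac{n!}{m!\, l!\, (n-m-l)!} = 3^{-n} \frac{n!}{\big((n/3)!\big)^3} \sim \frac{3^{-n} \sqrt{2\pi n}\, n^n e^{-n}}{\big(\sqrt{2\pi n / 3}\, (n/3)^{n/3} e^{-n/3}\big)^3} = \frac{3^{-n} (2\pi n)^{1/2} n^n}{(2\pi n / 3)^{3/2} (n/3)^n} = \frac{3\sqrt{3}}{2\pi n};$$
for other $n$, the maximum differs from this expression only by a factor tending to 1. Finally, putting all results together (where $\lesssim$ means "asymptotically at most"), we get
$$\rho_3(2n) \lesssim 2^{-2n} \binom{2n}{n} \cdot \frac{3\sqrt{3}}{2\pi n} \sim \frac{1}{\sqrt{\pi n}} \cdot \frac{3\sqrt{3}}{2\pi n} = \frac{1}{2} \Big(\frac{3}{\pi n}\Big)^{3/2}.$$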


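The transience follows from Theorem 4.8, as
$$\sum_{m=0}^{\infty} P(S_m = 0) = \sum_{n=0}^{\infty} \rho_3(2n) < \infty,$$
since by the estimate above the terms are asymptotically bounded by those of the convergent series $\sum_{n \ge 1} \frac{1}{2} \big(\frac{3}{\pi n}\big)^{3/2}$.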
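The $d = 3$ estimate can be compared with exact values. The following Python sketch (hypothetical names; factorials are precomputed for speed) evaluates the double sum for $\rho_3(2n)$ exactly with integers, compares it with the upper bound $\frac12 \big(\frac{3}{\pi n}\big)^{3/2}$ derived above, and prints partial sums of $\sum_n \rho_3(2n)$, which should level off instead of growing as in $d = 1, 2$.

```python
from math import factorial, pi

def rho3(n):
    """Exact P(S_{2n} = 0) for the simple random walk on Z^3."""
    f = [factorial(i) for i in range(2 * n + 1)]
    total = 0
    for m in range(n + 1):
        for l in range(n - m + 1):
            r = n - m - l
            # number of paths with m up/down, l left/right and r forward/backward pairs
            total += f[2 * n] // (f[m] ** 2 * f[l] ** 2 * f[r] ** 2)
    return total / 6 ** (2 * n)

def bound3(n):
    """Asymptotic upper bound (1/2) * (3 / (pi n))^(3/2) from the proof (n >= 1)."""
    return 0.5 * (3 / (pi * n)) ** 1.5

partial = 0.0
for n in range(0, 61):
    partial += rho3(n)
    if n in (1, 2, 10, 30, 60):
        print(n, rho3(n), bound3(n), partial)
```

For instance, $\rho_3(2) = \frac{6}{36} = \frac16$, since the walk must step along one of the three axes and then immediately step back.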

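For $d > 3$, we follow [5]. Let $(\tilde{S}_n)_{n \in \mathbb{N}_0}$ be the projection of the random walk $(S_n)_{n \in \mathbb{N}_0}$ onto its first three coordinates. We define the stopping times
$$T_0 = 0, \qquad T_{n+1} = \inf\{m > T_n : \tilde{S}_m \neq \tilde{S}_{T_n}\}.$$
Then $(\tilde{S}_{T_n})_{n \in \mathbb{N}_0}$ is a simple symmetric random walk on $\mathbb{Z}^3$. By the proof above, $P(\tilde{S}_{T_n} = 0 \text{ i.o.}) = 0$. Since the first three coordinates of $S_m$ are constant for $T_n \le m < T_{n+1}$ and each $T_{n+1} - T_n$ is finite a.s., $\tilde{S}_m = 0$ can hold for infinitely many $m$ only if $\tilde{S}_{T_n} = 0$ for infinitely many $n$. Hence $P(S_m = 0 \text{ i.o.}) \le P(\tilde{S}_m = 0 \text{ i.o.}) = 0$, and we obtain transience for the whole walk $(S_n)_{n \in \mathbb{N}_0}$.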

Remark 4.11. One can even show that
$$\rho_d(2n) \sim \frac{c_d}{n^{d/2}},$$
where $d$ is the dimension of the SRW and $c_d$ is a constant depending on the dimension. For the proof, see [6] or [7].

For $d \in \{1, 2\}$ we return to 0 infinitely often with probability 1, i.e. the return probability $p(d) = P(\tau_1 < \infty)$ equals 1. One can ask for $p(d)$ in the higher dimensions $d \ge 3$ as well. Since we have shown that the random walk is transient in these cases, we obtain $p(d) < 1$ for $d \ge 3$. The following table (Figure 1) lists numerical values of these probabilities:


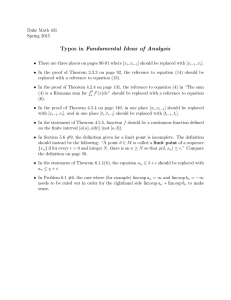

Figure 1: Probabilities p(d), depending on dimension d, to return to 0. See [8]
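Return probabilities like those in Figure 1 can be approximated by simulation. The Monte Carlo sketch below (hypothetical names, standard library only) runs the simple random walk on $\mathbb{Z}^d$ for at most `horizon` steps and records whether it comes back to 0. Because returns after the horizon are missed, it estimates $P(\tau_1 \le \text{horizon})$, which underestimates $p(d)$: for $d = 1, 2$ the estimate approaches 1 as the horizon grows (very slowly for $d = 2$), whereas for $d = 3$ it should come out somewhat below the known value $p(3) \approx 0.34$.

```python
import random

def estimate_return_probability(d, horizon, trials, seed=0):
    """Fraction of simulated SRW paths on Z^d that return to 0 within `horizon` steps."""
    rng = random.Random(seed)
    returns = 0
    for _ in range(trials):
        pos = [0] * d
        for _ in range(horizon):
            axis = rng.randrange(d)           # choose one of the d unit vectors e_j ...
            pos[axis] += rng.choice((-1, 1))  # ... and a sign: each of +-e_j has probability 1/(2d)
            if not any(pos):                  # back at the origin
                returns += 1
                break
    return returns / trials

for d in (1, 2, 3):
    print(d, estimate_return_probability(d, horizon=1000, trials=1000))
```

This is only an illustration with sampling error of order $1/\sqrt{\text{trials}}$; it cannot replace the exact values in Figure 1.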

Having discussed random walks on the lattice $\mathbb{Z}^d$ in detail, we now move on and analyse "trees".

The following definitions are taken from [9].

Definition 4.12. The regular tree $T_k$, $k \in \mathbb{N}$, is a tree, i.e. a connected acyclic graph with vertex set $V$ and edge set $E$, such that $\deg(v) = k + 1$ for all $v \in V$.

Remark 4.13. $T_1$ is a line (i.e. $\mathbb{Z}$), and $T_2$ is a binary tree. Note that every regular tree is an infinite graph.

Figure 2: Binary tree with starting point 0

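Example 4.14 (Transience of $T_2$). We now show that the simple random walk on the tree $T_2$, which jumps to each neighbouring node with probability $\frac{1}{3}$, is transient when started at 0.
As before for the SRW on $\mathbb{Z}^d$, we count paths of length $2n$ back to 0 consisting of $n$ steps to the right, i.e. away from 0 (with total probability $(\frac{2}{3})^n$), and $n$ steps to the left, i.e. towards 0 (with total probability $(\frac{1}{3})^n$). Using $\binom{2n}{n} \sim \frac{4^n}{\sqrt{\pi n}}$, which follows from Stirling's formula as in the proof of Theorem 4.10, we get
$$P(S_{2n} = 0) = \binom{2n}{n} \Big(\frac{2}{3}\Big)^n \Big(\frac{1}{3}\Big)^n \sim \frac{4^n}{\sqrt{\pi n}} \Big(\frac{2}{9}\Big)^n = \frac{1}{\sqrt{\pi n}} \Big(\frac{8}{9}\Big)^n.$$
From the equivalence in Theorem 4.8, and since $\frac{1}{\sqrt{\pi n}} \le 1$ for $n \in \mathbb{N}$, we obtain
$$\sum_{m=0}^{\infty} P(S_m = 0) = \sum_{n=0}^{\infty} P(S_{2n} = 0) \lesssim 1 + \sum_{n=1}^{\infty} \frac{1}{\sqrt{\pi n}} \Big(\frac{8}{9}\Big)^n \le 1 + \sum_{n=1}^{\infty} \Big(\frac{8}{9}\Big)^n < \infty,$$
so the walk is transient.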
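The quantities in Example 4.14 are easy to tabulate. The sketch below (hypothetical names) evaluates $\binom{2n}{n} \big(\frac{k}{k+1}\big)^n \big(\frac{1}{k+1}\big)^n$ exactly, compares it with the asymptotic form $\frac{1}{\sqrt{\pi n}} \big(\frac{4k}{(k+1)^2}\big)^n$, and sums the series. For $|x| < \frac14$, the generating function $\sum_{n \ge 0} \binom{2n}{n} x^n = (1 - 4x)^{-1/2}$ shows that with $x = \frac{k}{(k+1)^2}$ the series equals $\frac{k+1}{k-1}$, i.e. $3$ for $k = 2$; the partial sums should reproduce this value. The parameter $k$ anticipates Remark 4.15.

```python
from math import comb, pi, sqrt

def tree_term(n, k=2):
    """binom(2n, n) * (k/(k+1))^n * (1/(k+1))^n, as in Example 4.14 / Remark 4.15."""
    return comb(2 * n, n) * k ** n / (k + 1) ** (2 * n)

def tree_asymptotic(n, k=2):
    """(1 / sqrt(pi n)) * (4k / (k+1)^2)^n, the Stirling approximation of tree_term (n >= 1)."""
    return (4 * k / (k + 1) ** 2) ** n / sqrt(pi * n)

for n in (1, 10, 50):
    print(n, tree_term(n), tree_asymptotic(n))

print(sum(tree_term(n) for n in range(300)))  # close to 3 = (k+1)/(k-1) for k = 2
```

The geometric factor $(8/9)^n$ makes the terms decay quickly, so a few hundred terms already give the limit to machine precision.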

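Remark 4.15. More generally, the SRW on $T_k$ is transient for every $k \ge 2$.
Proof. Since we investigate a regular tree $T_k$, the probability to jump to a given neighbouring node is $\frac{1}{k+1}$. We start once again in 0. The probability to make $n$ steps to the right (away from 0) is $\big(\frac{k}{k+1}\big)^n$, and the probability to make $n$ steps to the left (towards 0) is $\big(\frac{1}{k+1}\big)^n$. Thus we have
$$P(S_{2n} = 0) = \binom{2n}{n} \Big(\frac{k}{k+1}\Big)^n \Big(\frac{1}{k+1}\Big)^n \sim \frac{4^n}{\sqrt{\pi n}} \Big(\frac{k}{(k+1)^2}\Big)^n = \frac{1}{\sqrt{\pi n}} \Big(\frac{4k}{(k+1)^2}\Big)^n.$$
For $k \ge 2$, we have $\frac{4k}{(k+1)^2} < 1$, and since $\frac{1}{\sqrt{\pi n}} \le 1$ for all $n \ge 1$, the following holds:
$$\sum_{n=1}^{\infty} \frac{1}{\sqrt{\pi n}} \Big(\frac{4k}{(k+1)^2}\Big)^n \le \sum_{n=1}^{\infty} \Big(\frac{4k}{(k+1)^2}\Big)^n < \infty.$$
For $k = 1$, we have $\frac{4k}{(k+1)^2} = 1$, and then
$$\sum_{n=1}^{\infty} \frac{1}{\sqrt{\pi n}} \Big(\frac{4k}{(k+1)^2}\Big)^n = \sum_{n=1}^{\infty} \frac{1}{\sqrt{\pi n}} = \infty.$$
Therefore, the SRW on $T_1$ is recurrent (since $T_1$ is in fact $\mathbb{Z}$), and the SRW on $T_k$ is transient for $k \ge 2$.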
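The key inequality of Remark 4.15 can be verified directly, which also shows why $k = 1$ is the borderline case:
$$1 - \frac{4k}{(k+1)^2} = \frac{(k+1)^2 - 4k}{(k+1)^2} = \frac{(k-1)^2}{(k+1)^2} \ge 0,$$
with equality if and only if $k = 1$. For example, $k = 2$ gives the ratio $\frac{8}{9}$ from Example 4.14, and $k = 3$ gives $\frac{12}{16} = \frac{3}{4}$.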


Example 4.16 (Triangular lattice). This example concerns the triangular lattice, in which every point has six neighbours, so the probability to jump to a given neighbouring node equals $p = \frac{1}{6}$. A SRW on this triangular lattice is indeed recurrent. Unfortunately, one cannot simply use the combinatorial arguments from above to prove this. Frank Spitzer gives a complete proof in [10] using algebraic methods.

4.2 Chung-Fuchs-Theorem

In this section, we investigate random walks on $\mathbb{R}^d$, $d \in \mathbb{N}$. To this end, we will establish a useful criterion for $d \in \{1, 2\}$, the Chung-Fuchs theorem. First, however, we need some preparations. Most of the facts in this section are taken from Chung [11].

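Lemma 4.17. Let $\varepsilon > 0$. The following holds:
$$\text{(i)} \quad \sum_{n=1}^{\infty} P(\|S_n\| < \varepsilon) < \infty \iff P(\|S_n\| < \varepsilon \text{ i.o.}) = 0, \tag{8}$$
$$\text{(ii)} \quad \sum_{n=1}^{\infty} P(\|S_n\| < \varepsilon) = \infty \iff P(\|S_n\| < \varepsilon \text{ i.o.}) = 1.$$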

Proof. By Example 3.13 (with $B_n = \{x : \|x\| < \varepsilon\}$), the probability $P(\|S_n\| < \varepsilon \text{ i.o.})$ is either 0 or 1. The direction "⇒" of (i) follows directly from the first Borel-Cantelli lemma, and the direction "⇐" of (ii) is its contrapositive. The direction "⇐" of (i) is the contrapositive of the direction "⇒" of (ii), so it remains to show "⇒" in (ii).
For this, we use a method similar to the one in the proof of Theorem 4.2. Assume $\sum_{n} P(\|S_n\| < \varepsilon) = \infty$ and set
$$F = \{\|S_n\| < \varepsilon \text{ i.o.}\}^c = \liminf_{n \to \infty} \{\|S_n\| \ge \varepsilon\}.$$
We can interpret $F$ as the event that $\|S_n\| < \varepsilon$ holds for only finitely many $n$. On $F$, consider "the last time $m$" with $\|S_m\| < \varepsilon$ (it exists since $\|S_0\| = 0 < \varepsilon$), so that $\|S_n\| \ge \varepsilon$ for all $n > m$.
Furthermore, the events $\{\|S_m\| < \varepsilon\}$ and $\{\|S_n - S_m\| \ge 2\varepsilon \ \forall n > m\}$ are independent (as in the proof of Theorem 4.2), and on their intersection the triangle inequality gives
$$2\varepsilon \le \|S_n - S_m\| \le \|S_n\| + \|S_m\| < \|S_n\| + \varepsilon,$$
so these two events together imply $\|S_n\| \ge \varepsilon$ for all $n > m$. Note also that $S_{n-m}$ and $S_n - S_m$ have the same distribution. We can finally compute
$$\begin{aligned} P(F) &= \sum_{m=0}^{\infty} P(\|S_m\| < \varepsilon, \|S_n\| \ge \varepsilon \ \forall n \ge m + 1) \\ &\ge \sum_{m=0}^{\infty} P(\|S_m\| < \varepsilon, \|S_n - S_m\| \ge 2\varepsilon \ \forall n \ge m + 1) \\ &= \sum_{m=0}^{\infty} P(\|S_m\| < \varepsilon) \cdot P(\|S_n\| \ge 2\varepsilon \ \forall n \ge 1). \end{aligned}$$
The last equation follows (once again) from the independence of the two events. As $P(F) \le 1$ and $\sum_{m=0}^{\infty} P(\|S_m\| < \varepsilon) = \infty$, we must have
$$P(\|S_n\| \ge 2\varepsilon \ \forall n \ge 1) = 0.$$
Our aim now is to show $P(\|S_n\| \ge 2\varepsilon \ \forall n \ge k) = 0$ for all $k \ge 2$. Fix $k$ and let
$$A_m = \{\|S_m\| < \varepsilon, \|S_n\| \ge \varepsilon \ \forall n \ge m + k\}.$$
If $m' \ge m + k$, then $A_m$ and $A_{m'}$ are disjoint, since on $A_m$ we have $\|S_{m'}\| \ge \varepsilon$. Hence each $\omega$ belongs to at most $k$ of the events $A_m$, $m \in \mathbb{N}_0$, and the same calculation as above yields
$$k \ge \sum_{m=0}^{\infty} P(A_m) \ge \sum_{m=0}^{\infty} P(\|S_m\| < \varepsilon) \cdot P(\|S_n\| \ge 2\varepsilon \ \forall n \ge k).$$
With the same argument as for $k = 1$, we get
$$P(\|S_n\| \ge 2\varepsilon \ \forall n \ge k) = 0 \quad \text{for all } k \in \mathbb{N}.$$
Therefore,
$$P(\|S_n\| < 2\varepsilon \text{ i.o.}) = 1 - \lim_{k \to \infty} P(\|S_n\| \ge 2\varepsilon \ \forall n \ge k) = 1.$$
By Lemma 4.19 below (with $m = 2$ and $\varepsilon/2$ in place of $\varepsilon$), the series $\sum_{n} P(\|S_n\| < \varepsilon/2)$ diverges as well, so applying this argument with $\varepsilon/2$ in place of $\varepsilon$ gives $P(\|S_n\| < \varepsilon \text{ i.o.}) = 1$, and the proof is done.

We now state a lemma that will be used in the proof of Lemma 4.19.


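Lemma 4.18. Let $m \ge 2$ be an integer and $\varepsilon > 0$. Then
$$\sum_{n=0}^{\infty} P(\|S_n\| < m\varepsilon) \le \sum_{n=0}^{\infty} \sum_{k \in \{-m, \dots, m-1\}^d} P\big(S_n \in k\varepsilon + [0, \varepsilon)^d\big).$$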

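Proof. Here, we follow the steps of Lazarus, see [3]. We define the sets
$$A = \{(\omega_1, \dots, \omega_d) \in [-m\varepsilon, m\varepsilon)^d : \exists\, i \in \{1, \dots, d\} \text{ with } \omega_i = -m\varepsilon\}, \qquad B = \{\omega \in \mathbb{R}^d : \|\omega\| < m\varepsilon\}.$$
Let $I = \{-m, \dots, m-1\}^d$ and, for $v = (v_1, \dots, v_d) \in I$,
$$C_v = [v_1\varepsilon, (v_1 + 1)\varepsilon) \times [v_2\varepsilon, (v_2 + 1)\varepsilon) \times \dots \times [v_d\varepsilon, (v_d + 1)\varepsilon) = v\varepsilon + [0, \varepsilon)^d.$$
Our aim is to partition $A \cup B = [-m\varepsilon, m\varepsilon)^d$ into these $(2m)^d$ cubes, which are half-open by definition. Since the $C_v$ are pairwise disjoint and $\bigcup_{v \in I} C_v = A \cup B$, we obtain with $B \subseteq A \cup B$ and σ-additivity
$$\sum_{n=0}^{\infty} P(S_n \in B) \le \sum_{n=0}^{\infty} P(S_n \in A \cup B) = \sum_{n=0}^{\infty} P\Big(S_n \in \bigcup_{v \in I} C_v\Big) = \sum_{n=0}^{\infty} \sum_{v \in I} P(S_n \in C_v), \tag{9}$$
which proves the lemma.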
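The partition used in the proof of Lemma 4.18 can be made concrete: a point $x \in [-m\varepsilon, m\varepsilon)^d$ lies in the cube $C_v$ with $v_i = \lfloor x_i / \varepsilon \rfloor$. The Python sketch below (hypothetical names) computes this index, enumerates $I = \{-m, \dots, m-1\}^d$, and checks on random points of $B$ that each falls into a cube indexed by $I$ and that $|I| = (2m)^d$. A dyadic $\varepsilon$ such as $0.5$ is used so that the divisions are exact in floating point.

```python
import itertools
import math
import random

def cube_index(x, eps):
    """Index v with x in C_v = v*eps + [0, eps)^d, i.e. v_i = floor(x_i / eps)."""
    return tuple(math.floor(xi / eps) for xi in x)

def index_set(m, d):
    """I = {-m, ..., m-1}^d, which has (2m)^d elements."""
    return set(itertools.product(range(-m, m), repeat=d))

m, d, eps = 2, 3, 0.5
I = index_set(m, d)
print(len(I), (2 * m) ** d)  # 64 64

rng = random.Random(0)
for _ in range(10_000):
    x = [rng.uniform(-m * eps, m * eps) for _ in range(d)]
    if max(abs(xi) for xi in x) < m * eps:  # x lies in B = {||x|| < m*eps}
        assert cube_index(x, eps) in I
```

Because `cube_index` returns a single index per point, every point is assigned to exactly one cube, which is the disjointness used in (9).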

Lemma 4.19. Let $m \ge 2$ be an integer and $\varepsilon > 0$. Then
$$\sum_{n=0}^{\infty} P(\|S_n\| < m\varepsilon) \le (2m)^d \sum_{n=0}^{\infty} P(\|S_n\| < \varepsilon).$$

Proof. For $k \in I = \{-m, \dots, m-1\}^d$, let $\tilde{C}_k = k\varepsilon + [0, \varepsilon)^d$, and let $T_k = \inf\{l \ge 0 : S_l \in \tilde{C}_k\}$ be the hitting time of $\tilde{C}_k$, which is a stopping time.
We rewrite the inner sum on the right-hand side of Lemma 4.18. The event $\{S_n \in \tilde{C}_k\}$ occurs if and only if exactly one of the events $\{S_n \in \tilde{C}_k, T_k = 0\}, \dots, \{S_n \in \tilde{C}_k, T_k = n\}$ occurs (and none of them occurs otherwise). Hence, by Fubini's theorem,
$$\sum_{n=0}^{\infty} P(S_n \in \tilde{C}_k) = \sum_{n=0}^{\infty} \sum_{l=0}^{n} P(S_n \in \tilde{C}_k, T_k = l) = \sum_{l=0}^{\infty} \sum_{n=l}^{\infty} P(S_n \in \tilde{C}_k, T_k = l),$$
where we may interchange the order of summation since all summands are non-negative.
For the next step, fix $l \in \mathbb{N}_0$. If $T_k = l$, the walk reaches $\tilde{C}_k$ at time $l$. If it is in $\tilde{C}_k$ again at time $n \ge l$, then the $n - l$ steps after time $l$ have shifted it by less than $\varepsilon$ in each coordinate direction, since $\tilde{C}_k$ is a cube with side length $\varepsilon$. Therefore,
$$\sum_{n=l}^{\infty} P(S_n \in \tilde{C}_k, T_k = l) \le \sum_{n=l}^{\infty} P(\|S_n - S_l\| < \varepsilon, T_k = l).$$
As we have seen on multiple occasions, $S_{n-l}$ and $S_n - S_l$ have the same distribution. Moreover, $\{T_k = l\} \in \sigma(X_1, \dots, X_l)$ and $\{\|S_n - S_l\| < \varepsilon\} \in \sigma(X_{l+1}, \dots, X_n)$ are independent, since the first $l$ steps are independent of the following $n - l$ steps. Together with the reindexing $i = n - l$, we obtain
$$\sum_{n=l}^{\infty} P(S_n \in \tilde{C}_k, T_k = l) \le P(T_k = l) \sum_{n=l}^{\infty} P(\|S_{n-l}\| < \varepsilon) = P(T_k = l) \sum_{i=0}^{\infty} P(\|S_i\| < \varepsilon).$$
Putting all the sums together and observing that $\tilde{C}_k = C_k$ and $\{S_n \in B\} = \{\|S_n\| < m\varepsilon\}$ in the proof of Lemma 4.18, we use (9) to obtain
$$\sum_{n=0}^{\infty} P(\|S_n\| < m\varepsilon) \le \sum_{k \in I} \sum_{n=0}^{\infty} P(S_n \in \tilde{C}_k) = \sum_{k \in I} \sum_{l=0}^{\infty} \sum_{n=l}^{\infty} P(S_n \in \tilde{C}_k, T_k = l) \le \sum_{k \in I} \sum_{l=0}^{\infty} P(T_k = l) \sum_{i=0}^{\infty} P(\|S_i\| < \varepsilon).$$
Now $\sum_{l=0}^{\infty} P(T_k = l) = P(T_k < \infty) \le 1$, since the events $\{T_k = l\}$ are pairwise disjoint. Furthermore, $|I| = |\{-m, \dots, m-1\}^d| = (2m)^d$. Therefore, we finally conclude
$$\sum_{n=0}^{\infty} P(\|S_n\| < m\varepsilon) \le (2m)^d \sum_{i=0}^{\infty} P(\|S_i\| < \varepsilon).$$

As an immediate consequence, setting d = 1, we get:

Corollary 4.20. Let $m \ge 2$ be an integer and $\varepsilon > 0$. Then
$$\sum_{n=0}^{\infty} P(|S_n| < m\varepsilon) \le 2m \sum_{n=0}^{\infty} P(|S_n| < \varepsilon).$$

Another ingredient from [11] that we need to prove the Chung-Fuchs theorem is the following:

Lemma 4.21. For each real number $m \ge 1$, let $(u_n(m))_{n \in \mathbb{N}_0}$ be a sequence of positive numbers satisfying
(i) for all $n$: $u_n(m)$ is increasing in $m$ and $u_n(m) \to 1$ as $m \to \infty$,
(ii) there is a $c > 0$ with $\sum_{n=0}^{\infty} u_n(m) \le cm \sum_{n=0}^{\infty} u_n(1)$ for all $m \ge 1$,
(iii) for all $\delta > 0$: $\lim_{n \to \infty} u_n(\delta n) = 1$.
Then one has
$$\sum_{n=0}^{\infty} u_n(1) = \infty.$$


For its proof, we need a lemma on Cesàro means, which we state without proof:

Lemma 4.22 (Cesàro mean). Let $(a_n)_{n \in \mathbb{N}}$ be a sequence and $c_n = \frac{1}{n} \sum_{i=1}^{n} a_i$. Then
$$\lim_{n \to \infty} a_n = A \implies \lim_{n \to \infty} c_n = A.$$
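Lemma 4.22 is easy to observe numerically. In the sketch below (hypothetical names), $a_n = 1 + \frac{(-1)^n}{n}$ converges to $A = 1$, and its Cesàro means $c_n$ approach 1 as well. In fact $|c_n - 1| \le \frac1n$ here, since the alternating partial sums $\sum_{i=1}^{n} \frac{(-1)^i}{i}$ are bounded by 1 in absolute value. The converse of the lemma fails: $a_n = (-1)^n$ has Cesàro means tending to 0 without converging itself.

```python
def cesaro_means(a):
    """Return the list of c_n = (a_1 + ... + a_n) / n for n = 1, ..., len(a)."""
    means, running = [], 0.0
    for n, a_n in enumerate(a, start=1):
        running += a_n
        means.append(running / n)
    return means

N = 100_000
a = [1 + (-1) ** n / n for n in range(1, N + 1)]
c = cesaro_means(a)
print(a[-1], c[-1])                       # both close to 1

b = [(-1) ** n for n in range(1, N + 1)]  # divergent, but its Cesàro means tend to 0
print(cesaro_means(b)[-1])
```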

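Proof of Lemma 4.21. We prove this by contradiction. Let $A > 0$ and suppose the sum converges. Then, for every $m \ge 1$,
\[
\infty > \sum_{n=0}^{\infty} u_n(1) \ge \frac{1}{cm}\sum_{n=0}^{\infty} u_n(m) \ge \frac{1}{cm}\sum_{n=0}^{\lfloor Am\rfloor} u_n(m) \ge \frac{1}{cm}\sum_{n=0}^{\lfloor Am\rfloor} u_n\!\left(\frac{n}{A}\right) .
\]
The first inequality follows from (ii), the second holds since all $u_n(m)$ are nonnegative, and the third holds since $n/A \le m$ for $n \le \lfloor Am\rfloor$ and $u_n(m)$ is increasing in $m$.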

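With $\delta = 1/A$, we derive from (iii) that $\lim_{n\to\infty} u_n(n/A) = 1$. The right-hand side above equals $\frac{\lfloor Am\rfloor + 1}{cm}$ times the average of the first $\lfloor Am\rfloor + 1$ terms of this sequence. Letting $m \to \infty$ and applying Lemma 4.22, we get
\[
\sum_{n=0}^{\infty} u_n(1) \ge \lim_{m\to\infty} \frac{\lfloor Am\rfloor + 1}{cm} \cdot 1 = \frac{A}{c} .
\]
Since $A$ was arbitrary, the sum cannot be finite. This is the desired contradiction, and the lemma is proved.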

Now we can state an important fact:

Theorem 4.23. The convergence (resp. divergence) of $\sum_{n=0}^{\infty} P(\|S_n\| < \varepsilon)$ for a single value of $\varepsilon > 0$ is sufficient for transience (resp. recurrence).

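Proof. This follows directly from Lemma 4.17 and Lemma 4.19. Lemma 4.19 compares the series for the radii $\varepsilon$ and $m\varepsilon$ up to the constant factor $(2m)^d$, $m \in \{2, 3, \dots\}$, and this factor does not affect convergence (resp. divergence). Since the summands are increasing in the radius, convergence for one $\varepsilon > 0$ therefore implies convergence for every radius. Likewise, divergence for one $\varepsilon > 0$ implies divergence for every radius. Hence we can vary the $\varepsilon$ in Lemma 4.17 and obtain transience (resp. recurrence) by definition.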

Remark 4.24. Suppose that the $(X_n)_{n\in\mathbb{N}}$ have a well-defined expectation $E[X_n] = \mu \neq 0$ for all $n \in \mathbb{N}$. Then, by the strong law of large numbers, $S_n/n \to \mu$ a.s. Hence $|S_n| \to \infty$ a.s. as $n \to \infty$, and the random walk is transient.

Theorem 4.25 (Chung-Fuchs I). Suppose $d = 1$. If the weak law of large numbers holds in the form $S_n/n \to 0$ in probability, then $(S_n)_{n\in\mathbb{N}}$ is recurrent.

Proof. We set
\[
u_n(m) = P(\|S_n\| < m) , \quad m > 0 .
\]

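Since $P$ is a probability measure, $u_n(m)$ is increasing in $m$ and, by continuity from below, $u_n(m) \to P(\|S_n\| < \infty) = 1$ as $m \to \infty$. Hence (i) of Lemma 4.21 is satisfied. For (ii), we use Corollary 4.20 with $\varepsilon = 1$. For integers $m \ge 2$, it gives (ii) with $c = 2$. Since $u_n(m)$ is increasing in $m$ and $\lceil m\rceil \le 2m$ for $m \ge 1$, condition (ii) then holds for all real $m \ge 1$ with $c = 4$. To obtain the last condition, we observe that, for every $\delta > 0$,
\[
\frac{S_n}{n} \xrightarrow{p} 0 \iff \lim_{n\to\infty} P\left(\left|\frac{S_n}{n} - 0\right| \ge \delta\right) = 0 \iff \lim_{n\to\infty} P\left(\left|\frac{S_n}{n}\right| < \delta\right) = 1 .
\]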

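Thus, $u_n(\delta n) = P(|S_n|/n < \delta) \to 1$ for all $\delta > 0$ as $n \to \infty$. Since all three conditions are satisfied, Lemma 4.21 yields
\[
\sum_{n=0}^{\infty} u_n(1) = \sum_{n=0}^{\infty} P(\|S_n\| < 1) = \infty .
\]
Now let $\varepsilon > 0$. Consider the rescaled walk with steps $\tilde X_i = X_i/\varepsilon$, $i \in \mathbb{N}$, and $\tilde S_n = \sum_{i=1}^{n} \tilde X_i = S_n/\varepsilon$. It also satisfies $\tilde S_n/n \to 0$ in probability, so it meets the requirements of the Chung-Fuchs theorem, and the argument above applies to it as well:
\[
\sum_{n=0}^{\infty} P(\|S_n\| < \varepsilon) = \sum_{n=0}^{\infty} P(\|\tilde S_n\| < 1) = \infty .
\]
Since $\varepsilon > 0$ was arbitrary, $(S_n)_{n\in\mathbb{N}}$ is indeed recurrent by Lemma 4.17.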

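Remark 4.26. By Remark 4.24 and Theorem 4.25, we obtain the following result: if the $(X_n)_{n\in\mathbb{N}}$ have an expectation $\mu = E[X_n] \in [-\infty, \infty]$, then the random walk is recurrent $\iff \mu = 0$. (If $\mu = 0$, the weak law of large numbers $S_n/n \to 0$ in probability holds because the $X_n$ are iid and integrable, so Theorem 4.25 applies.)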
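Remark 4.26 can be seen concretely for the nearest-neighbour walk on $\mathbb{Z}$ with $P(X_i = 1) = p$ and $P(X_i = -1) = 1 - p$. This family is an illustration chosen here, not an example from the text. For this walk, $P(S_n = 0)$ vanishes for odd $n$ and equals $\binom{2k}{k}(p(1-p))^k$ for $n = 2k$. By Theorem 4.23 with $\varepsilon = 1$, the walk is recurrent iff $\sum_n P(|S_n| < 1) = \sum_n P(S_n = 0)$ diverges. For $p \neq 1/2$ (mean $2p - 1 \neq 0$), the series equals $1/\sqrt{1 - 4p(1-p)}$ by the generating-function identity $\sum_k \binom{2k}{k}x^k = 1/\sqrt{1-4x}$, $0 \le x < 1/4$. For $p = 1/2$ (mean 0), the partial sums grow like $\sqrt{N}$. The helper `green_partial_sum` below is a hypothetical name.

```python
import math

def green_partial_sum(p, N):
    """sum_{n=0}^{N} P(S_n = 0) for the walk with P(X_i = 1) = p, P(X_i = -1) = 1 - p."""
    x = p * (1 - p)
    term, total = 1.0, 1.0            # n = 0 contributes P(S_0 = 0) = 1
    for k in range(1, N // 2 + 1):    # only even times n = 2k <= N contribute
        # update C(2k, k) x^k from C(2k-2, k-1) x^(k-1) without forming huge integers
        term *= (2 * k) * (2 * k - 1) / (k * k) * x
        total += term
    return total

for p in (0.5, 0.7):
    print(p, [green_partial_sum(p, N) for N in (100, 1000, 10000)])
print(1 / math.sqrt(1 - 4 * 0.7 * 0.3))  # limit of the series for p = 0.7
```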

We now go on with the case d = 2:

Theorem 4.27 (Chung-Fuchs II). Let $d = 2$. If $(S_n)_{n\in\mathbb{N}}$ is a random walk and $S_n/\sqrt{n}$ converges in distribution to a nondegenerate normal distribution, then $(S_n)_{n\in\mathbb{N}}$ is recurrent.

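Proof. With Lemma 4.19, setting $\varepsilon = 1$ and $d = 2$, we see for every integer $m \ge 2$:
\[
\sum_{n=0}^{\infty} P(\|S_n\| < 1) \ge \frac{1}{4m^2} \cdot \sum_{n=0}^{\infty} P(\|S_n\| < m)
\]
Our aim is to show that the right-hand side becomes arbitrarily large for suitable $m$. Then $\sum_{n=0}^{\infty} P(\|S_n\| < 1) = \infty$, and the recurrence of $(S_n)_{n\in\mathbb{N}}$ follows from Theorem 4.23.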

Let $c > 0$. If $n \to \infty$ and $m/\sqrt{n} \to c$, then one obtains
\[
P(\|S_n\| < m) = P\left(\frac{1}{\sqrt{n}}\|S_n\| < \frac{m}{\sqrt{n}}\right) \to \int_{\|x\| \le c} n(x)\,dx .
\]
Here, $n(x)$ denotes the density of the nondegenerate normal limit distribution. If the limit were degenerate, we would be back at the one-dimensional problem, see Theorem 4.25. We set
\[
\rho(c) = \int_{\|x\| \le c} n(x)\,dx .
\]

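Now, for $\theta > 0$ and
\[
\frac{n}{m^2} \le \theta < \frac{n+1}{m^2} ,
\]
one has $\lfloor \theta m^2 \rfloor = n$. Since, for all $m \in \mathbb{N}$,
\[
\sum_{n=0}^{\infty} P(\|S_n\| < m) = \int_0^{\infty} P(\|S_{\lfloor z\rfloor}\| < m)\,dz ,
\]
we obtain by the substitution $z = \theta m^2$:
\[
\frac{1}{m^2}\sum_{n=0}^{\infty} P(\|S_n\| < m) = \int_0^{\infty} P(\|S_{\lfloor \theta m^2\rfloor}\| < m)\,d\theta .
\]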

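Furthermore, as $m/\sqrt{\lfloor \theta m^2\rfloor} \to 1/\sqrt{\theta}$ for $m \to \infty$, we get
\[
P(\|S_{\lfloor \theta m^2\rfloor}\| < m) = P\left(\frac{\|S_{\lfloor \theta m^2\rfloor}\|}{\sqrt{\lfloor \theta m^2\rfloor}} < \frac{m}{\sqrt{\lfloor \theta m^2\rfloor}}\right) \to \int_{\|x\| \le 1/\sqrt{\theta}} n(x)\,dx = \rho\left(\frac{1}{\sqrt{\theta}}\right) .
\]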

It suffices to show that, for every $C > 0$, we can find $m \in \{2, 3, \dots\}$ such that $\frac{1}{4m^2}\sum_{n=0}^{\infty} P(\|S_n\| < m) > C$. For this, it is enough to show
\[
\liminf_{m\to\infty} \frac{1}{m^2}\sum_{n=0}^{\infty} P(\|S_n\| < m) = \infty .
\]

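With $f = \rho(1/\sqrt{\theta})$ and $f_m = P(\|S_{\lfloor \theta m^2\rfloor}\| < m)$, we have already shown $f_m \to f$ as $m \to \infty$. Since all $f_m$ are nonnegative, we can apply Fatou's lemma:
\[
\liminf_{m\to\infty} \frac{1}{4m^2}\sum_{n=0}^{\infty} P(\|S_n\| < m) \ge \frac{1}{4}\int_0^{\infty} \liminf_{m\to\infty} P(\|S_{\lfloor \theta m^2\rfloor}\| < m)\,d\theta = \frac{1}{4}\int_0^{\infty} \rho\left(\frac{1}{\sqrt{\theta}}\right) d\theta \tag{10}
\]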

It is left to show that $\int_0^{\infty} \rho(1/\sqrt{\theta})\,d\theta = \infty$.

First of all, since $n$ is the density of a nondegenerate normal distribution, $n$ is continuous at $0$ and $n(0) > 0$. Therefore, there exists $r > 0$ such that $|n(x) - n(0)| < \frac{n(0)}{2}$ for all $x \in [-r, r]^2$. For these $x$ we have $n(x) \ge \frac{n(0)}{2}$.

Then, for $c \in (0, r]$ and since we are in $\mathbb{R}^2$:
\[
\rho(c) = \int_{\|x\| \le c} n(x)\,dx \ge \int_{(-c,c)^2} \frac{n(0)}{2}\,dx = (2c)^2 \cdot \frac{n(0)}{2} = 2n(0)c^2 \tag{11}
\]

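As $n(0) > 0$, we have $\rho(c) > 0$ on $(0, \infty)$. Moreover, if $\theta \ge \frac{1}{r^2}$, then $r^2 \ge \frac{1}{\theta}$ and thus $\frac{1}{\sqrt{\theta}} \in (0, r]$, which allows us to use (11):
\[
\int_0^{\infty} \rho\left(\frac{1}{\sqrt{\theta}}\right) d\theta \ge \int_{1/r^2}^{\infty} \rho\left(\frac{1}{\sqrt{\theta}}\right) d\theta \ge \int_{1/r^2}^{\infty} 2n(0)\cdot\left(\frac{1}{\sqrt{\theta}}\right)^2 d\theta = 2n(0)\cdot\int_{1/r^2}^{\infty} \frac{1}{\theta}\,d\theta = 2n(0)\cdot\bigl[\ln(\theta)\bigr]_{1/r^2}^{\infty} = \infty
\]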

By (10), we obtain the statement.
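The bound (11) and the logarithmic divergence that closes the proof can be checked numerically for one concrete nondegenerate normal law. We assume here, for illustration only, the standard normal distribution on $\mathbb{R}^2$ together with the maximum norm. Then $\rho(c) = (2\Phi(c) - 1)^2 = \operatorname{erf}(c/\sqrt{2})^2$ and $n(0) = 1/(2\pi)$. Moreover, $n(x) \ge n(0)/2$ holds on $[-r, r]^2$ for $r = \sqrt{\ln 2}$, since the squared Euclidean norm on that square is at most $2r^2$. The names `rho` and `integral_rho` are hypothetical helpers.

```python
import math

R = math.sqrt(math.log(2))   # radius r: on [-r, r]^2 the standard normal density is >= n(0)/2
N0 = 1 / (2 * math.pi)       # n(0) for the standard normal density on R^2

def rho(c):
    """rho(c) = P(||Z||_max <= c) for a standard normal vector Z in R^2 (assumed example)."""
    return math.erf(c / math.sqrt(2)) ** 2

def integral_rho(T, steps=20000):
    """Midpoint rule for the integral of rho(1/sqrt(theta)) over [1/r^2, T].

    The substitution theta = e^s turns d(theta) into e^s ds.
    """
    a, b = math.log(1 / R**2), math.log(T)
    h = (b - a) / steps
    total = 0.0
    for j in range(steps):
        s = a + (j + 0.5) * h
        total += rho(math.exp(-s / 2)) * math.exp(s) * h
    return total

# (11): rho(c) - 2 n(0) c^2 should be nonnegative on (0, r]
print(min(rho(c) - 2 * N0 * c**2 for c in [R * k / 100 for k in range(1, 101)]))
# The integral grows at least like 2 n(0) ln(T r^2), i.e. without bound.
for T in (1e2, 1e4, 1e6):
    print(T, integral_rho(T), 2 * N0 * math.log(T * R**2))
```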

4.3 Alternative criterion for recurrence

We now state a theorem that allows us to show the recurrence of a random walk $(S_n)_{n\in\mathbb{N}}$ in a different way. Beforehand, however, we need some tools for its proof. In this section we mainly follow [3] and [1].

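Definition 4.28. For a random walk $(S_n)_{n\in\mathbb{N}}$ on $\mathbb{R}^d$, we define
\[
\varphi(t) = E\left[e^{i t\cdot X_1}\right] = \int_{\mathbb{R}^d} e^{i t\cdot x}\,\mu(dx) , \quad t \in \mathbb{R}^d ,
\]
as the characteristic function of $X_1$, where $\mu$ denotes the distribution of $X_1$.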

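Lemma 4.29 (Parseval relation). Let $\mu$ and $\nu$ be probability measures on $\mathbb{R}^d$ with characteristic functions $\varphi$ and $\psi$. Then
\[
\int_{\mathbb{R}^d} \psi(t)\,\mu(dt) = \int_{\mathbb{R}^d} \varphi(x)\,\nu(dx) .
\]

Proof. Probability measures are finite and $|e^{i t\cdot x}| \le 1$, so the integrand below is bounded and hence integrable with respect to $\mu \otimes \nu$. Thus we can use Fubini's theorem and obtain:
\[
\int_{\mathbb{R}^d} \psi(t)\,\mu(dt) = \int_{\mathbb{R}^d}\int_{\mathbb{R}^d} e^{i t\cdot x}\,\nu(dx)\,\mu(dt) = \int_{\mathbb{R}^d}\int_{\mathbb{R}^d} e^{i t\cdot x}\,\mu(dt)\,\nu(dx) = \int_{\mathbb{R}^d} \varphi(x)\,\nu(dx) .
\]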
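For measures with finitely many atoms, both sides of Lemma 4.29 reduce to the same double sum $\sum_t \sum_x \mu(\{t\})\,\nu(\{x\})\,e^{itx}$, so the use of Fubini's theorem becomes a reordering of a finite sum. The following minimal one-dimensional check uses two arbitrarily chosen discrete laws `mu` and `nu` and a hypothetical helper `char_fn`. None of these are taken from the text.

```python
import cmath

def char_fn(law):
    """Characteristic function t -> sum_x law[x] * exp(i t x) of a finite discrete law on R."""
    return lambda t: sum(p * cmath.exp(1j * t * x) for x, p in law.items())

# Two hypothetical discrete probability measures on R (chosen only for illustration).
mu = {-1.0: 0.2, 0.5: 0.5, 2.0: 0.3}
nu = {0.0: 0.1, 1.0: 0.6, -3.0: 0.3}
phi, psi = char_fn(mu), char_fn(nu)   # phi belongs to mu, psi to nu, as in Lemma 4.29

lhs = sum(p * psi(t) for t, p in mu.items())   # integral of psi with respect to mu
rhs = sum(q * phi(x) for x, q in nu.items())   # integral of phi with respect to nu
print(lhs, rhs)
```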


Lemma 4.30. If $|x| \le \frac{\pi}{3}$, then $1 - \cos(x) \ge \frac{x^2}{4}$.

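Proof. As $\cos$ is an even function, it suffices to show the result for $x \ge 0$. Since $\cos$ is decreasing on $[0, \pi]$ and $\cos(\frac{\pi}{3}) = \frac{1}{2}$, we have $\cos(z) \ge \frac{1}{2}$ for all $z \in [0, \frac{\pi}{3}]$. Therefore, for $y \in [0, \frac{\pi}{3}]$, we obtain:
\[
\sin(y) = \int_0^y \cos(z)\,dz \ge \int_0^y \frac{1}{2}\,dz = \frac{y}{2}
\]
Now we get directly for $x \in [0, \frac{\pi}{3}]$:
\[
1 - \cos(x) = \int_0^x \sin(y)\,dy \ge \int_0^x \frac{y}{2}\,dy = \frac{x^2}{4}
\]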
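Lemma 4.30 can be sanity-checked on a fine grid of $[-\pi/3, \pi/3]$. The sketch below evaluates the gap $1 - \cos(x) - x^2/4$, where `gap` is a hypothetical helper name. Since floating-point rounding near $x = 0$ can produce values of order $10^{-17}$, a tiny tolerance is needed when testing. The second print shows that the bound fails for larger $|x|$, which is why the restriction $|x| \le \pi/3$ matters.

```python
import math

def gap(x):
    """1 - cos(x) - x^2/4, which Lemma 4.30 claims is >= 0 for |x| <= pi/3."""
    return 1 - math.cos(x) - x * x / 4

grid = [-math.pi / 3 + k * (2 * math.pi / 3) / 10000 for k in range(10001)]
print(min(gap(x) for x in grid))   # essentially 0, attained near x = 0
print(gap(math.pi))                # negative: the bound fails for large |x|
```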

For the next lemma, we cite [3].

Lemma 4.31. Let $a_n \ge 0$ for all $n \in \mathbb{N}_0$. Then:
\[
\sup_{c\in(0,1)} \sum_{n=0}^{\infty} c^n \cdot a_n = \sum_{n=0}^{\infty} a_n
\]

n

Proof. ” ≤ ” : This direction is trivial since cP

∈ (0, 1) implies

Pc∞ ≤ 1 and

∞

n

n

thus c · an ≤ an ∀n ∈ N0 . Therefore supc∈(0,1) n=0 c · an ≤ n=0 an .

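“$\ge$”: For this direction, we consider the two cases “divergence” and “convergence”:

(i) Let $\sum_{n=0}^{\infty} a_n = \infty$. Then, for every $R > 0$, we can find $N \in \mathbb{N}$ with $\sum_{n=0}^{N} a_n > 2R$. Now choose $c \in (0,1)$ with $c^n \ge \frac{1}{2}$ for all $n \in \{0, 1, \dots, N\}$ (for example $c = 2^{-1/N}$). Then one has
\[
\sum_{n=0}^{\infty} c^n \cdot a_n \ge \sum_{n=0}^{N} c^n \cdot a_n \ge \frac{1}{2}\sum_{n=0}^{N} a_n > R
\]
Therefore, for every $R > 0$ there exists $c$ such that $\sum_{n=0}^{\infty} c^n a_n > R$. We conclude:
\[
\sup_{c\in(0,1)} \sum_{n=0}^{\infty} c^n \cdot a_n = \infty .
\]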

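(ii) Let $\sum_{n=0}^{\infty} a_n < \infty$, i.e. $C := \sum_{n=0}^{\infty} a_n \in [0, \infty)$. Let $\varepsilon > 0$. We may assume $\varepsilon < 2(C+1)$, so that the factor $1 - \frac{\varepsilon}{2(C+1)}$ below is positive. Now we choose $M \in \mathbb{N}$ with $\sum_{n=0}^{M} a_n > C - \frac{\varepsilon}{2}$. Furthermore, we choose $c \in (0,1)$ such that, for all $n \in \{0, 1, \dots, M\}$, we have
\[
c^n \ge 1 - \frac{\varepsilon}{2(C+1)} .
\]
Then one has, as $\frac{C}{C+1} < 1$:
\[
\sum_{n=0}^{\infty} c^n \cdot a_n \ge \sum_{n=0}^{M} c^n \cdot a_n > \left(1 - \frac{\varepsilon}{2(C+1)}\right)\cdot\left(C - \frac{\varepsilon}{2}\right) = C - \frac{\varepsilon}{2} - \frac{C\cdot\varepsilon}{2(C+1)} + \frac{\varepsilon^2}{4(C+1)} > C - \frac{\varepsilon}{2} - \frac{\varepsilon}{2} = C - \varepsilon = \sum_{n=0}^{\infty} a_n - \varepsilon
\]
Therefore, for every $\varepsilon > 0$ there exists $c$ such that
\[
\sum_{n=0}^{\infty} c^n \cdot a_n > \sum_{n=0}^{\infty} a_n - \varepsilon .
\]
We conclude
\[
\sup_{c\in(0,1)} \sum_{n=0}^{\infty} c^n \cdot a_n \ge \sum_{n=0}^{\infty} a_n .
\]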
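Lemma 4.31 can be seen on two simple series, both chosen here for illustration only. For the convergent series with $a_n = 2^{-n}$ (sum 2), the Abel sums $\sum c^n a_n = 1/(1 - c/2)$ increase to 2 as $c \uparrow 1$. For the divergent series with $a_n = 1$, they equal $1/(1-c)$ and are unbounded. The sketch truncates both series, and `abel_sum` is a hypothetical helper name.

```python
def abel_sum(a, c):
    """sum_n c^n a_n for a finite list a (a truncation of the series)."""
    return sum(value * c**n for n, value in enumerate(a))

a = [0.5 ** n for n in range(200)]      # sum_n a_n = 2 up to truncation
for c in (0.9, 0.99, 0.999, 0.9999):
    print(c, abel_sum(a, c))            # increases towards 2 as c -> 1

ones = [1.0] * 100000                   # divergent case: 1 + 1 + 1 + ...
for c in (0.9, 0.99, 0.999):
    print(c, abel_sum(ones, c))         # close to 1/(1 - c), unbounded as c -> 1
```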

Next, we introduce a distribution that will be essential for one direction of the upcoming proof of our main criterion.

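Example 4.32 (Triangle distribution). Let $X$ be a random variable with the density
\[
f(x) = 1 - |x| , \quad x \in (-1, 1) ,
\]
and $f(x) = 0$ otherwise. Then the characteristic function $\varphi_X(t)$, $t \neq 0$, can be calculated by integration by parts:
\[
\begin{aligned}
\varphi_X(t) &= \int_{-1}^{1} (1 - |x|)\cdot e^{itx}\,dx = \int_{-1}^{0} (1 + x)\cdot e^{itx}\,dx + \int_{0}^{1} (1 - x)\cdot e^{itx}\,dx \\
&= \frac{1}{t^2} - \frac{1}{t^2}e^{-it} - \frac{1}{t^2}e^{it} + \frac{1}{t^2} = \frac{2 - 2\cos(t)}{t^2}
\end{aligned}
\]
and $\varphi_X(0) = 1$.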

Using this, we can extend this example as follows:

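Example 4.33. Let $X$ be a random variable with the density
\[
g(x) = \frac{\delta - |x|}{\delta^2} , \quad |x| \le \delta , \qquad \text{and } g(x) = 0 \text{ otherwise.} \tag{12}
\]
Since $X$ has the same distribution as $\delta Y$, where $Y$ has the density $f$ from Example 4.32, we obtain the associated characteristic function
\[
\varphi(t) = \varphi_Y(\delta t) = \frac{2 - 2\cos(\delta t)}{(\delta t)^2} .
\]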
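The closed forms of Examples 4.32 and 4.33 can be compared with a direct numerical integration of $E[e^{itX}]$. The density is even, so the imaginary part vanishes and only $\cos(tx)$ has to be integrated. This sketch uses the midpoint rule with the kink at 0 placed on a grid boundary. The step count and the helper names `triangle_cf_numeric` and `triangle_cf_formula` are assumptions made here.

```python
import math

def triangle_cf_numeric(t, delta=1.0, steps=20000):
    """Midpoint-rule value of E[exp(itX)] for the density (delta - |x|) / delta^2 on [-delta, delta].

    The density is even, so only the real part (the cos(t x) integral) is computed.
    """
    h = 2 * delta / steps
    total = 0.0
    for j in range(steps):
        x = -delta + (j + 0.5) * h
        total += (delta - abs(x)) / delta**2 * math.cos(t * x) * h
    return total

def triangle_cf_formula(t, delta=1.0):
    """Closed form (2 - 2 cos(delta t)) / (delta t)^2 from Examples 4.32 and 4.33 (1 at t = 0)."""
    if t == 0:
        return 1.0
    return (2 - 2 * math.cos(delta * t)) / (delta * t) ** 2

for delta in (1.0, 0.5):
    for t in (0.3, 2.0, 7.5):
        print(delta, t, triangle_cf_numeric(t, delta), triangle_cf_formula(t, delta))
```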

Lemma 4.34. For $z \in \mathbb{C}$ with $\operatorname{Re} z < 1$, one has
\[
0 \le \operatorname{Re}\frac{1}{1 - z} \le \frac{1}{\operatorname{Re}(1 - z)}
\]

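Proof. Let $z = x + iy$. We observe that, since $\operatorname{Re}\bar z = \operatorname{Re} z$ and $\operatorname{Re} z < 1$:
\[
\operatorname{Re}\frac{1}{1 - z} = \operatorname{Re}\frac{\overline{1 - z}}{|1 - z|^2} = \frac{\operatorname{Re}(1 - z)}{|1 - z|^2} \ge 0
\]
and
\[
\frac{\operatorname{Re}(1 - z)}{|1 - z|^2} = \frac{1 - x}{|(1 - x) - iy|^2} = \frac{1 - x}{(1 - x)^2 + y^2} \le \frac{1 - x}{(1 - x)^2} = \frac{1}{\operatorname{Re}(1 - z)}
\]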
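A randomized check of Lemma 4.34 in Python: sample many $z$ with $\operatorname{Re} z < 1$ and test both inequalities. The sampling box and the fixed seed are arbitrary assumptions. A small relative tolerance absorbs rounding when $\operatorname{Im} z$ is nearly 0, where the upper bound is attained. The hypothetical helper is called `satisfies_lemma_434`.

```python
import random

def satisfies_lemma_434(z, rel_tol=1e-12):
    """Check 0 <= Re(1/(1-z)) <= 1/Re(1-z) for a complex z with Re z < 1 (up to rounding)."""
    w = 1 / (1 - z)
    upper = 1 / (1 - z).real
    return 0 <= w.real <= upper * (1 + rel_tol)

rng = random.Random(0)   # fixed seed for reproducibility
samples = [complex(rng.uniform(-5.0, 0.999), rng.uniform(-5.0, 5.0)) for _ in range(100000)]
print(all(satisfies_lemma_434(z) for z in samples))
print(1 / (1 - 2.0))     # without Re z < 1 the real part can be negative: -1.0
```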

We can finally state our criterion, which only requires knowledge of the characteristic function.

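Theorem 4.35. Let $\delta > 0$ and let $\psi$ be the characteristic function of $X_1$. Then:
\[
(S_n)_{n\in\mathbb{N}} \text{ is recurrent} \iff \int_{(-\delta,\delta)^d} \operatorname{Re}\frac{1}{1 - \psi(y)}\,dy = \infty
\]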

For our purposes, however, it suffices to prove the following weaker result:

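Theorem 4.36. Let $\delta > 0$ and let $\psi$ be the characteristic function of $X_1$. Then:
\[
(S_n)_{n\in\mathbb{N}} \text{ is recurrent} \iff \sup_{r<1}\int_{(-\delta,\delta)^d} \operatorname{Re}\frac{1}{1 - r\psi(y)}\,dy = \infty
\]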

Proof. “$\Rightarrow$”: Let $\mu_n$ be the distribution of $S_n$. As the density $g$ in (12) has the characteristic function (ch. f.) $\varphi(t) = \frac{2 - 2\cos(\delta t)}{(\delta t)^2}$, we can now observe the following:

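Firstly, if $t \in [-\frac{1}{\delta}, \frac{1}{\delta}]$, then $|\delta t| \le 1 \le \frac{\pi}{3}$. Thus, by Lemma 4.30, one obtains for all $t \in [-\frac{1}{\delta}, \frac{1}{\delta}]$ with $t \neq 0$:
\[
1 - \cos(\delta t) \ge \frac{(\delta t)^2}{4} \iff \frac{4(1 - \cos(\delta t))}{(\delta t)^2} \ge 1 \iff 2\varphi(t) \ge 1 ,
\]
and for $t = 0$ we have $2\varphi(0) = 2 \ge 1$ as well.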

Since we are in the $d$-dimensional case, let $Y_1, \dots, Y_d$ be iid random variables with the triangular density $g$ and therefore with the same ch. f. $\varphi$. Then, since $2\varphi(t) \ge 1$, one has for all $(x_1, \dots, x_d) \in [-\frac{1}{\delta}, \frac{1}{\delta}]^d$:
\[
2^d \cdot \prod_{i=1}^{d} \varphi(x_i) = \prod_{i=1}^{d} \bigl(2\varphi(x_i)\bigr) \ge 1 \tag{13}
\]
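The inequality $2\varphi(t) \ge 1$ on $[-1/\delta, 1/\delta]$, which drives (13), can be checked numerically for one value of $\delta$. The choice $\delta = 0.7$, the grid, the sample point `x` in dimension $d = 3`$ and the helper name `phi` are assumptions made for illustration only.

```python
import math

def phi(t, delta):
    """Characteristic function (2 - 2 cos(delta t)) / (delta t)^2 of the density g in (12)."""
    if t == 0:
        return 1.0
    return (2 - 2 * math.cos(delta * t)) / (delta * t) ** 2

delta = 0.7                                              # arbitrary choice of delta > 0
ts = [(-1 + k / 5000) / delta for k in range(10001)]     # grid on [-1/delta, 1/delta]
print(min(2 * phi(t, delta) for t in ts))                # Lemma 4.30 gives >= 1 here

# A sample point of the cube [-1/delta, 1/delta]^d with d = 3, as in (13)
x = (0.9 / delta, -0.4 / delta, 1.0 / delta)
print(math.prod(2 * phi(xi, delta) for xi in x))         # product of factors >= 1
```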

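Secondly, we have
\[
P\left(\|S_n\| < \frac{1}{\delta}\right) = P\left(S_n \in \left(-\frac{1}{\delta}, \frac{1}{\delta}\right)^d\right) = \int_{(-\frac{1}{\delta}, \frac{1}{\delta})^d} 1\,d\mu_n(x)
\]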

By (13), we have:
\[
P\left(\|S_n\| < \frac{1}{\delta}\right) \le 2^d \cdot \int_{(-\frac{1}{\delta}, \frac{1}{\delta})^d} \prod_{i=1}^{d} \varphi(x_i)\,d\mu_n(x)
\]

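Now we observe that $\varphi(t) \ge 0$ for all $t \in \mathbb{R}$, since $1 - \cos(\delta t) \ge 0$. As $(-\frac{1}{\delta}, \frac{1}{\delta})^d \subseteq \mathbb{R}^d$, we can enlarge the domain of integration and obtain:
\[
P\left(\|S_n\| < \frac{1}{\delta}\right) \le 2^d \cdot \int_{\mathbb{R}^d} \prod_{i=1}^{d} \varphi(x_i)\,d\mu_n(x)
\]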

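Now we can use Lemma 4.29, applied to $\mu_n$ and to the distribution of $(Y_1, \dots, Y_d)$, to interchange the probability measures and the corresponding ch. f.s and get:
\[
P\left(\|S_n\| < \frac{1}{\delta}\right) \le 2^d \cdot \int_{\mathbb{R}^d} \prod_{i=1}^{d} g(x_i)\,\psi^n(x)\,dx
\]
Here, we denote by $\psi$ the ch. f. of $X_1$. Since the sequence $(X_n)_{n\in\mathbb{N}}$ is iid, the ch. f. of $S_n$ is $\psi_{S_n} = \psi^n$.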

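Since $g(t) = \frac{\delta - |t|}{\delta^2}$ if $|t| \le \delta$ and $g(t) = 0$ otherwise, we can restrict the integral to the smaller domain $(-\delta, \delta)^d \subseteq \mathbb{R}^d$ and have
\[
P\left(\|S_n\| < \frac{1}{\delta}\right) \le 2^d \cdot \int_{(-\delta,\delta)^d} \prod_{i=1}^{d} \frac{\delta - |x_i|}{\delta^2}\,\psi^n(x)\,dx
\]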

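To be able to use Lemma 4.17, we need to insert sums. To interchange the sum and the integral, we choose a constant $r$ with $0 < r < 1$, multiply by $r^n$ and sum over $n \ge 0$. Since $|\psi(x)| \le 1$ for all $x$ as $\psi$ is a ch. f., we have $|r\psi(x)| < 1$ and thus, by the geometric series:
\[
\begin{aligned}
\sum_{n=0}^{\infty} r^n \cdot P(\|S_n\| < 1/\delta) &\le 2^d \cdot \int_{(-\delta,\delta)^d} \prod_{i=1}^{d} \frac{\delta - |x_i|}{\delta^2} \cdot \sum_{n=0}^{\infty} r^n \psi^n(x)\,dx \\
&= \left(\frac{2}{\delta}\right)^d \cdot \int_{(-\delta,\delta)^d} \prod_{i=1}^{d} \frac{\delta - |x_i|}{\delta} \cdot \frac{1}{1 - r\psi(x)}\,dx
\end{aligned} \tag{14}
\]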

In (14), we have not yet justified interchanging summation and integration. To do so, we use Fubini's theorem. Thus, we have to show
\[
\sum_{n=0}^{\infty} \int_{(-\delta,\delta)^d} \left|(r\cdot\psi(x))^n \prod_{i=1}^{d} \frac{\delta - |x_i|}{\delta}\right| dx < \infty \tag{15}
\]
as well as
\[
\int_{(-\delta,\delta)^d} \sum_{n=0}^{\infty} \left|(r\cdot\psi(x))^n \prod_{i=1}^{d} \frac{\delta - |x_i|}{\delta}\right| dx < \infty \tag{16}
\]

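Firstly, $|\psi(x)| \le 1$ and therefore $|\psi^n(x)| \le 1$ on $\mathbb{R}^d$. Secondly, for $t \in (-\delta, \delta)$, we have $0 \le \frac{\delta - |t|}{\delta} = 1 - \frac{|t|}{\delta} \le 1$. For $(x_1, \dots, x_d) \in (-\delta, \delta)^d$, we obtain:
\[
\left|(r\cdot\psi(x))^n \prod_{i=1}^{d} \frac{\delta - |x_i|}{\delta}\right| \le r^n
\]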

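Therefore, we get for (15), since $0 < r < 1$:
\[
\sum_{n=0}^{\infty} \int_{(-\delta,\delta)^d} \left|(r\cdot\psi(x))^n \prod_{i=1}^{d} \frac{\delta - |x_i|}{\delta}\right| dx \le \sum_{n=0}^{\infty} \int_{(-\delta,\delta)^d} r^n\,dx = \sum_{n=0}^{\infty} (\delta - (-\delta))^d \cdot r^n = (2\delta)^d \cdot \frac{1}{1 - r} < \infty
\]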

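For (16), we have:
\[
\int_{(-\delta,\delta)^d} \sum_{n=0}^{\infty} \left|(r\cdot\psi(x))^n \prod_{i=1}^{d} \frac{\delta - |x_i|}{\delta}\right| dx \le \int_{(-\delta,\delta)^d} \sum_{n=0}^{\infty} r^n\,dx = \int_{(-\delta,\delta)^d} \frac{1}{1 - r}\,dx = (2\delta)^d \cdot \frac{1}{1 - r} < \infty
\]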

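By Lemma 4.29, each integral $\int_{\mathbb{R}^d} \prod_{i=1}^{d} g(x_i)\,\psi^n(x)\,dx$ equals the real number $\int_{\mathbb{R}^d} \prod_{i=1}^{d} \varphi(x_i)\,d\mu_n(x)$. Hence the right-hand side of (14) is real, and it does not change if we replace $\frac{1}{1 - r\psi(x)}$ by its real part. By Lemma 4.34 with $z = r\psi(x)$ (note that $\operatorname{Re}(r\psi(x)) \le r < 1$), this real part is nonnegative. Together with $0 \le \prod_{i=1}^{d} \frac{\delta - |x_i|}{\delta} \le 1$, we obtain:
\[
\sum_{n=0}^{\infty} r^n \cdot P(\|S_n\| < 1/\delta) \le \left(\frac{2}{\delta}\right)^d \cdot \int_{(-\delta,\delta)^d} \operatorname{Re}\frac{1}{1 - r\psi(x)}\,dx \tag{17}
\]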

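Since (17) holds for every $0 < r < 1$, we can apply Lemma 4.31 with $a_n = P(\|S_n\| < 1/\delta)$ and get
\[
\sum_{n=0}^{\infty} P(\|S_n\| < 1/\delta) \le \left(\frac{2}{\delta}\right)^d \cdot \sup_{r<1}\int_{(-\delta,\delta)^d} \operatorname{Re}\frac{1}{1 - r\psi(x)}\,dx .
\]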

Now assume that
\[
\sup_{r<1}\int_{(-\delta,\delta)^d} \operatorname{Re}\frac{1}{1 - r\psi(x)}\,dx < \infty .
\]
But then one would also have
\[
\sum_{n=0}^{\infty} P(\|S_n\| < 1/\delta) < \infty
\]

and with Theorem 4.23, setting $\varepsilon = \frac{1}{\delta}$, we obtain transience. This proves the first direction.

“$\Leftarrow$”: Now we assume that
\[
\sup_{r<1}\int_{(-\delta,\delta)^d} \operatorname{Re}\frac{1}{1 - r\psi(x)}\,dx = \infty
\]
holds. The density
\[
h(t) = \frac{\delta(1 - \cos(t/\delta))}{\pi t^2}
\]
has the ch. f. $\theta(t) = 1 - |\delta t|$ for $|t| \le 1/\delta$ and $\theta(t) = 0$ elsewhere. This is called Pólya's distribution. For the explicit calculation (which is not difficult but somewhat lengthy), see [1] or [3].

We argue similarly as in “$\Rightarrow$”: if $t \in (-\frac{1}{\delta}, \frac{1}{\delta})$, then
\[
0 \le \theta(t) = 1 - |\delta t| \le 1 .
\]

Since we are again in the $d$-dimensional case, we obtain for all $(x_1, \dots, x_d) \in (-\frac{1}{\delta}, \frac{1}{\delta})^d$:
\[
0 \le \prod_{i=1}^{d} \theta(x_i) = \prod_{i=1}^{d} (1 - |\delta x_i|) \le 1 \tag{18}
\]

And thus, with (18):
\[
P(\|S_n\| < 1/\delta) = \int_{(-\frac{1}{\delta}, \frac{1}{\delta})^d} 1\,d\mu_n(x) \ge \int_{(-\frac{1}{\delta}, \frac{1}{\delta})^d} \prod_{i=1}^{d} \theta(x_i)\,d\mu_n(x)
\]

As $\theta(t) = 0$ for $t \notin (-\frac{1}{\delta}, \frac{1}{\delta})$, we can enlarge the domain of integration and get:
\[
P(\|S_n\| < 1/\delta) \ge \int_{\mathbb{R}^d} \prod_{i=1}^{d} \theta(x_i)\,d\mu_n(x) \tag{19}
\]

By the same argument as in the first direction, we use Lemma 4.29 to interchange the probability measures and the corresponding ch. f.s. With the density $h(x_i) = \frac{\delta(1 - \cos(x_i/\delta))}{\pi x_i^2}$ from above, we get:
\[
P(\|S_n\| < 1/\delta) \ge \int_{\mathbb{R}^d} \prod_{i=1}^{d} h(x_i)\,\psi^n(x)\,dx
\]

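As before, we take $0 < r < 1$, multiply by $r^n$ and sum over $n$ from $0$ to $\infty$:
\[
\sum_{n=0}^{\infty} r^n \cdot P(\|S_n\| < 1/\delta) \ge \sum_{n=0}^{\infty} \int_{\mathbb{R}^d} (r\cdot\psi(x))^n \prod_{i=1}^{d} h(x_i)\,dx
\]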

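Once again, in order to make use of Fubini's theorem, we have to show:
\[
\sum_{n=0}^{\infty} \int_{\mathbb{R}^d} \left|(r\cdot\psi(x))^n \prod_{i=1}^{d} h(x_i)\right| dx < \infty \tag{20}
\]
as well as
\[
\int_{\mathbb{R}^d} \sum_{n=0}^{\infty} \left|(r\cdot\psi(x))^n \prod_{i=1}^{d} h(x_i)\right| dx < \infty \tag{21}
\]
We prove only (20), since (21) follows from it: for nonnegative integrands, summation and integration may always be interchanged, so both expressions coincide.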

First of all, some basic observations: $h \ge 0$ on $\mathbb{R}$, as $1 - \cos(z) \ge 0$ for all $z \in \mathbb{R}$, and therefore $|h| = h$ on $\mathbb{R}$. Furthermore, as $\psi$ is a ch. f., we have $|\psi| \le 1$ and thus $|\psi^n| \le 1$. Moreover, $\int_{\mathbb{R}} h(t)\,dt = 1$, since $h$ is a density function. Now we can calculate for $r < 1$, using Fubini's theorem since $h \ge 0$ on $\mathbb{R}$:

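\[
\sum_{n=0}^{\infty} \int_{\mathbb{R}^d} \left|(r\cdot\psi(x))^n \prod_{i=1}^{d} h(x_i)\right| dx \le \sum_{n=0}^{\infty} r^n \cdot \int_{\mathbb{R}^d} \prod_{i=1}^{d} h(x_i)\,dx = \sum_{n=0}^{\infty} r^n \cdot \prod_{i=1}^{d} \int_{\mathbb{R}} h(t)\,dt = \sum_{n=0}^{\infty} r^n \cdot 1^d = \frac{1}{1 - r} < \infty
\]
Hence Fubini's theorem applies. By Lemma 4.29, each integral $\int_{\mathbb{R}^d} \prod_{i=1}^{d} h(x_i)\,\psi^n(x)\,dx$ equals the real number $\int_{\mathbb{R}^d} \prod_{i=1}^{d} \theta(x_i)\,d\mu_n(x)$. So the following expressions are real, and we may pass to real parts:
\[
\begin{aligned}
\sum_{n=0}^{\infty} r^n \cdot P(\|S_n\| < 1/\delta) &\ge \int_{\mathbb{R}^d} \sum_{n=0}^{\infty} (r\cdot\psi(x))^n \prod_{i=1}^{d} h(x_i)\,dx \\
&= \int_{\mathbb{R}^d} \left(\prod_{i=1}^{d} h(x_i)\right) \cdot \frac{1}{1 - r\psi(x)}\,dx \\
&= \int_{\mathbb{R}^d} \left(\prod_{i=1}^{d} h(x_i)\right) \cdot \operatorname{Re}\frac{1}{1 - r\psi(x)}\,dx
\end{aligned}
\]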

By Lemma 4.34, since $\operatorname{Re}(r\psi(x)) \le r < 1$ for all $x \in \mathbb{R}^d$, we have
\[
\operatorname{Re}\frac{1}{1 - r\psi(x)} \ge 0 .
\]

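Since the integrand is therefore nonnegative, we may restrict the integral to the smaller domain $(-\delta, \delta)^d \subseteq \mathbb{R}^d$ and have:
\[
\sum_{n=0}^{\infty} r^n \cdot P(\|S_n\| < 1/\delta) \ge \int_{(-\delta,\delta)^d} \left(\prod_{i=1}^{d} h(x_i)\right) \cdot \operatorname{Re}\frac{1}{1 - r\psi(x)}\,dx
\]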

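Now we once again apply Lemma 4.30, as $\frac{\pi}{3} \ge 1 \ge \left|\frac{t}{\delta}\right|$ for all $t \in (-\delta, \delta)$, $t \neq 0$:
\[
h(t) = \frac{\delta\cdot(1 - \cos(t/\delta))}{\pi t^2} \ge \frac{\delta\cdot(t/\delta)^2}{4\pi t^2} = \frac{1}{4\pi\delta} \tag{22}
\]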

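We can, of course, apply this observation to every coordinate of $(x_1, \dots, x_d) \in (-\delta, \delta)^d$. Since the bound in (22) does not depend on $(x_1, \dots, x_d)$, we get:
\[
\prod_{i=1}^{d} h(x_i) \ge \prod_{i=1}^{d} \frac{1}{4\pi\delta} = \frac{1}{(4\pi\delta)^d}
\]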
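The lower bound (22) for the Pólya density can be checked on a grid of $(-\delta, \delta)$. At $t = 0$, the density is extended by continuity to $h(0) = 1/(2\pi\delta)$. The choice $\delta = 0.3$ and the helper name `polya_density` are assumptions made here for illustration.

```python
import math

def polya_density(t, delta):
    """h(t) = delta (1 - cos(t / delta)) / (pi t^2), with h(0) = 1 / (2 pi delta) by continuity."""
    if t == 0:
        return 1 / (2 * math.pi * delta)
    return delta * (1 - math.cos(t / delta)) / (math.pi * t * t)

delta = 0.3                                                  # arbitrary choice of delta > 0
lower = 1 / (4 * math.pi * delta)                            # right-hand side of (22)
grid = [delta * (-1 + k / 5000) for k in range(1, 10000)]    # points of (-delta, delta)
print(min(polya_density(t, delta) for t in grid) / lower)    # ratio >= 1, as (22) claims
```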


Therefore, we have:
\[
\sum_{n=0}^{\infty} r^n \cdot P(\|S_n\| < 1/\delta) \ge \frac{1}{(4\pi\delta)^d} \cdot \int_{(-\delta,\delta)^d} \operatorname{Re}\frac{1}{1 - r\psi(x)}\,dx
\]

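We conclude (once again) with Lemma 4.31 and Theorem 4.23, with $\varepsilon = \frac{1}{\delta}$:
\[
\sum_{n=0}^{\infty} P(\|S_n\| < 1/\delta) \ge \frac{1}{(4\pi\delta)^d} \cdot \sup_{r<1}\int_{(-\delta,\delta)^d} \operatorname{Re}\frac{1}{1 - r\psi(x)}\,dx = \infty ,
\]
so $(S_n)_{n\in\mathbb{N}}$ is recurrent.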

To end this chapter, we give an example in which we apply one of the criteria stated above.

Example 4.37. Let $d = 1$ and let $\psi(t) = e^{-|t|^\alpha}$ for $\alpha \in (0, 2]$. We observe that
\[
1 - r\psi(t) = 1 - r\cdot e^{-|t|^\alpha} \downarrow 1 - e^{-|t|^\alpha} \quad \text{as } r \uparrow 1 .
\]

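Furthermore, we have
\[
\psi'(t) = \begin{cases} -\alpha\cdot|t|^{\alpha-1}\cdot e^{-|t|^\alpha} , & t > 0 \\ \alpha\cdot|t|^{\alpha-1}\cdot e^{-|t|^\alpha} , & t < 0 . \end{cases}
\]
With $f(t) = |t|^\alpha$, we obtain by L'Hôpital's rule:
\[
\lim_{t\downarrow 0} \frac{1 - e^{-|t|^\alpha}}{f(t)} = \lim_{t\downarrow 0} \frac{\alpha|t|^{\alpha-1} e^{-|t|^\alpha}}{\alpha|t|^{\alpha-1}} = \lim_{t\downarrow 0} e^{-|t|^\alpha} = 1
\]
Here we computed the limit for $t \downarrow 0$. The case $t \uparrow 0$ is the same by symmetry.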

Thus,
\[
1 - e^{-|t|^\alpha} \sim |t|^\alpha \quad \text{as } t \to 0 .
\]

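We now apply Theorem 4.36. Since $\psi$ is real and positive, the integrand $\frac{1}{1 - r\psi(t)}$ increases as $r \uparrow 1$. The monotone convergence theorem therefore gives:
\[
\sup_{r<1}\int_{(-\delta,\delta)} \operatorname{Re}\frac{1}{1 - r\psi(t)}\,dt = \int_{(-\delta,\delta)} \frac{1}{1 - \psi(t)}\,dt \;\sim\; \int_{(-\delta,\delta)} |t|^{-\alpha}\,dt \begin{cases} = \infty , & \alpha \ge 1 \\ < \infty , & \alpha < 1 \end{cases}
\]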

Thus, these random walks are recurrent ⇐⇒ α ≥ 1. We have the following

three cases:


(i) For $\alpha > 1$, we have $E[|X_j|] < \infty$. Then, for $1 \le j \le n$, we get:
\[
E[X_j] = \frac{1}{i}\psi'(0) = 0
\]
Therefore, the weak law of large numbers gives $S_n/n \to 0$ in probability, and Theorem 4.25 yields recurrence.

(ii) For $\alpha = 1$, we observe that $\varphi_1(t) = e^{-|t|}$, $t \in \mathbb{R}$, is the ch. f. of the density $f(x) = \frac{1}{\pi}\cdot\frac{1}{1 + x^2}$, $x \in \mathbb{R}$. This is the density of the Cauchy distribution, see [2]. Since the $X_j$ are iid and $S_n = \sum_{i=1}^{n} X_i$, the ch. f. of $S_n$ is given by
\[
\psi_{S_n}(t) = \varphi_1^n(t) = \left(e^{-|t|}\right)^n = e^{-n|t|} .
\]
Now, $S_n/n$ has the ch. f.
\[
\psi_{S_n/n}(t) = E\left[e^{itS_n/n}\right] = \psi_{S_n}(t/n) = e^{-|t|} = \varphi_1(t) .
\]
Thus, $S_n/n$ is Cauchy distributed for every $n$, and therefore $S_n/n \not\to 0$ in probability. So, surprisingly, the weak law of large numbers does not hold, even though $(S_n)$ is recurrent.

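(iii) For $\alpha < 1$, the random walk is transient by the computation above. Nevertheless, it does not drift away to one side. By Theorem 3.10, we have four possibilities. Since $\psi$ is real and even, the distribution of the $(X_n)_{n\in\mathbb{N}}$ is symmetric about 0. We apply Example 3.11, as $P(X_i = 0) < 1$ for all $i \in \mathbb{N}$, and obtain
\[
-\infty = \liminf_{n\to\infty} S_n < \limsup_{n\to\infty} S_n = \infty \quad \text{a.s.}
\]
So the walk oscillates between $-\infty$ and $\infty$, and yet $P(\|S_n\| < \varepsilon \text{ i.o.}) = 0$ for every $\varepsilon > 0$, i.e. it is transient.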
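The dichotomy in Example 4.37 can be observed numerically. The sketch below fixes $\delta = 1$ (an arbitrary choice) and approximates the truncated integral $\int_{\eta<|t|<\delta} \frac{dt}{1 - e^{-|t|^\alpha}}$ on a logarithmic grid while the cut-off $\eta$ shrinks. For $\alpha < 1$ the values settle down. For $\alpha \ge 1$ they keep growing, like $\log(1/\eta)$ when $\alpha = 1$ and like $\eta^{1-\alpha}$ when $\alpha > 1$. Only truncated integrals are computed, so this illustrates the divergence rather than proving it. The helper name `truncated_integral` and the step count are assumptions made here.

```python
import math

def truncated_integral(alpha, eta, delta=1.0, steps=20000):
    """Approximate the integral of 1 / (1 - exp(-|t|^alpha)) over eta < |t| < delta.

    Uses the substitution t = e^s (so dt = t ds) and the midpoint rule; the factor 2
    accounts for the symmetric negative half-line.
    """
    a, b = math.log(eta), math.log(delta)
    h = (b - a) / steps
    total = 0.0
    for j in range(steps):
        t = math.exp(a + (j + 0.5) * h)
        total += t / (-math.expm1(-t ** alpha)) * h   # 1 - e^{-u} computed as -expm1(-u)
    return 2 * total

for alpha in (0.5, 1.0, 1.5):
    print(alpha, [truncated_integral(alpha, eta) for eta in (1e-4, 1e-8, 1e-12)])
```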


5 Outlook

Recurrence, as characterized in Theorem 4.36, is not simply determined by whether the steps of the random walk have heavy tails or not: a recurrent random walk can have arbitrarily heavy tails [12]. More precisely, one can prove:

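Theorem 5.1. Let $\varepsilon(x)$ be a function such that $\lim_{x\to\infty} \varepsilon(x) = 0$. Then there exists a symmetric random variable $X_1$ with the property
\[
P(|X_1| > x) \ge \varepsilon(x) \quad \text{for large } x ,
\]
such that the random walk $(S_n)_{n\in\mathbb{N}}$ with steps distributed like $X_1$ is recurrent.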

Similarly to Example 4.37, one can show for $d = 2$ that the random walks with ch. f. $\varphi(t) = e^{-|t|^\alpha}$, where $|\cdot|$ denotes the Euclidean norm, are transient for $\alpha < 2$ and recurrent for $\alpha = 2$.

This shows that Theorem 4.27 is indeed a sharp criterion, as the case $\alpha = 2$ corresponds to a nondegenerate normal distribution.

For $d = 3$, we need to look at random walks that do not stay on a plane through 0, as a two-dimensional random walk embedded in $\mathbb{R}^3$ can still be recurrent. More formally:

Definition 5.2. A random walk $(S_n)_{n\in\mathbb{N}}$ in $\mathbb{R}^3$ is truly three-dimensional if, for all $\theta \neq 0$, the distribution of $X_1$ satisfies $P(\langle X_1, \theta\rangle \neq 0) > 0$.

We close this chapter with the following result:

Theorem 5.3. No truly three-dimensional random walk is recurrent.

For the proof, see once again [1] or [3].

Thus, we have finally generalized the recurrence conditions to random walks on $\mathbb{R}^d$.


6 Conclusion

To return to our “blind squirrel” from the introduction, we can deduce the following. Suppose the squirrel has wings and uses them to fly while changing its altitude, i.e. it does not fly “on a plane” at a fixed height but also up and down. Then, almost surely, it will not keep finding the same nut again and again: it returns to it at most finitely often.

More generally, this bachelor's thesis provides suitable tools for investigating the recurrence of random walks, together with the necessary basic definitions and preliminary results.


References

[1] R. Durrett, Probability: Theory and Examples. Cambridge: Cambridge University Press, 2010.

[2] S. Rolles, “Probability theory.” Lecture notes, TUM, 2015.

[3] S. Lazarus, “On the recurrence of random walks.” Paper for REU 2013, University of Chicago.

[4] S. Rolles, “Notizen zur Vorlesung Markovketten (MA2404).” Lecture notes, TUM, 2016.

[5] J. Watkins, “Discrete Time Stochastic Processes.” Lecture notes, University of Arizona, 2007.

[6] S. Endres, “Irrfahrten - Alle Wege führen nach Rom.” Diploma thesis, Bayerische Julius-Maximilians-Universität Würzburg, 2008.

[7] T. Leenman, “Simple random walk.” Mathematical Institute of Leiden University, 2008.

[8] W. L. Champion, “Lost In Space.” Mathematical Scientist, vol. 32, p. 88, 2007.

[9] S. Rolles, “Probability on graphs.” Lecture notes, TUM, 2016.

[10] F. Spitzer, Principles of Random Walk. Springer Science+Business Media, LLC, 1976.

[11] K. Chung, A Course in Probability Theory. Stanford University: Academic Press, 2001.

[12] R. Peled, “Random Walks and Brownian Motion.” Lecture notes, Tel Aviv University, 2011.
