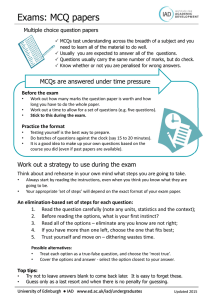

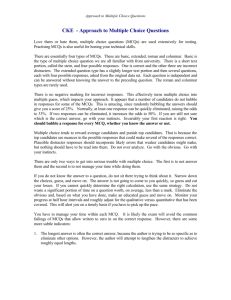

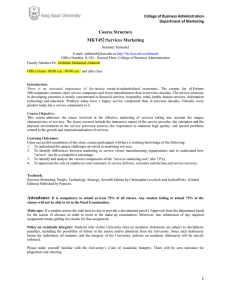

Designing Effective Multiple-Choice Questions (MCQs)?

Does it ever fail to be true that none of the so-called "Quagmire Factors" sometimes come into play during a routine Helzog-Froomish analysis? ______________________________________

- A. Yes, always
- B. No, never
- C. No, always
- D. All of the above
- E. None of the above
- F. "A" and "C"
- G. "D" and "E"
- VII. Sometimes, at low temperatures

Source: http://cte.uwaterloo.ca/teaching_resources/tips/designing_multiple_choice_questions.html

Designing Effective Multiple-Choice Questions (MCQs)

Teaching and Learning Services, McGill, with support from professors Marie Dagenais (Dentistry), David Ragsdale (Medicine) and Carolyn Samuel (Continuing Studies).

Workshop Goals

- Describe what can be tested with multiple-choice questions
- Evaluate multiple-choice questions using commonly accepted criteria
- Become familiar with guidelines for writing effective multiple-choice questions

Focus: formal (graded) testing

Your Experience with MCQs

What has been your experience with MCQs as a student? As a TA or instructor?

What can be tested by MCQs?

"I am wondering if there is any way to really use multiple choice tests meaningfully before I banish them from my classes forever"

Levels of Learning (Bloom's Taxonomy, Revised)[1]

The taxonomy figure is annotated as follows:

- Highest level: not appropriate for MCQs
- Higher-order learning that can be tested with MCQs
- Lower-order learning is what MCQs most often target

[1] Anderson, L. W., & Krathwohl, D. R. (Eds.). (2001). A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's Taxonomy of Educational Objectives. New York: Longman.

Image from Purdue University, Reflections on Teaching and Learning webpage: http://blogs.itap.purdue.edu/learning/2012/05/04/review-of-idc-tools-to-assess-blooms-taxonomy-of-cognitive-domain/

Examples of MCQs at Different Levels of Learning

See handout.

Creating Effective MCQs

1. Defining the purpose
2. Writing the questions

Defining the Purpose

- What learning outcome do you want to test?
- Where could the learner go wrong? (challenges, common mistakes, misconceptions)

Examples of questions with rationale

Calculate the median of the following numbers: 15, 27, 27, 44, 67, 75, 81.

- A. 7
- B. 27
- C. 44
- D. 48

The distractors are not arbitrary: 7 is the number of values, 27 is the mode, and 48 is the mean (336 / 7); the correct answer, 44, is the median.

Example

Learning outcome: to make appropriate clinical decisions based on laboratory data.

A 62-year-old woman with a history of confusion and constipation comes to the office for a follow-up visit. Laboratory investigations reveal a serum calcium of 2.9 mmol/L, a creatinine of 146 µmol/L, and a hemoglobin of 108 g/L. Which one of the following would help confirm the diagnosis?

- A. Parathyroid hormone
- B. Serum protein electrophoresis*
- C. 25-OH vitamin D
- D. Serum creatinine
- E. Abdominal ultrasound

The asterisk marks the correct answer.

Writing the Questions

Anatomy of an MCQ

Stem (item 3): What is chiefly responsible for the increase in the average length of life in the USA during the last fifty years?

Alternatives:

- a. Compulsory health and physical education courses in public schools. (distractor)
- b. The reduced death rate among infants and young children. (answer)
- c. The safety movement, which has greatly reduced the number of deaths from accidents. (distractor)
- d. The substitution of machines for human labor. (distractor)

Source: Burton et al. (1991). How to Prepare Better Multiple-Choice Test Items: Guidelines for University Faculty. Brigham Young University Testing Services. Retrieved from http://testing.byu.edu/info/handbooks/betteritems.pdf

One-Best-Answer Questions

- Stem: vignette and lead-in question
- 3-5 alternatives: 1 correct (best) answer and 2-4 plausible distractors

Stem

- Addresses a single problem or question
- Is worded positively, unless knowing what "not to do" is important
- Contains only relevant information
- Can be answered with the alternatives hidden

Alternatives

- Homogeneous in nature
- Mutually exclusive
- Plausible

Effective alternatives are designed to:

- Prevent students who DON'T know the answer from guessing it correctly.
- Prevent students who DO know the answer from being confused.

(See checklist.)

Example

Original item:

A test which can be scored by a clerk untrained in the content area of the test is an

1. diagnostic test.
2. criterion-referenced tests.
3. objective test.
4. reliable test.
5. subjective test.

Revised item:

A test, which can be scored by a clerk untrained in the content area of the test, is said to be

1. diagnostic.
2. criterion-referenced.
3. objective.
4. reliable.
5. subjective.

In the original, the article "an" points to "objective test", the only alternative that begins with a vowel. The revision removes that clue, the plural mismatch in "criterion-referenced tests", and the repeated word "test".

Example

Which of the following songs did the Beatles compose?

- a) Let It Be
- b) Yesterday
- c) Polythene Pam
- d) All of the above
- e) None of the above

Activity: Evaluating MCQs

What's wrong with these questions? (See handout.)

Conclusion

A good MCQ:

- Measures a learning outcome for which it is well suited
- Does not contain clues to the right answer
- Is clearly worded

Source: Burton et al. (1991). How to Prepare Better Multiple-Choice Test Items: Guidelines for University Faculty. Brigham Young University Testing Services. Retrieved from http://testing.byu.edu/info/handbooks/betteritems.pdf

References

This handbook provides a thorough overview of the topic:

Burton, S. J., Sudweeks, R. R., Merrill, P. F., & Wood, B. (1991). How to prepare better multiple-choice test items: Guidelines for university faculty [PDF document]. Retrieved from Brigham Young University Testing Centre Web site: http://testing.byu.edu/info/handbooks/betteritems.pdf

This blog post was written by Jonathan Sterne, an associate professor in the Department of Art History and Communication Studies at McGill. He presents a fresh take on developing multiple-choice questions that go beyond recognition, for use in large lectures:

Sterne, J. (2013, January 10). Multiple choice exam theory (just in time for the new term) [Web log post]. Retrieved from http://chronicle.com/blogs/profhacker/multiple-choice-exam-theory/45275

Case, S. M., & Swanson, D. B. (2002). Constructing written test questions for the basic and clinical sciences (3rd ed.) [PDF document]. Retrieved from National Board of Medical Examiners Web site: http://www.nbme.org/PDF/ItemWriting_2003/2003IWGwhole.pdf

Clegg, V. L., & Cashin, W. E. (1986). Improving multiple-choice tests (IDEA Paper No. 16). Center for Faculty Evaluation and Development, Kansas State University.

Haladyna, T. M., Downing, S. M., & Rodriguez, M. C. (2002). A review of multiple-choice item-writing guidelines for classroom assessment. Applied Measurement in Education, 15, 309-334.

Hurley, K. F. (2005). OSCE and clinical skills handbook. Canada: Elsevier Saunders.

Medical Council of Canada. (2010). Guidelines for the development of multiple-choice questions. Retrieved from Medical Council of Canada Web site: http://www.mcc.ca/pdf/MCQ_Guidelines_e.pdf

Centre for Teaching Excellence, University of Waterloo. (n.d.). Designing multiple-choice questions. Retrieved from http://cte.uwaterloo.ca/teaching_resources/tips/designing_multiple_choice_questions.html

Thank you! Please complete the workshop evaluation form.
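Some of the one-best-answer rules in this workshop are mechanical enough to pre-check automatically: a stem, 3-5 alternatives with exactly one correct answer, mutually exclusive alternatives, and no grammatical clue such as a stem ending in "an". The Python sketch below is a minimal illustration under our own assumptions, not a tool from the workshop or its sources. The class name `OneBestAnswerItem`, its fields, and the patterns it flags are hypothetical, and treating "All of the above", "None of the above" and combinations like '"A" and "C"' as breaking mutual exclusivity is our interpretation of the Alternatives criteria. It cannot judge plausibility, homogeneity, or whether an item targets the intended learning outcome; those still need a human reviewer.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns for "composite" alternatives. Each one overlaps with
# the other alternatives, so the set is no longer mutually exclusive.
COMPOSITE_OPTIONS = {"all of the above", "none of the above"}
# Matches combinations such as '"A" and "C"' once the text is lowercased.
COMBINATION = re.compile(r'"?[a-e]"?\s+and\s+"?[a-e]"?')


@dataclass
class OneBestAnswerItem:
    """A one-best-answer MCQ: a stem plus alternatives with a single key."""

    stem: str                # vignette and lead-in question
    alternatives: list[str]  # the correct answer plus the distractors
    answer_index: int        # position of the one correct (best) answer

    def problems(self) -> list[str]:
        """Return the mechanical problems found; an empty list means none."""
        issues = []
        n = len(self.alternatives)
        # 3-5 alternatives: 1 correct answer plus 2-4 distractors.
        if not 3 <= n <= 5:
            issues.append(f"expected 3-5 alternatives, found {n}")
        # A single index guarantees exactly one keyed answer; just check
        # that it points at an existing alternative.
        if not 0 <= self.answer_index < n:
            issues.append("answer_index does not point to an alternative")
        # Composite options break the "mutually exclusive" criterion.
        for alt in self.alternatives:
            normalized = alt.strip().lower().rstrip(".")
            if normalized in COMPOSITE_OPTIONS or COMBINATION.fullmatch(normalized):
                issues.append(f"{alt!r} overlaps with the other alternatives")
        # A stem ending in "a" or "an" can cue the answer grammatically.
        words = self.stem.split()
        if words and words[-1].lower() in {"a", "an"}:
            issues.append(f"stem ends with the article {words[-1]!r}, a possible clue")
        return issues


# Usage with the Beatles item. answer_index=3 assumes "All of the above"
# is the keyed answer; the slides do not say which option is keyed.
beatles = OneBestAnswerItem(
    stem="Which of the following songs did the Beatles compose?",
    alternatives=["Let It Be", "Yesterday", "Polythene Pam",
                  "All of the above", "None of the above"],
    answer_index=3,
)
for issue in beatles.problems():
    print(issue)  # two lines, one per composite alternative
```

Storing the key as a single index, rather than a true/false flag on each alternative, makes "exactly one correct answer" hold by construction, so only the index range needs checking.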