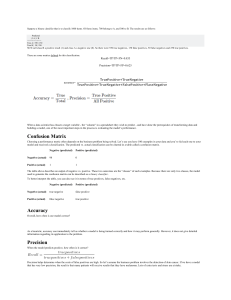

Classification Definition Classification is a task that requires the use of machine learning algorithms that learn how to assign a class label to examples from the problem domain. An easy to understand example is classifying emails as “spam” or “not spam.” Classification Predictive Modeling In machine learning, classification refers to a predictive modeling problem where a class label is predicted for a given example of input data. Examples of classification problems include: -Given an example, classify if it is spam or not. -Given a handwritten character, classify it as one of the known characters. -Given recent user behavior, classify as churn or not. Class labels are often string values, e.g. spam, not spam, and must be mapped to numeric values before being provided to an algorithm for modeling. This is often referred to as label encoding, where a unique integer is assigned to each class label, e.g. “spam” = 0, “no spam” = 1. Types of Classification • • • • Binary Classification Multi-Class Classification Multi-Label Classification Imbalanced Classification Binary Classification Binary classification refers to those classification tasks that have two class labels. Binary Classification Examples include: -Email spam detection (spam or not). -Churn prediction (churn or not). -Conversion prediction (buy or not). Binary classification tasks involve one class that is the normal state (class label 0) and another class that is the abnormal state (class label 1). Popular algorithms Algorithms that can be used for binary classification include: • Logistic Regression • k-Nearest Neighbors • Decision Trees • Support Vector Machine • Naive Bayes Some algorithms are specifically designed for binary classification and do not natively support more than two classes. -examples include Logistic Regression and Support Vector Machines. Multi-Class Classification Multi-class classification refers to those classification tasks that have more than two class labels. Multi-Class Classification Examples include: -Face classification. -Plant species classification. -Optical character recognition In multi class classification, examples are classified as belonging to one among a range of known classes. Popular algorithms Algorithms that can be used for multi-class classification include: – k-Nearest Neighbors. – Decision Trees. – Naive Bayes. – Random Forest. – Gradient Boosting. Other Classifications Multi-Label Classification -Multi-label classification refers to those classification tasks that have two or more class labels, where one or more class labels may be predicted for each example. Imbalanced Classification -Imbalanced classification refers to classification tasks where the number of examples in each class is unequally distributed. Assessing Classification Performance Why? -Multiple methods are available to classify or predict -For each method, multiple choices are available for settings -To choose best model, need to assess each model’s performance Accuracy Measures (Classification) Misclassification error • Error = classifying a record as belonging to one class when it belongs to another class. • Error rate = percent of misclassified records out of the total records in the validation data Confusion Matrix A confusion matrix is a table that is often used to describe the performance of a classification model (or "classifier") on a set of test data for which the true values are known. Confusion Matrix A confusion matrix (Kohavi and Provost, 1998) contains information about actual and predicted classifications done by a classification system. Performance of such systems is commonly evaluated using the data in the matrix. Example confusion matrix for a binary classifier -There are two possible predicted classes: "yes" and "no". If we were predicting the presence of a disease, for example, "yes" would mean they have the disease, and "no" would mean they don't have the disease. -The classifier made a total of 165 predictions (e.g., 165 patients were being tested for the presence of that disease). -Out of those 165 cases, the classifier predicted "yes" 110 times, and "no" 55 times. -In reality, 105 patients in the sample have the disease, and 60 patients do not. true positives (TP): These are cases in which we predicted yes (they have the disease), and they do have the disease. true negatives (TN): We predicted no, and they don't have the disease. false positives (FP): We predicted yes, but they don't actually have the disease. (Also known as a "Type I error.") false negatives (FN): We predicted no, but they actually do have the disease. (Also known as a "Type II error.") • Accuracy: Overall, how often is the classifier correct? – (TP+TN)/total = (100+50)/165 = 0.91 • Misclassification Rate: Overall, how often is it wrong? – (FP+FN)/total = (10+5)/165 = 0.09 – equivalent to 1 minus Accuracy – also known as "Error Rate" • True Positive Rate: When it's actually yes, how often does it predict yes? – TP/actual yes = 100/105 = 0.95 – also known as "Sensitivity" or "Recall" • False Positive Rate: When it's actually no, how often does it predict yes? – FP/actual no = 10/60 = 0.17 • True Negative Rate: When it's actually no, how often does it predict no? – TN/actual no = 50/60 = 0.83 – equivalent to 1 minus False Positive Rate – also known as "Specificity" • Precision: When it predicts yes, how often is it correct? – TP/predicted yes = 100/110 = 0.91 • Prevalence: How often does the yes condition actually occur in our sample? – actual yes/total = 105/165 = 0.64 ROC Curve ROC = Receiver Operating Characteristic • Started in electronic signal detection theory (1940s - 1950s) • Has become very popular in biomedical applications, particularly radiology and imaging • Also used in machine learning applications to assess classifiers • Can be used to compare tests/procedures ROC curves: simplest case • Consider diagnostic test for a disease • Test has 2 possible outcomes: – ‘positive’ = suggesting presence of disease – ‘negative’ • An individual can test either positive or negative for the disease ROC Analysis • True Positives = Test states you have the disease when you do have the disease • True Negatives = Test states you do not have the disease when you do not have the disease • False Positives = Test states you have the disease when you do not have the disease • False Negatives = Test states you do not have the disease when you do Specific Example Threshold Some Definitions… Some Definitions… Some Definitions… Some Definitions… Moving the Threshold: right Moving the Threshold: left Threshold Value • The outcome of a logistic regression model is a probability • Often, we want to make a binary prediction • We can do this using a threshold value t • If P(y = 1) ≥ t, predict positive – If P(y = 1) < t, predict negative – What value should we pick for t? Threshold Value • Often selected based on which errors are “better” • If t is large, predict positive rarely (when P(y=1) is large) – More errors where we say negative , but it is actually positive – Detects patients who are negative • If t is small, predict negative rarely (when P(y=1) is small) – More errors where we say positive, but it is actually negative – Detects all patients who are positive • With no preference between the errors, select t = 0.5 – Predicts the more likely outcome THANK YOU!