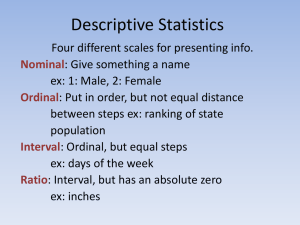

Chapter 1 – Introduction to Statistics and Business Analytics 1.1 – Basic Statistical Concepts • • Statistics – a science dealing with the collection, analysis, interpretation and presentation of numerical data. Key Elements of Statistics o Descriptive Statistics Population – collection of persons, objects, or items of interest (called a census when they gather data from a whole population) A sample is a portion of the whole and, if properly taken, is representative of the whole. If a business analyst is using data gathered on a group to describe or reach conclusions about that group, it’s called descriptive statistics. • Example: if one produces stats to summarize class’s exam results and uses those statistics to reach conclusions about the class. o Inferential Statistics If an analyst gathers data from a sample and uses the statistics generated to reach conclusions about the population from which the sample was taken. Used a lot in pharmaceutical research Used to study the impact of advertising on various market segments A descriptive measure of the population is called a parameter A descriptive measure of a sample is called a statistic The basis for inferential statistics, then, is the ability to make decisions about parameters without having to complete a census of the population 1.2 – Variables, Data and Data Measurement • • • In business statistics, a variable is a characteristic of any entity being studies that is capable of taking on different values. o Examples: Return of investment, advertising dollars, labour productivity, stock price etc. A measurement occurs when a standard process is used to assign numbers to particular attributes or characteristics of a variable. (Data are recorded measurements) Four common levels of data measurements are o Nominal Nominal-level data can be used only to classify or categorize • Example: employee identification numbers, sex, religion, place of birth. o Ordinal Ordinal-level data can be used to rank or order objects. • Example: supervisor ranking employees 1 to 3 • Not helpful – extremely helpful scale is used Both Nominal and Ordinal data are derived from imprecise measurements, so they are nonmetric data, or often called qualitative data. o Interval Distances between the consecutive numbers have meaning and the data are always numerical. Interval data have equal intervals The zero is not a fixed-point example is temperature. o Ratio Same as intervals but radio data have an absolute zero. • The zero value in the data represents the absence of the characteristic being studied. Examples: Height, mass time, production cycle time, passenger distance. Since both interval and ratio level data are collected with precise instruments, they are called metric data and are sometimes referred to as quantitative data. • Comparison of the Four Levels of Data o Nominal data are the most limited data in terms of the types of statistical analysis that can be used with them. Ordinal data allow the statistician to perform any analysis that can be done with nominal data and some additional ones. With ratio data, a statistician can make ratio comparisons and appropriately do any analysis that can be performed on nominal, ordinal, • or interval data. Some statistical techniques require ratio data and cannot be used to analyze other levels of data. Statistical techniques can be separated into two categories: Parametric Statistics and nonparametric statistics. o Parametric statistics require that data be interval or ratio o Non-Parametric statistics require the data to be nominal or ordinal. 1.3 – Big Data • • • Big data had been defined as a collection of large and complex datasets from different sources that are difficult to process using traditional data management and processing applications. Can be seen as a large amount of either organized or unorganized data that is analyzed to make an informed decision or evaluation. 4 characteristics of big data o Variety Many different forms of data based on data sources o Velocity The speed at which data is available and can be processed o Veracity Has to do about data quality, correctness and accuracy Indicates reliability, authenticity, legitimacy and validity in the data o Volume Has to do with the ever-increasing size of the data and databases. A fifth characteristic that is sometimes considered is Value, Analysis of data that doesn’t generate value is no use to an organization. Chapter 2 – Visualizing Data with Charts and Graphs 2.1 – Frequency Distributions • • • • • • • Raw data or data that have not been summarized in any way, are sometimes referred to as ungrouped data Data that has been organized into a frequency distribution are called grouped data One particularly useful tool for grouping data is the frequency distribution which is a summary of data presented in the form of class intervals and frequencies. The range is often defined as the difference between the largest and smallest numbers. The midpoint of each class interval is called the class midpoint and sometimes referred to as the class mark. It is the value halfway across the class interval and can be calculated as the average of the two class endpoints. Relative frequency is the proportion of the total frequency that is in any given class interval in a frequency distribution. Individual class frequency divided by the total frequency. Cumulative frequency is a running total of frequencies through the classes of a frequency distribution. Frequency for that class added to the preceding cumulative total. 2.2 – Quantitative Data Graphs • • • • • • One of the more widely used types of graphs for quantitative data is the histogram. A histogram is a series of contiguous rectangles that represents the frequency of data in given class intervals. X-axis with class endpoints and y-axis with the frequencies A frequency polygon, like the histogram is a graphical display of class frequencies. In a frequency polygon each class frequency is plotted as a dot at the class midpoint, and all the dots are connected by a series of line segments. An ogive is a cumulative frequency polygon, the scale of the yaxis has to be great enough to include the frequency total. o Ogives are most useful when the decision-maker wants to see running totals. Steam-and-leaf plot is constructed by separating the digits for each number of the data into two groups, a stem and a leaf. o The leftmost digits are the stem and have higher-valued digits o The rightmost digits are the leaves and contain the lower-valued digits Chapter 3 – Descriptive Statistics 3.1 – Measures of Central Tendency • • • • • • Measures of Central Tendency yield information about the centre, or middle part, of a group of numbers The arithmetic mean is the average of a group of numbers and is computed by summoning all numbers and dividing it by the number of numbers. (a.k.a. Mean) The median is the middle value in an ordered array of numbers. For an array with an odd number of terms the median is the middle number. For any array with an even number of terms the median is the average of the two middle numbers. Them mode is the most frequently occurring value in a set of data Percentiles are measures of central tendencies that divide a group of data into 100 parts. o There are 99 percentiles because it takes 99 dividers to separate a group into 100 parts. o Percentiles are “stair-step” values so a 67.7% would round down top the 67th percentile Quartiles are measures of central tendency that divide a group of data into four subgroups or parts. An example that best describes this is: 3.2 – Measures of Variability • Measures of variability are used to describe the spread or the dispersion of a set of data. Measures of variability is necessary to complement the mean value in describing the data. • • The range is the difference between the largest value of data and the smallest value of the set. Three other measures of variability are the variance, the standard deviation, and the mean absolute deviation. To identify the spread of the data you would subtract the mean from each data value which would yield the deviation from the mean (xi − μ). Sum of Deviations from the Arithmetic Mean is always zero. The mean absolute deviation (MAD) is the average of the absolute values of the deviations around the mean for a set of numbers The variance is the average of the squared deviations about the arithmetic mean for a set of numbers. The population variance is denoted by σ2. The sum of the squared deviations around the mean of a set of values is called the sum of squares of x. Standard Deviation is the square root of the variance. Empirical Rule is an important guideline that is used to state the approximate percentage of values that lie within a given number of standard deviations from the mean of a set of data if the data are normally distributed. The main use for sample variances and standard deviations is as estimators of population variances and standard deviations Computational Formulas Utilize he sum of the x values and the sum of the x2 values instead of the difference between the mean and each value and computed deviations • • • A z-score represents the number of standard deviations a value is above or below the mean set of numbers when the data are normally distributed. o If raw value is below the mean the z score is negative, and if the raw value is above the mean then the z score is positive, The coefficient off a variation is a statistic that is the ratio of the standard deviation to the mean expressed in percentage and is denoted CV The coefficient of variation is essentially a relative comparison of a standard deviation to its mean. The coefficient of variation can be useful in comparing standard deviations that have been computed from data with different means 3.4 – Business Analytics using Descriptive Statistics • Descriptive statistics is one of the three categories of business analytics o Excel and other computer packages have a descriptive statistics feature that can produce many descriptive statistics in one table. Example: o Used to simplify large amounts of data, and the excel programs, charts and tables help us do just this.