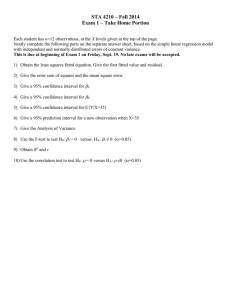

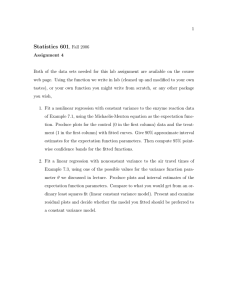

Review for Linear Regression

- Association: two variables are associated if knowing the value of one tells you something about the value of the other.
  - Often the goal is causality, not association.
  - Association does not mean causality.
- Response variable (y): the outcome of the study.
  - In statistics it is not called the dependent variable, because dependency is only valid when there is causation, not necessarily when there is only association.
- Explanatory variable (x): explains or causes changes in the response variable.
- On a scatterplot, the explanatory variable is on the x-axis and the response variable is on the y-axis.
- Deterministic relationship between X and Y: Y is a function g of X.
  - $Y = g(X)$
- A scatterplot is described using form, direction, strength, and outliers.
  - Form: linear, curved, clusters, no pattern
  - Direction: positive, negative, horizontal
  - Strength: strong or weak, that is, how close the points are to the line
  - Outliers: outliers in the y direction usually do not affect the regression line much. Outliers in the x direction can greatly affect it, so always look for x-axis outliers.
- Regression line: a straight line that describes how a response variable y changes as an explanatory variable x changes.
  - We can use a regression line to predict the value of y for a given value of x.
- Notation:
  - n independent observations
  - $x_i$ are the explanatory observations
  - $y_i$ are the observed responses
  - we have n ordered pairs $(x_i, y_i)$
- Simple linear regression model
  - Let $(x_i, y_i)$ be pairs of observations.
  - We assume that there exist constants $\beta_0$ and $\beta_1$ such that $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$, where $\varepsilon_i \sim N(0, \sigma^2)$.
    - $x_i$ is fixed.
    - The variance of $Y_i$ is the same as the variance of $\varepsilon_i$: since $\beta_0$, $\beta_1$, and $X_i$ are constants, all the randomness in $Y_i$ comes from $\varepsilon_i$.
  - $E(Y_i) = \beta_0 + \beta_1 X_i$
  - $\varepsilon_i$ are random deviations from the true regression line (random error terms).
    - The error terms are just the difference of each point from the line in the y direction.
    - iid: independent with identical distributions
    - The expected value of $Y_i$ is the deterministic part of the model because the error term has a mean of 0.
- Assumptions for linear regression
  - SRS with the observations independent of each other
  - The relationship between X and Y is linear in the population
  - The residuals have a normal distribution
  - The standard deviation of the residuals is constant
- SSR: variation due to the line, $b_1 S_{xy}$
- SSE: variation due to error, SST - SSR
- SST: total variation, $S_{yy}$ or SSR + SSE
- dfr: the equation for the line has 2 constants and there is 1 average, so dfr = 2 - 1 = 1
- dfe: n - 2
- dft: n - 1
- MSR: SSR/dfr = SSR (because dfr = 1)
- MSE: SSE/dfe
- MST: SST/dft
- Facts about least-squares regression
  - Slope: the change in predicted y for a one-unit change in x
  - Intercept: the value of y when x = 0. Often x = 0 has no practical meaning, so most of the time this value is not relevant.
  - The line always passes through the point $(\bar{x}, \bar{y})$.
  - There is an inherent difference between x and y.
    - If we switch x and y, the slope will change and the assumptions will change.
    - We assume that x is fixed, so if we switch x and y, we are now assuming that y is fixed.
  - $b_1 = S_{xy}/S_{xx}$
    - $S_{xy} = \sum (x_i - \bar{x})(y_i - \bar{y})$; for the slope to be negative, $S_{xy}$ must be less than 0.
    - $S_{xx} = \sum (x_i - \bar{x})^2$ can never be negative.
  - $\hat{y}$ is an unbiased estimator of $\mu_{y|x}$
  - $b_0$ is an unbiased estimator of $\beta_0$
  - $b_1$ is an unbiased estimator of $\beta_1$
  - the residual is $e_i = y_i - \hat{y}_i$
  - $s^2$ = SSE/dfe = MSE
  - $s = \sqrt{MSE}$: a good, but not unbiased, estimator of the standard deviation $\sigma$
- $R^2$: coefficient of determination, the fraction of the variation in y that is explained by the least-squares regression of y on x, SSR/SST
  - Proportion of the variation in the response variable that is explained by the linear relationship with the explanatory variable
  - If this is high, the fit is good because most of the variation is due to the line, not due to the residuals or error.
  - If this is low, the fit is not good because the line does not explain the variation in Y.
  - $R^2$ does not tell you anything about the linearity of the data points. If $R^2$ is high, the relationship is probably linear; if it is low, we know nothing about linearity unless the data points are plotted. A low $R^2$ means that either the points are not close to the line or the relationship is not linear.
  - $R^2$ is not resistant to outliers.
  - A large $R^2$ does not by itself mean that you can make a good prediction, because $R^2$ is a ratio. For linear regression, you need to know MSE (the measure of the absolute error) to know whether a prediction is valid.
  - $r^2 = R^2$ is only valid for simple linear regression.
- Sample correlation: r is a measure of the strength of a linear relationship between two continuous variables, $r = S_{xy}/\sqrt{S_{xx} S_{yy}}$
  - Correlation makes no distinction between explanatory and response variables.
    - If X and Y were switched, there would be no change to the calculation.
  - r has no units and does not change when the units of x and y change
  - r > 0: positive association
  - r < 0: negative association
  - r is always a number between -1 and 1
  - -1 < r < -0.8 or 0.8 < r < 1: strong correlation
  - -0.8 < r < -0.5 or 0.5 < r < 0.8: moderate correlation
  - -0.5 < r < 0.5: weak correlation
  - r = 0: x and y are linearly uncorrelated; this does not mean there is no association between x and y.
  - A value of r = 0 says only that there is no linear association between x and y.
  - Correlation requires that both variables be quantitative.
  - Correlation measures the strength of linear relationships only.
  - Correlation is not resistant to outliers.
  - Correlation is not a complete summary of bivariate data; it does not provide information on form.
  - ALWAYS PLOT YOUR DATA
- Analyzing the y-intercept only makes sense if the y-intercept holds physical meaning and x = 0 is realistic.
- Why is a residual plot useful?
  - It is easier to look at points relative to a horizontal line than a slanted line.
  - The scale is larger.
- $F_{ts}$: compares the variance due to the model to the variance due to the residuals or error
  - If the test statistic is large, most of the variance in the response variable is due to the model, so there is an association between X and Y. This means that there is a "large" slope.
  - If the test statistic is small, the variance due to the model is about the same as or less than the variance due to the residuals, so the slope is approximately 0 or there is no association.
- The model utility test tests whether or not there is an association between two variables. If you want to know whether the slope is positive or negative, you have to use the test of significance on the slope.
- The value of $F_{ts}$ is $t_{ts}^2$.
- Cautions about correlation and regression
  - Both require good experimental design
  - Both describe linear relationships
  - Both are affected by outliers
  - Beware of extrapolation
  - Beware of lurking variables
- Extrapolation occurs when you are looking outside of the range of the explanatory variable, where there are no points.
  - Interpolation, by contrast, is within the range of the x-axis.
  - You cannot accurately predict anything about the response variable if x is outside the observed range of the x-axis.
- If there are lurking variables, then you might not be measuring what you think you are.
- If you want to determine causation from association, you need to look at additional information.
- $SE_{\hat{\mu}^*}$: standard error associated with the mean response
  - Consists of two parts: the 1/n term comes from estimating the level of the line ($\bar{y}$), and the $(x^* - \bar{x})^2/S_{xx}$ term comes from estimating the slope.
  - No part of it comes from the random error of an individual point.
  - The second part depends on the value of $x^*$, so the variance increases as $x^*$ moves further from the mean value of x.
- $SE_{\hat{y}^*}$: standard error associated with an individual observation
- The confidence band tells us how confident we are in the equation of the line. Therefore, the actual data points are not necessarily included in the shaded area.
- The prediction band, however, tells you where the next value will be. All or most of the data points will be included in the area.
- The confidence interval will be narrower than the prediction interval because the prediction interval has the added uncertainty of the individual point, which is $\sigma^2$. Therefore, the SE is bigger for prediction intervals.
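The difference between the two standard errors can be checked numerically. The following is a minimal R sketch, not part of the original notes: the data values, the choice of `xstar`, and the 95% level are all made up for illustration. It computes both intervals by hand from MSE and $S_{xx}$ and then with `predict()`, so the two routes can be compared.

```r
# Hypothetical data, invented purely for illustration
x <- c(1, 2, 3, 4, 5)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1)
n <- length(x)

fit <- lm(y ~ x)
MSE <- sum(resid(fit)^2) / (n - 2)    # s^2 = SSE / dfe
Sxx <- sum((x - mean(x))^2)

xstar <- 4.5                          # hypothetical x value of interest

# Standard error for the mean response and for a new observation
se_mean <- sqrt(MSE * (1 / n + (xstar - mean(x))^2 / Sxx))
se_pred <- sqrt(MSE * (1 + 1 / n + (xstar - mean(x))^2 / Sxx))

# Point estimate on the fitted line and the t critical value (alpha = 0.05)
yhat  <- unname(coef(fit)[1] + coef(fit)[2] * xstar)
tcrit <- qt(0.05 / 2, df = n - 2, lower.tail = FALSE)

ci_hand <- c(yhat - tcrit * se_mean, yhat + tcrit * se_mean)
pi_hand <- c(yhat - tcrit * se_pred, yhat + tcrit * se_pred)

# Same intervals from predict(); the column in newdata must be named x
newdata <- data.frame(x = xstar)
ci_r <- predict(fit, newdata, interval = "confidence", level = 0.95)
pi_r <- predict(fit, newdata, interval = "prediction", level = 0.95)

print(rbind(ci_hand, pi_hand))
print(ci_r)
print(pi_r)
```

The hand-computed limits should agree with the `lwr` and `upr` columns from `predict()`, and the prediction interval should be the wider of the two because of the extra `1 +` inside the square root.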
- To make a good prediction
  - Precise (narrow) prediction interval
  - Low MSE
  - High n
  - Large $S_{xx}$

Model Utility F Test

- Step 1: define the terms
  - Not needed for the model utility F test
- Step 2: state the hypotheses
  - $H_0$: there is no association between X and Y
  - $H_a$: there is an association between X and Y
- Step 3: state the test statistic, df, p-value
  - $F_{ts}$ = MSR/MSE, df1 = dfr = 1, df2 = dfe = n - 2
  - If data is unknown
    - p-value: `pf(Fts, df1, df2, lower.tail = FALSE)`
  - If data is known
    - `Table.lm <- lm(YVar ~ XVar, data = Table)`
    - `summary(Table.lm)`
- Step 4: conclusion in context
  - Reject or fail to reject $H_0$ because…
  - The data does [not] provide [strong] support (p = value) to the claim that there is a linear relationship between…

Test of Significance on the Slope

- Step 1: define the terms
  - $\beta_1$ is the population slope of [] vs []
- Step 2: state the hypotheses
  - $H_0$: $\beta_1 = \beta_{10} = 0$
  - $H_a$: $\beta_1 \neq \beta_{10}$, that is, $\beta_1 \neq 0$
  - Note: in $\beta_{10}$ the subscript is a 0 after a 1 (the hypothesized value of $\beta_1$), not the number 10.
- Step 3: state the test statistic, df, p-value
  - $t_{ts} = b_1/SE_{b_1} = b_1/\sqrt{MSE/S_{xx}}$, df = dfe = n - 2
  - If data is unknown
    - p-value: `2*pt(abs(tts), df, lower.tail = FALSE)`
- Step 4: conclusion in context
  - Reject or fail to reject $H_0$ because…
  - The data does [not] provide [strong] support (p = value) to the claim that there is a linear relationship between…

Confidence Interval for the Slope

- If data is unknown
  - `t <- qt(alpha/2, df, lower.tail = FALSE)`
  - `SE <- sqrt(MSE/Sxx)`
  - `c(b1 - t*SE, b1 + t*SE)`
- If data is known
  - `Table.lm <- lm(YVar ~ XVar, data = Table)`
  - `summary(Table.lm)`
  - `confint(Table.lm, level = C)`

Confidence Interval for Mean at a Point ($SE_{\hat{\mu}^*}$)

- If data is unknown
  - `t <- qt(alpha/2, df, lower.tail = FALSE)`
  - `SE <- sqrt(MSE*(1/n + (xstar - xbar)^2/Sxx))`
- If data is known
  - `newdata <- data.frame(XVar = NewValue)`
  - `predict(Table.lm, newdata, interval = "confidence", level = 0.99)`
- We are C% confident that the population mean y is covered by the interval () when x is x*.

Prediction Interval for a New Observation at a Point ($SE_{\hat{y}^*}$)

- If data is unknown
  - `SE <- sqrt(MSE*(1 + 1/n + (xstar - xbar)^2/Sxx))`
- If data is known
  - `newdata <- data.frame(XVar = NewValue)`
  - `predict(Table.lm, newdata, interval = "prediction", level = 0.99)`
- We are C% confident that the next y is covered by the interval () when x is x*.
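
As a check on the "data is unknown" formulas, the sketch below builds every quantity from $S_{xx}$, $S_{yy}$, and $S_{xy}$ and compares the results with `lm()`. It is only an illustration under assumed inputs: the five data points are invented, and the variable names (`Fts`, `tts`, `R2`, and so on) simply mirror the notation in these notes.

```r
# Hypothetical data, invented purely for illustration
x <- c(1, 2, 3, 4, 5)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1)
n <- length(x)

# Sums of squares and cross products about the means
Sxx <- sum((x - mean(x))^2)
Syy <- sum((y - mean(y))^2)
Sxy <- sum((x - mean(x)) * (y - mean(y)))

# Least-squares estimates
b1 <- Sxy / Sxx
b0 <- mean(y) - b1 * mean(x)

# ANOVA decomposition
SST <- Syy
SSR <- b1 * Sxy
SSE <- SST - SSR
dfr <- 1
dfe <- n - 2
MSR <- SSR / dfr
MSE <- SSE / dfe

# Model utility F test
Fts <- MSR / MSE
p_F <- pf(Fts, dfr, dfe, lower.tail = FALSE)

# Two-sided test of significance on the slope
SE_b1 <- sqrt(MSE / Sxx)
tts   <- b1 / SE_b1
p_t   <- 2 * pt(abs(tts), dfe, lower.tail = FALSE)

# 95% confidence interval for the slope
tcrit    <- qt(0.05 / 2, dfe, lower.tail = FALSE)
ci_slope <- c(b1 - tcrit * SE_b1, b1 + tcrit * SE_b1)

# Coefficient of determination and sample correlation
R2 <- SSR / SST
r  <- Sxy / sqrt(Sxx * Syy)

# Same analysis with the data known
fit <- lm(y ~ x)
print(summary(fit))
print(confint(fit, level = 0.95))
print(c(Fts = Fts, tts_squared = tts^2, R2 = R2, r_squared = r^2))
```

In simple linear regression the printed `Fts` and `tts^2` should match, as should `R2` and `r^2`, and the hand-computed slope interval should agree with the `x` row of `confint()`.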