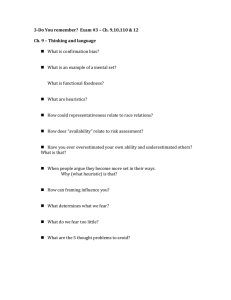

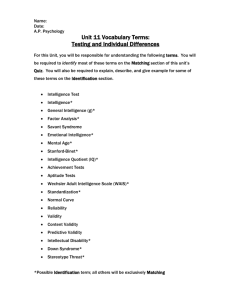

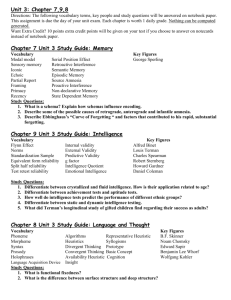

PSYC371 Exam Notes

CHAPTER 1 – CONTEXT

Psychological tests = used to make decisions or promote self-understanding, by providing more accurate information about a person than you could get without the test.

The ability to select, administer, score, interpret and report on tests is one of the main skills needed to be a psychologist.

Timeline:
o 1890 – First time ‘mental test’ was used, by James McKeen Cattell
o 1905 – Binet produced intelligence test for children in French schools, for special education classes
o 1916 – Terman produced Stanford-Binet Intelligence test (still used today)
o 1917 – Woodworth produces first personality assessment
o 1921 – Rorschach develops Inkblot projective test (based on psychoanalysis – Freud)
o 1939 – Wechsler develops adult intelligence test (Wechsler-Bellevue, forerunner of the WAIS)
o 1942 – MMPI developed
o 1957 – Raymond Cattell develops performance motivation test
o 1968 – Mischel critiques personality assessments
o 1988 – Ziskin and Faust challenge use of psychological tests in court

Key Players:
o Alfred Binet
  - Intelligence testing of children for special needs classes in France
  - Stanford-Binet still in use
  - Concept of “Mental Age”
o Stanley Porteus
  - Testing with Australian Aboriginal children
  - No need for English fluency
  - Maze-like intelligence test
o David Wechsler
  - Adult intelligence test
  - Developed in inpatient facility to assist with differential diagnosis
  - Deviation IQ instead of mental age
o Hathaway and McKinley
  - Minnesota Multiphasic Personality Inventory (MMPI)
  - 566 items
o Rorschach
  - Projective test
  - Inkblot test
  - Most widely used projective test
o Murray
  - Projective test
  - Thematic Apperception Test (TAT)
  - Second most widely used projective test

Characteristics of Psychological Tests:
o Sample of behaviour that is used to make inferences about the individual within a social context
  - Criterion referencing = what effective behaviour looks like in the situation (the person has to meet a certain criterion – e.g. 50/100 = PASS in an exam)
  - Norm referencing = performance of an individual relative to the norms of the group they came from (e.g. normal for a small child to be afraid of the dark; not normal for an adult)
o Objective procedures
  - Standardised scoring, administration, time limits etc.
  - No intentional or unintentional bias
  - Requires inter-rater reliability and validity
o Quantitative measures
  - Scoring as opposed to “ideas”
o Provides objective reference point for evaluating behaviour
  - Criterion referencing v norm referencing
  - Allows for a comparison between the individual and everyone else
o Must meet a number of criteria to be considered a useful information-gathering device
  - Psychometric properties
  - Scoring
  - Accurate and reproducible test

Limitations of psychological tests:
o Only a tool – doesn’t have all the answers
o Used to attempt to capture a hypothetical construct – e.g. intelligence testing only really assesses one’s academic intelligence, not real-life, worldly intelligence
o Need to be constantly evaluated and refined to stay current, otherwise they become obsolete
o Can disadvantage minority groups (e.g. English literacy problems, cultural context for learning etc.)
CHAPTER 2 – ETHICS AND BEST PRACTICE

Testing = a measure that only examines a specific construct
Assessment = holistic approach (multiple factors), of which testing is a part (also observation, interviews etc.)

Testing and assessment are done by ClinPsychs, EducationPsychs, ForensicPsychs, OrganisationalPsychs and Neuropsychs.

All tests are similar, but they differ in the areas of:
o Responses required – self-report, interview, performance tests etc.
o Administration – who is allowed to administer the test is restricted for some tests (ClinPsych v Neuropsych)
o Frame of reference for comparing performance – norm referencing v criterion referencing

Best Practice:
o Determine whether test is needed (not always suitable or applicable)
o Select appropriate test
o Administer test
o Score test
o Interpret test
o Report on findings
o Keep records

Code of Ethics from APS:
o 3 broad principles
  - Respect for the rights and dignity of people and peoples
  - Propriety
  - Integrity

NHMRC national statement of research ethics:
o Values and principles
o Risk and benefit
o Discomfort and inconvenience
o Low risk and negligible risk
o Consent

Cultural differences:
o Culture goes beyond language
o Tests need to be (as much as possible) culture neutral – Culture Fair Test
o Tests need to be unbiased

CHAPTER 3 – SCORING AND NORMS

Interpreting test scores:
o Raw scores usually need interpreting or transforming
o Criterion-referenced scores and norm-referenced scores are interpreted differently.
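To make the criterion v norm distinction concrete, here is a minimal Python sketch that interprets the same raw score both ways. The function names, the cut-off of 50 and the norm group mean (65) and SD (10) are made up for illustration, and the percentile step assumes the norm group's scores are roughly normally distributed.

```python
from statistics import NormalDist


def criterion_referenced(raw_score, cut_off):
    """Compare the raw score to a fixed standard (e.g. 50/100 = PASS)."""
    return "PASS" if raw_score >= cut_off else "FAIL"


def norm_referenced(raw_score, norm_mean, norm_sd):
    """Compare the raw score to a norm group.

    Returns the z-score and an approximate percentile rank.
    Assumption: norm group scores are approximately normal.
    """
    z = (raw_score - norm_mean) / norm_sd
    percentile = NormalDist().cdf(z) * 100
    return z, percentile


# Hypothetical exam: same raw score, two different interpretations.
raw = 50
result = criterion_referenced(raw, cut_off=50)
z, percentile = norm_referenced(raw, norm_mean=65, norm_sd=10)

print(result)                      # meets the criterion
print(f"z = {z:.2f}")              # 1.5 SDs below the norm group mean
print(f"percentile = {percentile:.1f}")
```

The same 50/100 is a pass against the criterion but sits well below average against this (invented) norm group, which is why the frame of reference has to be stated when reporting a score.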
Transforming norm-referenced scores:
o Usually done by transforming raw scores into one of:
  - Z-score (M=0, SD=1)
  - T-score (M=50, SD=10; personality tests)
  - STEN score – 10 point scale, M=5.5, SD=2
  - Percentile (not the same as a percentage)
  - Stanine scale (Standard Nine) – nine percentile groups

Other methods of scoring:
o Difficulty level to score ability tests (Logit Scale)
o Age/Grade equivalents
o Expectancy tables

Norms:
o Rule of thumb: check that the source of the norms is generalizable to your situation (contextualise)
  - Are the norms relevant to the situation?
  - Are the norms known to be culturally specific?
  - How are the test scores being used?
  - Can results be checked a different way?
  - Explain why you used the norms you did.
o Random sampling means norms are indicative of the general population
o Flynn effect – average IQ scores are rising over time, so tests need to be re-normed.

CHAPTER 4 - RELIABILITY

Reliability = is the test dependable time and again, i.e. will the test keep giving the same result if repeated across time.
o Systematic error = systematic changes that can be predicted and therefore compensated for (e.g. phone battery dying over a period of time, so you carry a charger)
o Unsystematic error = unpredictable changes that cannot be compensated for (e.g. dropping your phone and smashing the screen is unpredictable and you can’t compensate for it).

Domain-Sampling Model:
o Based on generalizability theory and classical test theory
o A way of thinking about the composition of a psychological test that sees the test as a representative sample of the larger domain of possible items that could be included in the test
o Idea is that if a person gets a certain score on a test, we assume that a similar score would be obtained if other samples of items from the domain were put to the person repeatedly.
o We expect some variation due to sampling, but the mean of those scores would tell us the person’s true score (as it is called in Classical Test Theory).
o Test-retest reliability
o Inter-rater reliability
o Generalizability

Reliability Scores:
o Observed Score = True Score + Error Score, so observed score variance = true score variance + error variance
o Reliability coefficient = the proportion of observed score variance that is due to true score variance.
o Reliability coefficients range from r=1 (perfect reliability) through r=.5 (50% reliability) to r=0 (no reliability).

Measures of reliability:
o Equivalent forms
o Internal consistency
o Test-retest
o Inter-scorer (like HSC drama marking panel)

Reliability Coefficients:
o Three main methods of calculating:
  - Equivalent forms (two versions of the test – see how well scores on them correlate)
  - Split half (split the test in half and correlate the halves, then correct to full test length with the Spearman-Brown formula)
  - Internal consistency (Cronbach’s alpha – treat each item as if it were a subtest and correlate each “subtest” against all the others). Good internal consistency = .7 or above.
  - Using ‘alpha if item deleted’: best item = lowest alpha (removing it hurts the scale most), worst item = highest alpha (removing it improves the scale).

Sources of Error:
o Test construction (item content or sampling, format)
o Test administration (test environment, examiner-related variables e.g. presence, demeanour, rapport etc.)
o Test taker (human fallibility, time, practice effects)
o Test scoring & interpretation (human error in scoring, less applicable in computer scoring)

Standard Error of Measurement – think of it like a confidence interval or margin of error around the observed score.

CHAPTER 5 - VALIDITY

Validity = does the test measure what it’s supposed to measure. Without validity, the items are meaningless.

Construct validity = does the test measure the construct effectively (for example, does an intelligence test measure intelligence?) Strong theory = strong validity. Test development helps develop theory and theory helps test development.
o Campbell and Fiske developed the Multi-trait Multi-method (MTMM) matrix as a tool to assess construct validity (the concept introduced by Cronbach and Meehl), by setting criteria for which correlations should be large and which should be small in terms of the psychological theory of the constructs. (The table with the triangles!)
o The main idea is that measures of the same construct should relate more strongly to each other than to measures of different constructs, whether those use the same or different methods.

Content validity = validity of the content of the items in the test (FACE VALIDITY – can you guess what the test is asking about based on the items?)
o Good for achievement testing
o Not overly psychometrically sound – sometimes you don’t want the test taker to know what you’re getting at.
o Culture has a big impact on judgments concerning the content validity of tests and test items (e.g. the content of an Australian history test is going to be more valid as a measure of history knowledge in Australia than it would be in America).

Predictive Validity = how well does the test predict how a person will perform in something else (for example, how well does an aptitude test predict how well a child will do at school; or how well does the DASS21 predict how severely depressed/anxious/stressed a person is?)
o Criterion is external to the test itself
o Criterion scores obtained after some time interval from test scores = predictive of something in the future (Kindy aptitude test vs HSC)
o Sensitivity = how good is the test at picking up what we want to find? The proportion of people who have the trait that the test correctly identifies (e.g. how many depressed people are flagged as depressed?)
o Specificity = how well does the test discriminate? The proportion of people who do not have the trait that the test correctly identifies as not having it (e.g. how many non-depressed people are flagged as not depressed?)

Concurrent Validity = both the test and the criterion are assessed at the same time (e.g. testing a new depression/anxiety/stress scale against the DASS – you’re assessing a) content validity (is the new scale assessing D/A/S?) AND b) whether a person is depressed/anxious/stressed). Example: DASS21 and K10.

Incremental Validity = the degree to which an additional predictor explains something about the criterion measure over and above what is explained by the predictors already in use (for example, HSC results being explained not only by students’ intelligence, but also by their school, socio-economic status etc., each adding something on top of the last). Related to multiple regression.

Factor Analysis = calculates all possible correlation coefficients between all possible pairs of tests.
o Exploratory factor analysis = no hypothesis, exploring possible factors
o Confirmatory factor analysis = a hypothesis that you are trying to confirm
o Can be used to determine both convergent and divergent validity (how alike or different two constructs are)

Decision theory = the simplest decision that can be made with a test is when it is used to decide which of two categories a person belongs to (e.g. male v female, mentally disordered v not mentally disordered, gambler v not gambler).
o Can lead to errors though (for example):
  - Valid positive: Looks depressed – is depressed
  - Valid negative: Doesn’t look depressed – is not depressed
  - False positive: Looks depressed – is not depressed
  - False negative: Doesn’t look depressed – is depressed
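The four decision outcomes above, and the sensitivity and specificity defined under predictive validity, can be sketched in a few lines of Python. This is only an illustration: the function names and the ten made-up cases are assumptions, with `test_says_depressed` standing in for the test result and `is_depressed` for the true status (the criterion).

```python
def decision_outcomes(test_positive, truly_positive):
    """Count the four outcomes of a two-category decision."""
    counts = {"valid_pos": 0, "false_pos": 0, "valid_neg": 0, "false_neg": 0}
    for predicted, actual in zip(test_positive, truly_positive):
        if predicted and actual:
            counts["valid_pos"] += 1      # looks depressed, is depressed
        elif predicted and not actual:
            counts["false_pos"] += 1      # looks depressed, is not
        elif not predicted and not actual:
            counts["valid_neg"] += 1      # doesn't look depressed, is not
        else:
            counts["false_neg"] += 1      # doesn't look depressed, but is
    return counts


def sensitivity(counts):
    """Proportion of people WITH the trait that the test picks up."""
    return counts["valid_pos"] / (counts["valid_pos"] + counts["false_neg"])


def specificity(counts):
    """Proportion of people WITHOUT the trait that the test clears."""
    return counts["valid_neg"] / (counts["valid_neg"] + counts["false_pos"])


# Ten hypothetical test takers (made-up data).
test_says_depressed = [True, True, True, False, False,
                       False, False, True, False, False]
is_depressed = [True, True, False, True, False,
                False, False, True, False, False]

outcomes = decision_outcomes(test_says_depressed, is_depressed)
print(outcomes)
print(f"sensitivity = {sensitivity(outcomes):.2f}")
print(f"specificity = {specificity(outcomes):.2f}")
```

With this data the test catches 3 of the 4 depressed people (sensitivity .75) and clears 5 of the 6 non-depressed people (specificity about .83). Moving the cut-off score typically trades one against the other.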
CHAPTER 6 – TEST CONSTRUCTION

Types of items include:
o Problems that need to be solved (attribute = ability)
o Questions about typical behaviour (attribute = personality characteristics)
o Expression of sentiments (attribute = attitudes)
o Statement of preference (attribute = interest)

Rational-Empirical Approach = a way of constructing the test that relies on both reasoning about what is known about the construct you are testing for AND evaluating the data about how the test items behave when administered to a sample.
o Rational-Empirical = Reasoning about the construct + data
  - Need to have a reason for including the items (for example, DASS items are all to do with symptoms of depression, anxiety and stress – no random ones). Therefore good face validity.

Empirical Approach = a way of constructing the test that relies on collecting and evaluating data about how each item from a pool of items discriminates (via correlations) between groups of respondents who are thought to either show or not show the attribute in question (criterion).
o Empirical = Data + correlation with criterion of interest.
  - MMPI was developed using the empirical approach
  - An item was selected for inclusion if it could discriminate between groups that showed the trait and those that didn’t.
o For example, people with Schizophrenia apparently endorsed the “I like riding horses” item???? Go figure!
o Pretty ridiculous reasoning for inclusion, so most people use the rational-empirical approach, which uses a theoretical understanding of the construct (e.g. Schizophrenia) to guide and justify the inclusion or rejection of items, while still making sure the items discriminate between those with the attribute and those without.

STEPS IN TEST CONSTRUCTION

Specify attribute = clearly specify the attribute or characteristic you’re looking at. (Construct/latent trait – denoted by theta, θ.)
[Flowchart: SPECIFY ATTRIBUTES → CHECK LITERATURE FOR EXISTING TEST (END, IF SUITABLE TEST AVAILABLE) → CHOOSE A MEASUREMENT MODEL → WRITE AND EDIT ITEMS → ADMINISTER AND ANALYSE RESPONSES → SELECT ‘BEST’ ITEMS FOR TEST → CHECK RELIABILITY AND VALIDITY → NORM → PREPARE TEST MANUAL → PUBLISH TEST]

Literature search = If there’s already a test that fits your idea and attribute, don’t reinvent the wheel. Check the Mental Measurements Yearbook.

Measurement model = Nominal, ordinal or interval/ratio. Item characteristic curve has:
  a) a discrimination parameter (the slope of the curve at its inflection point, where the line switches direction)
  b) a difficulty parameter (the further along the x-axis the curve sits, the harder the item)
  c) a guessing parameter (how high above the x-axis the line starts indicates how likely it is that someone with zero ability could have guessed and got the item right)
- Classical test theory: mathematically expressed set of ideas about measuring psychological variables with a test (Observed score = true score + error)
- Item Response Theory: specifies the functional relationship between the response to a test item and the strength of the trait it’s assessing. (Likert scale - the stronger the trait, the higher the score.)

Writing and Editing = clear and concise!!! Choose answer method.

Item analysis = Examining how each item behaves in relation to the model. Best to try the items on a sample of the population first. Look at intercorrelations amongst items, corrected item-total correlations and Cronbach’s alpha if item deleted (analysis of best and worst performing items - best item = lowest alpha if deleted, worst item = highest alpha if deleted). Make sure you reduce social desirability bias.

Assessing reliability and validity = Reliability tested over time (test-retest reliability) and with Cronbach’s alpha. Validity – multiple different methods (e.g. multi-trait multi-method matrix or factor analysis).

Norms = Necessary if you want the test to be published. Representative sample needed. Info needed about age, gender etc.

Publication = Publisher becomes responsible. Manual is written.
Put in Mental Measurements Yearbook.

CHAPTER 7 – INTELLIGENCE

Implicit theories = lay people’s theories of intelligence
Explicit theories = constructed by psychologists, based on empirical evidence

Not just an issue of IQ

Term ‘intelligence’ only came into play after the development of Binet’s test in 1905 (before that it was called Mental Ability or Cognitive Ability).

Phrenology = study of the shape and size of the human skull, as an indicator of intelligence (i.e. big head = more brain)

ALFRED BINET (1857-1911)
o Tested children in France for special needs classes.
o Concept of ‘mental age’
o Test involved naming, comprehension, language and memory tasks.
o Assessed ‘global intelligence’ - represented by ability, judgment, memory and abstract thinking.
o ‘Norm group’ looked at a comparison between mental age v chronological age.
o Asked “What does intelligence actually look like?”

CHARLES SPEARMAN (1863-1945)
o Contemporary of Binet
o Assessed ‘global intelligence’
o Looked at correlation and factor analysis
o Psychometric ‘g’
o Two factor model of intelligence – ‘g’ – global intelligence and ‘s’ – specific abilities (mechanical, verbal, spatial and numerical).

STANFORD-BINET by LEWIS TERMAN
o Terman revised the Binet Intelligence test.
o Stanford-Binet Intelligence Scale (SB-5 is now being used).
o Terman brought in the idea of the intelligence quotient (Binet just used mental age, compared to norms).
o IQ = (Mental age / chronological age) x 100
o Mean IQ=100 (no reason for it really), and SD=15 (average IQ ranges from 85-115)
o Terman’s termites – longest running longitudinal study, of about 1,500 children who were considered ‘gifted’ (IQ over 140). More intelligence = greater income, longevity and better relationships.

DAVID WECHSLER (1896-1981)
o Initial interest was in adult psychiatric patients and using intelligence as part of differential diagnosis.
o Believed that intelligence was about learning behaviours to be able to deal effectively with your environment.
o Used deviation IQ – how many standard deviations above or below the mean you score determines your level of intelligence.
o 4 parts to the test:
  - Verbal comprehension
  - Working memory
  - Perceptual reasoning
  - Processing speed
o WAIS, WISC

LOUIS THURSTONE (1887-1955)
o Multifactor theory of intelligence (7 main mental abilities):
  - Verbal comprehension
  - Reasoning
  - Perceptual speed
  - Numerical ability
  - Word fluency
  - Associative memory
  - Spatial visualisation

J.P. GUILFORD (1897-1987)
o First person to ask “What IS intelligence?”
o Rejected the idea of ‘g’
o Focussed on the ‘Structure of Intellect’ (SOI) model. SOI had 3 domains:
  - Operations (types of mental processes)
    Convergent = focussing on one problem and finding its one answer
    Divergent = thinking more creatively and broadly about the problem and how to solve it
  - Contents (types of stimuli to be processed)
  - Products (types of information to be processed and stored)

PHILIP VERNON (1905-1987)
o Incorporated Spearman’s ‘g’ and Thurstone’s primary mental abilities into a hierarchical model of intelligence (similar to Bloom’s taxonomy).
o Believed there was a general ‘base’ of intelligence, ‘g’, with multiple minor factors that contributed to overall intelligence.

RAYMOND CATTELL (1905-1998)
o Two factor theory – fluid and crystallised intelligence
  - Fluid intelligence (Gf) = nonverbal, culture-free basic mental capacity that underpins abstract problem solving (essentially one’s general mental ability)
  - Crystallised intelligence (Gc) = culture-specific/context-specific knowledge and intelligence acquired through life experiences
o 16PF test (16 personality factors)
o Culture-fair tests (where one’s culture does not inherently impact how well they perform on a test – e.g. an Australian history test given to American students is not culture fair).
o Culture-free tests are not really achievable (even the direction of writing is culturally specific, to either left-start or right-start readers).
o All knowledge is inherently culturally specific.

CATTELL, HORN AND CARROLL
o Extension of the Gf-Gc model to include other second order factors:
  - Gf – fluid intelligence
  - Gc – crystallised intelligence
  - Gv – visual processing
  - Ga – auditory processing
  - Gy – general memory and learning
  - Gr – retrieval ability
  - Gs – cognitive speed
  - Gt – decision reaction time
o Three strata:
  - Stratum III – General Mental Ability
  - Stratum II – Broad intellectual abilities (above)
  - Stratum I – Large number of narrow abilities (bottom of the pyramid)
o General tests for Stratum II include:
  - Differential Abilities Scale
  - Kaufman Assessment Battery for Children
  - Woodcock-Johnson Battery
o No test currently assesses all of Stratum II and I.

JEAN PIAGET (1896-1980)
o Looked at the development of intelligence instead of psychometrics
o Proposed that intelligence in children is due to the interaction between their biological endowment and their environment.
o Four main stages of development:
  - Sensorimotor (0-2 years)
  - Preoperational (2-6 years)
  - Concrete Operational (7-12 years)
  - Formal Operational (12+ years)

INFORMATION PROCESSING VIEW
o Intelligence = how material is processed by the brain
o PASS (cognitive processing theory) = Planning (executive functioning), Attention-arousal (attention span), Simultaneous (stimuli and understanding) and Successive (sequential information and problem solving)
o Cognitive Assessment System (CAS) – Naglieri.

HOWARD GARDNER (1943 - )
o Multiple intelligences
o Intelligence based on functional areas of the brain, which find expression in a cultural context.
o 8 Intelligences include:
  - Linguistic
  - Interpersonal
  - Intrapersonal
  - Bodily kinaesthetic
  - Logical-Mathematical
  - Naturalistic
  - Musical
  - Visuo-spatial

ROBERT STERNBERG (1949 - )
o Triarchic intelligence
  - Componential (analytical)
  - Experiential (creative)
  - Contextual (practical)
o Theoretical v practical intelligence - challenging the idea that book smarts > street smarts.

SUMMARY:
o Factor Analysis: Binet, Stanford-Binet (Terman), Spearman, Wechsler, Thurstone, Guilford, Vernon, Cattell, CHC model
o Developmental: Piaget
o Information Processing: PASS (Naglieri), CAS (Naglieri), Gardner, Sternberg

Aptitude is different to achievement
o Aptitude = potential/ability to succeed (fluid)
o Achievement = success/understanding and knowledge from past learning (crystallised)

Group testing – GAMSAT – LSAT etc.

CHAPTER 8 – PERSONALITY

Psychoanalytic Approach
o Unconscious processes – no control over self.
o Basic needs drive behaviour
o Early experiences unconsciously affect us
o Freud’s Id (Desires), Ego (Reality), Superego (Morality)
o TESTING:
  - Rorschach inkblot test (1921): no objective scoring; hasn’t been revised since creation; scoring solely dependent on scorer’s subjective interpretation.
  - Sentence completion: face validity easily manipulated; Washington University Sentence Completion Test (1970); Rotter Incomplete Sentences Blank (1950)
  - Figure Drawing: Draw-A-Person Test – serious lack of validity!!
  - Auditory Projective Technique (BF Skinner) - total crap!

Interpersonal
o Personality comes from your interactions with others – not in isolation
o Child learns to repeat behaviours that are praised, and not to repeat those that aren’t.
o Harry Stack Sullivan – therapist as participant observer – focus on ‘significant others’ - strange personality traits are just extensions of normal
o Interpersonal Circumplex
  - Guttman
  - Personality Assessment Inventory (PAI)

Personological
o Henry Murray – researching ‘normal’ people.
  - Needs = underlying desires
  - Presses = environment affects needs and drives behaviour.
  - Diagnostic council
  - Adjective checklist
o Thematic Apperception Test (Murray and Morgan – 1930s)

Multivariate (Trait) Approach
o Looks at what makes people individual.
o Oldest style (Greek humours)
o Allport first to formalise theory
o Cattell developed 16 personality factors
o Eysenck developed EPQ, which assessed neuroticism, psychoticism and extraversion
o Costa and McCrae developed the Big 5, using factor analysis to determine the number of traits needed.
o Tests:
  - 16PF – Cattell (1956)
  - EPI/EPQ – Eysenck (1975)
  - BIG FIVE – Costa and McCrae (1992)

Empirical Approach
o Looks at what makes people the same and how to predict their behaviour.
o Minnesota Multiphasic Personality Inventory (MMPI)
  - 10 subscales: Hypochondria, Depression, Conversion Hysteria, Psychopathic Deviation, Masculinity/Femininity, Paranoia, Psychasthenia (anxiety), Schizophrenia, Hypomania, Social Introversion
  - Criterion keying – it doesn’t matter what an item says, only what it’s getting at (which groups it separates).
  - Validity - had a Lie scale and an Infrequency scale

Social Cognitive Approach
o Mischel (1968) – argued that psychoanalytic and trait approaches don’t take into consideration the effect that one’s experiences can have on personality.
  - Context, skills and adaptation
  - Can develop new skills depending on the situation (how to eat dinner at home vs with the Queen).
o Kelly (1955) – how you see people as the same or different is a reflection of your own personality.
  - Repertory Grid
o Bandura (1982) – self-efficacy, self-regulation and delayed gratification as indicators of personality.

Positive Psychology
o Carl Rogers – Actual self v ideal self.
o Abraham Maslow – hierarchy of needs and self-actualisation
o Martin Seligman – strengths-based approach – what is right with people, not what is wrong with them. Wellbeing, contentment, satisfaction, hope and optimism.
o Self reports are the main assessments.
CHAPTER 9 – CLINICAL TESTING AND ASSESSMENT

Referral question
Case History Data
Clinical Interview
Mental Status Examination
Psychological Tests (Intelligence - Wechsler, Personality - MMPI/Rorschach, Psychopathology - PAI, Depression and Anxiety – BDI/BAI/DASS21/42)
Psychological Report

CHAPTER 10 – ORGANISATIONAL TESTING

Performance Appraisal
o Quantitative production counts (number of shoes sold)
o Qualitative production measures (number of shoes returned)
o Personnel information (absenteeism)
o Training proficiency (scores on training exams)
o Judgmental data (supervisor ratings, customer ratings)

Supervisor Ratings
o Subjective, so need to be kept free of bias
o Need to be aware of job requirements

Rating Scales
o Behaviourally Anchored Rating Scales (BARS)
  - ‘Never greets the customer’ to ‘Always greets the customer’ (anchored end points)
o Behavioural Observation Scale
  - Same as BARS but each item has a Likert frequency scale (Greets the customer: always, almost always, sometimes, rarely, never)

Theories of performance
o Task performance – core aspects of the job (can you do your job)
o Contextual performance – do you contribute to the company atmosphere (total IT geek might be great with computers, but have no social skills).

Personnel Selection
o Criterion of future employment – prediction from application
o False negatives and false positives common.

Employee characteristics
o KSAO – Knowledge, Skills, Abilities, Other characteristics
o Job analysis
o Equal Opportunity Employment
o Validity Generalisation League Table
  - What are the best predictors of performance? Schmidt and Hunter found these combinations:
    GMA + Work Sample
    GMA + Integrity Test
    GMA + Structured Interview
o Armed Services Vocational Aptitude Battery (ASVAB) 1983
  - Tried to break down GMA into specialised fields for soldiers
  - Turns out GMA alone is the best indicator of success in any field.
o General Mental Ability tests:
  - Wonderlic Personnel Test (1920)

Big 5 and Job Success
o Conscientiousness rates highest and predicts honesty
o Extraversion better for leadership roles and sales
o People high in openness to experience can be trained more easily
o Agreeableness and Neuroticism have more to do with workplace culture.

Integrity tests – can’t use polygraph anymore.

Work attitudes – job satisfaction v organizational commitment v organizational justice

CHAPTER 12 – FORENSIC TESTING AND ASSESSMENT

Fairly new field (established in Australia in 1993 as part of the APS)

Involves testifying in court as an expert witness and providing forensic assessments

Before presenting a forensic assessment in court it needs to be decided that:
o Evidence is necessary
o Evidence is admissible in court
  - Evidence is required by the judge or jury to assist in decision making
  - Person giving evidence must be suitably qualified
  - Must present scientific evidence that is widely accepted in the scientific community

Expert Witness must:
o Use psychologically and psychometrically sound tests and assessment techniques
o Draw conclusions based on a validated theory
o Weigh and qualify testimony based on empirical research and theory
o Know how to defend the scientific basis of the procedure used for the assessment
  - Commercially available tests
  - Reliability coefficient of at least .80
  - Use tests that are relevant to the legal issue involved
  - Administered using standardised instructions
  - Test is applicable to the person’s age, gender, intelligence etc.
  - If possible, use a test that provides a formula for scoring
  - If possible, assess response style of person and score accordingly.
Settings for forensic assessment:
o Criminal
  - Defence, prosecution, sentencing etc.
o Civil
  - Personal injury and compensation
o Family
  - Custody

Main differences between Therapeutic and Forensic Assessments:
o Therapeutic:
  - Client is assumed to be reliable
  - Client employs therapist - working with the therapist
  - Seeking help
  - Diagnosis and treatment of a problem
o Forensic:
  - Client not assumed to be reliable
  - Therapist employed by state or other (not client)
  - Not seeking help – just assessing them objectively
  - Diagnosis, but not treatment
  - Legal presentation of results

Three main Assessment Techniques:
o Type 1 – tests specifically designed for forensic assessment and designed to a legal standard: MacArthur Competence Assessment Tool - Criminal Adjudication
o Type 2 – tests not designed for forensic assessment/legal requirements, but the constructs being measured have legal standards (e.g. psychopathy, malingering)
o Type 3 – tests not developed for forensic assessment, but adopted to answer a legal question (e.g. intelligence tests)

Competency to stand trial:
o Competency Screening Test (1971)
  - Sentence completion regarding legal proceedings
o MacArthur Competence Assessment Tool – Criminal Adjudication (1992)
  - Functional abilities associated with the legal construct of competency to stand trial
  - Competence scales – Understanding, Reasoning, Appreciation
  - Uses a scenario and questions to assess the three competence scales.
Risk Assessment/Prediction of Aggression or Dangerousness
o Clinical judgement
o Actuarial formula (more accurate)
  - Violence Risk Appraisal Guide (1988)
  - Static-99 (1999)
  - Youth Level of Service/Case Management Inventory (2005)
  - Juvenile Sex Offender Assessment Protocol Version II (2003)
  - Psychopathy Checklist - Revised, Second Edition (2003) (gold standard for psychopathy/antisocial personality forensic assessment)

Custody Evaluation
o Cognitive and personality tests used (WAIS-IV, WISC-IV, MMPI-2)
o Ackerman-Schoendorf Scales for Parent Evaluation of Custody (ASPECT) – only test that assesses parental suitability for custody of children.

Malingering
o Structured Interview of Reported Symptoms (SIRS) 1992
  - Focuses on deliberate distortions in self-presentation
  - Highly reliable
o Test of Memory Malingering (TOMM) 1996
  - Aims to detect response bias, intentional faking and exaggeration of symptoms