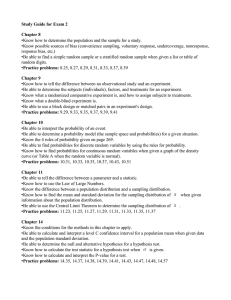

Z Score

$$z = \frac{X - \mu}{\sigma} \quad \text{(population)}, \qquad z = \frac{x_i - \bar{x}}{s} \quad \text{(sample)}$$

Normal distributions can have any mean and any positive standard deviation. The z-score formula lets us work with the standard normal distribution instead.

Z Statistic

$$Z = \frac{\bar{x} - \mu}{\sigma / \sqrt{n}}$$

where $\bar{x}$ is the sample mean, $\mu$ the population mean, $\sigma$ the population standard deviation, and $n$ the number of sample observations.

Lower Tail Test & Upper Tail Test

Region of Acceptance: the range of values that leads the researcher to accept (fail to reject) the null hypothesis is called the region of acceptance. Which tail is tested is decided by the sign in the alternative hypothesis: you conduct a lower-tail test when the sign is <, an upper-tail test when it is >, and a two-tailed test when it is ≠.

Level of Significance: the probability of rejecting a null hypothesis when it is true is called the level of significance (α).

Confidence Interval: the confidence level, 1 − α, is the confidence with which a null hypothesis is accepted or rejected.

A/B testing, at its most basic, is a way to compare two versions of something to figure out which performs better. An A/B test tells you whether there is a statistically significant difference in the performance of the two options. E.g. Trebo (tax included vs. tax excluded).

Chi-Square

The chi-square goodness-of-fit test is a nonparametric test used to determine whether the observed values of a given phenomenon differ significantly from the expected values.

PROBABILITY

Probability gives a measure of how likely it is for something to happen:

$$P = \frac{\text{No. of desired outcomes}}{\text{Total number of possible outcomes}}$$

The sum of the probabilities of all outcomes must equal 1. The probability of an impossible outcome is 0, and the probability of a certain outcome is 1.

Independence: two events are independent if and only if the probability of one event is not affected by the fact that the other event has occurred, i.e.

$$P(A \mid B) = P(A), \qquad P(A \text{ and } B) = P(A) \cdot P(B)$$

Joint probability is the probability of two events occurring simultaneously, written $P(A \,\&\, B)$ or $P(A, B)$.

Marginal probability is the probability of an event irrespective of the outcome of another variable, e.g. the probability of X = A over all outcomes of Y:

$$P(X = A) = \sum_{i} P(X = A, Y = y_i)$$

Conditional probability is the probability of one event occurring in the presence of a second event.

Intuition: these types of probability form the basis of much of predictive modelling, for problems such as classification and regression. For example, the probability of a row of data is the joint probability across each input variable; the probability of a specific value of one input variable is the marginal probability across the values of the other input variables; and the predictive model itself is an estimate of the conditional probability of an output given an input example.

JOINT PROBABILITY

Joint probability is a statistical measure of the likelihood of two events occurring together, at the same point in time:

$$P(A \text{ and } B) = \frac{\text{number of outcomes satisfying } A \text{ and } B}{\text{total number of elementary outcomes}}$$

The probability of one event in the presence of all (or a subset of) outcomes of the other random variable is called the marginal probability or the marginal distribution. It is called "marginal" because if all outcomes and probabilities for the two variables were laid out together in a table (X as columns, Y as rows), the marginal probability of X would be the sum of the probabilities over the Y rows, written in the margin of the table.

General Rules

Addition Rule: $P(A \text{ or } B) = P(A) + P(B) - P(A \text{ and } B)$. If A and B are mutually exclusive, $P(A \text{ and } B) = 0$, so $P(A \text{ or } B) = P(A) + P(B)$.

Multiplication Rule: $P(A \text{ and } B) = P(A \mid B)\,P(B)$. If A and B are independent, then $P(A \mid B) = P(A)$ and $P(A \text{ and } B) = P(A)\,P(B)$.

The conditional probability of A given that B has occurred:

$$P(A \mid B) = \frac{P(A \text{ and } B)}{P(B)}$$
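The chi-square goodness-of-fit statistic compares observed counts O with expected counts E as χ² = Σ (O − E)² / E, with k − 1 degrees of freedom for k categories. The sketch below is plain Python; the die-roll counts are hypothetical, and the α = 0.05 critical value for 5 degrees of freedom (11.07) is hard-coded from a standard chi-square table, so treat it as an illustration rather than a full test implementation.

```python
def chi_square_statistic(observed, expected):
    """Goodness-of-fit statistic: sum over categories of (O - E)^2 / E."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    if any(e <= 0 for e in expected):
        raise ValueError("expected counts must be positive")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical data: 60 rolls of a die we suspect may be unfair.
observed = [8, 9, 12, 11, 6, 14]
expected = [10] * 6  # a fair die gives 60 / 6 = 10 rolls per face

stat = chi_square_statistic(observed, expected)
df = len(observed) - 1        # k - 1 = 5 degrees of freedom
CRITICAL_0_05_DF5 = 11.07     # chi-square table value for alpha = 0.05, df = 5

print(f"chi-square = {stat:.2f}, df = {df}")
if stat > CRITICAL_0_05_DF5:
    print("Reject H0: observed counts differ significantly from expected")
else:
    print("Do not reject H0: no significant difference from expected")
```

If SciPy is available, `scipy.stats.chisquare(observed, expected)` returns the same statistic together with a p-value, which avoids the table lookup.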
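The joint, marginal and conditional probability rules in these notes can be checked on a small joint probability table. This sketch stores a hypothetical joint distribution of two binary variables X and Y as exact fractions (the cell values are made up for illustration), sums over the other variable to get marginals, computes P(A | B) = P(A and B) / P(B), applies the addition rule, and tests independence via P(A and B) = P(A) · P(B). The function names are illustrative only.

```python
from fractions import Fraction

# Hypothetical joint distribution P(X = x, Y = y); the four cells sum to 1.
joint = {
    ("A", "y1"): Fraction(1, 10),
    ("A", "y2"): Fraction(3, 10),
    ("not A", "y1"): Fraction(2, 10),
    ("not A", "y2"): Fraction(4, 10),
}


def marginal_x(table, x):
    """P(X = x): sum the joint probabilities over every outcome of Y."""
    return sum(p for (xi, _), p in table.items() if xi == x)


def marginal_y(table, y):
    """P(Y = y): sum the joint probabilities over every outcome of X."""
    return sum(p for (_, yi), p in table.items() if yi == y)


def conditional_x_given_y(table, x, y):
    """P(X = x | Y = y) = P(X = x and Y = y) / P(Y = y)."""
    return table[(x, y)] / marginal_y(table, y)


def p_x_or_y(table, x, y):
    """Addition rule: P(x or y) = P(x) + P(y) - P(x and y)."""
    return marginal_x(table, x) + marginal_y(table, y) - table[(x, y)]


def is_independent(table):
    """X and Y are independent iff P(x and y) = P(x) * P(y) for every cell."""
    return all(
        p == marginal_x(table, x) * marginal_y(table, y)
        for (x, y), p in table.items()
    )


print(marginal_x(joint, "A"))                   # 2/5
print(conditional_x_given_y(joint, "A", "y1"))  # 1/3
print(p_x_or_y(joint, "A", "y1"))               # 3/5
print(is_independent(joint))                    # False
```

Using `Fraction` keeps the equality check in `is_independent` exact; with floats you would compare within a small tolerance instead.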
The conditional probability of B given that A has occurred:

$$P(B \mid A) = \frac{P(A \text{ and } B)}{P(A)}$$

If $B_1, B_2, \ldots, B_k$ are mutually exclusive and collectively exhaustive events, the marginal probability of A can be written either with conditional probabilities (the law of total probability) or as a sum of joint probabilities:

$$P(A) = P(A \mid B_1)P(B_1) + P(A \mid B_2)P(B_2) + \cdots + P(A \mid B_k)P(B_k)$$

$$P(A) = P(A \text{ and } B_1) + P(A \text{ and } B_2) + \cdots + P(A \text{ and } B_k)$$

Normal Distribution

Sampling Distribution

A sampling distribution is the distribution of all possible values of a sample statistic for a given sample size selected from a population.

Sample Mean Sampling Distribution

This is the distribution of the sample means (it appears normal). E.g. for GPA: the distribution of mean GPAs across many samples of size 50 each. Different samples of the same size from the same population will yield different sample means.

If a population is normal with mean μ and standard deviation σ, the sampling distribution of $\bar{X}$ is also normally distributed, with

$$\mu_{\bar{X}} = \mu, \qquad \sigma_{\bar{X}} = \frac{\sigma}{\sqrt{n}}$$

Standard Error of Mean

$\sigma_{\bar{X}} = \sigma / \sqrt{n}$ is the standard error of the mean. It measures the variability of the mean from sample to sample (when sampling with replacement or from an infinite population), and it decreases as the sample size increases.

Z-value for the sampling distribution of $\bar{X}$ (the sample mean):

$$Z = \frac{\bar{X} - \mu_{\bar{X}}}{\sigma_{\bar{X}}} = \frac{\bar{X} - \mu}{\sigma / \sqrt{n}}$$

where μ = population mean, σ = population standard deviation, n = sample size.

The Mean & Standard Deviation (population of size N):

$$\mu = \frac{\sum X_i}{N}, \qquad \sigma = \sqrt{\frac{\sum (X_i - \mu)^2}{N}}$$

Standardized Z-score

The z-score of an observation is the number of standard deviations it falls above or below the mean:

$$Z = \frac{\text{observation} - \text{mean}}{\text{standard deviation}}$$

Raw scores above the mean have positive standard scores, while those below the mean have negative standard scores. The z-score of the mean itself is 0, and an observation with |Z| > 2 is considered unusual. Z-scores are defined for distributions of any shape.

Percentiles

Z-scores can be used to calculate percentiles when the distribution is normal. Graphically, the percentile of an observation is the area under the probability distribution curve to the left of that observation.

Z-Table - Standard Normal Table

Entries in the table give the area under the curve between the mean and Z standard deviations above/below the mean.

Sampling with Replacement

Two sample values are independent, i.e. what we get on the first draw doesn't affect what we get on the second. In particular, the covariance between the two is zero. In sampling without replacement, two sample values aren't independent.

Central Limit Theorem

As the sample size gets large enough, the sampling distribution of the sample mean becomes approximately normal regardless of the shape of the population. This applies even if the population is not normal.

Sample size: for most distributions, n > 30 gives a sampling distribution that is nearly normal; for fairly symmetric distributions, n > 15 is enough; for a normal population, the sampling distribution of the mean is always normally distributed.

Population Proportions

The sample proportion p provides an estimate of π, the proportion of the population having some characteristic:

$$p = \frac{X}{n} = \frac{\text{number of items in the sample with the characteristic of interest}}{\text{sample size}}$$

Sampling Distribution of Sample Proportion

p is approximately normally distributed when n is large, with

$$\mu_p = \pi, \qquad \sigma_p = \sqrt{\frac{\pi(1 - \pi)}{n}}$$

where π = population proportion.

Z value for proportions:

$$Z = \frac{p - \pi}{\sigma_p} = \frac{p - \pi}{\sqrt{\pi(1 - \pi)/n}}$$

Simple Linear Regression

Doubt Clearing – Supriya (05/03)

LIMITATIONS of Association

Correlation only captures linear association. For non-linear relationships, use rank correlation (which captures ranks instead of values) or mutual information (a statistical measure often represented by a Venn diagram).

Association does not guarantee causation; causation guarantees association.

As a rule of thumb, a correlation coefficient below 0.3 is not considered a good precedent for regression (if better coefficients are available).

T-Statistic

Use the Z score for large data sets and the T-score for small data sets.

Example: sample mean x̄ = 280, population mean μ0 = 300, sample standard deviation s = 50, sample size n = 15:

$$t = \frac{\bar{x} - \mu_0}{s / \sqrt{n}} = \frac{280 - 300}{50 / \sqrt{15}} = \frac{-20}{12.909944} = -1.549$$

Regression is a statistical method used to determine the relationship between variables in the form of an equation.
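The standard error, z and t formulas in these notes translate directly into code. This plain-Python sketch (the function names are illustrative, not from any library) reproduces the worked t-statistic example with x̄ = 280, μ0 = 300, s = 50 and n = 15.

```python
import math


def standard_error(sigma, n):
    """Standard error of the mean: sigma / sqrt(n)."""
    return sigma / math.sqrt(n)


def z_for_sample_mean(x_bar, mu, sigma, n):
    """Z = (x_bar - mu) / (sigma / sqrt(n)), used when sigma is known."""
    return (x_bar - mu) / standard_error(sigma, n)


def t_for_sample_mean(x_bar, mu0, s, n):
    """t = (x_bar - mu0) / (s / sqrt(n)), used when only the sample SD s is known."""
    return (x_bar - mu0) / (s / math.sqrt(n))


def z_for_proportion(p, pi, n):
    """Z = (p - pi) / sqrt(pi * (1 - pi) / n) for a sample proportion p."""
    return (p - pi) / math.sqrt(pi * (1 - pi) / n)


t = t_for_sample_mean(x_bar=280, mu0=300, s=50, n=15)
print(round(t, 3))  # -1.549
```

The z and t functions differ only in which standard deviation goes into the denominator, which mirrors the "σ replaced by s" rule for the two tests.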
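A quick simulation illustrates the Central Limit Theorem statements in these notes. This sketch assumes a skewed exponential population with mean 1 and standard deviation 1 (an arbitrary choice for illustration), draws many samples of size n, and checks that the sample means centre on μ with a spread close to σ/√n. The exact numbers printed depend on the random seed.

```python
import math
import random
import statistics


def simulate_sample_means(sample_size, num_samples, seed=0):
    """Return the means of `num_samples` samples of size `sample_size`,
    each drawn from an exponential population with mean 1 and SD 1."""
    rng = random.Random(seed)
    means = []
    for _ in range(num_samples):
        sample = [rng.expovariate(1.0) for _ in range(sample_size)]
        means.append(statistics.fmean(sample))
    return means


n = 40
means = simulate_sample_means(sample_size=n, num_samples=2000)
se = 1 / math.sqrt(n)  # sigma / sqrt(n) with sigma = 1

print(f"mean of sample means: {statistics.fmean(means):.3f} (population mean 1.0)")
print(f"SD of sample means:   {statistics.stdev(means):.3f} (sigma/sqrt(n) = {se:.3f})")

# Under the normal approximation, roughly 95% of sample means fall within 2 SE of mu.
share = sum(abs(m - 1.0) <= 2 * se for m in means) / len(means)
print(f"share within 2 SE:    {share:.3f}")
```

Rerunning with a larger `sample_size` shows the standard error shrinking, as the notes state.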
Dependent variable: the variable that you predict is called the 'dependent' or 'response' variable. It is usually denoted by Y.

Independent variable: the variable used to predict the dependent variable is called the 'independent' or 'explanatory' variable. It is usually denoted by X.

Regression helps examine a cause-effect relationship between two or more variables (the fitted equation by itself shows association, not proof of causation).

Regression analysis is "finding the best-fitting straight line for a set of data". The regression line is the linear equation with the least error between the line and the actual data (in ordinary least squares, the smallest sum of squared vertical distances), i.e. the line that is least far away from the data and is therefore most representative. The line shows the relationship between the two variables of interest; once we have it, we can make predictions about outcomes when we only have data for one of the variables.

If we go further and assess the data over time to identify trends and predict what will happen in the future, we use a time series regression analysis.

Residual plots: the residual plot for the salary-experience pair is distributed randomly across the horizontal axis. The gold-silver price pair, on the other hand, shows a pattern that closely resembles an inverted U. Such a pattern suggests a nonlinear relationship between the variables.

Regression Model

R-Square is a metric used to evaluate the simple linear regression model developed, also referred to as model fit. The R-Square value ranges from 0 to 1.

p-value - Statistical Significance

The p-value approach to hypothesis testing uses the calculated probability to determine whether there is evidence to reject the null hypothesis. A smaller p-value means stronger evidence in favor of the alternative hypothesis.
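The "best-fitting straight line" of ordinary least squares has closed-form coefficients: b1 = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and b0 = ȳ − b1·x̄. This minimal plain-Python sketch fits them and returns the residuals used for residual plots; the experience/salary numbers are hypothetical and chosen only to give round coefficients.

```python
def fit_simple_linear_regression(x, y):
    """Ordinary least squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2 points)")
    x_mean = sum(x) / len(x)
    y_mean = sum(y) / len(y)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    b1 = sxy / sxx              # slope
    b0 = y_mean - b1 * x_mean   # intercept: the line passes through (x_mean, y_mean)
    return b0, b1


def residuals(x, y, b0, b1):
    """Observed minus fitted values; plot these against x to inspect the pattern."""
    return [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]


# Hypothetical data: years of experience vs salary (in thousands).
experience = [1, 2, 3, 4, 5]
salary = [30, 35, 41, 44, 50]

b0, b1 = fit_simple_linear_regression(experience, salary)
print(f"salary = {b0:.1f} + {b1:.1f} * experience")  # salary = 25.3 + 4.9 * experience
print([round(r, 1) for r in residuals(experience, salary, b0, b1)])
```

On Python 3.10 or later, `statistics.linear_regression(experience, salary)` returns the same slope and intercept.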
The significance level is stated in advance to determine how small the p-value must be in order to reject the null hypothesis. For example, a p-value of 0.0254 (2.54%) means that, if the null hypothesis were true, there would be a 2.54% chance of obtaining results at least as extreme as those observed.

An R-square value of 1 indicates that the regression model completely explains the variation in the data, while a value near zero indicates a weak predictive relationship between the dependent and independent variables. The higher the R-square value, the better the model is considered to be.

R-squared will always either increase or remain the same when you add more variables: the model already has the predictive power of the previous variables, so a new variable, no matter how insignificant it might be, cannot decrease R-squared.

Interpretation of Regression Model
1. R-Squared value: the closer to 1, the stronger the predictive relation.
2. Regression coefficient p-value: should be less than α (the Type I error rate) for the chosen confidence level.
3. Residual plot: a random pattern implies the best regression analysis.

The salary-experience pair gives a better regression analysis than the gold-silver price pair. Since the p-values of both cause variables are less than 0.05, we can assume that both cause variables are significant; the first analysis is nonetheless considerably weaker than the second one because of their respective R-squared values.

The following are important considerations to account for before doing a regression analysis:

Linearity: there should be an overall linear pattern between the dependent variable and all the independent variables.

No autocorrelation: the error term associated with a particular value of each independent variable is assumed not to depend on the residual of another value. In other words, a residual term is not correlated with other residual terms.
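R-squared compares the residual sum of squares with the total sum of squares: R² = 1 − SS_res / SS_tot. The sketch below is plain Python; the observed values and model predictions are hypothetical. It also shows the two endpoints of the 0 to 1 range: a perfect fit gives 1, and always predicting the mean gives 0.

```python
def r_squared(y, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y) != len(y_pred) or not y:
        raise ValueError("y and y_pred must be non-empty and equal in length")
    y_mean = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, y_pred))  # unexplained variation
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)               # total variation
    if ss_tot == 0:
        raise ValueError("y has no variation, so R-squared is undefined")
    return 1 - ss_res / ss_tot


observed = [30, 35, 41, 44, 50]
predicted = [30.2, 35.1, 40.0, 44.9, 49.8]  # hypothetical fitted values

print(round(r_squared(observed, predicted), 4))  # close to 1: strong fit
print(r_squared(observed, observed))             # perfect fit -> 1.0
print(r_squared(observed, [40.0] * 5))           # predicting the mean -> 0.0
```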
Normality: the error term is assumed to be a normally distributed random variable for all values of the observations.

Constant variance: the residuals of data points are assumed to have equal variance for all values of the observations.

No multicollinearity: the independent variables in the regression model should not be highly correlated with one another.

REGRESSION RESULTS INTERPRETATION

Multicollinearity: we need to assess multicollinearity between the independent variables. If multicollinearity is high, significance tests on the regression coefficients can be misleading; if it is low, the same tests can be informative.

R-Square: evaluates the simple linear regression model developed. It ranges from 0 to 1; a value of 1 indicates that the model completely explains the variation in the data, and the higher the value, the better the model is considered to be.

P-Value: estimates the significance of any independent variable in explaining the dependent variable.

BASICS

Normal distribution, also known as the Gaussian distribution, is a probability distribution that is symmetric about the mean. The standard normal distribution is a special case of the normal distribution: it is the distribution of a normal random variable with a mean of zero and a standard deviation of one.

Every normal random variable X can be transformed into a z-score via the following equation:

$$z = \frac{X - \mu}{\sigma}$$

A z-score (aka a standard score) indicates how many standard deviations an element is from the mean.

| Z score | Meaning |
|---|---|
| Z = 0 | Element equal to the mean |
| Z < 0 | Element below the mean |
| Z > 0 | Element above the mean |
| Z = 1 | Element 1 SD above the mean |

For a normal distribution, 68% of elements have a z-score between -1 and 1, 95% between -2 and 2, and 99.7% between -3 and 3.

The probability of committing a Type I error is called α, the level of significance.
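Python's standard library (3.8+) provides `statistics.NormalDist`, which makes the z-score, percentile and 68/95/99.7 statements easy to check. In this sketch the exam-score mean of 70 and SD of 10 are hypothetical, and the helper names are illustrative.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def z_score(x, mu, sigma):
    """Number of standard deviations x lies above (+) or below (-) the mean."""
    return (x - mu) / sigma


def share_within(k):
    """Fraction of a normal population lying within k SDs of the mean."""
    return STANDARD_NORMAL.cdf(k) - STANDARD_NORMAL.cdf(-k)


def percentile(x, mu, sigma):
    """Percentile of x: area under the normal curve to the left of x, in percent."""
    return 100 * STANDARD_NORMAL.cdf(z_score(x, mu, sigma))


for k in (1, 2, 3):
    print(f"within +/-{k} SD: {share_within(k):.4f}")  # 0.6827, 0.9545, 0.9973

print(z_score(85, mu=70, sigma=10))               # 1.5
print(round(percentile(85, mu=70, sigma=10), 1))  # 93.3
```

`NormalDist(70, 10).cdf(85)` gives the same area directly, without standardizing first.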
Based on your confidence level, you can check whether the p-value is within the expected bounds. At a 95% confidence level, the p-value should be less than 0.05 for you to reject the null hypothesis and establish the significance of that variable.

Residual Plots

Among the three plots below, a random pattern implies the best regression analysis.

HYPOTHESIS TESTING

Population Standard Deviation Known

If the population standard deviation, σ, is known, then the sample mean has a normal sampling distribution (exactly for a normal population, approximately for a large sample), and you use the z-score formula for sample means. The test statistic is the standard formula:

$$z = \frac{\bar{x} - \mu_0}{\sigma / \sqrt{n}}$$

The critical value is obtained from the normal table, or from the bottom line of the t-table.

Population Standard Deviation Unknown

If σ is unknown, the standardized sample mean follows a Student's t distribution, and you use the t-score formula for sample means. The test statistic is very similar to the z-score one, except that σ is replaced by s and z by t:

$$t = \frac{\bar{x} - \mu_0}{s / \sqrt{n}}$$

The critical value is obtained from the t-table, with n - 1 degrees of freedom.

If you're performing a t-test where you found the statistics on the calculator (as opposed to being given them in the problem), use the VARS key to pull up the statistics in the calculation of the test statistic. This saves data entry and avoids round-off errors.

HYPOTHESIS PROCESS
1. State the null hypothesis H0 and the alternative hypothesis Ha.
2. Decide on the significance level, α.
3. Compute the value of the test statistic.
4. Critical value approach: determine the critical value. P-value approach: determine the p-value.
5. Critical value approach: if the value of the test statistic falls in the rejection region, reject H0; otherwise, do not reject H0. P-value approach: if p ≤ α, reject H0; otherwise, do not reject H0.
6. Interpret the result of the hypothesis test.
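The hypothesis process can be followed step by step in code for the case where σ is known. This sketch uses `statistics.NormalDist` for both the critical value approach and the p-value approach; the numbers (x̄ = 52, μ0 = 50, σ = 8, n = 64) are hypothetical, and `one_sample_z_test` is an illustrative name, not a library function. The standard library has no Student's t distribution, so the σ-unknown case is not covered here (SciPy's `scipy.stats.t` is one option for t critical values and p-values).

```python
import math
from statistics import NormalDist


def one_sample_z_test(x_bar, mu0, sigma, n, alpha=0.05, tail="two"):
    """Test H0: mu = mu0 with known sigma.

    tail: "lower" (Ha: mu < mu0), "upper" (Ha: mu > mu0) or "two" (Ha: mu != mu0).
    Returns the z statistic, p-value, critical value and both decisions.
    """
    z = (x_bar - mu0) / (sigma / math.sqrt(n))  # step 3: test statistic
    std_normal = NormalDist()
    if tail == "lower":
        critical = std_normal.inv_cdf(alpha)          # rejection region: z <= critical
        reject_by_critical = z <= critical
        p_value = std_normal.cdf(z)
    elif tail == "upper":
        critical = std_normal.inv_cdf(1 - alpha)      # rejection region: z >= critical
        reject_by_critical = z >= critical
        p_value = 1 - std_normal.cdf(z)
    elif tail == "two":
        critical = std_normal.inv_cdf(1 - alpha / 2)  # rejection region: |z| >= critical
        reject_by_critical = abs(z) >= critical
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
    else:
        raise ValueError('tail must be "lower", "upper" or "two"')
    return {
        "z": z,
        "p_value": p_value,
        "critical": critical,
        "reject_by_critical": reject_by_critical,  # step 5, critical value approach
        "reject_by_p": p_value <= alpha,           # step 5, p-value approach
    }


# Steps 1-2: H0: mu = 50 vs Ha: mu > 50, alpha = 0.05 (hypothetical example).
result = one_sample_z_test(x_bar=52, mu0=50, sigma=8, n=64, alpha=0.05, tail="upper")
print(result)
# Step 6: z = 2.0 exceeds the critical value of about 1.645 and p is about 0.023,
# so both approaches reject H0.
```

Returning both decisions makes it easy to see that the critical value approach and the p-value approach agree.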