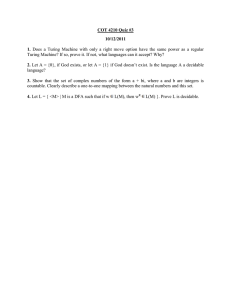

Tony Xi
PHILH401
Functionalism vs. Block
Word count: 2065

Functionalism is one of the most dominant theories of mind, and arguably the most prevalent in the field. It is the foundation for recent discussions of the nature of consciousness and for the development of the computational theory of mind. Functionalism, as Ravenscroft describes it, is the idea that “to be in (or have) mental state M is to have an internal state which does the 'M-job'.” (Ravenscroft, 2005, 50-51) Functionalists hold that a mental state can be characterized by a set of sensory inputs, a set of outputs, and a black box that performs a certain function mapping the one to the other. This relational description matches that of a simple Turing machine or finite automaton, and the Turing machine is the standard abstract model of computation. Functionalism is therefore consistent with the computational theory of mind and with the ideas of thinkers like Alan Turing. Functionalism also does a remarkable job of resolving the multiple realization problem, which led many to abandon the type-identity theory. For something that is multiply realizable, like pain or a certain belief, the material composition of the pain or the belief is irrelevant to functionalism as long as it performs the function of pain or of that belief. Functionalism thus resolves the multiple realization problem by focusing on the function performed rather than on the composition of whatever performs it. Like other prominent theories of mind, functionalism has been the subject of ongoing debate. Philosophers such as Ned Block have proposed arguments against the functionalist view of mind first developed by Lewis, Armstrong, and Putnam during the 1960s. In this paper, I shall respond to one of the objections proposed by Ned Block and demonstrate that this objection is, in fact, not as destructive to functionalism as many might think.
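The input/internal-state/output picture, and the way functionalism handles multiple realization, can be illustrated with a toy finite-state machine. The following Python sketch is purely illustrative: the states, inputs, and outputs (`"calm"`, `"pain"`, `"damage"`, `"wince"`) and the names `TableRealizer`, `RuleRealizer`, `run`, and `same_role` are hypothetical stand-ins, not taken from Ravenscroft or any other source. The two realizers have different internal mechanisms, yet they map every input history to the same outputs, so on a functionalist view they occupy the same functional state.

```python
from itertools import product


class TableRealizer:
    """Realizes the 'pain role' with an explicit transition table."""

    TABLE = {
        ("calm", "damage"): ("pain", "wince"),
        ("calm", "nothing"): ("calm", "rest"),
        ("pain", "damage"): ("pain", "wince"),
        ("pain", "nothing"): ("calm", "rest"),
    }

    def step(self, state, stimulus):
        # Look up (next internal state, output) for this (state, input) pair.
        return self.TABLE[(state, stimulus)]


class RuleRealizer:
    """Realizes the same role with conditional logic instead of a table."""

    def step(self, state, stimulus):
        # Different internal mechanism, same state/input/output mapping.
        if stimulus == "damage":
            return "pain", "wince"
        return "calm", "rest"


def run(realizer, stimuli, state="calm"):
    """Feed a sequence of stimuli to a realizer and collect its outputs."""
    outputs = []
    for stimulus in stimuli:
        state, output = realizer.step(state, stimulus)
        outputs.append(output)
    return outputs


def same_role(a, b, inputs=("damage", "nothing"), max_len=4):
    """True if a and b give identical outputs on every input sequence up to max_len."""
    for n in range(1, max_len + 1):
        for seq in product(inputs, repeat=n):
            if run(a, seq) != run(b, seq):
                return False
    return True


print(run(TableRealizer(), ["nothing", "damage", "damage", "nothing"]))
# ['rest', 'wince', 'wince', 'rest']
print(same_role(TableRealizer(), RuleRealizer()))  # True
```

Only the input/output profile is compared; what is inside each "black box" plays no role in the check, which is the point multiple realization turns on.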
The Blockhead objection to functionalism, named after Ned Block, was first proposed in his 1981 paper “Psychologism and Behaviorism.” Block intended to show that “no behavioral disposition is sufficient for intelligence” (Block, 1981, 16), but his argument extends easily to an argument against functionalism. In the paper, Block described an unintelligent machine that can pass the neo-Turing Test. Block defined the neo-Turing Test as a test of whether a machine has what he calls the neo-Turing Test conception of intelligence: “Intelligence (or, more accurately, conversational intelligence) is the capacity to produce a sensible sequence of verbal responses to a sequence of verbal stimuli, whatever they may be.” (Block, 1981, 18) This allegedly unintelligent machine takes sensible strings as inputs and produces sensible responses, imitating a human in a conversation. Since the alphabet of a language is finite and a test conversation lasts only a finite time, the number of strings that can be typed during the test is also finite, so every sensible conversation of that length can in principle be stored in advance. The machine stores these sensible strings in a tree data structure. When a string is received as input, the machine conducts a search starting at the node containing this input string, and sensible responses are found among the children of that node. A perfectly implemented machine, Block argued, could then perform just as well in a neo-Turing Test as a human could. (Block, 1981, 19-22) Although the neo-Turing Test is restricted to conversational intelligence, the existence of such a machine undermines the validity of the Turing Test. The Turing Test was originally proposed by Alan Turing as the Imitation Game. In this game, an interrogator has to identify two hidden subjects just by asking them questions.
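The tree-search machine Block describes can be sketched as a lookup tree in which the path from the root encodes the whole conversation so far. This is a minimal sketch under stated assumptions: `Node`, `build_tree`, and `Blockhead` are hypothetical names, the tree is built from a couple of scripted transcripts rather than from every sensible string of bounded length, and each node here holds a single stored reply. The machine never parses or interprets its input; it only follows edges.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Node:
    """A point in a conversation: the stored reply, plus one child per possible next input."""
    reply: str = ""
    children: Dict[str, "Node"] = field(default_factory=dict)


def build_tree(transcripts: List[List[Tuple[str, str]]]) -> Node:
    """Store each scripted exchange along the path for the conversation so far.

    Assumes the transcripts are consistent: two transcripts that share a
    history also share the reply at that point.
    """
    root = Node()
    for transcript in transcripts:
        node = root
        for question, answer in transcript:
            node = node.children.setdefault(question, Node())
            node.reply = answer
    return root


class Blockhead:
    """Replies purely by walking the tree: no parsing, no inference."""

    def __init__(self, root: Node):
        self.node = root  # current position = the entire conversation history

    def respond(self, question: str) -> Optional[str]:
        child = self.node.children.get(question)
        if child is None:
            return None  # not stored; Block's idealized machine would never reach this
        self.node = child
        return child.reply


root = build_tree([
    [("Hello", "Hi there."), ("How are you?", "Fine, thanks.")],
    [("Hello", "Hi there."), ("What is 2+2?", "4.")],
])
bot = Blockhead(root)
print(bot.respond("Hello"))         # Hi there.
print(bot.respond("What is 2+2?"))  # 4.
```

Because the current node stands for the full history, the same question can receive different stored replies in different conversations, which is how the idealized machine stays "sensible" without any understanding.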
Turing extended the setting and turned the game into a test of the question “can a machine think?” (Turing, 1950, 54-55) It follows that if an unintelligent machine can pass some form of the test, then the test is not valid. Block concludes, with his tree-search machine objection, that “whether behavior is intelligent behavior is in part a matter of how it is produced. Even if a system has the actual and potential behavior characteristic of an intelligent being, if its internal processes are like those of the machine described, it is not intelligent.” (Block, 1981, 21) With this objection, Block rejects the behaviorist view of intelligence by demonstrating that behavior characteristic of intelligence does not imply intelligence. This is a problem for functionalism as well. The “Blockhead” machine possesses a part that does the “conversation” job, from which one might infer intelligence, but it does not possess any intentionality about the conversation or, in other words, any mental state concerning it. Ravenscroft used a different “Blockhead” machine when presenting this objection. A robot that retrieves the action “Look for a fire escape” when given the sensory input of a burning building performs the same function as a human being, but it is generally considered to have no mental states. (Ravenscroft, 2005, 60) The conceivability of this “Blockhead” machine damages both the validity of the Turing Test and the functionalist theory of mind. There have been responses rejecting the “Blockhead” objection by defending one of these theories, and I find the following two defenses particularly compelling. In defending the Turing Test against Block’s objection, Jack Copeland argued that the machine does not show the Turing Test to be wrong in any way that Turing had not already anticipated in his own time.
In his paper “The Turing Test,” Copeland gave his own interpretation of the principles underlying the Turing Test, also known as the imitation game, and of the conclusions that can be drawn from it. He called his interpretation of Turing’s view the Turing Principle. (Copeland, 2000, 12) On Copeland’s reading, Turing intended the test only to be correct as a matter of fact, not as a logically sufficient condition for intelligence. Therefore, the mere possibility that something unintelligent could pass the test does not refute the test as a whole. Responding to the point that passing the Turing Test is not logically sufficient for intelligence, Copeland wrote that “This suggests that a more fundamental definition must involve something relating to the manner in which the machine arrives at its responses.” (Copeland, 2000, 12) Copeland’s analysis suggests that, for Turing, unlocking machine intelligence requires further sophisticated empirical research. In support of the computational theory of mind, Copeland held that a sophisticated machine that computationally emulates the brain can be considered to be thinking. He called this “faithful emulation.” (Jakobsen, 2007, 20) As a direct objection to Block’s argument, Copeland wrote: “In modern terms, a machine that happens to pass one Turing test, or even a series of them, might be shown by subsequent tests to be a relatively poor player of the imitation game.” (Copeland, 2000, 12) Passing one Turing Test does not make a machine a “faithful emulation” of the human brain. Ravenscroft defended functionalism against the “Blockhead” robot he adapted from Block’s original argument. He alleged that Block had approached functionalism in the wrong way. To claim that “Blockhead believes that it is in a burning building if it has an internal state caused by seeing flames and causing fire-escape-seeking behavior” is to “describe functionalism far too crudely”.
(Ravenscroft, 2005, 61) Ravenscroft argued that the internal state Blockhead is in does not cause other beliefs, such as the belief that the fire department should be called or that the situation is life-threatening. He goes on to claim that this state “does not occupy the functional role characteristic of believing that the building is burning, and so functionalism does not regard Blockhead as believing that the building is burning.” (Ravenscroft, 2005, 61) This defense, however, seems to overlook recent developments in neural networks. A machine trained as a neural network on a large number of samples can easily build on its existing knowledge and make associations, which makes Ravenscroft’s defense less compelling. I shall instead attempt to defend functionalism on a more technical account. Block claimed that his unintelligent machine could pass the Turing Test. I think that this machine, no matter how perfect it is, is unable to produce an answer to a certain set of questions. At its core, the machine is a complex Turing machine, so it can only perform computations on the syntax of the input provided. Without understanding the semantics of the conversation, the “Blockhead” machine will never be able to produce an answer to a problem that is undecidable for Turing machines, no matter how sophisticated it is. An undecidable problem is a decision problem for which no Turing machine halts on every input with the correct yes-or-no answer. It is widely accepted, as a thesis rather than a proven theorem, that any machine that performs effective computation can be simulated by a Turing machine. According to this Church-Turing thesis, no effective computational method is more powerful than a Turing machine: any form of computational algorithm or machine is at most equivalent to a Turing machine. (Rich, 2009, 413) The Entscheidungsproblem, posed in 1928 by David Hilbert and Wilhelm Ackermann, is such an undecidable problem.
The problem asks whether there is an algorithm or machine that, given a set of premises and a statement, determines whether the statement follows from the premises. (Rich, 2009, 413) In 1936, Alan Turing proved that no Turing machine can solve this problem in his paper “On Computable Numbers, with an Application to the Entscheidungsproblem.” In this paper, Turing introduced what is now called the Halting Problem and showed that the Entscheidungsproblem is undecidable if the Halting Problem is undecidable. Turing’s argument can be summarized as follows. Assume there is a machine H that solves the Halting Problem. H is given two inputs: the description of a machine p and an input i for that machine. H returns True if p halts on input i and False otherwise. Now construct a machine H+ that takes a machine description p, runs H on p with p itself as the input, and loops forever if H returns True and halts if H returns False. A contradiction arises when H+ is fed its own description: does H+ halt on input H+? If H+ halts on H+, then H returns True, so H+ loops forever and does not halt; if H+ does not halt on H+, then H returns False, so H+ halts. In short, H+ halts on H+ if and only if it does not halt. This is a contradiction, so no machine H that solves the Halting Problem exists. (Turing, 1960, 230-264) Since the “Blockhead” machine is a Turing machine no matter how perfect or sophisticated it is, and no Turing machine can solve the Halting Problem, the “Blockhead” machine cannot solve the Halting Problem. Many other problems are shown to be undecidable by a mapping reduction from the Halting Problem to them, which shows that such a problem P is at least as hard as the Halting Problem. An example of an undecidable problem is: given the descriptions of two Turing machines, are they equivalent?
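Turing’s diagonal construction can be made concrete in Python. This is a sketch under stated assumptions, not Turing’s formalism: “programs” are modeled as Python generator functions that yield once per computation step, so that a step budget (`fuel`) can stand in for watching a machine run, and `halts_within`, `make_h_plus`, and `refute` are hypothetical names. Whatever candidate decider `h` is supplied, the `h_plus` built from it does the opposite of what `h` predicts about `h_plus` run on itself. A bounded run only shows that `h_plus` did not halt within the budget; that it never halts in that branch follows from its `while True` loop.

```python
from typing import Callable, Generator, Tuple

# A "program" is a generator function: calling it with an argument gives a
# generator that yields once per computation step and stops when it halts.
Program = Callable[[object], Generator[None, None, None]]
Decider = Callable[[Program, object], bool]


def halts_within(program: Program, arg: object, fuel: int = 10_000) -> bool:
    """Run program(arg) for at most `fuel` steps; True if it halted within the budget."""
    steps = program(arg)
    for _ in range(fuel):
        try:
            next(steps)
        except StopIteration:
            return True
    return False


def make_h_plus(h: Decider) -> Program:
    """Turing's H+: ask H whether p halts on its own description, then do the opposite."""
    def h_plus(p: Program):
        if h(p, p):
            while True:  # H predicted "halts", so loop forever
                yield
        # H predicted "does not halt", so halt immediately
    return h_plus


def refute(h: Decider, fuel: int = 10_000) -> Tuple[bool, bool]:
    """Return (H's prediction for H+ on H+, whether H+ on H+ halted within the budget)."""
    h_plus = make_h_plus(h)
    predicted = h(h_plus, h_plus)
    observed = halts_within(h_plus, h_plus, fuel)
    return predicted, observed


print(refute(lambda p, x: True))   # (True, False): predicted to halt, but it loops
print(refute(lambda p, x: False))  # (False, True): predicted to loop, but it halts
```

The two candidate deciders shown are deliberately trivial; the construction in `make_h_plus` works the same way for any decider that itself always returns an answer, which is why the prediction and the behavior always disagree.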
My conclusion is that a “Blockhead” machine that produces responses to sensible conversations by tree-search computation will never be able to answer the Halting Problem or any other undecidable problem to which the Halting Problem reduces. A human being, however, is able to use the semantics of the input string and abstract knowledge of the conversation’s context to produce a sensible answer. The “Blockhead” machine therefore cannot pass the Turing Test, even when the test is restricted to sensible conversations. The interrogator in the Turing Test can mention a program or a snippet of computer code in the conversation. A programmer will be able to tell whether the given program halts based on semantic and abstract knowledge. The same cannot be said for the machine, since the problem is undecidable for it. Moreover, there are infinitely many possible halting programs, so it is unrealistic to enumerate all cases in the tree. Ned Block challenges functionalism and the validity of the Turing Test by providing an unintelligent machine that has no mental states. Copeland has defended the Turing Test by arguing that this case does not show the test to be actually wrong. Through a discussion of the Halting Problem, I have concluded that this unintelligent machine is not able to pass the Turing Test even when restricted to sensible conversations. Therefore, the Blockhead objection is not as detrimental to functionalism as people might think.

References

Block, N. (1981). Psychologism and Behaviorism. The Philosophical Review, 90(1), 5–43. doi:10.2307/2184371
Copeland, B. J. (2000). The Turing Test. Minds and Machines, 519–539.
Jakobsen, D. (2007). The Turing Test and Other Minds.
Ravenscroft, I. (2005). Philosophy of Mind: A Beginner’s Guide. Oxford: Oxford University Press, 50–64.
Rich, E. (2009). Automata, Computability and Complexity: Theory and Applications. Upper Saddle River, NJ: Pearson/Prentice Hall.
Turing, A. (1960). On Computable Numbers, with an Application to the Entscheidungsproblem. Annual Review in Automatic Programming, 230–264. doi:10.1016/b978-0-08-009217-1.50024-4
Turing, A. M. (1950). Computing Machinery and Intelligence. Parsing the Turing Test, 23–65. doi:10.1007/978-1-4020-6710-5_3