Random Variables: Discrete Probability & Statistics Lecture Notes


STAT 302 Chapter 4

Random Variables

Introduction

A random variable is a function that maps outcomes in a sample space to a numerical quantity. We use uppercase letters to denote a random variable.

Example: Toss a fair coin three times:

S = {HHH, HHT, HTH, THH, HTT, THT, TTH, TTT}

Let’s define X to be the number of heads in three tosses.

Outcome in sample space | X
------------------------|---
HHH                     | 3
HHT                     | 2
HTH                     | 2
THH                     | 2
HTT                     | 1
THT                     | 1
TTH                     | 1
TTT                     | 0

The probability that X takes on a certain value k depends on the set of outcomes that give X = k.

P(tossing no heads) = P(X = 0) = P({TTT}) = 1/8

P(tossing exactly 1 head) = P(X = 1) = P({HTT} ∪ {THT} ∪ {TTH}) = 3/8

and so on ....
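As a rough check of the probabilities above, the following sketch enumerates the sample space and counts the outcomes that give each value of X. The names `sample_space`, `num_heads` and `pmf` are illustrative choices, not notation from these notes.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Sample space for three tosses of a fair coin: 8 equally likely outcomes.
sample_space = ["".join(t) for t in product("HT", repeat=3)]

def num_heads(outcome):
    """The random variable X: map an outcome such as 'HTH' to its number of heads."""
    return outcome.count("H")

# P(X = k) = (number of outcomes with k heads) / (total number of outcomes).
counts = Counter(num_heads(o) for o in sample_space)
pmf = {k: Fraction(c, len(sample_space)) for k, c in sorted(counts.items())}

for k, p in pmf.items():
    print(f"P(X = {k}) = {p}")
```

Counting outcomes this way is valid only because all 8 outcomes are equally likely for a fair coin.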

When the values taken on by X come from a finite or countably infinite set of numbers, the random variable is discrete.

Discrete random variables

Let X be a discrete random variable.

• Probability mass function of X:

p(a) = P (X = a)

Note: $0 \le p(x) \le 1$ for all $x$, and $\sum_{x} p(x) = 1$


• Cumulative distribution function of X:

$$F(a) = P(X \le a) = \sum_{x \le a} p(x)$$

Note: F(x) is a non-decreasing step function, and 0 ≤ F(x) ≤ 1 for all x

For example, X = number of heads in 3 tosses of a fair coin

F(0) = p(0) = 1/8

F(1) = p(0) + p(1) = 4/8 = 1/2

F(2) = p(0) + p(1) + p(2) = 7/8

F(3) = p(0) + p(1) + p(2) + p(3) = 1

Note: F(k) = P(X ≤ k) = P(X ≤ a) = F(a) for a ≤ k < a + 1, a = 0, 1, 2, 3

• p(a) = F(a) − F(a − 1)
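The relationship between the pmf and the CDF can be illustrated with a short sketch for the coin-tossing example. The variable names here are illustrative, and the code assumes X takes the integer values 0 to 3, so that F(−1) = 0.

```python
from fractions import Fraction
from itertools import accumulate

# pmf of X = number of heads in 3 tosses of a fair coin, for x = 0, 1, 2, 3
values = [0, 1, 2, 3]
pmf = [Fraction(1, 8), Fraction(3, 8), Fraction(3, 8), Fraction(1, 8)]

# F(a) = sum of p(x) over all x <= a, i.e. a running total of the pmf.
cdf = list(accumulate(pmf))

# Recover the pmf from the CDF: p(a) = F(a) - F(a - 1), taking F(-1) = 0.
recovered = [cdf[0]] + [cdf[i] - cdf[i - 1] for i in range(1, len(cdf))]

for a, F in zip(values, cdf):
    print(f"F({a}) = {F}")
```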


Expected value of X

For the coin-tossing example, if one repeats the random experiment (which involves tossing a fair coin 3 times) a very large number of times, what will be the average number of heads out of 3 tosses?

• Let X be a discrete random variable.

Expected value of X:

Notations and synonyms: E(X), µ, the expectation of X, the mean of X

$$E(X) = \sum_{x} x\,p(x)$$

– E(X) is the weighted sum of the x values; the p(x)'s are the weights.

– Interpretation: the long-run average of the x values taken on by the random variable X if the random experiment is repeated a large number of times

For example, X = number of heads in 3 tosses of a fair coin:

E(X) = 0(1/8) + 1(3/8) + 2(3/8) + 3(1/8) = 12/8 = 1.5

• Let g(X) be a real function of X.

Expected value of g(X):

Notations and synonyms: E(g(X)), the expectation of g(X), the mean of g(X)

$$E(g(X)) = \sum_{x} g(x)\,p(x)$$

Note: in general, E(g(X)) ≠ g(E(X))

• Let a, b be some real constants, $X$, $X_1$, $X_2$ be discrete random variables, and $g_1$ and $g_2$ be real functions. Then

$$E(aX + b) = aE(X) + b$$

$$E\big(a\,g_1(X_1) + b\,g_2(X_2)\big) = aE(g_1(X_1)) + bE(g_2(X_2))$$
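The expectation formulas above can be checked numerically for the coin-tossing example. This is a minimal sketch: the helper `expect`, the choice g(x) = x², and the constants a = 2, b = 5 are all illustrative assumptions. Only the single-variable identity E(aX + b) = aE(X) + b is checked.

```python
from fractions import Fraction

# pmf of X = number of heads in 3 tosses of a fair coin
pmf = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}

def expect(g, pmf):
    """Return E(g(X)) = sum over x of g(x) * p(x)."""
    return sum(g(x) * p for x, p in pmf.items())

mean = expect(lambda x: x, pmf)               # E(X)
mean_of_square = expect(lambda x: x**2, pmf)  # E(g(X)) with g(x) = x^2
square_of_mean = mean**2                      # g(E(X))

# Linearity: E(aX + b) = aE(X) + b, for illustrative constants a and b.
a, b = 2, 5
lhs = expect(lambda x: a * x + b, pmf)
rhs = a * mean + b

print("E(X) =", mean)
print("E(X^2) =", mean_of_square, "but [E(X)]^2 =", square_of_mean)
print("E(aX + b) == aE(X) + b:", lhs == rhs)
```

Using `Fraction` keeps the arithmetic exact, so the comparison `lhs == rhs` does not depend on floating-point rounding.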

Variance and standard deviation of X:

• Let X be a discrete random variable.

Variance of X:

$$V(X) = E\big[(X - \mu)^2\big] = \sum_{x} (x - \mu)^2\, p(x)$$

Standard deviation of X:

$$SD(X) = \sqrt{V(X)}$$


– V(X) is the weighted sum of the squared deviations of the x values from the mean; the p(x)'s are the weights.

– Interpretation: both the variance and the standard deviation of X measure the spread (dispersion) of the x values taken on by the random variable X if the random experiment is repeated a large number of times

• Alternative formula for V (X):

$$V(X) = E(X^2) - [E(X)]^2$$

Note: in general, $E(X^2) \neq [E(X)]^2$

Proof:

$$\begin{aligned}
V(X) &= E\big[(X - \mu)^2\big] \\
&= E(X^2 - 2X\mu + \mu^2) \\
&= E(X^2) - 2\mu E(X) + \mu^2 \\
&= E(X^2) - 2[E(X)]^2 + [E(X)]^2 \\
&= E(X^2) - [E(X)]^2
\end{aligned}$$

For example, X = number of heads in 3 tosses of a fair coin:

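E(X²) = 0(1/8) + 1(3/8) + 4(3/8) + 9(1/8) = 3 and E(X) = 1.5, so V(X) = E(X²) − [E(X)]² = 3 − (1.5)² = 0.75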

• Let a, b be some real constants and X be a discrete random variable. Then

$$V(aX + b) = a^2 V(X)$$

Proof: Let Y = aX + b. Then E(Y) = E(aX + b) = aE(X) + b, and

$$\begin{aligned}
V(Y) &= E(Y^2) - [E(Y)]^2 \\
&= E(a^2 X^2 + 2abX + b^2) - [aE(X) + b]^2 \\
&= a^2 E(X^2) + 2abE(X) + b^2 - \big[a^2 [E(X)]^2 + 2abE(X) + b^2\big] \\
&= a^2 E(X^2) + 2abE(X) + b^2 - a^2 [E(X)]^2 - 2abE(X) - b^2 \\
&= a^2 \{E(X^2) - [E(X)]^2\} = a^2 V(X)
\end{aligned}$$
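The variance results in this section can be checked numerically for the coin-tossing example. In this sketch the helpers `expect` and `variance` and the constants a = 2, b = 5 are illustrative assumptions. It compares the defining formula with the shortcut formula, then checks V(aX + b) = a²V(X).

```python
from fractions import Fraction
from math import sqrt

# pmf of X = number of heads in 3 tosses of a fair coin
pmf = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}

def expect(g, pmf):
    """Return E(g(X)) = sum over x of g(x) * p(x)."""
    return sum(g(x) * p for x, p in pmf.items())

def variance(pmf):
    """Return V(X) from the definition E[(X - mu)^2]."""
    mu = expect(lambda x: x, pmf)
    return expect(lambda x: (x - mu) ** 2, pmf)

mu = expect(lambda x: x, pmf)
var_def = variance(pmf)                            # definition
var_short = expect(lambda x: x**2, pmf) - mu**2    # E(X^2) - [E(X)]^2
sd = sqrt(var_def)                                 # SD(X) as a float

# Y = aX + b has the same probabilities attached to the transformed values.
a, b = 2, 5  # illustrative constants
pmf_y = {a * x + b: p for x, p in pmf.items()}
var_y = variance(pmf_y)

print("V(X) =", var_def, "shortcut gives", var_short)
print("V(aX + b) =", var_y, "and a^2 V(X) =", a**2 * var_def)
```

The shift b drops out because adding a constant moves every value and the mean by the same amount, leaving the deviations unchanged.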


Exercise 1

An insurance policy pays $100 per day for up to 3 days of hospitalization and $50 per day for each day of hospitalization thereafter. The number of days of hospitalization, X, is a discrete random variable with probability mass function

$$P(X = k) = \begin{cases} \dfrac{6 - k}{15} & \text{for } k = 1, 2, 3, 4, 5 \\[4pt] 0 & \text{otherwise} \end{cases}$$

(a) Find the cumulative distribution function of X.

(b) Determine the expected number of days of hospitalization. Also find the standard deviation.


(c) Determine the expected payment for hospitalization under this policy.

Common discrete random variables

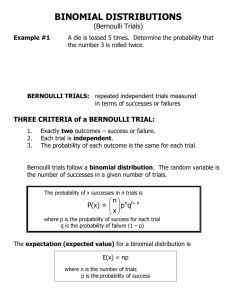

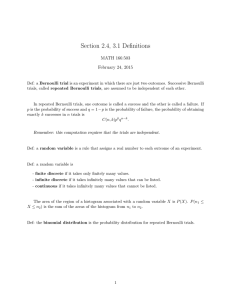

I. Bernoulli and Binomial random variables

• A Bernoulli trial is a random experiment that gives only one of two outcomes (generally referred to as “success” and “failure”).

Examples:

(1) sample one electronic component: “success” if it is defective, “failure” if it is nondefective

(2) toss a fair coin: “success” if a head is tossed, “failure” if a tail is tossed

• The number of successes in a single Bernoulli trial is a Bernoulli random variable with parameter p: X ∼ Bernoulli(p), where p is the probability of the “success” outcome, 0 ≤ p ≤ 1.

• For X ∼ Bernoulli(p),

$$p(x) = p^x (1 - p)^{1 - x}, \quad x = 0, 1$$

E(X) = p, Var(X) = p(1 − p)


• A Binomial experiment consists of n (fixed in advance) identical and independent Bernoulli trials. (Independence means that the result of one trial does not affect the result of any other trial.)

Let the n Bernoulli trials give $X_1, X_2, \dots, X_n$, where $X_i \sim$ Bernoulli(p) [p is constant across all n trials].

Define $Y = X_1 + X_2 + \dots + X_n$.

Y is the total number of successes out of the n trials.

Y is a Binomial random variable with parameters n and p: Y ∼ Bin(n, p)

• For Y ∼ Bin(n, p),

$$p(y) = \binom{n}{y} p^y (1 - p)^{n - y}, \quad y = 0, 1, \dots, n$$

where $\binom{n}{y}$ gives the total number of combinations that contain y successes.

$$F(y) = \sum_{k=0}^{y} p(k) = \sum_{k=0}^{y} \binom{n}{k} p^k (1 - p)^{n - k}$$

E(Y) = np, Var(Y) = np(1 − p)

Example

Let X be the total number of heads obtained out of 5 tosses of a biased coin (with a 30% chance of tossing a head). Then X ∼ Bin(n = 5, p = 0.3).

$$P(X = x) = \binom{5}{x}\, 0.30^x (1 - 0.30)^{5 - x}, \quad x = 0, 1, 2, \dots, 5$$

x      |   0     1     2     3     4     5
-------|------------------------------------
P(X=x) | 0.168 0.360 0.309 0.132 0.028 0.002
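The table for X ∼ Bin(5, 0.3) can be reproduced from the Binomial pmf. This is a small sketch using Python's standard `math.comb`; the function name `binom_pmf` is an illustrative choice.

```python
from math import comb

def binom_pmf(y, n, p):
    """p(y) = C(n, y) * p^y * (1 - p)^(n - y) for y = 0, 1, ..., n."""
    return comb(n, y) * p**y * (1 - p) ** (n - y)

n, p = 5, 0.3
probs = [binom_pmf(x, n, p) for x in range(n + 1)]
table = [round(q, 3) for q in probs]

# Mean and variance computed directly from the pmf, for comparison
# with the closed forms np and np(1 - p).
mean = sum(x * q for x, q in enumerate(probs))
var = sum((x - mean) ** 2 * q for x, q in enumerate(probs))

print(table)
print(mean, n * p)
print(var, n * p * (1 - p))
```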


More examples: Probability histograms of some other Binomial distributions


Exercise 2

A firm sells items randomly selected from a large lot that is known to contain 10% defectives. Defective items will be returned for repair, and the repair cost is given by

$$C = 3Y^2 + Y + 2$$

where Y is the number of defectives sold.

(a) Find the probability that two out of the first ten items sold are defective.

(b) Find the probability that at most one of the first four items sold is defective. Also find the expected repair cost for the four items sold.

(c) Find the probability that the first defective sold is the seventh item that is sold.


II. Geometric random variable

• Consider a setting identical to the Binomial experiment except that the number of Bernoulli trials, n, is not fixed in advance. Our interest is in the number of trials until the “success” outcome occurs for the first time.

• Let X be the number of trials up to and including the first “success” (with probability p on a single trial). Then X is a Geometric random variable with parameter p: X ∼ Geom(p).

• For X ∼ Geom(p),

$$p(x) = (1 - p)^{x - 1} p, \quad x = 1, 2, 3, \dots$$

$$F(x) = \sum_{k=1}^{x} p(k) = \sum_{k=1}^{x} (1 - p)^{k - 1} p = 1 - (1 - p)^x$$

$$E(X) = \frac{1}{p}, \qquad Var(X) = \frac{1 - p}{p^2}$$
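The closed forms for the Geometric distribution can be checked against direct sums of the pmf. In this sketch the value p = 0.25 and the truncation point of 2000 terms are arbitrary illustrative choices. The infinite sums for the mean and variance are approximated by long partial sums.

```python
def geom_pmf(x, p):
    """p(x) = (1 - p)^(x - 1) * p for x = 1, 2, 3, ..."""
    return (1 - p) ** (x - 1) * p

def geom_cdf(x, p):
    """Closed form F(x) = 1 - (1 - p)^x for integer x >= 1."""
    return 1 - (1 - p) ** x

p = 0.25  # illustrative success probability

# The partial sum of the pmf should match the closed-form CDF.
cdf_by_sum = sum(geom_pmf(k, p) for k in range(1, 8))

# Truncated sums approximate the mean 1/p and the variance (1 - p)/p^2.
xs = range(1, 2001)
approx_mean = sum(k * geom_pmf(k, p) for k in xs)
approx_var = sum((k - approx_mean) ** 2 * geom_pmf(k, p) for k in xs)

print(cdf_by_sum, geom_cdf(7, p))
print(approx_mean, 1 / p)
print(approx_var, (1 - p) / p**2)
```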

III. Negative Binomial random variable

• Consider a sequence of independent Bernoulli trials. Suppose that we are interested in the trial on which the second, the third, or in general the rth success occurs.

• Let X be the number of trials needed to have a total of r successes (probability of success on a single trial is p). Then X is a Negative Binomial random variable with parameters r and p: X ∼ NegBin(r, p).

• For X ∼ NegBin(r, p),

$$p(x) = \binom{x - 1}{r - 1} p^r (1 - p)^{x - r}, \quad x = r, r + 1, r + 2, \dots$$

$$F(x) = \sum_{k=r}^{x} p(k) = \sum_{k=r}^{x} \binom{k - 1}{r - 1} p^r (1 - p)^{k - r}$$

$$E(X) = \frac{r}{p}, \qquad Var(X) = \frac{r(1 - p)}{p^2}$$

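Derivation of p(x):

$$\begin{aligned}
p(x) &= P(\text{the } r\text{th success occurs on the } x\text{th trial}) \\
&= P(\text{the first } x - 1 \text{ trials contain } r - 1 \text{ successes and the } x\text{th trial is a success}) \\
&= \binom{x - 1}{r - 1} p^{r - 1} (1 - p)^{x - r} \cdot p \quad \text{(by independence)} \\
&= \binom{x - 1}{r - 1} p^r (1 - p)^{x - r}
\end{aligned}$$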
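The Negative Binomial pmf derived above can be sanity-checked numerically. In this sketch the function name `negbin_pmf`, the parameter values r = 3 and p = 0.4, and the truncation at 500 trials are illustrative assumptions. It checks that the probabilities sum to about 1 and that the mean and variance are close to r/p and r(1 − p)/p².

```python
from math import comb

def negbin_pmf(x, r, p):
    """P(the r-th success occurs on trial x) = C(x-1, r-1) p^r (1-p)^(x-r)."""
    if x < r:
        return 0.0  # at least r trials are needed for r successes
    return comb(x - 1, r - 1) * p**r * (1 - p) ** (x - r)

r, p = 3, 0.4  # illustrative parameters

# Truncate the infinite support; the tail beyond 500 trials is negligible here.
xs = range(r, 500)
total = sum(negbin_pmf(x, r, p) for x in xs)
mean = sum(x * negbin_pmf(x, r, p) for x in xs)
var = sum((x - mean) ** 2 * negbin_pmf(x, r, p) for x in xs)

print(total)
print(mean, r / p)
print(var, r * (1 - p) / p**2)
```

With r = 1 the formula reduces to the Geometric pmf (1 − p)^(x−1) p.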


Exercise 3

An appliance comes in two colors, white and brown, which are in equal demand. Customers arrive and independently order these appliances. Find the probability that

(a) the third white appliance is ordered by the fifth customer.

(b) the fourth brown appliance is ordered by the fifth customer.

IV. The Poisson process and Poisson random variable

• The Poisson process: the occurrence of a certain event E is said to follow a Poisson process of rate λ if the following conditions are satisfied:

(1) the numbers of occurrences of E in two non-overlapping intervals are independent,

(2) the probability of having exactly one occurrence of E in any interval of length l is approximately λl for small l, and

(3) the probability of having two or more occurrences of E in any interval of length l is negligibly small as l → 0.

Examples where the Poisson process is applicable:

- arrival of airplanes at an airport

- occurrence of tornadoes in a certain geographical region


• Let X be the number of occurrences of an event E that follows a Poisson process of rate λ over an interval of length l. Then X is a Poisson random variable with parameter λl: X ∼ Poisson(λl). For a unit-length interval, X ∼ Poisson(λ).

• For X ∼ Poisson(λl),

$$p(x) = \frac{(\lambda l)^x e^{-\lambda l}}{x!}, \quad x = 0, 1, 2, \dots$$

$$F(x) = \sum_{k=0}^{x} p(k) = \sum_{k=0}^{x} \frac{(\lambda l)^k e^{-\lambda l}}{k!}$$

E(X) = λl, Var(X) = λl
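As a numerical illustration of the Poisson formulas, the sketch below evaluates the pmf for a made-up rate and interval length (`rate = 2.0`, `length = 1.5`, both hypothetical values). It confirms that the probabilities sum to about 1 and that the mean and variance both come out close to λl. The infinite support is truncated at 100 terms, which is ample for this parameter.

```python
from math import exp, factorial

def poisson_pmf(x, mu):
    """p(x) = mu^x e^(-mu) / x!, where mu = lambda * l."""
    return mu**x * exp(-mu) / factorial(x)

rate, length = 2.0, 1.5  # hypothetical rate (lambda) and interval length (l)
mu = rate * length       # Poisson parameter lambda * l

xs = range(0, 100)
total = sum(poisson_pmf(x, mu) for x in xs)
mean = sum(x * poisson_pmf(x, mu) for x in xs)
var = sum((x - mean) ** 2 * poisson_pmf(x, mu) for x in xs)

print(total)
print(mean, var, mu)
```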


Exercise 4

The number of telephone calls coming into the central switchboard of an office building averages four per minute. Find the probability that

(a) no calls will arrive in a given one-minute period.

(b) no more than 5 calls will arrive in a given one-minute period.

(c) at least two calls will arrive in a given two-minute period.


• Poisson approximation to the Binomial:

The Poisson random variable can be used to approximate a Binomial random variable X ∼ Bin(n, p) when both of the following conditions are satisfied:

(1) n is large (n ≥ 20)

(2) p or 1 − p is small enough such that min(np, n(1 − p)) < 5

Then X ∼ Poisson(λ = np) approximately.

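Proof: For X ∼ Bin(n, p), E(X) = np. Let λ = np, so that p = λ/n. Then

$$\begin{aligned}
P(X = k) &= \binom{n}{k} p^k (1 - p)^{n - k} \\
&= \frac{n!}{k!\,(n - k)!} \left(\frac{\lambda}{n}\right)^k \left(1 - \frac{\lambda}{n}\right)^{n - k} \\
&= \frac{n(n - 1)(n - 2)\cdots(n - k + 1)}{n^k} \cdot \frac{\lambda^k}{k!} \left\{ \frac{(1 - \lambda/n)^n}{(1 - \lambda/n)^k} \right\} \\
&\to \frac{\lambda^k e^{-\lambda}}{k!} \quad \text{as } n \to \infty
\end{aligned}$$

Note that $\dfrac{n(n - 1)(n - 2)\cdots(n - k + 1)}{n^k} \to 1$, $(1 - \lambda/n)^n \to e^{-\lambda}$ and $(1 - \lambda/n)^k \to 1$ as $n \to \infty$.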
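The limit in the proof can be observed numerically. In this sketch λ = 2 and k = 3 are arbitrary illustrative values. For growing n with p = λ/n, the Binomial probability P(X = k) is compared with the Poisson value λ^k e^(−λ)/k!.

```python
from math import comb, exp, factorial

lam, k = 2.0, 3  # illustrative values of lambda and k

# Limiting Poisson probability lambda^k e^(-lambda) / k!
poisson = lam**k * exp(-lam) / factorial(k)

errors = []
for n in (10, 100, 1000, 10000):
    p = lam / n  # keep np = lambda fixed while n grows
    binom = comb(n, k) * p**k * (1 - p) ** (n - k)
    errors.append(abs(binom - poisson))
    print(f"n = {n:>5}: Binomial = {binom:.6f}, Poisson = {poisson:.6f}")
```

The absolute error shrinks as n grows, which is the behavior the proof predicts.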


Exercise 5

At a local store that sells a large number of computers, only 0.1% of all computers sold experience CPU failure during the warranty period. Consider a sample of 4,000 computers. Use the Poisson approximation to answer the following parts.

(a) What is the approximate probability that no sampled computer has a CPU defect?

(b) Find the expected value and the standard deviation of the number of computers in the sample that have a CPU defect.


V. Hypergeometric random variable

• Consider sampling objects from a relatively small set containing N objects. Suppose that out of N objects, m are of one kind and the remaining N − m are of another kind. A total of n objects are to be randomly drawn without replacement from the N objects. Here, the trials (a trial refers to drawing an object) are not independent: because sampling is without replacement and N is small, each draw noticeably changes the composition of the remaining objects.

Let X be the number of objects of a particular kind among the n drawn. Then X is a Hypergeometric random variable with parameters N, n and m: X ∼ Hypergeom(N, n, m), where m refers to the number of objects of the kind that we are interested in.

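• For X ∼ Hypergeom(N, n, m),

$$p(x) = \frac{\binom{m}{x}\binom{N - m}{n - x}}{\binom{N}{n}}, \quad x = 0, 1, \dots, \min(m, n)$$

$$F(x) = \sum_{k=0}^{x} p(k) = \sum_{k=0}^{x} \frac{\binom{m}{k}\binom{N - m}{n - k}}{\binom{N}{n}}$$

$$E(X) = \frac{nm}{N}, \qquad Var(X) = n\left(\frac{m}{N}\right)\left(1 - \frac{m}{N}\right)\left(\frac{N - n}{N - 1}\right)$$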
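The Hypergeometric formulas can be checked exactly with rational arithmetic. In this sketch the function name `hypergeom_pmf` and the parameter values N = 10, n = 4, m = 3 are hypothetical choices made only for illustration.

```python
from fractions import Fraction
from math import comb

def hypergeom_pmf(x, N, n, m):
    """p(x) = C(m, x) * C(N - m, n - x) / C(N, n)."""
    return Fraction(comb(m, x) * comb(N - m, n - x), comb(N, n))

N, n, m = 10, 4, 3  # hypothetical: 10 objects, 4 drawn, 3 of the kind of interest
support = range(0, min(m, n) + 1)

total = sum(hypergeom_pmf(x, N, n, m) for x in support)
mean = sum(x * hypergeom_pmf(x, N, n, m) for x in support)
var = sum((x - mean) ** 2 * hypergeom_pmf(x, N, n, m) for x in support)

# Closed forms from the notes, evaluated exactly.
mean_formula = Fraction(n * m, N)
var_formula = n * Fraction(m, N) * (1 - Fraction(m, N)) * Fraction(N - n, N - 1)

print(total)
print(mean, mean_formula)
print(var, var_formula)
```

The factor (N − n)/(N − 1) is what separates this variance from the Binomial variance np(1 − p) with p = m/N.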


Exercise 6

From a box containing four white and five red balls, three balls are selected at random, without replacement.

(a) Find the probabilities of the following events.

(1) Exactly one white ball is selected.

(2) Exactly two white balls are selected, given that at least one white ball is selected.

(3) The second ball drawn is white.

(b) Find the expected number of red balls drawn.


Exercise 7

One-third of blood donors at a clinic have O+ blood.

(a) Assuming the donors are independent of each other, find the probability that

(1) six or more donors have to be screened in order to find two who have O+ blood.

(2) among six donors screened, more than two do not have O+ blood.

(3) more than six donors have to be screened in order to find the first with O+ blood.

(b) Find the expected number of donors who must be screened in order to find five with O+ blood.
