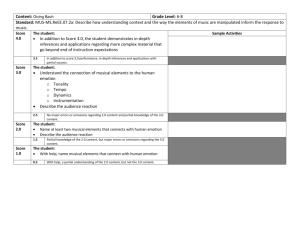

Musical Mood Induction and its Effects on Analogical Reasoning (Master's Thesis: University of Louisiana at Monroe)
