Advanced Econometrics

ADVANCED

ECONOMETRICS

SAJID ALI KHAN


M.Phil. Statistics AIOU, Islamabad

M.Sc. Statistics AJKU, Muzaffarabad

PRINCIPAL

GREEN HILLS POSTGRADUATE COLLEGE

RAWALAKOT AZAD KASHMIR

E.Mail: sajid.ali680@gmail.com

Mobile: 0334-5439066


CONTENTS

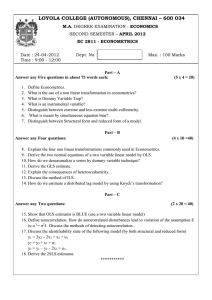

Chapter: 1. Econometrics

1.1.

1.2.

1.3.

1.4.

1.5.

1.6.

1.7.

1

Introduction

Mathematical and statistical relationship

Goals of econometrics

Types of econometrics

Methodology of econometrics

The role of the computer

Exercise

Chapter: 2. Simple Linear Regression

2.1.

2.2.

2.3.

2.4.

2.5.

2.6.

2.7.

2.8.

2.9.

2.10.

2.11.

2.12.

2.13.

2.14.

The nature of the regression analysis

Data

Method of ordinary least squares

Properties of least square regression line

Assumptions of ordinary least square

Properties of least squares estimators small/ large sample

Variance of disturbance term 𝑼𝒊

Distribution of dependent variable Y

Maximum likelihood method

Goodness of fit test

Mean prediction

Individual prediction

Sampling distributions and confidence interval

Exercise

Chapter: 3. Multiple Linear Regression and Correlation

3.1.

3.2.

3.3.

3.4.

3.5.

3.6.

3.7.

3.8.

44

Introduction

Properties of GLR

Polynomial

Exercise

Chapter: 5. Dummy Variables

5.1.

5.2.

5.3.

5.4.

36

Multiple linear regression

Coefficient of multiple determination

Adjusted 𝑹𝟐

Cobb-Douglas production function

Partial correlation

Testing multiple regression (F-test)

Relation between 𝑹𝟐 𝒂𝒏𝒅 𝑭

Exercise

Chapter: 4. General Linear Regression

4.1.

4.2.

4.3.

4.4.

6

53

Nature of dummy variables

Dummy variable trap

Uses of dummy variables

Exercise

3

Advanced Econometrics

Chapter: 1

ECONOMETRICS

1.1: INTRODUCTION

Econometrics is the field of economics that concerns itself with the application of mathematical statistics and the tools of statistical inference to the empirical measurement of relationships postulated by economic theory. It is the quantitative measurement and analysis of actual economic and business phenomena. Econometrics is a set of techniques that allows the measurement and analysis of economic trends.

Econometrics, the result of a certain outlook on the role of

economics, consists of the application of mathematical statistics to

economic data to lend empirical support to the models constructed

by mathematical economics and to obtain numerical results.

Econometrics may be defined as the quantitative analysis of actual

economic phenomena based on the concurrent development of

theory and observation, related by appropriate methods of

inference.

Econometrics may be defined as the social science in which

the tools of economic theory, mathematics and statistical inference

are applied to the analysis of economic phenomena. Econometrics

is concerned with the empirical determination of economic laws.

Frisch (1933) and his society responded to an

unprecedented accumulation of statistical information. They saw a

need to establish a body of principles that could organize what


ANALYSIS: Econometrics aims primarily at the verification of economic theories. In this case we say that the purpose of the research is analysis, that is, obtaining empirical evidence to test the explanatory power of economic theories.

1.4: TYPES OF ECONOMETRICS

Econometrics may be divided into two broad categories:

THEORETICAL ECONOMETRICS

Theoretical econometrics is concerned with the development of appropriate methods for measuring the economic relationships specified by econometric models. Since economic data are observations of real life and are not derived from controlled experiments, econometric methods have been developed for such non-experimental data.

APPLIED ECONOMETRICS

In applied econometrics we use the tools of theoretical

econometrics to study some special field of economics and business,

such as the production function, investment function, demand and

supply function, etc.

Applied econometric methods will be used for estimation of

important quantities, analysis of economic outcomes, markets or

individual behavior, testing theories, and for forecasting. The last of

these is an art and science in itself, and the subject of a vast library of

sources.

1.5: METHODOLOGY OF ECONOMETRICS

Traditional econometric methodology has the following main points:

1. Statement of theory or hypothesis.
2. Specification of the mathematical model of the theory.
3. Specification of the statistical or econometric model.
4. Obtaining the data.
5. Estimation of the parameters of the econometric model.
6. Hypothesis testing.
7. Forecasting or prediction.
8. Using the model for control or policy purposes.

1. Statement of Theory or Hypothesis

Keynes stated that the fundamental psychological law is that men (women) are disposed, as a rule and on average, to increase their consumption as their income increases, but not by as much as the increase in their income.

2. Specification of the Mathematical Model

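Although Keynes postulated a positive relationship between consumption and income, a mathematical economist might suggest the following form of the consumption function:

$$Y = \alpha + \beta X, \qquad 0 < \beta < 1$$

Where: $Y$ = consumption expenditure, $X$ = income, and $\alpha$ and $\beta$ are the parameters of the model (the intercept and the slope, respectively).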

3. Specification of the Econometric Model of Consumption

Because the relationship between economic variables is generally inexact, the econometrician would modify the deterministic consumption function as follows:

$$Y = \alpha + \beta X + u$$

where $u$ is known as the disturbance, error term or random (stochastic) variable.

4. Obtaining Data

To estimate the econometric model, that is, to obtain the numerical values of its parameters, we need data, e.g.

| Year | Y | X |
|---|---|---|
| 2004 | 55 | 67 |
| 2005 | 58 | 70 |
| 2006 | 60 | 72 |

5. Estimation of the Econometric Model

The technique of regression analysis is used to obtain the estimates of the parameters of the model.

6. Hypothesis Testing

Assuming that the fitted model is a reasonably good approximation of reality, we have to develop suitable criteria to find out whether the estimates obtained are in accord with the expectations of the theory that is being tested.

7. Forecasting or Prediction

If the chosen model does not refute the hypothesis or theory

under consideration, we may use it to predict the future value of

the dependent, or forecast variable Y on the basis of known or

expected future value of the explanatory or predictor variable X.

8. Use of the Model for Control or Policy Purposes

An estimated model may be used for control, or policy

purposes. By appropriate fiscal and monetary policy mix, the

government can manipulate the control variable X to produce the

desired level of the target variable Y.
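The estimation and forecasting steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the data are the three observations from the table in step 4, the helper name `ols` is hypothetical, and the future income value of 75 is chosen arbitrarily for the example.

```python
# Steps 4, 5 and 7 of the methodology on the small table from step 4.
# Y = consumption, X = income (values copied from that table).
X = [67, 70, 72]
Y = [55, 58, 60]


def ols(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    b = s_xy / s_xx          # slope
    a = y_bar - b * x_bar    # intercept
    return a, b


a, b = ols(X, Y)                  # step 5: estimation
x_future = 75                     # hypothetical future income (assumption)
y_forecast = a + b * x_future     # step 7: forecasting
print(f"Y_hat = {a:.2f} + {b:.2f} X; forecast at X = {x_future}: {y_forecast:.2f}")
```

These three points happen to lie exactly on one straight line, so the fitted slope is 1 and the intercept is -12. With so few observations the fit says nothing reliable about the theoretical restriction on the slope; the sketch only shows the mechanics.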

1.6: THE ROLE OF THE COMPUTER

Regression analysis relies on the computer, through regression software packages such as MINITAB, EVIEWS, SAS, SPSS, STATA, SHAZAM etc.

1.7: Exercise

1. What is econometrics? How many types of econometrics are there?

2. Discuss the methodology of econometrics.

3. Differentiate between statistics and mathematics.

4. What are the goals of econometrics?

Chapter: 2

SIMPLE LINEAR REGRESSION

2.1: THE NATURE OF REGRESSION ANALYSIS

2.1.1: HISTORICAL ORIGIN OF THE TERM REGRESSION

The term regression was introduced by Francis Galton. Galton found that, although there was a tendency for tall parents to have tall children and for short parents to have short children, the average height of children born of parents of a given height tended to move, or "regress", toward the average height in the population as a whole.

2.1.2: THE MODERN INTERPRETATION OF REGRESSION

Regression analysis is concerned with the study of the dependence of one variable, the dependent variable, on one or more other variables, the explanatory variables, with a view to estimating the mean value of the former in terms of the known or fixed values of the latter.

TERMINOLOGY AND NOTATION

| Dependent variable | Independent variable |
|---|---|
| Explained | Explanatory |
| Predictand | Predictor |
| Regressand | Regressor |
| Response | Stimulus |
| Endogenous | Exogenous |
| Controlled | Control |

2.2: DATA

Collection of information or facts and figures is called data.

2.2.1: TYPES OF DATA

There are three types of data.

Time Series Data: A time series is a set of observations on the

values that a variable takes at different times. Such data may be

collected at regular time intervals, such as daily, weekly, monthly,

quarterly and yearly.

Cross-Section Data: Cross-Section data are data on one or more

variables collected at the same point in time, such as the census of

population conducted by the Census Bureau every 10 years.

Pooled Data: Pooled, or combined, data contain elements of both time series and cross-section data.

Panel, Longitudinal, or Micropanel Data: This is a special type of pooled data in which the same cross-sectional unit is surveyed over time.

2.3: METHOD OF ORDINARY LEAST SQUARES

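The method of ordinary least squares chooses the estimates so that the sum of squares of the deviations of the observed values from the estimated values is a minimum.

The estimated model is

$$\hat Y_i = a + bX_i$$

so that the residual is $e_i = Y_i - \hat Y_i = Y_i - a - bX_i$. Then the residual sum of squares is

$$\sum e_i^2 = \sum (Y_i - a - bX_i)^2 \qquad \text{eq. (A)}$$

Minimizing eq. (A) with respect to $a$:

$$\frac{\partial \sum e_i^2}{\partial a} = 2\sum (Y_i - a - bX_i)(-1) = 0$$

$$\sum (Y_i - a - bX_i) = 0$$

$$\sum Y_i = na + b\sum X_i \qquad \text{eq. (1)}$$

Minimizing eq. (A) with respect to $b$:

$$\frac{\partial \sum e_i^2}{\partial b} = 2\sum (Y_i - a - bX_i)(-X_i) = 0$$

$$\sum X_iY_i = a\sum X_i + b\sum X_i^2 \qquad \text{eq. (2)}$$

Dividing eq. (1) by $n$:

$$\bar Y = a + b\bar X \quad\Rightarrow\quad a = \bar Y - b\bar X$$

Substituting this value of $a$ in eq. (2):

$$\sum X_iY_i = (\bar Y - b\bar X)\sum X_i + b\sum X_i^2 = n\bar X\bar Y - nb\bar X^2 + b\sum X_i^2$$

$$b\left\{\sum X_i^2 - n\bar X^2\right\} = \sum X_iY_i - n\bar X\bar Y$$

$$b = \frac{\sum X_iY_i - n\bar X\bar Y}{\sum X_i^2 - n\bar X^2} = \frac{\sum (X_i - \bar X)(Y_i - \bar Y)}{\sum (X_i - \bar X)^2}$$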
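As a quick numerical check of the two normal equations, the sketch below solves them by Cramer's rule and confirms that the residuals satisfy the first-order conditions. The five data points are illustrative and all variable names are hypothetical.

```python
# Solve the two normal equations
#   sum(Y)  = n*a      + b*sum(X)
#   sum(XY) = a*sum(X) + b*sum(X^2)
# by Cramer's rule, then check the first-order conditions on the residuals.
X = [2, 3, 1, 5, 9]      # illustrative data
Y = [4, 7, 3, 9, 17]

n = len(X)
sx, sy = sum(X), sum(Y)
sxy = sum(x * y for x, y in zip(X, Y))
sxx = sum(x * x for x in X)

det = n * sxx - sx * sx              # determinant of the 2x2 system
a = (sy * sxx - sx * sxy) / det      # intercept
b = (n * sxy - sx * sy) / det        # slope

residuals = [y - a - b * x for x, y in zip(X, Y)]
sum_e = sum(residuals)                               # should be 0
sum_xe = sum(x * e for x, e in zip(X, residuals))    # should be 0
print(a, b, sum_e, sum_xe)
```

For these data the intercept is 1 and the slope is 1.75, and both residual sums vanish, which is exactly what the two first-order conditions require.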

2.4: PROPERTIES OF LEAST SQUARE REGRESSION LINE

It passes through the mean point $(\bar X, \bar Y)$.

The mean value of the residuals is zero, $\bar e = 0$.

The residuals are uncorrelated with the predicted values $\hat Y_i$.

The residuals are uncorrelated with $X_i$.

2.5: THE ASSUMPTIONS UNDERLYING THE METHOD OF LEAST SQUARES: THE CLASSICAL LINEAR REGRESSION MODEL

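1. Linear Regression Model

The regression model is linear in the parameters. That is

$$Y_i = \alpha + \beta X_i + U_i$$

2. X Values are Fixed in Repeated Sampling

Values taken by the regressor X are considered fixed in repeated samples. More technically, X is assumed to be nonstochastic.

3. Zero Mean Value of Disturbance Term $U_i$

Given the value of X, the mean or expected value of the random disturbance term $U_i$ is zero. Technically, the conditional mean value of $U_i$ is zero. That is

$$E[U_i \mid X_i] = 0$$

4. Homoscedasticity or Equal Variance of $U_i$

Given the value of X, the variance of $U_i$ is the same for all observations. That is, the conditional variances of $U_i$ are identical.

$$\mathrm{Var}[U_i \mid X_i] = E[U_i - E(U_i \mid X_i)]^2 = E[U_i^2 \mid X_i] = \sigma^2$$

5. No Autocorrelation between the Disturbance Terms $U_i$

Given any two X values $X_i$ and $X_j$ $(i \neq j)$, the correlation between any two $U_i$ and $U_j$ is zero.

$$\mathrm{Cov}[U_i, U_j \mid X_i, X_j] = E[\{U_i - E(U_i)\} \mid X_i][\{U_j - E(U_j)\} \mid X_j]$$

$$\mathrm{Cov}[U_i, U_j \mid X_i, X_j] = E[U_i \mid X_i][U_j \mid X_j]$$

$$\mathrm{Cov}[U_i, U_j \mid X_i, X_j] = 0$$

6. Zero Covariance between $U_i$ and $X_i$

$$\mathrm{Cov}(U_i, X_i) = E[U_i - E(U_i)][X_i - E(X_i)]$$

$$\mathrm{Cov}(U_i, X_i) = E[U_i\{X_i - E(X_i)\}] \quad \text{since } E(U_i) = 0$$

$$\mathrm{Cov}(U_i, X_i) = E(U_iX_i) - E(X_i)E(U_i) = E(U_iX_i)$$

$$\mathrm{Cov}(U_i, X_i) = 0$$

7. The Number of Observations $n$ Must be Greater than the Number of Parameters to be Estimated

Alternatively, the number of observations $n$ must be greater than the number of explanatory variables.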

8. Variability in X Values

The X values in a given sample must not all be the same.

Technically variance of X must be a finite positive number.

9. The Regression Model is Correctly Specified

Alternatively, there is no specification bias error in the

model used in empirical analysis.

10. There is No Perfect Multicollinearity

There is no perfect linear relationship among the

explanatory variables.

2.6: PROPERTIES OF LEAST SQUARES ESTIMATORS

2.6.1: SMALL SAMPLE PROPERTIES OF THE LEAST SQUARES ESTIMATORS

I. Unbiasedness: An estimator is said to be unbiased if the

expected value is equal to the true population parameter.

II. Least Variance: An estimate is best when it has the smallest

variance as compared with any other estimate obtained from other

econometric methods.

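2.6.2: LARGE SAMPLE PROPERTIES OF THE LEAST SQUARES ESTIMATORS

I. Asymptotic Unbiasedness: An estimator $\hat b$ is an asymptotically unbiased estimator of the true population parameter $b$, if the asymptotic mean of $\hat b$ is equal to $b$. That is

$$\lim_{n \to \infty} E[\hat b] = b$$

II. Consistency: An estimator $\hat b$ is a consistent estimator of the true population parameter $b$, if it satisfies two conditions:

(a) $\hat b$ must be asymptotically unbiased. That is

$$\lim_{n \to \infty} E[\hat b] = b$$

(b) The variance of $\hat b$ must approach zero as $n$ tends to infinity. That is

$$\lim_{n \to \infty} \mathrm{Var}[\hat b] = 0$$

III. Asymptotic Efficiency: An estimator $\hat b$ is an asymptotically efficient estimator of the true population parameter $b$, if

(a) $\hat b$ is consistent.

(b) $\hat b$ has a smaller asymptotic variance as compared with any other consistent estimator.

2.6*: GAUSS MARKOV THEOREM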

STATEMENT:

Least squares theory was put forth by Gauss in 1809 and the minimum variance approach to the estimation of the regression coefficients was proposed by Markov in 1900. Since the determination of the minimum variance linear unbiased estimator involves both concepts, the theorem is known as the Gauss-Markov theorem. It can be stated as follows:

Let $Y_1, Y_2, \ldots, Y_n$ be $n$ independent variables with means $E(Y_i) = \sum_{j} \beta_j X_{ij}$ and common variance $\sigma^2$. The minimum variance linear unbiased estimators of the regression coefficients $\beta_j$ are $\hat\beta_j$ (j = 1, 2, ..., k).

Under the terms and conditions imposed above, the minimum variance linear unbiased estimators of the regression coefficients are identically the same as the least square estimators.

The combination of the above two statements is known as the Gauss-Markov theorem, i.e. the least square estimators $\hat\alpha$ and $\hat\beta$ are best, linear, unbiased estimators (BLUE).

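PROOF:

We use the model

$$Y_i = \alpha + \beta X_i + U_i$$

and write $x_i = X_i - \bar X$ and $y_i = Y_i - \bar Y$ for deviations from the means.

FOR $\hat\beta$

LINEARITY:

$$\hat\beta = \frac{\sum x_iy_i}{\sum x_i^2} = \frac{\sum x_i(Y_i - \bar Y)}{\sum x_i^2} = \frac{\sum x_iY_i}{\sum x_i^2} - \frac{\bar Y\sum x_i}{\sum x_i^2} = \frac{\sum x_iY_i}{\sum x_i^2} = \sum w_iY_i$$

Where $w_i = \dfrac{x_i}{\sum x_i^2}$ are nonstochastic weights and $\sum x_i = 0$. This is a linear function of the sample observations $Y_i$.

UNBIASEDNESS:

$$\hat\beta = \sum w_iY_i = \sum w_i(\alpha + \beta X_i + U_i) = \alpha\sum w_i + \beta\sum w_iX_i + \sum w_iU_i \qquad \text{eq. (1)}$$

Properties of $w_i$:

1. $\sum w_i = 0$

2. $\sum w_i^2 = \dfrac{1}{\sum x_i^2}$

3. $\sum w_iX_i = \sum w_ix_i = 1$

Put these results in eq. (1).

$$\hat\beta = \beta + \sum w_iU_i \qquad \text{eq. (2)}$$

$$E(\hat\beta) = \beta + \sum w_iE(U_i) = \beta$$

Which shows that $\hat\beta$ is an unbiased estimator of $\beta$.

Variance of $\hat\beta$:

By definition

$$\mathrm{Var}(\hat\beta) = E[\hat\beta - E(\hat\beta)]^2 = E[\hat\beta - \beta]^2$$

$$\mathrm{Var}(\hat\beta) = E\left[\sum w_iU_i\right]^2 \quad \text{from eq. (2)}$$

$$\mathrm{Var}(\hat\beta) = E\left[\sum w_i^2U_i^2 + 2\sum_{i<j} w_iw_jU_iU_j\right]$$

Since $E(U_i^2) = \sigma^2$ and $E(U_iU_j) = 0$ for $i \neq j$,

$$\mathrm{Var}(\hat\beta) = \sigma^2\sum w_i^2 = \frac{\sigma^2}{\sum x_i^2}$$

And

FOR $\hat\alpha$

LINEARITY:

$$\hat\alpha = \bar Y - \hat\beta\bar X = \frac{\sum Y_i}{n} - \bar X\sum w_iY_i = \sum\left[\frac{1}{n} - \bar Xw_i\right]Y_i$$

Which is a linear function of the sample observations $Y_i$.

UNBIASEDNESS:

$$\hat\alpha = \sum\left[\frac{1}{n} - \bar Xw_i\right](\alpha + \beta X_i + U_i)$$

$$\hat\alpha = \alpha + \beta\bar X - \alpha\bar X\sum w_i - \beta\bar X\sum w_iX_i + \sum\left[\frac{1}{n} - \bar Xw_i\right]U_i$$

$$\hat\alpha = \alpha + \sum\left[\frac{1}{n} - \bar Xw_i\right]U_i \qquad \text{eq. (3)}$$

Taking expectation on both sides

$$E(\hat\alpha) = \alpha + \sum\left[\frac{1}{n} - \bar Xw_i\right]E(U_i) = \alpha$$

Variance of $\hat\alpha$:

By definition

$$\mathrm{Var}(\hat\alpha) = E[\hat\alpha - E(\hat\alpha)]^2 = E[\hat\alpha - \alpha]^2$$

$$\mathrm{Var}(\hat\alpha) = E\left[\sum\left(\frac{1}{n} - \bar Xw_i\right)U_i\right]^2 \quad \text{from eq. (3)}$$

Since $E(U_i^2) = \sigma^2$ and $E(U_iU_j) = 0$ for $i \neq j$,

$$\mathrm{Var}(\hat\alpha) = \sigma^2\sum\left(\frac{1}{n} - \bar Xw_i\right)^2 = \sigma^2\left[\frac{1}{n} - \frac{2\bar X}{n}\sum w_i + \bar X^2\sum w_i^2\right]$$

$$\mathrm{Var}(\hat\alpha) = \sigma^2\left[\frac{1}{n} + \frac{\bar X^2}{\sum x_i^2}\right] = \frac{\sigma^2\sum X_i^2}{n\sum x_i^2}$$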

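2.6** MINIMUM VARIANCE PROPERTY OF LEAST SQUARE ESTIMATORS

Suppose $\beta^* = \sum c_iY_i$ is any other linear unbiased estimator of $\beta$, where $c_i = w_i + d_i$ and $w_i = x_i / \sum x_i^2$.

$$\beta^* = \sum c_i(\alpha + \beta X_i + U_i) = \alpha\sum c_i + \beta\sum c_iX_i + \sum c_iU_i$$

Taking expectation on both sides

$$E(\beta^*) = \alpha\sum c_i + \beta\sum c_iX_i$$

$$E(\beta^*) = \beta \quad \text{only if } \sum c_i = 0 \text{ and } \sum c_iX_i = 1$$

These conditions imply $\sum d_i = 0$ and $\sum d_iX_i = 0$, hence $\sum w_id_i = 0$, and

$$\beta^* - \beta = \sum c_iU_i \qquad \text{eq. (2)}$$

Variance of $\beta^*$

$$\mathrm{Var}(\beta^*) = E[\beta^* - E(\beta^*)]^2 = E[\beta^* - \beta]^2$$

$$= E\left[\sum c_iU_i\right]^2 \quad \text{from eq. (2)}$$

$$= \sigma^2\sum c_i^2 = \sigma^2\left[\sum w_i^2 + \sum d_i^2 + 2\sum w_id_i\right]$$

$$= \frac{\sigma^2}{\sum x_i^2} + \sigma^2\sum d_i^2 = \mathrm{Var}(\hat\beta) + \sigma^2\sum d_i^2 \geq \mathrm{Var}(\hat\beta)$$

Similarly, suppose $\alpha^* = \sum\left(\frac{1}{n} - \bar Xc_i\right)Y_i$ is any other linear unbiased estimator of $\alpha$.

Variance of $\alpha^*$

$$\mathrm{Var}(\alpha^*) = E[\alpha^* - E(\alpha^*)]^2 = E[\alpha^* - \alpha]^2$$

$$= E\left[\sum\left(\frac{1}{n} - \bar Xc_i\right)U_i\right]^2 = \sigma^2\sum\left(\frac{1}{n} - \bar Xc_i\right)^2$$

$$= \sigma^2\left[\frac{1}{n} + \bar X^2\sum w_i^2 + \bar X^2\sum d_i^2\right] = \mathrm{Var}(\hat\alpha) + \sigma^2\bar X^2\sum d_i^2 \geq \mathrm{Var}(\hat\alpha)$$

Hence the least square estimators have the minimum variance among all linear unbiased estimators.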

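2.7: VARIANCE OF DISTURBANCE TERM $U_i$

$$Y_i = \alpha + \beta X_i + U_i$$

For sample

$$\bar Y = \alpha + \beta\bar X + \bar U$$

By subtraction

$$y_i = \beta x_i + (U_i - \bar U) \qquad \text{eq. (1)}$$

$$\hat y_i = \hat\beta x_i, \qquad e_i = y_i - \hat y_i \qquad \text{eq. (2)}$$

Making substitution in $e_i$, using eq. (1) and eq. (2):

$$e_i = (U_i - \bar U) - (\hat\beta - \beta)x_i$$

Applying sum and squares on both sides.

$$\sum e_i^2 = \sum (U_i - \bar U)^2 + (\hat\beta - \beta)^2\sum x_i^2 - 2(\hat\beta - \beta)\sum x_i(U_i - \bar U)$$

Taking expectation on both sides.

$$E\left[\sum e_i^2\right] = E\left[\sum (U_i - \bar U)^2\right] + E\left[(\hat\beta - \beta)^2\right]\sum x_i^2 - 2E\left[(\hat\beta - \beta)\sum x_i(U_i - \bar U)\right] \qquad \text{eq. (3)}$$

Now,

$$E\left[\sum (U_i - \bar U)^2\right] = E\left[\sum U_i^2 - n\bar U^2\right] = n\sigma^2 - n\frac{\sigma^2}{n} = (n - 1)\sigma^2$$

$$E\left[(\hat\beta - \beta)^2\right]\sum x_i^2 = \frac{\sigma^2}{\sum x_i^2}\sum x_i^2 = \sigma^2$$

Since $\hat\beta - \beta = \dfrac{\sum x_iU_i}{\sum x_i^2}$ and $\sum x_i(U_i - \bar U) = \sum x_iU_i$,

$$E\left[(\hat\beta - \beta)\sum x_i(U_i - \bar U)\right] = \frac{E\left[\sum x_iU_i\right]^2}{\sum x_i^2} = \frac{\sigma^2\sum x_i^2}{\sum x_i^2} = \sigma^2$$

Put these results in eq. (3).

$$E\left[\sum e_i^2\right] = (n - 1)\sigma^2 + \sigma^2 - 2\sigma^2 = (n - 2)\sigma^2$$

$$E\left[\frac{\sum e_i^2}{n - 2}\right] = \sigma^2$$

This shows that $\hat\sigma^2 = \dfrac{\sum e_i^2}{n - 2}$ is an unbiased estimator of $\sigma^2$.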

2.8: DISTRIBUTION OF DEPENDENT VARIABLE Y

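Let

$$Y_i = \alpha + \beta X_i + U_i$$

Mean of $Y_i$:

$$E[Y_i] = E[\alpha + \beta X_i + U_i] = \alpha + \beta X_i + E[U_i] = \alpha + \beta X_i$$

Variance of $Y_i$:

$$\mathrm{Var}(Y_i) = E[Y_i - E(Y_i)]^2 = E[\alpha + \beta X_i + U_i - \alpha - \beta X_i]^2 = E[U_i^2] = \sigma^2$$

The shape of the distribution of $Y_i$ is determined by the distribution of $U_i$. By the assumptions of OLS, we assume that the distribution of $U_i$ is normal, and we also know that any linear function of a normal variable is also normal. Since $Y_i$ is a linear function of $U_i$,

$$Y_i \sim N(\alpha + \beta X_i,\ \sigma^2)$$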

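2.9: MAXIMUM LIKELIHOOD ESTIMATORS OF $\alpha$, $\beta$ AND $\sigma^2$

Since $Y_i \sim N(\alpha + \beta X_i, \sigma^2)$, the likelihood function is

$$L(\alpha, \beta, \sigma^2) = \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(Y_i - \alpha - \beta X_i)^2}{2\sigma^2}\right)$$

$$\ln L = -\frac{n}{2}\ln(2\pi) - \frac{n}{2}\ln\sigma^2 - \frac{1}{2\sigma^2}\sum (Y_i - \alpha - \beta X_i)^2 \qquad \text{eq. (A)}$$

Differentiate eq. (A) w.r.t. $\alpha$ and equating to zero.

$$\frac{\partial \ln L}{\partial \alpha} = \frac{1}{\sigma^2}\sum (Y_i - \alpha - \beta X_i) = 0$$

$$\sum Y_i = n\tilde\alpha + \tilde\beta\sum X_i$$

Differentiate eq. (A) w.r.t. $\beta$ and equating to zero.

$$\frac{\partial \ln L}{\partial \beta} = \frac{1}{\sigma^2}\sum (Y_i - \alpha - \beta X_i)X_i = 0$$

$$\sum X_iY_i = \tilde\alpha\sum X_i + \tilde\beta\sum X_i^2$$

These are the same as the normal equations of least squares, so the maximum likelihood estimators of $\alpha$ and $\beta$ are the same as the OLS estimators.

Differentiate eq. (A) w.r.t. $\sigma^2$ and equating to zero.

$$\frac{\partial \ln L}{\partial \sigma^2} = -\frac{n}{2\sigma^2} + \frac{1}{2\sigma^4}\sum (Y_i - \alpha - \beta X_i)^2 = 0$$

$$\tilde\sigma^2 = \frac{\sum (Y_i - \tilde\alpha - \tilde\beta X_i)^2}{n} = \frac{\sum e_i^2}{n}$$

Which is a biased estimator of $\sigma^2$. Taking expectations on both sides.

$$E(\tilde\sigma^2) = \frac{1}{n}E\left(\sum e_i^2\right) = \frac{(n - 2)\sigma^2}{n} \neq \sigma^2$$

Hence the M.L.E. of $\sigma^2$ is a biased estimator. But the M.L.E. of $\alpha$ and $\beta$ coincide with the least square estimators and are unbiased.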

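2.10: TEST OF GOODNESS OF FIT ($r^2$)

The ratio of explained variation to the total variation is called the coefficient of determination. The $r^2$ varies between 0 and 1.

Total Variation = Unexplained Variation + Explained Variation

$$\sum (Y - \bar Y)^2 = \sum (Y - \hat Y)^2 + \sum (\hat Y - \bar Y)^2$$

In deviation form:

$$\sum y^2 = \sum e^2 + \sum \hat y^2$$

Where $y = Y - \bar Y$, $\hat y = \hat Y - \bar Y$ and $e = Y - \hat Y$.

$$r^2 = \frac{\sum (\hat Y - \bar Y)^2}{\sum (Y - \bar Y)^2} = 1 - \frac{\sum (Y - \hat Y)^2}{\sum (Y - \bar Y)^2}$$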

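2.11: MEAN PREDICTION

The mean prediction at a given value $X_0$ is $\hat Y_0 = \hat\alpha + \hat\beta X_0$, which estimates $E(Y \mid X_0) = \alpha + \beta X_0$.

Where

$$E(\hat Y_0) = E(\hat\alpha) + X_0E(\hat\beta) = \alpha + \beta X_0$$

$$\mathrm{Var}(\hat Y_0) = \mathrm{Var}(\hat\alpha) + X_0^2\,\mathrm{Var}(\hat\beta) + 2X_0\,\mathrm{Cov}(\hat\alpha, \hat\beta)$$

$$\mathrm{Var}(\hat Y_0) = \sigma^2\left[\frac{1}{n} + \frac{\bar X^2}{\sum x^2}\right] + \frac{X_0^2\sigma^2}{\sum x^2} - \frac{2X_0\bar X\sigma^2}{\sum x^2}$$

$$\mathrm{Var}(\hat Y_0) = \sigma^2\left[\frac{1}{n} + \frac{(X_0 - \bar X)^2}{\sum x^2}\right]$$

2.12: INDIVIDUAL PREDICTION

The individual value at $X_0$ is $Y_0 = \alpha + \beta X_0 + U_0$, and it is predicted by $\hat Y_0 = \hat\alpha + \hat\beta X_0$. The prediction error is

$$Y_0 - \hat Y_0 = (\alpha - \hat\alpha) + (\beta - \hat\beta)X_0 + U_0, \qquad E(Y_0 - \hat Y_0) = 0$$

By definition variance of prediction error is:

$$\mathrm{Var}(Y_0 - \hat Y_0) = E[(Y_0 - \hat Y_0)^2]$$

$$\mathrm{Var}(Y_0 - \hat Y_0) = E[(\alpha - \hat\alpha) + (\beta - \hat\beta)X_0]^2 + E[U_0^2]$$

$$\mathrm{Var}(Y_0 - \hat Y_0) = \mathrm{Var}(\hat Y_0) + \sigma^2$$

$$\mathrm{Var}(Y_0 - \hat Y_0) = \sigma^2\left[1 + \frac{1}{n} + \frac{(X_0 - \bar X)^2}{\sum x^2}\right]$$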

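2.13: SAMPLING DISTRIBUTIONS AND CONFIDENCE INTERVAL

Use the z-test if $\sigma^2$ is known or $n$ is large, otherwise we use the t-test.

$$Z = \frac{\hat\beta - \beta}{\sqrt{\sigma^2 / \sum x^2}} \qquad \text{and} \qquad t = \frac{\hat\beta - \beta}{\sqrt{\hat\sigma^2 / \sum x^2}} \ \text{with } (n - 2) \text{ d.f.}$$

$$SE(\hat\alpha) = \sqrt{\hat\sigma^2\left(\frac{1}{n} + \frac{\bar X^2}{\sum x^2}\right)} \qquad \text{And} \qquad SE(\hat\beta) = \sqrt{\frac{\hat\sigma^2}{\sum x^2}}$$

In the intervals below, the subscript $\alpha/2$ on $t$ and $\chi^2$ refers to the level of significance, not to the intercept.

Confidence Interval for $\alpha$:

$$\hat\alpha \pm t_{\alpha/2}(n - 2)\sqrt{\hat\sigma^2\left(\frac{1}{n} + \frac{\bar X^2}{\sum x^2}\right)}$$

Confidence Interval for $\beta$:

$$\hat\beta \pm t_{\alpha/2}(n - 2)\sqrt{\frac{\hat\sigma^2}{\sum x^2}}$$

Confidence Interval for Mean Prediction:

$$\hat Y_0 \pm t_{\alpha/2}(n - 2)\sqrt{\hat\sigma^2\left[\frac{1}{n} + \frac{(X_0 - \bar X)^2}{\sum x^2}\right]}$$

Confidence Interval for Individual Prediction:

$$\hat Y_0 \pm t_{\alpha/2}(n - 2)\sqrt{\hat\sigma^2\left[1 + \frac{1}{n} + \frac{(X_0 - \bar X)^2}{\sum x^2}\right]}$$

Confidence Interval for $\sigma^2$:

$$\frac{(n - 2)\hat\sigma^2}{\chi^2_{\alpha/2}(n - 2)} \leq \sigma^2 \leq \frac{(n - 2)\hat\sigma^2}{\chi^2_{1 - \alpha/2}(n - 2)}$$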

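Example: Given data

| X | Y |
|---|---|
| 30 | 50 |
| 60 | 80 |
| 90 | 120 |
| 120 | 130 |
| 150 | 180 |

i) Estimate the model $Y = \alpha + \beta X + U$.

ii) Estimate Y when X = 60.

iii) Test the significance of $\alpha$ and $\beta$.

iv) 95% confidence interval of $\alpha$ and 90% confidence interval of $\beta$.

v) Estimate the covariance of $\hat\alpha$ and $\hat\beta$.

vi) Estimate mean and individual prediction for a given value $X_0$.

vii) $r^2$ and $r$.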

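Solution:

| X | Y | XY | $X^2$ | $Y^2$ |
|---|---|---|---|---|
| 30 | 50 | 1500 | 900 | 2500 |
| 60 | 80 | 4800 | 3600 | 6400 |
| 90 | 120 | 10800 | 8100 | 14400 |
| 120 | 130 | 15600 | 14400 | 16900 |
| 150 | 180 | 27000 | 22500 | 32400 |
| 450 | 560 | 59700 | 49500 | 72600 |

i) $Y = \alpha + \beta X + U$

$$\bar X = \frac{450}{5} = 90, \qquad \bar Y = \frac{560}{5} = 112$$

$$\hat\beta = \frac{\sum XY - n\bar X\bar Y}{\sum X^2 - n\bar X^2} = \frac{59700 - 5(90)(112)}{49500 - 5(90)^2} = \frac{9300}{9000} = 1.03$$

$$\hat\alpha = \bar Y - \hat\beta\bar X = 112 - 1.03(90) = 19.3$$

$$\hat Y = 19.3 + 1.03X$$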

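e) Critical region:

$$|t| \geq t_{\alpha/2}(n - 2)$$

f) Conclusion:

Since our calculated value is less than the table value, we accept $H_0$ and may conclude that the null hypothesis is better than the alternative hypothesis.

Testing for $\beta$

a) $H_0: \beta = 0$ against $H_1: \beta \neq 0$

b) Choose the level of significance $\alpha$.

c) Test statistic

$$t = \frac{\hat\beta}{\sqrt{\hat\sigma^2 / \sum x^2}} \quad \text{with } n - 2 \text{ d.f.}$$

d) Computation: substitute $\hat\beta$ and its standard error into the test statistic.

e) Critical region:

$$|t| \geq t_{\alpha/2}(n - 2)$$

f) Conclusion:

Since our calculated value is greater than the table value, we reject $H_0$ and may conclude that the alternative hypothesis is better.

iv) 95% confidence interval for $\alpha$:

$$\hat\alpha \pm t_{\alpha/2}(n - 2)\sqrt{\hat\sigma^2\left(\frac{1}{n} + \frac{\bar X^2}{\sum x^2}\right)}, \qquad \hat\alpha = 19.3$$

90% confidence interval for $\beta$:

$$\hat\beta \pm t_{\alpha/2}(n - 2)\sqrt{\frac{\hat\sigma^2}{\sum x^2}}, \qquad \text{with lower limit } 0.7947$$

v) Covariance:

$$\mathrm{Cov}(\hat\alpha, \hat\beta) = -\frac{\bar X\hat\sigma^2}{\sum x^2}$$

vi) Mean prediction:

When $X = X_0$,

$$\mathrm{Var}(\hat Y_0) = \hat\sigma^2\left[\frac{1}{n} + \frac{(X_0 - \bar X)^2}{\sum x^2}\right]$$

Individual prediction:

When $X = X_0$,

$$\mathrm{Var}(Y_0 - \hat Y_0) = \hat\sigma^2\left[1 + \frac{1}{n} + \frac{(X_0 - \bar X)^2}{\sum x^2}\right]$$

vii) $r^2$ and $r$:

Total Variation = Unexplained Variation + Explained Variation

$$\sum (Y - \bar Y)^2 = \sum (Y - \hat Y)^2 + \sum (\hat Y - \bar Y)^2$$

In deviation form:

$$\sum y^2 = \sum e^2 + \sum \hat y^2$$

$$\text{Unexplained Variation} = \sum (Y - \hat Y)^2$$

$$r^2 = 1 - \frac{\sum (Y - \hat Y)^2}{\sum (Y - \bar Y)^2}, \qquad r = \sqrt{r^2}$$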
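The quantities in the worked example above can be recomputed with a short script. This is a sketch, not the author's own working: it keeps the slope unrounded, so the intercept comes out as 19.0 rather than the 19.3 obtained in the text with the slope rounded to 1.03. The function names and the choice $X_0 = 60$ are assumptions made for illustration.

```python
import math

X = [30, 60, 90, 120, 150]
Y = [50, 80, 120, 130, 180]
n = len(X)
x_bar, y_bar = sum(X) / n, sum(Y) / n

# Sums of squares and cross-products in deviation form.
sxx = sum((x - x_bar) ** 2 for x in X)
sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(X, Y))
syy = sum((y - y_bar) ** 2 for y in Y)

b = sxy / sxx                 # slope estimate (unrounded)
a = y_bar - b * x_bar         # intercept estimate
rss = syy - b * sxy           # unexplained variation, sum of e^2
s2 = rss / (n - 2)            # unbiased estimate of sigma^2
se_b = math.sqrt(s2 / sxx)
se_a = math.sqrt(s2 * (1 / n + x_bar ** 2 / sxx))
t_a, t_b = a / se_a, b / se_b     # t statistics for H0: alpha = 0, beta = 0
r2 = 1 - rss / syy


def var_mean_prediction(x0):
    """Variance of the mean prediction at x0."""
    return s2 * (1 / n + (x0 - x_bar) ** 2 / sxx)


def var_individual_prediction(x0):
    """Variance of the prediction error for an individual value at x0."""
    return s2 + var_mean_prediction(x0)


x0 = 60   # illustrative value (assumption)
print(a, b, s2, se_a, se_b, t_a, t_b, r2)
print(var_mean_prediction(x0), var_individual_prediction(x0))
```

With three degrees of freedom the t statistic for the slope is large and the one for the intercept is small, which agrees with the two conclusions reached in the example.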

2.14: Exercise

1. Discuss the nature of regression analysis.

2. What are the different types of data for economic analysis?

3. State and prove Gauss-Markov theorem.

4. Prove that

5. Prove that E( ̂ ) =

6. Find the ML estimates of least square regression line.

7. Given the data:

| X | 2 | 3 | 1 | 5 | 9 |
|---|---|---|---|---|---|
| Y | 4 | 7 | 3 | 9 | 17 |

i. Estimate the model $Y = \alpha + \beta X + U$ by OLS.

ii. Find the variance of

iii.

8. The following marks have been obtained by a class of students in economics:

| X | 45 | 55 | 56 | 58 | 60 | 65 | 68 | 70 | 75 | 80 | 85 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Y | 56 | 50 | 48 | 60 | 62 | 64 | 65 | 70 | 74 | 82 | 90 |

1. Find the equation of the lines of regression.

2. Test the significance of

3. 98% confidence interval of

9. A sample of 20 observations corresponding to the model gave the following data:

(a) Estimate and calculate estimates of variance of your estimates.

(b) Find 95% confidence interval for . Explain the mean value of Y corresponding to a value of X fixed at X = 10.

Chapter: 3

MULTIPLE LINEAR REGRESSION AND

CORRELATION

3.1: Multiple Linear Regression

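It investigates the dependence of one variable (the dependent variable) on more than one independent variable, e.g. production of wheat depends upon fertilizer, land condition, temperature, water etc.

$$Y = \beta_0 + \beta_1X_1 + \beta_2X_2 + U$$

Normal equations are:

$$\sum Y = n\hat\beta_0 + \hat\beta_1\sum X_1 + \hat\beta_2\sum X_2$$

$$\sum X_1Y = \hat\beta_0\sum X_1 + \hat\beta_1\sum X_1^2 + \hat\beta_2\sum X_1X_2$$

$$\sum X_2Y = \hat\beta_0\sum X_2 + \hat\beta_1\sum X_1X_2 + \hat\beta_2\sum X_2^2$$

In deviation form, with $x_1 = X_1 - \bar X_1$, $x_2 = X_2 - \bar X_2$ and $y = Y - \bar Y$:

$$\hat\beta_0 = \bar Y - \hat\beta_1\bar X_1 - \hat\beta_2\bar X_2$$

$$\hat\beta_1 = \frac{\sum x_1y\sum x_2^2 - \sum x_2y\sum x_1x_2}{\sum x_1^2\sum x_2^2 - \left(\sum x_1x_2\right)^2}, \qquad \mathrm{Var}(\hat\beta_1) = \frac{\sigma^2\sum x_2^2}{\sum x_1^2\sum x_2^2 - \left(\sum x_1x_2\right)^2}$$

And

$$\hat\beta_2 = \frac{\sum x_2y\sum x_1^2 - \sum x_1y\sum x_1x_2}{\sum x_1^2\sum x_2^2 - \left(\sum x_1x_2\right)^2}, \qquad \mathrm{Var}(\hat\beta_2) = \frac{\sigma^2\sum x_1^2}{\sum x_1^2\sum x_2^2 - \left(\sum x_1x_2\right)^2}$$

or, for the standard errors, $SE(\hat\beta_j) = \sqrt{\mathrm{Var}(\hat\beta_j)}$.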

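3.2: Coefficient of Multiple Determination

The coefficient of multiple determination is the proportion of the total variation in the dependent variable Y that is explained by the independent variables $X_1$ and $X_2$.

$$R^2 = \frac{\sum (\hat Y - \bar Y)^2}{\sum (Y - \bar Y)^2} = 1 - \frac{\sum (Y - \hat Y)^2}{\sum (Y - \bar Y)^2}$$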

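3.3: Adjusted $R^2$

An important property of $R^2$ is that it is non-decreasing in the number of explanatory variables. That is, on including an additional explanatory variable the value of $R^2$ increases or stays the same and does not decrease. To adjust for this we use the adjusted $\bar R^2$.

$$\bar R^2 = 1 - \frac{\sum e^2 / (n - k - 1)}{\sum y^2 / (n - 1)}$$

$$\bar R^2 = 1 - (1 - R^2)\frac{n - 1}{n - k - 1}$$

where $k$ is the number of explanatory variables.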

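3.4: COBB-DOUGLAS PRODUCTION FUNCTION

The Cobb-Douglas production function, in its stochastic form, may be expressed as

$$Y = \beta_0X_1^{\beta_1}X_2^{\beta_2}e^{U}$$

Where Y = output, $X_1$ = labor input, $X_2$ = capital input, U = stochastic disturbance term, e = base of natural logarithm.

The relationship between output and the two inputs is nonlinear. Using the log-transformation we obtain a regression model that is linear in the parameters.

$$\ln Y = \ln\beta_0 + \beta_1\ln X_1 + \beta_2\ln X_2 + U$$

$$Y^* = \beta_0^* + \beta_1\ln X_1 + \beta_2\ln X_2 + U$$

Where $Y^* = \ln Y$ and $\beta_0^* = \ln\beta_0$.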

3.5: Partial Correlation

If there are three variables Y, $X_1$ and $X_2$, then the correlation between Y and $X_1$ with $X_2$ held constant is called partial correlation. The simple partial correlation coefficient is the measure of strength of the linear relationship between Y and $X_1$ after removing the linear influence of $X_2$ from Y and $X_1$, and is denoted by $r_{Y1.2}$.

$$r_{Y1.2} = \frac{r_{Y1} - r_{Y2}\,r_{12}}{\sqrt{(1 - r_{Y2}^2)}\sqrt{(1 - r_{12}^2)}}$$
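A small sketch of the partial correlation formula follows. The helper names `corr` and `partial_corr` are hypothetical, and the three series are the four observations used in this chapter's worked example, taken here purely for illustration.

```python
import math


def corr(u, v):
    """Simple (zero-order) correlation coefficient between two series."""
    n = len(u)
    u_bar, v_bar = sum(u) / n, sum(v) / n
    suv = sum((a - u_bar) * (b - v_bar) for a, b in zip(u, v))
    suu = sum((a - u_bar) ** 2 for a in u)
    svv = sum((b - v_bar) ** 2 for b in v)
    return suv / math.sqrt(suu * svv)


def partial_corr(r_y1, r_y2, r_12):
    """Correlation of Y and X1 after removing the linear influence of X2."""
    return (r_y1 - r_y2 * r_12) / (math.sqrt(1 - r_y2 ** 2) * math.sqrt(1 - r_12 ** 2))


Y = [5, 7, 8, 10]
X1 = [1, 3, 9, 8]
X2 = [2, 4, 3, 10]

r_y1, r_y2, r_12 = corr(Y, X1), corr(Y, X2), corr(X1, X2)
r_y1_2 = partial_corr(r_y1, r_y2, r_12)
print(round(r_y1, 4), round(r_y2, 4), round(r_12, 4), round(r_y1_2, 4))
```

When $X_2$ is uncorrelated with both Y and $X_1$, the function returns the simple correlation unchanged, which is a convenient sanity check on the formula.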

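3.6: TESTING THE OVERALL SIGNIFICANCE OF A MULTIPLE REGRESSION (The F-test)

Hypothesis

$$H_0: \beta_1 = \beta_2 = \cdots = \beta_k = 0 \qquad H_1: \text{at least one } \beta_j \neq 0$$

Choose the level of significance $\alpha$.

Test statistic to be used:

$$F = \frac{\text{Explained SS} / k}{\text{Residual SS} / (n - k - 1)} \quad \text{with } (k,\ n - k - 1) \text{ d.f.}$$

Computations:

$$\text{Total SS} = \sum (Y - \bar Y)^2$$

$$\text{Residual SS} = \sum (Y - \hat Y)^2$$

Explained SS = Total SS - Residual SS

| S.O.V. | d.f. | SS | MS | F |
|---|---|---|---|---|
| Regression | k | Explained SS | Explained SS / k | Regression MS / Residual MS |
| Residual | n - k - 1 | Residual SS | Residual SS / (n - k - 1) | |
| Total | n - 1 | Total SS | | |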

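Example: Given the following data:

| Y | $X_1$ | $X_2$ |
|---|---|---|
| 5 | 1 | 2 |
| 7 | 3 | 4 |
| 8 | 9 | 3 |
| 10 | 8 | 10 |

i. Estimate $\beta_0$, $\beta_1$ and $\beta_2$ and interpret them.

ii. Find $R^2$ and $\bar R^2$.

iii. Test the goodness of fit.

Solution:

i. Estimate

| Y | $X_1$ | $X_2$ | $X_1Y$ | $X_2Y$ | $X_1X_2$ | $X_1^2$ | $X_2^2$ | $Y^2$ |
|---|---|---|---|---|---|---|---|---|
| 5 | 1 | 2 | 5 | 10 | 2 | 1 | 4 | 25 |
| 7 | 3 | 4 | 21 | 28 | 12 | 9 | 16 | 49 |
| 8 | 9 | 3 | 72 | 24 | 27 | 81 | 9 | 64 |
| 10 | 8 | 10 | 80 | 100 | 80 | 64 | 100 | 100 |
| 30 | 21 | 19 | 178 | 162 | 121 | 155 | 129 | 238 |

Normal equations are:

$$30 = 4\hat\beta_0 + 21\hat\beta_1 + 19\hat\beta_2$$

$$178 = 21\hat\beta_0 + 155\hat\beta_1 + 121\hat\beta_2$$

$$162 = 19\hat\beta_0 + 121\hat\beta_1 + 129\hat\beta_2$$

Solving these equations, we get

$$\hat\beta_0 = 4.326, \qquad \hat\beta_1 = 0.296, \qquad \hat\beta_2 = 0.341$$

$$\hat Y = 4.326 + 0.296X_1 + 0.341X_2$$

ii. Find $R^2$ and $\bar R^2$

$$R^2 = \frac{\sum (\hat Y - \bar Y)^2}{\sum (Y - \bar Y)^2} = 1 - \frac{\sum (Y - \hat Y)^2}{\sum (Y - \bar Y)^2}$$

$$\bar R^2 = 1 - (1 - R^2)\frac{n - 1}{n - k - 1}$$

iii. Testing

a) $H_0: \beta_1 = \beta_2 = 0$ against $H_1$: at least one of them is not zero.

b) Choose the level of significance $\alpha$.

c) Test statistic

$$F = \frac{\text{Explained SS} / k}{\text{Residual SS} / (n - k - 1)} \quad \text{with } (k,\ n - k - 1) \text{ d.f.}$$

d) Computation: substitute the explained and residual sums of squares into the test statistic.

e) Critical region

$$F \geq F_{\alpha}(k,\ n - k - 1)$$

f) Since our calculated value is less than the table value, we accept the null hypothesis.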
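The example above can be checked numerically with the deviation-form formulas for two regressors. The sketch below is illustrative only; the helper name `dev_cross` and the variable names are hypothetical, and $k = 2$ is the number of explanatory variables.

```python
Y = [5, 7, 8, 10]
X1 = [1, 3, 9, 8]
X2 = [2, 4, 3, 10]
n, k = len(Y), 2


def dev_cross(u, v):
    """Sum of cross-products of deviations from the means."""
    u_bar, v_bar = sum(u) / len(u), sum(v) / len(v)
    return sum((a - u_bar) * (b - v_bar) for a, b in zip(u, v))


s11, s22, s12 = dev_cross(X1, X1), dev_cross(X2, X2), dev_cross(X1, X2)
s1y, s2y, syy = dev_cross(X1, Y), dev_cross(X2, Y), dev_cross(Y, Y)

den = s11 * s22 - s12 ** 2
b1 = (s1y * s22 - s2y * s12) / den
b2 = (s2y * s11 - s1y * s12) / den
b0 = sum(Y) / n - b1 * sum(X1) / n - b2 * sum(X2) / n

ess = b1 * s1y + b2 * s2y          # explained sum of squares
rss = syy - ess                    # residual sum of squares
r2 = ess / syy
adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)
f_stat = (ess / k) / (rss / (n - k - 1))
print(round(b0, 3), round(b1, 3), round(b2, 3))
print(round(r2, 4), round(adj_r2, 4), round(f_stat, 2))
```

With only one residual degree of freedom the tabulated 5% value of F(2, 1) is 199.5, far above the computed statistic, which is consistent with the conclusion of the example that the null hypothesis is accepted.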

3.8: Exercise

1. Differentiate between simple and multiple regression.

2. Write a note on $R^2$ and $\bar R^2$.

3. Discuss the Cobb-Douglas production function.

4. How is the overall significance of a regression tested?

5. Consider the following data:

| Y | 40 | 30 | 20 | 10 | 60 | 50 | 70 | 80 | 90 |
|---|---|---|---|---|---|---|---|---|---|
| $X_1$ | 50 | 40 | 30 | 80 | 70 | 20 | 60 | 50 | 40 |
| $X_2$ | 20 | 10 | 30 | 40 | 80 | 30 | 50 | 10 | 60 |

i. Estimate the regression coefficients and interpret them.

ii. Find $R^2$ and $\bar R^2$.

iii. Test the goodness of fit.

iv. Find variance of

6. Use the following data:

| Y | $X_1$ | $X_2$ |
|---|---|---|
| 5.5 | 190 | 49 |
| 6.5 | 170 | 58 |
| 8.0 | 210 | 55 |
| 7.5 | 170 | 58 |
| 7.0 | 190 | 55 |
| 5.0 | 180 | 49 |
| 6.0 | 200 | 46 |
| 6.5 | 210 | 46 |

a. Estimate the model by OLS.

b. Test overall significance of regression model.

c. Find adjusted coefficient of multiple correlation.

d. Find

Chapter: 4

GENERAL LINEAR REGRESSION (GLR)

4.1: INTRODUCTION

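The general linear regression is an extension of simple linear regression and it involves more than one independent variable.

Suppose a linear relationship exists between a variable Y and k explanatory variables $X_1, X_2, \ldots, X_k$, then the regression model is:

$$Y_i = \beta_0 + \beta_1X_{1i} + \beta_2X_{2i} + \cdots + \beta_kX_{ki} + U_i, \qquad i = 1, 2, \ldots, n$$

It may be written in matrix notation as

$$\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{bmatrix} = \begin{bmatrix} 1 & X_{11} & \cdots & X_{k1} \\ 1 & X_{12} & \cdots & X_{k2} \\ \vdots & \vdots & & \vdots \\ 1 & X_{1n} & \cdots & X_{kn} \end{bmatrix} \begin{bmatrix} \beta_0 \\ \beta_1 \\ \vdots \\ \beta_k \end{bmatrix} + \begin{bmatrix} U_1 \\ U_2 \\ \vdots \\ U_n \end{bmatrix}$$

$$Y = X\beta + U$$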

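Assumptions of GLR:

1. Mean

$$E[U] = 0$$

$$Y = X\beta + U$$

Taking expectation on both sides

$$E[Y] = X\beta + E[U] = X\beta$$

2. Variance

$$\mathrm{Var}(U) = E[UU'] = \begin{bmatrix} E(U_1^2) & E(U_1U_2) & \cdots & E(U_1U_n) \\ E(U_2U_1) & E(U_2^2) & \cdots & E(U_2U_n) \\ \vdots & \vdots & & \vdots \\ E(U_nU_1) & E(U_nU_2) & \cdots & E(U_n^2) \end{bmatrix} = \sigma^2I_n$$

The residual vector is $e = Y - X\hat\beta$ and the residual sum of squares is

$$e'e = (Y - X\hat\beta)'(Y - X\hat\beta) = Y'Y - \hat\beta'X'Y - Y'X\hat\beta + \hat\beta'X'X\hat\beta$$

Since $\hat\beta'X'Y$ is scalar, therefore it is equal to its transpose, i.e. $\hat\beta'X'Y = Y'X\hat\beta$, so

$$e'e = Y'Y - 2\hat\beta'X'Y + \hat\beta'X'X\hat\beta$$

Minimize with respect to $\hat\beta$ and equating to zero.

$$\frac{\partial (e'e)}{\partial \hat\beta} = -2X'Y + 2X'X\hat\beta = 0$$

$$X'X\hat\beta = X'Y$$

$$\hat\beta = (X'X)^{-1}X'Y$$

4.2: PROPERTIES OF GLR

1. Linearity: $\hat\beta = (X'X)^{-1}X'Y$ is a linear function of Y, since $(X'X)^{-1}X'$ is a matrix of fixed numbers.

2. Unbiasedness:

$$\hat\beta = (X'X)^{-1}X'(X\beta + U) = \beta + (X'X)^{-1}X'U \qquad \text{eq. (1)}$$

$$E(\hat\beta) = \beta + (X'X)^{-1}X'E(U) = \beta$$

3. Minimum Variance: By definition

$$\mathrm{Var}(\hat\beta) = E\left[\{\hat\beta - E(\hat\beta)\}\{\hat\beta - E(\hat\beta)\}'\right]$$

$$\mathrm{Var}(\hat\beta) = E\left[(\hat\beta - \beta)(\hat\beta - \beta)'\right]$$

Using eq. (1) we get

$$\hat\beta - \beta = (X'X)^{-1}X'U$$

$$\mathrm{Var}(\hat\beta) = E\left[\{(X'X)^{-1}X'U\}\{(X'X)^{-1}X'U\}'\right]$$

$$\mathrm{Var}(\hat\beta) = E\left[(X'X)^{-1}X'UU'X(X'X)^{-1}\right]$$

$$\mathrm{Var}(\hat\beta) = (X'X)^{-1}X'E[UU']X(X'X)^{-1}$$

$$\mathrm{Var}(\hat\beta) = \sigma^2(X'X)^{-1}X'X(X'X)^{-1}$$

$$\mathrm{Var}(\hat\beta) = \sigma^2(X'X)^{-1}$$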

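Example: Given

| Y | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|
| X | 2 | 3 | 4 | 5 | 7 |

i) Calculate SLR estimate using GLR technique.

ii) Also find their variance and covariance.

Solution:

| Y | X | XY | $X^2$ | $Y^2$ |
|---|---|---|---|---|
| 4 | 2 | 8 | 4 | 16 |
| 5 | 3 | 15 | 9 | 25 |
| 6 | 4 | 24 | 16 | 36 |
| 7 | 5 | 35 | 25 | 49 |
| 8 | 7 | 56 | 49 | 64 |
| 30 | 21 | 138 | 103 | 190 |

i)

$$\hat\beta = (X'X)^{-1}X'Y$$

$$X'X = \begin{bmatrix} n & \sum X \\ \sum X & \sum X^2 \end{bmatrix} = \begin{bmatrix} 5 & 21 \\ 21 & 103 \end{bmatrix}, \qquad X'Y = \begin{bmatrix} \sum Y \\ \sum XY \end{bmatrix} = \begin{bmatrix} 30 \\ 138 \end{bmatrix}$$

$$|X'X| = 5(103) - 21(21) = 74, \qquad (X'X)^{-1} = \frac{1}{74}\begin{bmatrix} 103 & -21 \\ -21 & 5 \end{bmatrix}$$

Now

$$\hat\beta = \frac{1}{74}\begin{bmatrix} 103 & -21 \\ -21 & 5 \end{bmatrix}\begin{bmatrix} 30 \\ 138 \end{bmatrix} = \frac{1}{74}\begin{bmatrix} 192 \\ 60 \end{bmatrix} = \begin{bmatrix} 2.5946 \\ 0.8108 \end{bmatrix}$$

ii) Variance-covariance

$$\hat\sigma^2 = \frac{e'e}{n - 2} = \frac{Y'Y - \hat\beta'X'Y}{n - 2}$$

$$\mathrm{Var}(\hat\beta) = \hat\sigma^2(X'X)^{-1} = \begin{bmatrix} \mathrm{Var}(\hat\beta_0) & \mathrm{Cov}(\hat\beta_0, \hat\beta_1) \\ \mathrm{Cov}(\hat\beta_0, \hat\beta_1) & \mathrm{Var}(\hat\beta_1) \end{bmatrix}$$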
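The matrix computations of this example can be traced with plain Python lists. This is a sketch under the assumption that the design matrix has a column of ones followed by the column X; the variable names are hypothetical.

```python
Y = [4, 5, 6, 7, 8]
X = [2, 3, 4, 5, 7]
n = len(Y)

# X'X and X'Y for a design matrix with a column of ones and the column X.
xtx = [[n, sum(X)],
       [sum(X), sum(x * x for x in X)]]
xty = [sum(Y), sum(x * y for x, y in zip(X, Y))]

# Inverse of the 2x2 matrix X'X.
det = xtx[0][0] * xtx[1][1] - xtx[0][1] * xtx[1][0]
inv = [[xtx[1][1] / det, -xtx[0][1] / det],
       [-xtx[1][0] / det, xtx[0][0] / det]]

# beta_hat = (X'X)^{-1} X'Y
beta = [inv[0][0] * xty[0] + inv[0][1] * xty[1],
        inv[1][0] * xty[0] + inv[1][1] * xty[1]]

# e'e = Y'Y - beta_hat' X'Y, then the variance-covariance matrix.
yty = sum(y * y for y in Y)
ete = yty - (beta[0] * xty[0] + beta[1] * xty[1])
s2 = ete / (n - 2)
var_cov = [[s2 * inv[i][j] for j in range(2)] for i in range(2)]
print(beta, s2, var_cov)
```

The diagonal of `var_cov` holds the variances of the intercept and slope estimates and the off-diagonal entry holds their covariance, which is negative here because the mean of X is positive.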

4.3: POLYNOMIAL

Any algebraic expression in which the powers of the variable are non-negative integers, i.e. positive or zero, is known as a polynomial. E.g.

$$Y = a_0 + a_1X + a_2X^2 + \cdots + a_kX^k$$

POLYNOMIAL REGRESSION

It is a special case of multiple linear regression, where the explanatory variables are all powers of a single variable. E.g. the second degree polynomial in one variable

$$Y = \beta_0 + \beta_1X + \beta_2X^2 + U$$

It is called a polynomial regression model in one variable. If $X_1 = X$ and $X_2 = X^2$, then this is a multiple linear regression model in two variables. The kth order polynomial in one variable is:

$$Y = \beta_0 + \beta_1X + \beta_2X^2 + \cdots + \beta_kX^k + U$$

The polynomial regression model is used where the relationship between the response variable and the explanatory variable is curvilinear.

4.4: Exercise

1. Discuss general linear regression.

2. State the assumptions under which OLS estimates are best, linear and unbiased in general linear regression.

3. Prove that:

a) $\hat\beta = (X'X)^{-1}X'Y$

b) $\mathrm{Var}(\hat\beta) = \sigma^2(X'X)^{-1}$

4. Define polynomial regression.

5. Given the data:

| X | 15 | 20 | 30 | 50 | 100 |
|---|---|---|---|---|---|
| Y | 20 | 40 | 60 | 80 | 120 |

Find:

i) $\hat\beta$

ii) $\mathrm{Var}(\hat\beta)$

iii) 90% confidence interval of the regression coefficients.

iv) Test the hypothesis when

v) Estimate Y when X = 200.

vi) $R^2$ and $\bar R^2$.

6. Consider the GLR model with the following data:

Y 3 7 5 9 7 11 8 10 5 3 9 3

Find:

i) $\hat\beta$

ii) $\mathrm{Var}(\hat\beta)$

iii) 90% confidence interval of the regression coefficients.

iv) Test the hypothesis when

v) $R^2$ and $\bar R^2$.

7. Given the following information in deviation form:

a) Find the estimates of the regression coefficients. Also find their variances and covariance.

b) How would you estimate

c) Test the hypothesis that

d) $R^2$ and $\bar R^2$.

8. Given the following data:

2 3 8 10 12 16 19 20 22 25

1 5 6 8 10 13 17 21 23 24

3 4 7 6 11 14 18 20 25 27

Find:

a) Estimate the model in deviation form.

b) $\mathrm{Var}(\hat\beta)$

c) 95% confidence interval of the regression coefficients.

d) Test the hypothesis when

e) $R^2$ and $\bar R^2$.

Chapter: 5

DUMMY VARIABLES

Econometric models are very flexible as they allow for the use of both qualitative and quantitative explanatory variables. For a quantitative response variable, each independent variable can be either a quantitative variable or a qualitative variable, whose levels represent qualities and can only be categorized. Examples of qualitative variables are male and female, black and white etc. For a qualitative variable a numerical scale does not exist. We must assign a set of levels to the qualitative variable to account for the effect that the variable may have on the response; for this we use dummy variables.

A dummy variable is a variable which we construct to describe the development or variation of the variable under consideration.

5.1: NATURE OF DUMMY VARIABLES

In regression analysis the dependent variable is affected not only by quantitative variables but also by qualitative variables. For example income, output, height, temperature etc. can be quantified on some well defined scale. Religion, nationality, strikes, earthquakes, sex etc., on the other hand, are qualitative in nature. All these variables affect the dependent variable. In order to study the qualitative variables we quantify them by constructing artificial variables that take the values 0 and 1; such variables are called dummy variables. Dummy variables are also called indicator, binary or categorical variables.

EXAMPLE:

$$Y_i = \alpha + \beta D_i + U_i$$

Where $Y_i$ = salary of a college professor, $D_i = 1$ if male and $D_i = 0$ if female.

Suppose

| Salary | Sex | D |
|---|---|---|
| 3000 | Female | 0 |
| 4000 | Male | 1 |
| 5000 | Female | 0 |
| 6000 | Male | 1 |

Using the OLS method, with only one dummy variable in the model:

$$\hat Y_i = \hat\alpha + \hat\beta D_i$$

Mean salary of Female College Professors:

$$E(Y_i \mid D_i = 0) = \alpha$$

Mean salary of Male College Professors:

$$E(Y_i \mid D_i = 1) = \alpha + \beta$$
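The interpretation of the two coefficients can be checked on the four salaries in the table. The sketch below fits the one-dummy model by ordinary least squares and compares the result with the group means; variable names are hypothetical.

```python
salary = [3000, 4000, 5000, 6000]
D = [0, 1, 0, 1]      # 1 = male, 0 = female, as coded in the table

n = len(D)
d_bar = sum(D) / n
y_bar = sum(salary) / n

# OLS slope and intercept for the model salary = alpha + beta * D + U.
beta = (sum((d - d_bar) * (y - y_bar) for d, y in zip(D, salary))
        / sum((d - d_bar) ** 2 for d in D))
alpha = y_bar - beta * d_bar

# Group means computed directly from the data.
female = [y for d, y in zip(D, salary) if d == 0]
male = [y for d, y in zip(D, salary) if d == 1]
mean_female = sum(female) / len(female)
mean_male = sum(male) / len(male)

print(alpha, beta)               # intercept and differential intercept
print(mean_female, mean_male)    # equal to alpha and alpha + beta
```

The intercept reproduces the mean salary of the base category (female) and the dummy coefficient is the difference between the two group means.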

5.2: DUMMY VARIABLE TRAP

If a qualitative variable has k categories, we introduce only k - 1 dummy variables; otherwise the situation of perfect multicollinearity arises and the researcher will fall into the dummy variable trap.

We consider a model

$$Y_i = \alpha + \beta_1D_{1i} + \beta_2D_{2i} + U_i$$

Where $D_{1i} = 1$ if male and 0 otherwise, and $D_{2i} = 1$ if female and 0 otherwise.

This model is an example of the dummy variable trap. There is a rule for introducing dummy variables: if a qualitative variable has m categories, introduce only (m - 1) dummy variables. If this rule is not followed we say that there is a dummy variable trap.

EXAMPLES:

Sex has two categories, F and M, that is m = 2. If we introduce m - 1 = 1 dummy variable, we follow the rule of introducing dummy variables. If we introduce 2 dummy variables then we say there is a dummy variable trap.

Suppose there are three categories of color: white, black and red. Then m = 3. If we do not restrict ourselves to m - 1 = 2 dummy variables, then there will be a dummy variable trap.
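The trap can be seen directly in the cross-product matrix of the regressors. In the sketch below, which uses a hypothetical four-observation sample and hypothetical helper names `gram` and `det`, an intercept column together with one dummy per category gives a singular matrix, while dropping one dummy restores a non-zero determinant.

```python
sex = ["F", "M", "F", "M"]                    # illustrative sample
ones = [1] * len(sex)                         # intercept column
d_male = [1 if s == "M" else 0 for s in sex]
d_female = [1 if s == "F" else 0 for s in sex]


def gram(cols):
    """Cross-product matrix X'X for a list of regressor columns."""
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]


def det(m):
    """Determinant by Laplace expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, v in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * v * det(minor)
    return total


det_trap = det(gram([ones, d_male, d_female]))   # m dummies plus intercept
det_ok = det(gram([ones, d_male]))               # m - 1 dummies plus intercept
print(det_trap, det_ok)
```

Because the two dummy columns add up to the intercept column, the first determinant is zero and the normal equations have no unique solution; with a single dummy the determinant is positive and the estimates exist.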

5.3: USES OF DUMMY VARIABLES

a) Dummy variables are used as proxies for qualitative factors.

b) Dummy variables can be used to deseasonalize a time series.

c) Dummy variables are used in spline functions.

d) Interaction effects can be measured by using dummy variables.

e) Dummy variables are used for determining the change of a regression coefficient.

f) Dummy variables are used as categorical regressors.

5.4: Exercise

1. What are dummy variables? Discuss briefly the features of the dummy variable regression model.

2. Discuss the uses of dummy variables.

Chapter: 6

AUTO-REGRESSIVE AND DISTRIBUTED-LAG

MODEL

6.1: DISTRIBUTED-LAG MODEL

In regression analysis involving time-series data, if the regression model includes not only the current but also the lagged (past) values of the explanatory variables, it is called a distributed-lag model. That is,

$$Y_t = \alpha + \beta_0X_t + \beta_1X_{t-1} + \beta_2X_{t-2} + U_t$$

represents a distributed-lag model.

6.2: AUTO-REGRESSIVE MODEL

If the model includes one or more lagged values of the dependent variable among its explanatory variables, it is called an auto-regressive model. That is,

$$Y_t = \alpha + \beta X_t + \gamma Y_{t-1} + U_t$$

represents an auto-regressive model. Auto-regressive models are also known as dynamic models. Auto-regressive and distributed-lag models are used extensively in econometric analysis.

6.3: LAG

In economics the dependence of a variable Y (the dependent variable) on other variables (the explanatory variables) is rarely instantaneous (happening immediately). Very often Y responds to X with a lapse of time; such a lapse of time is called a lag.

6.4: REASONS (SOURCES) OF LAGS

There are three main reasons of lags.

1. Psychological Reasons: Due to the force of habit, people do not change their consumption habits immediately following a price decrease or an income increase. For example, those who become instant millionaires by winning lotteries may not change their life styles. Given reasonable time, they may learn to live with their newly acquired fortune.

2. Technological Reasons: Technological reasons are a major source of lags. If a drop in the price of capital relative to labor is expected to be temporary, firms may not rush to substitute capital for labor, especially if they expect that after the temporary drop the price of capital may increase beyond its previous level. For example, since the introduction of electronic pocket calculators their prices have decreased dramatically; as a result, prospective consumers of calculators may hesitate to buy until they have had time to look into the features and prices of all the competing brands. Moreover, they may hesitate to buy in the expectation of a further decrease in price.

3. Institutional Reasons: These reasons also contribute to lags. For example, those who have placed funds in long-term saving accounts for fixed durations such as 1 year, 3 years or 7 years are essentially locked in, even though money market conditions may be such that higher yields are available elsewhere. Similarly, employers often give their employees a choice among several health insurance plans, but once a choice is made an employee may not switch to another plan for at least one year.

6.5: TYPES OF DISTRIBUTED LAG MODEL

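There are two types of distributed lag model:

1. Infinite Distributed Lag Model

In the case of the infinite distributed lag model we do not specify the length of the lag, that is, how far back into the past we want to go, e.g.

$$Y_t = \alpha + \beta_0X_t + \beta_1X_{t-1} + \beta_2X_{t-2} + \cdots + U_t$$

2. Finite Distributed Lag Model

In the case of the finite distributed lag model we specify the length of the lag, e.g.

$$Y_t = \alpha + \beta_0X_t + \beta_1X_{t-1} + \beta_2X_{t-2} + \cdots + \beta_kX_{t-k} + U_t$$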

6.6: ESTIMATION OF DISTRIBUTED LAG MODEL

We use the following methods for estimation of

distributed lag model.

1) Ad Hoc Estimation Method.

2) Koyck Estimation Method.

3) Almon Approach Method.

1) Ad Hoc Estimation Method

This is the approach taken by Alt and Tinbergen. They suggest estimating

$$Y_t = \alpha + \beta_0 X_t + \beta_1 X_{t-1} + \beta_2 X_{t-2} + \cdots + u_t$$

sequentially. Under this method we first regress $Y_t$ on $X_t$, then regress $Y_t$ on $X_t$ and $X_{t-1}$, then on $X_t$, $X_{t-1}$ and $X_{t-2}$, and so on. This sequential procedure stops when the regression coefficients of the lagged variables start becoming statistically insignificant and/or the coefficient of at least one of the variables changes sign from positive to negative or vice versa.

2) Koyck Approach

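This method is used in the case of the infinite distributed lag model. Under this method we assume that $\beta_0, \beta_1, \beta_2, \ldots$ are all of the same sign. Koyck assumes that they decline geometrically as follows:

$$\beta_k = \beta_0 \lambda^k, \qquad k = 0, 1, 2, \ldots, \quad 0 < \lambda < 1 \qquad \text{...eq. (A)}$$

where $\lambda$ is the rate of decline or decay of the distributed lag and $1 - \lambda$ is known as the speed of adjustment. The distributed lag model is:

$$Y_t = \alpha + \beta_0 X_t + \beta_1 X_{t-1} + \beta_2 X_{t-2} + \cdots + u_t \qquad \text{...eq. (B)}$$

Substituting from eq. (A) into eq. (B), we get

$$Y_t = \alpha + \beta_0 X_t + \beta_0 \lambda X_{t-1} + \beta_0 \lambda^2 X_{t-2} + \cdots + u_t \qquad \text{...eq. (C)}$$

Lagging one period and multiplying by $\lambda$, we get

$$\lambda Y_{t-1} = \lambda\alpha + \beta_0 \lambda X_{t-1} + \beta_0 \lambda^2 X_{t-2} + \cdots + \lambda u_{t-1} \qquad \text{...eq. (D)}$$

Subtracting eq. (D) from eq. (C), we get

$$Y_t = \alpha(1 - \lambda) + \beta_0 X_t + \lambda Y_{t-1} + v_t, \qquad v_t = u_t - \lambda u_{t-1} \qquad \text{...eq. (E)}$$

Model (E) is an autoregressive model, so we can apply the OLS method to it and get estimates of $\alpha(1-\lambda)$, $\beta_0$ and $\lambda$; using them we can find $\hat\alpha$ and, from eq. (A), the remaining $\hat\beta_k = \hat\beta_0 \hat\lambda^k$.

In a sense multicollinearity is resolved by replacing $X_{t-1}, X_{t-2}, \ldots$ by a single variable $Y_{t-1}$. But note the following features of the Koyck transformation.

The Koyck model is transformed from a distributed lag model into an autoregressive model.

OLS applied to it gives biased and inconsistent estimators, because the regressor $Y_{t-1}$ is correlated with the disturbance $v_t$.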
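The algebra of the Koyck transformation can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the helper name `koyck_ols`, the parameter values and the simulated series are illustrative assumptions, not part of the text. To keep the check exact, the data are generated without a disturbance term and with X taken as zero before the sample, so the sketch verifies the transformation itself and says nothing about the bias that arises once disturbances are present.

```python
import numpy as np

def koyck_ols(y, x):
    """Regress Y_t on a constant, X_t and Y_{t-1}; return (alpha, beta0, lam)."""
    Z = np.column_stack([np.ones(len(y) - 1), x[1:], y[:-1]])
    (c, beta0, lam), *_ = np.linalg.lstsq(Z, y[1:], rcond=None)
    # The intercept of the transformed model is alpha * (1 - lam).
    return c / (1.0 - lam), beta0, lam

# Illustrative (assumed) parameter values.
alpha_true, beta0_true, lam_true = 2.0, 1.5, 0.6
rng = np.random.default_rng(0)
x = rng.normal(size=200)

# Y_t = alpha + beta0 * sum_k lam^k X_{t-k}, with X = 0 before the sample
# and no disturbance term, so the geometric lag weights hold exactly.
y = np.array([
    alpha_true + beta0_true * sum(lam_true**k * x[t - k] for k in range(t + 1))
    for t in range(len(x))
])

alpha_hat, beta0_hat, lam_hat = koyck_ols(y, x)
print(alpha_hat, beta0_hat, lam_hat)
```

Because the generated series satisfies the transformed equation exactly, the three parameters are recovered up to rounding error.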

3) Almon Approach to Distributed Lag Models

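If the $\beta$ coefficients do not decline geometrically but increase at first and then decrease, it is assumed that the $\beta$'s follow a cyclical pattern. In this situation we apply the Almon approach.

To illustrate the Almon technique, we use the finite distributed lag model

$$Y_t = \alpha + \beta_0 X_t + \beta_1 X_{t-1} + \cdots + \beta_k X_{t-k} + u_t$$

This may be written as:

$$Y_t = \alpha + \sum_{i=0}^{k} \beta_i X_{t-i} + u_t$$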


6.7: Exercise

1. Differentiate between auto-regressive and distributed-lag models.

2. What is Lag? Discuss the sources of lags.

3. Discuss the different methods of estimating distributed-lag models.


Chapter: 7

MULTICOLLINEARITY

7.1: Collinearity

In a multiple regression model with two independent

variables, if there is linear relationship between independent

variables, we say that there is collinearity.

7.2: Multicollinearity

If there are more than two independent variables and they are linearly related, this linear relationship is called multicollinearity.

Multicollinearity arises from the presence of interdependence among the regressors in a multivariable equation system. The departure from orthogonality in the set of regressors is a measure of multicollinearity. It means the existence of a perfect or exact linear relationship among some or all explanatory variables. When the explanatory variables are perfectly correlated, the method of least squares breaks down.

7.3: Sources of Multicollinearity

1. The data collection method employed: for example, sampling over a limited range of the values taken by the regressors in the population.

2. Constraints on the model or in the population being sampled: in the regression of electricity consumption (Y) on income ($X_2$) and house size ($X_3$) there is a physical constraint in the population, in that families with higher incomes generally have larger homes than families with lower incomes.

3. Model specification: for example, adding polynomial terms to a regression model, especially when the range of the variable is small.

4. An overdetermined model: this happens when the model has more explanatory variables than the number of observations. This could happen in medical research, where there may be a small number of patients about whom information is collected on a large number of variables.

5. A common trend: an additional reason for multicollinearity, especially in time series data, may be that the regressors included in the model share a common trend, that is, they all increase or decrease over time. Thus in the regression of consumption expenditure on income, wealth and population, the regressors income, wealth and population may all be growing over time at more or less the same rate, leading to collinearity among these variables.

7.4: TYPES OF MULTICOLLINEARITY

There are two types of multicollinearity.

Perfect Multicollinearity

This relates to the situation where the explanatory variables are perfectly linearly related with each other, that is, when the correlation between two explanatory variables is exactly one ($r_{x_1 x_2} = 1$). This situation is called perfect multicollinearity.

Imperfect Multicollinearity

If the correlation coefficient between two explanatory variables is not equal to one but close to one ($r_{x_1 x_2}$ approximately 0.9), it is called high multicollinearity. If $r_{x_1 x_2}$ is approximately 0.5, it is called moderate, and if it is lower still it is called low multicollinearity. Both types are troublesome because multicollinearity cannot be easily detected.


7.5: ESTIMATION IN THE PRESENCE OF PERFECT

MULTICOLLINEARITY

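Consider the three-variable regression model in deviation form:

$$y_i = \hat\beta_2 x_{2i} + \hat\beta_3 x_{3i} + e_i$$

The OLS estimators are

$$\hat\beta_2 = \frac{(\sum y_i x_{2i})(\sum x_{3i}^2) - (\sum y_i x_{3i})(\sum x_{2i} x_{3i})}{(\sum x_{2i}^2)(\sum x_{3i}^2) - (\sum x_{2i} x_{3i})^2}$$

And

$$\hat\beta_3 = \frac{(\sum y_i x_{3i})(\sum x_{2i}^2) - (\sum y_i x_{2i})(\sum x_{2i} x_{3i})}{(\sum x_{2i}^2)(\sum x_{3i}^2) - (\sum x_{2i} x_{3i})^2}$$

Assume that $x_{3i} = \lambda x_{2i}$, where $\lambda$ is a non-zero constant. Then

$$\hat\beta_2 = \frac{(\sum y_i x_{2i})(\lambda^2 \sum x_{2i}^2) - (\lambda \sum y_i x_{2i})(\lambda \sum x_{2i}^2)}{(\sum x_{2i}^2)(\lambda^2 \sum x_{2i}^2) - \lambda^2 (\sum x_{2i}^2)^2} = \frac{\lambda^2 [(\sum y_i x_{2i})(\sum x_{2i}^2) - (\sum y_i x_{2i})(\sum x_{2i}^2)]}{\lambda^2 [(\sum x_{2i}^2)^2 - (\sum x_{2i}^2)^2]} = \frac{0}{0}$$

so $\hat\beta_2$ is indeterminate.

Similarly,

$$\hat\beta_3 = \frac{(\lambda \sum y_i x_{2i})(\sum x_{2i}^2) - (\sum y_i x_{2i})(\lambda \sum x_{2i}^2)}{(\sum x_{2i}^2)(\lambda^2 \sum x_{2i}^2) - \lambda^2 (\sum x_{2i}^2)^2} = \frac{\lambda [(\sum y_i x_{2i})(\sum x_{2i}^2) - (\sum y_i x_{2i})(\sum x_{2i}^2)]}{\lambda^2 [(\sum x_{2i}^2)^2 - (\sum x_{2i}^2)^2]} = \frac{0}{0}$$

so $\hat\beta_3$ is indeterminate as well.

The variances are

$$\operatorname{var}(\hat\beta_2) = \frac{\sigma^2 \sum x_{3i}^2}{(\sum x_{2i}^2)(\sum x_{3i}^2) - (\sum x_{2i} x_{3i})^2} = \frac{\sigma^2 \lambda^2 \sum x_{2i}^2}{\lambda^2 [(\sum x_{2i}^2)^2 - (\sum x_{2i}^2)^2]} = \frac{\sigma^2 \lambda^2 \sum x_{2i}^2}{0} = \infty$$

Similarly,

$$\operatorname{var}(\hat\beta_3) = \frac{\sigma^2 \sum x_{2i}^2}{(\sum x_{2i}^2)(\sum x_{3i}^2) - (\sum x_{2i} x_{3i})^2} = \frac{\sigma^2 \sum x_{2i}^2}{\lambda^2 [(\sum x_{2i}^2)^2 - (\sum x_{2i}^2)^2]} = \frac{\sigma^2 \sum x_{2i}^2}{0} = \infty$$

Put $x_{3i} = \lambda x_{2i}$ in the model:

$$y_i = \hat\beta_2 x_{2i} + \hat\beta_3 (\lambda x_{2i}) + e_i = (\hat\beta_2 + \lambda \hat\beta_3) x_{2i} + e_i = \hat\alpha x_{2i} + e_i$$

Where $\hat\alpha = \hat\beta_2 + \lambda \hat\beta_3$.

The regression of $y$ on $x_2$ gives:

$$\hat\alpha = \frac{\sum x_{2i} y_i}{\sum x_{2i}^2}$$

Therefore, although we can estimate $\hat\alpha$ uniquely, there is no way to estimate $\hat\beta_2$ and $\hat\beta_3$ uniquely. Hence in the case of perfect multicollinearity the variances and standard errors of $\hat\beta_2$ and $\hat\beta_3$ individually are infinite.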
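The breakdown of least squares under perfect multicollinearity can be seen in a small numerical sketch. It assumes NumPy; the data, the constant `lam` and the coefficient values are made up for illustration. With the second regressor an exact multiple of the first, the moment matrix has rank one and the common denominator of the two OLS formulas is exactly zero, while the single combined coefficient (the sum of the first slope and `lam` times the second) is still estimable.

```python
import numpy as np

# Illustrative data in deviation form (assumed values).
x2 = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
x2 = x2 - x2.mean()
lam = 3.0
x3 = lam * x2                      # perfect collinearity: x3 = lam * x2

beta2, beta3 = 2.0, 0.5            # hypothetical true coefficients
y = beta2 * x2 + beta3 * x3        # no disturbance, to keep the check exact

X = np.column_stack([x2, x3])
rank = np.linalg.matrix_rank(X.T @ X)

# Common denominator of the OLS formulas for the two slope estimators.
denominator = (x2 @ x2) * (x3 @ x3) - (x2 @ x3) ** 2

# Only the combination beta2 + lam * beta3 can be estimated.
alpha_hat = (x2 @ y) / (x2 @ x2)

print(rank, denominator, alpha_hat)
```

Here the rank is 1 instead of 2, the denominator is zero, and the estimable combination equals 2.0 + 3.0 * 0.5 = 3.5.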

7.6: CONSEQUENCES OF MULTICOLLINEARITY

1) The estimates of the coefficients are statistically unbiased, even when multicollinearity is strong. Unbiasedness is a repeated-sampling property, however, and it does not prevent the estimates obtained from a given sample from being seriously imprecise.

2) If the intercorrelation between the explanatory variables is perfect, then the estimates of the coefficients are indeterminate.

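Proof:

Consider the three-variable regression model in deviation form:

$$y_i = \hat\beta_2 x_{2i} + \hat\beta_3 x_{3i} + e_i$$

$$\hat\beta_2 = \frac{(\sum y_i x_{2i})(\sum x_{3i}^2) - (\sum y_i x_{3i})(\sum x_{2i} x_{3i})}{(\sum x_{2i}^2)(\sum x_{3i}^2) - (\sum x_{2i} x_{3i})^2}$$

And

$$\hat\beta_3 = \frac{(\sum y_i x_{3i})(\sum x_{2i}^2) - (\sum y_i x_{2i})(\sum x_{2i} x_{3i})}{(\sum x_{2i}^2)(\sum x_{3i}^2) - (\sum x_{2i} x_{3i})^2}$$

Assume that $x_{3i} = \lambda x_{2i}$, where $\lambda$ is a non-zero constant. Then

$$\hat\beta_2 = \frac{\lambda^2 [(\sum y_i x_{2i})(\sum x_{2i}^2) - (\sum y_i x_{2i})(\sum x_{2i}^2)]}{\lambda^2 [(\sum x_{2i}^2)^2 - (\sum x_{2i}^2)^2]} = \frac{0}{0}$$

Similarly,

$$\hat\beta_3 = \frac{\lambda [(\sum y_i x_{2i})(\sum x_{2i}^2) - (\sum y_i x_{2i})(\sum x_{2i}^2)]}{\lambda^2 [(\sum x_{2i}^2)^2 - (\sum x_{2i}^2)^2]} = \frac{0}{0}$$

Both estimates are therefore indeterminate.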

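3) If the intercorrelation between the explanatory variables is perfect ($r_{23} = 1$), then the standard errors of these estimates become infinitely large.

Proof:

In the model with two explanatory variables,

$$\operatorname{var}(\hat\beta_2) = \frac{\sigma^2 \sum x_{3i}^2}{(\sum x_{2i}^2)(\sum x_{3i}^2) - (\sum x_{2i} x_{3i})^2} = \frac{\sigma^2}{\sum x_{2i}^2 \, (1 - r_{23}^2)}$$

$$\operatorname{se}(\hat\beta_2) = \frac{\sigma}{\sqrt{\sum x_{2i}^2 \, (1 - r_{23}^2)}}$$

Putting $r_{23} = 1$,

$$\operatorname{se}(\hat\beta_2) = \frac{\sigma}{\sqrt{\sum x_{2i}^2 \, (1 - 1)}} = \frac{\sigma}{0}$$

which is infinitely large.

Similarly:

$$\operatorname{var}(\hat\beta_3) = \frac{\sigma^2 \sum x_{2i}^2}{(\sum x_{2i}^2)(\sum x_{3i}^2) - (\sum x_{2i} x_{3i})^2} = \frac{\sigma^2}{\sum x_{3i}^2 \, (1 - r_{23}^2)}$$

$$\operatorname{se}(\hat\beta_3) = \frac{\sigma}{\sqrt{\sum x_{3i}^2 \, (1 - r_{23}^2)}}$$

Putting $r_{23} = 1$,

$$\operatorname{se}(\hat\beta_3) = \frac{\sigma}{0}$$

which is infinitely large.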

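4) In the case of strong (but not perfect) multicollinearity the regression coefficients are determinate but their standard errors are large.

Proof:

$$\operatorname{var}(\hat\beta_2) = \frac{\sigma^2}{\sum x_{2i}^2 \, (1 - r_{23}^2)}$$

For $|r_{23}| < 1$ the denominator is not zero, so the estimate is determinate. As $r_{23}$ approaches one, however, the factor $1/(1 - r_{23}^2)$ grows without bound. For example, if $r_{23} = 0.5$,

$$\operatorname{var}(\hat\beta_2) = \frac{\sigma^2}{\sum x_{2i}^2 \, (1 - 0.25)} \approx 1.33 \, \frac{\sigma^2}{\sum x_{2i}^2}$$

and if $r_{23} = 0.9$,

$$\operatorname{var}(\hat\beta_2) = \frac{\sigma^2}{\sum x_{2i}^2 \, (1 - 0.81)} \approx 5.26 \, \frac{\sigma^2}{\sum x_{2i}^2}$$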

5) In case of multicollinearity the confidence interval

becomes wider.

6) In the presence of multicollinearity the t-test will be

misleading.

7) In the presence of multicollinearity prediction is not

accurate.

7.7: DETECTION OF MULTICOLLINEARITY

1. The Farrar and Glauber Test of Multicollinearity

A statistical test for multicollinearity has been developed by

Farrar and Glauber. It is really a set of three tests.

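a) The first test is a $\chi^2$ test for the detection of the existence and the severity of multicollinearity in a function including several explanatory variables.

Procedure:

i. $H_0$: the explanatory variables are orthogonal. $H_1$: the explanatory variables are not orthogonal.

ii. Choose the level of significance $\alpha$.

iii. Test statistic to be used:

$$\chi^2 = -\left[ n - 1 - \tfrac{1}{6}(2k + 5) \right] \ln |D| \qquad \text{with } \tfrac{1}{2} k (k - 1) \text{ d.f.}$$

iv. Computations: $|D|$ is the value of the standardized (correlation) determinant, $n$ is the sample size and $k$ is the number of explanatory variables.

v. Critical Region: $\chi^2 > \chi^2_{\alpha}$ with $\tfrac{1}{2} k (k - 1)$ d.f.

vi. Conclusion: Reject $H_0$ if the calculated value is greater than the table value; otherwise do not reject it.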
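The chi-square statistic of the first Farrar and Glauber test is easy to compute once the correlation matrix of the regressors is available. The sketch below assumes NumPy; the function name `farrar_glauber_chi2` and the small data matrix are hypothetical and serve only to show the arithmetic. The critical value still has to be read from a chi-square table.

```python
import numpy as np

def farrar_glauber_chi2(X):
    """Chi-square statistic, degrees of freedom and determinant |D|
    for an (n x k) matrix X of explanatory variables."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    R = np.corrcoef(X, rowvar=False)      # correlation matrix of the regressors
    det_R = np.linalg.det(R)              # standardized determinant |D|
    chi2 = -(n - 1 - (2 * k + 5) / 6.0) * np.log(det_R)
    df = k * (k - 1) // 2
    return chi2, df, det_R

# Hypothetical data: 8 observations on 3 explanatory variables.
X = np.column_stack([
    [1, 2, 3, 4, 5, 6, 7, 8],
    [2, 1, 4, 3, 6, 5, 8, 7],
    [5, 3, 6, 2, 7, 1, 8, 4],
])
chi2, df, det_R = farrar_glauber_chi2(X)
print(chi2, df, det_R)
```

A determinant close to one (orthogonal regressors) gives a statistic near zero, while a determinant close to zero gives a large statistic.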

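b) The second test is an F-test for locating which variables are multicollinear.

Procedure:

i. $H_0: R^2_{x_i \cdot x_1 x_2 \ldots x_k} = 0$ against $H_1: R^2_{x_i \cdot x_1 x_2 \ldots x_k} \neq 0$.

ii. Choose the level of significance $\alpha$.

iii. Test statistic to be used:

$$F = \frac{R^2_{x_i \cdot x_1 x_2 \ldots x_k} / (k - 1)}{(1 - R^2_{x_i \cdot x_1 x_2 \ldots x_k}) / (n - k)} \qquad \text{with } (k - 1, \; n - k) \text{ d.f.}$$

iv. Computations: Compute the multiple correlation coefficients among the explanatory variables.

v. Critical Region: $F > F_{\alpha}(k - 1, \; n - k)$

vi. Conclusion: Reject $H_0$ if the calculated value is greater than the table value; otherwise do not reject it.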

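c) The third test is a t-test for finding out the pattern of multicollinearity, that is, for determining which variables are responsible for the appearance of the multicollinear variable.

Procedure:

i. $H_0: r_{x_i x_j \cdot x_1 x_2 \ldots x_k} = 0$ against $H_1: r_{x_i x_j \cdot x_1 x_2 \ldots x_k} \neq 0$.

ii. Choose the level of significance $\alpha$.

iii. Test statistic to be used:

$$t = \frac{r_{x_i x_j \cdot x_1 x_2 \ldots x_k} \sqrt{n - k}}{\sqrt{1 - r^2_{x_i x_j \cdot x_1 x_2 \ldots x_k}}} \qquad \text{with } (n - k) \text{ d.f.}$$

iv. Computations: Compute the partial correlation coefficients.

v. Critical Region: $|t| > t_{\alpha/2}(n - k)$

vi. Conclusion: Reject $H_0$ if the calculated value is greater than the table value; otherwise do not reject it.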

2. High Pair Wise Correlation among Regressors

Multicollinearity exists if the pairwise or zero-order correlation coefficients between two regressors are very high.

3. Eigenvalue and Condition Number

A condition number K is defined as

$$K = \frac{\text{maximum eigenvalue}}{\text{minimum eigenvalue}}$$

If K is between 100 and 1000 there is moderate to strong multicollinearity, and if it exceeds 1000 there is severe multicollinearity.

The condition index (CI) is defined as

$$CI = \sqrt{\frac{\text{maximum eigenvalue}}{\text{minimum eigenvalue}}} = \sqrt{K}$$

If the condition index lies between 10 and 30 there is moderate to strong multicollinearity, and if it exceeds 30 there is severe multicollinearity.
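The condition number and condition index can be computed from the eigenvalues of the cross-product matrix of the regressors. This is a minimal sketch assuming NumPy; the function name `condition_number` and both data sets are illustrative assumptions. In applied work the columns are often scaled before the eigenvalues are taken, a step that is left out here.

```python
import numpy as np

def condition_number(X):
    """Return (K, CI): ratio of largest to smallest eigenvalue of X'X
    and its square root."""
    eig = np.linalg.eigvalsh(X.T @ X)     # X'X is symmetric
    K = eig.max() / eig.min()
    return K, np.sqrt(K)

# Orthogonal regressors: K = 1, the best possible case.
X_orth = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]])
K_orth, CI_orth = condition_number(X_orth)

# Nearly collinear regressors: the second column is the first plus a tiny wobble.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
wobble = 0.01 * np.array([1.0, -1.0, 1.0, -1.0, 1.0])
X_coll = np.column_stack([x, x + wobble])
K_coll, CI_coll = condition_number(X_coll)

print(K_orth, CI_orth, K_coll, CI_coll)
```

The second data set gives a condition number far above 1000 and a condition index far above 30, which the rule of thumb above classifies as severe multicollinearity.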

4. Tolerance and Variance Inflation Factor

As $R_j^2$, the coefficient of determination in the regression of regressor $X_j$ on the remaining regressors in the model, increases towards unity, that is, as the collinearity of $X_j$ with the other regressors increases, the VIF also increases, and in the limit it can be infinite.

$$VIF_j = \frac{1}{1 - R_j^2}$$

Tolerance can also be used to detect multicollinearity. That is

$$TOL_j = \frac{1}{VIF_j} = 1 - R_j^2$$
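The variance inflation factor of each regressor can be obtained by running the auxiliary regression of that regressor on the remaining ones. The following sketch assumes NumPy; the function name `vif` and the two illustrative columns are not from the text.

```python
import numpy as np

def vif(X):
    """Variance inflation factor of every column of the (n x k) matrix X."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    out = []
    for j in range(k):
        y = X[:, j]
        # Auxiliary regression: X_j on a constant and the other regressors.
        Z = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(Z, y, rcond=None)
        resid = y - Z @ coef
        r2 = 1.0 - (resid @ resid) / ((y - y.mean()) @ (y - y.mean()))
        out.append(1.0 / (1.0 - r2))
    return np.array(out)

# Illustrative data: a trend and an alternating series (weakly correlated).
X = np.column_stack([
    np.arange(1.0, 9.0),
    [1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0, -1.0],
])
vif_values = vif(X)
tolerance = 1.0 / vif_values
print(vif_values, tolerance)
```

With only two regressors both factors equal 1/(1 - r^2), where r is their simple correlation; here r^2 = 16/336, so each VIF is 1.05 and each tolerance is about 0.952.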

5. High 𝑹𝟐 but Few Significant t-Ratios

If $R^2$ is high, the F-test will in most cases reject the hypothesis that the partial slope coefficients are simultaneously equal to zero, but the individual t-tests will show that none or very few of the partial slope coefficients are statistically different from zero. This is a symptom of multicollinearity.

6. Some Other Multivariate Methods

Like Principal Component Analysis (PCA), Factor

Analysis (FA) and Ridge Regression can also be used for

detection of multicollinearity.

7.8. REMEDIAL MEASURES OF MULTICOLLINEARITY

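i. A Priori Information

Suppose we consider the model

$$Y_i = \beta_1 + \beta_2 X_{2i} + \beta_3 X_{3i} + u_i$$

where Y = consumption, $X_2$ = income and $X_3$ = wealth. The income and wealth variables tend to be highly collinear. Suppose a priori that $\beta_3 = 0.10 \beta_2$, that is, the rate of change of consumption with respect to wealth is one-tenth the corresponding rate with respect to income. We can then run the regression

$$Y_i = \beta_1 + \beta_2 X_{2i} + 0.10 \beta_2 X_{3i} + u_i = \beta_1 + \beta_2 X_i + u_i$$

where $X_i = X_{2i} + 0.10 X_{3i}$. Once we obtain $\hat\beta_2$ we can estimate $\hat\beta_3$ from the postulated relationship between $\beta_2$ and $\beta_3$.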

ii. Combining Cross-sectional and Time Series Data

A variant of the extraneous or a priori information technique is the combination of cross-sectional and time series data, known as pooling the data. Combining cross-sectional and time series data may reduce multicollinearity.

iii. Dropping a Variable or Variables

When faced with severe multicollinearity one of the

simplest things to do is to drop one of the collinear variables.

In dropping a variable from the model, however, we may be committing a specification bias or specification error.

iv. Transformation of Variables

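One way of minimizing this dependence is to proceed as follows. Suppose the model is

$$Y_t = \beta_1 + \beta_2 X_{2t} + \beta_3 X_{3t} + u_t$$

If the above relation holds at time $t$, it must also hold at time $t - 1$, because the origin of time is arbitrary; therefore we have

$$Y_{t-1} = \beta_1 + \beta_2 X_{2,t-1} + \beta_3 X_{3,t-1} + u_{t-1}$$

Subtracting the second equation from the first,

$$Y_t - Y_{t-1} = \beta_2 (X_{2t} - X_{2,t-1}) + \beta_3 (X_{3t} - X_{3,t-1}) + v_t, \qquad v_t = u_t - u_{t-1}$$

which is known as the first difference form.

The first difference regression model often reduces the severity of multicollinearity.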

v. Additional or New Data

Since multicollinearity is a sample feature, it is possible that in another sample involving the same variables multicollinearity may not be as serious as in the first sample. Sometimes simply increasing the size of the sample may reduce the multicollinearity problem.

vi. Other Methods

Multivariate statistical techniques such as factor analysis and principal components, or other techniques such as ridge regression, are often employed to solve the problem of multicollinearity.


7.9: Exercise

1) Explain the problem of multicollinearity and its types.

2) Explain the methods for detection of multicollinearity.

3) Describe the consequences of multicollinearity.

4) How would you proceed for estimation of parameters in the presence of perfect multicollinearity?

5) Define any four methods for removal of multicollinearity.

6) Apply Farrar and Glauber test to the following data:

6       6       6.5     7.6     9

40.1    40.3    47.5    58      64.7

5.5     4.7     5.2     8.7     17.1

108     94      108     99      93

7) Find severity, location and pattern of multicollinearity to

the following data:


Chapter: 8

HETEROSCEDASTICITY

8.1. NATURE OF HETEROSCEDASTICITY

One of the important assumptions of the classical linear regression model is that the variance of each disturbance term $u_i$ is equal to $\sigma^2$. This is the assumption of homoscedasticity. Symbolically,

$$E[u_i^2] = \sigma^2, \qquad i = 1, 2, \ldots, n$$

If this assumption of homoscedasticity fails, that is

$$E[u_i^2] = \sigma_i^2$$

then the variances $[\sigma_1^2, \sigma_2^2, \ldots, \sigma_n^2]$ may all be different, and we have heteroscedasticity.

DIFFERENCE BETWEEN HOMOSCEDASTICITY AND HETEROSCEDASTICITY

Homoscedasticity is the situation in which the probability distribution of the disturbance term remains the same over all observations, that is, the variance of each $u_i$ is the same for all values of the explanatory variables.

Heteroscedasticity is the situation in which the probability distribution of the disturbance term does not remain the same over all observations, that is, the variance of each $u_i$ is not the same for all values of the explanatory variables.

8.1.1. Reasons of Heteroscedasticity

i. Error Learning Model

As people learn, their errors of behavior become smaller over time. In this case $\sigma_i^2$ is expected to decrease; e.g. as the number of hours of typing practice increases, the average number of typing errors as well as their variance decreases.

ii. Data Collection Technique

Another reason for heteroscedasticity is the data collection technique. As data collection techniques improve, $\sigma_i^2$ is likely to decrease.

iii. Variance in Cross-Section and Time Series Data

In cross-sectional data the variance is usually greater than in time series data, because in cross-sectional data one usually deals with members of a population at a given point in time.

iv. Due to Specification Error

The heteroscedasticity problem also arises from specification errors; because of such errors the variance tends to vary.

8.2. OLS ESTIMATION OF HETEROSCEDASTICITY

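Let us use the two-variable model

$$Y_i = \beta_1 + \beta_2 X_i + u_i, \qquad E[u_i^2] = \sigma_i^2$$

The OLS estimator of $\beta_2$ is

$$\hat\beta_2 = \frac{\sum x_i y_i}{\sum x_i^2} = \sum w_i Y_i, \qquad w_i = \frac{x_i}{\sum x_i^2}$$

$$\hat\beta_2 = \beta_2 + \sum w_i u_i$$

Taking expectations,

$$E(\hat\beta_2) = \beta_2 + \sum w_i E(u_i) = \beta_2$$

which shows that $\hat\beta_2$ is still an unbiased estimator of $\beta_2$, even in the presence of heteroscedasticity.

Variance of $\hat\beta_2$:

By definition

$$\operatorname{Var}(\hat\beta_2) = E[\hat\beta_2 - E(\hat\beta_2)]^2 = E[\hat\beta_2 - \beta_2]^2 = E\left[\sum w_i u_i\right]^2$$

$$\operatorname{Var}(\hat\beta_2) = E\left[\sum w_i^2 u_i^2 + 2 \sum_{i<j} w_i w_j u_i u_j\right] = \sum w_i^2 E(u_i^2)$$

since $E(u_i u_j) = 0$ for $i \neq j$. By the assumption of heteroscedasticity, $E(u_i^2) = \sigma_i^2$, so

$$\operatorname{Var}(\hat\beta_2) = \sum w_i^2 \sigma_i^2 = \frac{\sum x_i^2 \sigma_i^2}{(\sum x_i^2)^2}$$

In the presence of heteroscedasticity, we observe that the OLS estimator is still linear, unbiased and consistent but not BLUE; that is, $\hat\beta_2$ is not efficient, because it does not have minimum variance in the class of unbiased estimators in the presence of heteroscedasticity.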


8.3: CONSEQUENCES OF HETEROSCEDASTICITY

1) The OLS estimators in the presence of heteroscedasticity

are still linear, unbiased and consistent.

2) In the presence of heteroscedasticity the OLS estimators

are not BLUE, that is they have not minimum variance in the

class of unbiased estimators.

3) In the presence of heteroscedasticity the confidence intervals of the OLS estimators are wider.

4) In the presence of heteroscedasticity the usual t and F tests are likely to be misleading.

8.4: DETECTION OF HETEROSCEDASTICITY

1. The Park Test

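Professor Park suggested that $\sigma_i^2$ is some function of the explanatory variable $X_i$. The functional form is

$$\sigma_i^2 = \sigma^2 X_i^{\beta} e^{v_i}$$

where $v_i$ is the stochastic disturbance term.

Taking ln on both sides, we get

$$\ln \sigma_i^2 = \ln \sigma^2 + \beta \ln X_i + v_i$$

Since $\sigma_i^2$ is generally not known, Park suggests using $e_i^2$ as a proxy and running the following regression:

$$\ln e_i^2 = \ln \sigma^2 + \beta \ln X_i + v_i = \alpha + \beta \ln X_i + v_i$$

If $\beta$ turns out to be statistically significant it means heteroscedasticity is present in the data; otherwise it is not.

Two stages of the Park test:

Stage 1: we run the OLS regression disregarding heteroscedasticity and obtain the residuals $e_i$.

Stage 2: we run OLS again with $\ln e_i^2$ as the dependent variable.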
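The two stages of the Park test can be sketched in a few lines. The code assumes NumPy; the helper names `ols` and `park_test` and the simulated data, in which the standard deviation of the disturbance is proportional to X, are illustrative assumptions. The t-ratio returned must still be compared with a table value.

```python
import numpy as np

def ols(y, x):
    """OLS of y on a constant and x: coefficients, residuals, standard errors."""
    Z = np.column_stack([np.ones(len(x)), x])
    coef, *_ = np.linalg.lstsq(Z, y, rcond=None)
    resid = y - Z @ coef
    s2 = resid @ resid / (len(y) - 2)
    se = np.sqrt(s2 * np.diag(np.linalg.inv(Z.T @ Z)))
    return coef, resid, se

def park_test(y, x):
    """Return the slope of ln(e^2) on ln(X) and its t-ratio."""
    _, e, _ = ols(y, x)                            # stage 1: residuals
    coef, _, se = ols(np.log(e**2), np.log(x))     # stage 2: auxiliary regression
    return coef[1], coef[1] / se[1]

# Simulated example: the error standard deviation grows in proportion to X,
# so the error variance is proportional to X squared.
rng = np.random.default_rng(1)
x = rng.uniform(1.0, 10.0, size=500)
y = 3.0 + 2.0 * x + x * rng.normal(size=500)

slope, t_stat = park_test(y, x)
print(slope, t_stat)
```

With this design the slope of the auxiliary regression should come out in the neighbourhood of 2, with a large t-ratio, pointing to heteroscedasticity.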

2. Glejser Test

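The Glejser test is similar in spirit to the Park test. The difference is that Glejser suggests as many as six functional forms while Park suggested only one. Furthermore, Glejser used the absolute values of $e_i$. Glejser used the following functional forms to detect heteroscedasticity.

I. $|e_i| = \beta_1 + \beta_2 X_i + v_i$

II. $|e_i| = \beta_1 + \beta_2 \sqrt{X_i} + v_i$

III. $|e_i| = \beta_1 + \beta_2 \left(\dfrac{1}{X_i}\right) + v_i$

IV. $|e_i| = \beta_1 + \beta_2 \left(\dfrac{1}{\sqrt{X_i}}\right) + v_i$

V. $|e_i| = \sqrt{\beta_1 + \beta_2 X_i} + v_i$

VI. $|e_i| = \sqrt{\beta_1 + \beta_2 X_i^2} + v_i$

Stages of the Glejser test:

Stage 1: Fit the model of Y on X and compute the residuals $e_i$.

Stage 2: Take the absolute values of $e_i$ and then regress $|e_i|$ on X using any one of the functional forms.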

3. Spearman Rank Correlation Test

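The rank correlation coefficient can be used to detect heteroscedasticity. That is

Step 1: State the hypotheses $H_0: \rho = 0$ (no heteroscedasticity), $H_1: \rho \neq 0$.

Step 2: Fit the regression of Y on X and compute the residuals $e_i$.

Step 3: Take the absolute values of $e_i$. Rank both $|e_i|$ and X in ascending or descending order, then compute

$$r_s = 1 - \frac{6 \sum d_i^2}{n (n^2 - 1)}$$

where $d_i$ is the difference between the ranks of $|e_i|$ and $X_i$.

Step 4: For $n > 8$,

$$t = \frac{r_s \sqrt{n - 2}}{\sqrt{1 - r_s^2}} \qquad \text{with } (n - 2) \text{ d.f.}$$

Step 5: C.R: $|t| > t_{\alpha/2}(n - 2)$

Step 6: Conclusion: As usual.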
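The computation of the rank correlation coefficient and its t-statistic can be sketched as follows. The code assumes NumPy and no tied observations; the function names `ranks` and `spearman_het_test` and the ten illustrative values are assumptions made for the example.

```python
import numpy as np

def ranks(v):
    """Ranks 1..n in ascending order (assumes there are no ties)."""
    order = np.argsort(v)
    r = np.empty(len(v))
    r[order] = np.arange(1, len(v) + 1)
    return r

def spearman_het_test(abs_e, x):
    """Rank correlation between |e| and X and the corresponding t-statistic."""
    n = len(x)
    d = ranks(abs_e) - ranks(x)
    r_s = 1.0 - 6.0 * np.sum(d**2) / (n * (n**2 - 1))
    t = r_s * np.sqrt(n - 2) / np.sqrt(1.0 - r_s**2)
    return r_s, t

# Illustrative values: |e| rises with X except for the last two observations.
x = np.arange(1.0, 11.0)
abs_e = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 1.0, 0.9])

r_s, t = spearman_het_test(abs_e, x)
print(r_s, t)
```

Only two ranks differ, each by one, so the sum of squared differences is 2 and the coefficient is 1 - 12/990.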

4. Goldfeld Quandt Test

This test is applicable to large samples. The observations must be at least twice as many as the parameters to be estimated.

Step 1. State the null and alternative hypotheses: $H_0$: the disturbances are homoscedastic; $H_1$: the disturbances are heteroscedastic.

Step 2. Choose the level of significance $\alpha$.

Step 3. Test statistic to be used:

$$F = \frac{RSS_2 \Big/ \left( \frac{n - c}{2} - k \right)}{RSS_1 \Big/ \left( \frac{n - c}{2} - k \right)} \qquad \text{with } \left( \frac{n - c - 2k}{2}, \; \frac{n - c - 2k}{2} \right) \text{ d.f.}$$

Step 4. Computation: here c is the number of central observations omitted and k is the number of parameters estimated.

i. We arrange the observations in ascending or descending order of magnitude of X.

ii. We omit c central observations, about one fourth of the observations for n > 30.

iii. The remaining (n - c) observations are divided into two sub-samples of equal size (n - c)/2, one including the small values of X and the other the large values.

iv. We fit a separate regression line to each sub-sample and obtain the sum of squared residuals from each of them, that is $RSS_1$ and $RSS_2$.

v. Compute the value of F.

Step 5. C.R: $F > F_{\alpha}$ with the degrees of freedom given in Step 3.

Step 6. Conclusion: If the calculated value is greater than the table value, we reject the null hypothesis and may conclude that there is heteroscedasticity.
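The computational steps of the Goldfeld and Quandt test can be sketched as below. The code assumes NumPy; the function names `rss` and `goldfeld_quandt`, the choice of 40 observations with 10 central ones omitted, and the simulated data are illustrative assumptions. The resulting F must still be compared with the table value.

```python
import numpy as np

def rss(y, x):
    """Residual sum of squares from the regression of y on a constant and x."""
    Z = np.column_stack([np.ones(len(x)), x])
    coef, *_ = np.linalg.lstsq(Z, y, rcond=None)
    resid = y - Z @ coef
    return resid @ resid

def goldfeld_quandt(y, x, c, k=2):
    """Return the F ratio and the degrees of freedom of each sub-sample."""
    order = np.argsort(x)                 # i.  order the observations by X
    x, y = x[order], y[order]
    m = (len(x) - c) // 2                 # ii-iii. drop c central observations
    rss1 = rss(y[:m], x[:m])              # iv. small-X sub-sample
    rss2 = rss(y[-m:], x[-m:])            #     large-X sub-sample
    df = m - k
    return (rss2 / df) / (rss1 / df), df  # v.  F ratio

# Simulated data whose error standard deviation is proportional to X.
rng = np.random.default_rng(2)
x = np.linspace(1.0, 20.0, 40)
y = 5.0 + 1.5 * x + x * rng.normal(size=40)

F, df = goldfeld_quandt(y, x, c=10)
print(F, df)
```

Each sub-sample has 15 observations, so with two estimated parameters each residual sum of squares has 13 degrees of freedom.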

8.5: REMEDIAL MEASURES OF HETEROSCEDASTICITY

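There are two approaches to remediation:

(a) When $\sigma_i^2$ is known.

(b) When $\sigma_i^2$ is not known.

(a) When $\sigma_i^2$ is known

The most straightforward method of correcting heteroscedasticity when $\sigma_i^2$ is known is weighted least squares, for the estimators thus obtained are BLUE, i.e.

$$Y_i = \beta_1 + \beta_2 X_i + u_i$$

Dividing by $\sigma_i$ on both sides,

$$\frac{Y_i}{\sigma_i} = \beta_1 \left(\frac{1}{\sigma_i}\right) + \beta_2 \left(\frac{X_i}{\sigma_i}\right) + \frac{u_i}{\sigma_i}$$

The transformed disturbance $u_i / \sigma_i$ has constant variance, since $E(u_i / \sigma_i)^2 = \sigma_i^2 / \sigma_i^2 = 1$.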


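(b) When $\sigma_i^2$ is unknown

We consider the two-variable regression model. That is

$$Y_i = \beta_1 + \beta_2 X_i + u_i$$

Now we consider several assumptions about the pattern of heteroscedasticity.

I. The error variance is proportional to $X_i^2$. That is $E(u_i^2) = \sigma^2 X_i^2$.

Proof: Dividing the original model by $X_i$,

$$\frac{Y_i}{X_i} = \frac{\beta_1}{X_i} + \beta_2 + \frac{u_i}{X_i} = \beta_1 \frac{1}{X_i} + \beta_2 + v_i$$

where $v_i = u_i / X_i$ is the disturbance term. Squaring and taking expectations on both sides,

$$E(v_i^2) = E\left(\frac{u_i}{X_i}\right)^2 = \frac{1}{X_i^2} E(u_i^2) = \frac{\sigma^2 X_i^2}{X_i^2} = \sigma^2$$

Hence the variance of $v_i$ is homoscedastic.

II. The error variance is proportional to $X_i$. That is $E(u_i^2) = \sigma^2 X_i$.

Proof: The original model can be transformed as:

$$\frac{Y_i}{\sqrt{X_i}} = \frac{\beta_1}{\sqrt{X_i}} + \beta_2 \sqrt{X_i} + \frac{u_i}{\sqrt{X_i}} = \beta_1 \frac{1}{\sqrt{X_i}} + \beta_2 \sqrt{X_i} + v_i$$

where $v_i = u_i / \sqrt{X_i}$ is the disturbance term. Squaring and taking expectations on both sides,

$$E(v_i^2) = E\left(\frac{u_i}{\sqrt{X_i}}\right)^2 = \frac{1}{X_i} E(u_i^2) = \frac{\sigma^2 X_i}{X_i} = \sigma^2$$

Hence the variance of $v_i$ is homoscedastic.

III. The error variance is proportional to the square of the mean value of Y. That is $E(u_i^2) = \sigma^2 [E(Y_i)]^2$.

Proof: The original model can be transformed as:

$$\frac{Y_i}{E(Y_i)} = \frac{\beta_1}{E(Y_i)} + \beta_2 \frac{X_i}{E(Y_i)} + \frac{u_i}{E(Y_i)} = \beta_1 \frac{1}{E(Y_i)} + \beta_2 \frac{X_i}{E(Y_i)} + v_i$$

where $v_i = u_i / E(Y_i)$ is the disturbance term. Squaring and taking expectations on both sides,

$$E(v_i^2) = E\left(\frac{u_i}{E(Y_i)}\right)^2 = \frac{1}{[E(Y_i)]^2} E(u_i^2) = \frac{\sigma^2 [E(Y_i)]^2}{[E(Y_i)]^2} = \sigma^2$$

Hence the variance of $v_i$ is homoscedastic.

IV. A log transformation such as:

$$\ln Y_i = \beta_1 + \beta_2 \ln X_i + u_i$$

reduces heteroscedasticity when compared with the regression:

$$Y_i = \beta_1 + \beta_2 X_i + u_i$$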
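The first transformation, dividing the model through by X when the error variance is proportional to the square of X, can be tried on simulated data. The sketch assumes NumPy; the parameter values and the sample are illustrative assumptions. Note that after the transformation the constant of the new regression estimates the original slope, and the coefficient on 1/X estimates the original intercept.

```python
import numpy as np

# Simulated data with error variance proportional to X squared (assumed design).
rng = np.random.default_rng(3)
x = rng.uniform(1.0, 10.0, size=300)
beta1, beta2 = 4.0, 2.5                       # hypothetical true values
y = beta1 + beta2 * x + x * rng.normal(size=300)

# Transformed model: Y/X = beta1 * (1/X) + beta2 + v, with v = u/X.
Z = np.column_stack([1.0 / x, np.ones(len(x))])
(b1_hat, b2_hat), *_ = np.linalg.lstsq(Z, y / x, rcond=None)

# Residuals of the transformed regression; their spread no longer depends on X.
v_hat = y / x - Z @ np.array([b1_hat, b2_hat])

print(b1_hat, b2_hat, v_hat.std())
```

Running OLS on the transformed variables is the weighted least squares estimator with weights 1/X.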


8.9: Exercise

a) Define heteroscedasticity. What are the consequences of the violation of the assumption of homoscedasticity?

b) Review suggested approaches to estimation of a regression model in the presence of heteroscedasticity.

c) Discuss the three methods for detection of heteroscedasticity.

d) What are the solutions of heteroscedasticity?

e) Apply the Goldfeld and Quandt test to the following data to test whether there is heteroscedasticity or not.

X

Y

20

18

25

17

23

16

18

10

26

8

27

15

29

16

31

20

22

18

27

17

32

19

35

18

40

26

f) Given

Year

2000

2001

2002

2003

2004

2005

2006

2007

2008

Y

3.5

4.5

5.0

6.0

7.0

9.0

8.0

12.0

14.0

15

20

30

42

50

54

65

8.5

90

16

13

10

7

7

5

4

3.5

2

-0.16

0.43

0.12

0.22

-0.50

1.25

-1.31

-0.43

1.07

g) Consider the model:

Using the data below apply Park-Glejser test?

Year

2002

2003

2004

2005

Y

37

48

45

36


X

4.5

6.5

3.5

3.0

41

25

39

23


Chapter: 9

AUTOCORRELATION

9.1: INTRODUCTION

Autocorrelation refers to a case in which the error term in one time period is correlated with the error term in any other time period. More generally, it is correlation between members of a series of observations ordered in time, as in the case of time series data, or in space, as in the case of cross-sectional data. One of the assumptions of the linear regression model is that there is zero correlation between error terms. That is

$$E(u_i u_j) = 0, \qquad i \neq j$$

If the above assumption is not satisfied then there is autocorrelation, that is, the value of $u$ in any particular period is correlated with its own preceding value or values. This is known as autocorrelation or serial correlation. That is

$$E(u_i u_j) \neq 0, \qquad i \neq j$$

Autocorrelation is a special case of correlation. It refers to the relationship not between two different variables but between the successive values of the same variable.

Autocorrelation:

Lag correlation of a given series with itself is called autocorrelation; thus correlation between two time series such as $u_1, u_2, \ldots, u_{n-1}$ and $u_2, u_3, \ldots, u_n$ is called autocorrelation.

Serial Correlation:

Lag correlation between two different series is called serial correlation; thus correlation between two different series such as $u_1, u_2, \ldots, u_{n-1}$ and $v_2, v_3, \ldots, v_n$ is called serial correlation.


9.2. REASONS OF AUTOCORRELATION

There are several reasons which become the cause of

autocorrelation.

1) Omitting Explanatory Variables:

Most economic variables tend to be autocorrelated. If an autocorrelated variable has been excluded from the set of explanatory variables, its influence will be reflected in the disturbance term $u$, whose values will then be autocorrelated.

2) Misspecification of the Mathematical Model:

If we have adopted a mathematical form which differs from the true form of the relationship, the values of $u$ may show serial correlation.

3) Specification Bias:

Autocorrelation also arises due to specification bias, which results from excluding true variables from the model or from using the wrong functional form.

4) Lags:

Regression models using lagged values in time

series data occur relatively often in economics, business

and some fields of engineering. If we neglect the lagged

term from the autoregressive model, the resulting error

term will reflect a systematic pattern and therefore

autocorrelation will be present.

5) Data Manipulation:

For empirical analysis, the raw data are often

manipulated. Manipulation introduces smoothness into the

raw data by dampening the fluctuations. This manipulation


leads to a systematic pattern and therefore, autocorrelation

will be there.

9.3. OLS ESTIMATION IN THE PRESENCE OF

AUTOCORRELATION

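Assume that the disturbances follow the first-order autoregressive scheme $u_t = \rho u_{t-1} + \varepsilon_t$ with $|\rho| < 1$, where $E(\varepsilon_t) = 0$, $E(\varepsilon_t^2) = \sigma_\varepsilon^2$ and $E(\varepsilon_t \varepsilon_s) = 0$ for $t \neq s$. By successive substitution,

$$u_t = \varepsilon_t + \rho \varepsilon_{t-1} + \rho^2 \varepsilon_{t-2} + \cdots = \sum_{r=0}^{\infty} \rho^r \varepsilon_{t-r}$$

Mean:

Taking expectations on both sides,

$$E[u_t] = E\left[\sum_{r=0}^{\infty} \rho^r \varepsilon_{t-r}\right] = \sum_{r=0}^{\infty} \rho^r E[\varepsilon_{t-r}] = 0$$

Variance: By definition:

$$\operatorname{Var}(u_t) = E[u_t^2] = E\left[\sum_{r=0}^{\infty} \rho^r \varepsilon_{t-r}\right]^2 = \sum_{r=0}^{\infty} \rho^{2r} E[\varepsilon_{t-r}^2], \qquad r = 0, 1, 2, 3, \ldots$$

$$\operatorname{Var}(u_t) = \sigma_\varepsilon^2 \left[1 + \rho^2 + \rho^4 + \rho^6 + \cdots\right]$$

The expression in brackets is the sum of a geometric progression with an infinite number of terms. The sum of the first $n$ terms is

$$S_n = \frac{a (1 - q^n)}{1 - q}$$

where $a$ is the first term of the geometric progression and $q$ is the common ratio. When $|q| < 1$ and $n \to \infty$, the formula reduces to

$$S = \frac{a}{1 - q}$$

By using this formula with $a = 1$ and $q = \rho^2$, we get

$$\operatorname{Var}(u_t) = \sigma_\varepsilon^2 \left[\frac{1}{1 - \rho^2}\right] = \sigma_u^2$$

where $\sigma_u^2 = \dfrac{\sigma_\varepsilon^2}{1 - \rho^2}$.

Covariance:

$$\operatorname{Cov}(u_t, u_{t-1}) = E[u_t u_{t-1}]$$

Given that

$$u_t = \varepsilon_t + \rho \varepsilon_{t-1} + \rho^2 \varepsilon_{t-2} + \cdots, \qquad u_{t-1} = \varepsilon_{t-1} + \rho \varepsilon_{t-2} + \rho^2 \varepsilon_{t-3} + \cdots$$

we have

$$E[u_t u_{t-1}] = E\left[\{\varepsilon_t + \rho(\varepsilon_{t-1} + \rho \varepsilon_{t-2} + \cdots)\}\{\varepsilon_{t-1} + \rho \varepsilon_{t-2} + \cdots\}\right] = \rho E\left[(\varepsilon_{t-1} + \rho \varepsilon_{t-2} + \cdots)^2\right]$$

$$E[u_t u_{t-1}] = \rho \sigma_\varepsilon^2 \left[\frac{1}{1 - \rho^2}\right] = \rho \sigma_u^2$$

Similarly:

$$\operatorname{Cov}(u_t, u_{t-2}) = \rho^2 \sigma_u^2$$

In general

$$\operatorname{Cov}(u_t, u_{t-s}) = \rho^s \sigma_u^2$$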
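The geometric-series step in the variance of a first-order autoregressive disturbance can be checked numerically. This is a small sketch assuming NumPy; the values of `rho` and `sigma_eps2` are arbitrary choices for illustration. It compares a truncated version of the infinite sum with the closed form, and then lists the first few autocovariances implied by the general result.

```python
import numpy as np

rho, sigma_eps2 = 0.7, 1.0          # assumed illustrative values, |rho| < 1

# Truncated version of sigma_eps^2 * (1 + rho^2 + rho^4 + ...).
r = np.arange(200)
var_series = sigma_eps2 * np.sum(rho ** (2 * r))

# Closed form from the sum of an infinite geometric progression.
var_closed = sigma_eps2 / (1.0 - rho**2)

# Autocovariances at lags 0, 1, 2, 3: rho^s times the variance.
autocov = [rho**s * var_closed for s in range(4)]

print(var_series, var_closed, autocov)
```

The two variance figures agree to many decimal places, and the autocovariances decline geometrically with the lag.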


9.4. CONSEQUENCES OF AUTOCORRELATION

Following are the consequences of OLS method in

the presences of autocorrelation.

1. The least squares estimator is unbiased even when the disturbances are correlated.

2. With autocorrelated values of the disturbance term, the OLS variances of the parameter estimates are likely to be larger than those of other econometric methods, so the estimators do not have minimum variance, that is, they are not BLUE.

3. If the values of $u$ are autocorrelated, predictions based on ordinary least squares estimates will be inefficient, in the sense that they will have larger variances as compared to others.

4. The usual t and F tests are likely to give misleading conclusions.

5.

correlated.

9.5. DETECTION OF AUTOCORRELATION

1. Durbin Watson d-Statistic

This test was developed by Durbin and Watson to examine whether autocorrelation exists in a given situation or not. The test statistic is

$$d = \frac{\sum_{t=2}^{N} (e_t - e_{t-1})^2}{\sum_{t=1}^{N} e_t^2}$$

where $e_t$ are the OLS residuals. This is simply the ratio of the sum of squared differences in successive residuals to the RSS (residual sum of squares), and it is called the Durbin Watson d-statistic. Note that in the numerator of the d-statistic the number of observations is (N - 1), because one observation is lost in taking successive differences.

Assumptions of the Durbin Watson d-Statistic

1. The regression model includes the intercept term.

2. The explanatory variables are non-stochastic, or fixed in repeated sampling.

3. The disturbances $u_t$ are generated by the first-order autoregressive scheme, i.e. $u_t = \rho u_{t-1} + \varepsilon_t$.

4. The regression model does not include lagged values of the dependent variable Y.

5. There is no missing observation in the data.
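The d-statistic is a one-line calculation once the residuals are available. The sketch below assumes NumPy; the function name `durbin_watson` and the two tiny residual series are illustrative only.

```python
import numpy as np

def durbin_watson(e):
    """Sum of squared successive differences of the residuals over their RSS."""
    e = np.asarray(e, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

# Residuals that alternate in sign (negative autocorrelation).
d_alternating = durbin_watson([1.0, -1.0, 1.0, -1.0])

# Residuals that never change (extreme positive autocorrelation).
d_constant = durbin_watson([1.0, 1.0, 1.0, 1.0])

print(d_alternating, d_constant)
```

For the alternating series each of the three differences is 2 in absolute value, so d = 12/4 = 3; for the constant series all differences are zero, so d = 0.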


9.6. REMEDIAL MEASURES OF AUTOCORRELATION

There are two types of remedial measures: when $\rho$ is known and when $\rho$ is unknown.

I. When $\rho$ is known

The problem of autocorrelation can be easily solved if the coefficient of first-order autocorrelation $\rho$ is known.

II. When $\rho$ is not known

There are different ways of estimating $\rho$.

i. The First-Difference Method

ii. Durbin Watson d-Statistic
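The two routes listed under the unknown case can be illustrated with a short sketch. It relies on two standard results that are stated here as assumptions because the text does not spell them out: the approximation d ≈ 2(1 - ρ), which gives an estimate of ρ from the d-statistic, and the generalized-difference transformation of each variable, which reduces to the first-difference method when ρ = 1. The function names `rho_from_d` and `quasi_difference` are hypothetical, and NumPy is assumed.

```python
import numpy as np

def rho_from_d(d):
    """Estimate rho from the Durbin Watson statistic, using d ~ 2 * (1 - rho)."""
    return 1.0 - d / 2.0

def quasi_difference(v, rho):
    """Generalized difference v_t - rho * v_{t-1}; rho = 1 gives first differences."""
    v = np.asarray(v, dtype=float)
    return v[1:] - rho * v[:-1]

rho_hat = rho_from_d(2.0)                         # d = 2 suggests no autocorrelation
first_diff = quasi_difference([2.0, 4.0, 6.0, 8.0], rho=1.0)
half_diff = quasi_difference([2.0, 4.0, 6.0, 8.0], rho=0.5)

print(rho_hat, first_diff, half_diff)
```

The same transformation would be applied to Y and to each X before running OLS on the transformed variables.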

9.7: Exercise

1) What is autocorrelation? Discuss its consequences.

2) Differentiate between autocorrelation and serial

correlation. What are its various sources?

3) How can one detect autocorrelation?

4) In the presence of autocorrelation how can one

obtain efficient estimates?

5) Describe briefly Durbin Watson d-statistic.

6) Apply Durbin Watson d-statistic to the following

data:

Y

X

2

1

1.37

2

2

0.46

2

3

0.45

1

4

-2.36

3

5

1.27

5

6

-0.81

6

7

-0.09

6

8

-1.00

10

9

2.08

10

10

1.17

10

11

0.27

12

12

1.36

15

13

3.44

10

14

-2.46

11

15

2.37


Chapter: 10

SIMULTANEOUS EQUATION MODELS

10.1: INTRODUCTION

There are two types of multi-equation models:

1. Simultaneous Equation Models

2. Recursive Equation Models

1. Simultaneous Equation Models

When the dependent variable in one equation is also an explanatory variable in some other equation, we call it a simultaneous equations system or model. The variables entering a simultaneous equation model are of two types:

i. Endogenous variables

ii. Exogenous variables

i. Endogenous variable

A variable whose values are determined within the model is called an endogenous variable.

ii. Exogenous variable

A variable whose values are determined outside the model is called an exogenous variable. These variables are treated as nonstochastic.

2. Recursive Equation Models

In this model one dependent variable may be a function of another dependent variable, but the other dependent variable is not a function of the first.


10.2: SYSTEM OF SIMULTANEOUS EQUATION

When Y and X influence each other, it is not possible to describe the relationship between Y and X by using a single equation. We must use a multi-equation model, in which we include separate equations in which Y and X each appear as an endogenous variable, although they might appear as explanatory variables in other equations of the model.

10.3: Simultaneous Equation Bias

It refers to the overestimation or underestimation of the structural parameters obtained from the application of OLS to the structural equations. This bias results because those endogenous variables of the system which are also explanatory variables are correlated with the error term.
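Simultaneous equation bias can be made visible with a small simulation. The sketch below assumes NumPy and uses a simple two-equation income model, chosen here purely for illustration and not taken from the text: consumption depends on income and a disturbance, and income is the sum of consumption and exogenous investment. All parameter values and variable names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 5000
beta0, beta1 = 10.0, 0.8                  # hypothetical structural parameters

investment = rng.normal(50.0, 1.0, size=n)   # exogenous variable
u = rng.normal(0.0, 1.0, size=n)             # structural disturbance

# Solving the two equations gives income in terms of investment and u,
# so income is correlated with the disturbance of the consumption equation.
income = (beta0 + investment + u) / (1.0 - beta1)
consumption = beta0 + beta1 * income + u

# OLS applied directly to the structural consumption equation.
Z = np.column_stack([np.ones(n), income])
(b0_ols, b1_ols), *_ = np.linalg.lstsq(Z, consumption, rcond=None)

print(b1_ols)
```

With equal variances for investment and the disturbance, the OLS slope settles near 0.9 rather than the true 0.8, an overestimate that does not disappear as the sample grows.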

Structural Equations and Parameters

Structural equations describe the structure of an economy or the behavior of some economic agents such as consumers or producers. There is only one structural equation for each of the endogenous variables of the system.

The coefficients of the structural equations are called

structural parameters and express the direct effect of each