For Any SAP / IBM / Oracle - Materials Purchase Visit : www.erpexams.com OR Contact Via Email Directly At : sapmaterials4u@gmail.com

Data Services - Platform and Transforms

PARTICIPANT HANDBOOK

INSTRUCTOR-LED TRAINING

Course Version: 10

Course Duration: 3 Day(s)

Material Number: 50120656

SAP Copyrights and Trademarks

© 2014 SAP AG. All rights reserved.

No part of this publication may be reproduced or transmitted in any form or for any purpose without

the express permission of SAP AG. The information contained herein may be changed without prior

notice.

Some software products marketed by SAP AG and its distributors contain proprietary software components of other software vendors.

• Microsoft, Windows, Excel, Outlook, and PowerPoint are registered trademarks of Microsoft Corporation.

• IBM, DB2, DB2 Universal Database, System i, System i5, System p, System p5, System x, System z, System z10, System z9, z10, z9, iSeries, pSeries, xSeries, zSeries, eServer, z/VM, z/OS, i5/OS, S/390, OS/390, OS/400, AS/400, S/390 Parallel Enterprise Server, PowerVM, Power Architecture, POWER6+, POWER6, POWER5+, POWER5, POWER, OpenPower, PowerPC, BatchPipes, BladeCenter, System Storage, GPFS, HACMP, RETAIN, DB2 Connect, RACF, Redbooks, OS/2, Parallel Sysplex, MVS/ESA, AIX, Intelligent Miner, WebSphere, Netfinity, Tivoli and Informix are trademarks or registered trademarks of IBM Corporation.

• Linux is the registered trademark of Linus Torvalds in the U.S. and other countries.

• Adobe, the Adobe logo, Acrobat, PostScript, and Reader are either trademarks or registered trademarks of Adobe Systems Incorporated in the United States and/or other countries.

• Oracle is a registered trademark of Oracle Corporation.

• UNIX, X/Open, OSF/1, and Motif are registered trademarks of the Open Group.

• Citrix, ICA, Program Neighborhood, MetaFrame, WinFrame, VideoFrame, and MultiWin are trademarks or registered trademarks of Citrix Systems, Inc.

• HTML, XML, XHTML and W3C are trademarks or registered trademarks of W3C®, World Wide Web Consortium, Massachusetts Institute of Technology.

• Java is a registered trademark of Sun Microsystems, Inc.

• JavaScript is a registered trademark of Sun Microsystems, Inc., used under license for technology invented and implemented by Netscape.

• SAP, R/3, SAP NetWeaver, Duet, PartnerEdge, ByDesign, SAP BusinessObjects Explorer, StreamWork, and other SAP products and services mentioned herein as well as their respective logos are trademarks or registered trademarks of SAP AG in Germany and other countries.

• Business Objects and the Business Objects logo, BusinessObjects, Crystal Reports, Crystal Decisions, Web Intelligence, Xcelsius, and other Business Objects products and services mentioned herein as well as their respective logos are trademarks or registered trademarks of Business Objects Software Ltd. Business Objects is an SAP company.

• Sybase and Adaptive Server, iAnywhere, Sybase 365, SQL Anywhere, and other Sybase products and services mentioned herein as well as their respective logos are trademarks or registered trademarks of Sybase, Inc. Sybase is an SAP company.

All other product and service names mentioned are the trademarks of their respective companies.

Data contained in this document serves informational purposes only. National product

specifications may vary.

These materials are subject to change without notice. These materials are provided by SAP AG and its affiliated companies ("SAP Group") for informational purposes only, without representation or warranty of any kind, and SAP Group shall not be liable for errors or omissions with respect to the materials. The only warranties for SAP Group products and services are those that are set forth in the express warranty statements accompanying such products and services, if any. Nothing herein should be construed as constituting an additional warranty.

© Copyright. All rights reserved.

About This Handbook

This handbook is intended to both complement the instructor-led presentation of this course and to serve

as a reference for self-study.

Typographic Conventions

American English is the standard used in this handbook.

The following typographic conventions are also used.

• This information is displayed in the instructor's presentation

• Demonstration

• Procedure

• Warning or Caution

• Hint

• Related or Additional Information

• Facilitated Discussion

• User interface control: Example text

• Window title: Example text

Contents

Course Overview

Unit 1: Data Services
Lesson: Defining Data Services

Unit 2: Source and Target Metadata
Lesson: Defining Datastores in Data Services
Exercise 1: Create Source and Target Datastores
Lesson: Defining a Data Services Flat File Format
Exercise 2: Create a Flat File Format

Unit 3: Batch Job Creation
Lesson: Creating Batch Jobs
Exercise 3: Create a Basic Data Flow

Unit 4: Batch Job Troubleshooting
Lesson: Writing Comments with Descriptions and Annotations
Lesson: Validating and Tracing Jobs
Exercise 4: Set Traces and Annotations
Lesson: Debugging Data Flows
Exercise 5: Use the Interactive Debugger
Lesson: Auditing Data Flows
Exercise 6: Use Auditing in a Data Flow

Unit 5: Functions, Scripts, and Variables
Lesson: Using Built-In Functions
Exercise 7: Use the search_replace Function
Exercise 8: Use the lookup_ext() Function
Exercise 9: Use Aggregate Functions
Lesson: Using Variables, Parameters, and Scripts
Exercise 10: Create a Custom Function

Unit 6: Platform Transforms
Lesson: Using Platform Transforms
Lesson: Using the Map Operation Transform
Exercise 11: Use the Map Operation Transform
Lesson: Using the Validation Transform
Exercise 12: Use the Validation Transform
Lesson: Using the Merge Transform
Exercise 13: Use the Merge Transform
Lesson: Using the Case Transform
Exercise 14: Use the Case Transform
Lesson: Using the SQL Transform
Exercise 15: Use the SQL Transform

Unit 7: Error Handling
Lesson: Setting Up Error Handling
Exercise 16: Create an Alternative Work Flow

Unit 8: Changes in Data
Lesson: Capturing Changes in Data
Lesson: Using Source-Based Change Data Capture (CDC)
Exercise 17: Use Source-Based Change Data Capture (CDC)
Lesson: Using Target-Based Change Data Capture (CDC)
Exercise 18: Use Target-Based Change Data Capture (CDC)

Unit 9: Data Services Integrator Transforms
Lesson: Using Data Services Integrator Transforms
Lesson: Using the Pivot Transform
Exercise 19: Use the Pivot Transform
Lesson: Using the Data Transfer Transform
Exercise 20: Use the Data Transfer Transform

Course Overview

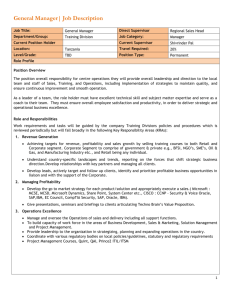

TARGET AUDIENCE

This course is intended for the following audiences:

•

Data Consultant/Manager

•

Solution Architect

•

Super / Key / Power User

Data Services

Lesson 1

Defining Data Services

UNIT OBJECTIVES

•

Define Data Services

Unit 1

Lesson 1

Defining Data Services

LESSON OVERVIEW

Data Services is a graphical interface for creating and staging jobs for data integration and data

quality purposes.

Create jobs that extract data from heterogeneous sources, transform the data to meet the business requirements of your organization, and load the data into a single location.

This unit describes the Data Services platform and its architecture, Data Services objects, and its graphical interface, the Data Services Designer.

LESSON OBJECTIVES

After completing this lesson, you will be able to:

•

Define Data Services

Data Services

Business Example

For reporting in SAP NetWeaver Business Warehouse, your company needs data from diverse data sources, such as SAP systems, non-SAP systems, the Internet, and other business applications. Examine the technologies that SAP Data Services offers for data acquisition.

Data Services

Data Services provides a graphical interface that allows:

•

The easy creation of jobs that extract data from heterogeneous sources

•

The transformation of data to meet the business requirements of the organization

•

The loading of data to one or more locations

Data Services combines both batch and real-time data movement and management with intelligent caching to provide a single data integration platform. As shown in the figure Data Services Architecture - Access Server, the platform is used to manage information from any information source and for any information use.
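The extract, transform, and load pattern described above can be pictured with a minimal sketch. It is plain Python, not anything generated by Data Services, and the sample sources, field names, and the functions extract, transform, and load are hypothetical, chosen only for illustration.

```python
import csv
import io

# Hypothetical heterogeneous sources: a CSV export and rows from an application.
CSV_SOURCE = "id,name,country\n1,alice,us\n2,bob,de\n"
APP_SOURCE = [{"id": 3, "name": "carol", "country": "fr"}]


def extract():
    """Read rows from both sources into one list of dictionaries."""
    rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    rows.extend(APP_SOURCE)
    return rows


def transform(rows):
    """Apply illustrative business rules: integer keys, title-case names,
    upper-case country codes."""
    return [
        {
            "id": int(row["id"]),
            "name": row["name"].title(),
            "country": row["country"].upper(),
        }
        for row in rows
    ]


def load(rows, target):
    """Write the transformed rows into a target keyed by id."""
    for row in rows:
        target[row["id"]] = row
    return target


# One pass through the three stages; the dictionary stands in for a target table.
warehouse = load(transform(extract()), {})
```

In Data Services the same three stages are drawn graphically rather than coded by hand; the sketch only makes the division of responsibilities explicit.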

Figure 1: Data Services Architecture - Access Server

(Diagram labels: Real-time Client, Adapters, Access Server, Job Server, Engines, Local Repository, Management Console, Browser, Source, Target)

Data Staging

This unique combination allows you to:

•

Stage data in an operational data store, data warehouse, or data mart

•

Update staged data in batch or real-time modes

•

Create a single environment for developing, testing, and deploying the entire data integration platform

•

Manage a single metadata repository to capture the relationships between different extraction

and access methods and provide integrated lineage and impact analysis

For most Enterprise Resource Planning (ERP) applications, Data Services generates SQL that is optimized for the specific target database (for example, Oracle, DB2, SQL Server, and Informix). Automatically generated, optimized code reduces the cost of maintaining data warehouses and enables quick building of data solutions that meet user requirements faster than other methods (for example, custom-coding, direct-connect calls, or PL/SQL).

Data Services can apply data changes in various data formats, including any custom format using a Data Services adapter. Enterprise users can apply data changes against multiple back-office systems singularly or sequentially. By generating calls native to the system in question, Data Services makes it unnecessary to develop and maintain customized code to manage the process.

It is also possible to design access intelligence into each transaction by adding flow logic that checks values in a data warehouse or in the transaction itself before posting it to the target ERP system.
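The idea of flow logic that checks values before posting can be sketched as follows. This is an illustration in plain Python under assumed rules: the customer lookup, the credit limit check, and the function post_transaction are hypothetical and do not come from Data Services.

```python
def post_transaction(transaction, warehouse, target):
    """Post a transaction to the target only if every check passes.

    Returns True when the transaction was posted, False when it was rejected.
    """
    # Check a value held in the data warehouse: the customer must be known.
    customer = warehouse.get(transaction["customer_id"])
    if customer is None:
        return False

    # Check values carried by the transaction itself.
    if transaction["amount"] <= 0:
        return False
    if transaction["amount"] > customer["credit_limit"]:
        return False

    target.append(transaction)
    return True


# Hypothetical warehouse content and an empty list standing in for the ERP target.
warehouse_customers = {"C1": {"credit_limit": 500}}
erp_target = []

accepted = post_transaction({"customer_id": "C1", "amount": 120}, warehouse_customers, erp_target)
rejected = post_transaction({"customer_id": "C1", "amount": 900}, warehouse_customers, erp_target)
unknown = post_transaction({"customer_id": "C9", "amount": 10}, warehouse_customers, erp_target)
```

Only the first transaction reaches the target; the other two are stopped by the checks before anything is posted.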

Data Services Architecture

Data Services relies on several unique components to accomplish the data integration and data quality activities required to manage corporate data.

Data Services Standard Components

•

Designer

•

Repository

•

Job server

•

Engines

•

Access server

•

Adapters

•

Real-time services

•

Management console

The figure Data Services Architecture illustrates the relationships between components.

Figure 2: Data Services Architecture

(Diagram labels: Real-time Client, Adapters, Designer, Access Server, Job Server, Engines, Local Repository, Management Console, Browser, Source, Target)

The Data Services Designer

Data Services Designer is a Windows client application used to create, test, and manually execute jobs that transform data and populate a data warehouse. Using the Designer, as shown in the figure Data Services Designer Interface, create data management applications that consist of data mappings, transformations, and control logic.

Figure 3: Data Services Designer Interface

Create objects that represent data sources, and then drag, drop, and configure them in flow

diagrams. The Designer allows for the management of metadata stored in a local repository.

From the Designer, trigger the job server to run jobs for initial application testing.

The Data Services Repository

The Data Services repository is a set of tables that stores user-created and predefined system

objects, source and target metadata, and transformation rules. It is set up on an open client/server platform to facilitate sharing metadata with other enterprise tools. Each repository is

stored on an existing Relational Database Management System (RDBMS).

Data Services Repository Tables

The Data Services repository is a set of tables that includes:

•

User-created and predefined system objects

•

Source and target metadata

•

Transformation rules

Each repository is stored on a supported RDBMS such as MySQL, Oracle, Microsoft SQL Server, Sybase, or DB2. Each repository is associated with one or more job servers.

Repository Types

•

Local repository:

Known in the Designer as the Local Object Library, the local repository is used by an

application architect to store definitions of source and target metadata and Data Services

objects.

•

Central repository:

Known in the Designer as the Central Object Library, the central repository is an optional component that can be used to support a multiuser environment. The Central Object Library provides a shared library that allows developers to check objects in and out for development.

•

Profiler repository:

The profiler repository stores information that is used to determine the quality of data.

Data Services Job Server

Each repository is associated with at least one Data Services job server, which retrieves the job

from its associated repository and starts the data movement engine as shown in the figure Data

Services Architecture. The data movement engine integrates data from multiple heterogeneous

sources, performs complex data transformations, and manages extractions and transactions

from ERP systems and other sources. The job server can move data in batch or real-time mode and uses distributed query optimization, multithreading, in-memory caching, in-memory data transformations, and parallel processing to deliver high data throughput and scalability.

Figure 4: Data Services Architecture - Job Server

(Diagram labels: Real-time Client, Designer, Adapters, Access Server, Job Server, Engines, Local Repository, Management Console, Browser, Source, Target)

Designing a Job

When designing a job, run it from the Designer. In the production environment, the job server runs

jobs triggered by a scheduler or by a real-time service managed by the Data Services access

server. In production environments, balance job loads by creating a job server group (multiple job servers), which executes jobs according to the overall system load. Data Services provides distributed processing capabilities through server groups. A server group is a collection of job servers that each reside on different Data Services server computers. Each Data Services server can contribute one job server to a specific server group. Each job server collects resource utilization information for its computer. This information is utilized by Data Services to determine where a job, data flow, or sub data flow (depending on the distribution level specified) is executed.
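As a rough illustration of how resource utilization could drive the choice of job server within a server group, consider the sketch below. The handbook does not describe the actual selection algorithm, so the scoring rule (the higher of CPU and memory load), the JobServer class, and the function pick_job_server are assumptions made purely for this example.

```python
from dataclasses import dataclass


@dataclass
class JobServer:
    """Hypothetical view of a job server and the utilization it reports."""
    name: str
    cpu_load: float     # fraction of CPU in use, 0.0 to 1.0
    memory_load: float  # fraction of memory in use, 0.0 to 1.0

    def utilization(self):
        # Assumed scoring rule: the busier of the two resources decides.
        return max(self.cpu_load, self.memory_load)


def pick_job_server(server_group):
    """Return the least utilized job server in the group."""
    if not server_group:
        raise ValueError("server group is empty")
    return min(server_group, key=lambda server: server.utilization())


# One job server per computer, as described for server groups.
group = [
    JobServer("JS_A", cpu_load=0.8, memory_load=0.4),
    JobServer("JS_B", cpu_load=0.3, memory_load=0.5),
    JobServer("JS_C", cpu_load=0.6, memory_load=0.2),
]
chosen = pick_job_server(group)
```

With these sample figures the job would be dispatched to JS_B, whose busiest resource is at 50 percent.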

The Data Services Engines

When Data Services jobs are executed, the job server starts Data Services engine processes to

perform data extraction, transformation, and movement. Data Services engine processes use

parallel processing and in-memory data transformations to deliver high data throughput and

scalability.

The Data Services Management Console

The Data Services Management Console provides access to these features:

•

Administrator

Administer Data Services resources, including:

Scheduling, monitoring, and executing batch jobs.

Configuring, starting, and stopping real-time services.

Configuring job server, access server, and repository usage.

Configuring and managing adapters.

Managing users.

Publishing batch jobs and real-time services via web services.

Reporting on metadata.

Data Services object promotion which is used to:

Promote objects between repositories for development, testing and production phases.

•

Auto documentation

View, analyze, and print graphical representations of all objects as depicted in Data Services

Designer, including their relationships and properties.

•

Data Validation

Evaluate the reliability of the target data based on the validation rules created in the Data

Services batch jobs to review, assess, and identify potential inconsistencies or errors in source

data.

•

Impact and Lineage Analysis

Analyze end-to-end impact and lineage for Data Services tables and columns, and SAP

BusinessObjects Business Intelligence platform objects such as universes, business views,

and reports.

•

Operational Dashboard

View dashboards of status and performance execution statistics of Data Services jobs for one

or more repositories over a given time period.

•

Data Quality Reports

Use data quality reports to view and export SAP Crystal Reports for batch and real-time jobs that include statistics-generating transforms. Report types include job summaries, transform-specific reports, and transform group reports.

To generate reports for Match, US Regulatory Address Cleanse, and Global Address Cleanse transforms, enable the Generate Report Data option in the Transform Editor.

Other Data Services Tools

There are also several tools that assist with managing a Data Services installation. The Data Services repository manager allows the creation, upgrade, and checking of versions of the local, central, and profiler repositories.

The Data Services server manager allows adding, deleting, or editing of properties in the job server. It is automatically installed on each computer on which a job server is installed.

Use the server manager to define links between job servers and repositories. Link multiple job servers on different machines to a single repository (for load balancing) or each job server to multiple repositories (with one default) to support individual repositories (for example, separating test and production environments).

The license manager displays the Data Services components for which a license is currently held.

Data Services Objects

Data Services provides various objects that are used when building data integration and data

quality applications.

Data Services Object Types

•

Projects, for example, folders for organizing a repository

•

Jobs that are executable

•

Work flows, control operations, for example, sub jobs

•

Data flows, where the ETL occurs

•

Scripts, code embedded in other objects

•

Datastores, sources and targets, for example, a database

•

File formats, for example, flat files, XML schemas, and Excel

Objects Used

In Data Services, all entities that are added, defined, modified, or worked with are objects. Some of the most frequently used objects are:

•

Projects

•

Jobs

•

Work flows

•

Data flows

•

Transforms

•

Scripts

The figure Data Services Objects shows some common objects.

Figure 5: Data Services Objects

(Diagram labels in the project area: Project, Job, Work flow, Data flow, Transform, Script)

Objects

All objects have options, properties, and classes, and can be modified to change the behavior of the object.

Options control the object. For example, when setting up a connection to a database, the database name is an option for the connection. Properties describe the object. For example, the name and creation date describe what the object is used for and when it became active. Attributes are properties used to locate and organize objects. Classes define how an object can be used.

Every object is either reusable or single-use. Single-use objects appear only as components of other objects. They operate only in the context in which they were created. Single-use objects cannot be copied. A reusable object has a single definition and all calls to the object refer to that definition. If you change the definition of the object in one place, and then save the object, the change is reflected in all other calls to the object.

Most objects created in Data Services are available for reuse. After you define and save a reusable object, Data Services stores the definition in the repository. Reuse the definition as necessary by creating calls to it. For example, a data flow within a project is a reusable object. Multiple jobs, such as a weekly load job and a daily load job, can call the same data flow. If this data flow is changed, both jobs call the new version of the data flow.
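The behavior of reusable objects, one stored definition with many calls to it, can be sketched in a few lines. The dictionary-based repository, the object names, and the helper functions below are hypothetical stand-ins and do not reflect how Data Services stores its metadata.

```python
# Hypothetical repository: one stored definition per reusable object.
repository = {
    "DF_LoadCustomers": {"version": 1, "steps": ["read", "query", "write"]},
}

# Jobs hold calls (names), not copies of the data flow definition.
daily_job = {"name": "Job_Daily", "calls": ["DF_LoadCustomers"]}
weekly_job = {"name": "Job_Weekly", "calls": ["DF_LoadCustomers"]}


def resolve(job, repo):
    """Look up the current definition behind every call in a job."""
    return [repo[name] for name in job["calls"]]


def save_definition(repo, name, steps):
    """Store a changed definition; every call sees it from now on."""
    repo[name] = {"version": repo[name]["version"] + 1, "steps": steps}


# Change the data flow once and save it.
save_definition(repository, "DF_LoadCustomers", ["read", "query", "validate", "write"])

daily_view = resolve(daily_job, repository)[0]
weekly_view = resolve(weekly_job, repository)[0]
```

Because both jobs resolve the same name, the daily and weekly jobs pick up version 2 without being edited themselves.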

Edit reusable objects at any time, independent of the currently open project. For example, if you open a new project, you can still open a data flow and edit it. However, the changes made to the data flow are not stored until they are saved.

Defining the Relationship between Objects

Jobs are composed of work flows and/or data flows as shown in the figure Data Services Object

Relationships:

•

A work flow is the incorporation of several data flows into a sequence

•

A data flow process transforms source data into target data

Figure 6: Data Services Object Relationships

Work and Data Flows

A work flow manages data flows and the operations that support them. It also defines the interdependencies between data flows. For example, if one target table depends on values from other tables, use the work flow to specify the order in which Data Services populates the tables. Work flows are also used to define strategies for handling errors that occur during project execution, or to define conditions for running sections of a project.

A data flow defines the basic task that Data Services accomplishes, which involves moving data from one or more sources to one or more target tables or files. Define data flows by identifying the sources from which to extract data, the transformations the data should undergo, and the targets.
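The ordering role of a work flow can be illustrated with a dependency graph. In Data Services the order is drawn by connecting objects in the Designer; the sketch below only shows the underlying idea using Python's standard graphlib module (Python 3.9 or later). The data flow names and the execution_order helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Each data flow maps to the data flows whose target tables it depends on.
depends_on = {
    "DF_Customers": set(),
    "DF_Products": set(),
    "DF_Sales": {"DF_Customers", "DF_Products"},
}


def execution_order(dependencies):
    """Return an order in which every data flow runs after its prerequisites."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(depends_on)
```

Whatever order the two independent data flows take, DF_Sales always comes last because its target table depends on the other two.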

Defining Projects and Jobs

A project is the highest-level object and is scheduled independently for execution. A project is a single-use object that allows the grouping of jobs. For example, use a project to group jobs that have schedules that depend on one another or that are monitored together.

Project Characteristics

•

Projects are listed in the Local Object Library

•

Only one project can be open at a time

•

Projects cannot be shared among multiple users

The objects in a project appear hierarchically in the project area. If a plus sign (+) appears next to an object, it can be expanded to view the lower-level objects contained in the object. Data Services displays the contents as both names and icons in the project area hierarchy and in the workspace. Jobs are associated with a project before they can be executed in the project area of Designer.

Using Work Flows

Jobs with data flows can be developed without using work flows. However, consider nesting data flows inside work flows by default. This practice provides several benefits.

Always using work flows makes jobs more adaptable to additional development and specification changes. For instance, if a job initially consists of four data flows that run sequentially, they could be set up without work flows. But what if specification changes require that they be merged into another job instead? The developer must then replicate their sequence correctly in the other job. If the data flows had initially been added to a work flow, the developer could simply copy that work flow into the correct position within the new job. There would be no need to learn, copy, and verify the previous sequence. The change is made more quickly and with greater accuracy.

Using one data flow per work flow also benefits adaptability. Initially, it may have been decided that recovery units are not important, the expectation being that if the job fails, the whole process can simply be rerun. However, as data volumes increase, a full reprocessing may prove too time consuming. The job may then be changed to incorporate work flows so that recovery units can bypass the reprocessing of successful steps. However, these changes can be complex and can consume more time than is allotted in a project plan. They also open up the possibility that units of recovery are not properly defined. Setting these up during initial development, when the full analysis of the processing is available, is preferred.
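The recovery-unit idea described above can be sketched in a few lines of Python. This is a conceptual illustration only, not Data Services code: the `run_job` function, the step names, and the in-memory `completed` set are hypothetical stand-ins for work flows and for whatever status store a real job would use.

```python
from typing import Callable, Dict, List, Set


def run_job(steps: Dict[str, Callable[[], None]], completed: Set[str]) -> List[str]:
    """Run steps in order, skipping those already recorded as completed.

    Each step plays the role of a work flow acting as a recovery unit.
    Returns the names of the steps actually executed in this run.
    """
    executed = []
    for name, step in steps.items():
        if name in completed:
            continue  # finished in an earlier run, so it is not reprocessed
        step()  # an exception here stops the job; `completed` keeps earlier progress
        completed.add(name)
        executed.append(name)
    return executed


# Hypothetical example: the second step fails on the first attempt only.
attempts = {"load_orders": 0}


def load_customers() -> None:
    pass


def load_orders() -> None:
    attempts["load_orders"] += 1
    if attempts["load_orders"] == 1:
        raise RuntimeError("simulated failure")


steps = {"load_customers": load_customers, "load_orders": load_orders}
completed: Set[str] = set()

try:
    run_job(steps, completed)
except RuntimeError:
    pass  # the first run fails after load_customers has succeeded

rerun = run_job(steps, completed)  # only the failed step runs again
```

If the four steps had been written as one undivided unit, the rerun would have had to repeat `load_customers` as well; defining the units up front is what makes the partial rerun possible.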

Note:

This course focuses on creating batch jobs using database datastores and file formats.

Using the Data Services Designer

The Data Services Designer interface allows the planning and organizing of data integration and data quality jobs in a visual way. Most of the components of Data Services can be programmed with this interface.

Describing the Designer Window

The Data Services Designer interface consists of a single application window and several

embedded supporting windows. The application window contains the menubar, toolbar, Local

Object Library, project area, tool palette, and workspace.

Using the Local Object Library

The Local Object Library gives access to Data Services object types. You can import objects to and export objects from the Local Object Library as a file. Importing objects from a file overwrites existing objects with the same names in the destination Local Object Library.

Whole repositories can be exported in either .atl or .xml format. Using the .xml file format can make repository content easier to read. It also allows Data Services content to be exported to other products.

Using the Tool Palette

The tool palette is a separate window that appears by default on the right edge of the Designer workspace. You can move the tool palette anywhere on the screen or dock it on any edge of the Designer window.

The icons in the tool palette allow for the creation of new objects in the workspace. Icons are disabled when they are not valid entries for the diagram open in the workspace. To show the name of each icon, hold the cursor over the icon until the tool tip for the icon appears.


When you create an object from the tool palette, you create a new definition of that object. If a new object is reusable, it is automatically available in the Local Object Library after it has been created.

If the data flow icon is selected from the tool palette and a new data flow called DF1 is defined, it is possible to later drag the existing data flow from the Local Object Library and add it to another data flow called DF2.

Using the Workspace

When you open a job or any object within a job hierarchy, the workspace becomes active with

your selection. The workspace provides a place to manipulate objects and graphically assemble

data movement processes.

These processes are represented by icons that are dragged and dropped into a workspace to create a diagram. This diagram is a visual representation of an entire data movement application or some part of a data movement application.

Specify the flow of data by connecting objects in the workspace from left to right in the order that

the data is to be moved.

LESSON SUMMARY

You should now be able to:

•

Define Data Services

Unit 1

Learning Assessment

1. Which of the following statements about work flows are true? A work flow:

Choose the correct answers.

[ ] A Transforms source data into target data.

[ ] B Incorporates several data flows into a sequence.

[ ] C Makes jobs more adaptable to additional development and/or specification changes.

[ ] D Is not an object like a project, a job, a transform, or a script.


Unit 1

Learning Assessment - Answers

1. Which of the following statements about work flows are true? A work flow:

Choose the correct answers.

[ ] A Transforms source data into target data.

[x] B Incorporates several data flows into a sequence.

[x] C Makes jobs more adaptable to additional development and/or specification changes.

[ ] D Is not an object like a project, a job, a transform, or a script.


Source and Target Metadata

Lesson 1

Defining Datastores in Data Services

Exercise 1: Create Source and Target Datastores

Lesson 2

Defining a Data Services Flat File Format

Exercise 2: Create a Flat File Format

UNIT OBJECTIVES

•

Define types of datastores

•

Define flat file formats


Unit 2

Lesson 1

Defining Datastores in Data Services

LESSON OVERVIEW

Use Datastores to define data movement requirements in Data Services.

LESSON OBJECTIVES

After completing this lesson, you will be able to:

•

Define types of datastores

Datastores

Business Example

You are responsible for extracting data into the company's SAP NetWeaver Business Warehouse system and want to convert to using Data Services as the new data transfer process.

A datastore provides a connection or multiple connections to data sources such as a database. Using the datastore connection, Data Services can import the metadata that describes the data from the data source, as shown in the figure Datastore.

[Figure 7: Datastore. An ERP system, a database, and an application each connect to Data Services through a datastore.]

Datastore

•

Connectivity to data source

•

Import metadata from data source

•

Read and write capability to data source

Data Services uses datastores to read data from source tables or to load data to target tables.

Each source or target is defined individually and the datastore options available depend on which

Relational Database Management System (RDBMS) or application is used for the datastore.


The specific information that a datastore contains depends on the connection. When a database or application changes, corresponding changes must be made to the datastore information in Data Services, because Data Services does not detect these structural changes automatically.

Datastore Types

•

Database datastores:

Import metadata directly from a RDBMS.

•

Application datastores:

Import metadata from most ERP systems, including J.D. Edwards OneWorld and J.D. Edwards World, Oracle Applications, PeopleSoft, SAP Applications, SAP Master Data Services, SAP NetWeaver BW, and Siebel Applications (see the appropriate supplement guide).

•

Adapter datastores:

Access application data and metadata, or just metadata. For example, if the data source is SQL compatible, the adapter might be designed to access metadata, while Data Services extracts data from or loads data directly to the application.

•

Web service datastores:

Represent a connection from Data Services to an external Web service-based data source.

Adapters

Adapters provide access to a third-party application's data and metadata. Depending on the

implementation, adapters can be used to:

•

Browse application metadata

•

Import application metadata to the Data Services repository

For batch and real-time data movement between Data Services and applications, SAP offers an

Adapter Software Development Kit (SDK) to develop custom adapters. It is also possible to buy

Data Services prepackaged adapters to access application data and metadata in any application.

Use the Data Mart Accelerator for the SAP Crystal Reports adapter to import metadata from the

SAP BusinessObjects Business Intelligence platform.

Datastore Options and Properties

Changing a Datastore Definition

Like all Data Services objects, datastores are defined by both options and properties:

•

Options control the operation of objects, including the database server name, database name,

user name, and password for the specific database.

The Edit Datastore dialog box allows for the editing of connection properties except datastore

name and datastore type for adapter and application datastores. For database datastores,

edit all connection properties except datastore name, datastore type, database type, and

database version.


•

Properties document the object. For example, the name of the datastore and the date on which it was created are datastore properties. Properties are descriptive of the object and do not affect its operation.
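The editing rule for database datastores (everything is editable except the datastore name, datastore type, database type, and database version) can be modeled as a small sketch. This is not the Data Services API: the `DatabaseDatastore` class, its field names, and the `edit_datastore` function are hypothetical, and the sample values are taken from the Alpha datastore used in the exercise for this lesson.

```python
from dataclasses import dataclass, replace

# Fields that the text says cannot be edited for a database datastore.
LOCKED_FIELDS = {"name", "datastore_type", "database_type", "database_version"}


@dataclass(frozen=True)
class DatabaseDatastore:
    name: str
    datastore_type: str
    database_type: str
    database_version: str
    server: str
    database: str
    user: str


def edit_datastore(ds: DatabaseDatastore, **changes: str) -> DatabaseDatastore:
    """Return an edited copy, rejecting any change to a locked field."""
    locked = LOCKED_FIELDS.intersection(changes)
    if locked:
        raise ValueError(f"cannot edit: {sorted(locked)}")
    return replace(ds, **changes)


alpha = DatabaseDatastore(
    name="Alpha",
    datastore_type="Database",
    database_type="Sybase ASE",
    database_version="Sybase ASE 15.X",
    server="WDFBLMT5074",
    database="ALPHA",
    user="sourceuser",
)

# Editable option: point the datastore at a different (made-up) server.
moved = edit_datastore(alpha, server="NEWHOST")

# Locked field: renaming is rejected.
try:
    edit_datastore(alpha, name="Alpha2")
    rename_rejected = False
except ValueError:
    rename_rejected = True
```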

Table 1: Properties

The properties tabs and their descriptions are outlined in the table:

Properties Tab      Description
General             Contains the name and description of the datastore, if
                    available. The datastore name appears on the object in the
                    Local Object Library and in calls to the object. The name
                    of the datastore cannot be changed after creation.
Attributes          Includes the date when the datastore was created. This
                    value cannot be changed.
Class attributes    Includes overall datastore information such as the
                    description and date created.

Metadata

Importing Metadata from Data Sources

Data Services determines and stores a specific set of metadata information for tables. Import metadata by naming, searching, and browsing. After importing metadata, edit column names, descriptions, and data types. The edits are propagated to all objects that call these objects.

Datastore Metadata

•

External metadata:

Connects to the database and displays the objects for which access is granted.

•

Repository metadata:

Has been imported into the repository. This is the metadata that Data Services uses.

•

Reconcile vs reimport:

Reconcile compares external metadata to repository metadata.

Reimport overwrites repository metadata with external.
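The difference between reconciling and reimporting can be illustrated with a minimal sketch. It assumes, purely for illustration, that a table's metadata is a dictionary of column names to data types; the `reconcile` and `reimport` functions and the sample columns are hypothetical and are not part of Data Services.

```python
from typing import Dict, List

# A table's metadata is modeled here as {column name: data type}.
TableMeta = Dict[str, str]


def reconcile(external: TableMeta, repository: TableMeta) -> Dict[str, List[str]]:
    """Compare external metadata to repository metadata without changing either."""
    return {
        "added": sorted(set(external) - set(repository)),
        "removed": sorted(set(repository) - set(external)),
        "changed": sorted(
            col for col in set(external) & set(repository)
            if external[col] != repository[col]
        ),
    }


def reimport(external: TableMeta, repository: TableMeta) -> None:
    """Overwrite the repository copy with the external definition."""
    repository.clear()
    repository.update(external)


external = {"id": "int", "name": "varchar(50)", "created": "datetime"}
repository = {"id": "int", "name": "varchar(30)", "region": "varchar(10)"}

diff = reconcile(external, repository)  # reports differences only
reimport(external, repository)          # repository now matches the source
```

Reconciling first shows what a reimport would change before anything in the repository is overwritten.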

Table 2: Metadata

The metadata types and their descriptions are outlined in the table:

Metadata              Description
Table name            The name of the table as it appears in the database.
Table description     The description of the table.
Column name           The name of the table column.
Column description    The description of the column.
Column data type      The data type for each column. If a column is defined as
                      an unsupported data type (see the data types listed
                      below), Data Services converts the data type to one that
                      is supported. In some cases, if Data Services cannot
                      convert the data type, it ignores the column entirely.
                      Supported data types are: BLOB, CLOB, date, datetime,
                      decimal, double, int, interval, long, numeric, real,
                      time, timestamp, and varchar.
Primary key column    The column or columns that comprise the primary key for
                      the table. After a table has been added to a data flow
                      diagram, these columns are indicated in the column list
                      by a key icon next to the column name.
Table attribute       Information Data Services records about the table, such
                      as the date created and date modified, when available.
Owner name            Name of the table owner.

It is also possible to import stored procedures from DB2, MS SQL Server, Oracle, SAP HANA, SQL Anywhere, Sybase ASE, Sybase IQ, and Teradata databases. You can also import stored functions and packages from Oracle. Use these functions and procedures in the extraction specifications given to Data Services.

Imported Information

Information that is imported for functions includes:

•

Function parameters

•

Return type

•

Name, owner

Imported functions and procedures appear in the Function branch of each datastore tree on the

datastores tab of the Local Object Library.

Importing Metadata from Data Sources

The easiest way to import metadata is by browsing. Note that functions cannot be imported using

this method.

Import Metadata by Browsing

1. On the datastores tab of the Local Object Library, right-click the datastore and select Open

from the menu.

The items available to import appear in the workspace. Choose External Metadata.


2. Navigate to and select the tables for which you want to import metadata.

Hold down the Ctrl or Shift key to select multiple tables.

3. Right-click the selected items and select Import from the menu.

The workspace contains columns that indicate whether the table has already been imported into Data Services (Imported) and whether the table schema has changed since it was imported (Changed). To verify whether the repository contains the most recent metadata for an object, right-click the object and select Reconcile.

4. In the Local Object Library, expand the datastore to display the list of imported objects, organized into functions, tables, and template tables.

5. To view data for an imported table, right-click the table and select View Data from the menu.


Unit 2

Exercise 1

Create Source and Target Datastores

Business Example

You are working as an ETL developer using SAP Data Services Designer. You will create datastores for the source, target, and staging databases.

Note:

When the data values for the exercise include XX, replace XX with the number that

your instructor has provided to you.

Start the SAP BusinessObjects Data Services Designer

1. Log in to the Data Services Designer.

Create Datastores and import metadata for the Alpha Acquisitions, Delta, HR_Datamart, and

Omega databases.

1. In your Local Object Library, create a new source Datastore for the Alpha Acquisitions

database.

Table 3: Alpha Datastore Values

Field                   Value
Datastore name          Alpha
Datastore type          Database
Database type           Sybase ASE
Database version        Sybase ASE 15.X
Database server name    WDFBLMT5074
Database name           ALPHA
User name               sourceuser
Password                sourcepass

2. Import the metadata for the Alpha Acquisitions database source tables.

3. In your Local Object Library, create a new Datastore for the Delta staging database.

Field                   Value
Datastore name          Delta
Datastore type          Database
Database type           Sybase ASE
Database version        Sybase ASE 15.X
Database server name    WDFBLMT5074
Database name           DELTAXX
User name               studentXX
Password                studentXX

4. In your Local Object Library, create a new target Datastore for the HR Data Mart.

Field                   Value
Datastore name          HR_datamart
Datastore type          Database
Database type           Sybase ASE
Database version        Sybase 15.X
Database server name    WDFLBMT5074
Database name           HR_DATAMARTXX
User name               studentXX
Password                studentXX

5. Import the metadata for the HR_datamart database source tables.

6. In your Local Object Library, create a new target Datastore for the Omega data warehouse.

Field                   Value
Datastore name          Omega
Datastore type          Database
Database type           Sybase ASE
Database version        Sybase ASE 15.X
Database server name    WDFLBMT5074
Database name           OMEGAXX
User name               studentXX
Password                studentXX


Unit 2

Solution 1

Create Source and Target Datastores

Business Example

You are working as an ETL developer using SAP Data Services Designer. You will create datastores for the source, target, and staging databases.

Note:

When the data values for the exercise include XX, replace XX with the number that your instructor has provided to you.

Start the SAP BusinessObjects Data Services Designer

1. Log in to the Data Services Designer.

a) In the Windows Terminal Server (WTS) training environment desktops, choose Start > All Programs > SAP Data Services 4.2 > Data Services Designer.

b) The System-host[:port] field should be: WDFLBMT5074:6400

c) In the SAP Data Services Repository Login dialog box, in the User name field, enter your user ID, train-XX.

d) In the Password field, enter your password, which is the same as your user name.

e) Choose Logon.

f) From the list of repositories, choose your repository, DSREPOXX.

g) Choose OK.

Create Datastores and import metadata for the Alpha Acquisitions, Delta, HR_Datamart, and

Omega databases.

1. In your Local Object Library, create a new source Datastore for the Alpha Acquisitions

database.

Table 3: Alpha Datastore Values

Field                   Value
Datastore name          Alpha
Datastore type          Database
Database type           Sybase ASE
Database version        Sybase ASE 15.X
Database server name    WDFBLMT5074
Database name           ALPHA
User name               sourceuser
Password                sourcepass

a) In the Local Object Library, choose the Datastores tab.

b) Right-click the white workspace of the tab and choose New.

c) In the resulting dialog box, in the appropriate fields, enter the values from the Alpha Datastore Values table.

d) To save the Datastore, choose OK.

e) To close the display, choose the x icon in the upper right corner of the data display.

2. Import the metadata for the Alpha Acquisitions database source tables.

a) Right-click the Alpha datastore that you just created and choose Open.

You will see the following list of tables:

•

dbo.category

•

dbo.city

•

dbo.country

•

dbo.customer

•

dbo.department

•

dbo.employee

•

dbo.hr_comp_update

•

dbo.order_details

•

dbo.orders

•

dbo.product

•

dbo.region

b) To select all of the tables, hold the CTRL key and click each table name.

c) Right-click the selected tables and choose Import.

d) To close the view of Alpha tables, on the tool bar, choose Back.

e) To confirm that the category table contains four records, right-click the category table on the Datastores tab of the Local Object Library and choose View Data.

f) Close the data display.


3. In your Local Object Library, create a new Datastore for the Delta staging database.

Field                   Value
Datastore name          Delta
Datastore type          Database
Database type           Sybase ASE
Database version        Sybase ASE 15.X
Database server name    WDFBLMT5074
Database name           DELTAXX
User name               studentXX
Password                studentXX

a) In the Local Object Library, choose the Datastores tab.

b) Right-click the white workspace of the tab and choose New.

c) In the resulting dialog box, in the appropriate fields, enter the values from the table above.

d) To save the Datastore, choose OK.

4. In your Local Object Library, create a new target Datastore for the HR Data Mart.

Field                   Value
Datastore name          HR_datamart
Datastore type          Database
Database type           Sybase ASE
Database version        Sybase 15.X
Database server name    WDFLBMT5074
Database name           HR_DATAMARTXX
User name               studentXX
Password                studentXX

a) In the Local Object Library, choose the Datastores tab, right-click the white workspace of the tab, and choose New.

b) In the resulting dialog box, in the appropriate fields, enter the values from the table above.

c) To save the Datastore, choose OK.

5. Import the metadata for the HR_datamart database source tables.

a) Right-click the HR_datamart datastore that you have just created.


b) Choose Import By Name.

c) Enter the table name EMP_DEPT.

The owner is dbo.

d) Repeat steps b and c for the following table names:

•

EMPLOYEE

•

HR_COMP_UPDATE

•

RECOVERY_STATUS

e) To close the view of the HR_datamart table, choose Back.

6. In your Local Object Library, create a new target Datastore for the Omega data warehouse.

Field                   Value
Datastore name          Omega
Datastore type          Database
Database type           Sybase ASE
Database version        Sybase ASE 15.X
Database server name    WDFLBMT5074
Database name           OMEGAXX
User name               studentXX
Password                studentXX

a) In the Local Object Library, choose the Datastores tab, right-click the white workspace of the tab, and choose New.

b) In the resulting dialog box, enter the values from the table above.

c) To import the metadata for the Omega database source tables, right click the Omega

datastore that you just created and choose Open.

You will see a list of tables:

•

dbo.emp_dim

•

dbo.product_dim

•

dbo.product_target

•

dbo.time_dim

d) To select all tables, select the first table and, while holding down the Shift key on the

keyboard, select the last table.

e) Right click the selected tables and choose Import.


f) Close the view of Omega tables.

g) To save your work, from the main menu, choose Project > Save All.

LESSON SUMMARY

You should now be able to:

•

Define types of datastores

Unit 2

Lesson 2

Defining a Data Services Flat File Format

LESSON OVERVIEW

Use flat file formats to create Datastores to help define data movement requirements in Data Services.

LESSON OBJECTIVES

After completing this lesson, you will be able to:

•

Define flat file formats

File Formats

Business Example

You are responsible for extracting flat file data into the company's SAP NetWeaver Business Warehouse system and want to convert to using Data Services as the new data transfer process. You must know how to create flat file formats as the basis for creating a datastore.

File formats are connections to flat files in the same way that datastores are connections to databases.

Explaining file formats

As shown in the figure File Format Editor, a file format is a set of properties that describes the structure of a flat file (ASCII). File formats describe the metadata structure. A file format describes a specific file. A file format template is a generic description that can be used for multiple data files.

The software can use data stored in files as data sources and targets. A file format defines a connection to a file. Therefore, use a file format to connect to source or target data when the data is stored in a file rather than in a database table. The Local Object Library stores file format templates that are used to define specific file formats as sources and targets in data flows.


[Figure 8: File Format Editor. The editor groups file properties under General, Data File(s), Delimiters, Default Format, Input/Output, and Custom Transfer.]

File Format Objects

File format objects describe the following file types:

•

Delimited:

Characters such as commas or tabs separate each field.

•

Fixed width:

Specify the column width.

•

SAP transport:

Define data transport objects in SAP application data flows.

•

Unstructured text:

Read one or more files of unstructured text from a directory.

•

Unstructured binary:

Read one or more binary documents from a directory.
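To make the difference between the delimited and fixed-width file types concrete, the sketch below reads the same two records in both layouts using plain Python. It does not use Data Services; the function names, sample records, and column widths are made up for illustration.

```python
import csv
import io
from typing import List, Sequence


def parse_delimited(text: str, delimiter: str = ",") -> List[List[str]]:
    """Split each row into fields on a delimiter character."""
    return [row for row in csv.reader(io.StringIO(text), delimiter=delimiter)]


def parse_fixed_width(text: str, widths: Sequence[int]) -> List[List[str]]:
    """Slice each row into fields of the given column widths."""
    rows = []
    for line in text.splitlines():
        fields, start = [], 0
        for width in widths:
            # Padding spaces belong to the layout, not the value.
            fields.append(line[start:start + width].strip())
            start += width
        rows.append(fields)
    return rows


delimited = "1001,Smith,Berlin\n1002,Jones,Paris\n"
fixed = "1001Smith     Berlin\n1002Jones     Paris \n"

rows_a = parse_delimited(delimited)
rows_b = parse_fixed_width(fixed, widths=[4, 10, 6])
```

A delimited file needs only the separator character to be known, whereas a fixed-width file needs the width of every column, which is why the file format editor asks for different properties in each case.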

Table 4: File Format Editor Modes

Use the file format editor to set properties for file format templates, and source or target file formats. Available properties vary by the mode of the file format editor:

Mode           Description
New mode       Create a new file format template
Edit mode      Edit an existing file format template
Source mode    Edit the file format of a particular source file
Target mode    Edit the file format of a particular target file

Table 5: Date Formats

In the Property Values work area, it is possible to override default date formats for files at the field level. The following date format codes can be used:

Code     Description
DD       2-digit day of the month
MM       2-digit month
MONTH    Full name of the month
MON      3-character name of the month
YY       2-digit year
YYYY     4-digit year
HH24     2-digit hour of the day (0-23)
MI       2-digit minute (0-59)
SS       2-digit second (0-59)
FF       Up to 9-digit subseconds
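As a way to check your understanding of the date format codes, the following sketch translates them into the directives of Python's `datetime` module. The mapping and the `to_strptime` helper are illustrative assumptions, not part of Data Services, and the sketch treats the codes as case-insensitive, which is also an assumption.

```python
import re
from datetime import datetime

# Mapping from the codes in the table to Python strftime/strptime directives.
CODE_TO_DIRECTIVE = {
    "YYYY": "%Y",
    "YY": "%y",
    "MONTH": "%B",
    "MON": "%b",
    "MM": "%m",
    "DD": "%d",
    "HH24": "%H",
    "MI": "%M",
    "SS": "%S",
    "FF": "%f",  # Python handles at most 6 fractional digits, not 9
}

# Longer codes come first so that MONTH is not read as MON followed by "TH".
_PATTERN = re.compile("|".join(sorted(CODE_TO_DIRECTIVE, key=len, reverse=True)))


def to_strptime(fmt: str) -> str:
    """Translate a format such as 'YYYY.MM.DD HH24:MI:SS' for use with datetime."""
    return _PATTERN.sub(lambda m: CODE_TO_DIRECTIVE[m.group(0)], fmt.upper())


python_format = to_strptime("yyyy.mm.dd hh24:mi:ss")
parsed = datetime.strptime("2014.03.09 17:45:30", python_format)
```

Because the helper upper-cases the whole format string, it is only suitable for formats whose literal text consists of separators such as dots, colons, and spaces.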

Create a New File Format

1. On the Formats tab of the Local Object Library, right-click Flat Files and select New from the

menu to open the file format editor.

To make sure your file format definition works properly, finish inputting the values for the file

properties before moving on to the Column Attributes work area.

2. In the Type field, specify the file type:

•

Delimited:

Select this file type if the file uses a character sequence to separate columns.

•

Fixed width:

Select this file type if the file uses specified widths for each column.

If a fixed-width file format uses a multibyte code page, then no data is displayed in the Data Preview section of the file format editor for its files.

3. In the Name field, enter a name that describes the file format template.

Once the name has been created, it cannot be changed. If you make an error, you must delete the file format and create a new one.


4. Specify the location information of the data file including Location, Root Directory, and

File name.

With Group File Read, a single file format can read multiple flat files with identical formats. Substitute a wildcard character or a list of file names for the single file name to read multiple files.

5. Select Yes to overwrite the existing schema.

This happens automatically when you open a file.

6. Complete the other properties to describe files that this template represents.

Overwrite the existing schema as required.
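The Group File Read idea from step 4 (one file format, many files selected by a wildcard or a list of names) can be pictured with the sketch below. It only imitates the selection logic in plain Python: the `select_files` function, the comma-separated list syntax, and the file names are assumptions for illustration and may not match how Data Services itself parses the File name(s) property.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List


def select_files(available: Iterable[str], file_names: str) -> List[str]:
    """Return the files matched by a comma-separated list of names or wildcards."""
    patterns = [p.strip() for p in file_names.split(",") if p.strip()]
    return sorted(
        name for name in available
        if any(fnmatchcase(name, pattern) for pattern in patterns)
    )


available = ["orders_2013.txt", "orders_2014.txt", "customers.txt", "notes.doc"]

by_wildcard = select_files(available, "orders_*.txt")
by_list = select_files(available, "customers.txt, orders_2014.txt")
```

Every file selected this way is read with the same column definitions, so the approach only works when the files really do share an identical format.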

Table 6: Column Attributes Work Area

For source files, specify the structure of each column in the Column Attributes work area:

Column        Description
Field Name    Enter the name of the column.
Data Type     Select the appropriate data type from the dropdown list.
Field Size    For columns with a data type of varchar, specify the length of
              the field.
Precision     For columns with a data type of decimal or numeric, specify the
              precision of the field.
Scale         For columns with a data type of decimal or numeric, specify the
              scale of the field.
Format        For columns with any data type but varchar, select a format for
              the field, if desired. This information overrides the default
              format set in the Property Values work area for that data type.

Columns do not need to be specified for files used as targets. If the columns are specified and

they do not match the output schema from the preceding transform, Data Services writes to the

target file using the transform's output schema.

For a decimal or real data type, if the column names and data types in the target schema do not match those in the source schema, Data Services cannot use the source column format specified. Instead, it defaults to the format used by the code page on the computer where the job server is installed.

Select Save & Close to save the file format and close the file format editor. In the Local Object Library, right-click the file format and select View Data from the menu to see the data.

Create File from Existing Format

To create a file format from an existing file format:


Lesson: Defining a Data Services Flat File Format

1. On the Formats tab of the Local Object Library, right-click an existing file format and select Replicate.

The file format editor opens, displaying the schema of the copied file format.

2. In the Name field, enter a unique name for the replicated file format.

Data Services does not allow you to save the replicated file with the same name as the original

(or any other existing file format object). After it is saved, you cannot modify the name again.

3. Edit the other properties as desired.

4. Select Save & Close to save the file format and close the file format editor.

Multiple Flat Files

To read multiple flat files with identical formats with a single file format:

1. On the Formats tab of the Local Object Library, right-click an existing file format and select

Edit from the menu.

The format must be based on one single file that shares the same schema as the other files.

2. In the Location field of the format wizard, enter one of:

•

Root directory (optional to avoid retyping)

•

List of file names, separated by commas

•

File name containing a wildcard character (*)

When you use the wildcard (*) to match the names of several files, Data Services reads one file, closes it, and then proceeds to read the next one. For example, if you specify the file name revenue*.txt, Data Services reads all flat files whose names start with revenue and end with .txt.
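The wildcard behavior described above can be illustrated outside Data Services with a minimal Python sketch. It is not how Data Services implements Group File Read; the directory listing and the helper name match_files are hypothetical, and the matching rules and read order of Data Services itself may differ.

```python
from fnmatch import fnmatchcase

def match_files(pattern, names):
    """Return the file names that match the wildcard pattern, sorted by name."""
    return sorted(name for name in names if fnmatchcase(name, pattern))

# Hypothetical directory listing, for illustration only.
names = ["revenue_2013.txt", "revenue_2014.txt", "costs_2014.txt", "revenue.csv"]

# Each matching file is handled in turn: one file is finished before the next starts.
for name in match_files("revenue*.txt", names):
    print("reading", name)
```

In this sketch only the two revenue text files are selected; costs_2014.txt fails the prefix and revenue.csv fails the extension.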

There are new unstructured_text and unstructured_binary file reader types for reading all files in a specific folder as long/BLOB records. There is also an option for trimming fixed-width files.

File Format Error Handling

One of the features available in the File Format Editor is error handling, as shown in the figure Flat File Error Handling. The Capture data conversion errors option is not a simple yes-or-no switch: it determines whether data conversion problems are captured as errors or as warnings. Selecting Yes identifies them as errors; selecting No identifies them as warnings.

Error handling

Log data conversion warnings      Yes

Log row format warnings           Yes

Maximum warnings to log           {no limit}

Capture data conversion errors    Yes

Capture row format errors         Yes

Maximum errors to stop job        {no limit}

Write error rows to file          Yes

Error file root directory         C:\Documents and Settings\Administrator\Desktop\Scripts

Error file name                   file_errors.txt

Figure 9: Flat File Error Handling

Error Handling for a File Format


When error handling is enabled for a file format, Data Services will:

•

Check for two types of flat-file source errors:

Data type conversion errors: for example, a field might be defined in the file format editor as the integer data type, but the data encountered is the varchar data type.

Row-format errors: for example, in the case of a fixed-width file, Data Services identifies a row that does not match the expected width value.

•

Stop processing the source file after reaching a specified number of invalid rows

•

Log errors in the Data Services error log. It is possible to limit the number of log entries allowed without stopping the job
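The two error types and the stop threshold can be sketched in Python as follows. This is an illustration under stated assumptions, not the Data Services implementation: the function check_rows, its parameters, and the sample rows are all hypothetical, and a row counts as invalid at its first problem.

```python
def check_rows(rows, widths, int_columns, max_errors=None):
    """Scan fixed-width rows and collect (row_number, kind, column_number) problems.

    widths      -- list of column widths (hypothetical layout)
    int_columns -- zero-based indexes of columns expected to hold integers
    max_errors  -- stop after this many invalid rows; None means no limit
    """
    expected_width = sum(widths)
    errors = []
    for row_number, row in enumerate(rows, start=1):
        if len(row) != expected_width:
            # Row-format error: the row does not match the expected width.
            errors.append((row_number, "row format", None))
        else:
            start = 0
            for col, width in enumerate(widths):
                field = row[start:start + width].strip()
                start += width
                if col in int_columns:
                    try:
                        int(field)
                    except ValueError:
                        # Data type conversion error: non-integer data in an integer column.
                        errors.append((row_number, "data conversion", col + 1))
                        break  # record at most one problem per row
        if max_errors is not None and len(errors) >= max_errors:
            break  # stop processing after the specified number of invalid rows
    return errors

# Hypothetical sample: a 3-character integer ID followed by a 5-character name.
rows = ["001Anna ", "0x2Bob  ", "003Al", "004Cara "]
for problem in check_rows(rows, widths=[3, 5], int_columns={0}):
    print(problem)
```

With the sample rows, row 2 fails the integer conversion in column 1 and row 3 is too short for the declared widths. Passing max_errors=1 ends the scan after row 2.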

It is possible to write rows with errors to an error file, which is a semicolon-delimited text file that is created on the same machine as the job server.

Entries in an error file have the following syntax:

source file path and name; row number in source file; Data Services error; column number where the error occurred; all columns from the invalid row
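As a rough sketch of how such an entry could be read back, the Python below splits a line into the five parts listed above. The sample line, the ErrorEntry type, and parse_error_line are hypothetical, and the sketch assumes the first four fields contain no semicolons; real error text or paths may not satisfy that assumption.

```python
from typing import NamedTuple

class ErrorEntry(NamedTuple):
    source_file: str
    row_number: int
    error: str
    column_number: int
    row_data: str  # all columns from the invalid row, left unsplit

def parse_error_line(line):
    """Split one error-file entry into its five semicolon-separated parts."""
    # Split at most four times so the invalid row's own columns stay together.
    parts = line.rstrip("\n").split(";", 4)
    if len(parts) != 5:
        raise ValueError("expected 5 semicolon-separated fields, got %d" % len(parts))
    source_file, row_number, error, column_number, row_data = parts
    return ErrorEntry(source_file.strip(), int(row_number), error.strip(),
                      int(column_number), row_data)

# Hypothetical entry, for illustration only.
sample = r"C:\data\revenue_2014.txt;2;conversion error;1;0x2;Bob"
entry = parse_error_line(sample)
print(entry.row_number, entry.column_number, entry.row_data)
```

Limiting the split to four separators keeps the trailing row data intact even when the invalid row itself contains semicolons.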

Flat File Error Handling

To enable flat file error handling in the file format editor:

1. On the Formats tab of the Local Object Library, right-click the file format and select Edit from

the menu.

2. Under the Error Handling section, in the Capture Data Conversion Errors dropdown list, select Yes.

3. In the Capture Row Format Errors dropdown list, select Yes.

4. In the Write Error Rows to File dropdown list, select Yes.

It is possible to specify the maximum warnings to log and the maximum errors before a job is

stopped.

5. In the Error File Root Directory field, select the folder icon to browse to the directory in which you have stored the error handling text file you created.

6. In the Error File Name field, enter the name for the text file created to capture the flat file error logs in that directory.

7. Select Save & Close.
