Data Structure

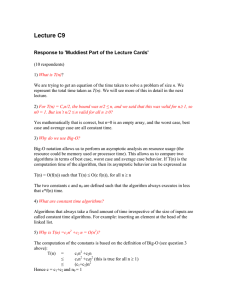

2. Algorithm Analysis

What is an Algorithm?

Definition: An algorithm is a finite set of unambiguous instructions which, if followed, accomplishes a certain task.

Analysis of Algorithms

The analysis of an algorithm deals with the amount of space and time it consumes. To begin our study of algorithm analysis, let us look at some important points.

What is a "good" algorithm?

There are two important meanings: Qualitative and Quantitative

1. Qualitative

User’s point of view

- User friendliness

- Good I/O

- Good termination

Programmer’s point of view

- Modularity

- Documentation

- Generality

- Machine and operating system independence (portability)

2. Quantitative (Complexity of algorithms)

Economical in the use of Computing time (CPU) and storage (Space)

Many conceptual problems allow several different algorithmic solutions. These algorithms vary in the amount of memory they consume (space considerations) and the speed with which they execute (time considerations).

Definition: (Computational Complexity)

The computational complexity of an algorithm is the performance of the

algorithm in CPU time.

Definition: (Size of algorithm)

The size of an algorithm is the number of data elements the algorithm

works on.

Ex1:

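    for (i = 0; i < n; i++)
        cin >> a[i];          // read the n elements
    min = a[0];               // start from the first element, a[0]
    for (i = 1; i < n; i++)   // compare the remaining elements
        if (a[i] < min)
            min = a[i];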

size=n, which stands for a[0], a[1], a[2], …, a[n-1]

Prepared by : worku w. Unity U


Definition: (Order of algorithm)

The order of an algorithm is a function that gives the number of operations the algorithm must perform as a function of the size of the algorithm.

For the above example

Ex1: the two loops together perform about 2n operations.

If only the dominant term is considered (constant factors are dropped), the order is O(n).
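As a rough check of the 2n estimate, the sketch below counts loop iterations for Ex1. The names `MinResult` and `find_min_counting` are assumptions made for illustration, and a `std::vector` stands in for the values read from `cin`. Under this way of counting, the total is n + (n - 1) = 2n - 1, which is about 2n and therefore O(n).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not from the notes): result of the Ex1 scan.
struct MinResult {
    int min;          // smallest element found
    long long steps;  // iterations of the input loop plus the scan loop
};

MinResult find_min_counting(const std::vector<int>& a) {
    assert(!a.empty());
    long long steps = 0;

    // First loop of Ex1: one iteration per element read.
    // (Reading from cin is replaced by visiting the vector.)
    for (std::size_t i = 0; i < a.size(); ++i) {
        ++steps;
    }

    // Second loop of Ex1: compare each remaining element with the minimum.
    int min = a[0];
    for (std::size_t i = 1; i < a.size(); ++i) {
        ++steps;
        if (a[i] < min) {
            min = a[i];
        }
    }
    return {min, steps};
}
```

Calling `find_min_counting` on a vector of 5 elements reports 9 steps, that is 2n - 1 with n = 5.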

Definition: (Big-O Notation)

A function g(n) is said to be of order f(n), denoted O(f(n)) (read "Big-O of f(n)"), provided that there is a constant C for which $g(n) \le C \cdot f(n)$ for all sufficiently large n.

A Big-O Analysis of Algorithms

Computers do their work in terms of certain fundamental operations: comparing two integers, moving the content of one memory location to another, and so on. A single instruction in a high-level language may be translated by a compiler into many of these fundamental machine-level instructions. Several factors can affect the performance of algorithms. For this reason, in our study of algorithm analysis we assume a hypothetical computer that requires one microsecond to perform one of the above fundamental operations.

The key to analyzing an algorithm's efficiency is to analyze the loops, and more importantly the nested loops, within that algorithm.

As an example we will consider the following two algorithms which are intended to

sum each of the rows of an n x n two dimensional array a, storing the row sum in a

one dimensional array sum and the overall total in gt.

1)

    gt = 0;
    for (i = 0; i < n; i++)
    {
        sum[i] = 0;
        for (j = 0; j < n; j++)
        {
            sum[i] = sum[i] + a[i][j];
            gt = gt + a[i][j];
        }
    }

Total time requirement: $2n^2 + n$

2)

    gt = 0;
    for (i = 0; i < n; i++)
    {
        sum[i] = 0;
        for (j = 0; j < n; j++)
            sum[i] = sum[i] + a[i][j];
        gt = gt + sum[i];
    }

Total time requirement: $n^2 + 2n$
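The two operation counts can be checked by instrumenting the two row-sum algorithms. The following is a minimal sketch, assuming that each assignment inside the loops costs one operation and that the initial `gt = 0` is ignored; the names `Matrix`, `RowSums`, `row_sums_v1`, and `row_sums_v2` are illustrative and do not come from the notes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<int>>;

struct RowSums {
    std::vector<long long> sum;  // sum[i] is the total of row i
    long long gt;                // grand total of all entries
    long long ops;               // assignments counted inside the loops
};

// Algorithm 1: updates gt inside the inner loop (2n^2 + n assignments).
RowSums row_sums_v1(const Matrix& a) {
    const std::size_t n = a.size();
    RowSums r{std::vector<long long>(n, 0), 0, 0};
    for (std::size_t i = 0; i < n; ++i) {
        assert(a[i].size() == n);  // the matrix is assumed to be n x n
        r.sum[i] = 0;            ++r.ops;
        for (std::size_t j = 0; j < n; ++j) {
            r.sum[i] += a[i][j]; ++r.ops;
            r.gt += a[i][j];     ++r.ops;
        }
    }
    return r;
}

// Algorithm 2: updates gt once per row (n^2 + 2n assignments).
RowSums row_sums_v2(const Matrix& a) {
    const std::size_t n = a.size();
    RowSums r{std::vector<long long>(n, 0), 0, 0};
    for (std::size_t i = 0; i < n; ++i) {
        assert(a[i].size() == n);
        r.sum[i] = 0;            ++r.ops;
        for (std::size_t j = 0; j < n; ++j) {
            r.sum[i] += a[i][j]; ++r.ops;
        }
        r.gt += r.sum[i];        ++r.ops;
    }
    return r;
}
```

For a 4 x 4 matrix, the first version counts 36 assignments and the second counts 24, matching $2n^2 + n$ and $n^2 + 2n$ with n = 4. Both versions produce the same row sums and grand total.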

Of the above algorithms, the second is seemingly guaranteed to execute faster than the first for any non-trivial value of n. But note that "faster" here may not have much significance in the real world of computing. Because of the phenomenal execution speeds and very large amounts of available memory on modern computers, proportionately small differences between algorithms usually have little practical impact. Such considerations have led computer scientists toward devising a method of algorithm classification which makes more precise the notion of order of magnitude as it applies to time and space considerations. This method of classification is typically referred to as Big-O notation.

Two important points here:

1. How can one determine the function f(n) which categorizes a particular

algorithm?

It is generally the case that, by analyzing the loop structure of an algorithm, we can estimate the number of run-time operations (or the amount of memory) required as a sum of several terms, each dependent on n (the number of items being processed by the algorithm). That is, typically we are able to express the number of run-time operations (or amount of memory) as a sum of the form

$f_1(n) + f_2(n) + \dots + f_k(n)$

Moreover, it is also typical that we identify one of the terms in the expression as the dominant term. A dominant term is one which, for bigger values of n, becomes so large that it allows us to ignore all the other terms from a Big-O perspective. For instance, suppose that we had an expression involving two terms such as

$n^2 + 6n$

Here, the $n^2$ term dominates the $6n$ term since, for $n \ge 6$, we have

$n^2 + 6n \le n^2 + n^2 = 2n^2$

Thus, $n^2 + 6n$ is an expression which would lead to an $O(n^2)$ categorization because of the dominance of the $n^2$ term. In general, the problem of Big-O categorization reduces to finding the dominant term in an expression representing the number of operations or amount of memory required by an algorithm.

From this discussion it is now easy to categorize both of our previous algorithms as $O(n^2)$ algorithms.
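The dominance argument for $n^2 + 6n$ can be illustrated numerically. This is a small sketch, not a proof; the function names `quadratic_dominates` and `ratio_to_dominant` are hypothetical. The first checks the inequality $n^2 + 6n \le 2n^2$ for a given n, and the second shows how the whole expression compares with its dominant term as n grows.

```cpp
#include <cassert>

// True when n^2 + 6n <= 2 * n^2, i.e. when the n^2 term "absorbs" the 6n term.
bool quadratic_dominates(long long n) {
    assert(n >= 0);
    return n * n + 6 * n <= 2 * n * n;
}

// Ratio (n^2 + 6n) / n^2; it approaches 1 as n grows, which is why
// the 6n term can be ignored from a Big-O perspective.
double ratio_to_dominant(long long n) {
    assert(n > 0);
    const double x = static_cast<double>(n);
    return (x * x + 6.0 * x) / (x * x);
}
```

The inequality fails for n = 5 (55 > 50) and holds from n = 6 onward, where the ratio is exactly 2; by n = 1,000,000 the ratio is within 0.00001 of 1.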

Common dominant terms in expressions for algorithmic analysis

- $n$ dominates $\log_a n$ (a is often 2)

- $n \log_a n$ dominates $n$ (a is often 2)

- $n^m$ dominates $n^k$ when $m > k$

- $a^n$ dominates $n^m$ for any $a > 1$ and any m

Algorithms whose efficiency is dominated by a $\log_a n$ term (and hence categorized as $O(\log_a n)$) are often called logarithmic algorithms. Since $\log_a n$ increases much more slowly than n itself, logarithmic algorithms are generally very efficient. Algorithms whose efficiency can be expressed in terms of a polynomial of the form

$a_m n^m + a_{m-1} n^{m-1} + \dots + a_1 n + a_0$

are called polynomial algorithms. Since the highest power of n will dominate such a polynomial, such algorithms are $O(n^m)$. The only polynomial algorithms we will be concerned with here are those with m = 1, 2, or 3, which are called linear, quadratic, or cubic respectively.

Algorithms whose efficiency is dominated by a term of the form $a^n$ (with a > 1) are called exponential algorithms. Exponential algorithms are not polynomial algorithms. They are sometimes loosely labelled "NP", but strictly speaking NP stands for nondeterministic polynomial time, not "Not Polynomial". Exponential algorithms could fairly be called "Not Practical", because generally such algorithms cannot reasonably be run on typical computers for even moderate values of n.
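To see how quickly an exponential term overtakes a polynomial one, the sketch below finds the last n at which $2^n$ is still no larger than $n^m$. The function name `last_n_where_polynomial_wins` and the choice of base 2 are assumptions made for illustration; the search is limited to small n so that 64-bit integers do not overflow.

```cpp
#include <cassert>

// Largest n in [1, limit] for which 2^n <= n^m, or 0 if there is none.
// Both sides are computed in unsigned 64-bit integers, so limit and m
// are restricted to ranges where neither 2^n nor n^m can overflow.
int last_n_where_polynomial_wins(int m, int limit) {
    assert(m >= 0 && m <= 10 && limit >= 1 && limit <= 62);
    int last = 0;
    unsigned long long power_of_two = 1;
    for (int n = 1; n <= limit; ++n) {
        power_of_two *= 2;  // now equals 2^n
        unsigned long long poly = 1;
        for (int k = 0; k < m; ++k) {
            poly *= static_cast<unsigned long long>(n);  // builds n^m
        }
        if (power_of_two <= poly) {
            last = n;
        }
    }
    return last;
}
```

With m = 3 and a limit of 40 the function returns 9: $2^9 = 512 \le 729 = 9^3$, but $2^{10} = 1024 > 1000 = 10^3$, and the exponential stays ahead for every larger n up to the limit.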

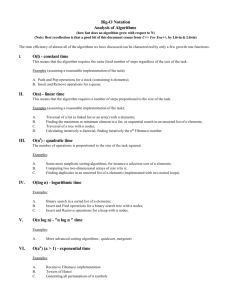

2. How well does a Big-O notation provide a way of classifying algorithms

from a real world perspective?

To answer this question, consider the following table, which contains some typical f(n) functions we will be using to classify algorithms and their order-of-magnitude run time for an input of size $10^5$ on our hypothetical computer.

| f(n) | Order-of-magnitude run time for input of size $10^5$ (assuming proportionality constant C = 1) |
| --- | --- |
| $n$ | 0.1 seconds |
| $\log_2 n$ | $2 \times 10^{-5}$ seconds |
| $n \log_2 n$ | 2 seconds |
| $n^2$ | 3 hours |
| $n^3$ | 32 years |
| $2^n$ | Centuries |

From this table we can see that an $O(n^2)$ algorithm will take hours to execute for an input of size $10^5$. How many hours depends on the constant of proportionality in the definition of the Big-O notation.
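The entries in the table follow from simple arithmetic on the hypothetical computer. The sketch below reproduces them, assuming one microsecond per fundamental operation and C = 1 as in the notes; the function and constant names are illustrative only. The $2^n$ row is left out because $2^{100000}$ is far beyond the range of a `double`.

```cpp
#include <cassert>
#include <cmath>

// Assumption taken from the notes: one fundamental operation costs 1 microsecond.
constexpr double kSecondsPerOp = 1e-6;

// Run time in seconds for a given operation count, with C = 1.
double seconds_for(double ops) {
    assert(ops >= 0.0);
    return ops * kSecondsPerOp;
}

// Operation counts for the growth functions in the table.
double ops_log(double n)       { return std::log2(n); }
double ops_linear(double n)    { return n; }
double ops_n_log_n(double n)   { return n * std::log2(n); }
double ops_quadratic(double n) { return n * n; }
double ops_cubic(double n)     { return n * n * n; }

// Unit conversions used to read the larger results.
double to_hours(double seconds) { return seconds / 3600.0; }
double to_years(double seconds) { return seconds / (365.0 * 24.0 * 3600.0); }
```

For n = $10^5$, `to_hours(seconds_for(ops_quadratic(1e5)))` gives about 2.8 hours and `to_years(seconds_for(ops_cubic(1e5)))` gives about 31.7 years, in line with the rounded table entries.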

Exercise:

Perform a Big-O Analysis for those statements inside each of the following nested

loop constructs

a)

    for (i = 0; i < n; i++)
        for (j = 0; j < n; j++)
            …

b)

    for (i = 0; i < n; i++)
    {
        j = n;
        while (j > 0)
        {
            …
            j = j / 2;
        }
    }

c)

    i = 1;
    do
    {
        j = 1;
        do
        {
            …
            j = 2 * j;
        } while (j < n);
        i++;
    } while (i < n);

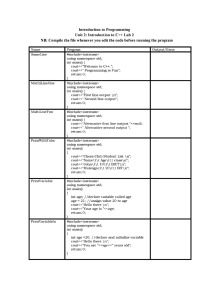

2.4. Classification of Order of Algorithms

1. Constant function: $O(g(n)) = c$. Does not depend on size; always takes constant time.

2. Linear function: $O(g(n)) = an + b$

3. Logarithmic function: $O(g(n)) = \log_c n + b$

4. Polynomial function: $O(g(n)) = a_m n^m + a_{m-1} n^{m-1} + \dots + a_1 n + a_0$

5. Exponential function: $O(g(n)) = a \cdot b^n$

3. An Asymptotic Upper Bound (Big-O)

Definition (Big-O)

Given two functions f(n) and g(n) from the set of natural numbers to the set of non-negative real numbers, we say f(n) is on the order of g(n), or that f(n) is O(g(n)), if there exist a positive constant C and a positive integer $n_0$ such that $f(n) \le C\,g(n)$ for all $n \ge n_0$.


Ex1:

$f(n) = n^2 + 100n$

$f(n) = n^2 + 100n \le n^2 + n^2 = 2n^2 = C\,g(n)$ for $n \ge n_0 = 100$ (with $C = 2$, $g(n) = n^2$ and $n_0 = 100$)

$f(n) = O(g(n)) = O(n^2)$

This same f(n) is also $O(n^3)$, since $n^2 + 100n$ is less than or equal to $2n^3$ for all n greater than or equal to 8.
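The constants in Ex1 can be spot-checked numerically. The helper below is a sketch under the assumption that testing every n up to some finite limit is good enough for illustration; it gives evidence for a bound, not a proof. The names `Fn`, `bound_holds`, `f_ex1`, `square`, and `cube` are hypothetical.

```cpp
#include <cassert>
#include <functional>

using Fn = std::function<long long(long long)>;

// Empirical check only: tests f(n) <= C * g(n) for every n in [n0, limit].
// Passing is evidence for, not a proof of, f(n) = O(g(n)).
bool bound_holds(const Fn& f, const Fn& g, long long C,
                 long long n0, long long limit) {
    assert(C > 0 && n0 >= 1 && limit >= n0);
    for (long long n = n0; n <= limit; ++n) {
        if (f(n) > C * g(n)) {
            return false;
        }
    }
    return true;
}

// The function from Ex1 and the two candidate bounding functions.
long long f_ex1(long long n)  { return n * n + 100 * n; }
long long square(long long n) { return n * n; }
long long cube(long long n)   { return n * n * n; }
```

With these definitions, `bound_holds(f_ex1, square, 2, 100, 10000)` and `bound_holds(f_ex1, cube, 2, 8, 10000)` both return true, while starting one step earlier (n0 = 99 for the square, n0 = 7 for the cube) makes each check fail.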

Given a function f(n), there may be many functions g(n) such that f(n) is O(g(n)). If f(n) is O(g(n)), then "eventually" (that is, for $n \ge n_0$) f(n) becomes permanently less than or equal to some multiple of g(n). In a sense we are saying that f(n) is bounded by g(n) from above, or that f(n) is a "smaller" function than g(n). Another formal way of saying this is that f(n) is asymptotically bounded by g(n). Yet another interpretation is that f(n) grows no faster than g(n), since, proportionately (that is, up to the factor of C), g(n) eventually becomes at least as large.

Ex2:

$f(n) = 8n + 128$

$f(n) = 8n + 128 \le 8n + n = 9n = C\,g(n)$ for $n \ge 128$ (with $C = 9$, $g(n) = n$ and $n_0 = 128$)

$f(n) = O(g(n)) = O(n)$

Consider the same function f(n) = 8n + 128

The following shows plot of f(n) and other functions

We wish to show that $f(n) = O(n^2)$. According to the definition of Big-O, in order to show this we need to find an integer $n_0$ and a constant $C > 0$ such that for all integers $n \ge n_0$,

$f(n) \le Cn^2$.

It does not matter what the particular constants are, as long as they exist! For example, suppose we take C = 1. Then

$f(n) \le Cn^2 \Rightarrow 8n + 128 \le n^2$

$\Rightarrow 0 \le n^2 - 8n - 128$

$\Rightarrow 0 \le (n - 16)(n + 8)$

Since $(n + 8) > 0$ for all values of $n \ge 0$, the inequality holds exactly when $(n - 16) \ge 0$. That is, we may take $n_0 = 16$.

So we have that for C = 1 and $n_0 = 16$, $f(n) \le Cn^2$ for all integers $n \ge n_0$. Hence, $f(n) = O(n^2)$. From the above graph one can see that the function $g(n) = n^2$ is greater than the function $f(n) = 8n + 128$ to the right of n = 16. Of course, there are many other values of C and $n_0$ that will do.
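To illustrate that other pairs of constants also work, the sketch below searches for the smallest $n_0$ that goes with a chosen C for $f(n) = 8n + 128$ and $g(n) = n^2$. The function name `smallest_n0` and the finite search limit are assumptions made for illustration; the search only certifies the inequality up to that limit.

```cpp
#include <cassert>

// Smallest n0 such that 8n + 128 <= C * n^2 holds for every n in [n0, limit].
// Returns limit + 1 if the inequality still fails at n = limit.
long long smallest_n0(long long C, long long limit) {
    assert(C > 0 && limit >= 1);
    long long n0 = 1;
    for (long long n = 1; n <= limit; ++n) {
        if (8 * n + 128 > C * n * n) {
            n0 = n + 1;  // the bound fails here, so n0 must be larger
        }
    }
    return n0;
}
```

With a limit of 1000, C = 1 gives $n_0 = 16$, agreeing with the derivation above, while C = 2 gives $n_0 = 11$ and C = 136 already works from $n_0 = 1$.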

Although a function may be asymptotically bounded by many other functions, we

usually look for an asymptotic bound that is a single term with a leading coefficient

of 1 and that is as "close a fit" as possible.

Ex3: $f(n) = n$

$f(n) = n \le n + n = 2n = C\,g(n)$ for $n \ge 1$ (with $C = 2$, $g(n) = n$ and $n_0 = 1$)

$f(n) = O(g(n)) = O(n)$

Ex4: $f(n) = n^2 + 3n + 10$

$f(n) = n^2 + 3n + 10 \le n^2 + n^2 = 2n^2 = C\,g(n)$ for $n \ge 5$, since $3n + 10 \le n^2$ whenever $n \ge 5$ (with $C = 2$, $g(n) = n^2$ and $n_0 = 5$)

$f(n) = O(g(n)) = O(n^2)$

3.1. Conventions for Writing Big O Expressions

Certain conventions have evolved which concern how Big O expressions are normally written:

First, it is common practice when writing Big O expressions to drop all but the most significant term. Thus, instead of $O(n^2 + n \log n + n)$ we simply write $O(n^2)$.

Second, it is common practice to drop constant coefficients. Thus, instead of $O(3n^2)$, we simply write $O(n^2)$. As a special case of this rule, if the function is a constant, instead of, say, $O(1024)$, we simply write $O(1)$.

Of course, in order for a particular Big O expression to be the most useful, we prefer to find a tight asymptotic bound. For example, while it is not wrong to write $f(n) = n = O(n^3)$, we prefer to write $f(n) = O(n)$, which is a tight bound.

Certain Big-O expressions occur so frequently that they are given names.

The following table lists some of the commonly occurring Big-O expressions and

the useful name given to each of them


| Expression | Name |
| --- | --- |
| $O(1)$ | Constant |
| $O(\log n)$ | Logarithmic |
| $O(\log^2 n)$ | Log squared |
| $O(n)$ | Linear |
| $O(n \log n)$ | N log n |
| $O(n^2)$ | Quadratic |
| $O(n^3)$ | Cubic |
| $O(2^n)$ | Exponential |
