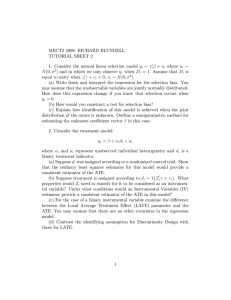

# Lecture Note 1: Some Probability & Statistics

## 1 Basics

**Joint Distribution.** For discrete random variables,
$$f_{XY}(x,y) = \Pr(X = x \text{ and } Y = y).$$

**Marginal Distribution.** Sums (or integrates) out all but one variable:
$$f_X(x) = \sum_y f_{XY}(x,y), \qquad f_Y(y) = \sum_x f_{XY}(x,y), \qquad \sum_x\sum_y f_{XY}(x,y) = 1.$$

**Conditional Distribution.**
$$f_{X|Y}(x|y) = \frac{f_{XY}(x,y)}{f_Y(y)} \quad \text{for } f_Y(y) \neq 0.$$
Note that for a fixed $y$, the conditional probability must sum to 1: $\sum_x f_{X|Y}(x|y) = 1$.

## 2 Measures of Central Tendency

**Mean.** $E[X] = \sum_x x f_X(x)$.

**Conditional Mean.** The mean of $X$ given a value of $Y$: $E[X|Y=y] = \sum_x x f_{X|Y}(x|y)$.

## 3 Properties of Conditional Expectations

**Law of Iterated Expectations.**
$$E[X] = E\big[E[X|Y]\big] = \sum_y E[X|Y=y]\, f_Y(y).$$
Noting that $E[X|Y=y]$ is just a function of $y$, say $g(y)$, we can write the above as
$$E[X] = E[g(Y)] = \sum_y g(y) f_Y(y).$$
In general, $E[X|Y] = E\big[E[X|Y,Z]\,\big|\,Y\big]$.

**Example 1.**
$$E[Y^2|Y=y] = y^2,\qquad E[XY^2|Y=y] = y^2 E[X|Y=y],$$
$$E[g(X,Y)|Y=y] = E[g(X,y)|Y=y] = \sum_x g(x,y) f_{X|Y}(x|y).$$

## 4 Best Constant Predictor

Our objective is to find
$$\arg\min_a E\big[(Y-a)^2\big].$$
Let $\mu \equiv E[Y]$ and note that
$$\begin{aligned}
E[(Y-a)^2] &= E\big[((Y-\mu)+(\mu-a))^2\big]\\
&= E\big[(Y-\mu)^2 + 2(\mu-a)(Y-\mu) + (\mu-a)^2\big]\\
&= E[(Y-\mu)^2] + 2(\mu-a)E[Y-\mu] + (\mu-a)^2\\
&= E[(Y-\mu)^2] + 2(\mu-a)(E[Y]-\mu) + (\mu-a)^2\\
&= E[(Y-\mu)^2] + 2(\mu-a)\cdot 0 + (\mu-a)^2\\
&= \operatorname{Var}(Y) + (\mu-a)^2,
\end{aligned}$$
which is minimized when $a = \mu = E[Y]$, so the mean is the best constant predictor.

### 4.1 Special Case

**Identification.** Suppose that $Y \sim N(\theta_0, \sigma^2)$ with $\sigma^2$ known. Is $\theta_0$ identified? Let
$$\varphi(y;\theta) = \frac{1}{\sqrt{2\pi\sigma^2}}\exp\left(-\frac{(y-\theta)^2}{2\sigma^2}\right)$$
denote the PDF. Now, let
$$L(\theta) = \log\varphi(Y;\theta) = C - \frac{(Y-\theta)^2}{2\sigma^2},$$
where $C$ denotes a generic constant. If we know the exact distribution $F$ of $Y$, we can calculate the expected value of $L(\theta)$:
$$E_F[L(\theta)] = E_F\left[C - \frac{(Y-\theta)^2}{2\sigma^2}\right] = C - \frac{E_F[(Y-\theta)^2]}{2\sigma^2}.$$
But
$$\begin{aligned}
E_F[(Y-\theta)^2] &= E_F\big[((Y-\theta_0)+(\theta_0-\theta))^2\big]\\
&= E_F[(Y-\theta_0)^2] + 2(\theta_0-\theta)E_F[Y-\theta_0] + (\theta_0-\theta)^2\\
&= \sigma^2 + (\theta_0-\theta)^2.
\end{aligned}$$
It follows that
$$E_F[L(\theta)] = C - \frac{\sigma^2 + (\theta_0-\theta)^2}{2\sigma^2},$$
which is maximized at $\theta = \theta_0$. Therefore, $\theta_0$ is identified.

**Estimation.** Now suppose that $Y_1,\ldots,Y_n$ are i.i.d. $N(\theta_0,\sigma^2)$ with $\sigma^2$ known.
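We can estimate $\theta_0$ by
$$\bar Y = \frac1n\sum_{i=1}^n Y_i.$$
Because $E[\bar Y] = \frac1n\sum_{i=1}^n E[Y_i] = \theta_0$, it is an unbiased estimator of $\theta_0$.

**Confidence Interval.** We need two properties of the normal distribution:

**Lemma 1.** If $Y \sim N(\mu,\sigma^2)$, then $\frac{Y-\mu}{\sigma} \sim N(0,1)$.

**Lemma 2.** If $Y \sim N(0,1)$, then $\Pr[|Y| \le 1.96] = 95\%$.

**Corollary 1.** If $Y \sim N(\mu,\sigma^2)$, then $\Pr\left[\left|\frac{Y-\mu}{\sigma}\right| \le 1.96\right] = 95\%$.

Now, note that $\bar Y$ is a linear combination of $Y_1,\ldots,Y_n$ i.i.d. $N(\theta_0,\sigma^2)$, so we have
$$\bar Y \sim N\left(\theta_0, \frac{\sigma^2}{n}\right).$$
It follows that
$$\Pr\left[\left|\frac{\bar Y-\theta_0}{\sigma/\sqrt n}\right| \le 1.96\right] = 95\%.$$
But
$$\begin{aligned}
\Pr\left[\left|\frac{\bar Y-\theta_0}{\sigma/\sqrt n}\right| \le 1.96\right]
&= \Pr\left[-1.96 \le \frac{\bar Y-\theta_0}{\sigma/\sqrt n} \le 1.96\right]
= \Pr\left[-1.96\frac{\sigma}{\sqrt n} \le \bar Y-\theta_0 \le 1.96\frac{\sigma}{\sqrt n}\right]\\
&= \Pr\left[-\bar Y-1.96\frac{\sigma}{\sqrt n} \le -\theta_0 \le -\bar Y+1.96\frac{\sigma}{\sqrt n}\right]
= \Pr\left[\bar Y-1.96\frac{\sigma}{\sqrt n} \le \theta_0 \le \bar Y+1.96\frac{\sigma}{\sqrt n}\right]\\
&= \Pr\left[\theta_0 \in \left[\bar Y-1.96\frac{\sigma}{\sqrt n},\ \bar Y+1.96\frac{\sigma}{\sqrt n}\right]\right].
\end{aligned}$$
We conclude that
$$\Pr\left[\theta_0 \in \left[\bar Y-1.96\frac{\sigma}{\sqrt n},\ \bar Y+1.96\frac{\sigma}{\sqrt n}\right]\right] = 95\%,$$
and $\left[\bar Y-1.96\frac{\sigma}{\sqrt n},\ \bar Y+1.96\frac{\sigma}{\sqrt n}\right]$ is the 95% confidence interval for $\theta_0$.

## 5 Digression

### 5.1 Conditional Expectation

Our objective is to find some function $\mu(X)$ such that
$$\mu(X) \in \arg\min_{g(\cdot)} E\big[(Y-g(X))^2\big].$$
Let $\mu(X) \equiv E[Y|X]$ and note that
$$\begin{aligned}
(Y-g(X))^2 &= \big((Y-\mu(X)) + (\mu(X)-g(X))\big)^2\\
&= (Y-\mu(X))^2 + 2(\mu(X)-g(X))(Y-\mu(X)) + (\mu(X)-g(X))^2,
\end{aligned}$$
and therefore
$$E\big[(Y-g(X))^2\big|X\big] = E\big[(Y-\mu(X))^2\big|X\big] + E\big[2(\mu(X)-g(X))(Y-\mu(X))\big|X\big] + E\big[(\mu(X)-g(X))^2\big|X\big].$$
But
$$\begin{aligned}
E\big[2(\mu(X)-g(X))(Y-\mu(X))\big|X\big] &= 2(\mu(X)-g(X))\,E[Y-\mu(X)|X]\\
&= 2(\mu(X)-g(X))\big(E[Y|X]-E[\mu(X)|X]\big)\\
&= 2(\mu(X)-g(X))\big(E[Y|X]-\mu(X)\big)\\
&= 2(\mu(X)-g(X))\cdot 0 = 0,
\end{aligned}$$
and
$$E\big[(\mu(X)-g(X))^2\big|X\big] = (\mu(X)-g(X))^2.$$
It follows that
$$E\big[(Y-g(X))^2\big|X\big] = E\big[(Y-\mu(X))^2\big|X\big] + (\mu(X)-g(X))^2,$$
and therefore (by the law of iterated expectations)
$$E\big[(Y-g(X))^2\big] = E\big[(Y-\mu(X))^2\big] + E\big[(\mu(X)-g(X))^2\big],$$
which is minimized when $E[(\mu(X)-g(X))^2] = 0$, i.e., when $g(X) = \mu(X)$ (with probability one). Therefore, the best predictor is $\mu(X) = E[Y|X]$.

### 5.2 Best Linear Predictor

Predict $Y$ using linear functions of $X$: find $(\alpha,\beta)$ such that
$$(\alpha,\beta) \in \arg\min_{a,b} E\big[(Y-(a+bX))^2\big].$$
The minimization can be solved in two steps.

**Step 1.** We fix $b$ and find $\alpha(b)$ that solves
$$\alpha(b) \equiv \arg\min_a E\big[(Y-(a+bX))^2\big].$$

**Step 2.** We find $\beta$ that solves
$$\beta \equiv \arg\min_b E\big[(Y-(\alpha(b)+bX))^2\big],$$
and recognize that $\alpha = \alpha(\beta)$.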
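It is not difficult to see (apply Section 4 to $Y - bX$) that
$$\alpha(b) = E[Y-bX] = E[Y]-bE[X] = \mu_Y - b\mu_X.$$
Therefore, the Step 2 optimization can be rewritten as
$$\min_b E\Big[\big(Y-((\mu_Y-b\mu_X)+bX)\big)^2\Big] = \min_b E\big[(\tilde Y - b\tilde X)^2\big],$$
where $\tilde Y \equiv Y-\mu_Y$ and $\tilde X \equiv X - \mu_X$. Now, let
$$b_0 \equiv \frac{E[\tilde X\tilde Y]}{E[\tilde X^2]}$$
and note that
$$E\big[(\tilde Y - b_0\tilde X)\tilde X\big] = 0.$$
We therefore have
$$\begin{aligned}
E\big[(\tilde Y - b\tilde X)^2\big] &= E\Big[\big((\tilde Y - b_0\tilde X) + (b_0-b)\tilde X\big)^2\Big]\\
&= E\big[(\tilde Y - b_0\tilde X)^2\big] + 2(b_0-b)E\big[(\tilde Y - b_0\tilde X)\tilde X\big] + (b_0-b)^2E[\tilde X^2]\\
&= E\big[(\tilde Y - b_0\tilde X)^2\big] + (b_0-b)^2E[\tilde X^2],
\end{aligned}$$
which is minimized when $b = b_0$. Therefore, we have
$$\beta = b_0 = \frac{\operatorname{Cov}(X,Y)}{\operatorname{Var}(X)}, \qquad \alpha = \alpha(\beta) = \mu_Y - \frac{\operatorname{Cov}(X,Y)}{\operatorname{Var}(X)}\mu_X.$$

# Lecture Note 2: Computational Issues of OLS

## 6 Some Notation

**Data.** For each individual $i$, we observe $(y_i, x_{i1},\ldots,x_{ik})$. We observe $n$ individuals.

**Objective.** We want to "predict" $y$ by the $x$'s:
$$y_i = b_1x_{i1} + \cdots + b_kx_{ik} + r_i,\qquad i = 1,\ldots,n.$$
Here, $r_i$ denotes the residual.

**Vector Writing.** Writing
$$\underset{k\times1}{b} = \begin{pmatrix}b_1\\ \vdots\\ b_k\end{pmatrix},\qquad \underset{k\times1}{x_i} = \begin{pmatrix}x_{i1}\\ \vdots\\ x_{ik}\end{pmatrix},$$
we may compactly write
$$y_i = x_i'b + r_i,\qquad i=1,\ldots,n.$$

**Matrix Writing.** Writing
$$\underset{n\times k}{X} = \begin{pmatrix}x_1'\\ \vdots\\ x_n'\end{pmatrix},\qquad \underset{n\times1}{y} = \begin{pmatrix}y_1\\ \vdots\\ y_n\end{pmatrix},\qquad \underset{n\times1}{r} = \begin{pmatrix}r_1\\ \vdots\\ r_n\end{pmatrix},$$
we may more compactly write
$$\underset{n\times1}{y} = \underset{n\times k}{X}\ \underset{k\times1}{b} + \underset{n\times1}{r}.$$

## 7 OLS

It seems natural to seek $b$ that solves
$$\min_b \sum_{i=1}^n \big(y_i-(b_1x_{i1}+\cdots+b_kx_{ik})\big)^2 = \min_b\sum_{i=1}^n (y_i-x_i'b)^2 = \min_b\,(y-Xb)'(y-Xb).$$

**Basic Matrix Calculus.**

**Definition 1.** For a real-valued function $f: t = (t_1,\ldots,t_n)' \mapsto f(t)$, we define
$$\frac{\partial f}{\partial t} = \begin{pmatrix}\frac{\partial f}{\partial t_1}\\ \vdots\\ \frac{\partial f}{\partial t_n}\end{pmatrix},\qquad \frac{\partial f}{\partial t'} = \left(\frac{\partial f}{\partial t_1},\ldots,\frac{\partial f}{\partial t_n}\right).$$

**Lemma 3.** Let $f(t) = a't$. Then $\partial f(t)/\partial t = a$.

**Lemma 4.** Let $f(t) = t'At$, where $A$ is symmetric. Then $\partial f(t)/\partial t = 2At$.

**Back to OLS.** Let
$$S(b) \equiv (y-Xb)'(y-Xb) = y'y - b'X'y - y'Xb + b'X'Xb = y'y - 2(X'y)'b + b'X'Xb.$$
A necessary condition for a minimum is
$$\frac{\partial S(b)}{\partial b} = 0.$$
Now, using the two lemmas above (with $a = X'y$ and $A = X'X$), we obtain
$$\frac{\partial S(b)}{\partial b} = -2X'y + 2X'Xb,$$
from which, assuming $X'X$ is nonsingular, we obtain
$$\arg\min_b\,(y-Xb)'(y-Xb) = (X'X)^{-1}X'y.$$

**Theorem 1.** Let $e \equiv y - X(X'X)^{-1}X'y$. Then $X'e = 0$.

*Proof.*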
$$X'e = X'y - X'X(X'X)^{-1}X'y = X'y - X'y = 0.$$

**Remark 1.** Let $\hat b \equiv (X'X)^{-1}X'y$. We then have $e = y - X\hat b$.

**Corollary 2.** If the first column of $X$ consists of ones, we have $\sum_i e_i = 0$ (this is the first element of $X'e = 0$).

**Theorem 2.** $(X'X)^{-1}X'y = \arg\min_b\,(y-Xb)'(y-Xb)$.

*Proof.* Write
$$y - Xb = y - X\hat b + X\hat b - Xb = y - X(X'X)^{-1}X'y + X(\hat b - b) = e + X(\hat b - b).$$
Therefore, using $X'e = 0$, we have
$$\begin{aligned}
(y-Xb)'(y-Xb) &= \big(e + X(\hat b-b)\big)'\big(e+X(\hat b-b)\big)\\
&= e'e + (\hat b-b)'X'e + e'X(\hat b-b) + (\hat b-b)'X'X(\hat b-b)\\
&= e'e + \big(X(\hat b-b)\big)'\big(X(\hat b-b)\big) \ \ge\ e'e.
\end{aligned}$$
This shows why $\hat b$ minimizes $(y-Xb)'(y-Xb)$.

## 8 Digression: Restricted Least Squares

**Theorem 3.** The solution to the problem
$$\min_b\,(y-Xb)'(y-Xb)\quad\text{s.t.}\quad Rb = q$$
is given by
$$b_* \equiv \hat b - (X'X)^{-1}R'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b - q),\qquad\text{where}\quad \hat b = (X'X)^{-1}X'y.$$
Here, $R$ is an $m\times k$ matrix. Both $R$ and $q$ are known. We assume that $R$ has full row rank.

*Proof.* It is not difficult to see that
$$Rb_* = R\hat b - R(X'X)^{-1}R'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b-q) = R\hat b - (R\hat b - q) = q.$$
Suppose that some $b$ satisfies $Rb = q$. Let $d \equiv b - b_*$. We should then have
$$Rd = R(b-b_*) = Rb - Rb_* = q - q = 0.$$
We now show that $e_*'Xd = 0$, where $e_* \equiv y - Xb_*$. Note that
$$\begin{aligned}
X'e_* &= X'(y - Xb_*) = X'(y - X\hat b) + X'X(\hat b - b_*) = X'e + X'X(\hat b - b_*) = X'X(\hat b - b_*)\\
&= X'X(X'X)^{-1}R'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b-q) = R'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b-q).
\end{aligned}$$
It therefore follows that
$$d'X'e_* = d'R'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b-q) = (Rd)'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b-q) = 0'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b-q) = 0$$
and
$$e_*'Xd = (d'X'e_*)' = 0.$$
Therefore, we have
$$\begin{aligned}
(y-Xb)'(y-Xb) &= \big(e_* - X(b-b_*)\big)'\big(e_*-X(b-b_*)\big) = (e_*-Xd)'(e_*-Xd)\\
&= e_*'e_* - d'X'e_* - e_*'Xd + d'X'Xd = e_*'e_* + d'X'Xd \ \ge\ e_*'e_*,
\end{aligned}$$
and, because $X'X$ is nonsingular (so $d'X'Xd > 0$ whenever $d \neq 0$), the minimum is achieved if and only if $d = 0$, or $b = b_*$.

**Theorem 4.** Let $e_* \equiv y - Xb_*$. Then
$$e_*'e_* = e'e + (R\hat b - q)'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b - q).$$

*Proof.*
It is immediate from
$$\begin{aligned}
e_* = y - Xb_* &= y - X\hat b + X(X'X)^{-1}R'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b-q)\\
&= e + X(X'X)^{-1}R'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b-q)
\end{aligned}$$
and $X'e = 0$: the cross term vanishes, and the quadratic term simplifies because $R(X'X)^{-1}X'X(X'X)^{-1}R' = R(X'X)^{-1}R'$.

## 9 Projection Algebra

Let
$$P \equiv X(X'X)^{-1}X',\qquad M \equiv I_n - P.$$

**Theorem 5.** $P$ and $M$ are symmetric and idempotent, i.e.,
$$P' = P,\quad M' = M,\quad P^2 = P,\quad M^2 = M.$$

*Proof.* Symmetry is immediate. Moreover,
$$P^2 = X(X'X)^{-1}X'X(X'X)^{-1}X' = X(X'X)^{-1}X' = P,$$
$$M^2 = (I_n - P)(I_n - P) = I_n - P - P + PP = I_n - P - P + P = I_n - P = M.$$

**Theorem 6.** $PX = X$, $MX = 0$.

*Proof.*
$$PX = X(X'X)^{-1}X'X = X,\qquad MX = (I_n - P)X = X - PX = X - X = 0.$$

**Theorem 7.** $X\hat b = Py$, $e = My$.

Note that $X\hat b$ is the "predicted value" of $y$ given $X$ and the estimator $\hat b$.

*Proof.*
$$X\hat b = X(X'X)^{-1}X'y = Py,\qquad e = y - X\hat b = y - Py = (I_n - P)y = My.$$

**Theorem 8.** $e'e = y'My$.

*Proof.* $e'e = (My)'My = y'M'My = y'MMy = y'My$.

**Theorem 9 (Analysis of Variance).**
$$y'y = (X\hat b)'(X\hat b) + e'e.$$

*Proof.*
$$y'y = y'(P+M)y = y'Py + y'My = y'P'Py + e'e = (Py)'Py + e'e = (X\hat b)'(X\hat b) + e'e.$$

## 10 Problem Set

1. It can be shown that $\operatorname{trace}(AB) = \operatorname{trace}(BA)$. Using this property of the trace operator, prove that $\operatorname{trace}(P) = k$ if $X$ has $k$ columns. (Hint: $\operatorname{trace}(P) = \operatorname{trace}\big(X\,[(X'X)^{-1}X']\big) = \operatorname{trace}\big([(X'X)^{-1}X']\,X\big) = \operatorname{trace}\big((X'X)^{-1}X'X\big) = \operatorname{trace}(I)$. What is the dimension of the identity matrix?)
2. This question is taken from Goldberger. Let
$$X = \begin{pmatrix}1 & 2\\ 1 & 4\\ 1 & 3\\ 1 & 5\\ 1 & 2\end{pmatrix},\qquad y = \begin{pmatrix}1\\ 14\\ 17\\ 8\\ 16\end{pmatrix}.$$
Using Matlab, calculate the following: $X'X$, $(X'X)^{-1}$, $(X'X)^{-1}X'$, $(X'X)^{-1}X'y$, $P = X(X'X)^{-1}X'$, $Py$, $M = I - P$, $e = My$.

## 11 Application of Projection Algebra: Partitioned Regression

Partition
$$\underset{n\times k}{X} = \Big[\underset{n\times k_1}{X_1},\ \underset{n\times k_2}{X_2}\Big].$$
Note that $k_1 + k_2 = k$. Partition $\hat b$ accordingly:
$$\hat b = \begin{pmatrix}\hat b_1\\ \hat b_2\end{pmatrix}.$$
Note that $\hat b_1$ is $k_1\times1$, and $\hat b_2$ is $k_2\times1$.
Thus we have
$$y = X_1\hat b_1 + X_2\hat b_2 + e.$$
We characterize $\hat b_1$ as resulting from a two-step regression:

**Theorem 10.** We have
$$\hat b_1 = \big(\tilde X_1'\tilde X_1\big)^{-1}\tilde X_1'\tilde y,$$
where
$$\tilde y \equiv M_2y,\qquad \tilde X_1 \equiv M_2X_1,\qquad M_2 \equiv I_n - X_2(X_2'X_2)^{-1}X_2'.$$

### 11.1 Discussion

**Theorem 11.** Write $m = k_1$, and
$$X_1 = [x_1,\ldots,x_m],\qquad X_2 = [x_{m+1},\ldots,x_k].$$
Then $\tilde y = M_2y$ is the residual when $y$ is regressed on $X_2$, and
$$\tilde X_1 = M_2X_1 = M_2[x_1,\ldots,x_m] = [M_2x_1,\ldots,M_2x_m],$$
where $M_2x_1$ is the residual when $x_1$ is regressed on $X_2$.

We can thus summarize the characterization of $\hat b_1$ in the following algorithm:

1. Regress $y$ on $x_{m+1},\ldots,x_k$. Get the residual, and call it $\tilde y$. ($\tilde y$ captures the portion of $y$ not correlated with $X_2$, i.e., $\tilde y$ is $y$ partialled out with respect to $X_2$.)
2. Regress $x_1$ on $x_{m+1},\ldots,x_k$. Get the residual, and call it $\tilde x_1$. ($\tilde x_1$ captures the portion of $x_1$ not correlated with $X_2$.)
3. Repeat the second step for $x_2,\ldots,x_m$. Obtain $\tilde x_2,\ldots,\tilde x_m$.
4. Regress $\tilde y$ on $\tilde x_1,\ldots,\tilde x_m$. The coefficient estimate is numerically equal to $\hat b_1$.

You may wonder why we need to do the regression in two steps when it can be done in a single step. The reason is mainly computational. Notice that, in order to compute $\hat b$, the $k\times k$ matrix $X'X$ has to be inverted. If the number of regressors $k$ is very large, your computer may not be able to carry out this inversion (or may do so only slowly and inaccurately). This point will be useful in the application to panel data.

### 11.2 Proof

**Lemma 5.** Let $M_2 \equiv I_n - X_2(X_2'X_2)^{-1}X_2'$. Then $M_2e = e$.

*Proof.* Observe that $X_2'e = 0$ because
$$X'e = \begin{pmatrix}X_1'e\\ X_2'e\end{pmatrix} = 0.$$
Therefore,
$$M_2e = I_ne - X_2(X_2'X_2)^{-1}X_2'e = e.$$

**Lemma 6.** $M_2y = M_2X_1\hat b_1 + e$.

*Proof.* Premultiply $y = X_1\hat b_1 + X_2\hat b_2 + e$ by $M_2$, and obtain
$$M_2y = M_2X_1\hat b_1 + M_2X_2\hat b_2 + M_2e = M_2X_1\hat b_1 + e,$$
where the second equality follows from the projection algebra ($M_2X_2 = 0$, Theorem 6 applied to $X_2$) and the previous lemma.

**Lemma 7.** $X_1'M_2y = X_1'M_2X_1\hat b_1$.

*Proof.* Premultiply $M_2y = M_2X_1\hat b_1 + e$ by $X_1'$, and obtain
$$X_1'M_2y = X_1'M_2X_1\hat b_1 + X_1'e = X_1'M_2X_1\hat b_1,$$
where the second equality follows from the normal equation ($X_1'e = 0$).

Theorem 10 now follows: because $M_2$ is symmetric and idempotent, $X_1'M_2X_1 = (M_2X_1)'(M_2X_1) = \tilde X_1'\tilde X_1$ and $X_1'M_2y = (M_2X_1)'(M_2y) = \tilde X_1'\tilde y$, so Lemma 7 gives $\hat b_1 = (\tilde X_1'\tilde X_1)^{-1}\tilde X_1'\tilde y$.
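The four-step algorithm and Theorem 10 can be checked numerically. Below is a minimal sketch in plain Python, standing in for the Matlab used in this course and avoiding NumPy so that it runs anywhere. It assumes the data of the Section 13 problem set. The helper names `solve`, `ols`, and `annihilator` are our own, and Gauss-Jordan elimination replaces an explicit matrix inverse. The one-step coefficients on $X_1$ and the two-step $\hat b_1$ should agree up to rounding, as should the residuals $e$ and $\tilde e$.

```python
"""Two-step (partitioned) regression vs. one-step OLS: a pure-Python sketch."""


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    bt = transpose(b)
    return [[sum(u * v for u, v in zip(row, col)) for col in bt] for row in a]


def solve(a, b):
    """Return A^{-1} B via Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(map(float, ra)) + list(map(float, rb)) for ra, rb in zip(a, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        pivot = aug[c][c]
        aug[c] = [v / pivot for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


def ols(x, y):
    """b_hat = (X'X)^{-1} X'y, returned as a k x 1 column."""
    xt = transpose(x)
    return solve(matmul(xt, x), matmul(xt, y))


def annihilator(x):
    """M = I_n - X (X'X)^{-1} X' (an n x n matrix; fine for small n only)."""
    xt = transpose(x)
    p = matmul(x, solve(matmul(xt, x), xt))
    n = len(x)
    return [[(1.0 if i == j else 0.0) - p[i][j] for j in range(n)] for i in range(n)]


# Data from the Section 13 problem set: X1 = [constant, x], X2 = one extra regressor.
y = [[1], [3], [5], [3], [7], [9], [4]]
x1 = [[1, 3], [1, 5], [1, 3], [1, 2], [1, 8], [1, 4], [1, 7]]
x2 = [[2], [4], [6], [1], [7], [3], [1]]
x = [r1 + r2 for r1, r2 in zip(x1, x2)]

# One step: OLS on X = [X1, X2]; residual e = y - X b_hat.
b_full = ols(x, y)
e = [[yi[0] - fi[0]] for yi, fi in zip(y, matmul(x, b_full))]

# Two steps: partial X2 out of y and X1, then regress y_tilde on X1_tilde.
m2 = annihilator(x2)
y_tilde = matmul(m2, y)
x1_tilde = matmul(m2, x1)
b1_two_step = ols(x1_tilde, y_tilde)
e_tilde = [[yi[0] - fi[0]] for yi, fi in zip(y_tilde, matmul(x1_tilde, b1_two_step))]

print("b_hat (one step):   ", [round(v[0], 6) for v in b_full])
print("b_hat_1 (two steps):", [round(v[0], 6) for v in b1_two_step])
```

The two-step part only inverts $X_2'X_2$ and $\tilde X_1'\tilde X_1$, which is the computational point of Section 11.1. Forming the full $n\times n$ matrix $M_2$, as done here for transparency, is only sensible for small $n$. In practice one computes the residuals directly.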
## 12 $R^2$

Let
$$X_1 = [x_1,\ldots,x_m],\qquad X_2 = \ell,$$
where $\ell$ is the $n\times1$ vector of ones. Then
$$\tilde y = y - \ell\bar y,\qquad \tilde X_1 = [x_1 - \ell\bar x_1,\ \ldots,\ x_m - \ell\bar x_m].$$

**Remark 2.** Note that
$$X_2(X_2'X_2)^{-1}X_2' = \ell(\ell'\ell)^{-1}\ell' = \ell\,n^{-1}\ell' = \frac1n\ell\ell',$$
and therefore
$$X_2(X_2'X_2)^{-1}X_2'y = \frac1n\ell\ell'y = \ell\left(\frac1n\ell'y\right) = \ell\bar y.$$

Because $\tilde y = \tilde X_1\hat b_1 + e$ (Lemma 6) and $\tilde X_1'e = X_1'M_2e = X_1'e = 0$, we obtain
$$\tilde y'\tilde y = (\tilde X_1\hat b_1 + e)'(\tilde X_1\hat b_1 + e) = \hat b_1'\tilde X_1'\tilde X_1\hat b_1 + 2\hat b_1'\tilde X_1'e + e'e = \hat b_1'\tilde X_1'\tilde X_1\hat b_1 + e'e.$$
Here, $e'e$ denotes the portion of $\tilde y'\tilde y$ "unexplained" by the variation of $X_1$ (around its own sample average). The smaller it is relative to $\tilde y'\tilde y$, the more of $\tilde y'\tilde y$ is explained by $X_1$. From this intuitive idea, we develop
$$R^2 = 1 - \frac{e'e}{\tilde y'\tilde y}$$
as a measure of goodness of fit.

**Theorem 12.** Suppose that $X = [X_1, X_2]$. Then we have
$$\min_{b_1}\,(y - X_1b_1)'(y-X_1b_1) \ \ge\ \min_b\,(y-Xb)'(y-Xb).$$

*Proof.* We can write
$$\min_{b_1}\,(y - X_1b_1)'(y-X_1b_1) = \min_b\,(y-Xb)'(y-Xb)\quad\text{s.t.}\quad b_2 = 0.$$
The latter is bounded below by $\min_b\,(y-Xb)'(y-Xb)$, the minimum without the constraint.

**Corollary 3.**
$$1 - \frac{\min_{b_1}(y-X_1b_1)'(y-X_1b_1)}{\tilde y'\tilde y} \ \le\ 1 - \frac{\min_b(y-Xb)'(y-Xb)}{\tilde y'\tilde y}.$$
That is, adding regressors cannot lower $R^2$.

## 13 Problem Set

1. You are given
$$y = \begin{pmatrix}1\\3\\5\\3\\7\\9\\4\end{pmatrix},\qquad X = [X_1, X_2],\qquad X_1 = \begin{pmatrix}1&3\\1&5\\1&3\\1&2\\1&8\\1&4\\1&7\end{pmatrix},\qquad X_2 = \begin{pmatrix}2\\4\\6\\1\\7\\3\\1\end{pmatrix}.$$
   - (a) Using Matlab, compute
     $$\hat b = (X'X)^{-1}X'y,\qquad e = y - X\hat b,\qquad e'e,\qquad R^2 = 1 - e'e\Big/\sum_i(y_i - \bar y)^2.$$
   - (b) Write $\hat b = (\hat b_1, \hat b_2, \hat b_3)'$. Try computing $(\hat b_1,\hat b_2)$ by using the partitioned regression technique. First, using Matlab, compute $\tilde X_1 = M_2X_1$ and $\tilde y = M_2y$, where $M_2 = I - X_2(X_2'X_2)^{-1}X_2'$. (In Matlab, you would have to specify the dimension of the identity matrix. What is the dimension?) Second, using Matlab again, compute $(\tilde X_1'\tilde X_1)^{-1}\tilde X_1'\tilde y$. Is the result of your two-step calculation equal to the first two components of $\hat b$ that you computed before?
   - (c) Using Matlab, compute
     $$b_* = \arg\min_b\,(y-Xb)'(y-Xb)\quad\text{s.t.}\quad Rb = q,$$
     where $R \equiv (1, 1, 1)$ and $q = [1]$.
   - (d) Using Matlab, compute
     $$e_*'e_* = (y - Xb_*)'(y-Xb_*).$$
   - (e) Using Matlab, compute
     $$(R\hat b - q)'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat b - q).$$
     Is it equal to $e_*'e_* - e'e$?
2. You are given the same $y$, $X = [X_1, X_2]$, $X_1$, and $X_2$ as in Problem 1. Using Matlab, compute
$$\hat b = (X'X)^{-1}X'y,\qquad e = y - X\hat b.$$
Now, compute
$$M_2 = I - X_2(X_2'X_2)^{-1}X_2',\quad \tilde X_1 = M_2X_1,\quad \tilde y = M_2y,\quad \hat b_1 = (\tilde X_1'\tilde X_1)^{-1}\tilde X_1'\tilde y,\quad \tilde e = \tilde y - \tilde X_1\hat b_1.$$
Verify that $\hat b_1$ is numerically identical to the first two components of $\hat b$. Also verify that $\tilde e = e$.

# Lecture Note 3: Stochastic Properties of OLS

## 14 Note on Variances

For a scalar random variable $Z$:
$$\operatorname{Var}(Z) \equiv E\big[(Z - E[Z])^2\big] = E[Z^2] - (E[Z])^2.$$
For a random vector $Z = (Z_1, Z_2, \ldots, Z_k)'$ ($k\times1$), we have a variance-covariance matrix:
$$\begin{aligned}
\operatorname{Var}(Z) &\equiv E\big[(Z-E[Z])(Z-E[Z])'\big]\\
&= \begin{pmatrix}
E[(Z_1-E[Z_1])^2] & E[(Z_1-E[Z_1])(Z_2-E[Z_2])] & \cdots & E[(Z_1-E[Z_1])(Z_k-E[Z_k])]\\
E[(Z_2-E[Z_2])(Z_1-E[Z_1])] & E[(Z_2-E[Z_2])^2] & \cdots & E[(Z_2-E[Z_2])(Z_k-E[Z_k])]\\
\vdots & \vdots & \ddots & \vdots\\
E[(Z_k-E[Z_k])(Z_1-E[Z_1])] & E[(Z_k-E[Z_k])(Z_2-E[Z_2])] & \cdots & E[(Z_k-E[Z_k])^2]
\end{pmatrix}\\
&= \begin{pmatrix}
\operatorname{Var}(Z_1) & \operatorname{Cov}(Z_1,Z_2) & \cdots & \operatorname{Cov}(Z_1,Z_k)\\
\operatorname{Cov}(Z_2,Z_1) & \operatorname{Var}(Z_2) & \cdots & \operatorname{Cov}(Z_2,Z_k)\\
\vdots & \vdots & \ddots & \vdots\\
\operatorname{Cov}(Z_k,Z_1) & \operatorname{Cov}(Z_k,Z_2) & \cdots & \operatorname{Var}(Z_k)
\end{pmatrix}.
\end{aligned}$$
It is useful to note that
$$E\big[(Z-E[Z])(Z-E[Z])'\big] = E[ZZ'] - E[Z]E[Z]'.$$

## 15 Classical Linear Regression Model I

### 15.1 Model

- $y = X\beta + \varepsilon$
- $X$ is a nonstochastic matrix.
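- $X$ has full column rank (the columns of $X$ are linearly independent).
- $E[\varepsilon] = 0$
- $E[\varepsilon\varepsilon'] = \sigma^2I_n$ for some unknown positive number $\sigma^2$

### 15.2 Discussion

- We will later discuss the case where $X$ is stochastic, such that (i) $y = X\beta + \varepsilon$; (ii) $X$ has full column rank; (iii) $E[\varepsilon|X] = 0$; (iv) $E[\varepsilon\varepsilon'|X] = \sigma^2I_n$.
- The full-column-rank assumption amounts to the identifiability condition on $\beta$. Suppose $X$ does not have full column rank. Then, by definition, we can find some $\lambda \neq 0$ such that $X\lambda = 0$. Now, even if $\varepsilon = 0$, we would have
$$y = X\beta = X\beta + X\lambda = X(\beta + \lambda),$$
so that we would not be able to differentiate $\beta$ from $\beta + \lambda$ using the data.
- $E[\varepsilon] = 0$ is a harmless assumption if we believe that the $E[\varepsilon_i]$ are the same regardless of $i$. Write this common value as $\gamma_0$. Then we may rewrite the model as
$$y_i = \gamma_0x_{i0} + x_{i1}\beta_1 + \cdots + x_{ik}\beta_k + u_i,\qquad u_i = \varepsilon_i - \gamma_0,\quad x_{i0} = 1,$$
so that $E[u_i] = 0$ and the common mean is absorbed into the intercept.
- $E[\varepsilon\varepsilon'] = \sigma^2I_n$ consists of two parts. First, it says that all the diagonal elements, i.e., the variances of the error terms, are equal. This is called homoscedasticity. Second, it says that all the off-diagonal elements are zero. (This is typically not satisfied in time-series settings, where errors are often serially correlated.)

### 15.3 Properties of OLS

**Lemma 8.** $\hat\beta = \beta + (X'X)^{-1}X'\varepsilon$.

*Proof.*
$$\hat\beta = (X'X)^{-1}X'y = (X'X)^{-1}X'(X\beta + \varepsilon) = (X'X)^{-1}X'X\beta + (X'X)^{-1}X'\varepsilon = \beta + (X'X)^{-1}X'\varepsilon.$$

**Theorem 13.** Under the Classical Linear Regression Model I, we have
$$E[\hat\beta] = \beta\qquad\text{and}\qquad \operatorname{Var}(\hat\beta) = \sigma^2(X'X)^{-1}.$$

*Proof.*
$$E[\hat\beta] = \beta + (X'X)^{-1}X'E[\varepsilon] = \beta,$$
and
$$\begin{aligned}
\operatorname{Var}(\hat\beta) &= E\big[(\hat\beta-\beta)(\hat\beta-\beta)'\big] = E\big[(X'X)^{-1}X'\varepsilon\varepsilon'X(X'X)^{-1}\big] = (X'X)^{-1}X'E[\varepsilon\varepsilon']X(X'X)^{-1}\\
&= (X'X)^{-1}X'(\sigma^2I)X(X'X)^{-1} = \sigma^2(X'X)^{-1}X'X(X'X)^{-1} = \sigma^2(X'X)^{-1}.
\end{aligned}$$

## 16 Estimation of Variance

**Lemma 9.** $e = M\varepsilon$.

*Proof.* $e = My = M(X\beta + \varepsilon) = MX\beta + M\varepsilon = M\varepsilon$.

**Lemma 10.** $\operatorname{trace}(M) = n - k$, $\operatorname{trace}(P) = k$.

*Proof.*
$$\operatorname{trace}(P) = \operatorname{trace}\big(X(X'X)^{-1}X'\big) = \operatorname{trace}\big((X'X)^{-1}X'X\big) = \operatorname{trace}(I_k) = k,$$
$$\operatorname{trace}(M) = \operatorname{trace}(I_n - P) = \operatorname{trace}(I_n) - \operatorname{trace}(P) = n - k.$$

**Theorem 14.** Let
$$s^2 = \frac{e'e}{n-k}.$$
Then $E[s^2] = \sigma^2$.

*Proof.*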
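Observe that
$$e'e = \varepsilon'M'M\varepsilon = \varepsilon'M\varepsilon = \operatorname{trace}(\varepsilon'M\varepsilon) = \operatorname{trace}(M\varepsilon\varepsilon'),$$
and hence
$$E[e'e] = E\big[\operatorname{trace}(M\varepsilon\varepsilon')\big] = \operatorname{trace}\big(ME[\varepsilon\varepsilon']\big) = \operatorname{trace}(M\sigma^2I) = \sigma^2\operatorname{trace}(M) = (n-k)\sigma^2.$$
Dividing by $n-k$ gives $E[s^2] = \sigma^2$.

**Corollary 4.**
$$E\big[s^2(X'X)^{-1}\big] = \operatorname{Var}(\hat\beta).$$
In other words, $s^2(X'X)^{-1}$ is an unbiased estimator of $\operatorname{Var}(\hat\beta)$.

## 17 Basic Asymptotic Theory: Convergence in Probability

**Remark 3.** A sequence of nonstochastic real numbers $a_n$ converges to $a$ if for any $\delta > 0$ there exists $N = N(\delta)$ such that $|a_n - a| < \delta$ for all $n \ge N$.

**Definition 2.** A sequence of random variables $\{z_n\}$ converges in probability to $c$, a deterministic number, if
$$\lim_{n\to\infty}\Pr[|z_n - c| \ge \delta] = 0$$
for any $\delta > 0$. We sometimes write this as $\operatorname{plim}_{n\to\infty} z_n = c$. For a sequence of random matrices $A_n$, we have convergence in probability to a deterministic matrix $A$ if every element of $A_n$ converges in probability to the corresponding element of $A$.

**Remark 4.** If $a_n$ is a sequence of nonstochastic real numbers converging to $a$, and if $g(\cdot)$ is continuous at $a$, we have $\lim_{n\to\infty}g(a_n) = g(a)$.

**Theorem 15 (Slutsky).** Suppose that $\operatorname{plim}_{n\to\infty} z_n = c$. Also suppose that $g(\cdot)$ is continuous at $c$. We then have $\operatorname{plim}_{n\to\infty} g(z_n) = g(c)$.

**Corollary 5.** If
$$\operatorname{plim}_{n\to\infty} z_{1n} = c_1,\qquad \operatorname{plim}_{n\to\infty} z_{2n} = c_2,$$
then
$$\operatorname{plim}_{n\to\infty}(z_{1n} + z_{2n}) = c_1 + c_2,\qquad \operatorname{plim}_{n\to\infty} z_{1n}z_{2n} = c_1c_2,$$
and, if $c_2 \neq 0$,
$$\operatorname{plim}_{n\to\infty}\frac{z_{1n}}{z_{2n}} = \frac{c_1}{c_2}.$$

**Theorem 16 (LLN).** Given a sequence $\{z_i\}$ of i.i.d. random variables such that $E[|z_i|] < \infty$, we have $\operatorname{plim}_{n\to\infty}\bar z_n = E[z_i]$, where $\bar z_n = \frac1n\sum_{i=1}^n z_i$.

**Corollary 6.** Given a sequence $\{z_i\}$ of i.i.d. random variables such that $E[|g(z_i)|] < \infty$, we have
$$\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n g(z_i) = E[g(z_i)].$$

## 18 Classical Linear Regression Model III

### 18.1 Model

- $y_i = x_i'\beta + \varepsilon_i$
- $(x_i', \varepsilon_i)$, $i = 1, 2, \ldots$, is i.i.d.
- $x_i \perp \varepsilon_i$ ($x_i$ and $\varepsilon_i$ are independent)
- $E[\varepsilon_i] = 0$, $\operatorname{Var}(\varepsilon_i) = \sigma^2$
- $E[x_ix_i']$ is positive definite. Furthermore, all of its elements are finite.

### 18.2 Some Auxiliary Lemmas

**Lemma 11.**
$$\hat\beta = \beta + \left(\frac1n\sum_{i=1}^n x_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^n x_i\varepsilon_i\right).$$

*Proof.*
$$\hat\beta = (X'X)^{-1}X'y = (X'X)^{-1}X'(X\beta + \varepsilon) = \beta + (X'X)^{-1}X'\varepsilon.$$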
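Because $X'X = \sum_i x_ix_i'$ and $X'\varepsilon = \sum_i x_i\varepsilon_i$, this equals
$$\beta + \left(\sum_{i=1}^n x_ix_i'\right)^{-1}\left(\sum_{i=1}^n x_i\varepsilon_i\right) = \beta + \left(\frac1n\sum_{i=1}^n x_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^n x_i\varepsilon_i\right).$$

**Lemma 12.**
$$s^2 = \frac{1}{n-k}\sum_{i=1}^n\varepsilon_i^2 - \frac{2}{n-k}\left(\sum_{i=1}^n\varepsilon_ix_i'\right)(\hat\beta - \beta) + (\hat\beta-\beta)'\left(\frac{1}{n-k}\sum_{i=1}^n x_ix_i'\right)(\hat\beta-\beta).$$

*Proof.*
$$s^2 = \frac{1}{n-k}\sum_{i=1}^n e_i^2 = \frac{1}{n-k}\sum_{i=1}^n(y_i - x_i'\hat\beta)^2 = \frac{1}{n-k}\sum_{i=1}^n\big(\varepsilon_i - x_i'(\hat\beta-\beta)\big)^2,$$
and expanding the square gives the result.

**Lemma 13.** $\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n x_ix_i' = E[x_ix_i']$.

*Proof.* This follows from the LLN (Corollary 6), applied element by element.

**Lemma 14.** $\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n x_i\varepsilon_i = 0$.

*Proof.*
$$\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n x_i\varepsilon_i = E[x_i\varepsilon_i] = E[x_i]E[\varepsilon_i] = 0.$$

**Lemma 15.** $\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n\varepsilon_i^2 = \sigma^2$.

*Proof.*
$$\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n\varepsilon_i^2 = E[\varepsilon_i^2] = \sigma^2.$$

### 18.3 Large Sample Property of OLS

**Definition 3.** An estimator $\hat\beta$ is consistent for $\beta$ if $\operatorname{plim}_{n\to\infty}\hat\beta = \beta$.

**Theorem 17.** $\hat\beta$ is consistent for $\beta$.

*Proof.*
$$\begin{aligned}
\operatorname{plim}_{n\to\infty}\hat\beta &= \operatorname{plim}_{n\to\infty}\left[\beta + \left(\frac1n\sum_{i=1}^n x_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^n x_i\varepsilon_i\right)\right]\\
&= \beta + \left(\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n x_ix_i'\right)^{-1}\operatorname{plim}_{n\to\infty}\left(\frac1n\sum_{i=1}^n x_i\varepsilon_i\right)\\
&= \beta + E[x_ix_i']^{-1}\cdot 0 = \beta.
\end{aligned}$$

**Theorem 18.** $\operatorname{plim}_{n\to\infty}s^2 = \sigma^2$.

*Proof.* Using Lemma 12 and $n/(n-k) \to 1$,
$$\begin{aligned}
\operatorname{plim}_{n\to\infty}s^2 &= \operatorname{plim}\frac{n}{n-k}\cdot\frac1n\sum_{i=1}^n\varepsilon_i^2 - 2\operatorname{plim}\left(\frac{n}{n-k}\cdot\frac1n\sum_{i=1}^n\varepsilon_ix_i'\right)\operatorname{plim}(\hat\beta-\beta)\\
&\quad + \operatorname{plim}(\hat\beta-\beta)'\,\operatorname{plim}\left(\frac{n}{n-k}\cdot\frac1n\sum_{i=1}^n x_ix_i'\right)\operatorname{plim}(\hat\beta-\beta)\\
&= \sigma^2 - 2\cdot0'\cdot0 + 0'\,E[x_ix_i']\,0 = \sigma^2.
\end{aligned}$$

## 19 Large Sample Property of OLS with Model I?

**Lemma 16.** If a sequence of random variables $\{z_n\}$ is such that $E[(z_n - c)^2] \to 0$, then $\operatorname{plim}_{n\to\infty}z_n = c$.

*Proof.* Let $\delta > 0$ be given. Note that
$$E[(z_n-c)^2] = \int(z-c)^2\,dF_n(z),$$
where $F_n$ denotes the CDF of $z_n$. But
$$\begin{aligned}
\int(z-c)^2\,dF_n(z) &= \int_{|z-c|\ge\delta}(z-c)^2\,dF_n(z) + \int_{|z-c|<\delta}(z-c)^2\,dF_n(z) \ \ge\ \int_{|z-c|\ge\delta}(z-c)^2\,dF_n(z)\\
&\ge \int_{|z-c|\ge\delta}\delta^2\,dF_n(z) = \delta^2\int_{|z-c|\ge\delta}dF_n(z) = \delta^2\Pr[|z_n-c|\ge\delta].
\end{aligned}$$
It follows that
$$\Pr[|z_n-c|\ge\delta] \le \frac{E[(z_n-c)^2]}{\delta^2} \to 0.$$

We will now use the fact that, under the Classical Linear Regression Model I, we have $E[\hat\beta] = \beta$ and $\operatorname{Var}(\hat\beta) = \sigma^2(X'X)^{-1}$. We first note that
$$\begin{aligned}
E\big[(\hat\beta-\beta)'(\hat\beta-\beta)\big] &= E\Big[\operatorname{trace}\big((\hat\beta-\beta)'(\hat\beta-\beta)\big)\Big] = E\Big[\operatorname{trace}\big((\hat\beta-\beta)(\hat\beta-\beta)'\big)\Big]\\
&= \operatorname{trace}\Big(E\big[(\hat\beta-\beta)(\hat\beta-\beta)'\big]\Big) = \operatorname{trace}\big(\operatorname{Var}(\hat\beta)\big) = \sigma^2\operatorname{trace}\big((X'X)^{-1}\big).
\end{aligned}$$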
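Since $X'X = \sum_i x_ix_i'$, this equals
$$\sigma^2\operatorname{trace}\left(\Big(\sum_{i=1}^n x_ix_i'\Big)^{-1}\right) = \frac{\sigma^2}{n}\operatorname{trace}\left(\Big(\frac1n\sum_{i=1}^n x_ix_i'\Big)^{-1}\right).$$
Therefore, IF we assume that
$$\operatorname{trace}\left(\Big(\frac1n\sum_{i=1}^n x_ix_i'\Big)^{-1}\right) \le B < \infty$$
for all $n$, we can conclude that
$$E\big[(\hat\beta-\beta)'(\hat\beta-\beta)\big] \le \frac{\sigma^2B}{n} \to 0,$$
from which (applying Lemma 16 to each element, since $E[(\hat\beta_j - \beta_j)^2] \le E[(\hat\beta-\beta)'(\hat\beta-\beta)]$) we can conclude that
$$\operatorname{plim}_{n\to\infty}\hat\beta = \beta.$$
The only question is how we can make sure that $\operatorname{trace}\big((\frac1n\sum_{i=1}^n x_ix_i')^{-1}\big) \le B < \infty$. Different textbooks adopt different assumptions to ensure this property.

# Lecture Note 4: Statistical Inference with Normality Assumption

## 20 Review of Multivariate Normal Distribution

**Definition 4.** A square matrix $A$ is called positive definite if $t'At > 0$ for all $t \neq 0$.

**Theorem 19.** A symmetric positive definite matrix is nonsingular.

**Definition 5 (Multivariate Normal Distribution).** An $n$-dimensional random (column) vector $Z$ has a multivariate normal distribution if its joint pdf equals
$$\frac{1}{(2\pi)^{n/2}\sqrt{\det\Sigma}}\exp\left(-\frac12(z-\mu)'\Sigma^{-1}(z-\mu)\right)$$
for some $\underset{n\times1}{\mu}$ and positive definite $\underset{n\times n}{\Sigma}$. We then write $Z \sim N(\mu,\Sigma)$. It can be shown that $E[Z] = \mu$ and $\operatorname{Var}(Z) = \Sigma$.

**Theorem 20.** Let $L$ be an $m\times n$ deterministic matrix. Then
$$Z \sim N(\mu,\Sigma)\ \Rightarrow\ LZ \sim N(L\mu, L\Sigma L').$$

**Theorem 21.** Assume that
$$Z = \begin{pmatrix}Z_1\\ Z_2\end{pmatrix}$$
has a multivariate normal distribution, and that $E[(Z_1 - \mu_1)(Z_2-\mu_2)'] = 0$. Then $Z_1$ and $Z_2$ are independent.

**Theorem 22.** Suppose that $Z_1,\ldots,Z_n$ are i.i.d. $N(0,1)$. Then $Z = (Z_1,\ldots,Z_n)' \sim N(0, I_n)$.

**Theorem 23.**
$$Z \sim N(0, I_n)\ \Rightarrow\ Z'Z \sim \chi^2(n).$$
$n$ is called the degrees of freedom.

**Theorem 24.** Suppose that an $n\times n$ matrix $\Sigma$ is positive definite, and $Z \sim N(0,\Sigma)$. Then $Z'\Sigma^{-1}Z \sim \chi^2(n)$.

**Theorem 25.** Suppose that an $n\times n$ matrix $A$ is symmetric and idempotent, and $Z \sim N(0, I_n)$. Then $Z'AZ \sim \chi^2(\operatorname{trace}(A))$.

## 21 Classical Linear Regression Model II

In addition to the assumptions of the Classical Linear Regression Model I, we now assume that $\varepsilon$ has a multivariate normal distribution.

### 21.1 Sampling Property of OLS Estimator

**Theorem 26.**
$$\hat\beta \sim N\big(\beta, \sigma^2(X'X)^{-1}\big).$$

*Proof.* Recall that
$$E[\hat\beta] = \beta,\qquad \operatorname{Var}(\hat\beta) = \sigma^2(X'X)^{-1}$$
even without the normality assumption. It thus suffices to establish normality. Note that $\hat\beta - \beta = (X'X)^{-1}X'\varepsilon$, a linear combination of the multivariate normal vector $\varepsilon$.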
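Thus (Theorem 20), $\hat\beta - \beta$, and hence $\hat\beta$, has a normal distribution.

**Definition 6.** For simplicity of notation, write
$$V \equiv \sigma^2(X'X)^{-1},\qquad \hat V \equiv s^2(X'X)^{-1}.$$

## 22 Confidence Interval: Known Variance

For a given $k\times1$ vector $r$, we are interested in inference on $r'\beta$. Specifically, we want to construct a 95% confidence interval.

**Theorem 27 (95% Confidence Interval).** The random interval
$$\left[r'\hat\beta - 1.96\sqrt{r'Vr},\ r'\hat\beta + 1.96\sqrt{r'Vr}\right]$$
contains $r'\beta$ with 95% probability:
$$\Pr\left[r'\hat\beta - 1.96\sqrt{r'Vr} \le r'\beta \le r'\hat\beta + 1.96\sqrt{r'Vr}\right] = .95.$$

*Proof.* Because
$$r'\hat\beta \sim N(r'\beta, r'Vr),$$
we have
$$\frac{r'\hat\beta - r'\beta}{\sqrt{r'Vr}} \sim N(0,1)\qquad\text{and}\qquad \Pr\left[\left|\frac{r'\hat\beta - r'\beta}{\sqrt{r'Vr}}\right| \le 1.96\right] = .95.$$

**Corollary 7.** Write
$$\beta = (\beta_1,\ldots,\beta_k)',\qquad \hat\beta = (\hat\beta_1,\ldots,\hat\beta_k)'.$$
Note that
$$\hat\beta_j = a_j'\hat\beta,\qquad \beta_j = a_j'\beta,$$
where $a_j$ is the $k$-dimensional vector whose $j$th component equals 1 and whose remaining elements all equal 0. The preceding theorem implies that
$$\left[\hat\beta_j - 1.96\sqrt{V_{jj}},\ \hat\beta_j + 1.96\sqrt{V_{jj}}\right],$$
where $V_{jj}$ is the $(j,j)$-element of $V$, is a valid 95% confidence interval for $\beta_j$. Noting that $V_{jj}$ is the variance of $\hat\beta_j$, we come back to the undergraduate confidence interval:
$$\text{estimator} \pm 1.96\sqrt{\text{variance}}\,!$$

## 23 Hypothesis Test: Known Variance

**Theorem 28 (Single Hypothesis: 5% Significance Level).** Given
$$H_0: r'\beta = q\qquad\text{vs.}\qquad H_A: r'\beta \neq q,$$
the test which rejects the null iff
$$\left|\frac{r'\hat\beta - q}{\sqrt{r'Vr}}\right| \ge 1.96$$
has size equal to 5%: under $H_0$,
$$\Pr\left[\left|\frac{r'\hat\beta - q}{\sqrt{r'Vr}}\right| \ge 1.96\right] = .05.$$

**Corollary 8.** Suppose we want to test
$$H_0: \beta_j = 0\qquad\text{vs.}\qquad H_A: \beta_j \neq 0.$$
The preceding theorem suggests that we reject the null iff
$$\left|\frac{\hat\beta_j}{\sqrt{V_{jj}}}\right| \ge 1.96.$$
This confirms the procedure from undergraduate training, which rejects the null iff
$$\left|\frac{\hat\beta_j}{\sqrt{\text{variance of }\hat\beta_j}}\right| \ge 1.96.$$

**Theorem 29 (Multiple Hypotheses: 5% Significance Level).** Given
$$H_0: \underset{m\times k}{R}\,\beta = q\qquad\text{vs.}\qquad H_A: R\beta \neq q,$$
with $R$ of full row rank, the test which rejects the null iff
$$(R\hat\beta - q)'(RVR')^{-1}(R\hat\beta - q) \ge \chi^2_{.05}(m),$$
where $\chi^2_{.05}(m)$ denotes the upper 5 percentile of the $\chi^2(m)$ distribution, has size equal to 5%.

*Proof.* It follows easily (from Theorems 20 and 24) from the fact that, under $H_0$,
$$R\hat\beta - q \sim N(0, RVR')\qquad\text{and therefore}\qquad (R\hat\beta - q)'(RVR')^{-1}(R\hat\beta - q) \sim \chi^2(m).$$

## 24 Problem Set

1. (In this question, you are expected to verify the theorems discussed in class using MATLAB. Turn in your MATLAB program along with the results.)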
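Consider the linear model given by
$$y_i = \beta_1 + \beta_2x_{i2} + \varepsilon_i,\qquad i = 1,\ldots,n,$$
where $\varepsilon_i \sim$ i.i.d. $N(0,\sigma^2)$. Let $\hat\beta_1$ and $\hat\beta_2$ denote the OLS estimators of $\beta_1$ and $\beta_2$. Suppose that $\beta_1 = \beta_2 = \sigma^2 = 1$ and $n = 9$. Note that the above model can be compactly written as
$$y = X\beta + \varepsilon \tag{1}$$
for
$$X = \begin{pmatrix}1 & 1\\ 1 & 2\\ \vdots & \vdots\\ 1 & 9\end{pmatrix}\qquad\text{and}\qquad \beta = \begin{pmatrix}\beta_1\\ \beta_2\end{pmatrix} = \begin{pmatrix}1\\ 1\end{pmatrix}.$$
   - (a) Suppose that $x_{i2} = i$. Show that $\hat\beta_2 \sim N\left(1, \frac{1}{60}\right)$.
   - (b) Show that
     $$\Pr\left[\hat\beta_2 - 1.96\frac{1}{\sqrt{60}} \le \beta_2 \le \hat\beta_2 + 1.96\frac{1}{\sqrt{60}}\right] = .95.$$

   Let $u^{(1)},\ldots,u^{(1000)}$ denote 1,000 independent $N(0,\sigma^2I_n)$ random vectors. Notice that $y$ in (1) has the same distribution as $y^{(j)}$ given by
   $$y^{(j)} \equiv X\beta + u^{(j)}.$$
   Let $\hat\beta_2^{(j)}$ denote the OLS estimator of $\beta_2$ in the above model. The $\hat\beta_2^{(j)}$ are i.i.d. random variables which have the same distribution as $\hat\beta_2$. Let
   $$D^{(j)} = \begin{cases}1 & \text{if } \hat\beta_2^{(j)} - 1.96\frac{1}{\sqrt{60}} \le \beta_2 \le \hat\beta_2^{(j)} + 1.96\frac{1}{\sqrt{60}},\\ 0 & \text{otherwise.}\end{cases}$$
   Show that we have $E[D^{(j)}] = .95$. Argue that
   $$\frac{1}{1000}\sum_{j=1}^{1000}D^{(j)} \approx .95.$$
   Verify that this indeed is the case by generating 1,000 independent $u^{(j)}$ on the computer. (This type of experiment is called a Monte Carlo experiment.)

## 25 Confidence Interval: Unknown Variance

**Lemma 17.**
$$\frac{e'e}{\sigma^2} \sim \chi^2(n-k).$$

*Proof.* Let
$$u \equiv \frac{\varepsilon}{\sigma} \sim N(0, I_n).$$
Then, by Lemma 9 and Theorem 25,
$$\frac{e'e}{\sigma^2} = u'Mu \sim \chi^2(\operatorname{trace}(M)) = \chi^2(n-k).$$

**Lemma 18.** $\hat\beta$ and $e'e$ are independently distributed.

*Proof.* It suffices to prove that $\hat\beta$ and $e$ are independently distributed. But
$$\begin{pmatrix}\hat\beta - \beta\\ e\end{pmatrix} = \begin{pmatrix}(X'X)^{-1}X'\varepsilon\\ M\varepsilon\end{pmatrix} = \begin{pmatrix}(X'X)^{-1}X'\\ M\end{pmatrix}\varepsilon$$
has a multivariate normal distribution because it is a linear combination of $\varepsilon$. It thus suffices (Theorem 21) to prove that $\hat\beta$ and $e$ are uncorrelated. Their covariance equals
$$E\big[(\hat\beta-\beta)e'\big] = E\big[(X'X)^{-1}X'\varepsilon\varepsilon'M\big] = (X'X)^{-1}X'E[\varepsilon\varepsilon']M = \sigma^2(X'X)^{-1}(X'M) = 0,$$
since $X'M = (MX)' = 0$.

**Theorem 30.**
$$\frac{r'\hat\beta - r'\beta}{\sqrt{r'\hat Vr}} \sim t(n-k).$$

*Proof.* Notice that
$$r'\hat\beta \sim N\big(r'\beta, \sigma^2r'(X'X)^{-1}r\big)\ \Rightarrow\ \frac{r'\hat\beta - r'\beta}{\sqrt{\sigma^2r'(X'X)^{-1}r}} \sim N(0,1).$$
We also have
$$\frac{e'e}{\sigma^2} \sim \chi^2(n-k).$$
Now observe that $\hat\beta$ and $e'e$ are independently distributed, so that
$$\frac{r'\hat\beta - r'\beta}{\sqrt{\sigma^2r'(X'X)^{-1}r}}\ \perp\ \frac{e'e}{\sigma^2}.$$
It thus follows that
$$\frac{(r'\hat\beta - r'\beta)\big/\sqrt{\sigma^2r'(X'X)^{-1}r}}{\sqrt{\dfrac{e'e}{\sigma^2}\Big/(n-k)}} \sim t(n-k).$$
Because
$$\frac{(r'\hat\beta - r'\beta)\big/\sqrt{\sigma^2r'(X'X)^{-1}r}}{\sqrt{\dfrac{e'e}{\sigma^2}\Big/(n-k)}} = \frac{r'\hat\beta - r'\beta}{\sqrt{e'e/(n-k)}\sqrt{r'(X'X)^{-1}r}} = \frac{r'\hat\beta - r'\beta}{\sqrt{s^2r'(X'X)^{-1}r}} = \frac{r'\hat\beta - r'\beta}{\sqrt{r'\hat Vr}},$$
we obtain the desired conclusion.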
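The Monte Carlo experiment of the Section 24 problem set can be sketched in plain Python rather than MATLAB, and extended to the $t$-based interval whose pivot is derived in Theorem 30. Assumptions: $\beta_1 = \beta_2 = \sigma^2 = 1$, $n = 9$, and $x_{i2} = i$ as in the problem, so that $\sum_i(x_{i2} - \bar x_2)^2 = 60$ and $V_{22} = \sigma^2/60$. The value 2.365 for the upper 2.5 percentile of $t(7)$ is hard-coded from standard tables, because the Python standard library has no $t$ quantile function. The seeds and helper names are arbitrary choices. Both coverage rates should come out close to .95, up to Monte Carlo error.

```python
"""Monte Carlo coverage of the known-variance and t-based 95% intervals for beta_2."""
import math
import random

N = 9
X2 = list(range(1, N + 1))            # x_{i2} = i
BETA1, BETA2, SIGMA = 1.0, 1.0, 1.0   # beta_1 = beta_2 = sigma^2 = 1
Z_975 = 1.96                          # N(0,1) upper 2.5% point
T_975_DF7 = 2.365                     # t(7) upper 2.5% point (n - k = 9 - 2), from t tables


def ols_simple(x, y):
    """OLS of y on (1, x); returns (b1, b2, s^2, S_xx) with S_xx = sum (x - xbar)^2."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b2 = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    b1 = ybar - b2 * xbar
    s2 = sum((yi - b1 - b2 * xi) ** 2 for xi, yi in zip(x, y)) / (n - 2)
    return b1, b2, s2, sxx


def coverage(reps=1000, seed=12345):
    """Fraction of replications in which each interval contains the true beta_2."""
    rng = random.Random(seed)
    hits_known = hits_t = 0
    for _ in range(reps):
        u = [rng.gauss(0.0, SIGMA) for _ in X2]           # u^(j) ~ N(0, sigma^2 I_n)
        y = [BETA1 + BETA2 * xi + ui for xi, ui in zip(X2, u)]
        _, b2, s2, sxx = ols_simple(X2, y)
        sd_known = SIGMA / math.sqrt(sxx)                 # sqrt(V_22) = 1 / sqrt(60)
        se_hat = math.sqrt(s2 / sxx)                      # sqrt(Vhat_22)
        hits_known += abs(b2 - BETA2) <= Z_975 * sd_known   # this is D^(j)
        hits_t += abs(b2 - BETA2) <= T_975_DF7 * se_hat
    return hits_known / reps, hits_t / reps


if __name__ == "__main__":
    print(coverage())
```

With 1,000 replications the simulation standard error of a coverage rate near .95 is about $\sqrt{.95\times.05/1000}\approx .007$, so values between roughly .93 and .97 are unremarkable.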
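**Theorem 31 (95% Confidence Interval).** The random interval
$$\left[r'\hat\beta - t_{.025}(n-k)\sqrt{r'\hat Vr},\ r'\hat\beta + t_{.025}(n-k)\sqrt{r'\hat Vr}\right],$$
where $t_{.025}(n-k)$ denotes the upper 2.5 percentile of the $t(n-k)$ distribution, contains $r'\beta$ with 95% probability:
$$\Pr\left[r'\hat\beta - t_{.025}(n-k)\sqrt{r'\hat Vr} \le r'\beta \le r'\hat\beta + t_{.025}(n-k)\sqrt{r'\hat Vr}\right] = .95.$$

*Proof.* It follows easily from
$$\frac{r'\hat\beta - r'\beta}{\sqrt{r'\hat Vr}} \sim t(n-k).$$

**Theorem 32.**
$$\frac1m(R\hat\beta - R\beta)'\big(R\hat VR'\big)^{-1}(R\hat\beta - R\beta) \sim F(m, n-k).$$

*Proof.* Observe that
$$(R\hat\beta - R\beta)'\big[\sigma^2R(X'X)^{-1}R'\big]^{-1}(R\hat\beta - R\beta) \sim \chi^2(m),\qquad \frac{(n-k)s^2}{\sigma^2} = \frac{e'e}{\sigma^2} \sim \chi^2(n-k).$$
The former, being a function of $\hat\beta$, is independent of the latter. It thus follows that
$$\frac{(R\hat\beta - R\beta)'\big[\sigma^2R(X'X)^{-1}R'\big]^{-1}(R\hat\beta - R\beta)\big/m}{\dfrac{(n-k)s^2}{\sigma^2}\Big/(n-k)} \sim F(m, n-k).$$
Because
$$\frac{(R\hat\beta - R\beta)'\big[\sigma^2R(X'X)^{-1}R'\big]^{-1}(R\hat\beta - R\beta)\big/m}{\dfrac{(n-k)s^2}{\sigma^2}\Big/(n-k)} = \frac{(R\hat\beta - R\beta)'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat\beta - R\beta)}{m\,s^2} = \frac1m(R\hat\beta - R\beta)'\big(R\hat VR'\big)^{-1}(R\hat\beta - R\beta),$$
we obtain the desired conclusion.

**Corollary 9.**
$$\Pr\left[\frac1m(R\hat\beta - R\beta)'\big(R\hat VR'\big)^{-1}(R\hat\beta - R\beta) \ge F_{.05}(m, n-k)\right] = .05,$$
where $F_{.05}(m,n-k)$ denotes the upper 5 percentile of the $F(m,n-k)$ distribution.

**Remark 5.** Suppose you want to test
$$H_0: R\beta = q\qquad\text{vs.}\qquad H_A: R\beta \neq q.$$
We would then reject the null at the 5% significance level iff
$$\frac1m(R\hat\beta - q)'\big(R\hat VR'\big)^{-1}(R\hat\beta - q) \ge F_{.05}(m, n-k).$$

**Remark 6.** Observe that the statistics in Theorems 29 and 32 are identical except that $\sigma^2$ is replaced by $s^2$ in Theorem 32 (and the statistic in Theorem 32 is divided by $m$).

**Theorem 33.**
$$\frac1m(R\hat\beta - q)'\big(R\hat VR'\big)^{-1}(R\hat\beta - q) = \frac{(e_*'e_* - e'e)/m}{e'e/(n-k)},$$
where $e_*$ is the residual from the restricted least squares problem
$$\min_b\,(y-Xb)'(y-Xb)\quad\text{subject to}\quad Rb = q.$$

*Proof.* Note that
$$\frac1m(R\hat\beta - q)'\big(R\hat VR'\big)^{-1}(R\hat\beta - q) = \frac{(R\hat\beta - q)'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat\beta - q)\big/m}{s^2}.$$
Recall (Theorem 4) that
$$e_*'e_* = e'e + (R\hat\beta - q)'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat\beta - q).$$
Therefore,
$$\frac1m(R\hat\beta - q)'\big(R\hat VR'\big)^{-1}(R\hat\beta - q) = \frac{(e_*'e_* - e'e)/m}{s^2} = \frac{(e_*'e_* - e'e)/m}{e'e/(n-k)}.$$

**Corollary 10.** Suppose that the first column of $X$ consists of 1s. You would want to test
$$H_0: \beta_2 = \cdots = \beta_k = 0.$$
(What are $R$ and $q$?) We then have
$$\frac1m(R\hat\beta - q)'\big(R\hat VR'\big)^{-1}(R\hat\beta - q) = \frac{R^2/(k-1)}{(1-R^2)/(n-k)}.$$

*Proof.* We need to obtain $e_*'e_*$ first.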
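Note that the constrained least squares problem can be written as
$$\min_{b_1}\sum_i\big(y_i - b_1x_{i1} - 0\cdot x_{i2} - \cdots - 0\cdot x_{ik}\big)^2 = \min_{b_1}\sum_i(y_i - b_1)^2.$$
We know that the solution is given by $b_1 = \bar y$. In other words, we have
$$b_* = (\bar y, 0, \ldots, 0)',\qquad e_*'e_* = \sum_i(y_i - \bar y)^2 = \tilde y'\tilde y.$$
With $m = k-1$, our test statistic thus equals
$$\frac{(\tilde y'\tilde y - e'e)/(k-1)}{e'e/(n-k)}.$$
Note now that
$$R^2 = 1 - \frac{e'e}{\tilde y'\tilde y}.$$
Dividing the numerator and the denominator by $\tilde y'\tilde y$, the test statistic is thus equal to
$$\frac{R^2/(k-1)}{(1-R^2)/(n-k)}.$$

**Remark 7.** The statistic
$$\frac{R^2/(k-1)}{(1-R^2)/(n-k)}$$
is the "$F$-statistic" reported by many popular software packages.

## 26 Confidence Interval for Mean: To be read, not to be taught in class

Consider the estimation of $\mu$ from $n$ i.i.d. $N(\mu,\sigma^2)$ random variables $U_1,\ldots,U_n$. It has been argued before that this is a special case of the classical linear regression model
$$y = X\beta + \varepsilon,$$
where
$$y = \begin{pmatrix}U_1\\ \vdots\\ U_n\end{pmatrix},\qquad X = \begin{pmatrix}1\\ \vdots\\ 1\end{pmatrix} = \ell,\qquad \beta = \mu,\qquad \varepsilon = \begin{pmatrix}U_1 - \mu\\ \vdots\\ U_n - \mu\end{pmatrix}.$$
The m.l.e. $\hat\beta$ equals
$$(\ell'\ell)^{-1}\ell'y = \frac1n\sum_iU_i = \bar U,$$
the sample average! We also know that the distribution of $\hat\beta$ equals
$$N\big(\beta, \sigma^2(\ell'\ell)^{-1}\big) = N\left(\mu, \frac{\sigma^2}{n}\right).$$
Thus, the 95% confidence interval for $\mu$ is
$$\left[\hat\beta - 1.96\frac{\sigma}{\sqrt n},\ \hat\beta + 1.96\frac{\sigma}{\sqrt n}\right]\qquad\text{or}\qquad \left[\bar U - 1.96\frac{\sigma}{\sqrt n},\ \bar U + 1.96\frac{\sigma}{\sqrt n}\right].$$
What if we are not fortunate enough to know $\sigma^2$? Here, we can make use of the fact that $\bar U$ and $\sum_i(U_i - \bar U)^2$ are independent of each other. Because of this independence, we know that
$$\frac{\sqrt n(\bar U - \mu)}{\sqrt{\sum_i(U_i - \bar U)^2/(n-1)}} = \frac{\sqrt n(\bar U - \mu)}{s} \sim t(n-1),\qquad s^2 = \frac{1}{n-1}\sum_i(U_i - \bar U)^2.$$
It thus follows that we can use
$$\left[\bar U - t_{.025}(n-1)\frac{s}{\sqrt n},\ \bar U + t_{.025}(n-1)\frac{s}{\sqrt n}\right],$$
where $t_{.025}(n-1)$ is the upper 2.5 percentile of $t(n-1)$ (i.e., its 97.5th percentile), as the 95% confidence interval for $\mu$ in this case.

## 27 Problem Set

In this problem set, you are expected to read and replicate results of Mankiw, Romer, and Weil (1992, *QJE*). Use MATLAB.

1. Select observations such that the "nonoil" variable is equal to 1 and discard the rest of the observations. (How many observations do you have now?) For each country $i$, create
   - $y_i = \ln(\text{GDP per working-age person in 1985})$
   - $x_{i1} = 1$
   - $x_{i2} = \ln(I/\text{GDP})$
   - $x_{i3} = \ln(\text{growth rate of the working-age population between 1960 and 1985} + g + \delta)$

   assuming that $g + \delta = .05$.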
2. Assume that
$$y_i = x_{i1}\beta_1 + x_{i2}\beta_2 + x_{i3}\beta_3 + \varepsilon_i,$$
where $\varepsilon_i \sim$ i.i.d. $N(0,\sigma^2)$.
   - Compute the OLS estimator $\hat\beta = (\hat\beta_1, \hat\beta_2, \hat\beta_3)'$ of $\beta = (\beta_1,\beta_2,\beta_3)'$.
   - Compute the sum of squared residuals $e'e$.
   - Compute an unbiased estimator $s^2$ of $\sigma^2$.
   - Compute an estimator of the variance-covariance matrix of $\hat\beta$.
   - Compute the estimated standard deviations (standard errors) of $\hat\beta_1$, $\hat\beta_2$, and $\hat\beta_3$.
   - Present 95% confidence intervals for $\beta_1$, $\beta_2$, and $\beta_3$.
3. Because $R^2$ never decreases as more regressors are added to the model, other measures have been developed. The adjusted $R^2$ is computed as
$$\bar R^2 = 1 - \frac{\text{sample size} - 1}{\text{sample size} - \text{number of regressors}}\,(1 - R^2).$$
Compute $R^2$ and $\bar R^2$.
4. You want to compute the least squares estimator under the restriction that $\beta_3 = -\beta_2$. One possibility is to rewrite the model as
$$y_i = x_{i1}\beta_1 + x_{i2}\beta_2 - x_{i3}\beta_2 + \varepsilon_i = x_{i1}\beta_1 + (x_{i2} - x_{i3})\beta_2 + \varepsilon_i$$
and consider the OLS regression of $y_i$ on $x_{i1}$ and $x_{i2} - x_{i3}$. Compute the OLS estimator $(\tilde\beta_1, \tilde\beta_2)$ of $(\beta_1, \beta_2)$ this way. (Note that this trick is an attempt to compute the restricted least squares estimator of $(\beta_1,\beta_2,\beta_3) = (\beta_1,\beta_2,-\beta_2)$ as $(\tilde\beta_1, \tilde\beta_2, -\tilde\beta_2)$.) Also compute the sum of squared residuals $e_*'e_*$ for this restricted model.
5. The restricted least squares problem in the previous question can be written as
$$\min_c\,(y - Xc)'(y - Xc)\quad\text{subject to}\quad Rc = q.$$
What is $R$? What is $q$? In class, it was argued that the solution to such a problem is given by
$$\hat\beta - (X'X)^{-1}R'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat\beta - q),$$
where $\hat\beta$ is the OLS estimator for the unrestricted model. Is it equal to $(\tilde\beta_1, \tilde\beta_2, -\tilde\beta_2)'$, which you obtained in the previous question? We also learned in class that
$$e_*'e_* - e'e = (R\hat\beta - q)'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat\beta - q).$$
Subtract $e'e$ from $e_*'e_*$. Is the difference equal to $(R\hat\beta - q)'\big[R(X'X)^{-1}R'\big]^{-1}(R\hat\beta - q)$?
6. You can test the restriction that $\beta_3 = -\beta_2$, or $r'\beta = q$ for $r' = (0, 1, 1)$ and $q = 0$, by computing the $t$-statistic
$$\frac{r'\hat\beta - q}{\sqrt{s^2r'(X'X)^{-1}r}}. \tag{2}$$
Compute the $t$-statistic in (2) with MATLAB, and implement the $t$-test at the 5% significance level.
You can test the restriction that $\beta_3=-\beta_2$, or $R\beta=q$ for $R=(0,1,1)$ and $q=0$, by computing the $F$-statistic
$$\frac{(Rb-q)'\left[R(X'X)^{-1}R'\right]^{-1}(Rb-q)/m}{s^2}.\tag{3}$$
What is $m$ in this case? Compute the $F$-statistic in (3) in MATLAB, and implement the $F$-test at the 5% significance level.

8. Compute the square of the $t$-statistic in question 6 and compare it with the $F$-statistic in question 7. Are they equal to each other? In fact, if $R$ consists of a single row so that $r'=R$, then the square of the $t$-statistic in (2) is numerically equivalent to the $F$-statistic in (3). Provide a theoretical proof of this equality.

9. It was shown that the $F$-statistic computed as in (3) is numerically equal to
$$\frac{\left(e_*'e_*-e'e\right)/m}{e'e/(\text{sample size}-\text{number of regressors in the unrestricted model})}.$$
Compute the $F$-statistic this way, and see if it is indeed equal to the value you computed in question 7.

10. You can test the restriction in a slightly different way. Rewrite the model as
$$y_i=x_{i1}\beta_1+\left(x_{i2}-x_{i3}\right)\beta_2+x_{i3}\left(\beta_3+\beta_2\right)+\varepsilon_i.$$
This can be understood as a regression of $y_i$ on $(1,x_{i2}-x_{i3},x_{i3})$. If the restriction is correct, the coefficient of $x_{i3}$ in this model should be equal to zero. Therefore, if the estimated coefficient of $x_{i3}$ is significantly different from zero, we can take it as evidence against the restriction and reject it. Compute the regression coefficient. Compute the $t$-statistic. Would you accept the restriction or reject it (at the 5% significance level)?

11. Based on their knowledge of capital's share in income, Mankiw, Romer and Weil (1992) entertained the multiple hypothesis that $(\beta_2,\beta_3)=(0.5,-0.5)$. This can be written as
$$\begin{bmatrix}0&1&0\\0&0&1\end{bmatrix}\begin{bmatrix}\beta_1\\\beta_2\\\beta_3\end{bmatrix}=\begin{bmatrix}0.5\\-0.5\end{bmatrix}.$$
Compute the corresponding $F$-statistic. Would you accept the null at the 5% significance level?
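The problem set asks for MATLAB. Purely as a cross-check of the algebra behind questions 2 through 9 and 11, here is a sketch in Python/NumPy. The data are synthetic stand-ins generated inside the script, not the Mankiw-Romer-Weil sample, so the numbers themselves carry no meaning. What the sketch does show are the identities the questions ask about:

* the restricted LS formula of question 5 reproduces the substitution trick of question 4;
* $t^2=F$ for a single restriction (question 8);
* the Wald form (3) equals the SSR form of question 9;
* the overall $F$ equals the $R^2$ form in Remark 7.

The helper names `ols`, `restricted_ls` and `f_stat` are hypothetical.

```python
import numpy as np


def ols(X, y):
    """Return OLS coefficients b, residuals e, s^2 = e'e/(n-k), and (X'X)^{-1}."""
    n, k = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    b = XtX_inv @ (X.T @ y)
    e = y - X @ b
    s2 = (e @ e) / (n - k)
    return b, e, s2, XtX_inv


def restricted_ls(b, XtX_inv, R, q):
    """b_* = b - (X'X)^{-1} R' [R (X'X)^{-1} R']^{-1} (R b - q)."""
    A = R @ XtX_inv @ R.T
    return b - XtX_inv @ R.T @ np.linalg.solve(A, R @ b - q)


def f_stat(b, XtX_inv, s2, R, q):
    """Wald form (3): (Rb-q)' [R (X'X)^{-1} R']^{-1} (Rb-q) / m / s^2."""
    d = R @ b - q
    m = R.shape[0]
    return (d @ np.linalg.solve(R @ XtX_inv @ R.T, d)) / m / s2


# Synthetic stand-in data (hypothetical values, NOT the MRW sample).
rng = np.random.default_rng(0)
n = 98
x2 = rng.normal(-1.8, 0.5, n)   # plays the role of ln(I/GDP)
x3 = rng.normal(-2.7, 0.2, n)   # plays the role of ln(n + g + delta)
X = np.column_stack([np.ones(n), x2, x3])
y = X @ np.array([6.0, 1.4, -1.4]) + rng.normal(0.0, 0.7, n)
k = X.shape[1]

# Question 2: OLS, s^2, estimated Var(b) = s^2 (X'X)^{-1}, standard errors.
b, e, s2, XtX_inv = ols(X, y)
se = np.sqrt(np.diag(s2 * XtX_inv))

# Question 3: R^2 and adjusted R^2.
y_dev = y - y.mean()
R2 = 1.0 - (e @ e) / (y_dev @ y_dev)
R2_adj = 1.0 - (n - 1) / (n - k) * (1.0 - R2)

# Questions 4-5: beta_3 = -beta_2, i.e. R beta = q with R = (0, 1, 1), q = 0.
R = np.array([[0.0, 1.0, 1.0]])
q = np.array([0.0])
b_star = restricted_ls(b, XtX_inv, R, q)
c, e_star, _, _ = ols(np.column_stack([np.ones(n), x2 - x3]), y)  # substitution trick

# Questions 6-9: t-statistic (2), F-statistic (3), and the SSR form of F.
r = R[0]
t = (r @ b - q[0]) / np.sqrt(s2 * (r @ XtX_inv @ r))
F = f_stat(b, XtX_inv, s2, R, q)
F_ssr = ((e_star @ e_star - e @ e) / 1) / ((e @ e) / (n - k))

# Remark 7: F for "both slopes are zero" equals the R^2 form.
R_all = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
F_all = f_stat(b, XtX_inv, s2, R_all, np.zeros(2))

# Question 11: joint hypothesis (beta_2, beta_3) = (0.5, -0.5).
F_mrw = f_stat(b, XtX_inv, s2, R_all, np.array([0.5, -0.5]))

print("b =", b, "se =", se, "t^2 =", t**2, "F =", F, "F_ssr =", F_ssr)
```

The sketch uses `np.linalg.solve` instead of explicitly inverting $R(X'X)^{-1}R'$, which is the usual numerically safer choice. It reports standard errors rather than intervals: the exact 95% interval of question 2 would use $t_{.975}(n-k)$ in place of 1.96.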
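Lecture Note 5: Large Sample Theory I

28 Basic Asymptotic Theory: Convergence in Distribution

Definition 7 A sequence of distributions $F_n(t)$ converges in distribution to a distribution $F(t)$ if
$$\lim_{n\to\infty}F_n(t)=F(t)$$
at all points of continuity of $F(t)$. With some abuse of terminology, we say that a sequence of random vectors $z_n$, whose cdfs are $F_n(t)$, converges in distribution to a random variable $z$ with cdf $F(t)$ if $F_n(t)$ converges in distribution to $F(t)$. We will sometimes write $z_n\xrightarrow{d}z$ and call $F(t)$ the limiting distribution of $z_n$.

Theorem 34 A sequence of random variables $Y_n$ converges in probability to a constant $c$ if and only if it converges in distribution to a limiting distribution degenerate at $c$.

Proof. Suppose that $\operatorname{plim}_{n\to\infty}Y_n=c$. Let $F_n(\cdot)$ denote the c.d.f. of $Y_n$. It suffices to show that
$$\lim_nF_n(y)=\begin{cases}0&\text{if }y<c,\\1&\text{if }y>c.\end{cases}$$
Assume that $y<c$. Let $\varepsilon=c-y>0$. We then have
$$F_n(y)=\Pr[Y_n\le y]=\Pr[Y_n-c\le y-c]=\Pr[Y_n-c\le-\varepsilon]\le\Pr[|Y_n-c|\ge\varepsilon]\to0.$$
Now assume that $y>c$. Let $\varepsilon=y-c>0$. Then
$$1-F_n(y)=\Pr[Y_n>y]=\Pr[Y_n-c>y-c]=\Pr[Y_n-c>\varepsilon]\le\Pr[|Y_n-c|>\varepsilon]\to0,$$
so that $\lim_nF_n(y)=1$. Now suppose that $\lim_nF_n(y)=0$ if $y<c$ and $\lim_nF_n(y)=1$ if $y>c$. For any $\varepsilon>0$ we have
$$\Pr[|Y_n-c|>\varepsilon]\le\Pr[Y_n-c\le-\varepsilon]+\Pr\left[Y_n-c>\frac{\varepsilon}{2}\right]=F_n(c-\varepsilon)+1-F_n\left(c+\frac{\varepsilon}{2}\right)\to0,$$
proving the theorem.

Theorem 35 (Continuous Mapping Theorem) If $z_n\xrightarrow{d}z$ and if $g(\cdot)$ is a continuous function, then $g(z_n)\xrightarrow{d}g(z)$.

Theorem 36 (Transformation Theorem) If $a_n\xrightarrow{d}a$ and $\operatorname{plim}b_n=b$ with $b$ constant, then $a_n+b_n\xrightarrow{d}a+b$ and $a_nb_n\xrightarrow{d}ab$. If $b\neq0$, then $a_n/b_n\xrightarrow{d}a/b$.

Theorem 37 (Central Limit Theorem) Given an i.i.d. sequence $z_i$ of random vectors with $E[z_i]=\mu$ finite and $\operatorname{Var}(z_i)=\Sigma$ finite positive definite, we have
$$\sqrt n\left(\bar z-\mu\right)\xrightarrow{d}N(0,\Sigma).$$

Remark 8 Consider $n$ i.i.d. random variables with unknown mean $\mu$ and unknown variance $\sigma^2$. By the law of large numbers, we have
$$\frac1n\sum_iX_i^2\to E\left[X_i^2\right],\qquad\bar X\to E[X_i].$$
It follows that
$$\hat\sigma^2=\frac1n\sum_iX_i^2-\bar X^2\to\sigma^2.$$
With the Slutsky Theorem, we obtain
$$\frac{\sqrt n\left(\bar X-\mu\right)}{\hat\sigma}\xrightarrow{d}N(0,1).$$
In particular, we obtain
$$\Pr\left[-1.96<\frac{n^{1/2}\left(\bar X-\mu\right)}{\hat\sigma}<1.96\right]\to.95,$$
so that we can use
$$\left[\bar X-1.96\frac{\hat\sigma}{\sqrt n},\ \bar X+1.96\frac{\hat\sigma}{\sqrt n}\right]$$
as the asymptotic 95% confidence interval for $\mu$ even when $X_i$ does not necessarily have a normal distribution.

Theorem 38 If
$$z_{2,n}\xrightarrow{d}z\quad\text{and}\quad\operatorname{plim}_{n\to\infty}z_{1,n}=c,$$
then
$$z_{1,n}+z_{2,n}\xrightarrow{d}c+z,\qquad z_{1,n}z_{2,n}\xrightarrow{d}cz.$$

Theorem 39 (Delta Method) Suppose that
$$\sqrt n\left(z_n-c\right)\xrightarrow{d}N(0,\sigma^2).$$
Also suppose that $g(\cdot)$ is continuously differentiable at $c$. We then have
$$\sqrt n\left(g(z_n)-g(c)\right)\xrightarrow{d}N\left(0,g'(c)^2\sigma^2\right).$$

Sketch of Proof. We have
$$g(z_n)-g(c)=g'(\tilde c)\left(z_n-c\right)$$
for some $\tilde c$ between $z_n$ and $c$. Because $z_n\to c$ in probability, and because $g'(\cdot)$ is continuous, we have $g'(\tilde c)\to g'(c)$. Writing
$$\sqrt n\left(g(z_n)-g(c)\right)=g'(\tilde c)\sqrt n\left(z_n-c\right),$$
we obtain the desired conclusion.

Theorem 40 (Multivariate Delta Method) Suppose that
$$\sqrt n\left(z_n-c\right)\xrightarrow{d}N(0,\Sigma).$$
Also suppose that $g(\cdot)$ is continuously differentiable at $c$. We then have
$$\sqrt n\left(g(z_n)-g(c)\right)\xrightarrow{d}N\left(0,G\Sigma G'\right),\qquad\text{where }G\equiv\frac{\partial g(c)}{\partial z'}.$$

29 Classical Linear Regression Model III

29.1 Model

- $y_i=x_i'\beta+\varepsilon_i$
- $(x_i',\varepsilon_i)$, $i=1,2,\ldots$, is i.i.d.
- $x_i$ is independent of $\varepsilon_i$
- $E[\varepsilon_i]=0$, $\operatorname{Var}(\varepsilon_i)=\sigma^2$
- $E[x_ix_i']$ is positive definite. Furthermore, all of its elements are finite.

29.2 Some Auxiliary Lemmas

Lemma 19
$$b=\beta+\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nx_i\varepsilon_i\right).$$

Lemma 20
$$s^2=\frac{1}{n-k}\sum_{i=1}^n\varepsilon_i^2-\frac{2}{n-k}\left(\sum_{i=1}^n\varepsilon_ix_i'\right)(b-\beta)+(b-\beta)'\left(\frac{1}{n-k}\sum_{i=1}^nx_ix_i'\right)(b-\beta).$$

Lemma 21 $\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^nx_ix_i'=E[x_ix_i']$.

Lemma 22 $\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^nx_i\varepsilon_i=0$.

Lemma 23 $\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n\varepsilon_i^2=\sigma^2$.

Lemma 24
$$\sqrt n\,\frac1n\sum_{i=1}^nx_i\varepsilon_i\xrightarrow{d}N\left(0,\sigma^2E[x_ix_i']\right).$$
Proof. Let $z_i=x_i\varepsilon_i$. We have
$$E[z_i]=E[x_i\varepsilon_i]=E[x_i]E[\varepsilon_i]=0,\qquad\operatorname{Var}(z_i)=E\left[\varepsilon_i^2x_ix_i'\right]=E\left[\varepsilon_i^2\right]E[x_ix_i']=\sigma^2E[x_ix_i'].$$
Apply the central limit theorem.

Lemma 25 $\lim_{n\to\infty}t_{.975}(n)=1.96$.

30 Large Sample Property of OLS

Definition 8 An estimator $\hat\beta$ is consistent for $\beta$ if $\operatorname{plim}_{n\to\infty}\hat\beta=\beta$.

Theorem 41 $b$ is consistent for $\beta$.

Theorem 42 $\operatorname{plim}_{n\to\infty}s^2=\sigma^2$.

Theorem 43
$$\sqrt n\left(b-\beta\right)\xrightarrow{d}N\left(0,\sigma^2\left(E[x_ix_i']\right)^{-1}\right).$$
Proof. We have
$$\sqrt n\left(b-\beta\right)=\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}\frac{1}{\sqrt n}\sum_{i=1}^nx_i\varepsilon_i.$$
But we have
$$\operatorname{plim}_{n\to\infty}\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}=\left[E x_ix_i'\right]^{-1}\quad\text{and}\quad\frac{1}{\sqrt n}\sum_{i=1}^nx_i\varepsilon_i\xrightarrow{d}N\left(0,\sigma^2E x_ix_i'\right).$$

Theorem 44 Suppose that $g:\mathbb R^k\to\mathbb R$ is continuously differentiable at $\beta$, and let $\gamma(c)\equiv\partial g(c)/\partial c'$. Then,
$$\sqrt n\left(g(b)-g(\beta)\right)\xrightarrow{d}N\left(0,\sigma^2\gamma(\beta)\left(E[x_ix_i']\right)^{-1}\gamma(\beta)'\right).$$
Proof. Delta Method.

Theorem 45 With $\hat V\equiv s^2(X'X)^{-1}$, the interval $r'b\pm1.96\sqrt{r'\hat Vr}$ is a valid approximate 95% confidence interval for $r'\beta$.

Proof. We have
$$\sqrt n\left(r'b-r'\beta\right)\xrightarrow{d}N\left(0,\sigma^2r'\left[Ex_ix_i'\right]^{-1}r\right).$$
Now, notice that
$$\operatorname{plim}_{n\to\infty}\sqrt{r'\left[\frac1n\sum_{i=1}^nx_ix_i'\right]^{-1}r}=\sqrt{r'\left[Ex_ix_i'\right]^{-1}r}\quad\text{and}\quad\operatorname{plim}_{n\to\infty}\sqrt{s^2}=\sigma.$$
Thus, we have
$$\operatorname{plim}_{n\to\infty}s\sqrt{r'\left[\frac1n\sum_{i=1}^nx_ix_i'\right]^{-1}r}=\sigma\sqrt{r'\left[Ex_ix_i'\right]^{-1}r}.$$
It thus follows that
$$\frac{\sqrt n\left(r'b-r'\beta\right)}{s\sqrt{r'\left[\frac1n\sum_{i=1}^nx_ix_i'\right]^{-1}r}}=\frac{r'b-r'\beta}{\sqrt{s^2r'(X'X)^{-1}r}}\xrightarrow{d}N(0,1)$$
and
$$\lim_{n\to\infty}\Pr\left[r'b-1.96\sqrt{s^2r'(X'X)^{-1}r}\le r'\beta\le r'b+1.96\sqrt{s^2r'(X'X)^{-1}r}\right]=.95.$$

Theorem 46 Suppose that $g:\mathbb R^k\to\mathbb R$ is continuously differentiable at $\beta$. Then,
$$g(b)\pm1.96\sqrt{\gamma(b)\hat V\gamma(b)'}$$
is a valid approximate 95% confidence interval for $g(\beta)$.

Proof. We have
$$\sqrt n\left(g(b)-g(\beta)\right)\xrightarrow{d}N\left(0,\sigma^2\gamma(\beta)\left(E[x_ix_i']\right)^{-1}\gamma(\beta)'\right)\quad\text{and}\quad\operatorname{plim}_{n\to\infty}\sqrt{s^2}=\sigma.$$
Because $\gamma$ is continuous and $\operatorname{plim}b=\beta$, we have $\operatorname{plim}_{n\to\infty}\gamma(b)=\gamma(\beta)$, from which we obtain
$$\operatorname{plim}_{n\to\infty}\sqrt{\gamma(b)\left[\frac1n\sum_{i=1}^nx_ix_i'\right]^{-1}\gamma(b)'}=\sqrt{\gamma(\beta)\left(E[x_ix_i']\right)^{-1}\gamma(\beta)'}.$$
It thus follows that
$$\frac{\sqrt n\left(g(b)-g(\beta)\right)}{s\sqrt{\gamma(b)\left[\frac1n\sum_{i=1}^nx_ix_i'\right]^{-1}\gamma(b)'}}=\frac{g(b)-g(\beta)}{\sqrt{s^2\gamma(b)(X'X)^{-1}\gamma(b)'}}\xrightarrow{d}N(0,1)$$
and
$$\lim_{n\to\infty}\Pr\left[g(b)-1.96\sqrt{s^2\gamma(b)(X'X)^{-1}\gamma(b)'}\le g(\beta)\le g(b)+1.96\sqrt{s^2\gamma(b)(X'X)^{-1}\gamma(b)'}\right]=.95.$$

Theorem 47 $r'b\pm t_{.975}(n-k)\sqrt{r'\hat Vr}$ is a valid approximate 95% confidence interval for $r'\beta$.

31 Problem Set

Mankiw, Romer, and Weil (1992, QJE) considered the regression
$$y_i=x_{i1}\beta_1+x_{i2}\beta_2+x_{i3}\beta_3+\varepsilon_i$$
with the restriction $\beta_3=-\beta_2$, where
$$\begin{aligned}y_i&=\ln(\text{GDP per working-age person in 1985})\\x_{i1}&=1\\x_{i2}&=\ln(I/\text{GDP})\\x_{i3}&=\ln(\text{growth rate of the working-age population between 1960 and 1985}+g+\delta)\end{aligned}$$
In other words, they regressed $y_i$ on $x_{i1}$ and $x_{i2}-x_{i3}$. They noted that the coefficient of $x_{i2}-x_{i3}$ in this restricted regression is an estimator of $\alpha/(1-\alpha)$, where $\alpha$ is capital's share in income.

1.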
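In Table 1, Mankiw, Romer, and Weil (1992) report an estimator $\hat\alpha$ of $\alpha$ implied by the OLS coefficient of $x_{i2}-x_{i3}$. Confirm their findings with the data set provided for the three samples.

2. Mankiw, Romer, and Weil (1992) also report the standard deviation of $\hat\alpha$. Using the delta method, confirm their results.

Lecture Note 6: Large Sample Theory II

32 Linear Regression with Heteroscedasticity: Classical Linear Regression Model IV

32.1 Model

- $y_i=x_i'\beta+\varepsilon_i$
- $(x_i',\varepsilon_i)$, $i=1,2,\ldots$, is i.i.d.
- $E[\varepsilon_i\mid x_i]=0$
- $\operatorname{Var}(\varepsilon_i\mid x_i)\equiv\sigma^2(x_i)$, not known
- $E[x_ix_i']$ is positive definite.

We are going to consider the large sample property of
$$b=\beta+\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nx_i\varepsilon_i\right)$$
and the related inference.

32.2 Some Useful Results

Lemma 26
$$\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^nx_i\varepsilon_i=0.$$
Proof.
$$\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^nx_i\varepsilon_i=E[x_i\varepsilon_i]=E\left[E[x_i\varepsilon_i\mid x_i]\right]=E\left[x_iE[\varepsilon_i\mid x_i]\right]=E[x_i\cdot0]=0.$$

Lemma 27
$$\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n\varepsilon_i^2x_ix_i'=E\left[x_ix_i'\varepsilon_i^2\right].$$

Lemma 28
$$\sqrt n\,\frac1n\sum_{i=1}^nx_i\varepsilon_i\xrightarrow{d}N\left(0,E\left[\varepsilon_i^2x_ix_i'\right]\right).$$
Proof. Let $z_i\equiv x_i\varepsilon_i$. We have
$$E[z_i]=0,\qquad\operatorname{Var}(z_i)=E\left[\varepsilon_i^2x_ix_i'\right].$$
Apply the CLT.

Lemma 29 Under reasonable conditions, we have
$$\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^ne_i^2x_ix_i'=E\left[\varepsilon_i^2x_ix_i'\right].$$
Proof. For simplicity, I will assume that $x_i$ is a scalar. It suffices to prove that
$$\operatorname{plim}_{n\to\infty}\frac1n\sum_{i=1}^n\left(x_i^2e_i^2-x_i^2\varepsilon_i^2\right)=0.$$
We have $e_i=\varepsilon_i-x_i(b-\beta)$, so that
$$e_i^2=\varepsilon_i^2-2\varepsilon_ix_i(b-\beta)+x_i^2(b-\beta)^2$$
and
$$\left|e_i^2-\varepsilon_i^2\right|\le2|\varepsilon_i||x_i||b-\beta|+|x_i|^2(b-\beta)^2.$$
Thus,
$$\left|\frac1n\sum_{i=1}^nx_i^2e_i^2-\frac1n\sum_{i=1}^nx_i^2\varepsilon_i^2\right|\le\left(\frac2n\sum_{i=1}^n|\varepsilon_i||x_i|^3\right)|b-\beta|+\left(\frac1n\sum_{i=1}^n|x_i|^4\right)(b-\beta)^2\to0$$
if
$$E\left[|\varepsilon_i||x_i|^3\right]<\infty,\qquad E|x_i|^4<\infty.$$

32.3 Large Sample Property

Theorem 48
$$\operatorname{plim}_{n\to\infty}b=\beta.$$
Proof.
$$\operatorname{plim}_{n\to\infty}\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nx_i\varepsilon_i\right)=\left(E[x_ix_i']\right)^{-1}\cdot0=0.$$

Theorem 49
$$\sqrt n\left(b-\beta\right)\xrightarrow{d}N(0,\Omega),\qquad\text{where }\Omega\equiv\left[Ex_ix_i'\right]^{-1}E\left[\varepsilon_i^2x_ix_i'\right]\left[Ex_ix_i'\right]^{-1}.$$
Proof. We have
$$\sqrt n\left(b-\beta\right)=\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}\frac{1}{\sqrt n}\sum_{i=1}^nx_i\varepsilon_i.$$
But we have
$$\operatorname{plim}_{n\to\infty}\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}=\left[Ex_ix_i'\right]^{-1}\quad\text{and}\quad\frac{1}{\sqrt n}\sum_{i=1}^nx_i\varepsilon_i\xrightarrow{d}N\left(0,E\left[\varepsilon_i^2x_ix_i'\right]\right).$$

Theorem 50 Let
$$\hat\Omega_n=\left[\frac1n\sum_{i=1}^nx_ix_i'\right]^{-1}\left[\frac1n\sum_{i=1}^ne_i^2x_ix_i'\right]\left[\frac1n\sum_{i=1}^nx_ix_i'\right]^{-1}.$$
Then, $\operatorname{plim}_{n\to\infty}\hat\Omega_n=\Omega$.

Theorem 51 Let
$$\hat W\equiv\frac1n\hat\Omega_n=\left[\sum_{i=1}^nx_ix_i'\right]^{-1}\left[\sum_{i=1}^ne_i^2x_ix_i'\right]\left[\sum_{i=1}^nx_ix_i'\right]^{-1}.$$
We then have
$$\frac{r'b-r'\beta}{\sqrt{r'\hat Wr}}\xrightarrow{d}N(0,1).$$
Proof.
$$\frac{r'b-r'\beta}{\sqrt{r'\hat Wr}}=\frac{r'b-r'\beta}{\sqrt{r'\frac1n\hat\Omega_nr}}=\frac{\sqrt n\left(r'b-r'\beta\right)}{\sqrt{r'\hat\Omega_nr}}\xrightarrow{d}N(0,1).$$

Corollary 11 $r'b\pm1.96\sqrt{r'\hat Wr}$ is a valid approximate 95% confidence interval for $r'\beta$.

Remark 9 For many practical purposes, we may understand $\hat W$ as equal to $\operatorname{Var}(b)$.

33 Problem Set

Mankiw, Romer, and Weil (1992, QJE) considered the regression
$$y_i=x_{i1}\beta_1+x_{i2}\beta_2+x_{i3}\beta_3+\varepsilon_i$$
with the restriction $\beta_3=-\beta_2$, where
$$\begin{aligned}y_i&=\ln(\text{GDP per working-age person in 1985})\\x_{i1}&=1\\x_{i2}&=\ln(I/\text{GDP})\\x_{i3}&=\ln(\text{growth rate of the working-age population between 1960 and 1985}+g+\delta)\end{aligned}$$
In other words, they regressed $y_i$ on $x_{i1}$ and $x_{i2}-x_{i3}$. They noted that the coefficient of $x_{i2}-x_{i3}$ in this restricted regression is an estimator of $\alpha/(1-\alpha)$, where $\alpha$ is capital's share in income.

1. Using White's formula, construct 95% confidence intervals for the coefficients of $x_{i1}$ and $x_{i2}-x_{i3}$.

2. In Table 1, Mankiw, Romer, and Weil (1992) report an estimator $\hat\alpha$ of $\alpha$ implied by the OLS coefficient of $x_{i2}-x_{i3}$. Confirm their findings with the data set provided for the three samples.

3. Mankiw, Romer, and Weil (1992) also report the standard deviation of $\hat\alpha$. Combining White's formula with the delta method, construct a 95% confidence interval for $\alpha$.

Lecture Note 7: IV

34 Omitted Variable Bias

Suppose that
$$y_i=x_i\beta+w_i\gamma+\varepsilon_i.$$
We do not observe $w_i$. Our object of interest is $\beta$.

34.1 Bias of OLS

What will happen if we regress $y_i$ on $x_i$ alone? Using $E[x_i\varepsilon_i]=0$,
$$\hat\beta=\frac{\sum_ix_iy_i}{\sum_ix_i^2}=\beta+\frac{\sum_ix_iw_i}{\sum_ix_i^2}\gamma+\frac{\sum_ix_i\varepsilon_i}{\sum_ix_i^2}=\beta+\frac{\frac1n\sum_ix_iw_i}{\frac1n\sum_ix_i^2}\gamma+\frac{\frac1n\sum_ix_i\varepsilon_i}{\frac1n\sum_ix_i^2}\to\beta+\frac{E[x_iw_i]}{E[x_i^2]}\gamma.$$
Letting
$$\delta\equiv\frac{E[x_iw_i]}{E[x_i^2]},$$
we have
$$\operatorname{plim}\hat\beta=\beta+\delta\gamma.$$
Because
$$\delta=\operatorname{plim}\frac{\sum_ix_iw_i}{\sum_ix_i^2},$$
we can interpret $\delta$ as the probability limit of the OLS estimator when $w_i$ is regressed on $x_i$. Unless $w_i$ and $x_i$ are uncorrelated (or $\gamma=0$), $\delta\gamma$ will be nonzero, and OLS will be biased and inconsistent.

34.2 IV Estimation

Suppose that we also observe $z_i$ such that
$$E[z_iw_i]=0,\qquad E[z_i\varepsilon_i]=0,\qquad E[z_ix_i]\neq0.$$
Note that we can regard $u_i\equiv w_i\gamma+\varepsilon_i$ as the "error term" in the regression of $y_i$ on $x_i$. Also note that
$$E[z_iu_i]=E[z_iw_i]\gamma+E[z_i\varepsilon_i]=0.$$
Consider
$$b_{IV}\equiv\frac{\sum_iz_iy_i}{\sum_iz_ix_i},$$
which is obtained by replacing $x_i$ by $z_i$ in the numerator and the denominator of the OLS formula. Observe that
$$b_{IV}=\frac{\sum_iz_i\left(x_i\beta+u_i\right)}{\sum_iz_ix_i}=\beta+\frac{\sum_iz_iu_i}{\sum_iz_ix_i}\to\beta+\frac{E[z_iu_i]}{E[z_ix_i]}=\beta.$$
We thus have
$$\operatorname{plim}b_{IV}=\beta.$$

35 Problem Set

Suppose that
$$y_i=x_i'\beta+w_i'\gamma+\varepsilon_i.$$
We do not observe $w_i$. Our object of interest is $\beta$. We assume that
$$E[x_i\varepsilon_i]=0,\qquad E[w_i\varepsilon_i]=0.$$
What is the probability limit of the OLS estimator of $\beta$ if we regress $y_i$ on $x_i$ alone? Hint:
$$b_{OLS}=\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nx_iy_i\right)=\beta+\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nx_iw_i'\right)\gamma+\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nx_i\varepsilon_i\right).$$
When is the OLS estimator consistent for $\beta$?

36 Errors in Variables

For simplicity, we assume that every random variable is a zero-mean scalar random variable. Suppose that
$$y_i=x_i^*\beta+\varepsilon_i.$$
We do not observe $x_i^*$. Instead, we observe a proxy
$$x_i=x_i^*+u_i.$$

36.1 Bias of OLS

What would happen if we regress $y_i$ on $x_i$?

Condition 1 $E[\varepsilon_iu_i]=0$, $E[\varepsilon_ix_i^*]=0$, $E[u_ix_i^*]=0$.

Observe that
$$y_i=x_i\beta+\left(\varepsilon_i-u_i\beta\right).$$
We thus have
$$\hat\beta=\frac{\sum_ix_iy_i}{\sum_ix_i^2}=\beta+\frac{\sum_ix_i\left(\varepsilon_i-u_i\beta\right)}{\sum_ix_i^2}\to\beta+\frac{E\left[x_i\left(\varepsilon_i-u_i\beta\right)\right]}{E\left[x_i^2\right]}.$$
But
$$E\left[x_i^2\right]=E\left[x_i^{*2}\right]+E\left[u_i^2\right]\quad\text{and}\quad E\left[x_i\left(\varepsilon_i-u_i\beta\right)\right]=E\left[\left(x_i^*+u_i\right)\left(\varepsilon_i-u_i\beta\right)\right]=-E\left[u_i^2\right]\beta.$$
We thus have
$$\operatorname{plim}\hat\beta=\beta-\frac{E\left[u_i^2\right]}{E\left[x_i^{*2}\right]+E\left[u_i^2\right]}\beta=\frac{E\left[x_i^{*2}\right]}{E\left[x_i^{*2}\right]+E\left[u_i^2\right]}\beta,$$
a bias toward zero.

36.2 IV Estimation

Suppose that we also observe
$$z_i=x_i^*+v_i,$$
where

Condition 2 $v_i$ is independent of $x_i^*$, $\varepsilon_i$, $u_i$.

Consider
$$b_{IV}\equiv\frac{\sum_iz_iy_i}{\sum_iz_ix_i},$$
which is obtained by replacing $x_i$ by $z_i$ in the numerator and the denominator of the OLS formula.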
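A small simulation makes the attenuation result of Section 36.1 concrete and previews how the IV estimator just defined behaves. It assumes, purely for illustration, that $x_i^*$, $\varepsilon_i$, $u_i$ and $v_i$ are independent zero-mean normals with the variances chosen below. With $E[x_i^{*2}]=1$ and $E[u_i^2]=0.25$, the formula above gives $\operatorname{plim}\hat\beta=\beta/1.25=0.8\beta$, while $b_{IV}$ should settle near $\beta$. The function name `simulate_eiv` and all parameter values are hypothetical.

```python
import random


def simulate_eiv(beta=2.0, var_xstar=1.0, var_u=0.25, var_v=0.5, n=100_000, seed=1):
    """Return (OLS slope of y on x, IV slope using z); no intercepts since all means are zero."""
    rng = random.Random(seed)
    sxy = sxx = szy = szx = 0.0
    for _ in range(n):
        xstar = rng.gauss(0.0, var_xstar ** 0.5)  # true regressor x_i^*
        eps = rng.gauss(0.0, 1.0)                 # regression error epsilon_i
        u = rng.gauss(0.0, var_u ** 0.5)          # measurement error in the proxy x_i
        v = rng.gauss(0.0, var_v ** 0.5)          # measurement error in the instrument z_i
        y = xstar * beta + eps
        x = xstar + u                             # observed proxy
        z = xstar + v                             # second, independent proxy used as instrument
        sxy += x * y
        sxx += x * x
        szy += z * y
        szx += z * x
    return sxy / sxx, szy / szx


beta = 2.0
b_ols, b_iv = simulate_eiv(beta=beta)
plim_ols = beta * 1.0 / (1.0 + 0.25)  # E[x*^2] / (E[x*^2] + E[u^2]) times beta
print(f"OLS {b_ols:.3f} (theory {plim_ols:.3f}), IV {b_iv:.3f} (theory {beta:.3f})")
```

Raising `var_u` pushes the OLS slope further toward zero. The IV slope stays centred on $\beta$ because $v_i$ is unrelated to $\varepsilon_i-u_i\beta$.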
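Observe that
$$b_{IV}=\frac{\sum_iz_i\left[x_i\beta+\left(\varepsilon_i-u_i\beta\right)\right]}{\sum_iz_ix_i}=\beta+\frac{\sum_iz_i\left(\varepsilon_i-u_i\beta\right)}{\sum_iz_ix_i}\to\beta+\frac{E\left[z_i\left(\varepsilon_i-u_i\beta\right)\right]}{E[z_ix_i]}.$$
But,
$$E\left[z_i\left(\varepsilon_i-u_i\beta\right)\right]=E\left[\left(x_i^*+v_i\right)\left(\varepsilon_i-u_i\beta\right)\right]=0.$$
We thus have
$$\operatorname{plim}b_{IV}=\beta.$$

37 Simultaneous Equation: Identification

37.1 A Supply-Demand Model

$$\begin{aligned}q^d&=\alpha_1p+\alpha_2y+\alpha_3+\varepsilon^d&&\text{(Demand)}\\q^s&=\beta_1p+\beta_2x+\beta_3+\varepsilon^s&&\text{(Supply)}\\q&=q^d=q^s&&\text{(Equilibrium)}\end{aligned}$$
In matrix form,
$$\begin{bmatrix}q&p\end{bmatrix}\begin{bmatrix}1&1\\-\alpha_1&-\beta_1\end{bmatrix}=\begin{bmatrix}y&x&1\end{bmatrix}\begin{bmatrix}\alpha_2&0\\0&\beta_2\\\alpha_3&\beta_3\end{bmatrix}+\begin{bmatrix}\varepsilon^d&\varepsilon^s\end{bmatrix},$$
so that, solving for the equilibrium,
$$p=\frac{\beta_2x-\alpha_2y+\beta_3-\alpha_3+\varepsilon^s-\varepsilon^d}{\alpha_1-\beta_1},\qquad q=\frac{\alpha_1\left(\beta_2x+\beta_3+\varepsilon^s\right)-\beta_1\left(\alpha_2y+\alpha_3+\varepsilon^d\right)}{\alpha_1-\beta_1}.$$
Important observation: both $p$ and $q$ in equilibrium will be correlated with $\varepsilon^d$ and $\varepsilon^s$.

What happens in the "demand" regression of $q$ on $p$ and $y$? Ignoring the intercept for simplicity (i.e., treating all variables as deviations from their means), the probability limit will equal
$$\begin{bmatrix}E[p^2]&E[py]\\E[py]&E[y^2]\end{bmatrix}^{-1}\begin{bmatrix}E[pq]\\E[yq]\end{bmatrix}=\begin{bmatrix}\alpha_1\\\alpha_2\end{bmatrix}+\begin{bmatrix}E[p^2]&E[py]\\E[py]&E[y^2]\end{bmatrix}^{-1}\begin{bmatrix}E[p\varepsilon^d]\\E[y\varepsilon^d]\end{bmatrix}.$$
But, with $x$ and $y$ uncorrelated with the errors,
$$E\left[p\varepsilon^d\right]=\frac{E\left[\left(\varepsilon^s-\varepsilon^d\right)\varepsilon^d\right]}{\alpha_1-\beta_1}\neq0$$
in general!

37.2 General Notation

An individual observation consists of $(w_i',z_i')$. Here, $w_i$ denotes the vector of endogenous variables, and $z_i$ denotes the vector of exogenous variables. We assume that there is a linear relationship:
$$w'\Gamma=z'B+\varepsilon',$$
where $\varepsilon$ denotes the vector of "errors". Our assumption is that $z$ is uncorrelated with $\varepsilon$:
$$E[z\varepsilon']=0.$$

37.3 Identification

Suppose that we know the exact population joint distribution of $(w',z')$. Can we compute $\Gamma$ and $B$ from this distribution? In many cases, we are not interested in the estimation of the whole system. Rather, we are interested in the estimation of just one equation. Assume without loss of generality that it is the first equation. Write
$$w_1=w_*'\gamma+z_*'\delta+\varepsilon_1.$$
Here, I assume that
$$w=\begin{bmatrix}w_1\\w_*\\w^0\end{bmatrix},\qquad z=\begin{bmatrix}z_*\\z^0\end{bmatrix}.$$
Our restriction that $E[z\varepsilon']=0$ implies that
$$E[zw_1]=E[zw_*']\gamma+E[zz_*']\delta=\left[E[zw_*'],\ E[zz_*']\right]\begin{bmatrix}\gamma\\\delta\end{bmatrix}.$$
A necessary condition for the identification of $\gamma$ and $\delta$ from this system of linear equations is that the dimension of $z$ is at least that of $(\gamma',\delta')'$:
$$\dim(z)\ge\dim(\gamma)+\dim(\delta)=\dim(w_*)+\dim(z_*).$$
But $\dim(z)=\dim(z_*)+\dim(z^0)$. We thus have
$$\dim(z^0)\ge\dim(w_*).$$

37.4 General Identification

We have
$$y=x'\beta+u,$$
where the only restriction given to us is that
$$E[zu]=0.$$
Identification:
$$E[zy]=E[zx']\beta.$$
A necessary condition for identification is
$$\dim(z)\ge\dim(\beta).$$
Remark 10 You would like to make sure that the rank of the matrix $E[zx']$ is equal to $\dim(\beta)$ as well, but this is not very important at the first-year level. It will become important later.

37.5 Estimation with 'Exact' Identification

When $\dim(z)=\dim(\beta)$, we say that the model is exactly identified. The matrix $E[zx']$ is then square, and
$$\beta=\left(E[zx']\right)^{-1}E[zy].$$
By exploiting the law of large numbers, we can construct a consistent estimator of $\beta$:
$$b_{IV}=\left(\frac1n\sum_{i=1}^nz_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nz_iy_i\right)=\left(\sum_{i=1}^nz_ix_i'\right)^{-1}\left(\sum_{i=1}^nz_iy_i\right)\to\beta.$$
The IV estimator is usually written in matrix notation:
$$b_{IV}=(Z'X)^{-1}Z'y.$$

38 Asymptotic Distribution of IV Estimator

Theorem 52 Suppose that $z_i$ is independent of $\varepsilon_i$. Then
$$\sqrt n\left(b_{IV}-\beta\right)\xrightarrow{d}N\left(0,\sigma_\varepsilon^2\left(E[z_ix_i']\right)^{-1}E[z_iz_i']\left(E[x_iz_i']\right)^{-1}\right),$$
where $\sigma_\varepsilon^2=\operatorname{Var}(\varepsilon_i)$.

Proof. Problem Set.

39 Problem Set

All questions here are taken from Greene. The $y$s denote endogenous variables, and the $x$s denote exogenous variables.

1. Consider the following two-equation model:
$$\begin{aligned}y_1&=\gamma_1y_2+\beta_{11}x_1+\beta_{21}x_2+\beta_{31}x_3+\varepsilon_1\\y_2&=\gamma_2y_1+\beta_{12}x_1+\beta_{22}x_2+\beta_{32}x_3+\varepsilon_2\end{aligned}$$
(a) Verify that, as stated, neither equation is identified.
(b) Establish whether or not the following restrictions are sufficient to identify (or partially identify) the model:
i. $\beta_{21}=\beta_{32}=0$
ii. $\beta_{12}=\beta_{22}=0$
iii. $\gamma_1=0$
iv. $\gamma_2=\gamma_1$ and $\beta_{32}=0$
v. $\beta_{21}+\beta_{22}=1$

2.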
Examine the identifiability of the following supply and demand model:
$$\begin{aligned}\ln Q&=\alpha_0+\alpha_1\ln P+\alpha_2\ln(\text{income})+\varepsilon_1&&\text{(Demand)}\\\ln Q&=\beta_0+\beta_1\ln P+\beta_2\ln(\text{input cost})+\varepsilon_2&&\text{(Supply)}\end{aligned}$$

3. Consider a linear model
$$y_i=x_i'\underset{m\times1}{\beta}+\varepsilon_i$$
with the restriction that
$$\underset{m\times1}{E[z_i\varepsilon_i]}=0.$$
Derive the asymptotic distribution of
$$b_{IV}=\left(\frac1n\sum_{i=1}^nz_ix_i'\right)^{-1}\frac1n\sum_{i=1}^nz_iy_i=(Z'X)^{-1}Z'y$$
under the assumption that $E\left[(z_i\varepsilon_i)(z_i\varepsilon_i)'\right]=\sigma_\varepsilon^2E[z_iz_i']$.
(a) Show that
$$\sqrt n\left(b_{IV}-\beta\right)=\left(\frac1n\sum_{i=1}^nz_ix_i'\right)^{-1}\frac{1}{\sqrt n}\sum_iz_i\varepsilon_i.$$
(b) Show that $\left(\frac1n\sum_{i=1}^nz_ix_i'\right)^{-1}$ converges to $\left(E[z_ix_i']\right)^{-1}$ in probability.
(c) Show that $\frac{1}{\sqrt n}\sum_iz_i\varepsilon_i$ converges in distribution to $N\left(0,\sigma_\varepsilon^2E[z_iz_i']\right)$.
(d) Conclude that $\sqrt n\left(b_{IV}-\beta\right)$ converges in distribution to
$$N\left(0,\sigma_\varepsilon^2\left(E[z_ix_i']\right)^{-1}E[z_iz_i']\left(E[x_iz_i']\right)^{-1}\right).$$

Lecture Note 8: MLE

40 MLE

We have a collection of i.i.d. random vectors $Z_i$, $i=1,\ldots,n$, such that
$$Z_i\sim f(z;\theta).$$
Here, $f(z;\theta)$ denotes the (common) pdf of $Z_i$. Our objective is to estimate $\theta$.

Definition 9 (MLE) Assume that $Z_1,\ldots,Z_n$ are i.i.d. with p.d.f. $f(z_i;\theta_0)$. The MLE $\hat\theta$ maximizes the likelihood:
$$\hat\theta=\operatorname*{argmax}_c\prod_{i=1}^nf(Z_i;c)=\operatorname*{argmax}_c\sum_{i=1}^n\log f(Z_i;c).$$

40.1 Consistency

Theorem 53 (Consistency) Assume that $Z_1,\ldots,Z_n$ are i.i.d. with p.d.f. $f(z_i;\theta_0)$. Then, $\operatorname{plim}_{n\to\infty}\hat\theta=\theta_0$ under some suitable regularity conditions.

Below we provide an elementary proof of consistency. Write $h(Z_i;c)=\log f(Z_i;c)$ for simplicity of notation. We assume the following:

Condition 3 There is a unique $\theta_0\in\Theta$ such that
$$\max_{c\in\Theta}E[h(Z_i;c)]=E[h(Z_i;\theta_0)].$$

Remark 11 Because $\log$ is a concave function, we can use Jensen's Inequality and conclude that
$$E[\log f(Z_i;c)]-E[\log f(Z_i;\theta_0)]=E\left[\log\frac{f(Z_i;c)}{f(Z_i;\theta_0)}\right]\le\log E\left[\frac{f(Z_i;c)}{f(Z_i;\theta_0)}\right].$$
But
$$E\left[\frac{f(Z_i;c)}{f(Z_i;\theta_0)}\right]=\int\frac{f(z;c)}{f(z;\theta_0)}f(z;\theta_0)\,dz=\int f(z;c)\,dz=1$$
and hence
$$E[\log f(Z_i;c)]-E[\log f(Z_i;\theta_0)]\le\log(1)=0$$
for all $c$. In other words, $E[\log f(Z_i;c)]\le E[\log f(Z_i;\theta_0)]$ for all $c$.

Condition 4 Define $B(\delta)\equiv\{\theta\in\Theta:|\theta-\theta_0|\ge\delta\}$.
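For each $\delta>0$,
$$\max_{c\in B(\delta)}E[h(Z_i;c)]<E[h(Z_i;\theta_0)].$$

Condition 5 $\max_{c\in\Theta}\left|\frac1n\sum_{i=1}^nh(Z_i;c)-E[h(Z_i;c)]\right|\to0$ almost surely.

Sketch of Proof. Fix $\delta>0$. Let
$$\bar\ell=E[h(Z_i;\theta_0)],\qquad\ell^*=\max_{c\in B(\delta)}E[h(Z_i;c)],$$
and note that $\ell^*<\bar\ell$. But
$$\max_{c\in B(\delta)}\frac1n\sum_{i=1}^nh(Z_i;c)\to\max_{c\in B(\delta)}E[h(Z_i;c)]=\ell^*,\qquad\max_{c\in\Theta}\frac1n\sum_{i=1}^nh(Z_i;c)\to\max_{c\in\Theta}E[h(Z_i;c)]=\bar\ell$$
almost surely. It follows that $\hat\theta\notin B(\delta)$ for $n$ sufficiently large. Thus, $|\hat\theta-\theta_0|<\delta$ for all $n$ sufficiently large, almost surely. Since the above statement holds for every $\delta>0$, we have $\hat\theta\to\theta_0$ almost surely.

40.2 Fisher Information with One Observation

Remark 12 Without loss of generality, we omit the $i$ subscript in this section.

Assumption $Z\sim f(z;\theta)$, $\theta\in\Theta$.

Definition 10 (Score) $s(z;\theta)\equiv\partial\log f(z;\theta)/\partial\theta$.

Lemma 30 $E[s(Z;\theta)]=0$.

Proof. Because
$$1=\int f(z;\theta)\,dz,$$
we have
$$0=\int\frac{\partial f(z;\theta)}{\partial\theta}dz=\int\frac{\partial f(z;\theta)/\partial\theta}{f(z;\theta)}f(z;\theta)\,dz=\int s(z;\theta)f(z;\theta)\,dz.$$

Definition 11 (Fisher Information)
$$I(\theta)=\int s(z;\theta)s(z;\theta)'f(z;\theta)\,dz=E\left[s(Z;\theta)s(Z;\theta)'\right].$$

Theorem 54
$$I(\theta)=-\int\frac{\partial^2\log f(z;\theta)}{\partial\theta\partial\theta'}f(z;\theta)\,dz=-E\left[\frac{\partial^2\log f(Z;\theta)}{\partial\theta\partial\theta'}\right].$$

Proof. Because
$$0=\int s(z;\theta)f(z;\theta)\,dz,$$
we have
$$\begin{aligned}0&=\int\frac{\partial s(z;\theta)}{\partial\theta'}f(z;\theta)\,dz+\int s(z;\theta)\frac{\partial f(z;\theta)}{\partial\theta'}dz\\&=\int\frac{\partial^2\log f(z;\theta)}{\partial\theta\partial\theta'}f(z;\theta)\,dz+\int s(z;\theta)\frac{\partial f(z;\theta)/\partial\theta'}{f(z;\theta)}f(z;\theta)\,dz\\&=\int\frac{\partial^2\log f(z;\theta)}{\partial\theta\partial\theta'}f(z;\theta)\,dz+\int s(z;\theta)s(z;\theta)'f(z;\theta)\,dz.\end{aligned}$$

Example 2 Suppose that $X\sim N(\mu,\sigma^2)$. Assume that $\sigma^2$ is known. The Fisher information $I(\mu)$ can be calculated in the following way. Notice that
$$f(x;\mu)=\frac{1}{\sqrt{2\pi\sigma^2}}\exp\left[-\frac{(x-\mu)^2}{2\sigma^2}\right]$$
so that
$$\log f(x;\mu)=C-\frac{(x-\mu)^2}{2\sigma^2},$$
where $C$ denotes the part of $\log f$ which does not depend on $\mu$. Because
$$s(x;\mu)=\frac{x-\mu}{\sigma^2},$$
we have
$$I(\mu)=E\left[s(X;\mu)^2\right]=\frac{1}{\sigma^4}E\left[(X-\mu)^2\right]=\frac{1}{\sigma^2}.$$

Remark 13 In the multivariate case where $\theta=(\theta_1,\ldots,\theta_K)'$, we let
$$s(x;\theta)=\frac{\partial\log f(x;\theta)}{\partial\theta}=\begin{bmatrix}\partial\log f(x;\theta)/\partial\theta_1\\\vdots\\\partial\log f(x;\theta)/\partial\theta_K\end{bmatrix}$$
and
$$I(\theta)=E\left[s(X;\theta)s(X;\theta)'\right]=-E\left[\frac{\partial^2\log f(X;\theta)}{\partial\theta\partial\theta'}\right]=-\begin{bmatrix}E\left[\frac{\partial^2\log f(X;\theta)}{\partial\theta_1\partial\theta_1}\right]&\cdots&E\left[\frac{\partial^2\log f(X;\theta)}{\partial\theta_1\partial\theta_K}\right]\\\vdots&\ddots&\vdots\\E\left[\frac{\partial^2\log f(X;\theta)}{\partial\theta_K\partial\theta_1}\right]&\cdots&E\left[\frac{\partial^2\log f(X;\theta)}{\partial\theta_K\partial\theta_K}\right]\end{bmatrix}.$$

Example 3 Suppose that $X$ is from $N(\theta_1,\theta_2)$. Then,
$$\log f(x;\theta_1,\theta_2)=-\frac12\log(2\pi\theta_2)-\frac{(x-\theta_1)^2}{2\theta_2},$$
so that
$$s(x;\theta_1,\theta_2)=\begin{bmatrix}\partial\log f/\partial\theta_1\\\partial\log f/\partial\theta_2\end{bmatrix}=\begin{bmatrix}\dfrac{x-\theta_1}{\theta_2}\\-\dfrac{1}{2\theta_2}+\dfrac{(x-\theta_1)^2}{2\theta_2^2}\end{bmatrix},$$
from which we obtain
$$E[ss']=\begin{bmatrix}\dfrac{1}{\theta_2}&0\\0&\dfrac{1}{2\theta_2^2}\end{bmatrix}.$$
In this calculation, I used the fact that $E[Z^{2m}]=(2m)!/(2^mm!)$ and $E[Z^{2m-1}]=0$ if $Z\sim N(0,1)$.

40.3 Random Sample

Assumption $Z_1,\ldots,Z_n$ are i.i.d. random vectors $\sim f(z;\theta)$.

Proposition 1 Let $I_n(\theta)$ denote the Fisher Information in $Z_1,\ldots,Z_n$. Then,
$$I_n(\theta)=n\,I(\theta),$$
where $I(\theta)$ is the Fisher Information in $Z_i$.

40.4 Limiting Distribution of MLE

Theorem 55 (Asymptotic Normality of MLE)
$$\sqrt n\left(\hat\theta-\theta\right)\xrightarrow{d}N\left(0,I^{-1}(\theta)\right).$$

Sketch of Proof. We will assume that the MLE is consistent. By the FOC, we have
$$0=\sum_{i=1}^n\frac{\partial\log f(Z_i;\hat\theta)}{\partial\theta}=\sum_{i=1}^n\frac{\partial\log f(Z_i;\theta)}{\partial\theta}+\left(\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\tilde\theta)}{\partial\theta\partial\theta'}\right)\left(\hat\theta-\theta\right),$$
where the second equality is justified by the mean value theorem. Here, $\tilde\theta$ is on the line segment adjoining $\hat\theta$ and $\theta$. It follows that
$$\sqrt n\left(\hat\theta-\theta\right)=\left(-\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\tilde\theta)}{\partial\theta\partial\theta'}\right)^{-1}\left(\frac{1}{\sqrt n}\sum_{i=1}^n\frac{\partial\log f(Z_i;\theta)}{\partial\theta}\right).$$
It can be shown that, under some regularity conditions,
$$\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\tilde\theta)}{\partial\theta\partial\theta'}-\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\theta)}{\partial\theta\partial\theta'}\to0$$
in probability. Because
$$\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\theta)}{\partial\theta\partial\theta'}\to E\left[\frac{\partial^2\log f(Z_i;\theta)}{\partial\theta\partial\theta'}\right]$$
in probability, we conclude that
$$\operatorname{plim}_{n\to\infty}-\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\tilde\theta)}{\partial\theta\partial\theta'}=-E\left[\frac{\partial^2\log f(Z_i;\theta)}{\partial\theta\partial\theta'}\right]=I(\theta).\tag{4}$$
We also note that
$$\frac{1}{\sqrt n}\sum_{i=1}^n\frac{\partial\log f(Z_i;\theta)}{\partial\theta}\xrightarrow{d}N\left(0,I(\theta)\right)\tag{5}$$
by the central limit theorem. Combining (4) and (5) with the Slutsky Theorem, we obtain the desired conclusion.

Remark 14 How do we prove
$$\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\tilde\theta)}{\partial\theta\partial\theta'}-\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\theta)}{\partial\theta\partial\theta'}\xrightarrow{p}0\,?$$
Here's one way. Assume that $\theta$ is a scalar, so what we need to prove is
$$\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\tilde\theta)}{\partial\theta^2}-\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\theta)}{\partial\theta^2}\xrightarrow{p}0.$$
Note that we have, by the mean value theorem,
$$\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\tilde\theta)}{\partial\theta^2}-\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\theta)}{\partial\theta^2}=\left(\frac1n\sum_{i=1}^n\frac{\partial^3\log f(Z_i;\tilde{\tilde\theta})}{\partial\theta^3}\right)\left(\tilde\theta-\theta\right)$$
for some $\tilde{\tilde\theta}$ in between $\tilde\theta$ and $\theta$. Now assume that
$$\sup_\theta\left|\frac{\partial^3\log f(Z_i;\theta)}{\partial\theta^3}\right|\le M(Z_i)$$
and that $E[M(Z_i)]<\infty$. Then we have
$$\left|\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\tilde\theta)}{\partial\theta^2}-\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\theta)}{\partial\theta^2}\right|\le\left(\frac1n\sum_{i=1}^nM(Z_i)\right)\left|\tilde\theta-\theta\right|\le\left(\frac1n\sum_{i=1}^nM(Z_i)\right)\left|\hat\theta-\theta\right|,$$
where the second inequality uses the fact that $\tilde\theta$ is on the line segment adjoining $\hat\theta$ and $\theta$, so the distance between $\tilde\theta$ and $\theta$ is no larger than that between $\hat\theta$ and $\theta$. By the law of large numbers, we have
$$\frac1n\sum_{i=1}^nM(Z_i)\xrightarrow{p}E[M(Z_i)].$$
By consistency, we also have $\hat\theta-\theta\xrightarrow{p}0$. The conclusion then follows by the Slutsky Theorem.

Theorem 56 (Estimation of Asymptotic Variance 1)
$$\operatorname{plim}_{n\to\infty}\hat V_1\equiv\operatorname{plim}_{n\to\infty}\left(\frac1n\sum_{i=1}^n\frac{\partial\log f(Z_i;\hat\theta)}{\partial\theta}\frac{\partial\log f(Z_i;\hat\theta)}{\partial\theta'}\right)^{-1}=I^{-1}(\theta).$$

Theorem 57 (Estimation of Asymptotic Variance 2)
$$\operatorname{plim}_{n\to\infty}\hat V_2\equiv\operatorname{plim}_{n\to\infty}\left(-\frac1n\sum_{i=1}^n\frac{\partial^2\log f(Z_i;\hat\theta)}{\partial\theta\partial\theta'}\right)^{-1}=I^{-1}(\theta).$$

Theorem 58 (Approximate 95% Confidence Interval) For simplicity, assume that $\dim(\theta)=1$. We have
$$\lim_{n\to\infty}\Pr\left[\hat\theta-1.96\frac{\sqrt{\hat V_1}}{\sqrt n}\le\theta\le\hat\theta+1.96\frac{\sqrt{\hat V_1}}{\sqrt n}\right]=.95,$$
$$\lim_{n\to\infty}\Pr\left[\hat\theta-1.96\frac{\sqrt{\hat V_2}}{\sqrt n}\le\theta\le\hat\theta+1.96\frac{\sqrt{\hat V_2}}{\sqrt n}\right]=.95.$$

Remark 15 An approximate 95% confidence interval may therefore be constructed as
$$\hat\theta\pm1.96\frac{\sqrt{\hat V_1}}{\sqrt n}\quad\text{or}\quad\hat\theta\pm1.96\frac{\sqrt{\hat V_2}}{\sqrt n}.$$
Many software packages report $\hat V_1/n$ or $\hat V_2/n$ and call it the (estimated) variance. Therefore, you do not need to make any adjustment for $\sqrt n$ with such output.

41 Latent Utility

For simplicity of notation, assume that $x_i'$ is nonstochastic. We have
$$\begin{aligned}U_{1i}&=x_i'\beta_1+u_{1i}&&\text{(Choice 1)}\\U_{0i}&=x_i'\beta_0+u_{0i}&&\text{(Choice 0)}\end{aligned}$$
Choice 1 is made if and only if
$$U_i^*\equiv U_{1i}-U_{0i}=x_i'(\beta_1-\beta_0)+(u_{1i}-u_{0i})\ge0,$$
or, with $\beta\equiv\beta_1-\beta_0$ and $\varepsilon_i\equiv u_{0i}-u_{1i}$,
$$x_i'\beta-\varepsilon_i\ge0.$$
Otherwise, choice 0 is made.

Example 4 Suppose $(u_1,u_0)$ has a bivariate normal distribution. Then, $\varepsilon_i$ has a normal distribution.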
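To see Example 4 at work, the sketch below simulates the latent-utility choice rule. It uses hypothetical values for $x_i$, $\beta_1$, $\beta_0$ and assumes $u_{1i}$ and $u_{0i}$ are i.i.d. standard normal, which is only one instance of the bivariate normal case. Then $\varepsilon_i=u_{0i}-u_{1i}\sim N(0,2)$, so the share of draws in which choice 1 is made should be close to $\Phi\left(x_i'(\beta_1-\beta_0)/\sqrt2\right)$. The function name `choice_share` is hypothetical.

```python
import random
from statistics import NormalDist


def choice_share(x, beta1, beta0, n_draws=200_000, seed=0):
    """Fraction of simulated draws in which U_1 - U_0 >= 0, i.e. choice 1 is made."""
    rng = random.Random(seed)
    v1 = sum(a * b for a, b in zip(x, beta1))  # x' beta_1
    v0 = sum(a * b for a, b in zip(x, beta0))  # x' beta_0
    chosen = 0
    for _ in range(n_draws):
        u1 = rng.gauss(0.0, 1.0)
        u0 = rng.gauss(0.0, 1.0)
        if (v1 + u1) - (v0 + u0) >= 0.0:
            chosen += 1
    return chosen / n_draws


x = [1.0, 0.5]        # hypothetical nonstochastic regressors
beta1 = [0.2, 1.0]    # hypothetical utility coefficients for choice 1
beta0 = [0.0, 0.4]    # hypothetical utility coefficients for choice 0
index = sum(a * (b1 - b0) for a, b1, b0 in zip(x, beta1, beta0))  # x'(beta_1 - beta_0)
p_theory = NormalDist().cdf(index / 2 ** 0.5)                     # eps ~ N(0, 2)
p_sim = choice_share(x, beta1, beta0)
print(f"simulated {p_sim:.4f}, normal-cdf formula {p_theory:.4f}")
```

Only the difference $\beta_1-\beta_0$ and the scale of $\varepsilon_i$ enter the choice probability, which is why the rule depends on $x_i'\beta$ alone.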
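Example 5 Suppose $u_1$ and $u_0$ are i.i.d. with the common c.d.f. $F(z)=\exp\left[-\exp(-z)\right]$. Then,
$$\Pr[\varepsilon\le t]=\frac{e^t}{e^t+1}.$$
(Proof omitted.)

42 Binary Response Model

Two choices:
$$y_i=1\quad\text{if choice 1 is made},\qquad y_i=0\quad\text{if choice 0 is made}.$$
Assume that
$$y_i=1\iff U_i^*=x_i'\beta-\varepsilon_i\ge0.$$
Let
$$G(t)\equiv\Pr[\varepsilon_i\le t].$$
Then,
$$\Pr[y_i=1]=\Pr[\varepsilon_i\le x_i'\beta]=G(x_i'\beta).$$

Example 6 $G(t)=\Phi(t)$: Probit Model.

Example 7 $G(t)=\dfrac{e^t}{e^t+1}=\Lambda(t)$: Logit Model.

Note that the individual likelihood equals
$$G(x_i'\beta)^{y_i}\left[1-G(x_i'\beta)\right]^{1-y_i}.$$
It follows that the joint log likelihood equals
$$\sum_i\left\{y_i\log G(x_i'\beta)+(1-y_i)\log\left[1-G(x_i'\beta)\right]\right\}.$$
The MLE $b$ solves the FOC (with $g\equiv G'$):
$$\sum_i\frac{y_i-G(x_i'b)}{G(x_i'b)\left[1-G(x_i'b)\right]}g(x_i'b)\,x_i=0.$$

Proposition 2 The log likelihood of the Probit or Logit model is globally concave.

Proof. Exercise.

Proposition 3
$$\sqrt n\left(b-\beta\right)\xrightarrow{d}N\left(0,I^{-1}(\beta)\right).$$

Proposition 4 The Fisher Information $I(\beta)$ from the individual observation equals
$$E\left[\frac{g(x_i'\beta)^2}{G(x_i'\beta)\left[1-G(x_i'\beta)\right]}x_ix_i'\right].$$

Proof. Obvious from
$$\frac{\partial\log f(z_i;\beta)}{\partial\beta}=\frac{y_i-G(x_i'\beta)}{G(x_i'\beta)\left[1-G(x_i'\beta)\right]}g(x_i'\beta)\,x_i$$
and
$$I(\beta)=E\left[\frac{\partial\log f}{\partial\beta}\frac{\partial\log f}{\partial\beta'}\right].$$

Proposition 5
$$\operatorname{plim}\frac1n\sum_i\frac{g(x_i'b)^2}{G(x_i'b)\left[1-G(x_i'b)\right]}x_ix_i'=I(\beta),$$
$$\operatorname{plim}\frac1n\sum_i\frac{\left[y_i-G(x_i'b)\right]^2g(x_i'b)^2}{G(x_i'b)^2\left[1-G(x_i'b)\right]^2}x_ix_i'=I(\beta).$$

43 Tobit Model (Censoring)

Suppose
$$y_i^*=x_i'\beta+\varepsilon_i,\qquad\varepsilon_i\mid x_i\sim N(0,\sigma^2).$$
We observe $(y_i,D_i,x_i)$, where
$$D_i=1(y_i^*>0),\qquad y_i=D_iy_i^*.$$
Individual likelihood:
$$\Phi\left(-\frac{x_i'\beta}{\sigma}\right)^{1-D_i}\left[\frac1\sigma\phi\left(\frac{y_i-x_i'\beta}{\sigma}\right)\right]^{D_i}=\left[1-\Phi\left(\frac{x_i'\beta}{\sigma}\right)\right]^{1-D_i}\left[\frac1\sigma\phi\left(\frac{y_i-x_i'\beta}{\sigma}\right)\right]^{D_i}.$$
The MLE is not simple because the likelihood is not concave in the parameters $(\beta,\sigma)$: we have to deal with the local vs. global maxima problem!

43.1 Bias of OLS

Consider regressing $y_i$ on $x_i$ for those observations with $D_i=1$. For simplicity, assume that $\beta$ is a scalar. In this case, we may write
$$\hat\beta=\frac{\sum D_ix_iy_i}{\sum D_ix_i^2}.$$

Lemma 31
$$\operatorname{plim}_{n\to\infty}\hat\beta=\frac{E\left[\Pr[D_i=1\mid x_i]\,E[y_i\mid D_i=1,x_i]\,x_i\right]}{E\left[\Pr[D_i=1\mid x_i]\,x_i^2\right]}.$$

Proof.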
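Denominator:
$$\operatorname{plim}_{n\to\infty}\frac1n\sum D_ix_i^2=E\left[D_ix_i^2\right]=E\left[E[D_i\mid x_i]\,x_i^2\right]=E\left[\Pr[D_i=1\mid x_i]\,x_i^2\right].$$
Numerator:
$$\operatorname{plim}_{n\to\infty}\frac1n\sum D_ix_iy_i=E[D_iy_ix_i]=E\left[E[D_iy_i\mid x_i]\,x_i\right],$$
and
$$\begin{aligned}E[D_iy_i\mid x_i]&=E[1\cdot y_i\mid D_i=1,x_i]\Pr[D_i=1\mid x_i]+E[0\cdot y_i\mid D_i=0,x_i]\Pr[D_i=0\mid x_i]\\&=E[y_i\mid D_i=1,x_i]\Pr[D_i=1\mid x_i].\end{aligned}$$

Corollary 12 $\hat\beta$ is consistent only if $E[y_i\mid D_i=1,x_i]=x_i'\beta$.

Lemma 32 Suppose $u\sim N(0,1)$. Then,
$$E[u\mid u>t]=\frac{\phi(t)}{1-\Phi(t)}.$$

Proof.
$$\Pr[u\le s\mid u>t]=\frac{\int_t^s\phi(x)\,dx}{\int_t^\infty\phi(x)\,dx}=\frac{\int_t^s\phi(x)\,dx}{1-\Phi(t)}.$$
Thus, the conditional p.d.f. of $u$ at $s$ given $u>t$ equals
$$\frac{d}{ds}\Pr[u\le s\mid u>t]=\frac{\phi(s)}{1-\Phi(t)}.$$
It follows that
$$E[u\mid u>t]=\int_t^\infty s\frac{\phi(s)}{1-\Phi(t)}ds=\frac{1}{1-\Phi(t)}\int_t^\infty s\,\phi(s)\,ds.$$
But from
$$\frac{d\phi(s)}{ds}=-s\,\phi(s),$$
we have
$$\int_t^\infty s\,\phi(s)\,ds=-\int_t^\infty\frac{d\phi(s)}{ds}ds=-\phi(s)\Big|_t^\infty=\phi(t),$$
from which the conclusion follows.

Lemma 33
$$E[y_i\mid x_i,D_i=1]=x_i'\beta+\sigma\lambda\left(\frac{x_i'\beta}{\sigma}\right),\qquad\text{where }\lambda(s)\equiv\frac{\phi(s)}{\Phi(s)}.$$

Proof.
$$E[\varepsilon_i\mid x_i,D_i=1]=E[\varepsilon_i\mid x_i,\varepsilon_i>-x_i'\beta]=\sigma E\left[\frac{\varepsilon_i}{\sigma}\,\Big|\,x_i,\frac{\varepsilon_i}{\sigma}>-\frac{x_i'\beta}{\sigma}\right]=\sigma\frac{\phi\left(-x_i'\beta/\sigma\right)}{1-\Phi\left(-x_i'\beta/\sigma\right)}=\sigma\frac{\phi\left(x_i'\beta/\sigma\right)}{\Phi\left(x_i'\beta/\sigma\right)}.$$

Corollary 13 $\hat\beta$ is inconsistent.

43.2 Heckman's Two Step Estimator

For notational simplicity, I will drop $x_i$ in the conditioning event. We know that
$$E[y_i\mid D_i=1]=x_i'\beta+\sigma\lambda\left(\frac{x_i'\beta}{\sigma}\right),$$
where, as before, $\lambda(s)\equiv\phi(s)/\Phi(s)$. Thus, if $\beta/\sigma$ is known, we can estimate $\beta$ consistently by regressing $y_i$ on $x_i$ and $\lambda\left(x_i'\beta/\sigma\right)$. Observe that $\beta/\sigma$ can be consistently estimated by the Probit MLE of $D$ on $x$: we have
$$D_i=1\iff x_i'\frac{\beta}{\sigma}+\frac{\varepsilon_i}{\sigma}>0\quad\text{and}\quad\frac{\varepsilon_i}{\sigma}\sim N(0,1).$$
This suggests two-step estimation:

1. Obtain the MLE $\widehat{\beta/\sigma}$ from the Probit model.
2. Regress $y_i$ on $x_i$ and $\lambda\left(x_i'\widehat{\beta/\sigma}\right)$.

44 Sample Selection Model

Suppose
$$y_i^*=x_i'\beta+u_i.$$
We observe $(y_i,D_i,x_i)$, where
$$D_i=1\left(z_i'\gamma+v_i>0\right),\qquad y_i=D_iy_i^*.$$
Our goal is to estimate $\beta$. We assume
$$\begin{bmatrix}u_i\\v_i\end{bmatrix}\,\Big|\,x_i\sim N\left(\begin{bmatrix}0\\0\end{bmatrix},\begin{bmatrix}\sigma_u^2&\rho\sigma_u\sigma_v\\\rho\sigma_u\sigma_v&\sigma_v^2\end{bmatrix}\right).$$
By the same reasoning as in the censoring case, regressing $y_i$ on $x_i$ in the subsample where $D_i=1$ will result in an inconsistent estimator. To fix this problem, we can either rely on MLE or use two-step estimation.

Lemma 34
$$E[y_i\mid D_i=1]=x_i'\beta+\rho\sigma_u\lambda\left(\frac{z_i'\gamma}{\sigma_v}\right).$$

Proof.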
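Note that
$$E[y_i\mid D_i=1]=E\left[x_i'\beta+u_i\mid z_i'\gamma+v_i>0\right]=x_i'\beta+E\left[u_i\mid v_i>-z_i'\gamma\right].$$
Now recall that
$$w_i\equiv u_i-\rho\frac{\sigma_u}{\sigma_v}v_i$$
is independent of $v_i$. We thus have, with $\lambda=\phi/\Phi$ as in Section 43.2,
$$\begin{aligned}E\left[u_i\mid v_i>-z_i'\gamma\right]&=E\left[\rho\frac{\sigma_u}{\sigma_v}v_i+w_i\,\Big|\,v_i>-z_i'\gamma\right]\\&=\rho\sigma_uE\left[\frac{v_i}{\sigma_v}\,\Big|\,\frac{v_i}{\sigma_v}>-\frac{z_i'\gamma}{\sigma_v}\right]+E\left[w_i\mid v_i>-z_i'\gamma\right]\\&=\rho\sigma_u\lambda\left(\frac{z_i'\gamma}{\sigma_v}\right)+E[w_i]=\rho\sigma_u\lambda\left(\frac{z_i'\gamma}{\sigma_v}\right)+0.\end{aligned}$$
The lemma suggests that if we know $\gamma/\sigma_v$, then $\beta$ can be estimated consistently by regressing $y_i$ on $x_i$ and $\lambda\left(z_i'\gamma/\sigma_v\right)$. But $\gamma/\sigma_v$ can be consistently estimated by the Probit MLE of $D_i$ on $z_i$! This suggests two-step estimation:

1. Obtain the MLE $\widehat{\gamma/\sigma_v}$ from the Probit model.
2. Regress $y_i$ on $x_i$ and $\lambda\left(z_i'\widehat{\gamma/\sigma_v}\right)$.

45 Problem Set

1. Suppose that $(u,v)$ are bivariate normal with mean equal to zero. Let
$$\varepsilon\equiv v-\frac{\operatorname{Cov}(u,v)}{\operatorname{Var}(u)}u.$$
Show that $\varepsilon$ and $u$ are independent of each other.

2. Recall that, if $\zeta\sim N(0,1)$, we have
$$E[\zeta\mid\zeta\ge t]=\frac{\phi(t)}{1-\Phi(t)}.$$
Suppose that
$$\begin{bmatrix}v\\u\end{bmatrix}\sim N\left(\begin{bmatrix}0\\0\end{bmatrix},\begin{bmatrix}\sigma_v^2&\rho\sigma_v\sigma_u\\\rho\sigma_v\sigma_u&\sigma_u^2\end{bmatrix}\right).$$
Show that
$$E[v\mid u\ge t]=\rho\sigma_v\frac{\phi\left(t/\sigma_u\right)}{1-\Phi\left(t/\sigma_u\right)}.$$
Hint: Observe that
$$E[v\mid u\ge t]=E\left[\varepsilon+\rho\frac{\sigma_v}{\sigma_u}u\,\Big|\,u\ge t\right]=\rho\frac{\sigma_v}{\sigma_u}E[u\mid u\ge t]=\rho\sigma_vE\left[\frac{u}{\sigma_u}\,\Big|\,\frac{u}{\sigma_u}\ge\frac{t}{\sigma_u}\right].$$

3. Suppose that
$$y_i^*=x_i'\beta+v_i,$$
but we observe $y_i^*$ if and only if
$$z_i'\gamma+u_i\ge0.$$
In other words, we observe $(y_i,D_i,x_i,z_i)$ for each individual, where
$$D_i=1\left(z_i'\gamma+u_i\ge0\right)\quad\text{and}\quad y_i=y_i^*D_i.$$
Assume that
$$\begin{bmatrix}v_i\\u_i\end{bmatrix}\sim N\left(\begin{bmatrix}0\\0\end{bmatrix},\begin{bmatrix}\sigma_v^2&\rho\sigma_v\sigma_u\\\rho\sigma_v\sigma_u&\sigma_u^2\end{bmatrix}\right).$$
Show that
$$E[y_i\mid D_i=1,x_i,z_i]=x_i'\beta+\rho\sigma_v\frac{\phi\left(z_i'\gamma/\sigma_u\right)}{\Phi\left(z_i'\gamma/\sigma_u\right)}.$$
(a) Suppose that you know $\gamma/\sigma_u$. Show that the OLS of $y_i$ on $x_i$ and $\phi\left(z_i'\gamma/\sigma_u\right)/\Phi\left(z_i'\gamma/\sigma_u\right)$, applied to the subsample where $D_i=1$, yields a consistent estimator of $\beta$.
(b) Suggest how you would construct a consistent estimator of $\gamma/\sigma_u$.
(c) Suggest a two-step method to construct a consistent estimator of $\beta$.

Lecture Note 9: Efficiency

46 Classical Linear Regression Model I

46.1 Model

- $y=X\beta+\varepsilon$
- $X$ is a nonstochastic matrix
- $X$ has full column rank (the columns of $X$ are linearly independent)
- $E[\varepsilon]=0$
- $E[\varepsilon\varepsilon']=\sigma^2I_n$ for some unknown positive number $\sigma^2$

46.2 Gauss-Markov Theorem

Theorem 59 (Gauss-Markov) Given the Classical Linear Regression Model I, the OLS estimator is the minimum variance linear unbiased estimator. (OLS is BLUE.)

Proof.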
First note that $b$ is a linear combination of $y$ using $(X'X)^{-1}X'$. Consider any other linear estimator
$$Cy=CX\beta+C\varepsilon.$$
If $Cy$ is to be unbiased for every $\beta$, we should have
$$CX\beta=\beta,\quad\text{or}\quad CX=I.$$
Also note that
$$\operatorname{Var}(Cy)=\sigma^2CC',\qquad\operatorname{Var}(b)=\sigma^2(X'X)^{-1}.$$
Because the difference
$$CC'-(X'X)^{-1}=CC'-CX(X'X)^{-1}X'C'=C\left[I-X(X'X)^{-1}X'\right]C'=CMC'=CMM'C'=CM(CM)'$$
is nonnegative definite, the result follows.

46.3 Digression: GLS

Theorem 60 Assume that $\Omega$ is a positive definite matrix. Then,
$$\operatorname*{argmin}_b\,(y-Xb)'\Omega^{-1}(y-Xb)=\left(X'\Omega^{-1}X\right)^{-1}X'\Omega^{-1}y.$$

Proof. Because $\Omega$ is a positive definite matrix, there exists $T$ such that
$$T\Omega T'=I_n.$$
Observe that
$$\Omega=T^{-1}(T')^{-1}=(T'T)^{-1}\implies\Omega^{-1}=T'T.$$
We can thus rewrite the objective function as
$$(y-Xb)'T'T(y-Xb)=(Ty-TXb)'(Ty-TXb)=(y^*-X^*b)'(y^*-X^*b),$$
where $y^*=Ty$ and $X^*=TX$, which is minimized by
$$\left(X^{*\prime}X^*\right)^{-1}X^{*\prime}y^*=\left(X'T'TX\right)^{-1}X'T'Ty=\left(X'\Omega^{-1}X\right)^{-1}X'\Omega^{-1}y.$$

We keep every assumption of the Classical Linear Regression Model I except that we now assume $\operatorname{Var}(\varepsilon)=\Omega$, some known positive definite matrix. Consider the estimator $b_{GLS}$ defined by
$$b_{GLS}\equiv\operatorname*{argmin}_b\,(y-Xb)'\Omega^{-1}(y-Xb).$$

Theorem 61 Under the new assumption, $b_{GLS}$ is BLUE.

Proof. There exists $T$ such that
$$T\Omega T'=I_n.$$
Now, consider the transformed model
$$y^*=X^*\beta+\varepsilon^*,$$
where
$$y^*=Ty,\qquad X^*=TX,\qquad\varepsilon^*=T\varepsilon.$$
This transformed model satisfies every assumption of the Classical Linear Regression Model I:
$$E\left[\varepsilon^*\varepsilon^{*\prime}\right]=E\left[T\varepsilon\varepsilon'T'\right]=T\Omega T'=I_n.$$
The BLUE for the transformed model equals
$$\left(X^{*\prime}X^*\right)^{-1}X^{*\prime}y^*=\left(X'T'TX\right)^{-1}X'T'Ty=\left(X'\Omega^{-1}X\right)^{-1}X'\Omega^{-1}y.$$

Remark 16 OLS
$$b=\beta+(X'X)^{-1}X'\varepsilon$$
remains unbiased.

Remark 17 In general, $\Omega$ is unknown and has to be estimated. Let $\hat\Omega$ denote some 'good' estimator. Then,
$$\left(X'\hat\Omega^{-1}X\right)^{-1}X'\hat\Omega^{-1}y$$
is called the feasible GLS (FGLS) estimator.

47 Approximate Efficiency of MLE: Cramer-Rao Inequality

Theorem 62 (Cramer-Rao Inequality) Suppose that $Z\sim f(Z;\theta)$ and $Y=u(Z)$ is unbiased for $\theta$, i.e., $E[Y]=\theta$. Then,
$$E\left[(Y-\theta)(Y-\theta)'\right]\ge I(\theta)^{-1}.$$

Proof. Assume for simplicity that $\theta$ is a scalar.
We then have
$$\theta = \int u(z)f(z; \theta)\,dz \quad\text{and}\quad 1 = \int u(z)\frac{\partial f(z; \theta)}{\partial\theta}\,dz,$$
where the second equation differentiates the first with respect to $\theta$. Since $\partial f(z; \theta)/\partial\theta = s(z; \theta)f(z; \theta)$, we can rewrite them as
$$E[Y] = \theta, \qquad E[Ys(Z; \theta)] = 1.$$
Now, recall that $E[s(Z; \theta)] = 0$, so that
$$E[(Y - \theta)s(Z; \theta)] = E[Ys(Z; \theta)] - \theta E[s(Z; \theta)] = 1.$$
Define
$$y \equiv Y - \theta, \qquad X \equiv s(Z; \theta).$$
Letting
$$\beta \equiv \left(E[X^2]\right)^{-1}E[Xy] = \frac{1}{I(\theta)} \quad\text{and}\quad \varepsilon \equiv y - X\beta,$$
we may write
$$y = X\beta + \varepsilon.$$
Observations to be made: (1) the coefficient $\beta$ does not depend on $Y$ at all; it only depends on the parameter being estimated and the Fisher information $I(\theta)$; (2) $X$ and hence $\varepsilon$ are zero-mean random variables, and they are uncorrelated:
$$E[X\varepsilon] = E[Xy] - \beta E[X^2] = 0.$$
We thus have
$$\mathrm{Var}(Y) = E[y^2] = \beta^2E[X^2] + E[\varepsilon^2] \ge \beta^2E[X^2] = \frac{1}{I(\theta)}.$$
This inequality is known as the Cramer-Rao inequality, and the right-hand side of this inequality is sometimes called the Cramer-Rao lower bound.

Definition 12. Let $Y$ be an unbiased estimator of a parameter $\theta$. Call $Y$ an efficient estimator if and only if the variance of $Y$ equals the Cramer-Rao lower bound.

Definition 13. The ratio of the actual variance of some unbiased estimator to the Cramer-Rao lower bound is called the efficiency of the estimator.

Remark 18. The Cramer-Rao bound is not sharp. Suppose that $X_i$, $i = 1, \ldots, n$, are i.i.d. $N(\theta_1, \theta_2)$. Then
$$I_n(\theta) = \begin{pmatrix} n/\theta_2 & 0 \\ 0 & n/(2\theta_2^2) \end{pmatrix},$$
which implies that the Cramer-Rao lower bound for $\theta_2$ is equal to $2\theta_2^2/n$. Does there exist an unbiased estimator of $\theta_2$ with variance equal to $2\theta_2^2/n$? Note that the usual estimator $S^2 = \frac{1}{n-1}\sum_{i=1}^n\left(X_i - \bar X\right)^2$ is unbiased and is a function of the sufficient statistic $\left(\sum_{i=1}^nX_i, \sum_{i=1}^nX_i^2\right)$. It can be shown (using the Lehmann-Scheffé Theorem, which you can learn from any textbook on mathematical statistics) that $S^2$ is the unique minimum variance unbiased estimator. Because $(n-1)S^2/\theta_2 \sim \chi^2(n-1)$, we have
$$\mathrm{Var}(S^2) = \frac{\theta_2^2}{(n-1)^2}\mathrm{Var}\left(\frac{(n-1)S^2}{\theta_2}\right) = \frac{\theta_2^2}{(n-1)^2}\cdot2(n-1) = \frac{2\theta_2^2}{n-1},$$
which is strictly larger than the Cramer-Rao bound $2\theta_2^2/n$.

Theorem 63 (Asymptotic Efficiency of MLE). $\sqrt n\left(\hat\theta - \theta\right) \xrightarrow{d}$
$N\left(0, I(\theta)^{-1}\right)$.

48 Efficient Estimation with Overidentification: 2SLS

We are dealing with
$$y_i = x_i'\beta + \varepsilon_i,$$
where the only restriction given to us is that
$$E[z_i\varepsilon_i] = 0.$$
We assume that $z_i = (z_{1i}, \ldots, z_{ri})'$ is such that
$$r = \dim(z_i) \ge \dim(x_i) = \dim(\beta) = q.$$
We can estimate $\beta$ in more than one way. For example, if $\dim(\beta) = 1$, we can construct $r$ different IV estimators:
$$\hat\beta_{(1)} = \frac{\sum_iz_{1i}y_i}{\sum_iz_{1i}x_i}, \quad \ldots, \quad \hat\beta_{(r)} = \frac{\sum_iz_{ri}y_i}{\sum_iz_{ri}x_i}.$$
How do we combine them efficiently? The answer is given by
$$\begin{aligned}
b_{2SLS} &= \left[\left(\sum_ix_iz_i'\right)\left(\sum_iz_iz_i'\right)^{-1}\left(\sum_iz_ix_i'\right)\right]^{-1}\left(\sum_ix_iz_i'\right)\left(\sum_iz_iz_i'\right)^{-1}\left(\sum_iz_iy_i\right) \\
&= \left[X'Z(Z'Z)^{-1}Z'X\right]^{-1}X'Z(Z'Z)^{-1}Z'y.
\end{aligned}$$
This estimator is called the two stage least squares estimator because it is numerically equivalent to the following two steps:

1. Regress $x_i$ on $z_i$. Get a fitted value matrix $\widehat X = Z(Z'Z)^{-1}Z'X$.
2. Regress $y_i$ on $\hat x_i$, and obtain $\left[X'Z(Z'Z)^{-1}Z'X\right]^{-1}X'Z(Z'Z)^{-1}Z'y$.

It can be shown that
$$\sqrt n\left(b_{2SLS} - \beta\right) \xrightarrow{d} N(0, V)$$
for some $V$, and that $V$ can be consistently estimated by
$$\widehat V_n = \frac{\sum_ie_i^2}{n}\left[\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_ix_i'\right)\right]^{-1},$$
where $e_i \equiv y_i - x_i'b_{2SLS}$ is the residual. The estimated variance of $b_{2SLS}$ is then
$$\frac{\widehat V_n}{n} = \frac1n\cdot\frac{\sum_ie_i^2}{n}\left[\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_ix_i'\right)\right]^{-1},$$
and, when $\dim(\beta) = 1$, a valid 95% asymptotic confidence interval is given by
$$b_{2SLS} \pm 1.96\sqrt{\widehat V_n/n}.$$

Remark 19. In the derivation of the asymptotic variance above, I assumed that $z_i$ and $\varepsilon_i$ are independent of each other.

Remark 20. Our discussion generalizes to the situation where $\dim(\beta) > 1$. We still have
$$\left[X'Z(Z'Z)^{-1}Z'X\right]^{-1}X'Z(Z'Z)^{-1}Z'y$$
as our optimal estimator.

49 Why is 2SLS Efficient?

Consider the following seemingly unrelated problem. Write $U = (U_1, \ldots, U_r)'$. Suppose that $E[U] = 0$ and $\mathrm{Var}(U) = \Sigma$. Consider $w'U = \sum_{j=1}^rw_jU_j$ with $\sum_{j=1}^rw_j = 1$. You would like to choose $w$ to minimize the variance of $w'U$.
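This problem can be written as
$$\min_w\; w'\Sigma w \quad\text{s.t.}\quad \ell'w = 1,$$
where $\ell$ is an $r \times 1$ vector of ones. The Lagrangian of this problem is
$$\frac12w'\Sigma w + \lambda\left(1 - \ell'w\right).$$
The FOC is given by
$$0 = \Sigma w - \lambda\ell, \qquad 0 = 1 - \ell'w,$$
which can be alternatively written as
$$w = \lambda\Sigma^{-1}\ell \quad\text{and}\quad 1 = \lambda\,\ell'\Sigma^{-1}\ell,$$
from which we obtain
$$w = \frac{\Sigma^{-1}\ell}{\ell'\Sigma^{-1}\ell}.$$
Now, note that
$$\begin{pmatrix}\hat\beta_{(1)} \\ \vdots \\ \hat\beta_{(r)}\end{pmatrix} = \widehat Q_n^{-1}\begin{pmatrix}\frac1n\sum_iz_{1i}y_i \\ \vdots \\ \frac1n\sum_iz_{ri}y_i\end{pmatrix} = \widehat Q_n^{-1}\left(\frac1n\sum_iz_iy_i\right), \qquad \widehat Q_n \equiv \begin{pmatrix}\frac1n\sum_iz_{1i}x_i & & 0 \\ & \ddots & \\ 0 & & \frac1n\sum_iz_{ri}x_i\end{pmatrix},$$
so that
$$\sqrt n\begin{pmatrix}\hat\beta_{(1)} - \beta \\ \vdots \\ \hat\beta_{(r)} - \beta\end{pmatrix} = Q^{-1}\frac{1}{\sqrt n}\sum_iz_i\varepsilon_i + o_p(1) \xrightarrow{d} N\left(0, \sigma_\varepsilon^2Q^{-1}\Omega Q^{-1}\right),$$
where
$$Q = \begin{pmatrix}E[z_1x] & & 0 \\ & \ddots & \\ 0 & & E[z_rx]\end{pmatrix}, \qquad \Omega = E[zz'].$$
According to the preceding analysis, the optimal combination is given by
$$w = \frac{\left(\sigma_\varepsilon^2Q^{-1}\Omega Q^{-1}\right)^{-1}\ell}{\ell'\left(\sigma_\varepsilon^2Q^{-1}\Omega Q^{-1}\right)^{-1}\ell} = \frac{Q\Omega^{-1}Q\ell}{\ell'Q\Omega^{-1}Q\ell}.$$
Now, note that
$$Q\ell = \begin{pmatrix}E[z_1x] \\ \vdots \\ E[z_rx]\end{pmatrix} = E[zx],$$
so that the optimal combination is
$$w = \frac{Q\left(E[zz']\right)^{-1}E[zx]}{E[xz']\left(E[zz']\right)^{-1}E[zx]}.$$
We now note that, if $\operatorname{plim}w_n = w$, then
$$w_n'\sqrt n\begin{pmatrix}\hat\beta_{(1)} - \beta \\ \vdots \\ \hat\beta_{(r)} - \beta\end{pmatrix} = w'\sqrt n\begin{pmatrix}\hat\beta_{(1)} - \beta \\ \vdots \\ \hat\beta_{(r)} - \beta\end{pmatrix} + o_p(1),$$
so we can use the approximate optimal combination, using
$$w_n' = \frac{\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\widehat Q_n}{\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_ix_i\right)}.$$
So the approximately optimal combination is
$$w_n'\begin{pmatrix}\hat\beta_{(1)} \\ \vdots \\ \hat\beta_{(r)}\end{pmatrix} = \frac{\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\widehat Q_n\widehat Q_n^{-1}\left(\frac1n\sum_iz_iy_i\right)}{\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_ix_i\right)} = \frac{\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_iy_i\right)}{\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_ix_i\right)},$$
which is 2SLS!

50 Problem Set

1.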
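(From Goldberger) You are given a sample produced by a simultaneous equations model:
$$\begin{aligned}
y_1 &= \beta_1y_2 + \beta_2x_1 + \varepsilon_1 \\
y_2 &= \beta_3y_1 + \beta_4x_2 + \varepsilon_2
\end{aligned}$$
You naively regressed the endogenous variables on the exogenous variables, and obtained the following OLS estimates:
$$\begin{aligned}
\hat y_1 &= 6x_1 + 2x_2 \\
\hat y_2 &= 3x_1 + x_2
\end{aligned}$$
Is $(\beta_1, \beta_2)$ identified? Is $(\beta_3, \beta_4)$ identified? Compute consistent estimates of the $\beta$'s that are identified.

2. Consider a linear model
$$y_i = x_i'\underset{m\times1}{\beta} + \varepsilon_i$$
with the restriction that
$$E\left[\underset{k\times1}{z_i}\varepsilon_i\right] = 0,$$
where $k > m$. Derive the asymptotic distribution of 2SLS
$$\begin{aligned}
b_{2SLS} &= \left[\left(\sum_ix_iz_i'\right)\left(\sum_iz_iz_i'\right)^{-1}\left(\sum_iz_ix_i'\right)\right]^{-1}\left(\sum_ix_iz_i'\right)\left(\sum_iz_iz_i'\right)^{-1}\left(\sum_iz_iy_i\right) \\
&= \left[X'Z(Z'Z)^{-1}Z'X\right]^{-1}X'Z(Z'Z)^{-1}Z'y
\end{aligned}$$
under the assumption that $E\left[(z_i\varepsilon_i)(z_i\varepsilon_i)'\right] = \sigma_\varepsilon^2E[z_iz_i']$.

(a) Show that
$$\sqrt n\left(b_{2SLS} - \beta\right) = \left[\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_ix_i'\right)\right]^{-1}\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\frac{1}{\sqrt n}\sum_iz_i\varepsilon_i.$$

(b) Show that
$$\left[\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_ix_i'\right)\right]^{-1}\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}$$
converges in probability to
$$\left[E[x_iz_i']\left(E[z_iz_i']\right)^{-1}E[z_ix_i']\right]^{-1}E[x_iz_i']\left(E[z_iz_i']\right)^{-1}.$$

(c) Show that $\frac{1}{\sqrt n}\sum_iz_i\varepsilon_i$ converges in distribution to $N\left(0, \sigma_\varepsilon^2E[z_iz_i']\right)$.

(d) Conclude that $\sqrt n\left(b_{2SLS} - \beta\right)$ converges in distribution to
$$N\left(0, \sigma_\varepsilon^2\left[E[x_iz_i']\left(E[z_iz_i']\right)^{-1}E[z_ix_i']\right]^{-1}\right).$$

3. The "wage.xls" file contains three variables for each of three years:

| Variable | Description |
|---|---|
| w0 | earnings (in dollars), 1990 |
| ed0 | education (in years), 1990 |
| a0 | age (in years), 1990 |
| w1 | earnings (in dollars), 1991 |
| ed1 | education (in years), 1991 |
| a1 | age (in years), 1991 |
| w2 | earnings (in dollars), 1992 |
| ed2 | education (in years), 1992 |
| a2 | age (in years), 1992 |

(a) For the 1992 portion of the data, regress $\ln(\text{wage})$ on edu, exp, $(\text{exp})^2$, and a constant.

(b) Ability is an omitted variable which may create an endogeneity problem with the education variable in our usual wage equation. It may be reasonable to assume that the lagged education levels (1990 and 1991) are valid instruments for education in the 1992 regression.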
Re-estimate the wage regression using 2SLS. Never mind that the education variable hardly changes over time.

51 Method of Moments: Simple Example

Suppose in the population we have
$$E[Y_i - \mu] = 0,$$
i.e., $\mu$ is the mean of $Y_i$. Given that the expectation $E$ can be approximated by a sample average $\frac1n\sum_{i=1}^n$, it seems reasonable to estimate $\mu$ by $\hat\mu$ that solves
$$\frac1n\sum_{i=1}^n\left(Y_i - \hat\mu\right) = 0 \quad\text{or}\quad \hat\mu = \frac1n\sum_{i=1}^nY_i.$$

52 Method of Moments: Generalization

Suppose that we are given a model that satisfies
$$E\left[\underset{q\times1}{Y_i} - \underset{q\times q}{X_i}\,\underset{q\times1}{\theta}\right] = 0.$$
Here, $\theta$ is the parameter of interest. In order to estimate $\theta$, it makes sense to recall that a sample average provides an analog of a population expectation. Therefore, we expect
$$\frac1n\sum_{i=1}^n\left(Y_i - X_i\theta\right) \approx 0.$$
It therefore makes sense to estimate $\theta$ by $\hat\theta$ that solves
$$\frac1n\sum_{i=1}^n\left(Y_i - X_i\hat\theta\right) = 0 \quad\text{or}\quad \hat\theta = \left(\frac1n\sum_{i=1}^nX_i\right)^{-1}\left(\frac1n\sum_{i=1}^nY_i\right) = \left(\sum_{i=1}^nX_i\right)^{-1}\left(\sum_{i=1}^nY_i\right).$$
Such an estimator is called the method of moments estimator.

Theorem 64. Suppose that $(Y_i, X_i)$, $i = 1, 2, \ldots$, is i.i.d. Also suppose that $E[X_i]$ is nonsingular. Then $\hat\theta$ is consistent for $\theta$.

Proof. Let
$$U_i \equiv Y_i - X_i\theta.$$
Note that $E[U_i] = 0$ by assumption. We then have
$$\hat\theta = \theta + \left(\frac1n\sum_{i=1}^nX_i\right)^{-1}\left(\frac1n\sum_{i=1}^nU_i\right) \xrightarrow{p} \theta + \left(E[X_i]\right)^{-1}E[U_i] = \theta + 0.$$

Theorem 65. Suppose that $(Y_i, X_i)$, $i = 1, 2, \ldots$, is i.i.d. Also suppose that $E[X_i]$ is nonsingular. Finally, suppose that $E[U_iU_i']$ exists and is finite. Then
$$\sqrt n\left(\hat\theta - \theta\right) \xrightarrow{d} N\left(0, \left(E[X_i]\right)^{-1}E[U_iU_i']\left(E[X_i']\right)^{-1}\right).$$

Proof. Follows easily from
$$\sqrt n\left(\hat\theta - \theta\right) = \left(\frac1n\sum_{i=1}^nX_i\right)^{-1}\left(\frac{1}{\sqrt n}\sum_{i=1}^nU_i\right).$$

52.1 Estimation of Asymptotic Variance

Definition 14. Given a matrix $A$, we define $\|A\| = \sqrt{\operatorname{trace}(A'A)}$.

Definition 15. If $a = (a_1, \ldots, a_q)'$ is a $q$-dimensional column vector, $\|a\| = \sqrt{a_1^2 + \cdots + a_q^2}$.

Lemma 35. $\|A + B\| \le \|A\| + \|B\|$.

Lemma 36. $\|A'\| = \|A\|$.

Lemma 37. $\|AB\| \le \|A\|\,\|B\|$.

Theorem 66. Suppose that $\hat\theta$ is consistent for $\theta$. Let $\widehat U_i = Y_i - X_i\hat\theta$. Then $\frac1n\sum_{i=1}^n\widehat U_i\widehat U_i' \to E[U_iU_i']$ in probability.

Proof.
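Note that
$$\widehat U_i = Y_i - X_i\hat\theta = X_i\theta + U_i - X_i\hat\theta = U_i + X_i\left(\theta - \hat\theta\right)$$
and
$$\widehat U_i\widehat U_i' = U_iU_i' + U_i\left(X_i\left(\theta - \hat\theta\right)\right)' + X_i\left(\theta - \hat\theta\right)U_i' + X_i\left(\theta - \hat\theta\right)\left(X_i\left(\theta - \hat\theta\right)\right)'.$$
Now note that
$$\frac1n\sum_{i=1}^n\widehat U_i\widehat U_i' = \frac1n\sum_{i=1}^nU_iU_i' + \frac1n\sum_{i=1}^nU_i\left(X_i\left(\theta - \hat\theta\right)\right)' + \frac1n\sum_{i=1}^nX_i\left(\theta - \hat\theta\right)U_i' + \frac1n\sum_{i=1}^nX_i\left(\theta - \hat\theta\right)\left(X_i\left(\theta - \hat\theta\right)\right)'.$$
Because, by Lemmas 36 and 37,
$$\left\|U_i\left(X_i\left(\theta - \hat\theta\right)\right)'\right\| \le \|U_i\|\left\|X_i\left(\theta - \hat\theta\right)\right\| \le \|U_i\|\,\|X_i\|\left\|\hat\theta - \theta\right\|,$$
we have, by Lemma 35,
$$\left\|\frac1n\sum_{i=1}^nU_i\left(X_i\left(\theta - \hat\theta\right)\right)'\right\| \le \frac1n\sum_{i=1}^n\|U_i\|\,\|X_i\|\left\|\hat\theta - \theta\right\| = \left(\frac1n\sum_{i=1}^n\|U_i\|\,\|X_i\|\right)\left\|\hat\theta - \theta\right\| \to 0$$
in probability, provided $E\left[\|U_i\|\,\|X_i\|\right] < \infty$, so that the average in parentheses is bounded in probability while $\|\hat\theta - \theta\| \to 0$. The remaining two terms also converge to zero by similar reasoning.

Theorem 67.
$$\left(\frac1n\sum_{i=1}^nX_i\right)^{-1}\left(\frac1n\sum_{i=1}^n\widehat U_i\widehat U_i'\right)\left(\frac1n\sum_{i=1}^nX_i'\right)^{-1} \to \left(E[X_i]\right)^{-1}E[U_iU_i']\left(E[X_i']\right)^{-1}$$
in probability.

52.2 Efficiency by GMM

Suppose that we are given a model that satisfies
$$E\left[\underset{r\times1}{Y_i} - \underset{r\times q}{X_i}\,\underset{q\times1}{\theta}\right] = 0$$
with $r > q$. Because there is in general no $\theta$ satisfying
$$\frac1n\sum_{i=1}^n\left(Y_i - X_i\theta\right) = \bar Y - \bar X\theta = 0,$$
we will minimize
$$Q_n(\theta) = \left(\bar Y - \bar X\theta\right)'W_n\left(\bar Y - \bar X\theta\right)$$
and obtain
$$\hat\theta = \left(\bar X'W_n\bar X\right)^{-1}\bar X'W_n\bar Y = \theta + \left(\bar X'W_n\bar X\right)^{-1}\bar X'W_n\bar U,$$
where $\bar U = \frac1n\sum_{i=1}^nU_i$, or
$$\sqrt n\left(\hat\theta - \theta\right) = \left(\bar X'W_n\bar X\right)^{-1}\bar X'W_n\frac{1}{\sqrt n}\sum_{i=1}^nU_i.$$
Assume that
$$E[X_i] = G_0, \qquad \operatorname{plim}W_n = W_0, \qquad E[U_iU_i'] = S_0.$$
It is straightforward to show that
$$\sqrt n\left(\hat\theta - \theta\right) \xrightarrow{d} N\left(0, \left(G_0'W_0G_0\right)^{-1}G_0'W_0S_0W_0G_0\left(G_0'W_0G_0\right)^{-1}\right).$$
Given that $W_n$, hence $W_0$, can be chosen by the econometrician, we can ask what the optimal choice would be. It can be shown that, if we choose $W_0 = S_0^{-1}$, then the asymptotic variance is minimized. If $W_0 = S_0^{-1}$, then the asymptotic variance formula simplifies to
$$\left(G_0'S_0^{-1}G_0\right)^{-1}.$$

Remark 21. If you are curious, here is the intuition. Consider the linear regression model in matrix form
$$y = G_0\theta + u,$$
where $G_0$ is nonstochastic, $E[u] = 0$, and $E[uu'] = S_0$.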
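We can minimize
$$(y - G_0b)'W_0(y - G_0b)$$
and obtain an estimator
$$\tilde b = \left(G_0'W_0G_0\right)^{-1}G_0'W_0y.$$
Because
$$\tilde b = \left(G_0'W_0G_0\right)^{-1}G_0'W_0\left(G_0\theta + u\right) = \theta + \left(G_0'W_0G_0\right)^{-1}G_0'W_0u,$$
we can see that $\tilde b$ is unbiased and has variance equal to
$$\left(G_0'W_0G_0\right)^{-1}G_0'W_0S_0W_0G_0\left(G_0'W_0G_0\right)^{-1}.$$
By the Gauss-Markov argument for GLS (Theorem 61), this estimator cannot be better than the GLS estimator that minimizes
$$(y - G_0b)'S_0^{-1}(y - G_0b),$$
whose variance is $\left(G_0'S_0^{-1}G_0\right)^{-1}$.

53 Method of Moments Interpretation of OLS

Suppose that
$$y_i = x_i'\beta + \varepsilon_i,$$
where
$$E[x_i\varepsilon_i] = 0.$$
Then we can derive
$$0 = E[x_i\varepsilon_i] = E\left[x_i\left(y_i - x_i'\beta\right)\right] = E\left[x_iy_i - x_ix_i'\beta\right] = E\left[Y_i - X_i\beta\right]$$
with
$$Y_i \equiv x_iy_i, \qquad X_i \equiv x_ix_i'.$$

Remark 22. It can be shown that
$$\left(\frac1n\sum_{i=1}^nX_i\right)^{-1}\left(\frac1n\sum_{i=1}^n\widehat U_i\widehat U_i'\right)\left(\frac1n\sum_{i=1}^nX_i'\right)^{-1} = \left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nx_ix_i'e_i^2\right)\left(\frac1n\sum_{i=1}^nx_ix_i'\right)^{-1},$$
where $e_i \equiv y_i - x_i'b$ is the OLS residual, so that $\widehat U_i = x_ie_i$.

54 Method of Moments Interpretation of IV

The preceding discussion can be generalized to the following situation. Suppose that
$$y_i = x_i\beta + \varepsilon_i.$$
If we can find $z_i$ such that
$$E[z_i\varepsilon_i] = 0,$$
the model can then be written as
$$E\left[z_iy_i - z_ix_i\beta\right] = 0.$$
Write
$$Y_i \equiv z_iy_i, \qquad X_i \equiv z_ix_i.$$
The method of moments estimator is then given by
$$\left(\sum_{i=1}^nX_i\right)^{-1}\left(\sum_{i=1}^nY_i\right) = \frac{\sum_iz_iy_i}{\sum_iz_ix_i} = b_{IV},$$
which is consistent for $\beta$ by Theorem 64 (provided $E[z_ix_i] \ne 0$). Now assume that $x_i$ and $z_i$ are both $q$-dimensional, so that the model is given by
$$y_i = x_i'\beta + \varepsilon_i.$$
We then have
$$Y_i \equiv z_iy_i, \qquad X_i \equiv z_ix_i'.$$
Therefore, we obtain
$$\left(\sum_{i=1}^nX_i\right)^{-1}\left(\sum_{i=1}^nY_i\right) = \left(\sum_{i=1}^nz_ix_i'\right)^{-1}\left(\sum_{i=1}^nz_iy_i\right) = b_{IV}.$$

54.1 Asymptotic Distribution

As for its asymptotic distribution, we note that, if we assume that $z_i$ and $\varepsilon_i$ are independent of each other,
$$E[U_iU_i'] = E\left[(z_i\varepsilon_i)(z_i\varepsilon_i)'\right] = \sigma_\varepsilon^2E[z_iz_i'],$$
and that
$$E[X_i] = E[z_ix_i'].$$
We then have, by Theorem 65, $\sqrt n\left(b_{IV} - \beta\right) \xrightarrow{d}$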
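$N\left(0, \sigma_\varepsilon^2\left(E[z_ix_i']\right)^{-1}E[z_iz_i']\left(E[z_ix_i']'\right)^{-1}\right)$.

54.2 Overidentification: Efficiency Consideration

As before, we assume that the model is given by
$$y_i = x_i'\beta + \varepsilon_i.$$
Our restriction is
$$E[z_i\varepsilon_i] = 0.$$
The only difference now is that we assume
$$r = \dim(z_i) > \dim(x_i) = q.$$
This means that, in general, we cannot find any $b$ such that
$$\frac1n\sum_{i=1}^nz_i\left(y_i - x_i'b\right) = 0.$$
Given that equality is impossible, we do the next best thing: we minimize
$$Q_n(\beta) = \left(\frac1n\sum_{i=1}^nz_i\left(y_i - x_i'\beta\right)\right)'W_n\left(\frac1n\sum_{i=1}^nz_i\left(y_i - x_i'\beta\right)\right)$$
for some weighting matrix $W_n$, which is potentially stochastic.

Example 8. Take
$$W_n = \left(\frac1n\sum_iz_iz_i'\right)^{-1}.$$
Then it can be shown that the solution is equal to
$$\begin{aligned}
&\left[\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_ix_i'\right)\right]^{-1}\left(\frac1n\sum_ix_iz_i'\right)\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_iz_iy_i\right) \\
&= \left[\left(\sum_ix_iz_i'\right)\left(\sum_iz_iz_i'\right)^{-1}\left(\sum_iz_ix_i'\right)\right]^{-1}\left(\sum_ix_iz_i'\right)\left(\sum_iz_iz_i'\right)^{-1}\left(\sum_iz_iy_i\right) \\
&= \left[X'Z(Z'Z)^{-1}Z'X\right]^{-1}X'Z(Z'Z)^{-1}Z'y = b_{2SLS}.
\end{aligned}$$

According to the previous discussion, if we are to develop an optimal GMM, we need to use a weight matrix that converges to the inverse of
$$S_0 = E\left[(z_i\varepsilon_i)(z_i\varepsilon_i)'\right] = E\left[\varepsilon_i^2z_iz_i'\right].$$
Suppose that $\varepsilon_i$ happens to be independent of $z_i$. If this were the case, we would have
$$S_0 = E\left[\varepsilon_i^2z_iz_i'\right] = E\left[\varepsilon_i^2\right]E[z_iz_i'] = \sigma_\varepsilon^2E[z_iz_i'],$$
which can be estimated consistently by
$$\hat\sigma_\varepsilon^2\left(\frac1n\sum_iz_iz_i'\right).$$
Therefore, we would want to minimize
$$\begin{aligned}
&\left(\frac1n\sum_{i=1}^nz_i\left(y_i - x_i'\beta\right)\right)'\left(\hat\sigma_\varepsilon^2\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nz_i\left(y_i - x_i'\beta\right)\right) \\
&= \hat\sigma_\varepsilon^{-2}\left(\frac1n\sum_{i=1}^nz_i\left(y_i - x_i'\beta\right)\right)'\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nz_i\left(y_i - x_i'\beta\right)\right).
\end{aligned}$$
Note that $\hat\sigma_\varepsilon^{-2}$ does not affect the minimization. Therefore, the GMM estimator effectively minimizes
$$\left(\frac1n\sum_{i=1}^nz_i\left(y_i - x_i'\beta\right)\right)'\left(\frac1n\sum_iz_iz_i'\right)^{-1}\left(\frac1n\sum_{i=1}^nz_i\left(y_i - x_i'\beta\right)\right).$$
This is 2SLS!

55 GMM - Nonlinear Case

Suppose that we are given a model
$$E[h(w_i, \theta)] = 0,$$
where $h$ is an $r \times 1$ vector, and $\theta$ is a $q \times 1$ vector with $r > q$. Method of moments estimation is impossible because there is in general no $\theta$ that solves $\frac1n\sum_{i=1}^nh(w_i, \theta) = 0$. We minimize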
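$$Q_n(\theta) = \left(\frac1n\sum_ih(w_i, \theta)\right)'W_n\left(\frac1n\sum_ih(w_i, \theta)\right)$$
instead. We will derive the asymptotic distribution under the assumption that $\hat\theta$ is consistent.

55.1 Asymptotic Distribution

The FOC is given by
$$0 = \left(\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}\right)'W_n\left(\frac1n\sum_ih(w_i, \hat\theta)\right).$$
Using the mean value theorem, write the last term as
$$\frac1n\sum_ih(w_i, \hat\theta) = \frac1n\sum_ih(w_i, \theta) + \left(\frac1n\sum_i\frac{\partial h(w_i, \theta^*)}{\partial\theta'}\right)\left(\hat\theta - \theta\right),$$
where $\theta^*$ lies between $\hat\theta$ and $\theta$ (the theorem is applied row by row, so $\theta^*$ may differ across rows). We then have
$$0 = \left(\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}\right)'W_n\left(\frac1n\sum_ih(w_i, \theta)\right) + \left(\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}\right)'W_n\left(\frac1n\sum_i\frac{\partial h(w_i, \theta^*)}{\partial\theta'}\right)\left(\hat\theta - \theta\right),$$
from which we obtain
$$\sqrt n\left(\hat\theta - \theta\right) = -\left[\left(\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}\right)'W_n\left(\frac1n\sum_i\frac{\partial h(w_i, \theta^*)}{\partial\theta'}\right)\right]^{-1}\left(\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}\right)'W_n\frac{1}{\sqrt n}\sum_ih(w_i, \theta). \tag{6}$$
Assuming that
$$\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'} - \frac1n\sum_i\frac{\partial h(w_i, \theta)}{\partial\theta'} \xrightarrow{p} 0, \qquad \frac1n\sum_i\frac{\partial h(w_i, \theta^*)}{\partial\theta'} - \frac1n\sum_i\frac{\partial h(w_i, \theta)}{\partial\theta'} \xrightarrow{p} 0,$$
we can infer that
$$\left(\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}\right)'W_n\left(\frac1n\sum_i\frac{\partial h(w_i, \theta^*)}{\partial\theta'}\right) \xrightarrow{p} G_0'W_0G_0,$$
where
$$G_0 = E\left[\frac{\partial h(w_i, \theta)}{\partial\theta'}\right], \qquad W_0 = \operatorname{plim}W_n.$$
It follows that
$$\left[\left(\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}\right)'W_n\left(\frac1n\sum_i\frac{\partial h(w_i, \theta^*)}{\partial\theta'}\right)\right]^{-1}\left(\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}\right)'W_n \xrightarrow{p} \left(G_0'W_0G_0\right)^{-1}G_0'W_0. \tag{7}$$
Because $E[h(w_i, \theta)] = 0$, we have by the CLT that
$$\frac{1}{\sqrt n}\sum_ih(w_i, \theta) \xrightarrow{d} N(0, S_0), \tag{8}$$
where
$$S_0 = E\left[h(w_i, \theta)h(w_i, \theta)'\right].$$
It follows that
$$\left(\frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}\right)'W_n\frac{1}{\sqrt n}\sum_ih(w_i, \theta) \xrightarrow{d} N\left(0, G_0'W_0S_0W_0G_0\right).$$
Combining (6)-(8) (by Slutsky's theorem), we conclude that
$$\sqrt n\left(\hat\theta - \theta\right) \xrightarrow{d} N\left(0, \left(G_0'W_0G_0\right)^{-1}G_0'W_0S_0W_0G_0\left(G_0'W_0G_0\right)^{-1}\right).$$

55.2 Optimal Weight Matrix

Given that $W_n$, hence $W_0$, can be chosen by the econometrician, we can ask what the optimal choice would be. It can be shown that, if we choose $W_0 = S_0^{-1}$, then the asymptotic variance is minimized. If $W_0 = S_0^{-1}$, then the asymptotic variance formula simplifies to
$$\left(G_0'S_0^{-1}G_0\right)^{-1}.$$

55.3 Two Step Estimation

How do we actually implement the above idea?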
The trick is to recognize that the asymptotic variance only depends on the probability limit of $W_n$ and that $W_n$ is allowed to be stochastic. Let us assume that there is a consistent estimator $\tilde\theta$. We can then see that $S_0$ can be estimated by noting
$$S_0 = E\left[h(w_i, \theta)h(w_i, \theta)'\right] = \operatorname{plim}\frac1n\sum_ih(w_i, \theta)h(w_i, \theta)' = \operatorname{plim}\frac1n\sum_ih(w_i, \tilde\theta)h(w_i, \tilde\theta)'.$$

Remark 23. The last equality requires some justification, i.e., we need to show that
$$\frac1n\sum_ih(w_i, \tilde\theta)h(w_i, \tilde\theta)' - \frac1n\sum_ih(w_i, \theta)h(w_i, \theta)' \xrightarrow{p} 0.$$
We have seen in the discussion of MLE how this can be done.

Therefore, if we choose our weight matrix to be
$$W_n = \left(\frac1n\sum_ih(w_i, \tilde\theta)h(w_i, \tilde\theta)'\right)^{-1},$$
then we are all set. The question is where we find such a $\tilde\theta$. We usually find it by a preliminary GMM that minimizes
$$\left(\frac1n\sum_ih(w_i, \theta)\right)'I_r\left(\frac1n\sum_ih(w_i, \theta)\right) = \left(\frac1n\sum_ih(w_i, \theta)\right)'\left(\frac1n\sum_ih(w_i, \theta)\right),$$
where $I_r$ is the $r \times r$ identity matrix, although we can choose any other weight matrix. So, here is the summary:

1. Minimize
$$\left(\frac1n\sum_ih(w_i, \theta)\right)'A_n\left(\frac1n\sum_ih(w_i, \theta)\right)$$
for an arbitrary positive definite $A_n$. Call the minimizer $\tilde\theta$, and let
$$W_n = \left(\frac1n\sum_ih(w_i, \tilde\theta)h(w_i, \tilde\theta)'\right)^{-1}.$$
2. Minimize
$$\left(\frac1n\sum_ih(w_i, \theta)\right)'W_n\left(\frac1n\sum_ih(w_i, \theta)\right).$$

55.4 Estimation of Asymptotic Variance

Suppose that we estimated the optimal GMM estimator $\hat\theta$. How do we estimate the asymptotic variance? Noting that the asymptotic variance is
$$\left(G_0'S_0^{-1}G_0\right)^{-1},$$
we can estimate it by
$$\left(\widehat G'\widehat S^{-1}\widehat G\right)^{-1},$$
where
$$\widehat G = \frac1n\sum_i\frac{\partial h(w_i, \hat\theta)}{\partial\theta'}, \qquad \widehat S = \frac1n\sum_ih(w_i, \hat\theta)h(w_i, \hat\theta)'.$$

Lecture Note 10: Hypothesis Test

56 Elementary Decision Theory

We are given a statistical model with an observation vector $X$ whose distribution depends on a parameter $\theta$, which would be understood to be a specification of the true state of nature. The parameter $\theta$ ranges over a known parameter space $\Theta$. The decision maker has available a set $A$ of actions, which is called the action space. For example, $A = \{0, 1\}$, where 0 is "accept $H_0$" and 1 is "reject $H_0$". We are given a loss function $l(\theta, a)$.
In the case of testing, we may take $l(\theta, a) = 0$ if the decision is correct and $l(\theta, a) = 1$ if it is incorrect. We define a decision rule $\delta$ to be a mapping from the sample space to $A$. If $X$ is observed, then we take the action $\delta(X)$. Our loss is then the random variable $l(\theta, \delta(X))$. We define the risk to be
$$R(\theta, \delta) = E\left[l(\theta, \delta(X))\right] = \int l(\theta, \delta(x))f(x \mid \theta)\,dx,$$
where $f(x \mid \theta)$ denotes the pdf of $X$. A procedure $\delta$ improves a procedure $\delta'$ iff $R(\theta, \delta) \le R(\theta, \delta')$ for all $\theta$ (with strict inequality for some $\theta$). A procedure $\delta$ is admissible if no other $\delta'$ improves $\delta$. We may want to choose $\delta$ such that the worst possible risk $\sup_\theta R(\theta, \delta)$ is minimized. If $\delta^*$ is such that
$$\sup_\theta R(\theta, \delta^*) = \inf_\delta\sup_\theta R(\theta, \delta),$$
then $\delta^*$ is called the minimax procedure. The Bayes risk is
$$r(\delta) = \int R(\theta, \delta)\pi(\theta)\,d\theta,$$
which starts from weights $\pi(\theta)$ such that $\int\pi(\theta)\,d\theta = 1$. Viewing $\theta$ as a random variable with pdf $\pi(\theta)$, we can write
$$r(\delta) = E\left[R(\theta, \delta)\right].$$
A Bayesian would try to minimize the Bayes risk. Because
$$r(\delta) = \int R(\theta, \delta)\pi(\theta)\,d\theta = \iint l(\theta, \delta(x))f(x \mid \theta)\pi(\theta)\,dx\,d\theta = \int\left[\int l(\theta, \delta(x))\frac{f(x \mid \theta)\pi(\theta)}{f(x)}\,d\theta\right]f(x)\,dx,$$
where
$$f(x) = \int f(x \mid \theta)\pi(\theta)\,d\theta,$$
we can write
$$r(\delta) = E\left[r(\delta \mid X)\right],$$
where
$$r(\delta \mid x) = \int l(\theta, \delta(x))\frac{f(x \mid \theta)\pi(\theta)}{f(x)}\,d\theta = \int l(\theta, \delta(x))f(\theta \mid x)\,d\theta = E\left[l(\theta, \delta(x)) \mid X = x\right].$$
Therefore, if we choose $\delta(x)$ such that $r(\delta \mid x)$ is minimized for each $x$, then the Bayes risk is minimized. (If $\Theta$ is discrete, we should replace the integral by a summation.) In a very simple testing context, the Bayes procedure with 0-1 loss would look like the following. Let $a_0$ denote the action of accepting $H_0$, and let $a_1$ denote the action of accepting $H_1$. Then the Bayes rule says that we should choose $a_0$ if $r(a_0 \mid x) < r(a_1 \mid x)$, and $a_1$ if $r(a_1 \mid x) < r(a_0 \mid x)$. Let $\Theta$ denote $\{\theta_0, \theta_1\}$, where $\theta_0$ is "$H_0$ is correct" and $\theta_1$ is "$H_1$ is correct".
Because
$$r(\delta \mid x) = \sum_{j=0,1}l(\theta_j, \delta(x))f(\theta_j \mid x) = \sum_{j=0,1}l(\theta_j, \delta(x))\frac{f(x \mid \theta_j)\pi(\theta_j)}{f(x)} = \frac{l(\theta_0, \delta(x))f(x \mid \theta_0)\pi(\theta_0) + l(\theta_1, \delta(x))f(x \mid \theta_1)\pi(\theta_1)}{f(x)},$$
we have $r(a_0 \mid x) < r(a_1 \mid x)$ if and only if
$$\frac{l(\theta_0, a_0)f(x \mid \theta_0)\pi(\theta_0) + l(\theta_1, a_0)f(x \mid \theta_1)\pi(\theta_1)}{f(x)} < \frac{l(\theta_0, a_1)f(x \mid \theta_0)\pi(\theta_0) + l(\theta_1, a_1)f(x \mid \theta_1)\pi(\theta_1)}{f(x)}.$$
Under 0-1 loss, $l(\theta_0, a_0) = l(\theta_1, a_1) = 0$ and $l(\theta_0, a_1) = l(\theta_1, a_0) = 1$, so, multiplying through by $f(x) > 0$, this is equivalent to
$$f(x \mid \theta_1)\pi(\theta_1) < f(x \mid \theta_0)\pi(\theta_0),$$
or
$$\frac{f(x \mid \theta_1)\pi(\theta_1)}{f(x \mid \theta_0)\pi(\theta_0)} < 1.$$

57 Tests of Statistical Hypothesis

Example 9. Assume that $X_1, \ldots, X_n$ are i.i.d. $N(\theta, 10)$. We are going to consider the null hypothesis $H_0: \theta \in M_0$ against the alternative $H_1: \theta \in M_1$, where $M_0$ and $M_1$ are some subsets of the one-dimensional Euclidean space. We believe that $\theta \in M_0$ or $\theta \in M_1$. We can either accept the null or reject the null. When we reject the null even when the null is true, we are making a Type I error. When we accept the null even when the alternative is true, we are making a Type II error. Our objective is to make a decision in such a way as to minimize the probability of either error.

Our decision will hinge on the realization of $X_1, \ldots, X_n$. Suppose that we are going to reject the null if $(X_1, \ldots, X_n) \in C$, which implicitly will lead us to accept the alternative. The set $C$ is sometimes called the critical region.

Definition 16. A statistical hypothesis is an assertion about the distribution of one or more random variables. If the statistical hypothesis completely specifies the distribution, it is called a simple statistical hypothesis; if it does not, it is called a composite statistical hypothesis.

Example 10. Assume that $X_1, \ldots, X_n$ are i.i.d. $N(\theta, 10)$. A hypothesis $H_0: \theta = 75$ is a simple hypothesis, whereas $H_0: \theta \le 75$ is a composite hypothesis.

Definition 17. A test of a statistical hypothesis is a rule which, when the experimental sample values have been obtained, leads to a decision to accept or to reject the hypothesis under consideration.
Definition 18. Let $C$ be that subset of the sample space which, in accordance with a prescribed test, leads to the rejection of the hypothesis under consideration. Then $C$ is called the critical region of the test.

Definition 19. The power function of a test of a statistical hypothesis $H_0$ against $H_1$ is the function, defined for all distributions under consideration, which yields the probability that the sample point falls in the critical region $C$ of the test, that is, a function that yields the probability of rejecting the hypothesis under consideration. The value of the power function at a parameter point is called the power of the test at that point.

Definition 20. Let $H_0$ denote a hypothesis that is to be tested against an alternative $H_1$ in accordance with a prescribed test. The significance level of the test is the maximum value of the power function of the test when $H_0$ is true.

Example 11. Suppose that $n = 10$ in the model of Example 9, so that $\bar X \sim N(\theta, 10/10) = N(\theta, 1)$. Suppose that $H_0: \theta \le 75$ and $H_1: \theta > 75$. Notice that we are dealing with composite hypotheses. Suppose that we adopt a test procedure in which we reject the null only when
$$\bar X > 76.645.$$
In this test, the critical region $C$ equals
$$\left\{(X_1, \ldots, X_{10}): \bar X > 76.645\right\}.$$
The power function of the test is
$$p(\theta) = P\left[\bar X > 76.645\right] = \Pr\left[N(\theta, 1) > 76.645\right] = \Pr\left[Z > 76.645 - \theta\right] = 1 - \Phi(76.645 - \theta),$$
where $Z \sim N(0, 1)$ and $\Phi$ is the c.d.f. of $N(0, 1)$. Notice that this power function is increasing in $\theta$. In the set $\{\theta: \theta \le 75\}$, the power function is maximized when $\theta = 75$, at which it equals
$$1 - \Phi(1.645) \approx 0.05.$$
Thus, the significance level of the test equals 5%.

58 Additional Comments about Statistical Tests

Suppose that $H_0: \theta = 75$. If the alternative takes the form $H_1: \theta > 75$ (or $H_1: \theta < 75$), we call such an alternative a one-sided hypothesis. If instead it takes the form $H_1: \theta \ne 75$, we call it a two-sided hypothesis.

Example 12. Now suppose that the pair of hypotheses is $H_0: \theta = \theta_0$ vs. $H_1: \theta > \theta_0$. Assume that $\sigma^2$ and $n$ are known. A commonly used test rejects the null if and only if $\frac{\bar X - \theta_0}{\sigma/\sqrt n}$
$\ge c$ for some $c$, where $c$ is chosen such that the significance level of the test equals some prechosen value. Assume that it has been decided to set the significance level at 5%, a common choice. Noting that, under $H_0$,
$$\frac{\bar X - \theta_0}{\sigma/\sqrt n} \sim N(0, 1),$$
it suffices to find $c$ such that
$$\Pr[Z \ge c] = 0.05,$$
where $Z$ is a standard normal random variable. From the normal distribution table, it can easily be seen that we want to set $c = 1.645$.

Example 13. What would happen if we now have $H_1: \theta \ne \theta_0$? A common test rejects the null if and only if
$$\left|\frac{\bar X - \theta_0}{\sigma/\sqrt n}\right| \ge c,$$
where $c$ is again chosen so as to have the significance level of the test equal to some prechosen value. Assuming that we want the significance level of the test to equal 5%, it can easily be seen that we want to set $c = 1.96$.

Example 14. What would happen if we now have the same two-sided alternative, but do not know $\sigma^2$? A common procedure is to reject the null iff
$$\left|\frac{\bar X - \theta_0}{s/\sqrt n}\right| \ge c,$$
where $s$ is the sample standard deviation:
$$s^2 = \frac{1}{n-1}\sum_i\left(X_i - \bar X\right)^2.$$
Because the ratio has a $t(n-1)$ distribution under $H_0$, we want to set $c$ equal to the 97.5th percentile of the $t(n-1)$ distribution.

59 Certain Best Tests

Remark 24. The terms "test" and "critical region" can be used interchangeably: a test specifies a critical region; but it can also be said that a choice of a critical region defines a test.

Let $f(x; \theta)$ denote the p.d.f. of a random vector $X$. Consider the two hypotheses $H_0: \theta = \theta_0$ vs. $H_1: \theta = \theta_1$. We have $\Theta = \{\theta_0, \theta_1\}$.

Definition 21. Let $C$ denote a subset of the sample space. Then $C$ is called the best critical region of size $\alpha$ for testing $H_0$ against $H_1$ if, for every subset $A$ of the sample space such that $\Pr[X \in A; H_0] = \alpha$:

(a) $\Pr[X \in C; H_0] = \alpha$;

(b) $\Pr[X \in C; H_1] \ge \Pr[X \in A; H_1]$.

In effect, the best critical region maximizes the power of the test while keeping the significance level of the test equal to $\alpha$.

Theorem 68 (Neyman-Pearson Theorem). Let $X$ denote a random vector with p.d.f. $f(x; \theta) = L(\theta; x)$. Assume that $H_0: \theta = \theta_0$ and $H_1: \theta = \theta_1$.
Let
$$C \equiv \left\{x: \frac{L(\theta_1; x)}{L(\theta_0; x)} \ge k\right\},$$
where $k$ is chosen in such a way that
$$P[X \in C; H_0] = \alpha.$$
Then $C$ is the best critical region of size $\alpha$ for testing $H_0$ against the alternative $H_1$.

Proof. Assume that $A$ is another critical region of size $\alpha$. We want to show that
$$\int_Cf(x; \theta_1)\,dx \ge \int_Af(x; \theta_1)\,dx.$$
By using the familiar indicator function notation, we can rewrite the inequality as
$$\int\left(I_C(x) - I_A(x)\right)f(x; \theta_1)\,dx \ge 0.$$
It suffices to show that
$$\left(I_C(x) - I_A(x)\right)f(x; \theta_1) \ge k\left(I_C(x) - I_A(x)\right)f(x; \theta_0). \tag{9}$$
Notice that, if (9) holds, it follows that
$$\int\left(I_C(x) - I_A(x)\right)f(x; \theta_1)\,dx \ge k\int\left(I_C(x) - I_A(x)\right)f(x; \theta_0)\,dx.$$
But since
$$\int\left(I_C(x) - I_A(x)\right)f(x; \theta_0)\,dx = \int_Cf(x; \theta_0)\,dx - \int_Af(x; \theta_0)\,dx = \alpha - \alpha = 0,$$
we have the desired conclusion. To verify (9), notice that $I_C - I_A$ equals 1, 0, or $-1$. When $I_C - I_A = 0$, both sides of (9) are zero, so (9) obviously holds. When $I_C - I_A = 1$, we have $x \in C$, so $f(x; \theta_1) \ge kf(x; \theta_0)$, so that
$$\left(I_C(x) - I_A(x)\right)f(x; \theta_1) \ge k\left(I_C(x) - I_A(x)\right)f(x; \theta_0).$$
When $I_C - I_A = -1$, we have $x \notin C$, so $f(x; \theta_1) < kf(x; \theta_0)$, so that
$$\left(I_C(x) - I_A(x)\right)f(x; \theta_1) = -f(x; \theta_1) > -kf(x; \theta_0) = k\left(I_C(x) - I_A(x)\right)f(x; \theta_0).$$
We thus conclude that (9) holds for every $x$.

Example 15. Consider $X_1, \ldots, X_n$ i.i.d. $N(\theta, \sigma^2)$. We assume that $\sigma^2$ is known. We have $H_0: \theta = \theta_0$ vs. $H_1: \theta = \theta_1$ with $\theta_0 < \theta_1$. Now,
$$\frac{L(\theta_1; x_1, \ldots, x_n)}{L(\theta_0; x_1, \ldots, x_n)} = \frac{\left(1/\sqrt{2\pi\sigma^2}\right)^n\exp\left[-\sum_i(x_i - \theta_1)^2/(2\sigma^2)\right]}{\left(1/\sqrt{2\pi\sigma^2}\right)^n\exp\left[-\sum_i(x_i - \theta_0)^2/(2\sigma^2)\right]} = \exp\left[\frac{(\theta_1 - \theta_0)\sum_ix_i}{\sigma^2} - \frac{n\left(\theta_1^2 - \theta_0^2\right)}{2\sigma^2}\right].$$
The best critical region $C$ takes the form
$$\exp\left[\frac{(\theta_1 - \theta_0)\sum_ix_i}{\sigma^2} - \frac{n\left(\theta_1^2 - \theta_0^2\right)}{2\sigma^2}\right] \ge k$$
for some $k$. In other words, since $\theta_1 - \theta_0 > 0$, $C$ takes the form $\bar x \ge c$ for some $c$. We can find the value of $c$ easily from the standard normal distribution table.

60 Uniformly Most Powerful Test

In this section, we consider the problem of testing a simple null against a composite alternative. Note that a composite hypothesis may be viewed as a collection of simple hypotheses.
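Example 15 can be checked numerically, and the check also previews the idea of this section. The Python sketch below is a minimal illustration under hypothetical values ($\theta_0 = 0$, $\sigma = 1$, $n = 25$, $\alpha = 0.05$, none of which come from these notes), using only the standard library's `statistics.NormalDist`; the function names are made up for illustration. It computes the cutoff $c$ of the region $\bar x \ge c$ and the power against several simple alternatives $\theta_1 > \theta_0$. Because $c$ depends only on $\theta_0$, $\sigma$, $n$, and $\alpha$, the same region is best against every such $\theta_1$.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal N(0, 1)


def np_cutoff(theta0: float, sigma: float, n: int, alpha: float) -> float:
    """Cutoff c with Pr[xbar >= c; theta0] = alpha, using xbar ~ N(theta0, sigma^2 / n)."""
    return theta0 + Z.inv_cdf(1 - alpha) * sigma / sqrt(n)


def power(theta: float, c: float, sigma: float, n: int) -> float:
    """Pr[xbar >= c] when the true mean is theta."""
    return 1 - Z.cdf((c - theta) * sqrt(n) / sigma)


def log_lr(xbar: float, theta0: float, theta1: float, sigma: float, n: int) -> float:
    """log[L(theta1; x) / L(theta0; x)] from Example 15; the data enter only through xbar."""
    return ((theta1 - theta0) * n * xbar - n * (theta1**2 - theta0**2) / 2) / sigma**2


# Hypothetical setting, chosen only for illustration.
theta0, sigma, n, alpha = 0.0, 1.0, 25, 0.05
c = np_cutoff(theta0, sigma, n, alpha)

for theta1 in (0.2, 0.5, 1.0):
    # The region {xbar >= c} is the same for every theta1 > theta0; only its power changes.
    print(f"theta1 = {theta1}: reject if xbar >= {c:.4f}, power = {power(theta1, c, sigma, n):.4f}")
```

Choosing some `theta1` below `theta0` instead flips the sign of the slope of `log_lr` in `xbar`, so the best region becomes $\bar x \le c$; this is the mechanism behind Example 17.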
Definition 22. The critical region $C$ is a uniformly most powerful critical region of size $\alpha$ for testing a simple $H_0$ against a composite $H_1$ if the set $C$ is a best critical region of size $\alpha$ for testing $H_0$ against each simple hypothesis in $H_1$. A test defined by this critical region is called a uniformly most powerful test with significance level $\alpha$.

Example 16. Assume that $X_1, \ldots, X_n$ are i.i.d. $N(0, \theta)$ random variables. We want to test $H_0: \theta = \theta'$ against $H_1: \theta > \theta'$. We first consider a simple alternative $H_1: \theta = \theta''$, where $\theta'' > \theta'$. The best critical region takes the form
$$k \le \frac{\left(1/\sqrt{2\pi\theta''}\right)^n\exp\left[-\sum_ix_i^2/(2\theta'')\right]}{\left(1/\sqrt{2\pi\theta'}\right)^n\exp\left[-\sum_ix_i^2/(2\theta')\right]} = \left(\frac{\theta'}{\theta''}\right)^{n/2}\exp\left[\frac{\theta'' - \theta'}{2\theta'\theta''}\sum_ix_i^2\right].$$
In other words, because $\theta'' > \theta'$, the best critical region takes the form $\sum_ix_i^2 \ge c$ for some $c$, which is determined by the size of the test. Notice that the same argument holds for any $\theta'' > \theta'$. It thus follows that $\sum_ix_i^2 \ge c$ is the uniformly most powerful test of $H_0$ against $H_1$!

Example 17. Let $X_1, \ldots, X_n$ be i.i.d. $N(\theta, 1)$. There exists no uniformly most powerful test of $H_0: \theta = \theta'$ against $H_1: \theta \ne \theta'$. Consider $\theta'' \ne \theta'$. The best critical region for testing $\theta = \theta'$ against $\theta = \theta''$ takes the form
$$\frac{\left(1/\sqrt{2\pi}\right)^n\exp\left[-\sum_i(x_i - \theta'')^2/2\right]}{\left(1/\sqrt{2\pi}\right)^n\exp\left[-\sum_i(x_i - \theta')^2/2\right]} \ge k$$
or
$$\exp\left[(\theta'' - \theta')\sum_ix_i - \frac{n\left((\theta'')^2 - (\theta')^2\right)}{2}\right] \ge k.$$
Thus, when $\theta'' > \theta'$, the best critical region takes the form
$$\sum_ix_i \ge c,$$
and when $\theta'' < \theta'$, it takes the form
$$\sum_ix_i \le c.$$
It thus follows that there exists no uniformly most powerful test.

61 Likelihood Ratio Test

We can intuitively modify and extend the notion of using the ratio of the likelihoods to provide a method of constructing a test of a composite null against a composite alternative, or of constructing a test of a simple null against some composite alternative where no uniformly most powerful test exists.

Idea: Suppose that $X \sim f(x; \theta) = L(\theta; x)$. The random vector $X$ is not necessarily one-dimensional, and the parameter $\theta$ is not necessarily one-dimensional, either. Suppose we are given two sets of parameters $\omega$ and $\Omega$ where $\omega \subset \Omega$.
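We are given
$$H_0: \theta \in \omega, \qquad H_1: \theta \in \Omega - \omega.$$
The likelihood ratio test is based on the ratio
$$\frac{\sup_{\theta\in\Omega}L(\theta; x)}{\sup_{\theta\in\omega}L(\theta; x)}.$$
If this ratio is bigger than $k$, say, the null is rejected. Otherwise, we do not reject the null. The number $k$ is chosen in such a way that the size of the test equals some prechosen value, say $\alpha$.

Example 18. Suppose that $X_1, \ldots, X_n$ are i.i.d. $N(\theta, \sigma^2)$. We do not know $\sigma^2$ except that it is positive. We have
$$H_0: \theta = 0, \qquad H_1: \theta \ne 0.$$
Formally, we can write
$$\omega = \left\{(\theta, \sigma^2): \theta = 0, \sigma^2 > 0\right\}$$
and
$$\Omega = \left\{(\theta, \sigma^2): -\infty < \theta < \infty, \sigma^2 > 0\right\}.$$
Now, we have to calculate
$$\sup_{(\theta,\sigma^2)\in\omega}L(\theta, \sigma^2; x_1, \ldots, x_n) = \sup_{(\theta,\sigma^2)\in\omega}\left(\frac{1}{2\pi\sigma^2}\right)^{n/2}\exp\left[-\frac{\sum_i(x_i - \theta)^2}{2\sigma^2}\right] \tag{10}$$
and
$$\sup_{(\theta,\sigma^2)\in\Omega}L(\theta, \sigma^2; x_1, \ldots, x_n) = \sup_{(\theta,\sigma^2)\in\Omega}\left(\frac{1}{2\pi\sigma^2}\right)^{n/2}\exp\left[-\frac{\sum_i(x_i - \theta)^2}{2\sigma^2}\right]. \tag{11}$$
In these calculations, we can consider maximizing
$$\log L = -\frac n2\log(2\pi) - \frac n2\log\sigma^2 - \frac{\sum_i(x_i - \theta)^2}{2\sigma^2}$$
instead. For (10), we set $\theta = 0$ and differentiate with respect to $\sigma^2$, obtaining
$$-\frac{n}{2\sigma^2} + \frac{1}{2\sigma^4}\sum_ix_i^2 = 0.$$
We thus have
$$\sup_{(\theta,\sigma^2)\in\omega}L(\theta, \sigma^2; x_1, \ldots, x_n) = L\left(0, \frac1n\sum_ix_i^2; x_1, \ldots, x_n\right) = \left(\frac{1}{2\pi\sum_ix_i^2/n}\right)^{n/2}\exp\left(-\frac n2\right) = \left(\frac{1}{2\pi e\sum_ix_i^2/n}\right)^{n/2}.$$
For (11), we set the partial derivatives with respect to $\theta$ and $\sigma^2$ equal to zero:
$$\frac{1}{\sigma^2}\sum_i(x_i - \theta) = 0 \quad\text{and}\quad -\frac{n}{2\sigma^2} + \frac{1}{2\sigma^4}\sum_i(x_i - \theta)^2 = 0.$$
We then obtain
$$\sup_{(\theta,\sigma^2)\in\Omega}L(\theta, \sigma^2; x_1, \ldots, x_n) = L\left(\bar x, \frac1n\sum_i(x_i - \bar x)^2; x_1, \ldots, x_n\right) = \left(\frac{1}{2\pi e\sum_i(x_i - \bar x)^2/n}\right)^{n/2}.$$
Thus, the likelihood ratio test would be based on
$$\Lambda = \left(\frac{\sum_ix_i^2/n}{\sum_i(x_i - \bar x)^2/n}\right)^{n/2}.$$
It is important to note that the numerator and the denominator inside the parentheses are the MLEs of $\sigma^2$ under $\omega$ (restricted) and under $\Omega$ (unrestricted), respectively. This important observation will be used often in the classical linear regression hypothesis testing setup. The likelihood ratio test would reject the null iff $\Lambda$ is bigger than a certain threshold. This is equivalent to rejection when
$$\frac{\sum_ix_i^2/n}{\sum_i(x_i - \bar x)^2/n}$$
is big. Because
$$\frac1n\sum_ix_i^2 = \frac1n\sum_i(x_i - \bar x)^2 + \bar x^2,$$
the test is equivalent to rejection when
$$\frac{|\bar x|}{\sqrt{\sum_i(x_i - \bar x)^2/\left(n(n-1)\right)}}$$
is big, which has a $|t(n-1)|$ distribution under $H_0$!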
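The equivalence between the likelihood ratio and the $t$ statistic in Example 18 rests on the identity $\Lambda = \left(1 + t^2/(n-1)\right)^{n/2}$, where $t = \bar x/(s/\sqrt n)$, which follows from $\sum_ix_i^2 = \sum_i(x_i - \bar x)^2 + n\bar x^2$. The Python sketch below checks the identity numerically on a hypothetical sample; the data values and function names are made up for illustration and do not come from these notes.

```python
from math import sqrt
from statistics import mean
from typing import Sequence


def lr_statistic(x: Sequence[float]) -> float:
    """Lambda from Example 18 for H0: theta = 0 with sigma^2 unknown."""
    n = len(x)
    xbar = mean(x)
    sigma2_restricted = sum(v * v for v in x) / n              # MLE of sigma^2 under omega
    sigma2_unrestricted = sum((v - xbar) ** 2 for v in x) / n  # MLE of sigma^2 under Omega
    return (sigma2_restricted / sigma2_unrestricted) ** (n / 2)


def t_statistic(x: Sequence[float]) -> float:
    """t = xbar / (s / sqrt(n)), with s^2 = sum (x_i - xbar)^2 / (n - 1)."""
    n = len(x)
    xbar = mean(x)
    s2 = sum((v - xbar) ** 2 for v in x) / (n - 1)
    return xbar / sqrt(s2 / n)


# Hypothetical sample, made up for illustration.
x = [0.8, -0.3, 1.5, 0.2, 1.1, -0.6, 0.9, 0.4]
n = len(x)
lam = lr_statistic(x)
t = t_statistic(x)

# Lambda is a strictly increasing function of t^2, so "Lambda large" <=> "|t| large".
print(lam, (1 + t**2 / (n - 1)) ** (n / 2))
```

Because $\Lambda$ is monotone in $|t|$, picking the threshold $k$ for a 5% test is the same as rejecting when $|t|$ exceeds the 97.5th percentile of $t(n-1)$, as in Example 14.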
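62 Asymptotic Tests

Suppose that we have $X_i \sim$ i.i.d. $f(x; \theta)$, where $\dim(\theta) = k$. Let
$$\hat\theta = \arg\max_c\sum_{i=1}^n\log f(X_i; c)$$
denote the MLE. We would like to test $H_0: \theta = \theta_0$ against $H_1: \theta \ne \theta_0$.

The first test is the Wald test. Tentatively assume that $k = 1$. Recall that
$$\sqrt n\left(\hat\theta - \theta\right) \xrightarrow{d} N\left(0, I(\theta)^{-1}\right).$$
It follows that we should have
$$\frac{n\left(\hat\theta - \theta\right)^2}{I(\theta)^{-1}} \xrightarrow{d} \chi^2(1).$$
In most cases, $I(\theta)$ is a continuous function of $\theta$, so $I(\hat\theta)^{-1}$ will be consistent for $I(\theta)^{-1}$, and
$$\frac{n\left(\hat\theta - \theta\right)^2}{I(\hat\theta)^{-1}} \xrightarrow{d} \chi^2(1).$$
Under the null, we should have
$$\frac{n\left(\hat\theta - \theta_0\right)^2}{I(\hat\theta)^{-1}} \xrightarrow{d} \chi^2(1).$$
When $k$ is an arbitrary number, we have the generalization
$$n\left(\hat\theta - \theta_0\right)'I(\hat\theta)\left(\hat\theta - \theta_0\right) \xrightarrow{d} \chi^2(k).$$

The likelihood ratio test is based on the result that
$$2\left(\sum_{i=1}^n\log f(X_i; \hat\theta) - \sum_{i=1}^n\log f(X_i; \theta)\right) \xrightarrow{d} \chi^2(k).$$
Under the null, we should have
$$2\left(\sum_{i=1}^n\log f(X_i; \hat\theta) - \sum_{i=1}^n\log f(X_i; \theta_0)\right) \xrightarrow{d} \chi^2(k).$$

The score test is based on the result that
$$\frac1n\left(\sum_{i=1}^ns(X_i; \theta)\right)'I(\theta)^{-1}\left(\sum_{i=1}^ns(X_i; \theta)\right) \xrightarrow{d} \chi^2(k),$$
so that, under the null,
$$\frac1n\left(\sum_{i=1}^ns(X_i; \theta_0)\right)'I(\theta_0)^{-1}\left(\sum_{i=1}^ns(X_i; \theta_0)\right) \xrightarrow{d} \chi^2(k).$$
This test is sometimes called the Lagrange multiplier test because, if we want to maximize $\sum_{i=1}^n\log f(X_i; \theta)$ subject to the constraint $\theta = \theta_0$, and if we use the Lagrangian
$$\sum_{i=1}^n\log f(X_i; \theta) - \lambda'(\theta - \theta_0),$$
the first order conditions are
$$\sum_{i=1}^ns(X_i; \theta) = \lambda, \qquad \theta = \theta_0,$$
so
$$\lambda = \sum_{i=1}^ns(X_i; \theta_0).$$

63 Some Details

63.1 Wald Test of Linear Hypothesis

Let $\hat\theta$ be such that $\sqrt n(\hat\theta - \theta) \xrightarrow{d} N(0, V)$ for some $V$, which is consistently estimated by $\widehat V$. We are interested in testing
$$H_0: R\theta - r = 0, \qquad H_A: R\theta - r \ne 0,$$
where $R$ is $m \times k$ with $m \le k$. The test statistic is then given by
$$n\left(R\hat\theta - r\right)'\left[R\widehat VR'\right]^{-1}\left(R\hat\theta - r\right) = \left(R\hat\theta - r\right)'\left[R\frac{\widehat V}{n}R'\right]^{-1}\left(R\hat\theta - r\right).$$
Its asymptotic distribution under the null is $\chi^2(m)$.

63.2 Wald Test of Nonlinear Hypothesis

We are interested in testing
$$H_0: h(\theta) = 0, \qquad H_A: h(\theta) \ne 0,$$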
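Common sense suggests that we can take

$$\hat R = \frac{\partial h(\hat\theta)}{\partial \theta'}.$$

The idea can be understood via the delta method: because $\sqrt{n}(\hat\theta - \theta) \xrightarrow{d} N(0, V)$, we should have

$$\sqrt{n}\left(h(\hat\theta) - h(\theta)\right) \xrightarrow{d} N(0, RVR'),$$

and therefore

$$\sqrt{n}\,h(\hat\theta) \xrightarrow{d} N(0, RVR')$$

under $H_0$.

63.3 LR Test

We now assume that $\hat\theta$ is the MLE, i.e., $V = I(\theta)^{-1}$. We are interested in testing $H_0: h(\theta) = 0$ vs $H_A: h(\theta) \neq 0$, where $\dim(h) = m \le k$. We assume that $H_0$ can be equivalently written as $H_0: \theta = g(\alpha)$ for some $\alpha$, where $\dim(\alpha) = k - m = \dim(\theta) - \dim(h)$.

The LR test requires calculation of the restricted MLE $\tilde\theta$, i.e., the maximizer of $\sum_{i=1}^n \log f(X_i; c)$ subject to the restriction $h(c) = 0$. Given the alternative characterization, it suffices to maximize $\sum_{i=1}^n \log f(X_i; g(a))$ without any restriction. Let

$$\tilde\alpha = \arg\max_a \sum_{i=1}^n \log f(X_i; g(a)), \qquad \tilde\theta = g(\tilde\alpha).$$

We now calculate the LR test statistic by

$$2\left(\sum_{i=1}^n \log f(X_i;\hat\theta) - \sum_{i=1}^n \log f(X_i;\tilde\theta)\right) = 2\left(\sum_{i=1}^n \log f(X_i;\hat\theta) - \sum_{i=1}^n \log f(X_i; g(\tilde\alpha))\right).$$

Let $\theta_0 = g(\alpha_0)$ denote the true parameter under $H_0$. We note that

$$\sum_{i=1}^n \log f(X_i;\theta_0) = \sum_{i=1}^n \log f(X_i;\hat\theta) + \left(\sum_{i=1}^n \frac{\partial \log f(X_i;\hat\theta)}{\partial\theta'}\right)(\theta_0 - \hat\theta) + \frac{1}{2}(\theta_0 - \hat\theta)'\left(\sum_{i=1}^n \frac{\partial^2 \log f(X_i;\theta^*)}{\partial\theta\,\partial\theta'}\right)(\theta_0 - \hat\theta)$$

for some $\theta^*$ in between $\theta_0$ and $\hat\theta$. Because of the first order condition for $\hat\theta$, the second term on the right is zero. We therefore have

$$\begin{aligned}
2\left(\sum_{i=1}^n \log f(X_i;\hat\theta) - \sum_{i=1}^n \log f(X_i;\theta_0)\right)
&= -(\hat\theta - \theta_0)'\left(\sum_{i=1}^n \frac{\partial^2 \log f(X_i;\theta^*)}{\partial\theta\,\partial\theta'}\right)(\hat\theta - \theta_0) \\
&= \sqrt{n}(\hat\theta - \theta_0)'\left(-\frac{1}{n}\sum_{i=1}^n \frac{\partial^2 \log f(X_i;\theta^*)}{\partial\theta\,\partial\theta'}\right)\sqrt{n}(\hat\theta - \theta_0) \\
&= \sqrt{n}(\hat\theta - \theta_0)'\left(I(\theta_0) + o_p(1)\right)\sqrt{n}(\hat\theta - \theta_0) \\
&= \sqrt{n}(\hat\theta - \theta_0)'\, I(\theta_0)\,\sqrt{n}(\hat\theta - \theta_0) + o_p(1).
\end{aligned}$$

Likewise, we have

$$2\left(\sum_{i=1}^n \log f(X_i; g(\tilde\alpha)) - \sum_{i=1}^n \log f(X_i; g(\alpha_0))\right) = \sqrt{n}(\tilde\alpha - \alpha_0)'\, J(\alpha_0)\,\sqrt{n}(\tilde\alpha - \alpha_0) + o_p(1),$$

where

$$J(\alpha_0) = E\left[\frac{\partial \log f(X_i; g(\alpha_0))}{\partial\alpha}\,\frac{\partial \log f(X_i; g(\alpha_0))}{\partial\alpha'}\right].$$

But because

$$\frac{\partial \log f(X_i; g(\alpha_0))}{\partial\alpha'} = \frac{\partial \log f(X_i; g(\alpha_0))}{\partial\theta'}\,\frac{\partial g(\alpha_0)}{\partial\alpha'} = s(X_i;\theta_0)'\,G, \qquad G \equiv \frac{\partial g(\alpha_0)}{\partial\alpha'},$$

we have $J(\alpha_0) = G' I(\theta_0) G$. Now recall that

$$\sqrt{n}(\hat\theta - \theta_0) = I(\theta_0)^{-1}\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0) + o_p(1).$$

Similarly, we have

$$\begin{aligned}
\sqrt{n}(\tilde\alpha - \alpha_0) &= J(\alpha_0)^{-1}\frac{1}{\sqrt{n}}\sum_{i=1}^n \frac{\partial \log f(X_i; g(\alpha_0))}{\partial\alpha} + o_p(1) \\
&= \left[G'I(\theta_0)G\right]^{-1} G'\,\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0) + o_p(1).
\end{aligned}$$

Therefore, writing $\mathcal{I} = I(\theta_0)$ to avoid confusion with an identity matrix, we conclude that

$$\begin{aligned}
&2\left(\sum_{i=1}^n \log f(X_i;\hat\theta) - \sum_{i=1}^n \log f(X_i;\tilde\theta)\right) \\
&\quad= 2\left(\sum_{i=1}^n \log f(X_i;\hat\theta) - \sum_{i=1}^n \log f(X_i;\theta_0)\right) - 2\left(\sum_{i=1}^n \log f(X_i;g(\tilde\alpha)) - \sum_{i=1}^n \log f(X_i;g(\alpha_0))\right) \\
&\quad= \left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right)'\mathcal{I}^{-1}\left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right) - \left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right)' G\left[G'\mathcal{I}G\right]^{-1}G'\left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right) + o_p(1) \\
&\quad= \left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right)'\left[\mathcal{I}^{-1} - G\left[G'\mathcal{I}G\right]^{-1}G'\right]\left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right) + o_p(1),
\end{aligned}$$

where

$$\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0) \xrightarrow{d} N(0, \mathcal{I}).$$

It follows that

$$2\left(\sum_{i=1}^n \log f(X_i;\hat\theta) - \sum_{i=1}^n \log f(X_i;\tilde\theta)\right) \xrightarrow{d} \chi^2(m).$$

An intuition can be given in the following way. Consider

$$u'\left[\mathcal{I}^{-1} - G\left[G'\mathcal{I}G\right]^{-1}G'\right]u, \qquad u \sim N(0, \mathcal{I}).$$

Let $T$ be a square matrix such that $TT' = \mathcal{I}$, and write $\varepsilon = T^{-1}u$, so that $\varepsilon \sim N(0, I_k)$. Note that we can without loss of generality write $u = T\varepsilon$. We then have

$$\begin{aligned}
u'\left[\mathcal{I}^{-1} - G\left[G'\mathcal{I}G\right]^{-1}G'\right]u
&= \varepsilon'T'\left[\mathcal{I}^{-1} - G\left[G'\mathcal{I}G\right]^{-1}G'\right]T\varepsilon \\
&= \varepsilon'\left[T'(TT')^{-1}T - T'G\left[G'TT'G\right]^{-1}G'T\right]\varepsilon \\
&= \varepsilon'\left[I_k - X(X'X)^{-1}X'\right]\varepsilon,
\end{aligned}$$

where $X = T'G$ is a $k \times (k - m)$ matrix. Since $I_k - X(X'X)^{-1}X'$ is symmetric and idempotent with rank $m$, it follows that the quadratic form is distributed as $\chi^2(m)$.

63.4 LM Test

The score test is based on

$$\begin{aligned}
\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\tilde\theta)
&= \frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0) + \left(\frac{1}{n}\sum_{i=1}^n \frac{\partial s(X_i;\theta^*)}{\partial\theta'}\right)\sqrt{n}(\tilde\theta - \theta_0) \\
&= \frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0) - \mathcal{I}\sqrt{n}\left(g(\tilde\alpha) - g(\alpha_0)\right) + o_p(1) \\
&= \frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0) - \mathcal{I}G\sqrt{n}(\tilde\alpha - \alpha_0) + o_p(1) \\
&= \frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0) - \mathcal{I}G\left[G'\mathcal{I}G\right]^{-1}G'\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0) + o_p(1) \\
&= \left[I_k - \mathcal{I}G\left[G'\mathcal{I}G\right]^{-1}G'\right]\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0) + o_p(1),
\end{aligned}$$

for some $\theta^*$ in between $\tilde\theta$ and $\theta_0$. It follows that

$$\begin{aligned}
&\left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\tilde\theta)\right)'\mathcal{I}^{-1}\left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\tilde\theta)\right) \\
&\quad= \left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right)'\left[I_k - G\left[G'\mathcal{I}G\right]^{-1}G'\mathcal{I}\right]\mathcal{I}^{-1}\left[I_k - \mathcal{I}G\left[G'\mathcal{I}G\right]^{-1}G'\right]\left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right) + o_p(1) \\
&\quad= \left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right)'\left[\mathcal{I}^{-1} - G\left[G'\mathcal{I}G\right]^{-1}G'\right]\left(\frac{1}{\sqrt{n}}\sum_{i=1}^n s(X_i;\theta_0)\right) + o_p(1).
\end{aligned}$$

It follows that the score test is asymptotically equivalent to the LR test.

64 Problem Set

1. Let $X_1, \ldots, X_{10}$ be a random sample from $N(0, \sigma^2)$. Find a best critical region of size $\alpha = 5\%$ for testing $H_0: \sigma^2 = 1$ against $H_1: \sigma^2 = 2$.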
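Is this a best critical region for testing against $H_1: \sigma^2 = 4$? Against $H_1: \sigma^2 = \sigma_1^2 > 1$?

2. Let $X_1, \ldots, X_n$ be a random sample from a distribution with pdf $f(x;\theta) = \theta x^{\theta - 1}$, $0 < x < 1$, zero elsewhere. Show that the best critical region for testing $H_0: \theta = 1$ against $H_1: \theta = 2$ takes the form

$$\left\{(x_1, \ldots, x_n): \prod_{i=1}^n x_i \ge c\right\}.$$

3. Let $X_1, \ldots, X_n$ be a random sample from $N(\theta, 100)$. Find a best critical region of size $\alpha = 5\%$ for testing $H_0: \theta = 75$ against $H_1: \theta = 78$.

65 Test of Overidentifying Restrictions

Suppose that we are given a model

$$E[h(w_i;\theta)] = 0,$$

where $h$ is an $r \times 1$ vector and $\theta$ is a $q \times 1$ vector with $r > q$. We ask whether such a $\theta$ exists to begin with. To be more precise, our null hypothesis is

$$H_0: \text{there exists some } \theta \text{ such that } E[h(w_i;\theta)] = 0.$$

In order to test this hypothesis, a reasonable test statistic can be based on

$$Q_n(\hat\theta) = \left(\frac{1}{n}\sum_i h(w_i;\hat\theta)\right)' W_n\left(\frac{1}{n}\sum_i h(w_i;\hat\theta)\right),$$

where $W_n \xrightarrow{p} S_0^{-1}$, with $S_0$ the asymptotic variance of $n^{-1/2}\sum_i h(w_i;\theta_0)$, and $\hat\theta$ solves

$$\min_\theta Q_n(\theta) = \left(\frac{1}{n}\sum_i h(w_i;\theta)\right)' W_n\left(\frac{1}{n}\sum_i h(w_i;\theta)\right)$$

for the same $W_n$. It can be shown that

$$nQ_n(\hat\theta) \xrightarrow{d} \chi^2(r - q).$$

The proof is rather lengthy and consists of several steps. Throughout, $\theta_0$ denotes the value at which the moment condition holds under $H_0$.

Step 1. Because $S_0$ is a positive definite matrix, there exists $T_0$ such that

$$T_0 S_0 T_0' = I_r.$$

Observe that

$$S_0 = T_0^{-1}(T_0')^{-1} = (T_0'T_0)^{-1} \quad\Longrightarrow\quad S_0^{-1} = T_0'T_0.$$

Now, write

$$\begin{aligned}
nQ_n(\hat\theta) &= \left(\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right)' W_n\left(\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right) \\
&= \left(\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right)' T_0'(T_0')^{-1} W_n T_0^{-1} T_0\left(\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right) \\
&= \left(T_0\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right)'(T_0')^{-1} W_n T_0^{-1}\left(T_0\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right).
\end{aligned}$$

Note that

$$(T_0')^{-1} W_n T_0^{-1} \xrightarrow{p} (T_0')^{-1} S_0^{-1} T_0^{-1} = I_r.$$

Therefore, $nQ_n(\hat\theta)$ has the same limit distribution as

$$\left(T_0\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right)'\left(T_0\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right).$$

Step 2. In general, we learned that

$$\sqrt{n}(\hat\theta - \theta_0) = -\left[\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\hat\theta)}{\partial\theta'}\right)' W_n\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\tilde\theta)}{\partial\theta'}\right)\right]^{-1}\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\hat\theta)}{\partial\theta'}\right)' W_n\,\frac{1}{\sqrt{n}}\sum_i h(w_i;\theta_0)$$

for some $\tilde\theta$ in between $\hat\theta$ and $\theta_0$, where

$$\left[\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\hat\theta)}{\partial\theta'}\right)' W_n\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\tilde\theta)}{\partial\theta'}\right)\right]^{-1}\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\hat\theta)}{\partial\theta'}\right)' W_n \xrightarrow{p} \left(G_0'W_0G_0\right)^{-1}G_0'W_0$$

with $G_0 = E\left[\partial h(w_i;\theta_0)/\partial\theta'\right]$ and $W_0 = \operatorname{plim} W_n$, and

$$\frac{1}{\sqrt{n}}\sum_i h(w_i;\theta_0) \xrightarrow{d} N(0, S_0).$$

Using $W_n \xrightarrow{p} S_0^{-1}$, we can conclude that

$$\left[\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\hat\theta)}{\partial\theta'}\right)' W_n\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\tilde\theta)}{\partial\theta'}\right)\right]^{-1}\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\hat\theta)}{\partial\theta'}\right)' W_n \xrightarrow{p} \left(G_0'S_0^{-1}G_0\right)^{-1}G_0'S_0^{-1}.$$

Step 3. We now consider the distribution of

$$\begin{aligned}
\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta) &= \frac{1}{\sqrt{n}}\sum_i h(w_i;\theta_0) + \left(\frac{1}{\sqrt{n}}\sum_i \frac{\partial h(w_i;\tilde\theta)}{\partial\theta'}\right)(\hat\theta - \theta_0) \\
&= \frac{1}{\sqrt{n}}\sum_i h(w_i;\theta_0) + \left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\tilde\theta)}{\partial\theta'}\right)\sqrt{n}(\hat\theta - \theta_0)
\end{aligned} \tag{12}$$

for some $\tilde\theta$ in between $\hat\theta$ and $\theta_0$. As before, we will assume that

$$\frac{1}{n}\sum_i \frac{\partial h(w_i;\tilde\theta)}{\partial\theta'} - \frac{1}{n}\sum_i \frac{\partial h(w_i;\theta_0)}{\partial\theta'} \xrightarrow{p} 0,$$

which will imply that

$$\frac{1}{n}\sum_i \frac{\partial h(w_i;\tilde\theta)}{\partial\theta'} \xrightarrow{p} G_0.$$

Combined with (12) and Step 2, we can conclude that

$$\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta) = \left[I_r - \Pi_n\right]\frac{1}{\sqrt{n}}\sum_i h(w_i;\theta_0),$$

where

$$\Pi_n = \left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\tilde\theta)}{\partial\theta'}\right)\left[\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\hat\theta)}{\partial\theta'}\right)' W_n\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\tilde\theta)}{\partial\theta'}\right)\right]^{-1}\left(\frac{1}{n}\sum_i \frac{\partial h(w_i;\hat\theta)}{\partial\theta'}\right)' W_n \xrightarrow{p} G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'S_0^{-1}.$$

Write

$$\Pi_0 = G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'S_0^{-1}.$$

By Slutsky, we conclude that

$$T_0\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta) \xrightarrow{d} N\left(0, T_0(I_r - \Pi_0)S_0(I_r - \Pi_0)'T_0'\right).$$

Because

$$\begin{aligned}
T_0(I_r - \Pi_0)S_0(I_r - \Pi_0)'T_0'
&= T_0S_0T_0' - T_0\Pi_0S_0T_0' - T_0S_0\Pi_0'T_0' + T_0\Pi_0S_0\Pi_0'T_0' \\
&= I_r - T_0G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'S_0^{-1}S_0T_0' - T_0S_0S_0^{-1}G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'T_0' \\
&\qquad + T_0G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'S_0^{-1}S_0S_0^{-1}G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'T_0' \\
&= I_r - T_0G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'T_0' - T_0G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'T_0' + T_0G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'T_0' \\
&= I_r - T_0G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'T_0',
\end{aligned}$$

we can further conclude that

$$T_0\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta) \xrightarrow{d} N\left(0, I_r - T_0G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'T_0'\right). \tag{13}$$

Step 4. This step will be taken care of by analogy. We now imagine a GLS model

$$y = G_0\beta + \varepsilon, \qquad \varepsilon \sim N(0, S_0).$$

Because $T_0S_0T_0' = I_r$, the transformed model

$$T_0y = T_0G_0\beta + T_0\varepsilon, \qquad\text{i.e.,}\qquad y^* = G^*\beta + \varepsilon^*,$$

is such that $\varepsilon^* \sim N(0, I_r)$.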
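A pause to compute the statistic itself may help before completing the argument. Below is a minimal Python sketch of $nQ_n(\hat\theta)$ in what we assume is the simplest overidentified case: two moment conditions for one parameter, $h(w_i;\theta) = (w_{1i} - \theta, w_{2i} - \theta)'$. Here $r = 2$ and $q = 1$, so the limit is $\chi^2(1)$. $W_n$ is obtained by a two-step choice, identity first and then the inverse of $\frac{1}{n}\sum_i h(w_i;\theta)h(w_i;\theta)'$ at the first-step estimate. This is one common way to get $W_n \xrightarrow{p} S_0^{-1}$, not necessarily the one the notes have in mind. The model, function name and simulated data are hypothetical.

```python
import random


def j_test_two_means(w1, w2):
    """Two-step GMM and n * Q_n(theta_hat) for h(w; theta) = (w1 - theta, w2 - theta)'."""
    n = len(w1)
    m1, m2 = sum(w1) / n, sum(w2) / n

    def gmm_theta(a, b, c):
        # Minimizer of g' W g with g = (m1 - t, m2 - t)' and W = [[a, b], [b, c]]:
        # first order condition (a + b)(m1 - t) + (b + c)(m2 - t) = 0
        return ((a + b) * m1 + (b + c) * m2) / (a + 2 * b + c)

    theta1 = gmm_theta(1.0, 0.0, 1.0)  # step 1: W_n = identity
    # Estimate S_0 by (1/n) sum h h' evaluated at the first-step estimate
    s11 = sum((x - theta1) ** 2 for x in w1) / n
    s22 = sum((y - theta1) ** 2 for y in w2) / n
    s12 = sum((x - theta1) * (y - theta1) for x, y in zip(w1, w2)) / n
    det = s11 * s22 - s12 * s12
    a, b, c = s22 / det, -s12 / det, s11 / det  # W_n = S^{-1} via the 2x2 inverse
    theta2 = gmm_theta(a, b, c)  # step 2: efficient weighting
    g1, g2 = m1 - theta2, m2 - theta2
    j_stat = n * (a * g1 * g1 + 2 * b * g1 * g2 + c * g2 * g2)
    return theta2, j_stat


rng = random.Random(0)
n = 500
w1 = [rng.gauss(1.0, 1.0) for _ in range(n)]
w2_null = [rng.gauss(1.0, 1.0) for _ in range(n)]  # both moments hold at theta = 1
w2_alt = [rng.gauss(5.0, 1.0) for _ in range(n)]   # no single theta fits both moments
theta_null, j_null = j_test_two_means(w1, w2_null)
theta_alt, j_alt = j_test_two_means(w1, w2_alt)
print(theta_null, j_null)  # compare J with 3.841, the 5% critical value of chi2(1)
print(theta_alt, j_alt)
```

Under $H_0$ the statistic behaves like a $\chi^2(1)$ draw. Under the alternative it grows linearly in $n$.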
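The residual vector

$$e^* = \left[I_r - G^*\left((G^*)'G^*\right)^{-1}(G^*)'\right]\varepsilon^*$$

then has the distribution

$$N\left(0, I_r - G^*\left((G^*)'G^*\right)^{-1}(G^*)'\right) = N\left(0, I_r - T_0G_0\left((T_0G_0)'T_0G_0\right)^{-1}(T_0G_0)'\right).$$

Because

$$\begin{aligned}
I_r - G^*\left((G^*)'G^*\right)^{-1}(G^*)' &= I_r - T_0G_0\left((T_0G_0)'T_0G_0\right)^{-1}(T_0G_0)' \\
&= I_r - T_0G_0\left(G_0'T_0'T_0G_0\right)^{-1}G_0'T_0' \\
&= I_r - T_0G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'T_0',
\end{aligned}$$

we conclude that

$$e^* \sim N\left(0, I_r - T_0G_0\left(G_0'S_0^{-1}G_0\right)^{-1}G_0'T_0'\right). \tag{14}$$

Now compare (14) with (13). We can see that the limit distribution of $T_0\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)$ is identical to the distribution of $e^*$. Also recall that $nQ_n(\hat\theta)$ has the same limit distribution as

$$\left(T_0\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right)'\left(T_0\frac{1}{\sqrt{n}}\sum_i h(w_i;\hat\theta)\right),$$

which suggests that the limit distribution of $nQ_n(\hat\theta)$ should be identical to the distribution of $(e^*)'e^*$. But we already know that

$$(e^*)'e^* \sim \chi^2(r - q).$$

66 Hausman Test of OLS vs IV

Suppose

$$y_i = x_i\beta + \varepsilon_i, \qquad i = 1, \ldots, n.$$

Also suppose that we observe $z_i$ such that $E[z_i\varepsilon_i] = 0$. Either $H_0: E[x_i\varepsilon_i] = 0$ or $H_A: E[x_i\varepsilon_i] \neq 0$ holds. Under $H_0$, both the OLS estimator $\hat\beta_{OLS}$ and the IV estimator $\hat\beta_{IV}$ are consistent:

$$H_0: \operatorname{plim}\hat\beta_{OLS} = \operatorname{plim}\hat\beta_{IV}.$$

Furthermore, $\hat\beta_{OLS}$ is efficient under $H_0$. Under $H_A$, only the IV estimator is consistent:

$$H_A: \operatorname{plim}\hat\beta_{OLS} \neq \operatorname{plim}\hat\beta_{IV}.$$

Therefore, a reasonable test of $H_0$ can be based on the difference $\hat\beta_{OLS} - \hat\beta_{IV}$. If the difference is large, there is evidence supporting $H_A$; if it is small, there is evidence supporting $H_0$. For this purpose, we need to establish the asymptotic distribution of $\sqrt{n}(\hat\beta_{OLS} - \hat\beta_{IV})$ under the null. Under $H_0$, we would have

$$\sqrt{n}\left(\hat\beta_{OLS} - \beta\right) \xrightarrow{d} N\left(0, \operatorname{Var}_a(\hat\beta_{OLS})\right), \qquad \sqrt{n}\left(\hat\beta_{IV} - \beta\right) \xrightarrow{d} N\left(0, \operatorname{Var}_a(\hat\beta_{IV})\right),$$

$$\sqrt{n}\left(\hat\beta_{OLS} - \hat\beta_{IV}\right) \xrightarrow{d} N\left(0, \operatorname{Var}_a(\hat\beta_{OLS} - \hat\beta_{IV})\right).$$

In general, because

$$\operatorname{Var}_a(\hat\beta_{OLS} - \hat\beta_{IV}) = \operatorname{Var}_a(\hat\beta_{OLS}) + \operatorname{Var}_a(\hat\beta_{IV}) - 2\operatorname{Cov}_a(\hat\beta_{OLS}, \hat\beta_{IV}),$$

we need to figure out $\operatorname{Cov}_a(\hat\beta_{OLS}, \hat\beta_{IV})$ to implement such a test. Hausman's insight is that, if $\hat\beta_{OLS}$ is efficient under $H_0$, then we must have

$$\operatorname{Cov}_a(\hat\beta_{OLS}, \hat\beta_{IV}) = \operatorname{Var}_a(\hat\beta_{OLS}).$$

Suppose otherwise. Consider an arbitrary linear combination

$$\lambda\hat\beta_{OLS} + (1 - \lambda)\hat\beta_{IV},$$

whose asymptotic distribution is given by

$$\sqrt{n}\left(\lambda\hat\beta_{OLS} + (1 - \lambda)\hat\beta_{IV} - \beta\right) \xrightarrow{d} N\left(0, \lambda^2\operatorname{Var}_a(\hat\beta_{OLS}) + (1 - \lambda)^2\operatorname{Var}_a(\hat\beta_{IV}) + 2\lambda(1 - \lambda)\operatorname{Cov}_a(\hat\beta_{OLS}, \hat\beta_{IV})\right).$$

Consider minimizing the asymptotic variance. The first order condition is given by

$$\lambda\operatorname{Var}_a(\hat\beta_{OLS}) - (1 - \lambda)\operatorname{Var}_a(\hat\beta_{IV}) + (1 - 2\lambda)\operatorname{Cov}_a(\hat\beta_{OLS}, \hat\beta_{IV}) = 0$$

or

$$1 - \lambda = \frac{\operatorname{Var}_a(\hat\beta_{OLS}) - \operatorname{Cov}_a(\hat\beta_{OLS}, \hat\beta_{IV})}{\operatorname{Var}_a(\hat\beta_{OLS}) + \operatorname{Var}_a(\hat\beta_{IV}) - 2\operatorname{Cov}_a(\hat\beta_{OLS}, \hat\beta_{IV})}.$$

In order for $\hat\beta_{OLS}$ to be efficient, the variance-minimizing combination must put all its weight on $\hat\beta_{OLS}$, so we had better have $1 - \lambda = 0$, or

$$\operatorname{Var}_a(\hat\beta_{OLS}) = \operatorname{Cov}_a(\hat\beta_{OLS}, \hat\beta_{IV}).$$

Therefore, the asymptotic variance calculation simplifies substantially. Because $\hat\beta_{OLS}$ is efficient under the null, we should have

$$\operatorname{Var}_a(\hat\beta_{OLS} - \hat\beta_{IV}) = \operatorname{Var}_a(\hat\beta_{OLS}) + \operatorname{Var}_a(\hat\beta_{IV}) - 2\operatorname{Cov}_a(\hat\beta_{OLS}, \hat\beta_{IV}) = \operatorname{Var}_a(\hat\beta_{IV}) - \operatorname{Var}_a(\hat\beta_{OLS}).$$

Therefore, a test can be based on

$$\frac{\sqrt{n}\left(\hat\beta_{OLS} - \hat\beta_{IV}\right)}{\sqrt{\widehat{\operatorname{Var}}_a(\hat\beta_{IV}) - \widehat{\operatorname{Var}}_a(\hat\beta_{OLS})}} \xrightarrow{d} N(0, 1).$$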
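The following is a hedged Python sketch of the scalar Hausman statistic. It assumes homoskedastic errors, so that OLS is efficient under $H_0$ with $\operatorname{Var}_a(\hat\beta_{OLS}) = \sigma^2/E[x_i^2]$ and $\operatorname{Var}_a(\hat\beta_{IV}) = \sigma^2E[z_i^2]/(E[z_ix_i])^2$. The error variance $\sigma^2$ is estimated from the OLS residuals. The data-generating process (`simulate`, with $\beta = 1$ and $x_i = z_i + v_i$) and all numbers are hypothetical.

```python
import math
import random


def hausman_ols_iv(y, x, z):
    """Scalar Hausman statistic sqrt(n)(b_ols - b_iv) / sqrt(V_iv - V_ols)."""
    n = len(y)
    sxx = sum(a * a for a in x)
    sxy = sum(a * b for a, b in zip(x, y))
    szx = sum(a * b for a, b in zip(z, x))
    szy = sum(a * b for a, b in zip(z, y))
    szz = sum(a * a for a in z)
    b_ols, b_iv = sxy / sxx, szy / szx
    # sigma^2 from OLS residuals: consistent under H0
    sigma2 = sum((yi - b_ols * xi) ** 2 for xi, yi in zip(x, y)) / n
    v_ols = sigma2 / (sxx / n)                  # estimate of Var_a(b_ols)
    v_iv = sigma2 * (szz / n) / (szx / n) ** 2  # estimate of Var_a(b_iv)
    # v_iv >= v_ols by Cauchy-Schwarz; equality only if z is proportional to x
    return b_ols, b_iv, math.sqrt(n) * (b_ols - b_iv) / math.sqrt(v_iv - v_ols)


def simulate(n, endogeneity, seed):
    # Hypothetical DGP: x = z + v, eps = endogeneity * v + noise, beta = 1
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    x = [zi + vi for zi, vi in zip(z, v)]
    y = [xi + endogeneity * vi + rng.gauss(0.0, 1.0) for xi, vi in zip(x, v)]
    return y, x, z


_, _, h_null = hausman_ols_iv(*simulate(2000, 0.0, seed=1))
_, _, h_alt = hausman_ols_iv(*simulate(2000, 0.8, seed=1))
print(h_null, h_alt)  # compare |H| with 1.96 for a 5% test
```

Using the same $\hat\sigma^2$ in both variance estimates guarantees that the difference under the square root is nonnegative. This is one reason the single-$\hat\sigma^2$ design was chosen here.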