Professor Dr. Poomthan Rangkakulnuwat

EP 784: Econometrics Time Series

Chapter 1

Box-Jenkins Model

1. Basic Concepts

A time series model for the observed data $\{x_t\}$ is a specification of the joint distributions (or possibly only the means and covariances) of a sequence of random variables $\{X_t\}$ of which $\{x_t\}$ is postulated to be a realization.

• $x_t$ (lower case) is used for data, that is, the observed values.

• $X_t$ (upper case) is used for random variables.

• A complete probabilistic time series model for the sequence of random variables $\{X_1, X_2, \ldots\}$ would specify all of the joint distributions of the random vectors $(X_1, \ldots, X_t)'$, $t = 1, 2, \ldots$, or equivalently all of the probabilities

$$P[X_1 \le x_1, \ldots, X_t \le x_t], \quad -\infty < x_1, \ldots, x_t < \infty, \quad t = 1, 2, \ldots$$

• The simplest model for a time series is one in which there is no trend or seasonal component and in which the observations are simply independent and identically distributed (iid) random variables with zero mean.

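• If $\{X_t\}$ is a sequence of iid random variables, then for any positive integer $t$ and real numbers $x_1, \ldots, x_t$,

$$P[X_1 \le x_1, \ldots, X_t \le x_t] = P[X_1 \le x_1] \cdots P[X_t \le x_t] = F(x_1) \cdots F(x_t),$$

where $F(x) = P[X_1 \le x]$ is the cumulative distribution function of each of the identically distributed random variables $X_1, X_2, \ldots$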

• A time series $\{X_t\}$ is said to be strictly stationary if the joint distribution of $(X_{t_1}, \ldots, X_{t_k})$ is invariant under time shift. That is, the joint distribution of $(X_{t_1}, \ldots, X_{t_k})$ is identical to that of $(X_{t_1+h}, \ldots, X_{t_k+h})$ for all $h$, where $k$ is an arbitrary positive integer.

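• A time series $\{X_t\}$ is said to be weakly stationary if both the mean of $X_t$ and the covariance between $X_t$ and $X_{t-h}$ are time-invariant, where $h$ is an arbitrary integer. That is,

a) $E(X_t) = \mu$, a constant for all $t$;

b) $\mathrm{Var}(X_t) = \gamma_0$, a constant for all $t$;

c) $\mathrm{Cov}(X_t, X_{t-h}) = \gamma_h$, which depends only on $h$.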

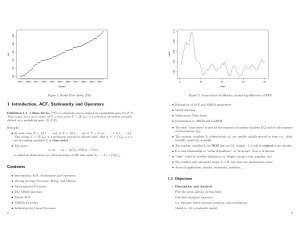

Example: Based on the graphs of the time series data, identify whether each series is stationary.

In practice, we often assume weak stationarity, since the condition of strong (strict) stationarity is hard to verify empirically.

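• The assumption $\mathrm{Cov}(X_t, X_{t-h}) = \gamma_h$, which depends only on $h$, implies two properties:

o The variance of a time series is constant over time: $\mathrm{Var}(X_t) = \mathrm{Cov}(X_t, X_t) = \gamma_0$.

o The covariance of a time series in two different time periods depends only on the distance between the two periods: $\mathrm{Cov}(X_t, X_{t-h}) = \mathrm{Cov}(X_t, X_{t+h}) = \gamma_h$.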

• The autocorrelation function (ACF) of $\{X_t\}$ at lag $h$ is

$$\rho_h = \frac{\gamma_h}{\gamma_0}.$$

• If $X_t$ is a sequence of independent and identically distributed (iid) random variables with finite mean and variance, then the time series $X_t$ is called a white noise series.

• If, in addition, $X_t$ is normally distributed with mean zero and variance $\sigma^2$, the series is called a Gaussian white noise series.

• In practice, for a white noise series, all the sample ACFs (at lags $h \ge 1$) are close to zero.
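As an informal illustration of this last point (not part of the original notes), the sketch below simulates Gaussian white noise with Python's standard `random` module and computes the sample ACF with the common estimator $\hat\rho_h = \sum_{t=h+1}^{n}(x_t-\bar x)(x_{t-h}-\bar x)\big/\sum_{t=1}^{n}(x_t-\bar x)^2$. The helper name `sample_acf`, the seed, and the sample size are illustrative assumptions; the band $\pm 1.96/\sqrt{n}$ is the usual large-sample approximation for white noise.

```python
import random

def sample_acf(x, max_lag):
    """Sample autocorrelations rho_hat_1, ..., rho_hat_max_lag of the sequence x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    gamma0 = sum(d * d for d in dev) / n
    acf = []
    for h in range(1, max_lag + 1):
        # Sample autocovariance at lag h, using divisor n (the usual convention)
        gamma_h = sum(dev[t] * dev[t - h] for t in range(h, n)) / n
        acf.append(gamma_h / gamma0)
    return acf

random.seed(784)
n = 5000
a = [random.gauss(0.0, 1.0) for _ in range(n)]   # Gaussian white noise, iid N(0, 1)
rho_hat = sample_acf(a, 10)
bound = 1.96 / n ** 0.5                           # approximate 95% band for white noise
for h, r in enumerate(rho_hat, start=1):
    status = "inside" if abs(r) < bound else "outside"
    print(f"lag {h:2d}: {r:+.4f} ({status} +/-{bound:.4f})")
```

Even for true white noise, about one lag in twenty is expected to fall outside the band by chance.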

2. Linear Time Series Process

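A time series $X_t$ is said to be linear if it can be written as

$$X_t = \mu + \sum_{i=0}^{\infty} \psi_i a_{t-i} \tag{1}$$

where $\mu$ is the mean of $X_t$, $\psi_i$ ($i = 1, 2, \ldots$) are the weights of the process (with $\psi_0 = 1$), and $\{a_t\}$ is a white noise series, that is, a sequence of iid random variables with mean zero and a well-defined distribution. That is,

$$E(a_t) = 0, \qquad \mathrm{Var}(a_t) = \sigma_a^2.$$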

Equation (1) can be written as

$$X_t = \mu + \psi(L) a_t \tag{2}$$

where $\psi(L) = \sum_{i=0}^{\infty} \psi_i L^i$.

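Note:

(1) If $X_t$ is a random variable, the lag operator $L$ has the following properties:

$LX_t = X_{t-1}$, $\quad L^2 X_t = X_{t-2}$, $\quad L^j X_t = X_{t-j}$.

(2) The operator $\psi(L)$ can be thought of as a polynomial (possibly of infinite order) in the lag operator $L$. Applied to $\{a_t\}$, it acts as a linear filter: $\psi(L)a_t = \sum_{i=0}^{\infty} \psi_i L^i a_t = \sum_{i=0}^{\infty} \psi_i a_{t-i}$.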
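To make the lag-operator notation concrete, here is a small illustrative sketch that stores a finite lag polynomial $\psi(L) = \psi_0 + \psi_1 L + \cdots + \psi_q L^q$ as a coefficient list and applies it to a sequence using $L^j a_t = a_{t-j}$. Treating pre-sample values as zero is an assumption made only to keep the example finite, and `lag` and `apply_lag_poly` are hypothetical helper names.

```python
def lag(x, j=1):
    """L^j applied to a sequence: (L^j x)_t = x_{t-j}; pre-sample values are set to 0."""
    return [x[t - j] if t - j >= 0 else 0.0 for t in range(len(x))]

def apply_lag_poly(psi, a):
    """psi(L) a_t = sum_i psi_i a_{t-i} for a finite coefficient list psi."""
    out = [0.0] * len(a)
    for i, coef in enumerate(psi):
        shifted = lag(a, i)                       # L^i a
        out = [o + coef * s for o, s in zip(out, shifted)]
    return out

a = [1.0, 2.0, 3.0, 4.0]
print(lag(a))                           # [0.0, 1.0, 2.0, 3.0]
print(lag(lag(a)) == lag(a, 2))         # True: L(L a_t) = L^2 a_t
print(apply_lag_poly([1.0, 0.5], a))    # (1 + 0.5L) a_t -> [1.0, 2.5, 4.0, 5.5]
```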

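• From equation (1), find the mean of $X_t$.

$$X_t = \mu + \sum_{i=0}^{\infty} \psi_i a_{t-i} \tag{1}$$

$$E(X_t) = E(\mu) + \sum_{i=0}^{\infty} \psi_i E(a_{t-i}) = \mu,$$

since $\mu$ is a constant and $E(a_{t-i}) = 0$ for all $i$.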

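• From equation (1), find the variance of $X_t$ ($\gamma_0$).

$$X_t = \mu + \sum_{i=0}^{\infty} \psi_i a_{t-i} \tag{1}$$

$$\mathrm{Var}(X_t) = \mathrm{Var}(\mu) + \sum_{i=0}^{\infty} \psi_i^2\, \mathrm{Var}(a_{t-i}) + 2\sum_{i<j} \psi_i \psi_j\, \mathrm{Cov}(a_{t-i}, a_{t-j})$$

Since $\mu$ is a parameter and $\{a_t\}$ is iid, $\mathrm{Var}(\mu) = 0$ and $\mathrm{Cov}(a_{t-i}, a_{t-j}) = 0$ for $i \ne j$. Hence

$$\mathrm{Var}(X_t) = \sum_{i=0}^{\infty} \psi_i^2\, \mathrm{Var}(a_{t-i}) = \sigma_a^2 \sum_{i=0}^{\infty} \psi_i^2 = \gamma_0.$$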

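• From equation (1), find the lag-$h$ autocovariance of $X_t$ ($\gamma_h$).

$$\mathrm{Cov}(X_t, X_{t-h}) = E\{[X_t - E(X_t)][X_{t-h} - E(X_{t-h})]\}$$

If $X_t$ is (weakly) stationary, $E(X_t) = E(X_{t-h}) = \mu$, so

$$\mathrm{Cov}(X_t, X_{t-h}) = E[(X_t - \mu)(X_{t-h} - \mu)] \tag{3}$$

From $X_t = \mu + \sum_{i=0}^{\infty} \psi_i a_{t-i}$ (1), we have $X_t - \mu = \sum_{i=0}^{\infty} \psi_i a_{t-i}$. Plugging this into (3),

$$\mathrm{Cov}(X_t, X_{t-h}) = E\left[\left(\sum_{i=0}^{\infty} \psi_i a_{t-i}\right)\left(\sum_{j=0}^{\infty} \psi_j a_{t-h-j}\right)\right]$$

where

$$\sum_{i=0}^{\infty} \psi_i a_{t-i} = \psi_0 a_t + \psi_1 a_{t-1} + \cdots + \psi_h a_{t-h} + \psi_{h+1} a_{t-h-1} + \cdots$$

$$\sum_{j=0}^{\infty} \psi_j a_{t-h-j} = \psi_0 a_{t-h} + \psi_1 a_{t-h-1} + \cdots$$

Note: Don't forget that $\psi_0 = 1$.

Hence,

$$\begin{aligned}
\mathrm{Cov}(X_t, X_{t-h}) &= E[(\psi_0 a_t + \psi_1 a_{t-1} + \cdots + \psi_h a_{t-h} + \psi_{h+1} a_{t-h-1} + \cdots)(\psi_0 a_{t-h} + \psi_1 a_{t-h-1} + \cdots)] \\
&= E[(\psi_0\psi_0 a_t a_{t-h} + \psi_0\psi_1 a_t a_{t-h-1} + \cdots) + (\psi_1\psi_0 a_{t-1} a_{t-h} + \psi_1\psi_1 a_{t-1} a_{t-h-1} + \cdots) \\
&\qquad + \cdots + (\psi_h\psi_0 a_{t-h} a_{t-h} + \psi_h\psi_1 a_{t-h} a_{t-h-1} + \cdots) + \cdots] \\
&= E\left[\sum_{i=0}^{\infty}\sum_{j=0}^{\infty} \psi_i\psi_j a_{t-i} a_{t-h-j}\right] = \sum_{i=0}^{\infty}\sum_{j=0}^{\infty} \psi_i\psi_j E(a_{t-i} a_{t-h-j})
\end{aligned}$$

Since $\{a_t\}$ is iid with mean zero, $E(a_{t-i} a_{t-h-j}) = \mathrm{Cov}(a_{t-i}, a_{t-h-j}) = 0$ whenever $a_{t-i}$ and $a_{t-h-j}$ are different shocks. They coincide only when $i = j + h$, in which case $E(a_{t-h-j}^2) = \sigma_a^2$. Keeping only the terms with $i = j + h$,

$$\mathrm{Cov}(X_t, X_{t-h}) = \sum_{j=0}^{\infty} \psi_{j+h}\psi_j E(a_{t-h-j}^2) = \sigma_a^2 \sum_{j=0}^{\infty} \psi_j \psi_{j+h} = \gamma_h,$$

which, writing the summation index as $i$, is $\gamma_h = \sigma_a^2 \sum_{i=0}^{\infty} \psi_i \psi_{i+h}$.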
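The formula $\gamma_h = \sigma_a^2\sum_{i}\psi_i\psi_{i+h}$ is easy to evaluate numerically once the ψ-weights are truncated at a finite length. The sketch below assumes exactly that (all weights beyond the list are zero); `linear_acvf` is a hypothetical name. As a check it uses $\psi = (1, 0.5)$ with $\sigma_a^2 = 2$, for which the formula gives $\gamma_0 = 2.5$, $\gamma_1 = 1$ and $\gamma_h = 0$ for $h \ge 2$.

```python
def linear_acvf(psi, sigma2, h):
    """gamma_h = sigma2 * sum_i psi_i * psi_{i+h}, using a finite list of psi-weights."""
    h = abs(h)  # gamma_{-h} = gamma_h
    return sigma2 * sum(psi[i] * psi[i + h] for i in range(len(psi) - h))

psi = [1.0, 0.5]          # psi_0 = 1, psi_1 = 0.5, psi_i = 0 afterwards
for h in range(4):
    print(h, linear_acvf(psi, sigma2=2.0, h=h))
# 0 2.5
# 1 1.0
# 2 0.0
# 3 0.0
```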

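• From equation (1), find the lag-$h$ autocorrelation of $X_t$ ($\rho_h = \gamma_h / \gamma_0$).

$$\gamma_h = \sigma_a^2 \sum_{i=0}^{\infty} \psi_i \psi_{i+h}$$

$$\gamma_0 = \sigma_a^2 \sum_{i=0}^{\infty} \psi_i^2 = \sigma_a^2\left(\psi_0^2 + \sum_{i=1}^{\infty} \psi_i^2\right) = \sigma_a^2\left(1 + \sum_{i=1}^{\infty} \psi_i^2\right) \qquad (\psi_0 = 1)$$

$$\rho_h = \frac{\gamma_h}{\gamma_0} = \frac{\sigma_a^2 \sum_{i=0}^{\infty} \psi_i \psi_{i+h}}{\sigma_a^2\left(1 + \sum_{i=1}^{\infty} \psi_i^2\right)} = \frac{\sum_{i=0}^{\infty} \psi_i \psi_{i+h}}{1 + \sum_{i=1}^{\infty} \psi_i^2}$$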
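A quick numerical check of the last expression, written as a hypothetical helper `linear_acf` that assumes a finite list of ψ-weights with $\psi_0 = 1$. Because $\sigma_a^2$ cancels, it does not appear. For $\psi = (1, 0.5)$ the formula gives $\rho_1 = 0.5/1.25 = 0.4$.

```python
def linear_acf(psi, h):
    """rho_h = sum_{i>=0} psi_i psi_{i+h} / (1 + sum_{i>=1} psi_i^2), with psi[0] == 1."""
    if psi[0] != 1.0:
        raise ValueError("the notes normalise psi_0 = 1")
    h = abs(h)
    num = sum(psi[i] * psi[i + h] for i in range(len(psi) - h))
    den = 1.0 + sum(p * p for p in psi[1:])
    return num / den

print(linear_acf([1.0, 0.5], 1))   # 0.4
```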

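Remark 1

Let $\{a_t\}$ be a white noise series with mean 0 and variance $\sigma_a^2$, and let $\{X_t\}$ be a linear process. If $\sum_{i=0}^{\infty} |\psi_i| < \infty$, then the time series

$$X_t = \mu + \sum_{i=0}^{\infty} \psi_i a_{t-i} = \mu + \psi(L) a_t$$

is stationary with mean $\mu$ and autocovariance function

$$\gamma_h = \mathrm{Cov}(X_t, X_{t-h}) = \sigma_a^2 \sum_{i=0}^{\infty} \psi_i \psi_{i+h} \tag{4}$$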

3. Autoregressive Model or AR model

An AR model of order 1, or AR(1), has the following form

$$X_t = \phi_0 + \phi_1 X_{t-1} + a_t \tag{5}$$

where $a_t$ is assumed to be a white noise series with mean zero and variance $\sigma_a^2$. Given that $X_t$ is (weakly) stationary, it has a constant mean and a constant variance.

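• Find $E(X_t)$.

From $X_t = \phi_0 + \phi_1 X_{t-1} + a_t$ (5),

$$E(X_t) = \phi_0 + \phi_1 E(X_{t-1}) + E(a_t)$$

Since $E(X_t) = E(X_{t-1})$ and $E(a_t) = 0$,

$$E(X_t) = \phi_0 + \phi_1 E(X_t)$$

$$E(X_t) = \frac{\phi_0}{1 - \phi_1} = \mu, \quad \text{where } \phi_1 \ne 1.$$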
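The result $\mu = \phi_0/(1-\phi_1)$ can be checked by simulation. The sketch below assumes Gaussian white noise and illustrative parameter values ($\phi_0 = 2$, $\phi_1 = 0.5$, $\sigma_a = 1$, so $\mu = 4$); it starts the recursion at $\mu$ and compares the sample mean of a long simulated path with the theoretical mean. `simulate_ar1` is a hypothetical helper.

```python
import random

def simulate_ar1(phi0, phi1, sigma, n, x0, rng):
    """Simulate X_t = phi0 + phi1 * X_{t-1} + a_t with a_t ~ N(0, sigma^2), starting from x0."""
    x, prev = [], x0
    for _ in range(n):
        prev = phi0 + phi1 * prev + rng.gauss(0.0, sigma)
        x.append(prev)
    return x

phi0, phi1 = 2.0, 0.5
mu = phi0 / (1 - phi1)        # theoretical mean; requires phi1 != 1
rng = random.Random(1)
x = simulate_ar1(phi0, phi1, 1.0, 20000, mu, rng)
print(mu, sum(x) / len(x))    # the sample mean should be close to 4.0
```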

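• Show that the AR(1) process can be written as a linear time series process.

From $\mu = \dfrac{\phi_0}{1 - \phi_1}$, we get $\phi_0 = (1 - \phi_1)\mu$. Plugging this into (5) gives

$$X_t = (1 - \phi_1)\mu + \phi_1 X_{t-1} + a_t$$

$$X_t = \mu + \phi_1 (X_{t-1} - \mu) + a_t$$

$$X_t - \mu = \phi_1 (X_{t-1} - \mu) + a_t \tag{6}$$

From (6), we also have $X_{t-1} - \mu = \phi_1 (X_{t-2} - \mu) + a_{t-1}$. Plugging this back into (6), we get

$$X_t - \mu = \phi_1^2 (X_{t-2} - \mu) + \phi_1 a_{t-1} + a_t \tag{7}$$

From (6), we also have $X_{t-2} - \mu = \phi_1 (X_{t-3} - \mu) + a_{t-2}$. Plugging this back into (7), we get

$$X_t - \mu = \phi_1^3 (X_{t-3} - \mu) + \phi_1^2 a_{t-2} + \phi_1 a_{t-1} + a_t \tag{8}$$

By repeated substitution (the remaining term $\phi_1^k (X_{t-k} - \mu)$ vanishes as $k \to \infty$ when $|\phi_1| < 1$), we get

$$X_t - \mu = a_t + \phi_1 a_{t-1} + \phi_1^2 a_{t-2} + \cdots = \sum_{i=0}^{\infty} \phi_1^i a_{t-i} \tag{9}$$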
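Equation (9) can be verified numerically. If recursion (6) is started at $X_0 = \mu$, repeated substitution stops after $t$ steps and gives exactly $X_t - \mu = \sum_{i=0}^{t-1}\phi_1^i a_{t-i}$, a finite-sample version of (9). The sketch below (illustrative $\phi_1 = 0.7$, Gaussian shocks from Python's `random` module, starting value $X_0 = \mu$ as an assumption) computes both sides and compares them; `dev[t]` stands for $X_t - \mu$.

```python
import random

phi1, n = 0.7, 50
rng = random.Random(2)
a = [0.0] + [rng.gauss(0.0, 1.0) for _ in range(n)]   # a[1], ..., a[n]; a[0] unused

# Recursion (6): X_t - mu = phi1 * (X_{t-1} - mu) + a_t, started at X_0 - mu = 0
dev = [0.0]
for t in range(1, n + 1):
    dev.append(phi1 * dev[t - 1] + a[t])

# Finite version of (9): X_t - mu = sum_{i=0}^{t-1} phi1^i * a_{t-i}
def ma_form(t):
    return sum(phi1 ** i * a[t - i] for i in range(t))

max_gap = max(abs(dev[t] - ma_form(t)) for t in range(1, n + 1))
print(max_gap)   # only floating-point rounding error remains
```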

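• Find $\mathrm{Var}(X_t)$.

From equation (9), $X_t - \mu = \sum_{i=0}^{\infty} \phi_1^i a_{t-i}$. Then

$$\begin{aligned}
\mathrm{Var}(X_t) = E(X_t - \mu)^2 &= E\left(\sum_{i=0}^{\infty} \phi_1^i a_{t-i}\right)^2 \\
&= E\left(\sum_{i=0}^{\infty} \phi_1^{2i} a_{t-i}^2 + 2\sum_{i<j} \phi_1^i \phi_1^j a_{t-i} a_{t-j}\right) \\
&= \sigma_a^2 \sum_{i=0}^{\infty} \phi_1^{2i} \qquad (\text{since } E(a_{t-i}^2) = \sigma_a^2 \text{ and } E(a_{t-i} a_{t-j}) = 0 \text{ for } i \ne j) \\
&= \sigma_a^2 (1 + \phi_1^2 + \phi_1^4 + \phi_1^6 + \cdots)
\end{aligned} \tag{10}$$

• If $\phi_1^2 < 1$ (that is, $-1 < \phi_1 < 1$), the geometric series converges and

$$\mathrm{Var}(X_t) = \sigma_a^2 \left(\frac{1}{1 - \phi_1^2}\right) \tag{11}$$

• If $\phi_1^2 \ge 1$, the series in (10) diverges, so $\mathrm{Var}(X_t)$ is not finite.

Weak stationarity of an AR(1) model therefore implies that $-1 < \phi_1 < 1$, that is, $|\phi_1| < 1$.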
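A small check of (10) and (11): the partial sums of $\sigma_a^2(1+\phi_1^2+\phi_1^4+\cdots)$ approach $\sigma_a^2/(1-\phi_1^2)$ when $|\phi_1| < 1$ and keep growing when $|\phi_1| \ge 1$. The parameter values, truncation lengths, and the helper name `partial_var` are illustrative.

```python
def partial_var(phi1, sigma2, terms):
    """Partial sum sigma2 * sum_{i=0}^{terms-1} phi1^(2i) from equation (10)."""
    return sigma2 * sum(phi1 ** (2 * i) for i in range(terms))

sigma2 = 1.0
for phi1 in (0.5, 0.9):
    closed = sigma2 / (1 - phi1 ** 2)          # equation (11)
    print(phi1, closed, partial_var(phi1, sigma2, 500))

# phi1 = 1: every term equals 1, so the partial sums grow without bound
print([partial_var(1.0, sigma2, k) for k in (10, 100, 1000)])   # [10.0, 100.0, 1000.0]
```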

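• Find $\mathrm{Cov}(X_t, X_{t-h})$ using Remark 1.

From Remark 1, we know that the linear time series process

$$X_t = \mu + \sum_{i=0}^{\infty} \psi_i a_{t-i} = \mu + \psi(L) a_t$$

is stationary with mean $\mu$ and autocovariance function

$$\gamma_h = \mathrm{Cov}(X_t, X_{t-h}) = \sigma_a^2 \sum_{i=0}^{\infty} \psi_i \psi_{i+h} \tag{4}$$

Comparing this with equation (9), we see that $\psi_i = \phi_1^i$ and $\psi_{i+h} = \phi_1^{i+h}$. That is,

$$\gamma_h = \mathrm{Cov}(X_t, X_{t-h}) = \sigma_a^2 \sum_{i=0}^{\infty} \phi_1^i \phi_1^{i+h} = \sigma_a^2 \phi_1^h \sum_{i=0}^{\infty} \phi_1^{2i} \tag{12}$$

$$\gamma_h = \sigma_a^2 \frac{\phi_1^h}{1 - \phi_1^2} \tag{13}$$

• Find the lag-$h$ autocorrelation of $X_t$ ($\rho_h = \gamma_h / \gamma_0$).

$$\rho_h = \frac{\gamma_h}{\gamma_0} = \frac{\sigma_a^2 \left(\dfrac{\phi_1^h}{1 - \phi_1^2}\right)}{\sigma_a^2 \left(\dfrac{1}{1 - \phi_1^2}\right)} = \phi_1^h \tag{14}$$

where $|\phi_1| < 1$.

The ACF of a (weakly) stationary AR(1) series decays exponentially with rate $\phi_1$, starting from $\rho_0 = 1$.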
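To see (14) in data, the sketch below simulates a long AR(1) path and compares its sample ACF with $\phi_1^h$. The values $\phi_1 = 0.6$ and $n = 20000$, the Gaussian shocks, and the 500-observation burn-in (discarded to reduce the effect of the zero starting value) are illustrative assumptions; the sample ACF uses the same common estimator as elsewhere in these examples.

```python
import random

def sample_acf(x, max_lag):
    """Sample autocorrelations at lags 1..max_lag (divisor n convention)."""
    n = len(x)
    m = sum(x) / n
    d = [v - m for v in x]
    g0 = sum(v * v for v in d) / n
    return [sum(d[t] * d[t - h] for t in range(h, n)) / n / g0 for h in range(1, max_lag + 1)]

phi1, n, burn = 0.6, 20000, 500
rng = random.Random(3)
x, prev = [], 0.0
for t in range(n + burn):
    prev = phi1 * prev + rng.gauss(0.0, 1.0)   # X_t = phi1 X_{t-1} + a_t (phi0 = 0)
    if t >= burn:
        x.append(prev)

r = sample_acf(x, 5)
for h in range(1, 6):
    print(h, round(r[h - 1], 3), round(phi1 ** h, 3))   # sample vs theoretical rho_h = phi1^h
```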

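• Another way to convert the AR(1) process into a linear time series process.

Consider the AR(1) process in the following form

$$X_t - \phi_1 X_{t-1} = \phi_0 + a_t \tag{15}$$

or

$$\phi(L) X_t = \phi_0 + a_t \tag{16}$$

where $\phi(L) = 1 - \phi_1 L$. From equation (16), we have

$$X_t = \frac{\phi_0}{\phi(L)} + \frac{1}{\phi(L)} a_t = \frac{\phi_0}{1 - \phi_1 L} + \frac{1}{\phi(L)} a_t$$

(The lag operator has no effect on a constant parameter such as $\phi_0$: $L\phi_0 = \phi_0$, so $L$ can be replaced by 1 when $\phi(L)$ acts on $\phi_0$.)

$$X_t = \frac{\phi_0}{1 - \phi_1} + \frac{1}{\phi(L)} a_t$$

Since $\mu = \dfrac{\phi_0}{1 - \phi_1}$, we have

$$X_t = \mu + \frac{1}{\phi(L)} a_t \tag{17}$$

Consider the linear time series process of equation (1),

$$X_t = \mu + \sum_{i=0}^{\infty} \psi_i a_{t-i} \tag{1}$$

$$X_t = \mu + \psi(L) a_t \tag{2}$$

where $\psi(L) = \sum_{i=0}^{\infty} \psi_i L^i$.

From equations (17) and (2), we can write

$$\frac{1}{\phi(L)} = \psi(L)$$

or

$$\phi(L)\psi(L) = 1 \tag{18}$$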

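$$(1 - \phi_1 L)(1 + \psi_1 L + \psi_2 L^2 + \cdots) = 1 \tag{19}$$

$$(1 + \psi_1 L + \psi_2 L^2 + \psi_3 L^3 + \cdots - \phi_1 L - \phi_1\psi_1 L^2 - \phi_1\psi_2 L^3 - \cdots) = 1 \tag{20}$$

$$1 + (\psi_1 - \phi_1) L + (\psi_2 - \phi_1\psi_1) L^2 + (\psi_3 - \phi_1\psi_2) L^3 + \cdots = 1 \tag{21}$$

From equation (21), the coefficient of each power of $L$ on the left must equal the corresponding coefficient on the right, which is 0 for $L, L^2, L^3, \ldots$. Hence

$$\begin{aligned}
\psi_1 - \phi_1 &= 0, &&\text{that is } \psi_1 = \phi_1 \\
\psi_2 - \phi_1\psi_1 &= 0, &&\text{that is } \psi_2 = \phi_1\psi_1 = \phi_1^2 \\
\psi_3 - \phi_1\psi_2 &= 0, &&\text{that is } \psi_3 = \phi_1\psi_2 = \phi_1^3 \\
&\;\;\vdots
\end{aligned} \tag{22}$$

If we plug $\psi_1, \psi_2, \psi_3, \ldots$ from system (22) into equation (1), we get the AR(1) model as a linear time series process:

$$X_t - \mu = a_t + \phi_1 a_{t-1} + \phi_1^2 a_{t-2} + \cdots = \sum_{i=0}^{\infty} \phi_1^i a_{t-i} \tag{23}$$

Equation (23) is the same as equation (9).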
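The coefficient matching in (19) to (22) can be mimicked with truncated polynomials. Multiplying $(1-\phi_1 L)$ by $1+\phi_1 L+\cdots+\phi_1^n L^n$ leaves $1-\phi_1^{n+1}L^{n+1}$: every intermediate coefficient cancels, as (21) requires, and the leftover term shrinks as $n$ grows when $|\phi_1| < 1$. Polynomials are stored as coefficient lists (index = power of $L$); `poly_mul` is a hypothetical helper, and $\phi_1 = 0.5$, $n = 6$ are illustrative.

```python
def poly_mul(p, q):
    """Multiply two polynomials in L given as coefficient lists (index = power of L)."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

phi1, n = 0.5, 6
phi_L = [1.0, -phi1]                          # phi(L) = 1 - phi1 L
psi_L = [phi1 ** i for i in range(n + 1)]     # psi_i = phi1^i from (22), truncated at L^n
res = poly_mul(phi_L, psi_L)
print(res)
# [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, -0.0078125]
```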

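• Converting the AR(2) process into a linear time series process.

o For AR(2), $\phi(L) = 1 - \phi_1 L - \phi_2 L^2$.

From equation (18),

$$\phi(L)\psi(L) = 1 \tag{18}$$

For the case of AR(2), this becomes

$$(1 - \phi_1 L - \phi_2 L^2)(1 + \psi_1 L + \psi_2 L^2 + \cdots) = 1 \tag{24}$$

$$\begin{aligned}
(1 &+ \psi_1 L + \psi_2 L^2 + \psi_3 L^3 + \cdots \\
&- \phi_1 L - \phi_1\psi_1 L^2 - \phi_1\psi_2 L^3 - \cdots \\
&- \phi_2 L^2 - \phi_2\psi_1 L^3 - \cdots) = 1
\end{aligned} \tag{25}$$

$$1 + (\psi_1 - \phi_1) L + (\psi_2 - \phi_1\psi_1 - \phi_2) L^2 + (\psi_3 - \phi_1\psi_2 - \phi_2\psi_1) L^3 + \cdots = 1 \tag{26}$$

From equation (26), matching the coefficients of each power of $L$, we have

$$\begin{aligned}
\psi_1 - \phi_1 &= 0, &&\text{that is } \psi_1 = \phi_1 \\
\psi_2 - \phi_1\psi_1 - \phi_2 &= 0, &&\text{that is } \psi_2 = \phi_1\psi_1 + \phi_2 = \phi_1^2 + \phi_2 \\
\psi_3 - \phi_1\psi_2 - \phi_2\psi_1 &= 0, &&\text{that is } \psi_3 = \phi_1\psi_2 + \phi_2\psi_1 = (\phi_1^3 + \phi_1\phi_2) + \phi_2\phi_1 = \phi_1^3 + 2\phi_1\phi_2 \\
&\;\;\vdots
\end{aligned} \tag{27}$$

Hence,

$$\psi_j = \phi_1\psi_{j-1} + \phi_2\psi_{j-2}, \quad j \ge 2 \ (\text{with } \psi_0 = 1,\ \psi_1 = \phi_1) \tag{28}$$

If we plug $\psi_1, \psi_2, \psi_3, \ldots$ from system (27) into equation (1), we get the AR(2) model as a linear time series process.

We can use the same method to convert AR(3), …, AR(p) into linear time series processes.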
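Recursion (28) generalises to AR(p): matching coefficients in $\phi(L)\psi(L) = 1$ with $\phi(L) = 1 - \phi_1 L - \cdots - \phi_p L^p$ gives $\psi_0 = 1$ and $\psi_j = \sum_{k=1}^{\min(j,p)} \phi_k \psi_{j-k}$ for $j \ge 1$. The sketch below implements that recursion (hypothetical name `ar_psi_weights`) and checks it against the closed forms in (27) for illustrative values $\phi_1 = 0.5$, $\phi_2 = 0.3$.

```python
def ar_psi_weights(phi, n):
    """First n+1 psi-weights of an AR(p) with coefficients phi = [phi_1, ..., phi_p]."""
    psi = [1.0]
    for j in range(1, n + 1):
        # psi_j = phi_1 psi_{j-1} + ... + phi_p psi_{j-p}, dropping terms with j - k < 0
        psi.append(sum(phi[k - 1] * psi[j - k] for k in range(1, min(j, len(phi)) + 1)))
    return psi

phi1, phi2 = 0.5, 0.3
psi = ar_psi_weights([phi1, phi2], 5)
print(psi[1], phi1)                          # psi_1 = phi1
print(psi[2], phi1 ** 2 + phi2)              # psi_2 = phi1^2 + phi2
print(psi[3], phi1 ** 3 + 2 * phi1 * phi2)   # psi_3 = phi1^3 + 2 phi1 phi2
```

With a single coefficient, `ar_psi_weights([phi1], n)` reproduces the AR(1) weights $\phi_1^j$ from (22).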

4. Moving Average (MA) Model

MA(1): The moving average model of order 1 has the following form

$$X_t = \mu + a_t - \theta_1 a_{t-1} \tag{29}$$

where $a_t$ is assumed to be a white noise series with mean zero and variance $\sigma_a^2$.

• Find $E(X_t)$.

$$E(X_t) = E(\mu) + E(a_t) - \theta_1 E(a_{t-1}) = \mu$$

Hence, the constant term in the MA process is the mean of $X_t$.

MA(q): The moving average model of order q has the following form

$$X_t = \mu + a_t - \theta_1 a_{t-1} - \theta_2 a_{t-2} - \cdots - \theta_q a_{t-q} \tag{30}$$

MA(∞): The moving average model of infinite order has the following form

$$X_t = \mu + a_t - \theta_1 a_{t-1} - \theta_2 a_{t-2} - \cdots \tag{31}$$

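Consider the linear time series process, equation (1),

$$X_t = \mu + \sum_{i=0}^{\infty} \psi_i a_{t-i} \tag{1}$$

That is, the linear time series process can be called an MA(∞) process, with $\psi_0 = 1$ and $\psi_i = -\theta_i$ for $i \ge 1$.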
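Because an MA(q) is a linear process with $\psi_0 = 1$, $\psi_i = -\theta_i$ for $i \le q$ and $\psi_i = 0$ for $i > q$, Remark 1 gives its autocovariances directly through (4), and they are zero beyond lag $q$. The sketch below applies (4) to illustrative MA(2) coefficients $\theta_1 = 0.4$, $\theta_2 = -0.3$ with $\sigma_a^2 = 1$; `ma_acvf` is a hypothetical name.

```python
def ma_acvf(theta, sigma2, h):
    """Autocovariance of X_t = mu + a_t - theta_1 a_{t-1} - ... - theta_q a_{t-q} via (4)."""
    psi = [1.0] + [-t for t in theta]   # psi-weights of the MA(q)
    h = abs(h)
    return sigma2 * sum(psi[i] * psi[i + h] for i in range(len(psi) - h))

theta = [0.4, -0.3]   # MA(2): X_t = mu + a_t - 0.4 a_{t-1} + 0.3 a_{t-2}
for h in range(5):
    print(h, ma_acvf(theta, 1.0, h))   # zero for every lag h > q = 2
```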

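• Can we use this method to convert MA(1), …, MA(q) into AR(∞)?

Answer: Yes, provided the MA process is invertible; for MA(1) this requires $|\theta_1| < 1$, as shown next.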

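• Converting the MA(1) process into AR(∞).

Consider the MA(1) process in the following form

$$X_t = \mu + a_t - \theta_1 a_{t-1} \tag{29}$$

or

$$X_t = \mu + \theta(L) a_t \tag{30}$$

where $\theta(L) = 1 - \theta_1 L$. Dividing both sides of equation (30) by $\theta(L)$, we have

$$\frac{1}{\theta(L)} X_t = \frac{\mu}{\theta(L)} + a_t$$

$$\frac{1}{\theta(L)} X_t = \frac{\mu}{1 - \theta_1} + a_t \tag{31}$$

(The lag operator has no effect on a constant parameter such as $\mu$: $L\mu = \mu$, so $L$ can be replaced by 1 when $\theta(L)$ acts on $\mu$.)

Let $c_0 = \dfrac{\mu}{1 - \theta_1}$; then we have

$$\frac{1}{\theta(L)} X_t = c_0 + a_t \tag{32}$$

$$\frac{1}{1 - \theta_1 L} X_t = c_0 + a_t \tag{33}$$

Given that $|\theta_1| < 1$, $\dfrac{1}{1 - \theta_1 L} = 1 + \theta_1 L + \theta_1^2 L^2 + \cdots$, so equation (33) can be written as

$$(1 + \theta_1 L + \theta_1^2 L^2 + \cdots) X_t = c_0 + a_t$$

$$X_t = c_0 - \theta_1 X_{t-1} - \theta_1^2 X_{t-2} - \cdots + a_t$$

Hence, we say that MA(1) is invertible when $|\theta_1| < 1$.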
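Invertibility means the shocks can be recovered from current and past observations. Expanding $a_t = (X_t - \mu)/(1-\theta_1 L)$ for $|\theta_1| < 1$ gives $a_t = \sum_{j \ge 0}\theta_1^j (X_{t-j} - \mu)$, which is the AR(∞) form above rearranged. The sketch below rebuilds the shocks of a simulated MA(1) this way. To make the finite sum exact it assumes the pre-sample shock is zero (so $X_0 = \mu + a_0$); $\theta_1 = 0.6$, $\mu = 1.5$ and the Gaussian shocks are illustrative.

```python
import random

theta1, mu, n = 0.6, 1.5, 40
rng = random.Random(4)
a = [rng.gauss(0.0, 1.0) for _ in range(n)]

# MA(1): X_t = mu + a_t - theta1 * a_{t-1}, with the pre-sample shock a_{-1} = 0
x = [mu + a[t] - theta1 * (a[t - 1] if t > 0 else 0.0) for t in range(n)]

# AR(infinity) inversion, truncated at the start of the sample:
# a_t = sum_{j=0}^{t} theta1^j * (X_{t-j} - mu)
a_rec = [sum(theta1 ** j * (x[t - j] - mu) for j in range(t + 1)) for t in range(n)]

print(max(abs(u - v) for u, v in zip(a, a_rec)))   # floating-point rounding only
```

The sum telescopes: each $-\theta_1 a_{t-j-1}$ inside $X_{t-j}$ is cancelled by the next term, which is why the recovery is exact here.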

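• Another way to convert the MA(1) process into AR(∞).

From equation (32),

$$\frac{1}{\theta(L)} X_t = c_0 + a_t \tag{32}$$

Rewrite it as follows:

$$\pi(L) X_t = c_0 + a_t \tag{33}$$

where $\pi(L) = 1 + \pi_1 L + \pi_2 L^2 + \cdots$.

From (32) and (33), we can say that $\dfrac{1}{\theta(L)} = \pi(L)$, or equivalently

$$\theta(L)\pi(L) = 1 \tag{34}$$

$$(1 - \theta_1 L)(1 + \pi_1 L + \pi_2 L^2 + \cdots) = 1$$

$$1 + \pi_1 L + \pi_2 L^2 + \pi_3 L^3 + \cdots - \theta_1 L - \theta_1\pi_1 L^2 - \theta_1\pi_2 L^3 - \cdots = 1 \tag{35}$$

Hence, matching the coefficients of each power of $L$, we have

$$\begin{aligned}
\pi_1 - \theta_1 &= 0 &&\Rightarrow \pi_1 = \theta_1 \\
\pi_2 - \theta_1\pi_1 &= 0 &&\Rightarrow \pi_2 = \theta_1\pi_1 = \theta_1^2 \\
\pi_3 - \theta_1\pi_2 &= 0 &&\Rightarrow \pi_3 = \theta_1\pi_2 = \theta_1^3 \\
&\;\;\vdots \\
\pi_j &= \theta_1^j
\end{aligned} \tag{36}$$

If we plug $\pi_1, \pi_2, \pi_3, \ldots$ from system (36) into equation (33), we get the MA(1) model as an AR(∞) process.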

5. Autoregressive Moving Average Model or ARMA model

The time series $\{X_t\}$ is an ARMA(1,1) process if it is stationary and satisfies (for every $t$)

$$X_t - \phi_1 X_{t-1} = \phi_0 + a_t - \theta_1 a_{t-1} \tag{37}$$

where $\{a_t\}$ is white noise with mean 0 and variance $\sigma_a^2$, and $\phi_1 - \theta_1 \ne 0$.

➢ For stationarity of ARMA(1,1), we assume that $|\phi_1| < 1$.

➢ For invertibility of ARMA(1,1), we assume that $|\theta_1| < 1$.

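• Find $E(X_t)$ of the ARMA(1,1) process.

Taking the expected value of the ARMA(1,1) model, we get

$$E(X_t) - \phi_1 E(X_{t-1}) = \phi_0 + E(a_t) - \theta_1 E(a_{t-1})$$

Since $X_t$ is stationary, $E(X_t) = E(X_{t-1})$; together with $E(a_t) = E(a_{t-1}) = 0$, this gives

$$E(X_t) - \phi_1 E(X_t) = \phi_0$$

$$E(X_t) = \frac{\phi_0}{1 - \phi_1}$$

• Using the lag operator $L$, ARMA(1,1) can be written more concisely as

$$\phi(L) X_t = \phi_0 + \theta(L) a_t \tag{38}$$

where $\phi(L) = 1 - \phi_1 L$ and $\theta(L) = 1 - \theta_1 L$.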

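• Convert the ARMA(1,1) process into a linear time series process.

From (38), we get

$$X_t = \frac{\phi_0}{\phi(L)} + \frac{\theta(L)}{\phi(L)} a_t \tag{30}$$

or

$$X_t = \frac{\phi_0}{\phi(1)} + \frac{\theta(L)}{\phi(L)} a_t \tag{31}$$

where $\phi(1) = 1 - \phi_1$, so that $\phi_0/\phi(1) = \mu$.

Consider the linear time series process of equation (2),

$$X_t = \mu + \psi(L) a_t \tag{2}$$

Hence,

$$\psi(L) = \frac{\theta(L)}{\phi(L)} \tag{32}$$

or

$$\phi(L)\psi(L) = \theta(L) \tag{33}$$

$$(1 - \phi_1 L)(1 + \psi_1 L + \psi_2 L^2 + \cdots) = 1 - \theta_1 L$$

$$1 + \psi_1 L + \psi_2 L^2 + \cdots - \phi_1 L - \psi_1\phi_1 L^2 - \cdots = 1 - \theta_1 L \tag{34}$$

From (34), matching the coefficients of each power of $L$, we get

$$\begin{aligned}
\psi_1 - \phi_1 &= -\theta_1 &&\Rightarrow \psi_1 = \phi_1 - \theta_1 \\
\psi_2 - \psi_1\phi_1 &= 0 &&\Rightarrow \psi_2 = \psi_1\phi_1 = (\phi_1 - \theta_1)\phi_1 \\
\psi_3 - \psi_2\phi_1 &= 0 &&\Rightarrow \psi_3 = \psi_2\phi_1 = (\phi_1 - \theta_1)\phi_1^2 \\
&\;\;\vdots
\end{aligned} \tag{35}$$

Hence,

$$\psi_i = (\phi_1 - \theta_1)\phi_1^{i-1}, \quad i \ge 1 \tag{36}$$

Note: Consider (35). It implies that

(1) if $\phi_1 = \theta_1$, then $\psi_i = 0$ for all $i \ge 1$ and $X_t = \mu + a_t$ is simply white noise around $\mu$, which is why $\phi_1 - \theta_1 \ne 0$ is assumed;

(2) since $|\phi_1| < 1$, the weights $\psi_i$ decay geometrically, so $\sum_{i=0}^{\infty} |\psi_i| < \infty$ and Remark 1 applies.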
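The ψ-weights in (36) can be cross-checked against the coefficient-matching recursion from (34) itself: $\psi_0 = 1$, $\psi_1 = \phi_1 - \theta_1$, and $\psi_j = \phi_1 \psi_{j-1}$ for $j \ge 2$. The sketch below (illustrative $\phi_1 = 0.8$, $\theta_1 = 0.3$; hypothetical name `arma11_psi`) computes both and reports the largest discrepancy.

```python
def arma11_psi(phi1, theta1, n):
    """psi_0..psi_n of ARMA(1,1) from matching coefficients in phi(L) psi(L) = theta(L)."""
    psi = [1.0, phi1 - theta1]       # psi_0 = 1; coefficient of L: psi_1 - phi1 = -theta1
    for _ in range(2, n + 1):
        psi.append(phi1 * psi[-1])   # coefficient of L^j, j >= 2: psi_j - phi1 psi_{j-1} = 0
    return psi[: n + 1]

phi1, theta1 = 0.8, 0.3
psi = arma11_psi(phi1, theta1, 6)
closed = [1.0] + [(phi1 - theta1) * phi1 ** (i - 1) for i in range(1, 7)]   # equation (36)
print(max(abs(p - c) for p, c in zip(psi, closed)))
```

Setting `phi1 == theta1` makes every weight after $\psi_0$ zero, matching point (1) of the note.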

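Remark 1

Let $\{a_t\}$ be a white noise series with mean 0 and variance $\sigma_a^2$, and let $\{X_t\}$ be a linear process. If $\sum_{i=0}^{\infty} |\psi_i| < \infty$, then the time series

$$X_t = \mu + \sum_{i=0}^{\infty} \psi_i a_{t-i} = \mu + \psi(L) a_t$$

is stationary with mean $\mu$ and autocovariance function

$$\gamma_h = \mathrm{Cov}(X_t, X_{t-h}) = \sigma_a^2 \sum_{i=0}^{\infty} \psi_i \psi_{i+h} \tag{4}$$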

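• Use Remark 1 to find $\mathrm{Var}(X_t)$ of the ARMA(1,1) process.

We know that $\psi_0 = 1$. The $\psi_i$ ($i \ge 1$) can be found from (36):

$$\psi_i = (\phi_1 - \theta_1)\phi_1^{i-1} \tag{36}$$

so that

$$\psi_1 = (\phi_1 - \theta_1), \quad \psi_2 = (\phi_1 - \theta_1)\phi_1, \quad \psi_3 = (\phi_1 - \theta_1)\phi_1^2, \quad \ldots$$

From (4),

$$\begin{aligned}
\mathrm{Var}(X_t) = \gamma_0 &= \sigma_a^2 \sum_{i=0}^{\infty} \psi_i \psi_i = \sigma_a^2 \sum_{i=0}^{\infty} \psi_i^2 \\
&= \sigma_a^2 (\psi_0^2 + \psi_1^2 + \psi_2^2 + \psi_3^2 + \cdots) \\
&= \sigma_a^2 [1 + (\phi_1 - \theta_1)^2 + (\phi_1 - \theta_1)^2\phi_1^2 + (\phi_1 - \theta_1)^2\phi_1^4 + \cdots] \\
&= \sigma_a^2 [1 + (\phi_1 - \theta_1)^2 \{1 + \phi_1^2 + \phi_1^4 + \cdots\}] \\
&= \sigma_a^2 \left[1 + (\phi_1 - \theta_1)^2 \left\{\frac{1}{1 - \phi_1^2}\right\}\right] = \sigma_a^2 \left[1 + \frac{(\phi_1 - \theta_1)^2}{1 - \phi_1^2}\right] \\
&= \sigma_a^2 \left[\frac{(1 - \phi_1^2) + (\phi_1 - \theta_1)^2}{1 - \phi_1^2}\right] = \sigma_a^2 \left[\frac{(1 - \phi_1^2) + (\phi_1^2 - 2\phi_1\theta_1 + \theta_1^2)}{1 - \phi_1^2}\right] \\
&= \sigma_a^2 \left[\frac{1 - 2\phi_1\theta_1 + \theta_1^2}{1 - \phi_1^2}\right]
\end{aligned}$$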
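As a final check, the closed form for $\gamma_0$ can be compared with a truncated version of $\sigma_a^2 \sum_i \psi_i^2$ using the weights from (36). The parameter pairs, $\sigma_a^2 = 1$, the truncation at 2000 terms, and the helper names are illustrative assumptions; with $|\phi_1| < 1$ both expressions should agree to many decimal places. The pair with $\theta_1 = 0$ recovers the AR(1) variance (11).

```python
def arma11_var_closed(phi1, theta1, sigma2):
    """gamma_0 = sigma2 * (1 - 2 phi1 theta1 + theta1^2) / (1 - phi1^2)."""
    return sigma2 * (1 - 2 * phi1 * theta1 + theta1 ** 2) / (1 - phi1 ** 2)

def arma11_var_series(phi1, theta1, sigma2, terms=2000):
    """gamma_0 = sigma2 * sum psi_i^2, with psi_0 = 1 and psi_i = (phi1 - theta1) phi1^(i-1)."""
    psi_sq = 1.0 + sum(((phi1 - theta1) * phi1 ** (i - 1)) ** 2 for i in range(1, terms))
    return sigma2 * psi_sq

pairs = [(0.8, 0.3), (-0.5, 0.4), (0.6, 0.0)]
for phi1, theta1 in pairs:
    print(phi1, theta1, arma11_var_closed(phi1, theta1, 1.0), arma11_var_series(phi1, theta1, 1.0))
```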