Computer-generated Text Detection Using Machine Learning: A Systematic Review

Beresneva Daria

Anti-Plagiat JSC

Moscow, Russia

dberesneva@ap-team.ru

Abstract––Computer-generated text or artificial text

is now abundant on the web, ranging from basic random word salads to text produced by web scraping. In this paper, we present a systematic review of existing automated methods aimed at distinguishing natural texts from artificially generated ones. The methods were selected according to fixed criteria, and each of them reports a numerical result. We further provide a summary of the methods considered. Comparisons, whenever possible, use common evaluation measures and control for differences in experimental set-up. Such comparisons are not easy, as benchmarks, measures, and methods for evaluating artificial text detectors are still evolving.

I. INTRODUCTION

Most artificial content is generated to feed fake web sites designed to distort search engine indexes: at the scale of a search engine, the use of automatically generated texts renders such sites harder to detect than sites that copy existing pages. Artificial content can contain text (word salad) as well as data plots, flow charts, and citations.

Examples of automatically generated content include:

• Text translated by an automated tool without human review or curation before publishing.
• Text generated through automated processes, such as Markov chains.
• Text generated using automated synonymizing or obfuscation techniques.

An artificial text looks like reasonable text from a distance

but is easily spotted as nonsense by a human reader. For

instance [24]:

“Many biologists would agree that, had it not been for

stable symmetries, the evaluation of 2 bit architectures might

never have occurred. A technical challenge in networking is

the simulation of agents. The notion that scholars interfere

with SCSI disks is rarely numerous. To what extent can the

Ethernet be developed to accomplish this objective?”

Although a human reader makes this judgment by common sense, natural texts also have many simple statistical properties that are not matched by typical word salads, such as the average sentence length, the type/token ratio, and the distribution of grammatical words [7], so such texts can often be detected automatically.

By definition [33], a systematic review answers a defined research question by collecting and summarizing all empirical evidence that fits pre-specified eligibility criteria. Thus, the aim of this paper is to review existing methods of artificial text detection. We survey these efforts, their results, and their limitations. In spite of recent advances in evaluation methodology, many uncertainties remain as to the effectiveness of techniques for filtering generated text and the validity of artificial text detection methods.

The rest of this paper is organized following systematic review guidelines [22]. Section II describes how the literature was collected from open Internet sources. Section III presents the selected methods with short descriptions. Section IV compares the methods. Section V recaps our main findings and discusses possible extensions of this work.

II. LITERATURE SEARCH

Following the rules for systematic literature reviews [22], the articles were selected as follows.

We searched the literature for articles that describe methods for filtering automatically generated texts and evaluate their effectiveness. To find articles we used the web libraries Google Scholar, Elibrary.ru, and dl.acm.org. We considered only articles in Russian and English. The key phrases of the search queries included “artificial text”, “machine generated text”, “spam classification”, and “Web Spam Filtering”.

The selection of primary studies involved about a hundred articles. We considered the first 30 pages of the search results and then changed the query. If a query gave no significant results within the first 15 pages, we changed it as well. Articles whose results were derived with an incorrect methodology, or whose results are represented better elsewhere, were omitted.

Finally, we selected 14 articles that meet the following criteria:

• The article explores the problem of recognizing artificially created text.
• The method uses machine learning algorithms.
• The source of the real data used is presented.
• The method described is applicable to Russian and/or English.
• The article was released no earlier than 2005.
• The article must be published.
• A numerical result of the algorithm (accuracy/F-measure) is presented.
• The threshold value of effectiveness of the method is not less than 0.75.

III. THE METHODS OF ARTIFICIAL TEXT DETECTION

As said before, the way artificial content is detected depends on the method by which it was generated. Let us consider various existing methods.

A. Frequency counting method

To discover whether a text was generated by a machine translation (MT) system or was written or translated by a human, the paper [1] uses the correlations of neighboring words in the text.

Take the two thousand most frequently used words of the Russian language. Let $A_{ij}$ be a matrix whose entry at the intersection of the i-th row and j-th column is the frequency with which the word pair (i, j) occurs in the language (based on the fixed text collection ‘ruscorpora’). A function $\mathrm{Cor}(i, j)$, computed from the ratios $A_{ij}/A_i$ and $A_{ij}/A_j$, measures the degree of "compatibility" of the words with numbers i and j, where $A_i$ and $A_j$ are the corresponding row and column sums.

The hypothesis is that in an artificial text the distribution of word pairs over the values of Cor is distorted: the number of pairs that are rare in the language is higher than normal, and the number of frequent pairs is lower.

To test it, the authors used the machine-learning algorithm TreeNet. The loss function was the "sum of the logarithms of the probabilities of misclassification".

The training sample contained 2000 original and 250 artificial texts; the test sample contained 500 original and 245 automatically generated texts.

Parameters:
- Regularization step: 0.01;
- The proportion of the training sample used for training at each step: 0.5;
- The number of iterations was chosen to minimize the error on the test set.

Factors: the number of word pairs in the text whose Cor value lies within a predetermined range (for example, a first range starting at 0, and so on up to the range Cor > 1).

Error threshold: 1% and 5% on the test subset of 500 original texts. For these thresholds, recall is then measured on the corresponding subset of unnatural documents.

The output of the algorithm is a number that determines the probability that an item belongs to each of the two classes. At an error of 1%, 77.95% of the unnatural texts are detected; at an error of 5%, 90.61%.

B. Linguistic features method

Another approach to machine translation detection [2] uses not only statistical but also linguistic characteristics of the text. It relies on classifiers that learn to distinguish human reference translations from machine translations. This approach is fully automated and independent of source language, target language, and domain.

The dataset consists of 350,000 aligned Spanish-English sentence pairs taken from published computer software manuals and online help documents. Training and test data were evenly divided between English reference sentences and Spanish-to-English translations.

For each sentence, 46 linguistic features were automatically extracted by performing a syntactic parse, which provides a detailed assessment of the branching properties of the parse tree. The features fall into two broad categories:

1) Perplexity features extracted using the CMU-Cambridge Statistical Language Modeling Toolkit [8].
2) Linguistic features, which fall into several subcategories: branching properties of the parse, function word density, constituent length, and other miscellaneous features.

A set of automated tools was then used to construct decision trees [9] based on the features extracted from the reference and MT sentences. Decision trees were trained on all 180,000 training sentences and evaluated against the 20,000 held-out test sentences.

Using only perplexity features or only linguistic features yields an accuracy below the level required by our literature selection criteria, but combining the two sets of features yields the highest accuracy, 82.89%.

C. Machine translation detection using SVM algorithm

The authors of the paper [4] investigate whether a support vector machine (SVM) can distinguish machine-translated text from human-written text (both original text and human translations).

For that, the authors took English and French parallel corpora. The experiment was carried out on several data sets:

• the Canadian Hansard (949 human-written documents and their machine translations for training, and 58 human-written documents and their machine translations held aside for testing);
• a basket of six public Government of Canada web sites (21 436 original documents and 21 436 machine-translated documents);
• a basket of Government of Ontario web sites (17 583 nominally human-written documents and no machine-translated text).

In each experiment, an SVM was used to classify text as human-written English (hu-e), human-written French (hu-f), machine-translated English (mt-e), or machine-translated French (mt-f). The machine translations were produced en masse by Microsoft’s Bing Translator.

Simple unigram frequencies, scaled for the length of each document, were extracted from the training texts. Numbers and symbols were removed, leaving only words and word-like tokens.

The training data were then classified using 10-fold cross-validation with LibSVM. For the Hansard data, an overall accuracy of 99.89% was achieved, with an F-measure of 0.999 in each class. On the Government of Canada web sites, the classifier exceeded the chance baseline of 25% accuracy, achieving an average F-measure of 0.98. The experiment with the Government of Ontario data was largely unsuccessful.

D. The method of phrase analysis

The method [6] uses a set of computationally inexpensive features to automatically detect low-quality Web text translated by statistical machine translation systems. The method uses only monolingual text as input; therefore, it is applicable for refining data produced by a variety of Web-mining activities. The mechanism is evaluated on English-Japanese parallel documents.

A sentence translated by an existing MT system is characterized by the phrase salad phenomenon (see Introduction): each phrase, a sequence of consecutive words, is fluent and grammatically correct, but fluency and grammatical correctness are both poor across phrases. Based on this observation, the authors define features that capture a phrase salad by examining local and distant phrases. These features evaluate the fluency, grammaticality, and completeness of non-contiguous phrases in a sentence. Features extracted from human-generated text represent the similarity to human-generated text; features extracted from machine-translated text represent the similarity to machine-translated text.

Each feature is finally normalized to have zero mean and unit variance. In the feature space, a support vector machine (SVM) classifier [10] is used to determine the likelihoods of machine-translated and human-generated sentences. By contrasting these feature weights, one can effectively capture phrase salads in the sentence.

Evaluation results show that the proposed method achieves an accuracy of 95.8% for sentences and 80.6% for text in noisy Web pages.

E. Artificial content detection using lexicographic features

By and large, lexicographic characteristics can be used not only for machine translation detection but also in the general case (patchwork, word stuffing, Markovian generators, etc.). Texts written by humans exhibit numerous statistical properties that are strongly related to the linguistic characteristics of the language and, to a certain extent, to the author’s style.

The method described in [6] uses the following feature set (a thorough presentation of these indices is given in [10]) to train a decision tree using the C4.5 algorithm [5]:

• the mean and the standard deviation of word length;
• the mean and the standard deviation of sentence length;
• the ratio of grammatical words;
• the ratio of words that are found in an English dictionary;
• the ratio between the number of tokens (i.e. the number of running words) and the number of types (the size of the vocabulary), which measures the richness or diversity of the vocabulary;
• the χ2 score between the observed word frequency distribution and the distribution predicted by the Zipf law;
• Honoré’s score, which is related to the ratio of hapax legomena (types which occur only once in a given text) [12]. This score is defined as:

$$H = 100 \cdot \frac{\log N}{1 - \frac{V(1)}{N}}$$

where N is the number of tokens and V(m) is the number of words which appear exactly m times in the text;
• Sichel’s score, which is related to the proportion of dislegomena [13]:

$$S = \frac{V(2)}{N}$$

• Simpson’s score, which measures the growth of the vocabulary [21]:

$$D = \sum_{m} V(m)\,\frac{m}{N}\,\frac{m-1}{N-1}$$

The positive instances in the training corpus contain human productions extracted from Wikipedia; the negative instances contain a mixture of automatically generated texts, produced by the generators mentioned above (patchwork, word stuffing, and Markovian generators) trained on another section of Wikipedia. For testing, the authors built one specific test set for each generator, containing a balanced mixture of natural and artificial content.

The best F-measure of the fake content detector based on lexicographic features, across the generation strategies (patchwork, word stuffing, and Markovian generators), is 100% for 10k words generated by word stuffing. For the patchwork generator the F-measure exceeded 78%; for Markov models, however, the method failed: Markovian generators are able to produce content that the decision tree classifier cannot separate from natural text.
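As an illustration of the lexicographic scores used in this subsection, the following minimal sketch computes the token/type ratio and the Honoré, Sichel, and Simpson scores from the frequency spectrum V(m). The function name `lexicographic_scores` and the simple regular-expression tokenizer are assumptions made for this example; they are not taken from the reviewed paper, whose preprocessing may differ.

```python
import math
import re
from collections import Counter

def lexicographic_scores(text):
    """Return vocabulary-richness scores for a text (illustrative sketch)."""
    # Assumed tokenizer: lowercase runs of letters and apostrophes.
    tokens = re.findall(r"[a-z']+", text.lower())
    n = len(tokens)                            # N: number of tokens
    if n < 2:
        raise ValueError("need at least two tokens")
    type_counts = Counter(tokens)              # frequency of each type
    spectrum = Counter(type_counts.values())   # V(m): types seen exactly m times
    v1, v2 = spectrum[1], spectrum[2]
    # Honore's score is undefined when every token is a hapax (V(1) == N).
    honore = 100 * math.log(n) / (1 - v1 / n) if v1 < n else float("inf")
    sichel = v2 / n
    simpson = sum(v * (m / n) * ((m - 1) / (n - 1)) for m, v in spectrum.items())
    return {
        "tokens_per_type": n / len(type_counts),
        "honore": honore,
        "sichel": sichel,
        "simpson": simpson,
    }
```

In a detector of the kind described above, such scores would be only part of the feature vector passed to the decision tree, alongside the word-length, sentence-length, and dictionary-based features.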

F. Perplexity-based filtering

Another way to detect the aforementioned kinds of generated text is based on conventional n-gram language models. Briefly, n-gram language models represent sequences of words under the hypothesis of a Markovian dependency of restricted order, with n typically between 2 and 6. For instance, with a 3-gram model, the probability of a sequence of k > 2 words is given by:

$$p(w_1 \dots w_k) = p(w_1)\,p(w_2 \mid w_1) \cdots p(w_k \mid w_{k-2} w_{k-1})$$

A language model is entirely defined by the set of conditional probabilities $\{p(w \mid h),\ h \in H\}$, where h denotes the history of w, that is, the n−1 words preceding it, and H is the set of all sequences of length n−1 over a fixed vocabulary.

For example, suppose the goal is to compute the probability P(w|h) of a word w given some history h. If the history h is “the sun is so bright that”, we may want to know the probability that the next word is “the”: P(the | the sun is so bright that).

These conditional probabilities are easily estimated from a corpus of raw text: the maximum likelihood estimate for $p(w \mid h)$ is obtained as the ratio between the count of the sequence hw and the count of the history h. To ensure that all terms $p(w \mid h)$ are non-null, these estimates need to be smoothed using various heuristics [11].
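The count-ratio estimate can be sketched for a 3-gram model as follows. The helper names `train_trigram_counts` and `cond_prob` are hypothetical, and add-alpha smoothing is used here only as one simple stand-in for the smoothing heuristics surveyed in [11]; it is not claimed to be the technique used in the reviewed work.

```python
from collections import Counter

def train_trigram_counts(tokens):
    """Count each trigram and each two-word history that has a successor."""
    trigram_counts = Counter(zip(tokens, tokens[1:], tokens[2:]))
    history_counts = Counter()
    for (w1, w2, _), count in trigram_counts.items():
        history_counts[(w1, w2)] += count
    return trigram_counts, history_counts, set(tokens)

def cond_prob(word, history, counts, alpha=0.0):
    """Estimate p(word | history) for a two-word history tuple.

    alpha == 0 gives the maximum likelihood estimate count(hw) / count(h);
    alpha > 0 applies add-alpha smoothing so that no term is zero.
    """
    trigram_counts, history_counts, vocab = counts
    numerator = trigram_counts[history + (word,)] + alpha
    denominator = history_counts[history] + alpha * len(vocab)
    if denominator == 0:
        raise ValueError("unseen history and no smoothing")
    return numerator / denominator
```

For the toy corpus "the cat sat on the cat mat", the history ("the", "cat") is followed once by "sat" and once by "mat", so the unsmoothed estimate of p(sat | the cat) is 1/2.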

A standard way to estimate how well a language model p predicts a text $T = w_1 \dots w_N$ is to compute its perplexity over T, where the perplexity is defined as:

$$PP(p_T) = 2^{H(T, p)} = 2^{-\frac{1}{N} \sum_{i=1}^{N} \log_2 p(w_i \mid h_i)}$$

The baseline filtering system uses conventional n-gram models (with n=3 and n=4) to detect fake content, based on the assumption that texts having a high perplexity w.r.t. a given language model are more likely to be forged than texts with a low perplexity. Here, the perplexities are computed with the SRILM Toolkit [18].
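The perplexity definition can be sketched directly in code. In this minimal example the language model is passed in as a function `cond_prob(word, history)`; that interface, the `order` parameter, and the toy `uniform_model` are assumptions made for illustration and do not reflect how the SRILM Toolkit is invoked.

```python
import math

def perplexity(tokens, cond_prob, order=3):
    """Perplexity of `tokens` under a model given as cond_prob(word, history)."""
    if not tokens:
        raise ValueError("empty text")
    log_sum = 0.0
    for i, word in enumerate(tokens):
        # History: up to order-1 preceding words (shorter at the text start).
        history = tuple(tokens[max(0, i - order + 1):i])
        log_sum += math.log2(cond_prob(word, history))
    return 2 ** (-log_sum / len(tokens))

def uniform_model(vocab_size):
    """Toy model that assigns probability 1/vocab_size to every word."""
    return lambda word, history: 1.0 / vocab_size
```

A uniform model over 8 words has perplexity 8 on any text, which is a convenient sanity check. A filter in the spirit of the baseline would flag a text when its perplexity exceeds a threshold tuned on held-out data; the value of such a threshold is not given in the source.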

The results depend on the kind of text generator and on the text length (2k and 5k words). With 3-grams, the best F-measures are 90% for 5k patchwork, 100% for word stuffing, and 96% for the second-order Markov model. With 4-grams, the best results are 90% for patchwork, 100% for word stuffing, and 97% for the second-order Markov model.

G. A fake content detector based on relative entropy

This method also seeks to detect Markovian generators, patchworks, and word stuffing, and it uses short-range information between words [14]. Useful n-grams are those with a strong dependency between the first and the last word. Language model pruning can be performed using conditional probability estimates [15] or relative entropy between n-gram distributions [16]. Instead of removing n-grams from a large model, one can also start with a small model and then insert those higher-order n-grams that improve performance until a maximum size is reached.

The entropy-based detector uses a similar strategy to score n-grams according to the semantic relation between their first and last words. This is done by finding useful n-grams, that is, n-grams that can help detect artificial text.

Let {p(·|h)} denote an n-gram language model and h’ the truncated history, that is, the suffix of length n − 2 of h. For each history h, one can compute the Kullback-Leibler (KL) divergence between the conditional distributions p(·|h) and p(·|h’) [17]:

$$KL\big(p(\cdot \mid h) \,\|\, p(\cdot \mid h')\big) = \sum_{w} p(w \mid h) \log \frac{p(w \mid h)}{p(w \mid h')}$$

which reflects the information lost in the simpler model when the first word in the history is dropped.

To score n-grams, the pointwise KL divergence is used, that is, the contribution of a single word w to the sum above:

$$PKL(h, w) = p(w \mid h) \log \frac{p(w \mid h)}{p(w \mid h')}$$

The penalty score assigned to an n-gram (h, w) is:

$$S(h, w) = \max_{v} PKL(h, v) - PKL(h, w)$$

This score represents a progressive penalty for not respecting the strongest relationship between the first word of the history h and a possible successor, $\arg\max_{v} PKL(h, v)$. The total score S(T) of a text T is computed by averaging the scores of all its n-grams with known histories.
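The scoring scheme can be sketched as follows, taking the pointwise KL term of a word w to be its summand in the KL divergence, p(w|h) log(p(w|h) / p(w|h’)). The two conditional distributions are passed as plain dictionaries, and the function names are hypothetical; the sketch also assumes a smoothed truncated model, so that p(w|h’) > 0 wherever p(w|h) > 0.

```python
import math

def pkl(p_full, p_trunc, w):
    """Pointwise KL term of w: p(w|h) * log(p(w|h) / p(w|h'))."""
    p = p_full.get(w, 0.0)
    if p == 0.0:
        return 0.0  # convention: 0 * log 0 = 0
    return p * math.log(p / p_trunc[w])

def kl(p_full, p_trunc):
    """KL divergence between p(.|h) and p(.|h') as a sum of pointwise terms."""
    return sum(pkl(p_full, p_trunc, w) for w in p_full)

def penalty(p_full, p_trunc, w):
    """Penalty S(h, w): gap between the best pointwise term and that of w."""
    best = max(pkl(p_full, p_trunc, v) for v in p_full)
    return best - pkl(p_full, p_trunc, w)
```

The successor with the largest pointwise term receives a penalty of zero, and every other successor receives a positive penalty. Averaging `penalty` over the n-grams of a text whose histories are known to the model gives the text score S(T).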

The method gives an F-measure of 93% for 5k patchwork and 81% for 2k; 98% for 5k word stuffing and 92% for 2k; for Markov models it gives 99% for both 5k and 2k (all for 3-grams). With 4-grams, satisfactory results are obtained only for the second-order Markov model: 97% for 5k and 88% for 2k.

H. Method of Hidden Style Similarity

The method [3] identifies automatically generated (by

template or script) texts on the Internet using a (hidden) style

similarity measure based on extra-textual features in HTML

source code. The authors also built a clustering algorithm to

sort the generated texts according to this measure. The method

includes a web page based on a template and style of writing.

The first step is to extract the content by removing any

alphanumeric character from the HTML documents and

keeping into account the remaining characters using n-grams.

The second step is to model the “style” of the documents by

converting the content into a model suitable for comparison.

For frequencies based distances, the second step consists of

splitting up the documents into multi-sets of parts (frequencies

vectors). For set intersection based distances, the split is done

into sets of parts. Depending on the granularity expected, these

parts may be sequences of letters (n-grams), words, sequences

of words, sentences or paragraphs. The parts may overlap or

not.

The similarity measure of the texts is computed using

Jaccard index:

Jaccard ( D1 , D2 )

| D1 D2 |

.

D1 D2

For similarity clustering is used the technique called

fingerprinting: the fingerprint of a document D is stored as a

sorted list of m integers.

Each document is divided into parts. P is the set of all

possible parts. One has to fix at random a linear ordering on P

(≺) and represent each document D ⊆ P by its m lowest

elements according to ≺ .

If ≺ is chosen at random over all permutations over P then

for two random documents D1, D2 ⊆ P and for m growing, it

is shown in [20] that

Min ,m ( D1 ) Min ,m ( D2 ) Min ,m | ( D1 D2 ) |

Min ,m ( D1 D2 )

distances between groups maximizes. The algorithm proceeds

by grouping the two texts separated by the smallest distance

and by recounting the average distance between all other texts

and this new set, and so on until the establishment of a single

set.

As the authors don’t provide any numerical results of the

method’s work, we have implemented the method using the

source Java code provided by SciDetect developers. The

algorithm was adapted to russian texts (particularly, the

regular expression for text tokenization was changed). As a

training set 600 machine translated text (from English:

SciGen, MathGen, PropGen, PhythGen to Russian) were

taken, and the words were lemmatizated.

Figure 1 shows the documents distribution of the testing set

in terms of distance to the nearest neighbor from the training

set. The bold line in the middle is the respective index to the

“Rooter” article – a corrected by human machine-translated

text, adjusted to scientific article view.

is a non-biased estimator of Jaccard (𝐷1 , 𝐷2 ).

Then the text clusters are built according to the chosen

threshold of similarity.

For the experiment a corpus of five million HTML

documents crawled from the web were used. At certain

threshold, the method provides 100% accuracy of detection

according to URLs prefixes.

I. Method using nearest neighbor classification

The next approach [21] was invented to detect documents

automatically generated using the software SCIGen, MathGen,

PropGen and PhysGen: the programs that generate random

texts without any meaning, but having the appearance of

research papers in the field of computer science, and

containing summary, keywords, tables, graphs, figures,

citations and bibliography.

For this, the distances between a text and others (intertextual distances) are computed. Then these distances are used

to determine which texts, within a large set, are closer to each

other and may thus be grouped together. Inter-textual distance

depends on four factors: genre, author, subject and epoch.

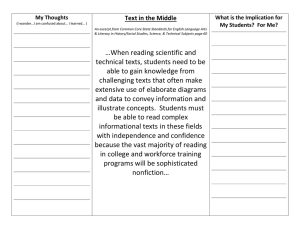

Fig 1. DISTANCE TO THE NEAREST NEIGHBOR IN THE TRAINING SET

The method gave the 100% accuracy in our dataset.

IV. CHOOSING A METHOD

The distance index is as follows:

Drel ( A, B )

| F

i( A B )

iA

FiB |

NA NB

where 𝑁𝐴 and 𝑁𝐵 are the number of word-tokens in texts A and

B respectively; 𝐹𝑖𝐴 and 𝐹𝑖𝐵 - the absolute frequencies of a type

i in texts A and B respectively; |𝐹𝑖𝐴 − 𝐹𝑖𝐵 |is the absolute

difference between the frequencies of a type i in A and B

respectively. This index can be interpreted as the proportion of

different words in both texts. To use this distance index the

texts should be of the same size. If the two texts are not of the

same lengths in tokens, the formula must be modified.

Then with nearest neighbor classification the distances

between texts of the same group are minimized and the

Every piece of artificial content is generated for a specific purpose. For instance [4]:

1) To make a fake scientific paper for academic publishing or to increase the percentage of originality of an article;
2) To send out spam e-mails and spam blog comments with random texts to avoid being detected by conventional methods such as hashing;
3) To reach the top of search engine response lists [18];
4) To generate “fake friends” to boost one’s popularity in social networks [19].

In our paper, we focus only on the first point.

It is important to recognize the aim of fake content for its subsequent detection. Different generation strategies require different approaches to find it. For example, algorithms for detecting word salad are clearly possible and are not particularly difficult to implement. A statistical approach based on Zipf's law of word frequency has potential for detecting simple word salad, as do grammar checking and the use of natural language processing. Statistical Markovian analysis, where short phrases are checked to determine whether they can occur in normal English sentences, is another statistical approach that would be effective against completely random phrasing but might be fooled by dissociated press methods. Combining linguistic and statistical features can improve the result of an experiment. By contrast, texts generated with stochastic language models appear much harder to detect.

Comparing the machine translation detection methods considered, one may notice that the SVM algorithm with unigram frequencies as features gives the best result in that domain.
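The Zipf-based check mentioned above can be illustrated with a minimal sketch that fits a line to the rank-frequency curve on a log-log scale. Zipf's law predicts a frequency roughly proportional to 1/rank, that is, a slope near −1, while words drawn uniformly at random tend to give a much flatter curve. The function name `zipf_slope` and the tokenizer are assumptions of this example, and any decision threshold on the slope would have to be tuned on real data.

```python
import math
import re
from collections import Counter

def zipf_slope(text):
    """Least-squares slope of log(frequency) against log(rank)."""
    # Assumed tokenizer: lowercase runs of letters and apostrophes.
    counts = Counter(re.findall(r"[a-z']+", text.lower()))
    freqs = sorted(counts.values(), reverse=True)
    if len(freqs) < 2:
        raise ValueError("need at least two distinct words")
    xs = [math.log(rank) for rank in range(1, len(freqs) + 1)]
    ys = [math.log(f) for f in freqs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var
```

A text whose word frequencies are exactly 6, 3, and 2 (that is, 6/rank) yields a slope of −1, while a text in which every word occurs once yields a slope of 0.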

One also needs to estimate the amount of data available: the training data for machine learning approaches and the testing sample. Text corpora are chosen depending on the aim of the experiment and the possibility of obtaining them. Like each generation strategy, each amount of data calls for a different approach. For instance, small training samples permit the use of indices such as Jaccard or Dice [5] to compute the similarity or distance between documents. For big datasets, one can use linguistic features and variations of the Support Vector Machine and Decision Tree algorithms. Table I summarizes the results of the described methods. The numerical results are those provided by the authors of the articles, except for the last one.

TABLE I. SUMMING UP THE METHODS

№ | The method | Field of work | Dataset/language | The best result
1 | Frequency counting method [1] | Machine translation detection | 2000 original texts, 250 artificial; Russian | 90.61% accuracy
2 | The method of linguistic features [2] | Machine translation detection | 350,000 aligned Spanish-English sentence pairs | 82.89% accuracy
3 | Unigrams + SVM algorithm method [4] | Machine translation detection | English and French parallel corpora | 99.89% accuracy
4 | Phrase analysis method [6] | Machine translation detection | English-Japanese parallel documents | 95.8% accuracy for sentences
5 | Lexicographic features method [14] | Machine translation + text generator detection | 2k, 5k, 10k of generated words | 100% F-measure for word stuffing
6 | Perplexity-based filtering [14] | Text generator detection | 2k, 5k, 10k of generated words | For 5k; 3-grams: 90% F-measure (patchwork), 100% (word stuffing), 96% (2nd Markov); 4-grams: 90% for patchworks, 100% for word stuffing and 97% for 2nd Markov model
7 | A fake content detector based on relative entropy [14] | Text generator detection | 2k, 5k, 10k of generated words | 3-grams: 93% F-measure for 5k of patchwork; 98% for 5k word stuffing; 99% for Markov models
8 | Hidden Style Similarity method [3] | Text generator detection | A corpus of 5 million html pages | 100% accuracy at certain threshold
9 | Distance index + NN [21] | Text generator detection | 1600 artificial documents + 8200 original; English | 100% accuracy

V. CONCLUSION

This work presents the results of a systematic review of artificial content detection methods. About a hundred articles were considered for this review; perhaps one-sixth of them met our selection criteria. All the presented methods give good results in practice, but it makes no sense to choose a single best one: every approach works under different conditions, such as the text generation strategy, the dataset size, and the quality of the data. Thus, before choosing which method to use, one needs to determine the features of the method that generated the artificial content. In any case, each approach involves trade-offs that require further evaluation.

In the future, we plan to compare all the presented approaches on standardized datasets and to perform a robustness analysis across different datasets.

REFERENCES

[1] Grechnikov E.A., Gusev G.G., Kustarev A.A., Raigorodsky A.M.: Detection of Artificial Texts, Digital Libraries: Advanced Methods and Technologies, Digital Collections: Proceedings of the XI All-Russian Research Conference RCDL'2009. Petrozavodsk: KRC RAS, 2009. Pp. 306-308.
[2] Simon Corston-Oliver, Michael Gamon and Chris Brockett: A machine learning approach to the automatic evaluation of machine translation, Proceeding ACL '01 Proceedings of the 39th Annual Meeting on Association for Computational Linguistics, Pages 148-155
[3] Tanguy Urvoy, Thomas Lavergne, Pascal Filoche: Tracking Web Spam with Hidden Style Similarity. AIRWEB’06, August 10, 2006, Seattle, Washington, USA.
[4] Thomas Lavergne, Tanguy Urvoy, Francois Yvon: Filtering artificial texts with statistical machine learning Techniques. Language Resources and Evaluation, March 2011, Volume 45, Issue 1, pp 25-43
[5] Witten IH, Frank E: Data Mining: Practical Machine Learning Tools and Techniques with Java Implementations, Morgan Kaufmann Publishers (2011)
[6] Yuki Arase, Ming Zhou: Machine Translation Detection from Monolingual Web-Text. Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, pages 1597-1607, Sofia, Bulgaria, August 4-9 2013
[7] Baayen R.H.: Word Frequency Distributions. Kluwer Academic Publishers, Amsterdam, The Netherlands, (2001)
[8] Clarkson, P. and R. Rosenfeld. 1997. Statistical Language Modeling Using the CMU-Cambridge Toolkit. Proceedings of Eurospeech97. 2707-2710.
[9] Chickering, D. M., D. Heckerman, and C. Meek. 1997. A Bayesian approach to learning Bayesian networks with local structure. In Geiger, D. and P. Punadlik Shenoy (Eds.), Uncertainty in Artificial Intelligence: Proceedings of the Thirteenth Conference. 80-89
[10] Vladimir N. Vapnik. 1995. The nature of statistical learning theory. Springer.
[11] Chen SF, Goodman JT (1996) An empirical study of smoothing techniques for language modeling. In: Proceedings of the 34th Annual Meeting of the Association for Computational Linguistics (ACL), Santa Cruz, pp 310-318
[12] Honore A (1979) Some simple measures of richness of vocabulary. In: Association for Literary and Linguistic Computing Bulletin, vol 7(2), pp 172-177
[13] Sichel H (1975) On a distribution law for word frequencies. In: Journal of the American Statistical Association, vol 70, pp 542-547
[14] Thomas Lavergne, Tanguy Urvoy, Francois Yvon: Detecting Fake Content with Relative Entropy Scoring, PAN 2008
[15] K. Seymore and R. Rosenfeld: Scalable backoff language models, In ICSLP ’96, volume 1, pp. 232-235, Philadelphia, PA, (1996).
[16] A. Stolcke. Entropy-based pruning of backoff language models, 1998
[17] C. D. Manning and H. Schutze: Foundations of Statistical Natural Language Processing, The MIT Press, Cambridge, MA, 1999.
[18] Gyongyi Z, Garcia-Molina H (2005) Web spam taxonomy. In: First International Workshop on Adversarial Information Retrieval on the Web (AIRWeb 2005)
[19] Heymann P, Koutrika G, Garcia-Molina H (2007) Fighting spam on social web sites: A survey of approaches and future challenges. IEEE magazine on Internet Computing 11(6):36-45
[20] A. Z. Broder. On the resemblance and containment of documents. In Proceedings of Compression and Complexity of Sequences, page 21, 1998
[21] Cyril Labbé, Dominique Labbé. Duplicate and fake publications in the scientific literature: how many SCIgen papers in computer science?. Scientometrics, Akadémiai Kiadó, 2012, p.10.
[22] Guidelines for Systematic Reviews, URL: http://www.systematicreviewsjournal.com/
[23] Random Text Generator Online, URL: http://randomtextgenerator.com/
[24] Systematic reviews and meta-analyses: a step-by-step guide, URL:http://www.ccace.ed.ac.uk/research/softwareresources/systematic-reviews-and-meta-analyses