Lectures on Waves and Oscillations


Vibrations, waves and optics

Course notes for PH221

Ananda DasGupta

IISER-Kolkata

Spring Semester - 2011

Part I

Vibrations


Chapter 1

Oscillations in conservative systems

1.1

The simple harmonic oscillator

The equation of motion of a simple harmonic oscillator (e.g. a box attached to a spring fixed at one end, moving on a smooth horizontal table top) is

$$ m\frac{d^2x}{dt^2} = -kx. \tag{1.1} $$

Introducing the parameter $\omega_0 \equiv \sqrt{k/m}$, this becomes

$$ \frac{d^2x}{dt^2} + \omega_0^2 x = 0. \tag{1.2} $$

The particle will oscillate about $x = 0$, as we will see below.

If a constant force $F$ is superposed on a SHO, we have

$$ m\frac{d^2x}{dt^2} = -kx + F = -k\left(x - \frac{F}{k}\right). $$

Substituting $X = x - F/k$, we see that

$$ \frac{d^2X}{dt^2} + \omega_0^2 X = 0. $$

So the particle will oscillate about $X = 0$, i.e. about $x = F/k$. Thus, all that a constant force does is to shift the equilibrium position. (Thus, letting the box hang vertically from the same spring will not change the frequency of oscillations - all that will happen is that the equilibrium position will shift down by a distance $mg/k$.)
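The claim can be checked numerically with a short Python sketch. The values $m = 1$, $k = 4$, $F = 2$ are illustrative choices made here, not taken from the text. The sketch integrates the equation of motion with and without the constant force, using a standard fourth-order Runge-Kutta (RK4) step, and compares the centre and the period of the two oscillations.

```python
import math

def rk4_positions(accel, x0, v0, dt, n_steps):
    """Integrate x'' = accel(x, v) with the classical RK4 scheme; return the sampled x values."""
    xs = [x0]
    x, v = x0, v0
    for _ in range(n_steps):
        k1x, k1v = v, accel(x, v)
        k2x, k2v = v + 0.5 * dt * k1v, accel(x + 0.5 * dt * k1x, v + 0.5 * dt * k1v)
        k3x, k3v = v + 0.5 * dt * k2v, accel(x + 0.5 * dt * k2x, v + 0.5 * dt * k2v)
        k4x, k4v = v + dt * k3v, accel(x + dt * k3x, v + dt * k3v)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        xs.append(x)
    return xs

def centre_and_period(xs, dt):
    """Centre = midpoint of max and min; period = mean spacing of upward crossings of the centre."""
    centre = 0.5 * (max(xs) + min(xs))
    crossings = []
    for i in range(1, len(xs)):
        if xs[i - 1] < centre <= xs[i]:
            frac = (centre - xs[i - 1]) / (xs[i] - xs[i - 1])   # linear interpolation in time
            crossings.append((i - 1 + frac) * dt)
    return centre, (crossings[-1] - crossings[0]) / (len(crossings) - 1)

m, k, F = 1.0, 4.0, 2.0        # illustrative values, not from the text
dt, n = 1e-3, 20_000           # 20 time units, about six periods

free = rk4_positions(lambda x, v: -k * x / m, 1.0, 0.0, dt, n)
pushed = rk4_positions(lambda x, v: (-k * x + F) / m, 1.0, 0.0, dt, n)

c_free, T_free = centre_and_period(free, dt)
c_push, T_push = centre_and_period(pushed, dt)
print(f"free:   centre = {c_free:+.5f}, period = {T_free:.5f}")
print(f"pushed: centre = {c_push:+.5f} (F/k = {F / k}), period = {T_push:.5f}")
print(f"2*pi/omega0   = {2 * math.pi / math.sqrt(k / m):.5f}")
```

Both runs should report a period close to $\pi$ for these values. The centre moves from $0$ to $F/k = 0.5$.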

Puzzle : If this is really correct, then what effect will a rough table top (where the force of friction is a constant $\mu mg$) have on the oscillations of our spring?


CHAPTER 1. OSCILLATIONS IN CONSERVATIVE SYSTEMS

1.2


Solving the SHO equation

We want to solve the initial value problem

$$ \frac{d^2x}{dt^2} + \omega_0^2 x = 0, \tag{1.3} $$

subject to $x(0) = x_0$ and $\dot{x}(0) = v_0$.

1.2.1

First solution

Trying x = ept we see that the ODE is satisfied if

p2 + ω02 = 0

so that p = ±iω0 . This means that eiω0 t and e−iω0 t are two linearly

independent solutions of the linear homogeneous ODE (1.2). So, a

general solution to (1.2) is given by

x (t) = C1 eiω0 t + C2 e−iω0 t

where C1 and C2 are two arbitrary (in general complex) constants. Since

x (t) is real, we have

∗

[x (t)] = x (t)

which gives C2 = C1∗ . Writing C1 =

the general solution as

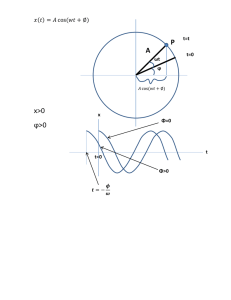

x (t) =

A iφ

2e ,

where A, φ ∈ R, we can write

A iφ iω0 t A −iφ −iω0 t

e e

+ e e

= A cos (ω0 t + φ)

2

2

(1.4)

The constant $A$, which is the maximum value that $x(t)$ attains, is called the amplitude of the motion. The quantity $\omega_0 t + \phi$ on which the motion depends is called the phase, and $\phi$ the initial phase (aka epoch - a cute term that is slightly out of fashion nowadays). Equation (1.4) shows that the particle moves between $x = +A$ and $x = -A$, i.e. it oscillates symmetrically about $x = 0$. Since the cosine function is periodic with a period of $2\pi$, the motion repeats with a time period given by

$$ T = \frac{2\pi}{\omega_0}. \tag{1.5} $$

This shows that the time period of a simple harmonic oscillator is independent of the amplitude of the motion (a quite amazing result if you think about it!) - a property that is expressed by saying that the simple harmonic oscillator is an isochronous system.
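Isochronism is easy to test numerically. The sketch below releases the oscillator from rest at very different amplitudes and measures the time between successive upward zero crossings. It assumes $\omega_0 = 2$ and a velocity Verlet integrator, both illustrative choices made here.

```python
import math

def sho_period(amplitude, omega0=2.0, dt=1e-4, t_max=10.0):
    """Release x'' = -omega0**2 * x from rest at x = amplitude, integrate with
    velocity Verlet, and return the mean spacing of upward zero crossings."""
    x, v, t = amplitude, 0.0, 0.0
    a = -omega0**2 * x
    crossings = []
    while t < t_max:
        x_new = x + v * dt + 0.5 * a * dt * dt
        a_new = -omega0**2 * x_new
        v += 0.5 * (a + a_new) * dt
        if x < 0.0 <= x_new:                                  # upward zero crossing
            crossings.append(t + dt * (-x) / (x_new - x))     # interpolated crossing time
        x, a, t = x_new, a_new, t + dt
    return (crossings[-1] - crossings[0]) / (len(crossings) - 1)

for A in (0.01, 1.0, 100.0):
    print(f"A = {A:7.2f}: T = {sho_period(A):.6f}")   # all close to 2*pi/omega0 = pi
```

The measured periods differ only by the integration error, even though the amplitudes span four orders of magnitude.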

To solve the IVP, we note that (1.4) and its derivative

$$ \dot{x}(t) = -\omega_0 A\sin(\omega_0 t + \phi) \tag{1.6} $$

lead to

$$ A\cos\phi = x_0, \qquad A\sin\phi = -\frac{v_0}{\omega_0}, $$

so that

$$ x(t) = A\cos\phi\cos\omega_0 t - A\sin\phi\sin\omega_0 t = x_0\cos\omega_0 t + \frac{v_0}{\omega_0}\sin\omega_0 t. \tag{1.7} $$
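The inversion from $(x_0, v_0)$ to $(A, \phi)$ can be sketched as follows. Using `atan2` instead of $\tan\phi = -v_0/(\omega_0 x_0)$ is a choice made here, so that $\phi$ lands in the correct quadrant. The numerical values are arbitrary.

```python
import math

def amplitude_and_phase(x0, v0, omega0):
    """Invert A cos(phi) = x0, A sin(phi) = -v0/omega0.
    atan2 picks the correct quadrant, which tan(phi) alone cannot."""
    return math.hypot(x0, v0 / omega0), math.atan2(-v0 / omega0, x0)

def x_ivp(t, x0, v0, omega0):
    """Equation (1.7)."""
    return x0 * math.cos(omega0 * t) + (v0 / omega0) * math.sin(omega0 * t)

x0, v0, omega0 = -0.3, -1.2, 2.5        # arbitrary illustrative values
A, phi = amplitude_and_phase(x0, v0, omega0)
print(f"A = {A:.6f}, phi = {phi:.6f} rad")
for t in (0.0, 0.4, 1.7, 3.1):
    print(t, x_ivp(t, x0, v0, omega0), A * math.cos(omega0 * t + phi))
```

The two columns agree, as (1.4) and (1.7) say they should.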

Equations (1.4) and (1.6) show that if we were to plot $v$ versus $x$ for a simple harmonic oscillator, we would get a curve which obeys the equation

$$ \frac{x^2}{A^2} + \frac{v^2}{\omega_0^2 A^2} = 1. $$

This is the well known equation for an ellipse. This, of course, is not a surprise - it is just a rewriting of the law of conservation of energy, which would directly give

$$ \frac{1}{2}mv^2 + \frac{1}{2}kx^2 = E, $$

where we have used $E = \frac{1}{2}kA^2 = \frac{1}{2}m\omega_0^2A^2$. The two semi-axes of the ellipse are $A$ and $\omega_0 A$, respectively (note that since $x$ and $v$ have different dimensions, comparing lengths along the two axes of this plot is meaningless - so there is no way to decide which axis is the major and which the minor one here). The precise ellipse traced out depends on the value of $A$ and is thus dependent on the initial conditions. Note, however, that information about the epoch is lost in these plots. Such $v$ versus $x$ plots are called phase space plots - and they play a very important role in advanced studies of dynamics. Strictly speaking, phase space plots are plots of the momentum $p$ versus $x$ - which in this case differ from the $v$ versus $x$ plots by just a scaling by $m$, so that the semi-axes are now $A$ and $m\omega_0 A$. The area of the ellipse in phase space is given by $\pi m\omega_0 A^2 = 2\pi E/\omega_0$. This may look like a mere curiosity at this point of time. However, let me try to pique your interest by saying that Max Planck had arrived at his famous $E = n\hbar\omega_0$ formula precisely by demanding that this area be quantized in integer multiples of a fundamental constant $h$!
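The area formula can be checked by computing the area of the phase space orbit directly. The enclosed area is $\pi m\omega_0 A^2$, which equals $2\pi E/\omega_0$. This sketch samples the ellipse $x = A\cos\theta$, $p = -m\omega_0 A\sin\theta$ and applies the shoelace formula to the resulting polygon. The values of $m$, $\omega_0$ and $A$ are illustrative.

```python
import math

def orbit_area(m, omega0, A, n=10_000):
    """Shoelace area of the phase-space orbit x = A cos(theta), p = -m omega0 A sin(theta)."""
    pts = [(A * math.cos(2 * math.pi * j / n), -m * omega0 * A * math.sin(2 * math.pi * j / n))
           for j in range(n)]
    twice_area = sum(x1 * p2 - x2 * p1
                     for (x1, p1), (x2, p2) in zip(pts, pts[1:] + pts[:1]))
    return abs(twice_area) / 2          # orbit runs clockwise, so the signed area is negative

m, omega0, A = 2.0, 3.0, 0.5            # illustrative values
E = 0.5 * m * omega0**2 * A**2
print(orbit_area(m, omega0, A), math.pi * m * omega0 * A**2, 2 * math.pi * E / omega0)
```

All three printed numbers agree to about one part in $10^7$, the residual coming from approximating the ellipse by a polygon.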

1.2.2

Second solution

We can rewrite (1.2) as

$$ v\frac{dv}{dx} + \omega_0^2 x = 0, $$

where $v \equiv dx/dt$. This leads to $v\,dv + \omega_0^2 x\,dx = 0$, which readily integrates to

$$ \frac{v^2}{2} + \frac{\omega_0^2 x^2}{2} = \text{constant}. $$

If the maximum value of $x$ is $A$, then we must have $v = 0$ at $x = A$. This leads to

$$ v^2 = \omega_0^2\left(A^2 - x^2\right). $$

This leads to

$$ \frac{dx}{dt} = -\omega_0\sqrt{A^2 - x^2} $$

(why the minus sign?) so that

$$ -\frac{dx}{\sqrt{A^2 - x^2}} = \omega_0\,dt, $$

which integrates to

$$ \cos^{-1}\frac{x}{A} = \omega_0 t + \phi. $$

1.2.3

Third solution

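Equation (1.2) gives us

$$ \ddot{x}(0) = -\omega_0^2 x_0. $$

Differentiating once and putting $t = 0$ gives

$$ x^{(3)}(0) = -\omega_0^2 v_0. $$

Repeating this procedure gives

$$ x^{(2n)}(0) = \left(-\omega_0^2\right)^n x_0 \quad\text{and}\quad x^{(2n+1)}(0) = \left(-\omega_0^2\right)^n v_0. $$

Although these equations have been derived for $n > 0$, it is easy to check that they work for $n = 0$ also. This gives us the Taylor series solution

$$
\begin{aligned}
x(t) &= \sum_{n=0}^{\infty}\frac{x^{(n)}(0)}{n!}t^n = \sum_{n=0}^{\infty}\frac{(-1)^n\omega_0^{2n}x_0}{(2n)!}t^{2n} + \sum_{n=0}^{\infty}\frac{(-1)^n\omega_0^{2n}v_0}{(2n+1)!}t^{2n+1} \\
&= x_0\sum_{n=0}^{\infty}\frac{(-1)^n(\omega_0 t)^{2n}}{(2n)!} + \frac{v_0}{\omega_0}\sum_{n=0}^{\infty}\frac{(-1)^n(\omega_0 t)^{2n+1}}{(2n+1)!} \\
&= x_0\cos\omega_0 t + \frac{v_0}{\omega_0}\sin\omega_0 t.
\end{aligned}
$$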
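The Taylor series solution can be checked by summing it term by term. In this minimal sketch the series is truncated at a fixed number of terms (40, an arbitrary choice that is ample for moderate $\omega_0 t$). The parameter values are illustrative.

```python
import math

def x_taylor(t, x0, v0, omega0, n_terms=40):
    """Partial sum of the Taylor series of x(t) about t = 0, using
    x^(2n)(0) = (-omega0^2)^n x0 and x^(2n+1)(0) = (-omega0^2)^n v0."""
    total = 0.0
    for n in range(n_terms):
        c = (-omega0**2) ** n
        total += c * x0 * t ** (2 * n) / math.factorial(2 * n)
        total += c * v0 * t ** (2 * n + 1) / math.factorial(2 * n + 1)
    return total

def x_closed(t, x0, v0, omega0):
    """Equation (1.7)."""
    return x0 * math.cos(omega0 * t) + v0 / omega0 * math.sin(omega0 * t)

x0, v0, omega0 = 0.7, -1.1, 2.0        # illustrative values
for t in (0.5, 1.0, 3.0):
    print(t, x_taylor(t, x0, v0, omega0), x_closed(t, x0, v0, omega0))
```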


1.2.4


Fourth solution

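Introducing the operator $D \equiv d/dt$, we can write (1.2) as

$$ \left(D^2 + \omega_0^2\right)x = 0. $$

The operator $D^2 + \omega_0^2$ can be factored as $(D + i\omega_0)(D - i\omega_0)$, so that

$$ (D + i\omega_0)(D - i\omega_0)x = 0. $$

Define $y(t) \equiv (D - i\omega_0)x(t)$ to get the first-order equation

$$ (D + i\omega_0)y = 0, $$

subject to the initial condition

$$ y(0) = v_0 - i\omega_0 x_0, $$

which is solved easily to yield

$$ y(t) = y(0)e^{-i\omega_0 t}. $$

Thus

$$ (D - i\omega_0)x = y(0)e^{-i\omega_0 t}, $$

which means that

$$ \frac{d}{dt}\left[x(t)e^{-i\omega_0 t}\right] = y(0)e^{-2i\omega_0 t}. $$

This equation is solved easily to yield

$$ x(t)e^{-i\omega_0 t} - x_0 = y(0)\int_0^t dt'\,e^{-2i\omega_0 t'} = y(0)\frac{1 - e^{-2i\omega_0 t}}{2i\omega_0}, $$

so that

$$
\begin{aligned}
x(t) &= x_0 e^{i\omega_0 t} + (v_0 - i\omega_0 x_0)\frac{e^{i\omega_0 t} - e^{-i\omega_0 t}}{2i\omega_0} \\
&= x_0\left(e^{i\omega_0 t} - i\sin\omega_0 t\right) + \frac{v_0}{\omega_0}\sin\omega_0 t \\
&= x_0\cos\omega_0 t + \frac{v_0}{\omega_0}\sin\omega_0 t.
\end{aligned}
$$

1.2.5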

The free particle limit

We have seen that the general solution of the SHO equation (1.2) is given by (1.4):

$$ x(t) = A\cos(\omega_0 t + \phi). $$

The limit $\omega_0 \to 0$ should lead to the general solution for a free particle. A naive limit, however, yields

$$ x(t) \to A\cos\phi $$

- a constant! This is certainly a solution for the free particle - but hardly the most general solution!

The situation becomes clearer if we start from the solution of the initial value problem (1.7). Since

$$ \lim_{\omega_0 \to 0}\cos\omega_0 t = 1 \quad\text{and}\quad \lim_{\omega_0 \to 0}\frac{\sin\omega_0 t}{\omega_0} = t, $$

this yields

$$ x(t) \to x_0 + v_0 t, $$

which is, of course, the expected limit!
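The limit can also be watched numerically. This sketch evaluates (1.7) for decreasing $\omega_0$ and compares it with $x_0 + v_0 t$. The values of $x_0$, $v_0$ and $t$ are arbitrary.

```python
import math

def x_ivp(t, x0, v0, omega0):
    """Equation (1.7): x0 cos(omega0 t) + (v0 / omega0) sin(omega0 t)."""
    return x0 * math.cos(omega0 * t) + v0 * math.sin(omega0 * t) / omega0

x0, v0, t = 1.0, 2.0, 3.0              # illustrative values
for omega0 in (1.0, 1e-1, 1e-2, 1e-4):
    print(f"omega0 = {omega0:g}: x(t) = {x_ivp(t, x0, v0, omega0):.8f}  (x0 + v0 t = {x0 + v0 * t})")
```

The discrepancy shrinks like $(\omega_0 t)^2$, as the Taylor expansions of $\cos$ and $\sin$ suggest.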

Puzzle : can you figure out what went wrong (or, how to put things

right) when we used (1.4)?

1.3

Small oscillations and the SHO

The importance of the SHO equation lies in its all-pervasiveness. The reason is that all conservative systems in stable equilibrium tend to oscillate when disturbed from this position, and this oscillation is almost always approximately simple harmonic when the oscillations are small. To see why, let us expand the potential energy function about $x_0$, the latter being the position of stable equilibrium:

$$ V(x) = V(x_0) + V'(x_0)(x - x_0) + \frac{V''(x_0)}{2!}(x - x_0)^2 + \frac{V'''(x_0)}{3!}(x - x_0)^3 + \ldots \tag{1.8} $$

Since $\eta = x - x_0$ is small, the lower powers will dominate in this expansion. Now, the lowest power that is relevant for dynamics is the quadratic one (the constant term is unimportant for dynamics, and the linear term vanishes because $-V'(x_0)$ is the force acting on the particle at $x_0$, which is zero at equilibrium). Thus, for small oscillations we have

$$ V(x) \approx V(x_0) + \frac{V''(x_0)}{2!}(x - x_0)^2. $$

Conservation of energy leads to

$$ \frac{1}{2}m\left(\frac{dx}{dt}\right)^2 + \frac{V''(x_0)}{2!}(x - x_0)^2 = \text{constant}. $$

Differentiation with respect to $x$ leads to the equation

$$ mv\frac{dv}{dx} + V''(x_0)(x - x_0) = 0, $$

or

$$ m\frac{d^2X}{dt^2} + V''(x_0)X = 0, $$

where $X = x - x_0$ is the displacement from $x = x_0$. This shows that, at least to this order of approximation, the particle is going to execute simple harmonic oscillations about the point $x = x_0$. It is easy to see that the time period of oscillation will be

$$ T \approx \frac{2\pi}{\omega}, \qquad \text{where } \omega = \sqrt{\frac{V''(x_0)}{m}}. \tag{1.9} $$

As an example, consider the good old simple pendulum. The potential energy of the pendulum is given by

$$ V(\theta) = -mgl\cos\theta \approx -mgl\left(1 - \frac{\theta^2}{2}\right). $$

So apart from a dynamically unimportant constant, the potential energy is given by $\frac{1}{2}mgl\theta^2$. Thus, the pendulum behaves like a spring with a spring constant $mgl$ (this, of course, is just the value of $V''(0)$ - we had just used the series expansion of $\cos\theta$ above to find it fast!). We have to be slightly careful before we use (1.9) to find the time period, though! The point is that here the “coordinate” is $\theta$ and not $x$ - and so the kinetic energy term in the conservation of energy is going to be $\frac{1}{2}mv^2 = \frac{1}{2}ml^2\dot{\theta}^2$. This means that in this case we have to replace the $m$ in (1.9) above by $ml^2$. Doing this, we quickly get

$$ T \approx 2\pi\sqrt{\frac{ml^2}{mgl}} = 2\pi\sqrt{\frac{l}{g}}, \tag{1.10} $$

which is the familiar formula from high school. Note also that this is an approximate result, which hinges on the replacement of $\cos\theta$ by $1 - \frac{1}{2}\theta^2$ (or, equivalently, replacing $\sin\theta$ in the restoring torque by $\theta$). Thus, the equation is valid as long as $\theta$ stays small - and hence the reason why you must keep the amplitude of swing small (just how small is small is a different question - one we will explore later).
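For a numerical preview of "how small is small", the small-angle result can be compared with the exact period. The sketch uses the standard closed form $T = 2\pi\sqrt{l/g}\,/\,M(1, \cos(\theta_0/2))$, where $M$ is the arithmetic-geometric mean. This formula is equivalent to the complete elliptic integral of the first kind. It is not derived in these notes and is quoted here as an assumption.

```python
import math

def agm(a, b):
    """Arithmetic-geometric mean of two positive numbers (quadratically convergent)."""
    for _ in range(60):
        if abs(a - b) <= 1e-15 * a:
            break
        a, b = 0.5 * (a + b), math.sqrt(a * b)
    return a

def pendulum_period(theta0, l=1.0, g=9.81):
    """Exact period for release from rest at angle theta0 (radians):
    T = 2 pi sqrt(l/g) / AGM(1, cos(theta0/2))."""
    return 2 * math.pi * math.sqrt(l / g) / agm(1.0, math.cos(theta0 / 2))

T_small = 2 * math.pi * math.sqrt(1.0 / 9.81)        # equation (1.10) with l = 1 m
for deg in (5, 20, 45, 90, 150):
    ratio = pendulum_period(math.radians(deg)) / T_small
    print(f"{deg:4d} deg: T / (2 pi sqrt(l/g)) = {ratio:.5f}")
```

At 5 degrees the correction is below 0.1 per cent. At 90 degrees the true period is about 18 per cent longer than (1.10) predicts.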

As another example, consider a particle having mass $m$ and charge $q$ that is free to slide along the axis of a ring of radius $R$ and charge $-Q$. It is easy to see that if we try to move the charge $q$ away from the center of the ring along the axis, the ring tries to pull it back - so the equilibrium at the center is stable along the axis¹. The potential energy of the charge when it is at a distance $x$ from the center of the ring, measured along the axis, is given by

$$ V(x) = -\frac{1}{4\pi\epsilon_0}\frac{qQ}{\sqrt{R^2 + x^2}}. $$

This shows that, as expected, $V(x)$ is minimum at $x = 0$. We need to calculate $V''(0)$ to find the time period of small oscillations. We could do this by differentiating twice, but, as in the last example, a direct expansion is easier:

$$ V(x) = -\frac{qQ}{4\pi\epsilon_0 R}\left(1 + \frac{x^2}{R^2}\right)^{-1/2} \approx -\frac{qQ}{4\pi\epsilon_0 R}\left(1 - \frac{1}{2}\frac{x^2}{R^2}\right), $$

so that $V''(0) = \dfrac{qQ}{4\pi\epsilon_0 R^3}$. Thus, the time period of oscillation is given by

$$ T \approx 2\pi\sqrt{\frac{4\pi\epsilon_0 mR^3}{qQ}}. $$

¹ The qualifier “along the axis” is essential here - note that any displacement from the center in the plane of the ring will produce a force that pulls the charge farther away - the equilibrium is unstable under such displacements. This is something you have learned in your em course - it is impossible to have a stable equilibrium of a charge under electrostatic forces alone! In our problem, of course, we have taken care of this by ensuring that the charge is confined to move along the axis by some other force.
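As a check on $V''(0)$, this short sketch compares a central finite-difference second derivative of $V(x)$ with the result of the expansion. The charges, radius and mass are illustrative SI values chosen here, with $qQ > 0$ so that the equilibrium is stable along the axis.

```python
import math

EPS0 = 8.8541878128e-12          # vacuum permittivity in F/m (CODATA 2018 value)

def V_ring(x, q, Q, R):
    """Potential energy of charge q on the axis, a distance x from the centre of a ring (radius R, charge -Q)."""
    return -q * Q / (4 * math.pi * EPS0 * math.sqrt(R**2 + x**2))

q, Q, R, m = 1e-9, 1e-6, 0.1, 1e-3      # illustrative SI values, qQ > 0
h = 1e-4 * R                            # finite-difference step, small compared with R
Vpp_numeric = (V_ring(h, q, Q, R) - 2 * V_ring(0.0, q, Q, R) + V_ring(-h, q, Q, R)) / h**2
Vpp_series = q * Q / (4 * math.pi * EPS0 * R**3)
T = 2 * math.pi * math.sqrt(4 * math.pi * EPS0 * m * R**3 / (q * Q))
print(f"V''(0): finite difference {Vpp_numeric:.6e}, series {Vpp_series:.6e} J/m^2")
print(f"T = {T:.4f} s, 2*pi*sqrt(m/V''(0)) = {2 * math.pi * math.sqrt(m / Vpp_numeric):.4f} s")
```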

As our final example, we will consider the case of two molecules, each of mass $m$, bound together by the potential energy function

$$ V(r) = V_0\left(\frac{a}{r^{12}} - \frac{b}{r^6}\right) $$

- a form that is called the Lennard-Jones potential. Here $r$ is the distance between the two molecules. This is a slightly complicated problem because a) this is a two-body problem, and b) here we have to work a bit before we figure out where the equilibrium position is! The first problem is easily handled - elementary mechanics tells us that the relative separation between two bodies interacting only with each other behaves as if the interaction force were acting on a single particle of the reduced mass - which in this case is $m/2$. To settle the second issue, we calculate

$$ V'(r) = V_0\left(-\frac{12a}{r^{13}} + \frac{6b}{r^7}\right), \qquad V''(r) = V_0\left(\frac{12\times 13\,a}{r^{14}} - \frac{6\times 7\,b}{r^8}\right). $$

Solving $V'(r_0) = 0$ gives the equilibrium separation $r_0$ as

$$ r_0 = \left(\frac{2a}{b}\right)^{1/6}, $$

and this means that

$$ V''(r_0) = \frac{V_0}{r_0^{14}}\left(156a - 42b\times\frac{2a}{b}\right) = \frac{72V_0 a}{r_0^{14}}. $$

This means that the time period of oscillations is

$$ T \approx 2\pi\sqrt{\frac{m/2}{V''(r_0)}} = \frac{\pi r_0^7}{6}\sqrt{\frac{m}{V_0 a}} = \frac{\pi}{6}\sqrt{\frac{m}{V_0 a}}\left(\frac{2a}{b}\right)^{7/6}. $$
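The Lennard-Jones results can be verified the same way. This sketch uses illustrative units chosen here ($V_0 = 1$, $a = 2$, $b = 3$, $m = 1$). It checks three things:

- $V'$ vanishes at $r_0$;
- the finite-difference $V''(r_0)$ agrees with $72V_0 a/r_0^{14}$;
- the two forms of $T$ coincide.

```python
import math

def lj(r, V0, a, b):
    """Lennard-Jones potential V(r) = V0 (a / r^12 - b / r^6)."""
    return V0 * (a / r**12 - b / r**6)

V0, a, b, m = 1.0, 2.0, 3.0, 1.0          # illustrative units
r0 = (2 * a / b) ** (1 / 6)               # equilibrium separation
h = 1e-4 * r0
dV = (lj(r0 + h, V0, a, b) - lj(r0 - h, V0, a, b)) / (2 * h)
d2V = (lj(r0 + h, V0, a, b) - 2 * lj(r0, V0, a, b) + lj(r0 - h, V0, a, b)) / h**2
d2V_formula = 72 * V0 * a / r0**14

T_curvature = 2 * math.pi * math.sqrt((m / 2) / d2V_formula)     # reduced mass m/2
T_closed = math.pi / 6 * math.sqrt(m / (V0 * a)) * (2 * a / b) ** (7 / 6)
print(f"V'(r0) ~ {dV:.2e}, V''(r0): numeric {d2V:.6f}, formula {d2V_formula:.6f}")
print(f"T: {T_curvature:.8f} vs {T_closed:.8f}")
```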

Given the derivation above, it may seem surprising that we had to include the qualifier “almost always” when we spoke about systems that show approximate simple harmonic behaviour when disturbed from stable equilibrium. Given the general nature of the proof, it seems that the result has to be true for all such systems! The exception occurs when the second derivative vanishes at the stable equilibrium position $x_0$ (in this case, the first nonzero derivative of $V$ at $x_0$ must be of a higher even order, and must be positive - given that $V(x)$ is a smooth function). For example, a particle in a potential like $\alpha x^4$ will have a position of stable equilibrium at $x = 0$ if $\alpha > 0$, and the particle will oscillate about this position if disturbed. But this oscillation will not be simple harmonic.

Another issue that we must address is - just how small is the “small” in small oscillations? When we stop at the quadratic term in the Taylor series approximation of the potential, the term we are ignoring is of the order of $V'''(x_0)A^3$. So, for the approximation to be a valid one, the term that we retain (which is of the order of $V''(x_0)A^2$) must dominate this term, so that the amplitude of motion must satisfy

$$ A \ll \left|\frac{V''(x_0)}{V'''(x_0)}\right|. $$

The result above will work as long as the third derivative is non-zero. If all derivatives beyond the second are zero up to (but not including) the $n$-th one, then the bound above will be

$$ A \ll \left|\frac{V''(x_0)}{V^{(n)}(x_0)}\right|^{\frac{1}{n-2}}. $$

1.4

Beyond the small amplitude approximation

Consider a potential $V(x)$ with a minimum at $x = x_0$. Let a particle be moving in this potential with a total energy of $E$. In this case, the points $a$ and $b$, characterised by $V(a) = V(b) = E$, are the turning points of the motion. Since the velocity when the particle is at $x \in [a, b]$ and is moving from $a$ to $b$ is given by $\sqrt{\frac{2}{m}\left(E - V(x)\right)}$, the time period (which is twice the time the particle takes to go from $a$ to $b$) is given by

$$ T = \sqrt{2m}\int_a^b\frac{dx}{\sqrt{E - V(x)}}. \tag{1.11} $$
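Equation (1.11) also works as a practical recipe for computing periods numerically. The integrand blows up like an inverse square root at the turning points. This minimal sketch therefore substitutes $x = c + h\sin\theta$, with $c$ and $h$ the midpoint and half-width of $[a, b]$. That substitution cancels the singularity when $V'(a)$ and $V'(b)$ are nonzero, and the midpoint rule is then applied in $\theta$. The function name `period` and its parameters are hypothetical, introduced only for this illustration.

```python
import math

def period(V, a, b, mass, n=20_000):
    """T = sqrt(2 mass) * integral_a^b dx / sqrt(E - V(x)) with E = V(a) = V(b).
    The substitution x = c + h sin(theta) cancels the 1/sqrt singularities at the
    turning points (assumes V has nonzero slope there)."""
    E = 0.5 * (V(a) + V(b))            # the two values agree; averaging guards against round-off
    c, h = 0.5 * (a + b), 0.5 * (b - a)
    dtheta = math.pi / n
    total = 0.0
    for j in range(n):
        theta = -0.5 * math.pi + (j + 0.5) * dtheta          # midpoint rule in theta
        x = c + h * math.sin(theta)
        total += h * math.cos(theta) / math.sqrt(E - V(x))
    return math.sqrt(2 * mass) * total * dtheta

# Simple harmonic oscillator with k = 4, m = 1: expect 2*pi*sqrt(m/k) = pi for any amplitude.
print(period(lambda x: 0.5 * 4.0 * x**2, -0.7, 0.7, 1.0))
# Pendulum with l = g = m = 1 and amplitude 90 degrees: coordinate theta, V = -cos(theta),
# and the "mass" is m l^2 = 1.
print(period(lambda th: -math.cos(th), -math.pi / 2, math.pi / 2, 1.0))
```

The pendulum value is noticeably larger than the small-angle estimate $2\pi$. This is consistent with the discussion of how small "small" must be.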


As a first example, let us verify that this does give us the correct result for the case for which we know the answer - the simple harmonic oscillator. If the amplitude of oscillation is $A$, then we have $E = \frac{1}{2}kA^2 = \frac{1}{2}m\omega_0^2A^2$, so that

$$ T = \sqrt{2m}\int_{-A}^{A}\frac{dx}{\sqrt{\frac{1}{2}k\left(A^2 - x^2\right)}} = 2\sqrt{\frac{m}{k}}\int_{-A}^{A}\frac{dx}{\sqrt{A^2 - x^2}}. $$

Substituting $x/A = z$ reduces this to

$$ T = 2\sqrt{\frac{m}{k}}\int_{-1}^{1}\frac{dz}{\sqrt{1 - z^2}}, $$

a result which shows the property of isochronicity. The integral evaluates to $\pi$, so that we easily recover our result in (1.5).

Let us next consider motion of a particle under a potential given by

$$ V(x) = \alpha|x|^n. $$

Here, the time period of oscillation for an amplitude of $A$ is given by

$$ T = \sqrt{2m}\int_{-A}^{A}\frac{dx}{\sqrt{\alpha\left(A^n - |x|^n\right)}} = 2\sqrt{\frac{2m}{\alpha}}\int_0^A\frac{dx}{\sqrt{A^n - x^n}} = 2\sqrt{\frac{2m}{\alpha}}\,A^{1-n/2}\int_0^1\frac{dz}{\sqrt{1 - z^n}}. $$

At this stage, we are in a position to say that $T \propto A^{1-n/2}$, even without carrying out the integral. This gives us back the fact that for $n = 2$, $T$ is independent of $A$. What might come as a surprise, though, is that for $n > 2$, larger amplitudes lead to smaller time periods! It turns out that the integral above can be worked out for arbitrary $n$ - substituting $u = z^n$ leads to

$$ \int_0^1\frac{dz}{\sqrt{1 - z^n}} = \frac{1}{n}\int_0^1 u^{\frac{1}{n} - 1}(1 - u)^{-\frac{1}{2}}\,du = \frac{1}{n}B\left(\frac{1}{n}, \frac{1}{2}\right) = \frac{1}{n}\,\frac{\Gamma\left(\frac{1}{n}\right)\Gamma\left(\frac{1}{2}\right)}{\Gamma\left(\frac{1}{n} + \frac{1}{2}\right)}, $$

where $B(a, b) \equiv \int_0^1 t^{a-1}(1 - t)^{b-1}\,dt$ is the beta function, and we have used its well-known connection with the Euler gamma function:

$$ B(a, b) = \frac{\Gamma(a)\Gamma(b)}{\Gamma(a + b)}. $$

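Using the fact that $\Gamma\left(\frac{1}{2}\right) = \sqrt{\pi}$, we see that the time period turns out to be

$$ T = \frac{2}{n}\sqrt{\frac{2\pi mA^{2-n}}{\alpha}}\,\frac{\Gamma\left(\frac{1}{n}\right)}{\Gamma\left(\frac{1}{n} + \frac{1}{2}\right)}. $$

As in all complicated calculations, it is a good idea to check the result against a known case. In this case, $n = 2$ and $\alpha = \frac{1}{2}k$ gives

$$ T = \sqrt{\frac{2\pi m}{k/2}}\,\frac{\Gamma\left(\frac{1}{2}\right)}{\Gamma(1)} = 2\pi\sqrt{\frac{m}{k}}, $$

as expected. Here we have used the well known values $\Gamma(1) = 1$ and $\Gamma\left(\frac{1}{2}\right) = \sqrt{\pi}$.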
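The closed form can be compared against a direct numerical evaluation of the dimensionless integral. In this sketch the substitution $z = 1 - s^2$, a choice made here, removes the endpoint singularity. `math.gamma` supplies the $\Gamma$ functions. The values of $\alpha$, $m$ and $A$ are illustrative.

```python
import math

def period_closed_form(n, alpha, m, A):
    """T = (2/n) sqrt(2 pi m A^(2-n) / alpha) Gamma(1/n) / Gamma(1/n + 1/2)."""
    return ((2 / n) * math.sqrt(2 * math.pi * m * A ** (2 - n) / alpha)
            * math.gamma(1 / n) / math.gamma(1 / n + 0.5))

def period_numeric(n, alpha, m, A, N=20_000):
    """T = 2 sqrt(2m/alpha) A^(1 - n/2) * I_n, with I_n = int_0^1 dz / sqrt(1 - z^n)
    evaluated by the midpoint rule after substituting z = 1 - s^2."""
    ds = 1.0 / N
    integral = 0.0
    for j in range(N):
        s = (j + 0.5) * ds
        one_minus_zn = -math.expm1(n * math.log1p(-s * s))   # 1 - (1 - s^2)^n without cancellation
        integral += 2 * s / math.sqrt(one_minus_zn) * ds
    return 2 * math.sqrt(2 * m / alpha) * A ** (1 - n / 2) * integral

alpha, m, A = 1.5, 1.0, 0.8          # illustrative values
for n in (1, 2, 4, 6):
    print(n, period_numeric(n, alpha, m, A), period_closed_form(n, alpha, m, A))
# T scales as A**(1 - n/2): doubling A halves T when n = 4
print(period_closed_form(4, alpha, m, 2 * A) / period_closed_form(4, alpha, m, A))
```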

1.5

The 2-dimensional harmonic oscillator

So far we have been considering the motion of an oscillator in one dimension.

Chapter 2

The damped oscillator

All the oscillations we have seen so far, simple harmonic or not, are eternal. Once started, they go on for ever! This, of course, is in stark contrast to everyday experience - all oscillations we see around us die out in time. The reason behind this contradiction is simple - we have been considering conservative systems, while all real systems have some form of dissipation or other at work. We will now consider a system in which a damping force is superposed on top of a spring-like system - the damped harmonic oscillator. The equation of motion is now

$$ m\frac{d^2x}{dt^2} = -kx + F_d(x, v), \tag{2.1} $$

where $F_d$ is the damping force. Since a force which depends on the position alone is conservative, we must have some form of dependence on $v$. As long as $F_d$ is dissipative, the motion will die out. However, the precise nature of the motion depends on the form of the force. We will take a look at a particularly simple example.

2.1

Linear damping

The kind of damping force that we will consider is one which is proportional to the velocity:

$$ F_d = -\Gamma v, \tag{2.2} $$

where $\Gamma$ is a positive constant (the minus sign is essential - it makes the force oppose the motion). A reason often offered to justify this choice is that viscous damping under some conditions does obey this form (Stokes’ law). However, the real reason for this choice is that it leads to a linear equation - one which is relatively easy to handle mathematically. The equation then becomes

$$ \frac{d^2x}{dt^2} + 2b\frac{dx}{dt} + \omega_0^2 x = 0, \tag{2.3} $$

CHAPTER 2. THE DAMPED OSCILLATOR

where we have chosen $b = \frac{\Gamma}{2m}$. As before, we also have the initial conditions

$$ x(0) = x_0 \quad\text{and}\quad \dot{x}(0) = v_0. $$

We will solve (2.3) using the fourth technique that we used to solve the SHO equation in the last chapter. This equation can be written in the form

$$ (D - r_1)(D - r_2)x = 0, $$

where $D \equiv \frac{d}{dt}$, and $r_{1,2}$ are the roots of the equation $r^2 + 2br + \omega_0^2 = 0$, which means that

$$ r_{1,2} = -b \pm \sqrt{b^2 - \omega_0^2}. $$

Defining $y(t) = (D - r_2)x(t)$, we see that

$$ (D - r_1)y = 0, \qquad y(0) = v_0 - r_2 x_0, $$

which is easily solved to yield

$$ y(t) \equiv \left(\frac{d}{dt} - r_2\right)x(t) = y(0)e^{r_1 t} = (v_0 - r_2 x_0)e^{r_1 t}. $$

This can be easily rewritten in the form

$$ \frac{d}{dt}\left(xe^{-r_2 t}\right) = (v_0 - r_2 x_0)e^{(r_1 - r_2)t}, $$

which integrates to

$$ x(t)e^{-r_2 t} - x_0 = (v_0 - r_2 x_0)\int_0^t e^{(r_1 - r_2)t'}\,dt', $$

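and thus

$$
\begin{aligned}
x(t) &= e^{r_2 t}x_0 + (v_0 - r_2 x_0)\frac{e^{(r_1 - r_2)t} - 1}{r_1 - r_2}e^{r_2 t} \\
&= x_0\left(e^{r_2 t} - r_2\frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2}\right) + v_0\frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2} \\
&= \frac{r_1 e^{r_2 t} - r_2 e^{r_1 t}}{r_1 - r_2}x_0 + \frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2}v_0.
\end{aligned} \tag{2.4}
$$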

Differentiation gives the velocity as

$$ v(t) = \frac{r_1 r_2\left(e^{r_2 t} - e^{r_1 t}\right)}{r_1 - r_2}x_0 + \frac{r_1 e^{r_1 t} - r_2 e^{r_2 t}}{r_1 - r_2}v_0. \tag{2.5} $$

We now have a complete solution of the initial value problem. However, the nature of the solution depends strongly on whether the roots $r_{1,2}$ are real or complex, which depends on the relative sizes of $b$ and $\omega_0$.


2.1.1


The underdamped case

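Let us first consider the case $b < \omega_0$. Since $b = 0$ gives us back the simple harmonic oscillator, this is the natural extension of the motion we studied in the last chapter. We define

$$ \omega = \sqrt{\omega_0^2 - b^2}, $$

so that the roots are $r_{1,2} = -b \pm i\omega$. This means that (2.4) reduces to

$$
\begin{aligned}
x(t) &= e^{-bt}\,\frac{(-b + i\omega)e^{-i\omega t} - (-b - i\omega)e^{i\omega t}}{2i\omega}\,x_0 + e^{-bt}\,\frac{e^{i\omega t} - e^{-i\omega t}}{2i\omega}\,v_0 \\
&= e^{-bt}\left[\left(\frac{e^{i\omega t} + e^{-i\omega t}}{2} + b\,\frac{e^{i\omega t} - e^{-i\omega t}}{2i\omega}\right)x_0 + \frac{e^{i\omega t} - e^{-i\omega t}}{2i\omega}\,v_0\right] \\
&= x_0\left(\cos\omega t + \frac{b}{\omega}\sin\omega t\right)e^{-bt} + \frac{v_0}{\omega}\sin(\omega t)\,e^{-bt}.
\end{aligned} \tag{2.6}
$$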

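We can find the velocity by differentiating (2.6), or perhaps somewhat more simply from (2.5):

$$
\begin{aligned}
v(t) &= \frac{\omega^2 + b^2}{2i\omega}e^{-bt}\left(e^{-i\omega t} - e^{i\omega t}\right)x_0 + e^{-bt}\,\frac{(-b + i\omega)e^{i\omega t} - (-b - i\omega)e^{-i\omega t}}{2i\omega}\,v_0 \\
&= e^{-bt}\left[\left(\frac{e^{i\omega t} + e^{-i\omega t}}{2} - \frac{b}{\omega}\,\frac{e^{i\omega t} - e^{-i\omega t}}{2i}\right)v_0 - \frac{\omega_0^2}{\omega}\,\frac{e^{i\omega t} - e^{-i\omega t}}{2i}\,x_0\right] \\
&= v_0\left(\cos\omega t - \frac{b}{\omega}\sin\omega t\right)e^{-bt} - \frac{\omega_0^2 x_0}{\omega}\sin(\omega t)\,e^{-bt}.
\end{aligned} \tag{2.7}
$$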
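Here is a direct check of (2.6) and (2.7). The sketch integrates (2.3) with a fourth-order Runge-Kutta step and compares the result with the closed forms. The parameter values are illustrative and satisfy $b < \omega_0$.

```python
import math

def damped_closed_form(t, x0, v0, b, omega0):
    """Underdamped displacement (2.6) and velocity (2.7); requires b < omega0."""
    w = math.sqrt(omega0**2 - b**2)
    decay, c, s = math.exp(-b * t), math.cos(w * t), math.sin(w * t)
    x = (x0 * (c + b / w * s) + v0 / w * s) * decay
    v = (v0 * (c - b / w * s) - omega0**2 * x0 / w * s) * decay
    return x, v

def damped_rk4(t_end, x0, v0, b, omega0, dt=1e-3):
    """Integrate (2.3), x'' + 2b x' + omega0^2 x = 0, with classical RK4."""
    def acc(x, v):
        return -2 * b * v - omega0**2 * x
    x, v = x0, v0
    for _ in range(round(t_end / dt)):
        k1x, k1v = v, acc(x, v)
        k2x, k2v = v + 0.5 * dt * k1v, acc(x + 0.5 * dt * k1x, v + 0.5 * dt * k1v)
        k3x, k3v = v + 0.5 * dt * k2v, acc(x + 0.5 * dt * k2x, v + 0.5 * dt * k2v)
        k4x, k4v = v + dt * k3v, acc(x + dt * k3x, v + dt * k3v)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
    return x, v

x0, v0, b, omega0 = 1.0, 0.5, 0.3, 2.0     # illustrative values with b < omega0
for t in (1.0, 5.0):
    print(t, damped_closed_form(t, x0, v0, b, omega0), damped_rk4(t, x0, v0, b, omega0))
```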

Note that for some purposes the form

$$ x(t) = Ae^{-bt}\cos(\omega t + \phi) $$

of the solution is quite useful. The two constants $A$ and $\phi$ are related to the initial conditions by

$$ x_0 = A\cos\phi, \qquad v_0 = -\omega A\sin\phi - bA\cos\phi, $$

or

$$ A = \sqrt{x_0^2 + \left(\frac{v_0 + bx_0}{\omega}\right)^2}, \qquad \tan\phi = -\frac{v_0 + bx_0}{\omega x_0}. $$


2.1.2


The overdamped case

The case $b > \omega_0$ is called, naturally, overdamped. In this case both $r_1$ and $r_2$ are real (and negative). We denote $\sqrt{b^2 - \omega_0^2}$ by $b'$. We could repeat the whole calculation all over again, but replacing all the $\omega$s in (2.6) and (2.7) by $-ib'$ works just as well. Using the identities

$$ \cos(iz) = \frac{e^{i(iz)} + e^{-i(iz)}}{2} = \cosh z \quad\text{and}\quad \frac{\sin(iz)}{iz} = \frac{e^{i(iz)} - e^{-i(iz)}}{2i(iz)} = \frac{\sinh z}{z}, $$

we can easily see that we have

$$ x(t) = x_0\left(\cosh b't + \frac{b}{b'}\sinh b't\right)e^{-bt} + \frac{v_0}{b'}\sinh(b't)\,e^{-bt} \tag{2.8} $$

and

$$ v(t) = v_0\left(\cosh b't - \frac{b}{b'}\sinh b't\right)e^{-bt} - \frac{\omega_0^2 x_0}{b'}\sinh(b't)\,e^{-bt}. \tag{2.9} $$

2.1.3

The critically damped case

The borderline between the underdamped and overdamped cases occurs when $b = \omega_0$. The solution to the IVP can be easily found in this case by taking the $\omega \to 0$ limit of (2.6) and (2.7):

$$ x(t) = x_0(1 + bt)e^{-bt} + v_0 te^{-bt}, \tag{2.10} $$

$$ v(t) = v_0(1 - bt)e^{-bt} - x_0\omega_0^2 te^{-bt}. \tag{2.11} $$
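The three regimes can be tied together numerically. In this sketch, (2.4) is evaluated with complex arithmetic (`cmath`), so one function covers both $b < \omega_0$ and $b > \omega_0$. It is compared with (2.8) in the overdamped case. It is also compared with (2.10) just above and just below $b = \omega_0$, since (2.4) itself becomes $0/0$ at that point. Parameter values are illustrative.

```python
import cmath
import math

def x_general(t, x0, v0, b, omega0):
    """Equation (2.4) with r_{1,2} = -b ± sqrt(b^2 - omega0^2), evaluated in complex
    arithmetic so it covers b < omega0 and b > omega0 (it is 0/0 at b == omega0)."""
    d = cmath.sqrt(b**2 - omega0**2)
    r1, r2 = -b + d, -b - d
    x = (x0 * (r1 * cmath.exp(r2 * t) - r2 * cmath.exp(r1 * t))
         + v0 * (cmath.exp(r1 * t) - cmath.exp(r2 * t))) / (r1 - r2)
    return x.real

def x_overdamped(t, x0, v0, b, omega0):
    """Equation (2.8), with b' = sqrt(b^2 - omega0^2); requires b > omega0."""
    bp = math.sqrt(b**2 - omega0**2)
    return (x0 * (math.cosh(bp * t) + b / bp * math.sinh(bp * t))
            + v0 / bp * math.sinh(bp * t)) * math.exp(-b * t)

def x_critical(t, x0, v0, b):
    """Equation (2.10), valid for b == omega0."""
    return (x0 * (1 + b * t) + v0 * t) * math.exp(-b * t)

x0, v0, omega0, t = 1.0, -0.5, 2.0, 1.3      # illustrative values
print("overdamped b = 3:", x_general(t, x0, v0, 3.0, omega0), x_overdamped(t, x0, v0, 3.0, omega0))
for b in (omega0 - 1e-6, omega0 + 1e-6):
    print(f"b = {b}:", x_general(t, x0, v0, b, omega0), x_critical(t, x0, v0, omega0))
```

The near-critical values approach (2.10) from both sides. This is the continuity that the $\omega \to 0$ limit relies on.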

Chapter 3

The forced harmonic oscillator

Consider the case where a harmonic oscillator is acted upon by an additional force $F_d(t)$ (in addition to the damping force, which is assumed to be present; the suffix $d$ here stands for driving). The differential equation obeyed by the particle is then

$$ m\frac{d^2x}{dt^2} + \Gamma\frac{dx}{dt} + kx = F_d(t), $$

which can be rewritten as

$$ \frac{d^2x}{dt^2} + 2b\frac{dx}{dt} + \omega_0^2 x = f(t), $$

where $f(t) = F_d(t)/m$.

This can be written in the form

$$ (D - r_1)(D - r_2)x = f(t), $$

where $r_{1,2} = -b \pm \sqrt{b^2 - \omega_0^2}$ as before. Again using the abbreviation $(D - r_2)x = y$, we have, as before,

$$ (D - r_1)y = f(t), $$

which satisfies

$$ y(t)e^{-r_1 t} - y(0) = \int_0^t f(t')e^{-r_1 t'}\,dt', $$

so that

$$ y(t) = y(0)e^{r_1 t} + \int_0^t f(t')e^{r_1(t - t')}\,dt'. $$

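This means that

$$
\begin{aligned}
\frac{d}{dt}\left(xe^{-r_2 t}\right) &= e^{-r_2 t}\left[y(0)e^{r_1 t} + \int_0^t f(t')e^{r_1(t - t')}\,dt'\right] \\
&= y(0)e^{(r_1 - r_2)t} + \int_0^t f(t')e^{(r_1 - r_2)t}e^{-r_1 t'}\,dt' \equiv y(0)e^{(r_1 - r_2)t} + g(t),
\end{aligned}
$$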

CHAPTER 3. THE FORCED HARMONIC OSCILLATOR


where we have used $g(t)$ to abbreviate the integral on the right. Once again, this is easy to integrate:

$$ x(t)e^{-r_2 t} - x_0 = y(0)\frac{e^{(r_1 - r_2)t} - 1}{r_1 - r_2} + \int_0^t g(t'')\,dt'', $$

which leads to

$$ x(t) = x_0\frac{r_1 e^{r_2 t} - r_2 e^{r_1 t}}{r_1 - r_2} + v_0\frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2} + \underbrace{e^{r_2 t}\int_0^t dt''\int_0^{t''}dt'\,f(t')e^{(r_1 - r_2)t''}e^{-r_1 t'}}_{x_d(t)}. $$

Note that the first two terms are exactly the same as the result (2.4) for

the damped oscillator. This, of course, makes perfect sense - because,

if f (t) is zero, our present problem reduces to just that! The third term,

which we have denoted by xd (t) above, is the result of the driving force.

Let us focus on this term now :

$$
\begin{aligned}
x_d(t) &= \int_0^t dt''\,e^{r_2 t}\int_0^{t''}dt'\,f(t')e^{(r_1 - r_2)t''}e^{-r_1 t'} \\
&= \int_0^t dt'\,f(t')e^{r_2 t}e^{-r_1 t'}\int_{t'}^{t}dt''\,e^{(r_1 - r_2)t''} \\
&= \int_0^t dt'\,f(t')e^{r_2 t}e^{-r_1 t'}\,\frac{e^{(r_1 - r_2)t} - e^{(r_1 - r_2)t'}}{r_1 - r_2} \\
&= \int_0^t dt'\,f(t')\,\frac{e^{r_1(t - t')} - e^{r_2(t - t')}}{r_1 - r_2} \\
&= \int_0^t dt'\,f(t')\,G(t - t'),
\end{aligned}
$$

where we have denoted by $G(t)$ the function

$$ G(t) \equiv \frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2}. \tag{3.1} $$

This is called the Green’s function for this particular problem. The concept of the Green’s function is a very important one - and we will meet it many times in the days to come. The only nontrivial step above is in the second line, where we have changed the order of integration. For the important case of the underdamped harmonic oscillator ($b < \omega_0$), the Green’s function takes the form

$$ G(t) = e^{-bt}\frac{\sin\omega t}{\omega}. \tag{3.2} $$

Taking all this into account, the displacement for an underdamped forced oscillator becomes

$$ x(t) = x_0\left(\cos\omega t + \frac{b}{\omega}\sin\omega t\right)e^{-bt} + v_0\frac{\sin\omega t}{\omega}e^{-bt} + \int_0^t dt'\,f(t')\,e^{-b(t - t')}\frac{\sin[\omega(t - t')]}{\omega}. \tag{3.3} $$

Let me point out that for practical calculations the form (3.1) is often to be preferred over (3.2), it being easier to calculate integrals of exponentials.
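The Green's function solution (3.3) can be checked against a direct numerical integration of the equation of motion. This sketch assumes an arbitrary, hypothetical driving term $f(t) = \cos(1.5t)\,e^{-0.2t}$, chosen only for the check. It evaluates the convolution with Simpson's rule and compares the result with a fourth-order Runge-Kutta solution.

```python
import math

def greens(t, b, omega0):
    """Underdamped Green's function (3.2): e^{-bt} sin(wt) / w, w = sqrt(omega0^2 - b^2)."""
    w = math.sqrt(omega0**2 - b**2)
    return math.exp(-b * t) * math.sin(w * t) / w

def x_green(t, f, x0, v0, b, omega0, n=2000):
    """Equation (3.3): free damped motion plus the convolution of f with G.
    The convolution integral is evaluated with Simpson's rule (n must be even)."""
    w = math.sqrt(omega0**2 - b**2)
    free = (x0 * (math.cos(w * t) + b / w * math.sin(w * t))
            + v0 / w * math.sin(w * t)) * math.exp(-b * t)
    h = t / n
    weights = [1] + [4 if j % 2 else 2 for j in range(1, n)] + [1]
    forced = h / 3 * sum(c * f(j * h) * greens(t - j * h, b, omega0)
                         for j, c in enumerate(weights))
    return free + forced

def x_rk4(t_end, f, x0, v0, b, omega0, dt=1e-3):
    """Direct RK4 integration of x'' + 2b x' + omega0^2 x = f(t)."""
    def acc(t, x, v):
        return f(t) - 2 * b * v - omega0**2 * x
    t, x, v = 0.0, x0, v0
    for _ in range(round(t_end / dt)):
        k1x, k1v = v, acc(t, x, v)
        k2x, k2v = v + 0.5 * dt * k1v, acc(t + 0.5 * dt, x + 0.5 * dt * k1x, v + 0.5 * dt * k1v)
        k3x, k3v = v + 0.5 * dt * k2v, acc(t + 0.5 * dt, x + 0.5 * dt * k2x, v + 0.5 * dt * k2v)
        k4x, k4v = v + dt * k3v, acc(t + dt, x + dt * k3x, v + dt * k3v)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        t += dt
    return x

def f(t):
    """Hypothetical driving term F_d(t)/m, chosen only for this check."""
    return math.cos(1.5 * t) * math.exp(-0.2 * t)

args = (f, 0.5, -0.3, 0.25, 2.0)          # f, x0, v0, b, omega0 (illustrative)
print(x_green(6.0, *args), x_rk4(6.0, *args))
```

The convolution never needs the equation of motion to be stepped forward in time. This is one practical appeal of the Green's function approach.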

3.1

Applications

3.1.1

A constant force

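Let us now consider a very simple case - that of a constant force $F_0$ superposed on a damped harmonic oscillator. In this case, we already know the answer - all that the constant force does is to shift the equilibrium by $F_0/k$. Let us verify this by using (3.3), with the Green’s function written in the exponential form (3.1):

$$
\begin{aligned}
x(t) &= x_0\frac{r_1 e^{r_2 t} - r_2 e^{r_1 t}}{r_1 - r_2} + v_0\frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2} + \frac{F_0}{m}\int_0^t dt'\,\frac{e^{r_1(t - t')} - e^{r_2(t - t')}}{r_1 - r_2} \\
&= x_0\frac{r_1 e^{r_2 t} - r_2 e^{r_1 t}}{r_1 - r_2} + v_0\frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2} + \frac{F_0}{m(r_1 - r_2)}\left(\frac{e^{r_1 t} - 1}{r_1} - \frac{e^{r_2 t} - 1}{r_2}\right) \\
&= x_0\frac{r_1 e^{r_2 t} - r_2 e^{r_1 t}}{r_1 - r_2} + v_0\frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2} + \frac{F_0}{mr_1 r_2}\left(1 - \frac{r_1 e^{r_2 t} - r_2 e^{r_1 t}}{r_1 - r_2}\right) \\
&= \frac{F_0}{mr_1 r_2} + \left(x_0 - \frac{F_0}{mr_1 r_2}\right)\frac{r_1 e^{r_2 t} - r_2 e^{r_1 t}}{r_1 - r_2} + v_0\frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2} \\
&= \frac{F_0}{k} + \left(x_0 - \frac{F_0}{k}\right)\left(\cos\omega t + \frac{b}{\omega}\sin\omega t\right)e^{-bt} + \frac{v_0}{\omega}\sin\omega t\,e^{-bt},
\end{aligned}
$$

which shows that $x - F_0/k$ behaves the same as $x$ for a damped oscillator. (In the last step we have used $mr_1 r_2 = m\omega_0^2 = k$ and specialised to the underdamped case.)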

3.1.2

Sinusoidal driving force and resonance

We next turn to the case that is the most important one. This is the case where the driving force is a sinusoid of frequency $\omega_d$:

$$ F_d(t) = F_0\cos(\omega_d t + \phi). $$

So, the equation that we must solve is

$$ m\frac{d^2x}{dt^2} + \Gamma\frac{dx}{dt} + kx = F_0\cos(\omega_d t + \phi), \tag{3.4} $$

subject to $x(0) = x_0$, $v(0) = v_0$. Instead, the equation we will solve is

$$ m\frac{d^2\tilde{x}}{dt^2} + \Gamma\frac{d\tilde{x}}{dt} + k\tilde{x} = \tilde{F}_0 e^{i\omega_d t}, \tag{3.5} $$

subject to $\tilde{x}(0) = \tilde{x}_0$, $\tilde{v}(0) = \tilde{v}_0$. It is easy to see that if $\tilde{x}$ satisfies (3.5), then its real part $x(t) = \Re[\tilde{x}(t)]$ satisfies (3.4), provided we take $\tilde{F}_0 = F_0 e^{i\phi}$. We will get the initial values right, too - provided we take $x_0 = \Re[\tilde{x}_0]$ and $v_0 = \Re[\tilde{v}_0]$. Using (3.3) it is easy to see that

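$$
\begin{aligned}
\tilde{x}(t) &= \tilde{x}_0\frac{r_1 e^{r_2 t} - r_2 e^{r_1 t}}{r_1 - r_2} + \tilde{v}_0\frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2} + \frac{\tilde{F}_0}{m}\int_0^t dt'\,e^{i\omega_d t'}\,\frac{e^{r_1(t - t')} - e^{r_2(t - t')}}{r_1 - r_2} \\
&= \tilde{x}_0\frac{r_1 e^{r_2 t} - r_2 e^{r_1 t}}{r_1 - r_2} + \tilde{v}_0\frac{e^{r_1 t} - e^{r_2 t}}{r_1 - r_2} + \underbrace{\frac{\tilde{F}_0}{m(r_1 - r_2)}\left(\frac{e^{i\omega_d t} - e^{r_1 t}}{i\omega_d - r_1} - \frac{e^{i\omega_d t} - e^{r_2 t}}{i\omega_d - r_2}\right)}_{\tilde{x}_d(t)}.
\end{aligned}
$$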

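This shows why we preferred to work with complex functions - it is, after all, a lot easier to integrate exponentials than products of sinusoids and exponentials. Note that we can split the driven term $\tilde{x}_d(t)$ into two terms:

$$ \tilde{x}_d(t) = \tilde{x}_d^{ss}(t) + \tilde{x}_d^{tr}(t), $$

$$ \tilde{x}_d^{ss}(t) = \frac{\tilde{F}_0 e^{i\omega_d t}}{m(r_1 - r_2)}\left(\frac{1}{i\omega_d - r_1} - \frac{1}{i\omega_d - r_2}\right), \qquad \tilde{x}_d^{tr}(t) = -\frac{\tilde{F}_0}{m(r_1 - r_2)}\left(\frac{e^{r_1 t}}{i\omega_d - r_1} - \frac{e^{r_2 t}}{i\omega_d - r_2}\right). $$

Since both $r_1$ and $r_2$ have negative real parts, $\tilde{x}_d^{tr}(t)$ dies out with time (this is thus the transient part of the driven displacement; the terms proportional to $\tilde{x}_0$ and $\tilde{v}_0$ die out for the same reason), leaving behind the steady state displacement

$$ \tilde{x}^{ss}(t) = \tilde{x}_d^{ss}(t) = \frac{\tilde{F}_0 e^{i\omega_d t}}{m(i\omega_d - r_1)(i\omega_d - r_2)}. $$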

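Since $r_1$ and $r_2$ are roots of the quadratic $r^2 + 2br + \omega_0^2 = 0$, we have $(r - r_1)(r - r_2) = r^2 + 2br + \omega_0^2$, so that the $(i\omega_d - r_1)(i\omega_d - r_2)$ in the denominator is $\omega_0^2 - \omega_d^2 + 2ib\omega_d$. Thus

$$ \tilde{x}^{ss}(t) = \frac{\tilde{F}_0 e^{i\omega_d t}}{m\left[\omega_0^2 - \omega_d^2 + 2ib\omega_d\right]} = \frac{F_0 e^{i(\omega_d t + \phi + \delta)}}{m\sqrt{\left(\omega_0^2 - \omega_d^2\right)^2 + 4b^2\omega_d^2}}, \tag{3.6} $$

where $\delta = \operatorname{Arg}\left(\omega_0^2 - \omega_d^2 - 2ib\omega_d\right)$. The steady state motion for a sinusoidal driving force $F_0\cos(\omega_d t + \phi)$ is then

$$ x^{ss}(t) = \Re\left[\tilde{x}^{ss}(t)\right] = \frac{F_0}{m\sqrt{\left(\omega_0^2 - \omega_d^2\right)^2 + 4b^2\omega_d^2}}\cos(\omega_d t + \phi + \delta). \tag{3.7} $$

Thus there is a phase difference of $\delta$ between the driving force and the resulting displacement. Since $\delta$ is in the third or the fourth quadrant (the imaginary part $-2b\omega_d$ of $\omega_0^2 - \omega_d^2 - 2ib\omega_d$ is negative), the displacement always lags behind the force.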
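The steady state result can be tested by simulating (3.4) long enough for the transient to die out. This sketch uses illustrative parameter values, with $b = \Gamma/2m$ and $\omega_0^2 = k/m$ as in the text. It compares the simulated displacement at $t = 40$, where $e^{-bt} \approx 2 \times 10^{-9}$, with (3.7). The phase $\delta$ is computed with `cmath.phase`.

```python
import cmath
import math

def x_steady(t, F0, phi, m, b, omega0, wd):
    """Equations (3.6)-(3.7): real part of F0 e^{i(wd t + phi)} / (m (omega0^2 - wd^2 + 2i b wd))."""
    z = F0 * cmath.exp(1j * (wd * t + phi)) / (m * (omega0**2 - wd**2 + 2j * b * wd))
    return z.real

def x_simulated(t_end, F0, phi, m, b, omega0, wd, x0=0.0, v0=0.0, dt=1e-3):
    """RK4 integration of x'' + 2b x' + omega0^2 x = (F0/m) cos(wd t + phi)."""
    def acc(t, x, v):
        return F0 / m * math.cos(wd * t + phi) - 2 * b * v - omega0**2 * x
    t, x, v = 0.0, x0, v0
    for _ in range(round(t_end / dt)):
        k1x, k1v = v, acc(t, x, v)
        k2x, k2v = v + 0.5 * dt * k1v, acc(t + 0.5 * dt, x + 0.5 * dt * k1x, v + 0.5 * dt * k1v)
        k3x, k3v = v + 0.5 * dt * k2v, acc(t + 0.5 * dt, x + 0.5 * dt * k2x, v + 0.5 * dt * k2v)
        k4x, k4v = v + dt * k3v, acc(t + dt, x + dt * k3x, v + dt * k3v)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        t += dt
    return x

F0, phi, m, b, omega0, wd = 1.0, 0.3, 2.0, 0.5, 3.0, 2.2   # illustrative values
amp = F0 / (m * math.sqrt((omega0**2 - wd**2) ** 2 + 4 * b**2 * wd**2))
delta = cmath.phase(omega0**2 - wd**2 - 2j * b * wd)
x_sim = x_simulated(40.0, F0, phi, m, b, omega0, wd, x0=0.4, v0=-0.2)
x_ss = x_steady(40.0, F0, phi, m, b, omega0, wd)
print(f"amplitude = {amp:.6f}, delta = {delta:.6f} rad")
print(f"simulated x(40) = {x_sim:.8f}, steady state x(40) = {x_ss:.8f}")
```

The arbitrary initial conditions leave no visible trace by $t = 40$. The printed $\delta$ is negative, i.e. the displacement lags the force.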

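Differentiating (3.6) we get

$$ \tilde{v}^{ss}(t) = \frac{i\omega_d\tilde{F}_0 e^{i\omega_d t}}{m\left[\omega_0^2 - \omega_d^2 + 2ib\omega_d\right]} = \frac{\tilde{F}_0 e^{i\omega_d t}}{2bm\left[1 - i\frac{\omega_0}{2b}\left(\frac{\omega_0}{\omega_d} - \frac{\omega_d}{\omega_0}\right)\right]}. $$

The steady state velocity is, of course, the corresponding real part:

$$ v^{ss}(t) = \frac{v_0}{\sqrt{1 + Q^2\left(\frac{\omega_0}{\omega_d} - \frac{\omega_d}{\omega_0}\right)^2}}\cos(\omega_d t + \phi + \delta'), $$

where we have used the abbreviations $v_0 = \frac{F_0}{2bm}$, $Q = \frac{\omega_0}{2b}$ and $\delta' = \operatorname{Arg}\left[1 + i\frac{\omega_0}{2b}\left(\frac{\omega_0}{\omega_d} - \frac{\omega_d}{\omega_0}\right)\right]$. (Note that this $v_0$ is not the initial velocity.) What is the connection between $\delta'$ and the phase $\delta$?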

Thus the peak steady state speed is given by

$$ v_p = \frac{v_0}{\sqrt{1 + Q^2\left(\frac{\omega_0}{\omega_d} - \frac{\omega_d}{\omega_0}\right)^2}}. \tag{3.8} $$

This expression shows that the maximum value the peak steady state speed can take is $v_0$, and this speed is attained when the denominator is a minimum - i.e. when $\omega_d = \omega_0$. This is the phenomenon of resonance (velocity resonance, to be precise) and it occurs when the driving frequency matches the natural frequency of the system. What happens to this peak speed when the system is off-resonance, i.e. when $\omega_d \neq \omega_0$? The peak speed now is of course less than $v_0$ - but just how fast it drops off as $\omega_d$ moves away from $\omega_0$ depends on the size of $Q$. If $Q$ is large, the peak speed drops off rapidly as the driving frequency moves away from the natural frequency in either direction - and we have what is called a sharp resonance. On the other hand, if $Q$ is small then the driving frequency can go quite far away from the natural frequency before the peak speed drops appreciably, and we have a flat resonance. This can be seen from figure 3.1, which plots the values of $v_p/v_0$ against $\omega_d/\omega_0$ for a few different values of $Q$.

Note that the resonance curves are not really symmetric about $\omega_0$. This is easy to see from the fact that the function

$$ \frac{1}{\sqrt{1 + Q^2\left(x - \frac{1}{x}\right)^2}} $$

(here we have abbreviated the ratio $\omega_d/\omega_0$ by $x$) vanishes at $x = 0$ and $x = \infty$ - which is anything but symmetric about $x = 1$. It is easy to see that the function takes the same value when $x = \xi$, say, as when $x = 1/\xi$. So there is a symmetry, but this is a symmetry in terms of ratios and not in terms of differences. This means that if the resonance curve is plotted using a logarithmic scale for the frequency, we will get a symmetric curve. On the other hand, if $Q$ is large, the curve drops off rapidly when $x$ moves away from unity, and the important region is $x \approx 1$. Now, we have seen that the value of the function at $x = 1 + \delta$ is the same as its value when $x = \frac{1}{1 + \delta}$ - but when $\delta \ll 1$, which is all we need to worry about when $Q$ is large, we have $\frac{1}{1 + \delta} \approx 1 - \delta$. Thus, the sharp resonance curves that we get for large values of $Q$ are (nearly) symmetrical about $\omega_d = \omega_0$.

Figure 3.1: Resonance curves showing $v_p/v_0$ vs. $\omega_d/\omega_0$ for different values of $Q$.

To get a quantitative measure of the sharpness of a resonance curve, we begin by defining the upper and lower cutoff frequencies (also known as the upper and lower 3 dB frequencies). These are the frequencies (on the upper and lower side of the natural frequency) at which the peak steady state speed drops by a factor of $\sqrt{2}$ from the maximum value attained at resonance. If you are wondering why such a complicated factor - just remember that the power is proportional to the square of the velocity - which is why they are also called half power frequencies. In fact a drop by a factor of 2 in the power means that the power measured in bels drops by 0.3 (the bel is a logarithmic unit and we have $\log 2 \approx 0.3$ - remember that these are logarithms to the base 10!) - so that the power decreases by 0.3 bels, and thus by 3 decibels - justifying the second nomenclature above for these frequencies.

From (3.8) these driving frequencies must satisfy

$$Q^2\left(\frac{\omega_0}{\omega_d} - \frac{\omega_d}{\omega_0}\right)^2 = 1$$

so that

$$\frac{\omega_0}{\omega_d} - \frac{\omega_d}{\omega_0} = \pm\frac{1}{Q}$$

and thus

$$\omega_d^2 \pm \frac{\omega_0}{Q}\,\omega_d - \omega_0^2 = 0.$$

Each of the two quadratic equations thus obtained for $\omega_d$ (one for each sign) has exactly one positive root, since the product of its roots is $-\omega_0^2 < 0$. Solving for the positive root in each case gives us the two 3 dB frequencies as

$$\omega_{\pm\frac{1}{2}} = \omega_0\sqrt{1 + \frac{1}{4Q^2}} \pm \frac{\omega_0}{2Q}.$$

The bandwidth is defined to be the difference between these two frequencies:

$$BW \equiv \omega_{+\frac{1}{2}} - \omega_{-\frac{1}{2}} = \frac{\omega_0}{Q}.$$

The bigger the bandwidth, then, the flatter the resonance. Thus to get a measure of the sharpness we should use the reciprocal of the bandwidth. This has to be scaled by the natural frequency of the system, however: a bandwidth of 500 s$^{-1}$ may be very large when the resonance occurs at, say, 2000 s$^{-1}$, but may not really amount to much if the resonance frequency is, say, $2 \times 10^6$ s$^{-1}$. Thus we define

$$\text{Quality factor} \equiv \frac{\omega_0}{BW}.$$

It is easy to see that according to this definition the quality factor turns

out to be our old friend Q - which is, of course, why we denoted it by

the letter Q in the first place.
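As a numerical sanity check of the formulas above, here is a small Python sketch (not part of the original notes). It evaluates the closed-form 3 dB frequencies using the response function $v_p/v_0 = 1/\sqrt{1 + Q^2(x - 1/x)^2}$ quoted earlier in this section. It then confirms two things: the response there is down by $\sqrt{2}$ (about $-3$ dB), and $\omega_0/BW$ gives back $Q$. The values $\omega_0 = 2000$ s$^{-1}$ and $Q = 4$ are illustrative only, chosen to reproduce the 500 s$^{-1}$ bandwidth of the example above.

```python
import math

def response(x, Q):
    """v_p / v_0 at x = omega_d / omega_0 (the resonance function of this section)."""
    return 1.0 / math.sqrt(1.0 + Q ** 2 * (x - 1.0 / x) ** 2)

def half_power_frequencies(omega0, Q):
    """Upper and lower 3 dB frequencies from the closed-form positive roots."""
    centre = omega0 * math.sqrt(1.0 + 1.0 / (4.0 * Q ** 2))
    shift = omega0 / (2.0 * Q)
    return centre + shift, centre - shift

omega0, Q = 2000.0, 4.0                     # illustrative values (s^-1, dimensionless)
w_plus, w_minus = half_power_frequencies(omega0, Q)
bandwidth = w_plus - w_minus
drop_db = 10 * math.log10(response(w_plus / omega0, Q) ** 2)   # power drop in dB
print(w_plus, w_minus, bandwidth, omega0 / bandwidth, drop_db)
```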

Chapter 4

Coupled oscillations


Part II

Waves


Chapter 5

Waves in 1 dimension

5.1

Non-dispersive waves in 1 dimension

Consider a function f (x) - one whose graph is shown in figure (5.1).

What will be the function which has the same graph, but shifted to the right by a distance $a$? Since the new function, say $\bar f(x)$, has to have the same value at $x + a$ as the original one at $x$, it must satisfy $\bar f(x + a) = f(x)$, or, $\bar f(x) = f(x - a)$.

Now, let us consider a wave travelling to the right at a speed c

without spreading or changing shape. Such a wave is called a non-dispersive wave. If such a wave is described by the function $f(x)$ at $t = 0$, then at a time $t$ it will be shifted by a distance $ct$ to the right, and will hence be described by the function $f(x - ct)$. Again, if a wave travelling to the left is described by the function $g(x)$ at $t = 0$, its description at a later time $t$ is furnished by $g(x + ct)$.

A general wave could be travelling either to the left, or to the right,

or both! So the general function describing a non-dispersive wave in

one dimension is

$$y(x, t) = f(x - ct) + g(x + ct) \tag{5.1}$$

Note that at this point the functions $f$ and $g$ are arbitrary, but as we will soon see, they can be determined from the initial and boundary conditions that we impose on the wave.

Figure 5.1: Shifting a function (graphs of $f(x)$ and of $\bar f(x)$, the same graph shifted to the right by $a$).
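To see (5.1) in action, the short Python sketch below (an illustration, not from the original notes) builds $y = f(x - ct) + g(x + ct)$ from two arbitrarily chosen, hypothetical pulse shapes. It then locates the peak of each piece on a grid. The $f$ piece sits at $x = ct$ and the $g$ piece at $x = -ct$, so each moves at speed $c$ without changing shape.

```python
import math

c = 2.0  # wave speed (illustrative value)

def f(s):
    """Hypothetical right-moving profile, peaked at s = 0."""
    return math.exp(-s ** 2)

def g(s):
    """Hypothetical left-moving profile, smaller and narrower, peaked at s = 0."""
    return 0.5 * math.exp(-4 * s ** 2)

def right_piece(x, t):
    return f(x - c * t)

def left_piece(x, t):
    return g(x + c * t)

def y(x, t):
    """The general non-dispersive wave (5.1)."""
    return right_piece(x, t) + left_piece(x, t)

xs = [k * 0.01 for k in range(-2000, 2001)]  # grid on [-20, 20] with spacing 0.01

def peak_of(piece, t):
    """Grid point at which one piece of the wave is largest at time t."""
    return max(xs, key=lambda x: piece(x, t))

for t in (0.0, 1.0, 3.0):
    print(t, peak_of(right_piece, t), peak_of(left_piece, t))
```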

5.2

The wave equation in 1-D

What we have in (5.1) is a solution to the problem of propagating waves

in 1 dimension. What we now seek is an equation that will have (5.1) as

its solution. In the best traditions of mathematical physics we will look

for a differential equation that is satisfied by y (x, t). Of course, since y

is a function of two variables, this must be a partial differential equation. In addition, since many (indeed, an infinite number) of equations will have (5.1) as their solution, we will try to keep this equation as simple as possible. Also, in keeping with the dictates of simplicity, since

the solution is a sum of two terms, the most obvious choice would be a

linear equation.

Let us start by forming the partial derivatives of the solution:

$$\frac{\partial y}{\partial x}(x, t) = \frac{\partial f}{\partial x}(x - ct) + \frac{\partial g}{\partial x}(x + ct) = f'(x - ct) + g'(x + ct)$$

where we have used the chain rule of differentiation and the fact that $\frac{\partial}{\partial x}(x - ct) = \frac{\partial}{\partial x}(x + ct) = 1$. Similarly

$$\frac{\partial y}{\partial t}(x, t) = -cf'(x - ct) + cg'(x + ct)$$

where we have used $\frac{\partial}{\partial t}(x - ct) = -\frac{\partial}{\partial t}(x + ct) = -c$.

Note that the primes denote differentiation with respect to the respective single arguments of the functions $f$ and $g$. Since both $f$ and $g$ are functions of a single variable each, these are ordinary (not partial) derivatives¹.

But for the change in sign, we have almost reached the equation that we wanted. However, while $\frac{\partial y}{\partial x}$ is $f' + g'$, the other derivative is $-c(f' - g')$. To get the signs to match, we must push on to the second order:

$$\frac{\partial^2 y}{\partial x^2}(x, t) = f''(x - ct) + g''(x + ct)$$

$$\frac{\partial^2 y}{\partial t^2}(x, t) = c^2\left(f''(x - ct) + g''(x + ct)\right)$$

so that we can eliminate the arbitrary functions $f$ and $g$ and write

$$\frac{\partial^2 y}{\partial x^2} - \frac{1}{c^2}\frac{\partial^2 y}{\partial t^2} = 0 \tag{5.2}$$

This is the famous wave equation in 1 dimension.

¹ In case you have forgotten how the chain rule goes, let me show you how you could derive the above from first principles. For example, considering the function $y_1(x, t) = f(x - ct)$ we have

$$\frac{\partial y_1}{\partial t}(x, t) \equiv \lim_{k \to 0}\frac{y_1(x, t + k) - y_1(x, t)}{k} = \lim_{k \to 0}\frac{f(x - c(t + k)) - f(x - ct)}{k} = \lim_{k \to 0}\frac{f(x - ct - ck) - f(x - ct)}{k} = -c\lim_{-ck \to 0}\frac{f(x - ct + (-ck)) - f(x - ct)}{(-ck)}$$

which is nothing but $-cf'(x - ct)$ from the definition of the derivative!

You may wonder why we are bothering to find out the equation - since we already have the solution! After all, isn’t the

aim really to find out solutions to the equations? Let me give

you a few reasons why going the opposite route (as we have

done here) is often a good idea:

1. There are other ways of writing the solution (as we will

see) which may help shed light on various aspects that the

solution (5.1) may not reveal directly.

2. It is easy to generalize the equation. For example, the wave equation in 3-D is obtained by replacing the derivative $\frac{\partial^2}{\partial x^2}$ by, obviously, the Laplacian!

3. There are many physical situations where the basic equations of physics (e.g. those of mechanics or electromagnetism)

lead directly to the wave equation (5.2), or its three dimensional counterpart.
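The derivation can also be checked numerically. The Python sketch below is our own illustration, and the profiles f and g are arbitrary hypothetical choices. It estimates $\partial^2 y/\partial x^2 - c^{-2}\,\partial^2 y/\partial t^2$ with central differences. For $f(x - ct) + g(x + ct)$ the residual is zero up to discretisation error. A pulse moving at the wrong speed, $f(x - 2ct)$, instead leaves a residual of $-3f''$.

```python
import math

c = 1.5  # wave speed (illustrative value)

def f(s):
    return math.exp(-s ** 2)              # hypothetical right-moving profile

def g(s):
    return math.sin(s) / (1 + s ** 2)     # hypothetical left-moving profile

def travelling_sum(x, t):
    """f(x - ct) + g(x + ct): should satisfy the wave equation (5.2)."""
    return f(x - c * t) + g(x + c * t)

def too_fast(x, t):
    """A pulse moving at 2c: should NOT satisfy (5.2)."""
    return f(x - 2 * c * t)

def wave_residual(y, x, t, h=1e-3):
    """Central-difference estimate of y_xx - y_tt / c**2 at the point (x, t)."""
    y_xx = (y(x + h, t) - 2 * y(x, t) + y(x - h, t)) / h ** 2
    y_tt = (y(x, t + h) - 2 * y(x, t) + y(x, t - h)) / h ** 2
    return y_xx - y_tt / c ** 2

for x, t in [(0.3, 0.0), (-1.0, 0.7), (2.0, 1.2)]:
    print(x, t, wave_residual(travelling_sum, x, t), wave_residual(too_fast, x, t))
```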

5.3

The general solution of 1-D wave equation

So far we have constructed the 1-D wave equation (5.2) starting from the solution (5.1). So, even without doing anything else we are sure that (5.1) will satisfy (5.2). However, the question that remains is: are there other solutions to (5.2) which are not of the form (5.1)? The answer is in the negative - (5.1) not only furnishes a solution of (5.2), it actually furnishes the most general solution!

To see this, let us begin by defining $u = x - ct$ and $v = x + ct$. To be mathematically rigorous (a trend that we may not continue for long in this course) we will also define $Y(u, v) = y(x, t)$. Now

$$\frac{\partial y}{\partial x} = \frac{\partial Y}{\partial u}\frac{\partial u}{\partial x} + \frac{\partial Y}{\partial v}\frac{\partial v}{\partial x} = \frac{\partial Y}{\partial u} + \frac{\partial Y}{\partial v}$$

Going ahead, we have

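$$\begin{aligned}
\frac{\partial^2 y}{\partial x^2} &= \frac{\partial}{\partial x}\frac{\partial Y}{\partial u} + \frac{\partial}{\partial x}\frac{\partial Y}{\partial v}\\
&= \frac{\partial u}{\partial x}\frac{\partial}{\partial u}\frac{\partial Y}{\partial u} + \frac{\partial v}{\partial x}\frac{\partial}{\partial v}\frac{\partial Y}{\partial u} + \frac{\partial u}{\partial x}\frac{\partial}{\partial u}\frac{\partial Y}{\partial v} + \frac{\partial v}{\partial x}\frac{\partial}{\partial v}\frac{\partial Y}{\partial v}\\
&= \frac{\partial^2 Y}{\partial u^2} + 2\frac{\partial^2 Y}{\partial u\,\partial v} + \frac{\partial^2 Y}{\partial v^2}
\end{aligned}$$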

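A similar calculation gives

$$\frac{1}{c^2}\frac{\partial^2 y}{\partial t^2} = \frac{\partial^2 Y}{\partial u^2} - 2\frac{\partial^2 Y}{\partial u\,\partial v} + \frac{\partial^2 Y}{\partial v^2}$$

so that in terms of the variables $u$ and $v$ the wave equation becomes

$$4\frac{\partial^2 Y}{\partial u\,\partial v} = 0 \tag{5.3}$$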
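For completeness, here is the "similar calculation" for the time derivatives written out. It uses $\partial u/\partial t = -c$ and $\partial v/\partial t = c$, and assumes that the mixed partials $\partial^2 Y/\partial u\,\partial v$ and $\partial^2 Y/\partial v\,\partial u$ are equal, as they are for any sufficiently smooth $Y$:

```latex
\begin{aligned}
\frac{\partial y}{\partial t} &= \frac{\partial Y}{\partial u}\frac{\partial u}{\partial t} + \frac{\partial Y}{\partial v}\frac{\partial v}{\partial t}
  = c\left(-\frac{\partial Y}{\partial u} + \frac{\partial Y}{\partial v}\right),\\
\frac{\partial^2 y}{\partial t^2} &= c\left[-\left(-c\,\frac{\partial^2 Y}{\partial u^2} + c\,\frac{\partial^2 Y}{\partial v\,\partial u}\right)
  + \left(-c\,\frac{\partial^2 Y}{\partial u\,\partial v} + c\,\frac{\partial^2 Y}{\partial v^2}\right)\right]
  = c^2\left(\frac{\partial^2 Y}{\partial u^2} - 2\frac{\partial^2 Y}{\partial u\,\partial v} + \frac{\partial^2 Y}{\partial v^2}\right).
\end{aligned}
```

Subtracting $c^{-2}$ times this from the result for $\partial^2 y/\partial x^2$ leaves only the mixed term, $4\,\partial^2 Y/\partial u\,\partial v$, which is (5.3).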

Equation (5.3) is of a particularly simple form and thus can be solved rather easily. To begin with, it says that the partial derivative with respect to $u$ of $\frac{\partial Y}{\partial v}$ (a function of $u$ and $v$) vanishes, so $\frac{\partial Y}{\partial v}$ must be a function of $v$ alone:

$$\frac{\partial Y}{\partial v} = G(v)$$

Integrating this once again gives

$$Y(u, v) = \int^{v} G(v')\,dv' + f(u).$$

Note that the "constant of integration" here is anything that vanishes when you differentiate partially with respect to $v$, so that it can be an arbitrary function² of $u$, $f(u)$. Abbreviating $\int^{v} G(v')\,dv'$ by $g(v)$ we get

$$Y(u, v) = f(u) + g(v)$$

so that the most general solution of the wave equation is

$$y(x, t) = f(x - ct) + g(x + ct)$$

where $f$ and $g$ are arbitrary (sufficiently smooth) functions, which is what we started out with. This is called the d'Alembert solution, in honor of the French mathematician, physicist and philosopher Jean le Rond d'Alembert.

² Of course, this function cannot be completely arbitrary - it must be sufficiently smooth for the differentiations to make sense.


5.4

The wave equation in a stretched string

So far, we have seen that waves that travel either to the left or to the right in 1 dimension without changing their shape or size have to obey

the wave equation (5.2). This has been arrived at from a purely mathematical point of view - and still leaves the question of the physical

relevance (or otherwise) of this equation open. In this section we will

see that there is at least one system (and we will find more of these in

the days to come) for which the wave equation is a very good approximation.

Consider an (initially) horizontal string having a mass $m$ per unit length that is stretched by a uniform tension $T$ and undergoes small vertical oscillations. The tension in the string is strong enough for us to

ignore all other forces, notably that of gravity. Let us consider an infinitesimally small segment of the string, between x and x + dx. The

mass of this segment is $m\,dx$. The net external force on this segment

is provided by the tensions exerted by the portions of the string to the

right and to the left of the segment, respectively. The net external force

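in the vertical direction is, then,

$$T\left.\frac{\partial y}{\partial x}\right|_{x+dx} - T\left.\frac{\partial y}{\partial x}\right|_{x} = T\frac{\partial^2 y}{\partial x^2}\,dx$$

where we have used the fact that for small oscillations the vertical component of the tension, $T\sin\theta$ ($\theta$ being the angle the string makes with the horizontal), is very nearly $T\tan\theta = T\,\partial y/\partial x$. Using this in Newton's second law yields

$$m\,dx\,\frac{\partial^2 y}{\partial t^2} = T\frac{\partial^2 y}{\partial x^2}\,dx$$

or

$$\frac{\partial^2 y}{\partial x^2} = \frac{m}{T}\frac{\partial^2 y}{\partial t^2}$$

which is of the form (5.2). This also shows that the speed of waves travelling in a stretched string is given by

$$c = \sqrt{\frac{T}{m}}. \tag{5.4}$$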
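Equation (5.4) is easy to put numbers into. The Python sketch below uses made-up, illustrative values, not taken from the notes: a tension of 100 N and a linear density of 0.01 kg/m. It also shows the square-root scaling, since quadrupling the tension only doubles the wave speed.

```python
import math

def string_wave_speed(tension, mass_per_length):
    """Wave speed c = sqrt(T / m) from (5.4), in SI units."""
    return math.sqrt(tension / mass_per_length)

c = string_wave_speed(100.0, 0.01)           # T = 100 N, m = 0.01 kg/m (illustrative)
c_tighter = string_wave_speed(400.0, 0.01)   # four times the tension
print(c, c_tighter / c)
```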

5.5

Solving the initial value problem

So far we have established that the wave equation

$$\frac{\partial^2 y}{\partial x^2} - \frac{1}{c^2}\frac{\partial^2 y}{\partial t^2} = 0$$

has the general solution

$$y(x, t) = f(x - ct) + g(x + ct)$$

where $f$ and $g$ are, as yet, unknown functions. Initial and boundary conditions will allow us to choose these functions and hence solve for the wave.

Let the initial conditions specified be

$$y(x, 0) = y_0(x) \tag{5.5}$$

$$\frac{\partial y}{\partial t}(x, 0) = v_0(x) \tag{5.6}$$

Substituting these in (5.1) leads to the equations

$$f(x) + g(x) = y_0(x) \tag{5.7}$$

$$f'(x) - g'(x) = -\frac{v_0(x)}{c} \tag{5.8}$$

where the primes on $f$ and $g$ denote differentiation with respect to their respective arguments. Integrating (5.8) leads to

$$f(x) - g(x) = -\frac{1}{c}\int_{\xi}^{x} v_0(x')\,dx' \tag{5.9}$$

where $\xi$ is an arbitrarily chosen fixed coordinate. These easily lead to

$$f(x) = \frac{1}{2}y_0(x) - \frac{1}{2c}\int_{\xi}^{x} v_0(x')\,dx' = \frac{1}{2}\left(y_0(x) - V_0(x)\right) \tag{5.10}$$

$$g(x) = \frac{1}{2}y_0(x) + \frac{1}{2c}\int_{\xi}^{x} v_0(x')\,dx' = \frac{1}{2}\left(y_0(x) + V_0(x)\right) \tag{5.11}$$

where we have used the abbreviation

$$V_0(x) = \frac{1}{c}\int_{\xi}^{x} v_0(x')\,dx'.$$

Equations (5.10) and (5.11) show that the functions $f(x)$ and $g(x)$ are known only up to an additive constant (because of the arbitrary choice for $\xi$). This arbitrariness goes away, however, when we consider the full solution

$$y(x, t) = \frac{1}{2}\left(y_0(x - ct) + y_0(x + ct)\right) + \frac{1}{2c}\int_{x - ct}^{x + ct} v_0(x')\,dx' \tag{5.12}$$

Equation (5.12) is the complete solution to the initial value problem for

waves in one dimension. Below we consider the actual solution for a

couple of specific initial conditions.
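Formula (5.12) translates directly into code. The Python sketch below is one possible implementation. The function name and the use of the composite trapezoidal rule for the velocity integral are our choices, not the notes'. It is checked against the case $y_0 = 0$, $v_0(x) = \cos x$, for which (5.12) gives $y = \cos x\,\sin(ct)/c$ exactly.

```python
import math

def dalembert(y0, v0, c, x, t, panels=2000):
    """Evaluate the d'Alembert solution (5.12) at one point (x, t).

    y0, v0 : callables giving the initial displacement and velocity on the whole line
    c      : wave speed
    The velocity integral over [x - ct, x + ct] uses the composite trapezoidal rule.
    """
    a, b = x - c * t, x + c * t
    displacement = 0.5 * (y0(a) + y0(b))
    if b == a:                      # t = 0: no velocity contribution yet
        return displacement
    h = (b - a) / panels
    inner = sum(v0(a + k * h) for k in range(1, panels))
    integral = h * (0.5 * v0(a) + inner + 0.5 * v0(b))
    return displacement + integral / (2 * c)

# Example: string initially flat but moving with velocity cos(x), with c = 2
c, x, t = 2.0, 0.4, 0.3
numeric = dalembert(lambda s: 0.0, math.cos, c, x, t)
exact = math.cos(x) * math.sin(c * t) / c
print(numeric, exact)
```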

Figure 5.2: Wave propagation in an infinitely long plucked string. Panel (a) shows the initial configuration of the string, as well as the functions $f(x)$ and $g(x)$, while panels (b)-(f) show various stages of the propagation of the wave. In each of these the red and blue lines depict $f(x - ct)$ and $g(x + ct)$, respectively. As you can see, these shift respectively to the right and the left as time progresses. The vertical dashed lines are meant to be a guide to the eye. For example, in (a), for the region to the left of the first such line, both $f(x - ct)$ and $g(x + ct)$ vanish, leading to $y(x, t) = 0$. Between the first and the second line, only $g(x + ct)$ is nonzero, so that it coincides with $y(x, t)$ here. Both $f(x - ct)$ and $g(x + ct)$ are nonzero and increasing between the second and the third vertical lines, leading to $y$ increasing twice as fast here. Between the third and the fourth lines, $g(x + ct)$ is falling while $f(x - ct)$ is still rising, and the symmetry of the functions in this example ensures that the string is flat here.


5.5.1


A plucked infinite string

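Let us consider an infinite string that is given the initial shape shown in figure (5.2a) and released from rest. Thus, the initial conditions are

$$v_0(x) = 0$$

$$y_0(x) = \begin{cases} 0 & \text{for } x < -a \\ h\left(1 + \frac{x}{a}\right) & \text{for } -a \le x < 0 \\ h\left(1 - \frac{x}{a}\right) & \text{for } 0 \le x < a \\ 0 & \text{for } x \ge a \end{cases}$$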

Equation (5.9) immediately leads to

$$f(x) = g(x)$$

so that (5.7) yields

$$f(x) = g(x) = \frac{1}{2}y_0(x)$$

Thus, the wave at time $t$ is given by

$$y(x, t) = \frac{y_0(x - ct) + y_0(x + ct)}{2}$$

This result has a very simple interpretation - the final wave consists of

two pieces, each half as high as the initial disturbance, one travelling

to the left and the other to the right. When the two pieces are well

separated, you can see them exactly as they are - two distinct pulses.

For a short while after t = 0, however, the two pulses overlap and the

resulting disturbance looks slightly more complicated.
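A minimal Python sketch of this solution makes the two claims above concrete. It uses the illustrative, hypothetical choices $h = a = c = 1$. At $t = a/(2c)$ the overlapping halves produce a flat top of height $h/2$ over $|x| \le a/2$. Once $ct > a$ there are two separate triangles of height $h/2$ centred at $x = \pm ct$.

```python
def y0(x, h=1.0, a=1.0):
    """Initial triangular pluck of height h and half-width a (released from rest)."""
    if -a <= x < 0:
        return h * (1 + x / a)
    if 0 <= x < a:
        return h * (1 - x / a)
    return 0.0

def plucked(x, t, c=1.0):
    """y(x, t) = [y0(x - ct) + y0(x + ct)] / 2 for the infinite string."""
    return 0.5 * (y0(x - c * t) + y0(x + c * t))

# overlapping stage, t = a / (2c): flat top of height h/2 for |x| <= a/2
print([plucked(x, 0.5) for x in (-0.5, -0.25, 0.0, 0.25, 0.5)])
# separated stage, t = 3: two half-height pulses centred at x = -3 and x = 3
print(plucked(-3.0, 3.0), plucked(0.0, 3.0), plucked(3.0, 3.0))
```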

5.5.2

A struck infinite string

Let us now consider the other extreme - a horizontal string that is dealt

a sudden impulsive blow. Immediately after the blow is struck we have

$$y(x, 0) \equiv y_0(x) = 0$$

so that in this case (5.12) leads to

$$y(x, t) = \frac{1}{2c}\int_{x - ct}^{x + ct} v_0(x')\,dx'$$

which gives us a complete solution of the problem.

On the other hand, it is quite instructive to look at this problem

from the point of view of the right and left going pulses. From (5.7) we

get that in this case

f (x) + g (x) = 0

Figure 5.3: Waves in a struck infinite string.

Coupled with (5.9) this yields

$$f(x) = -g(x) = -\frac{1}{2c}\int_{\xi}^{x} v_0(x')\,dx'$$

For the sake of definiteness, we will discuss the (somewhat artificial) situation where the initial velocity is a constant $v_0$ in a small interval of the string and zero everywhere else:

$$v_0(x) = \begin{cases} v_0 & \text{for } |x| \le a \\ 0 & \text{for } |x| > a \end{cases}$$

Choosing $\xi \le -a$, this gives us

$$g(x) = -f(x) = \begin{cases} 0 & \text{for } x < -a \\ \frac{x + a}{2c}\,v_0 & \text{for } -a \le x \le a \\ \frac{a}{c}\,v_0 & \text{for } x > a \end{cases}$$

Initially the right going and left going pulses cancel each other out. But as they start separating from each other, their sum $y(x, t)$ forms a trapezium that grows in both height and width. This continues as long as the inclined parts of the two pulses overlap. After sufficient time ($t > a/c$), the pulses separate far enough to ensure that this is no longer the case. From this point on, the trapezium keeps on growing in width, but the height stays fixed at $\frac{a}{c}v_0$. With a little thought you should be able to see that the width of the trapezium at the base is given by $2(a + ct)$, while the height is given by $v_0 t$ for $t \le \frac{a}{c}$. You can see all this graphically in figure (5.3).
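The trapezium can be checked with a few lines of Python, in an illustrative sketch with the hypothetical values $v_0 = a = c = 1$. For the box-shaped initial velocity, the integral in the solution above reduces to $v_0/(2c)$ times the length of the part of $[x - ct, x + ct]$ that lies inside $[-a, a]$. That length is what the function below computes.

```python
def struck(x, t, v0=1.0, a=1.0, c=1.0):
    """y(x, t) = (1/2c) * integral of the box velocity profile over [x - ct, x + ct]."""
    lo = max(x - c * t, -a)
    hi = min(x + c * t, a)
    overlap = max(0.0, hi - lo)   # length of [x - ct, x + ct] lying inside [-a, a]
    return v0 * overlap / (2 * c)

# height at the centre: v0 * t while t <= a/c, then stuck at a * v0 / c
print([struck(0.0, t) for t in (0.25, 0.5, 1.0, 2.0, 5.0)])
# base of the trapezium at t = 3 runs from -(a + ct) = -4 to a + ct = 4
print(struck(3.99, 3.0), struck(4.01, 3.0))
```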

5.6

Boundary conditions

So far, we have not worried about boundary conditions - since the

strings we had dealt with were infinite anyway, there were no boundaries to worry about! However, once we move away from simple infinite strings, we will have to worry about the boundaries of our system. We will consider several different kinds of these conditions, which arise in different physical situations.

5.6.1

A semi-infinite string - fixed at one end

Let us consider a string that is stretched from x = 0 to x = ∞. Let the

end at x = 0 be fixed. This means that

$$y(0, t) = 0 \quad \forall t \ge 0. \tag{5.13}$$

Figure 5.4: Graphical interpretation of $-g(-x)$ (graphs of $g(x)$, $g(-x)$ and $-g(-x)$).

As in the last section, we have the initial conditions

$$y(x, 0) = y_0(x)$$

$$\frac{\partial y}{\partial t}(x, 0) = v_0(x)$$

but unlike the last section, where these were valid for all x, here they

are valid for x ≥ 0 only! As in the last section, we get

$$f(x) = \frac{1}{2}y_0(x) - \frac{1}{2c}\int_{\xi}^{x} v_0(x')\,dx'$$

$$g(x) = \frac{1}{2}y_0(x) + \frac{1}{2c}\int_{\xi}^{x} v_0(x')\,dx'$$

but, once again, these will work only for nonnegative values of $x$ (in fact we must take the arbitrary constant $\xi$ to be nonnegative as well; it may be useful to take $\xi = 0$).

So it seems that we have all that we need to find the solution y (x, t)

using f (x − ct) + g (x + ct) - remember that we need this for x ≥ 0, anyway! There is no problem with the g (x + ct) part - the argument is

positive for all x ≥ 0 and t ≥ 0. However, the argument of f (x − ct) can

be negative for sufficiently large t even if x is positive - so we need to

know the function f (x) for negative x as well. This information can be

found by appealing to (5.13) - which leads to

$$f(-ct) + g(ct) = 0 \quad \forall t \ge 0.$$

Thus, for $x \le 0$ we have

$$f(x) = -g(-x).$$

Note that $-x$ is non-negative in this case, so that we do know $g(-x)$ from the initial conditions. Note also that we can get the graph of $g(-x)$ by reflecting that of $g(x)$ about the $Y$-axis. Reflecting again about the $X$-axis gives $-g(-x)$, as can be seen from figure (5.4).

To see how things work out in this case, consider the situation

where the string is plucked as before. As in the case of the infinite

string, the wave in this case gives rise to pulses that are half as high.

The right going wave f , however, has another piece that comes from

reflecting g (x) successively about the X and the Y axes. This part of

f (x) is not visible initially - since the string exists only for positive x. As

time goes by, the left going pulse g and the right going pulse f approach

$x = 0$ ($f$, of course, has another part that moves away to the right). Once they reach $x = 0$ and cross over, the $g$ pulse passes into the unphysical region while the second $f$ pulse passes into the physical one. For a brief interval, parts of both the pulses overlap in the physical region and then we are left with the $f$ pulse only. You can see all this in figure (5.5). The upshot is that a pulse travelling towards the fixed end $x = 0$ gets reflected from this end and returns with a reversal of sign. This is a familiar feature of waves: reflection from a fixed wall results in a phase reversal.
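The reflection argument can be tried out in a few lines of Python. This sketch is ours, not the notes'. It uses a hypothetical triangular pluck centred at $x = 5$ on a string with $c = 1$, and extends $f$ to negative arguments with $f(x) = -g(-x)$. It confirms that $y(0, t)$ stays zero. It also shows that by $t = 10$ the pulse that went left has come back inverted, while the other half continues to the right.

```python
def y0(x, centre=5.0, h=1.0, a=1.0):
    """Hypothetical initial pluck: a triangle of height h and half-width a at x = centre."""
    s = abs(x - centre)
    return h * (1 - s / a) if s < a else 0.0

def g(x):
    # released from rest, so g = y0 / 2 on the physical side x >= 0
    return 0.5 * y0(x)

def f(x):
    # f = y0 / 2 for x >= 0; the fixed end supplies f(x) = -g(-x) for x < 0
    return 0.5 * y0(x) if x >= 0 else -g(-x)

def y(x, t, c=1.0):
    return f(x - c * t) + g(x + c * t)

print([y(0.0, t) for t in (0.0, 2.5, 5.0, 7.5)])   # the fixed end never moves
print(y(5.0, 10.0), y(15.0, 10.0))                  # reflected (inverted) and outgoing pulses
```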

5.6.2

A semi-infinite string - free end

If the end at $x = 0$ is free to move, the boundary condition is quite different. Since the net vertical force on the (massless) free end must be zero, this leads to

$$\frac{\partial y}{\partial x}(0, t) = 0 \quad \forall t \ge 0$$

This means that

$$f'(-ct) + g'(ct) = 0$$

so that for $x \le 0$ we have

$$f'(x) = -g'(-x)$$

and integration leads to

$$f(x) = g(-x)$$

(the constant of integration vanishes if we take $\xi = 0$, since then $f(0) = g(0) = \frac{1}{2}y_0(0)$). This allows us to find $f$ for negative $x$ from $g(-x)$, which we can find from the initial conditions.
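The same kind of sketch works for the free end. Again this is our own illustration, with a hypothetical Gaussian pluck centred at $x = 5$ and $c = 1$. With $f(x) = g(-x)$ for $x < 0$, the slope at $x = 0$ vanishes at all times. The returning pulse also comes back with the same sign, unlike the phase reversal at a fixed end.

```python
import math

def y0(x):
    """Hypothetical smooth initial pluck: a Gaussian bump centred at x = 5."""
    return math.exp(-(x - 5.0) ** 2)

def g(x):
    return 0.5 * y0(x)                 # released from rest

def f(x):
    # f = y0 / 2 for x >= 0; the free end supplies f(x) = g(-x) for x < 0
    return 0.5 * y0(x) if x >= 0 else g(-x)

def y(x, t, c=1.0):
    return f(x - c * t) + g(x + c * t)

def slope_at_end(t, h=1e-5):
    """Central-difference estimate of dy/dx at x = 0, using the extended f and g."""
    return (y(h, t) - y(-h, t)) / (2 * h)

print([slope_at_end(t) for t in (3.0, 4.5, 5.0, 6.0)])  # free end: zero slope
print(y(5.0, 10.0))                                      # reflected pulse, same sign
```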

5.6.3

A string fixed at both ends

Let us next turn to a case which is familiar from most stringed musical

instruments - a string that is fixed at both ends. This gives us the

boundary conditions :

$$y(0, t) = y(L, t) = 0 \quad \forall t \ge 0.$$

Figure 5.5: Reflection of a pulse at a fixed end of a string. On the left we have plotted $f(x - ct)$ (in red) and $g(x + ct)$ (in blue) for successive values of $t$. The shaded region is unphysical ($x < 0$). On the right we have plotted $y(x, t) = f(x - ct) + g(x + ct)$ for the physical region $x \ge 0$ only.

Once again, the initial conditions will give us the values of $f(x)$ and $g(x)$ in terms of $y_0(x)$ and $v_0(x)$, but this time these values will be available only for $x \in [0, L]$. In order to find $y(x, t)$ for all $t \ge 0$, we must also know the values of $f(x)$ for $x < 0$ and the values of $g(x)$ for $x > L$. This is where the boundary conditions come in. From $y(0, t) = 0$, $\forall t \ge 0$ we get, as before,

$$f(x) = -g(-x) \quad \forall x < 0. \tag{5.14}$$

From the boundary at $x = L$ we get

$$f(L - ct) + g(L + ct) = 0, \quad \forall t > 0.$$

Substituting $x$ for $L + ct$ then gives

$$g(x) = -f(2L - x), \quad \forall x > L. \tag{5.15}$$

Note that these two relations (5.14) and (5.15) combine to give

$$g(x + 2L) = -f(-x) = g(x)$$

or $f(x - 2L) = -g(2L - x) = f(x)$. This shows that both $f(x)$ and $g(x)$ are periodic functions with a period of $2L$.

To see the effect of these boundary conditions, we consider a case

where the string is plucked at the middle and let go. In this case, the

initial conditions are

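$$y_0(x) = \begin{cases} 2h\frac{x}{L} & \text{for } 0 \le x < \frac{L}{2} \\ 2h\left(1 - \frac{x}{L}\right) & \text{for } \frac{L}{2} \le x < L \end{cases}$$

$$v_0(x) = 0$$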

In the physical region, 0 ≤ x ≤ L, this means that f (x) and g (x) are both

half as high as the triangle described by y0 (x). These functions need

to be extended beyond the physical region by using (5.14) and (5.15).

Figure (5.6) describes the time evolution of the wave using y (x, t) =

f (x − ct) + g (x + ct).
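In code, the extension rules (5.14) and (5.15) amount to extending $y_0/2$ to an odd function with period $2L$. The Python sketch below does this for the triangular pluck above, with the illustrative choices $L = h = c = 1$ (our own, not from the notes). It then checks the boundary conditions and the period $2L/c$. It also checks that a side stays stationary at $t = L/(4c)$ and that the triangle is inverted after half a period.

```python
import math

L, h, c = 1.0, 1.0, 1.0   # illustrative string length, pluck height, wave speed

def y0(x):
    """Initial triangle on [0, L] with peak h at the midpoint."""
    return 2 * h * x / L if x < L / 2 else 2 * h * (1 - x / L)

def extended(x):
    """Odd, 2L-periodic extension of y0 implied by (5.14) and (5.15)."""
    x = math.fmod(x, 2 * L)
    if x < 0:
        x += 2 * L               # now 0 <= x <= 2L
    return y0(x) if x <= L else -y0(2 * L - x)

def y(x, t):
    # released from rest, so f = g = (extended y0) / 2
    return 0.5 * (extended(x - c * t) + extended(x + c * t))

print(y(0.0, 0.37), y(L, 0.37))       # both ends stay fixed
print(y(0.1, 0.25), y0(0.1))          # a side untouched until the corner arrives
print(y(0.5, 0.25), y(0.5, 1.0))      # flat top h/2, then inverted triangle
```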

You may find the nice trapezoidal shape of the string at any given

time something of a surprise. In fact, our experience with real plucked

strings tells us to expect that the shape of the string at any given instant is a sinusoidal curve. However, you must remember that real

strings have damping, which is something we have ignored in our discussion so far. We are getting a bit ahead of the story here, but as we will soon see, the wave can be thought of as a sum of lots of sinusoids. What damping does is to make the higher harmonics die out rapidly,

leaving only the fundamental. This, of course, does not happen in our

fictitious undamped string.

It is, in fact, rather easy to understand the time evolution of the string on physical grounds. Note that the tensions at the two ends of any straight segment of the string cancel each other out. At the beginning, therefore, there is no force on any part of the string except for the top corner! So it is not a surprise that parts of the sides of the triangle stay stationary until the corner, which is travelling down, reaches them.

Figure 5.6: Waves in a plucked string that is fixed at both ends. The unphysical regions have been shaded. This sequence of pictures shows only one-quarter of a time period.

5.7

A tale of two strings

Let us now consider a situation where two semi-infinite strings are

joined together at x = 0. Let the speed of the wave for the string at x > 0

be $c_1$ and that for $x < 0$ be $c_2$. So, the wave is described by

$$y(x, t) = \begin{cases} f_1(x - c_1 t) + g_1(x + c_1 t) & x > 0 \\ f_2(x - c_2 t) + g_2(x + c_2 t) & x < 0 \end{cases} \tag{5.16}$$

The initial conditions will yield the values of $f_1$, $g_1$ for $x > 0$ and of $f_2$, $g_2$ for $x < 0$. What we need for a description of the wave at all times $t > 0$ are the values of $f_1$ for $x < 0$ and $g_2$ for $x > 0$. To find these, we need to examine what happens at the point where the two strings join.

The string is continuous at $x = 0$. Indeed, it has to be continuous everywhere, but its continuity elsewhere can be taken care of by simply demanding that $f_1$, $g_1$, $f_2$ and $g_2$ be continuous. This, in turn, follows from the continuity of $y_0(x)$ and $\int_{\xi}^{x} v_0(x')\,dx'$. Continuity at the junction gives

$$f_1(-c_1 t) + g_1(c_1 t) = f_2(-c_2 t) + g_2(c_2 t)$$

Writing $x$ for $-c_1 t$ we see that $\forall x \le 0$:

$$f_1(x) + g_1(-x) = f_2(\mu x) + g_2(-\mu x)$$

where $\mu$ is the ratio $c_2/c_1$. Note that in this equation the quantities $g_1(-x)$ and $f_2(\mu x)$ are known from initial conditions, while $f_1(x)$ and $g_2(-\mu x)$ are precisely the quantities that we need to know. This suggests rewriting the equation as

$$f_1(x) - g_2(-\mu x) = f_2(\mu x) - g_1(-x) \tag{5.17}$$

We need one more continuity condition. This follows from the fact that the slopes of the two strings must match where they join. If they didn't, there would be an unbalanced force on the junction, which is not possible because the junction is massless! This leads to

$$\left.\frac{\partial y}{\partial x}\right|_{x=0^+} = \left.\frac{\partial y}{\partial x}\right|_{x=0^-}$$

which means

$$f_1'(-c_1 t) + g_1'(c_1 t) = f_2'(-c_2 t) + g_2'(c_2 t)$$

Writing $x$ for $-c_1 t$ yields

$$f_1'(x) + g_1'(-x) = f_2'(\mu x) + g_2'(-\mu x)$$

so that integration yields

$$f_1(x) - g_1(-x) = \frac{1}{\mu}f_2(\mu x) - \frac{1}{\mu}g_2(-\mu x).$$

Once again we can rearrange so that the terms we seek land up on the left hand side:

$$f_1(x) + \frac{1}{\mu}g_2(-\mu x) = \frac{1}{\mu}f_2(\mu x) + g_1(-x). \tag{5.18}$$

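Equations (5.17) and (5.18) can be easily solved to yield

$$\begin{aligned} f_1(x) &= \frac{2}{1 + \mu}f_2(\mu x) + \frac{\mu - 1}{\mu + 1}g_1(-x) \\ g_2(-\mu x) &= \frac{1 - \mu}{1 + \mu}f_2(\mu x) + \frac{2\mu}{\mu + 1}g_1(-x) \end{aligned} \qquad \forall x \le 0 \tag{5.19}$$

The second of these can be rewritten as

$$g_2(x) = \frac{1 - \mu}{1 + \mu}f_2(-x) + \frac{2\mu}{\mu + 1}g_1\left(\frac{x}{\mu}\right) \quad \forall x \ge 0 \tag{5.20}$$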

Equations (5.19) and (5.20) give us all the information we need to find the value of the wave everywhere using equation (5.16). To understand what's going on better, let us start with an initial condition in which we only have a wave starting from a large positive $x$ moving in towards the junction at $x = 0$. In this case the initial conditions tell us that

$$f_1(x) = 0, \quad x > 0,$$

and

$$f_2(x) = g_2(x) = 0, \quad x < 0.$$

Equations (5.19) and (5.20) then give us the extra pieces needed to complete the picture:

$$f_1(x) = \frac{\mu - 1}{\mu + 1}g_1(-x), \quad x < 0$$

$$g_2(x) = \frac{2\mu}{\mu + 1}g_1\left(\frac{x}{\mu}\right), \quad x > 0$$

Note that initially only $g_1$ is nonzero in its physical region, which describes exactly a wave travelling towards the junction. If the leading edge of the pulse is at a distance $a$ from the junction, then the leading edges of the pulses $f_1$ and $g_2$ are at distances of $a$ and $a\mu$ from it. Since these travel at speeds $c_1$ and $c_2$ respectively, all three pulses will impinge on the junction together, at $t = a/c_1$. At this instant, of course, only $g_1$ is physical. After some time, $g_1$ passes completely into the unphysical region and disappears from view. What we see now are the pulses $f_1$ and $g_2$, which have passed into their physical region. These are the reflected and transmitted pulses, respectively! Note that if $\mu = 1$, there is no reflected pulse, while the transmitted pulse is identical to the incident one. This makes perfect sense, because when $\mu$ is 1, the two strings are really identical - there is no junction in this case! Also note that if $\mu < 1$ there is a sign change between the incident pulse $g_1$ and the reflected pulse $f_1$. This means that if the pulse is travelling from a string where its speed is more to one where its speed is less, there is a phase change on reflection. Also realise that if the original pulse has a width $\delta$, the transmitted pulse $g_2$ has a width of $\mu\delta$. If the incident pulse is a (perhaps modulated) sinusoid of wavelength $\lambda$, the transmitted pulse will have a wavelength of $\mu\lambda$. This is consistent with the fact that the frequency of the pulse does not change on transmission, so that the difference in speeds translates directly into a change in wavelength.
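Here is a numerical check of (5.19) and (5.20) in the simplest case above, as a Python sketch. It uses illustrative values $c_1 = 2$, $c_2 = 1$ (so $\mu = 1/2$) and a hypothetical Gaussian incident pulse centred at $x = 10$. The names `refl` and `trans` for the two amplitude factors are ours. The sketch confirms that displacement and slope are continuous at the junction while the pulse is crossing it. It also shows that by $t = 10$ an inverted reflected pulse of height $-1/3$ sits at $x = 10$, and a transmitted pulse of height $2/3$ and half the width sits at $x = -5$.

```python
import math

c1, c2 = 2.0, 1.0                 # illustrative speeds: the x > 0 string is faster
mu = c2 / c1                      # the ratio mu = c2 / c1 used in the text
refl = (mu - 1) / (mu + 1)        # factor multiplying g1(-x) in f1
trans = 2 * mu / (mu + 1)         # factor multiplying g1(x / mu) in g2

def g1(x):
    """Hypothetical incident pulse: a Gaussian centred at x = 10, moving left."""
    return math.exp(-(x - 10.0) ** 2)

def f1(x):
    # zero on the physical side initially; the reflected piece lives at x < 0
    return 0.0 if x > 0 else refl * g1(-x)

def g2(x):
    # zero on the physical side initially; the transmitted piece lives at x > 0
    return 0.0 if x < 0 else trans * g1(x / mu)

def y(x, t):
    """The wave (5.16); f2 vanishes identically in this example."""
    if x >= 0:
        return f1(x - c1 * t) + g1(x + c1 * t)
    return g2(x + c2 * t)

def one_sided_slope(x, t, side, h=1e-6):
    """Difference quotient for dy/dx using a point on the right (side=+1) or left (side=-1)."""
    return (y(x + side * h, t) - y(x, t)) / (side * h)

for t in (4.5, 5.0, 5.3):   # the pulse is crossing the junction
    print(t, y(1e-9, t) - y(-1e-9, t),
          one_sided_slope(0.0, t, 1) - one_sided_slope(0.0, t, -1))
print(refl, trans, y(10.0, 10.0), y(-5.0, 10.0))
```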

Chapter 6

Waves and Fourier analysis

We will now outline another way of solving the wave equation. This may

seem to be a waste of time - since we have already managed to solve the

equation! The method we will now adopt to solve the equation is a very

important one - and this will also give us the opportunity to introduce

one of the most important topics in mathematical physics.

6.1

Separation of variables

We will solve the wave equation for a string that is fixed at both ends.

Let us try to look for solutions having the special form :

y (x, t) = X (x) T (t) .

Substituting this form in the wave equation we get

$$T\frac{d^2 X}{dx^2} = \frac{1}{c^2}X\frac{d^2 T}{dt^2}$$

Next we divide both sides by $XT$ and land up with

$$\frac{1}{X}\frac{d^2 X}{dx^2} = \frac{1}{c^2 T}\frac{d^2 T}{dt^2}$$

This separates the two variables $x$ and $t$ completely: the left hand side depends on $x$ alone while the right hand side depends only on $t$. This is what gives this method, the "separation of variables", its name. Now, if either the left hand side or the right hand side had been non-constant functions of $x$ and $t$ respectively, we could have destroyed the equality by changing one but not the other of these two independent variables. This means that their common value must be a constant! Now, if this constant were positive, it would give us solutions that would grow exponentially with time¹. To avoid this unphysical behavior², we conclude that this so-called separation constant must be negative and hence write it as $-k^2$. Thus we have

$$\frac{d^2 X}{dx^2} + k^2 X = 0.$$

This is a very familiar equation for us indeed and we can immediately

write down its solution

X (x) = A sin (kx) + B cos (kx) .

Let us now impose the boundary conditions. Since y (x, t) has to vanish

at x = 0 and x = L at all values of t, we must have

X (0) = X (L) = 0.

The first of these, X (0) = 0 tells us that B = 0, while the second leads

to

A sin (kL) = 0

Now, we cannot have $A = 0$, for that would cause the solution to vanish identically ($y = 0$ does satisfy the wave equation, of course, but it is a rather uninteresting solution - don't you think?), so we have to

ensure that

sin (kL) = 0

This means that only certain values of $k$ are allowed:

$$k = \frac{n\pi}{L}, \quad n \in \mathbb{Z},$$

so that the solution $X$ consistent with the boundary conditions is

$$X(x) = A\sin\left(\frac{n\pi x}{L}\right)$$

As for the function $T$, it obeys

$$\frac{1}{c^2 T}\frac{d^2 T}{dt^2} = -k^2$$

or

$$\frac{d^2 T}{dt^2} + \omega^2 T = 0$$

where $\omega = kc = \frac{n\pi c}{L}$.

¹ The solution of $\frac{1}{c^2 T}\frac{d^2 T}{dt^2} = \kappa^2 > 0$ is $T(t) = e^{\pm c\kappa t}$. The solution with the + sign blows up as $t \to \infty$.

² Note that we are really worried about the behavior of $T(t)$. Although a positive separation constant will give us exponential behavior for $X(x)$ as well, since $0 \le x \le L$ this is not a matter of concern.

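Thus the general solution for $T$ is

$$T(t) = C\cos\left(\frac{n\pi ct}{L}\right) + D\sin\left(\frac{n\pi ct}{L}\right)$$

Combining the two, we get that a solution to the wave equation of the form $y(x, t) = X(x)T(t)$ which obeys the boundary conditions

$$y(0, t) = y(L, t) = 0 \quad \forall t$$

is

$$y(x, t) = \sin\left(\frac{n\pi x}{L}\right)\left[a\cos\left(\frac{n\pi ct}{L}\right) + b\sin\left(\frac{n\pi ct}{L}\right)\right].$$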

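Note that I said "a solution", not "the solution". What we have is in fact not one, but an infinite number of solutions, one for each integer $n$! Since the wave equation is linear, any sum of these solutions is again a solution satisfying the same boundary conditions. Thus we can write down the general solution as

$$y(x, t) = \sum_{n=1}^{\infty}\sin\left(\frac{n\pi x}{L}\right)\left[a_n\cos\left(\frac{n\pi ct}{L}\right) + b_n\sin\left(\frac{n\pi ct}{L}\right)\right]. \tag{6.1}$$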

You may have noticed that while all integer values were allowed for $n$, in this final sum we have only taken positive $n$. This is because we can combine the terms for $+n$ and $-n$ into a single term:

$$\begin{aligned} &\sin\left(\frac{n\pi x}{L}\right)\left[a_n\cos\frac{n\pi ct}{L} + b_n\sin\frac{n\pi ct}{L}\right] + \sin\left(\frac{-n\pi x}{L}\right)\left[a_{-n}\cos\frac{-n\pi ct}{L} + b_{-n}\sin\frac{-n\pi ct}{L}\right] \\ &\quad = \sin\left(\frac{n\pi x}{L}\right)\left[(a_n - a_{-n})\cos\frac{n\pi ct}{L} + (b_n + b_{-n})\sin\frac{n\pi ct}{L}\right] \end{aligned}$$

This explains why it suffices to take positive integer values of $n$ only: all we have to do is to call the (as yet arbitrary) coefficients $(a_n - a_{-n})$ and $(b_n + b_{-n})$ by their new names $a_n$ and $b_n$ respectively. The reason why $n = 0$ has been left out is slightly more subtle. At first glance the reason is obvious: after all, if $n = 0$, then the term $\sin\frac{n\pi x}{L}$ vanishes identically, so we can easily leave it out of the sum. However, for $n = 0$, which means $k = 0$, we actually have the equation

$$\frac{d^2 X}{dx^2} = 0$$

for $X$. Its solution is not $A\cos kx + B\sin kx$ for $k = 0$, but rather $X(x) = Ax + B$. It is easy to see that if we impose the two boundary conditions this function does vanish identically, thus justifying our leaving the $n = 0$ term out of (6.1).


6.2


Solving the initial value problem

Now that we have got ourselves a general solution to our boundary

value problem, it is time to move on to the next step. At this stage, our

solution (6.1) has an infinite number of arbitrary constants. To find

these constants, you need to know the initial conditions for the string :

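$$y(x, 0) = y_0(x)$$

$$\frac{\partial y}{\partial t}(x, 0) = v_0(x)$$

Substituting these in (6.1) gives us

$$y_0(x) = \sum_{n=1}^{\infty} a_n\sin\left(\frac{n\pi x}{L}\right)$$

$$v_0(x) = \sum_{n=1}^{\infty} b_n\frac{n\pi c}{L}\sin\left(\frac{n\pi x}{L}\right)$$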

This means that the task of finding the coefficients $a_n$ and $b_n$ boils down to the question:

Given a function $f(x)$ defined on the interval $[0, L]$, can one write it as an infinite sum

$$f(x) = \sum_{n=1}^{\infty} c_n\sin\left(\frac{n\pi x}{L}\right) \tag{6.2}$$

over the sinusoids $\sin(n\pi x/L)$ (all of which vanish at $x = 0$ and $x = L$ and repeat with period $2L$), and if so, what are the coefficients $c_n$?

The answer to the question as to whether this can be done is yes - at

least for the reasonable kind of functions that one expects to meet in

physics. The proof of this fact is rather too advanced for our present

course and, in any case, it is something for the mathematicians to

worry about! Once we accept that the expansion can be done, however,

finding the coefficients is a simple matter - especially if we leave the

mathematicians to worry about the little issues of rigor! For this we

simply need to know the integral

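$$\int_0^L \sin\left(\frac{n\pi x}{L}\right)\sin\left(\frac{m\pi x}{L}\right)dx = \begin{cases} 0 & \text{for } m \ne n \\ \frac{L}{2} & \text{for } m = n \end{cases} = \frac{L}{2}\delta_{mn} \tag{6.3}$$

which you can easily verify. Thus

$$\int_0^L f(x)\sin\left(\frac{m\pi x}{L}\right)dx = \int_0^L\left(\sum_{n=1}^{\infty} c_n\sin\left(\frac{n\pi x}{L}\right)\right)\sin\left(\frac{m\pi x}{L}\right)dx = \sum_{n=1}^{\infty} c_n\int_0^L \sin\left(\frac{n\pi x}{L}\right)\sin\left(\frac{m\pi x}{L}\right)dx = \sum_{n=1}^{\infty} c_n\frac{L}{2}\delta_{mn} = \frac{L}{2}c_m$$

and thus

$$c_n = \frac{2}{L}\int_0^L f(x)\sin\left(\frac{n\pi x}{L}\right)dx \tag{6.4}$$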
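Formula (6.4) is straightforward to evaluate numerically. The Python sketch below is ours: the helper name and the choice of the composite Simpson rule are not from the notes. It computes $c_n$ for the midpoint pluck of section 5.6.3 with illustrative values $L = h = 1$. The results are compared with the standard closed form for this triangle, $c_n = \frac{8h}{n^2\pi^2}\sin\frac{n\pi}{2}$, a result we quote rather than derive here. The same helper also illustrates the orthogonality relation (6.3).

```python
import math

def sine_coefficient(f, n, L, samples=2000):
    """c_n = (2/L) * integral from 0 to L of f(x) sin(n pi x / L) dx, as in (6.4).

    The integral is evaluated with the composite Simpson rule on `samples` intervals.
    """
    if samples % 2:
        samples += 1                      # Simpson's rule needs an even count
    dx = L / samples
    total = 0.0
    for k in range(samples + 1):
        weight = 1 if k in (0, samples) else (4 if k % 2 else 2)
        x = k * dx
        total += weight * f(x) * math.sin(n * math.pi * x / L)
    return (2.0 / L) * total * dx / 3.0

L, h = 1.0, 1.0                           # illustrative length and pluck height

def pluck(x):
    """The triangular pluck of section 5.6.3: peak h at x = L/2."""
    return 2 * h * x / L if x < L / 2 else 2 * h * (1 - x / L)

for n in range(1, 6):
    closed_form = 8 * h / (n * math.pi) ** 2 * math.sin(n * math.pi / 2)
    print(n, sine_coefficient(pluck, n, L), closed_form)
```

The even coefficients vanish because the pluck is symmetric about $x = L/2$, and the odd ones fall off as $1/n^2$.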

This means that we can easily find out the coefficients that we are

looking for by using

an

bn

6.2.1

2

L

=

Z