CBIR FINAL DOC

CONTENT BASED IMAGE RETRIEVAL THROUGH SEARCHING METHOD

CHAPTER 1

INTRODUCTION

Department of ECE Page 1 CMRCET


1.1 Introduction to CBIR

Multimedia collections have grown exponentially in recent years, driven by affordable memory storage on the one hand and the spread of the World Wide Web (WWW) on the other, so efficient tools for retrieving images from large datasets have become crucial. This motivates extensive research into image retrieval systems. Historically, the earliest image retrieval systems were text-based, since images had to be annotated and indexed by hand. However, as images and image databases grew, user-based annotation became very cumbersome and, to some extent, subjective and therefore incomplete, because text often fails to convey the rich structure of an image. This motivates research into what is referred to as content-based image retrieval (CBIR).

Content-based image retrieval is based on the (automated) matching of the features of the query image with those of the images in the database through some image-to-image similarity evaluation. Images are therefore indexed according to their own visual content, in terms of the chosen features: color (the distribution of color intensity across the image), texture (the presence of visual patterns that have properties of homogeneity and do not result from a single color or intensity), shape (the boundaries or interiors of objects depicted in the image), or any other visual feature or combination of elementary visual features. The end users of such systems range from ordinary users searching for a particular image on the web to various professional bodies, such as police forces performing picture recognition or journalists requesting pictures that match some query.

Historically, probably the first use of CBIR goes back to Kato in the early nineties, who implemented what appears to be the first automated image retrieval system using color and shape features. Since Kato's pioneering work, many prototype CBIR systems have been developed, and some have reached the commercial market, e.g., IBM's QBIC system, which supports color, shape and texture features, and Virage, developed by Virage Inc., which supports color, texture, color layout and shape. However, the technology is acknowledged to lack maturity, which has limited its large-scale deployment. This has motivated intensive research into many aspects of CBIR, including image indexing, feature selection and extraction.

1.2 Problem Motivation

Image databases and collections can be enormous, containing hundreds, thousands or even millions of images. The conventional method of image retrieval is to search for a keyword that matches the descriptive keyword assigned to the image by a human categorizer. Retrieval of images based on their content, called content-based image retrieval (CBIR), is still under development, even though several systems exist. While computationally expensive, it can give more accurate results than conventional keyword-based indexing. Hence, there is a tradeoff between accuracy and computational cost. This tradeoff becomes less severe as more efficient algorithms are used and computational power becomes cheaper.

1.3 Problem Statement

The problem involves entering an image as a query into a software application that is designed to employ CBIR techniques in extracting visual properties, and matching them. This is done to retrieve images in the database that are visually similar to the query image.

1.4 Proposed Solution

The solution initially proposed was to extract the primitive features of a query image and compare them with those of the database images. The image features under consideration were colour, texture and shape. Using matching and comparison algorithms, the colour, texture and shape features of one image are compared with the corresponding features of another image. This comparison is performed using colour, texture and shape distance metrics. These metrics are applied one after another to retrieve the database images that are most similar to the query.


CHAPTER 2

DETAILS OF THE PROJECT


2.1 Overview Of CBIR:

INPUT DATA BASE

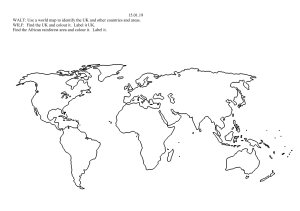

Figure 2.1:Block Diagram of image retrival system

Any CBIR system involves at least four main steps:

1. Feature extraction and indexing of the image database according to the chosen visual features, which form the perceptual feature space, e.g. color, shape, texture or any combination of these.

2. Feature extraction of the query image.

3. Matching the query image to the most similar images in the database according to some image similarity measure. This forms the search part of CBIR.

4. User interface and feedback, which governs the display of the outcomes, their ranking, and the type of user interaction, with the possibility of refining the search through some automatic or manual preference scheme.
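The steps above can be sketched end to end in a few lines. This is a minimal Python illustration, not the project's MATLAB implementation: the feature (a 4-bin grey-level histogram), the L1 distance and all function names are assumptions chosen for brevity.

```python
def gray_histogram(image, bins=4, levels=256):
    """Normalised grey-level histogram of a 2-D list of ints in [0, levels)."""
    counts = [0] * bins
    for row in image:
        for v in row:
            counts[v * bins // levels] += 1
    total = sum(counts)
    return [c / total for c in counts]


def l1_distance(f, g):
    """Sum of absolute differences between two feature vectors."""
    return sum(abs(a - b) for a, b in zip(f, g))


def build_index(database):
    """Offline step: extract and store one feature vector per database image."""
    return {name: gray_histogram(img) for name, img in database.items()}


def retrieve(query, index, top_k=2):
    """Online steps: extract the query feature, then rank the database by distance."""
    q = gray_histogram(query)
    ranked = sorted(index, key=lambda name: l1_distance(q, index[name]))
    return ranked[:top_k]  # this list is what a user interface would display


# Hypothetical 2x2 grey-level images standing in for a real database.
database = {
    "dark": [[10, 20], [30, 40]],
    "bright": [[200, 220], [240, 250]],
    "mixed": [[10, 240], [20, 250]],
}
index = build_index(database)
results = retrieve([[15, 25], [35, 45]], index, top_k=2)
```

Separating `build_index` from `retrieve` mirrors the offline and online halves of the block diagram: database features are computed once, while only the query feature is computed at search time.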


2.2 Feature extraction and indexing

One distinguishes two types of visual features in CBIR: primitive features and domain-specific features. The former include color, shape and texture, while the latter include, for instance, face recognition, fingerprints and handwriting, which form a sort of high-level image description or meta-object.

2.3 Color

Color is one of the most widely used visual features in CBIR systems. First, a color space is chosen to represent color images. The RGB (red, green, blue) space, in which each color is represented by its red, green and blue intensities, is widely used in practice. Next, a histogram is employed to represent the distribution of colors in the image; in RGB space, one histogram is needed for each basic color. The number of bins of the histogram determines the color quantization: each bin counts the pixels whose intensity falls within the range indicated by that bin. The comparison between images (the query image and an image in the database) is accomplished through some metric that determines the distance or similarity between the two histograms.

However, the distribution of colors across the whole image, without any spatial constraints, is insufficient to discriminate between images, as illustrated in Figure 2.2, in which two distinct images have the same color histogram.

Figure 2.2: Two images with the same color histogram
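The limitation can be reproduced with a toy example. The Python sketch below is illustrative only (it is not the project's MATLAB code); the 4-bin quantization and the two 2×2 test images are assumptions made for the demonstration.

```python
def channel_histograms(pixels, bins=4):
    """One histogram per RGB channel; pixels is a 2-D list of (r, g, b) in 0..255."""
    hists = [[0] * bins for _ in range(3)]
    for row in pixels:
        for px in row:
            for c in range(3):
                hists[c][px[c] * bins // 256] += 1
    return hists


def histogram_distance(h1, h2):
    """L1 distance summed over the three channel histograms."""
    return sum(abs(a - b) for c1, c2 in zip(h1, h2) for a, b in zip(c1, c2))


RED, BLUE = (255, 0, 0), (0, 0, 255)
left_right = [[RED, BLUE], [RED, BLUE]]   # red column next to a blue column
top_bottom = [[RED, RED], [BLUE, BLUE]]   # red row above a blue row

h1 = channel_histograms(left_right)
h2 = channel_histograms(top_bottom)
distance = histogram_distance(h1, h2)     # zero although the layouts differ
```

Because the histogram only counts pixels per bin, any rearrangement of the same pixels yields a distance of zero, which is why spatial information has to be added separately.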

Colour is one of the most important features that make the recognition of images by humans possible. Colour is a property that depends on the reflection of light to the eye and the processing of that information in the brain. We use colour every day to tell the difference between objects, and the time of day. Colours are usually defined in three-dimensional colour spaces, such as RGB (Red, Green, Blue), HSV (Hue, Saturation, Value) or HSB (Hue, Saturation, Brightness). The last two depend on the human perception of hue, saturation and brightness. Most image formats, such as JPEG, BMP and GIF, use the RGB colour space to store information. The RGB colour space is defined as a unit cube with red, green and blue axes, so a colour in this space is represented by a vector with three coordinates. When all three coordinates are zero, the perceived colour is black.
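As a small illustration of the relationship between the two colour spaces, the following Python sketch converts 8-bit RGB triples to HSV with the standard-library `colorsys` module. The helper name `rgb8_to_hsv` is an assumption; the project itself works in MATLAB.

```python
import colorsys


def rgb8_to_hsv(r, g, b):
    """Convert 8-bit RGB to (hue, saturation, value), each in [0, 1]."""
    return colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)


black = rgb8_to_hsv(0, 0, 0)        # origin of the RGB cube
red = rgb8_to_hsv(255, 0, 0)        # one corner of the cube
white = rgb8_to_hsv(255, 255, 255)  # opposite corner to black
```

Black and white differ only in the value coordinate, while pure red has full saturation and value, which is the perceptual separation that makes HSV convenient for colour quantization.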

2.3.1 Methods of Representation

The main method of representing the colour information of images in CBIR systems is the colour histogram. A colour histogram is a type of bar graph in which each bar represents a particular colour of the colour space being used. In MATLAB, for example, one can obtain the colour histogram of an image in the RGB or HSV colour space. The bars of a colour histogram are referred to as bins and lie along the x-axis. The number of bins depends on the number of colours in the image.

The y-axis denotes the number of pixels in each bin, in other words, how many pixels in the image are of a particular colour.

An example of a colour histogram in the HSV colour space can be seen with the following image:

Figure 2.3: Sample image and its histogram

As the colour map shows, each row represents the colour of a bin. The row is composed of the three coordinates of the colour space: the first represents hue, the second saturation and the third value, thereby giving HSV. The proportions of these coordinates make up the colour of a bin. The corresponding pixel count for each bin is shown by the blue lines in the histogram.

Quantization, in terms of colour histograms, refers to reducing the number of bins by placing colours that are very similar to each other in the same bin. By default, the maximum number of bins one can obtain using the histogram function in MATLAB is 256. To save time when comparing colour histograms, one can quantize the number of bins. Quantization reduces the information about the content of images, but this is the tradeoff for reduced processing time.

There are two types of colour histogram: global colour histograms (GCHs) and local colour histograms (LCHs). A GCH represents a whole image with a single colour histogram. An LCH divides an image into fixed blocks and takes the colour histogram of each block. LCHs contain more information about an image but are computationally expensive when comparing images. The GCH is the traditional method for colour-based image retrieval. However, it does not include information about the colour distribution of the regions of an image, so comparing GCHs does not always give a proper result in terms of image similarity.
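The difference between a GCH and an LCH can be seen on two toy grey-level images. The Python sketch below is an illustration under assumptions (2 bins, a 2×2 block grid, hypothetical function names) and is not the project's MATLAB code.

```python
def histogram(values, bins=2, levels=256):
    """Quantise values in [0, levels) into `bins` equal-width bins and count them."""
    counts = [0] * bins
    for v in values:
        counts[v * bins // levels] += 1
    return counts


def global_histogram(image, bins=2):
    """GCH: a single histogram over every pixel of the image."""
    return histogram([v for row in image for v in row], bins)


def local_histograms(image, blocks=2, bins=2):
    """LCH: split the image into blocks x blocks tiles and histogram each tile."""
    h, w = len(image), len(image[0])
    out = []
    for by in range(blocks):
        for bx in range(blocks):
            tile = [image[y][x]
                    for y in range(by * h // blocks, (by + 1) * h // blocks)
                    for x in range(bx * w // blocks, (bx + 1) * w // blocks)]
            out.append(histogram(tile, bins))
    return out


a = [[0, 0, 255, 255]] * 4                             # dark left, bright right
b = [[0, 0, 0, 0]] * 2 + [[255, 255, 255, 255]] * 2    # dark top, bright bottom

gch_a, gch_b = global_histogram(a), global_histogram(b)
lch_a, lch_b = local_histograms(a), local_histograms(b)
```

The two global histograms are identical, while the local histograms differ tile by tile. The price is that an LCH with a 2×2 grid stores and compares four histograms per image instead of one.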

EXAMPLES OF COLOUR HISTOGRAM

Figure 2.4: RGB and HSV colour spaces


2.4 Color layout

Here, spatial relationships are used in conjunction with the color feature.

A natural way of doing so is to divide the whole image into a set of sub-images and take the color histogram of each sub-image. Several variants have been put forward, depending on how the decomposition is accomplished. One example is the color auto-correlogram, which expresses how the spatial correlation of pairs of colors changes with distance: for a given distance d and entry (i, j), the correlogram gives the probability of finding a pixel of color j at distance d from a pixel of color i, where i and j stand for any pair of quantized intensity levels (that is, any pair of bins in the histogram); the auto-correlogram keeps only the case i = j. Its computational complexity is O(k * n^2), where k is the number of neighbour pixels, which depends on the chosen distance. This complexity grows quickly as the distance becomes large, but it is linear in the size of the image.
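A direct, unoptimised way to compute the correlogram described above is sketched below in Python. The chessboard (L-infinity) distance between pixels and the function name are assumptions; the sketch is for illustration and is not the project's MATLAB code.

```python
def correlogram(image, d):
    """Colour correlogram at distance d.

    image is a 2-D list of quantised colour indices. The result maps (i, j)
    to the probability that a pixel at chessboard distance d from a pixel of
    colour i has colour j.
    """
    h, w = len(image), len(image[0])
    pair_counts, totals = {}, {}
    for y in range(h):
        for x in range(w):
            i = image[y][x]
            for dy in range(-d, d + 1):
                for dx in range(-d, d + 1):
                    if max(abs(dx), abs(dy)) != d:
                        continue  # keep only the ring at distance exactly d
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        j = image[ny][nx]
                        pair_counts[(i, j)] = pair_counts.get((i, j), 0) + 1
                        totals[i] = totals.get(i, 0) + 1
    return {key: c / totals[key[0]] for key, c in pair_counts.items()}


image = [[0, 0],
         [1, 1]]
gamma = correlogram(image, d=1)
auto = {i: p for (i, j), p in gamma.items() if i == j}  # auto-correlogram entries
```

The two inner loops visit the ring of neighbours at distance d for every pixel, which is where the dependence on both the neighbourhood size and the number of pixels comes from.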

Although the global color feature is simple to calculate and can provide reasonable discriminating power in image retrieval, it tends to give too many false positives when the image collection is large. Many research results suggest that using color layout (both color feature and spatial relations) is a better solution for image retrieval. To extend the global color feature to a local one, a natural approach is to divide the whole image into sub-blocks and extract color features from each sub-block [1, 8]. A variation of this approach is the quadtree-based color layout approach, in which the entire image is split into a quadtree structure and each tree branch has its own histogram to describe its color content. Although conceptually simple, this regular sub-block-based approach cannot provide accurate local color information and is expensive in both computation and storage. A more sophisticated approach is to segment the image into regions with salient color features by color set back-projection and then to store the position and color set feature of each region. The advantage of this approach is its accuracy, while the disadvantage is the generally difficult problem of reliable image segmentation. To achieve a good trade-off between these two approaches, several other color layout representations have been proposed.

2.5 Shapes

Shape may be defined as the characteristic surface configuration of an object: an outline or contour. It permits an object to be distinguished from its surroundings by its outline. Shape representations can generally be divided into two categories: boundary-based and region-based.

Figure 2.5: Region-based and boundary-based shapes

Boundary-based shape representation only uses the outer boundary of the shape. This is done by describing the considered region using its external characteristics; i.e., the pixels along the object boundary. Region based shape representation uses the entire shape region by describing the considered region using its internal characteristics; i.e., the pixels contained in that region.

A number of features characteristic of object shape (but independent of size or orientation) are computed for every object identified within each stored image. Queries are then answered by computing the same set of features for the query image, and retrieving those stored images whose features most closely match those of the query.
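One example of a region-based feature that does not depend on position or orientation is the first Hu moment invariant. The text does not say which shape features the project uses, so the Python sketch below is only an assumed illustration; it expects a non-empty binary mask.

```python
def hu_first_invariant(mask):
    """First Hu invariant, phi1 = eta20 + eta02, of a non-empty 0/1 mask."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    m00 = len(pts)                                   # area of the region
    cx = sum(x for x, _ in pts) / m00                # centroid
    cy = sum(y for _, y in pts) / m00
    mu20 = sum((x - cx) ** 2 for x, _ in pts)        # central moments
    mu02 = sum((y - cy) ** 2 for _, y in pts)
    return (mu20 + mu02) / m00 ** 2                  # normalise by area squared


shape = [[1, 1, 1],
         [1, 0, 0]]
rotated = [list(r) for r in zip(*shape[::-1])]       # 90 degree rotation
shifted = [[0, 0, 0, 0], [0, 1, 1, 1], [0, 1, 0, 0]]  # same shape, translated

phi_shape = hu_first_invariant(shape)
phi_rotated = hu_first_invariant(rotated)
phi_shifted = hu_first_invariant(shifted)
```

Using central moments removes the dependence on position, and dividing by the squared area compensates for size, although on a pixel grid the size invariance holds only approximately.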

2.6 Texture

Texture is the innate property of all surfaces that describes visual patterns, each having properties of homogeneity. It contains important information about the structural arrangement of a surface, such as clouds, leaves, bricks or fabric. It also describes the relationship of the surface to the surrounding environment [2]. In short, it is a feature that describes the distinctive physical composition of a surface. Texture properties include:

Coarseness

Contrast

Directionality

Line-likeness

Regularity

Roughness

Texture is one of the most important defining features of an image. It is characterized by the spatial distribution of grey levels in a neighbourhood. To capture the spatial dependence of grey-level values, which contributes to the perception of texture, a two-dimensional dependence texture analysis matrix is considered. This two-dimensional matrix is obtained by decoding the image file (JPEG, BMP, etc.).


2.7 Methods of Representation

There are three principal approaches used to describe texture; statistical, structural and spectral… Statistical techniques characterize textures using the statistical properties of the grey levels of the points/pixels comprising a surface image. Typically, these properties are computed using: the grey level co-occurrence matrix of the surface, or the wavelet transformation of the surface. Structural techniques characterize textures as being composed of simple primitive structures called “texels” (or texture elements). These are arranged regularly on a surface according to some surface arrangement rules. Spectral techniques are based on properties of the Fourier spectrum and describe global periodicity of the grey levels of a surface by identifying high-energy peaks in the Fourier spectrum .
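As an illustration of the statistical approach, the Python sketch below builds a normalised grey-level co-occurrence matrix for one pixel offset and derives the usual contrast statistic from it. The offset (one pixel to the right), the number of grey levels and the function names are assumptions; this is not the project's MATLAB code.

```python
def glcm(image, levels, dx=1, dy=0):
    """Normalised co-occurrence matrix: p[i][j] is the fraction of pixel pairs
    (pixel, pixel shifted by (dx, dy)) with grey levels i and j."""
    h, w = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    pairs = 0
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                counts[image[y][x]][image[ny][nx]] += 1
                pairs += 1
    return [[c / pairs for c in row] for row in counts]


def glcm_contrast(p):
    """Contrast: sum over (i, j) of (i - j)^2 * p[i][j]."""
    return sum((i - j) ** 2 * v
               for i, row in enumerate(p) for j, v in enumerate(row))


stripes = [[0, 1, 0, 1], [0, 1, 0, 1]]   # strong horizontal variation
flat = [[0, 0, 0, 0], [0, 0, 0, 0]]      # no variation

contrast_stripes = glcm_contrast(glcm(stripes, levels=2))
contrast_flat = glcm_contrast(glcm(flat, levels=2))
```

A striped pattern puts all of its mass off the diagonal of the matrix and therefore has high contrast, while a flat region puts everything on the diagonal and has zero contrast.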

Figure 2.6: Sample textures

For optimum classification purposes, what concern us are the statistical techniques of characterization… This is because it is these techniques that result in computing texture properties…

The most popular statistical representations of texture are:

Tamura Texture

Discrete Wavelet Transform

2.7.1 Tamura Texture

Drawing on psychological studies of human visual perception, Tamura explored texture representation using computational approximations to the three main texture features: coarseness, contrast and directionality [2, 12]. Each of these texture features is computed approximately by a dedicated algorithm.
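Of the three features, contrast is the simplest to write down. The Python sketch below uses the common formulation in which contrast is the standard deviation of the grey levels divided by the fourth root of their kurtosis; the function name is an assumption, coarseness and directionality are not covered, and this is not the project's MATLAB code.

```python
def tamura_contrast(image):
    """Tamura contrast: sigma / kurtosis**0.25, where kurtosis = mu4 / sigma**4."""
    px = [v for row in image for v in row]
    n = len(px)
    mean = sum(px) / n
    var = sum((v - mean) ** 2 for v in px) / n
    if var == 0:
        return 0.0  # a perfectly flat image has no contrast
    mu4 = sum((v - mean) ** 4 for v in px) / n
    kurtosis = mu4 / var ** 2
    return var ** 0.5 / kurtosis ** 0.25


checker = [[0, 255], [255, 0]]
flat = [[128, 128], [128, 128]]
contrast_checker = tamura_contrast(checker)
contrast_flat = tamura_contrast(flat)
```

The kurtosis term lowers the contrast of images whose grey levels are concentrated in a sharp peak with a few outliers, which a plain standard deviation would overrate.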


2.7.2 Discrete Wavelet Transform

Textures can be modeled as quasi-periodic patterns with a spatial/frequency representation. The wavelet transform converts the image into a multi-scale representation with both spatial and frequency characteristics, which allows effective multi-scale image analysis at lower computational cost. Under this transformation, a function, which can represent an image, a curve, a signal, etc., can be described in terms of a coarse-level description together with details that range from broad to narrow scales. Unlike Fourier transforms, which use sine functions to represent signals, wavelet transforms use functions known as wavelets. Wavelets are finite in time, and the average value of a wavelet is zero. In a sense, a wavelet is a waveform that is bounded in both frequency and duration. The Fourier transform converts a signal into a continuous series of sine waves, each of constant frequency and amplitude and of infinite duration, whereas most real-world signals (such as music or images) have a finite duration and abrupt changes in frequency. This accounts for the efficiency of wavelet transforms: they convert a signal into a series of wavelets, which can be stored more efficiently because they are finite in time, and which can be constructed with rough edges, thereby better approximating real-world signals. Generally, the Haar wavelet is used.
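One level of the two-dimensional Haar transform can be written directly from its definition. The Python sketch below is illustrative only: it assumes an image with even width and height, uses the orthonormal scaling by the square root of two, and its function and sub-band names are assumptions rather than the project's MATLAB code.

```python
import math


def haar_1d(signal):
    """One Haar level on an even-length list: (approximation, detail)."""
    s = math.sqrt(2)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail


def haar_columns(block):
    """Apply haar_1d down every column of a 2-D list."""
    cols = [haar_1d(list(col)) for col in zip(*block)]
    approx = [list(r) for r in zip(*(c[0] for c in cols))]
    detail = [list(r) for r in zip(*(c[1] for c in cols))]
    return approx, detail


def haar_2d(image):
    """One 2-D Haar level: rows first, then columns.

    Returns (ll, lh, hl, hh): ll is the coarse approximation, hl holds the
    detail found along rows, lh the detail found along columns, hh both.
    """
    low_rows, high_rows = [], []
    for row in image:
        a, d = haar_1d(row)
        low_rows.append(a)
        high_rows.append(d)
    ll, lh = haar_columns(low_rows)
    hl, hh = haar_columns(high_rows)
    return ll, lh, hl, hh


ll, lh, hl, hh = haar_2d([[0, 4], [0, 4]])   # a vertical edge
energy_in = 0 ** 2 + 4 ** 2 + 0 ** 2 + 4 ** 2
energy_out = sum(v ** 2 for band in (ll, lh, hl, hh) for row in band for v in row)
```

Repeating `haar_2d` on the `ll` sub-band gives the next coarser level, and simple statistics of the resulting coefficients (such as their mean and standard deviation) can then serve as texture features.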

2.8 Semantic feature

The features described so far are based on primitive image features, referred to as level 1 features. However, there have been attempts to bridge the gap between level 2 features and the image retrieval task.

An example of such a system is the IRIS system, which uses color, texture, region and spatial information to derive the most likely interpretation of the scene, generating text descriptors that can then be input to any text retrieval system.

In contrast to these fully automatic methods, there is a family of techniques that allow systems to learn associations between semantic concepts and primitive features from user feedback. Examples of such work include systems in which the user is invited to annotate selected regions of an image, and the system then applies similar semantic labels to areas with similar characteristics, improving its performance as the user provides more feedback. Chang introduced the concept of the semantic visual template, where the user is asked to identify a possible range of color, texture, shape or motion parameters to express his or her query; the query is then refined using relevance feedback techniques, given a semantic label and stored.


CHAPTER 3

IMPLEMENTATION OF THE PROJECT


3.1 Matching query to image

The query-image matching model is closely linked to the type of features used to represent the images. One distinguishes at least three cases:

Feature vector representation, where each component of the vector represents the value of a specific item or attribute of the image feature, say Vi = [x1 x2 ... xd], where d stands for the number of attributes. For instance, in the case of a grey-level histogram, component k represents the number of pixels whose values fall within the range specified by the k-th bin.

Region-based representation, where a set of vectors, possibly of different sizes, is used instead of a single vector. A typical example is color segmentation, where, for instance, the image is split into four parts and the color histogram of each part is reported. Another example is the use of multiple features, where each vector corresponds to an individual feature.

Text-based representation, where the summary of local feature vectors is described as text-based information or a sort of coding that reports the outcome of the feature extraction. This corresponds to a high-level description of the outcome.

Consequently, the approach to query-image matching differs in each of the above cases.

3.2 Feature Extraction

Calculation of color histogram

Color is an important visual attribute for both human perception and computer vision, and it is widely used in image retrieval. The color histogram is one of the most direct and effective color feature representations. This paper incorporates spatial information into it by combining the color histograms of several sub-blocks defined in the minority clothing image. An appropriate color space and quantization must be specified along with the histogram representation. In this paper, three color spaces (RGB, HSV and CIE L*a*b*) with different quantization numbers are used to test the performance of our method. The experimental results in Tables 4.2.1 to 4.2.3 demonstrate that the RGB color space with a quantization number of 8×4×4=128 is the best choice in our framework. For an image of size M×N, we set the color quantization number to L and denote the image by

.................................................(1)

We divide the image into n blocks. The color values of each block are denoted by

............................(2)

Then the color histogram of each block is defined as

..................................(3)

where num_j is the number of pixels in a sub-block whose color value is quantized to j.
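The block-wise colour histogram can be sketched as follows in Python. The text gives the quantization as 8×4×4 = 128 levels but does not say which channel receives 8 levels; assigning them to the red channel, the 2×2 block grid and the function names are assumptions of this sketch, which is not the project's MATLAB code.

```python
def quantise_rgb(r, g, b):
    """Map an 8-bit RGB triple to one of 8 * 4 * 4 = 128 colour indices.

    Assumption: 8 levels for red, 4 for green, 4 for blue.
    """
    return (r * 8 // 256) * 16 + (g * 4 // 256) * 4 + (b * 4 // 256)


def block_histograms(pixels, rows=2, cols=2, levels=128):
    """Histogram of quantised colours for each of rows x cols sub-blocks.

    Entry j of a block's histogram is the number of pixels in that block
    whose colour is quantised to j.
    """
    h, w = len(pixels), len(pixels[0])
    hists = []
    for br in range(rows):
        for bc in range(cols):
            hist = [0] * levels
            for y in range(br * h // rows, (br + 1) * h // rows):
                for x in range(bc * w // cols, (bc + 1) * w // cols):
                    hist[quantise_rgb(*pixels[y][x])] += 1
            hists.append(hist)
    return hists


# Hypothetical 2x2 image, so every sub-block holds exactly one pixel.
pixels = [[(0, 0, 0), (255, 255, 255)],
          [(255, 0, 0), (0, 0, 255)]]
hists = block_histograms(pixels)
feature = [v for hist in hists for v in hist]   # concatenated block histograms
```

With four blocks of 128 bins each, the concatenated colour feature has 512 components, which shows how quickly the feature length grows with the number of blocks.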

3.3 Calculation of Edge Orientation Histogram

In computer vision theory, edge detection plays an important role. This paper constructs a feature descriptor, the edge orientation histogram, which can be seen as both a texture feature and a shape feature. The classic edge detection operators are Sobel, Roberts, Prewitt and Canny. Sobel, named after Irwin Sobel and Gary Feldman, is one of the most popular operators [22]. The Sobel operator is based on convolving the image with a small, separable, integer-valued filter in the horizontal and vertical directions and is therefore relatively inexpensive in terms of computation. The operator uses two 3×3 kernels, which are convolved with the original image to calculate approximations of the derivatives: one for horizontal changes and one for vertical changes. If we define r, g, b as the unit vectors along the R, G, B axes in RGB color space, the computations are as follows:

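$$\mathbf{u} = \frac{\partial R}{\partial x}\,\mathbf{r} + \frac{\partial G}{\partial x}\,\mathbf{g} + \frac{\partial B}{\partial x}\,\mathbf{b}, \qquad \mathbf{v} = \frac{\partial R}{\partial y}\,\mathbf{r} + \frac{\partial G}{\partial y}\,\mathbf{g} + \frac{\partial B}{\partial y}\,\mathbf{b} \tag{4}$$

where $\mathbf{r}$, $\mathbf{g}$, $\mathbf{b}$ denote the unit vectors along the R, G, B axes and the partial derivatives are approximated by the Sobel responses of each channel. The quantities $g_{xx}$, $g_{yy}$ and $g_{xy}$ are defined as dot products of the vectors above:

$$g_{xx} = \mathbf{u}\cdot\mathbf{u} = \left|\frac{\partial R}{\partial x}\right|^2 + \left|\frac{\partial G}{\partial x}\right|^2 + \left|\frac{\partial B}{\partial x}\right|^2, \quad g_{yy} = \mathbf{v}\cdot\mathbf{v} = \left|\frac{\partial R}{\partial y}\right|^2 + \left|\frac{\partial G}{\partial y}\right|^2 + \left|\frac{\partial B}{\partial y}\right|^2, \quad g_{xy} = \mathbf{u}\cdot\mathbf{v} = \frac{\partial R}{\partial x}\frac{\partial R}{\partial y} + \frac{\partial G}{\partial x}\frac{\partial G}{\partial y} + \frac{\partial B}{\partial x}\frac{\partial B}{\partial y} \tag{5}$$

Using the above notation, the maximum gradient orientation at point $(x, y)$ is

$$\phi(x, y) = \frac{1}{2}\arctan\left[\frac{2 g_{xy}}{g_{xx} - g_{yy}}\right] \tag{6}$$

and the gradient magnitude at $(x, y)$ in the direction of $\phi(x, y)$ is given by

$$F_{\phi}(x, y) = \left\{\frac{1}{2}\left[(g_{xx} + g_{yy}) + (g_{xx} - g_{yy})\cos 2\phi(x, y) + 2 g_{xy}\sin 2\phi(x, y)\right]\right\}^{1/2} \tag{7}$$

.............................(8)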
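An edge orientation histogram of this kind can be sketched in Python as follows. The sketch assumes the standard colour-gradient formulation (g_xx, g_yy and g_xy formed from per-channel Sobel responses, orientation equal to half the arctangent of 2 g_xy over g_xx minus g_yy). The number of bins, the magnitude threshold, the choice to count pixels rather than weight them, and all names are assumptions; this is not the project's MATLAB code.

```python
import math

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel(pixels, x, y, channel, kernel):
    """Apply a 3x3 kernel to one colour channel around interior pixel (x, y)."""
    return sum(kernel[j][i] * pixels[y + j - 1][x + i - 1][channel]
               for j in range(3) for i in range(3))


def edge_orientation_histogram(pixels, bins=4, threshold=0.0):
    """Count interior edge pixels per gradient-orientation bin over [0, pi)."""
    hist = [0] * bins
    for y in range(1, len(pixels) - 1):
        for x in range(1, len(pixels[0]) - 1):
            gx = [sobel(pixels, x, y, c, SOBEL_X) for c in range(3)]
            gy = [sobel(pixels, x, y, c, SOBEL_Y) for c in range(3)]
            gxx = sum(a * a for a in gx)
            gyy = sum(b * b for b in gy)
            gxy = sum(a * b for a, b in zip(gx, gy))
            phi = 0.5 * math.atan2(2 * gxy, gxx - gyy)   # direction of max change
            mag2 = 0.5 * ((gxx + gyy) + (gxx - gyy) * math.cos(2 * phi)
                          + 2 * gxy * math.sin(2 * phi))
            if math.sqrt(max(mag2, 0.0)) > threshold:
                k = int((phi % math.pi) / math.pi * bins)
                hist[min(k, bins - 1)] += 1
    return hist


K, W = (0, 0, 0), (255, 255, 255)
vertical_edge = [[K, K, W, W] for _ in range(4)]       # change along x
horizontal_edge = [[K] * 4, [K] * 4, [W] * 4, [W] * 4]  # change along y

eoh_vertical = edge_orientation_histogram(vertical_edge)
eoh_horizontal = edge_orientation_histogram(horizontal_edge)
```

Note that the orientation is that of the gradient, so a vertical edge (intensity changing along x) falls in the bin around 0 and a horizontal edge in the bin around pi/2. Using `atan2` rather than a plain arctangent avoids a division by zero when g_xx equals g_yy.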

3.4 Comprehensive feature representation

The comprehensive feature extraction algorithm proposed in this paper can be summarized as follows:

Step 1: Divide the minority costume image of size M×N into n sub-blocks. The experimental results in Table 4 demonstrate that our method gets the best results when n=2×2.

Step 2: Calculate the color histogram of every sub-block and then linearly combine them as

................................(9)

Step 3: Calculate the edge orientation histogram of each sub-block and then linearly combine them as

............................(10)

Step 4: Linearly combine all the histograms from Step 2 and Step 3 as

.............................................(11)

3.5 Similarity Measurement

After feature extraction, each image in the minority costume image dataset is represented as a multidimensional feature vector

.................................................(12)

If we use CSA and CSB to denote the dimension of the color histogram H_C(x,y) and the dimension of the edge orientation histogram H_φ(x,y) respectively, then an M = CSA + CSB dimensional feature vector is extracted and stored for every image in the minority costume image dataset. Let

.......................................(13)

be the features of the query image Q and an image T in the database. The retrieval results can then be returned by computing a similarity measure between the feature vector of the query image and that of every image in the dataset.
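The fusion and ranking step can be sketched in Python as follows. The text does not name the similarity measure, so the L1 (city-block) distance used here is an assumption, as are the function names and the toy histograms; this is not the project's MATLAB code.

```python
def fuse(colour_hist, edge_hist):
    """Concatenate the two histograms into one vector of M = CSA + CSB entries."""
    return list(colour_hist) + list(edge_hist)


def l1(f, g):
    """City-block distance between two feature vectors of equal length."""
    return sum(abs(a - b) for a, b in zip(f, g))


def rank(query_feature, database_features):
    """Return database keys ordered from most to least similar to the query."""
    return sorted(database_features,
                  key=lambda k: l1(query_feature, database_features[k]))


# Toy features: a 2-bin colour histogram followed by a 2-bin edge histogram.
query = fuse([4, 0], [0, 1])
database = {
    "img1": fuse([4, 0], [0, 1]),
    "img2": fuse([0, 4], [1, 0]),
    "img3": fuse([3, 1], [0, 1]),
}
order = rank(query, database)
```

Because the two histograms are simply concatenated, the part with the larger counts dominates the distance, so in practice the histograms are usually normalised or weighted before fusion.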


CHAPTER 4

RESULTS AND CONCLUSION


4.1 Introduction

Retrieval performance is usually measured by precision and recall. Precision looks at the set of all images retrieved by the CBIR system and determines how many of them are really relevant to the given query, while recall looks at the whole image database to determine whether there are images that are relevant to the query but were not retrieved by the CBIR system. Both metrics require a definition of relevance, which may be somewhat subjective.

Traditionally, results are summarized as a precision-recall curve. Research in information retrieval systems has shown that precision and recall follow an inverse relationship. When dealing with several queries, one often uses the mean average precision, where precision is calculated over a number of different queries.
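These measures follow directly from their definitions, as the Python sketch below shows. The function names and the toy query are assumptions made for illustration; this is not the project's MATLAB code.

```python
def precision_recall(retrieved, relevant):
    """Precision = hits / retrieved, recall = hits / relevant."""
    relevant = set(relevant)
    hits = sum(1 for item in retrieved if item in relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def average_precision(ranked, relevant):
    """Mean of the precision values at each rank where a relevant item appears."""
    relevant = set(relevant)
    hits, total = 0, 0.0
    for k, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            total += hits / k
    return total / len(relevant) if relevant else 0.0


def mean_average_precision(runs):
    """runs is a list of (ranked, relevant) pairs, one per query."""
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)


ranked = ["a", "b", "c", "d"]        # what the system returned, best first
relevant = ["a", "c", "e"]           # what it should have returned
precision, recall = precision_recall(ranked, relevant)
ap = average_precision(ranked, relevant)
```

In this toy query, two of the four retrieved images are relevant, and two of the three relevant images were found. Returning more images can only raise recall but usually lowers precision, which is the inverse relationship mentioned above.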

4.2 Simulation results

Here the given query image is compared with the images in the database to produce the retrieval output.


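Table 4.2.1: Average retrieval precision and recall in the RGB color space

| Quantization number for colour | Precision (%): 12 | Precision (%): 18 | Precision (%): 24 | Precision (%): 30 | Precision (%): 36 | Recall (%): 12 | Recall (%): 18 | Recall (%): 24 | Recall (%): 30 | Recall (%): 36 |
|---|---|---|---|---|---|---|---|---|---|---|
| 216 | 61.7 | 62.0 | 62.6 | 62.5 | 62.42 | 7.41 | 7.45 | 7.48 | 7.50 | 7.49 |
| 128 | 65.1 | 65 | 65.7 | 65.9 | 65.57 | 7.82 | 7.88 | 7.89 | 7.91 | 7.87 |
| 64 | 63.9 | 64 | 64.6 | 64.4 | 63.85 | 7.68 | 7.72 | 7.76 | 7.73 | 7.66 |
| 32 | 61.0 | 61 | 62.6 | 62.2 | 61.42 | 7.32 | 7.43 | 7.47 | 7.47 | 7.37 |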

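Table 4.2.2: Average retrieval precision and recall in the HSV colour space

| Quantization number for colour | Precision (%): 12 | Precision (%): 18 | Precision (%): 24 | Precision (%): 30 | Precision (%): 36 | Recall (%): 12 | Recall (%): 18 | Recall (%): 24 | Recall (%): 30 | Recall (%): 36 |
|---|---|---|---|---|---|---|---|---|---|---|
| 192 | 60.8 | 60.96 | 60.8 | 61.08 | 61.6 | 7.30 | 7.32 | 7.30 | 7.33 | 7.36 |
| 128 | 63.2 | 63.2 | 63.35 | 63.50 | 63.5 | 7.59 | 7.59 | 7.60 | 7.62 | 7.62 |
| 108 | 61.1 | 61.5 | 61.64 | 61.63 | 61.65 | 7.39 | 7.39 | 7.40 | 7.40 | 7.40 |
| 72 | 62.3 | 62.9 | 62.68 | 62.82 | 62.68 | 7.48 | 7.55 | 7.52 | 7.54 | 7.52 |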


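Table 4.2.3: Average retrieval precision and recall in the L*a*b* colour space

| Quantization number for colour | Precision (%): 12 | Precision (%): 18 | Precision (%): 24 | Precision (%): 30 | Precision (%): 36 | Recall (%): 12 | Recall (%): 18 | Recall (%): 24 | Recall (%): 30 | Recall (%): 36 |
|---|---|---|---|---|---|---|---|---|---|---|
| 180 | 58.4 | 58.8 | 58.9 | 59.1 | 59.14 | 7.0 | 7.0 | 7.07 | 7.09 | 7.10 |
| 160 | 55.9 | 56.99 | 57.07 | 57.42 | 57.22 | 6.71 | 6.84 | 6.85 | 6.89 | 6.87 |
| 90 | 53.58 | 54.74 | 55.13 | 55.17 | 54.65 | 6.43 | 6.57 | 6.62 | 6.62 | 6.56 |
| 45 | 52.54 | 54.29 | 54.67 | 54.68 | 53.89 | 6.31 | 6.52 | 6.56 | 6.56 | 6.47 |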


4.3 Conclusion

This report reviewed the main components of a content-based image retrieval system, including image feature representation, indexing, query processing, query-image matching and user interaction, while highlighting the current state of the art and the key challenges. There remains much room for improvement in content-based image retrieval systems because of the semantic gap between image similarity outcomes and the user's perception. Contributions from soft-computing approaches and natural language processing methods are especially needed to narrow this gap.

Standardization in the spirit of MPEG-7, which includes both feature descriptors and language annotation for describing various entity relationships, is reported to be a crucial step.


APPENDIX


A. Software Description

The software used to implement the project was MATLAB R2017a, the latest version at the time. MATLAB comes with many toolboxes; of these, the Image Processing Toolbox was used for this project. The toolbox provides the basic commands needed to implement image processing projects and makes many operations simple to perform.

The Image Processing Toolbox provides a comprehensive set of reference-standard algorithms and workflow apps for image processing, analysis, visualization and algorithm development. It can perform image segmentation, image enhancement, noise reduction, geometric transformations and image registration, among many other operations.

The Image Processing Toolbox apps let you automate image processing workflows: you can interactively segment image data, compare image registration techniques and batch-process large data sets.

The following steps were followed during the implementation of the project in MATLAB R2017a. The exact steps may differ depending on the MATLAB version and should be adjusted accordingly; commands available in R2017a may not be available in other versions.


Step 1:

First, run cbires to open the GUI on which all further operations are performed.

Step 2:

The GUI performs operations such as the retrieval of images.

Step 3:

In the GUI, we need to load images from the dataset.

Step 4:

In the next step, we need to load the images (database). For this, we created an image database from different datasets such as people, buses, flowers, animals and buildings.

Step 5:

Afterwards, the images are loaded successfully.

Step 6:

Here we can see the images of the different datasets.

The query image is simply the input image, which is compared with the other images.

Images whose content is related to the query are displayed.

We can retrieve as many images as we want from the database.

In this interface we can also apply different filters to the images and observe the differences between them.


B. Source code

function waveletMoments = waveletTransform(image, spaceColor)
% input:  image to process and extract wavelet coefficients from
% output: feature vector containing the first 2 moments (column-wise mean
%         and standard deviation) of the wavelet coefficients

if strcmp(spaceColor, 'truecolor')
    imgGray = double(rgb2gray(image)) / 255;
    imgGray = imresize(imgGray, [256 256]);
elseif strcmp(spaceColor, 'grayscale')
    % the input is already single-channel, so only rescale and resize it
    imgGray = imresize(double(image) / 255, [256 256]);
end

% four successive levels of the 2-D DWT; with a single output argument,
% dwt2 returns the approximation coefficients
coeff1 = dwt2(imgGray, 'coif1');
coeff2 = dwt2(coeff1, 'coif1');
coeff3 = dwt2(coeff2, 'coif1');
coeff4 = dwt2(coeff3, 'coif1');

% construct the feature vector
meanCoeff = mean(coeff4);
stdCoeff = std(coeff4);
waveletMoments = [meanCoeff stdCoeff];
end
