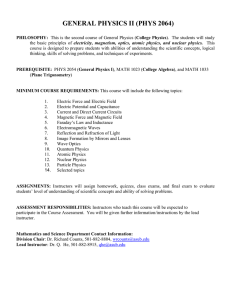

Teaching and physics education research: bridging the gap
