OBEF(Odd Backward Even Forward) Page Replacement

advertisement

International Journal of Emerging Technology and Advanced Engineering

Website: www.ijetae.com (ISSN 2250-2459, Volume 2, Issue 11, November 2012)

OBEF(Odd Backward Even Forward) Page Replacement

Technique

Kavit Maral Mehta1, Prerak Garg2, Ravikant Kumar3

1,2,3

G-752, Socrates block, VIT university, vellore -632014

As a student I have implemented this technique in C++

and have made a comparison between different other page

replacement techniques and I have got a positive result

from it showing that this method may prove to be a vital for

coming time. It’s time complexity is also equal to the other

techniques.

Abstract— This paper describes about a new technique of

page replacement algorithm that I have thought about and

gives more hit than other algorithms for a some kind of data

entries. My page replacement algorithm basically works in

two directions. For even cycles it will work on the right side

and will find the frequency of data entries that are going to be

used and for the odd cycles it works on the left side finding the

time elapsed for that data to be used again in other words

finds the least recently used element and replaces that entry .

II. EXISTING METHODS

First-in, first-out (FIFO):

The simplest page-replacement algorithm. FIFO is a

low-overhead algorithm that requires little book-keeping.

The pages is stored in queue with the most recent arrival at

the back and the earliest arrival in front. When a page

needs to be replaced, the page at the front of the queue is

selected. While FIFO is cheap and intuitive, it performs

poorly in practical application. Thus, it is rarely used in its

unmodified form.

Keywords— page replacement algorithm, cache, main

memory, time complexity, asymptotic notation.

I. INTRODUCTION

With the advancement of our computer world, there has

been a concurrent change in the components and different

terminologies related to the computer. In a computer

operating system that uses paging for virtual memory

management, page replacement algorithms decide which

memory pages to page out (swap out, write to disk) when a

page of memory needs to be allocated. Paging happens

when a page fault occurs and a free page cannot be used to

satisfy the allocation, either because there are none, or

because the number of free pages is lower than some

threshold. When the page that was selected for replacement

and paged out is referenced again it has to be paged in

(read in from disk), and this involves waiting for I/O

completion. This determines the quality of the page

replacement algorithm: the less time waiting for page-ins,

the better the algorithm. A page replacement algorithm

looks at the limited information about accesses to the pages

provided by hardware, and tries to guess which pages

should be replaced to minimize the total number of page

misses, while balancing this with the costs (primary storage

and processor time) of the algorithm itself. This was all the

basis why we require a page replacement algorithm. Now I

would tell how my page replacement technique works. All

the page replacement techniques till now implemented have

proved to be efficient for different values of data. Our basic

aim is to reduce the time of the CPU to search for the data

inside main memory. So, the technique that I have

developed also consists of some plus points and some

minus points.

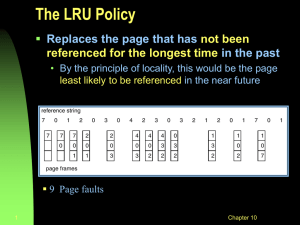

Least recently used (LRU):

LRU works on the ides that pages that have been most

heavily used in the past are more likely to be used in the

next few instructions too. LRU is little bit expensive to

implement.

Least frequently used (LFU):

LFU requires a counter and every page has a counter of

it’s own initially set to 0. At each clock interval, all pages

that have been referenced within that interval will have

their counter incremented by 1. Thus, the page with the

lowest counter can be swapped out when necessary. The

main problem with NFU is that it keeps track of the

frequency of use without regard to the time span of use.

Thus, in a multi-pass compiler, pages which were heavily

used during the first pass, but are not needed in the second

pass will be favoured over pages which are comparably

lightly used in the second pass, as they have higher

frequency counters. This results in poor performance. Other

common scenarios exist where NFU will perform similarly,

such as an OS boot-up. Thankfully, a similar and better

algorithm exists, and its description follows.

607

International Journal of Emerging Technology and Advanced Engineering

Website: www.ijetae.com (ISSN 2250-2459, Volume 2, Issue 11, November 2012)

Aging:

It is similar to LFU, instead of just incrementing the

counters of the pages referenced , putting equal emphasis

on page references regardless of time, the reference counter

on a page is first shifted right, before adding the referenced

bit to the left of that binary number. For instance, if a page

has referenced bits 1,0,0,1,1,0 in the past 6 clock ticks, its

referenced counter will look like this: 10000000,

01000000, 00100000, 10010000, 11001000, 01100100.

Page references closer to the present time have more

impact than page references long ago. This ensures that

pages referenced more recently, though less frequently

referenced, will have higher priority over pages more

frequently referenced in the past. Thus, when a page needs

to be swapped out, the page with the lowest counter will be

chosen.

Let us suppose that Cache memory size is 3(i.e it can

hold 3 values) and main memory has size 10(i.e it can store

10 values).

Now CPU wants the data to perform mathematical

operations. The cache consists of some previous values.

First the CPU searches the cache for the data if it finds it

then it’s a hit and no need of refering to the main memory.

But if data is not found it will go to main memory for the

data and will perform the page replacement algorithm.

Now if count value is initially 1 and for the first time

only it gets a miss then OBEF will perform a search in the

backward direction and will compare the data values in

cache with the data values that CPU had taken from the

main memory and the value which least recently used gets

swapped out and the new data value comes in. Now this is

performed only for the odd cycles like when count=1, 3, 5,

7, 9..... and when the value of count is like 2, 4, 6, 8, 10....

then the comparison is made between the values in the

cache and values in the main memory( stored in pages)

which are going to be used in future and the value whose

frequency is less among all gets swapped out and the new

data comes in.

Clock:

Clock is a more efficient version of FIFO than Secondchance because pages don't have to be constantly pushed to

the back of the list, but it performs the same general

function as Second-Chance. The clock algorithm keeps a

circular list of pages in memory, with the "hand" (iterator)

pointing to the last examined page frame in the list. When a

page fault occurs and no empty frames exist, then the R

(referenced) bit is inspected at the hand's location. If R is 0,

the new page is put in place of the page the "hand" points

to, otherwise the R bit is cleared. Then, the clock hand is

incremented and the process is repeated until a page is

replaced.

IV. ALGORITHM DEVELOPED

The above proposed method works according to the

following algorithm:

I am considering array to store data in cache and in

main memory.

Let m be the size of the cache and n be the size oo the

main memory.(m<n)

Now input the data values in the main memory that

we would be requiring for calculations.

The cache by default contains some values.

Now we start our computation. CPU wants values , so

it first looks in the cache.

If it is a hit then CPU fetches the value from the cache

and uses them. If it’s a miss then the CPU looks in the

main memory and fetches the value from there.

Now if it is a miss, after fetching the value from the

main memory it performs OBEF by checking the

value of the count. If it odd then looks for the least

recently used value in the already used data values

and swaps out that value instead of a new one. If

count value is even then it looks for least frequently

occurring value in the forward direction (i.e the values

that are in the main memory and are going to be

referred in the future) and swaps that out instead of a

new value.

Random:

Random replacement algorithm replaces a random page

in memory. This eliminates the overhead cost of tracking

page references. Usually it fares better than FIFO, and for

looping memory references it is better than LRU, although

generally LRU performs better in practice

III.

PROPOSED METHOD

The basic purpose of the page replacement method is to

get as many hits as possible. We call our technique ―THE

HYBRID‖, Because this method works in two directions.

We have taken a counter. It’s initially value is 1.

Now whenever CPU checks for the data value in cache

the value of the count increments by 1. And this value of

count decides whether the page replacement algorithm will

consider the values that the CPU will encounter in the

future or the data values that the CPU has already come

across.

608

International Journal of Emerging Technology and Advanced Engineering

Website: www.ijetae.com (ISSN 2250-2459, Volume 2, Issue 11, November 2012)

This process repeats until CPU has completed it’s

computation.

At the end of the process we calculate pagehit and

pagemiss for the above algorithm.

If pagehit > pagemiss then the above method is

feasibly for the provided data values.

For our method (OBEF) we have used Dev C++ to

compile and we have compared our developed program

with other page replacement methods. And for many data

value page-hit percentage > 60.

The comparison with other methods has been described

below.

V. COMPARISON WITH OTHER TECHNIQUES

Let m be the size of cache and n be the size of the main

memory.

Let m=5 and n=10 and a[] be the array keeping cache

data and s[] be the array storing main memory data.

Let initial values in a[]={1,2,3,4,5} and data required for

computation is stored in main memory i.e

s[]={9,1,8,2,7,3,6,4,5,5}.

Now at the student level I personally developed the code

for LRU,LFU,FIFO and my own method(OBEF) in c++

and compared them for the above assumed values.

1.

Output given when FIFO is runned

We can see that For our assumed data values we get

50% hits in case of OBEF and for the similar data value set

we get 10% hits when FIFO is runned.

This shows that OBEF is far more productive and useful

in terms of time and storage than FIFO.

Comparison with FIFO

Output Given When OBEF is runned.

609

International Journal of Emerging Technology and Advanced Engineering

Website: www.ijetae.com (ISSN 2250-2459, Volume 2, Issue 11, November 2012)

2.

Comparison with LFU:

3. Comparison with LRU:

Output when LFU is runned

Output when LRU is runned

Now we see that Our method OBEF is of same

importance that LFU has.

Both the techniques are giving 50% hits and therefore

our method could be applied for the data values where LFU

is used.

Both the techniques have O(n2) time complexity.

We see that for the above mentioned values LRU gives

only 10% hits that means OBEF is more efficient than LRU

and can be used in the places where LRU is used and could

give more efficiency to the page replacement process.

610

International Journal of Emerging Technology and Advanced Engineering

Website: www.ijetae.com (ISSN 2250-2459, Volume 2, Issue 11, November 2012)

VI. CONCLUSION

VII. AKNOWLEDGEMENT

The result of the following observations and tests tell us

that this method may prove to play a important role in

coming time as it proves to give more efficiency and taking

equal time to execute with respect to the other techniques.

The OBEF has performed better than FIFO,LFU,LRU and

following virtues of this technique must be noted :

1. It is a simple technique and easy to implement not

requiring mush of the hardware and technology.

2. It has an equal time complexity with respect to the

other techniques.

3. It has proved to be a good technique for scheduling

process like round-robin.

We would like to thank our parents for giving us such a

support in our research. And we hope that through your

publication we may be able to bring our work in front of

the whole world. As a student and lack of resources we

have implemented this technique only at the basic level.

REFERENCES

[1 ] Aho, Denning and Ullman, Principles of

Optimal Page

Replacement, Journal of the ACM, Vol. 18, Issue 1,January 1971,

pp 80–93.

[2 ] Denning, P. J., "Virtual Memory," Computing Surveys, Vol. 2,No. 3,

Sept. 1970, pp. 153-189.

[3 ] Elizabeth J. O'Neil and others, The LRU-K page

replacement

algorithm for database disk buffering PDF (1.17 MB), ACM

SIGMOD Conf., pp. 297–306, 1993.

611