Evaluation of Page Replacement Algorithms in a Geographic

advertisement

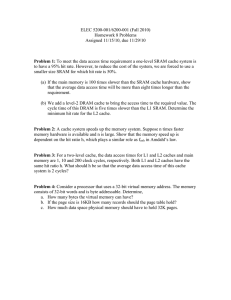

Evaluation of Page Replacement Algorithms in a Geographic Information System DANIEL HULTGREN Master of Science Thesis Stockholm, Sweden 2012 Evaluation of Page Replacement Algorithms in a Geographic Information System DANIEL HULTGREN DD221X, Master’s Thesis in Computer Science (30 ECTS credits) Degree Progr. in Computer Science and Engineering 300 credits Master Programme in Computer Science 120 credits Royal Institute of Technology year 2012 Supervisor at CSC was Alexander Baltatzis Examiner was Anders Lansner TRITA-CSC-E 2012:073 ISRN-KTH/CSC/E--12/073--SE ISSN-1653-5715 Royal Institute of Technology School of Computer Science and Communication KTH CSC SE-100 44 Stockholm, Sweden URL: www.kth.se/csc Abstract Caching can improve computing performance significantly. In this paper I look at various page replacement algorithms in order to make a cache in two levels – one in memory and one on a hard drive. Both levels present unique limitations and possibilities: the memory is limited in size but very fast while the hard drive is slow and so large that memory indexing often is not feasible. I also propose several hard drive based algorithms with varying levels of memory consumption and performance, allowing for a trade-off to be made. Further, I propose a variation for existing memory algorithms based on the characteristics of my test data. Finally, I choose the algorithms I consider best for my specific case. Referat Utvärdering av cachningsalgoritmer i ett geografiskt informationsystem Cachning av data kan förbättra datorers prestanda markant. I den här rapporten undersöker jag ett antal cachningsalgoritmer i syfte att skapa en cache i två nivåer – en minnescache och en diskcache. Båda nivåerna har unika begränsningar och möjligheter: minnet är litet men väldigt snabbt medan hårddisken är långsam och så stor att minnesindexering ofta inte är rimligt. Jag utvecklar flera hårddiskbaserade algoritmer med varierande grad av minneskonsumption och prestanda, vilket innebär att man kan välja algoritm baserat på klientens förutsättningar. Vidare föreslår jag en variation till existerande algoritmer baserat på min testdatas egenskaper. Slutligen väljer jag de algoritmer jag anser bäst för mitt specifika fall. Contents 1 Introduction 1.1 Problem statement . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1.2 Goal . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1.3 About Carmenta . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1 1 1 2 2 Background 2.1 What is a cache? . . . . . . . . . . . . 2.2 Previous work . . . . . . . . . . . . . . 2.2.1 In page replacement algorithms 2.2.2 Why does LRU work so well? . 2.2.3 In map caching . . . . . . . . . 2.3 Multithreading . . . . . . . . . . . . . 2.3.1 Memory . . . . . . . . . . . . . 2.3.2 Hard drive . . . . . . . . . . . 2.3.3 Where is the bottleneck? . . . 2.4 OGC – Open Geospatial Consortium . . . . . . . . . . . 3 3 3 4 5 6 6 6 7 7 8 . . . . . . . . . . . . . . . 9 9 9 9 10 10 10 10 10 11 11 11 11 11 12 12 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3 Testing methodology 3.1 Aspects to test . . . . . . . . . . . . . . . . . . . . . . . 3.1.1 Hit rate . . . . . . . . . . . . . . . . . . . . . . . 3.1.2 Speed . . . . . . . . . . . . . . . . . . . . . . . . 3.1.3 Hit rate over time . . . . . . . . . . . . . . . . . 3.2 The chosen algorithms . . . . . . . . . . . . . . . . . . . 3.2.1 First In, First Out (FIFO) . . . . . . . . . . . . . 3.2.2 Least Recently Used (LRU) . . . . . . . . . . . . 3.2.3 Least Frequently Used (LFU) and modifications 3.2.4 Two Queue (2Q) . . . . . . . . . . . . . . . . . . 3.2.5 Multi Queue (MQ) . . . . . . . . . . . . . . . . . 3.2.6 Adaptive Replacement Cache (ARC) . . . . . . . 3.2.7 Algorithm modifications . . . . . . . . . . . . . . 3.3 Proposed algorithms . . . . . . . . . . . . . . . . . . . . 3.3.1 HDD-Random . . . . . . . . . . . . . . . . . . . 3.3.2 HDD-Random Size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3.3.3 3.3.4 3.3.5 3.3.6 3.3.7 HDD-Random Not Recently Used (NRU) HDD-Clock . . . . . . . . . . . . . . . . . HDD-True Random . . . . . . . . . . . . HDD-LRU with batch removal . . . . . . HDD-backed memory algorithm . . . . . 4 Analysis of test data 4.1 Data sources . . . 4.2 Pattern analysis . . 4.3 Locality analysis . 4.4 Access distribution 4.5 Size distribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12 13 13 14 14 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17 17 17 18 19 20 5 Results 5.1 Memory cache . . . . . . . . . 5.1.1 The size modification 5.1.2 Pre-population . . . . 5.1.3 Speed . . . . . . . . . 5.2 Hard drive cache . . . . . . . 5.3 Conclusion . . . . . . . . . . 5.4 Future work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23 23 24 24 27 27 28 29 Bibliography . . . . . . . . . . . . . . . . . . . . . . . . . 31 Chapter 1 Introduction 1.1 Problem statement Carmentas technology for web based map services, Carmenta Server, handles partitioning and rendering of map data into tiles in real time as they are requested. However, this is a processor intensive task which needs to be avoided as much as possible in order to get good performance. Therefore there is functionality for caching this data to a hard drive and in memory, but it is currently a very simple implementation which could be improved greatly. My task is to evaluate page replacement algorithms in their system and choose the one which gives the best performance. This is quite closely related to caching in processors, which is a well-researched area of computer science. Unlike those caches I do not have access to any special hardware, but I do have much more processing power and memory at my disposal. Another thing which differs is the data – data accesses generally show certain patterns which mine do not necessarily exhibit. Loops, for example, do not exist, but there is probably still locality in the form of popular areas. The cache will have two levels – first one in memory and then one on a hard drive. In most cases the client is a web browser with a built-in cache, making this cache a second and third level cache (excluding CPU caches and hard drive caches). This means that the access patterns for this cache will be different from the first level caches most algorithms are designed for. Also, since the hard drive cache is big and slow it is hard to create a smart algorithm for it without sacrificing speed or spending a lot of memory. 1.2 Goal My primary goal was to evaluate existing cache algorithms using log data and compare their accuracy. Next, I planned to implement my own algorithm and compare it to the others. Finally, I would implement the most promising algorithms and merge them into the Carmenta Server code. 1 CHAPTER 1. INTRODUCTION 1.3 About Carmenta Carmenta is a software/consulting company working with geographic information systems (GIS). The company was founded in 1985 and has about 60 employees. They have two main products: Carmenta Engine and Carmenta Server. Carmenta Engine is the system core which focuses on visualizing and processing complex geographical data. It has functionality for efficiently simulating things such as line of sight and thousands of moving objects. Carmenta Server is an abstraction layer used to simplify distribution of maps over intranets and the internet. It is compliant with several open formats making it easy to create custom-made client applications, and there are also several open source applications which can be used directly. Carmentas technology is typically used by customers who require great control over the way they present and interact with their GIS. For example, the Swedish military uses Carmenta Engine for their tactical maps and SOS Alarm uses it to overlay their maps with various information and images useful for the rescue workers. 2 Chapter 2 Background In this chapter I give a short presentation of what a cache is, why caching is important and some previous work in the area. 2.1 What is a cache? In short, a cache is a component which transparently stores data in order to access said data faster in the future. For example, if a program repeats a calculation several times the result could be stored in a cache (cached) after the first calculation and then retrieved from there the next time the program needs it. This has potential to speed up program execution greatly. The exact numbers vary between systems, but seeking on a hard drive takes roughly 10ms and referencing data in the L1 cache roughly 0.5ns. Thus, caching data from the hard drive in the L1 cache could in theory speed up the next request 2 × 107 times! There are caches on many different levels in a computer. For example, modern processors contain three cache levels, named L1-L3. L1 is closest to the processor core and L3 is shared among all cores. The closer they are to the core, the smaller and faster they are. Operating systems and programs typically cache data in memory as well. While the processor caches are handled by hardware, this caching is done in software and the cached data is chosen by the programmer. Also, hard drives typically have caches to avoid having to move the read heads when commonly used data is requested. Finally, programs can use the hard drive to cache data which would be very slow to get, for example data that would need substantial calculations or network requests. 2.2 Previous work Page replacement algorithms have long been an important area of computer science. I will mention a few algorithms which are relevant to this paper but there are many more, and several variations of them. 3 CHAPTER 2. BACKGROUND Figure 2.1. Example of caches in a typical web server. 2.2.1 In page replacement algorithms First In, First Out (FIFO) is a simple algorithm and the one currently in use in Carmenta Engine. The algorithm keeps a list of all cached objects and removes the oldest one when space is needed. The algorithm is fast both to run and implement, but performs poorly in practical applications. It was used in the VAX/VMS with some modifications.[1] The Least Recently Used (LRU) algorithm was the page replacement algorithm of choice until the early 80s.[2][3] As the name suggests it replaces the least recently used page. Due to the principle of locality this algorithm works well in clients.[4] It has weaknesses though, for example weakness to scanning (which is common in database environments) and does not perform as well when the principle of locality is weakened, which is often the case in second level caches.[5] Another approach is to replace the Least Frequently Used data (LFU), but this can create problems in dynamic environments where the interesting data changes. If something gets a lot of hits one day and then becomes obsolete this algorithm will keep it in the cache anyway, filling it up with stale data. This can be countered by using aging, which gives recent hits a higher value than older ones. Unfortunately, the standard implementation requires the use a priority queue, so the time complexity is O(log(n)). There are proposed implementations with time complexity O(1) though.[6] O-Neil, O-Neil and Weikum improved LRU with LRU-K, which chooses the page to replace based on the last k accesses instead of just the last one.[7] This makes LRU resistant to scanning, but requires the use of a priority queue which means accesses are logarithmic instead of constant. In 1994 Johnson and Shasha introduced Two Queue (2Q), which provides similar performance to LRU-K but with constant time complexity.[3] The basic idea is to have one FIFO queue where pages go first, and if they are referenced again while there they go into the main queue, which uses the LRU algorithm. That provides resistance to scanning as those pages never get into the main queue. 4 2.2. PREVIOUS WORK Megiddo and Modha introduced the Adaptive Replacement Cache (ARC) which bears some similarities to 2Q.[8] Just like 2Q it is composed of two main queues, but where 2Q requires that the user tunes the size of the different queues manually ARC uses so called ghost lists to dynamically adapt their sizes depending on the workload. More details can be found in their paper. This allows ARC to adapt better than 2Q, which further improves hit rate. However, IBM has patented this algorithm, which might cause problems if it is used in commercial applications. Megiddo and Modha also adapted Clock with adaptive replacement[9], but the performance is similar to ARC and it is also patented, so it will not be evaluated. In a slightly different direction, Zhou and Philbin introduced Multi-Queue (MQ), which aims to improve cache hit rate in second level buffers.[5] The previous algorithms mentioned in this paper were all aimed at first level caches, so this cache has different requirements. Accesses to a second level cache are actually misses from the first level, so the access pattern is different. In their report they show that the principle of locality is weakened in their traces and that frequency is more important than recency. Their algorithm is based around multiple LRU queues. As pages are referenced they get promoted to queues with other highly referenced pages. When pages need to be removed they are taken from the lesser referenced queues, and there is also an aging mechanism to remove stale pages. This strengthens frequency based choice while still having an aspect of recency. This proved to work well for second level caches and is interesting for my problem. Another notable page replacement algorithm is Bélády’s algorithm, also known as Bélády’s optimal page replacement policy.[10] It works by always removing the page whose next use will occur farthest in the future. This will always yield optimal results, but is generally not applicable in reality since it needs to know exactly what will be accessed and in which order. It is however used as an upper bound of how good hit rate an algorithm can have. We have a large number of page replacement algorithms, all with different strengths and weaknesses. It is hard to pinpoint the best one as that depends on how it will be used. Understanding access patterns is integral to designing or choosing the best cache algorithm, and that will be dealt with later in this paper. 2.2.2 Why does LRU work so well? As explained in Chapter 2.2.1 there are a lot of page replacement algorithms based around LRU. This is because of a phenomenon known as locality of reference. It is composed of several different kinds of locality, but the most important are spatial and temporal locality.[4] These laws state that if a memory location is referenced at a particular time it is likely that it or a nearby memory location will be referenced in the near future. This is because of how computer programs are written – they will often loop over arrays of data which are near each other in memory and access the same or nearby elements several times. With the rise of object oriented programming languages and more advanced data structures such as heaps, trees and hash tables locality of reference has been weakened somewhat, but it is still 5 CHAPTER 2. BACKGROUND important to consider.[11] Locality of reference is further weakened for caches in server environments that are not first level caches. This was explored by Zhou and Philbin and their MQ algorithm is the only one in the previous part that is made for server use.[5] They found that the close locality of reference was very weak since they had already been taken care of by previous caches. This depends heavily on the environment the cache is used in and does not necessarily apply to my situation. 2.2.3 In map caching Generally, caches are placed in front of the application server. They take the requests from the clients and relay only the requests they cannot fulfill from the cache. Then they transparently save the data they get from the application server before they send it to the client. This makes the cache completely transparent to the application server, but means it needs to provide a full interface towards the client. Also, unless the server also provides the same interface it will not be possible to turn it off. There are several free, open source alternatives that handle caching for WMScompatible servers. Examples include GeoWebCache, TileCache and MapCache. They are all meant to be placed in front of the server and handle caching transparently. They aim at handling things like coordinating information from multiple sources (something made possible and smooth by WMS, which I will mention later) rather than being smooth so set up. They also often allow for several different cache methods: MapCache can not only use a regular disk cache but also memcached, GoogleDisk, Amazon S3 and SQLite caches.[12] This makes them very flexible, but what Carmenta wants is a specific solution that is easy to set up. Carmentas cache is optional, so it is currently a slave of Carmenta Server. This simplifies things, but since even cached requests have to go through the engine it reduces performance slightly. The cache works the same though, the only difference is that requests come from the server instead of the client, and the server calls a store routine when the data is not in the cache. 2.3 Multithreading Multithreading could potentially improve program throughput, and in this section I explore the possibilities of multithreading memory and hard drive accesses. 2.3.1 Memory Modern memory architectures have a bus wide enough to allow for several memory accesses in parallel. This means that in order to get the full bandwidth from the memory several accesses need to run in parallel. Logically, this would mean that a multithreaded approach would run faster, but modern processors have already solved that for us. Not only do they reorder operations on the fly but they also 6 2.3. MULTITHREADING access memory in an asynchronous fashion, meaning that a single thread can run several accesses in parallel. I decided to test this to make sure there were no performance gains to be had by multithreading the memory reads. I wrote a simple program which fills 500MB of memory with random data and then reads random parts of it. The tests are each made 10 times and the average run time in milliseconds is presented. Computers used for this test: • Computer c1 is a 64 bit quad-core with dual-channel memory. • Computer c2 is a 32 bit dual-core with dual-channel memory. • Computer c3 is a 64 bit hexa-core with dual-channel memory. Thread count 1 4 8 25 Run time c1 101 95 96 102 Run time c2 165 189 174 156 Run time c3 240 201 204 198 Table 2.1. Results from test run of parallelizing memory accesses. As Table 2.1 shows there are no significant gains from parallel memory accesses on the computers I tested this program on except from one to two threads on c3. As mentioned earlier this is probably due to the fact that the memory accesses are asynchronous anyway, meaning a single thread can saturate memory bandwidth. As this test only reads memory there is no synchronization between threads, which in any real application would cause overhead. 2.3.2 Hard drive SATA hard drives are de facto standard today. SATA stands for Serial Advanced Technology Attachment, so this is by nature a serialized device. RAID setups (Redundant Array of Independent Disks) are parallel though, and common in server environments. They could gain speed from parallel reading, so I will parallelize the reads as the driver will serialize the requests if needed anyway. 2.3.3 Where is the bottleneck? The server is generally not running on the same computer as the client, so the tiles will be sent over network. Network speeds cannot compare to memory bandwidth, so the only thing parallelizing memory accesses would do in actual use is to increase CPU load due to overhead caused by synchronization. Bandwidth could compare to hard drive speeds though, and as such hard drive reading will be done in parallel where available. 7 CHAPTER 2. BACKGROUND When the cache misses the tile needs to be re-rendered. This should be avoided as much as possible, as re-rendering can easily be slower than the network connection. As such, the key to good cache performance is getting a good hit rate. Multithreading is not needed for the memory part but is worth implementing if there are heavy calculations involved. However, reading from disk and from memory should naturally be done in parallel. 2.4 OGC – Open Geospatial Consortium The Open Geospatial Consortium is an international industry consortium that develops open interfaces for location-based services. Using an OGC standard means that the program becomes interoperable with several other programs on the market, making it more versatile. Carmenta is a member of OGC and Carmenta Server implements a standard called WMS, which ”specifies how individual map servers describe and provide their map content”.[13] The exact specification can be found on their web page, but most of it is out of scope for this paper. In short, the client can request a rectangle of any size from any location of the map, with any zoom level, any format, any projection and a few other parameters. The large amount of parameters create a massive amount of possible combinations which makes this data hard and inefficient to cache. To counter this an unofficial standard has been developed: WMS-C.[14] In order to reduce the possible combinations the arguments are reduced, only one output format and projection is specified, clients can only request fixed size rectangles from a given map grid among other restrictions. Carmenta uses WMS-C in their clients, and this is the usage I will be developing the cache algorithm for. 8 Chapter 3 Testing methodology In this chapter I discuss which aspects of the caches to test and present the algorithms I will test. 3.1 Aspects to test There are two main aspects of a cache that are testable: speed and hit rate. These could both be summarized into a single measurement: throughput. The problem with that in my environment is that the time it takes to render a new tile differs greatly depending on how the maps are configured. I decided to measure them separately and weight them mostly based on hit rate. In the end their run times ended up very similar anyway, except for LFU. 3.1.1 Hit rate The primary objective for this cache is to get a good hit rate. Hit rate is compared at a specific cache size and a specific trace. In order to know where the cache found its data I simply added a return parameter which told the test program where the data was found. 3.1.2 Speed Speed is harder to test than hit rate for several reasons: • The hard drive has a cache. • The operating system maintains its own cache in memory. • The CPU has several caches. • JIT compilation and optimization can cause inconsistent results. • The testing hardware can work better with specific algorithms. 9 CHAPTER 3. TESTING METHODOLOGY Focus for this cache is on hit rate, but I have run some speed tests as well. When doing speed tests I took several precautions to get as good results as possible: • Hard drive cache and Carmenta Engine are not used for misses. Instead, I generate random data of correct size for the tiles. • The tests are run several times before the timed run to make sure any JIT is done before the actual run. • I did not run any speed test on the hard drive caches. Also, since the bottleneck is the memory rather than the CPU I ran some tests with it downclocked to the point where it was the bottleneck. The cache is generally run on the same machine as Carmenta Engine whose bottleneck is the CPU, so it makes sense to measure speed as CPU time consumed. 3.1.3 Hit rate over time When testing hit rate I display the average hit rate over the entire test. However, it is also interesting to see how quickly the cache reaches that hit rate and how well it sustains it. Also, this is an excellent way to show how the pre-population mechanism works. 3.2 The chosen algorithms The goal is to find the very best so I have tested all the most promising algorithms and a few classic ones as well. In cases where the caches have required tuning I have tested with several different parameter values and chosen the best results. 3.2.1 First In, First Out (FIFO) A classic and very simple algorithm, and also the algorithm Carmenta had in their basic implementation of the cache. I use this as a baseline to compare other caches with. 3.2.2 Least Recently Used (LRU) LRU is a classic algorithm and one of the most important page replacement algorithms of all time. The performance is also impressive, especially for such an old and simple algorithm. 3.2.3 Least Frequently Used (LFU) and modifications LFU is the last traditional page replacement algorithm I have tested. It has traditionally been good in server environments, and since this is a second level cache it should perform well. I also added aging to it and a size modification which I will describe later. 10 3.3. PROPOSED ALGORITHMS 3.2.4 Two Queue (2Q) 2Q is said to have a hit rate similar to that of LRU-2[3], which in turn is considerably better than the original LRU.[7] 3.2.5 Multi Queue (MQ) MQ is the only tested algorithm that is actually developed for second level caches, and as such a must to include. According to Zhou and Philbin’s paper it also outperforms all the previously mentioned algorithms on their data, which should be similar to mine.[5] 3.2.6 Adaptive Replacement Cache (ARC) An algorithm developed for and patented by IBM, which according to Megiddo and Modha’s article outperforms all the previously mentioned algorithms significantly with their data.[15] I have added this for reference; I will not actually be able to choose it due to the patent. 3.2.7 Algorithm modifications As previously mentioned, my data mostly differs from regular cache data in that there are significant size differences. Since the cache is limited by size rather than the amount of tiles it makes sense to give the smaller tiles some kind of priority, especially since one big tile can take as much room as fifty small. The way I chose to do it for the frequency-based algorithms I implemented it on (MQ and LFU) was to increase the access count by a bit less for big tiles and more for small tiles. I tested various formulas and constants for the increase as well. This modification is explained in detail in Chapter 5.1.1. 3.3 Proposed algorithms I found no previous work for non solid-state drives (SSDs), and the ones for SSDs deal specifically with the strengths and limitations of SSDs and are not applicable to regular hard drives (HDDs). Instead, I had to consider what I had access to: • A natural ordering with a folder structure and file names. • The last write time. • The last read time. • The cache already groups the files into a simple folder system based on various parameters. For Carmenta Engine, the overhead is a key aspect in choosing a good disk algorithm. Until now, it has not been possible to set a size limit to the disk cache 11 CHAPTER 3. TESTING METHODOLOGY and it has worked, so sacrificing some hit rate for lower overhead is a good choice here. However, mobile systems have different limitations, so even high overhead algorithms could be a good choice. Specifically, their secondary storage (which is generally flash based) is much smaller but also faster, and their memory is often very small and better utilized by other programs. These limitations make combined memory and hard drive algorithms much more feasible than they are in regular systems. The following subsections are listed in order of memory overhead, smallest first. 3.3.1 HDD-Random The folder structure created by Carmenta Engine puts the tiles into a structure based on various parameters which will sort files into different folders based on maps and settings, and more importantly based on zoom and x/y-coordinates. In the end, the folder structure will look like this: .../<zoom level>/<x-coordinate>/<ycoordinate>/<x>_<y>.<file type>, where the x- and y-coordinates which create folders are more coarse than the ones creating the file name. This algorithm starts at the cache root level and then picks a random folder (with uniform probabilities) until it has reached a leaf folder. Then it picks a random file, again with uniform probabilities, and deletes it. This does not give uniform probabilities for all files, but unlike a truly random algorithm it has no overhead. 3.3.2 HDD-Random Size The size modification of this algorithm uses another random aspect: once a file has been picked there is only a certain probability it will be removed. This chance depends on how large the file is compared to the average – it gets larger if the file is larger. The idea is very much the same as the size modifications for the memory algorithms: by giving smaller files a larger chance to stay the cache will be able to keep more files, resulting in a higher hit rate. This means the cache might need to try several times before actually deleting a file, but a higher hit rate could be worth it. However, this gave a slightly lower hit rate than the original algorithm so I did not even include it in the final comparison. 3.3.3 HDD-Random Not Recently Used (NRU) HDD-Clock attempts to remove only objects which have not been accessed recently (more details in Chapter 3.3.4). By adding this aspect to HDD-Random it should be possible to improve hit rate at the cost of (relatively high) overhead. However, as seen in the results the performance was not very impressive and does not warrant the overhead. 12 3.3. PROPOSED ALGORITHMS 3.3.4 HDD-Clock Using the information I had access to it is possible to create a clock-like algorithm. Clock is an LRU approximation algorithm which tries to remove only data which has not recently been accessed.[16] It keeps all data in a circle, one ”accessed” bit per object and a pointer to the last examined object. When it is time to evict an object it checks if the accessed bit of the current object is 1. If so it is changed to 0, otherwise the object is removed and the pointer changed to the next object. Also, if an object is accessed its accessed bit is 0 it is set to 1. The folders and file names created a natural structure which the HDD-Clock algorithm traversed using a DFS. By keeping a short list of timestamps over the last k accesses it makes a conservative approximation of the last time it read the current file by using the fol<cache size> lowing formula: <approximate tile size>×k × c× < time since oldest access >, where c decides how conservatively the time should be calculated. Since the cache load varies heavily this estimated time will also vary, but as long as this t is not lower than the actual access time for the current file it is fine. An- Figure 3.1. The clock algorithm other approach is to simply have a constant time keeps all data (A-J) in a circle and maintains a pointer to the last which is considered recent. If the current file has not been read since t it is examined data, in this case B. removed and the file pointer is set to point at the next file. However, last read time turned out to be disabled by default in most Windows versions and an important aspect of this cache is that it has to be very easy to use. Also, NTFS only updates the time if the difference between the access times is more than an hour, which makes testing the algorithm extremely time-consuming. Furthermore, enabling this timestamp decreases the overall performance of NTFS due to the overhead of constantly editing it. I wanted to try the algorithm though, so I faked it by implementing an actual accessed bit, just like in the original clock algorithm. I also had to add the actual write time since I ruined it with my accessed bit. However, this means the algorithm has to write to the accessed bit if it is not set, even if it only needed to read the file. In any real scenario, the read time option would need to be enabled. The results will be the same as for a perfect approximation of t, so in reality they will differ a little. 3.3.5 HDD-True Random By keeping a simple structure in memory describing all folders and their file count true pseudo-randomness can be achieved. The folders are then weighted depending on how many files they contain, and when a folder has been randomized a random 13 CHAPTER 3. TESTING METHODOLOGY file is removed in the same fashion as HDD-Random. Another way of achieving this is to simply store all the files in a single folder, but this can cause serious performance issues as well as making it hard to manually browse the files. This algorithm was mostly created because I was curious how the skewed randomness of HDD-Random affected the hit rate. 3.3.6 HDD-LRU with batch removal If the system has the last access time enabled (iOS and Android both do per default, Windows does not) the algorithm could be entirely hard drive-based until it is time to remove data. When more room is needed it could load the last access time of all files in the cache, order them and remove the x% least recently accessed files, where x can be configured to taste. For extra performance, it could also pre-emptively delete some files if the cache is almost full and the system is not used, for example at night. This will have a slightly lower hit rate than LRU, due to the fact that the cache on average will not be entirely full. When testing this I deleted 10% of the cache contents when it was full. Since this algorithm needs to read the metadata of all files in the cache it is not suitable for large caches. 3.3.7 HDD-backed memory algorithm Pick a memory algorithm of choice, but save only the header for each tile in memory. The data is instead saved on disk and a pointer to it is maintained in memory. This approach can create huge memory overhead for regular systems due to their immense storage capabilities, but for a mobile system it is not as big a problem. Also, since their secondary storage is much faster they can use this single, unified cache instead of two separate ones. MQ would be an excellent pick for this algorithm, but without the ghost list as it is suddenly just as expensive (in terms of memory) to keep a ghost as it is to keep a normal data entry. This will have the slightly lower hit rate than the memory algorithm on most file systems due to file sizes being rounded up to the nearest cluster size, but other than that it will work just like the regular algorithm. For my tiles and partitioning, the hard drive part is approximately 100 times larger than the memory part if MQ is used. One problem with this kind of cache is how to keep the data when the cache is restarted. One could traverse the entire cache directory and load only the metadata required (generally filename, size and last write time), but this is going to be very slow for larger caches. Another option is to write a file with the data to load, just like pre-loading is done in Chapter 5.1.2, but this is also slow for big caches. A third option is to not populate the memory part with anything, but instead load the data from the disk when it is accessed but not available in memory. This algorithm is more complex and sensitive, but for larger caches or when a very fast start is required this algorithm might be the best solution. In order to get this to work properly the algorithm for choosing which file is removed has to be modified slightly: 14 3.3. PROPOSED ALGORITHMS before deleting files which have memory metadata the cache folder is traversed (just like HDD-Clock in Chapter 3.3.4, except instead of the Accessed bit it checks the memory cache) and files which are not in the memory index are deleted. Once the end is reached the normal deletion algorithm takes over and the cache does not have to check the hard drive every time it thinks it does not contain a file. For the test I implemented the first version, but as long as they are all loaded in the same order they will get the same hit rate. Version one and three will be in the same order, but by making version two preserve the LRU order it could get a very small increase in hit rate. 15 Chapter 4 Analysis of test data In this chapter I put my test data through some tests to see if it exhibits any of the typical patterns for cache traces. 4.1 Data sources I have two proper data sources: the first is 739 851 consecutive accesses from hitta.se (hitta). They provide a service which allows anyone to search for people or businesses and display them on a map, or just browse the map. The second is 452 104 consecutive accesses from Ragn-Sells, who deliver recycling material (ragnsells). This is a closed service and the trucks move along predetermined paths. I also use a subset of Ragn-Sells, consisting of 100 000 accesses (ragn100) to test how the cache behaves in short term usage. 4.2 Pattern analysis In classic caching, loops and scans are a typical component of any real data set. However, map lookups do not exhibit the same patterns as programs do. It would make sense to see some scanning patterns though, when users look around on the map. I’ve made recency-reference graphs for the data. This type of graph is made by plotting how many other references are made between references to the same object over time. Also, very close references seldom give any important information so the most recent 1000 references are ignored. Horizontal lines in the graphs indicate standard loops. Steep upwards diagonals are objects re-accessed in the opposite order, also common in loops. Both of these could also appear if a user scanned a portion of the map and then went back to scan it again. As the scatter plots in Figure 4.1 show the data contains no loops and show no distinct access patterns. Interestingly though, the low density in the upper part of the plot show that the ragnsells trace exhibits stronger locality than the hitta trace. This is probably because the ragnsells was gathered over longer time with 17 CHAPTER 4. ANALYSIS OF TEST DATA Figure 4.1. Recency-reference graphs showing that none of the traces exhibit any typical data access patterns. fewer users than hitta, and the ragnsells users tend to look at the same area several times. 4.3 Locality analysis Locality of reference is an important phenomenon in caching, and the biggest reason it works so well. Typically, locality of reference is weakened in server caches since there are caches in front of it which take the bulk of related references.[5] The test traces are from clients who use a browser as the primary method of accessing the map, meaning that there is one cache on the client which should handle some of the more oftenly repeated requests. This should show on the temporal distance graphs as few close references, not peaking until the client cache starts getting significant misses. As Figure 4.2 shows less than half of the tiles are accessed more than once, and only 12% of hitta-data is accessed four or more times. This could be a product of relatively small traces (0.7 and 0.4 million accesses compared to about 23 million tiles on the map). It seems likely that tile cache traces simply do not exhibit the same locality aspects as regular cache traces as the consumer of the data is completely different (human vs. program). It is also most likely affected by the first level cache in the browser, which will handle most repeated requests from a single user. Regardless, this means that recency should not hold the same importance as it does in client caches. Instead, frequency should be more important. Frequency graphs measure how large the percentage of blocks that are accessed at least x times is. Temporal distance graphs measure the distance d in references between two references to the same object. Each point on the x axis is the amount of such references where d is between the current x and the next x on the axis. Figure 4.3 shows that the ragnsells trace exhibits much stronger temporal locality than hitta. This is probably a result of the way they work – several users will often look at the same place at the same time. It also means that recency will be of bigger importance than it is for hitta, something that is reflected in the hit rates of different algorithms in Chapter 5. 18 4.4. ACCESS DISTRIBUTION Figure 4.2. Graph showing how many tiles get accessed at least x times. Figure 4.3. The temporal distance between requests to the same tile. This graph shows that ragnsells exhibits much stronger temporal locality than hitta. 4.4 Access distribution As explained by the principle of locality, certain data point will be accessed more than others. However, it is interesting to see just how much this data is accessed. 19 CHAPTER 4. ANALYSIS OF TEST DATA Figure 4.4. Maximum hit rate when caching a given percentage of the tiles. Notice how few tiles are needed to reach a significant hit rate. As Figure 4.4 shows, some tiles are much more popular than others. So much, in fact, that 10% of the requests are for 0.05% of the tiles. Other points of interest are approximately 20% of requests to 1% of the tiles and 35% of requests to 5% of the tiles. Note that this is a percentage out of the tiles which were requested in the trace. With a typical map configuration there are between 10–100 times more tiles on the map. This proves that caching can be incredibly efficient even at small sizes if the page replacement algorithm keeps the right data. 4.5 Size distribution Most research regarding caching is done for low-level caching of blocks, and they are all of the same size. The tiles in this cache vary wildly though, between 500 bytes and about 25kB. The smallest tiles are single-colored, generally zoomed in views of forest or water. The big ones are of areas with lots of details, generally cities. Figure 4.5 is created with a subset of the ragnsells trace I had size data from. It shows that large tiles on average get slightly more accesses than small tiles, but by no means enough to compensate for their size. This is probably because the large tiles tend to show more densely populated areas, meaning the drivers are more likely to go there. Size does by no means increase constantly with increased accesses eighter, but varies wildly. Also, the fact that tiles with more accesses tend to be slightly larger does not necessarily mean that large tiles get more accesses; it could just mean that the large tiles that get any accesses at all tend to get slightly more of them. 20 4.5. SIZE DISTRIBUTION Figure 4.5. Average tile size for tiles with a certain amount of accesses. The graph shows that tiles with more accesses are typically slightly larger, probably because they show more populated areas. Differing sizes adds another aspect to consider in a page replacement algorithm though. The current algorithms use request order and access count, but do not take size into consideration since they are used on a much lower level. When size can differ by a factor 50 it is definitely worth taking it into account though. This modification is expanded upon in Chapter 5.1.1. 21 Chapter 5 Results In this chapter I present the test results and discuss the implications they have. 5.1 Memory cache Size (MB) LRU MQ MQ-S ARC FIFO LFU LFU-A LFU-S-A 2Q LRU MQ MQ-S ARC FIFO LFU LFU-A LFU-S-A 2Q 10 50 100 150 250 hitta (max 66.04% hit rate) 8.21 20.17 28.55 34.30 42.26 10.95 24.69 33.05 38.69 46.10 10.02 23.68 32.08 37.76 45.24 12.54 25.66 34.25 40.24 48.08 7.18 18.09 25.69 31.01 38.50 10.00 18.65 25.85 33.39 41.42 8.96 22.80 30.00 35.03 41.90 9.57 20.63 28.00 33.18 40.60 10.54 22.46 30.46 35.89 43.18 ragnsells (max 74.49% hit rate) 26.05 47.21 58.12 63.86 69.18 27.92 47.52 57.18 62.56 67.82 27.93 47.89 57.37 62.68 67.66 10.93 28.06 56.23 61.07 68.97 24.48 44.07 54.72 60.71 66.66 5.83 9.87 16.48 32.65 54.01 11.55 26.74 26.30 35.02 54.55 3.38 10.65 14.67 22.08 42.60 26.44 46.81 55.53 60.41 65.23 500 1000 53.55 55.20 54.48 56.59 49.33 53.18 53.37 52.41 52.30 62.71 62.27 61.81 63.45 59.40 62.55 62.10 62.44 56.89 73.39 72.72 72.58 73.27 72.39 66.97 67.68 63.71 68.39 74.49 74.49 74.49 74.49 74.49 74.46 74.46 74.49 70.03 Table 5.1. Results from the memory tests. The best results are in bold. For hitta, these results mirror the ones Megiddo and Modha received in their 23 CHAPTER 5. RESULTS tests[15], though the 2Q algorithm performs under expectations. I suspect this is either due to different configuration of the constants (I used the constants the authors recommended) or simply due to differences in data. The ragnsells trace gives more interesting results with big differences between the algorithms. There are two very noticeable groupings in there: group 1 containing the LFU based algorithms and for smaller sizes ARC, and group 2 with LRU, MQ, MQ-S, 2Q and FIFO. My hypothesis is that the reason for this is the strong temporal locality in the ragnsells trace. All algorithms in group 2 have strong recency aspects: FIFO and LRU are built around recency and MQ depends highly on recency. Especially so at small sizes, simply because it will not contain tiles long enough for them to get many hits. The LFU based algorithms in group 1 are almost purely frequency dependent and will miss a lot because of this. ARC is more recency-based and does join group 2 for cache sizes ≥ 100MB, but the low performance at smaller sizes is unexpected. Ragnsells has both strong temporal locality and some tiles with a lot of hits, and this probably confuses the adaption algorithm at small cache sizes, increasing the size of the second queue when it should have done the opposite. Interestingly, the classic LRU algorithm which was shadowed by more advanced algorithms for the hitta trace shines here. This goes to show just how important the environment is for the algorithm choice, and that even a simple algorithm such as LRU can still compete with more modern algorithms under the right circumstances. 5.1.1 The size modification As mentioned earlier, the tiles vary in size by a factor 50. Being able to fit up to 50 times as many tiles in the cache should allow for better hit rates as long as the tiles are chosen with care. I experimented with various ways of incorporating the size into the evaluation, and in the end I chose to increase the access counter by more for smaller tiles. The final formula is the following: Accesses = Accesses + tile size> 1 + 0.15× <average . This works out to increasing accesses by ≈1.3–2.5 for <tile size> every access, depending on tile size. In other words, the largest tiles need to be accessed twice as much as the smallest tiles to be considered equally valuable. Tweaking the constant which decides how much size affects tile priority turned out to be hard and there were no constants which performed well on all traces. It has not been shown here, but in the ragn100 trace size was important and a constant of 0.45 was optimal. However, in the longer hitta trace, frequency was more important and 0.15 was the optimal constant. In the end I chose to use 0.15 for the memory test, i.e. giving size a relatively small weight. 5.1.2 Pre-population So far, this has all been on-demand caching. There are three other ways to handle caching: 24 5.1. MEMORY CACHE • Pre-populating it with objects which are thought to be important. This is often done in a static fashion – the starting population of the cache is configured to values which are known to be good. If the cache always keeps this static data it is called static caching. This can give good results for very small caches which would otherwise throw away good data too fast. • Predict which objects will be loaded ahead of time. This can waste a lot of time if done wrong, but if the correct data is loaded response time can decrease greatly. Also, if objects are loaded during times when the load is low, throughput can be increased during high load times thanks to higher hit rate. • Pre-populating with objects based on some dynamic algorithm which takes recent history into account. Figure 5.1. Comparison of hit rate over time with a cold start versus pre-loading. Notice that pre-loading keeps a slightly higher average even after cold start has had time to catch up. I chose the third option and made a simple but efficient algorithm for it. When the cache is turned off it saves an identifier for all the files in the cache (along with their current rating) into a text file. Said text file is then loaded when the cache is started again, meaning it will look the same as when it was closed. No reasonable hard drive algorithm is going to be able to compete with the best memory algorithms, so by using their knowledge a simple but efficient pre-population algorithm 25 CHAPTER 5. RESULTS is achieved. The biggest downside with this algorithm is that it does not work the first time around. In order to have it work the first time the first option needs to be used – static caching. It requires more configuration though, and I do not feel it is worth it for the small performance increase. For Figure 5.1, I split hitta into two equal halves. The reason I did this is because in a real world situation the same long trace is extremely unlikely to be repeated, and it would give the algorithm an unrealistic advantage since it knows beforehand which tiles are often accessed. Their max hit rate are 59.04% for the cold start version and 57.88% for the pre-loaded one, so the hit rates are comparable. As expected, the hit rate for pre-loading starts off approximately where it left off. However, it also continues to have a slightly higher hit rate on average through the entire trace. At around 25% cold start has caught up with pre-loading, but even then hit rate is still slightly lower – 56.23% on average from 25% – 100% of the trace compared to 58.20% for the pre-loaded one (total averages are 52.64% for cold start and 57.79% for pre-loading). The pre-loaded one won despite having a worse max hit rate than the cold start trace – it even achieved a hit rate higher than the max theorical limit for a cold start since it did not have to miss every tile before loading it. 26 5.2. HARD DRIVE CACHE 5.1.3 Speed All algorithms are O(1) except LFU and its variations, which are O(log(n)). This means speeds should be similar, but some algorithms are definitely more complex than others which could result in higher constants. When running the tests I took all the precautions mentioned in Chapter 3.1.2 and also ran the test on both Intel and AMD, but all the graphs looked the same. The only thing that differed was the magnitude of the run times, otherwise memory-limited Intel, processor-limited Intel and memory-limited AMD are all almost identical. Figure 5.2. Result of speed run where the CPU was the limiting factor. There are obviously no big constants hidden in the Ordo notation. All algorithms except LFU are remarkably close in execution time; the difference is only a few seconds. In other words, there are no huge hidden constants in the Ordo notation. 5.2 Hard drive cache As Table 5.2 shows, performance follows overhead pretty closely and the differences are overall very small. HDD-Random performs surprisingly well – it is almost as good as memory FIFO (see Table 5.1). This is impressive, especially considering that the hard drive algorithms have to deal with cluster size overhead. 4kB cluster size is the default for modern NTFS drives, meaning that file sizes are rounded up to the nearest 4kB. This gives an overhead of approximately 20% with the current tiles. 27 CHAPTER 5. RESULTS Size (MB) 10 50 100 150 250 hitta (max 66.04% hit rate) HDD-MQ 9.95 22.78 31.10 36.73 44.17 Batch LRU 7.96 19.48 27.52 33.02 40.62 HDD-True Random 7.12 17.85 25.21 30.38 37.59 HDD-Clock 7.23 17.71 25.04 30.34 37.68 HDD-Random NRU 6.29 16.99 24.32 29.19 36.53 HDD-Random 6.49 16.67 24.48 29.23 36.25 ragnsells (max 74.49% hit rate) HDD-MQ 27.74 48.05 57.66 62.22 67.22 Batch LRU 25.66 45.95 56.82 62.73 67.98 HDD-True Random 23.56 42.91 53.00 58.28 64.07 HDD-Clock 24.71 43.54 54.07 60.10 65.55 HDD-Random NRU 23.73 43.48 53.34 58.87 65.12 HDD-Random 23.47 41.93 51.81 58.79 64.79 500 1000 53.90 51.64 48.48 48.88 47.96 47.18 61.58 61.19 58.95 59.61 59.45 59.22 72.33 72.95 70.97 69.71 71.41 71.17 74.49 74.49 74.49 74.49 74.49 74.49 Table 5.2. Results from the hard drive hit rate test. Unlike Table 5.1, this table does not have any bold results. The reason is that this one is not as simple as picking the one with the highest hit rate – instead it is a trade-off between speed and memory use. The overall hit rate winner is HDD-MQ, and it is not unexpected that the best algorithm has the higher memory requirements – more memory means more data can be saved, meaning better decisions can be made. However, the differences are fairly small and only in rare cases will it be worth it to take the memory overhead for those few percent extra hit rate. 5.3 Conclusion Until now, it has not been possible to limit the size of the hard drive cache. It has worked fine so far but it is not sustainable in the long run, especially not if the cache is run on a mobile device. However, as it has not been needed before a simple algorithm should definitely be chosen before one which has overhead. For a server environment I would choose HDD-Random, simply because it is unlikely to be used and has no overhead. For mobile devices it would make sense to choose an algorithm with overhead if it gives a higher hit rate though, as Internet connectivity tends to be slow and expensive. I would go with HDD-MQ as the small hard drive caches a mobile device can offer only require a small amount of memory, and even a few percent higher hit rate can make a significant difference when Internet connectivity is slow. However, HDD-Random is still a strong contender as memory also tends to be highly limited and the difference in hit rate is small. For the memory cache I would choose ARC if I could, but the IBM patent means that it is not a possibility. Instead, MQ offers solid performance on all tests and is always close to the hit rate of ARC, sometimes even higher. The size modification 28 5.4. FUTURE WORK of MQ happened to beat both MQ and ARC in the small ragnsells tests, but overall it is not as good as regular MQ. 5.4 Future work The size modification mentioned in Chapter 5.1.1 uses a constant which is hard to tweak without knowing the exact environment the algorithm will be used in. Also, the hit rate can probably be improved by changing this value over time. MQ uses a ghost list which gives information on which tiles have been deleted – it could be used in a manner similar to how ARC uses it to tweak this constant. 29 Bibliography [1] G. Gagne A. Silberschatz, P. B. Galvin. Operating Systems Concepts. Seventh edition, 2005. [2] A. S. Tanenbaum. Modern Operating System. Prentice-Hall, 1992. [3] T. Johnson and D. Shasha. 2q: A low overhead high performance buffer management replacement system, 1994. [4] P. J. Denning. The locality principle. Communication Networks and Computer Systems, pages 43–67, 2006. [5] Y. Zhou and J. F. Philbin. The multi-queue replacement algorithm for second level buffer caches, 2001. [6] D. Matani K. Shah, A. Mitra. An o(1) algorithm for implementing the lfu cache eviction scheme, 2010. [7] G. Weikum E. J. O’Neil, P. E. O’Neill. The lru-k page replacement algorithm for database disk buffering, 1993. [8] N. Megiddo and D. Modha. Arc: A self-tuning, low overhead replacement cache, 2003. [9] N. Megiddo and D. Modha. Car: Clock with adaptive replacement, 2004. [10] L. A. Bélády. A study of replacement algorithms for a virtual-storage computer, 1966. [11] M. Snir and J. Yu. On the theory of spatial and temporal locality, 2005. [12] Thomas Bonfort. Cache types (http://mapserver.org/trunk/mapcache/caches.html). 16. for mapcache Accessed 2012-05- [13] Opengis web map service (wms) implementation specification, 2006. [14] Wms tile caching (http://wiki.osgeo.org/wiki/wms_tile_caching). Accessed 2012-03-02. 31 BIBLIOGRAPHY [15] N. Megiddo and D. Modha. Outperforming lru with an adaptive replacement cache algorithm. IEEE Computer Magazine, pages 58–65, 2004. [16] F. J. Corbato. A paging experiment with the multics system, 1969. 32 TRITA-CSC-E 2012:073 ISRN-KTH/CSC/E--12/073-SE ISSN-1653-5715 www.kth.se