Experimental data on page replacement algorithm

advertisement

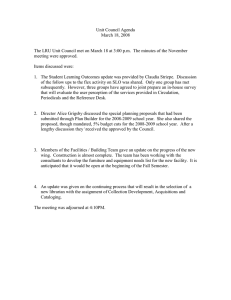

Experimental data on page replacement algorithm by N. A. OLIVER General M otars Research Labarataries Warren, Michigan INTRODUCTION time, regardless of the task to which it belongs. This RA, which is a varying partitions RA by default, is heavily considered in literature. 5,10,l1 Comparison results with various RAs obtained via simulation techniques and interpretive execution1,12,13 are available. However, few if any (non-simulation) system measurements have been conducted. Existing (and fully developed) VM operating systems utilizing variations of this RA known to the author are: CP/67,14,15 Multics,14,16 MTS,14 VSF7 and VS2.1S 2. Local LRU ",ith fixed main memory paging buffer per task RA: The least recently used selection is made from pages belonging to the task which generated the page fault. Some treatmentl,4,10,13,19,20 and measurements of this RA were found in literature. However, only one operating system (besides the interim version of MCTS) implements a remote variation of this RA. It is the original IBM version of TSS.14,21 3. Local LRU with varying (working set)5 partitions RA (WSRA): The replaced page is the least recently used page which does not belong to a working set of any task. Extensive literature is available. 4,5,6,7,l1,13,19, 20,22,23,24,25 Two true implementations (Burroughs B670026 and CP/67 at IRIA France26) and one approximation (the current version of TSS27) of this RA are known with limited measurement results. Although paged VM (Virtual Memory) systems are being implemented more and more, their full capabilities have not yet been realized. Early research in this field pointed to possible inefficiencies in their implementation. 1-3 Subsequent studies, however, led to the conclusion that paged VM systems could provide a productive means to run large programs on small main memory, if proper techniques are employed.4-7 One of the most influential of these is the choice of an efficient page replacement algorithm (RA) to minimize page traffic between the different levels of memory. This paper compares the performance of two RAs about which little system performance measurement data is available. They are: the Global Least Recently Used (LRU) and the Local LRU with fixed and equal size main memory buffer allotted to each task. The number of page faults caused during execution of programs under each RA is used as an inverse criterion for its effectiveness. These studies were conducted at the General Motors Research Laboratories (GMR) on the CDC STAR-IB* Virtual Memory computerS (core size = 65K of 64 bit words; auxiliary/main memory access time ratio of 50000) with the Multi-Console-Time-Sharing (MCTS) operating system. 9 PAGE REPLACEMENT ALGORITHMS Due to the implementation difficulties of the WSRA, only limited (special-case) measurements were taken of it. A basic problem in paged VM systems is deciding which page should be removed from main memory when an additional page of information is needed. Obviously, it should be a page with the least likelihood of being needed in the near future. Therefore a simple criterion for the "goodness" of a page RA is the minimization of page traffic between the main and auxiliary memories which is measured by the number of faults that occur during program execution. One of the most popular page replacement strategies is LRU (Least Recently Used) strategy. The following RAs are based on it: THE TESTING ENVIRONMENT System characteristics Page-table: The STAR computer page-table2S provides an address translation mechanism for all memory references. It points to pages of main memory in use and provides the mapping between the virtual address and the physical location of a page. The page-table ordering is hardware maintained; its entries (one for each page) are LRU ordered. Thus, the most recently accessed pages migrate to the top of the table while the least recently used move to the bottom. (The difference in address translation time between top and 1. Global LRU RA: The replaced page is the one that has not been referenced for the longest period of real "' STAR-IB is a microprogrammed prototype version of the STAR-IOO CDC computer. 179 From the collection of the Computer History Museum (www.computerhistory.org) 180 National Computer Conference, 1974 TABLE I-Results of Identical-tasks Test Customer tasks tested Number of terminals Malus compilation of 185 source lines 2 3 4 .5 Malus compilation of 450 source lines OPL compilation of 160 source lines OPL compilation of 575 source lines INV matrix inversion 2ooX200 6 1 2 3 1 2 3 1 2 1 2 3 LIST_CAT sorting routine P ANICD dump formatting routine 2 3 1 2 3 4 bottom entries of the page table, due to longer search time, is insignificant relative to the other system time parameters.) Level of multiprogramming: The MCTS operating system can be multiprogrammed up to a level corresponding to the maximum number of terminals supported by the system, which is seven. Scheduling: A round robin scheduling scheme among the multiprogrammed tasks is employed. Tasks which are in page or other I/O wait state are skipped. 'When a task's time-slice expires that task is replaced by a task waiting for service and which is also chosen in a round robin fashion. If none is waiting the task with expired time-slice is allowed to continue. (In this study the level of multiprogramming is always equal to the number of running tasks and thus the time-slice parameter is not utilized.) Paging space: It includes a maximum of 92 pages. Each page contains 512 64-bit words. On the interim MCTS system, this space is equally divided among the multiprogrammed tasks. Paging mechanisms In the interim version of ~1:CTS, each task has a private page-table. Depending on the paging space, each multiprogrammed task is allotted a fixed number of pages. The Local LRU RA is used for page replacement. For comparison purposes, MCTS was reprogrammed with the Global LRU RA. Only the paging mechanism was changed. No other system parameters such as multiprogramming, scheduling, paging space, etc., were modified. Global LRU (#P.F.) Local LRU (#P.F.) Local/Global LRU 88 245 627 3765 6049 9348 119 428 10474 86 133 446 143 382 103 15524 15628 104 116 125 448 461 490 520 88 488 2241 4674 7863 13901 119 4078 11943 86 242 751 143 917 103 15548 15688 104 120 190 448 477 498 519 1.000 1.991 3.574 1.241 1.299 1.487 1.000 9.528 1.140 1.000 1.819 1.683 1.000 2.400 1.000 1.001 1.001 1.000 1.034 1.520 1.000 1.035 1.016 0.998 Extreme paging buffer 23-68 18-73 30-61 24-67 45-46 43-48 44-47 The modifications for the Global LRU involved the use of a single page-table for all the customer tasks. All available pages in main memory were put into a general pool. When a page fault occurred, the Global LRU page which was the last entry in the hardwar~managed single page-table, was replaced. No sharing of pages was allowed. TESTING TECHNIQUES In an effort to choose typical and diverse applications, these GMR developed customer tasks were tested: Malus-A compiler for PL/I-like language designed to generate object code for the STAR computer. Two compilations, one of 185 and the other of 450 source lines were measured. OPL-A compiler for computer graphics language. Again, two compilations, one of 160 and the other of 575 source lines were examined. INV-A matrix inversion routine. Measurements were taken for inversion of a 200X200 order matrix. LIST_CAT-A sorting routine. For this study a list of 700 names was /Surted in several different orders. PANICD-A compute-bound routine designed to format MCTS core dumps for printing. It formatted about 50000 words for these tests. The tests were performed by running identical and nonidentical tasks simultaneously from a varying number of terminals. Each set of tests was executed twice; once with the Global LRU system and then repeated with the Local From the collection of the Computer History Museum (www.computerhistory.org) Experimental Data on Page Replacement Algorithm LRU system. The total paging space was held constant in each case for both systems. N = Numher of pag"s used by task .1. (Number of pages used by task t2 = 91 - 181 If) 01~~TTrM~~~~rM~~~~~~~Trnn~ RESULTS 9') Identical-tasks test I: 1'1 1111 ': 1 11 1 11 ;: I:: 11111111,111111'::1 I : : I : : : : : I : : : : : I I I I I I : I I ( I I : I I I I : II I I I ,I , 1111111111,11111111111 I1I1 1111111 'II I I I IIII 1111 , 111'1 I I I I 11 TASK 12 I 'I I I' I I' I I I ' : I , I I I I I : ' : 1 : ' I : : I : I' I II I II , I I II I' If: I I " II,:: : : 1'1' l: '1,1,,: III ' II ,1'111 11 ' ,II I, , III I III : I I'~'II: 1 1,1 1 \ : 11,1: I1III : t I 'Ill'll' III II I I " I I' " 111(11 I' I' '1'1 11 ~II'IIIII III f I ,11 11 11'11 I, ~I"!(T: Iii ~ .. '+rt-tp~l+t : I', , Table I displays the average number of page faults per task (# P.F.) generated by identical multiprogrammed tasks which are running simultaneously on each of the two paging systems for varying number of terminals. Also included are ratio values representing the relative performance of the Local LRU in relation to the Global LRU. (The "extrem~ paging buffer" column will be explained later.) While experimenting with the Global LRU system, it was observed that the number of pages used by each of the simultaneously running tasks varied considerably during execution. On the other hand the number of pages used by each task with the Local LRU system remained constant (by design). For example, in the two terminal Malus compilation of 450 source lines (Table I), which displays the most extreme difference, the Local LRU system divided the available 91 pages between the two tasks giving one 45 and the other 46 pages. With the Global LRU, on the other hand, tasks competed \vith each other for pages in main memory and the number of pages which each "owned" as a function of the elapsed execution time is displayed in Figure 1. AB these two identical tasks were started at the same instant, one would tend to think that they would split the core pages evenly among them and each would occupy close to half of memory at any given time (as in the Local LRU case). But as can be seen from Figure 1 this did not happen. These tasks dynamically changed the number of pages which they occupied with significant fluctuation. One explanation might be that while executing, most programs change their locality5 characteristics and consequently their working set size changes. If tasks are allowed to compete for pages, they tend to accumulate as many working set pages as they can in order to run effectively. For this purpose they use pages obtained from other tasks which at that moment (due to the negligible difference in starting time) are executing at other stages of the same program at which they usually have different locality properties and possibly require, or are forced to occupy, a smaller working set of pages. In addition, it was observed that even though the above two tasks, started virtually at the same time, one task finished executing well ahead of the other. This can be clearly observed in Figure 1. At the start of execution Task #1 had 51 pages while Task #2 had only 40. Afterwards in most cases Task #2 had more pages. At point A Task #2 completed its execution and its pages started migrating to Task #1. At point B all of main memory belonged to Task #1. Due to this page migration from one task to the other and vice versa, Task #2 ran better up to point A and thus Task #1 was able to run efficiently from point B to completion. 70 ~5 4S 40 25 I 'I'J ',I ~II" It: IIJ :II, , I 111I1I11 ,1 1 t r1 II I II t I ~ ll, 11 1'1,1 ~~' 10 TASK t 1 TIll 1 50 100 150 200 250- Elapsed execution time (sec.) Figure I-Variation in the number of pages "owned" by each of two tasks while executing under the Global LRU policy The elapsed execution time with the Global LRU system was considerably shorter for high Local/Global ratios. But whenever the ratio was close to one, this time was virtually equal. As an example, in the case displayed in Figure 1 the compilation under the Global LRU system lasted only 231 seconds while under the Local LRU system the same compilation took 746 seconds. The compilation cases generated considerably more pagefaults under the Local LRU RA. But the difference in number of page-faults generated is less severe for LIST_CAT, and there is almost no difference in the INV and PANICD cases. This could be explained by the different working set characteristics of these programs. The Malus and OPL compilers change their working set sizes dynamically at a high rate while the rest of the tasks tend to have a fixed size or slowly changing working sets of pages. An indication of the rate of change of the working set size, in each case, for a multiprogramming level of two, can be obtained from the "extreme paging buffer" column in Table I. The figures in this column represent the number of pages each of the two tasks "owned" during the most extreme situation for that run with the Global LRU RA. As the extreme buffer difference gets larger, so does the performance ratio which is displayed in the adjacent column of Table I. N on-identical-tasks test At this point we felt that although the Local LRU showed, in some cases, poor performance when running the same tasks one against the other, it might still be a useful tool to From the collection of the Computer History Museum (www.computerhistory.org) 182 National Computer Conference, 1974 TABLE II- Results of Non-identical-tasks Test Customer tasks tested Malus compilation of 450 source lines OPL compilation of 575 source lines INV matrix inversion 200X Global LRU Local LRU Local/Global (#P.F.) (#P.F.) LRU 1075 7239 6.734 1017 4271 4.200 1221 4822 3.949 519 557 1.073 200 PANICD dump formatting routine prevent a complete system degradation in cases where some of the running tasks are in a thrashing state while others are not. We thought that by having a separate page table and a fixed number of pages for each task, the thrashing task would only degrade itself without affecting the rest of the system. To test this situation, a four non-identical task mixture displayed in Table II was simultaneously run from four terminals. This experiment was designed so that all the tasks except P ANICD would thrash with both the Global and Local LRU systems. Under the Local LRU every task "owned" one fourth of core while under the Global LRU all tasks compete for pages. Thus P ANICD which does not thrash when running with one-fourth of core should have benefited from the "protection" provided to its paging buffer under the Local LRU algorithm whereas under the Global LRU the thrashing tasks could affect its performance by "taking away" its essential pages because they are in high need for pages. But as can be seen in Table II, the number of page-faults which P ANICD generated was not affected at all by the thrashing tasks. The explanation to these unexpected results might be that tasks which are running effectively (PANICD), reference frequently (and thus "protect") their slow changing working set of pages. On the other hand the thrashing task needs many pages, each page for a short interval, and does not reference the same pages too often. Thus the pages of the thrashing task are, in most cases, the least recently used pages which migrate to the bottom of the page-table and are consequently overwritten. The thrashing task is only slightly affected by this process since chances are that it will need many other pages before requiring the pages which were just lost. (It contains the procedure pages, one input and one output data pages.) This fact explains the similar performance of the Local and Global LRU for PANICD as shown in Tables I and II. The slight difference in the number of page faults is attributed to the execution of some system programs known as "command language" before and after the actual execution of PANICD. These programs require large and rapidly changing working set sizes and thus contribute to the fewer number of page faults for the Global LRU in most cases. In order to get relative performance measurements of the WSRA versus the Global LRU we decided to eliminate the effect of the "command language" by initiating the measurements only after all the multiprogrammed P ANICD tasks have started their actual execution and terminate the data collection just before the first PANICD task branches back to the "command language." Thus a fixed size working set was required by each P ANICD task at any time. Since the WSRA requires that each multiprogrammed task have at least its working set of pages in main memory at all times, a Local LRU level of multiprogramming which provides a partition larger then the working set size ",-ill actually satisfy the WSRA requirements. The results for running identical PANICD tasks from a different number of terminals (corresponding to different levels of multiprogramming) are presented in Table III. The column "dump units processed per second" presents the total number of dump-pages formatted by all the P ANICD tasks, divided by the elapsed time required to run all the tasks at each multiprogramming level. Thus this column represents the real throughput of the entire system. The other column "number of page faults per dump unit processed" shows the total number of page faults generated by all tasks, divided by the total of all the dump-pages formatted. The throughput of the system increases with the level of multiprogramming, for both systems, up to the level of three and four while the number of page faults per unit dump remains low. Beyond the level of four both systems are thrashing and the throughput consequently deteriorates. Thus under the WSRA policy we would have run the system TABLE III-System Throughput and Page Fault Frequency for Increasing Levels of Multiprogramming Number of terminals (multiprog. level) Dump units proc./sec. #PF/dump units proc. Dump units proc./sec. #PF/dump units proc. 2 3 4 5 6 7 0.37 0.42 0.46 0.46 0.42 0.39 0.29 4.21 4.22 4.23 4.24 7.55 11.90 20.30 0.37 0.42 0.46 0.46 0.42 0.40 0.31 4.21 4.22 4.23 4.23 7.82 10.19 21.10 Working set replacement algorithm measurements As we did not implement this RA which implies use of varying partitions with varying working-set sizes, we decided to test at least a special case of it, which is running a task with a fixed working set size in a fixed size partition. PANICD has a small and fixed size workhl.g set of pages. Global LRU Local LRU (WSRA) From the collection of the Computer History Museum (www.computerhistory.org) Experimental Data on Page Replacement Algorithm at a multiprogramming level not higher than four. But for levels one through four the Global and Local LRU, which in this case is identical to the WSRA, perform virtually the same. Above the level of four the Local LRU (WSRA) does show better performance and that is probably due to the fact that with the Global LRU system a task which is waiting the longest on the scheduler queue and is the next one to run its pages become the least recently used ones and are overwritten. As this happens only after reaching a thrashing level of multiprogramming, a Global LRU RA can be useful only if the system can detect when overloading occurs. Such a performance monitor (based, for example, on the page-faulting level of the entire system) could be used to reduce the multiprogramming level whenever thrashing occurs to prevent performance degradation. CONCLUSIONS The results of this study strongly indicate that artificially restricting the main memory space which a task may utilize in a paged VM system results in an increased page traffic between the different levels of memory and consequently in considerable loss of efficiency. Tasks, especially if they require rapidly changing working set sizes, should be allowed to compete freely for the space which each may occupy at any given time. The Global LRU RA performed better than the Local LRU RA with fixed partitions and matched the performance of the Local LRU with varying partitions (WSRA), for a non-thrashing situation, in this study. The following Global LRU virtues should be noted: 1. It is a simple varying partitions RA in which the partition size is controlled by the RA itself and not by the operating system. 2. It is highly unlikely that thrashing tasks can "overtake" main memory and thus "hurt" the performance of non-thrashing tasks. This is due to the fact that non-thrashing tasks reference frequently and thus "protect" their essential pages from becoming the LRU ones. On the other hand the thrashing task needs many pages, each for a short interval, and does not reference the same pages too often. Thus the pages of the thrashing task are, in most cases, the LRU ones and are consequently overwritten. 3. Critics of the Global LRU strategy (including the author 7) claim that with the Global LRU RA, the task which is idle for the longest time while waiting on the scheduler queue, and which is the next to run is most likely to find its pages missing. Evidence of this has been found in these studies. But it turns out that the space could be utilized more effectively by the current running task than it would have been if these pages had been reserved without utilization for the delayed task. 183 4. In addition to the performance advantage (reduction of execution time and number of page faults), the Global LRU with the single page-table is easier to manage and requires less operating system space than the Local LRU with multi-page-tables and fixed paging buffer system. The Global LRU algorithm is especially useful for simple round robin scheduled operating systems. For more sophisti~ cated systems, however, the multi-page-table approach might be useful due to requirements other than efficiency, such as: priority scheduling, etc. But since no artificial restrictions should be imposed on main memory space it seems that the working-set-partitions RA's such as the WSRA5 and PFF13 might well be the only class of paging strategies able to perform effectively utilizing multi-page-tables. However, the WSRA will require a "smart" mechanism to determine the follmving: (A) The size of the task's working set at anv given time. (B) When a task is in a working set expansio~ phase and needs more pages, which of the other multiprogrammed tasks will be the one to lose pages. (C) What action should be taken when there are a few available pages in core but not enough to start a new task. In the Global LRU case no information about (A) is needed; the decision about (B) is trivial; as for (C) the new task is started and it "fights" to build its working set from pages which are probably non-useful to other tasks. The WSRA has a clear advantage over the Global LRU; It prevents system overloading. Thus if the Global LRU is to be used, a special system performance monitor could be used to reduce the level of multiprogramming whenever overloading occurs. ACKNOWLEDGMENT I wish to thank G. G. Dodd for his support of this study and advice on organizing the paper. Also I am grateful to R. R. Brown, J. W. Boyse, M. Cianciolo and the rest of the MCTS personnel for their cooperation. Last but not least I wish to thank P. J. Denning and W. W. Chu for their constructive criticism of this paper. REFERENCES 1. Coffman, E. G. and L. C. Varian, "Further Experimental Data on the Behavior of Programs in a Paging Environment," Comm. ACM, 11, July 1968, 471-474. 2. Fine, G. H., C. W. Jackson and P. V. McIsaac, "Dynamic Program Behavior under Paging," Proc. 21st Nat. Conf. ACM, ACM Pub. P-66, 1966, pp. 223-228. 3. Kuehner, C. J. and B. Randell, "Demand Paging in Prospective," Proc. AFIPS 1968 Fall Joint Compo Conf., Vol. 33, pp. 1011-1018. 4. Denning, P. J., "Virtual Memory," Computing Surveys, Vol. 2, No.3, Sept. 1970, pp. 153-189. 5. Denning, P. J., "The working-set model for program behavior," Comm. ACM., Vol. 11, May 1968, pp. 323-333. 6. Chu, W. W., N. Oliver, and H. Opderbeck, "Measurement Data on the Working Set Replacement Algorithm and Their Applica- From the collection of the Computer History Museum (www.computerhistory.org) 184 7. 8. 9. 10. 11. 12. 13. 14. 15. 16. National Computer Conference, 1974 tions," Proc. Brooklyn Polytechnic Institute Symposium on Computer-Communications Networks and Teletraphic, Vol. 22, Apr. 1972. Oliver, N., Optimization of Virtual Paged Memories, Master thesis, Univ. of Calif. Los Angeles, 1971. Holland, S. A., and C. L. Purcel, "The CDC STAR-1oo a large scale network oriented computer system," IEEE Proc. of the International Computer Society Conference, Boston, Mass., Sep. 22-24, 1971. Brown, R. R., J. L. Elshoff, and M. R. Ward, et al., Collection of MCTS Papers, to be published, G. M. Res. Labs., Warren, Mich. 1974. Belady, L. A., "A Study of Replacement Algorithms for a Virtualstorage Computer," IBM Syst. J., Vol. 5 No.2, 1966, pp. 78-101. Denning, P. J., "Thrashing: Its Causes and Prevention," Proc. AFIPS 1968 Fall Joint Compo Conf., Vol. 33, pp. 915-922. Thorington, J. M., J. D. Irvin, "An Adaptive Replacement Algorithm for Paged-memory Computer Systems," IEEE Trans. Vol. c-21, Oct. 1972, pp. 1053-1061. Chu, W. W. and H. Opderbeck, "The Page Fault Frequency Replacement Algorithm," Proc. AFIPS 1972 FJCC, Vol. 41, pp. 597609. Alexander, M. T., Time Sharing Supervisor Program, Univ. of Mich. Computing Center, May 1969. Bayels, R. A., et al., Control Program-67/CamJJridge Monitor System (CP-67/CMS), Program Number 360D 05.2.005, Cambridge, Mass., 1968. Organick, E. I., A Guide to Multics for Sub-System Writers, Project MAC, 1969. 17. IBM OS/Virtual Storage 1 Features Supplement, No. GC20-1752-0. 18. IBM OS/Virtual Storage 2 Features Supplement, No. GC20-1753-0. 19. Coffman, E. G. and T. A. Ryan, "A Study of Storage Partitioning Using a Mathematical Model of Locality," Comm. ACM 15, March 1972, pp. 185-190. 20. Oden, P. H. and G. S. Shedler, A Model of Memory Contention in a Paging Machine, IBM Res. Tech. Rep. RC3056, IBM Yorktown Heights, N. Y., Sept. 1970. 21. IBM System/360 Time Sharing Operating System Program Logic Manual, File No. S360-36 GY28-2oo9-2, New York 1970. 22. Denning, P. J. and S. C. Schwartz, "Properties of the WorkingSet Model," ACM, 15, March 1972, pp. 191-198. 23. DeMeis, W. M. and N. Weizer, "Measurement Data Analysis of a Demand Paging Time Sharing System," ACM Proc. 1969, pp. 201216. 24. Openheimer, G. and N. Weizer, "Resource Management for a Medium Scale Time-sharing System," Comm. ACM, Vol. 11, May, 1968, pp. 313-322. 25. Spirn, J. R. and P. J. Denning, "Experiments with Program Locality," Proc. AFIPS 1972 FJCC, Vol. 41, pp. 611-621. 26. Private communication with P. J. Denning. 27. Doherty, W. J., "Scheduling TSS/360 for Responsiveness," Proc. AFIPS 1970 FJCC, Vol. 37, AFIPS Press, Montvale, N. J., pp. 97-112. 28. Curtis, R. L., "Management of High Speed Memory in the STAR100 Computer," IEEE Proc. of the International Computer Society Conference, Boston, Mass., Sep. 22-24, 1971. From the collection of the Computer History Museum (www.computerhistory.org)