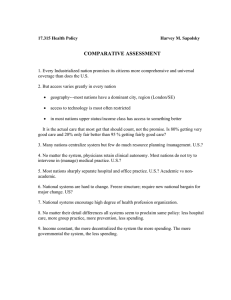

The RAND Health Insurance Experiment, Three
