Delay Lines Using Self-adapting Time Constants


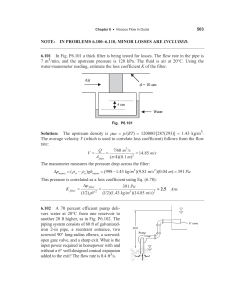

Shao-Jen Lim and John G. Harris

Computational Neuro-Engineering Laboratory, University of Florida, Gainesville, FL 32611

Abstract- Transversal filters using ideal tap delay lines are a popular form of short-term memory based filtering in adaptive systems. Some applications where these filters have attained considerable success include system identification, linear prediction, channel equalization and echo cancellation [1]. The gamma filter improves on the simple FIR delay line by allowing the system to choose a single optimal time constant by minimizing the Mean Squared Error of the system [8]. However, in practice it is difficult to determine the optimal value of the time constant since the performance surface is nonconvex. Also, many times a single time constant is not sufficient to represent the input signal well. We propose a nonlinear delay line where each stage of the delay line adapts its time constant so that the average power at the output of the stage is a constant fraction of the power at the input to the stage. Since this adaptation is independent of the Mean Square Error, there are no problems with local minima in the search space. Furthermore, since each stage adapts its own time constant, the delay line is able to represent signals that contain a wide variety of time scales. We discuss both discrete- and continuous-time realizations of this method. Finally, we are developing analog VLSI hardware to implement these nonlinear delay lines. Such an implementation will provide fast, inexpensive, and low-power solutions for many adaptive signal processing applications.

I. INTRODUCTION

Infinite impulse response (IIR) filters are more cost-effective than the widely used ideal delay lines in adaptive signal processing.
The gamma filter is a successful IIR filter design whose stability is guaranteed [8][6], and it is a marked improvement over the FIR filter because of its adjustable memory depth [5][6]. The gamma filter has been applied to a variety of real-world problems such as echo cancellation, system identification, time series prediction, noise reduction, and dynamic modeling [7]. However, in practice it is hard to search for the optimal time constant of the gamma filter because the performance surface associated with the time constant is nonconvex [6]. Also, a single time constant is often unable to fully represent the incoming signal. To deal with this problem, we introduce a nonlinear gamma delay line in which each gamma unit adjusts its own time constant simultaneously, such that the average power at the output of each gamma unit is a constant fraction of the power at its input. This method has no local-minima problems because the time-scale adaptation is unrelated to the Mean Square Error. Moreover, since each stage adapts its own time constant, the delay line is able to represent signals that contain a wide variety of time scales.

0-7803-4053-1/97/$10.00 © 1997 IEEE

Fig. 1. The solid line shows the MSE of a third-order single-$\mu$ gamma filter as a function of $\mu$ for identification of the filter of equation 8. The dash-dot line is the optimal solution of a third-order self-adjusting time constant delay line when the constant fraction $R$ is set equal to 0.82, and the dashed line represents $R = 0.75$. Note that the mean square error for both methods is computed using the Wiener-Hopf solution. (Plot title: "MSE is computed as a function of mu values"; horizontal axis: mu.)

To provide fast, inexpensive, and low-power solutions to many adaptive signal processing applications, we are developing analog VLSI hardware to implement these nonlinear delay lines.
Each stage of the nonlinear delay line consists of a five-transistor transconductance amplifier and a capacitor configured to realize a first-order low-pass filter. The time constant of the filter is adapted so that the signal power is attenuated by a constant fraction at each stage. Sections II and III of this paper discuss the discrete- and continuous-time realizations of this method. Section IV describes the continuous-time analog VLSI circuitry we have used to implement the self-adapting delay lines.

II. DISCRETE DOMAIN

The gamma filter in the discrete domain is given by

$$x_k[n] = (1-\mu_k)\,x_k[n-1] + \mu_k\,x_{k-1}[n-1] \tag{1}$$

where $x_k[n]$ represents the output of a $k$-stage delay line at iteration $n$, $x_{k-1}[n]$ is the input of the $k$th-stage gamma unit, and $\mu_k$ is the adaptive memory parameter of the $k$th stage. If the input to the gamma model is a simple sinusoidal signal $x_{k-1}[n] = A\cos(\omega_0 n)$, the input power spectrum is concentrated at $\pm\omega_0$ and the average input power is

$$M_{x_{k-1}} = \frac{A^2}{2} \tag{3}$$

while the average output power is

$$M_{x_k} = \frac{A^2}{2}\,\frac{\mu_k^2}{1 - 2(1-\mu_k)\cos\omega_0 + (1-\mu_k)^2} \tag{4}$$

Dividing equation 4 by equation 3 gives a constant fraction that is a function of the $\mu_k$ of the gamma unit and the signal frequency, as shown in the following equation:

$$R = \frac{M_{x_k}}{M_{x_{k-1}}} = \frac{\mu_k^2}{1 - 2(1-\mu_k)\cos\omega_0 + (1-\mu_k)^2} \tag{5}$$

In other words, $\mu_k$ is a nonlinear monotonic function of the input signal frequency, while the value of the fraction $R$ will distort this function. Each tap in a cascade of self-adjusting tap delays will converge to the same time constant provided a single-frequency sine wave is input to the cascade. Using the properties of the discrete gamma filter, we designed a stochastic gradient descent update equation for $\mu_k$ (equation 6), in which $d_k$ is the gamma-delayed output of the input signal $d_{k-1}$ and $d_0$ stands for the desired signal. The weight update is calculated using the standard LMS rule (equation 7).

Fig. 2. The solid line depicts the MSE of a third-order single-$\mu$ gamma filter as a function of $\mu$ for identification of the filter of equation 9. The dash-dot line is the optimal solution for a third-order self-adjusting time constant delay line when $R$ is set equal to 0.87. (Plot title: "MSE is computed as a function of mu values"; horizontal axis: mu.)

Fig. 3. The solid line depicts the Mean Square Error of a third-order single-$\mu$ gamma filter as a function of $\mu$ for identification of the filter of equation 10, and the dash-dot line is the optimal solution of a third-order self-adjusting time constant delay line when the constant fraction $R$ is set equal to 0.87.

We will discuss a few system-identification examples to illustrate how the self-adjusting $\mu_k$ delay line architecture performs compared to a conventional single-$\mu$ adaptive gamma filter. The first "unknown" system to be identified is

$$H(z) = \frac{0.005\,(1 - 0.8731z^{-1} - 0.8731z^{-2} + z^{-3})}{1 - 2.8653z^{-1} + 2.7505z^{-2} - 0.8843z^{-3}} \tag{8}$$

The mean square error as a function of $\mu$ was calculated by evaluating $\xi = E(d^2[n]) + W^T \mathbf{R} W - 2P^T W$, while the optimal weight vector $W^*$ is computed by solving the Wiener-Hopf equation. Here $\mathbf{R}$ denotes the input autocorrelation matrix and $P$ the cross-correlation vector, as in [1], not the power fraction $R$. We assumed a uniformly distributed zero-mean white noise input. The results are displayed in Figure 1. Note that these results are theoretical rather than empirical, since the Wiener-Hopf equations were used to solve for the optimal solution in both methods. The solid line in Figure 1 depicts the Mean Square Error of a conventional third-order gamma filter as a function of the single $\mu$ value for identification of the filter of equation 8. The dash-dot line shows the optimal solution of a third-order self-adjusting time constant delay line when the constant fraction $R$ is set equal to 0.82, while the dashed line is for $R = 0.75$. Thus, it is clear that the self-adjusting time constant delay line can outperform the single-$\mu$ gamma filter for certain problems without requiring a complicated nonconvex search.
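The power-ratio adaptation described in Section II can be illustrated with a small simulation. The sketch below is not the authors' update equation: it assumes a simple instantaneous-power rule, $\mu_k \leftarrow \mu_k + \eta\,(R\,x_{k-1}^2[n] - x_k^2[n])$, with a hypothetical step size `eta`, clipping bounds, and function names chosen only for illustration. It uses the standard gamma recursion $x_k[n] = (1-\mu_k)\,x_k[n-1] + \mu_k\,x_{k-1}[n-1]$, drives a three-stage cascade with a single sinusoid, and compares the adapted $\mu_k$ with the value at which the stage power gain $\mu^2 / (1 - 2(1-\mu)\cos\omega_0 + (1-\mu)^2)$ equals $R$.

```python
import math


def power_gain(mu, w0):
    """Power gain of one gamma stage for a sinusoid at w0 rad/sample."""
    a = 1.0 - mu
    return mu * mu / (1.0 - 2.0 * a * math.cos(w0) + a * a)


def target_mu(R, w0):
    """mu in (0, 1] at which power_gain(mu, w0) == R, for 0 < R < 1."""
    b = 1.0 - R * math.cos(w0)
    # Root of (1-R)a^2 - 2(1-R cos w0)a + (1-R) = 0 that lies in [0, 1).
    a = (b - math.sqrt(b * b - (1.0 - R) ** 2)) / (1.0 - R)
    return 1.0 - a


def adapt_cascade(w0, R, stages=3, steps=100_000, eta=1e-3, mu0=0.3):
    """Adapt each stage's mu with an ASSUMED instantaneous-power rule.

    Returns the value of each mu averaged over the last 10% of the run.
    """
    mu = [mu0] * stages
    x = [0.0] * (stages + 1)      # x[0] is the input, x[k] the output of stage k
    tail_len = steps // 10
    tail_mean = [0.0] * stages
    for n in range(steps):
        prev = x[:]               # signal values at time n-1
        x[0] = math.cos(w0 * n)
        for k in range(1, stages + 1):
            m = mu[k - 1]
            # Gamma recursion for stage k.
            x[k] = (1.0 - m) * prev[k] + m * prev[k - 1]
            # Assumed rule: raise mu when the output power is below R times
            # the input power, lower it otherwise; keep mu in a stable range.
            m += eta * (R * x[k - 1] ** 2 - x[k] ** 2)
            mu[k - 1] = min(max(m, 0.01), 1.0)
        if n >= steps - tail_len:
            for k in range(stages):
                tail_mean[k] += mu[k] / tail_len
    return tail_mean


if __name__ == "__main__":
    w0, R = 0.5, 0.82
    print("closed-form mu:", target_mu(R, w0))
    print("adapted mu per stage:", adapt_cascade(w0, R))
```

With a single input frequency, all stages settle near the same $\mu$, in line with the statement that every tap converges to the same time constant. The small residual ripple comes from using instantaneous rather than averaged power.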
In Figures 2 and 3, we show two more examples that demonstrate the performance of the self-adjusting time constant delay lines. Figure 2 is the performance surface for the third-order elliptic low-pass filter described by

$$H(z) = \frac{0.0563 - 0.0009z^{-1} - 0.0009z^{-2} + 0.0563z^{-3}}{1 - 2.1291z^{-1} + 1.7834z^{-2} - 0.5435z^{-3}} \tag{9}$$

while Figure 3 shows the performance surface of a third-order system $H(z)$ (equation 10) whose denominator is $1 - 2.1000z^{-1} + 1.4300z^{-2} - 0.3150z^{-3}$ and whose numerator is $0.3000 - 0.1800z^{-1}$ followed by $z^{-2}$ and $z^{-3}$ terms of magnitude 0.2835 and 0.2572. Note that the constant fraction $R$ for both equations 9 and 10 is set equal to 0.87.

III. CONTINUOUS-TIME DOMAIN

In the continuous-time domain, the gamma filter can be calculated by using [2][3][8]

$$\frac{dx_k(t)}{dt} = -\mu_k\,x_k(t) + \mu_k\,x_{k-1}(t) \tag{11}$$

where $x_k(t)$ represents the output of a $k$-stage delay line at time $t$, $x_{k-1}(t)$ stands for the input of the $k$th-stage gamma unit, and $\mu_k$ is the reciprocal of the time constant $\tau_k$. If the input to an analog gamma model is a sinusoidal signal with frequency $\omega_0$ radians per second, $x_{k-1}(t) = A\cos(\omega_0 t)$, the input power spectrum is concentrated at $\pm\omega_0$ and the average input power can be expressed as

$$M_{x_{k-1}} = \frac{A^2}{2} \tag{13}$$

and the average output power is

$$M_{x_k} = \frac{A^2}{2}\,\frac{1}{1 + (\tau_k\omega_0)^2} \tag{14}$$

Dividing equation 14 by equation 13, we get a constant fraction which is related only to the time constant of the gamma unit and the signal frequency:

$$R = \frac{M_{x_k}}{M_{x_{k-1}}} = \frac{1}{1 + (\tau_k\omega_0)^2} \tag{15}$$

As in the discrete-time case, the time constant computed by this method is a monotonic function of the frequency of the input sine wave. Combining the behavior of each gamma stage with the delay line, we can design a self-adjusting time-constant delay line that adapts to the properties of the incoming signal. Figure 4 shows a schematic of an analog system identification problem in which the upper left delay line is an "unknown" system to be identified and the lower left delay line is an adaptive gamma system with weights trained to minimize the mean square error. The last delay line is used to adjust the time constants $\tau_1(t)$ and $\tau_2(t)$ so that the average powers at the stage outputs $d_1(t)$ and $d_2(t)$ are a constant fraction of the average powers of the inputs $d_0(t)$ and $d_1(t)$, respectively. In other words, $\mu_k = 1/\tau_k$ is adapted by a learning rule (equation 16) in which $\tau_{\mu_k}$, the time constant of the $\mu_k$ update, is chosen to be much larger than $\tau_k$. Note that equation 16 uses the instantaneous power of both the input and output signals instead of the average power. Similar to the discrete-time adaptation of FIR and IIR adaptive filters, the weights $w_0(t)$, $w_1(t)$, and $w_2(t)$ are adjusted according to a continuous-time gradient descent update (equation 17) [2][3][1][6][8], where $\tau_w$ is a time constant of the weight update larger than $\tau_k$, the time constant of each stage.

Fig. 4. A schematic of a continuous-time system identification problem in which the upper left delay line is the unknown system to be modeled, the lower left delay line is an adaptive gamma system trained such that it approximates the system in the mean square error sense, and the last delay line is used to adjust the time constants $\tau_1(t)$ and $\tau_2(t)$ so that the average powers at the stage outputs $d_1(t)$ and $d_2(t)$ are a constant fraction of the average powers of the inputs $d_0(t)$ and $d_1(t)$, respectively.

Based on this signal and time constant relationship, we first model an analog system with poles located at 15.3564 and 1.5356

$$H(s) = \frac{0.3071s + 0.5895}{s^2 + 16.8920s + 23.5818} \tag{18}$$

by using two delay stages with self-adapting time constants. The solid line in Figure 5 depicts the experimental Mean Square Error of the conventional second-order single-$\mu$ gamma filter as a function of $\mu$ for identification of the filter of equation 18. The dash-dot line shows the empirical optimal solution of a second-order self-adjusting time constant delay line when the constant fraction $R$ is set equal to 0.65, with poles found at 16.99 and 9.5.

Fig. 5. The solid line depicts the experimental Mean Square Error of a continuous-time second-order single-$\mu$ gamma filter as a function of $\mu$ for identification of the filter of equation 18, and the dash-dot line is the empirical optimal solution of a second-order self-adjusting time constant delay line when the constant fraction $R$ is set equal to 0.65, with poles found at 16.99 and 9.5. (Plot title: "MSE is computed by using the continuous LMS update rule"; horizontal axis: mu.)

Figures 6 and 7 give two more examples that show the benefit of the MSE-unrelated updating scheme. Figure 6 is the performance surface for a third-order filter with poles located at 15.3564, 2.8793 and 1.5356

$$H(s) = \frac{0.3071s^2 + 1.7981s + 2.7159}{s^3 + 19.7713s^2 + 72.2184s + 67.8976} \tag{19}$$

modeled by using two follower integrators, while Figure 7 gives the mean square error versus $\mu$ of another third-order filter with poles at 15.3564, 7.6782 and 1.5356

$$H(s) = \frac{0.3071s^2 + 4.0089s + 7.2425}{s^3 + 24.5702s^2 + 153.2814s + 181.0618} \tag{20}$$

which is modeled by three consecutive follower-integrator filters. The constant fractions $R$ of these two examples are set equal to 0.65 and 0.7, respectively.

Fig. 6. The solid line depicts the experimental Mean Square Error of a second-order analog single-$\mu$ gamma filter as a function of $\mu$ for identification of the filter of equation 19, and the dash-dot line is the empirical optimal solution of the second-order self-adjusting time constant delay line when the constant fraction $R$ is set equal to 0.65, with poles found at 13.1 and 6.1. (Plot title: "MSE is computed by using the continuous LMS update rule"; horizontal axis: mu.)

Fig. 7. The solid line depicts the experimental Mean Square Error of a continuous-time third-order filter as a function of the single $\mu$ for identification of the filter of equation 20. The dash-dot line is the empirical optimal solution of the third-order self-adjusting time constant delay line when the constant fraction $R$ is set equal to 0.7, with poles found at 15.355, 8.998 and 2.05. (Plot title: "MSE is computed by using the continuous LMS update rule"; horizontal axis: mu.)

IV. CIRCUIT IMPLEMENTATION

The time constant $\tau$ equals $C/G$, where $C$ is the capacitance of an RC integrator and $G$ is the transconductance of a follower, given by equation 21 as in [4]. The relationship between the bias voltage of a follower and its input signal frequency can be derived by combining equations 15 and 21 (equation 22) and is depicted in Figure 9. In equations 21 and 22, $k$ stands for Boltzmann's constant, $T$ for temperature, $q$ for the electron charge, $\kappa$ for a fabrication constant expressing the effectiveness of the gate in determining the surface potential of a CMOS transistor, and $C$ for the capacitance in the follower-integrator circuit.

Figure 8 gives an overview of how a cascade of follower integrators adjust their own time constants with respect to the incoming signal $d_0$ as shown in Figure 4. The upper plot shows the circuit results when the input $d_0$ is composed of two frequencies, 500 Hz and 1000 Hz, from 0 ms to 60 ms. The signal changes abruptly to a single-frequency 500 Hz signal at 60 ms. The lower graph depicts the learning path of the two bias voltages. It is clear that when there are two different frequencies in $d_0$, the two bias voltages separate into two values corresponding to the two frequencies. When the input signal collapses to a single frequency, the two bias voltages converge to the same value. In actual practice, the time constant for the update will be made much longer than what was used in this example, providing much smoother curves.

Fig. 8. Time constant adaptation for a continuous-time two-stage delay line which is similar to the one shown in the right middle portion of Figure 4. The upper plot shows that the input signal $d_0$ is composed of two frequencies, 500 Hz and 1000 Hz, from 0 ms to 60 ms, but changes abruptly to a single-frequency 500 Hz signal after 60 ms. The lower graph depicts the learning path of the two bias voltages. When there are two different frequencies in $d_0$, the two bias voltages separate so that each of them corresponds to one of the input frequencies. When the input collapses to a single frequency, the two bias voltages converge to the same value. (Axes: voltage versus time, linear scales.)

Figure 10 shows a schematic of a self-adjusting time constant circuit consisting of three follower-integrators in the upper portion of the plot and three absolute-value circuits for computing the instantaneous power of each stage and automatically adjusting the time constant. This schematic is a three-tap delay-line version of the circuit shown in the middle right of Figure 4, which consists of only two delay lines. Figure 11 shows a detailed schematic of the absolute value circuit.

V. CONCLUSION

In this paper, we introduce a nonlinear delay line where each stage of the delay line adapts its time constant so that the average power at the output is a constant fraction of the average power of the input. There are no problems with local minima in the search space as long as the fraction $R$ is set to a constant. Figure 12 shows the mean square error of equation 10 as a function of $R$. It is clear that when the number of delay elements increases, the performance surface of these self-adapting delay lines is nonconvex with respect to $R$.
Nevertheless, the self-adapting time constant delay line is still a favorable choice, since its simplicity makes it easier to implement in a CMOS process, and the optimal value of $R$ stays mostly between 0.6 and 0.9, while the optimal $\mu$ can range anywhere from 0 to 1.

Acknowledgments: This work was supported by an NSF CAREER award #MIP-9502307.

REFERENCES

[1] B. Widrow and S. Stearns. Adaptive Signal Processing. Prentice Hall, 1985.

[2] J. Juan, J. G. Harris, and J. C. Principe. Analog VLSI implementations of continuous-time memory structures. In 1996 IEEE International Symposium on Circuits and Systems, volume 3, pages 338-340, 1996.

[3] J. Juan, J. G. Harris, and J. C. Principe. Analog hardware implementation of adaptive filter structures. In Proceedings of the International Conference on Neural Networks, 1997.

[4] C. Mead. Analog VLSI and Neural Systems. Addison-Wesley, 1989.

[5] J. C. Principe, J. Kuo, and S. Celebi. An analysis of short term memory structures in dynamic neural networks. IEEE Transactions on Neural Networks, 5(2):331-337, 1994.

[6] J. C. Principe, B. de Vries, and P. G. de Oliveira. The gamma filter - a new class of adaptive IIR filters with restricted feedback. IEEE Transactions on Signal Processing, 41(2):649-656, 1993.

[7] J. C. Principe, S. Celebi, B. de Vries, and J. G. Harris. Locally recurrent networks: the gamma operator, properties, and extensions. In O. Omidvar and J. Dayhoff, editors, Neural Networks and Pattern Recognition. Academic Press, 1997.

[8] B. de Vries and J. C. Principe. The gamma model - a neural model for temporal processing. Neural Networks, 5:565-576, 1992.

Fig. 9. The relationship between the bias voltage of the follower integrator and its input signal frequency while changing the constant fraction $R$. In this figure, $kT/(q\kappa)$ is 43 × …, the capacitance of the capacitor is 1 × … Farads, and $I_0$ is 1 × … Amps. (Plot title: "The relationship between a sinusoidal input and biased voltage of a follower"; horizontal axis: frequency in Hz.)

Fig. 10. A schematic of the self-adjusting time constant circuit, which consists of three follower-integrators in the upper portion of the plot and three absolute value circuits for computing the instantaneous power at each stage and automatically adjusting the time constant. This schematic is a three-tap delay-line version of the circuit shown in the middle right of Figure 4. The detailed schematic of the absolute value circuit can be found in Figure 11.

Fig. 11. A detailed schematic of the absolute value circuit.

Fig. 12. Mean Square Error of equation 10 as a function of $R$. Note that the mean square error is calculated by evaluating $\xi = E(d^2[n]) + W^T \mathbf{R} W - 2P^T W$, while the optimal weight vector $W^*$ is computed by solving the Wiener-Hopf equation. (Plot title: "MSE is computed as a function of ratio values"; horizontal axis: ratio.)
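As a numerical illustration of the continuous-time relation between the power fraction, the time constant and the input frequency discussed in Section III, $R = 1 / (1 + (\tau_k\omega_0)^2)$, the sketch below solves for the equilibrium $\mu_k = 1/\tau_k$ at which a first-order stage passes a fraction $R$ of the power of a sinusoid. The function names and the choice of 500 Hz and 1000 Hz test tones are illustrative assumptions; the code evaluates the closed-form relation only and does not model the circuit or its learning dynamics.

```python
import math


def power_fraction(tau, w0):
    """Output/input average power of a first-order low-pass stage with
    time constant tau (seconds) for a sinusoid at w0 (rad/s)."""
    return 1.0 / (1.0 + (tau * w0) ** 2)


def equilibrium_mu(R, w0):
    """mu = 1/tau at which power_fraction(tau, w0) equals R, for 0 < R < 1."""
    tau = math.sqrt(1.0 / R - 1.0) / w0
    return 1.0 / tau


if __name__ == "__main__":
    R = 0.65
    for f_hz in (500.0, 1000.0):
        w0 = 2.0 * math.pi * f_hz
        mu = equilibrium_mu(R, w0)
        print(f"{f_hz:6.0f} Hz: mu = {mu:.1f} 1/s, tau = {1e6 / mu:.1f} us")
```

For a fixed $R$ the equilibrium $\mu_k$ is proportional to the input frequency, which is one way to read the monotonic relation stated in Section III.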