Assessment of Peripheral and Central Auditory Function February


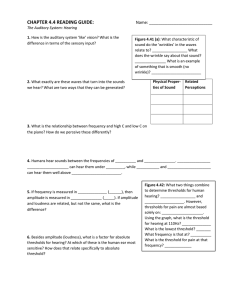

TITLE: Assessment of Peripheral and Central Auditory Function
SOURCE: Grand Rounds Presentation, UTMB, Dept. of Otolaryngology
DATE: February 11, 2004
RESIDENT PHYSICIAN: Steve Wright, MD
FACULTY ADVISOR: Arun Gadre, MD
SERIES EDITORS: Francis B. Quinn, Jr., MD and Matthew W. Ryan, MD
ARCHIVIST: Melinda Stoner Quinn, MSICS

"This material was prepared by resident physicians in partial fulfillment of educational requirements established for the Postgraduate Training Program of the UTMB Department of Otolaryngology/Head and Neck Surgery and was not intended for clinical use in its present form. It was prepared for the purpose of stimulating group discussion in a conference setting. No warranties, either express or implied, are made with respect to its accuracy, completeness, or timeliness. The material does not necessarily reflect the current or past opinions of members of the UTMB faculty and should not be used for purposes of diagnosis or treatment without consulting appropriate literature sources and informed professional opinion."

The auditory pathway begins with the simple interaction of mechanical sound wave energy with the pinna and results in the conscious interpretation of noise and communication. Clinical audiology has evolved new techniques and strategies to assess auditory function, and it is an essential component of the otolaryngologist’s armamentarium. Pure tone audiometry, speech audiometry, and acoustic immittance continue to be important for hearing assessment. Newer technologies such as the auditory brainstem response (ABR), electrocochleography (ECoG), and otoacoustic emissions (OAEs) have become the latest additions to the clinical audiologic test battery. The area of central auditory function is of relatively recent interest.
Recently, efforts have been made to combine the knowledge of different specialty areas, such as otology, audiology, and speech pathology, to form a multidisciplinary approach to the diagnosis and treatment of central auditory processing disorders.

Anatomy

Auditory processing begins with the pinna and mechanical transmission through the ear canal and middle ear. As mechanical sound energy is transmitted through the tympanic membrane, ossicular chain, and oval window, a traveling wave is created in the scala vestibuli, with a resultant displacement of the basilar membrane and firing of cochlear hair cells. High frequency waves stimulate the more proximal (basal) portions of the cochlea, while low frequency waves stimulate the more distal (apical) regions. This neural discharge is tonotopically preserved as it travels through the eighth nerve, with low frequency sounds located ventrolaterally and high frequency sounds more dorsally.

The transfer of neural impulses from the peripheral nervous system to the central nervous system occurs at the cochlear nuclei, located in the lower brainstem. The cochlear nuclei are thought to recode the auditory signal and transfer the data predominantly to the contralateral superior olivary complex through three main outputs. In addition, the cochlear nuclei pass information ipsilaterally to the lateral lemniscus. The superior olivary complex (SOC), located in the caudal portion of the pons, is thought to play an important role in binaural hearing. The SOC also appears to be an important relay station in the reflex arc of the acoustic stapedial reflex. The lateral lemniscus is considered to be the primary auditory pathway because it contains both ascending and descending pathways. The inferior colliculus is the largest auditory structure and is thought to play an important role in sound localization and frequency resolution.
On entering the thalamus, the auditory information takes two pathways to the temporal lobe. Primary auditory reception occurs in Heschl’s gyri in either hemisphere of the brain. Auditory linguistic processing occurs primarily on the left, at Wernicke’s area and the insular cortex. The processing of nonlinguistic information takes place primarily on the right side. Pathways from each hemisphere course to the opposite side, stimulating, inhibiting, or transferring information.

Peripheral Auditory Testing

Pure tone Audiometry

Pure tone audiometry is the most common measurement of hearing sensitivity. Signals are delivered through air and bone. Pure tones are generated by an electroacoustic source that produces acoustic energy that varies as a function of cycles per second (Hz). A high frequency tone initiates a traveling wave whose maximum intensity occurs at the basal end of the cochlea, while a low frequency tone initiates a traveling wave whose maximum intensity occurs at the apical end of the cochlea.

Air conduction assesses the function of the entire auditory system, from the most peripheral aspect to the central portion. Air conduction testing alone provides little information regarding the etiology of hearing loss and specific auditory pathology. Air conduction thresholds are obtained from 250 Hz to 8000 Hz in octave intervals. Interoctave frequencies, such as 3000 and 6000 Hz, are tested when successive octave thresholds differ by 20 dB. When used in conjunction with bone conduction testing, air conduction thresholds help determine both the type and the severity of the hearing loss.

Bone conducted sound is transmitted directly to the cochlea and is thought to be a better reflection of sensory hearing. A bone oscillator is typically placed on the mastoid process, which provides an enhanced dynamic range compared with other placements such as the frontal bone.

When discussing decibel levels in human hearing, it is necessary to define the decibel scale that is being used.
Three different units are used in audiology: dB sound pressure level (SPL), dB hearing level (HL), and dB sensation level (SL). On an audiogram the decibel scale has its reference at 0 dB, which is described as audiometric zero. This is the standard for the intensity level that corresponds to the mean normal hearing threshold level, the minimal detectable intensity for each test frequency for persons with normal hearing. Human hearing extends from 20 to 20,000 Hz, but the sensitivity at different frequencies varies in dB SPL. The human ear is differentially sensitive: it can hear a 1000 Hz tone at about 6.5 dB SPL, but a 125 Hz tone only at 45 dB SPL and a 10,000 Hz tone at about 20 dB SPL. The intensity range of human hearing extends from about 0 dB HL to approximately 130 dB (pain sensation).

Decibels are quantified on a logarithmic basis. The relation is dB = 10 log10 (output intensity / reference intensity), so a 10 dB increase in level signifies a tenfold increase in sound energy. Therefore, a 60 dB HL sound is a million times more intense than a 0 dB HL sound.

Sensation level is the intensity of the stimulus in decibels above an individual’s hearing threshold. For example, a word recognition test can be administered at an intensity level of 40 dB SL, which is 40 dB above the person’s pure tone average.

The clinically normal region on an audiogram is 0 to 20 dB HL. In children, a hearing threshold level exceeding 15 dB can be considered abnormal. Conversational speech is in the 40-50 dB HL region, with the most significant frequencies for understanding speech being 500 through 4000 Hz. Hearing sensitivity within the speech frequency region is summarized by calculating the pure-tone average (PTA), which is the average of the hearing thresholds at 500, 1000, and 2000 Hz.

Audiometric results are valid only when the patient’s responses are caused by stimulation of the test ear.
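The decibel relation, sensation level, and pure-tone average described above can be illustrated with a short sketch. The function names and the example thresholds are hypothetical and chosen only for illustration; the arithmetic follows the definitions given in the text.

```python
import math


def intensity_ratio_to_db(ratio: float) -> float:
    """dB = 10 * log10(output intensity / reference intensity)."""
    return 10.0 * math.log10(ratio)


def db_to_intensity_ratio(db: float) -> float:
    """Inverse relation: how many times more intense than the reference."""
    return 10.0 ** (db / 10.0)


def pure_tone_average(thresholds_db_hl: dict) -> float:
    """Average of the thresholds at 500, 1000, and 2000 Hz (dB HL)."""
    return sum(thresholds_db_hl[f] for f in (500, 1000, 2000)) / 3.0


def presentation_level(threshold_db_hl: float, sensation_level_db: float) -> float:
    """A stimulus at X dB SL is presented X dB above the listener's threshold."""
    return threshold_db_hl + sensation_level_db


# Hypothetical audiogram for one ear (frequency in Hz -> threshold in dB HL).
example = {250: 15, 500: 20, 1000: 30, 2000: 40, 4000: 55, 8000: 60}
pta = pure_tone_average(example)
print(f"PTA: {pta:.1f} dB HL")
print(f"40 dB SL is presented at {presentation_level(pta, 40):.1f} dB HL")
print(f"60 dB corresponds to an intensity ratio of {db_to_intensity_ratio(60):,.0f}")
```

A tenfold intensity ratio maps to 10 dB, and 60 dB maps to a ratio of one million, which matches the statement in the text.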
‘Crossover’ occurs when acoustic energy presented to one ear stimulates the non-test ear, so that the responses obtained represent the performance of the non-test ear rather than the test ear. The main mechanism of crossover is presumed to be bone conduction stimulation caused by vibration of the earphone cushion against the skull at high stimulus intensity levels. The amount that crosses over is a reflection of interaural attenuation. The interaural attenuation of air conducted tones varies from 40 to 80 dB, depending on whether ear inserts or headphones are being used. Interaural attenuation values are also frequency dependent, being smaller for low frequencies and larger for high frequencies. For bone conducted signals, interaural attenuation can be as low as about 0 dB.

Masking is the audiometric technique used to eliminate responses by the non-test ear whenever air or bone conduction stimulation exceeds interaural attenuation. An appropriate noise is presented to the ear not being tested while the stimulus is presented to the test ear. The level of masking noise must exceed the threshold for that ear. Excessive levels of masking noise must be avoided in order to prevent crossover of the masking noise itself.

Speech Audiometry

Speech audiometry helps determine how well a person hears and understands speech. Spondee, or spondaic, words are the speech stimuli used to obtain the speech reception threshold (SRT). A spondee is a two syllable word spoken with equal stress on both syllables, and spondees are presented in a fashion similar to pure tone audiometry. The SRT is the softest intensity level at which a patient can correctly repeat 50% of the words. The SRT is measured with a speech signal, and the PTA is measured within the conversational frequency range; therefore these two values should be in close agreement. An unusually good SRT relative to the PTA should alert the physician to the possibility of nonorganic hearing loss, such as malingering.
Word recognition scoring is a common clinical approach to evaluating a person’s ability to hear and understand speech. Lists of 20 to 50 words are presented to the patient at suprathreshold levels, usually 30 dB above threshold. The list is phonetically balanced, which means its speech sounds, or phonemes, occur as often as they would in everyday conversation. From this list, a percentage correct is calculated. Word recognition scores of 90% or higher are considered normal, while scores below this level indicate a problem with word recognition. Patients with conductive hearing loss usually show excellent word recognition. Patients with cochlear lesions have poorer discrimination. Patients with retrocochlear lesions usually have even poorer discrimination scores, which may sometimes be exacerbated by the phenomenon of ‘rollover.’ With rollover, the speech discrimination score goes down at higher sound intensities. Rollover is thought to occur as a result of changes in the coding of intensity due to loss of monotonic stimulation, and it may be indicative of retrocochlear pathology.

Acoustic Immittance

Immittance is a term derived from the terms for two inversely related processes used in assessing middle ear function, impedance and admittance. Impedance is the resistance to the flow of acoustic energy. Admittance is the ease with which acoustic energy can flow. A middle ear with low impedance (high admittance) more readily accepts acoustic energy, whereas a middle ear with high impedance (low admittance) tends to reflect energy. Immittance is usually evaluated by tympanometry and the acoustic reflex. It is a useful diagnostic tool to identify the presence of fluid in the middle ear, evaluate Eustachian tube function, evaluate the facial nerve, and help predict audiometric results.
Tympanometry reflects the mobility (compliance) of the tympanic membrane as air pressure within the ear canal is varied from +200 to -400 daPa. There are three types of tympanograms: A, B, and C. A normal, or type A, tympanogram has its peak compliance between 0 and -100 daPa and within a normal range of compliance. Peaks located within this pressure range may be overly compliant, ‘Ad,’ as with an atrophic tympanic membrane, or under compliant, ‘As,’ as with ossicular chain fixation or tympanosclerosis. A ‘type B’ tympanogram has no peak compliance and very little change in compliance with varying pressures. This pattern is most often associated with a middle ear effusion or a tympanic membrane perforation. ‘Type C’ tympanograms have a peak compliance located in the negative pressure range beyond -100 daPa. This is usually seen in patients with Eustachian tube dysfunction and inadequate ventilation of the middle ear. ‘Type C’ may be a precursor to a ‘type B’ tympanogram, as the development of negative pressure precedes the presence of the effusion.

The volume of air medial to the probe is also obtained with tympanometry. In general, ear canal volumes range from 0.5 to 1.0 ml in children and 0.6 to 2.0 ml in adults. Volume measurements greater than these may suggest tympanic membrane perforation or the presence of a patent ventilation tube.

The acoustic stapedial reflex (ASR) threshold is defined as the lowest intensity required to elicit a stapedial muscle contraction. The neural connections for the reflex are located in the lower brainstem. However, higher CNS structures influence the reflex via the olivocochlear bundle. The afferent limb of the reflex runs from the ipsilateral eighth nerve to the cochlear nuclei. There is a complex interaction between the ipsilateral cochlear nuclei and the bilateral motor nuclei of the seventh nerve. The efferent limb of the reflex is the seventh nerve, which innervates the stapedial muscle.
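The tympanogram types described earlier in this section can be summarized as a small decision rule. The sketch below is illustrative only: the function name is hypothetical, and the default normal compliance range is an assumed placeholder, since the text does not state numeric compliance limits. Only the -100 daPa pressure boundary and the type labels come from the text.

```python
from typing import Optional, Tuple


def classify_tympanogram(
    peak_pressure_dapa: Optional[float],
    peak_compliance_ml: Optional[float],
    normal_compliance_ml: Tuple[float, float] = (0.3, 1.6),  # ASSUMED range, not from the text
) -> str:
    """Return 'A', 'As', 'Ad', 'B', or 'C' for a single tympanogram.

    Pass None for both peak values when the tracing is flat (no peak).
    """
    # Type B: no identifiable peak, little change in compliance with pressure.
    if peak_pressure_dapa is None or peak_compliance_ml is None:
        return "B"
    # Type C: peak lies in the negative pressure range beyond -100 daPa.
    if peak_pressure_dapa < -100:
        return "C"
    # Otherwise the peak is in the type A pressure range; grade by compliance.
    low, high = normal_compliance_ml
    if peak_compliance_ml < low:
        return "As"  # under compliant, e.g. ossicular fixation
    if peak_compliance_ml > high:
        return "Ad"  # overly compliant, e.g. atrophic tympanic membrane
    return "A"


print(classify_tympanogram(-20, 0.8))
print(classify_tympanogram(None, None))
print(classify_tympanogram(-250, 0.6))
```

In practice the compliance limits depend on the equipment and the clinic's normative data, so the default range should be replaced before any real use.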
Contraction of the stapedial muscle tilts the anterior stapes away from the oval window and stiffens the ossicular chain. The resulting increase in impedance is measured as a small decrease in compliance by an ear canal probe. Three primary acoustic reflex characteristics are typically evaluated: 1) presence or absence of the stapedial reflex, 2) acoustic reflex threshold, and 3) acoustic reflex decay or adaptation. The time delay of the acoustic reflex is thought to be 10 ms. Acoustic reflex thresholds for tones in patients with normal hearing are usually 70-80 dB above their tone thresholds, and about 5 dB greater for the contralateral threshold. Acoustic reflex decay measures the ability of the stapedius muscle to maintain sustained contraction, usually by presenting a signal 10 dB above the reflex threshold for 10 seconds. Even normal individuals may exhibit decay at higher frequencies; therefore the lower frequency tones, 500 to 1000 Hz, are used. If the response decreases to less than half within 5 seconds, retrocochlear pathology may be suspected.

The acoustic reflex in ears with cochlear disorders is determined primarily by the degree of the sensorineural hearing loss. If auditory thresholds are below 50-55 dB, the reflex thresholds are normal. If auditory thresholds are between 55 and 80 dB, the reflex thresholds are elevated in proportion to the increased auditory thresholds. In losses greater than 80 dB, acoustic reflexes are usually absent.

Normal acoustic reflex thresholds for a broadband noise are approximately 20-25 dB lower than the reflex thresholds for tones. Clinically, this is important in the evaluation of malingerers because it is not physiologically possible for a patient to truly have behavioral thresholds that are higher (worse) than the acoustic reflex thresholds for tones.
In patients with sensorineural loss, the difference between the broadband noise and tone reflex thresholds is inversely related to the amount of hearing loss: as the degree of sensorineural hearing loss in dB increases, the difference between the acoustic reflex thresholds for tones and for noise decreases.

The efferent limb of the reflex is explored in the diagnosis of ossicular chain disorders, such as otosclerosis and discontinuity, and of facial nerve pathology. In facial paralysis, the acoustic reflex is absent or abnormal when the recording probe is placed in the ear ipsilateral to the lesion. The ASR can also be helpful in locating a seventh nerve lesion as either proximal or distal to the stapedial muscle.

Eighth nerve lesions will demonstrate an absent acoustic reflex when stimuli are presented to the affected ear. An important difference between the acoustic reflexes in eighth nerve and cochlear lesions is that in eighth nerve lesions the reflex will be absent or abnormal regardless of the degree of hearing loss, whereas in cochlear lesions it usually depends on the degree of hearing loss. Rapid reflex decay is also typically found in eighth nerve lesions. Abnormal reflexes are also recorded when stapedial muscle function is altered by myopathic diseases such as myasthenia gravis and Eaton-Lambert syndrome, and by hyperthyroidism.

Auditory brainstem responses

Auditory brainstem responses (ABRs) are impulses generated by the auditory nerve and brainstem that can be recorded on the scalp after a transient stimulus. The ABR is not a measure of hearing in terms of the conscious perception of sound. ABRs have been detected in the human neonate as early as 25 weeks of gestation. The ABR is not affected by sleep, sedation, or attention, and it is therefore well suited to estimating auditory sensitivity in infants and children in whom conventional behavioral testing has failed.

The most widely used ABR is the click- or transient-evoked ABR.
Using moderate intensity levels, an abrupt stimulus activates a large portion of the cochlea, with a resultant firing of a wide range of neural frequency units. Repeatable waveforms of wave V can be detected at intensity levels within about 10 dB of the average behavioral audiogram. Patterns of delay, latency, and waveform morphology can provide information about the auditory pathway from the middle ear to the lower brainstem. The click-evoked ABR is limited by its lack of frequency specificity, and it may underestimate the degree of hearing loss at a number of frequencies when the actual loss is sloping or unusual in shape.

Tone burst ABRs may yield more accurate results than the click-evoked ABR. Tone bursts are created with enough intensity to cause neural discharge, but with a brief enough duration to retain some frequency specificity. The tone burst ABR is more reliable when the actual loss is sloping or unusual in shape. Latencies are longer than for the click-evoked ABR, especially at lower frequencies, because of the increased travel time required to reach the more apical regions of the cochlea.

Bone conduction ABR has been shown to be as reliable and repeatable as air conduction ABR. It is particularly useful in determining whether a functional cochlea exists in structural anomalies such as canal atresia.

The primary goal in the evaluation of ABRs is to record a clear and reliable wave I component, which is the landmark for the remainder of the interpretation of peripheral auditory function. Wave I represents the compound action potential from the distal end of the eighth cranial nerve. Wave II may arise from a more proximal portion of the eighth nerve. Waves I and II are therefore generated by structures that are ipsilateral to the stimulated ear. Wave III is usually prominent and is thought to arise from stimulation of the cochlear nuclei.
Wave IV is likely generated by the superior olivary complex, with more contralateral than ipsilateral responses. Wave V is the most prominent and most rostral component of the ABR and is thought to arise from the lateral lemniscus.

When an ABR is confirmed by reproduction, the absolute and interwave latencies of the individual components are measured in milliseconds. These are assessed for asymmetry (they should agree within 0.4 ms between ears) and compared to normative data.

The pattern of absolute and interwave latencies can characterize different types of auditory dysfunction. Conductive hearing loss will usually demonstrate good wave morphology and normal interwave latencies, but will have a markedly delayed Wave I latency. Sensory or cochlear loss will have poor wave morphology with a weak or small and delayed Wave I; interwave latencies will be normal. Neural hearing loss will show a normal Wave I or overall poor morphology, with delayed interwave latencies, including a delayed Wave I-III latency.

ABR results in infants and children differ from those of adults. The infant waveform shows less well defined morphology than that of the adult. Absolute and interpeak latencies also tend to be prolonged initially but should normalize by 18-24 months in patients with normal auditory development.

Electrocochleography

Electrocochleography (ECoG) can be defined as a method of measuring stimulus-related potentials of the most peripheral portions of the auditory system. The three major components of the ECoG are the cochlear microphonic, the summating potential (SP), and the action potential (AP). The cochlear microphonic and summating potential reflect cochlear bioelectric activity. The cochlear microphonic represents the potassium ion current flow through, mainly, the outer hair cells. As the stereocilia are bent away from the modiolus, the resistance decreases and flow increases. The summating potential is the DC potential recorded in the cochlea in response to sound.
It may represent shifts of the outer hair cells and, to a lesser extent, the inner hair cells. The action potential is generated by synchronous firing of the distal afferent eighth nerve fibers and is equivalent to ABR Wave I. The absolute amplitudes of the SP and AP vary considerably among individuals, so a more common approach is to use the SP/AP amplitude ratio.

The electrode can be placed in the ear canal, on the tympanic membrane, or transtympanically on the promontory. Non-invasive placement of the electrode in the ear canal or on the tympanic membrane is sufficient to record potentials, but these will show greater attenuation than with the transtympanic location. Abnormal SP/AP ratio values are defined as more than 50% for the ear canal location, more than 40% for the tympanic membrane location, and more than 30% for the transtympanic location.

This examination is performed most often for intraoperative monitoring of cochlear and eighth nerve status and in the diagnosis of Meniere’s disease. Transtympanic ECoG has proven itself a reliable test to detect the presence of endolymphatic hydrops. It is theorized that hydrops affects the elasticity of the basilar membrane and contributes to the increased amplitude of the SP relative to that of the AP.

Otoacoustic emissions

Otoacoustic emissions (OAEs) are low energy sounds produced by the cochlea. They are thought to be acoustic byproducts of the outer hair cells, which are thought to underlie the amplification of basilar membrane motion. Clinically, they are most often evoked using transient and distortion product stimulation. The evoking stimulus causes outer hair cell motility, which results in a mechanical wave that travels from the cochlea through the middle ear and tympanic membrane to the ear canal, where it is recorded.

Spontaneous emissions are not present from the cochlea when there is a hearing loss greater than 25 dB.
Unfortunately, they are not present in all normal ears, so they are not the test of choice for clinically assessing cochlear function.

Transient stimuli such as clicks evoke emissions from a large portion of the cochlea. The emissions are then sampled and signal-averaged to extract them from background noise. Alternating samples are stored in one of two memory banks, and the two resulting waveforms are compared. Reproducibility, expressed as a percentage, is the cross correlation between these two waveforms. A reproducibility score of 50% or greater indicates that a response is present. Waveforms may vary significantly between people, but they are highly reproducible within a given individual. When hearing thresholds are better than 35 dB, transient evoked OAEs (TEOAEs) are generally present. The advantages of the TEOAE are that it can separate normal from abnormal ears at 20-30 dB and that it is quick. In infants with clean, dry ears, the specificity is 95%. The main disadvantage is that it fails to extract responses at higher frequencies.

Distortion products are additional tones that are created when two tones, f1 (the lower frequency) and f2 (the higher frequency), are presented simultaneously to a healthy cochlea. The most robust distortion product OAE (DPOAE) occurs at the frequency determined by the equation 2f1-f2. Because of a nonlinear process within the cochlea, the DPOAE assesses the cochlear integrity of the region near f2. When hearing thresholds are better than 50 dB, DPOAEs are generally present. The main advantage is that DPOAEs can recover OAEs above 6000 Hz.

The transmission properties of the middle ear directly influence OAE characteristics. The presence of a middle ear effusion, as in otitis media, affects both forward and backward transmission. Although otitis media often eliminates OAEs, it is possible to record OAEs in some patients with middle ear effusion.
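The 2f1-f2 relation described above for DPOAEs can be worked through numerically. This is a minimal sketch; the function name and the example primary frequencies are hypothetical and are not taken from any particular test protocol.

```python
def dpoae_frequency(f1_hz: float, f2_hz: float) -> float:
    """Frequency of the most robust distortion product, 2*f1 - f2.

    f1 is the lower primary tone and f2 the higher primary tone.
    """
    if not 0 < f1_hz < f2_hz:
        raise ValueError("expected 0 < f1 < f2")
    return 2 * f1_hz - f2_hz


# Hypothetical primaries: the emission appears below both tones,
# while the cochlear region being assessed is the one near f2.
f1, f2 = 4000.0, 4800.0
print(f"f1 = {f1:.0f} Hz, f2 = {f2:.0f} Hz -> DPOAE at {dpoae_frequency(f1, f2):.0f} Hz")
```

The emission is recorded at a frequency lower than either primary tone, which is why the recorded frequency and the cochlear region under test (near f2) are not the same.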
OAE amplitudes increase significantly over the first few days of life, likely as a result of changes in the ear canal and middle ear. Small tympanic membrane perforations will impede forward transmission. With DPOAEs, this can usually be overcome by increasing the stimulus amplitude.

Central Auditory Processing

There is no single accepted definition of Central Auditory Processing (CAP). In its simplest form, it is what we do with what we hear. The Task Force on CAP Consensus Development defines CAP as the auditory system mechanisms and processes responsible for the following behavioral phenomena:

Sound localization
Auditory discrimination
Auditory pattern recognition
Temporal aspects of audition, including temporal resolution and masking
Auditory performance decrements with competing and degraded acoustic signals

Deficiencies in any of these behaviors are considered central auditory processing disorders (CAPD). Results of CAPD testing have revealed clustering of test results and characteristic behaviors into four categories: decoding, tolerance-fading memory, integration, and organization. Each of the four categories has been associated with a specific region of the brain. The Buffalo model of CAPD assessment and management takes into account the classification of CAPD as well as speech language evaluation and academic characteristics.

It is important to understand that no single test is sensitive enough to detect CAPD, especially in children, where the variability of the tests is very wide. Therefore a battery of tests is recommended. In the Buffalo model, the CAP battery always includes the Staggered Spondaic Word (SSW) test, the Phonemic Synthesis (PS) test, a speech-in-noise (SN) test, and the masking level difference (MLD) test. The categories are not mutually exclusive, and most patients will have weaknesses in more than one category.

Decoding

The decoding category is the most common, encompassing 50% of the weaknesses.
There is an impairment or breakdown of auditory processing at the phonemic level. These patients have difficulty accurately and quickly processing what they hear, and therefore they need more time to figure out what they heard. They demonstrate difficulty with reading and speaking skills that depend upon phonics. This is especially noticeable when they read aloud. As children, they will often demonstrate articulation errors with the “r” and “l” sounds. Individuals in the decoding category often compensate adequately if they have exceptional visual memory. The overlap of these deficits has suggested a relationship to the posterior temporal lobe, or Wernicke’s area.

The management strategies center on improvement of phonemic and metaphonological skills. These include Hooked on Phonics and the Phonemic Synthesis Program. In the academic setting, clear, concise, and repeated instructions, outlined objectives, and written instructions will benefit this category.

Tolerance-fading Memory

The second most common category is Tolerance-Fading Memory (TFM). These patients have poor auditory memory and poor figure ground skills, that is, difficulty understanding speech under adverse conditions. They often have problems with expressive language and writing. Individuals experiencing TFM are typically impulsive responders, are easily distracted, and have short attention spans. These behaviors are similar to those seen in patients with ADHD. If ADD or ADHD is suspected, the Auditory Continuous Performance Test (ACPT) can be administered to screen for attention disorders. TFM is linked to Broca’s area of the inferior frontal lobe.

Management strategies focus on improving the signal-to-noise ratio and strengthening short-term memory skills. Improving the signal-to-noise ratio can be accomplished by changing the acoustic environment of the classroom, use of assistive listening devices, and preferential seating.
The use of an FM system has proved to be beneficial to this category. Noise desensitization has also been shown to be beneficial. Other management strategies include the use of earplugs and quiet study areas.

Integration

The Integration category includes those with difficulty integrating auditory information with other functions, such as visual and nonverbal aspects of speech. Type A patterns on the SSW are characteristic of this category. The weakness may be associated with the corpus callosum and the angular gyrus. Individuals are often labeled as dyslexic and are usually very poor readers.

Management strategies focus on improving phonemic and metaphonological skills. A structured phonetic approach to reading is suggested. A word processor with an audio spellcheck can also be useful. Improving the signal-to-noise ratio is often necessary.

Organizational

The least commonly encountered category is the organizational category. It is characterized by reversals and sequencing errors. Individuals with this condition are often disorganized at school and at home. It is most commonly seen secondary to another CAPD category and is rarely found in isolation.

Management strategies focus on improving sequencing skills and organizational habits. Consistent routines at school and home are essential, along with checklists, appointment books, and calendars.

Bibliography

1. Ge X, Shea J. Transtympanic electrocochleography: a 10 year experience. Otology and Neurotology. 2002 Sept; 23(5): 799-805.
2. Hall J, Antonelli P. Assessment of the peripheral and central auditory function. In: Bailey BJ, et al, eds. Head & Neck Surgery - Otolaryngology. Philadelphia: Lippincott, 2001; 1659-1672.
3. Katz J, Stecker N, Henderson D, eds. Central auditory processing: A transdisciplinary view. St. Louis: Mosby. 1992.
4. Katz J, Masters M, Stecker N. Central auditory processing disorders: Mostly management. Needham Heights: Allyn & Bacon. 1998.
5. Robinette M, Glattke T. Otoacoustic emissions: Clinical applications. New York: Thieme. 1997.
6. Willeford J, Burleigh J. Handbook of central auditory processing disorders in children. Orlando: Grune & Stratton. 1985.
7. Wiley T, Fowler C. Acoustic immittance measures in clinical audiology. San Diego: Singular Publishing Group. 1997.