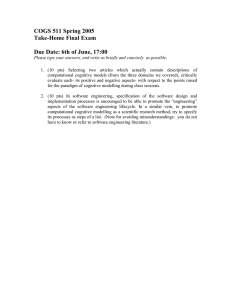

Computation and cognition - Electronics and Computer Science
