On Remote Procedure Call *

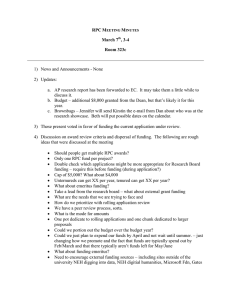
