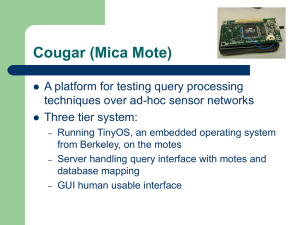

INDIAN INSTITUTE OF TECHNOLOGY KANPUR Design Issues and
