An inequality for the binary skew-symmetric broadcast channel and its implications

Chandra Nair

arXiv:0901.1492v1 [cs.IT] 12 Jan 2009

Department of Information Engineering

Chinese University of Hong Kong

Sha Tin, N.T., Hong Kong

Email: chandra@ie.cuhk.edu.hk

Abstract: We establish an information-theoretic inequality concerning the binary skew-symmetric channel that was conjectured in [1]. This completes the proof that there is a gap between the inner bound [2] and the outer bound [3] for the 2-receiver broadcast channel, and constitutes the first example of a provable gap between the bounds. The interesting aspect of the proof is the technique employed, which is partly motivated by the paper [4].

I. INTRODUCTION

We consider the broadcast channel where a sender $X$ wishes to communicate independent messages $M_1, M_2$ to two receivers $Y_1, Y_2$. The capacity region of the broadcast channel is an open problem, and the best known inner bound (achievable region) is Marton's inner bound, presented below.

Bound 1: [2] The union of rate pairs $(R_1, R_2)$ satisfying

$$
\begin{aligned}
R_1 &\le I(U, W; Y_1) \\
R_2 &\le I(V, W; Y_2) \\
R_1 + R_2 &\le \min\{I(W; Y_1), I(W; Y_2)\} + I(U; Y_1 | W) + I(V; Y_2 | W) - I(U; V | W)
\end{aligned}
$$

over all $(U, V, W) \leftrightarrow X \leftrightarrow (Y_1, Y_2)$ constitutes an inner bound to the capacity region.

Capacity regions have been established for a number of special cases, and in every case where the capacity region is known, the following outer bound and Marton's inner bound yield the same region.

Bound 2: ([2], [5], [3]) The union of rate pairs $(R_1, R_2)$ satisfying

$$
\begin{aligned}
R_1 &\le I(U; Y_1) \\
R_2 &\le I(V; Y_2) \\
R_1 + R_2 &\le I(U; Y_1) + I(V; Y_2 | U) \\
R_1 + R_2 &\le I(V; Y_2) + I(U; Y_1 | V)
\end{aligned}
$$

over all $(U, V) \leftrightarrow X \leftrightarrow (Y_1, Y_2)$ constitutes an outer bound to the capacity region.

There is no example where Bounds 1 and 2 have been explicitly evaluated and shown to be different. The difficulty stems from the need for explicit evaluation of the bounds over all choices of the auxiliary random variables.

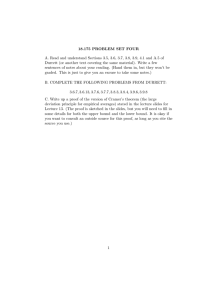

In [3] the authors studied Bound 2 for the binary skewsymmetric channel (Figure 1) and showed that a line-segment

1

2

1

2

0

0

Y

1

X

1

2

1

0

Z

1

2

1

Fig. 1.

Binary Skew Symmetric Channel

of R1 + R2 = 0.3711... lies on the boundary of the outer

bound. Much earlier, in [6] the authors studied the Cover-Vander-Muelen inner bound (same as Bound 1 except (U, V, W )

are restricted to be independent) for the same channel and

showed that a line-segment of R1 + R2 = 0.3616... lies on

the boundary of the bound. In [1] the authors studied that

Marton’s inner bound for the binary skew-symmetric broadcast

channel(BSSC) and showed that, provided an information

inequality (1) holds, a line-segment of R1 + R2 = 0.3616...

lies on the boundary of the Marton’s inner bound as well.

Therefore if (1) holds the maximum sum rate of the region

characterized by the Marton’s inner bound would be 0.3616..,

while the the maximum sum rate of the region characterized

by the bound 2 would be 0.3711... This in particular implies

that the bounds differ for the binary skew-symmetric channel.

It was conjectured in [1] that for the BSSC, shown in Figure 1, we have

$$I(U; Y_1) + I(V; Y_2) - I(U; V) \le \max\{I(X; Y_1), I(X; Y_2)\}, \tag{1}$$

for all $(U, V) \leftrightarrow X \leftrightarrow (Y_1, Y_2)$.

It should be noted that this inequality was established in [6] when $U, V$ were independent, and in [1] for dependent $U, V$ and $P(X = 0) \in [0, \frac{1}{5}] \cup [\frac{4}{5}, 1]$.
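As a numerical sanity check of inequality (1), the following sketch draws random joint distributions of $(U, V, X)$ and evaluates both sides. It is not part of the proof. The transition probabilities are an assumption read off the description of typical sequences in the Appendix ($Y_1$ is uniform when $X = 0$ and equals 1 when $X = 1$; $Y_2$ equals 0 when $X = 0$ and is uniform when $X = 1$), and all function names are hypothetical.

```python
import math
import random

# Assumed BSSC transition probabilities p(y|x), inferred from the Appendix.
P_Y1_GIVEN_X = {0: {0: 0.5, 1: 0.5}, 1: {0: 0.0, 1: 1.0}}
P_Y2_GIVEN_X = {0: {0: 1.0, 1: 0.0}, 1: {0: 0.5, 1: 0.5}}


def mutual_information(joint):
    """I(A;B) in bits for a joint pmf given as {(a, b): probability}."""
    pa, pb = {}, {}
    for (a, b), p in joint.items():
        pa[a] = pa.get(a, 0.0) + p
        pb[b] = pb.get(b, 0.0) + p
    return sum(p * math.log2(p / (pa[a] * pb[b]))
               for (a, b), p in joint.items() if p > 0)


def through_channel(joint_ax, channel):
    """Joint pmf of (A, Y) from a joint pmf of (A, X) and a channel p(y|x)."""
    out = {}
    for (a, x), p in joint_ax.items():
        for y, q in channel[x].items():
            out[(a, y)] = out.get((a, y), 0.0) + p * q
    return out


def marginal(joint, keep):
    """Marginalise a pmf over tuples, keeping the coordinates listed in keep."""
    out = {}
    for key, p in joint.items():
        sub = tuple(key[i] for i in keep)
        out[sub] = out.get(sub, 0.0) + p
    return out


def random_uvx(rng, nu=2, nv=2):
    """Random joint pmf of (U, V, X) as {(u, v, x): probability}."""
    weights = {(u, v, x): rng.random()
               for u in range(nu) for v in range(nv) for x in (0, 1)}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}


def gap(p_uvx):
    """Right-hand side of (1) minus its left-hand side."""
    p_ux = marginal(p_uvx, (0, 2))
    p_vx = marginal(p_uvx, (1, 2))
    p_xx = {(x, x): p for (x,), p in marginal(p_uvx, (2,)).items()}
    lhs = (mutual_information(through_channel(p_ux, P_Y1_GIVEN_X))
           + mutual_information(through_channel(p_vx, P_Y2_GIVEN_X))
           - mutual_information(marginal(p_uvx, (0, 1))))
    rhs = max(mutual_information(through_channel(p_xx, P_Y1_GIVEN_X)),
              mutual_information(through_channel(p_xx, P_Y2_GIVEN_X)))
    return rhs - lhs


if __name__ == "__main__":
    rng = random.Random(0)
    print(min(gap(random_uvx(rng)) for _ in range(1000)))
```

Because the right-hand side of (1) is a maximum over the two receivers, the check does not depend on which receiver is labelled $Y_1$. A non-negative minimum gap over random draws is consistent with (1) but is of course no substitute for the proof.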

In this paper we establish the conjectured inequality in totality, i.e. we establish that inequality (1) holds for the binary skew-symmetric channel. Therefore, from [1], this establishes that the regions characterized by Bounds 1 and 2 differ for the broadcast channel. This calculation is straightforward and we do not repeat the arguments from [1].

Remark 1: It may be worthwhile to note that the method used here differs vastly from the previous approaches in [6], [1]. It was indeed the inability of the author to easily extend any of the existing methods that necessitated the approach adopted in this paper.

Remark 2: While circulating this manuscript the author was informed that Gohari and Anantharam have also established this inequality (under an assumption that Marton's inner bound matches the capacity region). Their communication suggested that the technique used was the following: they showed that one can restrict the cardinalities of $U$ and $V$ to be binary and then carried out numerical simulations to verify this inequality. It is not known whether Marton's inner bound matches the capacity region for the BSSC; however, it is very likely that such is the case.

In [4] the authors compared the sizes of the images of a set via two channels and used this to establish the capacity region of a broadcast channel with degraded message sets. In this paper we compare the sizes of the intersection of the images of two codewords via the two channels in the BSSC to obtain a necessary ingredient in the proof. The proof is unusual and may have deeper implications that are yet to be explored.

The outline of the proof is as follows:

(i) Using the random coding strategy developed by Marton, we will show that for any broadcast channel there exists a sequence of $(2^{nR_1} \times 2^{nR_2})$-codebooks for the broadcast channel with $R_1 = I(U; Y_1) - \epsilon$, $R_2 = I(V; Y_2) - I(U; V) - \epsilon$ (for any $\epsilon > 0$), such that the codebook satisfies two properties A and B (to be defined). Properties A and B are related to the size of decoding error events.

(ii) Separately, we will show that for any broadcast channel, if a $(2^{nR_1} \times 2^{nR_2})$-codebook satisfies the two Properties A and C, then the codebook can also be considered as a single $(2^{n(R_1 + R_2)})$-codebook for the channel $X \leftrightarrow Y_1$ whose average probability of error tends to zero. That is, the receiver $Y_1$ can distinguish both the messages $M_1$ and $M_2$ with high probability. This implies, from the converse of the channel coding theorem (or the maximal code lemma in [4]), that $R_1 + R_2 \le I(X; Y_1) + \delta$ for any $\delta > 0$ (for $n$ sufficiently large), where $p(x)$ is the empirical distribution of the code.

(iii) For the BSSC we will show that Property B implies Property C (when $P(X = 0) < \frac{1}{2}$), for $n$ sufficiently large, and hence combining the earlier two results (and letting $\epsilon, \delta \to 0$) we will obtain

$$I(U; Y_1) + I(V; Y_2) - I(U; V) \le I(X; Y_1).$$

II. PRELIMINARIES

Consider a codebook $\{x^n(i, j)\}$, $i = 1, ..., M$; $j = 1, ..., N$ for the broadcast channel $X \to (Y_1, Y_2)$.

We define the following sets of sequences in $\mathcal{Y}_1^n$.

• $C_{(i,j)}$: The set of output sequences $y_1^n$ that are typical with respect to $x^n(i, j)$.

• $C_i$: The set of output sequences $y_1^n$ that are typical with at least one of $x^n(i, 1), \ldots, x^n(i, N)$, i.e. $C_i = \cup_{j=1}^{N} C_{(i,j)}$.

Similarly, we define the corresponding sets in $\mathcal{Y}_2^n$ as follows:

• $D_{(i,j)}$: The set of output sequences $y_2^n$ that are typical with respect to $x^n(i, j)$.

• $D_j$: The set of output sequences $y_2^n$ that are typical with at least one of $x^n(1, j), \ldots, x^n(M, j)$, i.e. $D_j = \cup_{i=1}^{M} D_{(i,j)}$.

Remark 3: We use the definition of typical output sequences as found in [4]. An output sequence $y^n$ is deemed typical with respect to a transmitted sequence $x^n$ over a channel characterized by the transition probability $p(y|x)$ if the following holds:

$$|N(a, b) - N(a) p(b|a)| \le \omega_n, \quad \forall a \in \mathcal{X}, b \in \mathcal{Y},$$

where $N(a, b)$ counts the number of locations $i$ with $x(i) = a$, $y(i) = b$, and $N(a)$ counts the number of locations $i$ with $x(i) = a$. Further, $\omega_n$ is a fixed sequence that satisfies $\frac{\omega_n}{n} \to 0$ and $\frac{\omega_n}{\sqrt{n}} \to \infty$ as $n \to \infty$. When $p(b|a) = 0$, one can also require that $N(a, b) = 0$ for $y^n$ to be considered typical w.r.t. $x^n$.
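The typicality test of Remark 3 can be sketched in a few lines. The function name `is_typical`, the dictionary encoding `channel[a][b] = p(b|a)` and the choice $\omega_n = n^{3/4}$ are illustrative assumptions (any sequence with $\omega_n / n \to 0$ and $\omega_n / \sqrt{n} \to \infty$ would do); the example channel is the $X \to Y_1$ component as described in the Appendix.

```python
from collections import Counter


def is_typical(x, y, channel, omega_n):
    """Check whether output y is typical w.r.t. input x in the sense of Remark 3.

    channel[a][b] holds p(b|a). The test requires
    |N(a,b) - N(a) p(b|a)| <= omega_n for every pair (a, b),
    and additionally N(a,b) = 0 whenever p(b|a) = 0.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n_a = Counter(x)            # N(a): occurrences of input symbol a
    n_ab = Counter(zip(x, y))   # N(a,b): joint occurrences of (a, b)
    for a, row in channel.items():
        for b, p in row.items():
            count = n_ab[(a, b)]
            if p == 0 and count > 0:
                return False
            if abs(count - n_a[a] * p) > omega_n:
                return False
    return True


def omega(n):
    """Hypothetical choice of omega_n: grows faster than sqrt(n), slower than n."""
    return n ** 0.75


# Assumed X -> Y1 component of the BSSC: uniform output for X=0, output 1 for X=1.
P_Y1_GIVEN_X = {0: {0: 0.5, 1: 0.5}, 1: {0: 0.0, 1: 1.0}}

if __name__ == "__main__":
    x = [0] * 8 + [1] * 8
    y = [0, 1] * 4 + [1] * 8
    print(is_typical(x, y, P_Y1_GIVEN_X, omega(len(x))))
```

The sets $C_{(i,j)}$ and $D_{(i,j)}$ defined above are exactly the sets of outputs for which such a test succeeds against the codeword $x^n(i, j)$ on the respective channel.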

We now define the Properties A, B, and C that we referred to in the outline.

Definition 1: A codebook is said to have Property A if the following condition holds. Let $\epsilon > 0$ be an arbitrary given number. Then, we require

$$\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \sum_{i_1 \ne i} P(y_1^n \in C_{i_1} \cap C_i \mid x^n(i, j) \text{ is transmitted}) < \epsilon.$$

Definition 2: A codebook is said to have Property B if the following condition holds. Let $\epsilon > 0$ be an arbitrary given number. Then, we require

$$\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \sum_{j_1 \ne j} P(y_2^n \in D_{(i,j_1)} \cap D_{(i,j)} \mid x^n(i, j) \text{ is transmitted}) < \epsilon. \tag{2}$$

Definition 3: A codebook is said to have Property C if the following condition holds. Let $\epsilon > 0$ be an arbitrary given number. Then, we require

$$\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \sum_{j_1 \ne j} P(y_1^n \in C_{(i,j_1)} \cap C_{(i,j)} \mid x^n(i, j) \text{ is transmitted}) < \epsilon.$$

The motivation for defining these properties will become clear later. However, the following relation is also worth mentioning. Observe that the average probability of error for receiver 1 is related to the size of

$$\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} P\Big(y_1^n \in \bigcup_{i_1 \ne i} C_{i_1} \cap C_i \,\Big|\, x^n(i, j) \text{ is transmitted}\Big).$$

In traditional random-coding arguments the union bound (i.e. replacing the union with a sum) is used to limit the size of this error event. Property A subsumes the union bound in its very definition, and hence random coding arguments will suffice to show the existence of codes satisfying Properties A and B.

III. MAIN

We restate the main result.

Theorem 1: For the binary skew-symmetric broadcast channel shown in Figure 1, when $P(X = 0) \le \frac{1}{2}$, we have

$$I(U; Y_1) + I(V; Y_2) - I(U; V) \le I(X; Y_1), \tag{3}$$

for all $(U, V) \leftrightarrow X \leftrightarrow (Y_1, Y_2)$.

The result for $P(X = 0) \ge \frac{1}{2}$ follows by symmetry and interchanging the roles of receivers $Y_1$ and $Y_2$. (Note that $P(X = 0) \le \frac{1}{2}$ corresponds to the region where $I(X; Y_1) \ge I(X; Y_2)$.)
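The parenthetical note can be checked from closed forms. Under the channel description used in the Appendix (an assumption here: $Y_1$ is uniform when $X = 0$ and equals 1 when $X = 1$, and the reverse roles hold for $Y_2$), writing $a = P(X = 0)$ gives $I(X; Y_1) = H(a/2) - a$ and $I(X; Y_2) = H((1-a)/2) - (1-a)$, where $H$ is the binary entropy function. The sketch below, with hypothetical function names, tabulates both quantities.

```python
import math


def binary_entropy(p):
    """Binary entropy H(p) in bits, with H(0) = H(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bssc_mutual_informations(a):
    """Return (I(X;Y1), I(X;Y2)) in bits when P(X=0) = a, for the assumed BSSC."""
    # Y1: P(Y1=0) = a/2 and H(Y1|X) = a (one full bit of noise whenever X=0).
    i1 = binary_entropy(a / 2) - a
    # Y2: P(Y2=1) = (1-a)/2 and H(Y2|X) = 1-a (noise whenever X=1).
    i2 = binary_entropy((1 - a) / 2) - (1 - a)
    return i1, i2


if __name__ == "__main__":
    for k in range(0, 11):
        a = k / 10
        i1, i2 = bssc_mutual_informations(a)
        print(f"a={a:.1f}  I(X;Y1)={i1:.4f}  I(X;Y2)={i2:.4f}")
```

The two expressions are mirror images under $a \mapsto 1 - a$, which is the symmetry invoked for the case $P(X = 0) \ge \frac{1}{2}$.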

Before we proceed to the proof, we present the key lemma that is particular to the binary skew-symmetric broadcast channel. (This corresponds to step (iii) in the outline of the proof.)

Lemma 1: For any two sequences $x^n(i, j)$ and $x^n(i_1, j_1)$ that belong to the same type with $P(X = 0) < \frac{1}{2}$, and $n$ sufficiently large,

$$P(y_1^n \in C_{(i_1,j_1)} \cap C_{(i,j)} \mid x^n(i, j) \text{ is transmitted}) \le P(y_2^n \in D_{(i_1,j_1)} \cap D_{(i,j)} \mid x^n(i, j) \text{ is transmitted}).$$

Proof: The proof of this comparison lemma is provided in the Appendix.

Corollary 1: If a codebook for the BSSC, with the empirical distribution $P(X = 0) < \frac{1}{2}$, satisfies Property B then the codebook also satisfies Property C.

Proof: The proof follows from Lemma 1 and the definitions of Properties B and C.

A. Revisiting Marton's coding scheme

The first step of the proof of Theorem 1 is to show the existence of a codebook with $R_1 = I(U; Y_1) - \epsilon$ and $R_2 = I(V; Y_2) - I(U; V) - \epsilon$ that satisfies Properties A and B. Consider the random coding scheme involving random binning proposed by Marton [2], which achieves the corner point $R_1 = I(U; Y_1) - \epsilon$ and $R_2 = I(V; Y_2) - I(U; V) - \epsilon$.

Let us recall the code-generation strategy. Given a $p(u, v)$ one generates $2^{nR_1}$ sequences $U^n$ i.i.d. according to $\prod_i p(u_i)$, and independently generates $2^{nT_2}$ sequences $V^n$ i.i.d. according to $\prod_i p(v_i)$. Here one can assume $T_2 = I(V; Y_2) - 0.5\epsilon$. The sequences $V^n$ are randomly and uniformly binned into $2^{nR_2}$ bins. Each bin therefore contains roughly $2^{n(I(U;V) + 0.5\epsilon)}$ sequences $V^n$. For every pair $(i, j)$ we select a sequence $V^n$ from the bin numbered $j$ that is jointly typical with $U^n(i)$ (declare an error if no such $V^n$ exists) and generate a sequence $X^n(i, j)$ according to $\prod_i p(x_i | u_i, v_i)$.

Marton [2] showed that with high probability (see Footnote 1) there will be at least one sequence $V^n$ from the bin numbered $j$ that is jointly typical with $U^n(i)$, and further that the triple $(U^n(i), V^n(j), X^n(i, j))$ is jointly typical. Here the probability is over the uniformly random choice of the pair $(i, j)$ as well as over the choice of the codebooks.

Footnote 1: An event $E_n$ is said to have high probability if $P(E_n) \ge 1 - a_n$ where $a_n \to 0$ as $n \to \infty$.

Let us now compute the probability of events (averaged over the choice of the codebooks) corresponding to Properties A and B respectively.

Since $x^n(i_1, j_1)$ is jointly typical with $u^n(i_1)$ with high probability and $Y_1 \leftrightarrow X \leftrightarrow U$ is a Markov chain, it follows (from the strong Markov property) that if $y_1^n$ is jointly typical with $x^n(i_1, j_1)$ then $y_1^n$ is also jointly typical with $u^n(i_1)$. Therefore (ignoring the error events already considered by Marton [2], like atypical sequences being generated, bins $j$ having no $V^n(k)$ jointly typical with a $U^n(i)$, etc.)

$$
\begin{aligned}
P(y_1^n \in C_{i_1} \cap C_i,\ x^n(i, j) \text{ is transmitted})
&\le P(y_1^n \text{ jointly typ. with } u^n(i_1),\ x^n(i, j) \text{ is transmitted}) \\
&\le \frac{1}{MN} 2^{-n(I(U;Y_1) - 0.5\epsilon)}.
\end{aligned}
$$

Since $u^n(i_1)$ is generated independent of $x^n(i, j)$ (when $i_1 \ne i$) we see that the probability

$$\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \sum_{i_1 \ne i} P(y_1^n \in C_{i_1} \cap C_i \mid x^n(i, j) \text{ is transmitted}) \le 2^{nR_1} 2^{-n(I(U;Y_1) - 0.5\epsilon)} \le 2^{-n 0.5 \epsilon}.$$

Therefore Property A is satisfied with high probability over the choice of the codebooks.

Similar reasoning (again ignoring the events already considered by Marton [2]) implies that

$$P(y_2^n \in D_{(i,j_1)} \cap D_{(i,j)},\ x^n(i, j) \text{ is transmitted}) \le P(y_2^n \text{ jointly typ. with } v^n(k),\ x^n(i, j) \text{ is transmitted}).$$

Here $v^n(k)$ refers to the $v^n$ sequence (belonging to bin $j_1$) that was used in the generation of $x^n(i, j_1)$. Let $v^n(1)$ be the $v^n$ sequence used for $x^n(i, j)$. Since $v^n(k)$, $k \ne 1$, is generated independent of $x^n(i, j)$ we see that

$$
\begin{aligned}
\sum_{k \ne 1} P(y_2^n \text{ jointly typ. with } v^n(k),\ x^n(i, j) \text{ is transmitted})
&\le \frac{1}{MN} 2^{n(I(V;Y_2) - 0.5\epsilon)} 2^{-n(I(V;Y_2) - 0.25\epsilon)} \\
&\le \frac{1}{MN} 2^{-n 0.25 \epsilon}.
\end{aligned}
$$

This implies

$$\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \sum_{j_1 \ne j} P(y_2^n \in D_{(i,j_1)} \cap D_{(i,j)} \mid x^n(i, j) \text{ is transmitted}) < \epsilon.$$

Therefore Property B is satisfied with high probability over the choice of the codebooks. Since the intersection of two events of high probability is also an event of high probability, Properties A and B are simultaneously satisfied with high probability over the choice of the codebooks.

Therefore, there exists at least one codebook with rates $R_1 = I(U; Y_1) - \epsilon$ and $R_2 = I(V; Y_2) - I(U; V) - \epsilon$ that satisfies both Properties A and B.
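The rate bookkeeping in this subsection reduces to simple arithmetic on exponents, which the following sketch makes explicit. The function name and the sample mutual-information values are purely illustrative assumptions, not values taken from the paper.

```python
def marton_exponents(i_u_y1, i_v_y2, i_u_v, eps):
    """Exponents (per channel use, in bits) appearing in Section III-A.

    Inputs are I(U;Y1), I(V;Y2), I(U;V) and the slack eps > 0.
    """
    r1 = i_u_y1 - eps                 # R1 = I(U;Y1) - eps
    r2 = i_v_y2 - i_u_v - eps         # R2 = I(V;Y2) - I(U;V) - eps
    t2 = i_v_y2 - 0.5 * eps           # T2 = I(V;Y2) - 0.5 eps
    return {
        "R1": r1,
        "R2": r2,
        "T2": t2,
        # 2^{nT2} sequences spread over 2^{nR2} bins.
        "bin_size_exponent": t2 - r2,
        # 2^{nR1} * 2^{-n(I(U;Y1) - 0.5 eps)} from the Property A bound.
        "property_a_exponent": r1 - (i_u_y1 - 0.5 * eps),
        # 2^{n(I(V;Y2) - 0.5 eps)} * 2^{-n(I(V;Y2) - 0.25 eps)} from the Property B bound.
        "property_b_exponent": t2 - (i_v_y2 - 0.25 * eps),
    }


if __name__ == "__main__":
    # Illustrative numbers only.
    for name, value in marton_exponents(0.30, 0.25, 0.10, 0.02).items():
        print(f"{name}: {value:+.4f}")
```

The bin-size exponent comes out as $I(U; V) + 0.5\epsilon$, and the two error exponents come out as $-0.5\epsilon$ and $-0.25\epsilon$, so both bounds decay exponentially in $n$ for any fixed $\epsilon > 0$.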

B. Converting a broadcast channel codebook to a single receiver codebook

The second step of the proof of Theorem 1 is to show that if a codebook satisfies Properties A and C (defined in Section II), then receiver $Y_1$ can decode both the messages $(M_1, M_2)$ with very small average probability of error.

Claim 1: Suppose a codebook satisfies Properties A and C, i.e.

$$\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \sum_{i_1 \ne i} P(y_1^n \in C_{i_1} \cap C_i \mid x^n(i, j) \text{ is transmitted}) < \epsilon,$$

$$\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \sum_{j_1 \ne j} P(y_1^n \in C_{(i,j_1)} \cap C_{(i,j)} \mid x^n(i, j) \text{ is transmitted}) < \epsilon,$$

then there exists a decoding map $(\hat{M}_1, \hat{M}_2)$ for receiver $Y_1$ such that the average probability of error is smaller than $3\epsilon$.

Proof: Consider the following decoding map. If $y_1^n \in F_{(i,j)} := C_i \setminus (E_i \cup E_{(i,j)})$, where $E_i = \bigcup_{i_1 \ne i} C_i \cap C_{i_1}$ and $E_{(i,j)} = \bigcup_{j_1 \ne j} C_{(i,j)} \cap C_{(i,j_1)}$, then set $(\hat{M}_1 = i, \hat{M}_2 = j)$. It is straightforward to check that the sets $F_{(i,j)}$ are disjoint.

The average probability of decoding error is

$$
\begin{aligned}
&\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} P(y_1^n \notin F_{(i,j)} \mid x^n(i, j) \text{ is transmitted}) \\
&\quad = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \Big( P(y_1^n \notin C_i \mid x^n(i, j) \text{ is transmitted}) + P(y_1^n \in E_i \cup E_{(i,j)} \mid x^n(i, j) \text{ is transmitted}) \Big) \\
&\quad \le \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \Big( \epsilon + P(y_1^n \in E_i \mid x^n(i, j) \text{ is transmitted}) + P(y_1^n \in E_{(i,j)} \mid x^n(i, j) \text{ is transmitted}) \Big) \\
&\quad \le \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \Big( \epsilon + \sum_{i_1 \ne i} P(y_1^n \in C_{i_1} \cap C_i \mid x^n(i, j) \text{ is transmitted}) + \sum_{j_1 \ne j} P(y_1^n \in C_{(i,j_1)} \cap C_{(i,j)} \mid x^n(i, j) \text{ is transmitted}) \Big) \\
&\quad \le 3\epsilon.
\end{aligned}
$$

Therefore any $(R_1, R_2)$-codebook for the broadcast channel $X \to (Y_1, Y_2)$ satisfying Properties A and C forms an $(R_1 + R_2)$-codebook for the channel $X \to Y_1$.

From Corollary 1 we know that if Property B is satisfied and $P(X = 0) < \frac{1}{2}$, then Property C is satisfied. In Section III-A we have shown the existence of a codebook with rates $R_1 = I(U; Y_1) - \epsilon$ and $R_2 = I(V; Y_2) - I(U; V) - \epsilon$ that satisfies both Properties A and B. Therefore, from Claim 1 and Corollary 1, we have a rate $R = R_1 + R_2 = I(U; Y_1) + I(V; Y_2) - I(U; V) - 2\epsilon$ code for the channel $X \to Y_1$. Clearly, from the converse of the channel coding theorem, we require $R \le I(X; Y_1)$. Combining the above two results we have the inequality (letting $\epsilon \to 0$)

$$I(U; Y_1) + I(V; Y_2) - I(U; V) \le I(X; Y_1), \quad \text{when } P(X = 0) < \tfrac{1}{2}. \tag{4}$$

By symmetry in the channel and interchanging the roles of $Y_1$ and $Y_2$, we also obtain the result

$$I(U; Y_1) + I(V; Y_2) - I(U; V) \le I(X; Y_2), \tag{5}$$

when $P(X = 0) > \frac{1}{2}$. The result for $P(X = 0) = \frac{1}{2}$ follows from continuity.

This completes the proof of Theorem 1.

IV. DISCUSSION

The proof of the inequality presented in this paper completes the argument in [1] and shows that the regions obtained by Bounds 1 and 2 are indeed different for the binary skew-symmetric channel.

Interestingly, this inequality also establishes that Marton's inner bound for the BSSC without the random variable $W$ reduces to the time-division region.

The capacity region of the BSSC remains unknown. It is natural to believe at first sight that time-division is optimal for the BSSC. However, as was shown in [6], one can improve on the time-division region using the "common information" random variable $W$. In [3] it was shown that this improved region can be re-interpreted as a randomized time-division strategy, thus reaffirming the belief that some form of time-division is optimal for the BSSC. The comparison property (Lemma 1) seems to give a tool to quantify our intuition in a mathematical framework.

It would be very interesting to see if similar reasoning can be used to derive an outer bound for the BSSC that matches Marton's inner bound (at least in the sum rate). Essentially, this paper proves that the rates of any codebook for the BSSC that satisfies Properties A and B must lie within the time-division region. Marton's random coding scheme with $U, V$ is just one way to obtain such a codebook, while the codebook obtained by Marton's coding scheme with $U, V, W$ does not satisfy Properties A and B simultaneously.

It would also be interesting to obtain a routine proof of this inequality. Hopefully a routine proof may lead to a better understanding of the types of information inequalities possible under a specific channel coding setting. (See Remark 2 on a new development along these lines.)

ACKNOWLEDGEMENT

The author is grateful to Vincent Wang for extensive numerical simulations that he performed which formed the basis of the conjecture in [1]. The author is also grateful for several discussions he had with Profs. Bruce Hajek and Abbas El Gamal.

REFERENCES

[1] C. Nair and V. W. Zizhou, "On the inner and outer bounds for 2-receiver discrete memoryless broadcast channels," Proceedings of the ITA Workshop, 2008, cs.IT/0804.3825.

[2] K. Marton, "A coding theorem for the discrete memoryless broadcast channel," IEEE Trans. Info. Theory, vol. IT-25, pp. 306–311, May, 1979.

[3] C. Nair and A. El Gamal, "An outer bound to the capacity region of the broadcast channel," IEEE Trans. Info. Theory, vol. IT-53, pp. 350–355, January, 2007.

[4] J. Körner and K. Marton, "Images of a set via two channels and their role in multi-user communication," IEEE Trans. Info. Theory, vol. IT-23, pp. 751–761, Nov, 1977.

[5] A. El Gamal, "The capacity of a class of broadcast channels," IEEE Trans. Info. Theory, vol. IT-25, pp. 166–169, March, 1979.

[6] B. Hajek and M. Pursley, "Evaluation of an achievable rate region for the broadcast channel," IEEE Trans. Info. Theory, vol. IT-25, pp. 36–46, January, 1979.

APPENDIX

We recall Lemma 1. Consider two sequences $x^n(1)$ and $x^n(2)$ that belong to the same type $(a, 1 - a)$, where $a = \frac{m}{n}$ for some $0 \le a < \frac{1}{2}$. Let $A$ represent the intersection of the typical sequences of $x^n(1)$ and $x^n(2)$ in the space $\mathcal{Y}_1^n$, and let $B$ represent the intersection of the typical sequences of $x^n(1)$ and $x^n(2)$ in the space $\mathcal{Y}_2^n$. Then Lemma 1 states that

$$P(y_1^n \in A \mid x^n(1) \text{ is transmitted}) \le P(y_2^n \in B \mid x^n(1) \text{ is transmitted}).$$

Proof: Let $k = nb$ be the number of locations $i$ where $x_i(1) = 0$ and $x_i(2) = 1$. The typical sequences in $A$ are those sequences that have $n\frac{a}{2} \pm \omega_n$ zeros in the $n(a - b)$ locations where $x_i(1) = x_i(2) = 0$, and ones everywhere else. The typical sequences in $B$ are those sequences that have $n\frac{1-a}{2} \pm \omega_n$ ones in the $n(1 - a - b)$ locations where $x_i(1) = x_i(2) = 1$, and zeros everywhere else. (Recall $\omega_n$ from the definition of typical sequences in Remark 3.)

The number of sequences in $A$ is $\sum_{\ell = -\omega_n}^{+\omega_n} \binom{n(a-b)}{n\frac{a}{2} + \ell}$ and the number of sequences in $B$ is $\sum_{\ell = -\omega_n}^{+\omega_n} \binom{n(1-a-b)}{n\frac{1-a}{2} + \ell}$. Note that when $b > \frac{a}{2}$ the set $A$ is essentially empty (by essentially empty we mean that its size is sub-exponential). Therefore the lemma is trivially valid when $b > \frac{a}{2}$. The interesting case occurs when $0 \le b \le \frac{a}{2}$.

The probability of obtaining any sequence in $A$ is $2^{-na + o(n)}$ and the probability of obtaining any sequence in $B$ is $2^{-n(1-a) + o(n)}$, when $x^n(1)$ is transmitted. Therefore,

$$P(y_1^n \in A \mid x^n(1)) = 2^{n(a-b)H\left(\frac{a}{2(a-b)}\right) - na + o(n)},$$

$$P(y_2^n \in B \mid x^n(1)) = 2^{n(1-a-b)H\left(\frac{1-a}{2(1-a-b)}\right) - n(1-a) + o(n)}.$$

Thus, for large $n$, it suffices to show that for $0 \le b \le \frac{a}{2} \le \frac{1}{4}$,

$$(a - b)H\left(\frac{a}{2(a - b)}\right) - a \le (1 - a - b)H\left(\frac{1 - a}{2(1 - a - b)}\right) - (1 - a),$$

or rewriting, one needs to establish

$$1 - 2a \le (1 - a - b)H\left(\frac{1 - a}{2(1 - a - b)}\right) - (a - b)H\left(\frac{a}{2(a - b)}\right). \tag{6}$$

Observe that when $b = 0$ we have an equality; therefore it suffices to show that the right hand side is an increasing function of $b$ for $0 \le b \le \frac{a}{2}$.

Define the function $g(x, b) = (x - b)H\left(\frac{x}{2(x - b)}\right)$. Then

$$\frac{\partial}{\partial b} g(x, b) = \log_2 \frac{x - 2b}{2(x - b)} = \log_2\left(1 - \frac{x}{2(x - b)}\right).$$

Since $\frac{\partial}{\partial b} g(x, b)$ is an increasing function of $x$ we have

$$\frac{\partial}{\partial b} g(1 - a, b) \ge \frac{\partial}{\partial b} g(a, b), \quad 0 \le a \le \frac{1}{2},$$

and hence the right hand side of (6), which equals $g(1 - a, b) - g(a, b)$, is an increasing function of $b$. This proves Equation (6) and completes the proof of Lemma 1.
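Inequality (6) and the derivative formula for $g$ can be sanity-checked numerically. The sketch below evaluates the right hand side of (6) on a grid of $(a, b)$ with $0 \le b \le a/2 \le 1/4$ and compares the closed-form derivative with a central finite difference. The function names and the grid resolution are illustrative assumptions; the check complements the analytic argument and does not replace it.

```python
import math


def binary_entropy(p):
    """Binary entropy H(p) in bits, with H(0) = H(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def g(x, b):
    """g(x, b) = (x - b) H(x / (2 (x - b))), taken as 0 when x - b <= 0."""
    if x - b <= 0:
        return 0.0
    return (x - b) * binary_entropy(x / (2 * (x - b)))


def rhs_of_6(a, b):
    """Right hand side of (6): g(1 - a, b) - g(a, b)."""
    return g(1 - a, b) - g(a, b)


def dg_db(x, b):
    """Closed-form partial derivative of g in b, valid for 0 <= b < x / 2."""
    return math.log2((x - 2 * b) / (2 * (x - b)))


def min_slack(steps_a=25, steps_b=20):
    """Smallest value of rhs_of_6(a, b) - (1 - 2a) over the grid."""
    worst = float("inf")
    for i in range(1, steps_a + 1):
        a = 0.5 * i / steps_a
        for j in range(steps_b + 1):
            b = 0.5 * a * j / steps_b
            worst = min(worst, rhs_of_6(a, b) - (1 - 2 * a))
    return worst


if __name__ == "__main__":
    print("minimum slack in (6) on the grid:", min_slack())
    x, b, h = 0.3, 0.05, 1e-6
    numeric = (g(x, b + h) - g(x, b - h)) / (2 * h)
    print("finite difference:", numeric, "closed form:", dg_db(x, b))
```

On the grid the slack is smallest at $b = 0$, where (6) holds with equality, and it grows with $b$, in line with the monotonicity argument above.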