On the adaptive elastic-net with a diverging number of parameters


The Annals of Statistics

2009, Vol. 37, No. 4, 1733–1751

DOI: 10.1214/08-AOS625

© Institute of Mathematical Statistics, 2009

ON THE ADAPTIVE ELASTIC-NET WITH A DIVERGING NUMBER OF PARAMETERS

BY HUI ZOU1 AND HAO HELEN ZHANG2

University of Minnesota and North Carolina State University

We consider the problem of model selection and estimation in situations where the number of parameters diverges with the sample size. When the dimension is high, an ideal method should have the oracle property [J. Amer. Statist. Assoc. 96 (2001) 1348–1360] and [Ann. Statist. 32 (2004) 928–961] which ensures the optimal large sample performance. Furthermore, the high dimensionality often induces the collinearity problem, which should be properly handled by the ideal method. Many existing variable selection methods fail to achieve both goals simultaneously. In this paper, we propose the adaptive elastic-net that combines the strengths of the quadratic regularization and the adaptively weighted lasso shrinkage. Under weak regularity conditions, we establish the oracle property of the adaptive elastic-net. We show by simulations that the adaptive elastic-net deals with the collinearity problem better than the other oracle-like methods, thus enjoying much improved finite sample performance.

1. Introduction.

1.1. Background. Consider the problem of model selection and estimation in the classical linear regression model

$$ y = X\beta^* + \varepsilon, \tag{1.1} $$

where $y = (y_1, \ldots, y_n)^T$ is the response vector and $x_j = (x_{1j}, \ldots, x_{nj})^T$, $j = 1, \ldots, p$, are the linearly independent predictors. Let $X = [x_1, \ldots, x_p]$ be the predictor matrix. Without loss of generality, we assume the data are centered, so the intercept is not included in the regression function. Throughout this paper, we assume the errors are independent and identically distributed with zero mean and finite variance $\sigma^2$. We are interested in the sparse modeling problem where the true model has a sparse representation (i.e., some components of $\beta^*$ are exactly zero). Let $A = \{j : \beta_j^* \neq 0, j = 1, 2, \ldots, p\}$. In this work, we call the size of $A$ the intrinsic dimension of the underlying model. We wish to discover the set $A$ and estimate the corresponding coefficients.

Received December 2007; revised March 2008.

1 Supported by NSF Grant DMS-07-06733.

2 Supported by NSF Grant DMS-06-45293 and National Institutes of Health Grant NIH/NCI R01 CA-085848.

AMS 2000 subject classifications. Primary 62J05; secondary 62J07.

Key words and phrases. Adaptive regularization, elastic-net, high dimensionality, model selection, oracle property, shrinkage methods.

Variable selection is fundamentally important for knowledge discovery with

high-dimensional data [Fan and Li (2006)] and it could greatly enhance the prediction performance of the fitted model. Traditional model selection procedures

follow best-subset selection and its step-wise variants. However, best-subset selection is computationally prohibitive when the number of predictors is large. Furthermore, as analyzed by Breiman (1996), subset selection is unstable; thus, the

resulting model has poor prediction accuracy. To overcome the fundamental drawbacks of subset selection, statisticians have recently proposed various penalization

methods to perform simultaneous model selection and estimation. In particular,

the lasso [Tibshirani (1996)] and the SCAD [Fan and Li (2001)] are two very popular methods due to their good computational and statistical properties. Efron et

al. (2004) proposed the LARS algorithm for computing the entire lasso solution

path. Knight and Fu (2000) studied the asymptotic properties of the lasso. Fan and

Li (2001) showed that the SCAD enjoys the oracle property, that is, the SCAD

estimator can perform as well as the oracle if the penalization parameter is appropriately chosen.

1.2. Two fundamental issues with the $\ell_1$ penalty. The lasso estimator [Tibshirani (1996)] is obtained by solving the $\ell_1$ penalized least squares problem

$$ \hat\beta(\mathrm{lasso}) = \arg\min_{\beta} \|y - X\beta\|_2^2 + \lambda\|\beta\|_1, \tag{1.2} $$

where $\|\beta\|_1 = \sum_{j=1}^p |\beta_j|$ is the $\ell_1$-norm of $\beta$. The $\ell_1$ penalty enables the lasso to simultaneously regularize the least squares fit and shrink some components of $\hat\beta(\mathrm{lasso})$ to zero for some appropriately chosen $\lambda$. The entire lasso solution paths can be computed by the LARS algorithm [Efron et al. (2004)]. These nice properties make the lasso a very popular variable selection method.

Despite its popularity, the lasso does have two serious drawbacks: namely, the

lack of oracle property and instability with high-dimensional data. First of all, the

lasso does not have the oracle property. Fan and Li (2001) first pointed out that

asymptotically the lasso has nonignorable bias for estimating the nonzero coefficients. They further conjectured that the lasso may not have the oracle property

because of the bias problem. This conjecture was recently proven in Zou (2006).

Zou (2006) further showed that the lasso could be inconsistent for model selection

unless the predictor matrix (or the design matrix) satisfies a rather strong condition.

Zou (2006) proposed the following adaptive lasso estimator

$$ \hat\beta(\mathrm{AdaLasso}) = \arg\min_{\beta} \|y - X\beta\|_2^2 + \lambda \sum_{j=1}^p \hat w_j |\beta_j|, \tag{1.3} $$

where $\{\hat w_j\}_{j=1}^p$ are the adaptive data-driven weights and can be computed by $\hat w_j = (|\hat\beta_j^{\mathrm{ini}}|)^{-\gamma}$, where $\gamma$ is a positive constant and $\hat\beta^{\mathrm{ini}}$ is an initial root-n consistent estimate of $\beta$. Zou (2006) showed that, with an appropriately chosen $\lambda$, the adaptive lasso performs as well as the oracle. Candes, Wakin and Boyd (2008) used the adaptive lasso idea to enhance sparsity in sparse signal recovery via the reweighted $\ell_1$ minimization.

Secondly, the $\ell_1$ penalization methods can have very poor performance when there are highly correlated variables in the predictor set. The collinearity problem is often encountered in high-dimensional data analysis. Even when the predictors are independent, as long as the dimension is high, the maximum sample correlation can be large, as shown in Fan and Lv (2008). Collinearity can severely degrade the performance of the lasso. As shown in Zou and Hastie (2005), the lasso solution paths are unstable when predictors are highly correlated. Zou and Hastie (2005) proposed the elastic-net as an improved version of the lasso for analyzing high-dimensional data. The elastic-net estimator is defined as follows:

$$ \hat\beta(\mathrm{enet}) = \left(1 + \frac{\lambda_2}{n}\right) \left\{ \arg\min_{\beta} \|y - X\beta\|_2^2 + \lambda_2\|\beta\|_2^2 + \lambda_1\|\beta\|_1 \right\}. \tag{1.4} $$

If the predictors are standardized (each variable has mean zero and $L_2$-norm one), then we should change $(1 + \lambda_2/n)$ to $(1 + \lambda_2)$ as in Zou and Hastie (2005). The $\ell_1$ part of the elastic-net performs automatic variable selection, while the $\ell_2$ part stabilizes the solution paths and, hence, improves the prediction. In an orthogonal design where the lasso is shown to be optimal [Donoho et al. (1995)], the elastic-net automatically reduces to the lasso. However, when the correlations among the predictors become high, the elastic-net can significantly improve the prediction accuracy of the lasso.
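The orthogonal-design claim can be checked with a short worked derivation. The sketch below assumes the scaling $X^TX = nI$ (the scaling under which the factor $1 + \lambda_2/n$ is natural) and introduces the soft-thresholding operator $S(z, t) = \mathrm{sign}(z)(|z| - t)_+$, which is notation added here for illustration and is not used elsewhere in the paper.

```latex
% Assumption: X^T X = n I, so the criterion separates across coordinates.
\begin{aligned}
\|y - X\beta\|_2^2 + \lambda_2\|\beta\|_2^2 + \lambda_1\|\beta\|_1
  &= \|y\|_2^2 + \sum_{j=1}^p \Bigl\{ (n + \lambda_2)\beta_j^2 - 2\beta_j x_j^T y + \lambda_1|\beta_j| \Bigr\}, \\
\text{coordinate-wise minimizer:}\quad
  \beta_j &= \frac{S(x_j^T y,\ \lambda_1/2)}{n + \lambda_2}, \\
\hat\beta_j(\mathrm{enet})
  &= \Bigl(1 + \frac{\lambda_2}{n}\Bigr)\frac{S(x_j^T y,\ \lambda_1/2)}{n + \lambda_2}
   = \frac{S(x_j^T y,\ \lambda_1/2)}{n}.
\end{aligned}
```

The last expression does not involve $\lambda_2$ and coincides with the lasso solution obtained by setting $\lambda_2 = 0$, which is the sense in which the elastic-net reduces to the lasso under this assumed scaling.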

1.3. The adaptive elastic-net. The adaptively weighted $\ell_1$ penalty and the elastic-net penalty improve the lasso in two different directions. The adaptive lasso achieves the oracle property of the SCAD and the elastic-net handles the collinearity. However, following the arguments in Zou and Hastie (2005) and Zou (2006), we can easily see that the adaptive lasso inherits the instability of the lasso for high-dimensional data, while the elastic-net lacks the oracle property. Thus, it is natural to consider combining the ideas of the adaptively weighted $\ell_1$ penalty and the elastic-net regularization to obtain a better method that can improve the lasso in both directions. To this end, we propose the adaptive elastic-net that penalizes the squared error loss using a combination of the $\ell_2$ penalty and the adaptive $\ell_1$ penalty. Since the adaptive elastic-net is designed for high-dimensional data analysis, we study its asymptotic properties under the assumption that the dimension diverges with the sample size.

Pioneering papers on asymptotic theories with diverging number of parameters

include [Huber (1988) and Portnoy (1984)] which studied the M-estimators. Recently, Fan, Peng and Huang (2005) studied a semi-parametric model with a growing number of nuisance parameters, whereas Lam and Fan (2008) investigated the

profile likelihood ratio inference for the growing number of parameters. In particular, our work is influenced by Fan and Peng (2004) who studied the oracle property

of nonconcave penalized likelihood estimators. Fan and Peng (2004) provocatively

argued that it is important to study the validity of the oracle property when the dimension diverges. We would like to know whether the adaptive elastic-net enjoys

the oracle property with a diverging number of predictors. This question will be

thoroughly investigated in this paper.

The rest of the paper is organized as follows. In Section 2, we introduce the

adaptive elastic-net. Statistical theory, including the oracle property, of the adaptive

elastic-net is established in Section 3. In Section 4, we use simulation to compare

the finite sample performance of the adaptive elastic-net with the SCAD and other

competitors. Section 5 discusses how to combine SIS of Fan and Lv (2008) and the

adaptive elastic-net to deal with the ultra-high dimension cases. Technical proofs

are presented in Section 6.

2. Method. The adaptive elastic-net can be viewed as a combination of the elastic-net and the adaptive lasso. Suppose we first compute the elastic-net estimator $\hat\beta(\mathrm{enet})$ as defined in (1.4), and then we construct the adaptive weights by

$$ \hat w_j = (|\hat\beta_j(\mathrm{enet})|)^{-\gamma}, \qquad j = 1, 2, \ldots, p, \tag{2.1} $$

where $\gamma$ is a positive constant. Now we solve the following optimization problem to get the adaptive elastic-net estimates

$$ \hat\beta(\mathrm{AdaEnet}) = \left(1 + \frac{\lambda_2}{n}\right) \left\{ \arg\min_{\beta} \|y - X\beta\|_2^2 + \lambda_2\|\beta\|_2^2 + \lambda_1^* \sum_{j=1}^p \hat w_j |\beta_j| \right\}. \tag{2.2} $$

From now on, we write $\hat\beta = \hat\beta(\mathrm{AdaEnet})$ for the sake of convenience.

If we force $\lambda_2$ to be zero in (2.2), then the adaptive elastic-net reduces to the adaptive lasso. Following the arguments in Zou and Hastie (2005), we can easily show that in an orthogonal design the adaptive elastic-net reduces to the adaptive lasso, regardless of the value of $\lambda_2$. This is desirable because, in that setting, the adaptive lasso achieves the optimal minimax risk bound [Zou (2006)]. The role of the $\ell_2$ penalty in (2.2) is to further regularize the adaptive lasso fit whenever the collinearity may cause serious trouble.
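The two-stage construction described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the solver is plain cyclic coordinate descent (a technique swapped in here for simplicity), the function names `soft_threshold`, `weighted_enet` and `adaptive_enet` are hypothetical, and the weights use a 1/n offset as one possible guard against division by zero.

```python
import numpy as np


def soft_threshold(z, t):
    """Soft-thresholding: sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * max(abs(z) - t, 0.0)


def weighted_enet(X, y, lam2, lam1, w, n_sweeps=500, tol=1e-10):
    """Minimize ||y - X b||^2 + lam2 * ||b||^2 + lam1 * sum_j w[j] * |b[j]|
    by cyclic coordinate descent. No (1 + lam2/n) rescaling is applied here."""
    p = X.shape[1]
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0)          # x_j^T x_j for every column
    r = np.asarray(y, dtype=float).copy()  # residual y - X b at b = 0
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            # inner product of x_j with the partial residual (x_j's own term added back)
            rho = X[:, j] @ r + col_sq[j] * b[j]
            new = soft_threshold(rho, lam1 * w[j] / 2.0) / (col_sq[j] + lam2)
            delta = new - b[j]
            if delta != 0.0:
                r -= X[:, j] * delta
                b[j] = new
                max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return b


def adaptive_enet(X, y, lam2, lam1, lam1_star, gamma):
    """Stage 1: elastic-net fit; stage 2: weighted fit with adaptive weights."""
    n, p = X.shape
    scale = 1.0 + lam2 / n
    beta_enet = scale * weighted_enet(X, y, lam2, lam1, np.ones(p))
    # 1/n offset keeps the weights finite when a stage-1 coefficient is zero
    w = (np.abs(beta_enet) + 1.0 / n) ** (-gamma)
    return scale * weighted_enet(X, y, lam2, lam1_star, w)
```

With a design satisfying X^T X = n I, the output of `adaptive_enet` should not depend on `lam2`, which gives a quick sanity check of the orthogonal-design remark.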

We know the elastic-net naturally adopts a sparse representation. One can use $\hat w_j = (|\hat\beta_j(\mathrm{enet})| + 1/n)^{-\gamma}$ to avoid dividing by zero. We can also define $\hat w_j = \infty$ when $\hat\beta_j(\mathrm{enet}) = 0$. Let $\hat A_{\mathrm{enet}} = \{j : \hat\beta_j(\mathrm{enet}) \neq 0\}$ and let $\hat A^c_{\mathrm{enet}}$ denote its complement set. Then, we have $\hat\beta_{\hat A^c_{\mathrm{enet}}} = 0$ and

$$ \hat\beta_{\hat A_{\mathrm{enet}}} = \left(1 + \frac{\lambda_2}{n}\right) \left\{ \arg\min_{\beta} \|y - X_{\hat A_{\mathrm{enet}}}\beta\|_2^2 + \lambda_2\|\beta\|_2^2 + \lambda_1^* \sum_{j \in \hat A_{\mathrm{enet}}} \hat w_j |\beta_j| \right\}, \tag{2.3} $$

where $\beta$ in (2.3) is a vector of length $|\hat A_{\mathrm{enet}}|$, the size of $\hat A_{\mathrm{enet}}$.

The $\ell_1$ regularization parameters $\lambda_1$ and $\lambda_1^*$ are directly responsible for the sparsity of the estimates. Their values are allowed to be different. On the other hand, we use the same $\lambda_2$ for the $\ell_2$ penalty component in the elastic-net and the adaptive elastic-net estimators, because the $\ell_2$ penalty offers the same kind of contribution in both estimators.
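The two weighting conventions mentioned in this section (a 1/n offset, or infinite weights that restrict the second-stage fit to the elastic-net active set) can be illustrated as follows. The helper names `weights_on_active_set` and `guarded_weights` are hypothetical and chosen only for this sketch.

```python
import numpy as np


def weights_on_active_set(beta_enet, gamma):
    """Infinite-weight convention: keep only indices with a nonzero elastic-net
    coefficient and return (active indices, finite weights on those indices)."""
    beta_enet = np.asarray(beta_enet, dtype=float)
    active = np.flatnonzero(beta_enet != 0)
    return active, np.abs(beta_enet[active]) ** (-gamma)


def guarded_weights(beta_enet, gamma, n):
    """Offset convention: add 1/n before taking the negative power, so every
    weight is finite and no predictor is excluded outright."""
    beta_enet = np.asarray(beta_enet, dtype=float)
    return (np.abs(beta_enet) + 1.0 / n) ** (-gamma)
```

Under the first convention the second-stage problem is solved using only the columns of X indexed by `active`; under the second, a zero first-stage coefficient receives the large but finite weight n^gamma.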

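3. Statistical theory. In our theoretical analysis, we assume the following regularity conditions throughout:

(A1) We use $\lambda_{\min}(M)$ and $\lambda_{\max}(M)$ to denote the minimum and maximum eigenvalues of a positive definite matrix $M$, respectively. Then, we assume

$$ b \le \lambda_{\min}\left(\frac{1}{n}X^TX\right) \le \lambda_{\max}\left(\frac{1}{n}X^TX\right) \le B, $$

where $b$ and $B$ are two positive constants.

(A2) $\lim_{n\to\infty} \dfrac{\max_{i=1,2,\ldots,n} \sum_{j=1}^p x_{ij}^2}{n} = 0$;

(A3) $E[|\varepsilon|^{2+\delta}] < \infty$ for some $\delta > 0$;

(A4) $\lim_{n\to\infty} \dfrac{\log(p)}{\log(n)} = \nu$ for some $0 \le \nu < 1$.

To construct the adaptive weights ($\hat w$), we take a fixed $\gamma$ such that $\gamma > \frac{2\nu}{1-\nu}$. In our numerical studies, we let $\gamma = \frac{2\nu}{1-\nu} + 1$ to avoid the tuning on $\gamma$. Once $\gamma$ is chosen, we choose the regularization parameters according to the following conditions:

(A5)
$$ \lim_{n\to\infty} \frac{\lambda_2}{n} = 0, \qquad \lim_{n\to\infty} \frac{\lambda_1}{\sqrt{n}} = 0 $$

and

$$ \lim_{n\to\infty} \frac{\lambda_1^*}{\sqrt{n}} = 0, \qquad \lim_{n\to\infty} \frac{\lambda_1^*}{\sqrt{n}}\, n^{((1-\nu)(1+\gamma)-1)/2} = \infty. $$

(A6)
$$ \lim_{n\to\infty} \frac{\lambda_2}{\sqrt{n}} \sqrt{\sum_{j\in A} \beta_j^{*2}} = 0, \qquad \lim_{n\to\infty} \min\left( \frac{n}{\lambda_1\sqrt{p}},\ \left(\frac{\sqrt{n}}{\sqrt{p}\,\lambda_1^*}\right)^{1/\gamma} \right) \left( \min_{j\in A} |\beta_j^*| \right) \to \infty. $$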

Conditions (A1) and (A2) assume the predictor matrix has a reasonably good

behavior. Similar conditions were considered in Portnoy (1984). Note that in

the linear regression setting, condition (A1) is exactly condition (F) in Fan and

Peng (2004). Condition (A3) is used to establish the asymptotic normality of

β(AdaEnet).

It is worth pointing out that condition (A4) is weaker than that used in Fan and Peng (2004), in which $p$ is assumed to satisfy $p^4/n \to 0$ or at most $p^3/n \to 0$. It means their results require $\nu < \frac{1}{3}$. Our theory removes this limitation. For any $0 \le \nu < 1$, we can choose an appropriate $\gamma$ to construct the adaptive weights and the oracle property holds as long as $\gamma > \frac{2\nu}{1-\nu}$. Also note that, in the finite dimension setting, $\nu = 0$; thus, any positive $\gamma$ can be used, which agrees with the results in Zou (2006).
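The rule for choosing γ from ν is simple arithmetic, and the following sketch makes it explicit with exact rational numbers. The function names are hypothetical; `gamma_default` encodes the choice γ = 2ν/(1 − ν) + 1 reported for the numerical studies, and `a5_exponent` returns the exponent ((1 − ν)(1 + γ) − 1)/2 that appears in the growth condition on λ1*.

```python
from fractions import Fraction


def gamma_lower_bound(nu):
    """Strict lower bound 2*nu / (1 - nu) that gamma must exceed, for 0 <= nu < 1."""
    nu = Fraction(nu)
    if not (0 <= nu < 1):
        raise ValueError("nu must satisfy 0 <= nu < 1")
    return 2 * nu / (1 - nu)


def gamma_default(nu):
    """The bound plus one, so gamma clears the strict inequality without tuning."""
    return gamma_lower_bound(nu) + 1


def a5_exponent(nu, gamma):
    """Exponent ((1 - nu) * (1 + gamma) - 1) / 2 from the condition on lambda_1^*."""
    nu, gamma = Fraction(nu), Fraction(gamma)
    return ((1 - nu) * (1 + gamma) - 1) / 2
```

For ν = 1/2 the bound is 2 and the default is γ = 3; the corresponding exponent is 1/2, so the growth condition then asks that λ1*/√n vanish while λ1* itself diverges. The exponent is positive whenever γ exceeds the bound, because (1 − ν)(1 + γ) > 1 + ν in that case.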

Condition (A6) is similar to condition (H) in Fan and Peng (2004). Basically,

condition (A6) allows the nonzero coefficients to vanish but at a rate that can be

distinguished by the penalized least squares. In the finite dimension setting, the

condition is implicitly assumed.

THEOREM 3.1. Given the data $(y, X)$, let $\hat w = (\hat w_1, \ldots, \hat w_p)$ be a vector whose components are all nonnegative and can depend on $(y, X)$. Define

$$ \hat\beta_{\hat w}(\lambda_2, \lambda_1) = \arg\min_{\beta} \left\{ \|y - X\beta\|_2^2 + \lambda_2\|\beta\|_2^2 + \lambda_1 \sum_{j=1}^p \hat w_j |\beta_j| \right\} $$

for nonnegative parameters $\lambda_2$ and $\lambda_1$. If $\hat w_j = 1$ for all $j$, we denote $\hat\beta_{\hat w}(\lambda_2, \lambda_1)$ by $\hat\beta(\lambda_2, \lambda_1)$ for convenience.

If we assume the model (1.1) and condition (A1), then

$$ E\left( \|\hat\beta_{\hat w}(\lambda_2, \lambda_1) - \beta^*\|_2^2 \right) \le 4\, \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2 E\left(\sum_{j=1}^p \hat w_j^2\right)}{(bn + \lambda_2)^2}. $$

In particular, when $\hat w_j = 1$ for all $j$, we have

$$ E\left( \|\hat\beta(\lambda_2, \lambda_1) - \beta^*\|_2^2 \right) \le 4\, \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2 p}{(bn + \lambda_2)^2}. $$

It is worth mentioning that the derived risk bounds are nonasymptotic. Theorem 3.1 is very useful for the asymptotic analysis. A direct corollary of Theorem 3.1 is that, under conditions (A1)–(A6), $\hat\beta(\lambda_2, \lambda_1)$ is a root-(n/p)-consistent estimator. This rate of consistency is the same as the result for the SCAD [Fan and Peng (2004)]. The root-(n/p) consistency result suggests that it is appropriate to use the elastic-net to construct the adaptive weights.
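The root-(n/p) rate follows from the unweighted bound by a short calculation, sketched here. It reads condition (A5) as requiring $\lambda_1/\sqrt{n} \to 0$ and condition (A6) as requiring $(\lambda_2/\sqrt{n})\|\beta^*_A\|_2 \to 0$, and it uses $\|\beta^*\|_2 = \|\beta^*_A\|_2$ since $\beta^*$ vanishes off $A$.

```latex
\begin{aligned}
E\bigl(\|\hat\beta(\lambda_2,\lambda_1) - \beta^*\|_2^2\bigr)
  &\le 4\,\frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2 p}{(bn + \lambda_2)^2}
   \le \frac{4}{b^2 n^2}\bigl(\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2 p\bigr) \\
  &= \frac{p}{n}\cdot\frac{4}{b^2}
     \Bigl( \frac{1}{p}\cdot\frac{\lambda_2^2\|\beta^*\|_2^2}{n} + B\sigma^2 + \frac{\lambda_1^2}{n} \Bigr).
\end{aligned}
```

Under the stated readings of (A5) and (A6), the first and third terms inside the parentheses tend to zero, so the parenthesized factor stays bounded and the risk is of order $p/n$.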

THEOREM 3.2. Let us write $\beta^* = (\beta^*_A, 0)$ and define

$$ \tilde\beta^*_A = \arg\min_{\beta} \left\{ \|y - X_A\beta\|_2^2 + \lambda_2 \sum_{j\in A} \beta_j^2 + \lambda_1^* \sum_{j\in A} \hat w_j |\beta_j| \right\}. \tag{3.1} $$

Then, with probability tending to 1, $\left((1 + \frac{\lambda_2}{n})\tilde\beta^*_A, 0\right)$ is the solution to (2.2).

Theorem 3.2 provides an asymptotic characterization of the solution to the adaptive elastic-net criterion. The definition of $\tilde\beta^*_A$ borrows the concept of “oracle” [Donoho and Johnstone (1994), Fan and Li (2001), Fan and Peng (2004) and Zou (2006)]. If there were an oracle informing us of the true subset model, then we would use this oracle information and the adaptive elastic-net criterion would become that in (2.3). Theorem 3.2 tells us that, asymptotically speaking, the adaptive elastic-net works as if it had such oracle information. Theorem 3.2 also suggests that the adaptive elastic-net should enjoy the oracle property, which is confirmed in the next theorem.

THEOREM 3.3. Under conditions (A1)–(A6), the adaptive elastic-net has the oracle property; that is, the estimator $\hat\beta(\mathrm{AdaEnet})$ must satisfy:

1. Consistency in selection: $\Pr(\{j : \hat\beta(\mathrm{AdaEnet})_j \neq 0\} = A) \to 1$,

2. Asymptotic normality: $\alpha^T \dfrac{I + \lambda_2\Sigma_A^{-1}}{1 + \lambda_2/n}\, \Sigma_A^{1/2} \left( \hat\beta(\mathrm{AdaEnet})_A - \beta^*_A \right) \to_d N(0, \sigma^2)$, where $\Sigma_A = X_A^T X_A$ and $\alpha$ is a vector of norm 1.

By Theorem 3.3, the selection consistency and the asymptotic normality of the adaptive elastic-net are still valid when the number of parameters diverges. Technically speaking, the selection consistency result is stronger than what Theorem 3.2 implies, although Theorem 3.2 plays an important role in the proof of Theorem 3.3. As a special case, when we let $\lambda_2 = 0$, which is a choice satisfying conditions (A5) and (A6), Theorem 3.3 tells us that the adaptive lasso enjoys the selection consistency and the asymptotic normality

$$ \alpha^T \Sigma_A^{1/2} \left( \hat\beta(\mathrm{AdaLasso})_A - \beta^*_A \right) \to_d N(0, \sigma^2). $$

4. Numerical studies. In this section, we present simulations to study the

finite sample performance of the adaptive elastic-net. We considered five methods in the simulation study: the lasso (Lasso), the elastic-net (Enet), the adaptive

lasso (ALasso), the adaptive elastic-net (AEnet) and the SCAD. In our implementation, we let λ2 = 0 in the adaptive elastic-net to get the adaptive lasso fit. There

are several commonly used tuning parameter selection methods, such as cross-validation, generalized cross-validation (GCV), AIC and BIC. Zou, Hastie and

Tibshirani (2007) suggested using BIC to select the lasso tuning parameter. Wang,

Li and Tsai (2007) showed that for the SCAD, BIC is a better tuning parameter selector than GCV and AIC. In this work, we used BIC to select the tuning parameter

for each method.

Fan and Peng (2004) considered simulation models in which $p_n = [4n^{1/4}] - 5$ and $|A| = 5$. Our theory allows $p_n = O(n^{\nu})$ for any $\nu < 1$. Thus, we are interested in models in which $p_n = O(n^{\nu})$ with $\nu > \frac{1}{3}$. In addition, we allow the intrinsic dimension ($|A|$) to diverge with the sample size as well, because such designs make the model selection and estimation more challenging than in the fixed $|A|$ situations.

EXAMPLE 1. We generated data from the linear regression model

$$ y = x^T\beta^* + \varepsilon, $$

where $\beta^*$ is a p-dim vector and $\varepsilon \sim N(0, \sigma^2)$, $\sigma = 6$, and $x$ follows a p-dim multivariate normal distribution with zero mean and covariance $\Sigma$ whose $(j, k)$ entry is $\Sigma_{j,k} = \rho^{|j-k|}$, $1 \le k, j \le p$. We considered $\rho = 0.5$ and $\rho = 0.75$. Let $p = p_n = [4n^{1/2}] - 5$ for $n = 100, 200, 400$. Let $1_m$/$0_m$ denote an m-vector of 1's/0's. The true coefficients are $\beta^* = (3 \cdot 1_q, 3 \cdot 1_q, 3 \cdot 1_q, 0_{p-3q})^T$ and $|A| = 3q$ and $q = [p_n/9]$. In this example $\nu = \frac{1}{2}$; hence, we used $\gamma = 3$ for computing the adaptive weights in the adaptive elastic-net.

For each estimator $\hat\beta$, its estimation accuracy is measured by the mean squared error (MSE) defined as $E[(\hat\beta - \beta^*)^T \Sigma (\hat\beta - \beta^*)]$. The variable selection performance is gauged by $(C, IC)$, where $C$ is the number of zero coefficients that are correctly estimated by zero and $IC$ is the number of nonzero coefficients that are incorrectly estimated by zero.
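The design of this example can be reproduced with a short NumPy sketch. The function names are hypothetical, the bracket [·] is assumed to denote the integer part (which matches the dimensions reported in the tables), and the random seed is arbitrary.

```python
import math

import numpy as np


def example_dims(n, power=0.5):
    """Return (p_n, q, |A|) with p_n = [4 * n**power] - 5 and q = [p_n / 9]."""
    p = math.floor(4 * n ** power) - 5
    q = p // 9
    return p, q, 3 * q


def simulate_example(n, rho, sigma=6.0, seed=0):
    """Draw (X, y, beta_true) with covariance entries rho**|j-k| and 3q leading 3's."""
    p, q, _ = example_dims(n)
    idx = np.arange(p)
    cov = rho ** np.abs(idx[:, None] - idx[None, :])
    beta = np.concatenate([np.full(3 * q, 3.0), np.zeros(p - 3 * q)])
    rng = np.random.default_rng(seed)
    X = rng.multivariate_normal(np.zeros(p), cov, size=n)
    y = X @ beta + sigma * rng.standard_normal(n)
    return X, y, beta


def selection_counts(beta_hat, beta_true):
    """C: true zeros estimated as zero; IC: true nonzeros estimated as zero."""
    zero_hat = np.asarray(beta_hat) == 0
    true_zero = np.asarray(beta_true) == 0
    return int(np.sum(zero_hat & true_zero)), int(np.sum(zero_hat & ~true_zero))
```

Passing the true coefficient vector as its own estimate returns the pair (p_n − |A|, 0), which is the benchmark that a perfect selector would attain.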

Table 1 documents the simulation results. Several interesting observations can

be made:

1. When the sample size is large (n = 400), the three oracle-like estimators outperform the lasso and the elastic-net which do not have the oracle property.

That is expected according to the asymptotic theory.

2. The SCAD and the adaptive elastic-net are the best when the sample size is

large and the correlation is moderate. However, the SCAD can perform much

worse than the adaptive elastic-net when the correlation is high (ρ = 0.75) or

the sample size is small.

3. Both the elastic-net and the adaptive lasso can do significantly better than the

lasso. What is more interesting is that the adaptive elastic-net often outperforms

the elastic-net and the adaptive lasso.

EXAMPLE 2. We considered the same setup as in Example 1, except that we let $p = p_n = [4n^{2/3}] - 5$ for $n = 100, 200, 800$. Since $\nu = \frac{2}{3}$, we used $\gamma = 5$ for computing the adaptive weights in the adaptive elastic-net and the adaptive lasso. The estimation problem in this example is even more difficult than that in Example 1. To see why, note that when $n = 200$ the dimension increases from 51 in Example 1 to 131 in this example, and the intrinsic dimension ($|A|$) is almost tripled.

The simulation results are presented in Table 2, from which we can see that the

three observations made in Example 1 are still valid in this example. Furthermore,

we see that, for every combination of (n, p, |A|, ρ), the adaptive elastic-net has

the best performance.

5. Ultra-high dimensional data. In this section, we discuss how the adaptive

elastic-net can be applied to ultra-high dimensional data in which p > n. When p

is much larger than n, Candes and Tao (2007) suggested using the Dantzig selector

which can achieve the ideal estimation risk up to a log(p) factor under the uniform

1741

ADAPTIVE ELASTIC-NET

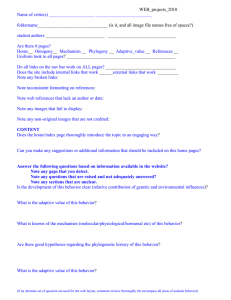

TABLE 1

Simulation I: model selection and fitting results based on 100 replications

n

pn

|A|

100

35

9

200

400

100

200

400

51

75

35

51

75

15

24

9

15

24

Model

ρ = 0.5

Truth

Lasso

ALasso

Enet

AEnet

SCAD

Truth

Lasso

ALasso

Enet

AEnet

SCAD

Truth

Lasso

ALasso

Enet

AEnet

SCAD

ρ = 0.75

Truth

Lasso

ALasso

Enet

AEnet

SCAD

Truth

Lasso

ALasso

Enet

AEnet

SCAD

Truth

Lasso

ALasso

Enet

AEnet

SCAD

MSE

7.57 (0.31)

6.78 (0.42)

5.91 (0.29)

5.07 (0.35)

10.55 (0.68)

6.63 (0.24)

3.78 (0.18)

4.86 (0.19)

3.46 (0.17)

4.76 (0.33)

4.99 (0.15)

2.76 (0.09)

3.37 (0.12)

2.47 (0.08)

2.42 (0.09)

5.93 (0.26)

8.49 (0.39)

4.18 (0.24)

5.24 (0.32)

11.59 (0.56)

5.10 (0.18)

5.32 (0.31)

3.79 (0.17)

3.32 (0.17)

5.99 (0.31)

3.83 (0.12)

2.85 (0.12)

3.24 (0.11)

2.71 (0.09)

3.64 (0.17)

C

IC

26

24.08

25.50

24.06

25.47

22.54

36

33.32

35.46

33.36

35.47

34.63

51

47.31

50.33

48.00

50.45

50.88

0

0.01

0.42

0

0.15

0.35

0

0

0.02

0

0.01

0.10

0

0

0

0

0

0

26

24.80

25.76

24.77

25.70

22.46

36

34.66

35.70

34.79

35.80

33.10

51

49.03

50.53

49.07

50.54

48.43

0

0.14

1.84

0.05

0.74

1.34

0

0.02

0.87

0

0.19

0.35

0

0

0.09

0

0.03

0.09

uncertainty condition. Fan and Lv (2008) showed that the uniform uncertainty condition may easily fail and the log(p) factor is too large when p is exponentially

large. Moreover, the computational cost of the Dantzig selector would be very high

1742

H. ZOU AND H. H. ZHANG

TABLE 2

Example 2: model selection and fitting results based on 100 replications

n

100

200

800

100

200

800

pn

|A|

81

27

131

339

81

131

339

42

111

27

42

111

Model

ρ = 0.5

Truth

Lasso

ALasso

Enet

AEnet

SCAD

Truth

Lasso

ALasso

Enet

AEnet

SCAD

Truth

Lasso

ALasso

Enet

AEnet

SCAD

ρ = 0.75

Truth

Lasso

ALasso

Enet

AEnet

SCAD

Truth

Lasso

ALasso

Enet

AEnet

SCAD

Truth

Lasso

ALasso

Enet

AEnet

SCAD

MSE

31.73 (1.06)

28.78 (1.22)

27.61 (1.04)

20.27 (0.94)

44.88 (2.65)

23.41 (0.67)

12.70 (0.48)

18.94 (0.61)

10.68 (0.37)

14.14 (0.64)

13.72 (0.23)

6.44 (0.12)

11.02 (0.18)

6.00 (0.10)

7.79 (0.30)

22.04 (0.73)

33.98 (1.08)

17.37 (0.62)

16.18 (0.80)

31.84 (1.77)

16.71 (0.50)

20.98 (0.92)

14.12 (0.48)

11.16 (0.46)

15.27 (0.61)

10.01 (0.16)

6.39 (0.12)

8.01 (0.13)

6.23 (0.11)

6.62 (0.17)

C

IC

54

47.06

53.01

46.35

53.00

47.79

89

80.51

87.99

80.27

87.97

87.42

228

212.10

226.61

213.91

226.75

228.00

0

0.19

2.12

0.13

1.15

2.37

0

0

0.14

0

0

0.25

0

0

0

0

0

0.33

54

50.74

53.73

50.82

53.67

50.55

89

85.17

88.64

85.35

88.60

87.20

228

221.74

226.89

222.74

226.94

228.00

0

0.71

7.19

0.46

2.36

4.74

0

0.06

3.98

0.05

0.87

1.33

0

0

0

0

0

0.29

when p is large. In order to overcome these difficulties, Fan and Lv (2008) introduced the Sure Independence Screening (SIS) idea, which reduces the ultra-high

dimensionality to a relatively large scale dn but dn < n. Then, the lower dimension

1743

ADAPTIVE ELASTIC-NET

TABLE 3

A demonstration of SIS + AEnet: model selection and fitting results based on 100 replications

dn = [5.5n2/3 ]

188

Model

MSE

C

IC

Truth

SIS + AEnet

SIS + SCAD

0.71 (0.18)

1.48 (0.90)

992

987.45

982.20

0

0.05

0.06

methods such as the SCAD can be used to estimate the sparse model. This procedure is referred to as SIS + SCAD. Under regularity conditions, Fan and Lv (2008)

proved that SIS misses true features with an exponentially small probability and

SIS + SCAD holds the oracle property if dn = o(n1/3 ). Furthermore, with the help

of SIS, the Dantzig selector can achieve the ideal risk up to a log(dn ) factor, rather

than the original log(p).

Inspired by the results of Fan and Lv (2008), we consider combining the adaptive elastic-net and SIS when p > n. We first apply SIS to reduce the dimension

to dn and then fit the data by using the adaptive elastic-net. We call this procedure

SIS + AEnet.

THEOREM 5.1. Suppose the conditions for Theorem 1 in Fan and Lv (2008) hold. Let $d_n = O(n^{\nu})$, $\nu < 1$; then, SIS + AEnet produces an estimator that holds the oracle property.

We make a note here that Theorem 5.1 is a direct consequence of Theorem 1 in Fan and Lv (2008) and Theorem 3.3; thus, its proof is omitted. Theorem 5.1 is similar to Theorem 5 in Fan and Lv (2008), but there is a difference. SIS + AEnet can hold the oracle property when $d_n$ exceeds $O(n^{1/3})$, while Theorem 5 in Fan and Lv (2008) assumes $d_n = o(n^{1/3})$.

To demonstrate SIS + AEnet, we consider the simulation example used in Fan and Lv (2008), Section 3.3.1. The model is $y = x^T\beta^* + 1.5N(0, 1)$, where $\beta^* = (\beta_1^T, 0_{p-|A|})^T$ with $|A| = 8$. Here, $\beta_1$ is an 8-dim vector and each component has the form $(-1)^u(a_n + |z|)$, where $a_n = 4\log(n)/\sqrt{n}$, $u$ is randomly drawn from Ber(0.4) and $z$ is randomly drawn from the standard normal distribution. We generated $n = 200$ observations from the above model. Before applying the adaptive elastic-net, we used SIS to reduce the dimensionality from 1000 to $d_n = [5.5n^{2/3}] = 188$. The estimation problem is still rather challenging, as we need to estimate 188 parameters by using only 200 observations. From Table 3, we see that SIS + AEnet performs favorably compared to SIS + SCAD.
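The screening step can be sketched as follows, under the assumption that SIS ranks standardized predictors by the magnitude of their marginal inner product with the centered response and keeps the top d_n of them. The function names `sis_size` and `sis_screen` are hypothetical, and the bracket [·] is again assumed to denote the integer part.

```python
import math

import numpy as np


def sis_size(n):
    """Screened dimension d_n = [5.5 * n**(2/3)]."""
    return math.floor(5.5 * n ** (2.0 / 3.0))


def sis_screen(X, y, d):
    """Return the sorted indices of the d predictors with the largest absolute
    marginal association with y (columns are assumed to be non-constant)."""
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    score = np.abs(Xs.T @ (y - y.mean()))
    keep = np.argsort(score)[::-1][:d]
    return np.sort(keep)
```

After screening, the second-stage method is fitted on `X[:, keep]` only, and coefficients of the discarded predictors are set to zero.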

6. Proofs.

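PROOF OF THEOREM 3.1. We write

$$ \hat\beta(\lambda_2, 0) = \arg\min_{\beta} \|y - X\beta\|_2^2 + \lambda_2\|\beta\|_2^2. $$

By the definition of $\hat\beta_{\hat w}(\lambda_2, \lambda_1)$ and $\hat\beta(\lambda_2, 0)$, we know

$$ \|y - X\hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2^2 + \lambda_2\|\hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2^2 \ge \|y - X\hat\beta(\lambda_2, 0)\|_2^2 + \lambda_2\|\hat\beta(\lambda_2, 0)\|_2^2 $$

and

$$ \begin{aligned} &\|y - X\hat\beta(\lambda_2, 0)\|_2^2 + \lambda_2\|\hat\beta(\lambda_2, 0)\|_2^2 + \lambda_1 \sum_{j=1}^p \hat w_j |\hat\beta(\lambda_2, 0)_j| \\ &\quad \ge \|y - X\hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2^2 + \lambda_2\|\hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2^2 + \lambda_1 \sum_{j=1}^p \hat w_j |\hat\beta_{\hat w}(\lambda_2, \lambda_1)_j|. \end{aligned} $$

From the above two inequalities, we have

$$ \begin{aligned} &\lambda_1 \sum_{j=1}^p \hat w_j \left( |\hat\beta(\lambda_2, 0)_j| - |\hat\beta_{\hat w}(\lambda_2, \lambda_1)_j| \right) \\ &\quad \ge \|y - X\hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2^2 + \lambda_2\|\hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2^2 - \left\{ \|y - X\hat\beta(\lambda_2, 0)\|_2^2 + \lambda_2\|\hat\beta(\lambda_2, 0)\|_2^2 \right\}. \end{aligned} \tag{6.1} $$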

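On the other hand, we have

$$ \begin{aligned} &\|y - X\hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2^2 + \lambda_2\|\hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2^2 - \left\{ \|y - X\hat\beta(\lambda_2, 0)\|_2^2 + \lambda_2\|\hat\beta(\lambda_2, 0)\|_2^2 \right\} \\ &\quad = \left( \hat\beta_{\hat w}(\lambda_2, \lambda_1) - \hat\beta(\lambda_2, 0) \right)^T (X^TX + \lambda_2 I) \left( \hat\beta_{\hat w}(\lambda_2, \lambda_1) - \hat\beta(\lambda_2, 0) \right) \end{aligned} $$

and

$$ \begin{aligned} \sum_{j=1}^p \hat w_j \left( |\hat\beta(\lambda_2, 0)_j| - |\hat\beta_{\hat w}(\lambda_2, \lambda_1)_j| \right) &\le \sum_{j=1}^p \hat w_j \left| \hat\beta(\lambda_2, 0)_j - \hat\beta_{\hat w}(\lambda_2, \lambda_1)_j \right| \\ &\le \sqrt{\sum_{j=1}^p \hat w_j^2}\; \|\hat\beta(\lambda_2, 0) - \hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2. \end{aligned} $$

Note that $\lambda_{\min}(X^TX + \lambda_2 I) = \lambda_{\min}(X^TX) + \lambda_2$. Therefore, we end up with

$$ \begin{aligned} &\left( \lambda_{\min}(X^TX) + \lambda_2 \right) \|\hat\beta_{\hat w}(\lambda_2, \lambda_1) - \hat\beta(\lambda_2, 0)\|_2^2 \\ &\quad \le \left( \hat\beta_{\hat w}(\lambda_2, \lambda_1) - \hat\beta(\lambda_2, 0) \right)^T (X^TX + \lambda_2 I) \left( \hat\beta_{\hat w}(\lambda_2, \lambda_1) - \hat\beta(\lambda_2, 0) \right) \\ &\quad \le \lambda_1 \sqrt{\sum_{j=1}^p \hat w_j^2}\; \|\hat\beta(\lambda_2, 0) - \hat\beta_{\hat w}(\lambda_2, \lambda_1)\|_2, \end{aligned} \tag{6.2} $$

which results in the inequality

$$ \|\hat\beta_{\hat w}(\lambda_2, \lambda_1) - \hat\beta(\lambda_2, 0)\|_2 \le \frac{\lambda_1 \sqrt{\sum_{j=1}^p \hat w_j^2}}{\lambda_{\min}(X^TX) + \lambda_2}. \tag{6.3} $$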
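Inequality (6.3) is deterministic, so it can be checked numerically on any data set. The sketch below compares the distance between the weighted elastic-net minimizer and the ridge minimizer with the right-hand side of (6.3). The coordinate-descent solver and all function names are illustrative assumptions, and the check holds only up to the solver's convergence tolerance.

```python
import numpy as np


def ridge(X, y, lam2):
    """Closed-form minimizer of ||y - X b||^2 + lam2 * ||b||^2."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam2 * np.eye(p), X.T @ y)


def weighted_enet(X, y, lam2, lam1, w, n_sweeps=5000, tol=1e-12):
    """Coordinate descent for ||y - X b||^2 + lam2*||b||^2 + lam1*sum_j w[j]*|b[j]|."""
    p = X.shape[1]
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0)
    r = np.asarray(y, dtype=float).copy()
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            rho = X[:, j] @ r + col_sq[j] * b[j]
            thr = lam1 * w[j] / 2.0
            new = np.sign(rho) * max(abs(rho) - thr, 0.0) / (col_sq[j] + lam2)
            delta = new - b[j]
            if delta != 0.0:
                r -= X[:, j] * delta
                b[j] = new
                max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return b


def check_6_3(X, y, lam2, lam1, w):
    """Return (left-hand side, right-hand side) of inequality (6.3)."""
    lhs = np.linalg.norm(weighted_enet(X, y, lam2, lam1, w) - ridge(X, y, lam2))
    lam_min = np.linalg.eigvalsh(X.T @ X)[0]  # eigenvalues come back in ascending order
    rhs = lam1 * np.sqrt(np.sum(np.asarray(w) ** 2)) / (lam_min + lam2)
    return lhs, rhs
```

Calling `check_6_3` on simulated data should return a left-hand side that does not exceed the right-hand side.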

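Note that

$$ \hat\beta(\lambda_2, 0) - \beta^* = -\lambda_2 (X^TX + \lambda_2 I)^{-1}\beta^* + (X^TX + \lambda_2 I)^{-1}X^T\varepsilon, $$

which implies that

$$ \begin{aligned} E\left( \|\hat\beta(\lambda_2, 0) - \beta^*\|_2^2 \right) &\le 2\lambda_2^2 \|(X^TX + \lambda_2 I)^{-1}\beta^*\|_2^2 + 2E\left( \|(X^TX + \lambda_2 I)^{-1}X^T\varepsilon\|_2^2 \right) \\ &\le 2\lambda_2^2 \left( \lambda_{\min}(X^TX) + \lambda_2 \right)^{-2} \|\beta^*\|_2^2 + 2\left( \lambda_{\min}(X^TX) + \lambda_2 \right)^{-2} E(\varepsilon^T XX^T \varepsilon) \\ &= 2\left( \lambda_{\min}(X^TX) + \lambda_2 \right)^{-2} \left( \lambda_2^2\|\beta^*\|_2^2 + \mathrm{Tr}(X^TX)\sigma^2 \right) \\ &\le 2\left( \lambda_{\min}(X^TX) + \lambda_2 \right)^{-2} \left( \lambda_2^2\|\beta^*\|_2^2 + p\lambda_{\max}(X^TX)\sigma^2 \right). \end{aligned} \tag{6.4} $$

Combining (6.3) and (6.4), we have

$$ \begin{aligned} E\left( \|\hat\beta_{\hat w}(\lambda_2, \lambda_1) - \beta^*\|_2^2 \right) &\le 2E\left( \|\hat\beta(\lambda_2, 0) - \beta^*\|_2^2 \right) + 2E\left( \|\hat\beta_{\hat w}(\lambda_2, \lambda_1) - \hat\beta(\lambda_2, 0)\|_2^2 \right) \\ &\le \frac{4\lambda_2^2\|\beta^*\|_2^2 + 4p\lambda_{\max}(X^TX)\sigma^2 + 2\lambda_1^2 E\left[\sum_{j=1}^p \hat w_j^2\right]}{\left( \lambda_{\min}(X^TX) + \lambda_2 \right)^2} && (6.5) \\ &\le 4\, \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2 E\left[\sum_{j=1}^p \hat w_j^2\right]}{(bn + \lambda_2)^2}. && (6.6) \end{aligned} $$

We have used condition (A1) in the last inequality. When $\hat w_j = 1$ for all $j$, we have

$$ E\left( \|\hat\beta(\lambda_2, \lambda_1) - \beta^*\|_2^2 \right) \le 4\, \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + p\lambda_1^2}{(bn + \lambda_2)^2}. $$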

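PROOF OF THEOREM 3.2. We show that $\left((1 + \frac{\lambda_2}{n})\tilde\beta^*_A, 0\right)$ satisfies the Karush–Kuhn–Tucker (KKT) conditions of (2.2) with probability tending to 1. By the definition of $\tilde\beta^*_A$, it suffices to show

$$ \Pr\left( \forall j \in A^c,\ |-2X_j^T(y - X_A\tilde\beta^*_A)| \le \lambda_1^*\hat w_j \right) \to 1 $$

or, equivalently,

$$ \Pr\left( \exists j \in A^c,\ |-2X_j^T(y - X_A\tilde\beta^*_A)| > \lambda_1^*\hat w_j \right) \to 0. $$

Let $\eta = \min_{j\in A}(|\beta_j^*|)$ and $\hat\eta = \min_{j\in A}(|\hat\beta(\mathrm{enet})_j|)$. We note that

$$ \begin{aligned} &\Pr\left( \exists j \in A^c,\ |-2X_j^T(y - X_A\tilde\beta^*_A)| > \lambda_1^*\hat w_j \right) \\ &\quad \le \sum_{j\in A^c} \Pr\left( |-2X_j^T(y - X_A\tilde\beta^*_A)| > \lambda_1^*\hat w_j,\ \hat\eta > \eta/2 \right) + \Pr(\hat\eta \le \eta/2), \end{aligned} $$

$$ \Pr(\hat\eta \le \eta/2) \le \Pr\left( \|\hat\beta(\mathrm{enet}) - \beta^*\|_2 \ge \eta/2 \right) \le \frac{E\left( \|\hat\beta(\mathrm{enet}) - \beta^*\|_2^2 \right)}{\eta^2/4}. $$

Then, by Theorem 3.1, we obtain

$$ \Pr(\hat\eta \le \eta/2) \le 16\, \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2 p}{(bn + \lambda_2)^2 \eta^2}. \tag{6.7} $$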

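Moreover, let $M = \left(\frac{\lambda_1^*}{n}\right)^{1/(1+\gamma)}$, and we have

$$ \begin{aligned} &\sum_{j\in A^c} \Pr\left( |-2X_j^T(y - X_A\tilde\beta^*_A)| > \lambda_1^*\hat w_j,\ \hat\eta > \eta/2 \right) \\ &\quad \le \sum_{j\in A^c} \Pr\left( |-2X_j^T(y - X_A\tilde\beta^*_A)| > \lambda_1^*\hat w_j,\ \hat\eta > \eta/2,\ |\hat\beta(\mathrm{enet})_j| \le M \right) + \sum_{j\in A^c} \Pr\left( |\hat\beta(\mathrm{enet})_j| > M \right) \\ &\quad \le \sum_{j\in A^c} \Pr\left( |-2X_j^T(y - X_A\tilde\beta^*_A)| > \lambda_1^* M^{-\gamma},\ \hat\eta > \eta/2 \right) + \sum_{j\in A^c} \Pr\left( |\hat\beta(\mathrm{enet})_j| > M \right) \\ &\quad \le \frac{4M^{2\gamma}}{\lambda_1^{*2}} E\left( \sum_{j\in A^c} |X_j^T(y - X_A\tilde\beta^*_A)|^2 I(\hat\eta > \eta/2) \right) + \frac{1}{M^2} E\left( \sum_{j\in A^c} |\hat\beta(\mathrm{enet})_j|^2 \right) \\ &\quad \le \frac{4M^{2\gamma}}{\lambda_1^{*2}} E\left( \sum_{j\in A^c} |X_j^T(y - X_A\tilde\beta^*_A)|^2 I(\hat\eta > \eta/2) \right) + \frac{E\left( \|\hat\beta(\mathrm{enet}) - \beta^*\|_2^2 \right)}{M^2} \\ &\quad \le \frac{4M^{2\gamma}}{\lambda_1^{*2}} E\left( \sum_{j\in A^c} |X_j^T(y - X_A\tilde\beta^*_A)|^2 I(\hat\eta > \eta/2) \right) + 4\, \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2 p}{(bn + \lambda_2)^2 M^2}, \end{aligned} \tag{6.8} $$

where we have used Theorem 3.1 in the last step. By the model assumption, we have

$$ \begin{aligned} \sum_{j\in A^c} |X_j^T(y - X_A\tilde\beta^*_A)|^2 &= \sum_{j\in A^c} |X_j^T(X_A\beta^*_A - X_A\tilde\beta^*_A) + X_j^T\varepsilon|^2 \\ &\le 2\sum_{j\in A^c} |X_j^T(X_A\beta^*_A - X_A\tilde\beta^*_A)|^2 + 2\sum_{j\in A^c} |X_j^T\varepsilon|^2 \\ &\le 2Bn\|X_A(\beta^*_A - \tilde\beta^*_A)\|_2^2 + 2\sum_{j\in A^c} |X_j^T\varepsilon|^2 \\ &\le 2Bn \cdot Bn\|\beta^*_A - \tilde\beta^*_A\|_2^2 + 2\sum_{j\in A^c} |X_j^T\varepsilon|^2, \end{aligned} $$

which gives us the inequality

$$ E\left( \sum_{j\in A^c} |X_j^T(y - X_A\tilde\beta^*_A)|^2 I(\hat\eta > \eta/2) \right) \le 2B^2n^2 E\left( \|\beta^*_A - \tilde\beta^*_A\|_2^2 I(\hat\eta > \eta/2) \right) + 2Bnp\sigma^2. \tag{6.9} $$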

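We now bound $E\left( \|\beta^*_A - \tilde\beta^*_A\|_2^2 I(\hat\eta > \eta/2) \right)$. Let

$$ \tilde\beta^*_A(\lambda_2, 0) = \arg\min_{\beta} \left\{ \|y - X_A\beta\|_2^2 + \lambda_2 \sum_{j\in A} \beta_j^2 \right\}. $$

Then, by using the same arguments for deriving (6.1), (6.2) and (6.3), we have

$$ \|\tilde\beta^*_A - \tilde\beta^*_A(\lambda_2, 0)\|_2 \le \frac{\lambda_1^* \cdot \max_{j\in A} \hat w_j \sqrt{|A|}}{\lambda_{\min}(X_A^TX_A) + \lambda_2} \le \frac{\lambda_1^* \hat\eta^{-\gamma} \sqrt{p}}{bn + \lambda_2}. \tag{6.10} $$

Note that $\lambda_{\min}(X_A^TX_A) \ge \lambda_{\min}(X^TX) \ge bn$ and $\lambda_{\max}(X_A^TX_A) \le \lambda_{\max}(X^TX) \le Bn$. Following the remaining arguments in the proof of Theorem 3.1, we obtain

$$ \begin{aligned} E\left( \|\beta^*_A - \tilde\beta^*_A\|_2^2 I(\hat\eta > \eta/2) \right) &\le 4\, \frac{\lambda_2^2\|\beta^*_A\|_2^2 + \lambda_{\max}(X_A^TX_A)|A|\sigma^2 + \lambda_1^{*2}(\eta/2)^{-2\gamma}|A|}{\left( \lambda_{\min}(X_A^TX_A) + \lambda_2 \right)^2} \\ &\le 4\, \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^{*2}(\eta/2)^{-2\gamma}p}{(bn + \lambda_2)^2}. \end{aligned} \tag{6.11} $$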

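The combination of (6.7), (6.8), (6.9) and (6.11) yields

$$ \begin{aligned} &\Pr\left( \exists j \in A^c,\ |-2X_j^T(y - X_A\tilde\beta^*_A)| > \lambda_1^*\hat w_j \right) \\ &\quad \le \frac{4M^{2\gamma}n}{\lambda_1^{*2}} \left( 8B^2n\, \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^{*2}(\eta/2)^{-2\gamma}p}{(bn + \lambda_2)^2} + 2Bp\sigma^2 \right) \\ &\qquad + \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2 p}{(bn + \lambda_2)^2}\, \frac{4}{M^2} + \frac{\lambda_2^2\|\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2 p}{(bn + \lambda_2)^2}\, \frac{16}{\eta^2} \\ &\quad \equiv K_1 + K_2 + K_3. \end{aligned} $$

We have chosen $\gamma > \frac{2\nu}{1-\nu}$; then, under conditions (A1)–(A6), it follows that

$$ \begin{aligned} K_1 &= O\left( \left( \frac{\lambda_1^*}{\sqrt{n}}\, n^{((1+\gamma)(1-\nu)-1)/2} \right)^{-2/(1+\gamma)} \right) \to 0, \\ K_2 &= O\left( \frac{p}{n} \left( \frac{n}{\lambda_1^*} \right)^{2/(1+\gamma)} \right) \to 0, \\ K_3 &= O\left( \frac{p}{n}\, \frac{1}{\eta^2} \right) = O\left( \left( \frac{\lambda_1^*\sqrt{p}}{\sqrt{n}}\, \eta^{-\gamma} \right)^{2/\gamma} \left( \frac{p}{n} \left( \frac{n}{\lambda_1^*} \right)^{2/(1+\gamma)} \right)^{(1+\gamma)/\gamma} p^{-2/\gamma} \right) \to 0. \end{aligned} \tag{6.12} $$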

Thus, the proof is complete. P ROOF OF T HEOREM 3.3. From Theorem 3.2, we have shown that, with prob∗

β A , 0).

ability tending to 1, the adaptive elastic-net estimator is equal to ((1 + λn2 )

Therefore, in order to prove the model selection consistency result, we only need

to show Pr(minj ∈A |β̃j∗ | > 0) → 1. By (6.10), we have

√

λ∗1 pη̂−γ

∗

∗

min |β̃j | > min |β̃ (λ2 , 0)j | −

.

j ∈A

j ∈A

bn + λ2

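Note that

$$\min_{j\in\mathcal A}|\tilde\beta^*(\lambda_2, 0)_j| > \min_{j\in\mathcal A}|\beta^*_j| - \|\tilde{\boldsymbol\beta}^*_{\mathcal A}(\lambda_2, 0) - \boldsymbol\beta^*_{\mathcal A}\|_2.$$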

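Following (6.6), it is easy to see that

$$E\big(\|\tilde{\boldsymbol\beta}^*_{\mathcal A}(\lambda_2, 0) - \boldsymbol\beta^*_{\mathcal A}\|_2^2\big) \le 4\,\frac{\lambda_2^2\|\boldsymbol\beta^*\|_2^2 + Bpn\sigma^2}{(bn + \lambda_2)^2} = O\Big(\frac{p}{n}\Big).$$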

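Moreover,

$$\frac{\lambda_1^*\sqrt p\,\hat\eta^{-\gamma}}{bn + \lambda_2} = O\Big(\frac{1}{\sqrt n}\Big)\Big(\frac{\lambda_1^*\sqrt p}{\sqrt n}\,\eta^{-\gamma}\Big)\Big(\frac{\hat\eta}{\eta}\Big)^{-\gamma}$$

and

$$
\begin{aligned}
E\Big(\Big(\frac{\hat\eta}{\eta}\Big)^2\Big)
&\le 2 + \frac{2}{\eta^2}E\big((\hat\eta - \eta)^2\big)\\
&\le 2 + \frac{2}{\eta^2}E\big(\|\hat{\boldsymbol\beta}(\lambda_2, \lambda_1) - \boldsymbol\beta^*\|_2^2\big)\\
&\le 2 + \frac{8}{\eta^2}\,\frac{\lambda_2^2\|\boldsymbol\beta^*\|_2^2 + Bpn\sigma^2 + \lambda_1^2p}{(bn + \lambda_2)^2}.
\end{aligned}
$$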

In (6.12) we have shown $\eta^2 n/p \to \infty$. Thus,

$$\frac{\lambda_1^*\sqrt p\,\hat\eta^{-\gamma}}{bn + \lambda_2} = o\Big(\frac{1}{\sqrt n}\Big)O_P(1). \tag{6.13}$$

Hence, we have

$$\min_{j\in\mathcal A}|\tilde\beta^*_j| > \eta - \sqrt{\frac{p}{n}}\,O_P(1) - o\Big(\frac{1}{\sqrt n}\Big)O_P(1)$$

and $\Pr(\min_{j\in\mathcal A}|\tilde\beta^*_j| > 0) \to 1$.

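We now prove the asymptotic normality. Recall that $\boldsymbol\Sigma_{\mathcal A} = \mathbf X_{\mathcal A}^T\mathbf X_{\mathcal A}$. For convenience, we write

$$z_n = \boldsymbol\alpha^T\,\frac{\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1}}{1 + \lambda_2/n}\,\boldsymbol\Sigma_{\mathcal A}^{1/2}\big(\hat{\boldsymbol\beta}(\mathrm{AdaEnet})_{\mathcal A} - \boldsymbol\beta^*_{\mathcal A}\big).$$

Note that

$$
\begin{aligned}
\boldsymbol\alpha^T(\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1})\boldsymbol\Sigma_{\mathcal A}^{1/2}\Big(\tilde{\boldsymbol\beta}^*_{\mathcal A} - \frac{\boldsymbol\beta^*_{\mathcal A}}{1 + \lambda_2/n}\Big)
&= \boldsymbol\alpha^T(\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1})\boldsymbol\Sigma_{\mathcal A}^{1/2}\,\frac{\lambda_2\boldsymbol\beta^*_{\mathcal A}}{n + \lambda_2}\\
&\quad + \boldsymbol\alpha^T(\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1})\boldsymbol\Sigma_{\mathcal A}^{1/2}\big(\tilde{\boldsymbol\beta}^*_{\mathcal A} - \tilde{\boldsymbol\beta}^*_{\mathcal A}(\lambda_2, 0)\big)\\
&\quad + \boldsymbol\alpha^T(\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1})\boldsymbol\Sigma_{\mathcal A}^{1/2}\big(\tilde{\boldsymbol\beta}^*_{\mathcal A}(\lambda_2, 0) - \boldsymbol\beta^*_{\mathcal A}\big).
\end{aligned}
$$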

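In addition, we have

$$(\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1})\boldsymbol\Sigma_{\mathcal A}^{1/2}\big(\tilde{\boldsymbol\beta}^*_{\mathcal A}(\lambda_2, 0) - \boldsymbol\beta^*_{\mathcal A}\big) = -\lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1/2}\boldsymbol\beta^*_{\mathcal A} + \boldsymbol\Sigma_{\mathcal A}^{-1/2}\mathbf X_{\mathcal A}^T\boldsymbol\varepsilon.$$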
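This identity follows from the closed form $\tilde{\boldsymbol\beta}^*_{\mathcal A}(\lambda_2, 0) = (\boldsymbol\Sigma_{\mathcal A} + \lambda_2\mathbf I)^{-1}\mathbf X_{\mathcal A}^T\mathbf y$ together with $(\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1})\boldsymbol\Sigma_{\mathcal A}^{1/2} = \boldsymbol\Sigma_{\mathcal A}^{-1/2}(\boldsymbol\Sigma_{\mathcal A} + \lambda_2\mathbf I)$, where $\boldsymbol\Sigma_{\mathcal A} = \mathbf X_{\mathcal A}^T\mathbf X_{\mathcal A}$. A minimal numerical check, assuming a hypothetical Gaussian design and made-up values for `lam2` and `beta_star_A`, might look as follows.

```python
import numpy as np

rng = np.random.default_rng(1)
n, k, lam2 = 100, 4, 3.0                      # hypothetical sizes and ridge parameter
X_A = rng.standard_normal((n, k))
beta_star_A = np.array([2.0, -1.0, 0.5, 1.5])
eps = rng.standard_normal(n)
y = X_A @ beta_star_A + eps

Sigma_A = X_A.T @ X_A
evals, evecs = np.linalg.eigh(Sigma_A)        # symmetric eigendecomposition
Sigma_half = (evecs * np.sqrt(evals)) @ evecs.T
Sigma_neg_half = (evecs / np.sqrt(evals)) @ evecs.T

# Ridge-type solution on the active set (no 1/2 factor in the loss).
beta_ridge = np.linalg.solve(Sigma_A + lam2 * np.eye(k), X_A.T @ y)

left = (np.eye(k) + lam2 * np.linalg.inv(Sigma_A)) @ Sigma_half @ (beta_ridge - beta_star_A)
right = -lam2 * Sigma_neg_half @ beta_star_A + Sigma_neg_half @ X_A.T @ eps
max_gap = np.max(np.abs(left - right))
print(f"max |left - right| = {max_gap:.2e}")
```

The two sides should agree up to floating-point error, which is what allows $z_n$ to be split into a deterministic bias term, a term driven by the $\ell_1$ penalty, and a pure noise term.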

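Therefore, by Theorem 3.2, it follows that, with probability tending to 1, $z_n = T_1 + T_2 + T_3$, where

$$
\begin{aligned}
T_1 &= \boldsymbol\alpha^T(\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1})\boldsymbol\Sigma_{\mathcal A}^{1/2}\,\frac{\lambda_2\boldsymbol\beta^*_{\mathcal A}}{n + \lambda_2} - \boldsymbol\alpha^T\lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1/2}\boldsymbol\beta^*_{\mathcal A},\\
T_2 &= \boldsymbol\alpha^T(\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1})\boldsymbol\Sigma_{\mathcal A}^{1/2}\big(\tilde{\boldsymbol\beta}^*_{\mathcal A} - \tilde{\boldsymbol\beta}^*_{\mathcal A}(\lambda_2, 0)\big),\\
T_3 &= \boldsymbol\alpha^T\boldsymbol\Sigma_{\mathcal A}^{-1/2}\mathbf X_{\mathcal A}^T\boldsymbol\varepsilon.
\end{aligned}
$$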

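We now show that $T_1 = o(1)$, $T_2 = o_P(1)$ and $T_3 \to N(0, \sigma^2)$ in distribution. Then, by Slutsky's theorem, we know $z_n \to_d N(0, \sigma^2)$. By (A1) and $\boldsymbol\alpha^T\boldsymbol\alpha = 1$, we have

$$
\begin{aligned}
T_1^2 &\le 2\Big\|(\mathbf I + \lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1})\boldsymbol\Sigma_{\mathcal A}^{1/2}\,\frac{\lambda_2\boldsymbol\beta^*_{\mathcal A}}{n + \lambda_2}\Big\|_2^2 + 2\big\|\lambda_2\boldsymbol\Sigma_{\mathcal A}^{-1/2}\boldsymbol\beta^*_{\mathcal A}\big\|_2^2\\
&\le 2\,\frac{\lambda_2^2}{(n + \lambda_2)^2}\,\big\|\boldsymbol\Sigma_{\mathcal A}^{1/2}\boldsymbol\beta^*_{\mathcal A}\big\|_2^2\Big(1 + \frac{\lambda_2}{bn}\Big)^2 + 2\lambda_2^2\|\boldsymbol\beta^*_{\mathcal A}\|_2^2\,\frac{1}{bn}\\
&\le \frac{2\lambda_2^2Bn}{(n + \lambda_2)^2}\Big(1 + \frac{\lambda_2}{bn}\Big)^2\|\boldsymbol\beta^*_{\mathcal A}\|_2^2 + 2\lambda_2^2\|\boldsymbol\beta^*_{\mathcal A}\|_2^2\,\frac{1}{bn}.
\end{aligned}
$$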

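Hence, it follows by (A6) that $T_1 = o(1)$. Similarly, we can bound $T_2$ as follows:

$$
\begin{aligned}
T_2^2 &\le \Big(1 + \frac{\lambda_2}{bn}\Big)^2\big\|\boldsymbol\Sigma_{\mathcal A}^{1/2}\big(\tilde{\boldsymbol\beta}^*_{\mathcal A} - \tilde{\boldsymbol\beta}^*_{\mathcal A}(\lambda_2, 0)\big)\big\|_2^2\\
&\le \Big(1 + \frac{\lambda_2}{bn}\Big)^2Bn\,\big\|\tilde{\boldsymbol\beta}^*_{\mathcal A} - \tilde{\boldsymbol\beta}^*_{\mathcal A}(\lambda_2, 0)\big\|_2^2\\
&\le \Big(1 + \frac{\lambda_2}{bn}\Big)^2Bn\,\Big(\frac{\lambda_1^*\sqrt p\,\hat\eta^{-\gamma}}{bn + \lambda_2}\Big)^2,
\end{aligned}
$$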

where we have used (6.10) in the last step. Then, (6.13) tells us that $T_2^2 = o(1)\,O_P(1)$, and hence $T_2 = o_P(1)$.

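Next, we consider $T_3$. Let $\mathbf X_{\mathcal A}[i, ]$ denote the $i$th row of the matrix $\mathbf X_{\mathcal A}$. With such notation, we can write $T_3 = \sum_{i=1}^n r_i\varepsilon_i$, where $r_i = \boldsymbol\alpha^T(\mathbf X_{\mathcal A}^T\mathbf X_{\mathcal A})^{-1/2}(\mathbf X_{\mathcal A}[i, ])^T$.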

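Then, it is easy to see that

$$
\begin{aligned}
\sum_{i=1}^n r_i^2 &= \sum_{i=1}^n\boldsymbol\alpha^T(\mathbf X_{\mathcal A}^T\mathbf X_{\mathcal A})^{-1/2}(\mathbf X_{\mathcal A}[i, ])^T(\mathbf X_{\mathcal A}[i, ])(\mathbf X_{\mathcal A}^T\mathbf X_{\mathcal A})^{-1/2}\boldsymbol\alpha\\
&= \boldsymbol\alpha^T(\mathbf X_{\mathcal A}^T\mathbf X_{\mathcal A})^{-1/2}(\mathbf X_{\mathcal A}^T\mathbf X_{\mathcal A})(\mathbf X_{\mathcal A}^T\mathbf X_{\mathcal A})^{-1/2}\boldsymbol\alpha\\
&= \boldsymbol\alpha^T\boldsymbol\alpha = 1.
\end{aligned}
\tag{6.14}
$$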
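The normalization in (6.14) is easy to confirm numerically. The sketch below assumes a hypothetical Gaussian design, a random unit vector `alpha` and `delta = 1`; it also uses the actual smallest eigenvalue of $\mathbf X_{\mathcal A}^T\mathbf X_{\mathcal A}$ as a stand-in for the lower bound $bn$, which is an assumption of this illustration rather than part of the proof.

```python
import numpy as np

rng = np.random.default_rng(2)
n, k, delta = 500, 6, 1.0                     # hypothetical sizes; delta as in k = 2 + delta
X_A = rng.standard_normal((n, k))
alpha = rng.standard_normal(k)
alpha /= np.linalg.norm(alpha)                # enforce alpha^T alpha = 1

evals, evecs = np.linalg.eigh(X_A.T @ X_A)
Sigma_neg_half = (evecs / np.sqrt(evals)) @ evecs.T

# r_i = alpha^T (X_A^T X_A)^{-1/2} (X_A[i, ])^T, computed for all rows at once.
r = X_A @ Sigma_neg_half @ alpha

sum_r_sq = np.sum(r ** 2)                     # should equal 1, as in (6.14)
lyapunov_factor = np.max(r ** 2) ** (delta / 2)
row_bound = np.max((X_A ** 2).sum(axis=1)) / evals[0]

print(f"sum r_i^2 = {sum_r_sq:.12f}")
print(f"max r_i^2 = {np.max(r ** 2):.4f} <= row bound {row_bound:.4f}")
print(f"(max r_i^2)^(delta/2) = {lyapunov_factor:.4f}")
```

Because the $r_i^2$ sum to one, no single observation can dominate $T_3$ as long as $\max_i r_i^2$ is small, which is the quantity the Lyapunov argument controls.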

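Furthermore, we have for $k = 2 + \delta$, $\delta > 0$,

$$
\begin{aligned}
\sum_{i=1}^n E[|\varepsilon_i|^{2+\delta}]\,|r_i|^{2+\delta}
&\le E[|\varepsilon|^{2+\delta}]\sum_{i=1}^n|r_i^2|\,\max_i|r_i|^{\delta}\\
&= E[|\varepsilon|^{2+\delta}]\Big(\max_i|r_i^2|\Big)^{\delta/2}.
\end{aligned}
$$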

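Note that $r_i^2 \le \|\boldsymbol\Sigma_{\mathcal A}^{-1/2}(\mathbf X_{\mathcal A}[i, ])^T\|_2^2 \le \big(\sum_{j\in\mathcal A}x_{ij}^2\big)\big(\lambda_{\max}(\boldsymbol\Sigma_{\mathcal A}^{-1})\big) \le \frac{\sum_{j=1}^p x_{ij}^2}{bn}$. Hence,

$$\sum_{i=1}^n E[|\varepsilon_i|^{2+\delta}]\,|r_i|^{2+\delta} \le E[|\varepsilon|^{2+\delta}]\Big(\frac{\max_i\sum_{j=1}^p x_{ij}^2}{bn}\Big)^{\delta/2} \to 0. \tag{6.15}$$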
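A small Monte Carlo experiment can illustrate what the Lyapunov argument delivers for $T_3 = \sum_{i=1}^n r_i\varepsilon_i$. This is only a sketch: the Gaussian design, the choice of `alpha`, the number of replications and the centered exponential errors (chosen because they are skewed, not because the paper uses them) are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
n, k, reps, sigma = 300, 5, 10000, 1.0        # hypothetical simulation settings
X_A = rng.standard_normal((n, k))
alpha = np.ones(k) / np.sqrt(k)               # unit vector, alpha^T alpha = 1

evals, evecs = np.linalg.eigh(X_A.T @ X_A)
Sigma_neg_half = (evecs / np.sqrt(evals)) @ evecs.T
r = X_A @ Sigma_neg_half @ alpha              # weights r_i with sum r_i^2 = 1

# Skewed i.i.d. errors with mean 0 and variance sigma^2.
eps = sigma * (rng.exponential(1.0, size=(reps, n)) - 1.0)
T3 = eps @ r                                  # one draw of T_3 per replication

t3_mean = T3.mean()
t3_var = T3.var()
t3_skew = np.mean((T3 - t3_mean) ** 3) / t3_var ** 1.5
print(f"mean = {t3_mean:.3f}, variance = {t3_var:.3f}, skewness = {t3_skew:.3f}")
```

The individual errors have skewness 2, while the simulated $T_3$ should have mean near 0, variance near $\sigma^2$ and much smaller skewness, consistent with the $N(0, \sigma^2)$ limit.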

From (6.14) and (6.15), Lyapunov conditions for the central limit theorem are established. Thus, $T_3 \to_d N(0, \sigma^2)$. This completes the proof.

Acknowledgments. We sincerely thank an associate editor and referees for their helpful comments and suggestions.

REFERENCES

BREIMAN, L. (1996). Heuristics of instability and stabilization in model selection. Ann. Statist. 24 2350–2383. MR1425957

CANDES, E. and TAO, T. (2007). The Dantzig selector: Statistical estimation when p is much larger than n. Ann. Statist. 35 2313–2351. MR2382644

CANDES, E., WAKIN, M. and BOYD, S. (2008). Enhancing sparsity by reweighted $\ell_1$ minimization. J. Fourier Anal. Appl. To appear.

DONOHO, D. and JOHNSTONE, I. (1994). Ideal spatial adaptation via wavelet shrinkage. Biometrika 81 425–455. MR1311089

DONOHO, D., JOHNSTONE, I., KERKYACHARIAN, G. and PICARD, D. (1995). Wavelet shrinkage: Asymptopia? (with discussion). J. Roy. Statist. Soc. Ser. B 57 301–337. MR1323344

EFRON, B., HASTIE, T., JOHNSTONE, I. and TIBSHIRANI, R. (2004). Least angle regression. Ann. Statist. 32 407–499. MR2060166

FAN, J. and LI, R. (2001). Variable selection via nonconcave penalized likelihood and its oracle properties. J. Amer. Statist. Assoc. 96 1348–1360. MR1946581

FAN, J. and LI, R. (2006). Statistical challenges with high dimensionality: Feature selection in knowledge discovery. In International Congress of Mathematicians 3 595–622. MR2275698

FAN, J. and LV, J. (2008). Sure independence screening for ultra-high-dimensional feature space. J. Roy. Statist. Soc. Ser. B 70 849–911.

FAN, J. and PENG, H. (2004). Nonconcave penalized likelihood with a diverging number of parameters. Ann. Statist. 32 928–961. MR2065194

FAN, J., PENG, H. and HUANG, T. (2005). Semilinear high-dimensional model for normalization of microarray data: A theoretical analysis and partial consistency (with discussion). J. Amer. Statist. Assoc. 100 781–813. MR2201010

HUBER, P. (1988). Robust regression: Asymptotics, conjectures and Monte Carlo. Ann. Statist. 1 799–821. MR0356373

KNIGHT, K. and FU, W. (2000). Asymptotics for lasso-type estimators. Ann. Statist. 28 1356–1378. MR1805787

LAM, C. and FAN, J. (2008). Profile-kernel likelihood inference with diverging number of parameters. Ann. Statist. 36 2232–2260.

PORTNOY, S. (1984). Asymptotic behavior of M-estimators of p regression parameters when $p^2/n$ is large. I. Consistency. Ann. Statist. 12 1298–1309. MR0760690

TIBSHIRANI, R. (1996). Regression shrinkage and selection via the lasso. J. Roy. Statist. Soc. Ser. B 58 267–288. MR1379242

WANG, H., LI, R. and TSAI, C. (2007). Tuning parameter selectors for the smoothly clipped absolute deviation method. Biometrika 94 553–568. MR2410008

ZOU, H. (2006). The adaptive lasso and its oracle properties. J. Amer. Statist. Assoc. 101 1418–1429. MR2279469

ZOU, H. and HASTIE, T. (2005). Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B Stat. Methodol. 67 301–320. MR2137327

ZOU, H., HASTIE, T. and TIBSHIRANI, R. (2007). On the degrees of freedom of the lasso. Ann. Statist. 35 2173–2192. MR2363967

SCHOOL OF STATISTICS
UNIVERSITY OF MINNESOTA
MINNEAPOLIS, MINNESOTA 55455
USA
E-MAIL: hzou@stat.umn.edu

DEPARTMENT OF STATISTICS
NORTH CAROLINA STATE UNIVERSITY
RALEIGH, NORTH CAROLINA 27695-8203
USA
E-MAIL: hzhang2@stat.ncsu.edu