Lectures in Modern Economic Time Series Analysis. 2 ed. c

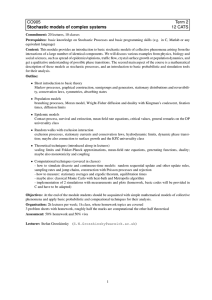

advertisement


Bo Sjö

Linköping, Sweden

email:bo.sjo@liu.se

October 30, 2011


CONTENTS

1 Introduction
1.1 Outline of this Book/Text/Course/Workshop
1.2 Why Econometrics?
1.3 Junk Science and Junk Econometrics

2 Introduction to Econometric Time Series
2.1 Programs
2.2 Different types of time series
2.3 Repetition - Your First Courses in Statistics and Econometrics

I Basic Statistics

3 Time Series Modeling - An Overview
3.1 Statistical Models
3.2 Random Variables
3.3 Moments of random variables
3.4 Popular Distributions in Econometrics
3.5 Analysing the Distribution
3.6 Multidimensional Random Variables
3.7 Marginal and Conditional Densities
3.8 The Linear Regression Model - A General Description

4 The Method of Maximum Likelihood
4.1 MLE for a Univariate Process
4.2 MLE for a Linear Combination of Variables

5 The Classical tests - Wald, LM and LR tests

II Time Series Modeling

6 Random Walks, White noise and All That
6.1 Different types processes
6.2 White Noise
6.3 The Log Normal Distribution
6.4 The ARIMA Model
6.5 The Random Walk Model
6.6 Martingale Processes
6.7 Markov Processes
6.8 Brownian Motions
6.9 Brownian motions and the sum of white noise
6.9.1 The geometric Brownian motion
6.9.2 A more formal definition

7 Introduction to Time Series Modeling
7.1 Descriptive Tools for Time Series
7.1.1 Weak and Strong Stationarity
7.1.2 Weak Stationarity, Covariance Stationary and Ergodic Processes
7.1.3 Strong Stationarity
7.1.4 Finding the Optimal Lag Length and Information Criteria
7.1.5 The Lag Operator
7.1.6 Generating Functions
7.1.7 The Difference Operator
7.1.8 Filters
7.1.9 Dynamics and Stability
7.1.10 Fractional Integration
7.1.11 Building an ARIMA Model. The Box-Jenkin's Approach
7.1.12 Is the ARMA model identified?
7.2 Theoretical Properties of Time Series Models
7.2.1 The Principle of Duality
7.2.2 Wold's decomposition theorem
7.3 Additional Topics
7.3.1 Seasonality
7.3.2 Non-stationarity
7.4 Aggregation
7.5 Overview of Single Equation Dynamic Models

8 Multipliers and Long-run Solutions of Dynamic Models.

9 Vector Autoregressive Models
9.0.1 How estimate a VAR?
9.0.2 Impulse responses in a VAR with non-stationary variables and cointegration.
9.1 BVAR, TVAR etc.

III Granger Non-causality Tests

10 Introduction to Exogeneity and Multicollinearity
10.1 Exogeneity
10.1.1 Weak Exogeneity
10.1.2 Strong Exogeneity
10.1.3 Super Exogeneity
10.2 Multicollinearity and understanding of multiple regression.

11 Univariate Tests of The Order of Integration
11.0.1 The DF-test
11.0.2 The ADF-test
11.0.3 The Phillips-Perron test
11.0.4 The LMSP-test
11.0.5 The KPSS-test
11.0.6 The G(p, q) test.
11.1 The Alternative Hypothesis in I(1) Tests
11.2 Fractional Integration

12 Non-Stationarity and Co-integration
12.0.1 The Spurious Regression Problem
12.0.2 Integrated Variables and Co-integration
12.0.3 Approaches to Testing for Co-integration

13 Integrated Variables and Common Trends

14 A Deeper Look at Johansen's Test

15 The Estimation of Dynamic Models
15.1 Deterministic Explanatory Variables
15.2 The Deterministic Trend Model
15.3 Stochastic Explanatory Variables
15.4 Lagged Dependent Variables
15.5 Lagged Dependent Variables and Autocorrelation
15.6 The Problems of Dependence and the Initial Observation
15.7 Estimation with Integrated Variables

16 Encompassing

17 ARCH Models
17.0.1 Practical Modelling Tips
17.1 Some ARCH Theory
17.2 Some Different Types of ARCH and GARCH Models
17.3 The Estimation of ARCH models

18 Econometrics and Rational Expectations
18.0.1 Rational v.s. other Types of Expectations
18.0.2 Typical Errors in the Modeling of Expectations
18.0.3 Modeling Rational Expectations
18.0.4 Testing Rational Expectations

19 A Research Strategy

20 References
20.1 APPENDIX 1
20.2 Appendix III Operators
20.2.1 The Expectations Operator
20.2.2 The Variance Operator
20.2.3 The Covariance Operator
20.2.4 The Sum Operator
20.2.5 The Plim Operator
20.2.6 The Lag and the Difference Operators


1. INTRODUCTION

"He who controls the past controls the future." George Orwell in "1984".

Please respect that this is a work in progress. It has never been my intention to write a commercial book, or a perfect textbook in time series econometrics. It is simply a collection of lectures in a popular form that can serve as a complement to ordinary textbooks and articles used in education. The parts dealing with tests for unit roots (order of integration) and cointegration are not well developed. These topics have a memo of their own, "A Guide to testing for unit roots and cointegration".

When I started to put these lecture notes together some years ago I decided on the title "Lectures in Modern Time Series Econometrics" because I thought that the contents were a bit "modern" compared to standard econometric textbooks. During the fall of 2010, as I started to update the notes, I thought that it was time to remove the word "modern" from the title. A quick look in Damodar Gujarati's textbook "Basic Econometrics" from 2009 convinced me to keep the word "modern" in the title. Gujarati's text on time series hasn't changed since the 1970s even though time series econometrics has changed completely since then. Thus, under these circumstances I see no reason to change the title, at least not yet.

There are four ways in which one can do time series econometrics. The first is to use the approach of the 1970s: view your time series model just like any linear regression, and impose a number of ad hoc restrictions that will hide all the problems you find. This is not a good approach. It is only found in old textbooks and never in today's research. You might only see it used in journals of very low scientific standing. Second, you can use theory to derive a time series model, and interesting parameters, that you then estimate with appropriate estimators. Examples of this are to derive utility functions, assume that agents have rational expectations, etc. This is a proper research strategy. However, it typically takes good data, and you need to be original in your approach, but you can get published in good journals. The third approach is simply to do a statistical description of the data series, in the form of a vector autoregressive system, or the reduced form of the vector error correction model. This system can be used for forecasting and for analysing relationships among data series, and it can be investigated with respect to unforeseen shocks such as drastic changes in energy prices, money supply etc. The fourth way is to go beyond the vector autoregressive system and try to estimate structural parameters in the form of elasticities and policy intervention parameters. If you forget about the first method, the choice depends on the problem at hand and how you choose to formulate it. This book aims at telling you how to use methods three and four.

The basic thinking is that your data is the real world; theories are abstractions that we use to understand the real world. In applied econometric time series you should always strive to build well-defined statistical models, that is, models that are consistent with the data chosen. There is a complex statistical theory behind all this, which I will try to popularize in this book. I do not see this book as a substitute for an ordinary textbook. It is simply a complement.


1.1 Outline of this Book/Text/Course/Workshop

This book is intended for people who have done a basic course in statistics and econometrics, either at the undergraduate or at the graduate level. If you did an undergraduate course I assume that you did it well. Econometrics is a type of course where every lecture, and every textbook chapter, leads to the next level. The best way to learn econometrics is to be active: read several books and work on your own with econometric software. No teacher can teach you how to run a piece of software. That is something you have to learn on your own by practicing. There is some very good software out there. The outline differs between the graduate and the Ph.D. level mainly in the theoretical parts. At the Ph.D. level, there is more stress on theoretical backgrounds.

1) I will begin by talking about why econometrics is different from statistics, and why econometric time series is different from the econometrics you meet in many basic textbooks.

2) I will repeat very briefly basic statistics and linear regression, and stress what you should know in terms of testing and modeling dynamic models. For most students that will imply going back and doing some quick repetition.

3) Introduction to statistical theory, including maximum likelihood, random variables, density functions and stochastic processes.

4) Basic time series properties and processes.

5) Using and understanding ARFIMA and VAR modelling techniques.

6) Testing for non-stationarity in the form of stochastic trends, i.e. testing for unit roots.

7) The spurious regression problem.

8) Testing and understanding cointegration.

9) Testing for Granger non-causality.

10) The theory of reduction, exogeneity and building dynamic models and systems.

11) Modelling time varying variances, ARCH and GARCH models.

12) The implications and consequences of rational expectations on econometric modelling.

13) Non-linearities.

14) Additional topics.

For most of these topics I have developed more or less self-instructing exercises.

1.2 Why Econometrics?

Why is there a subject called econometrics? Why study econometrics, instead of statistics? Why not let the statisticians teach statistics, and in particular time series techniques? These are common questions, raised during seminars and in private, by students, statisticians and economists. The answer is that each scientific area tends to create its own special methodological problems, often heavily interrelated with theoretical issues. These problems, and the ways of solving them, are important in a particular area of science but not necessarily in others. Economics is a typical example, where the formulation of the economic problem and of the statistical problem are deeply interrelated from the beginning.

In everyday life we are forced to make decisions based on limited information. Most of our decisions deal with an uncertain stochastic future. We all base our decisions on some view of the economy where we assume that certain events are linked to each other in more or less complex ways. Economists call this a model of the economy. We can describe the economy and the behavior of the individuals in terms of multivariate stochastic processes. Decisions based on stochastic sequences play a central role in economics and in finance. Stochastic processes are the basis for our understanding of the behavior of economic agents and of how their behavior determines the future path of the economy. Most econometric textbooks deal with stochastic time series as a special application of the linear regression technique. Though this approach is acceptable for an introductory course in econometrics, it is unsatisfactory for students with a deeper interest in economics and finance. To understand the empirical and theoretical work in these areas, it is necessary to understand some of the basic philosophy behind stochastic time series.

This work is a work in progress. It is based on my lectures on Modern Economic Time Series Analysis at the Department of Economics, first at the University of Gothenburg and later at the University of Skövde and Linköping University in Sweden. The material is not ready for widespread distribution. This work, most likely, contains lots of errors; some are known by the author, and some are not yet detected. The different sections do not necessarily follow in a logical order. Therefore, I invite anyone who has opinions about this work to share them with me.

The first part of this work provides a repetition of some basic statistical concepts, which are necessary for understanding modern economic time series analysis. The motive for repeating these concepts is that they play a larger role in econometrics than many contemporary textbooks in econometrics indicate. Econometrics did not change much from the first edition of Johnston in the 60s until the revised version of Kmenta in the mid 80s. However, the critique against the use of econometrics delivered by Sims, Lucas, Leamer, Hendry and others, in combination with new insights into the behavior of non-stationary time series and the rapid development of computer technology, has revolutionized econometric modeling and resulted in an explosion of knowledge. The demands for writing a decent thesis, or a scientific paper, based on econometric methods have risen far beyond what one can learn in an introductory course in econometrics.

1.3 Junk Science and Junk Econometrics

In the media you often hear about this and that being proved by scientific research. In the late 1990s newspapers reported that someone had proved that genetically modified (GM) food could be dangerous. The news spread quickly, and according to the story the original article had been stopped from being published by scientists with suspicious motives. Various lobby groups immediately jumped in: GM food was dangerous, it should be banned, and more money should go into this line of research. What had happened was the following. A researcher claimed to have shown that GM food was bad for health. He presented these results to a number of media people, who distributed them. (Remember the fuss about 'cold fusion'.) The results were presented in a paper sent to a scientific journal for publication. The journal, however, did not publish the article. It was dismissed because the results were not based on a sound scientific method. The researcher had fed rats with potatoes. One group of rats got GM potatoes, the other group got normal non-GM potatoes. The rats that got GM potatoes seemed to develop cancer more often than the control group. The statistical difference between the groups was not big, but sufficiently big for those wanting to confirm their a priori beliefs that GM food is bad. A somewhat embarrassing detail, never reported in the media, is that rats in general do not like potatoes. As a consequence both groups of rats in this study were suffering from starvation, which severely affected the test. It was not possible to determine if the difference between the two groups was caused by starvation or by GM food. Once the researcher conditioned on the effects of starvation, the difference became insignificant. This is an example of "junk science": bad science getting a lot of media exposure because the results fit the interests of lobby groups, and can be used to scare people.

The lesson for econometricians is obvious: if you come up with "good" results you get rewarded, while "bad" results can quickly be forgotten. The GM food example is extreme compared with econometric work. Econometric research seldom gets such media coverage, though there are examples, such as the claim that Sweden's economic growth is lower than that of other similar countries, or the assumed dynamic effects of a reduction of marginal taxes. There are significant results that depend on one single outlier. Once the outlier is removed, the significance is gone, and the whole story behind this particular book is also gone.
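The point about a single outlier can be illustrated with a small sketch. The data below are made up for the purpose (they are not taken from any study mentioned here), and `slope_t_stat` is a hypothetical helper that computes the ordinary least squares slope and its t-statistic by hand for a regression with an intercept.

```python
import math


def slope_t_stat(x, y):
    """Return the OLS slope and its t-statistic for y = a + b*x + e."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual variance with n - 2 degrees of freedom.
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = rss / (n - 2)
    return slope, slope / math.sqrt(s2 / sxx)


# Made-up data: y alternates between +1 and -1 and is unrelated to x.
x_clean = list(range(1, 11))
y_clean = [1.0 if i % 2 else -1.0 for i in x_clean]

# The same data plus one extreme observation.
x_outlier = x_clean + [30]
y_outlier = y_clean + [20.0]

slope_clean, t_clean = slope_t_stat(x_clean, y_clean)
slope_out, t_out = slope_t_stat(x_outlier, y_outlier)
print(f"without outlier: slope={slope_clean:.3f}, t={t_clean:.2f}")
print(f"with outlier:    slope={slope_out:.3f}, t={t_out:.2f}")
```

With the extreme observation included, the t-statistic is far above the usual rule-of-thumb value of 2. Without it, the t-statistic is far below 2 in absolute value. The "significant" relation rests entirely on one data point.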

In these lectures we will argue that the only way to avoid junk econometrics is careful and systematic construction and testing of models. Basically, this is the modern econometric time series approach. Why is this modern, and why stress the idea of testing? The answers are simply that careers have been built on running junk econometric equations, and that most people are unfamiliar with scientific methods in general and with the consequences of living in a world surrounded by random variables in particular.


2. INTRODUCTION TO ECONOMETRIC TIME SERIES

"Time is a great teacher, but unfortunately it kills all its pupils" Louis Hector

Berlioz

A time series is simply data ordered by time. For an econometrician, a time series is usually data that is also generated over time in such a way that time can be seen as a driving factor behind the data. Time series analysis consists of approaches that look for regularities in data ordered by time.

In comparison with other academic fields, the modeling of economic time series is characterized by the following problems, which partly motivate why econometrics is a subject of its own:

- The empirical sample sizes in economics are generally small, especially compared with many applications in physics or biology. Typical sample sizes range between 25 and 100 observations. In many areas anything below 500 observations is considered a small sample.

- Economic time series are dependent in the sense that they are correlated with other economic time series. In economics, problems are almost never concerned with univariate series. Consumption, as an example, is a function of income, and at the same time, consumption also affects income directly and through various other variables.

- Economic time series are often dependent over time. Many series display high autocorrelation, as well as cross autocorrelation with other variables over time.

- Economic time series are generally non-stationary. Their means and variances change over time, implying that estimated parameters might follow unknown distributions instead of standard tabulated distributions like the normal distribution. Non-stationarity arises from productivity growth and price inflation. Non-stationary economic series appear to be integrated, driven by stochastic trends, perhaps as a result of stochastic changes in total factor productivity. Integrated variables, and in particular the need to model them, are not that common outside economics. In some situations, therefore, inference in econometrics becomes quite complicated, and requires the development of new statistical techniques for handling stochastic trends. The concepts of cointegration and common trends, and the recently developed asymptotic theory for integrated variables, are examples of this.

- Economic time series cannot be assumed to be drawn from samples in the way assumed in classical statistics. The classical approach is to start from a population from which a sample is drawn. Since the sampling process can be controlled, the variables which make up the sample can be seen as random variables. Hypotheses are then formulated and tested conditionally on the assumption that the random variables have a specific distribution. Economic time series are seldom random variables drawn from some underlying population in the classical statistical sense. Observations do not represent a random sample in the classical statistical sense, because the econometrician cannot control the sampling process of variables. Variables like GDP, money, prices and dividends are given from history. To get a different sample we would have to re-run history, which of course is impossible. The way statistical theory deals with this situation is to reverse the approach taken in classical statistical analysis, and build a model that describes the behavior of the observed data. A model which achieves this is called a well-defined statistical model. It can be understood as a parsimonious, time invariant model with white noise residuals that makes sense from economic theory.

- Finally, from the view of economics, the subject of statistics deals mainly with the estimation and inference of covariances only. The econometrician, however, must also give estimated parameters an economic interpretation. This problem cannot always be solved ex post, after a model has been estimated. When it comes to time series, economic theory is an integrated part of the modeling process. Given a well-defined statistical model, estimated parameters should represent the behavior of economic agents. Many econometric studies fail because researchers assume that their estimates can be given an economic interpretation without considering the statistical properties of the model, or the simple fact that there is in general not a one-to-one correspondence between observed variables and the concepts defined in economic theory.¹
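To make the statement that the variance of a non-stationary series changes over time concrete, here is a minimal simulation sketch. It assumes the simplest integrated process, a random walk built from standard normal shocks and starting at zero. The function name, the number of paths and the seed are arbitrary choices for illustration.

```python
import random
import statistics


def simulate_random_walks(n_paths, n_steps, seed=0):
    """Simulate n_paths random walks y_t = y_{t-1} + e_t with y_0 = 0."""
    rng = random.Random(seed)
    paths = []
    for _ in range(n_paths):
        level, path = 0.0, []
        for _ in range(n_steps):
            level += rng.gauss(0.0, 1.0)  # e_t ~ N(0, 1)
            path.append(level)
        paths.append(path)
    return paths


paths = simulate_random_walks(n_paths=2000, n_steps=100)

# Variance across paths at an early and at a late point in time.
var_early = statistics.pvariance([p[9] for p in paths])   # t = 10
var_late = statistics.pvariance([p[99] for p in paths])   # t = 100
print(f"variance at t=10:  {var_early:.1f}")
print(f"variance at t=100: {var_late:.1f}")
```

For a random walk the variance at time t is t times the variance of the shocks, so the variance at t = 100 should come out roughly ten times that at t = 10. A stationary series would show about the same variance at both dates.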

2.1 Programs

Here is a list of statistical software that you should be familiar with, please google (those recommended for time series are marked with *):

- *RATS and CATS in RATS, Regression Analysis of Time Series and Cointegrating Analysis of Time Series (www.estima.com)

- *PcGive - Comes highly recommended. Included in Oxmetrics modules, see

also Timberlake consultants for more programs.

- *Gretl (Free GNU license, very good for students in econometrics)

- *JMulti (Free for multivariate time series analysis, updated? The discussion

forum is quite dead, www.jmulti.com)

- *EViews

- Gauss (good for simulation)

- STATA (used by the World Bank, good for microeconometrics, panel data,

OK on time series)

- LIMDEP ('Mostly free' with some editions of Greene's econometrics textbook? You need to pay for duration models?)

- SAS - Statistical Analysis System (good for big data sets, but not time series,

mainly medicine, "the calculus program for decision makers")

- Shazam

And more, some are very special programs for this and that, ... but I don't find them worth mentioning in this context.

¹ For a recent discussion about the controversies in econometrics, see The Economic Journal 1996.

There is a bunch of software that allows you to program your own models or use other people's modules:

- Matlab

- R (Free, GNU license, connects with Gretl)

- Ox

You should also know about C, C++, and LaTeX to be a good econometrician.

Please google.

For Data Envelopment Analysis (DEA) I recommend Tom Coelli’s DEAP 2.1

or Paul W. Wilson’s FEAR.

2.2 Different types of time series

Given the general definition of time series above, there are many types of time series. The focus in econometrics, macroeconomics and finance is on stochastic time series, typically in the time domain, which are non-stationary in levels but become what is called covariance stationary after differencing.
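As a small sketch of what "stationary after differencing" means, assume the level of a series is a pure random walk, y_t = y_{t-1} + e_t, with made-up normal shocks. Taking first differences then recovers the shocks themselves, which are white noise. The helper `difference` is a hypothetical name used only here.

```python
import random

rng = random.Random(1)
shocks = [rng.gauss(0.0, 1.0) for _ in range(200)]  # e_t, white noise

# Build the level series y_t = y_{t-1} + e_t, starting from y_0 = 0.
levels = []
level = 0.0
for e in shocks:
    level += e
    levels.append(level)


def difference(series):
    """First difference: series[t] - series[t-1] for t = 1, ..., T-1."""
    return [series[t] - series[t - 1] for t in range(1, len(series))]


diffs = difference(levels)

# The differenced series equals the shocks (one observation is lost).
max_gap = max(abs(d - e) for d, e in zip(diffs, shocks[1:]))
print(f"observations in levels: {len(levels)}, in differences: {len(diffs)}")
print(f"largest gap between differences and shocks: {max_gap:.2e}")
```

The level series wanders without returning to a fixed mean, while its first difference is just the original shock series, up to floating point rounding.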

In a broad perspective, time series analysis typically aims at making time series more understandable by decomposing them into different parts. The aim of this introduction is to give a general overview of the subject. A time series is any sequence ordered by time. The sequence can be either deterministic or stochastic. The primary interest in economics is in stochastic time series, where the sequence of observations is made up by the outcome of random variables. A sequence of stochastic variables ordered by time is called a stochastic time series process.

The random variables that make up the process can either be discrete random variables, taking on a given set of integer numbers, or be continuous random variables taking on any real number between ±∞. While discrete random variables are possible, they are not that common in economic time series research.

Another dimension in modeling time series is to consider processes in discrete time or in continuous time. The principal difference is that stochastic variables in continuous time can take different values at any time. In a discrete time process, the variables are observed at fixed intervals of time ($t$), and they do not change between these observation points. Discrete time variables are not common in finance and economics. There are few, if any, variables that remain fixed between their points of observation. The distinction between continuous time and discrete time is not a matter of measurability alone. A common mistake is to be confused by the fact that economic variables are measured at discrete time intervals. The money stock is generally measured and recorded as an end-of-month value. This way of measuring the stock of money does not imply that it remains unchanged between observations; instead it changes whenever the money market is open. The same holds for variables like production and consumption. These activities take place 24 hours a day, during the whole year. They are measured as the flow of income and consumption over a period, typically a quarter, representing the integral sum of these activities.
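The difference between how a stock and a flow are recorded can be sketched as follows. The daily figures are hypothetical and the period length of 30 days is an arbitrary assumption; the two helper functions are illustrative names only.

```python
def end_of_period_stock(daily_stock, days_per_period):
    """Sample a stock variable on the last day of each complete period."""
    last_start = len(daily_stock) - days_per_period
    return [daily_stock[start + days_per_period - 1]
            for start in range(0, last_start + 1, days_per_period)]


def period_flow(daily_flow, days_per_period):
    """Sum a flow variable over each complete period."""
    last_start = len(daily_flow) - days_per_period
    return [sum(daily_flow[start:start + days_per_period])
            for start in range(0, last_start + 1, days_per_period)]


# Hypothetical daily data covering four periods of 30 days each.
daily_money_stock = [100.0 + 0.5 * day for day in range(120)]
daily_consumption = [2.0] * 120

# The stock changes every day but is recorded only at the period end.
recorded_stock = end_of_period_stock(daily_money_stock, 30)
# The flow is recorded as the sum of all activity within the period.
recorded_flow = period_flow(daily_consumption, 30)

print("recorded money stock:", recorded_stock)
print("recorded consumption:", recorded_flow)
```

In both cases the underlying variable moves every day. The recorded series is a discrete time summary of it: a point sample for the stock and a sum, approximating the integral, for the flow.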

Usually, a discrete time variable is written with a time subscript, $x_t$, while continuous time variables are written as $x(t)$. The continuous time approach has a number of benefits, but the cost and quality of the empirical results seldom motivate the continuous time approach. It is better to use discrete time approaches as an approximation to the underlying continuous time system. The cost of this simplification is small compared with the complexity of continuous time analysis. This should not be understood as a rejection of all continuous time approaches. Continuous time is good for analyzing a number of well-defined problems like aggregation over time and individuals. In the end it should lead to a better understanding of adjustment speeds, stability conditions and interactions among economic time series, see Sjöö (1990, 1995).²

In addition, stochastic time series can be analysed in the time domain or in the frequency domain. In the time domain the data are analysed ordered in given time periods such as days, weeks, years etc. The frequency approach decomposes time series into frequencies by using trigonometric functions such as sines and cosines. Spectral analysis is an example of analysis that uses the frequency domain to identify regularities such as seasonal factors, trends, and systematic lags in adjustment. The main advantage of analysing time series in the frequency domain is that it is relatively easy to handle continuous time processes and observations observed as aggregations over time, such as consumption.
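A minimal sketch of the frequency domain idea is the periodogram, computed here with a plain discrete Fourier transform. The series is a made-up monthly series with a 12-month cycle, and the function name and scaling are choices for this illustration, not a reference to any particular software.

```python
import cmath
import math


def periodogram(series):
    """Return (frequency, power) pairs for frequencies k/n, k = 1..n//2."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]  # remove the zero frequency
    result = []
    for k in range(1, n // 2 + 1):
        coef = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                   for t, x in enumerate(centered))
        result.append((k / n, abs(coef) ** 2 / n))
    return result


# Hypothetical monthly series: a constant plus a 12-month seasonal cycle.
series = [10.0 + 3.0 * math.sin(2 * math.pi * t / 12) for t in range(120)]

# The frequency with the largest power identifies the dominant cycle.
freq, power = max(periodogram(series), key=lambda pair: pair[1])
dominant_period = 1 / freq
print(f"dominant frequency: {freq:.4f} cycles per month")
print(f"dominant period:    {dominant_period:.1f} months")
```

The peak of the periodogram sits at the frequency of one cycle per 12 observations, which is how a seasonal factor shows up in the frequency domain.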

However, in economics and finance we are typically faced with given observations at given frequencies, and we seek to study the behavior of agents operating in real time. Under these circumstances, the time domain is the most interesting road ahead because it has a direct intuitive appeal to both economists and policy makers.


Our interest is usually in analysing discrete time stochastic processes in the

time domain.

A time series process is generally indicated with braces, like $\{y_t\}$. In some situations it is necessary to be more precise about the length of the process. Writing $\{y_t\}_{1}^{\infty}$ indicates that the process starts at period one and continues infinitely. The process consists of random variables because we can view each element in $\{y_t\}$ as a random variable. Let the process go from the integer values 1 up to T. If necessary, to be exact, the first variable in the process can be written as $y_{t_1}$, the second variable as $y_{t_2}$, etc., up until $y_{t_T}$. The distribution function of the process can then be written as $F(y_{t_1}, y_{t_2}, \ldots, y_{t_T})$.

2 We can also mention the different types of series that are used: stocks, flows and price variables. Stocks are variables that can be observed at a point in time, like the money stock or inventories. Flows are variables that can only be observed over some period, like consumption or GDP. In this context price variables include prices, interest rates and similar variables which can be observed in a market at a given point in time. Combining these variables into multivariate processes and constructing econometric models from observed variables in discrete time produces further problems, and in general they are quite difficult to solve without using continuous time methods. Usually, careful discrete time models will reduce the problems to a large extent.


In some situations it is necessary to start from the very beginning. A time series is data ordered by time. A stochastic time series is a set of random variables ordered by time. Let $\tilde{Y}_{it}$ represent the stochastic variable $\tilde{Y}_i$ given at time t. Observations on this random variable are often indicated as $y_{it}$. A series starting at time t = 1 and ending at time t = T, consisting of T different random variables, is written as $\{\tilde{Y}_{1,1}, \tilde{Y}_{2,2}, \ldots, \tilde{Y}_{T,T}\}$. Of course, assuming that the series is built up by individual random variables, each with its own independent probability distribution, is a complex thought. But nothing in our definition of stochastic time series rules out that the data is made up of completely different random variables. Sometimes, to understand and find solutions to practical problems, it will be necessary to go all the way back to the most basic assumptions.

Suppose we are given a time series consisting of yearly observations of interest rates, {6.6, 7.5, 5.9, 5.4, 5.5, 4.5, 4.3, 4.8}. The first question to ask is whether this is a stochastic series in the sense that these numbers were generated by one stochastic process, or perhaps by several different stochastic processes. Further questions are whether the process or processes are best represented as continuous or discrete, and whether the observations are independent or dependent. Quite often we will assume that the series is generated by one and the same stochastic process in discrete time. Based on these assumptions the modelling process tries to find systematic historical patterns and cross-correlations with other variables in the data.

All time series methods aim at decomposing the series into separate parts in some way. The standard approach in time series analysis is to decompose as

$$y_t = T_{t,d} + S_{t,d} + C_{t,d} + I_t,$$

where $T_{t,d}$ and $S_{t,d}$ represent (deterministic) trend and seasonal components, $C_{t,d}$ is a deterministic cyclical component and $I_t$ is a process representing irregular factors.3

For time series econometrics this definition is limited, since the econometrician is highly interested in the irregular component. As an alternative, let $\{y_t\}$ be a stochastic time series process, which is composed as

$$\begin{aligned}
y_t &= \text{systematic components} + \text{unsystematic components} \\
    &= T_d + T_s + S_d + S_s + \{y_t\} + e_t,
\end{aligned} \tag{2.1}$$

where the systematic components include deterministic trends $T_d$, stochastic trends $T_s$, deterministic seasonals $S_d$, stochastic seasonals $S_s$, a stationary process (or the short-run dynamics) $y_t$, and finally a white noise innovation term $e_t$. The modeling problem can be described as the problem of identifying the systematic components such that the residual becomes a white noise process. For all series, remember that any inference is potentially wrong if not all components have been modeled correctly. This is so regardless of whether we model a simple univariate series with time series techniques, a reduced system, or a structural model. Inference is only valid for a correctly specified model.
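To make the decomposition in (2.1) concrete, the following minimal sketch simulates a series as a sum of components. Everything specific in it is an assumption made for illustration and is not taken from the text: a linear deterministic trend, a random walk as the stochastic trend, fixed quarterly seasonal effects, an AR(1) process for the stationary part, Gaussian white noise, and all parameter values. Stochastic seasonals are left out to keep the example short.

```python
import random


def simulate_components(T=120, seed=42):
    """Simulate y_t as a sum of components in the spirit of equation (2.1).

    All functional forms and parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    seasonal_pattern = [0.8, -0.3, 0.4, -0.9]  # quarterly effects, sum to zero
    stochastic_trend = 0.0                     # random walk (T_s)
    stationary = 0.0                           # AR(1) short-run dynamics
    rows = []
    for t in range(1, T + 1):
        det_trend = 0.05 * t                               # T_d
        stochastic_trend += rng.gauss(0.0, 0.1)            # T_s
        det_seasonal = seasonal_pattern[(t - 1) % 4]       # S_d
        stationary = 0.7 * stationary + rng.gauss(0.0, 0.5)
        e = rng.gauss(0.0, 0.2)                            # white noise e_t
        y = det_trend + stochastic_trend + det_seasonal + stationary + e
        rows.append({"t": t, "T_d": det_trend, "T_s": stochastic_trend,
                     "S_d": det_seasonal, "stationary": stationary,
                     "e": e, "y": y})
    return rows


series = simulate_components()
print(series[0]["y"], series[-1]["y"])
```

In applied work only the column `y` is observed; the modeling problem described above is to recover the other columns well enough that what is left over behaves like white noise.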

3 For simplicity we assume a linear process. An alternative is to assume that the components are multiplicative, $x_t = T_{t,d} S_{t,d} C_{t,d} I_t$.

2.3 Repetition - Your First Courses in Statistics and Econometrics

1. To be completed...

In your first course in statistics you learned how to use descriptive statistics: the mean and the variance. Next you learned to calculate the mean and variance from a sample that represents the whole underlying population. For the mean and the variance to work as a description of the underlying population it is necessary to construct the sample in such a way that the difference between the sample mean and the true population mean is non-systematic, meaning that the difference between the sample mean and the population mean is unpredictable. This means that your estimated sample mean is a random variable with known characteristics.

The most important thing is to construct a sampling mechanism so that the mean calculated from the sample has the characteristics you want it to have. That is, the estimated mean should be unbiased, efficient and consistent. You learned about random variables, probabilities, distribution functions and frequency distributions.

Your first course in econometrics

"A theory should be as simple as possible, but not simpler" Albert Einstein

To be completed...

Random variables, OLS, minimize the sum of squares, assumptions 1 - 5(6),

understanding, multiple regression, multicollinearity, properties of OLS estimator

Matrix algebra

Tests and 'solutions' for heteroscedasticity (cross-section), and autocorrelation (time series).

If you took a good course you should have learned the three golden rules: test, test, test, and learned about the properties of the OLS estimator.

Generalized least squares GLS

System estimation: demand and supply models.

Further extensions:

Panel data, Tobit, Heckit, discrete choice, probit/logit, duration

Time series: distributed lag models, partial adjustment models, error correction models, lag structure, stationarity vs. non-stationarity, co-integration

What you need to know ...

What you probably do not know but should know.

OLS

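Ordinary least squares is a common estimation method. Suppose there are two series $\{y_t, x_t\}$,

$$y_t = \alpha + \beta x_t + \varepsilon_t.$$

Minimize the sum of squares over the sample $t = 1, 2, \ldots, T$,

$$S = \sum_{t=1}^{T} \varepsilon_t^2 = \sum_{t=1}^{T} (y_t - \alpha - \beta x_t)^2.$$

Take the derivative of S with respect to $\alpha$ and $\beta$, set the expressions to zero, and solve for $\alpha$ and $\beta$:

$$\frac{\partial S}{\partial \alpha} = -2\sum_{t=1}^{T} (y_t - \alpha - \beta x_t) = 0,$$

$$\frac{\partial S}{\partial \beta} = -2\sum_{t=1}^{T} x_t (y_t - \alpha - \beta x_t) = 0,$$

$$\hat{\beta} = \frac{\sum_{t=1}^{T} (x_t - \bar{x})(y_t - \bar{y})}{\sum_{t=1}^{T} (x_t - \bar{x})^2}, \qquad \hat{\alpha} = \bar{y} - \hat{\beta}\bar{x},$$

that is, $\hat{\beta}$ is the sample covariance of x and y divided by the sample variance of x.

The total sum of squares (TSS) splits into the explained sum of squares (ESS) and the residual sum of squares (RSS):

$$TSS = ESS + RSS,$$

$$1 = \frac{ESS}{TSS} + \frac{RSS}{TSS},$$

$$R^2 = 1 - \frac{RSS}{TSS} = \frac{ESS}{TSS}.$$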
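The closed-form solution and the sum-of-squares decomposition can be checked numerically. The sketch below is a plain-Python illustration for the two-variable model with an intercept; the function name `ols_simple` and the five data points are made up for the example.

```python
def ols_simple(x, y):
    """OLS for y_t = alpha + beta * x_t + eps_t using the closed-form solution."""
    T = len(x)
    x_bar = sum(x) / T
    y_bar = sum(y) / T
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    beta = s_xy / s_xx
    alpha = y_bar - beta * x_bar
    fitted = [alpha + beta * xi for xi in x]
    tss = sum((yi - y_bar) ** 2 for yi in y)                 # total
    ess = sum((fi - y_bar) ** 2 for fi in fitted)            # explained
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))   # residual
    return {"alpha": alpha, "beta": beta, "TSS": tss, "ESS": ess,
            "RSS": rss, "R2": 1.0 - rss / tss}


# Hypothetical data, chosen only to illustrate the calculation.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
result = ols_simple(x, y)
print(result)
```

Note that the identity TSS = ESS + RSS relies on the regression containing an intercept; without one the decomposition does not hold in general.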

Basic assumptions

1) $E(\varepsilon_t) = 0$ for all t

2) $E(\varepsilon_t^2) = \sigma^2$ for all t

3) $E(\varepsilon_t \varepsilon_{t-k}) = 0$ for all $k \neq 0$

4) $E(X_t \varepsilon_t) = 0$

5) $E(X'X) \neq 0$

6) $\varepsilon_t \sim NID(0, \sigma^2)$

Discuss these properties

Properties

Gauss-Markov BLUE

Deviations

Misspecification, add extra variable, forget relevant variable

Multicollinearity

Error in variables problem

Homoscedasticity Heteroscedasticity

Autocorrelation


Part I

Basic Statistics


3. TIME SERIES MODELING - AN OVERVIEW

Economists are generally interested in a small part of what is normally included in the subject "Time Series Analysis". Various techniques such as filtering, smoothing and interpolation developed for deterministic time series are of relatively minor interest for economists. Time series econometrics is more focused on the stochastic part of time series. The following is a brief overview of time series modeling from an econometric perspective. It is not a textbook in mathematical statistics, nor is the ambition to be extremely rigorous in the presentation of statistical concepts. The aim is rather to be a guide for the not yet so informed economist who wants to know more about the statistical concepts behind time series econometrics.

When approaching time series econometrics the statistical vocabulary quickly increases and can become overwhelming. These first two chapters seek to make it possible for people without deeper knowledge in mathematical statistics to read and follow the econometric and financial time series literature.

A time series is simply a set of observations ordered by time. Time series techniques seek to decompose this ordered series into different components, which in turn can be used to generate forecasts and to learn about the dynamics of the series and how it relates to other series. There are a number of dimensions and decisions to keep track of when approaching this subject.

First, the series, or the process, can be univariate or multivariate, depending on the problem at hand. Second, the series can be stochastic or purely deterministic. In the former case a stochastic random process is generating the observations. Third, given that the series is stochastic, with perhaps deterministic components, it can be modeled in the time domain or in the frequency domain. Modeling in the frequency domain implies describing the series in terms of cosine functions of different wave lengths. This is a useful approach for solving some problems, but not a general approach for economic time series modeling. Fourth, the data generating process and the statistical model can be constructed in continuous or discrete time. Continuous time econometrics is good for some problems but not all. In general it leads to more complex models. A discrete time approach builds on the assumption that the observed data is unchanged between the intervals of observation. This is a convenient approximation that makes modeling easier, but it comes at a cost in the form of aggregation biases. However, in the general case, this is a low cost compared with the costs of general misspecification. A special chapter deals with the discussion of discrete versus continuous time modeling.

The typical economic time series is a discrete stochastic process modeled in the time domain. Time series can be modelled by smoothing and filter techniques. For economists these techniques are generally uninteresting, though we will briefly come back to the concept of filters.

The simplest way to model an economic time series is to use autoregressive techniques, or ARIMA techniques in the general case. Most economic time series, however, are better modeled as a part of a multivariate stochastic process. Economic theory suggests systems of economic variables, leading to single equation transfer functions and systems of equations in a VAR model.

These techniques are descriptive; they do not identify structural, or "deep", parameters like elasticities, marginal propensities to consume etc. To estimate more specific economic models, we turn to techniques such as VECM, SVAR, and structural VECM.

What is outlined above is quite different from the typical basic econometric textbook approach, which starts with OLS and ends in practice with GLS as the solution to all problems. Here we will develop methods which first describe the statistical properties of the (joint) series at hand, and then allow the researcher to answer economic questions in such a way that the conclusions are statistically and economically valid. To get there we have to start with some basic statistics.

3.1 Statistical Models

A general definition of statistical time series analysis is that it finds a mathematical model that links observed variables with the stochastic mechanism that generated the data. This sounds abstract, but the purpose of this abstraction is to understand the analytical tools of time series statistics. The practical problem is the following: we have some stochastic observations over time. We know that these observations have been generated by a process, but we do not know what this process looks like. Statistical time series analysis is about developing the tools needed to mimic the unknown data generating process (DGP).

We can formulate some general features of the model. First, it should be "a well-defined statistical model" in the sense that the assumptions behind the model should be valid for the data chosen. Later we will define more exactly what this implies for an econometric model. For the time being, we can say that the single most important criterion for a model is that the residuals should be a white noise process. Second, the parameters of the model should be stable over time. Third, the model should be simple, or parsimonious, meaning that its functional form should be simple. Fourth, the model should be parameterized in such a way that it is possible to give the parameters a clear interpretation and identify them with events in the real world. Finally, the model should be able to explain other rival models describing the dependent variable(s).

The way to build a "well-defined statistical model" is to investigate the underlying assumptions of the model in a systematic way. It can easily be shown that t-values, $R^2$, and Durbin-Watson values are not sufficient for determining the fit of a model. In later chapters we will introduce a systematic test procedure.

The final aim of econometric modelling is to learn about economic behavior. To some extent this always implies using some a priori knowledge in the form of theoretical relationships. Economists, in general, have extremely strong a priori beliefs about the size and sign of certain parameters. This way of thinking has led to much confusion, because a priori beliefs can be driven too far. Econometrics is basically about measuring correlations. It is a common misunderstanding among non-econometricians that correlations can be too high or too low, or be deemed right or wrong. Measured correlations are the outcome of the data used, only. Anyone who thinks of an estimated correlation as "wrong" must also explain what went wrong in the estimation process, which requires knowledge of econometrics and the real world.


3.2 Random Variables

The basic reason for dealing with stochastic models rather than deterministic models is that we are faced with random variables. A popular definition of random variables goes like this: a random variable is a variable that can take on more than one value.1 For every possible value that a random variable can take on there is a number between zero and one that describes the probability that the random variable will take on this value. In the following a random variable is indicated with a tilde, as in $\tilde{X}$.

In statistical terms, a random variable is associated with the outcome of a statistical experiment. All possible outcomes of such an experiment can be called the sample space. If S is a sample space with a probability measure and if $\tilde{X}$ is a real valued function defined over S, then $\tilde{X}$ is called a random variable.

There are two types of random variables: discrete random variables, which only take on a specific number of real values, and (absolutely) continuous random variables, which can take on any value between $-\infty$ and $\infty$. It is also possible to examine discontinuous random variables, but we will limit ourselves to the first two types.

If the discrete random variable $\tilde{X}$ can take k values $(x_1, \ldots, x_k)$, the probability of observing a value $x_j$ can be stated as

$$P(x_j) = p_j. \tag{3.1}$$

Since probabilities of discrete random variables are additive, the probability of observing one of the k possible outcomes is equal to 1.0, or using the notation just introduced,

$$P(x_1, x_2, \ldots, \text{or } x_k) = p_1 + p_2 + \cdots + p_k = 1. \tag{3.2}$$

A discrete random variable is described by its probability function, $F(x_i)$, which specifies the probability with which $\tilde{X}$ takes on a certain value. (The term cumulative distribution is used synonymously with probability function.)

In time series econometrics we are in most applications dealing with continuous random variables. Unlike discrete variables, it is not possible to associate a specific observation with a certain probability, since these variables can take on an infinite range of numbers. The probability that a continuous random variable will take on a certain value is always zero. Because it is continuous we cannot make a difference between 1.01 and 1.0101 etc. This does not mean that the variables do not take on specific values. The outcome of the experiment, or the observation, is of course always a given number.

Thus, for a continuous random variable, statements of the probability of an observation must be made in terms of the probability that the random variable $\tilde{X}$ is less than or equal to some specific value. We express this with the distribution function F(x) of the random variable $\tilde{X}$ as follows,

$$F(x) = P(\tilde{X} \le x) \quad \text{for } -\infty < x < \infty, \tag{3.3}$$

which states the probability of $\tilde{X}$ taking a value less than or equal to x.

The continuous analogue of the probability function is called the density function f(x), which we get by differentiating the distribution function with respect to the observations (x),

$$\frac{dF(x)}{dx} = f(x). \tag{3.4}$$

1 Random variables (RV:s) are also called stochastic variables, chance variables, or variates.

The fundamental theorem of integral calculus gives us the following expression for the probability that $\tilde{X}$ takes on a value less than or equal to x,

$$F(x) = \int_{-\infty}^{x} f(u)\,du. \tag{3.5}$$

It follows that for any two constants (a) and (b), with a < b, the probability that $\tilde{X}$ takes on a value on the interval from (a) to (b) is given by

$$F(b) - F(a) = \int_{-\infty}^{b} f(u)\,du - \int_{-\infty}^{a} f(u)\,du \tag{3.6}$$

$$= \int_{a}^{b} f(u)\,du. \tag{3.7}$$
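As a numerical check of (3.6) and (3.7), the sketch below compares the two routes to an interval probability for a standard normal variable: the difference of distribution function values, and the integral of the density. The choice of the standard normal, the interval [-1, 2] and the simple trapezoid rule are assumptions made for the illustration.

```python
import math


def normal_pdf(u):
    """Density f(u) of a standard normal variable."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def normal_cdf(x):
    """Distribution function F(x) of a standard normal variable."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def integrate(f, a, b, n=10_000):
    """Trapezoid rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


a, b = -1.0, 2.0
prob_from_cdf = normal_cdf(b) - normal_cdf(a)      # F(b) - F(a)
prob_from_pdf = integrate(normal_pdf, a, b)        # integral of f from a to b
print(prob_from_cdf, prob_from_pdf)
```

The two numbers agree up to the discretization error of the trapezoid rule, which shrinks as `n` grows.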

The term density function is used in a way that is analogous to density in

physics. Think of a rod of variable density, measured by the function f (x). To

obtain the weight of some given length of this rod, we would have to integrate its

density function over that particular part in which we are interested.

Random variables are described by their density function and/or by their moments: the mean, the variance etc. Given the density function, the moments can be determined exactly. In statistical work, we must first estimate the moments; from the moments we can learn about the density function. For instance, we can test if the assumption of an underlying normal density function is consistent with the observed data.

A random variable can be predicted, in other words it is possible to form an

expectation of its outcome based on its density function. Appendix III deals with

the expectations operator and other operators related to random variables.

3.3 Moments of random variables

Random variables are characterized by their probability density functions (pdf:s) or their moments. In the previous section we introduced pdf:s. Moments refer to measurements such as the mean, the variance, skewness, etc. If we know the exact density function of a random variable then we would also know the moments. In applied work, we will typically first calculate the moments from a sample, and from the moments figure out the density function of the variables. The term moment originates from physics and the moment of a pendulum. For our purposes it can be thought of as a general term which includes the definition of concepts like the mean and the variance, without referring to any specific distribution. Starting with the first moment, the mathematical expectation of a discrete random variable is given by

$$E(\tilde{X}) = \sum_{x} x f(x), \tag{3.8}$$

where E is the expectation operator and f(x) is the value of its probability function at x. Thus, $E(\tilde{X})$ represents the mean of the discrete random variable $\tilde{X}$, or, in other words, the first moment of the random variable. For a continuous random variable ($\tilde{X}$), the mathematical expectation is

$$E(\tilde{X}) = \int_{-\infty}^{\infty} x f(x)\,dx, \tag{3.9}$$

where f(x) is the value of its probability density at x. The first moment can also be referred to as the location of the random variable. Location is a more generic concept than the first moment or the mean.

The term moments is used in situations where we are interested in the expected value of a function of a random variable, rather than the expectation of the specific variable itself. Say that we are interested in $\tilde{Y}$, whose values are related to $\tilde{X}$ by the equation y = g(x). The expectation of $\tilde{Y}$ is equal to the expectation of $g(\tilde{X})$, since $E(\tilde{Y}) = E[g(\tilde{X})]$. In the continuous case this leads to

$$E(\tilde{Y}) = E[g(\tilde{X})] = \int_{-\infty}^{\infty} g(x) f(x)\,dx. \tag{3.10}$$

Like density, the term moment, or moment about the origin, has its explanation in physics. (In physics the length of a lever arm is measured as the distance from the origin. Or, if we refer to the example with the rod above, the first moment about the origin would correspond to the horizontal center of gravity of the rod.) Reasoning from intuition, the mean can be seen as the midpoint of the limits of the density. The midpoint can be scaled in such a way that it becomes the origin of the x-axis.

The term "moments of a random variable" is a more general way of talking about the mean and variance of a variable. Setting g(x) equal to $x^r$, we get the r:th moment around the origin,

$$\mu'_r = E(\tilde{X}^r) = \sum_{x} x^r f(x) \tag{3.11}$$

when $\tilde{X}$ is a discrete variable. In the continuous case we get

$$\mu'_r = E(\tilde{X}^r) = \int_{-\infty}^{\infty} x^r f(x)\,dx. \tag{3.12}$$

The first moment is nothing else than the mean, or the expected value of $\tilde{X}$. The second moment about the mean is the variance. Higher moments give additional information about the distribution and density functions of random variables.

Now, defining $g(\tilde{X}) = (\tilde{X} - \mu'_1)^r$, where $\mu'_1 = E(\tilde{X})$ is the mean, we get what is called the r:th moment about the mean of the distribution of the random variable $\tilde{X}$. For r = 0, 1, 2, 3, ... we get for a discrete variable

$$\mu_r = E[(\tilde{X} - \mu'_1)^r] = \sum_{x} (x - \mu'_1)^r f(x), \tag{3.13}$$

and when $\tilde{X}$ is continuous

$$\mu_r = E[(\tilde{X} - \mu'_1)^r] = \int_{-\infty}^{\infty} (x - \mu'_1)^r f(x)\,dx. \tag{3.14}$$

The second moment about the mean, also called the second central moment, is nothing else than the variance of g(x) = x,

$$var(\tilde{X}) = \int_{-\infty}^{\infty} [x - E(\tilde{X})]^2 f(x)\,dx \tag{3.15}$$

$$= \int_{-\infty}^{\infty} x^2 f(x)\,dx - [E(\tilde{X})]^2 \tag{3.16}$$

$$= E(\tilde{X}^2) - [E(\tilde{X})]^2, \tag{3.17}$$

where f(x) is the value of the probability density function of the random variable $\tilde{X}$ at x. A more generic expression for the variance is dispersion. We can say that the second moment, or the variance, is a measure of dispersion, in the same way as the mean is a measure of location.
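The definitions of moments about the origin and about the mean, and the shortcut in (3.17), can be verified exactly for a small discrete distribution. The sketch below uses a fair six-sided die as an assumed example and exact fractions so that no rounding is involved; the helper names are hypothetical.

```python
from fractions import Fraction


def raw_moment(values, probs, r):
    """r:th moment about the origin: sum of x**r * f(x)."""
    return sum(p * x ** r for x, p in zip(values, probs))


def central_moment(values, probs, r):
    """r:th moment about the mean: sum of (x - mean)**r * f(x)."""
    mean = raw_moment(values, probs, 1)
    return sum(p * (x - mean) ** r for x, p in zip(values, probs))


# A fair die: each outcome 1..6 has probability 1/6.
values = [1, 2, 3, 4, 5, 6]
probs = [Fraction(1, 6)] * 6

mean = raw_moment(values, probs, 1)                  # first moment
variance = central_moment(values, probs, 2)          # second central moment
shortcut = raw_moment(values, probs, 2) - mean ** 2  # E(X^2) - [E(X)]^2
third = central_moment(values, probs, 3)             # zero for a symmetric pmf

print(mean, variance, shortcut, third)
```

The variance computed from the definition and from the shortcut coincide, and the third central moment is zero because the distribution is symmetric around its mean.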

The third moment, r = 3, measures asymmetry around the mean, referred to as skewness. The normal distribution is symmetric around the mean: the likelihood of observing a value above or below the mean is the same for a normal distribution. For a right skewed distribution, the right tail is longer, so large deviations above the mean are more likely than equally large deviations below it. For a left skewed distribution, large deviations below the mean are more likely than equally large deviations above it.

The fourth moment, referred to as kurtosis, measures the thickness of the tails of the distribution. A distribution with thicker tails than the normal is characterized by a higher likelihood of extreme events compared with the normal distribution. Higher moments give further information about the skewness, tails and the peak of the distribution. The fifth, the seventh moments etc. give more information about the skewness. Even moments above four give further information about the thickness of the tails and the peak.

3.4 Popular Distributions in Econometrics

In time series econometrics, and financial economics, there is a small set of distributions that one has to know. The following is a list of common distributions:

Distribution                 Notation
Normal distribution          $N(\mu, \sigma^2)$
Log Normal distribution      $LogN(\mu, \sigma^2)$
Student t distribution       $St(\nu, \mu, \sigma^2)$
Cauchy distribution          $Ca(\mu, \sigma^2)$
Gamma distribution           $Ga(\nu, \mu, \sigma^2)$
Chi-square distribution      $\chi^2(\nu)$
F distribution               $F(d_1, d_2)$
Poisson distribution         $Pois(\lambda)$
Uniform distribution         $U(|a, b|)$

The pdf of a normal distribution is written as

$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}.$$

The normal distribution is characterized by the following: the distribution is symmetric around its mean, and it is completely described by its first two moments, the mean and the variance, $N(\mu, \sigma^2)$. The normal distribution can be standardised to have a mean of zero and variance of unity (say, $(x - E(x))/\sigma_x$) and is consequently called a standardised normal distribution, N(0, 1).

In addition, it follows that the first four moments, the mean, the variance, the skewness and kurtosis, are $E(\tilde{X}) = \mu$, $Var(\tilde{X}) = \sigma^2$, $Sk(\tilde{X}) = 0$, and $Ku(\tilde{X}) = 3$.

There are random variables that are not normal by themselves but become normal if they are logged. The typical examples are stock prices and various macroeconomic variables. Let $S_t$ be a stock price. The dollar return over a given interval, $R_t = S_t - S_{t-1}$, is not likely to be normally distributed due to the simple fact that the stock price is rising over time, partly due to the fact that investors demand a return on their investment but mostly due to inflation. However, if you take the log of the stock price and calculate the per cent return (approximately), $r_t = \ln S_t - \ln S_{t-1}$, this variable is much more likely to have a normal distribution (or a distribution that can be approximated with a normal distribution). Thus, since you have taken logs of variables in your econometric models, you have already worked with log normal variables. Knowledge about log normal distributions is necessary if you want to model, or better understand, the movements of actual stock prices and dollar returns.
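The difference between dollar returns and log returns is easy to see on a short price series. The prices below are invented for the illustration; the sketch computes $R_t = S_t - S_{t-1}$, the simple percentage return, and $r_t = \ln S_t - \ln S_{t-1}$.

```python
import math

# Hypothetical closing prices, used only to illustrate the definitions.
prices = [100.0, 102.0, 101.0, 105.0, 110.0]

dollar_returns = [prices[t] - prices[t - 1] for t in range(1, len(prices))]
simple_returns = [prices[t] / prices[t - 1] - 1.0 for t in range(1, len(prices))]
log_returns = [math.log(prices[t]) - math.log(prices[t - 1])
               for t in range(1, len(prices))]

for R, s, r in zip(dollar_returns, simple_returns, log_returns):
    print(f"dollar {R:6.2f}   simple {s:8.5f}   log {r:8.5f}")

# Log returns add up over time to the log of the total price change.
print(sum(log_returns), math.log(prices[-1] / prices[0]))
```

For small price changes the log return is close to the simple percentage return, which is why it is read as an approximate per cent return, and log returns have the convenient property that they sum over time.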

The Student t distribution is similar to the normal distribution: it is symmetric around the mean and it has a variance, but it has thicker tails than the normal distribution. The Student t distribution is described by $(\nu, \mu, \sigma^2)$, where $\mu$ refers to the mean and $\sigma^2$ refers to the variance. The parameter $\nu$ is called the degrees of freedom of the Student t distribution and refers to the thickness of the tails. A random variable that follows a Student t distribution will converge to a normal random variable as the degrees of freedom, which in testing situations grow with the number of observations, go to infinity.

The Cauchy distribution is related to the normal distribution and the Student t distribution (it is the Student t distribution with one degree of freedom). Like the normal it is symmetric and described by two parameters, but it has fatter tails and is therefore better suited for modelling random variables which take on relatively more extreme values than the normal. The setback for empirical work is that its moments are not defined, meaning that it is difficult to use empirical moments to test for a Cauchy distribution against, say, the normal or the Student t distribution.

The gamma and the chi-square distributions are related to variances of normal random variables. If we have a set of normal random variables $\{\tilde{Y}_1, \tilde{Y}_2, \ldots, \tilde{Y}_\nu\}$ and form a new variable as $\tilde{X} = \tilde{Y}_1^2 + \tilde{Y}_2^2 + \cdots + \tilde{Y}_\nu^2$, then this new variable will have a gamma distribution, $\tilde{X} \sim Ga(\nu, \mu, \sigma^2)$. A special case of the gamma distribution is when we have $\mu = 0$ and $\sigma^2 = 1$; the distribution is then called a chi-square distribution $\chi^2(\nu)$ with $\nu$ degrees of freedom. Thus, take the square of an estimated regression parameter and divide it by its variance and you get a chi-square distributed test for significance of the estimated parameter, $(\hat{\beta}/\hat{\sigma}_{\hat{\beta}})^2 \sim \chi^2(1)$.
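The link between squared standard normal variables and the chi-square distribution can be illustrated by simulation. The sketch below assumes independent N(0, 1) draws, sums $\nu$ squares, and compares the sample mean and variance of the result with the known chi-square values $\nu$ and $2\nu$. The number of draws and the seed are arbitrary choices.

```python
import random


def simulate_chi_square(nu, draws=20_000, seed=1):
    """Draw sums of nu squared independent standard normal variables."""
    rng = random.Random(seed)
    return [sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(nu))
            for _ in range(draws)]


nu = 3
sample = simulate_chi_square(nu)
sample_mean = sum(sample) / len(sample)
sample_var = sum((s - sample_mean) ** 2 for s in sample) / len(sample)

# A chi-square(nu) variable has mean nu and variance 2 * nu.
print(sample_mean, sample_var)
```

With a large number of draws the simulated mean and variance settle close to 3 and 6, the theoretical values for three degrees of freedom.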

The F distribution comes about when you compare the ratio (or log difference) of two squared normal random variables. The Poisson distribution is used to model jumps in the data, usually in combination with a geometric Brownian motion (jump diffusion models). The typical example is stock prices that might move up or down drastically. The parameter $\lambda$ measures the probability of a jump in the data.

3.5 Analysing the Distribution

In practical work we need to know the empirical distribution of the variables we are working with, in order to make any inference. All empirical distributions can be analysed with the help of their first four moments. Through the first four moments we get information first about the mean and the variance and second about the skewness and kurtosis. The latter moments are often critical when we decide if a certain empirical distribution should be seen as normal or at least approximately normal.

It is, of course, extremely convenient to work with the assumption of a normal distribution, since a normal distribution is described by its first two moments only. In finance, the expected return is given by the mean, and the risk of the asset is given by its variance. An approximation to the holding period return of an asset is the log difference of its price. In the case of a normal distribution, there is no need to consider higher moments. Furthermore, linear combinations of normal variates result in new normally distributed variables. In econometric work, building regression equations, the residual process is assumed to be a normally and independently distributed white noise process, in order to allow for inference and testing.

It is by calculating the sample moments that we learn about the distribution of the series at hand. The most typical problem in empirical work is to investigate how well the distribution of a variable can be approximated with a normal distribution. If the normal distribution is rejected for the residuals in a regression, the typical conclusion is that there is something important missing in the regression equation. The missing part is either an important explanatory variable, or the direct cause of an outlier.

To investigate the empirical distribution we need to calculate the sample moments of the variable. The sample mean of $\{x_t\} = \{x_1, x_2, \ldots, x_T\}$ can be estimated as $\hat{\mu}_x = \bar{x} = (1/T)\sum_{t=1}^{T} x_t$. Higher moments can be estimated with the formula $m_r = (1/T)\sum_{t=1}^{T} (x_t - \bar{x})^r$.2

If a series is normally distributed, $\tilde{X}_t \sim N(\mu_x, \sigma_x^2)$, subtracting the mean and dividing by the standard deviation leads to a standardised normal variable, distributed as $\tilde{X} \sim N(0, 1)$. For a standardised normal variable the third and fourth moments equal 0 and 3, respectively. The standardised third moment is known as skewness, given as $b_1 = m_3^2/m_2^3$. Because $b_1$ is a square, the direction of the skew is given by the sign of $m_3$. A negative value indicates a left-skewed distribution, compared with the normal. If the series is the return on an asset, it means that 'bad' or negative surprises dominate over 'good' positive surprises. A positive value implies a right-skewed distribution. In terms of asset returns, 'good' or positive surprises are more likely than 'bad' negative surprises.

The fourth moment, kurtosis, is calculated as $b_2 = m_4/m_2^2$. A value above 3 implies that the distribution generates more extreme values than the normal distribution. The distribution has fatter tails than the normal. Referring to asset returns, approximating the distribution with the normal would underestimate the risk associated with the asset.
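The moment formulas above can be sketched in a few lines of Python. This is an illustration only: the function names and the `returns` series are hypothetical, and `skewness` returns the signed quantity $m_3/m_2^{3/2}$ (whose square is $b_1$) so that the direction of the skew is visible.

```python
def central_moment(x, r):
    """r-th sample central moment m_r = (1/T) * sum((x_t - mean)^r)."""
    T = len(x)
    mean = sum(x) / T
    return sum((v - mean) ** r for v in x) / T


def skewness(x):
    """Signed standardised third moment m_3 / m_2^(3/2); its square is b_1."""
    return central_moment(x, 3) / central_moment(x, 2) ** 1.5


def kurtosis(x):
    """Standardised fourth moment b_2 = m_4 / m_2^2 (3 for a normal variable)."""
    return central_moment(x, 4) / central_moment(x, 2) ** 2


# Hypothetical return series with one large negative surprise.
returns = [0.5, 0.3, -0.2, 0.4, 0.1, -3.0, 0.2, 0.6]

print("skewness:", skewness(returns))
print("kurtosis:", kurtosis(returns))
```

With a single large negative observation the skewness comes out negative and the kurtosis exceeds 3, which is the left-skewed, fat-tailed pattern described in the text.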

An asymptotic test, with a null of a normal distribution, is given by³

$$JB = T\left[\frac{m_3^2/m_2^3}{6} + \frac{\left[(m_4/m_2^2) - 3\right]^2}{24}\right] + T\left[\frac{3m_1^2}{2m_2} + \frac{m_1 m_3}{m_2^2}\right] \sim \chi^2(2).$$

This test is known as the Jarque-Bera (JB) test and is the most common test for normality in regression analysis. The null hypothesis is that the series is normally distributed. Let $\mu_1$, $\mu_2$, $\mu_3$ and $\mu_4$ represent the mean, the variance, the skewness and the kurtosis. The null of a normal distribution is rejected if the test statistic is significant. The fact that the test is only valid asymptotically means that we do not know the reason for a rejection in a limited sample. In a finite sample, rejection of normality is often caused by outliers. If we think the most extreme value(s) in the sample are non-typical outliers, 'removing' them from the calculation of the sample moments usually results in a non-significant JB test. Removing outliers is, however, ad hoc. It could be that these outliers are typical values of the true underlying distribution.
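The effect of a single outlier on the test can be illustrated with a short Python sketch. It assumes a mean-adjusted series, so only the first bracketed term of the statistic is used; the function name and both data sets are hypothetical. The p-value uses the fact that the upper-tail probability of a $\chi^2(2)$ variable at $x$ is $\exp(-x/2)$.

```python
import math


def jarque_bera(x):
    """JB statistic for a mean-adjusted series, with its chi-square(2) p-value."""
    T = len(x)
    mean = sum(x) / T
    m2 = sum((v - mean) ** 2 for v in x) / T
    m3 = sum((v - mean) ** 3 for v in x) / T
    m4 = sum((v - mean) ** 4 for v in x) / T
    b1 = m3 ** 2 / m2 ** 3          # squared skewness
    b2 = m4 / m2 ** 2               # kurtosis
    jb = T * (b1 / 6 + (b2 - 3) ** 2 / 24)
    p_value = math.exp(-jb / 2)     # exact upper tail of chi-square(2)
    return jb, p_value


# Hypothetical symmetric sample, and the same sample with one extreme value.
clean = [-1.5, -1.0, -0.5, -0.3, 0.0, 0.0, 0.3, 0.5, 1.0, 1.5]
with_outlier = clean + [6.0]

jb_clean, p_clean = jarque_bera(clean)
jb_out, p_out = jarque_bera(with_outlier)
print("clean:       ", jb_clean, p_clean)
print("with outlier:", jb_out, p_out)
```

In this small example one extreme observation is enough to move the test from non-rejection to rejection at the 5 per cent level. With so few observations the asymptotic distribution is only a rough guide, which is the caveat raised in the text.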

2 For these moments to be meaningful, the series must be stationary. Also, we would like $\{x_t\}$ to be an independent process. Finally, notice that the estimators of the higher moments suggested here are not necessarily efficient estimators.

3 This test statistic is for a variable with a non-zero mean. If the variable is adjusted for its mean (say an estimated residual), the second term should be removed from the expression.


3.6 Multidimensional Random Variables

We will now generalize the work of the previous sections by considering a vector of n random variables,

$$\tilde{X} = (\tilde{X}_1, \tilde{X}_2, \ldots, \tilde{X}_n), \tag{3.18}$$

whose elements are continuous random variables with density functions $f(x_1), \ldots, f(x_n)$ and distribution functions $F(x_1), \ldots, F(x_n)$. The joint distribution function is

$$F(x_1, x_2, \ldots, x_n) = \int_{-\infty}^{x_n} \cdots \int_{-\infty}^{x_1} f(x_1, x_2, \ldots, x_n)\, dx_1 \cdots dx_n, \tag{3.19}$$

where $f(x_1, x_2, \ldots, x_n)$ is the joint density function.

If these random variables are independent, it will be possible to write their joint density as the product of their univariate densities,

$$f(x_1, x_2, \ldots, x_n) = f(x_1) f(x_2) \cdots f(x_n). \tag{3.20}$$

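For these random variables we can define the r:th product moment as,

\begin{align}
E(\tilde{X}_1^{r_1} \tilde{X}_2^{r_2} \cdots \tilde{X}_n^{r_n}) &= \tag{3.21} \\
\int_{-\infty}^{\infty} \cdots \int_{-\infty}^{\infty} x_1^{r_1} x_2^{r_2} \cdots x_n^{r_n} & f(x_1, x_2, \ldots, x_n)\, dx_1 dx_2 \cdots dx_n, \tag{3.22}
\end{align}

which, if the variables are independent, factorizes into the product

$$E(\tilde{X}_1^{r_1}) E(\tilde{X}_2^{r_2}) \cdots E(\tilde{X}_n^{r_n}). \tag{3.23}$$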

It follows from this result that the variance of a sum of independent random variables is merely the sum of the individual variances,

$$\mathrm{var}(\tilde{X}_1 + \tilde{X}_2 + \cdots + \tilde{X}_n) = \mathrm{var}(\tilde{X}_1) + \mathrm{var}(\tilde{X}_2) + \cdots + \mathrm{var}(\tilde{X}_n). \tag{3.24}$$
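For two variables, the step from (3.23) to (3.24) can be written out as follows. This is a sketch in which $\mu_1$ and $\mu_2$ denote the means of $\tilde{X}_1$ and $\tilde{X}_2$, a notation assumed here for illustration.

```latex
\begin{align*}
\mathrm{var}(\tilde{X}_1 + \tilde{X}_2)
  &= E\Big[\big((\tilde{X}_1 - \mu_1) + (\tilde{X}_2 - \mu_2)\big)^2\Big] \\
  &= \mathrm{var}(\tilde{X}_1) + \mathrm{var}(\tilde{X}_2)
     + 2\,\mathrm{cov}(\tilde{X}_1, \tilde{X}_2), \\
\mathrm{cov}(\tilde{X}_1, \tilde{X}_2)
  &= E(\tilde{X}_1 \tilde{X}_2) - E(\tilde{X}_1)E(\tilde{X}_2) = 0
  \quad \text{by (3.23) with } r_1 = r_2 = 1 .
\end{align*}
```

The cross term vanishes only when the covariance is zero, as it is under independence. For dependent series it has to be kept.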

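We can extend the discussion of covariance to linear combinations of random variables, say

$$a'\tilde{X} = a_1\tilde{X}_1 + a_2\tilde{X}_2 + \cdots + a_p\tilde{X}_p, \tag{3.25}$$

which leads to,

$$\mathrm{cov}(a'\tilde{X}) = \sum_{i=1}^{p}\sum_{j=1}^{p} a_i a_j \sigma_{ij}, \tag{3.26}$$

where $\sigma_{ij}$ is the covariance between $\tilde{X}_i$ and $\tilde{X}_j$.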

These results hold for matrices as well. If we have $\tilde{Y} = A\tilde{X}$, $\tilde{Z} = B\tilde{X}$, and $\Sigma$ is the covariance matrix of $\tilde{X}$, we also have that

$$\mathrm{cov}(\tilde{Y}, \tilde{Y}) = A\Sigma A', \tag{3.27}$$

$$\mathrm{cov}(\tilde{Z}, \tilde{Z}) = B\Sigma B', \tag{3.28}$$

and

$$\mathrm{cov}(\tilde{Y}, \tilde{Z}) = A\Sigma B'. \tag{3.29}$$
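A small numerical check of (3.27) to (3.29) in plain Python may help fix ideas. The covariance matrix `Sigma` and the weight matrices `A` and `B` below are hypothetical values chosen for illustration, and the helper functions are written out by hand rather than taken from a library.

```python
def matmul(P, Q):
    """Product of two matrices stored as lists of rows."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))]
            for i in range(len(P))]


def transpose(P):
    """Transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*P)]


def cov_linear(A, Sigma, B):
    """cov(A X, B X) = A Sigma B' when Sigma is the covariance matrix of X."""
    return matmul(matmul(A, Sigma), transpose(B))


# Hypothetical covariance matrix of (X1, X2): variances 4 and 9, covariance 1.
Sigma = [[4.0, 1.0],
         [1.0, 9.0]]
A = [[1.0, 1.0]]    # Y = X1 + X2
B = [[1.0, -1.0]]   # Z = X1 - X2

cov_yy = cov_linear(A, Sigma, A)
cov_zz = cov_linear(B, Sigma, B)
cov_yz = cov_linear(A, Sigma, B)
print(cov_yy, cov_zz, cov_yz)
```

The first result agrees with the double sum in (3.26): with $a_1 = a_2 = 1$ it is $\sigma_{11} + \sigma_{22} + 2\sigma_{12} = 4 + 9 + 2$.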


3.7 Marginal and Conditional Densities

Given a joint density function of n random variables, the joint density of a subset of them is called the joint marginal density. We can also talk about joint marginal distribution functions. If we set n = 3 we get the joint density function $f(x_1, x_2, x_3)$. Given the marginal density $g(x_2, x_3)$, the conditional probability density function of the random variable $\tilde{X}_1$, given that the random variables $\tilde{X}_2$ and $\tilde{X}_3$ take on the values $x_2$ and $x_3$, is defined as,

$$\varphi(x_1 \mid x_2, x_3) = \frac{f(x_1, x_2, x_3)}{g(x_2, x_3)}, \tag{3.30}$$

or

$$f(x_1, x_2, x_3) = \varphi(x_1 \mid x_2, x_3)\, g(x_2, x_3). \tag{3.31}$$
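The same relations can be checked on a discrete analogue, where sums replace integrals. The sketch below uses a hypothetical joint probability function over three binary variables; the probabilities and function names are illustrative assumptions.

```python
# Hypothetical joint probability function f(x1, x2, x3); the values sum to one.
joint = {
    (0, 0, 0): 0.10, (1, 0, 0): 0.05,
    (0, 0, 1): 0.15, (1, 0, 1): 0.10,
    (0, 1, 0): 0.05, (1, 1, 0): 0.20,
    (0, 1, 1): 0.10, (1, 1, 1): 0.25,
}


def marginal(x2, x3):
    """g(x2, x3): sum the joint probability function over x1."""
    return sum(p for (a, b, c), p in joint.items() if (b, c) == (x2, x3))


def conditional(x1, x2, x3):
    """phi(x1 | x2, x3) = f(x1, x2, x3) / g(x2, x3), as in (3.30)."""
    return joint[(x1, x2, x3)] / marginal(x2, x3)


# (3.31): the joint equals the conditional times the marginal.
for (x1, x2, x3), p in joint.items():
    assert abs(conditional(x1, x2, x3) * marginal(x2, x3) - p) < 1e-12

print(marginal(1, 0), conditional(1, 1, 0))
```

For each fixed pair $(x_2, x_3)$ the conditional probabilities sum to one over $x_1$, which is what makes them a proper distribution.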

Of course we can define a conditional density for various combinations of $\tilde{X}_1$, $\tilde{X}_2$ and $\tilde{X}_3$, like $p(x_1, x_3 \mid x_2)$ or $g(x_3 \mid x_1, x_2)$. And, instead of three different variables we can talk about the density function for one random variable, say $\tilde{Y}_t$, for which we have a sample of T observations. If all observations are independent we get,

$$f(y_1, y_2, \ldots, y_T) = f(y_1) f(y_2) \cdots f(y_T). \tag{3.32}$$

Like before we can also look at conditional densities, like

$$f(y_t \mid y_1, y_2, \ldots, y_{t-1}), \tag{3.33}$$

which in this case would mean that $y_t$, the observation at time t, is dependent on all earlier observations on $\tilde{Y}_t$.

It is seldom that we deal with independent variables when modeling economic time series. For example, a simple first order autoregressive model like $y_t = \phi y_{t-1} + \epsilon_t$ implies dependence between the observations. The same holds for all time series models. Despite this shortcoming, density functions with independent random variables are still good tools for describing time series modelling, because the results based on independent variables carry over to dependent variables in almost every case.
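One way to see why the independent case carries over: any joint density can be factorised into a sequence of conditional densities, and for a first order autoregressive model each factor depends only on the previous observation. A sketch, assuming the error term is independent of past observations and writing $T$ for the sample size:

```latex
\begin{align*}
f(y_1, y_2, \ldots, y_T)
  &= f(y_1)\prod_{t=2}^{T} f(y_t \mid y_1, \ldots, y_{t-1}) \\
  &= f(y_1)\prod_{t=2}^{T} f(y_t \mid y_{t-1})
  \qquad \text{(first order autoregression)} .
\end{align*}
```

The product form is the same as in (3.32), with conditional densities taking the place of the marginal ones.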

3.8 The Linear Regression Model — A General Description

In this section we look at the linear regression model starting from two random variables $\tilde{Y}$ and $\tilde{X}$. Two regressions can be formulated,

$$y = \alpha + \beta x + \epsilon, \tag{3.34}$$

and

$$x = \gamma + \delta y + \nu. \tag{3.35}$$

Whether one chooses to condition y on x, or x on y, depends on the parameter of interest. In the following it is shown how these regression expressions are constructed from the correlation between x and y, and their first moments by making