Swift: Compiled Inference for Probabilistic Programming


Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence (IJCAI-16)

Swift: Compiled Inference for Probabilistic Programming Languages

Yi Wu

UC Berkeley

jxwuyi@gmail.com

Lei Li

Toutiao.com

lileicc@gmail.com

Stuart Russell

UC Berkeley

russell@cs.berkeley.edu

Rastislav Bodik

University of Washington

bodik@cs.washington.edu

Abstract

A probabilistic program defines a probability measure over its semantic structures. One common goal of probabilistic programming languages (PPLs) is to compute posterior probabilities for arbitrary models and queries, given observed evidence, using a generic inference engine. Most PPL inference engines, even the compiled ones, incur significant runtime interpretation overhead, especially for contingent and open-universe models. This paper describes Swift, a compiler for the BLOG PPL. Swift-generated code incorporates optimizations that eliminate interpretation overhead, maintain dynamic dependencies efficiently, and handle memory management for possible worlds of varying sizes. Experiments comparing Swift with other PPL engines on a variety of inference problems demonstrate speedups ranging from 12x to 326x.

1 Introduction

Probabilistic programming languages (PPLs) aim to combine sufficient expressive power for writing real-world probability models with efficient, general-purpose inference algorithms that can answer arbitrary queries with respect to those models. One underlying motive is to relieve the user of the obligation to carry out machine learning research and implement new algorithms for each problem that comes along. Another is to support a wide range of cognitive functions in AI systems and to model those functions in humans.

General-purpose inference for PPLs is very challenging; they may include unbounded numbers of discrete and continuous variables, a rich library of distributions, and the ability to describe uncertainty over functions, relations, and the existence and identity of objects (so-called open-universe models). Existing PPL inference algorithms include likelihood weighting (LW) [Milch et al., 2005b], parental Metropolis–Hastings (PMH) [Milch and Russell, 2006; Goodman et al., 2008], generalized Gibbs sampling (Gibbs) [Arora et al., 2010], generalized sequential Monte Carlo [Wood et al., 2014], Hamiltonian Monte Carlo (HMC) [Stan Development Team, 2014], variational methods [Minka et al., 2014; Kucukelbir et al., 2015] and a form of approximate Bayesian computation [Mansinghka et al., 2013]. While better algorithms are certainly possible, our focus in this paper is on achieving orders-of-magnitude improvement in the execution efficiency of a given algorithmic process.

A PPL system takes a probabilistic program (PP) specifying a probabilistic model as its input and performs inference to compute the posterior distribution of a query given some observed evidence. The inference process does not (in general) execute the PP, but instead executes the steps of an inference algorithm (e.g., Gibbs) guided by the dependency structures implicit in the PP. In many PPL systems the PP exists as an internal data structure consulted by the inference algorithm at each step [Pfeffer, 2001; Lunn et al., 2000; Plummer, 2003; Milch et al., 2005a; Pfeffer, 2009]. This process is in essence an interpreter for the PP, similar to early Prolog systems that interpreted the logic program. Particularly when running sampling algorithms that involve millions of repetitive steps, the overhead can be enormous. A natural solution is to produce model-specific compiled inference code, but, as we show in Sec. 2, existing compilers for general open-universe models [Wingate et al., 2011; Yang et al., 2014; Hur et al., 2014; Chaganty et al., 2013; Nori et al., 2014] miss out on optimization opportunities and often produce inefficient inference code.

The paper analyzes the optimization opportunities for PPL compilers and describes the Swift compiler, which takes as input a BLOG program [Milch et al., 2005a] and one of three inference algorithms (LW, PMH, Gibbs) and generates target code for answering queries. Swift includes three main contributions: (1) elimination of interpretative overhead by joint analysis of the model structure and inference algorithm; (2) a dynamic slice maintenance method (FIDS) for incremental computation of the current dependency structure as sampling proceeds; and (3) efficient runtime memory management for maintaining the current-possible-world data structure as the number of objects changes. Comparisons between Swift and other PPLs on a variety of models demonstrate speedups ranging from 12x to 326x, leading in some cases to performance comparable to that of hand-built model-specific code. To the extent possible, we also analyze the contributions of each optimization technique to the overall speedup.

Although Swift is developed for the BLOG language, the overall design and the choices of optimizations can be applied to other PPLs and may bring useful insights to similar AI systems for real-world applications.

3637

Classical Probabilistic

Program Optimizations

(insensitive to inference

algorithm)

1

2

3

4

5

6

7

8

9

10

Classical Algorithm

Optimizations

(insensitive to model)

Inference Code 𝑷𝑰

Probabilistic

Program

(Model)

𝑷𝑴

𝓕𝑰 𝑷𝑴 → 𝑷𝑰

Algorithm-Specific

Code

𝓕cg 𝑷𝑰 → 𝑷𝑻

Target Code

(C++)

𝑷𝑻

Model-Specific Code

Our Optimization: FIDS

(combine model and algorithm)

type Ball; type Draw; type Color; //3 types

distinct Color Blue, Green; //two colors

distinct Draw D[2]; //two draws: D[0], D[1]

#Ball ~ UniformInt(1,20);//unknown # of balls

random Color color(Ball b) //color of a ball

~ Categorical({Blue -> 0.9, Green -> 0.1});

random Ball drawn(Draw d)

~ UniformChoice({b for Ball b});

obs color(drawn(D[0])) = Green; // drawn ball is green

query color(drawn(D[1])); // query

Figure

2: The

urn-ball

model

Figure

2: The

urn-ball

model

Our Optimization:

Data Structures for FIDS

1 type Cluster; type Data;

which

track all

theD[20];

dependencies

at runtime

2 distinct

Data

// 20 data

points even though typ3 #Cluster

Poisson(4);

// the

number

of clusters

ically

only a~ tiny

portion of

dependency

structure may

4 random Real mu(Cluster c) ~ Gaussian(0,10); //cluster mean

change

per

iteration.

Church

simply

avoids

keeping

track of

5 random Cluster z(Data d)

dependencies

and uses Eq.(1),

including

6

~ UniformChoice({c

for Cluster

c}); a potentially huge

7 random for

Real

x(Data d)

~ Gaussian(mu(z(d)),

overhead

redundant

probability

computations.1.0); // data

8 obs x(D[0]) = 0.1; // omit other data points

For

the

second

point,

in

order

to

track the existence of

9 query size({c for Cluster c});

the variables in an open-universe model, similar to BLOG,

Church also maintains a complicated dynamic string-based

Figure 3: The infinite Gaussian mixture model

hashing scheme in the target code, which again causes interpretation overhead.

Lastly,

by Hur et

al. [2014]such

, Chaganty

generictechniques

approach proposed

is Monte-Carlo

sampling,

as likeli[

]

[

]

et al.

2013

and

Nori

et

al.

2014

primarily

focus

on optihood weighting [Milch et al., 2005a] (LW) and Metropolismizing

PM by

analyzing

the static

properties

ofidea

the input

PP,

Hastings

algorithm

(MH).

For LW,

the main

is to sample

which

arerandom

complementary

Swift.

every

variable to

from

its prior per iteration and collect weighted samples for the queries, where the weight is the

3 likelihood

Background

of the evidences. For MH, the high-level procedure

ofpaper

the algorithm

is summarized

in Alg. 1.although

M denotes

an inThis

focuses on

the BLOG language,

our apput BLOG

program

model),

its evidence,

Q asemanquery, N

proach

also applies

to (or

other

PPLsEwith

equivalent

the number

samples,

denotes

world,

[McAllester

ticsdenotes

et al., of

2008;

Wu etWal.,

2014].a possible

Other PPLs

(x)also

is the

value of xtoinBLOG

possiblevia

world

W ,single

Pr[x|W

] denotes

canWbe

converted

static

assigntheform

conditional

probability

of x in W[,Cytron

and Pr[W

] denotes

ment

(SSA form)

transformation

et al.,

1989; the

of].possible world W . In particular, when the proHurlikelihood

et al., 2014

posal distribution g in Alg.1 is the prior of every variable, the

3.1algorithm

The BLOG

Language

becomes

the parental MH algorithm (PMH). Our

in the paper

focuses

LW and

PMHprobabilbut our pro[Milch

] defines

Thediscussion

BLOG language

et al.,on2005a

solution

to other

Monte-Carlo

methods

well.

ity posed

measures

over applies

first-order

(relational)

possible

worlds;asin

this sense it is a probabilistic analogue of first-order logic. A

BLOG

declares

types for objects and defines distri4 program

The Swift

Compiler

butions over their numbers, as well as defining distributions

has the

following

design applied

goals for

handlingObopenfor Swift

the values

of random

functions

to objects.

universe

probabilistic

models.

servation

statements

supply

evidence, and a query statement

specifies

the posterior

probability

of interest.

A random vari• Provide

a general

framework

to automatically

and efable in BLOG

to exact

the application

of a random

ficientlycorresponds

(1) track the

Markov blanket

for each

functionrandom

to specific

objects

a possible

BLOG natvariable

andin (2)

maintainworld.

the minimum

set of

urally supports open universe probability models (OUPMs)

and context-specific dependencies.

Algorithm

1: Metropolis-Hastings

Fig.

2 demonstrates

the open-universealgorithm

urn-ball (MH)

model. In

this version

the, E

query

asks

for the

color ofHthe next random

Input: M

, Q, N

; Output

: samples

pick1 from

an urn

given world

the colors

of balls

drawn previously.

initialize

a possible

W0 with

E satisfied;

Line2 4forisi a number

statement stating that the number vari1 to N do

randomly

pick a variable

x from

able3 #Ball,

corresponding

to the

totalWnumber

of balls, is

i 1 ;

4

Wdistributed

Wi 1 between

and v

i

i 1 (x)

uniformly

1Wand

20.; Lines 5–6 declare a

5

propose color(·),

a value v 0applied

for x viatoproposal

g;

random

function

balls, picking

Blue or

0

6

Wi (x)

v

and

ensure WiLines

is supported;

Green

with

a biased

probability.

7–8

state

that

each

⇣

⌘

0

!v) Pr[W

i ] the urn, with replacedraw

a ball1,at g(v

random

from

7 chooses

↵

min

;

g(v!v 0 ) Pr[Wi 1 ]

ment.

8

if rand(0, 1) ↵ then Wi

Wi 1

Fig. 3 describes

OUPM,

the infinite

Gaussian mixH

H +another

(Wi (Q))

;

ture model (1-GMM). The model includes an unknown

Figure 1: PPL compilers and optimization opportunities.

systems for real-world applications.

2

Existing PPL Compilation Approaches

In a general purpose programming language (e.g., C++), the

compiler compiles exactly what the user writes (the program).

By contrast, a PPL compiler essentially compiles the inference algorithm, which is written by the PPL developer, as

applied to a PP. This means that different implementations of

the same inference algorithm for the same PP result in completely different target code.

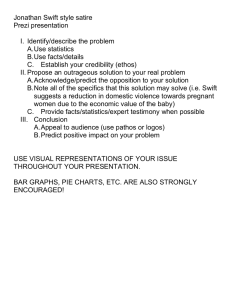

As shown in Fig. 1, a PPL compiler first produces an intermediate representation combining the inference algorithm (I)

and the input model (PM ) as the inference code (PI ), and then

compiles PI to the target code (PT ). Swift focuses on optimizing the inference code PI and the target code PT given a

fixed input model PM .

Although there have been successful PPL compilers, these

compilers are all designed for a restricted class of models

with fixed dependency structures: see work by Tristan [2014]

and the Stan Development Team [2014] for closed-world

Bayes nets, Minka [2014] for factor graphs, and Kazemi and

Poole [2016] for Markov logic networks.

Church [Goodman et al., 2008] and its variants [Wingate

et al., 2011; Yang et al., 2014; Ritchie et al., 2016] provide a lightweight compilation framework for performing

the Metropolis–Hastings algorithm (MH) over general openuniverse probability models (OUPMs). However, these approaches (1) are based on an inefficient implementation of

MH, which results in overhead in PI , and (2) rely on inefficient data structures in the target code PT .

For the first point, consider an MH iteration where we are

proposing a new possible world w0 from w accoding to the

proposal g(·) by resampling random variable X from v to v 0 .

The computation of acceptance ratio ↵ follows

✓

◆

g(v 0 ! v) Pr[w0 ]

↵ = min 1,

.

(1)

g(v ! v 0 ) Pr[w]

Since only X is resampled, it is sufficient to compute ↵ using merely the Markov blanket of X, which leads to a much

simplified formula for ↵ from Eq.(1) by cancelling terms in

Pr[w] and Pr[w0 ]. However, when applying MH for a contingent model, the Markov blanket of a random variable cannot be determined at compile time since the model dependencies vary during inference. This introduces tremendous runtime overhead for interpretive systems (e.g., BLOG, Figaro),

    1 type Cluster; type Data;
    2 distinct Data D[20]; // 20 data points
    3 #Cluster ~ Poisson(4); // number of clusters
    4 random Real mu(Cluster c) ~ Gaussian(0,10); // cluster mean
    5 random Cluster z(Data d)
    6   ~ UniformChoice({c for Cluster c});
    7 random Real x(Data d) ~ Gaussian(mu(z(d)), 1.0); // data
    8 obs x(D[0]) = 0.1; // omit other data points
    9 query size({c for Cluster c});

Figure 3: The infinite Gaussian mixture model
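To make the generative reading of the urn-ball model (Fig. 2) concrete, the following is a minimal C++ sketch that draws one possible world from the prior, sampling parents before children. It is an illustration only and not code produced by Swift; the names `UrnWorld` and `sample_urn_world` are hypothetical, and the evidence and query statements are deliberately ignored here.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Hypothetical illustration of Fig. 2: one possible world of the urn-ball model.
enum class Color { Blue, Green };

struct UrnWorld {
    int num_balls = 0;          // value of the number variable #Ball
    std::vector<Color> color;   // color(b) for every ball b that exists
    std::vector<int> drawn;     // drawn(d) for D[0], D[1], stored as ball indices
};

// Samples every variable from its declared distribution (no evidence applied).
UrnWorld sample_urn_world(std::mt19937& rng) {
    UrnWorld w;
    // Line 4: #Ball ~ UniformInt(1,20)
    w.num_balls = std::uniform_int_distribution<int>(1, 20)(rng);
    // Lines 5-6: color(b) is Green with probability 0.1, Blue otherwise
    std::bernoulli_distribution is_green(0.1);
    for (int b = 0; b < w.num_balls; ++b)
        w.color.push_back(is_green(rng) ? Color::Green : Color::Blue);
    // Lines 7-8: each draw picks uniformly among the existing balls
    std::uniform_int_distribution<int> pick(0, w.num_balls - 1);
    for (int d = 0; d < 2; ++d)
        w.drawn.push_back(pick(rng));
    return w;
}
```

Note that the number of `color` variables in a world depends on the sampled value of `#Ball`, which is what makes the model open-universe.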

3.2 Generic Inference Algorithms

All PPLs need to infer the posterior distribution of a query given observed evidence. The great majority of PPLs use Monte Carlo sampling methods such as likelihood weighting (LW) and Metropolis–Hastings MCMC (MH). LW samples unobserved random variables sequentially in topological order, conditioned on evidence and sampled values earlier in the sequence; each complete sample is weighted by the likelihood of the evidence variables appearing in the sequence.

The MH algorithm is summarized in Alg. 1. $M$ denotes a BLOG model, $E$ its evidence, $Q$ a query, $N$ the number of samples, $w$ a possible world, $X(w)$ the value of random variable $X$ in $w$, $\Pr[X(w) \mid w_{-X}]$ denotes the conditional probability of $X$ in $w$, and $\Pr[w]$ denotes the likelihood of possible world $w$. In particular, when the proposal distribution $g$ in Alg. 1 samples a variable conditioned on its parents, the algorithm becomes the parental MH algorithm (PMH). Our discussion in the paper focuses on LW and PMH but our proposed solution applies to other Monte Carlo methods as well.

Algorithm 1: Metropolis–Hastings algorithm (MH)

    Input: M, E, Q, N; Output: samples H
    1 initialize a possible world w^(0) with E satisfied;
    2 for i ← 1 to N do
    3   randomly pick a variable X from w^(i-1);
    4   w^(i) ← w^(i-1) and v ← X(w^(i-1));
    5   propose a value v' for X via proposal g;
    6   X(w^(i)) ← v' and ensure w^(i) is self-supporting;
    7   α ← min(1, (g(v' → v) Pr[w^(i)]) / (g(v → v') Pr[w^(i-1)]));
    8   if rand(0,1) ≥ α then w^(i) ← w^(i-1);
    9   H ← H + (Q(w^(i)));
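As a concrete illustration of the likelihood weighting procedure described in this section, here is a minimal hand-written C++ sketch for the urn-ball model of Fig. 2. It is not Swift output; the function name `lw_urn_ball` is hypothetical, and for brevity the sketch samples only the variables that the evidence and query actually touch.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Hypothetical LW estimator of
//   Pr[color(drawn(D[1])) = Green | color(drawn(D[0])) = Green]
// for the urn-ball model in Fig. 2.
double lw_urn_ball(int num_samples, std::mt19937& rng) {
    const double p_green = 0.1;
    std::bernoulli_distribution green(p_green);
    double weighted_hits = 0.0, total_weight = 0.0;
    for (int s = 0; s < num_samples; ++s) {
        double weight = 1.0;
        int n = std::uniform_int_distribution<int>(1, 20)(rng);  // #Ball
        std::uniform_int_distribution<int> pick(0, n - 1);
        int b0 = pick(rng);                                      // drawn(D[0])
        // color(b0) is evidence: it is fixed to Green instead of being sampled,
        // and its likelihood is multiplied into the sample weight.
        weight *= p_green;
        int b1 = pick(rng);                                      // drawn(D[1])
        // Query variable color(b1): reuse the evidence value if the same ball
        // was drawn twice, otherwise sample it from its prior.
        bool query_green = (b1 == b0) ? true : green(rng);
        total_weight += weight;
        if (query_green) weighted_hits += weight;
    }
    return weighted_hits / total_weight;
}
```

In this particular model the evidence likelihood is 0.1 in every sample, so all weights coincide; in models such as Fig. 3, where the evidence likelihood depends on sampled parents, the weights differ from sample to sample.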

4 The Swift Compiler

Swift has the following design goals for handling open-universe probability models.

• Provide a general framework to automatically and efficiently (1) track the exact Markov blanket for each random variable and (2) maintain the minimum set of random variables necessary to evaluate the query and the evidence (the dynamic slice [Agrawal and Horgan, 1990], or equivalently the minimum self-supporting partial world [Milch et al., 2005a]).

• Provide efficient memory management and fast data structures for Monte Carlo sampling algorithms to avoid interpretive overhead at runtime.

For the first goal, we propose a novel framework, FIDS (Framework for Incrementally updating Dynamic Slices), for generating the inference code ($P_I$ in Fig. 1). For the second goal, we carefully choose data structures in the target C++ code ($P_T$).

For convenience, r.v. is short for random variable. We demonstrate examples of compiled code in the following discussion: the inference code ($P_I$) generated by FIDS in Sec. 4.1 is in pseudo-code while the target code ($P_T$) by Swift shown in Sec. 4.2 is in C++. Due to limited space, we show the detailed transformation rules only for the adaptive contingency updating (ACU) technique and omit the others.

4.1 Optimizations in FIDS

Our discussion in this section focuses on LW and PMH, although FIDS can be also applied to other algorithms. FIDS includes three optimizations: dynamic backchaining (DB), adaptive contingency updating (ACU), and reference counting (RC). DB is applied to both LW and PMH while ACU and RC are specific to PMH.

Dynamic backchaining

Dynamic backchaining (DB) [Milch et al., 2005a] constructs a dynamic slice incrementally in each LW iteration and samples only those variables necessary at runtime. DB in Swift is an example of compiling lazy evaluation: in each iteration, DB backtracks from the evidence and query and samples an r.v. only when its value is required during inference.

For every r.v. declaration random T X ∼ $C_X$; in the input PP, FIDS generates a getter function get_X(), which is used to (1) sample a value for X from its declaration $C_X$ and (2) memoize the sampled value for later references in the current iteration. Whenever an r.v. is referred to during inference, its getter function will be invoked.

Compiling DB is the fundamental technique for Swift. The key insight is to replace dependency look-ups and method invocations from some internal PP data structure with direct machine address accessing and branching in the target executable file.

For example, the inference code for the getter function of x(d) in the ∞-GMM model (Fig. 3) is shown below.

    double get_x(Data d) {
      // some code memoizing the sampled value
      memoization;
      // if not sampled, sample a new value
      val = sample_gaussian(get_mu(get_z(d)), 1);
      return val; }

memoization denotes some pseudo-code snippet for performing memoization. Since get_z(·) is called before get_mu(·), only those mu(c) corresponding to non-empty clusters will be sampled. Notably, with the inference code above, no explicit dependency look-up is ever required at runtime to discover those non-empty clusters.
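The getter pattern above can be sketched as compilable C++. This is a hand-written illustration under stated assumptions, not the code Swift emits: the struct `LazyGmm` and its members are hypothetical, the number of clusters is taken as already sampled and at least 1, and the second Gaussian parameter is passed to `std::normal_distribution` as a standard deviation, which is an assumption of this sketch.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Hypothetical sketch of dynamic backchaining for the model in Fig. 3:
// each getter samples its variable on first use and memoizes the value.
struct LazyGmm {
    std::mt19937 rng;
    int num_cluster;            // value of #Cluster (assumed sampled, >= 1)
    std::vector<int> z;         // memoized z(d)
    std::vector<bool> has_z;
    std::vector<double> mu;     // memoized mu(c)
    std::vector<bool> has_mu;
    std::vector<double> x;      // memoized x(d)
    std::vector<bool> has_x;

    LazyGmm(unsigned seed, int clusters, int data_points)
        : rng(seed), num_cluster(clusters),
          z(data_points), has_z(data_points, false),
          mu(clusters), has_mu(clusters, false),
          x(data_points), has_x(data_points, false) {}

    int get_z(int d) {
        if (!has_z[d]) {
            z[d] = std::uniform_int_distribution<int>(0, num_cluster - 1)(rng);
            has_z[d] = true;
        }
        return z[d];
    }
    double get_mu(int c) {
        if (!has_mu[c]) {
            mu[c] = std::normal_distribution<double>(0.0, 10.0)(rng);
            has_mu[c] = true;
        }
        return mu[c];
    }
    double get_x(int d) {
        if (!has_x[d]) {
            // get_z(d) runs first, so only the mean of d's own cluster is sampled.
            double mean = get_mu(get_z(d));
            x[d] = std::normal_distribution<double>(mean, 1.0)(rng);
            has_x[d] = true;
        }
        return x[d];
    }
    // Number of cluster means that have been instantiated so far.
    int sampled_mu_count() const {
        int n = 0;
        for (bool b : has_mu) if (b) ++n;
        return n;
    }
};
```

Calling `get_x(0)` on a fresh `LazyGmm` instantiates exactly one cluster mean, and a second call returns the memoized value without sampling again.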

When sampling a number variable, we need to allocate memory for the associated variables. For example, in the urn-ball model (Fig. 2), we need to allocate memory for color(b) after sampling #Ball. The corresponding generated inference code is shown below.

    int get_num_Ball() {
      // some code for memoization
      memoization;
      // sample a value
      val = sample_uniformInt(1,20);
      // some code allocating memory for color
      allocate_memory_color(val);
      return val; }

allocate_memory_color(val) denotes some pseudo-code segment for allocating val chunks of memory for the values of color(b).
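A compilable version of this number-variable getter might look like the sketch below. It is an assumption-laden illustration rather than Swift's generated code: the struct `UrnState`, the flag vectors, and the fixed seed are all hypothetical choices made here.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Hypothetical sketch: sampling #Ball allocates storage for every color(b).
struct UrnState {
    std::mt19937 rng{2016};
    bool num_ball_sampled = false;
    int num_ball = 0;
    std::vector<int> color;            // 0 = Blue, 1 = Green
    std::vector<bool> color_sampled;   // whether color(b) has been sampled yet

    int get_num_Ball() {
        if (num_ball_sampled) return num_ball;      // memoization
        num_ball = std::uniform_int_distribution<int>(1, 20)(rng);
        num_ball_sampled = true;
        // Allocate one slot per ball; the colors themselves stay unsampled.
        color.assign(num_ball, 0);
        color_sampled.assign(num_ball, false);
        return num_ball;
    }

    int get_color(int b) {
        int n = get_num_Ball();                     // make sure storage exists
        assert(b >= 0 && b < n);
        if (!color_sampled[b]) {
            color[b] = std::bernoulli_distribution(0.1)(rng) ? 1 : 0;
            color_sampled[b] = true;
        }
        return color[b];
    }
};
```

Allocation happens once, when the number variable is first sampled, so later calls to `get_color` index directly into preallocated storage.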

Reference Counting

Reference Counting

Reference counting (RC) generalize the idea of DB to increReference

counting

(RC)

generalize

the idea of

to increReference

counting the

(RC)

generalize

of DB

DB

mentally maintain

dynamic

slicetheinidea

PMH.

RC to

is increa effimentally

maintain

the

dynamic

slice

ininPMH.

RC

isisana effimentally

maintain

the

dynamic

slice

PMH.

RC

cient compilation strategy for the interpretive BLOG toeffidycient

compilation

strategy

for

interpretive

BLOG to

dycient

compilation

strategy

for the

the

interpretive

dynamically

maintain

references

to variables

andBLOG

excludetothose

namically

maintain

references

to

and

those

namically

maintain

references

to variables

variables

and exclude

exclude

without any

references

from the

current possible

worldthose

with

without

any

references

from

the

current

possible

world

with

without

any

references

from

the

current

possible

world

with

minimal runtime overhead. RC is also similar to dynamic

minimal

runtime

RC

to

minimal

runtime overhead.

overhead.

RC is

is also

also similar

similar

to dynamic

dynamic

garbage collection

in programming

language

community.

garbage

collection

in

programming

language

community.

garbage

collection

in

programming

language

community.

For a r.v. being tracked, say X, RC maintains a reference counter cnt(X) defined by $cnt(X) = |Ch_w(X)|$. $Ch_w(X)$ denotes the children of X in the possible world w. When cnt(X) becomes zero, X is removed from w; when cnt(X) becomes positive, X is instantiated and added back to w. This procedure is performed recursively.
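The recursive bookkeeping described here can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Swift's generated code: the class name `World`, the `parents_of` callback, and the variable names in the example are all hypothetical.

```python
from collections import defaultdict


class World:
    """Toy possible world that keeps only referenced variables instantiated."""

    def __init__(self, parents_of):
        # parents_of(name) -> iterable of parent names. Hypothetical stand-in
        # for evaluating a variable's declaration in the current world.
        self.parents_of = parents_of
        self.cnt = defaultdict(int)  # cnt[X] = number of instantiated children of X
        self.active = set()          # variables currently in the world

    def instantiate(self, x):
        self.active.add(x)
        for p in self.parents_of(x):
            self.inc_cnt(p)          # may instantiate p recursively

    def remove(self, x):
        self.active.discard(x)
        for p in self.parents_of(x):
            self.dec_cnt(p)          # may remove p recursively

    def inc_cnt(self, x):
        if self.cnt[x] == 0 and x not in self.active:
            self.instantiate(x)
        self.cnt[x] += 1

    def dec_cnt(self, x):
        self.cnt[x] -= 1
        if self.cnt[x] == 0:
            self.remove(x)


# Hypothetical three-variable chain: obs -> color_b1 -> prior.
parents = {"obs": ["color_b1"], "color_b1": ["prior"], "prior": []}
world = World(lambda name: parents[name])
world.instantiate("obs")   # pulls in color_b1 and prior
world.remove("obs")        # releases both again
```

Instantiating one variable references its parents, and removing it releases them, so unreferenced ancestors leave the world without any global scan.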

Tracking references to every r.v. might cause unnecessary overhead. For example, for classical Bayes nets, RC never excludes any variables. Hence, as a trade-off, Swift only counts references in open-universe models, particularly, to those variables associated with number variables (e.g., color(b) in the urn-ball model).

Take the urn-ball model as an example. When resampling drawn(d) and accepting the proposed value v, the generated code for accepting the proposal will be

    void inc_cnt(X) {
      if (cnt(X) == 0) W.add(X);
      cnt(X) = cnt(X) + 1; }
    void dec_cnt(X) {
      cnt(X) = cnt(X) - 1;
      if (cnt(X) == 0) W.remove(X); }
    void accept_value_drawn(Draw d, Ball v) {
      // code for updating dependencies omitted
      dec_cnt(color(val_drawn(d)));
      val_drawn(d) = v;
      inc_cnt(color(v)); }

The functions inc_cnt(X) and dec_cnt(X) update the references to X. The function accept_value_drawn() is specialized to r.v. drawn(d): Swift analyzes the input program and generates specialized code for different variables.
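For readers who want to run the urn-ball bookkeeping, the pseudo-code can be mirrored in Python roughly as follows. This is a sketch, not the code Swift emits: the class `UrnBallState`, the dictionary layout, and the ball labels are assumptions made for illustration.

```python
class UrnBallState:
    """Tracks which color(b) variables are instantiated, using reference counts."""

    def __init__(self, drawn):
        # drawn: dict mapping draw id -> ball id (hypothetical initial assignment)
        self.val_drawn = dict(drawn)
        self.cnt = {}        # cnt[b] = number of draws currently pointing at ball b
        self.world = set()   # instantiated color(b) variables
        for ball in self.val_drawn.values():
            self.inc_cnt(ball)

    def inc_cnt(self, ball):
        if self.cnt.get(ball, 0) == 0:
            self.world.add(("color", ball))      # color(ball) becomes relevant
        self.cnt[ball] = self.cnt.get(ball, 0) + 1

    def dec_cnt(self, ball):
        self.cnt[ball] -= 1
        if self.cnt[ball] == 0:
            self.world.remove(("color", ball))   # no draw refers to it any more

    def accept_value_drawn(self, d, v):
        # Same order as the listing: release the old ball, assign, reference the new one.
        self.dec_cnt(self.val_drawn[d])
        self.val_drawn[d] = v
        self.inc_cnt(v)


state = UrnBallState({0: "b1", 1: "b1"})
state.accept_value_drawn(0, "b2")   # color(b1) and color(b2) are both referenced
state.accept_value_drawn(1, "b2")   # color(b1) drops out of the world
```

Each accepted proposal touches only the two affected counters, which is the property the specialized generated code relies on.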

Adaptive contingency updating

The goal of ACU is to incrementally maintain the Markov blanket for every r.v. with minimal effort.

$C_X$ denotes the declaration of r.v. X in the input PP; $Par_w(X)$ denotes the parents of X in the possible world w; $w[X \leftarrow v]$ denotes the new possible world derived from w by only changing the value of X to v. Note that deriving $w[X \leftarrow v]$ may require instantiating new variables not existing in w due to dependency changes.

Computing $Par_w(X)$ for X is straightforward by executing its generation process, $C_X$, within w, which is often inexpensive. Hence, the principal challenge for maintaining the Markov blanket is to efficiently maintain a children set Ch(X) for each r.v. X with the aim of keeping it identical to the true set $Ch_w(X)$ for the current possible world w.

With a proposed possible world (PW) $w' = w[X \leftarrow v]$, it is easy to add all missing dependencies from a particular r.v. U. That is, for a r.v. U and every r.v. $V \in Par_{w'}(U)$, we can keep Ch(V) up to date by adding U to Ch(V) if $U \notin Ch(V)$. Therefore, the key step is tracking the set of variables, $\Delta(w[X \leftarrow v])$, which will have a different set of parents in $w[X \leftarrow v]$ from w.

$$\Delta(w[X \leftarrow v]) = \{Y : Par_w(Y) \neq Par_{w[X \leftarrow v]}(Y)\}$$

Precisely computing $\Delta(w[X \leftarrow v])$ is very expensive at runtime but computing an upper bound for $\Delta(w[X \leftarrow v])$ does not influence the correctness of the inference code. Therefore, we proceed to find an upper bound that holds for any value v. We call this over-approximation the contingent set $Cont_w(X)$.

Note that for every r.v. U with dependency that changes in $w[X \leftarrow v]$, X must be reached in the condition of a control statement on the execution path of $C_U$ within w. This implies $\Delta(w[X \leftarrow v]) \subseteq Ch_w(X)$. One straightforward idea is to set $Cont_w(X) = Ch_w(X)$. However, this leads to too much overhead. For example, $\Delta(\cdot)$ should always be empty for models with fixed dependencies.
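To make the definition of $\Delta$ concrete, the following sketch computes it by brute force on a toy mixture model and checks that it stays inside the children set. The model, the variable names (`z`, `mu0`, `mu1`, `x`), and the helper functions are assumptions introduced for illustration only; a real system would avoid this exhaustive recomputation, which is the point of the contingent set.

```python
def parents(var, world):
    """Parents of `var` in `world` for a hypothetical two-component mixture."""
    if var == "x":
        # x ~ Normal(mu[z], 1): x reads z, then only the selected mean.
        return {"z", f"mu{world['z']}"}
    return set()  # z, mu0 and mu1 are roots in this toy model


def delta(world, var, new_value):
    """Variables whose parent set differs between world and world[var <- new_value]."""
    proposed = {**world, var: new_value}
    return {y for y in world if parents(y, world) != parents(y, proposed)}


def children(world, var):
    """Children of `var` in `world`, derived from the parent sets."""
    return {y for y in world if var in parents(y, world)}


world = {"z": 0, "mu0": -1.0, "mu1": 2.0, "x": 0.3}
changed = delta(world, "z", 1)        # only x switches from mu0 to mu1
unchanged = delta(world, "mu0", 5.0)  # changing a mean never rewires anything
```

In this toy model only a change to `z` can alter a parent set, so any sound over-approximation for `mu0` or `mu1` may be empty.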

Another approach is to first find out the set of switching variables $S_X$ for every r.v. X, which is defined by

$$S_X = \{Y : \exists w, v \;\; Par_w(X) \neq Par_{w[Y \leftarrow v]}(X)\},$$

This brings an immediate advantage: $S_X$ can be statically computed at compile time. However, computing $S_X$ is NP-Complete^1.

Our solution is to derive the approximation $\hat{S}_X$ by taking the union of free variables of if/case conditions, function arguments, and set expression conditions in $C_X$, and then set

$$Cont_w(X) = \{Y : Y \in Ch_w(X) \wedge X \in \hat{S}_Y\}.$$

$Cont_w(X)$ is a function of the sampling variable X and the current PW w. Likewise, for every r.v. X, we maintain a runtime contingent set Cont(X) identical to the true set $Cont_w(X)$ under the current PW w. Cont(X) can be incrementally updated as well.
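The static approximation can be illustrated with a small syntax-directed pass. This is a sketch under simplifying assumptions, not Swift's analysis: declarations are encoded as nested tuples (`("var", name)`, `("call", function, argument)`, `("if", cond, then, else)`, `("const", value)`), set expressions and case statements are left out, and all names are hypothetical.

```python
def free_vars(expr):
    """Random variables mentioned anywhere in an expression tree."""
    kind = expr[0]
    if kind == "var":    # ("var", name)
        return {expr[1]}
    if kind == "call":   # ("call", function_name, argument)
        return free_vars(expr[2])
    if kind == "if":     # ("if", cond, then_branch, else_branch)
        return free_vars(expr[1]) | free_vars(expr[2]) | free_vars(expr[3])
    return set()         # constants


def approx_switching(expr):
    """Over-approximate the switching variables: free variables of
    if-conditions and of function arguments."""
    kind = expr[0]
    if kind == "call":
        return free_vars(expr[2]) | approx_switching(expr[2])
    if kind == "if":
        result = free_vars(expr[1])
        for sub in expr[1:]:
            result |= approx_switching(sub)
        return result
    return set()


def contingent_set(x, children, s_hat):
    """Cont(X) = {Y in Ch(X) : X in S_hat[Y]} for the current children sets."""
    return {y for y in children.get(x, set()) if x in s_hat[y]}


# Hypothetical declarations: x ~ f(mu(z)); y ~ if b then u else 0.
declarations = {
    "x": ("call", "mu", ("var", "z")),
    "y": ("if", ("var", "b"), ("var", "u"), ("const", 0)),
}
s_hat = {name: approx_switching(decl) for name, decl in declarations.items()}
children = {"z": {"x"}, "b": {"y"}, "u": {"y"}}
```

Here `u` is a child-bearing parent of `y` but not a switching variable, so `contingent_set("u", children, s_hat)` is empty and resampling `u` triggers no dependency update.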

Back to the original focus of ACU, suppose we are adding new dependencies in the new PW $w' = w[X \leftarrow v]$. There are three steps to accomplish the goal: (1) enumerating all the variables $U \in Cont(X)$, (2) for all $V \in Par_{w'}(U)$, add U to Ch(V), and (3) for all $V \in Par_{w'}(U) \cap \hat{S}_U$, add U to Cont(V). These steps can also be repeated in a similar way to remove the vanished dependencies.

1 Since the declaration $C_X$ may contain arbitrary boolean formulas, one can reduce the 3-SAT problem to computing $S_X$.

    F_c(M = random T Id([T Id,]*) ~ C) =
      void Id::add_to_Ch()
      { F_cc(C, Id, {}) }
      void Id::accept_value(T v)
      { for(u in Cont(Id)) u.del_from_Ch();
        Id = v;
        for(u in Cont(Id)) u.add_to_Ch(); }

    F_cc(C = Exp, X, seen) = F_cc(Exp, X, seen)
    F_cc(C = Dist(Exp), X, seen) = F_cc(Exp, X, seen)
    F_cc(C = if (Exp) then C1 else C2, X, seen) =
      F_cc(Exp, X, seen);
      if (Exp)
      { F_cc(C1, X, seen ∪ FV(Exp)) }
      else
      { F_cc(C2, X, seen ∪ FV(Exp)) }

    F_cc(Exp, X, seen) =
      /* emit a line of code for every r.v. U in the corresponding set */
      ∀ U ∈ ((FV(Exp) ∩ Ŝ_X) \ seen) : Cont(U) += X;
      ∀ U ∈ (FV(Exp) \ seen) : Ch(U) += X;

Figure 4: Transformation rules for outputting inference code with ACU. FV(Expr) denotes the free variables in Expr. We assume the switching variables $\hat{S}_X$ have already been computed. The rules for number statements and case statements are not shown for conciseness; they are analogous to the rules for random variables and if statements respectively.

Take the 1-GMM model (Fig. 3) as an example: when resampling z(d), we need to change the dependency of r.v. x(d) since $Cont_w(z(d)) = \{x(d)\}$ for any w. The generated code for this process is shown below.

    void x(d)::add_to_Ch() {
      Cont(z(d)) += x(d);
      Ch(z(d)) += x(d);
      Ch(mu(z(d))) += x(d); }
    void z(d)::accept_value(Cluster v) {
      // accept the proposed value v for r.v. z(d)
      // code for updating references omitted
      for (u in Cont(z(d))) u.del_from_Ch();
      val_z(d) = v;
      for (u in Cont(z(d))) u.add_to_Ch(); }

We omit the code for del_from_Ch() for conciseness, which is essentially the same as add_to_Ch().

The formal transformation rules for ACU are demonstrated in Fig. 4. $F_c$ takes in a random variable declaration statement in the BLOG program and outputs the inference code containing methods accept_value() and add_to_Ch(). $F_{cc}$ takes in a BLOG expression and generates the inference code inside method add_to_Ch(). It keeps track of already seen variables in seen, which makes sure that a variable will be added to the contingent set or the children set at most once. Code for removing variables can be generated similarly.

Since we maintain the exact children for each r.v. with ACU, the computation of the acceptance ratio $\alpha$ in Eq. 1 for PMH can be simplified to

$$\min\left(1,\; \frac{|w| \, \Pr[X = v \mid w'_{-X}] \prod_{U \in Ch_{w'}(X)} \Pr[U(w') \mid w'_{-U}]}{|w'| \, \Pr[X = v' \mid w_{-X}] \prod_{V \in Ch_w(X)} \Pr[V(w) \mid w_{-V}]}\right) \qquad (2)$$
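As a numerical illustration of the simplified ratio, the sketch below evaluates it in log space from quantities that involve only X and its children. The function name and argument layout are assumptions made here; the inputs are taken as already-computed log-probabilities, and the world sizes |w| and |w'| are passed in directly.

```python
import math


def log_accept_ratio(size_w, size_w_new,
                     logp_x_new, logp_x_old,
                     child_logps_new, child_logps_old):
    """Log of min(1, ratio) for the simplified acceptance ratio.

    size_w, size_w_new : |w| and |w'|
    logp_x_new         : log Pr[X = v  | w'_{-X}]   (numerator term)
    logp_x_old         : log Pr[X = v' | w_{-X}]    (denominator term)
    child_logps_new    : log-probabilities of the children of X in w'
    child_logps_old    : log-probabilities of the children of X in w
    """
    log_num = math.log(size_w) + logp_x_new + sum(child_logps_new)
    log_den = math.log(size_w_new) + logp_x_old + sum(child_logps_old)
    return min(0.0, log_num - log_den)


# Hypothetical numbers: one child whose likelihood drops under the proposal.
log_alpha = log_accept_ratio(
    2, 2,
    math.log(0.5), math.log(0.25),
    [math.log(0.1)], [math.log(0.4)],
)
```

Working in log space avoids underflow when X has many children, and the cost of one evaluation is proportional to the number of children rather than to the size of the world.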