18.434 Seminar in Theoretical Computer Science

Tamara Stern 2.9.06

Set Cover Problem (Chapter 2.1, 12)

What is the set cover problem?

Idea: “You must select a minimum number [of any size set] of these sets so that the sets you have picked contain all the elements that are contained in any of the sets in the input (wikipedia).” Additionally, in the weighted version, you want to minimize the total cost of the selected sets.

Input:

Ground elements, or universe $U = \{u_1, u_2, \ldots, u_n\}$

Subsets $S_1, S_2, \ldots, S_k \subseteq U$

Costs $c_1, c_2, \ldots, c_k$

Goal:

Find a set $I \subseteq \{1, 2, \ldots, k\}$ that minimizes $\sum_{i \in I} c_i$, such that $\bigcup_{i \in I} S_i = U$.

(note: in the unweighted Set Cover Problem, $c_j = 1$ for all $j$)
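The definition can be made concrete with a small sketch. The helper names `is_cover` and `cover_cost` and the five-element instance are illustrative assumptions, not part of the notes, and the index set I is 0-based here because Python lists are.

```python
def is_cover(universe, subsets, chosen):
    """Return True if the subsets indexed by `chosen` together contain every element."""
    covered = set()
    for i in chosen:
        covered |= subsets[i]
    return covered == set(universe)


def cover_cost(costs, chosen):
    """Total cost of the chosen index set I."""
    return sum(costs[i] for i in chosen)


# Hypothetical instance (not from the notes): 5 elements, 3 subsets.
universe = {1, 2, 3, 4, 5}
subsets = [{1, 2, 3}, {2, 4}, {3, 4, 5}]
costs = [5, 10, 3]

print(is_cover(universe, subsets, [0, 2]), cover_cost(costs, [0, 2]))  # True 8
print(is_cover(universe, subsets, [0, 1]))  # False: element 5 is missing
```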

Why is it useful?

It was one of Karp’s NP-complete problems, shown to be so in 1972.

Other applications: edge covering, vertex cover

Interesting example: IBM finds computer viruses (wikipedia)

elements: 5000 known viruses

sets: 9000 substrings of 20 or more consecutive bytes from viruses, not found in ‘good’ code

A set cover of 180 substrings was found. It suffices to search for these 180 substrings to verify the existence of known computer viruses.

Another example: Suppose General Motors needs to buy a certain amount of varied supplies, and there are suppliers that offer various deals for different combinations of materials (Supplier A: 2 tons of steel + 500 tiles for $x; Supplier B: 1 ton of steel + 2000 tiles for $y; etc.). You could use set covering to find the best way to get all the materials while minimizing cost.

How can we solve it?

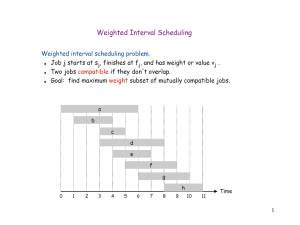

1. Greedy Method – or “brute force” method

Let C represent the set of elements covered so far

Let cost effectiveness, or $\alpha$, be the average cost per newly covered element

Algorithm

1. $C \leftarrow \emptyset$

2. While $C \neq U$ do

   Find the set whose cost effectiveness is smallest, say $S$

   Let $\alpha = \frac{c(S)}{|S - C|}$

   For each $e \in S - C$, set price$(e) = \alpha$

   $C \leftarrow C \cup S$

3. Output picked sets
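The steps above can be sketched in Python as follows. This is a minimal illustration, not an optimized implementation; the function name `greedy_set_cover`, the 0-based set indices, and the small test instance are assumptions introduced here.

```python
def greedy_set_cover(universe, subsets, costs):
    """Greedy weighted set cover.

    Returns (picked, price): the indices of the picked sets in order,
    and the price assigned to each element when it was first covered.
    """
    universe = set(universe)
    covered = set()  # C in the notes
    picked = []
    price = {}
    while covered != universe:
        best, best_alpha = None, None
        for i, s in enumerate(subsets):
            new = s - covered
            if not new:
                continue  # covers nothing new, so cost effectiveness is undefined
            alpha = costs[i] / len(new)
            if best_alpha is None or alpha < best_alpha:
                best, best_alpha = i, alpha
        if best is None:
            raise ValueError("the subsets do not cover the universe")
        for e in subsets[best] - covered:
            price[e] = best_alpha
        covered |= subsets[best]
        picked.append(best)
    return picked, price


# Hypothetical instance (not from the notes).
subsets = [{1, 2, 3}, {2, 4}, {3, 4, 5}]
costs = [5, 10, 3]
picked, price = greedy_set_cover({1, 2, 3, 4, 5}, subsets, costs)
print(picked)                # [2, 0]
print(sum(price.values()))   # 8.0, equal to costs[2] + costs[0]
```

Each pass scans every set, so this sketch takes time proportional to the number of sets times their size per picked set; ties in cost effectiveness are broken by the lowest index.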

Example

[Figure: the universe U contains 12 elements (dots), covered by three sets X, Y, and Z.]

c(X) = 6

c(Y) = 15

c(Z) = 7

Choose Z: $\alpha_Z = \frac{c(Z)}{|Z - C|} = \frac{7}{7} = 1$

Choose X: $\alpha_X = \frac{c(X)}{|X - C|} = \frac{6}{3} = 2$

Choose Y: $\alpha_Y = \frac{c(Y)}{|Y - C|} = \frac{15}{2} = 7.5$

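[Figure: the same sets X, Y, and Z, with each element labeled by its price: 1 for the seven elements covered by Z, 2 for the three elements newly covered by X, and 7.5 for the two elements newly covered by Y.]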

Total cost = 6 + 15 + 7 = 28

(note: The greedy algorithm is not optimal. An optimal solution would have chosen Y and Z for a cost of 22)
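The optimal cost of 22 can be checked by exhaustive search. The sketch below is only an illustration: the exact membership of X, Y, and Z is not recoverable from the notes, so the sets here are an assumed instance chosen to match the counts in the example (Z covers 7 elements, X adds 3 new ones, Y adds the last 2). The function name `optimal_set_cover` is also an assumption.

```python
from itertools import combinations


def optimal_set_cover(universe, subsets, costs):
    """Exhaustively try every collection of subsets; exponential in len(subsets)."""
    universe = set(universe)
    best_cost, best_choice = None, None
    for r in range(1, len(subsets) + 1):
        for choice in combinations(range(len(subsets)), r):
            covered = set().union(*(subsets[i] for i in choice))
            if covered != universe:
                continue
            cost = sum(costs[i] for i in choice)
            if best_cost is None or cost < best_cost:
                best_cost, best_choice = cost, choice
    return best_cost, best_choice


# Assumed membership, chosen only to match the counts in the example.
universe = set(range(1, 13))
sets = {"X": {8, 9, 10}, "Y": {8, 9, 10, 11, 12}, "Z": {1, 2, 3, 4, 5, 6, 7}}
names = list(sets)
costs = {"X": 6, "Y": 15, "Z": 7}

best_cost, best_choice = optimal_set_cover(
    universe, [sets[n] for n in names], [costs[n] for n in names]
)
print(best_cost, [names[i] for i in best_choice])  # 22 ['Y', 'Z']
```

Exhaustive search is only practical for a handful of sets, which is why an approximation algorithm such as the greedy method is of interest.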

Theorem:

The greedy algorithm is an $H_n$ factor approximation algorithm for the minimum set cover problem, where $H_n = 1 + \frac{1}{2} + \ldots + \frac{1}{n} \approx \log n$.
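To get a feel for how closely $H_n$ tracks the natural logarithm, the following sketch prints both for a few values of n. The function name `harmonic` and the chosen values of n are assumptions for illustration.

```python
import math


def harmonic(n):
    """H_n = 1 + 1/2 + ... + 1/n."""
    return sum(1.0 / k for k in range(1, n + 1))


for n in (12, 100, 10_000):
    print(n, round(harmonic(n), 4), round(math.log(n), 4))
```

For every n, $H_n$ lies between $\ln n$ and $\ln n + 1$, so the approximation factor grows only logarithmically with the number of elements.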

Proof:

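(i) We know $\sum_{e \in U} \text{price}(e) = \text{cost of the greedy algorithm} = c(S_1) + c(S_2) + \ldots + c(S_m)$, where $S_1, \ldots, S_m$ are the sets the greedy algorithm picks, because the cost of each picked set is divided evenly among the elements it newly covers.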

(ii) We will show $\text{price}(e_k) \le \frac{OPT}{n - k + 1}$, where $e_k$ is the $k$th element covered.

Say the optimal sets are $O_1, O_2, \ldots, O_p$. So,

(a) $OPT = c(O_1) + c(O_2) + \ldots + c(O_p)$.

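Now, assume the greedy algorithm has covered the elements in $C$ so far. The optimal sets together cover all of $U$, so every uncovered element lies in at least one $O_i$. Therefore the number of uncovered elements, $|U - C|$, is at most the sum of the sizes of the intersections of the optimal sets with the uncovered elements:

(b) $|U - C| \le |O_1 \cap (U - C)| + |O_2 \cap (U - C)| + \ldots + |O_p \cap (U - C)|$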

In the greedy algorithm, we select a set with cost effectiveness $\alpha$, where

(c) $\alpha \le \frac{c(O_i)}{|O_i \cap (U - C)|}$, $i = 1, \ldots, p$ (for every $O_i$ that still contains an uncovered element).

We know this because the greedy algorithm always chooses the set with the smallest cost effectiveness, so $\alpha$ is less than or equal to the cost effectiveness of every set that the optimal solution chooses.

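Algebra:

By (c): $c(O_i) \ge \alpha \cdot |O_i \cap (U - C)|$

Summing over $i$ and applying (a), (c), then (b): $OPT = \sum_i c(O_i) \ge \alpha \cdot \sum_i |O_i \cap (U - C)| \ge \alpha \cdot |U - C|$

Hence $\alpha \le \frac{OPT}{|U - C|}$.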

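Just before the $k$th element is covered, at most $k - 1$ elements are covered, so $|U - C| \ge n - (k - 1)$. Therefore, the price of the $k$th element is:

$\alpha \le \frac{OPT}{n - (k - 1)} = \frac{OPT}{n - k + 1}$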

Putting (i) and (ii) together, we get the total cost of the set cover:

$\sum_{k=1}^{n} \text{price}(e_k) \le \sum_{k=1}^{n} \frac{OPT}{n - k + 1} = OPT \cdot \left(1 + \frac{1}{2} + \ldots + \frac{1}{n}\right) = OPT \cdot H_n$
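As a sanity check, both steps of the proof can be verified numerically on the worked example from the greedy section, using only the numbers given there (prices 1, 2, and 7.5; optimal cost 22; 12 elements). The variable names are assumptions for illustration.

```python
# Prices from the worked example, in the order the elements were covered:
# seven elements at 1 (set Z), three at 2 (set X), two at 7.5 (set Y).
prices = [1.0] * 7 + [2.0] * 3 + [7.5] * 2
opt = 22          # cost of the optimal cover {Y, Z} from the example
n = len(prices)   # 12 elements

# Step (ii): price(e_k) <= OPT / (n - k + 1) for k = 1..n.
for k, p in enumerate(prices, start=1):
    bound = opt / (n - k + 1)
    assert p <= bound, (k, p, bound)

# Steps (i) + (ii): greedy cost = sum of prices <= OPT * H_n.
h_n = sum(1.0 / k for k in range(1, n + 1))
greedy_cost = sum(prices)
print(greedy_cost, round(opt * h_n, 2))  # 28.0 68.27
assert greedy_cost <= opt * h_n
```

The bound is far from tight on this instance: greedy pays 28 against a guarantee of about 68.27.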

2. Linear Programming (Chapter 12)

“Linear programming is the problem of optimizing (minimizing or maximizing) a linear function subject to linear inequality constraints (Vazirani 94).”

For example,

minimize $\sum_{j=1}^{n} c_j x_j$

subject to $\sum_{j=1}^{n} a_{ij} x_j \ge b_i$, $i = 1, \ldots, m$

$x_j \ge 0$, $j = 1, \ldots, n$

There exist polynomial-time algorithms for linear programming problems.

Example Problem

$U = \{1,2,3,4,5,6\}$, $S_1 = \{1,2\}$, $S_2 = \{1,3\}$, $S_3 = \{2,3\}$, $S_4 = \{2,4,6\}$, $S_5 = \{3,5,6\}$, $S_6 = \{4,5,6\}$, $S_7 = \{4,5\}$

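- variable $x_j$ for set $S_j$: $x_j = 1$ if we select $S_j$, and $x_j = 0$ if not

- minimize cost: $\min \sum_{j=1}^{k} c_j x_j = OPT$

- subject to:

$x_1 + x_2 \ge 1$ (element 1)

$x_1 + x_3 + x_4 \ge 1$ (element 2)

$x_2 + x_3 + x_5 \ge 1$ (element 3)

$x_4 + x_6 + x_7 \ge 1$ (element 4)

$x_5 + x_6 + x_7 \ge 1$ (element 5)

$x_4 + x_5 + x_6 \ge 1$ (element 6)

possible solution: $x_1 = x_2 = x_3 = \frac{1}{2}$, $x_4 = x_5 = x_7 = 0$, $x_6 = 1$ → $\sum_i x_i = 2.5$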

This is a feasible solution to the linear program. However, the set cover problem needs $x_j \in \{0, 1\}$, which is not a valid constraint for linear programming. Instead, we will use $x_j \ge 0$, which will give us all correct solutions, plus more (solutions where $x_j$ is a fraction or greater than 1).
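The fractional solution can be checked mechanically, and compared against the best 0/1 solution found by brute force. This is a sketch under the assumption of unit costs ($c_j = 1$), which is what the sum of 2.5 above implies; the helper name `is_feasible` is introduced here for illustration.

```python
from fractions import Fraction
from itertools import combinations

universe = {1, 2, 3, 4, 5, 6}
subsets = {
    1: {1, 2}, 2: {1, 3}, 3: {2, 3}, 4: {2, 4, 6},
    5: {3, 5, 6}, 6: {4, 5, 6}, 7: {4, 5},
}
half = Fraction(1, 2)
x = {1: half, 2: half, 3: half, 4: 0, 5: 0, 6: 1, 7: 0}


def is_feasible(x):
    """Every element must receive total weight >= 1 from the sets containing it."""
    return all(
        sum(x[j] for j, s in subsets.items() if e in s) >= 1 for e in universe
    ) and all(v >= 0 for v in x.values())


lp_value = sum(x.values())  # unit costs assumed
print(is_feasible(x), lp_value)  # True 5/2

# Smallest 0/1 solution, found by trying all collections of sets.
integral = min(
    r
    for r in range(1, len(subsets) + 1)
    for choice in combinations(subsets, r)
    if set().union(*(subsets[j] for j in choice)) == universe
)
print(integral)  # 3
```

On this instance the relaxed program reaches 2.5 while any true set cover needs 3 sets, which shows concretely that dropping $x_j \in \{0, 1\}$ admits solutions cheaper than any real cover.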

Given a primal linear program, we can create a dual linear program. Let’s revisit the linear program:

minimize $\sum_{j=1}^{n} c_j x_j$

subject to $\sum_{j=1}^{n} a_{ij} x_j \ge b_i$, $i = 1, \ldots, m$

$x_j \ge 0$, $j = 1, \ldots, n$

Introducing a variable $y_i$ for the $i$th inequality, we get the dual program:

maximize $\sum_{i=1}^{m} b_i y_i$

subject to $\sum_{i=1}^{m} a_{ij} y_i \le c_j$, $j = 1, \ldots, n$

$y_i \ge 0$, $i = 1, \ldots, m$

LP-duality Theorem (Vazirani 95): The primal program has finite optimum iff its dual has finite optimum. Moreover, if $x^* = (x_1^*, \ldots, x_n^*)$ and $y^* = (y_1^*, \ldots, y_m^*)$ are optimal solutions for the primal and dual programs, respectively, then

$\sum_{j=1}^{n} c_j x_j^* = \sum_{i=1}^{m} b_i y_i^*$.

Any feasible solution $y_1, \ldots, y_6$ of the dual therefore gives a lower bound on the primal: the optimal cost of the primal is at least the dual objective value of that solution.
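For the six-element example problem, the dual has one variable $y_e$ per element and one constraint per set: the elements of $S_j$ may carry total weight at most $c_j$. The sketch below checks one dual solution; the particular values of `y` are a hypothetical choice made here, not taken from the notes, and unit costs are assumed as before.

```python
from fractions import Fraction

subsets = {
    1: {1, 2}, 2: {1, 3}, 3: {2, 3}, 4: {2, 4, 6},
    5: {3, 5, 6}, 6: {4, 5, 6}, 7: {4, 5},
}
costs = {j: 1 for j in subsets}  # unit costs assumed

half = Fraction(1, 2)
# A hypothetical dual solution, one variable y_e per element e.
y = {1: half, 2: half, 3: half, 4: half, 5: half, 6: 0}


def dual_feasible(y):
    """Each set's elements may carry total weight at most the set's cost."""
    return all(v >= 0 for v in y.values()) and all(
        sum(y[e] for e in s) <= costs[j] for j, s in subsets.items()
    )


dual_value = sum(y.values())
print(dual_feasible(y), dual_value)  # True 5/2
```

Because this dual solution has value 5/2 and the fractional primal solution from the example problem also has value 2.5, neither can be improved: 2.5 is the optimum of the relaxed linear program for this instance.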

References:

http://en.wikipedia.org/wiki/Set_cover_problem.

Vazirani, Vijay. Approximation Algorithms. Chapters 2.1 & 12.

http://www.orie.cornell.edu/~dpw/cornell.ps. p7-18.