Parallel Reverse Mode Automatic Differentiation for OpenMP

Deutsche Forschungsgemeinschaft

Priority Program 1253

Optimization with Partial Differential Equations

Christian Bischof, Niels Gürtler, Andreas Kowarz and Andrea Walther

Parallel Reverse Mode Automatic Differentiation for OpenMP Programs with ADOL-C

December 2007

Preprint-Number SPP1253-04-01 http://www.am.uni-erlangen.de/home/spp1253

Parallel reverse mode automatic differentiation for OpenMP programs with ADOL-C

C. Bischof¹, N. Guertler¹, A. Kowarz², and A. Walther²

¹ Institute for Scientific Computing, RWTH Aachen University, D–52056 Aachen, Germany, bischof@sc.rwth-aachen.de, Niels.Guertler@rwth-aachen.de

² Institute of Scientific Computing, Technische Universität Dresden, D–01062 Dresden, Germany. {Andreas.Kowarz,Andrea.Walther}@tu-dresden.de

Summary.

Shared-memory multicore computing platforms are becoming commonplace, and loop parallelization with OpenMP offers an easy way for the user to harness their power. As a result, tools for automatic differentiation (AD) should be able to deal with such codes in a fashion that preserves their parallel nature also for the derivative evaluation. In this paper, we explore this issue using a plasma simulation code. Its structure, which in essence is a time stepping loop with several parallelizable inner loops, is representative of many other computations. Using this code as an example, we develop a strategy for the efficient implementation of the reverse mode of AD with trace-based AD-tools and implement it with the ADOL-C tool. The strategy combines checkpointing at the outer level with parallel trace generation and evaluation at the inner level. We discuss the extensions necessary for ADOL-C to work in a multithreaded environment and the setup necessary for the user code and present performance results on a shared-memory multiprocessor.

Key words: Parallelism, OpenMP, reverse mode, checkpointing, ADOL-C

1 Introduction

Automatic differentiation (AD) [24, 39, 25] is a technique for evaluating derivatives of functions given in the form of computer programs. The associativity of the chain rule of differential calculus allows a wide variety of ways to compute derivatives that differ in their computational complexity, the best-known being the so-called forward and reverse modes of AD. References to AD tools and applications are collected at www.autodiff.org and in [4, 18, 26, 13]. AD avoids errors and drudgery and reduces the maintenance effort of a numerical software system, in particular as derivative computations become standard numerical tasks in the context of sensitivity analysis, inverse problem solving, optimization or optimal experimental design.

These more advanced uses of a simulation model are also fueled by the continuing availability of cheap computing power, most recently in the form of inexpensive multicore shared-memory computers. In fact, we are witnessing a paradigm shift in software development in that parallel computing will become a necessity as shared-memory multiprocessors (SMPs) become the fundamental building block of just about any computing device [2]. In the context of numerical computations, OpenMP (see [19] or www.openmp.org) has proven to be a powerful and productive programming paradigm for SMPs that can be effectively combined with MPI message passing for clusters of SMPs (see, for example, [11, 10, 42, 22, 41]).

The fact that derivative computation is typically more expensive than the function evaluation itself has made it attractive to exploit parallelism in AD-based derivative computations of serial codes. These approaches exploit the independence of different directional derivatives [5, 36], the associativity of the chain rule of differential calculus [6, 7, 3, 8], the inherent parallelism in vector operations arising in AD-generated codes [37, 14, 40, 15] or high-level mathematical insight [1].

In addition, rules for the differentiation of MPI-based message-passing parallel programs have been derived and implemented in some AD tools employing a source-transformation approach [31, 9, 20, 21, 17, 38, 30] for both forward and reverse mode computations. The differentiation of programs employing OpenMP has received less attention, so far [16, 23], and has only been considered in the context of AD source transformation. However, in particular for languages such as C or C++, where static analysis of programs is difficult, AD-approaches based on taping have also proven successful, and in this paper, we address the issue of deriving efficient parallel adjoint code from a code parallelized with OpenMP using the ADOL-C [27] tool.

To illustrate the relevant issues, we use a plasma simulation code as an example. Its structure, which in essence is a time stepping loop with several parallelizable inner loops, is representative of many other computations. We then develop a strategy by which trace-based AD tools can efficiently implement reverse-mode AD on such codes through the use of "parallel tapes", and implement it with the ADOL-C tool. The strategy combines checkpointing at the outer level with parallel trace generation and evaluation at the inner level. We discuss the extensions necessary for ADOL-C to work in a multithreaded environment and the setup necessary for the user code, and present detailed performance results on a shared-memory multiprocessor.

The paper is organized as follows. After a brief description of the relevant features of the plasma code, we address the issues that have to be dealt with in parallel taping and evaluation. Then we present measurements on a shared-memory multiprocessor and discuss implementation improvements. Lastly, we summarize our findings and outline profitable directions of future research.

2 The Quantum-Plasma Code

In this section we briefly describe our example problem. An extensive description can be found in [28, 29]. A quantum plasma can be described as a one-dimensional quantum-mechanical system of N particles with corresponding wave functions $\Psi_i$, $i \in [1, N]$, governed by Eq. 1 in the atomic unit system. The particle interaction is provided by the charge densities $\tau_i$, to which all particles contribute. One possible set of initial states is of Gaussian type, as stated in Eq. 2.

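\[
\mathrm{i} \frac{\partial \Psi_i}{\partial t} = -\frac{1}{2} \frac{\partial^2 \Psi_i}{\partial z^2} + V_i \Psi_i, \qquad \Delta V_i = -4 \pi \tau_i, \qquad i \in [1, N] \tag{1}
\]

\[
\Psi_i = \frac{1}{\sqrt{\sqrt{\pi}\, \sigma_i}} \; e^{-\frac{(z - z_i)^2}{2 \sigma_i^2}} \; e^{\mathrm{i} k_i z} \tag{2}
\]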

Here $z_i$ denotes the initial peak locations, $\sigma_i$ the initial widths and $k_i$ the initial momenta. After applying the Crank-Nicolson discretization scheme and reducing the problem to purely tridiagonal matrices, a simple discrete set of equations can be derived, see Fig. 1. The equidistant discretizations $z = j \cdot \Delta z$, $j \in [0, K]$ and $t = n \cdot \Delta t$, $n \in [0, T]$ with K and T grid points are used for space and time discretization.

1. $M_V x_V = \omega \left[ 1 - \frac{L}{N} \sum_{l=1}^{N} |\Psi_l|^2 \right]$
2. $M_V y_V = u_V$
3. $V = x_V - \frac{v_V \cdot x_V}{1 + v_V \cdot y_V} \, y_V$
4. $V_{i,j} = V_j - 2\pi \left[ j \Delta z - 2 \Delta z^2 \sum_{m=1}^{j-1} \sum_{k=1}^{m} |\Psi_{i,k}|^2 \right]$
5. $M x = U$
6. $M y = u$
7. $\Psi^{n+1} = x - \frac{v \cdot x}{1 + v \cdot y} \, y - \Psi^{n}$

Fig. 1. Complete equation set for one time step iteration and wave-function.

In quantum mechanics the wave functions $\Psi_i$ contain all information about the system. However, the observables are always expected values which are calculated after the propagation terminates. As an example the expected value $\langle \eta \rangle$ given by

\[
\langle \eta \rangle = \sum_{i}^{N} \sum_{j}^{K} z(j) \, \Delta z \, |\Psi_{i,j}|^2 \tag{3}
\]

is computed at the end of the plasma code.
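The double sum in (3) translates directly into two nested loops. The following sketch assumes that the wave functions are stored as one complex vector per particle and that z(j) = j · ∆z; the name expected_value and the storage layout are illustrative and not those of the plasma code.

```cpp
#include <complex>
#include <cstddef>
#include <vector>

// Expected value <eta> = sum_i sum_j z(j) * dz * |psi[i][j]|^2, cf. (3).
// Assumption: psi[i][j] is wave function i at grid point j and z(j) = j * dz.
double expected_value(const std::vector<std::vector<std::complex<double>>>& psi,
                      double dz) {
    double eta = 0.0;
    for (std::size_t i = 0; i < psi.size(); ++i) {
        for (std::size_t j = 0; j < psi[i].size(); ++j) {
            const double z = static_cast<double>(j) * dz;
            eta += z * dz * std::norm(psi[i][j]);  // std::norm returns |psi|^2
        }
    }
    return eta;
}
```

Since this is the only output of the whole computation, it is the single dependent variable from which the reverse sweep starts.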

The matrix $M_V$ is constant throughout the propagation, since it is only the discrete representation of the Laplacian, and therefore its LU decomposition can be precomputed. The potentials can be computed simultaneously since all wave functions are only read in the corresponding steps 1 to 4 in Fig. 1. The previously computed potentials then allow for independent computation of the wave propagations in steps 5 through 7.

Therefore one time-step propagation is fully parallelizable with a synchronization after the potentials computation. Figure 2 depicts the basic structure of the resulting code for the computation of the potentials. The outer loop in line 1 is serial, driving the time-step evaluation for T time steps, with each iteration requiring the completion of the previous one. The inner loops are in a parallel region; both are parallelizable, but there is a barrier after the first loop.

```
 1  for (t = 0; t < T; t++)
 2  #pragma omp parallel
 3  {
 4    #pragma omp for
 5    for (i = 0; i < N; i++)
 6      loop1( ... );
 7    #pragma omp for
 8    for (i = 0; i < N; i++)
 9      loop2( ... );
10  }
```

Fig. 2. Primary iteration code structure of the quantum plasma code [28].
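A compilable variant of this loop structure may help to see where the synchronization happens. In the sketch below, the bodies of the two inner loops are hypothetical stand-ins (an average over all waves and a simple relaxation step), not the actual potential and propagation steps; only the loop nesting and the barrier between the two work-sharing loops are meant to match Fig. 2.

```cpp
#include <vector>

// Serial time loop with two work-shared inner loops per time step.
// The loop bodies are hypothetical placeholders for loop1/loop2 of Fig. 2.
void time_stepping(std::vector<double>& wave, int T) {
    const int N = static_cast<int>(wave.size());
    std::vector<double> potential(N, 0.0);
    for (int t = 0; t < T; ++t) {  // serial: step t+1 needs the result of step t
        #pragma omp parallel
        {
            #pragma omp for
            for (int i = 0; i < N; ++i) {  // "loop1": reads all waves, writes potential[i]
                double sum = 0.0;
                for (int l = 0; l < N; ++l) sum += wave[l];
                potential[i] = sum / N;
            }
            // The implicit barrier at the end of the first omp for guarantees
            // that all potentials are complete before any wave is updated.
            #pragma omp for
            for (int i = 0; i < N; ++i) {  // "loop2": updates wave[i] independently
                wave[i] = 0.5 * (wave[i] + potential[i]);
            }
        }
    }
}
```

Compiled without OpenMP support, the pragmas are ignored and the code runs serially with the same result, which is convenient for validating a parallel derivative code against a serial reference.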

Using Gaussian waves as initializations we need 3 parameters to describe each wave function. In addition, we have to choose time and space discretizations, i.e., $\Delta t$ and $\Delta z$. Thus, if after completion of the code shown in Figure 2 we compute the expected value $\langle \eta \rangle$ as shown in (3) by summing over the wave functions, we obtain overall a code with 3N + 2 input parameters and only one output parameter.

3 Parallel reverse mode using ADOL-C

ADOL-C is an operator-overloading-based AD tool that allows the computation of derivatives for functions given as C or C++ source code [27]. A special data type adouble is provided as a replacement for relevant variables of type double. The values of these variables are stored in a large array and are accessed using the location component of the adouble data type, which provides a unique identifier for each variable. For derivative computation an internal representation of the user function, the so-called "tape", is created in a so-called taping process. Therein, for all instructions based on adoubles, an operation code and the locations of the result and all arguments are written onto a serial tape. Once the taping phase is completed, specific drivers provided by ADOL-C may be applied to the tape to reevaluate the function or to compute derivative information of any order using the forward or reverse mode of AD.
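The idea of a tape that records an operation code together with the locations of the result and the arguments can be illustrated with a toy example. The sketch below is not ADOL-C's tape format or API; the types OpCode, TapeEntry and Tape are hypothetical and support only three operations, which is enough to show how a reverse sweep over the recorded entries yields a gradient.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Toy tape, NOT the ADOL-C format: each entry stores an operation code and
// the locations (indices into the value array) of result and arguments.
enum class OpCode { Input, Add, Mul, Sin };

struct TapeEntry {
    OpCode op;
    std::size_t res;   // location of the result
    std::size_t arg1;  // location of the first argument
    std::size_t arg2;  // location of the second argument (unused for Input, Sin)
};

struct Tape {
    std::vector<TapeEntry> entries;
    std::vector<double> values;  // one slot per location

    std::size_t record(OpCode op, double v, std::size_t a, std::size_t b) {
        values.push_back(v);
        entries.push_back({op, values.size() - 1, a, b});
        return values.size() - 1;
    }
    std::size_t input(double v) { return record(OpCode::Input, v, 0, 0); }
    std::size_t add(std::size_t a, std::size_t b) {
        return record(OpCode::Add, values[a] + values[b], a, b);
    }
    std::size_t mul(std::size_t a, std::size_t b) {
        return record(OpCode::Mul, values[a] * values[b], a, b);
    }
    std::size_t sine(std::size_t a) {
        return record(OpCode::Sin, std::sin(values[a]), a, 0);
    }

    // Reverse sweep: walk the tape backwards and accumulate adjoints.
    std::vector<double> gradient(std::size_t out) const {
        std::vector<double> bar(values.size(), 0.0);
        bar[out] = 1.0;
        for (std::size_t k = entries.size(); k-- > 0;) {
            const TapeEntry& e = entries[k];
            switch (e.op) {
                case OpCode::Add:
                    bar[e.arg1] += bar[e.res];
                    bar[e.arg2] += bar[e.res];
                    break;
                case OpCode::Mul:
                    bar[e.arg1] += bar[e.res] * values[e.arg2];
                    bar[e.arg2] += bar[e.res] * values[e.arg1];
                    break;
                case OpCode::Sin:
                    bar[e.arg1] += bar[e.res] * std::cos(values[e.arg1]);
                    break;
                case OpCode::Input:
                    break;
            }
        }
        return bar;
    }
};
```

For y = x0 · x1 + sin(x0), recording the three operations and calling gradient on the location of y gives the adjoints x1 + cos(x0) and x0 at the two input locations. The cost of the reverse sweep is proportional to the tape length and does not depend on the number of inputs, which is what makes the reverse mode attractive for a code with 3N + 2 inputs and one output.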

ADOL-C has been developed over a long period of time with strictly serial execution in mind. Although the generated tapes have been used for a more detailed analysis and for the construction of parallel derivative codes, e.g., [7], ADOL-C could not be deployed out of the box within a parallel environment. Extensive enhancements have therefore been added to ADOL-C to allow parallel derivative computations in shared-memory environments using OpenMP. Originally, the location of an adouble variable is assigned during its construction using a specific counter. Parallel creation of several variables can produce incorrect results because of a data race on this counter. The obvious solution of protecting the counter in a critical section ensures correctness, but performance was disappointing: even with only two threads in the parallel program, runtime increased by a factor of roughly two rather than decreasing. For this reason, a separate copy of the complete ADOL-C environment must be provided for every worker thread, requiring a thread-safe implementation of ADOL-C.
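The difference between a shared, lock-protected counter and one counter per thread can be sketched in a few lines. The struct ThreadEnv below is a hypothetical stand-in for a per-thread AD environment and does not reflect how ADOL-C organizes its internal state; it only shows that location assignment needs no synchronization once every thread owns its counter.

```cpp
#include <cstddef>

// Hypothetical per-thread AD environment: each thread owns its location
// counter, so handing out locations requires no critical section.
struct ThreadEnv {
    std::size_t next_location = 0;
    std::size_t new_location() { return next_location++; }
};

// Creates per_iter variables in each of n loop iterations and returns the
// total number of locations handed out over all threads.
std::size_t count_locations(int n, int per_iter) {
    std::size_t total = 0;
    #pragma omp parallel
    {
        ThreadEnv env;  // declared inside the region, hence private to the thread
        #pragma omp for
        for (int i = 0; i < n; ++i) {
            for (int k = 0; k < per_iter; ++k) {
                env.new_location();  // unsynchronized, but race-free
            }
        }
        // Only the final per-thread totals are combined, once per thread.
        #pragma omp atomic
        total += env.next_location;
    }
    return total;
}
```

Synchronization is reduced from one critical section per created variable to one atomic update per thread, at the price that locations are unique only within a thread and tapes of different threads must be kept apart.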

Initialization of the OpenMP-parallel regions for ADOL-C is only a matter of adding a macro to the outermost OpenMP statement. Two macros are available that only differ in the way the global tape information is handled. Using ADOLC_OPENMP, this information, including the values of the augmented variables, is always transferred from the serial to the parallel region using firstprivate directives for initialization. For the special case of iterative codes where parallel regions, working on the same data structures, are called repeatedly, the ADOLC_OPENMP_NC macro can be used. Then, the information transfer is performed only once within the iterative process, upon encounter of the first parallel region, through use of the threadprivate feature of OpenMP that makes use of thread-local storage, i.e., global memory local to a thread. Due to the inserted macro, the OpenMP statement has the following structure:

#pragma omp ... ADOLC_OPENMP

or

#pragma omp ... ADOLC_OPENMP_NC

Inside the parallel region, separate tapes may then be created. Each single thread works in its own dedicated AD-environment, and all serial facilities of ADOL-C are applicable as usual.


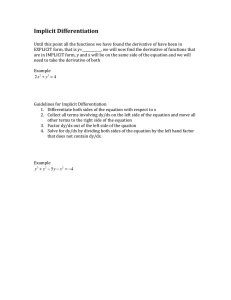

Fig. 3. Basic layout of mixed function and the corresponding derivation process (panels: function evaluation, reverse mode differentiation).

If we consider the inner loop structure of our plasma code, then its derivative computation can be regarded as in Fig. 3, involving serial parts before and after the parallel parts, both for the function and derivative evaluation. The global derivatives can be computed using the tapes created in the serial and parallel parts of the function evaluation. User interaction is indispensable for the correct derivative concatenation of the various tapes, but by applying the concept of external differentiated functions [32], the requirement on the user can be reduced significantly. External differentiated functions are pieces of code that typically are left unchanged by ADOL-C and for which the user is expected to provide their own derivative routine. By applying ADOL-C in a hierarchical fashion, and encapsulating the parallel execution of the inner loops in each time step using the external differentiated function concept [32], we can evaluate, tape and adjoin different independent loop iterations in a parallel fashion. In addition, we have to ensure that threads are locked to tapes in the parallel taping of one time step in Figure 2, so that the same thread writes the tape belonging to a particular time iteration before and after the barrier, and that the integer information describing the pivot sequence in each iteration is stored as well. Detailed explanations and descriptions can be found in [28, 29, 32, 33].
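The principle behind adjoining independent loop iterations in parallel can be shown without any AD tool. In the sketch below, body is a hypothetical loop body and body_adjoint its hand-written derivative routine, playing the role that a user-supplied routine plays for an external differentiated function; the names evaluate_sum and adjoint_sum are illustrative only.

```cpp
#include <cmath>
#include <vector>

// Hypothetical body of one independent loop iteration.
static double body(double x) { return x * std::sin(x); }

// Hand-written adjoint of body: incoming adjoint ybar times d body / d x.
static double body_adjoint(double x, double ybar) {
    return ybar * (std::sin(x) + x * std::cos(x));
}

// Function evaluation: y = sum_i body(x[i]), iterations run in parallel.
double evaluate_sum(const std::vector<double>& x) {
    const int n = static_cast<int>(x.size());
    double y = 0.0;
    #pragma omp parallel for reduction(+ : y)
    for (int i = 0; i < n; ++i) {
        y += body(x[i]);
    }
    return y;
}

// Reverse mode: because the iterations are independent, each adjoint
// xbar[i] depends only on x[i] and ybar and can be computed in parallel.
std::vector<double> adjoint_sum(const std::vector<double>& x, double ybar) {
    const int n = static_cast<int>(x.size());
    std::vector<double> xbar(n, 0.0);
    #pragma omp parallel for
    for (int i = 0; i < n; ++i) {
        xbar[i] = body_adjoint(x[i], ybar);
    }
    return xbar;
}
```

In the tape-based setting, each call to body_adjoint would correspond to the reverse sweep over the tape written by the thread that executed iteration i, which is why threads have to stay associated with their tapes.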

4 Experimental Results

Reasonably realistic plasmas require at least 1000 wave functions, so the reverse mode of differentiation is clearly the method of choice for the computation of derivatives of $\langle \eta \rangle$. However, for our purposes a reduced constellation is sufficient:

• simulation time t = 30 atomic time units, T = 40000 time steps

• length of the simulation interval L = 200 atomic length units, discretized with K = 10000 steps

We will not run the code through all time steps, but to preserve the numerical stability of the code, the time discretization is based on 40000 steps nevertheless.

If we were to use N = 24 particles, differentiating even a single time step serially would require about 2.4 GB of tape storage, or roughly 96 TB for the full run of 40000 time steps. Hence, checkpointing is a necessity, and appropriate facilities offering binomial checkpointing [43] have recently been incorporated into ADOL-C [34].
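The trade of storage for recomputation can be illustrated on a scalar recurrence. The sketch below uses equidistant checkpoints, which is simpler than, and not equivalent to, the binomial checkpointing of [43]; the functions step and step_adjoint are hypothetical stand-ins for one time step and its adjoint.

```cpp
#include <cmath>
#include <vector>

// Hypothetical time step x_{t+1} = step(x_t) and its adjoint.
static double step(double x) { return x + 0.1 * std::sin(x); }
static double step_adjoint(double x, double xbar_next) {
    return xbar_next * (1.0 + 0.1 * std::cos(x));
}

// Returns d x_T / d x_0 while storing only every stride-th state
// (equidistant checkpoints, NOT the binomial scheme); stride must be >= 1.
double gradient_with_checkpoints(double x0, int T, int stride) {
    std::vector<double> checkpoints;  // x_0, x_stride, x_{2 stride}, ...
    double x = x0;
    for (int t = 0; t < T; ++t) {
        if (t % stride == 0) checkpoints.push_back(x);
        x = step(x);
    }
    double xbar = 1.0;  // adjoint of the final state x_T
    for (int c = static_cast<int>(checkpoints.size()) - 1; c >= 0; --c) {
        const int begin = c * stride;
        const int end = (begin + stride < T) ? begin + stride : T;
        // Recompute the states of this segment from its checkpoint ...
        std::vector<double> states;
        double xs = checkpoints[c];
        for (int t = begin; t < end; ++t) {
            states.push_back(xs);
            xs = step(xs);
        }
        // ... and then reverse the segment step by step.
        for (int t = end - 1; t >= begin; --t) {
            xbar = step_adjoint(states[t - begin], xbar);
        }
    }
    return xbar;
}
```

At most stride states plus the checkpoints are alive at any time, at the cost of one additional forward evaluation of each segment. In the plasma code the role of the stored segment states is played by the tape of a time step, so the same idea bounds tape storage by a few time steps instead of all 40000.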

To get a first idea of code behavior, we ran a simulation of just 8 particles for only 4 time steps under the control of the performance analysis software VNG – Vampir Next Generation [12] on the SGI ALTIX 4700 system installed at the TU Dresden. This system contains 1024 Intel Itanium II Montecito processors (dual core), running at 1.6 GHz, with 4 GB main memory per core, connected with the SGI-proprietary NumaLink4 interconnect, thus offering a peak performance of 13.1 TFlops/sec and 6.5 TB of total main memory.

The observed runtime behavior is shown in Fig. 4. As can be seen, the initial computations (0-2s), their derivative counterpart (15.7-17.2s) as well as the evaluation of the target function and its derivative counterpart (4.4-5.2s) require a significant part of the computation time. When computing all 40000 time steps, however, the relevance of this part of the computation will be extremely low. We also note significant gaps in the parallel execution between different time steps due to serial calculations.

Fig. 4. Parallel reverse mode differentiation of the plasma code – original version

Further analysis identified the relevant serializing routines of ADOL-C, as discussed in [32]. Most of them could either be removed from the code or be replaced by parallel versions. The performance of the resulting code, which also makes use of threadprivate storage, is shown in Fig. 5. Due to the virtual elimination of serial sections between time step iterations in the parallel reverse phase (5-14s), it will achieve much better scaling, in particular when applied to higher numbers of processors and more time steps. These two variants of the reverse mode code will be referred to as the "original" and "new" variant in the sequel.

As a more realistic example, we now consider a plasma with N = 24 particles, for which we compute the first 50 of the 40000 time steps. After this period, the correctness of the derivatives can already be validated. In addition to the reverse mode based versions of the derivative code discussed so far, we also ran a version that uses the tapeless forward mode, evaluating derivative tapes in parallel during the original parallel execution (see [29, 35] for details).

Figure 6 depicts the speedup and runtime results measured for the three code versions when using 5 checkpoints.

We note that all variants of the derivative code achieved a higher speedup than the original code they are based on. This is not entirely surprising as the derivative computation requires more time, thus potentially decreasing the importance of existing synchronization points. As expected, the tapeless forward mode, which simply mimics the parallel behavior of the original code, achieves the best speedups, and for a larger number of processors, the reduced synchronization overhead in the "new" reverse mode code considerably improves scaling behavior over the "original" code. In terms of runtime, though, the parallel reverse mode is clearly superior, as shown in the right part of Figure 6, and is the key to derivative computations for realistic configurations.

Fig. 5. Parallel reverse mode differentiation of the plasma code – new version

Fig. 6. Speedups and runtimes for the parallel differentiation of the plasma code for N = 24 wave functions, 50 time-steps, and 5 checkpoints

5 Conclusions

Using a plasma code as an example for typical time stepping computations with inner loops parallelized with OpenMP, we developed a strategy for efficient reverse mode computations for tape-based AD tools. The strategy involves a hierarchical approach to derivative computation, encapsulating parallel taping iterations in a thread-safe environment. Extensions to the ADOL-C tool, which was the basis for these experiments, then result in an AD environment which allows the parallel execution of function evaluation, tracing and adjoining in a fashion that requires relatively little user input and can easily be combined with the checkpointing approach that is necessary at the outer loop level to keep memory requirements realistic.

By employing advanced OpenMP features such as threadprivate variables and through a careful analysis of ADOL-C utility routines, the scaling behavior of an initial reverse mode implementation could be enhanced considerably. Currently, as can be seen in Figs. 4 and 5, the taping processes, shown in dark gray, are the most time consuming, suggesting that replacing taping steps by a reevaluation of tapes is the key to further improvements. This is true in particular in the multicore and multithreaded shared-memory environments that will be the building blocks of commodity hardware in the years to come, as parallel threads will be idle and waiting for work. Benchmarks have already shown that efficient multiprogramming techniques can mask main memory latency even for memory-intensive computations [11].

References

1. Automatic parallelism in differentiation of Fourier transforms. In: Proc. 18th ACM Symp. on Applied Computing, Melbourne, Florida, USA, March 9–12, 2003, pp. 148–152. ACM Press

2. Asanovic, K., Bodik, R., Catanzaro, B.C., Gebis, J.J., Husbands, P., Keutzer, K., Patterson, D.A., Plishker, W.L., Shalf, J., Williams, S.W., Yelick, K.A.: The landscape of parallel computing research: A view from Berkeley. Tech. rep., EECS Department, University of California, Berkeley (2006)

3. Benary, J.: Parallelism in the reverse mode. In: Berz et al. [4], pp. 137–147

4. Berz, M., Bischof, C., Corliss, G., Griewank, A. (eds.): Computational Differentiation: Techniques, Applications and Tools. SIAM, Philadelphia, PA (1996)

5. Bischof, C., Green, L., Haigler, K., Knauff, T.: Parallel calculation of sensitivity derivatives for aircraft design using automatic differentiation

6. Bischof, C., Griewank, A., Juedes, D.: Exploiting parallelism in automatic differentiation. In: E. Houstis, Y. Muraoka (eds.) Proc. 1991 Int. Conf. on Supercomputing, pp. 146–153. ACM Press, Baltimore, Md. (1991)

7. Bischof, C.H.: Issues in Parallel Automatic Differentiation. In: Griewank and Corliss [26], pp. 100–113

8. Bischof, C.H., Bücker, H.M., Wu, P.: Time-parallel computation of pseudo-adjoints for a leapfrog scheme. International Journal of High Speed Computing 12(1), 1–27 (2004)

9. Bischof, C.H., Hovland, P.D.: Automatic differentiation: Parallel computation. In: C.A. Floudas, P.M. Pardalos (eds.) Encyclopedia of Optimization, vol. I, pp. 102–108. Kluwer Academic Publishers, Dordrecht, The Netherlands (2001)

10. Bischof, C.H., an Mey, D., Terboven, C., Sarholz, S.: Parallel computers everywhere. In: Proc. 16th Conf. on the Computation of Electromagnetic Fields (COMPUMAG2007), pp. 693–700. Informationstechnische Gesellschaft im VDE (2007)

11. Bischof, C.H., an Mey, D., Terboven, C., Sarholz, S.: Petaflops basics - performance from SMP building blocks. In: D. Bader (ed.) Petascale Computing - Algorithms and Applications, pp. 311–331. Chapman & Hall/CRC (2007)

12. Brunst, H., Nagel, W.E.: Scalable Performance Analysis of Parallel Systems: Concepts and Experiences. In: G.R. Joubert, W.E. Nagel, F.J. Peters, W.V. Walter (eds.) PARALLEL COMPUTING: Software Technology, Algorithms, Architectures and Applications, Advances in Parallel Computing, vol. 13, pp. 737–744. Elsevier, Dresden, Germany (2003)


13. Bücker, H.M., Corliss, G.F., Hovland, P.D., Naumann, U., Norris, B. (eds.): Automatic Differentiation: Applications, Theory, and Implementations, Lecture Notes in Computational Science and Engineering, vol. 50. Springer, New York, NY (2005)

14. Bücker, H.M., Lang, B., Rasch, A., Bischof, C.H., an Mey, D.: Explicit loop scheduling in OpenMP for parallel automatic differentiation. In: J.N. Almhana, V.C. Bhavsar (eds.) Proc. 16th Annual Int. Symp. on High Performance Computing Systems and Applications, Moncton, NB, Canada, June 16–19, 2002, pp. 121–126

15. Bücker, H.M., Rasch, A., Vehreschild, A.: Automatic Generation of Parallel Code for Hessian Computations. Preprint of the Institute for Scientific Computing RWTH–CS–SC–06–01, RWTH Aachen University (2006)

16. Bücker, H.M., Rasch, A., Wolf, A.: A class of OpenMP applications involving nested parallelism. In: Proc. 19th ACM Symp. on Applied Computing, Nicosia, Cyprus, March 14–17, 2004, pp. 220–224. ACM Press

17. Carle, A., Fagan, M.: Automatically differentiating MPI-1 datatypes: The complete story. In: Corliss et al. [18], chap. 25, pp. 215–222

18. Corliss, G., Faure, C., Griewank, A., Hascoët, L., Naumann, U. (eds.): Automatic Differentiation of Algorithms: From Simulation to Optimization, Computer and Information Science. Springer, New York, NY (2002)

19. Dagum, L., Menon, R.: OpenMP: An Industry-Standard API for Shared-Memory Programming. IEEE Computational Science and Engineering 5(1), 46–55 (1998)

20. Faure, C., Dutto, P.: Extension of Odyssee to the MPI Library - Direct Mode. INRIA Rapport de Recherche 3715, RWTH Aachen University, Sophia-Antipolis (1999)

21. Faure, C., Dutto, P.: Extension of Odyssee to the MPI Library - Reverse Mode. INRIA Rapport de Recherche 3774, RWTH Aachen University, Sophia-Antipolis (1999)

22. Gerndt, A., Sarholz, S., Wolter, M., an Mey, D., Bischof, C., Kuhlen, T.: Nested OpenMP for efficient computation of 3D critical points in multi-block CFD datasets. In: Proc. ACM/IEEE SC 2006 Conference (2006)

23. Giering, R., Kaminski, T., Todling, R., Errico, R., Gelaro, R., Winslow, N.: Tangent linear and adjoint versions of NASA/GMAO’s Fortran 90 global weather forecast model. In: Bücker et al. [13], pp. 275–284

24. Griewank, A.: Evaluating Derivatives: Principles and Techniques of Algorithmic Differentiation. No. 19 in Frontiers in Appl. Math. SIAM, Philadelphia, PA (2000)

25. Griewank, A.: A mathematical view of automatic differentiation. Acta Numerica 12, 1–78 (2003)

26. Griewank, A., Corliss, G.F. (eds.): Automatic Differentiation of Algorithms: Theory, Implementation, and Application. SIAM, Philadelphia, PA (1991)

27. Griewank, A., Juedes, D., Utke, J.: Algorithm 755: ADOL-C: A Package for the Automatic Differentiation of Algorithms Written in C/C++. ACM Transactions on Mathematical Software 22(2), 131–167 (1996)

28. Guertler, N.: Simulation eines eindimensionalen idealen Quantenplasmas auf Parallelrechnern (2006). Physics diploma thesis, RWTH-Aachen University

29. Guertler, N.: Parallel Automatic Differentiation of a Quantum Plasma Code (2007). Computer science diploma thesis, RWTH-Aachen University

30. Heimbach, P., Hill, C., Giering, R.: Automatic generation of efficient adjoint code for a parallel Navier-Stokes solver. In: P.M.A. Sloot, C.J.K. Tan, J.J. Dongarra, A.G. Hoekstra (eds.) Computational Science – ICCS 2002, Proc. Int. Conf. on Computational Science, Amsterdam, The Netherlands, April 21–24, 2002. Part II, Lecture Notes in Computer Science, vol. 2330, pp. 1019–1028. Springer, Berlin (2002)


31. Hovland, P.D., Bischof, C.H.: Automatic differentiation of message-passing parallel programs. In: Proc. 1st Merged Int. Parallel Processing Symposium and Symposium on Parallel and Distributed Processing, pp. 98–104. IEEE Computer Society Press

32. Kowarz, A.: Advanced Concepts of Automatic Differentiation based on Operator Overloading. Ph.D. thesis, TU Dresden (2008). To appear

33. Kowarz, A., Walther, A.: Parallel derivative computation using ADOL-C. Tech. rep., Institute of Scientific Computing, Technische Universität Dresden, 01062 Dresden, Germany

34. Kowarz, A., Walther, A.: Optimal Checkpointing for Time-Stepping Procedures in ADOL-C. In: V.N. Alexandrov, G.D. van Albada, P.M.A. Sloot, J. Dongarra (eds.) Computational Science – ICCS 2006, Lecture Notes in Computer Science, vol. 3994, pp. 541–549. Springer, Heidelberg (2006)

35. Kowarz, A., Walther, A.: Efficient Calculation of Sensitivities for Optimization Problems. Discussiones Mathematicae. Differential Inclusions, Control and Optimization 27, 119–134 (2007). To appear

36. Luca, L.D., Musmanno, R.: A parallel automatic differentiation algorithm for simulation models (1997)

37. Mancini, M.: A parallel hierarchical approach for automatic differentiation. In: Corliss et al. [18], chap. 27, pp. 231–236

38. Heimbach, P., Hill, C., Giering, R.: An efficient exact adjoint of the parallel MIT general circulation model, generated via automatic differentiation. Future Generation Computer Systems 21(8), 1356–1371 (2005)

39. Rall, L.B.: Automatic Differentiation: Techniques and Applications, Lecture Notes in Computer Science, vol. 120. Springer, Berlin (1981)

40. Rasch, A., Bücker, H.M., Bischof, C.H.: Automatic Computation of Sensitivities for a Parallel Aerodynamic Simulation. Preprint of the Institute for Scientific Computing RWTH–CS–SC–07–10, RWTH Aachen University, Aachen (2007)

41. Spiegel, A., an Mey, D., Bischof, C.: Hybrid parallelization of CFD applications with dynamic thread balancing. In: Proc. PARA04 Workshop, Lyngby, Denmark, June 2004, Lecture Notes in Computer Science, vol. 3732, pp. 433–441. Springer Verlag (2006)

42. Terboven, C., Spiegel, A., an Mey, D., Gross, S., Reichelt, V.: Parallelization of the C++ Navier-Stokes solver DROPS with OpenMP. In: Parallel Computing (ParCo 2005): Current & Future Issues of High-End Computing, Malaga, Spain, September 2005, NIC Series, vol. 33, pp. 431–438 (2006)

43. Walther, A., Griewank, A.: Advantages of binomial checkpointing for memory-reduced adjoint calculations. In: M. Feistauer, V. Dolejsi, P. Knobloch, K. Najzar (eds.) Numerical mathematics and advanced applications, Proceedings ENUMATH 2003, pp. 834–843. Springer (2004)