Frequency domain representation and singular value decomposition

advertisement

EOLSS Contribution 6.43.13.4

Frequency domain representation and singular value

decomposition A.C. Antoulas

Department of Electrical and Computer Engineering

Rice University

Houston, Texas 77251-1892, USA

e-mail: aca@rice.edu - fax: +1-713-348-5686

URL: http://www.ece.rice.edu/˜aca

June 12, 2001

Abstract

This contribution reviews the external and the internal representations of linear time-invariant systems.

This is done both in the time and the frequency domains. The realization problem is then discussed. Given

the importance of norms in robust control and model reduction, the final part of this contribution is dedicated

to the definition and computation of various norms. Again, the interplay between time and frequency norms

is emphasized.

Key words: linear systems, internal representation, external representation, Laplace transform, Z -transform,

vector norms, matrix norms, Singular Value Decomposition, convolution operator, Hankel operator, reachability and observability gramians.

This work was supported in part by the NSF through Grants DMS-9972591 and CCR-9988393.

1

Introduction

EOLSS 6.43.13.4

Contents

1

Introduction

2

Preliminaries

2.1 Norms of vectors, matrices and the SVD . . . . . . . . .

2.1.1 Norms of finite-dimensional vectors and matrices

2.1.2 The singular value decomposition . . . . . . . .

2.1.3 The Lebesgue spaces `p and Lp . . . . . . . . .

2.1.4 The Hardy spaces h p and Hp . . . . . . . . . . .

2.1.5 The Hilbert spaces `2 and L2 . . . . . . . . . . .

2.2 The Laplace transform and the Z -transform . . . . . . .

2.2.1 Some properties of the Laplace transform . . . .

2.2.2 Some properties of the Z -transform . . . . . . .

3

4

5

2

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

3

3

3

5

7

8

9

10

10

11

The external and the internal representation of linear systems

3.1 External representation . . . . . . . . . . . . . . . . . . . .

3.2 Internal representation . . . . . . . . . . . . . . . . . . . .

3.2.1 Solution in the time domain . . . . . . . . . . . . .

3.2.2 Solution in the frequency domain . . . . . . . . . .

3.2.3 The concepts of reachability and observability . . . .

3.2.4 The infinite gramians . . . . . . . . . . . . . . . . .

3.3 The realization problem . . . . . . . . . . . . . . . . . . . .

3.3.1 The solution of the realization problem . . . . . . .

3.3.2 Realization of proper rational matrix functions . . .

3.3.3 The partial realization problem . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

12

13

15

17

18

18

21

24

27

29

31

Time and frequency domain interpretation of various norms

4.1 The convolution operator and the Hankel operator . . . . .

4.2 Computation of the singular values of S . . . . . . . . . .

4.3 Computation of the singular values of H . . . . . . . . . .

4.4 Computation of various norms . . . . . . . . . . . . . . .

4.4.1 The H2 norm . . . . . . . . . . . . . . . . . . . .

4.4.2 The H1 norm . . . . . . . . . . . . . . . . . . .

4.4.3 The Hilbert-Schmidt norm . . . . . . . . . . . . .

4.4.4 Summary of norms . . . . . . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

32

32

33

35

36

36

37

37

39

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

Appendix: Glossary

42

List of Tables

1

2

3

4

5

Basic Laplace transform properties . . . . . . . . . . . . . . .

Basic Z -transform properties . . . . . . . . . . . . . . . . . .

I/O and I/S/O representation of continuous-time linear systems

I/O and I/S/O representation of discrete-time linear systems . .

Norms of linear systems and their relationships . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

11

12

19

20

40

1 Introduction

One of the most powerful tools in the analysis and synthesis of linear time-invariant systems is the equivalence

between the time domain and the frequency domain. Thus additional insight into problems in this area is

obtained by viewing them both in time and in frequency. This dual nature accounts for the presence and great

success of linear systems both in engineering theory and applications.

2

Preliminaries

EOLSS 6.43.13.4

In this contribution we will provide an overview of certain results concerning the analysis of linear dynamical systems. Time and frequency domain frameworks are inextricably connected. Therefore together with

frequency domain considerations in the sequel, unavoidably, a good deal of time domain considerations are

included as well.

Our goals are as follows. First, basic system representations will be introduced, both in time and in frequency. Then the ensuing realization problem is formulated and solved. Roughly speaking the realization

problem entails the construction of a state space model from frequency response data.

The second goal is to introduce various norms for linear systems. This is of great importance both in robust

control and in system approximation/model reduction. For details see e.g. [14, 31, 7, 24, 6, 4].

First it is shown that besides the convolution operator we need to attach a second operator to every linear

system, namely the Hankel operator. The main attribute of this operator is that it has a discrete set of singular

values, known as the Hankel singular values. These singular values are main ingredients of numerous computations involving robust control and model reduction of linear systems. Besides the Hankel norm, we discuss

various p-norms, where p = 1; 2; 1. It turns out that norms which are obtained for p = 2 have both a time

domain and a frequency domain interpretation. The rest have an interpretation in the time domain only.

The contribution is organized as follows. The next section is dedicated to a collection of useful results on

two topics: Norms and the SVD on the one hand and the Laplace and discrete-Laplace transforms on the other.

Two tables 1, 2, summarize the salient properties of these two transforms. Section 3 develops the external

and internal representations of linear systems. This is done both in the time and frequency domains, with the

results summarized in two further tables 3, 4. This discussion is followed by the formulation and solution of

the realization problem. The final section 4 is dedicated to the introduction of various norms for linear systems.

The basic features of these norms are summarized in the fifth and last table 5.

2 Preliminaries

2.1 Norms of vectors, matrices and the SVD

In this section we will first review some material from linear algebra which pertains to norms of vectors, norms

of operators (matrices), both in finite and infinite dimensions. The latter are of importance because a linear

system can be viewed as a map between infinite dimansional spaces. The Singular Value Decomposition (SVD)

will also be introduced and its properties briefly discussed. Textbooks pertaining to the material discussed in

this section are [16, 18, 19, 21, 27].

2.1.1

Norms of finite-dimensional vectors and matrices

Let X be a linear space over the field K which is either the field of reals R or that of complex numbers C . A

norm on X is a function : X ! R , such that the following three properties are satisfied. Strict positiveness:

(x) 0; 8 x 2 X , with equality if x = 0; triangle inequality: (x + y) (x) + (y), 8 x; y 2 X ;

positive homogeneity: (x) = jj (x), 8 2 K , 8 x 2 X . For vectors x 2 R n or x 2 C n the Hölder or

p-norms are defined as follows:

k x kp :=

8 P

>

>

<

>

>

:

i2n

1

j xi jp ; 1 p < 1

p

maxi2n j xi j; p = 1

0

; x=B

x1

1

.. C

. A

xn

where n := f1; 2; ; ng, n 2 N . The 2-norm satisfies the Cauchy-Schwartz inequality:

jx yj k x k2 k y k2

3

(2.1)

Preliminaries

EOLSS 6.43.13.4

with equality holding iff y = x, 2 K . An important property of the 2-norm is that it is invariant under unitary

(orthogonal) transformations. Let U be n n and UU = In . It follows that k Ux k22 = x U Ux = x x =k

x k22 . The following relationship between the Hölder norms for p = 1; 2; 1 holds:

k x k1 k x k 2 k x k 1

One type of matrix norms are those which are induced by the vector p-norms defined above. More precisely

A

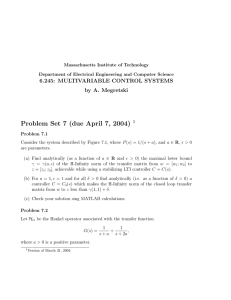

Figure 1:

ellipsoid.

A maps the unit sphere into an ellipsoid.

The singular values are the lengths of the semi-axes of the

for A 2 C mn

kq

k A kp;q := sup kkAx

x6=0 x kp

is the induced p; q -norm of A. In particular, for p = q = 1; 2; 1 the following expressions hold

X

X

1

k A k1 = max

j

Aij j; k A k1 = max jAij j; k A k2 = [max (AA )℄ 2

i2n

j 2m

j 2m

(2.2)

i2n

Besides the induced matrix norms, there exist other norms. One such class is the Schatten p-norms of matrices.

These non-induced norms are unitarily invariant. Let i (A), 1 i min(m; n), be the singular values of A,

i.e. the square roots of the eigenvalues of AA . Then

0

11

p

X p

A

k A kp :=

i (A) ; 1 p < 1

(2.3)

i2m

It follows that the Schatten norm for p = 1 is

k A k1= max(A)

which is the same as the 2-induced norm of A. For p = 1 we obtain the trace norm

k A k1 =

X

i2m

4

i (A)

Preliminaries

EOLSS 6.43.13.4

For p = 2 the resulting norm is also known as the Frobenius norm, the Schatten 2-norm, or the HilbertSchmidt norm of A:

0

11

2

X

1

1

2

A

k A kF =

i (A) = (trae (A A)) 2 = (trae (AA )) 2

(2.4)

i2m

where trae () denotes the trace of a matrix.

2.1.2

The singular value decomposition

Given a matrix A 2 K nm , n m, let the nonnegative numbers 1 2 n 0 be the positive

square roots of the eigenvalues of AA . There exist unitary matrices U 2 K nn , UU = In , and V 2 K mm ,

V V = Im , such that

0

B

B

A = U V where = ( 0) 2 R nm and := B

B

1

1

2

..

.

n

C

C

C

C

A

2 R nn

(2.5)

The decomposition (2.5) is called the singular value decomposition (SVD) of the matrix A; the i ’s are called

the singular values of A while the columns of U , V

un); V = (v1 v2 vm )

U = (u1 u2

are called the left, right singular vectors of A, respectively. These singular vectors are the eigenvectors of

AA , A A respectively. Thus

Avi = i ui ; i = 1; ; n

Example 2.1 Consider the matrix

p2

2

p

2

2

!

A=

!

1 1

1 3

and A A =

1

0

p1

2

!

. The eigenvalue decomposition of the matrices

are:

AA = U 2 U ; A A = V 2 V where

U=

p1

2

q

AA =

1

1

p

1

1

!

1 0

0 2

; =

p

q

1 = 2 + 2; 2 = 2

0

!

B

; V =B

p1

2 1

p

1+

p2 2

1

p1

1

22 C

1p

p2

C

A

22

2

Notice that A maps the unit disc in the plane to the ellipse with half-axes 1 and 2 ; more precisely v1 7! 1 u1

and v2 7! 2 u2 (see figure 1). It follows that X = 2 u2 v2 is a perturbation of smallest 2-norm (equal to 2 )

such that A X is singular:

1

X=

2

p

1 1p 2

1 2 1

!

)

5

1

A X=

2

p

1 1 + p2

1 1+ 2

!

Preliminaries

EOLSS 6.43.13.4

The singular values of A are unique. The left-, right-singular vectors corresponding to singular values of

multiplicity one are also uniquely determined (up to a sign). Thus the SVD is unique in case the matrix A is

square and the singular values have multiplicity one.

Lemma 2.1 The 2-induced norm of A is equal to its largest singular value 1

Proof. By definition

=k A k2

ind .

k2 = sup x A Ax

k A k22 = sup kkAx

x x

x k2

2

x=

6 0

x=

6 0

2

Let y be defined as y := V x where V is the matrix containing the eigenvectors of A A, i.e. A A = V V .

Substituting in the above expression we obtain

n2

2 2

2 2

x xAxAx = 1 yy12 ++ ++ y2nyn 12

n

1

This expression is maximized and equals 12 , for y

= e1 , i.e. x = v1 , where v1 is the first column of V .

Theorem 2.1 Every matrix A with entries in K has a singular value decomposition.

Proof. We will give two proofs of this result. (a) The first is based on the lemma above. Let 1 be the 2norm of A; there exist unity length vectors x1 2 K m , x1 x1 = 1, and y1 2 K n , y1 y1 = 1, such that Ax1 = 1 y1 .

Define the unitary matrices V1 , U1 so that their first column is x1 , y1 respectively:

V1 := [x1 V1 ℄; U1 := [y1 U1 ℄

It follows that

0

U1 AV1 = B

1 w B

0

and consequently

1

C

A

=: A1 where w 2 K m

0

U1 AA U1 = A1 A1 = B

12 + w w w B BB Bw

1

1

C

A

Since the 2-norm of every matrix is bigger than or equal to the norm of any of its submatrices, we conclude that

12 + w w

k AA k = 12

The implication is that w must be the zero vector w

= 0. Thus

0

1 0

U1 AV1 = B

0 B

1

C

A

1) (m 1).

= 0; the matrices U , , V are partitioned in two blocks the first

The procedure is now repeated for B which has size (n

Assume that in (2.5) r

having r columns:

> 0 while r+1

U = [U1 U2 ℄; =

0

1 = B

1

1 0

0 2

!

and V = [V1 V2 ℄

1

..

.

r

C

A

> 0; 2 = 0 2 R (n r)(n

6

r)

(2.6)

Preliminaries

EOLSS 6.43.13.4

Corollary 2.1 Given (2.5) and (2.6) the following statements hold.

rank A = r

span ol A = span ol U1

ker A = span ol V2

Dyadic decomposition. A has a decomposition as a sum of r matrices of rank one:

A = 1 u1 v1 + 2 u2 v2 + r ur vr

(2.7)

The orthogonal projection onto the span of the columns of A is U1U1

The orthogonal projection onto the kernel of A is V2V2

The orthogonal projection onto the orthogonal complement of the span of the columns of A is U2 U2

The orthogonal projection onto the orthogonal complement of the kernel of A is V1V1

q

The Frobenius norm of A is k A kF = 12 + + n2 .

For symmetric matrices the SVD can be readily obtained from the EVD (Eigenvalue Decomposition).

Let the latter be: A = V V . Define by S := diag (sgn1 ; ; sgnn ), where sgn is the signum

function; it equals +1 if > 0, 1 if < 0 and 0 if = 0. Then A = U V where U := V S and

:= diag (j1 j; ; jn j).

2.1.3

The Lebesgue spaces `p and Lp

In this section we will define the p-norms of infinite sequences and functions. These are functions of one real

variable, which in the context of system theory is taken to be time. Consequently, these are time-domain spaces

and norms. Let

`n (I ) := ff : I ! K n ; I Zg

denote the set of sequences of vectors in K n which is either R or C . Frequent choices of I :

I = Z . The p-norms of the elements of this space are defined as:

8 1

P

>

p p; 1p<1

>

<

k

f

(

t

)

k

p

t2I

; f 2 `n (I )

k f kp:= >

>

:

The corresponding `p spaces are:

`np (I )

supt2I k f (t) kp ; p = 1

:= ff

= R , I = R + or I = R

8 R

>

>

<

k f kp:= >

>

:

The corresponding

t2I

(2.8)

2 `n(I ); k f kp< 1g; 1 p 1

For functions of a continuous variable, let

Ln(I ) := ff

Frequent choices of I : I

I = Z, I = Z+ or

:

I !

K n;

I Rg

, and the p-norms are:

1

k f (t) kpp dt p ; 1 p < 1

supt2I k f (t) kp ; p = 1

; f 2 Ln (I )

Lp spaces are:

Lnp(I ) := ff 2 Ln(I ); k f kp< 1g; 1 p 1

7

(2.9)

Preliminaries

2.1.4

EOLSS 6.43.13.4

The Hardy spaces hp and Hp

In this section we consider norms of functions of one complex variable. Thus in the system theoretic context,

this variable is taken to be complex frequency and the resulting spaces and norms are frequency-domain ones.

Let D C denote the (open) unit disc, and let F : C ! C qr be a matrix-valued function, analytic in

D. Its p-norm is defined as follows:

k F kh

p

Z 2

1

:=

k F (rej ) kpp d

sup

2 jrj<1 0

!1

p

; 1p<1

k F kh1 := sup k F (z) kp; p = 1

z 2D

We will choose k F (z0 ) kp to be the Schatten p-norm of F evaluated at z

choices. The resulting hp spaces are defined as follows:

hpqr

:= hpqr (D) := fF as above with :

= z0 ; however, there are other possible

k F kh < 1g

p

The following special cases are worth noting:

!1

Z 2

2

h

i

k F kh2 = 21 sup trae F (re j )F (rej ) d

jrj<1 0

where trae () denotes the trace, and () denotes complex conjugation and transposition; furthermore

k F kh1 = sup max (F (z))

(2.10)

(2.11)

z 2D

Let C C denote the (open) left half of the complex plane: s = x + jy 2 C ,

complex-valued functions F as defined above, which are analytic in C . Then

x < 0.

Consider the

qr

1

1

p

k F (x + jy) kp dy ; 1 p < 1

k F kH := sup

x<0 1

Z

p

p

k F kH1 := sup k F (z) kp; p = 1

z 2C

Again k F (s0 ) kp is chosen to be the Schatten p-norm of

defined analogously to the hp spaces:

F

evaluated at s

= s0 .

The resulting

Hp spaces are

Hpqr := Hpqr (C ) := fF as above with : k F kH < 1g

p

As before, the following special cases are worth noting:

1

1

k F kH2 = sup trae [F (x jy)F (x + jy)℄ dy 2

(2.12)

x<0 1

where trae () denotes the trace, and () denotes complex conjugation and transposition; furthermore

Z

k F kH1 = sup max (F (s))

s2C

(2.13)

The suprema in the formulae above can be computed by means of the maximum modulus theorem, which

states that a function f continuous inside a domain D C as well as on its boundary D and analytic inside

D, attains its maximum on the boundary D of D. Thus (2.10), (2.11), (2.12), (2.13) become:

8

Preliminaries

EOLSS 6.43.13.4

Z 2

h

k F kh2 := 21 trae F (e

0

j )F (ej )

i

d

1

2

k F kh1 := sup max F (ej )

2[0;2℄

k F kH2 :=

Z

(2.14)

1

1

2

trae [F ( jy)F (jy)℄ dy

1

k F kH1 := sup max (F (jy))

y2R

(2.15)

(2.16)

(2.17)

If F has no poles on the unit circle or the j! -axis, but is not necessarily analytic in the corresponding

domains, the h1 , H1 norms are not defined. Instead the `1 , L1 norm of F is defined respectively as follows:

k F k`1 := sup max (F (ej )); k F kL1 := sup

max (F (jy))

y

where in the first expression the supremum is taken over 2 [0; 2 ℄, while in the second the supremum is taken

over y 2 ( 1; 1).

2.1.5

The Hilbert spaces `2 and L2

The spaces `2 (I ) and L2 (I ) are Hilbert spaces, that is linear spaces where not only a norm but an inner product

is defined as well.1 For I = Z and I = R respectively, the inner product is defined as follows:

hx; yi`2 :=

X

t2I

x (t)y(t)

(2.18)

1 Z hx; yiL2 := 2 x (t)y(t)dt

I

(2.19)

where as before () denotes complex conjugation and transposition. For I = Z and I = R respectively,

elements (vectors or matrices) with entries in `2 (Z) and L2 (R ) have a transform defined as follows:

f

8 P

1 f (t) t

>

<

1

7 ! F () := > R 1

:

1 f (t)e

t dt

It follows that if the domain of f is discrete, F (ej ) =: F (f )( ) is the Fourier transform of f and belongs to

L2[0; 2℄; analogously, if the domain of f is continuous, F (j!) =: F (f )(!) is the Fourier transform of f and

belongs to the space denoted by L2 (j R ) and defined as follows:

L2(j R ) := fF : C ! C pm; suh that (2:16) < 1g

Furthermore the following bijective correspondences hold:

`2 (Z) = `2 (Z ) `2 (Z+ ) Z! L2 [0; 2℄ = h2 (D) h2 (D )

1

The spaces ` (I ) and L (I ), p 6= 2, do not share this property; they are Banach spaces. For details see [12, 18].

p

p

9

(2.20)

Preliminaries

and

EOLSS 6.43.13.4

L2 (R ) = L2 (R ) L2 (R +) L! L2(j R ) = H2 (C ) H2 (C +)

For simplicity the above diagram is shown for spaces containing scalars. It is however equally valid for the

corresponding spaces containing matrices of arbitrary dimension.

There are two results connecting the spaces introduced above. We will only state the continuous-time

versions. The first has the names of Parseval, Plancherel and Paley-Wiener attached to it.

Proposition 2.1 The Fourier transform F is a Hilbert space isometric isomorphism between L2 (R ) and L2 (j R ).

It maps L2 (R + ), L2 (R ) onto H2 (C + ), H2 (C ) respectively.

The second one shows that the L1 and H1 norms can be viewed as induced norms. Recall that if (X; )

and (Y; ) are two normed spaces with norms , , respectively, just as in the finite-dimansional case, the

; -induced norm of an operator T with domain X and range Y is:

k T k; := sup kkTxxkk

x6=0

Proposition 2.2 Let F 2 L1 ; then F L2 (j R )

in the frequency domain space L2 (j R ):

(2.21)

L2(j R ) and the L1 norm can be viewd as an induced norm

k F X k L2

k X k L2

In this last expression, X can be restricted to lie in H2 . Let F 2 H1 ; then F H2 (C + ) H2 (C + ) and the H1

norm can be viewd as an induced norm both in the frequency domain space H2 as well as in the time domain

space L2 :

k F kH1 =k F kH2 ind= sup kkFXXkkH2 = sup kkFxxkkL2 =k F kL2 ind

H2

L2

X 6=0

x6=0

k F kL1 =k F kL2

ind

= sup

X=

6 0

2.2 The Laplace transform and the Z -transform

The logarithm can be considered as an elementary transform. It assigns a real number to any positive real

number. It was invented in the middle ages and its purpose was to convert the multiplication of multi-digit

numbers to addition. In the case of linear, time-invariant systems the operation which one wishes to simplify

is the derivative with respect to time in the continuous-time case or the shift in the discrete-time case. As a

consequence, one also wishes to simplify the operation of convolution, both in discrete- and continuous-time.

Thus an operation is sought which will transform derivation into simple multiplication in the transform domain.

In order to achieve this however, the transform needs to operate on functions of time. The resulting function

will be one of complex frequency. This establishes two equivalent ways of dealing with linear, time-invariant

systems, namely in the time domain and in the frequency domain. In the next two section we will briefly review

some basic properties of this transform, which is called Laplace transform in continuous-time and discreteLaplace or Z -transform in discrete-time. For further details we refer to any introductory book in signals and

systems, e.g. [9].

2.2.1

Some properties of the Laplace transform

Consider a function of time f (t). The unilateral Laplace transform of f is a function denoted by F (s) of the

complex variable s = + j! . The definition of F is as follows:

Z 1

f (t) L! F (s) :=

f (t)e

0

10

st dt

(2.22)

Preliminaries

EOLSS 6.43.13.4

Therefore the values of f for negative time are ignored by this transform. Instead, in order to capture the

influence of the past, initial conditions at time zero are required (see Differentiation in time below).

Basic Laplace transform properties

Property

Time signal

L-transform

Linearity

af1 (t) + bf2(t)

aF1 (s) + bF2 (s)

Shifting in the s-domain

es0 t f (t)

F (s s0 )

Time scaling

f (at); a > 0

s

1

aF a

Convolution

f1 (t) f2 (t)

F1 (s)F2 (s)

f1 (t) = f2 (t) = 0; t < 0

Differentiation in time

d

dt f (t)

Differentiation in freq.

tf (t)

sF (s) f (0 )

d

ds F (s)

Integration in time

Rt

Impulse

Æ(t)

1

Exponential

eat 1(t)

s a

0

f ( )d

Initial value theorem:

Final value theorem:

s F (s)

1

1

f (0+ ) = lims!1 sF (s)

limt!1 f (t) = lims!0 sF (s)

Table 1: Basic Laplace transform properties

The last 2 properties hold provided that

2.2.2

f (t) contains no impulses or higher-order singularities at t = 0.

Some properties of the Z -transform

Consider a function of time f (t), where time is discrete t 2 Z. The unilateral Z -transform of f is a function

denoted by F (z ) of the complex variable z = rej . The definition of F is as follows:

1

X

f (t) Z! F (z ) := z t f (t)

t=0

11

(2.23)

The external and the internal representation of linear systems

EOLSS 6.43.13.4

Basic Z -transform properties

Property

Time signal

Z -transform

Linearity

af1 (t) + bf2 (t)

aF1 (z ) + bF2 (z )

Forward shift

f (t 1)

z 1 F (z ) + f ( 1)

Backward shift

f (t + 1)

zF (z ) zf (0)

Scaling in freq.

at f (t)

F ( az )

Conjugation

f (t)

F (z )

Convolution

f1 (t) f2 (t)

F1 (z )F2 (z )

f1 (t) = f2 (t) = 0; n < 0

Differentiation in freq.

tf (t)

Impulse

Æ(t)

1

Exponential

an I(t)

z

z a

First difference

f (t) f (t 1)

(1 z 1 )F (z ) f ( 1)

Accumulation

Pn

z dFdz(z)

k=0 f (t)

1

Initial value theorem:

z 1 F (z )

1

f [0℄ = limz!1 F (z )

Table 2: Basic Z -transform properties

3 The external and the internal representation of linear systems

In this section we will review some basic results concerning linear dynamical systems. General references

for the material in this chapter are [31], [28], [29], [9], [7], [15]. For an introduction to linear systems from

basic principles the reader may consult the book by Willems and Polderman [26]. Here we will assume that

the external variables have been partitioned into input variables u and into output variables y , and will be

concerned with convolution systems, i.e. systems where the relation between u and y is given by a convolution

12

The external and the internal representation of linear systems

sum or integral

EOLSS 6.43.13.4

y =hu

(3.1)

where h is an appropriate weighting pattern. This will be called the external representation. We will also be

concerned with systems where besides the input and output variables, the state x has been declared as well.

Furthermore, the relationship between x and u is given by means of a set of first order difference or differential

equations with constant coefficients, while that of y with x and u is given by a set of linear algebraic equations.

It will also be assumed that x lives in a finite-dimensional space:

x = Ax + Bu; y = Cx + Du

(3.2)

where is the derivative or shift operator and A, B , C , D are linear constant maps. This will be called internal

representation.

We will also consider an alternative external representation, in terms of two polynomial matrices Q 2

p

R p [ ℄, P 2 R pm [ ℄:

Q()y = P ()u

(3.3)

where as above, is the derivative or the backwards shift operator. It is usually assumed that det Q 6= 0. This

representation is given in terms of differential or difference equations linking the input and the output.

The first subsection is devoted to the discussion of systems governed by (3.1), (3.3) while the following

subsection investigates some structural properties of systems represented by (3.2). These equations are solved

both in the time and the frequency domains. The third subsection discusses the equivalence of the external and

the internal representation, As it turns out going from the latter to the former involves the elimination of x and

is thus straightforward. The converse however is far from trivial as it involves the construction of state. It is

called the realization problem. This problem can be interpreted as deriving a time domain representation from

frequency domain data.

3.1 External representation

A discrete-time linear system

, with m input and p output channels can be viewed as an operator

S : `m (Z) ! `p(Z), which is linear. There exists a sequence of matrices S (i; j ) 2 K pm (recall that K

is

either R or C ) such that

:

u

7 ! y := S (u); y(i) =

X

j 2Z

S (i; j )u(j ); i 2 Z

(3.4)

This relationship can be written in matrix form as follows

0

B

B

B

B

B

B

B

B

B

..

.

y( 2)

y( 1)

y(0)

y(1)

..

.

0

1

C

C

C

C

C

C

C

C

C

A

B

B

B

B

B

B

B

B

B

..

.

=

..

.

S ( 2;

S ( 1;

S (0;

S (1;

..

.

..

.

..

.

2) S ( 2; 1) S ( 2; 0) S ( 2; 1)

2) S ( 1; 1) S ( 1; 0) S ( 1; 1)

2) S (0; 1) S (0; 0) S (0; 1)

2) S (1; 1) S (1; 0) S (1; 1)

..

.

..

.

The system described by S is called causal iff

S (i; j ) = 0; i j

and time invariant iff

..

.

S (i; j ) =: Si

13

j

2 K pm

..

.

10

..

.

CB

CB

CB

CB

CB

CB

CB

CB

CB

A

..

.

u( 2)

u( 1)

u(0)

u(1)

..

.

1

C

C

C

C

C

C

C

C

C

A

(3.5)

The external and the internal representation of linear systems

For a time invariant system

EOLSS 6.43.13.4

, we can define the sequence of p m constant matrices

h = (

It will be called the impulse response of

; S 2 ; S 1 ; S0 ; S1 ; S2 ; )

(3.6)

because it is the output obtained in response to a unit pulse

(

u(t) = Æ(t) =

1; t = 0

0; t 6= 0

Operation (3.4) can now be represented as a convolution sum:

S : u 7! y = S (u) = h u where (h u)(t) =

Moreover, the matrix representation of

0

B

B

B

B

B

B

B

B

B

..

.

1

y( 2)

y( 1)

y(0)

y(1)

..

.

C

C

C

C

C

C

C

C

C

A

1

X

1

k=

S in this case is a Toeplitz matrix

0

B

B

B

B

B

B

B

B

B

..

.

=

..

.

S0

S1

S2

S3

..

.

..

.

..

.

S 1

S0

S1

S2

S 2

S 1

S0

S1

..

.

..

.

..

.

S 3

S 2

S 1

S0

..

.

..

St k u(k); t 2 Z

10

CB

CB

CB

CB

CB

CB

CB

CB

CB

A

..

.

u( 2)

u( 1)

u(0)

u(1)

.

..

.

(3.7)

1

C

C

C

C

C

C

C

C

C

A

(3.8)

In the sequel we will restrict our attention to both causal and time-invariant linear systems. The matrix representation of S in this case is lower triangular and Toeplitz ( Sk = 0, k < 0).

In analogy to the discrete-time case, a continuous-time linear system , with m input and p output channels

can be viewed as an operator S mapping Lm (R ) onto Lp (R ), which is linear. In particular we will be concerned

with systems which can be expressed by means of an integral S : Lm (R ) ! Lp (R ):

S : u 7 ! y; y(t) :=

Z

1

h(t; )u( )d; t 2 R

1

(3.9)

where h(t; ), is a matrix-valued function called the kernel or weighting pattern of S . The system just defined

is causal iff

h(t; ) = 0; t and time invariant iff h depends on the difference of the two arguments:

h(t; ) = h(t )

In this case S is a convolution operator

S : u 7! y = S (u) = h u where (h u)(t) =

Z

1

h(t

1

)u( )d; t 2 R

(3.10)

In the sequel we will assume that S is both causal and time-invariant which means that the upper limit of

integration can be replaced by t. In addition, we will assume that h can be expressed as

h(t) = S0 Æ(t) + ha (t); S0 2 K pm ; t 0

(3.11)

where Æ denotes the Æ -distribution and ha is analytic. Hence ha is uniquely determined by means of the

coefficients of its Taylor series expansion at t = 0+ :

t2

t

ha (t) = S1 + S2 + S3 +

1!

2!

k+1

+ Sk (kt + 1)! + ; Sk 2 K pm

14

The external and the internal representation of linear systems

EOLSS 6.43.13.4

It follows that if (3.11) is satisfied the output y is at least as smooth as the input u and is consequently called

a smooth system. Hence just like in the case of discrete-time systems, smooth continuous-time linear system

can be described by means of the infinite sequence of p m matrices Si , i 0. We formalize this conclusion

next.

Definition 3.1 The external representation of a time-invariant, causal and smooth continous-time system and

that of a time-invariant, causal discrete-time linear system with m inputs and p outputs is given by an infinite

sequence of p m matrices

; Sk ; ); Sk 2 R pm

The matrices Sk are often referred to as the Markov parameters of the system S .

h := (S0 ; S1 ; S2 ;

(3.12)

The (continuous- or discrete-time) Laplace transform of the impulse response yields the transfer function of

the system

H ( ) := (Lh)( )

(3.13)

The Laplace transform is denoted for simplicity by L for both discrete- and continuous-time, and the Laplace

variable is denoted by for both cases. It readily follows that H can be expanded in a formal power series in :

H ( ) = S0 + S1 1

+ S2 This can also be regarded as a Laurent expansion of

written as

2

+

+ Sk k + H around infinity.

(3.14)

Consequently (3.7) and (3.10) can be

Y ( ) = H ( )U ( )

An alternative way for describing linear systems externally is by specifying a differential or difference

equation which relates one of the input and one of the output channels. Given that the input has m and the

output p channels. This representation assumes the existence of polynomials qi;j ( ), i; j = 1; ; p and pi;j ( ),

i = 1; ; p, j = 1; ; m, such that

) Q () y(t) = P () u(t)

(3.15)

where P; Q are polynomal matrices Q 2 R pp [ ℄, P 2 R pm [ ℄. If we make the assumption that Q is nonsingular, that is, its determinant is not identically zero: det Q =

6 0, the transfer function of this system is the

qi;j () yj (t) = pi;j () ui (t)

rational matrix H = Q 1 P . If in addition this is proper rational, that is, the degree of the numerator of each

entry is less that the degree of the corresponding denominator, we can expand this as follows:

H ( ) = Q 1 ( )P ( ) = S0 + S1 1

+

+ Sk k

+

(3.16)

Recall that the variable is used to denote the transform variable s or z , depending on whether we are dealing

with continuous- or discrete-time systems. We will not further dwell on this polynomial representation of

linear systems since it is the subject of the following contribution in this volume, namely EOLSS Contribution

6.43.13.5.

3.2 Internal representation

An alternative description for linear systems is the internal representation which uses in addition to the input

u and the output y, the state x. For a first-principles treatment of the concept of state we refer to the book

by Willems and Poldeman [26]. For our purposes, given are three linear finite-dimensional spaces: the state

15

The external and the internal representation of linear systems

EOLSS 6.43.13.4

space X = K n 2 , the input space U = K m , and the output space Y = K p (recall that K denotes the field of

real numbers R or that of complex numbers C ). The state equations describing a linear system are a set of first

order linear differential or difference equations, according to whether we are dealing with a continuous- or a

discrete-time system:

dx(t)

= Ax(t) + Bu(t); t 2 R or

dt

x(t + 1) = Ax(t) + Bu(t); t 2 Z

(3.17)

(3.18)

In both cases x(t) 2 X is the state of the system at time t, while u(t) 2 U; is the value of the input function at

time t. Moreover,

B: U

! X; A : X ! X;

are linear maps; the first one is called the input map, while the second one describes the dynamics or internal

evolution of the system. Equations (3.17) and (3.18) can be written in a unified way as follows:

x = Ax + Bu

(3.19)

where denotes the derivative operator for continuous-time systems, and the (backwards) shift operator for

discrete-time systems.

The output equations, for both discrete- and continuous-time linear systems, are composed of a set of linear

algebraic equations

y = Cx + Du

(3.20)

where y is the output function (response), and

C: X

! Y; D : U ! Y

are linear maps; C is called the output map. It describes how the system interacts with the outside world.

In the sequel the term linear system

in internal representation will be used to denote a linear, timeinvariant, continuous- or discrete-time system which is finite-dimensional. Linear means: U , X , Y are linear

spaces, and A, B , C , D are linear maps; finite-dimensional means: U , X , Y are all finite dimensional; timeinvariant means: A, B , C , D do not depend on time; their matrix representations are constant n n, n m,

p n, p m matrices. In the sequel (by slight abuse of notation) we will denote the linear maps A, B , C , D

as well as their matrix representations (in some appropriate basis) with the same symbols. We are now ready to

give the

Definition 3.2 (a) A linear system in internal or state space representation is a quadruple of linear maps

(matrices)

!

B

A

; A 2 K nn ; B 2 K nm ; C 2 K pn; D 2 K pm

:=

(3.21)

C D

The dimension of the system is defined as the dimension of the associated state space:

dim = n

(3.22)

(b) is called stable if the eigenvalues of A have negative real parts or lie inside the unit disc, depending on

whether is a continuous-time or a discrete-time system.

X

X

n

The notation = K n means that is a linear space which is isomorphic to the -dimensional space K n ; as an example the space

of all polynomials of degree less than is isomorphic to R n , since there is a one-to-one correspondence between each polynomial

and an -vector consisting of its coefficients.

X

2

n

n

16

The external and the internal representation of linear systems

3.2.1

EOLSS 6.43.13.4

Solution in the time domain

Let (u; x0 ; t) denote the solution of the state equations (3.19), i.e., the state of the system at time t attained

from the initial state x0 at time t0 ; under the influence of the input u. In particular, for the continuous-time state

equations (3.17)

Z t

A

(t t0 )

(u; x0 ; t) = e

x0 +

(3.23)

eA(t ) Bu( )d; t t0 ;

t0

while for the discrete-time state equations (3.18)

(u; x0 ; t) = At t0 x0 +

t 1

X

j =t0

At

1

j Bu(j );

t

t0 :

(3.24)

In the above formulae we may assume without loss of generality, that t0 = 0, since the systems we are dealing

with are time-invariant. The first summand in the above expressions is called zero input and the second zero

state part of the solution. The nomenclature comes from the fact that the zero input part is obtained when the

system is excited exclusively by means of initial conditions and the zero state part is the result of excitation by

some input u and zero initial conditions. In the tables that follow these parts are denoted with the subscripts

”zi” and ”zs”.

For both discrete- and continuous-time systems it follows that the output is given by:

y(t) = C(u; x(0); t) + Du(t) = C(0; x(0); t) + C(u; 0; t) + Du(t)

(3.25)

Again the same remark concerning the zero-input and the zero state parts of the output holds.

If we compare the above expressions for t0 = 1 and x0 = 0, with (3.7) and (3.10) it follows that the

impulse response h has the form below. For continuous-time systems:

(

h(t) :=

CeAt B + Æ(t)D; t 0

0; t < 0

(3.26)

where Æ denotes the Æ -distribution. For discrete-time systems

8

>

<

h(t) := >

:

CAt 1 B; t > 0

D; t = 0

0; t < 0

(3.27)

The corresponding external representation given by means of the Markov parameters (3.12), is:

h = (D; CB; CAB; CA2 B;

; CAk 1B; )

(3.28)

By transforming the state the matrices which describe the system will change. Thus, if the new state is

x~ := T x, det T =

6 0, (3.19) and (3.20) in the new state x~, will be become

x~ = T| AT

{z

A~

~ + |{z}

T B u;

}x

1

B~

1

y = CT

~ + Du

| {z } x

C~

where D remains unchanged. The corresponding triples are called equivalent. Put differently,

equivalent if there exists T such that:

T

!

Ip

A B

C D

!

=

A~

C~

17

B~

D~

!

T

!

Im

; det T =

6 0

and ~ are

(3.29)

The external and the internal representation of linear systems

Let

EOLSS 6.43.13.4

and ~ be equivalent with equivalence transformation T . It readily follows that

H ( ) = D + C (I A) 1 B = D + CT 1 T (I A) 1 T 1 T B

= D + CT 1(I T AT 1 ) 1 T B = D~ + C~ (I A~) 1 B~ = H~ ( )

This immediately implies that Sk

= S~k , k 2 N . We have thus proved

Proposition 3.1 Equivalent triples have the same transfer function and therefore the same Markov parameters.

3.2.2

Solution in the frequency domain

= 0. Let ( ) = L()( ), where is defined by (3.23),

In this section we will assume that the initial time is t0

(3.24); there holds

( ) = (I

A) 1 x0 + (I

A) 1 BU ( )

Thus, by (3.13), (3.26), (3.27), the transfer function of

is:

H ( ) = D + C (I

) Y () = C () + DU ()

(3.30)

A) 1 B

(3.31)

A summary of these relationships are provided in the table that follows.

3.2.3

The concepts of reachability and observability

The concept of reachability provides the tool for answering questions related to the extend to which the state of

the system x can be manipulated through the input u. The related concept of controllability will be discussed

subsequently. Both concepts involve only the state equations. For additional information on these issues we

refer to [5].

=

; A 2 K nxn ; B 2 K nxm . A state x 2 X is reachable from the zero

(t) and a time T < 1, such that

state iff there exist an input function u

x = (u; 0; T)

Definition 3.3 Given is

A

B

The reachable subspace X reah X of , is the set which contains all reachable states of

the system (completely) reachable iff X reah = X . Furthermore

Rn(A; B ) := [B AB A2 B An 1B ℄

will be called the reachability matrix of

follows. For continuous-time systems:

. We will call

(3.32)

. The finite reachability gramians at time t < 1 are defined as

P (t) :=

Z t

0

eA BB eA d; t 2 R +

(3.33)

while for discrete-time systems

P (t) := Rt (A; B )Rt (A; B ) =

t 1

X

k=0

Ak BB (A )k ; t 2 Z+

(3.34)

Theorem 3.1 Consider the pair (A; B ) as defined above. (a) X reah = span ol Rn = span ol P (t), where

t > 0, t n 1, for continuous-, discrete-time systems, respectively. (b) Reachability conditions. The

following are equivalent:

18

The external and the internal representation of linear systems

EOLSS 6.43.13.4

I/O and I/S/O representation of continuous-time linear systems

I/O

I/S/O

variables:

(u; y) variables: (u; x; y )

d

d

d

Q dt y(t) = P dt u(t),

dt x(t) = Ax(t) + Bu(t); y (t) = Cx(t) + Du(t)

u(t); y(t) 2 R

d

dt

x(t) 2 R n ,

d

dt

A B

C D

!

2 R (n+p)(n+m)

Impulse response

Q

h(t) = P

Æ(t)

H (s) = L(h(t)) = Q 1 (s)P (s)

h(t) = DÆ(t) + CeAt B; t 0

H (s) = D + C (sI A) 1 B

Poles - characteristic roots

det(i I A) = 0

Zeros

zi 2 C : 9 vi 2 C m satisfying

zi 2 C : 9 w i 2 C n !

, vi

zi I A B

H (zi )vi = 0

C

D

i ; detQ(i ) = 0; i = 1; ; n

2 C m!, such that

wi

vi

Matrix exponential

P

tk k

d At

eAt = 1

i=0 k! A ) dt e

At

1

L(e ) = (sI A)

y(t) = h(t) u(t) )

y(t) = yzi(t) + yPzs(t)

where yzi (t) = ni=1 i e t

i

and

yzs(t) =

Rt

0

=0

= AeAt

Solution in the time domain

x(t) = xzi (t) + xzs(Rt)

x(t) = eAt x(0 ) + 0tR eA(t ) Bu( )d

y(t) = CeAt x(0 ) + t (DÆ(t ) + CeA(t ) B ) u( )d

0

h(t )u( )d

|

{z

h()

}

) y(t) = CeAtx(0 ) + R0t h(t )u( )dt

Solution in the frequency domain

Y (s) = Q 1 (s)R(s) + H (s)U (s) X (s) = (sI A) 1 x(0 ) + (sI A) 1 BU (s)

Y (s) = C (sI A) 1 x(0 ) + (|D + C (sI{z A) 1 B}) U (s)

H (s)

) Y (s) = C (sI A) 1 x(0 ) + H (s)U (s)

Table 3: I/O and I/S/O representation of continuous-time linear systems

1. The pair (A; B ), A 2 K nxn , B

2.

3.

4.

5.

2 K nxm, is completely reacable.

The rank of the reachability matrix is full: rank R(A; B ) = n.

The reachability gramian is positive definite P (t) > 0, for some t > 0.

No left eigenvector v of A is in the left kernel of B : v A = v ) v B 6= 0.

rank (In A

B ) = n, for all 2 C

6. The polynomial matrices I

A and B are left coprime.

The fourth and fifth conditions in the theorem above are known as the PHB or Popov-Hautus-Belevich

tests for reachability.

19

The external and the internal representation of linear systems

EOLSS 6.43.13.4

I/O and I/S/O representation of discrete-time linear systems

I/O

I/S/O

variables: (u; y )

variables: (u; x; y )

Q () y(t) = P () u(t),

x(t) = Ax(t) + Bu(!t); y(t) = Cx(t) + Du(t)

u(t); y(t) 2 R

x(t) 2 R n ,

A B

C D

2 R (n+p)(n+m)

Impulse response

h(0) = D; h(t) = CAt 1 B; t > 0

H (z ) = D + C (zI A) 1 B

Poles - zeros: same as for t 2 R

Exponents of a martix: Z (At ) = (zI A) 1

Q () h(t) = P () Æ(t)

H (z ) = Z (h(t)) = Q 1 (z )P (z )

Solution in the time domain

y(t) = h(t) u(t) )

x(t) = xzi (t) + xP

zs (t)

t 1 At 1 Bu( )

y(t) = yzi(t) + yPzs(t)

x(t) = At x(0) + P

=0

where yzi (t) = ni=1 i ti

y(t) = CAt x(0) + t =01 (|DÆ(t ) +{zCAt h()

P 1

P

and yzs (t) = t =0

h(t )u( ) y(t) = CAt x(0) + t =01 h(t )u( )

Solution in the frequency domain

1

B}) u( )

Y (z ) = Q 1 (z )R(z ) + H (z )U (z ) X (z ) = (zI A) 1 x(0) + (zI A) 1 BU (z )

Y (z ) = C (zI A) 1 x(0) + (|D + C (zI{z A) 1 B}) U (z )

H (z )

) Y (z) = C (zI A) 1 x(0) + H (z)U (z)

Table 4: I/O and I/S/O representation of discrete-time linear systems

We now turn our attention to the concept of observability. In order to be able to modify the dynamical

behavior of a system, very often the state x needs to be available. Typically however the state variables are

inaccessible and only certain linear combinations y thereof, given by the output equations (3.20) are known.

Thus we need to discuss the problem of reconstructing the state x(T ) from observations y ( ) where is in

some appropriate interval. If 2 [T; T + t℄ we have the state observation problem, while if 2 [T t; T ℄ we

have the state reconstruction problem.

We will first discuss the observation problem. Without loss of generality we will assume that T = 0. Recall

(3.23), (3.24) and (3.25). Since the input u is known, the latter two terms in (3.25) are also known for t 0.

Therefore, in determining x(0) we may assume without loss of generality that u() = 0. Thus, the observation

problem reduces to the following: given C(0; x(0); t) for t 0, find x(0). Since B and D are irrelevant, for

this subsection

!

A

=

; A 2 K nxn ; C 2 K pxn

C

Definition 3.4 A state x

2 X is unobservable iff y(t) = C(0; x; t) = 0, for all t 0, i.e. iff x is

indistinguishable from the zero state for all t 0. The unobservable subspace X unobs of X is the set of all

unobservable states of . is (completely) observable iff X unobs = 0. The observability matrix of is

On(C; A) = (C A C (A )n 1C )

20

(3.35)

The external and the internal representation of linear systems

EOLSS 6.43.13.4

The finite observability gramians at time t < 1 are:

Q(t) :=

Theorem 3.2 Given

=

Z t

0

(t ) A(t )

C Ce

d;

eA

t 2 R+

Q(t) := Ot (C; A)Ot (C; A); t 2 Z+

A

C

, for both t 2 Z and t 2 R , X unobs is a linear subspace of X given by

where t > 0, t n 1, depending on whether the system is continuous-, or discrete-time. Thus,

observable if, and only if, rank O (C; A) = n.

Y0 := (y (0) y (1)

where D

:=

(3.37)

X unobs = ker On (C; A) = ker Q(t) = fx 2 X : CAi 1 x = 0; i > 0g

Remark 3.1 (a) Given y (t);

(3.36)

(3.38)

is completely

t 0, let Y0 denote the following np 1 vector:

y(n 1)) t 2 Z; Y0 := (y (0) Dy(0) Dn 1y(0)) t 2 R ;

d

dt . The observation problem reduces to the solution of the linear set of equations

On(C; A)x(0) = Y0

This set of equations is solvable for all initial conditions x(0), i.e. it has a unique solution if and only if is

observable. Otherwise x(0) can only be determined modulo X unobs , i.e. up to an arbitrary linear combination

of unobservable states.

(b) If x1 ; x2 , are not reachable, there is a trajectory passing through the two points if, and only if, x2

f (A; T )x1 2 X reah , for some T , where f (A; T ) = eAT for continuous-time systems and f (A; T ) = AT for

discrete-time systems. This shows that if we start from a reachable state x1 6= 0 the states that can be attained

are also within the reachable subspace.

A concept which is closely related to reachability is that of controllability. Here, instead of driving the

2 X is

zero state to a desired state, a given non-zero state is steered to the zero state. Furthermore, a state x

unreconstructible iff y (t) = C(0; x; t) = 0, for all t 0, i.e. iff x

is indistinguishable from the zero state for

all t 0.

The next result shows that for continuous-time systems the concepts of reachability and controllability are

equivalent while for discrete-time systems the latter is weaker. Similarly, while for continuous-time systems

the concepts of observability and reconstructibility are equivalent, for discrete-time systems the latter is weaker.

For this reason, only the concepts of reachability and observability are used in the sequel.

Proposition 3.2 Given is the triple (C; A; B ). (a) For continuous-time systems X ontr = X reah and X unre

= X unobs . (b) For discrete-time systems X reah X ontr and X unre X unobs ; in particular X ontr =

X reah + ker An and X unre = X unobs \ im An .

3.2.4

The infinite gramians

Consider a continuous-time linear system

=

A

B

C

D

which is stable, i.e. all eigenvalues of

A have

negative real parts. In this case both (3.33) as well as (3.36) are defined for t = 1. In addition because of

Plancherel’s formula, the gramians can be expressed also in the frequency domain (expressions on the righthand side):

Z 1

Z 1

(j! A) 1 BB ( j! A ) 1 d!

P := eA BB eA d = 1

(3.39)

0

2

1

21

The external and the internal representation of linear systems

Q

EOLSS 6.43.13.4

1 A A

1 Z1

( j!

:=

e C Ce d =

2 1

0

Z

A ) 1 C C (j! A) 1 d!

(3.40)

P , Q are the infinite reachability and infinite observability gramians associated with . These gramians satisfy

the following linear matrix equations, called Lyapunov equations; see also [21, 8].

Proposition 3.3 Given the stable, continuous-time system

gramian P satisfies the continuous-time Lyapunov equation

as above, the associated infinite reachability

AP + P A + BB = 0

(3.41)

while the associated infinite observability gramian satisfies

A Q + QA + C C = 0

(3.42)

Proof. Due to stability

Z 1

Z 1

A

A

d(eA BB eA ) = BB Ae BB e A d =

AP + P A =

0

0

This proves (3.41); (3.42) is proved similarly.

If the discrete-time system

d =

F

G

H

J

is stable, i.e. all eigenvalues of F are inside the unit disc, the

gramians (3.34) as well as (3.37) are defined for t = 1

P := R(F; G)R(F; G) =

X

Q := O(H; F ) O(H; F ) =

X

t>0

t>0

F t 1 GG(F )t

(F )t 1 H HF t

1

1

=

1 Z 2 j

(e I

2 0

=

1 Z 2

(e

2 0

Notice that P can be written as P = GG + F P F ; moreover Q

discrete Lyapunov or Stein equations:

j I

A) 1 BB (e

j I

A ) 1 C C (ej I

= H H + F QF .

A ) 1 d

(3.43)

A) 1 d

(3.44)

These are the so-called

Proposition 3.4 Given the stable, discrete-time system d as above, the associated infinite reachability gramian

P satisfies the while the associated infinite observability gramian Q satisfies discrete-time Lyapunov equation

AP A + BB = P ; A QA + C C = Q

(3.45)

We conclude this section by summarizing some properties of the system gramians. For details see, e.g. [23, 14,

7].

Lemma 3.1 Let P and Q denote the infinite gramians of a linear stable system.

(a) The minimal energy required to steer the state of the system from 0 to xr is xr P 1 xr .

(b) The maximal energy produced by observing the output of the system whose initial state is xo is xo Qxo .

(c) The states which are difficult, i.e. require large amounts of energy, to reach are in the span of those

eigenvectors of P which correspond to small eigenvalues. Furthermore, the states which are difficult to observe,

i.e. produce small observation energy, are in the span of those eigenvectors of Q which correspond to small

eigenvalues.

22

The external and the internal representation of linear systems

EOLSS 6.43.13.4

Remark 3.2 Computation of the reachability gramian. Given the pair A 2 R nn , B 2 R nm , the reachability

gramian is defined by (3.33). We will assume that the eigenvalues of A are distinct. Then A is diagonalizable;

let the EVD (Eigenvalue Decomposition) be

A = V V

1

vn℄; = diag (1 ; ; n )

where V = [v1 v2

vi denotes the eigenvector corresponding to the eigenvalue i . Notice that if the ith eigenvalue is complex, the

corresponding eigenvector will also be complex. Let

W = V 1 B 2 C nm

and denote by Wi

2 C 1m the ith row of W . With the notation introduced above the following formula holds:

P (T ) = V R(T )V where [R(T )℄ij = W+iWj 1 exp [(i + j )T ℄ 2 C

(3.46)

i

j

Furthermore, if i + j = 0, [R(T )℄ij = (Wi Wj ) T . If in addition A is stable, the infinite gramian (3.41)

W W

is given by P = V RV , where Rij = + . This formula accomplishes both the computation of the

i

i

j

j

exponential and the integration explicitely, in terms of the EVD of A.

Example 3.1 Consider the example of the parallel connection of two branches, the first consisting of the series

connection of an inductor L with a resistor RL , and the other consisting of the series connection of a capacitor

C with a resistor RC . Assume that the values of these elements are L = 1, RL = 1, C = 1, RC = 12 ; then

"

A=

1

0

#

0

;B=

2

"

1

2

#

)

"

e

eAt B =

2e

t #

2t

The gramian P (T ) and the infinite gramian P are:

P (T ) =

"

1=2 e

2=3 e

2T

3T

+ 1=2

+ 2=3

#

2=3 e 3T + 2=3

;

e 4T + 1

P = Tlim

P (T ) =

!1

"

1=2 2=3

2=3 1

#

If the system is asymptotically stable, i.e. Re(i (A)) < 0, the reachability gramian is defined for T = 1, and it

satisfies (3.41). Hence, the infinite gramian can be computed as the solution to the above linear matrix equation;

no explicit calculation of the matrix exponentials, mutliplication and subsequent intergration is required. In

matlab, if in addition the pair (A; B ) is controllable, we have:

P = lyap (A; B B 0)

For the matrices defined earlier, using the ’lyap’ command in the format ’long e’, we get:

P=

"

5:000000000000000e

6:666666666666666e

01 6:666666666666666e 01

01 1:000000000000000e + 00

#

Example 3.2 A second simple example is the following:

A=

0

2

1

3

!

;B=

0

1

!

)

eAt

23

=

e 2t + 2e

2e t + 2e

t

2t

e t e 2t

2e 2t e t

!

The external and the internal representation of linear systems

EOLSS 6.43.13.4

This implies

6e 2T + 8e 3T 3e 4T + 1

12e 3T + 6e 2T + 6e 4T

P (T ) = 121

12e 3T + 6e 2T + 6e 4T

12e 4T + 16e 3T 6e 2T + 2

And finally

P = lyap (A; B B 0) =

1

12

0

0

!

!

1

6

A transformation between continuous and discrete time systems

One transformation between continuous- and discrete-time systems is given by the bilinear transformation of

the complex plane onto itself given by z = 11+ss . In particular, the transfer function H (s) of a continuous-time

system is obtained from that of a discrete-time one Hd (z ) as follows:

H(s) = Hd

1+s

1 s

This transformation maps the left-half of the complex plane onto the unit disc and vice-versa. The matrices

:=

A B

C D

!

F G

C J

d :=

;

!

of these two systems are related as given in the following table.

Continuous-time

A; B; C; D

A = (pF + I ) 1 (F I )

B = p2(F + I ) 1 G

C = 2H (F + I ) 1

D = J H (F + I ) 1 G

9

>

>

>

=

>

>

>

;

z=

1+s

1 s

s=

z 1

z +1

Proposition 3.5 Given the stable continuous-time system

let

d

:=

F G

H J

!

, with infinite gramians

8

>

>

>

<

>

>

>

:

:=

Discrete-time

F = (pI + A)(I A) 1

G = p2(I A) 1 B

H = 2C (I A) 1

J = D + C (I A) 1 B

F; G; H; J

A B

C D

!

with infinite gramians

P , Q ,

Pd , Qd , be the discrete-time system obtained by means of

the transformation given above. It follows that the bilinear transformation introduced above preserves the

gramians: P = Pd and Q = Qd . Furthermore, this transformation preserves the infinity norms (see section

4.3).

3.3 The realization problem

In the preceding sections we have presented two ways of representing linear systems: the internal and the

external. The former makes use of the inputs u, states x, and outputs y . The latter makes use only of the inputs

u and the outputs y. The question thus arises as to the relationship between these two representations.

24

The external and the internal representation of linear systems

In one direction this problem is trivial. Given the internal representation

EOLSS 6.43.13.4

=

A B

C D

!

of a system,

the external representation is readily derived. As shown earlier, the transfer function of the system is given by

(3.31) H ( ) = D + C (I A) 1 B , while from (3.28), the Markov parameters are given by

S0 = D; Sk := CAk 1B 2 R pm ; k 2 N

(3.47)

The converse problem, i.e. given the external representation, derive the internal one, is far from trivial.

This is the realization problem: given the external representation of a linear system construct an internal or

h, or equivalently the transfer function

state variable representation. In other words, given the impulse response

!

A B

H , or the Markov parameters Sk of a system, construct

, such that (3.47) hold.

C D

without computation that D = S0 . Hence the following problem results:

It readily follows

Definition 3.5 Given the sequence of p m matrices Sk , k 2 N , the realization problem consists in finding a

positive integer n and constant matrices (C; A; B ) such that

Sk = CAk 1B; C; A; B 2 R pn R nn R nm ; k 2 N

The triple

sequence.

A B

C

(3.48)

!

is then called a realization of the sequence Sk , and the latter is called a realizable

The realization problem is sometimes referred to as the problem of construction of state for linear systems

described by convolution relationships.

Remark 3.3 Realization can also be considered as the problem of converting frequency domain data into time

domain data. The reason is that measurement of the Markov parameters is closely related to measurement of

the frequency response.

Example 3.3 Consider the following (scalar) sequences:

(Sk )1

k=0

(Sk )1

k=0

(Sk )1

k=0

(Sk )1

k=0

(Sk )1

k=0

f1; 1; 1; 1; 1; 1; 1; 1; 1; g

f1; 2; 3; 4; 5; 6; 7; 8; 9; g natural numbers

f1; 2; 3; 5; 8; 13; 21; 34; 55; g Fibonai numbers

f1; 2; 3; 5; 7; 11; 13; 17; 19; g primes

1 1 1 1 1 1

= f1; ; ; ; ; ; ; g inverse fatorials

1! 2! 3! 4! 5! 6!

=

=

=

=

Which sequences are realizable?

Problem 3.1 The following problems arise:

(a) Existence: given a sequence Sk , k > 0, determine whether there exist a positive integer n and

a triple of matrices A; B; C such that (3.48) holds.

(b) Uniqueness: in case such an integer and triple exist, are they unique in some sense?

(c) Construction: in case of existence, find n and give an algorithm to construct such a triple.

25

The external and the internal representation of linear systems

EOLSS 6.43.13.4

The main tool for answering the above questions is the matrix H of Markov parameters:

0

H :=

B

B

B

B

B

B

B

B

B

B

B

B

B

B

B

B

B

B

S1

S2

S2

S3

..

.

..

.

Sk Sk+1 Sk+1 Sk+2 ..

.

Sk Sk+1

Sk+1 Sk+2

..

.

..

.

S2k 1 S2k S2k S2k+1 ..

.

..

.

..

.

1

C

C

C

C

C

C

C

C

C

C

C

C

C

C

C

C

C

C

A

(3.49)

This is the Hankel matrix; it has infinitely many rows, infinitely many columns, and block Hankel structure,

i.e. (H)i;j = Si+j 1 , for i; j > 0. We start by listing conditions related to the realization problem.

Lemma 3.2 Each statement below implies the one which follows:

(a) The sequence Sk , k 2 N , is realizable.

P

(b) The formal power series k>0 Sk k is rational.

(c) The sequence Sk , k 2 N , satisfies a recursion with constant coefficients, i.e. there exist a positive integer r

and constants i , 0 i < r , such that

0 Sk + 1 Sk+1 + 2 Sk+2 +

+ r 2Sr+k 2 + r 1 Sr+k 1 + Sr+k = 0; k > 0

(3.50)

(d) The rank of H is finite.

Proof. (a) ) (b) Realizability implies (3.48). Hence

X

k>0

Sk This proves (b).

(b) ) (c) Let det(I

implies

k

=

X

k>0

0

CAk 1B

k

=C

A) =: 0 + 1 +

0

A ( ) X

k>0

X

k>0

1

Ak 1 kA B

= C (I

A) 1 B

+ r 1r 1 + r =: A(). The previous relationship

1

Sk k A = C [adj (I

A)℄ B

where adj (M ) denotes the adjoint of the matrix M . On the left-hand side there are terms having both positive

and negative powers of , while on the right-hand side there are only terms having positive powers of . Hence

the coefficients of the negative powers of on the left-hand side must be identically zero; this implies precisely

(3.50).

(c) ) (d) Relationships (3.50) imply that the (r + 1)-st block column of H is a linear combination of the

previous r block columns. Furthermore, because of the block Hankel structure, every block column of H is a

sub-column of the previous one; this implies that all block columns after the r -th are linearly dependent on the

first r , which in turn implies the finiteness of the rank of H.

The following lemma describes a fundamental property of H; it also provides a direct proof of the implication (a) ) (d).

26

The external and the internal representation of linear systems

EOLSS 6.43.13.4

Lemma 3.3 Factorization of H

If the sequence of Markov parameters is realizable by means of the triple

follows:

(C; A; B ),

H can be factored as

H = O(C; A)R(A; B )

(3.51)

Consequently, if the sequence of Markov parameters is realizable the rank of H is finite.

Proof. If Sn , n 2 N , is realizable the relationships Sn

0

H=

B

B

B

B

CB CAB

CAB CA2 B

..

.

..

.

= CAn 1B , n 2 N , hold true. Hence:

1

C

C

C

C

A

= O(C; A)R(A; B )

It follows that: rank H maxfrank O ; rank Rg size (A).

In order to discuss the uniqueness issue of realizations, we need to recall the concept of equivalent systems

defined by (3.29). In particular, proposition 3.1 asserts that equivalent triples have the same Markov parameters.

Hence the best one can hope for in connection with the uniqueness question is that realizations be equivalent.

Indeed as shown in the next section this holds for realizations with the smallest possible dimension.

3.3.1

The solution of the realization problem

We are now ready to answer the three questions posed at the beginning of the previous sub-subsection. This

also proves the implication (d) ) (a), and hence the equivalence of the statemenrs of lemma 3.2.

Theorem 3.3 Main Result.

(1) The sequence Sk , k 2 N , is realizable if, and only if, rank H =: n < 1.

(2) The state space dimension of any solution is at least n. All realizations which are minimal are both reachable

and observable. Conversely, every realization which is reachable and observable is minimal.

(3) All minimal realizations are equivalent.

Lemma 3.3 proves part (1) of the main theorem in one direction. To prove (1) in the other direction we will

actually construct a realization assuming that the rank of H is finite.

Lemma 3.4 Silverman Realization Algorithm

Let rank H = n. Find an n n submatrix of H which has full rank. Construct the following matrices:

(i) 2 R nn ; it is composed of the same rows as ; its columns are obtained by shifting those of by one

block column (i.e. m columns).

(ii) 2 R nm is composed of the same rows as ; its columns are the first m columns of H.

(iii) 2 R pn is composed of the same columns as ; its rows are the first p rows of H.