Information Design

Information Design:

The Random Posterior Approach

Laurent Mathevet

Jacopo Perego

Ina Taneva∗

New York University

New York University

University of Edinburgh

April 1, 2016

Abstract

Information affects behavior by influencing beliefs. Information design

studies how to disclose information in order to incentivize players to behave

in a desired way. This paper is a theoretical investigation of information

design, culminating in a representation theorem and a fundamental application of it. We adopt a random posterior perspective, viewing information

design as belief manipulation rather than information disclosure. The representation theorem shows that it is as if the designer manipulated beliefs in a

specific way, giving form to the random posterior approach in games, as did

Kamenica and Gentzkow (2011) in one-agent problems. The representation

theorem can also be implemented in specific problems, for example in the

beauty contest and multiple-agent problems. We focus on an application

that we call the Manager’s Problem.

∗

We thank Elliot Lipnowski, Qingmin Liu, Efe Ok, David Pearce, József Sákovics, Ennio

Stacchetti, Max Stinchcombe and Siyang Xiong for helpful comments and conversations. We

also thank seminar audiences at Columbia University, New York University, and the SIRE BIC

Workshop.

E-mail: lmath@nyu.edu and jacopo.perego@nyu.edu and ina.taneva@ed.ac.uk

1. Introduction

In incomplete information environments, behavior is determined by payoffs and

beliefs. In principle, this suggests two ways to create incentives. On the one hand,

we can affect an agent’s behavior by manipulating his payoffs, for example by

taxing bad behavior, rewarding efforts, offering insurance, etc. On the other hand,

if the environment allows it, we can do so by manipulating his beliefs. Information

design studies the latter. In information design, a designer has information that

is unknown to a group of agents, and she commits to which information she will

disclose so as to induce desired behaviors.

This paper is a theoretical investigation of information design, culminating in

a representation theorem and a fundamental application of it. In this exploration,

we define the central concepts of the information design problem and identify the constituents of the solution; the representation theorem then assembles them, giving

the expression of the random posterior approach in games, as did Kamenica and

Gentzkow (2011) in one-agent problems.

The representation theorem also comes with practical insights and can be implemented in specific problems. In particular, we propose a fundamental application that we call the Manager’s Problem. A manager oversees a supervisor and a

worker and wants them to exert high effort in all circumstances. The manager has

more experience and will know whether the project is easy or hard. She is deciding

what to tell her subordinates about it, then they will make up their mind and act.

The manager’s problem is that the supervisor only wants to work hard when the

project is difficult and the worker wants to adjust his effort to his supervisor’s.

The manager’s objective is for both of them to always work hard. How should she

inform them about the difficulty of the project, given that they all share the same

prior beliefs about it?

Information design is about manipulating beliefs, and information is only a

means to that end. Therefore, we cannot understand information design if we do

not understand beliefs. In interactive environments, a player formulates beliefs

about the uncertain state of the world given the information he received, but also formulates beliefs about the other players’ beliefs about the state, and beliefs about

their beliefs about his beliefs about the state, etc. These higher-order beliefs,

as they are called in the literature, are absent from one-agent problems, but are

an inevitable part of strategic interactions under incomplete information. As a

result, the information designer must harness hierarchies of beliefs to manipulate

behavior. In this sense, information design is, in its practical form, applied epistemic game theory or belief engineering. In the manager’s problem, for example,

the supervisor’s behavior is essentially determined by his first-order beliefs, while

the worker’s behavior is essentially determined by his beliefs about his supervisor’s beliefs (called second-order beliefs). The manager must then manipulate the

supervisor’s first-order beliefs and the worker’s second-order beliefs.

In the scheme of all games and desirable outcomes thereof, especially when

asymmetries are considered, there is no reason to expect public information to be

predominantly optimal.¹ Because of that, private information (having different
players hold different beliefs) will also play a major role in information design,

giving rise to non-trivial hierarchies of beliefs and raising fundamental questions.

When exactly is private information better than public information? Which players should the designer “convince” primarily? How do properties of games affect

optimal information disclosure? These questions are also relevant in the real world,

as in the communication of stress test results, firms’ intra-communication, marketing, political campaigning, etc.

Model. Players interact in an environment where the state of nature is distributed according to prior µ0 . A designer (possibly, a third party) will learn the

state and she is deciding which messages she will send the players in every realized

state that might occur. That map together with the message space is the information structure. The designer commits ex-ante to an information structure, an

assumption that has been used and discussed in Kamenica and Gentzkow (2011)

and Bergemann and Morris (2015). The designer has (possibly state-dependent)

preferences about the players’ action profiles, as the manager who would want her

subordinates to adjust their effort to the state. The interaction following the designer’s choice of an information structure is a standard Bayesian game, in which

players are assumed to play a Bayes Nash equilibrium. A linear selection rule picks

one equilibrium in case of multiplicity. For example, if the designer is optimistic

(pessimistic) and believes that the best (worst) equilibrium will occur, then the

max-rule (min-rule) prevails. The information design problem is the designer’s

choice of an information structure that maximizes her ex-ante expected utility in

the selected equilibrium.

¹ Just to mention some examples: in multiple-agent problems alone, public information is
only optimal when the designer’s objective is concave (see the Supplement). In the manager’s
problem, optimal disclosure involves private information.

Results. An information structure induces a distribution over profiles of hierarchies
of beliefs, called a random posterior. The random posterior approach
establishes that the information design problem is as if the designer were choosing
a random posterior. The premise is that we can learn a great deal from this
equivalence, and sometimes even implement the designer’s choice more easily with this
knowledge.

Our representation theorem tells us that the designer’s problem proceeds as

if she optimally mixed over minimal consistent random posteriors, subject to the

prior beliefs averaging to µ0 across these random posteriors. That is to say, the

theorem narrows down the designer’s focus to specific random posteriors: those

minimal consistent ones that satisfy a form of Bayes’ plausibility. A priori the

designer should contemplate all distributions over hierarchies of beliefs. Clearly,

the property that a player’s (first-order) beliefs must average to µ0 , a key insight

from the one-agent case, must apply here to every player. In fact, it must hold in

a strong form, because every player knows it applies to everyone, knows that everyone knows it applies to everyone, etc., so that this property must be commonly

believed. However, even common belief in Bayesianism leaves room for players

to agree to disagree. By insisting on consistent random posteriors, the theorem

says that the designer cannot possibly hope to manipulate beliefs in a way that

would leave players to agree to disagree. What is more, the set of consistent random posteriors is convex and admits extreme points. Those extreme points can

be characterized: they are the (consistent) random posteriors having ‘minimal’

support. In the tradition of extremal representation theorems,² the designer only

needs to be concerned with this specific subclass and distributions thereof. In

the one-agent case, (the Dirac measures on) the first-order beliefs of the agent

are the minimal random posteriors, so that there can be only one realization of

beliefs for every minimal random posterior. In contrast, there can be many belief

realizations for minimal random posteriors in games, and the theorem provides

the appropriate form of Bayes’ plausibility.

In the representation given by the theorem, every minimal consistent random

posterior has a value given by the designer’s utility evaluated in equilibrium, and

a “cost” or a “price” measured by the prior belief distance from µ0 . In that sense,

the constraint can be seen as a form of budget constraint. More generally, the

designer might not have the tools to induce all possible consistent distributions

on the universal type space. In applied work, for example, the designer is often
restricted to a subset of information structures. Fortunately, the representation
theorem carries over to the constrained case. Moreover, given the importance of
minimal random posteriors, in both the constrained and the unconstrained case,
we spend some time characterizing them in the context of public and conditionally
independent information, both being widely used in applications. We also study
the mathematical structure of the set of minimal random posteriors and draw
important implications from it.

² For example, the Minkowski–Carathéodory theorem, the Krein–Milman theorem, Choquet’s
integral representation, etc.

From a practical viewpoint, the theorem reduces the information design problem from information structures to random posteriors—this reduction is drastic

under redundant information—and then shifts attention from all random posteriors to the minimal ones. This still leaves the designer contemplating a very large

set of options. In fact, if she wants to contemplate all the minimal random posteriors that she might ever need, given any group of players she might face, any

underlying game they might play, any preferences that she might have about the

outcomes of that game, and given any prior she might have, then we prove that

she will have to hold on to all of them. That is, every (finite-support) minimal

random posterior is the (essentially unique) optimal solution to some problem,

which is why the specifics of the problem, namely the designer’s preference and

the game, must be used to trim the set of minimal random posteriors.

To understand the representation theorem and implement it, we unpack its

content into smaller problems that can be solved separately and then re-assembled.

This process broadly separates the value of public and private information and

delivers a concave-envelope representation of optimality. This is done in Corollary

1, which decomposes the representation theorem into two parts. First, there is

a maximization within, taking place among the minimal subspaces with equal

average prior beliefs. At this stage, we can limit attention to payoff-equivalent

subspaces, a simple observation that invites us to use the specifics of the problem

to solve it. Second, there is a maximization between that patches together the

minimal random posteriors that are solutions to maximizations within. This step is

akin to a public signal, sending players to an optimal minimal random posterior. In

one-agent problems, only maximization between remains and the corollary reduces

to Kamenica and Gentzkow (2011)’s concave envelope result.

The Manager’s Problem Solved. In the manager’s problem, the supervisor’s

and the worker’s hierarchies of beliefs appear directly in equilibrium and in a simple truncated form, making this problem the epitome of belief manipulation in

games. Our representation theorem leads to a crisp analysis. A revelation argument
reduces the set of random posteriors to consider. As a result, it is actually

sufficient to consider random posteriors over “truncated” hierarchies, consisting

of only the first-order beliefs of the supervisor and the second-order beliefs of the

worker. Such random posteriors admit an instrumental characterization of consistency and of minimality. The representation theorem can then be described

as a tractable nested maximization problem. We solve this problem for a specific

designer who cares about the worker’s action only. This example clearly illustrates

the respective roles of maximization within and maximization between. Optimal

information disclosure prescribes silence for low priors, talking to the supervisor

only (that is, private partial information) for intermediate priors, and talking to

both for high priors.

Literature Review. This paper is part of a recently active literature on information design. In general, this literature differs from the literature on cheap

talk formulated by Crawford and Sobel (1982) because the designer can commit

to a communication protocol. This commitment assumption has been discussed

extensively. The one-agent problem has been a rich subject of study since the

seminal work of Kamenica and Gentzkow (2011) (KG hereafter), in relation to

Aumann and Maschler (1995), Brocas and Carrillo (2007), and Benoît and Dubra

(2011). Kamenica and Gentzkow (2011) have provided an elegant approach to

single-agent information design problems, leading to a clear characterization of

optimality. From there, Gentzkow and Kamenica (2014) study the problem with

costly information disclosure; Galperti (2015) studies the problem when the designer has greater knowledge than the agent (described as heterogeneous priors);

Lipnowski and Mathevet (2015) provide methods that simplify the computation of

an optimal disclosure policy, bringing KG’s abstract characterization to concrete

disclosure prescriptions for a psychological audience; Kolotilin et al. (2015) study

the problem with a privately informed agent, drawing connections to the standard

linear mechanism design with transfers; and Ely, Frankel, and Kamenica (2015)

study dynamic information disclosure to an agent who gets satisfaction from being

surprised or feeling suspense.

The theory of information design in games, however, is at an early stage,

although optimal solutions have been derived in specific environments. There is,

for example, a literature in the global game tradition (e.g., Morris and Shin (2002)

and Angeletos and Pavan (2007)) that studies optimal information precision and

its underpinnings in linear environments. The problem usually assumes additively

separable, normally distributed, either conditionally independent or public signals.

The computation of the welfare optimal variance of the private and of the public

signal is the information design problem solved directly in these papers. More

recent work derives optimal information structures in voting games, but focuses

on public information (as in Alonso and Camara (2015)), so that hierarchies of

beliefs are characterized by first-order beliefs.

This paper is most closely related to Bergemann and Morris (2015) and Taneva

(2015), as they provide a systematic approach to general information design problems in games. They develop a general method based on a notion of Bayes’ correlated equilibrium that characterizes all outcomes under all information structures.

This formulation is amenable to linear programming and, as such, it is an effective

way to compute the optimal solution to an information design problem (under the

max selection rule). This paper offers a different perspective, adopting a random

posterior approach to the problem, as in KG, and providing an as if representation

of the structure of the problem and of its solution. Specifically, the representation theorem puts beliefs at the center of the theory, while viewing the problem

as concavification (of a previously built value function) in practice. Section 8.2

details the differences between the two approaches.

In the next section, we present the manager’s problem. In Section 3, we present

the framework and the information design problem. In Section 4, we define key

concepts from epistemic game theory and random posteriors. Section 5 develops

the theory leading to the representation theorem. Section 6 studies the implications of the theorem. In Section 7, with this in mind, we return to the manager’s problem

and apply the theorem to solve it. In Section 8, we discuss some of our assumptions and highlight differences between our approach and the linear programming

approach. Finally, Section 9 concludes.

2. The Manager’s Problem

A manager is overseeing a supervisor and a worker who collaborate on a project.

An unknown variable θ determines the type of project that they are facing.

Projects come in two types, θ ∈ {0, 1} (easy or hard), which only the manager is

able to eventually observe. The project type is distributed according to µ0 ∈ ∆Θ,

which is common knowledge. Let µ0 := µ0(θ = 0) = 5/6. The manager must decide

what she will tell her subordinates about the state, after which they will make

up their mind and act. The interaction between the supervisor and the worker is

described by the following game:

            θ = 0                          θ = 1
         aW = 0   aW = 1                aW = 0   aW = 1
aP = 0    1, 1     1, 0       aP = 0     0, 1     0, 0
aP = 1    0, 0     0, 1       aP = 1     1, 0     1, 1

Table 1: The Game between Supervisor and Worker

The supervisor (P) is the row player and the worker (W) is the column player.

Both have to choose an effort level in {0, 1}, to be interpreted as low or high.

Suppose the worker’s effort level ultimately determines the quality of the firm’s

product and therefore the manager limits her concern to the worker’s behavior:

v(a, θ) = aW.³ Thus, the manager gets a payoff of 1 if W exerts high effort and 0

otherwise. The manager’s problem is that the supervisor has a dominant action

to adjust his effort level to the state and the worker wants to adjust his effort

level to his supervisor’s. In other words, P wants to choose aP = 1 in state 1 and

aP = 0 in state 0, and W wants to choose aW = aP regardless of the state.

Let µ1 := PrP (θ = 0) be P’s belief that the state is 0. Since P wants to match

the state, his equilibrium action for any µ1 is given by

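\[
a_P^{*}(\mu_1) =
\begin{cases}
0 & \text{if } \mu_1 > 1/2 \\
1 & \text{if } \mu_1 \le 1/2.
\end{cases}
\]
Let λ2 := PrW(µ1 ≤ 1/2) be W’s belief that P’s belief is at most 1/2. Since W
cares about mimicking P’s action, his equilibrium action for any λ2 is given by⁴
\[
a_W^{*}(\lambda_2) =
\begin{cases}
0 & \text{if } \lambda_2 < 1/2 \\
1 & \text{if } \lambda_2 \ge 1/2.
\end{cases}
\]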

Given the equilibrium (a∗P (·), a∗W (·)), the manager quickly realizes that, because

prior µ0 is high, she is better off telling them everything (full information) than

telling them nothing (no information). Both subordinates play 0 all the time when

they receive no information, while they both play 1 a fraction 1/6 of the time (and 0 the rest
of the time) when they are fully informed. So, the manager gets Ev = 0 under no
information and Ev = 1/6 under full information.

³ In Section 7, we consider general manager’s utility functions. We keep it simple here.

⁴ Indifference is broken in the manager’s favor.

Once the manager reads the related literature (Aumann and Maschler (1995),
Brocas and Carrillo (2007), Benoît and Dubra (2011), Lipnowski and Mathevet
(2015) and especially Kamenica and Gentzkow (2011)), she thinks about public
information: she could talk to them simultaneously and induce belief µ′1 = 1/2 with
probability 1/3 and µ″1 = 1 with probability 2/3.⁵ Under that new scheme, W plays 1
a fraction 1/3 of the time and plays 0 the rest of the time. The manager is quite satisfied because
her expected utility, Ev = 1/3, is double what she received in the full information
case. Yet, she can do strictly better by making use of private information, as we
will see in Section 7.
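The three benchmark values in this section (no information, full information, and the public split) can be checked with a few lines of exact arithmetic. The following is a minimal sketch, not the authors' code: the function names are hypothetical, and it assumes public signals only, so that W knows P's belief and λ2 is either 0 or 1.

```python
from fractions import Fraction

HALF = Fraction(1, 2)
PRIOR_EASY = Fraction(5, 6)  # mu_0(theta = 0), as stated in Section 2


def supervisor_action(mu1):
    """P's action given his belief mu1 that theta = 0 (ties favor the manager)."""
    return 0 if mu1 > HALF else 1


def worker_action(lam2):
    """W's action given his belief lam2 that P's belief is at most 1/2."""
    return 1 if lam2 >= HALF else 0


def manager_value_public(posteriors):
    """Expected value of v = a_W when the signal is public.

    `posteriors` maps each commonly known belief mu1 to its probability.
    With public information W knows P's action, so lam2 is 0 or 1.
    """
    value = Fraction(0)
    for mu1, prob in posteriors.items():
        lam2 = Fraction(supervisor_action(mu1))
        value += prob * worker_action(lam2)
    return value


def mean_belief(posteriors):
    """Average posterior; Bayes plausibility requires it to equal the prior."""
    return sum(mu1 * prob for mu1, prob in posteriors.items())


no_info = {PRIOR_EASY: Fraction(1)}
full_info = {Fraction(1): PRIOR_EASY, Fraction(0): 1 - PRIOR_EASY}
public_split = {HALF: Fraction(1, 3), Fraction(1): Fraction(2, 3)}

for name, scheme in [("none", no_info), ("full", full_info), ("public", public_split)]:
    print(name, manager_value_public(scheme), mean_belief(scheme))
```

Each scheme averages back to the prior 5/6, and the values reproduce Ev = 0, 1/6 and 1/3 from the text. Private schemes, where W is uncertain about P's belief, are outside what this sketch covers.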

3. Framework

In this paper, for any compact metric space Y , ∆Y denotes the space of Borel

probability measures on Y , endowed with the weak*-topology, and so is itself compact and metrizable. All product spaces are endowed with the product topology

and subspaces with the relative topology. Given µ ∈ ∆Y , let supp µ denote the

support of µ, i.e., the smallest closed subset of Y with measure one under µ. For

measures on product spaces, µ ∈ ∆(A × B), margA µ is the marginal on A.

A (base) game G = (Ai , ui )i∈N describes a set of players, N = {1, . . . , n},

interacting in an environment with uncertain state of nature θ ∈ Θ (state space

Θ is assumed to be finite). Every i ∈ N has finite action set Ai ⊆ R and utility
function ui : A × Θ → R, where A = ∏i Ai is the set of action profiles. Players

have a common full-support prior µ0 ∈ ∆Θ about the state, and receive additional

information about θ from a designer.

The designer is an external agent who controls the information about the

state of nature available to the players, and otherwise does not participate in

the strategic interaction. She shares the same prior, µ0 , as the players and must

decide which information she will reveal to them about θ, before observing its

realization. The designer’s utility function is given by a continuous v : A×Θ → R.

An information design environment is a pair ⟨v, G⟩ consisting of a designer’s
preference and a base game. Typically, one models information as an information
structure (S, π) (often denoted π for short) where Si is player i’s finite message
space; S = ∏i Si is the set of message profiles; and π : Θ → ∆S is the information
map. In any state θ, the message profile s = (si) is drawn according to π(s|θ) and
player i observes si. Let S−i = ∏j≠i Sj and assume without loss that, for all i and
si ∈ Si, there is s−i ∈ S−i such that s ∈ ∪θ supp π(·|θ) (otherwise delete si).

⁵ This distribution is Bayes plausible: 1/2 × 1/3 + 1 × 2/3 = 5/6. The distribution of W’s first-order
beliefs is the same.

The designer chooses the information structure (the only information received

by the players) and, in doing so, commits ex-ante to disclosing information that

she will learn in the future. Kamenica and Gentzkow (2011) and Bergemann and

Morris (2015) have discussed this assumption extensively. One can think of an

information structure as an experiment concerning the state, such as an audit, a

stress test, or a medical analysis, chosen by the designer in ignorance of the state

and whose results will be revealed to the players accordingly.

Given prior µ0 and information structure (S, π), every player i forms posterior

beliefs µi : Si → ∆(Θ × S−i) about the state and other players’ messages.⁶ Given

this, an information structure is said to be: public if for all s ∈ S, margΘ µi (·|si ) =

margΘ µj (·|sj ) for all i and j and margS−i µi = δs−i (the Dirac measure centered at

s−i ) for all i; private if it is not public; uninformative if margΘ µi (·|si ) = µ0 for all

s ∈ S and i ∈ N ; informative if it is not uninformative.
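For finite S, the posterior beliefs used in these definitions can be written out by Bayes' rule. The display below is a sketch of that computation, consistent with the conditional-expectation definition in footnote 6; the primed symbols are dummy summation variables introduced here. The denominator is positive because µ0 has full support and every si is sent with positive probability.

```latex
\[
\mu_i(\theta, s_{-i} \mid s_i)
  = \frac{\pi(s_i, s_{-i} \mid \theta)\,\mu_0(\theta)}
         {\sum_{\theta' \in \Theta} \sum_{s'_{-i} \in S_{-i}}
            \pi(s_i, s'_{-i} \mid \theta')\,\mu_0(\theta')},
\qquad
\operatorname{marg}_{\Theta} \mu_i(\theta \mid s_i)
  = \sum_{s_{-i} \in S_{-i}} \mu_i(\theta, s_{-i} \mid s_i).
\]
```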

Once the designer has chosen an information structure, the pair ⟨G, (S, π)⟩
defines a Bayesian game, in which players use strategies σi : Si → ∆(Ai) and
play a Bayes Nash equilibrium (BNE). We extend ui and v to mixed actions by
taking expectations. Let Σi be player i’s strategy set and let Σ = ∏i Σi. Since the

game and the information structure are assumed to be finite, Σ is compact in the

natural topology.

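Definition 1. A Bayes Nash equilibrium of ⟨G, (S, π)⟩ is a distribution γ ∈ ∆(A × Θ)
such that there exists σ ∈ Σ such that
\[
\text{(i)}\quad \gamma(a,\theta) = \sum_{s} \prod_{i} \sigma_i(a_i \mid s_i)\, \pi(s \mid \theta)\, \mu_0(\theta) \quad \text{for all } (a,\theta), \text{ and}
\]
\[
\text{(ii)}\quad \operatorname{supp} \sigma_i(s_i) \subseteq \operatorname*{argmax}_{a_i} \sum_{s_{-i},\theta} u_i(a_i, \sigma_{-i}(s_{-i}), \theta)\, \mu_i(\theta, s_{-i} \mid s_i) \quad \text{for all } s_i \text{ and } i.
\]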
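Condition (i) is a direct computation once a strategy profile is fixed. As an illustration only, the sketch below evaluates it for the manager's game of Section 2 under a fully revealing information structure, with both players playing the announced state. The structure, the strategies, and all names are choices made for this example; the code does not check the optimality condition (ii).

```python
from fractions import Fraction
from itertools import product

ACTIONS = (0, 1)
STATES = (0, 1)
PRIOR = {0: Fraction(5, 6), 1: Fraction(1, 6)}  # mu_0 from Section 2

# Full information: both players (P, W) receive a message equal to the state.
MESSAGES = list(product(STATES, repeat=2))


def info_map(s, theta):
    """pi(s | theta) for the fully revealing structure (an illustrative choice)."""
    return Fraction(1) if s == (theta, theta) else Fraction(0)


def sigma(action, message):
    """Pure strategy used by both players here: play the announced state."""
    return Fraction(1) if action == message else Fraction(0)


def outcome_distribution():
    """gamma(a, theta) = sum_s prod_i sigma_i(a_i | s_i) pi(s | theta) mu_0(theta)."""
    gamma = {}
    for a, theta in product(product(ACTIONS, repeat=2), STATES):
        total = Fraction(0)
        for s in MESSAGES:
            play = sigma(a[0], s[0]) * sigma(a[1], s[1])
            total += play * info_map(s, theta) * PRIOR[theta]
        gamma[(a, theta)] = total
    return gamma


gamma = outcome_distribution()
# Designer's payoff v(a, theta) = a_W, the second coordinate of the action profile.
expected_v = sum(prob * a[1] for (a, theta), prob in gamma.items())
print(gamma[((0, 0), 0)], gamma[((1, 1), 1)], expected_v)
```

The resulting distribution puts mass 5/6 on both players shirking in the easy state and 1/6 on both working in the hard state, which matches the full-information value Ev = 1/6 reported in Section 2.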

⁶ Let π̂(θ × Ŝ) = π(Ŝ|θ)µ0(θ) for any θ and Borel Ŝ ⊆ S. Then, for any Borel Ŝ−i ⊆ S−i,
µi(θ × Ŝ−i|·) : Si → [0, 1] is the conditional expectation of 1{θ×Ŝ−i} conditional on si. In terms
of notation, we write µi(·|si) in place of µi(si)[·].

⁷ Existence is not guaranteed in infinite games (see, e.g., Stinchcombe (2011a,b)).

The designer chooses an information structure assuming that players will play
a BNE. Let BNE(π) ⊆ ∆(A × Θ) be the set of BNEs under (S, π). For finite games
and finite (S, π), BNE(π) is nonempty⁷ and compact (by standard arguments the
set of fixed points of a continuous map is closed). Beyond equilibrium existence,
uniqueness is another important property, not usually guaranteed, unless one puts
further restrictions on the base game. When there are multiple equilibria, there is
no unambiguous way of choosing one. This gives us the opportunity to model the
designer’s attitude toward equilibrium multiplicity by studying selection. Define a
selection rule as a nonempty-valued function fv : D ⊆ ∆(A × Θ) ↦ fv(D) ∈ D,
and consider those selection rules satisfying a natural linearity requirement: for
all D′, D″ and 0 ≤ α ≤ 1, assume fv(αD′ + (1 − α)D″) = αfv(D′) + (1 − α)fv(D″).
Linearity demands that the selection criterion does not change with respect to the
subset of equilibrium distributions it is applied to. The “best” and the “worst”
equilibrium are natural linear selection criteria capturing the designer’s degree of
optimism. Letting fv^π := fv(BNE(π)), the optimistic design corresponds to the
max-rule
\[
f_v^{\pi} \in \operatorname*{argmax}_{\gamma \in \mathrm{BNE}(\pi)} \sum_{a,\theta} \gamma(a,\theta)\, v(a,\theta).
\]

If the designer is pessimistic and believes that the worst equilibrium will occur,

then the min-rule prevails and argmin replaces argmax. Other criteria satisfy linearity, such as random choice rules. From here, the value of information structure

(S, π) to the designer is given by

\[
V(\pi) := \sum_{a,\theta} f_v^{\pi}(a,\theta)\, v(a,\theta), \tag{1}
\]
and the information design problem is the optimization program sup_{(S,π)} V(π).
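To make the max-rule and min-rule concrete, the sketch below evaluates V for a finite list of candidate equilibrium distributions. This is a simplification: BNE(π) need not be finite. The two distributions `low` and `high` are hypothetical inputs chosen for illustration, not equilibria derived from any game in the paper.

```python
from fractions import Fraction


def designer_value(gamma, v):
    """Sum over (a, theta) of gamma(a, theta) * v(a, theta)."""
    return sum(prob * v(a, theta) for (a, theta), prob in gamma.items())


def select(equilibria, v, optimistic=True):
    """Max-rule (optimistic) or min-rule (pessimistic) over a finite candidate list."""
    chooser = max if optimistic else min
    return chooser(equilibria, key=lambda gamma: designer_value(gamma, v))


def v(a, theta):
    """Designer cares about the second player's action only, as in Section 2."""
    return a[1]


# Two hypothetical equilibrium distributions over ((a_P, a_W), theta).
low = {((0, 0), 0): Fraction(1, 2), ((0, 0), 1): Fraction(1, 2)}
high = {((0, 0), 0): Fraction(1, 2), ((1, 1), 1): Fraction(1, 2)}

best = designer_value(select([low, high], v, optimistic=True), v)
worst = designer_value(select([low, high], v, optimistic=False), v)
print(best, worst)
```

The optimistic designer evaluates the information structure at `high` and the pessimistic one at `low`, so the same structure can carry two different values V(π) depending on the selection rule.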

4. Preliminaries

4.1. Hierarchies of Beliefs

We first introduce standard concepts and results (see Mertens and Zamir (1985),
Brandenburger and Dekel (1993) (BD hereafter) and Chen et al. (2010)). Let
X0 = Θ and for all k ≥ 1, define inductively Xk = Xk−1 × ∆(Xk−1)^(n−1). Then let
\[
\tilde{T}_i = \Big\{ (\beta_k)_{k \ge 1} \in \prod_{k \ge 1} \Delta(X_{k-1}) : \operatorname{marg}_{X_{k-2}} \beta_k = \beta_{k-1} \ \ \forall k \ge 2 \Big\}.
\]
Player i’s beliefs in T̃i are coherent (margXk−2 βk = βk−1), but he may assign
positive probability to other players’ beliefs not being coherent. To close the
model, coherency must be common knowledge among the players. To ensure
it, for all i consider the homeomorphism fi : T̃i → ∆(Θ × (∏k≥1 ∆(Xk−1))^(n−1))
(Proposition 1 in BD). For ℓ ≥ 1, let Ti,ℓ = {ti ∈ T̃i : fi(Θ × T−i,ℓ−1|ti) = 1},
where Ti,0 = T̃i, and define Ti = ∩ℓ Ti,ℓ to be the space of i’s hierarchies of
beliefs. Given that Θ is finite, Ti is compact metrizable. Define Ti^k = projk Ti
to be the set of k-order beliefs. Let T = ∏i Ti and T−i = ∏j≠i Tj. From here, we
can show the existence of a belief-preserving (i.e., $\operatorname{marg}_{T_{-i}^{k}} \beta_i^{*} = (\beta_\ell)_{\ell=1}^{k}$ for all k)
homeomorphism βi∗ : Ti → ∆(Θ × T−i) (Proposition 2 in BD).⁸

A belief-closed subspace is a Borel T′ ⊆ T such that βi∗(Θ × {t−i : (ti, t−i) ∈
T′}|ti) = 1 for all t ∈ T′ and i. A (Harsanyi) type space is an n-tuple (Ti, φi), where
each Ti is a space of types and each φi : Ti → ∆(Θ × T−i) is a measurable function.
Of special interest is the type space (Ti, βi∗), called the universal type space. Given
prior µ0 and an information structure, recall that every i constructs posterior
beliefs µi : Si → ∆(Θ × S−i). Note that (Si, µi) forms a type space and every si ∈ Si
induces a hierarchy of beliefs h^µ_i(si) = (h^µ_{k,i}(si))k≥1, where h^µ_{1,i}(si) = margΘ µi(si)
and, for k ≥ 2,
\[
h^{\mu}_{k,i}(s_i)[\theta \times E] = \mu_i(s_i)\big[\theta \times (h^{\mu}_{k-1,-i})^{-1}(E)\big]
\]
for all θ and Borel E ⊆ T−i^(k−1). From here, we can define redundant information structures:

Definition 2. An information structure (S, π) is non-redundant if, given prior

µ0 , the mappings hµi are injective for all i ∈ N . Let Π denote the space of all

non-redundant information structures.⁹

Non-redundancy demands that distinct messages induce distinct hierarchies.

In most of the paper, we assume that the designer uses non-redundant information structures, because redundancy is well-known to interfere in the relationship

between information and beliefs under BNE. The interested reader should go to

Section 8.1 for details. In that section, we re-incorporate redundancy by working

with a notion of correlated equilibrium instead of BNE.

4.2.

Random Posteriors

Given an information structure, any realized θ leads to a realized message profile

s = (si ) (according to map π(·|θ)), which, in turn, leads players to form hierarchies

of beliefs. As the state is ex-ante uncertain, an information structure induces a

distribution over players’ hierarchies of beliefs. These distributions are called

random posteriors. Let ∆T be the set of all random posteriors. To be precise,

let h^µ : s ↦ (h^µ_i(si)) and say that an information structure (S, π) induces random
posterior τ if τ(t) = Σθ π((h^µ)−1(t)|θ) µ0(θ) for all t ∈ supp τ. In this definition,
(h^µ)−1(t) is the set of message profiles that produce the hierarchy profile t and, thus, the
right-hand side gives the probability of t under π. For notational purposes, the marginal of τ
on Ti will be denoted τi = margTi τ.

⁸ When no confusion results, we use the same symbol βi∗ for the marginals on T−i or Θ. That
is, for any Borel T̂−i ⊆ T−i, we write βi∗(T̂−i|ti) instead of Σθ βi∗(θ × T̂−i|ti) and βi∗(θ|ti) instead
of βi∗(θ × T−i|ti).

⁹ Take S̄ that has at least the cardinality of the universal type space and choose finite S ⊆ S̄.

π(·|0)   s1     s2          π(·|1)   s1     s2
s1       1      0           s1       1/2    0
s2       0      0           s2       0      1/2

Table 2: A (Public) Information Map

In the binary state space Θ = {0, 1}, the information structure ({s1, s2}², π)
defined in Table 2 induces τ = (3/4) t1/3 + (1/4) t1, where tµ is the profile of belief
hierarchies in which all players have first-order belief µ := prob(θ = 1) and this
is commonly believed among them. Indeed, note that prob(s = (s1, s1)) = 3/4,
prob(s = (s2, s2)) = 1/4, and a player receiving message sℓ has belief (2ℓ − 1)/3
that θ = 1 and is certain the other player also received sℓ. (These probabilities
correspond to a uniform prior, µ0(θ = 1) = 1/2.)
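The numbers in this example can be reproduced mechanically from the information map. The sketch below is illustrative only. It assumes a uniform prior, which the example does not state explicitly but which its probabilities imply, and it uses first-order beliefs to label hierarchies, which is enough here because the structure is public. All names are hypothetical.

```python
from fractions import Fraction
from collections import defaultdict

PRIOR = {0: Fraction(1, 2), 1: Fraction(1, 2)}  # assumption: uniform prior
# PI[theta][(s_1, s_2)]: the public information map of Table 2; messages are 1 or 2.
PI = {
    0: {(1, 1): Fraction(1)},
    1: {(1, 1): Fraction(1, 2), (2, 2): Fraction(1, 2)},
}


def profile_probabilities():
    """Ex-ante probability of each message profile: sum_theta pi(s | theta) mu_0(theta)."""
    probs = defaultdict(Fraction)
    for theta, row in PI.items():
        for s, p in row.items():
            probs[s] += p * PRIOR[theta]
    return dict(probs)


def first_order_belief(player, message):
    """Player's posterior probability that theta = 1 after observing `message`."""
    joint = {}
    for theta, row in PI.items():
        mass = sum((p for s, p in row.items() if s[player] == message), Fraction(0))
        joint[theta] = mass * PRIOR[theta]
    return joint[1] / sum(joint.values())


probs = profile_probabilities()
beliefs = {s: first_order_belief(0, s[0]) for s in probs}
average = sum(probs[s] * beliefs[s] for s in probs)
print(probs, beliefs, average)
```

The profile (s1, s1) has probability 3/4 and induces belief 1/3, the profile (s2, s2) has probability 1/4 and induces belief 1, and the beliefs average back to the assumed prior of 1/2.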

5. Theory

The information design problem is cast as a choice of information structure, but

the universe of beliefs is most instructive to the designer. For example, the manager in Section 2 does not care about how she informs her subordinates per se,

as long as she can manipulate P’s first-order beliefs and W’s second-order beliefs.

This perspective is in line with the fact that every information structure induces

a random posterior. In the world of beliefs, the random posterior becomes the

star concept and the object of choice. Of course, the designer does not actually

choose a random posterior, but it is as if she did—and if she chooses information

without understanding beliefs, then she cannot really understand the role of information. The representation theorem is the expression of the belief perspective on

information design. This section introduces the constituents of the theorem and

the result assembles them in a representation of optimality.

5.1. Consistent Random Posteriors

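The class of consistent random posteriors plays a special role in the analysis. Let

\[
C = \Big\{ \tau \in \Delta T : |\operatorname{supp} \tau| < \infty, \text{ and } \exists\, p \in \Delta(\Theta \times T) \text{ s.t. } \operatorname{marg}_T p = \tau
\text{ and } p(\theta, t) = \beta_i^*(\theta, t_{-i} \mid t_i)\, \tau_i(t_i) \ \ \forall i, \theta, t \in \operatorname{supp} \tau \Big\} \tag{2}
\]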


be the space of consistent random posteriors. A random posterior is consistent

if it is the marginal on T of a measure p whose conditional on ti yields i’s beliefs

βi∗ (·|ti ) for any i and ti . In the literature, p is often referred to as a “common

prior.” For any consistent τ , denote by pτ the distribution p in (2) (which is

unique due to finite support and Mertens and Zamir (1985)).

For any τ, τ′ ∈ C, say τ ⊆ τ′ if supp τ ⊆ supp τ′. Then, a consistent random posterior is defined as minimal, the set of which is denoted C^M, if supp τ contains no proper belief-closed subspace. By basic inclusion arguments, C^M ≠ ∅, which Proposition 1 below establishes indirectly. Now, we illustrate minimality for standard families of information.

Definition 3. A random posterior τ ∈ C is: public if, for all t ∈ supp τ, t_i^1 = t_j^1 and t_i^k = δ_{t_{−i}^{k−1}} for all i, j and k ≥ 2 (δ is the Dirac measure);10 conditionally independent if there exists µ such that τ(t) = Σ_θ µ(θ) Π_i pτ(ti|θ) for all t ∈ supp τ.

A random posterior is public if all players have equal first-order beliefs and this

is common knowledge. A conditionally independent random posterior is one for

which hierarchies are independent conditionally on the state. Hence, the correlation between beliefs is a function of how informative the random posterior is about

the state. A random posterior τ ∈ C is perfectly informative if for all t ∈ supp τ ,

βi∗ (θ|ti ) = 1 for all i and some θ. For public and conditionally independent random

posteriors, minimality means the following.

Proposition 1. (a) Suppose τ ∈ C is conditionally independent. If µ = margΘ pτ

is not degenerate, τ is minimal iff it is not perfectly informative. If µ is degenerate,

τ is minimal. (b) A public τ ∈ C is minimal iff supp τ is a singleton.

10

Here, we have made use of the coherency condition to write the k-order beliefs t_i^k.

5.2. The Representation Theorem

Given random posterior τ, a BNE of ⟨G, τ⟩ is a distribution γ ∈ ∆(A × Θ) such that there exists σ ∈ Σ such that

\[
\gamma(a, \theta) = \sum_{t} \prod_{i} \sigma_i(a_i \mid t_i)\, p_\tau(t, \theta)
\]

for all (a, θ) and

\[
\operatorname{supp} \sigma_i(t_i) \subseteq \operatorname*{argmax}_{a_i} \sum_{\theta, t_{-i}} u_i(a_i, \sigma_{-i}(t_{-i}), \theta)\, \beta_i^*(\theta, t_{-i} \mid t_i)
\]

for all ti ∈ supp τi and i. Let BNE(τ) denote the set of BNEs under τ, from

which f_v^τ := f_v(BNE(τ)) represents the selected equilibrium. The designer’s ex ante expected payoff can then be written as a function of the random posterior

\[
w : \tau \mapsto \sum_{a, \theta} f_v^\tau(a, \theta)\, v(a, \theta). \tag{3}
\]

When the designer evaluates her expected payoff from τ , she is always better

informed about θ than the players, because she uses all the hierarchies (t1 , . . . , tn )

to form her own belief about θ. Under public information or when there is a single

agent, this differential disappears and the designer’s first-order belief coincides

with that of the players or the agent.

Here is the main theorem of the paper.

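Theorem 1 (Representation Theorem). The designer’s maximization problem can be represented as

\[
\max_{(S, \pi) \in \Pi} V(\pi) \;=\; \max_{\lambda \in \Delta(C^M)} \sum_{e} w(e)\, \lambda(e)
\quad \text{subject to} \quad \sum_{e} \operatorname{marg}_\Theta p_e\, \lambda(e) = \mu_0. \tag{4}
\]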

The designer maximizes her expected utility as if she were optimally mixing

over minimal consistent random posteriors, subject to prior beliefs averaging to µ0

across these random posteriors. Every (minimal consistent) random posterior is a

universe of its own in which some equilibrium occurs and, in this representation,

it has a value given by the designer’s utility evaluated in equilibrium. Every such

random posterior also has a prior µ, and the “further” µ is from µ0 , the costlier it

is in some sense to use that random posterior. The constraint in (4) can therefore

be seen as a form of budget constraint.

The designer should a priori contemplate all (finite-support) random posteriors

in ∆T , but the theorem narrows down the focus to specific random posteriors: the

minimal consistent random posteriors satisfying a form of Bayes’ plausibility. We

know that every player’s first-order beliefs must be equal to µ0 on average, which

is known as Bayes’ plausibility (see KG). Every player knows this, hence every

player knows that others’ first-order beliefs are equal to µ0 on average, that others

know that his first-order beliefs are equal to µ0 on average, and so on, so that

Bayes’ plausibility must in fact be common knowledge. In particular, any player

i’s expectation of any j’s first-order beliefs must also on average equal µ0 , and


so on. Still, this can violate consistency. For example, in the environment with Θ = {0, 1}, n = 2 and µ0 = 1/2, the random posterior τ = (1/2) t + (1/2) t′, where t = (t1, t2) is the pair of hierarchies

    player 1 believes that θ = 0 and player 2 believes that θ = 1 and that joint event is commonly known by the players

and t′ = (t′1, t′2) is the pair of hierarchies

    player 1 believes that θ = 1 and player 2 believes that θ = 0 and that joint event is commonly known by the players

satisfies common knowledge of Bayes’ plausibility. Indeed, Bayes’ plausibility holds for every player under τ and {t, t′} is a belief-closed subspace. However, τ is clearly not consistent and for this reason is discarded by the theorem. The intuition is that no information structure can induce an inconsistent random posterior and all (Bayes’ plausible) consistent random posteriors can be induced by some information structure (Proposition 7 in the appendix).
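The failure of consistency in this example can be checked mechanically. The sketch below is our own illustration, not the authors' procedure: it builds, for each player, the candidate common prior p(θ, t) = βi∗(θ, t−i|ti) τi(ti) required by condition (2) and compares the two candidates. The labels `"t"`, `"t_prime"` and all function names are hypothetical.

```python
from fractions import Fraction as F

# tau puts probability 1/2 on each profile; the labels are ours.
tau = {"t": F(1, 2), "t_prime": F(1, 2)}

# first_order[profile][i] = state that player i is certain of in that profile.
first_order = {"t": {1: 0, 2: 1}, "t_prime": {1: 1, 2: 0}}

def implied_prior(player):
    """p(theta, profile) implied by `player`'s beliefs via condition (2).

    Each hierarchy appears in exactly one profile, so tau_i(t_i) equals the
    profile's probability and the player is certain of the other's hierarchy.
    """
    p = {}
    for profile, weight in tau.items():
        for theta in (0, 1):
            sure = first_order[profile][player] == theta
            p[(theta, profile)] = weight if sure else F(0)
    return p

p1, p2 = implied_prior(1), implied_prior(2)
consistent = p1 == p2  # a common prior exists only if both candidates agree

def avg_belief(player):
    """Average first-order belief that theta = 1 (Bayes' plausibility check)."""
    return sum(w * first_order[prof][player] for prof, w in tau.items())

print(consistent, avg_belief(1), avg_belief(2))
```

Both players' beliefs average to the prior 1/2, yet the two candidate priors disagree on every (θ, t) pair, so no single p satisfies (2).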

Since even common knowledge of Bayesianism does not suffice, the designer

should be contemplating consistent random posteriors. If so, the theorem says

that she may as well contemplate the minimal consistent ones and distributions

thereof. To see why, define a convex combination between any e, e′ ∈ C^M,

αe + (1 − α)e′ ∈ ∆(supp e ∪ supp e′)   (0 ≤ α ≤ 1)

as a probability distribution over {e, e′}. Unless e = e′ or α ∈ {0, 1}, the random posterior αe + (1 − α)e′ so formed is not minimal. It turns out that C is convex (Lemma 1

in Appendix B) and has extreme points that coincide with the minimal consistent

random posteriors (Lemma 2 in Appendix B); and that the designer’s payoff in

(3) is linear (Lemma 4 in Appendix B). From here, the focus on minimal random

posteriors and measures thereof is an extremal representation result (Proposition

8 in Appendix B).

In the one-agent case, C^M ≅ ∆Θ, that is, the set of minimal consistent random posteriors is “equal” to the set of first-order beliefs of the agent. Thus, once in a minimal subspace, there can be only one realization of first-order beliefs. This is no longer true in games, for e ∈ C^M may contain many hierarchies of beliefs and a given player can have different first-order beliefs across hierarchies. The theorem offers a Bayes’ plausibility condition

\[
\sum_{e} \operatorname{marg}_\Theta p_e\, \lambda(e) = \mu_0
\]

which is akin to a double average. The average first-order beliefs within every minimal random posterior, {margΘ pe : e ∈ C^M}, must on average equal the prior.

But which player’s first-order beliefs should we look at within and across minimal

subspaces? By consistency, it does not matter. If it is true for one player, then it

will be for all.

Constrained Representation. In general, the designer may not have the ability or the permission to generate all possible (consistent) random posteriors. In effect, she is then restricted to C′ ⊆ C. Theorem 1’ deals with this case. In applied work, for example, the designer is often restricted to a subset of information structures Π′ ⊆ Π, public or conditionally independent information structures being the most common restrictions.11 If ρ : (S, π) ↦ τ denotes the map that gives the (unique, admissible) random posterior τ induced by a given (S, π),12 then C′ = ρ(Π′). Given C′ and µ, let A(C′, µ) := {τ ∈ C′ : margΘ pτ = µ} denote the consistent random posteriors in C′ with prior µ and assume it is nonempty for all µ. Say that a convex subset C′ of C is a face if τ′ ∈ C′ and τ ⊆ τ′ imply τ ∈ C′.13

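Theorem 1’. Suppose C′ ⊆ C is a face. Then,

\[
\max_{\tau \in A(C', \mu_0)} w(\tau) \;=\; \max_{\lambda \in \Delta(C^M \cap C')} \sum_{e} w(e)\, \lambda(e)
\quad \text{subject to} \quad \sum_{e} \operatorname{marg}_\Theta p_e\, \lambda(e) = \mu_0. \tag{5}
\]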

The constrained analog to the representation theorem shows how constraints can be incorporated in the analysis. As long as constraints take the form of a face of C, the same lessons apply. It is as if the designer were mixing optimally over the minimal random posteriors in C′ in a way that satisfies Bayes’ plausibility. The unconstrained version follows by setting C′ = C. As an example, the set of public random posteriors is a face of C, so the result applies. Likewise, although the set of conditionally independent random posteriors is non-convex, we can still maximize over the convex hull of that set by mixing over its extreme points, which are, by definition, the conditionally independent random posteriors. The theorem can easily be extended to any convex C′ ⊆ C, but then the extreme points of C′ may not coincide with those of C. If those extreme points are difficult to characterize, the theorem loses its appeal.

11

Public information is realistic in various contexts. For example, a financial institution disclosing information to its clients cannot, by law, tailor the type of information it discloses to the type of clients. In marketing, personalized advertisement is difficult and public information is often a reasonable approximation.

12

This map is well-defined by Proposition 7 in the Appendix.

13

The standard definition is that a face F of a convex set X is a nonempty convex subset of X with the property that if x, y ∈ X, α ∈ (0, 1) and αx + (1 − α)y ∈ F, then x, y ∈ F. By Proposition 8 in Appendix B, both definitions are equivalent here.

6. Implementation

The representation theorem places the information design problem in the world of

beliefs. Beyond its conceptual contribution, the theorem can also be implemented,

especially if the number of options faced by the designer is not unreasonably large.

From a pragmatic perspective, the theorem does reduce the problem from information structures to consistent random posteriors14 and then focuses attention

on the minimal ones, but the minimal ones are plenty. And if the designer contemplates all the minimal random posteriors that she might ever need, given any

group of players she might face, any underlying game they might play, given any

preferences that she might have about the outcomes of that game, and given any

prior she might have, then she will have to contemplate all of them. This is the

following result.

Proposition 2. For any minimal random posterior e ∈ C^M, there exist an environment ⟨G, v⟩ and a prior µ0 ∈ ∆Θ for which λ∗ = δe is the essentially15 unique optimal solution.

By choosing a constant game, it is easy to make all minimal random posteriors

optimal since the designer is indifferent between them all. Although uniqueness

ties our hands considerably, it is still the case that every minimal random posterior

is the (essentially) unique optimal solution in some environment. This delivers a

resonant message. If the designer wants to carry out the representation theorem

and face a manageable set of minimal random posteriors, she ought to use the

specifics of the environment to first trim C^M, namely her own preference v and

the game G. Fortunately, this can be done by standard arguments, akin to the

Revelation Principle, or more specialized reduction arguments.

14

When redundant information is allowed, this reduction can be drastic because there exist infinitely many information structures that induce every given random posterior (see Section 8.1).

15

A minimal random posterior e is the essentially unique optimal solution if for all ε > 0, there is a game G such that all e′ ∈ C^M with d(e, e′) > ε are strictly suboptimal.

6.1. Primal Decomposition

To better understand the representation theorem and implement it, the maximization problem in (4) or (5) can be decomposed into smaller problems that can be

solved separately and then re-assembled. This process broadly separates the value

of public and of private information, delivers a concave-envelope representation of

optimality, and points to directions for reducing the dimensionality of the problem. In the next result, we make use of Eµ := {e ∈ C^M : margΘ pe = µ}, the set of

minimal (consistent) random posteriors with prior µ ∈ ∆Θ.

Corollary 1 (Private–Public Information Decomposition). Fix an environment ⟨v, G⟩ and suppose C′ ⊆ C is a face. For any µ and Cµ∗ ⊆ Eµ ∩ C′ such that w(Cµ∗) = w(Eµ ∩ C′), let

\[
w^*(\mu) = \max_{e \in C_\mu^*} w(e). \tag{6}
\]

If w∗ : ∆Θ → R is well-defined and upper semi-continuous, then the designer’s maximization problem over C′ can be represented as

\[
\max_{\tau \in A(C', \mu_0)} w(\tau) \;=\; \max_{\lambda \in \Delta\Delta\Theta} \sum_{\operatorname{supp} \lambda} w^*(\mu)\, \lambda(\mu)
\quad \text{subject to} \quad \sum_{\operatorname{supp} \lambda} \mu\, \lambda(\mu) = \mu_0. \tag{7}
\]

The representation theorem can be decomposed in two parts.16 First, there is a

maximization within, given by (6), taking place among the minimal subspaces

with equal average prior beliefs. Among them, the maximization is not subject

to further constraints, since all subspaces are “equidistant” to µ0 . The corollary

points to a simple observation: without loss, we can maximize over any subset Cµ∗ of Eµ ∩ C′ that is payoff-equivalent to Eµ ∩ C′. This observation is simple but important, because it invites us to use the specifics of the problem; without them, as we know, C^M cannot be reduced further and the problem might not be tractable. At this level of generality, it is not possible to name a useful Cµ∗ for all

problems, but this can be done for specific problems. Some examples follow:

(a) (One-agent or Public Information). In those situations, maximization within is trivial because Cµ∗ is a singleton, hence the problem comes down to concavification in the maximization between.

16

Decomposition is common practice in optimization theory (see Bertsekas (1999)).

(b) (Multiple-agent Problems). In the Supplement, we study the following multiple-agent problems. Say Θ = {0, 1} and each i ∈ {1, . . . , n} has one unit of income that he can use to purchase a good. If i makes no purchase (a = 0), his utility is ui(a, θ) = 1 for all θ. If he does (a = 1), then his utility is ui(1, θ) > 1 if θ = 1 and 0 if θ = 0. This scenario is a non-trivial departure from KG when v is not additively-separable, and is a central scenario in the Cheap Talk literature with multiple receivers.17 There, we show that if v(a, θ) = ṽ(Σ_i ai) and ṽ : R → R is convex, then Cµ∗ = {τ ∈ Eµ : τ is public}. Thus, the problem essentially comes

down to maximization between. This is not the case when v is concave, because

the designer will benefit from private information. In general, maximization within

will play an important role when v is concave.

(c) (Manager’s Problem). Section 7 gives a detailed analysis.

(d) (Beauty Contest). In the Supplement, we study the well-known beauty

contest (as in Morris and Shin (2002), Angeletos and Pavan (2007), Bergemann

and Morris (2013), etc.) and provide a random posterior perspective.18 In particular, we show that if the designer has polynomial preferences of degree 2 and is concerned with conditional independence, then the set of binary conditionally independent (minimal) random posteriors is sufficient: Cµ∗ = {τ ∈ Eµ^CI : |supp τ| = 2}. For more general designers, we also illustrate the idea that, when maximization within remains difficult in an environment ⟨v, G⟩, for one because it may be difficult to find a manageable payoff-equivalent set Cµ∗, we may be able to bound w∗ from above and below, by values of the maximization within in related environments, say ⟨v′, G′⟩ and ⟨v″, G″⟩, for which it is easy to name a small payoff-equivalent Cµ∗. Then, we can compute w′ and w″ such that w′(·) ≥ w∗(·) ≥ w″(·). It turns out that w′ and w″ take the form of bi-concave envelopes.

17

In that literature, much attention has been devoted to receivers “interacting” with the sender but not with one another (e.g., Farrell and Gibbons (1989)).

18

For simplicity, we study a two state and two player version of the game, but the approach applies more broadly.

Second, there is a maximization between that patches together the minimal subspaces that are solutions to maximizations within. This step is akin to a public signal, sending players to an optimal minimal subspace (their presence in that subspace being common knowledge). The corollary delivers a concave-envelope characterization of optimal information design. Let

\[
(\operatorname{cav} w^*)(\mu) = \inf\{ g(\mu) : g \text{ concave and } g \ge w^* \} \tag{8}
\]

be the concave envelope of w∗ evaluated at µ. The concave envelope is the smallest

concave function that majorizes w∗ . It follows from standard arguments, as in

Rockafellar (1970, p.36), that the rhs of (7) is one of many equivalent definitions

or characterizations of the concave envelope of function w∗ . Sums replace integrals

by Carathéodory’s theorem, reducing again the set of random posteriors to be

considered. This step is familiar to the readers of KG. In the one-agent case,

|Eµ | = 1 for all µ and, thus, the theorem comes down to maximization between

(Kamenica and Gentzkow (2011, Corollary 1)). For information design problems

with many players, the corollary shows that concavification is one of the two steps

toward optimality.

6.2. On the Structure of the Set of Minimal Random Posteriors

In a given game, not all minimal random posteriors will necessarily be relevant.

The manager’s problem is an example of this. Surely, the fewer the relevant

minimal random posteriors are, the more powerful the representation theorem.

The one-agent case is a good illustration of this: since there is a unique minimal

random posterior for each µ, max within is immediate and max between alone

achieves optimality. When the set of relevant minimal random posteriors at µ is

too “rich,” max within can be complicated and max between weakened. To make

this clear, we explore two ways in which minimal random posteriors could be rich,

a measure-theoretic one and a topological one, then draw some implications.

In a measure-theoretic sense, the set of minimal random posteriors, being extreme points of C, ought to be “small” within that set. In infinite dimensional

spaces, however, such as C or A(C, µ), there is no analog of Lebesgue measure.19

In response, Christensen (1974) and Hunt (1992) have developed the notions of

shyness to capture the idea of Lebesgue measure 0.20 Here, we use the convenient notion of finite shyness proposed by Anderson and Zame (2001), which is a

sufficient condition for a set to be shy.

Definition 4. A measurable subset A of a convex subset C of a vector space S is finitely shy if there exists a finite dimensional vector space V ⊆ S for which λV(C + s) > 0 for some s ∈ S and λV(A + s) = 0 for all s ∈ S, where λV is the Lebesgue measure defined on V.

19

No infinite dimensional Banach space admits any translation invariant measure that assigns finite, strictly positive measure to each open set.

20

For example, the countable union of shy sets is shy, no relatively open subset is shy, prevalent sets (i.e., sets whose complement is shy) are dense, and a subset of Rn is shy in Rn if and only if it has Lebesgue measure 0.

Proposition 3. C^M is finitely shy in C.

Although the set of minimal random posteriors is small in a measure-theoretic

sense, it is large in a topological sense. The minimal random posteriors are dense

in the set of consistent random posteriors.

Proposition 4. Let n > 1. For all µ, Eµ and A(C, µ)\Eµ are dense in A(C, µ).

Any non-minimal random posterior can be approximated arbitrarily well by minimal ones. Thus, absent any restrictions (i.e., C′ = C) or reductions (i.e., Cµ∗ = Eµ),

max within always gets us arbitrarily close to the optimal value,21 in which case

max between plays nearly no role at all. This reaffirms in different terms the

point already made in Proposition 2 that implementation can be difficult under

all minimal random posteriors. In other words, the implementation corollary will

be most useful after reductions or restrictions. There are various ways to do this:

the Revelation Principle is a standard reduction, and some restrictions are widely

used in applied work, such as conditional independence and public information.

With reductions or restrictions, max within no longer approximates max between in general. For example, no public random posteriors (except full information) can be approximated by a sequence of conditionally independent random posteriors.22 That is, under the standard restriction to conditional independence and public information, max between will often be necessary to achieve optimality. The manager’s problem is also interesting in this respect, because the environment will allow us to work in a space of truncated hierarchies in which, without reductions or ad hoc restrictions, the set of minimal random posteriors is small topologically, so that both max within and between (concavification) play an important role.

7. The Manager’s Problem Solved

In the manager’s problem, intuition suggests that only P’s first-order beliefs and W’s second-order beliefs should matter to the manager. This intuition is true as long as the manager’s utility is of the form v : A → R. In general, there are subtle reasons why a state-dependent manager might want to exploit both of her subordinates’ first and second-order beliefs. Let us briefly convey the intuition behind this here, and then focus on v : A → R for the remainder of the section.

21

Technically, sup should replace max in (6).

22

For example, consider τ = (3/4) t_{1/3} + (1/4) t_1 and look at Table 2. Under conditional independence (noting that conditionally independent random posteriors are generically minimal by Proposition 1), both matrices in Table 2 would be product distributions, in which case entries in one diagonal could not approach strictly positive numbers while entries in the other approach 0.

Let µ0 = 1/2 and suppose the manager says nothing to P while fully informing

W. Since P is indifferent, he could decide to mix between effort levels with equal

probability. Since W knows that P has learned nothing, P’s mixing makes W indifferent between exerting high or low effort. In particular, W could play 1 when the state is 1 and 0 when the state is 0, which he can do since he is fully informed. In this BNE, W’s first-order beliefs drive his action, in a way that would

please a manager wanting her subordinates to align their effort choice to the state.

Although these BNEs exist, no state-independent manager would need to exploit

them.

Consider finite-support distributions η over P’s first-order beliefs and W’s

second-order beliefs,23

A := {η ∈ ∆(∆Θ × ∆∆Θ)}.

Second-order beliefs technically include a first-order belief (Section 4.1), but here

it will be enough to define W’s second-order beliefs as elements of ∆∆Θ instead

of ∆(Θ × ∆Θ). Refer to P’s first-order beliefs as µ1 ∈ ∆Θ and W’s second-order

beliefs as λ2 ∈ ∆∆Θ. Therefore, η is a joint distribution over pairs (µ1 , λ2 ).

Denote η1 := margµ1 η and η2 := margλ2 η. In what follows, we abuse terminology

and refer to η as a random posterior while we mean a random posterior with

marginal η over (µ1 , λ2 ).

The next proposition characterizes consistency in A. Given first-order beliefs

of P and second-order beliefs of W, the condition guarantees the existence of a

joint distribution over those pairs of beliefs, such that Bayesian updating leads

W to the specified truncated second-order beliefs. Since η does not specify the

second-order beliefs of P, ensuring consistency of the second-order beliefs of W is

sufficient to guarantee consistency of the random posterior.

Proposition 5. A random posterior η ∈ A is consistent if and only if η(µ1 , λ2 ) =

η2 (λ2 )λ2 (µ1 ) for all (µ1 , λ2 ) ∈ supp η.
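The condition in Proposition 5 is easy to test on finite examples. The following is a minimal sketch under our own encoding (it is not taken from the paper): second-order beliefs of W are given string labels, `lam[l]` stores the distribution that belief l places on P's first-order beliefs, and `eta` is the joint distribution over pairs.

```python
from fractions import Fraction as F

def is_consistent(eta, lam):
    """Check eta(mu1, l) == eta2(l) * lam[l](mu1) on the support of eta.

    eta: {(mu1, l): probability}, where l labels a second-order belief of W.
    lam: {l: {mu1: probability W assigns to P holding first-order belief mu1}}.
    """
    eta2 = {}
    for (_, l), prob in eta.items():
        eta2[l] = eta2.get(l, F(0)) + prob
    return all(
        prob == eta2[l] * lam[l].get(mu1, F(0))
        for (mu1, l), prob in eta.items()
        if prob > 0
    )

half, one = F(1, 2), F(1)
# Belief "a": P is equally likely to hold 1/2 or 1. Belief "b": P surely holds 1.
lam = {"a": {half: F(1, 2), one: F(1, 2)}, "b": {one: F(1)}}

good = {(half, "a"): F(1, 3), (one, "a"): F(1, 3), (one, "b"): F(1, 3)}
bad = {(half, "a"): F(1, 2), (one, "a"): F(1, 6), (one, "b"): F(1, 3)}

print(is_consistent(good, lam), is_consistent(bad, lam))
```

In `bad`, the conditional distribution of P's belief given "a" is (3/4, 1/4) rather than the (1/2, 1/2) that W believes, so Bayesian updating cannot produce W's stated second-order belief.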

23

Finite support is sufficient since we have finitely many actions. We will later show that we need at most four points in the support to generate any BNE distribution over action profiles.

As far as distributions over equilibrium action profiles are concerned, we can work with consistent random posteriors in A only, because they generate all such distributions. To establish this, we first define what an equilibrium is in this world of truncated hierarchies. In the following, we index the actions and strategies of P by 1, and those of W by 2. Given η ∈ A, a BNE of ⟨G, η⟩ is a distribution γ ∈ ∆(A × Θ) such that there exists σ with σi : supp ηi → ∆({0, 1}), i = 1, 2, such that

\[
\gamma(a, \theta) = \sum_{\mu_1, \lambda_2} \eta(\mu_1, \lambda_2)\, \sigma_1(a_1 \mid \mu_1)\, \sigma_2(a_2 \mid \lambda_2)
\]

for all (a, θ) and

\[
\operatorname{supp} \sigma_1(\mu_1) \subseteq \operatorname*{argmax}_{a_1} \sum_{\theta} u_1(a_1, \sigma_2(\lambda_2), \theta)\, \mu_1(\theta)
\]

for all µ1 ∈ supp η1 and

\[
\operatorname{supp} \sigma_2(\lambda_2) \subseteq \operatorname*{argmax}_{a_2} \sum_{\mu_1} u_2(\sigma_1(\mu_1), a_2, \theta)\, \lambda_2(\mu_1)
\]

for all λ2 ∈ supp η2.

Proposition 6. For any γ ∈ ∪τ BNE(τ), there exists γ′ ∈ ∪η BNE(η) such that γ and γ′ generate the same distribution over A, i.e., margA γ = margA γ′.

The proof of this proposition shows that the designer can use random posteriors η with at most two first-order beliefs for P and two second-order beliefs for W,

   η        λ′2                λ″2
   µ′1      λ′2 η2             λ″2 (1 − η2)
   µ″1      (1 − λ′2) η2       (1 − λ″2)(1 − η2)

where λ2(µ′1) = λ2, and η2(λ′2) = η2. Having two distinct beliefs per player in this game is enough to generate all equilibrium distributions; thus, the 2 × 2 random posteriors η are all the manager needs to maximize her expected payoff. Notice that the expression of η above guarantees consistency.

On the way to the representation, there remains to identify the minimal random posteriors within the class of consistent and sufficient ones (2 × 2) in A. It becomes evident that every consistent 2 × 2-η can be reproduced by a convex combination of some η′ and η″ given as

   η′       λ′2                    η″       λ″2
   µ′1      λ′2                    µ′1      λ″2
   µ″1      1 − λ′2                µ″1      1 − λ″2

showing that the 2 × 1 and the 1 × 1 random posteriors form the set of minimal random posteriors in A. Again, consistency of W’s second-order beliefs is already incorporated into η′ and η″. From η, η′ and η″, it is clear that η = η2 η′ + (1 − η2) η″, meaning that η can be reproduced by sending both P and W to η′ with probability η2 and to η″ with probability 1 − η2.
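The decomposition η = η2 η′ + (1 − η2) η″ can be verified entry by entry. The sketch below is an illustration with arbitrary numbers of our choosing; the helper names `two_by_two`, `minimal_piece` and `mix` are hypothetical and each 2 × 1 piece is embedded in the 2 × 2 grid by padding the other column with zeros.

```python
from fractions import Fraction as F

def two_by_two(lam_a, lam_b, eta2):
    """2x2 eta: rows (mu1', mu1''), columns (lambda2', lambda2'')."""
    return [[lam_a * eta2, lam_b * (1 - eta2)],
            [(1 - lam_a) * eta2, (1 - lam_b) * (1 - eta2)]]

def minimal_piece(lam, column):
    """2x1 posterior placing all mass in one column (0 or 1) of the 2x2 grid."""
    piece = [[F(0), F(0)], [F(0), F(0)]]
    piece[0][column], piece[1][column] = lam, 1 - lam
    return piece

def mix(weight, first, second):
    """Entrywise convex combination weight*first + (1 - weight)*second."""
    return [[weight * x + (1 - weight) * y for x, y in zip(r1, r2)]
            for r1, r2 in zip(first, second)]

lam_a, lam_b, eta2 = F(1, 4), F(2, 3), F(3, 5)  # arbitrary illustrative values
eta = two_by_two(lam_a, lam_b, eta2)
rebuilt = mix(eta2, minimal_piece(lam_a, 0), minimal_piece(lam_b, 1))
print(eta == rebuilt)
```

The mixing weight is exactly the marginal probability η2 of W's first second-order belief, which is the sense in which the public draw of a minimal subspace carries W's information.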

The minimal random posteriors are such that either the manager talks to

both P and W simultaneously and minimally (these correspond to minimal public

random posteriors, necessarily of dimension 1×1 by Proposition 1) or the manager

informs P privately but says nothing further to W (these correspond to random

posteriors of dimension 2 × 1). This does not mean that the manager will never

inform W in optimum; however, W is never informed more than P. In particular,

the manager informs both brothers publicly about the minimal subspace she is

sending them to, but beyond that, she either says nothing further or talks to P

privately. Therefore, W only receives, if anything, the public information used in

combining the minimal random posteriors as part of the maximization between.

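Representation Theorem in the Manager’s Problem. The manager’s maximization problem can be represented as maximization within

\[
w^*(\mu) = \max_{\lambda_2 \in \Delta\Delta\Theta} \sum_{\operatorname{supp} \lambda_2} v\big(a_1^*(\mu_1), a_2^*(\lambda_2)\big)\, \lambda_2(\mu_1)
\quad \text{s.t.} \quad \sum_{\operatorname{supp} \lambda_2} \mu_1\, \lambda_2(\mu_1) = \mu \tag{9}
\]

and then maximization between

\[
w^*(\mu_0) = \max_{\eta_2 \in \Delta\Theta} \sum_{\operatorname{supp} \eta_2} w^*(\mu)\, \eta_2(\mu)
\quad \text{s.t.} \quad \sum_{\operatorname{supp} \eta_2} \mu\, \eta_2(\mu) = \mu_0. \tag{10}
\]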

In the maximization within, the manager informs P optimally by choosing his

distribution of first-order beliefs, which is equivalent to the second-order beliefs

of W in that minimal subspace. In the maximization between, the manager then


informs W optimally by choosing his distribution of second-order beliefs, η2 . This

distribution can also be interpreted as a distribution over priors µ of minimal

subspaces. While maximization between takes the form of a concave envelope, this is not the case for maximization within, because λ2 enters the objective function

directly through a∗2 . In this problem, both maximizations are tractable, aided by

the binary feature of the optimal solution.

7.1. An Illustration

Consider the manager v(a, θ) = aW from the motivating example. With this objective in mind, she wants to make sure that W believes it more likely that P will play 1 rather than 0, because then W will follow suit. To formalize this, consider two possible first-order beliefs for P: µ′1 ≤ 1/2 and µ″1 > 1/2. Given P’s incentive to mismatch the state, his action choices are a∗P(µ′1) = 1 and a∗P(µ″1) = 0. Denote W’s second-order belief by λ2 = λ2(µ′1). That is, λ2 is the probability that W assigns to P having a first-order belief weakly less than 1/2. Since W’s only incentive is to match P’s action, W’s action choices are determined by his beliefs about P’s first-order beliefs as follows: a∗W(λ2 ≥ 1/2) = 1 and a∗W(λ2 < 1/2) = 0. The manager’s payoff can be written as:

\[
v\big(a_W^*(\lambda_2)\big) =
\begin{cases}
1 & \text{if } \lambda_2 \ge 1/2 \\
0 & \text{if } \lambda_2 < 1/2
\end{cases}
\]

where ties are broken in her favor. From here, the optimal random posterior can be computed by solving the maximizations within and between.

Maximization Within.

In this example, (9) becomes

\[
w^*(\mu) = \max_{\lambda_2 \in \Delta\Delta\Theta} \mathbf{1}\{\lambda_2 \ge 1/2\}
\quad \text{s.t.} \quad \mu_1' \lambda_2 + \mu_1'' (1 - \lambda_2) = \mu
\]

This is simple to solve (see Figure 1). When µ ≤ 1/2, 1{λ2 ≥ 1/2} is maximized by choosing µ′1 = µ″1 = µ, so that λ∗2 = 1. Both P and W will choose action 1, just acting under the prior belief, and so the manager’s payoff is maximal under silence: w∗(µ) = 1{λ∗2 ≥ 1/2} = 1. The optimal minimal subspace is public with no information revealed to either player.

When 3/4 ≥ µ > 1/2, 1{λ2 ≥ 1/2} is maximized by choosing µ′1 = 1/2 and µ″1 = 1, so that λ∗2 = 2(1 − µ). In this region, the manager once again achieves maximal payoff, w∗(µ) = 1{λ∗2 ≥ 1/2} = 1, this time by using private partial information to P only. The optimal minimal subspace is

   e∗µ            λ2
   µ′1 = 1/2      2(1 − µ)
   µ″1 = 1        2µ − 1

When µ > 3/4, it is no longer possible to satisfy the constraint with λ2 ≥ 1/2. That is, if the manager gives no further information to W, he will choose to play action 0. In this region, the manager’s payoff is w∗(µ) = 0 in every minimal subspace.
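The three regions can be collected in a single function. This is a minimal sketch of the case analysis for this illustration only; the function name `within` and its return convention are ours, and in the region µ > 3/4 it returns silence as one representative among the (equally valued) minimal subspaces.

```python
from fractions import Fraction as F

def within(mu):
    """Return (w_star, mu1_low, mu1_high, lam2) for the illustration.

    lam2 is the probability W assigns to P holding the low belief mu1_low.
    """
    mu = F(mu)
    if mu <= F(1, 2):
        return 1, mu, mu, F(1)           # silence: P plays 1, W follows
    if mu <= F(3, 4):
        lam2 = 2 * (1 - mu)              # solves lam2/2 + (1 - lam2) = mu
        return 1, F(1, 2), F(1), lam2    # private partial information to P
    return 0, mu, mu, F(0)               # lam2 >= 1/2 is infeasible

for mu in (F(1, 3), F(2, 3), F(3, 4), F(9, 10)):
    w, lo, hi, lam2 = within(mu)
    assert lo * lam2 + hi * (1 - lam2) == mu   # the constraint in (9) holds
    print(mu, w, lam2)
```

The threshold 3/4 arises because, with µ′1 ≤ 1/2, µ″1 ≤ 1 and λ2 ≥ 1/2, the average belief µ′1 λ2 + µ″1 (1 − λ2) is at most 1 − λ2/2 ≤ 3/4.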

w∗ (µ)

1

Information

to both

Private to P

Silence

µ

1

2

3

4

1

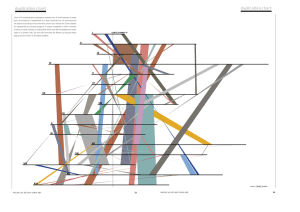

Figure 1: Value of maximization within (dashed) and between (solid).

Maximization Between.

In the region where µ0 ∈ (3/4, 1], randomization between minimal subspaces increases the manager’s expected payoff. The optimal randomization is given by the concave envelope of w∗ (solid line in Figure 1).

For any µ0 ∈ (3/4, 1] the manager maximizes her expected payoff by randomizing between e∗_{3/4} and e∗_1. In this range, it is optimal to send both brothers to e∗_{3/4} with probability 4(1 − µ0), and to e∗_1 with probability 4µ0 − 3. When in e∗_{3/4}, it is an equilibrium for both brothers to play 1, and when in e∗_1 the only equilibrium is for both to play 0. Through this randomization, the manager achieves an expected payoff of w∗(µ0) = 4(1 − µ0).
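The concavification step can be reproduced numerically. The sketch below is one possible way to do it, not the authors' procedure: it evaluates the within-value w∗(µ) = 1{µ ≤ 3/4} from this illustration on a grid (the grid size is our choice, picked so that 3/4 is a grid point), takes the upper hull of the resulting points, and interpolates.

```python
from fractions import Fraction as F

def w_star(mu):
    """Value of maximization within in the illustration (ties favor the manager)."""
    return F(1) if mu <= F(3, 4) else F(0)

def upper_hull(points):
    """Vertices of the upper concave hull of points sorted by x-coordinate."""
    hull = []
    for x, y in points:
        # Pop the last vertex while it lies on or below the chord to (x, y).
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            if (x2 - x1) * (y - y1) - (y2 - y1) * (x - x1) >= 0:
                hull.pop()
            else:
                break
        hull.append((x, y))
    return hull

def cav(points, mu0):
    """Evaluate the concave envelope at mu0 by interpolating hull vertices."""
    hull = upper_hull(points)
    for (x1, y1), (x2, y2) in zip(hull, hull[1:]):
        if x1 <= mu0 <= x2:
            return y1 + (y2 - y1) * (mu0 - x1) / (x2 - x1)
    raise ValueError("mu0 outside the grid")

grid = [F(k, 100) for k in range(101)]
points = [(mu, w_star(mu)) for mu in grid]
print(upper_hull(points), cav(points, F(5, 6)))
```

The hull has vertices at µ = 0, 3/4 and 1, so for µ0 > 3/4 the envelope is the chord between (3/4, 1) and (1, 0), which is the line 4(1 − µ0).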


As an example, consider $\mu_0 = 5/6$. The solution above tells us to send both agents to $e^*_{3/4}$ with probability $2/3$, and both agents to $e^*_1$ with probability $1/3$. The resulting non-minimal random posterior is given by

$$
\begin{array}{c|cc}
\eta & \lambda_2' = 0 & \lambda_2'' = \tfrac{1}{2} \\ \hline
\mu_1' = \tfrac{1}{2} & 0 & \tfrac{1}{3} \\
\mu_1'' = 1 & \tfrac{1}{3} & \tfrac{1}{3}
\end{array}
$$

which results in an expected value to the designer of $w^*(5/6) = 4(1 - \mu_0) = 2/3$. Notice that this random posterior is admissible: it was generated by a randomization over two minimal subspaces, which were themselves admissible, and the priors of which average to $\mu_0$.
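The arithmetic of this example can be checked mechanically. The sketch below is illustrative only: the function names (`w_within`, `optimal_split`, `value_between`) and the dictionary encoding of $\eta$ are hypothetical, and the within-value is hard-coded from the case analysis in the text rather than derived from the underlying game. It uses exact fractions to confirm the weights, the expected payoff, and that the priors of the two minimal subspaces average to $\mu_0$.

```python
from fractions import Fraction as F

def w_within(mu):
    """Value of the maximization within, taken from the case analysis in the text:
    1 when lambda_2 >= 1/2 is feasible (mu <= 3/4), and 0 otherwise."""
    return F(1) if mu <= F(3, 4) else F(0)

def optimal_split(mu0):
    """Weights on the minimal subspaces e*_{3/4} and e*_1 for mu0 in (3/4, 1]."""
    assert F(3, 4) < mu0 <= 1
    return {F(3, 4): 4 * (1 - mu0), F(1): 4 * mu0 - 3}

def value_between(mu0):
    """Designer's value after randomizing between minimal subspaces."""
    if mu0 <= F(3, 4):
        return w_within(mu0)
    return sum(p * w_within(mu) for mu, p in optimal_split(mu0).items())

mu0 = F(5, 6)
split = optimal_split(mu0)

# Inside e*_{3/4}, lambda_2 = 2(1 - 3/4) = 1/2, so P's posterior is 1/2 or 1
# with equal probability; inside e*_1, P's posterior is 1 for sure.
lam = 2 * (1 - F(3, 4))
eta = {
    ("mu1=1/2", "lam2=0"): F(0),
    ("mu1=1/2", "lam2=1/2"): split[F(3, 4)] * lam,
    ("mu1=1", "lam2=0"): split[F(1)],
    ("mu1=1", "lam2=1/2"): split[F(3, 4)] * (1 - lam),
}

# Priors of the two minimal subspaces, weighted by the randomization.
mean_prior = sum(mu * p for mu, p in split.items())
print(split, value_between(mu0), mean_prior, eta)
```

Exact rational arithmetic is used instead of floats so that equalities such as "the priors average to $\mu_0$" can be tested without tolerance.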

8. Discussion

8.1. Redundant Information

8.1.1. Justification for Non-Redundancy

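To understand what is at stake, consider a simple example. Let $\Theta = \{0, 1\}$ and $\mu_0 := \tfrac{1}{2}$. Each player can invest (I) or not invest (NI), and the payoffs are given in Table 3.

$$
\begin{array}{c|cc}
\theta = 0 & I & NI \\ \hline
I & 1, 1 & -2, 0 \\
NI & 0, -2 & 0, 0
\end{array}
\qquad
\begin{array}{c|cc}
\theta = 1 & I & NI \\ \hline
I & 2, 2 & 1, 0 \\
NI & 0, 1 & 0, 0
\end{array}
$$

Table 3: An Investment Game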

Let $S_1 = S_2 = \{0, 1\}$. In information structure $(S, \pi^1)$, $\pi^1(0|\theta) = 1$ for all $\theta$, so that the same message is sent in all states. In information structure $(S, \pi^2)$, $\pi^2(1, 1|\theta) = \tfrac{1}{16}$, $\pi^2(1, 0|\theta) = 0$, $\pi^2(0, 1|\theta) = \tfrac{3}{16}$, and $\pi^2(0, 0|\theta) = \tfrac{3}{4}$ for all $\theta$. Both information structures are completely uninformative about $\theta$ and induce the same random posterior: with probability 1, each player has first-order beliefs $\mu_0$ and this is commonly believed among them. Under $(S, \pi^1)$, there are two BNEs: $\gamma^1_*(I, I|\theta) = 1$ for all $\theta$ and $\gamma^1_{**}(NI, NI|\theta) = 1$ for all $\theta$. Under $(S, \pi^2)$, the pair of strategies in which every player invests iff he receives message 1 induces BNE $\gamma^2_*(\cdot|\theta) = \pi^2(\cdot|\theta)$. This equilibrium does not exist under $(S, \pi^1)$.

The problem raised by the example is that both $(S, \pi^1)$ and $(S, \pi^2)$ induce the same random posterior but they do not have the same set of BNEs. Indeed, $(S, \pi^2)$ is redundant and redundancy is known to cause these discrepancies (e.g., Liu (2009)). So, a random posterior approach based on BNE cannot capture redundant information.
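The claim that "invest iff the message is 1" is a BNE under $(S, \pi^2)$ can be verified directly. The following is a minimal sketch under stated assumptions: the payoff dictionary `u` encodes Table 3 as the own payoff of a player given (own action, other's action), the probabilities in `pi2` are $1/16$, $0$, $3/16$ and $3/4$, and all identifiers are hypothetical names chosen for illustration. For each player and message it computes the (unnormalized) interim gain from following the strategy rather than deviating.

```python
from fractions import Fraction as F

I, NI = "I", "NI"
mu0 = {0: F(1, 2), 1: F(1, 2)}

# u[theta][(own action, other's action)] = own payoff; the game is symmetric.
u = {
    0: {(I, I): 1, (I, NI): -2, (NI, I): 0, (NI, NI): 0},
    1: {(I, I): 2, (I, NI): 1, (NI, I): 0, (NI, NI): 0},
}

# pi2[(s1, s2)]: message distribution, identical in both states (assumed values).
pi2 = {(1, 1): F(1, 16), (1, 0): F(0), (0, 1): F(3, 16), (0, 0): F(3, 4)}

def strategy(message):
    """Candidate equilibrium strategy: invest iff the message is 1."""
    return I if message == 1 else NI

def interim_gain(player, message, deviation):
    """Unnormalized expected gain for `player` (0 or 1), after `message`,
    from following the strategy instead of playing `deviation`."""
    own = strategy(message)
    total = F(0)
    for s, prob in pi2.items():
        if s[player] != message:
            continue
        other = strategy(s[1 - player])
        for theta, p_theta in mu0.items():
            diff = u[theta][(own, other)] - u[theta][(deviation, other)]
            total += prob * p_theta * diff
    return total

gains = {
    (i, m, d): interim_gain(i, m, d)
    for i in (0, 1) for m in (0, 1) for d in (I, NI)
}
is_bne = all(g >= 0 for g in gains.values())
print(is_bne, gains)
```

Dividing each gain by the probability of the message would give the conditional expectation, but the sign, which is all the equilibrium check needs, is unchanged.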

Clearly, we can either assume non-redundant information and work with BNE or work with a different solution concept and incorporate redundant information. We first argue that non-redundant information can be a reasonable assumption, then we use a notion of correlated equilibrium to recover redundant information in the next section. The designer has a monopoly on information, but she does not have a monopoly on correlation,$^{24}$ since any random event can serve as a correlating device among the players, from the weather to the daily lottery. While players are aware that the designer manipulates their beliefs, one can argue that the desire to listen is greater when the messages are informative about the topic at hand (that is, $\theta$) or about the other players’ information (that is, $s_{-i}$), than when the messages have nothing to do with either. In $(S, \pi^2)$, players receive messages known to be manipulative and completely uninformative.

8.1.2. Incorporating Redundant Information

Incorporating redundant information structures in our analysis is appealing, because our approach lets us work with random posteriors instead of information structures, and there are infinitely many (necessarily redundant) information structures inducing every random posterior. Hence, the representation theorem drastically reduces the set of objects to consider. As we will see, incorporating redundancy is only a matter of solution concept.

Definition 5. Given random posterior $\tau \in C$, a correlated equilibrium is a distribution $\gamma \in \Delta(A \times \Theta)$ such that there exists $\sigma : \mathrm{supp}\,\tau \to \Delta A$ such that

(i) $\gamma(a, \theta) = \sum_{t} \sigma(a|t)\, p_\tau(t, \theta)$ for all $(a, \theta)$, and

(ii) for all $i$, $a_i$, $a_i'$ and $t_i$,

$$
\sum_{a_{-i},\, t_{-i},\, \theta} p_\tau(t, \theta)\, \sigma(a_i, a_{-i} \mid t_i, t_{-i}) \bigl( u_i(a_i, a_{-i}, \theta) - u_i(a_i', a_{-i}, \theta) \bigr) \ge 0
\tag{11}
$$

and

$$
\frac{\sum_{\theta} p_\tau(t_i, t_{-i}, \theta)}{\sum_{\theta,\, t_{-i}} p_\tau(t_i, t_{-i}, \theta)}
=
\frac{\sum_{a_{-i},\, \theta} p_\tau(t, \theta)\, \sigma(a \mid t_i, t_{-i})}{\sum_{a_{-i},\, t_{-i},\, \theta} p_\tau(t, \theta)\, \sigma(a \mid t_i, t_{-i})}
\tag{12}
$$

$^{24}$ Information about the state affects the correlation between players’ actions, but players can also correlate their behavior based on events that are completely uninformative.

(11) and (12) describe the agent normal-form correlated equilibrium of Forges (1993, 2006). Every player $i$ has a hierarchy $t_i$ and agent $(i, t_i)$ is recommended to play $a_i$ via $\sigma$. This recommendation carries no information about $\theta$ beyond $t_i$, so that first-order beliefs are unaffected. But the recommendation could carry information about others’ types. (12) guarantees that this is not the case: for all $i$, $\mathrm{prob}(t_{-i}|t_i) = \mathrm{prob}(t_{-i}|t_i, a_i)$ for all $a_i$, ensuring that $\sigma$ preserves the beliefs from $\tau$. As before, the designer manipulates players’ beliefs as if she randomized between minimal random posteriors; once one is drawn, the realization of a belief hierarchy is accompanied by an action recommendation (from the designer) according to $\sigma$. Every agent $(i, t_i)$ will follow this recommendation because of (11). In the above example, we can check that $\gamma(a, \theta) = \pi^2(a|\theta)\mu_0(\theta)$ is a correlated equilibrium under $\tau$, so that redundancy is now taken into account.

From here, the representation theorem holds in the same way as before, since the correlated equilibrium operator is also linear. The designer now evaluates her expected payoff in a random posterior at some selected correlated equilibrium.$^{25}$

8.2. Bayes Correlated Equilibrium Approach to Information Design

In their seminal work, Bergemann and Morris (2015) (BM hereafter) introduce a

notion of correlated equilibrium in incomplete information games—Bayes correlated equilibrium (BCE)—that can be used in information design. Taneva (2015)

is one of the first papers to apply it to information design. This section presents

this alternative approach.

$^{25}$ Several notions of correlated equilibrium are available in incomplete information games (see Forges (1993)). As we will see in the next section, the Bayes correlated equilibrium concept (BCE) of Bergemann and Morris (2015) captures the implications of common knowledge of rationality and the fact that the players have observed at least the information contained in $\tau$ (assuming $\tau$ is chosen by the designer). In this case, random posterior $\tau$ has no raison d’être since the action recommendations in $\sigma$ supplant it. For example, choosing to give no information is always optimal, since the set of BCEs is largest in this situation.


Instead of working with random posteriors, the information design problem can be solved by working directly with information structures. The epistemic relationship between BNE and BCE (Theorem 1 in BM) shows that the set of BCE of a game (assuming that players have no information beyond their prior) is the union of all BNE under all information structures. Accordingly, the designer’s favorite BCE corresponds to her favorite BNE under an optimal information structure. In fact, the optimal BCE so found is a map $\sigma^* : \Theta \to \Delta A$ that can be used as a (direct) information structure $\pi^* := \sigma^*$, in which it is incentive compatible for players to follow the recommendations made by $\sigma^*$. In a nutshell, the BCE approach reduces the information design problem to a choice of an incentive-compatible direct information structure:

$$
\begin{aligned}
\max_{\pi : \Theta \to \Delta A} \quad & \sum_{\theta,\, a} v(a, \theta)\, \pi(a|\theta)\, \mu_0(\theta) \\
\text{s.t.} \quad & \sum_{\theta,\, a_{-i}} \bigl( u_i(a, \theta) - u_i(a_i', a_{-i}, \theta) \bigr)\, \pi(a|\theta)\, \mu_0(\theta) \ge 0 \quad \forall i,\ a_i, a_i' \in A_i .
\end{aligned}
\tag{13}
$$

Since all incentive constraints and the designer’s expected payoff are linear in $\pi$, this method formulates the problem as a linear program. In the manager’s problem, the solution gives the point on the concave envelope (Figure 1) corresponding to the optimal value at $\mu := \mu_0$.

Just as our extremal representation takes advantage of convexity, the set of

BCEs {π : Θ → ∆A} characterized by (13) is also convex. Can the same type

of extremal representation, available in the world of beliefs, also be of use in the

world of BCEs? Since every linear program has an extreme point that is an optimal

solution, finding the extreme BCEs is important but convexifying is unnecessary.
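The linearity of the constraints in (13), and the resulting convexity of the feasible set, can be illustrated on the investment game of Section 8.1.1. The sketch below is an illustration under assumptions, not the paper's procedure: the payoff dictionary `u` is one reading of Table 3, the helper names (`obedience_slacks`, `is_obedient`, `mix`) are hypothetical, and no designer objective $v$ is specified, so only feasibility is checked. It evaluates the left-hand side of each constraint for two direct information structures and for a convex combination of them.

```python
from fractions import Fraction as F
from itertools import product

ACTIONS = ("I", "NI")
mu0 = {0: F(1, 2), 1: F(1, 2)}

# Own payoff u[theta][(own, other)] in the symmetric investment game (assumed reading).
u = {
    0: {("I", "I"): 1, ("I", "NI"): -2, ("NI", "I"): 0, ("NI", "NI"): 0},
    1: {("I", "I"): 2, ("I", "NI"): 1, ("NI", "I"): 0, ("NI", "NI"): 0},
}

def obedience_slacks(pi):
    """Left-hand sides of the constraints in (13).
    pi[theta][(a1, a2)] is the probability of recommending (a1, a2) in state theta.
    Keys of the result are (player, recommended action, deviation)."""
    slacks = {}
    for i, a_i, dev in product((0, 1), ACTIONS, ACTIONS):
        total = F(0)
        for theta, a_other in product(mu0, ACTIONS):
            profile = (a_i, a_other) if i == 0 else (a_other, a_i)
            gain = u[theta][(a_i, a_other)] - u[theta][(dev, a_other)]
            total += gain * pi[theta][profile] * mu0[theta]
        slacks[(i, a_i, dev)] = total
    return slacks

def is_obedient(pi):
    return all(s >= 0 for s in obedience_slacks(pi).values())

def mix(pi_a, pi_b, weight):
    """Convex combination weight * pi_a + (1 - weight) * pi_b."""
    return {
        th: {a: weight * pi_a[th][a] + (1 - weight) * pi_b[th][a] for a in pi_a[th]}
        for th in pi_a
    }

profiles = list(product(ACTIONS, ACTIONS))

def point_mass(target):
    """Direct structure that recommends `target` in every state."""
    return {th: {a: (F(1) if a == target else F(0)) for a in profiles} for th in mu0}

always_invest = point_mass(("I", "I"))
never_invest = point_mass(("NI", "NI"))
blend = mix(always_invest, never_invest, F(1, 3))
print(is_obedient(always_invest), is_obedient(never_invest), is_obedient(blend))
```

Because every slack is linear in $\pi$, the slacks of `blend` are the same weighted averages of the slacks of its two components, which is why feasibility is preserved under mixing.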

In some environments, the random posterior approach allows the problem to be deconstructed into max within and concavification. In these cases, as in the manager’s problem, working with beliefs is most instructive and the whole concave envelope can be computed. In other instances, working with hierarchies of beliefs may be impractical, in which case the BCE approach offers an elegant and tractable way to bypass the complexity of the universal type space given a specific $\mu_0$.

The random posterior approach has other benefits, of applied interest, that

come from deconstructing the problem. For one, the decomposition of random

posteriors into extremal ones, in each of which a BNE is selected, gives us the

opportunity to study the designer’s attitude toward equilibria by varying the selection rule and observing how it changes her optimal choice. The BCE approach

does not allow such study because BCE includes all BNEs of all (consistent) belief
