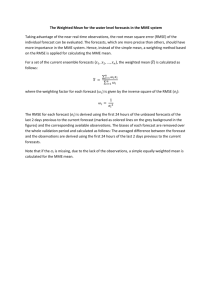

Measurement validation by observation predictions
