Matrix-Group Algorithm via Improved Whitening Process for

advertisement

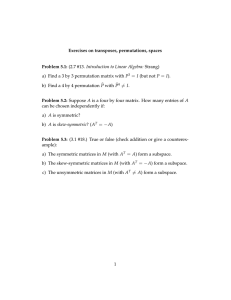

962 IEEE TRANSACTIONS ON SIGNAL PROCESSING, VOL. 55, NO. 3, MARCH 2007

Matrix-Group Algorithm via Improved Whitening Process for Extracting Statistically Independent Sources From Array Signals

Da-Zheng Feng, Member, IEEE, Wei Xing Zheng, Senior Member, IEEE, and Andrzej Cichocki

Abstract—This paper addresses the problem of blind separation of multiple independent sources from observed array output signals. The main contributions of this paper are an improved whitening scheme for estimating the signal subspace, a novel biquadratic contrast function for extracting independent sources, and an efficient alternative method for the joint approximate diagonalization of a set of diagonalization-structural matrices. Specifically, an improved whitening scheme is first developed by estimating the signal subspace jointly from a set of diagonalization-structural matrices, using the proposed cyclic maximizer of an associated cost function. The global asymptotic convergence of the proposed cyclic maximizer is analyzed and proved. Next, a novel biquadratic contrast function is proposed for extracting a single independent component from a slice matrix group of a cumulant of any order of the array signals in the presence of temporally white noise. A fast fixed-point algorithm, which is a cyclic minimizer, is constructed for finding a minimum point of the proposed contrast function, and its global asymptotic convergence is analyzed. Multiple independent components are then obtained by repeatedly applying the proposed fixed-point algorithm for extracting a single independent component, and orthogonality among them is achieved by the well-known QR factorization. The performance of the proposed algorithms is illustrated by simulation results and compared with that of three related blind source separation algorithms.
Index Terms—Biquadratic contrast function, blind source separation, cyclic maximizer, cyclic minimizer, diagonalization, eigenvalue decomposition (EVD), independent components, JADE, signal subspace extraction, whitening technique.

I. INTRODUCTION

THE separation of multiple unknown sources from array (multisensor) data has been widely applied in many areas, for example, extraction of individual speech signals from a mixture of simultaneous speakers (as in video conferencing or the so-called "cocktail party" environment), elimination of cross interference between horizontally and vertically polarized microwaves in wireless communications and radar, and separation of multiple mobile telephone signals at a base station, to mention just a few. During the past two decades, the problem of blind source separation (BSS) has been extensively studied in the literature, and a number of efficient algorithms have been developed [1]–[28]. Here, we review only several representative methods. The existing BSS algorithms fall roughly into two broad classes.

Manuscript received March 12, 2005; revised April 17, 2006. The associate editor coordinating the review of this manuscript and approving it for publication was Prof. Tulay Adali. This work was supported in part by the National Natural Science Foundation of China (60372049) and in part by a Research Grant from the Australian Research Council. D.-Z. Feng is with the National Laboratory of Radar Signal Processing, Xidian University, 710071, Xi'an, China (e-mail: dzfeng@rsp.xidian.edu.cn). W. X. Zheng is with the School of Computing and Mathematics, University of Western Sydney, Penrith South DC, NSW 1797, Australia (e-mail: w.zheng@uws.edu.au). A. Cichocki is with the Brain Science Institute, RIKEN, Saitama 351-0198, Japan (e-mail: cia@bsp.brain.riken.go.jp). Digital Object Identifier 10.1109/TSP.2006.887126
The first class comprises stochastic adaptive algorithms, which are often called online algorithms in the signal processing field. The first adaptive algorithm for BSS was established by Jutten and Herault [1]; it works well in many applications, particularly in cross-interference situations where only a modest amount of mixing occurs. Since Jutten and Herault's work, many adaptive BSS methods have been proposed [3]–[12], [19], [20]. In particular, an efficient signal processing framework, independent component analysis (ICA), was established in [2] and has since become popular. Adaptive BSS algorithms based on the natural gradient were proposed in [3]–[9], while an adaptive BSS technique based on the relative gradient was given in [12]. The BSS algorithms based on the natural gradient and the relative gradient work very satisfactorily and have been successfully applied to the adaptive separation of mixed speech signals observed by a realistic microphone array [29], [30]. The well-known nonlinear Hebbian learning rules given in [10], [11] can also be used to implement ICA efficiently. A significant advantage of all adaptive BSS algorithms is that they can track a time-varying mixing matrix and blindly extract nonstationary source signals from a nonstationary mixture. However, the global convergence of most adaptive BSS algorithms, including the well-known natural-gradient and relative-gradient algorithms, remains to be proved. Moreover, to estimate the source signals well, an appropriate contrast function that depends on the source signals must be carefully selected. Hence, it remains necessary to find adaptive BSS algorithms with lower computational complexity, better performance, established global convergence, and greater robustness against interference, noise, and dynamic changes in the number of source signals.
The second class of BSS algorithms, which is the main concern of this paper, consists of offline or batch algorithms. One of the most important works in offline BSS is the joint approximate diagonalization of a set of fourth-order cumulant eigenmatrices (JADE for short), developed by Cardoso et al. [13], [14]. The first step of JADE is to "whiten" the array received vector, i.e., to transform its unknown mixture matrix into an unknown unitary matrix. The second step is to estimate this unitary matrix by "joint diagonalization" of the whole set of fourth-order cumulant or covariance matrices of the whitened process. The JADE cost function, based on the notion of a "contrast function," was shown to be the least-squares solution to the joint diagonalization problem [17]. Building on JADE, Belouchrani et al. [15] developed a second-order-statistics-based joint diagonalization for blindly extracting sources with different second-order spectra, known as the second-order blind identification (SOBI) algorithm. Moreover, Moreau [16] presented the joint approximate diagonalization of cumulant matrices of any order greater than or equal to three. In fact, JADE itself is a very general signal processing framework that can jointly and approximately diagonalize a set of cumulant matrices of any order (including second-order correlation matrices with diagonal structure). In addition, the matrix-pencil approach [25], [26] offers low computational complexity and performance comparable to that of JADE.

1053-587X/$25.00 © 2007 IEEE
All these batch algorithms are essentially robust algebraic methods based on offline matrix computation, so they are not applicable to nonstationary circumstances such as adaptive speech-source retrieval. It is worth mentioning that the first step of these offline algorithms can be implemented adaptively by using so-called tracking techniques for the principal eigenvalues and the corresponding eigenvectors of the autocorrelation matrix [31], [32]. Their second step, however, is implemented by a series of Jacobi rotations that are not easily converted into an adaptive scheme. This is the intrinsic reason why these algorithms are not suited to adaptive processing.

The two broad classes of BSS algorithms surveyed above are generally concerned with jointly extracting all source signals, i.e., all independent components. There also exist efficient algorithms [19]–[28] that find one independent component at a time. These algorithms can usually reduce computational complexity and possess global convergence. They, too, can be broadly classified into two major categories: online and offline approaches. In the online category, the adaptive approach of Delfosse and Loubaton [20] appears to be an efficient adaptive algorithm with global convergence for separating an arbitrary number of sources. Delfosse and Loubaton's algorithm extracts one independent source at each stage and obtains multiple independent sources via the deflation transformation. An adaptive unsupervised algorithm for extracting a single independent component was proposed in [19]. Although this algorithm also reduces computational complexity, for some initial values it sometimes converges again to a previously extracted source during the multistage separation process.
Among the offline approaches for extracting a single independent component, a representative work is the fast fixed-point algorithm for ICA [21], [22]. More recently, using the kurtosis-maximization contrast function and Gram–Schmidt orthogonalization, Ding and Nguyen [23] and Papadias [24] developed efficient ICA algorithms. The fixed-point algorithms extract sources one by one and obtain all the sources by explicitly or implicitly using the deflation transformation introduced by Delfosse and Loubaton [20]. Note that these fast fixed-point algorithms can further improve the convergence of adaptive algorithms. An efficient online algorithm for sequentially extracting one independent component from simultaneously mixed data corrupted by spatially colored noise was established in [27] and [28]. There, a biquadratic contrast function was defined that can be efficiently solved by so-called cyclic minimizers [27], [33]. All independent components can be obtained by multistage decomposition, but multistage decomposition may suffer from cumulative errors.

By combining the basic concept of the approaches that find one independent component at a time with the fundamental idea of JADE, namely the joint approximate diagonalization of a set of cumulant matrices of any order (including second-order correlation matrices with diagonal structure), this paper develops an efficient alternative approach to the JADE problem. This approach fully exploits the diagonalization-structural information contained in statistics of any order of the independent sources. An improved whitening technique is established using the basic idea of JADE, and a novel biquadratic contrast function is derived. We also propose an efficient iterative algorithm for finding the optimal solution of the contrast function.
More importantly, both steps of the proposed method are essentially gradient-type search techniques and can thus easily be converted into adaptive algorithms.

The rest of the paper is organized as follows. Section II formulates the BSS problem. Section III establishes an efficient whitening scheme by jointly extracting the signal subspace from a set of diagonalization-structural matrices using the so-called cyclic maximizer. In Section IV, BSS is achieved in the presence of temporally white noise: a novel biquadratic contrast function is defined for extracting a single independent component, multiple independent components are obtained by Gram–Schmidt orthogonalization, and the global convergence of the algorithm for extracting a single independent component is analyzed. Section V gives two computer simulation examples. Conclusions are summarized in Section VI.

II. BLIND SOURCE SEPARATION PROBLEM

A. Signal Model

Consider an array composed of $M$ sensors with arbitrary locations and arbitrary directional characteristics. Assume that $N$ narrowband sources centered on a known frequency impinge on the array from distinct directions and that $N < M$. Using the complex envelope representation, the vector received by the array can be expressed as

$$\mathbf{x}(t) = \mathbf{A}\mathbf{s}(t) + \mathbf{n}(t) \tag{2.1}$$

where $\mathbf{A}$ is the $M \times N$ array mixture matrix, $\mathbf{s}(t)$ denotes the $N \times 1$ signal vector, $\mathbf{x}(t)$ represents the $M \times 1$ array output vector, and $\mathbf{n}(t)$ is the $M \times 1$ noise vector. Given $T$ samples of the array output vector, the blind source separation problem is to estimate the array mixture matrix $\mathbf{A}$ from the available sampled data when the array manifold and characteristics are unknown. To make this problem solvable, we make the following four commonly used assumptions.

A1) The unknown mixture matrix $\mathbf{A}$ is of full column rank.

A2) The source signal vector $\mathbf{s}(t)$ is a stationary vector process with the second-order delayed correlation matrices

$$\mathbf{R}_s(\tau) = E[\mathbf{s}(t+\tau)\mathbf{s}^H(t)] = \operatorname{diag}[\rho_1(\tau), \ldots, \rho_N(\tau)] \tag{2.2}$$

where $\rho_i(\tau) = E[s_i(t+\tau)s_i^*(t)]$ denotes the correlation coefficient of the $i$th independent source signal for delay $\tau$; $\rho_i(0)$ is also called the energy or power of the $i$th independent source signal. In (2.2), the superscript $H$ denotes the complex conjugate transpose, and $\operatorname{diag}[\cdot]$ represents a diagonal matrix formed from the elements of its vector-valued argument. The fourth-order cumulants are defined by

$$\operatorname{cum}(s_i(t), s_j^*(t), s_k^*(t), s_l(t)) = \kappa_i\,\delta(i,j,k,l) \quad \text{for } 1 \le i,j,k,l \le N \tag{2.3}$$

where the superscript $*$ denotes the complex conjugate, $\delta(\cdot)$ stands for the discrete-time Kronecker function, and $\kappa_i$ represents the kurtosis of the $i$th source.

A3) At most one of the source signals in $\mathbf{s}(t)$ is Gaussian white.

A4) The additive noise vector $\mathbf{n}(t)$ is a Gaussian, stationary, temporally white, zero-mean complex random process independent of the source signals, i.e., the following statistical relations hold:

$$E[\mathbf{n}(t+\tau)\mathbf{n}^H(t)] = \sigma^2\mathbf{I}_M\,\delta(\tau) \tag{2.4}$$

$$E[\mathbf{s}(t+\tau)\mathbf{n}^H(t)] = \mathbf{0} \quad \text{for all } \tau \tag{2.5}$$

where $\delta(\tau)$ denotes the Kronecker function and $\sigma^2$ represents the noise variance.

It is easy to see that under the above assumptions, the correlation matrices $\mathbf{R}_x(\tau) = E[\mathbf{x}(t+\tau)\mathbf{x}^H(t)]$ of the array output vector possess the following diagonalization structure:

$$\mathbf{R}_x(0) = \mathbf{A}\mathbf{R}_s(0)\mathbf{A}^H + \sigma^2\mathbf{I}_M \tag{2.6}$$

$$\mathbf{R}_x(\tau) = \mathbf{A}\mathbf{R}_s(\tau)\mathbf{A}^H \quad \text{for } \tau \neq 0. \tag{2.7}$$

Moreover, it can be shown from (2.3) and (2.5) that the fourth-order cumulant slice matrices of the array output vector sequence also have the diagonalization structure

$$\mathbf{Q}_x(\mathbf{G}) = \mathbf{A}\operatorname{diag}[\kappa_1\mathbf{a}_1^H\mathbf{G}\mathbf{a}_1, \ldots, \kappa_N\mathbf{a}_N^H\mathbf{G}\mathbf{a}_N]\mathbf{A}^H \quad \text{for any } M \times M \text{ matrix } \mathbf{G} \tag{2.8}$$

in which $[\mathbf{Q}_x(\mathbf{G})]_{ij} = \sum_{k,l=1}^{M}\operatorname{cum}(x_i(t), x_j^*(t), x_k^*(t), x_l(t))\,g_{kl}$, where $g_{kl}$ denotes the $(k,l)$th element of the matrix $\mathbf{G}$ and $\mathbf{a}_i$ is the $i$th column of $\mathbf{A}$.

It is worth mentioning that the fourth-order cumulant slice matrices and the nonzero-delay correlation matrices possess the ideal diagonalization structure shown in (2.8) and (2.7), respectively. However, since in practice these matrices must be estimated from $T$ samples of the array output vector, they can only have an approximate diagonalization structure. For convenience of illustration, a general matrix symbol $\mathbf{R}_k$, $k = 1, \ldots, K$, is used to denote either a slice matrix of a cumulant of any order or a delayed correlation matrix. Hence, from the viewpoint of matrix theory [34], the BSS problem should normally be accomplished by estimating the array mixture matrix $\mathbf{A}$ from a set of approximate diagonalization-structural matrices $\{\mathbf{R}_k\}$. Estimating the array mixture matrix from such a set poses an interesting and yet challenging algebraic problem.

B. Indeterminacy of Blind Source Separation

In the blind context [18], a complete identification of the mixture matrix $\mathbf{A}$ is impossible because exchanging a fixed scalar factor between a given source signal and the corresponding column of $\mathbf{A}$ does not affect the observations, as shown by the following relation:

$$\mathbf{x}(t) = \sum_{i=1}^{N}\frac{\mathbf{a}_i}{\alpha_i}\,\alpha_i s_i(t) + \mathbf{n}(t) \tag{2.9}$$

where $\alpha_i$ is an arbitrary nonzero complex factor and $\mathbf{a}_i$ denotes the $i$th column of $\mathbf{A}$. Notice that even though the magnitudes of the columns of $\mathbf{A}$ are undetermined, we can still uniquely find the direction of each $\mathbf{a}_i$. True indeterminacy arises in the case of degenerate eigenvalues [13]–[15]. However, if we consider the simultaneous diagonalization of a set of cumulant slice or correlation matrices, there is little chance of such eigenvalue degeneracy. In Sections III and IV, a new least-squares approach to the extraction of a single independent component will be developed. For BSS problems [18], the indeterminacy associated with the order in which the source signals are arranged is acceptable. In other words, an estimate $\hat{\mathbf{s}}(t)$ is acceptable if $\hat{\mathbf{s}}(t) = \mathbf{P}\mathbf{s}(t)$, where $\mathbf{P}$ is any permutation matrix and $\mathbf{s}(t)$ denotes the true source signal vector.

III. IMPROVED WHITENING SCHEME

A. Classical Whitening Method

The classical whitening process is a key step in many BSS techniques [13], [15], [18], [35], [36]. When the noise is spatially and temporally white, the autocorrelation matrix $\mathbf{R}_x(0)$ with zero time delay has the ideal form (2.6), which can be used to separate the signal subspace from the noise subspace [37], [38].
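The structures (2.6) and (2.7) hold for exact statistics; in practice the correlation matrices are estimated from $T$ samples. The following is a minimal NumPy sketch of the sample estimator $\hat{\mathbf{R}}_x(\tau) = \frac{1}{T-\tau}\sum_t \mathbf{x}(t+\tau)\mathbf{x}^H(t)$, together with a hypothetical data generator for model (2.1). The function names `delayed_correlation` and `simulate_array`, and the choice of unit-power AR(1) sources (which gives $\mathbf{R}_s(\tau) = \operatorname{diag}[\rho_i^\tau]$ in closed form), are illustrative assumptions, not part of the paper.

```python
import numpy as np


def delayed_correlation(x: np.ndarray, tau: int) -> np.ndarray:
    """Sample estimate of R_x(tau) = E[x(t+tau) x^H(t)] from an M x T data matrix x."""
    T = x.shape[1]
    if not 0 <= tau < T:
        raise ValueError("tau must satisfy 0 <= tau < T")
    # Average the outer products x(t+tau) x^H(t) over the T - tau available pairs.
    return x[:, tau:] @ x[:, :T - tau].conj().T / (T - tau)


def simulate_array(A: np.ndarray, rho: np.ndarray, T: int, sigma2: float,
                   rng: np.random.Generator) -> np.ndarray:
    """Hypothetical test data for model (2.1): unit-power complex AR(1) sources with
    poles rho (so R_s(tau) = diag(rho**tau) for tau >= 0) plus white noise of variance sigma2."""
    M, N = A.shape
    w = (rng.standard_normal((N, T)) + 1j * rng.standard_normal((N, T))) / np.sqrt(2)
    s = np.empty((N, T), dtype=complex)
    s[:, 0] = w[:, 0]  # start in the stationary distribution (unit power)
    gain = np.sqrt(1.0 - np.abs(rho) ** 2)
    for t in range(1, T):
        s[:, t] = rho * s[:, t - 1] + gain * w[:, t]
    n = np.sqrt(sigma2 / 2) * (rng.standard_normal((M, T)) + 1j * rng.standard_normal((M, T)))
    return A @ s + n
```

With data from `simulate_array`, `delayed_correlation(x, 1)` should approach $\mathbf{A}\operatorname{diag}[\boldsymbol{\rho}]\mathbf{A}^H$ as $T$ grows, as in (2.7), while `delayed_correlation(x, 0)` additionally carries the $\sigma^2\mathbf{I}_M$ term of (2.6). Only approximate diagonal structure survives at finite $T$, which is the situation the rest of the paper addresses.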
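Notice that the classical whitening process requires two conditions: i) the autocorrelation matrix $\mathbf{R}_x(0)$ with zero time delay is positive definite, and ii) the covariance matrix of the noise vector is a diagonal matrix with identical diagonal elements. Under these conditions, the signal subspace and the noise subspace can be separated by the eigenvalue decomposition (EVD) of $\mathbf{R}_x(0)$ [10]–[15], [18], [35], [36]. The EVD of $\mathbf{R}_x(0)$ is defined by

$$\mathbf{R}_x(0) = \mathbf{U}\mathbf{\Lambda}\mathbf{U}^H \tag{3.1}$$

where $\mathbf{U} = [\mathbf{u}_1, \ldots, \mathbf{u}_M]$ denotes the eigenvector matrix, each column of which is an eigenvector, and $\mathbf{\Lambda} = \operatorname{diag}[\lambda_1, \ldots, \lambda_M]$ is the eigenvalue matrix, whose diagonal elements are the eigenvalues arranged in decreasing order. If the number of sources $N$ is unknown, it can usually be estimated from the eigenvalue distribution by an information-theoretic criterion [39]. So here we assume that the number of sources $N$ is known and that $N < M$. Let $\mathbf{U}_s = [\mathbf{u}_1, \ldots, \mathbf{u}_N]$ represent the signal subspace, and let $\mathbf{\Lambda}_s = \operatorname{diag}[\lambda_1, \ldots, \lambda_N]$ stand for the signal eigenvalue matrix associated with the source signals. Considering (2.6), the noise variance can be computed by

$$\sigma^2 = \frac{1}{M-N}\sum_{i=N+1}^{M}\lambda_i. \tag{3.2}$$

Since $\sum_{i=1}^{M}\lambda_i = \operatorname{tr}[\mathbf{R}_x(0)]$, the above formula can be rewritten in the following useful form:

$$\sigma^2 = \frac{\operatorname{tr}[\mathbf{R}_x(0)] - \operatorname{tr}[\mathbf{\Lambda}_s]}{M-N}. \tag{3.3}$$

Then (3.1) can be expressed as

$$\mathbf{R}_x(0) = \mathbf{U}_s(\mathbf{\Lambda}_s - \sigma^2\mathbf{I}_N)\mathbf{U}_s^H + \sigma^2\mathbf{I}_M. \tag{3.4}$$

Comparing (3.4) with (2.6) yields

$$\mathbf{A}\mathbf{R}_s(0)\mathbf{A}^H = \mathbf{U}_s(\mathbf{\Lambda}_s - \sigma^2\mathbf{I}_N)\mathbf{U}_s^H. \tag{3.5}$$

Defining the matrix $\mathbf{W} = (\mathbf{\Lambda}_s - \sigma^2\mathbf{I}_N)^{-1/2}\mathbf{U}_s^H$, it can be shown that $\mathbf{W}$ is a whitening matrix. In fact, pre- and post-multiplying (3.5) by $\mathbf{W}$ and its complex conjugate transpose $\mathbf{W}^H$, respectively, we obtain

$$\mathbf{W}\mathbf{A}\mathbf{R}_s(0)\mathbf{A}^H\mathbf{W}^H = \mathbf{I}_N. \tag{3.6}$$

This shows that $\mathbf{W}\mathbf{A}\mathbf{R}_s^{1/2}(0)$ is a unitary matrix, which is denoted by $\mathbf{B}$. Since BSS has a scaling indeterminacy, we can assume without loss of generality that $\mathbf{R}_s(0) = \mathbf{I}_N$. Then $\mathbf{W}\mathbf{A}$ takes the simple form $\mathbf{W}\mathbf{A} = \mathbf{B}$. The diagonalization-structural information of $\mathbf{R}_x(0)$ has now been fully exploited by the whitening matrix $\mathbf{W}$.

In practice, if $\mathbf{R}_x(0)$ is replaced by the estimate $\hat{\mathbf{R}}_x(0)$ calculated from $T$ samples of the array output vector sequence, we obtain analogous results. Perform the EVD $\hat{\mathbf{R}}_x(0) = \hat{\mathbf{U}}\hat{\mathbf{\Lambda}}\hat{\mathbf{U}}^H$, where $\hat{\mathbf{U}}$ is the eigenvector matrix and $\hat{\mathbf{\Lambda}}$ is the corresponding eigenvalue matrix with its elements arranged in decreasing order. The estimate of the signal subspace is then $\hat{\mathbf{U}}_s = [\hat{\mathbf{u}}_1, \ldots, \hat{\mathbf{u}}_N]$, the estimate of the signal eigenvalue matrix is $\hat{\mathbf{\Lambda}}_s = \operatorname{diag}[\hat{\lambda}_1, \ldots, \hat{\lambda}_N]$, and the estimate of the noise variance is $\hat{\sigma}^2 = (\operatorname{tr}[\hat{\mathbf{R}}_x(0)] - \operatorname{tr}[\hat{\mathbf{\Lambda}}_s])/(M-N)$. Thus, the estimate of the whitening matrix is given by $\hat{\mathbf{W}} = (\hat{\mathbf{\Lambda}}_s - \hat{\sigma}^2\mathbf{I}_N)^{-1/2}\hat{\mathbf{U}}_s^H$.

B. Alternative Whitening Method

The whitening technique above depends on the EVD of the positive definite autocorrelation matrix $\mathbf{R}_x(0)$, so it may not be easily extended to more general cases [40]. Moreover, even when $\mathbf{R}_x(0)$ is available, the whitening technique may also be given an iterative form. To lay the groundwork for the improved whitening method proposed in Section III-C, we first present an alternative whitening technique. It is easily shown that all column vectors of the matrix $\mathbf{U}_s\mathbf{Q}$ also form a set of basis vectors of the signal subspace, where $\mathbf{Q}$ is an arbitrary $N \times N$ unitary matrix. We will show that it is sufficient to find an arbitrary complete set of basis vectors of the signal subspace [41], [42]. Let

$$\operatorname{span}\{\mathbf{U}_s\} = \operatorname{span}\{\mathbf{A}\} \tag{3.7}$$

also be called the principal subspace. As shown in [41], the iterative algorithm for maximizing the cost function

$$J_1(\mathbf{Y}) = \operatorname{tr}[\mathbf{Y}^H\mathbf{R}_x(0)\mathbf{Y}] \quad \text{subject to } \mathbf{Y}^H\mathbf{Y} = \mathbf{I}_N \tag{3.8}$$

converges to a matrix $\mathbf{Y}$ formed by a complete set of basis vectors of the signal subspace.

Definition 3.1 [43], [44]: Let $\mathcal{S}$ be any set in $\mathbb{C}^{M \times N}$. A function $J$ defined on $\mathcal{S}$ is called a Lyapunov or energy function associated with a discrete sequence $\{\mathbf{Y}(i)\}$ if i) $J$ is continuous, ii) $J(\mathbf{Y}(i+1)) - J(\mathbf{Y}(i)) \ge 0$ for the discrete time $i$, and iii) $J$ is bounded for each finite $\mathbf{Y}$.

It can be verified that the following lemma holds for $J_1(\mathbf{Y})$.

Lemma 3.1 [41]: Given the cost function (3.8) and provided that $\lambda_N > \lambda_{N+1}$, the global maximum point set of $J_1(\mathbf{Y})$ is given by

$$\Omega_1 = \{\mathbf{Y} \mid \mathbf{Y} = \mathbf{U}_s\mathbf{Q} \text{ for an arbitrary unitary matrix } \mathbf{Q}\} \tag{3.9}$$

while all other points are unstable or saddle points.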
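The classical whitening steps (3.1)–(3.6) can be sketched in a few lines of NumPy. This is a minimal illustration under the assumptions $N < M$ and a known $N$; the function name `classical_whitening` is hypothetical. Note that `numpy.linalg.eigh` returns eigenvalues in ascending order, so they are re-sorted into the decreasing order used in (3.1).

```python
import numpy as np


def classical_whitening(R0: np.ndarray, N: int):
    """Classical whitening from the EVD of R_x(0): returns W, sigma^2, and U_s.

    Follows (3.1)-(3.3) and W = (Lambda_s - sigma^2 I)^(-1/2) U_s^H.
    """
    M = R0.shape[0]
    lam, U = np.linalg.eigh(R0)            # Hermitian EVD, ascending eigenvalues
    order = np.argsort(lam)[::-1]          # reorder to decreasing, as in (3.1)
    lam, U = lam[order], U[:, order]
    Us, lam_s = U[:, :N], lam[:N]          # signal subspace and signal eigenvalues
    sigma2 = (np.trace(R0).real - lam_s.sum()) / (M - N)   # noise variance, (3.3)
    W = np.diag(1.0 / np.sqrt(lam_s - sigma2)) @ Us.conj().T
    return W, sigma2, Us
```

Passing the sample estimate $\hat{\mathbf{R}}_x(0)$ instead of $\mathbf{R}_x(0)$ gives $\hat{\mathbf{W}}$ exactly as described in the "In practice" paragraph; with exact statistics and $\mathbf{R}_s(0) = \mathbf{I}_N$, the product `W @ A` is unitary, which is the content of (3.6).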
So $J_1(\mathbf{Y})$ can be taken as a Lyapunov function of a discrete sequence $\{\mathbf{Y}(i)\}$. If the constraint in (3.8) is not considered, the gradient of $J_1(\mathbf{Y})$ is given by $2\mathbf{R}_x(0)\mathbf{Y}$. Thus, taking the gradient at the old point as the updating matrix and orthogonalizing the columns of the updating matrix results in the following classical power iteration [34].

Randomly produce $\mathbf{Y}(0)$; for $i = 1, 2, \ldots$, perform

$$\tilde{\mathbf{Y}}(i) = \mathbf{R}_x(0)\mathbf{Y}(i-1) \tag{3.10a}$$

$$[\mathbf{Y}(i), \#] = \operatorname{qr}(\tilde{\mathbf{Y}}(i)) \tag{3.10b}$$

where $\operatorname{qr}(\cdot)$ denotes the column orthogonalization (QR factorization) and $\#$ represents the uninteresting term; if $\mathbf{Y}(i)$ has converged to within a prescribed tolerance, stop the iteration.

It can be shown by matrix theory [34] that the following Theorem 3.1 holds for the above iteration.

Theorem 3.1: Given the power iteration (3.10) and provided that $\lambda_N > \lambda_{N+1}$ and that $\mathbf{U}_s^H\mathbf{Y}(0)$ is nonsingular, $\mathbf{Y}(i)$ globally and exponentially converges to a point in $\Omega_1$ as $i \to \infty$.

In particular, the signal subspace can also be obtained by performing the singular value decomposition (SVD) of a matrix $\mathbf{R}_k$ in the set $\{\mathbf{R}_k\}$ [37], [45], provided that the noise-related term in $\mathbf{R}_k$ is low enough that the principal singular subspace, spanned by the principal singular vectors associated with the $N$ largest singular values of $\mathbf{R}_k$, equals the signal subspace. Perform the SVD

$$\mathbf{R}_k = \mathbf{E}\mathbf{\Sigma}\mathbf{F}^H \tag{3.11}$$

where $\mathbf{E} = [\mathbf{e}_1, \ldots, \mathbf{e}_M]$ and $\mathbf{F} = [\mathbf{f}_1, \ldots, \mathbf{f}_M]$ are the $M \times M$ matrices formed by all the left and all the right singular vectors, respectively, and $\mathbf{\Sigma} = \operatorname{diag}[\mu_1, \ldots, \mu_M]$ is a diagonal matrix whose singular values are arranged in nonincreasing order. Similarly, suppose that the signal subspace is spanned by the left or the right singular vectors associated with the $N$ largest singular values of $\mathbf{R}_k$, and that $\mu_N > \mu_{N+1}$. Then the signal subspace is spanned by $\mathbf{E}_s = [\mathbf{e}_1, \ldots, \mathbf{e}_N]$ or by $\mathbf{F}_s = [\mathbf{f}_1, \ldots, \mathbf{f}_N]$. Again, as shown in [46], the iterative algorithm for maximizing the cost function

$$J_2(\mathbf{Y},\mathbf{Z}) = \operatorname{Re}\{\operatorname{tr}[\mathbf{Y}^H\mathbf{R}_k\mathbf{Z}]\} \quad \text{subject to } \mathbf{Y}^H\mathbf{Y} = \mathbf{I}_N \text{ and } \mathbf{Z}^H\mathbf{Z} = \mathbf{I}_N \tag{3.12}$$

converges to the following global maximum point set of $J_2$:

$$\Omega_2 = \{(\mathbf{Y},\mathbf{Z}) \mid \mathbf{Y} = \mathbf{E}_s\mathbf{Q},\ \mathbf{Z} = \mathbf{F}_s\mathbf{Q} \text{ for an arbitrary unitary matrix } \mathbf{Q}\}. \tag{3.13}$$

It can be verified that $J_2$ is also a Lyapunov function and that the following lemma holds.

Lemma 3.2 [46]: Given the cost function (3.12) and provided that $\mu_N > \mu_{N+1}$, the global maximum point set of $J_2$ is given by (3.13), and all other points are unstable or saddle points.

In addition, it has been shown in [46] that the iterative algorithm for maximizing $J_2$ converges to a point in the set $\Omega_2$. However, since $J_2$ is not positive definite, it cannot be extended to a general case in which the signal subspace may be efficiently extracted from a set of diagonalization-structural matrices $\{\mathbf{R}_k\}$.

To provide the groundwork for an efficient technique for extracting the signal subspace, we define a positive definite cost function associated with $\mathbf{R}_k$ as follows:

$$J_3(\mathbf{Y},\mathbf{Z}) = \|\mathbf{Y}^H\mathbf{R}_k\mathbf{Z}\|_F^2 \quad \text{subject to } \mathbf{Y}^H\mathbf{Y} = \mathbf{I}_N \text{ and } \mathbf{Z}^H\mathbf{Z} = \mathbf{I}_N. \tag{3.14}$$

Also, define a new point set as follows:

$$\Omega_3 = \{(\mathbf{Y},\mathbf{Z}) \mid \mathbf{Y} = \mathbf{E}_s\mathbf{Q}_1,\ \mathbf{Z} = \mathbf{F}_s\mathbf{Q}_2 \text{ for arbitrary unitary matrices } \mathbf{Q}_1 \text{ and } \mathbf{Q}_2\}. \tag{3.15}$$

It is easy to show that $\Omega_2 \subset \Omega_3$. The following lemma holds for the newly defined cost function $J_3$.

Lemma 3.3: Given the cost function (3.14) and provided that $\mu_N > \mu_{N+1}$, the global maximum point set of $J_3$ is described by (3.15), and all other points are unstable or saddle points.

Proof: See Appendix A.

It can be seen from (3.15) that once a point in the set $\Omega_3$ is obtained, the signal subspace is also obtained. Once a matrix $\mathbf{Y}$ constructed from a set of basis vectors of the signal subspace is acquired, considering (3.1), (3.4), and (3.7), pre- and post-multiplying $\mathbf{R}_x(0)$ by $\mathbf{Y}^H$ and $\mathbf{Y}$, respectively, yields the following dimension-reducing autocorrelation matrix:

$$\bar{\mathbf{R}}_x(0) = \mathbf{Y}^H\mathbf{R}_x(0)\mathbf{Y} = \mathbf{Q}^H\mathbf{\Lambda}_s\mathbf{Q} \tag{3.16}$$

where the equality $\mathbf{Y} = \mathbf{U}_s\mathbf{Q}$ has been used. Moreover, by using $\mathbf{Q}\mathbf{Q}^H = \mathbf{I}_N$, it is shown that

$$\operatorname{tr}[\bar{\mathbf{R}}_x(0)] = \operatorname{tr}[\mathbf{\Lambda}_s]. \tag{3.17}$$

Thus, after the signal subspace is obtained, combining (3.3) and (3.17) shows that the noise variance is given by

$$\sigma^2 = \frac{\operatorname{tr}[\mathbf{R}_x(0)] - \operatorname{tr}[\bar{\mathbf{R}}_x(0)]}{M-N}. \tag{3.18}$$

Moreover, let $\bar{\mathbf{R}}_s$ be the matrix defined by

$$\bar{\mathbf{R}}_s = \bar{\mathbf{R}}_x(0) - \sigma^2\mathbf{I}_N = \mathbf{Q}^H(\mathbf{\Lambda}_s - \sigma^2\mathbf{I}_N)\mathbf{Q} \tag{3.19}$$

where $\mathbf{Q}$ is a unitary matrix.
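The power iteration (3.10) is the classical orthogonal (subspace) iteration. A minimal NumPy sketch follows, assuming the thin QR factorization of `numpy.linalg.qr` as the column orthogonalization in (3.10b). The function name, the default iteration cap, and the stopping rule (comparing successive projectors $\mathbf{Y}\mathbf{Y}^H$, since the basis itself is only defined up to a unitary factor, cf. (3.9)) are illustrative choices rather than details taken from the paper.

```python
import numpy as np


def power_iteration_subspace(R, N, n_iter=500, tol=1e-12, rng=None):
    """Orthogonal (power) iteration (3.10) for the N-dimensional principal subspace of R."""
    rng = np.random.default_rng() if rng is None else rng
    M = R.shape[0]
    # Random orthonormal starting point Y(0).
    Y = np.linalg.qr(rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N)))[0]
    for _ in range(n_iter):
        Y_new, _ = np.linalg.qr(R @ Y)  # (3.10a) followed by (3.10b); "#" is discarded
        # Y is only defined up to a unitary factor, so compare projectors instead.
        change = np.linalg.norm(Y_new @ Y_new.conj().T - Y @ Y.conj().T)
        Y = Y_new
        if change < tol:
            break
    return Y
```

The convergence speed is governed by the ratio $\lambda_{N+1}/\lambda_N$, which is why Theorem 3.1 requires $\lambda_N > \lambda_{N+1}$.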
Since $\bar{\mathbf{R}}_s$ is a Hermitian positive definite matrix, there must exist a Hermitian matrix $\mathbf{C}$ such that

$$\bar{\mathbf{R}}_s = \mathbf{C}\mathbf{C}. \tag{3.20}$$

Thus, $\mathbf{C}$ is referred to as the square root matrix. It follows directly from (3.19) and (3.20) that the matrix $\mathbf{C}$ is given by

$$\mathbf{C} = \mathbf{Q}^H(\mathbf{\Lambda}_s - \sigma^2\mathbf{I}_N)^{1/2}\mathbf{Q}. \tag{3.21}$$

With $\mathbf{Y}$ and $\mathbf{C}$, we now obtain the alternative whitening matrix

$$\mathbf{W} = \mathbf{C}^{-1}\mathbf{Y}^H. \tag{3.22}$$

It is straightforward to verify that such a $\mathbf{W}$ is indeed a whitening matrix. Notice that the basis $\mathbf{U}_s$ of the signal subspace is a special form of $\mathbf{Y}$, while the square root $(\mathbf{\Lambda}_s - \sigma^2\mathbf{I}_N)^{1/2}$ of the signal eigenvalue matrix is a special form of $\mathbf{C}$ (both correspond to $\mathbf{Q} = \mathbf{I}_N$). In practical applications, if $\mathbf{Y}$ and $\mathbf{R}_x(0)$ are replaced by their estimates $\hat{\mathbf{Y}}$ and $\hat{\mathbf{R}}_x(0)$ calculated from $T$ samples of the array output vector, we obtain the estimate $\hat{\mathbf{Y}}$ of the signal subspace and the estimate $\hat{\mathbf{C}}$ of the square root matrix. Consequently, the estimated whitening matrix is given by $\hat{\mathbf{W}} = \hat{\mathbf{C}}^{-1}\hat{\mathbf{Y}}^H$.

Remark 3.1: We have seen that the estimated whitening matrix is constructed by pre-multiplying the complex conjugate transpose of the signal subspace matrix, associated with the $N$ largest eigenvalues of the positive definite autocorrelation matrix $\hat{\mathbf{R}}_x(0)$, by the inverse of the square root matrix $\hat{\mathbf{C}}$. Clearly, the degraded performance of the classical whitening matrix may be caused both by the estimation error of the signal subspace and by the estimation error of the square root matrix associated with the source signals. In particular, we have noticed that the estimation error of the signal subspace may cause a significant performance loss in the efficient JADE and SOBI techniques, even though JADE and SOBI can estimate the remaining unitary matrix with very high precision once the whitening process is accurately implemented. However, most existing whitening techniques estimate the whitening matrix mainly from $\hat{\mathbf{R}}_x(0)$, and thus suffer from both estimation errors, in the signal subspace and in the square root matrix. In what follows, we present a method for improving the estimation of the signal subspace $\mathbf{Y}$, which in turn reduces the estimation error of the whitening matrix; the estimation of the square root matrix $\mathbf{C}$ can also be improved by using the methods given in [35] and [36].

C. Improved Whitening Method

To obtain a more accurate estimate of the signal subspace $\mathbf{Y}$, we can also exploit a set of diagonalization-structural matrices $\{\mathbf{R}_k\}$, as JADE does. We estimate the signal subspace by jointly extracting it from the set of diagonalization-structural matrices $\{\mathbf{R}_k\}$. Unfortunately, the methods above cannot be applied to extract a synthetic signal subspace from such a set. To extract the signal subspace efficiently from a set of diagonalization-structural matrices, we extend the cost function $J_3$ given in (3.14) to the following more general case:

$$J(\mathbf{Y},\mathbf{Z}) = \sum_{k=1}^{K}\|\mathbf{Y}^H\mathbf{R}_k\mathbf{Z}\|_F^2 \tag{3.23a}$$

$$\text{subject to } \mathbf{Y}^H\mathbf{Y} = \mathbf{I}_N \text{ and } \mathbf{Z}^H\mathbf{Z} = \mathbf{I}_N \tag{3.23b}$$

where $\mathbf{Y} \in \mathbb{C}^{M \times N}$ and $\mathbf{Z} \in \mathbb{C}^{M \times N}$. Clearly, the cost function defined by (3.23) is a positive definite energy function with an upper bound [43], [44], so it has at least one global maximum point. Hence, the optimization problem (3.23) can be solved by gradient ascent techniques. However, gradient ascent algorithms usually converge slowly. Under certain conditions, the following theorem holds for the maximum point set of $J(\mathbf{Y},\mathbf{Z})$.

Theorem 3.2: Given the cost function $J(\mathbf{Y},\mathbf{Z})$ defined by (3.23), if the set of diagonalization-structural matrices $\{\mathbf{R}_k\}$ does not include an error term, that is, $\mathbf{R}_k = \mathbf{A}\mathbf{D}_k\mathbf{A}^H$ with diagonal $\mathbf{D}_k$ for $k = 1, \ldots, K$, then the maximum point set of $J(\mathbf{Y},\mathbf{Z})$ corresponds to the signal subspace, while all other points are unstable or saddle points.

Proof: See Appendix B.

Remark 3.2: It is worth mentioning that if we let $\mathbf{Z} = \mathbf{Y}$, then (3.23) is converted into the following fourth-order function [40]:

$$J(\mathbf{Y}) = \sum_{k=1}^{K}\|\mathbf{Y}^H\mathbf{R}_k\mathbf{Y}\|_F^2 \tag{3.24a}$$

$$\text{subject to } \mathbf{Y}^H\mathbf{Y} = \mathbf{I}_N. \tag{3.24b}$$

Although problem (3.24) may be solved by the well-known gradient ascent algorithms, the slow convergence of classical gradient ascent is rather undesirable. Note that this optimization problem can also be solved by the joint block diagonalization approach [40].
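The alternative whitening steps (3.16)–(3.22) take any orthonormal basis $\mathbf{Y}$ of the signal subspace (for instance from (3.10), or from the joint extraction of Section III-C) and return $\mathbf{W} = \mathbf{C}^{-1}\mathbf{Y}^H$. A minimal NumPy sketch is given below; the function name is hypothetical, and computing the Hermitian square root $\mathbf{C}$ through an EVD of $\bar{\mathbf{R}}_s$ is one standard choice among several, not necessarily the paper's.

```python
import numpy as np


def alternative_whitening(R0: np.ndarray, Y: np.ndarray):
    """Alternative whitening (3.16)-(3.22) from an orthonormal signal-subspace basis Y."""
    M, N = Y.shape
    Rbar = Y.conj().T @ R0 @ Y                                     # (3.16), N x N
    sigma2 = (np.trace(R0).real - np.trace(Rbar).real) / (M - N)   # (3.18)
    Rs_bar = Rbar - sigma2 * np.eye(N)                             # (3.19)
    # Hermitian square root C with C C = Rs_bar, (3.20)-(3.21), via its own EVD.
    mu, V = np.linalg.eigh((Rs_bar + Rs_bar.conj().T) / 2)
    C = V @ np.diag(np.sqrt(mu)) @ V.conj().T
    W = np.linalg.solve(C, Y.conj().T)                             # W = C^{-1} Y^H, (3.22)
    return W, sigma2
```

Because (3.17) holds for every $\mathbf{Y} = \mathbf{U}_s\mathbf{Q}$, the noise-variance estimate does not depend on which orthonormal basis of the signal subspace is supplied; only the subspace itself matters.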
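However, since $\mathbf{Y}$ is generally nonsquare, the joint block diagonalization approach may involve nonsquare Givens rotations, which are not available. Interestingly, the biquadratic cost function (3.23) can be efficiently solved by the following cyclic maximizer, a very general optimization technique. The cyclic maximizer is given by the following iteration.

Given $\mathbf{Z}(0)$, for $i = 1, 2, \ldots$, compute

$$\mathbf{Y}(i) = \arg\max_{\mathbf{Y}^H\mathbf{Y} = \mathbf{I}_N} J(\mathbf{Y},\mathbf{Z}(i-1)) \tag{3.25a}$$

$$\mathbf{Z}(i) = \arg\max_{\mathbf{Z}^H\mathbf{Z} = \mathbf{I}_N} J(\mathbf{Y}(i),\mathbf{Z}). \tag{3.25b}$$

An obvious alternative form of (3.25) is to start with $\mathbf{Y}(0)$, if so desired.

By using the Lagrange multiplier method and matrix differential calculus [47], setting the gradient of $J(\mathbf{Y},\mathbf{Z}(i-1))$ with respect to $\mathbf{Y}$ to zero yields

$$\Big[\sum_{k=1}^{K}\mathbf{R}_k\mathbf{Z}(i-1)\mathbf{Z}^H(i-1)\mathbf{R}_k^H\Big]\mathbf{Y} = \mathbf{Y}\mathbf{\Theta}_1 \tag{3.26}$$

where $\mathbf{\Theta}_1$ is the Lagrange multiplier matrix. Since (3.26) represents a form of EVD of the matrix $\sum_{k}\mathbf{R}_k\mathbf{Z}(i-1)\mathbf{Z}^H(i-1)\mathbf{R}_k^H$, the eigenvector matrix formed by the eigenvectors associated with its $N$ largest eigenvalues can be taken as $\mathbf{Y}(i)$ in order for (3.25a) to be satisfied. Similarly, for (3.25b), we have

$$\Big[\sum_{k=1}^{K}\mathbf{R}_k^H\mathbf{Y}(i)\mathbf{Y}^H(i)\mathbf{R}_k\Big]\mathbf{Z} = \mathbf{Z}\mathbf{\Theta}_2 \tag{3.27}$$

and the eigenvector matrix formed by the eigenvectors associated with the $N$ largest eigenvalues of $\sum_{k}\mathbf{R}_k^H\mathbf{Y}(i)\mathbf{Y}^H(i)\mathbf{R}_k$ should be taken as $\mathbf{Z}(i)$. Thus, the above iteration can be concretely rewritten as the following cyclic maximizer 1.

Cyclic maximizer 1: Produce the initial value $\mathbf{Z}(0)$ randomly; for $i = 1, 2, \ldots$:

a) carry out the EVD

$$\sum_{k=1}^{K}\mathbf{R}_k\mathbf{Z}(i-1)\mathbf{Z}^H(i-1)\mathbf{R}_k^H = \mathbf{H}_1(i)\mathbf{\Gamma}_1(i)\mathbf{H}_1^H(i) \tag{3.28a}$$

where $\mathbf{H}_1(i)$ denotes the eigenvector matrix and $\mathbf{\Gamma}_1(i)$ represents the eigenvalue matrix with its eigenvalues in decreasing order; then $\mathbf{Y}(i)$ is constructed from the first $N$ columns of $\mathbf{H}_1(i)$;

b) perform the EVD

$$\sum_{k=1}^{K}\mathbf{R}_k^H\mathbf{Y}(i)\mathbf{Y}^H(i)\mathbf{R}_k = \mathbf{H}_2(i)\mathbf{\Gamma}_2(i)\mathbf{H}_2^H(i) \tag{3.28b}$$

where $\mathbf{H}_2(i)$ denotes the eigenvector matrix and $\mathbf{\Gamma}_2(i)$ represents the eigenvalue matrix with its eigenvalues in decreasing order; then $\mathbf{Z}(i)$ is constructed from the first $N$ columns of $\mathbf{H}_2(i)$;

c) if the iteration has converged to within a prescribed tolerance, stop the iteration and let $\hat{\mathbf{U}}_s = \mathbf{Y}(i)$ or $\hat{\mathbf{U}}_s = \mathbf{Z}(i)$.

In practice, the estimate $\hat{\mathbf{R}}_k$ of the diagonalization-structural matrix $\mathbf{R}_k$ is calculated from finite samples of the observed array output vector sequence. In this case, if $\mathbf{R}_k$ in (3.28) is replaced by $\hat{\mathbf{R}}_k$, we obtain the corresponding updating iteration for estimating the practical signal subspace.

Theorem 3.3: Given cyclic maximizer 1, $\mathbf{Y}(i)$ and $\mathbf{Z}(i)$ are globally asymptotically convergent.

Proof: Since $J(\mathbf{Y},\mathbf{Z})$ is a positive definite function for $\mathbf{Y}^H\mathbf{Y} = \mathbf{I}_N$ and $\mathbf{Z}^H\mathbf{Z} = \mathbf{I}_N$ and is clearly bounded, it possesses global maximum points. Moreover, it is readily shown that

$$J(\mathbf{Y}(i),\mathbf{Z}(i)) \ge J(\mathbf{Y}(i),\mathbf{Z}(i-1)) \ge J(\mathbf{Y}(i-1),\mathbf{Z}(i-1)). \tag{3.29}$$

This shows that $J$ is an energy function of the discrete sequences $\{\mathbf{Y}(i)\}$ and $\{\mathbf{Z}(i)\}$. By using the well-known energy function approaches [43] or Lyapunov function methods [44], we can conclude that $\mathbf{Y}(i)$ and $\mathbf{Z}(i)$ globally asymptotically converge to an invariant point. This completes the proof of Theorem 3.3.

Remark 3.3: Since the iteration (3.28) exploits multiple eigenmatrices with approximately diagonalizable structure, it may yield a better estimate of the signal subspace. Thus, the signal subspace obtained by iteration (3.28) may be significantly superior to that derived only from the EVD of $\hat{\mathbf{R}}_x(0)$. Moreover, the above updating iteration is basically a gradient-type algorithm and can easily be converted into an adaptive algorithm by techniques similar to the subspace tracking approaches [31], [32], [41], [42], [46]. Simulations indicate that iteration (3.28) indeed converges fast. As an example, seven matrices $\mathbf{R}_k$ ($k = 1, \ldots, 7$) subject to an error were produced randomly, and the five-dimensional synthetic principal subspace was extracted by iteration (3.28); the corresponding cost function (3.23) versus the iteration number is plotted in Fig. 1. The results show that iteration (3.28) converges fast. The performance of cyclic maximizer 1 will be further illustrated in Section V.

Fig. 1. The evolution curve of the cost function (3.23) versus the iteration number shows that iteration (3.28) converges fast.

Pre- and post-multiplying $\mathbf{R}_k$ by $\mathbf{W}$ and its complex conjugate transpose, respectively, yields the following relation:

$$\bar{\mathbf{R}}_k = \mathbf{W}\mathbf{R}_k\mathbf{W}^H = \mathbf{B}\mathbf{D}_k\mathbf{B}^H \quad \text{for } k = 1, \ldots, K \tag{3.30}$$

where $\mathbf{B} = \mathbf{W}\mathbf{A}$ is unitary and $\mathbf{D}_k$ is the diagonal matrix in the ideal structure of $\mathbf{R}_k$. This shows that the mixture matrix is whitened. Furthermore, $\mathbf{B}$ must be extracted from one or multiple eigenmatrices $\bar{\mathbf{R}}_k$ with approximately diagonalizable structure. In the matrix-pencil algorithm (MPA) [25], [26], $\mathbf{B}$ is acquired by performing the EVD of one of the eigenmatrices, while in JADE [13], [14], $\mathbf{B}$ can be efficiently obtained by the joint approximate diagonalization of a group of eigenmatrices $\{\bar{\mathbf{R}}_k\}$.

IV. DIAGONALIZATION TECHNIQUES

A. Contrast Function

The contrast function of JADE [12]–[17] is described by

$$J_{\mathrm{JADE}}(\mathbf{V}) = \sum_{k=1}^{K}\operatorname{off}(\mathbf{V}^H\bar{\mathbf{R}}_k\mathbf{V}) \tag{4.1}$$

where $\mathbf{V}$ is an $N \times N$ unitary matrix and $\operatorname{off}(\cdot)$ is defined as

$$\operatorname{off}(\mathbf{F}) = \sum_{i \neq j}|f_{ij}|^2 \tag{4.2}$$

in which $f_{ij}$ denotes the $(i,j)$th element of an $N \times N$ matrix $\mathbf{F}$. It can be seen that the contrast function $J_{\mathrm{JADE}}$ is a fourth-order function of $\mathbf{V}$. By using joint Givens rotations to minimize $J_{\mathrm{JADE}}$, Cardoso et al. [13], [14] first achieved JADE of a set of eigenmatrices $\{\bar{\mathbf{R}}_k\}$. Wax and Anu [17] proposed the following least-squares contrast function:

$$J_{\mathrm{WA}}(\mathbf{V},\{\mathbf{D}_k\}) = \sum_{k=1}^{K}\|\bar{\mathbf{R}}_k - \mathbf{V}\mathbf{D}_k\mathbf{V}^H\|_F^2 \tag{4.3}$$

where the matrices $\mathbf{D}_k$ are diagonal. Clearly, $J_{\mathrm{WA}}$ is a fourth-order function of $\mathbf{V}$ and a quadratic function of $\mathbf{D}_k$. It is usually difficult to solve (4.1) or (4.3) directly. Since $J_{\mathrm{WA}}$ is equivalent to $J_{\mathrm{JADE}}$ [17], the minimization of $J_{\mathrm{WA}}$ can be implemented by JADE of a set of eigenmatrices $\{\bar{\mathbf{R}}_k\}$.

We notice that, since $\mathbf{B}$ is unitary, JADE of a set of eigenmatrices $\{\bar{\mathbf{R}}_k\}$ is intrinsically a joint eigenvalue decomposition. In fact, given a column vector $\mathbf{b}_i$ of $\mathbf{B}$, with no estimation error in the set of eigenmatrices $\{\bar{\mathbf{R}}_k\}$, that is, $\bar{\mathbf{R}}_k = \mathbf{B}\mathbf{D}_k\mathbf{B}^H$, we have

$$\bar{\mathbf{R}}_k\mathbf{b}_i = d_{k,i}\mathbf{b}_i, \quad k = 1, \ldots, K \tag{4.4}$$

where $d_{k,i}$ is the $i$th (nonzero) diagonal element of $\mathbf{D}_k$. Considering (4.4) and the fact that there exists some estimation error in $\{\bar{\mathbf{R}}_k\}$, we propose the following novel least-squares contrast function:

$$J(\mathbf{b},\mathbf{d}) = \sum_{k=1}^{K}\|\bar{\mathbf{R}}_k\mathbf{b} - d_k\mathbf{b}\|^2 \quad \text{subject to } \mathbf{b}^H\mathbf{b} = 1 \tag{4.5}$$

where $\mathbf{d} = [d_1, \ldots, d_K]^T$. Apparently, this is a special least-squares criterion.

Remark 4.1: Compared with (4.1) and (4.3), $J(\mathbf{b},\mathbf{d})$ is a biquadratic contrast function, which may be the simplest one to the best of our knowledge. So it is reasonable to expect that a simple algorithm can be developed for finding its solution. We should note that although only a single independent component can be obtained by minimizing $J(\mathbf{b},\mathbf{d})$, all other independent components may be found by using the deflation transformation [19], [20], [23], [24], [27], [28]. The joint contrast functions for finding multiple independent components are defined as

$$J_j(\mathbf{b}_j,\mathbf{d}_j) = \sum_{k=1}^{K}\|\bar{\mathbf{R}}_k\mathbf{b}_j - d_{k,j}\mathbf{b}_j\|^2 \quad \text{for } j = 1, \ldots, N \tag{4.6a}$$

$$\text{subject to } \mathbf{b}_i^H\mathbf{b}_j = \delta_{ij} \quad \text{for } i, j = 1, \ldots, N \tag{4.6b}$$

where $\mathbf{d}_j = [d_{1,j}, \ldots, d_{K,j}]^T$, $\delta_{ij} = 1$ for $i = j$, and $\delta_{ij} = 0$ for $i \neq j$. Thus, multiple independent components can be obtained by jointly solving the constrained optimization problems (4.6).

B. Some Properties of the Contrast Function

Here, we present some useful properties of the contrast function (4.5).

Property 4.1: The contrast function (4.5) is unbounded from above and greater than or equal to zero.

This property indicates that the contrast function $J(\mathbf{b},\mathbf{d})$ has at least one global minimum point. Thus, a gradient descent method can be used to solve (4.5). Differentiating (4.5) with respect to $d_k$ with $\mathbf{b}$ fixed, we have

$$d_k = \mathbf{b}^H\bar{\mathbf{R}}_k\mathbf{b}, \quad k = 1, \ldots, K. \tag{4.7}$$

Moreover, substituting (4.7) into (4.5), we obtain the following equivalent form of (4.5):

$$J(\mathbf{b}) = \sum_{k=1}^{K}\|(\mathbf{I}_N - \mathbf{b}\mathbf{b}^H)\bar{\mathbf{R}}_k\mathbf{b}\|^2. \tag{4.8}$$

Property 4.2: Given that $\mathbf{I}_N - \mathbf{b}\mathbf{b}^H$ is a projection matrix, when there is no estimation error in the set of eigenmatrices $\{\bar{\mathbf{R}}_k\}$, we have $J(\mathbf{b}) = 0$, which corresponds to an independent component, if and only if all $\bar{\mathbf{R}}_k\mathbf{b}$ are parallel to $\mathbf{b}$.

Note that the equation $J(\mathbf{b}) = 0$ may not be achievable for noisy matrices $\bar{\mathbf{R}}_k$. However, we may expect that minimizing $J(\mathbf{b})$ will make all $\bar{\mathbf{R}}_k\mathbf{b}$ as parallel to $\mathbf{b}$ as possible.

Property 4.3: By differentiating $J(\mathbf{b},\mathbf{d})$ with respect to $\mathbf{b}$ and $d_k$, we obtain all the stationary points, described by

$$\Big[\sum_{k=1}^{K}(\bar{\mathbf{R}}_k - d_k\mathbf{I}_N)^H(\bar{\mathbf{R}}_k - d_k\mathbf{I}_N)\Big]\mathbf{b} = \lambda\mathbf{b} \tag{4.9a}$$

$$d_k = \mathbf{b}^H\bar{\mathbf{R}}_k\mathbf{b}, \quad k = 1, \ldots, K \tag{4.9b}$$

$$\mathbf{b}^H\mathbf{b} = 1 \tag{4.9c}$$

where $\lambda$ is an eigenvalue. Then, in the absence of estimation error in the set of eigenmatrices $\{\bar{\mathbf{R}}_k\}$, it can be shown that a stationary point set of $J(\mathbf{b},\mathbf{d})$ is given by …

… is minimized. The Lagrange multiplier method can be utilized to minimize $J(\mathbf{b})$ subject to the constraint $\mathbf{b}^H\mathbf{b} = 1$.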
This immediately gives the following Lagrange equation: or where is the Lagrange multiplier and (4.13a) is generally Since the cost function is a positive definite matrix. So the depositive definite, should correspond to the smallest eigenvalue of the sired , namely matrix (4.13b) Now we are able to summarize the proposed fixed-point algorithm (FPA) as follows. be the eigenvector associated with an eigenvalue of Let ; for a) find by and and (4.10) where is a column of . , then Proof: It is easily verified that if (4.9) is satisfied. This completes the proof of Property 4.3. b) find the unit-norm weight vector following EVD: by performing the C. Iterative Algorithm for One Single Component can be implemented Minimization of by an iterative algorithm. At the th iteration step, a set of are first computed such that parameters is minimized. It is easily shown that differentiating with respect to yields for (4.11) Second, with the unit norm constraint computed in such a way that is c) repeat steps a) and b) until . Each of steps a) and b) poses a least-squares problem with an exact solution which is easily found. As will be seen from the simulation results given in the next section, the resulting iteration can fast converge to a global stable equilibrium point, like the fixed-point algorithms given in [21] and [22]. In the following, we will theoretically investigate the convergence of the above proposed algorithm. D. Global Convergence be defined by (4.5). Then Theorem 4.1: Let in the the proposed FPA ensures that presence of no estimation error in a set of eigenmatrices , . that is Proof: Since there exists no estimation error in a set of , that is, eigenmatrices for (4.12) Authorized licensed use limited to: XIDIAN UNIVERSITY. Downloaded on August 14, 2009 at 08:49 from IEEE Xplore. Restrictions apply. 
(4.14) FENG et al.: MATRIX-GROUP ALGORITHM 971 TABLE I STATISTICAL RESULTS OF THE ITERATION NUMBER OF THE PROPOSED FPA substituting (4.14) into (4.13a) yields It follows from Property 4.1 and the above inequality that is an energy or Lyapunov function [43], [44]. Moreover, it can be shown by Lyapunov indirect method that in the proposed FPA globally converges to the so-called invariance set [44]. This completes the proof of Theorem 4.2. E. Matrix-Group Algorithm for Finding Multiple Independent Components (4.15) It is obvious that represents an eigenmatrix of . Let the eigenvector associated with of the smallest eigenvalue of be . Then, it can be seen from (4.13b) and (4.15) . This completes the proof of Theorem 4.1. that Remark 4.2: From the above analysis, we know that the proposed fixed-point algorithm converges to a stable equilibrium point at one step iteration, when there is no estimation , that is, error in . Moreover, in the presence of estimation error, it can be shown through simulations that the proposed FPA can fast converge to a stable equilibrium point . For example, consider the experiment of where in the convergence criterion is chosen as 0.00001, the initial value is randomly generated, and a set of eleven 9 9 diagonalization-structural matrices with errors is randomly produced at each trial. Let the ratio of the error-free to the error be called EER. Then, the number of iterations, which the proposed FPA requires to converge to a stable point, versus EER is shown in Table I, where 10 000 independent trials are conducted for each EER. In contrast, it should be mentioned that although JADE is a highly efficient technique, its global convergence may not be , that is, proved, even if there is no estimation error in . Theorem 4.2: Let be defined by (4.5). Then in the proposed FPA is globally convergent, even though , there is some estimation error in a set of eigenmatrices . 
that is, Proof: Since each of steps a) and b) in the proposed FPA implements a least-squares solution, we have Multiple independent components can be acquired by solving the optimal problem (4.6) via some techniques given in [27] and [28]. For this purpose, we propose the following matrix-group algorithm (MGA). Matrix-Group Algorithm (MGA): be the matrix Let formed by the eigenvectors associated with all the eigenvalues ; for of a) find ( ; ) by for and (4.16) by b) find the unit-norm eigenvector minimizing for (4.17) where is equal to the eigenvector associated with the smallest eigenvalue corresponding to the following EVD: (4.18) where (4.19) , and perform QR factorc) let , where # denotes an ization of uninteresting upper triangular matrix; d) repeat steps a), b) and c) until . Similarly, each of steps a) and b) is to solve multiple least-squares problems, while step c) is to implement a Gram–Schmidt orthogonalization via QR factorization. It should be noticed that the orthogonalization step usually changes the convergence properties of MGA. Remark 4.3: Since the above updating iteration is basically a gradient-based algorithm, it can be easily converted into an adaptive algorithm by combining together the updating formulae of a set of diagonalization-structural matrices. Authorized licensed use limited to: XIDIAN UNIVERSITY. Downloaded on August 14, 2009 at 08:49 from IEEE Xplore. Restrictions apply. 972 IEEE TRANSACTIONS ON SIGNAL PROCESSING, VOL. 55, NO. 3, MARCH 2007 Fig. 2. In these subfigures, three nonzero-time-delay correlation matrices and four cumulant slice matrices are used, and all the mean, the maximum and the minimum are computed statistically over 100 independent trials. As shown in Fig. 2(a)–(c), curves of the estimated signal subspace error versus sample number are obtained by the classical whitening technique and the cyclic maximizer 1. As shown in Fig. 
2(d)–(f), curves of the estimated GRL versus the sample number are acquired by JADE, JADE1, MPA and MGA. (a) Mean of the estimated signal subspace error; (b) maximum of the estimated signal subspace error; (c) minimum of the estimated signal subspace error; (d) mean of the estimated GRL; (e) maximum of the estimated GRL; and (f) the minimum of the estimated GRL. V. SIMULATION ILLUSTRATIONS Furthermore, the estimated signal-subspace error is given by In this section, two simulated examples are given to show the performance of JADE, SOBI, MPA, cyclic maximizer 1, and MGA. Here, JADE and SOBI are conventionally combined with the classical whitening technique, while JADE1 and SOBI1 represent JADE and SOBI combined with the improved whitening method, respectively. MGA is combined only with the improved whitening method, while MPA is combined only with the classical whitening method. It should be noticed that JADE also involves jointly approximate diagonalization of a set of the fourthorder cumulant matrices and the second order correlation matrices with zero or nonzero delays, while SOBI only involves jointly approximate diagonalization of a set of the second-order correlation matrices with zero or nonzero delays. In order to test validity of these algorithms, the global rejection level (GRL) defined in [15] is used as the performance index. Moreover, the estimated waveform error between the true and the estimated source signals is desource signals fined as , where each of the (estimated) source signals is normalized. Notice that indeterminacy of the estimated source signals can be eliminated, since the source signals are assumed to be known in all simulations. , where represents the signal subdenotes space obtained by the known mixture matrix and the estimated signal subspace. Example 1: Consider a set of 11 sensors receiving a mixture of five zero-mean independent source signals. 
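For concreteness, the two iterations applied in this example can be sketched in Python with NumPy: the cyclic maximizer 1 of Section III and the MGA of Section IV. This is a minimal, non-authoritative illustration that rests on four assumptions, none confirmed by the text:
- the cost (3.23) has the form J(U, V) = Σ_k ||U^H R_k V||_F^2 with column-orthonormal U and V;
- the MGA input is a list of already-whitened eigenmatrices;
- the MGA starts from the EVD of the Hermitian part of the first eigenmatrix;
- the function names `cyclic_maximizer` and `mga` are hypothetical.

```python
import numpy as np


def cyclic_maximizer(R_list, n_src, max_iter=100, tol=1e-10, seed=0):
    """Alternating-EVD sketch of cyclic maximizer 1.

    Assumes (hypothetically) that the cost is J(U, V) = sum_k ||U^H R_k V||_F^2
    with U^H U = V^H V = I. Returns U, V and the cost after each sweep.
    """
    rng = np.random.default_rng(seed)
    m = R_list[0].shape[0]
    # Random column-orthonormal starting point for V.
    V, _ = np.linalg.qr(rng.standard_normal((m, n_src))
                        + 1j * rng.standard_normal((m, n_src)))
    U = np.zeros_like(V)
    costs = []
    for _ in range(max_iter):
        # Step a): U <- eigenvectors of the n_src largest eigenvalues of
        # sum_k R_k V V^H R_k^H (eigh sorts eigenvalues in ascending order).
        Cu = sum(R @ V @ V.conj().T @ R.conj().T for R in R_list)
        U_new = np.linalg.eigh(Cu)[1][:, ::-1][:, :n_src]
        # Step b): the same rule for V with sum_k R_k^H U U^H R_k.
        Cv = sum(R.conj().T @ U_new @ U_new.conj().T @ R for R in R_list)
        V = np.linalg.eigh(Cv)[1][:, ::-1][:, :n_src]
        costs.append(sum(np.linalg.norm(U_new.conj().T @ R @ V) ** 2
                         for R in R_list))
        # Step c): compare subspace projectors, because an eigenvector basis
        # is only defined up to a unitary rotation inside the subspace.
        step = np.linalg.norm(U_new @ U_new.conj().T - U @ U.conj().T)
        U = U_new
        if step < tol:
            break
    return U, V, costs


def mga(M_list, max_iter=100, tol=1e-12):
    """Sketch of MGA steps a)-d) on a list of whitened eigenmatrices."""
    n = M_list[0].shape[0]
    eye = np.eye(n)
    # Initial matrix: eigenvectors of the Hermitian part of the first
    # eigenmatrix (which matrix to use is an assumption of this sketch).
    W = np.linalg.eigh((M_list[0] + M_list[0].conj().T) / 2)[1].astype(complex)
    for _ in range(max_iter):
        W_new = np.empty_like(W)
        for j in range(n):
            w = W[:, j]
            B = np.zeros((n, n), dtype=complex)
            for M in M_list:
                c = w.conj() @ M @ w      # step a): least-squares scalar c_ij
                E = M - c * eye
                B += E.conj().T @ E       # accumulate sum_i (M_i - c_ij I)^H (M_i - c_ij I)
            # Step b): eigenvector of the smallest eigenvalue of B.
            W_new[:, j] = np.linalg.eigh(B)[1][:, 0]
        # Step c): Gram-Schmidt orthogonalization via QR; keep the Q factor.
        W_new = np.linalg.qr(W_new)[0]
        # Step d): stop when every column is unchanged up to a phase factor.
        change = 1.0 - np.min(np.abs(np.sum(W.conj() * W_new, axis=0)))
        W = W_new
        if change < tol:
            break
    return W
```

The convergence tests in this sketch compare subspace projectors and phase-invariant inner products, because eigenvectors returned by an EVD are fixed only up to a phase, or a unitary rotation for a subspace. This is a design choice of the sketch, not the paper's stated criterion. With noise-free matrices of the form A D_k A^H, `cyclic_maximizer` should return a U that spans the column space of A. With lightly perturbed matrices W D_i W^H, `mga` should return the columns of W up to permutation and phase.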
In the experiment, we use a mixture of the five source signals given in (5.1a)–(5.1e). A parameter (mixing) matrix is randomly produced with the MATLAB functions ones and rand in each trial. It is assumed that the 11-dimensional mixed signal vector is observed in the presence of temporally stationary white noise. For SNR = 7.5 dB, the estimated GRL and the estimated signal-subspace error versus the sample number are plotted in Fig. 2, where the mean, the maximum, and the minimum are computed statistically over 100 independent trials.

Fig. 3. In these subfigures, seven nonzero-time-delay correlation matrices are estimated from 3000 samples of the array output vector. The mean, the maximum, and the minimum are computed statistically over 100 independent trials. Fig. 3(a)–(c) shows curves of the estimated signal-subspace error versus SNR, obtained by the classical whitening technique and the cyclic maximizer 1. Fig. 3(d)–(f) shows the estimated waveform error versus SNR, obtained by SOBI, SOBI1, and MGA. (a) Mean of the estimated signal-subspace error; (b) maximum of the estimated signal-subspace error; (c) minimum of the estimated signal-subspace error; (d) mean of the estimated waveform error; (e) maximum of the estimated waveform error; and (f) minimum of the estimated waveform error.

The four curves shown in Fig. 2(d)–(f) correspond to the experimental results obtained by JADE, JADE1, MPA, and MGA. JADE, JADE1, and MGA use a correlation matrix, three nonzero-time-delay correlation matrices, and four cumulant slice matrices, while MPA uses a correlation matrix and a cumulant matrix. Moreover, to test the efficiency of the proposed methods more thoroughly, we have also conducted the following two experiments.
In the first experiment, JADE, JADE1, and MGA use a correlation matrix and seven cumulant slice matrices, while MPA uses a correlation matrix and a cumulant matrix. JADE, JADE1, MGA, and MPA yield results similar to those displayed in Fig. 2. In the second experiment, SOBI, SOBI1, and MGA use 50 nonzero-time-delay correlation matrices, while MPA uses a correlation matrix and a nonzero-time-delay correlation matrix. Again, SOBI, SOBI1, MPA, and MGA yield results similar to those displayed in Fig. 2. The experimental results show that, on average, the improved whitening method usually extracts the signal subspace with higher precision than the classical whitening technique. In terms of BSS performance measured by the GRL, MGA performs better than MPA and comparably with JADE1 and SOBI1 on average.

Example 2: The second example is concerned with separating three speech sources. With six sensors, the mixture matrix is formed from the response of a six-element uniform linear array with half-wavelength sensor spacing. The directions of arrival of the three sources are randomly generated with rand in each trial. The array receives the three source signals in the presence of temporally stationary complex white noise. Fig. 3 displays the estimated signal-subspace error and the estimated waveform error versus SNR (in decibels), where the mean, the maximum, and the minimum are computed statistically over 100 independent trials. Here, SOBI, SOBI1, and MGA use seven nonzero-time-delay correlation matrices. In addition, another experiment is conducted in which JADE, JADE1, and MGA use two nonzero-time-delay correlation matrices and five cumulant slice matrices; these three algorithms yield results similar to those displayed in Fig. 3.

As seen in Fig. 3(a)–(c), the improved whitening method again extracts the signal subspace with higher precision than the classical whitening technique, which further confirms our observations from Example 1. Moreover, this improvement in the signal-subspace estimation is particularly significant at high noise levels. This example also shows that, on average, the performance of MGA is comparable with that of SOBI1 and JADE1. It is interesting to note that in this example all five BSS algorithms, i.e., SOBI, SOBI1, JADE, JADE1, and MGA, display very similar performance at high SNR. A possible explanation is as follows. When the noise level is relatively low, the signal-subspace estimates given by the classical and the improved schemes are both good enough for successful BSS. The effect of the signal-subspace estimation accuracy on BSS therefore becomes relatively small.

VI. CONCLUSION

In this paper, an improved whitening scheme has first been established for blind source separation. Similar to the idea used in JADE, the improved whitening scheme extracts the signal subspace jointly from a set of approximately diagonalization-structural matrices, based on the proposed cyclic maximizer of a biquadratic cost function. Under some conditions, we have proven that the proposed cyclic maximizer is globally asymptotically convergent. The simulation results have shown that, on average, the improved whitening scheme performs significantly better than the classical whitening methods. Second, a novel biquadratic contrast function has been developed for implementing the joint eigendecomposition of a matrix group of any order cumulant of the observable array signals.
A fixed-point algorithm for searching for the minimum point of the proposed contrast function has been developed, which can effectively obtain one single independent component. The global convergence of the proposed fixed-point algorithm has been established. It has been shown that multiple independent components can be derived by repeatedly using the proposed fixed-point algorithm in conjunction with QR factorization. The simulation results presented demonstrate the satisfactory performance of the proposed MGA in comparison with JADE, SOBI, and MPA. Finally, a careful comparison shows that JADE [13], [14] and SOBI [15] are extensions of the conventional Jacobi methods [34] for the eigenvalue decomposition of a symmetric matrix. MGA, by contrast, is an extension of the conventional power iteration methods [34] for the same decomposition. The conventional power iteration methods are easily converted into adaptive algorithms for tracking the signal subspace [31], [41]. An interesting direction for future work will therefore be to develop an adaptive MGA with the improved whitening technique for BSS of array signals received by a microphone array.

APPENDIX A
PROOF OF LEMMA 3.3

Consider the SVD (3.11). Then, the cost function is changed into the form (A.1), subject to the two orthonormality constraints. Correspondingly, the original condition is turned into the equivalent condition (A.2). Thus, it suffices to study the properties of the transformed cost function (A.1).

We now use the Lagrange multiplier method and matrix differential calculus [47]. Differentiating (A.1) with respect to the first variable leads to (A.3a), which involves a Lagrange multiplier matrix. Similarly, we can get (A.3b), which involves a second Lagrange multiplier matrix. It is seen from (A.3) and (A.2) that (A.4) holds, where two appropriate full-rank matrices appear. It is deduced from (A.4) that the rank equality (A.5) holds. Moreover, Property 2.4 given in [46] shows that the span equality (A.6) holds. Furthermore, from Property 2.3 given in [46], we can obtain (A.7), where the matrix involved is unitary. Substituting (A.7) into (A.4) yields (A.8). It is obvious that the matrix in (A.8) must be positive definite. Writing the coefficient matrix in terms of its row vectors, we obtain the alternative form (A.9) of (A.8).

Evidently, (A.9) expresses an EVD. The matrix in (A.9) is symmetric positive definite, so it has only as many orthonormal left eigenvectors as its dimension. This means that the coefficient matrix has only that many orthonormal row vectors. Moreover, all the nonzero row vectors of the coefficient matrix form an orthonormal matrix. This shows that the coefficient matrix can always be represented as (A.10): the product of a permutation matrix, in which each row and each column have exactly one nonzero entry equal to 1, and a unitary matrix. Similarly, we can get (A.11), where the factor involved is a unitary matrix. So the stationary set of the transformed cost function is given by (A.12), for arbitrary unitary matrices.

It can be directly verified that, for an appropriate form of the permutation matrix involving an appropriate zero matrix, the corresponding point is a global maximum point of the transformed cost function. Moreover, by using the infinitesimal disturbance analysis technique in [46], it can be deduced that all stationary points outside this set are saddle (unstable) points. Thus, the maximizer belongs to the stable point set. This completes the proof of Lemma 3.3.

APPENDIX B
PROOF OF THEOREM 3.2

Define a column-orthonormal matrix as in (B.1). Clearly, this matrix spans the signal space. Let an orthogonal complement of it be represented by a second matrix. The two matrices together then form a unitary matrix, from which we have (B.2). It follows from (B.2) that (B.3a) and (B.3b) hold. Using (B.3), we can get (B.4a) and (B.4b). Substituting them into the cost function (3.23) and using (B.4), we obtain the equivalent form (B.5a) of the cost function, subject to the constraints (B.5b).

Partition the transformed variables into two blocks. Then, considering (B.4), we get from (B.5) the problem (B.6a), subject to (B.6b). It follows from (B.5b) that all the eigenvalues of the relevant matrices are nonnegative and smaller than or equal to 1. Thus, by virtue of the properties of the matrix trace, (B.6a) gives the bound (B.7).

It can be directly verified that the bound (B.7) is attained for an appropriate choice involving two unitary matrices. This implies that the set (B.8), for arbitrary unitary matrices, is the global maximum point set of (B.6a). Furthermore, by using the infinitesimal disturbance analysis technique in [46], we can show that all other points are unstable or saddle points. Correspondingly, the global maximum point set of (3.23) is given by (B.9), for arbitrary unitary matrices. This shows that a global maximum point of (3.23) corresponds to the signal subspace. This completes the proof of Theorem 3.2.

ACKNOWLEDGMENT

The authors would like to thank the Associate Editor, Prof. T. Adali, and the anonymous reviewers for their valuable comments and suggestions, which have significantly improved the manuscript.

REFERENCES

[1] C. Jutten and J. Herault, “Blind separation of sources, Part I: An adaptive algorithm based on neuromimetic architecture,” Signal Process., vol. 24, no. 1, pp. 1–10, Jul. 1991.
[2] P. Comon, “Independent component analysis: A new concept?,” Signal Process., vol. 36, no. 3, pp. 287–314, Apr. 1994.
[3] A. Cichocki, R. Unbehauen, and E. Rummert, “Robust learning algorithm for blind separation of signals,” Electron. Lett., vol. 30, no. 17, pp. 1386–1387, Aug. 1994.
[4] A. Cichocki and R. Unbehauen, “Robust neural networks with on-line learning for blind identification and blind separation of sources,” IEEE Trans. Circuits Syst. I, Fundam. Theory Appl., vol. 43, no. 11, pp. 894–906, Nov. 1996.
[5] S. Amari, A. Cichocki, and H. H. Yang, “A new learning algorithm for blind signal separation,” in Advances in Neural Information Processing Systems, D. Touretzky, M. Mozer, and M. Hasselmo, Eds. Cambridge, MA: MIT Press, 1996, vol. 8, pp. 757–763.
[6] S. Amari and A. Cichocki, “Adaptive blind signal processing: Neural network approaches,” Proc. IEEE, vol. 86, no. 10, pp. 2026–2048, Oct. 1998.
[7] S. A. Cruces-Alvarez, A. Cichocki, and S. Amari, “From blind signal extraction to blind instantaneous signal separation: contrast function, algorithms, and stability,” IEEE Trans. Neural Netw., vol. 15, no. 4, pp. 859–873, Jul. 2004.
[8] A. J. Bell and T. J. Sejnowski, “An information-maximization approach to blind separation and blind deconvolution,” Neural Comput., vol. 7, no. 6, pp. 1129–1159, Nov. 1995.
[9] A. Cichocki and S. Amari, Adaptive Blind Signal and Image Processing: Learning Algorithms and Applications. West Sussex, U.K.: Wiley, 2003.
[10] J. Karhunen, E. Oja, L. Wang, R. Vigario, and J. Joutsensalo, “A class of neural networks for independent component analysis,” IEEE Trans. Neural Netw., vol. 8, no. 3, pp. 486–504, May 1997.
[11] A. Hyvarinen and E. Oja, “Independent component analysis by general nonlinear Hebbian-like learning rules,” Signal Process., vol. 64, no. 3, pp. 301–313, Feb. 1998.
[12] J. F. Cardoso and B. Laheld, “Equivariant adaptive source separation,” IEEE Trans. Signal Process., vol. 44, no. 12, pp. 3017–3030, Dec. 1996.
[13] J. F. Cardoso, “Source separation using higher order moments,” in Proc. IEEE Int. Conf. Acoustics, Speech, Signal Processing (ICASSP), Glasgow, U.K., May 1989, vol. 4, pp. 2109–2112.
[14] J. F. Cardoso and A. Souloumiac, “Blind beamforming for non-Gaussian signals,” Proc. Inst. Elect. Eng. F, vol. 140, no. 6, pp. 362–370, Dec. 1993.
[15] A. Belouchrani, K. Abed-Meraim, J. F. Cardoso, and E. Moulines, “A blind source separation technique using second-order statistics,” IEEE Trans. Signal Process., vol. 45, no. 2, pp. 434–444, Feb. 1997.
[16] E. Moreau, “A generalization of joint-diagonalization criteria for source separation,” IEEE Trans. Signal Process., vol. 49, no. 3, pp. 530–541, Mar. 2001.
[17] M. Wax and Y. Anu, “A least squares approach to blind signal separation,” IEEE Trans. Signal Process., vol. 47, no. 1, pp. 231–234, Jan. 1999.
[18] L. Tong, R.-W. Liu, V. C. Soon, and Y.-F. Huang, “Indeterminacy and identifiability of blind source separation,” IEEE Trans. Circuits Syst., vol. 38, no. 5, pp. 499–509, May 1991.
[19] Z. Malouche and O. Macchi, “Adaptive unsupervised extraction of one component of a linear mixture with a single neuron,” IEEE Trans. Neural Netw., vol. 9, no. 1, pp. 123–138, Jan. 1998.
[20] N. Delfosse and P. Loubaton, “Adaptive blind separation of independent sources: A deflation approach,” Signal Process., vol. 45, no. 1, pp. 59–83, Jul. 1995.
[21] A. Hyvarinen and E. Oja, “A fast fixed-point algorithm for independent component analysis,” Neural Comput., vol. 9, no. 7, pp. 1483–1492, Jul. 1997.
[22] A. Hyvarinen, “Fast and robust fixed-point algorithms for independent component analysis,” IEEE Trans. Neural Netw., vol. 10, no. 3, pp. 626–634, May 1999.
[23] Z. Ding and T. Nguyen, “Stationary points of a kurtosis maximization algorithm for blind signal separation and antenna beamforming,” IEEE Trans. Signal Process., vol. 48, no. 6, pp. 1587–1596, Jun. 2000.
[24] C. B. Papadias, “Globally convergent blind source separation based on a multi-user kurtosis maximization criterion,” IEEE Trans. Signal Process., vol. 48, no. 12, pp. 3508–3519, Dec. 2000.
[25] C. Chang, Z. Ding, S. F. Yau, and F. H. Y. Chan, “A matrix-pencil approach to blind separation of colored nonstationary signals,” IEEE Trans. Signal Process., vol. 48, no. 3, pp. 900–907, Mar. 2000.
[26] L. Tong, V. C. Soon, Y.-F. Huang, and R. Liu, “AMUSE: a new blind source separation algorithm,” in Proc. IEEE Int. Symp. Circuits Systems (ISCAS), New Orleans, LA, May 1990, vol. 3, pp. 1784–1787.
[27] D.-Z. Feng, X.-D. Zhang, and Z. Bao, “An efficient multistage decomposition approach for independent components,” Signal Process., vol. 83, no. 1, pp. 181–197, Jan. 2003.
[28] D.-Z. Feng, Z. Bao, H.-Q. Zhang, and X.-D. Zhang, “An efficient algorithm for extracting independent components one by one,” in Proc. 16th IFIP World Computer Congr. (ICSP), Beijing, China, Aug. 2000, vol. 1, pp. 421–424.
[29] L. Parra and C. Spence, “Convolutive blind separation of nonstationary sources,” IEEE Trans. Speech Audio Process., vol. 8, no. 3, pp. 320–327, May 2000.
[30] H. Sawada, R. Mukai, S. Araki, and S. Makino, “A robust and precise method for solving the permutation problem of frequency-domain blind source separation,” IEEE Trans. Speech Audio Process., vol. 12, no. 5, pp. 530–538, Sep. 2004.
[31] K.-B. Yu, “Recursive updating the eigenvalue decomposition of a covariance matrix,” IEEE Trans. Signal Process., vol. 39, no. 5, pp. 1136–1145, May 1991.
[32] B. Champagne and Q. G. Liu, “Plane rotation-based updating schemes for efficient subspace tracking,” IEEE Trans. Signal Process., vol. 46, no. 7, pp. 1886–1900, Jul. 1998.
[33] P. Stoica and Y. Selen, “Cyclic minimizers, majorization techniques, and the expectation maximization algorithm: A refresher,” IEEE Signal Process. Mag., vol. 21, no. 1, pp. 112–114, Jan. 2004.
[34] G. H. Golub and C. F. Van Loan, Matrix Computations, 2nd ed. Baltimore, MD: The Johns Hopkins Univ. Press, 1989.
[35] A. Belouchrani and A. Cichocki, “Robust whitening procedure in blind source separation context,” Electron. Lett., vol. 36, no. 24, pp. 2050–2051, Nov. 2000.
[36] S. Choi, A. Cichocki, and A. Belouchrani, “Second order nonstationary source separation,” J. VLSI Signal Process., vol. 32, no. 1–2, pp. 93–104, Aug. 2002.
[37] R. Roy and T. Kailath, “ESPRIT-estimation of signal parameters via rotational invariance techniques,” IEEE Trans. Acoust., Speech, Signal Process., vol. 37, no. 7, pp. 984–995, Jul. 1989.
[38] R. Schmidt, “Multiple emitter location and signal parameter estimation,” IEEE Trans. Antennas Propag., vol. 34, no. 3, pp. 276–280, Mar. 1986.
[39] M. Wax and T. Kailath, “Detection of signals by information theoretic criteria,” IEEE Trans. Acoust., Speech, Signal Process., vol. 33, no. 2, pp. 387–392, Apr. 1985.
[40] A. Belouchrani, M. G. Amin, and K. Abed-Meraim, “Direction finding in correlated noise fields based on joint block-diagonalization of spatio–temporal correlation matrices,” IEEE Signal Process. Lett., vol. 4, no. 9, pp. 266–268, Sep. 1997.
[41] J. Yang and M. Kaveh, “Adaptive eigenspace algorithms for direction or frequency estimation and tracking,” IEEE Trans. Acoust., Speech, Signal Process., vol. 36, no. 2, pp. 241–251, Feb. 1988.
[42] B. Yang, “Projection approximation subspace tracking,” IEEE Trans. Signal Process., vol. 43, no. 1, pp. 95–107, Jan. 1995.
[43] J. J. Hopfield, “Neural networks and physical systems with emergent collective computational abilities,” Proc. Nat. Acad. Sci. USA, vol. 79, pp. 2554–2558, 1982.
[44] J. P. LaSalle, The Stability of Dynamical Systems. Philadelphia, PA: SIAM, 1976.
[45] M. Viberg, P. Stoica, and B. Ottersten, “Array processing in correlated noise fields based on instrumental variables and subspace fitting,” IEEE Trans. Signal Process., vol. 43, no. 5, pp. 1187–1199, May 1995.
[46] D.-Z. Feng, X.-D. Zhang, and Z. Bao, “A neural network learning for adaptively extracting cross-correlation features between two high-dimensional data streams,” IEEE Trans. Neural Netw., vol. 15, no. 6, pp. 1541–1554, Nov. 2004.
[47] J. R. Magnus and H. Neudecker, Matrix Differential Calculus With Applications in Statistics and Econometrics, 2nd ed. New York: Wiley, 1991.

Da-Zheng Feng (M’02) was born in December 1959. He received the Diploma degree from Xi’an University of Technology, Xi’an, China, in 1982, the M.S. degree from Xi’an Jiaotong University, Xi’an, China, in 1986, and the Ph.D. degree in electronic engineering from Xidian University, Xi’an, China, in 1995. From May 1996 to May 1998, he was a Postdoctoral Research Affiliate and an Associate Professor with Xi’an Jiaotong University, China. From May 1998 to June 2000, he was an Associate Professor with Xidian University. Since July 2000, he has been a Professor at Xidian University. He has published more than 50 journal papers. His current research interests include signal processing, intelligence and brain information processing, and InSAR.

Wei Xing Zheng (M’93–SM’98) was born in Nanjing, China. He received the B.Sc. degree in applied mathematics and the M.Sc. and Ph.D. degrees in electrical engineering, all from Southeast University, Nanjing, China, in 1982, 1984, and 1989, respectively. From 1984 to 1991, he was with the Institute of Automation at Southeast University, Nanjing, China, first as a Lecturer and later as an Associate Professor. From 1991 to 1994, he was a Research Fellow in three institutions:
- the Department of Electrical and Electronic Engineering at the Imperial College of Science, Technology and Medicine, University of London, London, U.K.;
- the Department of Mathematics at the University of Western Australia, Perth, Australia;
- the Australian Telecommunications Research Institute at the Curtin University of Technology, Perth, Australia.
In 1994, he joined the University of Western Sydney, Sydney, Australia, where he has been an Associate Professor since 2001. He has also held various visiting positions in the Institute for Network Theory and Circuit Design at the Munich University of Technology, Munich, Germany; in the Department of Electrical Engineering at the University of Virginia, Charlottesville, VA; and in the Department of Electrical and Computer Engineering at the University of California, Davis, CA.
His research interests are in the areas of systems and controls, signal processing, and communications. He coauthored the book Linear Multivariable Systems: Theory and Design (Nanjing, China: SEU Press, 1991). Dr. Zheng has received several science prizes, including the Chinese National Natural Science Prize awarded by the Chinese Government in 1991. He has served on the technical program or organizing committees of many international conferences, including the joint Forty-Fourth IEEE Conference on Decision and Control and the 2005 European Control Conference (CDC-ECC’2005), the Forty-Fifth IEEE Conference on Decision and Control (CDC’2006), and the Fourteenth IFAC Symposium on System Identification (SYSID’2006). He has also served on several technical committees, including the IEEE Circuits and Systems Society’s Technical Committee on Neural Systems and Applications (2002 to present), the IEEE Circuits and Systems Society’s Technical Committee on Blind Signal Processing (2003 to present), and the IFAC Technical Committee on Modelling, Identification and Signal Processing (1999 to present). He served as an Associate Editor for the IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS—I: FUNDAMENTAL THEORY AND APPLICATIONS (2002–2004). Since 2004, he has been an Associate Editor for the IEEE TRANSACTIONS ON AUTOMATIC CONTROL and, since 2000, an Associate Editor of the IEEE Control Systems Society’s Conference Editorial Board.
Andrzej Cichocki received the M.Sc. (with honors), Ph.D., and Dr.Sc. (Habilitation) degrees, all in electrical engineering, from the Warsaw University of Technology, Warsaw, Poland. Since 1972, he has been with the Institute of Theory of Electrical Engineering, Measurement and Information Systems, Faculty of Electrical Engineering, Warsaw University of Technology, where he obtained the title of full Professor in 1995.
He spent several years at the University of Erlangen-Nuremberg, Germany, at the Chair of Applied and Theoretical Electrical Engineering directed by Prof. R. Unbehauen, as an Alexander-von-Humboldt Research Fellow and Guest Professor. From 1995 to 1997, he was a team leader of the Laboratory for Artificial Brain Systems at the Frontier Research Program, RIKEN, Japan, in the Brain Information Processing Group. He is currently the head of the Laboratory for Advanced Brain Signal Processing, RIKEN Brain Science Institute, Saitama, Japan, in the Brain-Style Computing Group directed by Prof. S. Amari. He is a coauthor of more than 200 technical papers and three internationally recognized monographs (two of them translated into Chinese): Adaptive Blind Signal and Image Processing (New York: Wiley, 2003, rev. ed.), CMOS Switched-Capacitor and Continuous-Time Integrated Circuits and Systems (New York: Springer-Verlag, 1989), and Neural Networks for Optimization and Signal Processing (Chichester, U.K.: Teubner-Wiley, 1994). Dr. Cichocki is Editor-in-Chief of the international journal Computational Intelligence and Neuroscience and an Associate Editor of the IEEE TRANSACTIONS ON NEURAL NETWORKS.